Caching is a fundamental concept in computer science that improves performance by storing frequently accessed data for quick retrieval. Understanding caching strategies, specifically write-through and write-back caching, is crucial for optimizing system efficiency. This discussion covers the core principles of each method, their distinct characteristics, and their practical applications.

Caching is used in various computer systems, from web browsers and content delivery networks to databases and operating systems. Write-through caching ensures data consistency by immediately writing data to both the cache and the main storage. Conversely, write-back caching prioritizes speed by writing data only to the cache and delaying the write to main storage until later. The choice between these two methods depends on the specific application’s needs, considering factors like performance, data consistency, and fault tolerance.

Introduction to Caching

Caching is a fundamental technique used in computer systems to improve performance by storing frequently accessed data in a faster storage medium. This allows for quicker retrieval of information, reducing the need to access the slower, primary storage. The core principle revolves around the idea of locality of reference, which suggests that data recently accessed, or data near recently accessed data, is likely to be accessed again soon.

Core Concept of Caching

The central idea behind caching is to create a temporary storage area, often called a cache, that holds a subset of data from a larger, slower storage location. When a request for data is made, the system first checks the cache. If the data is present (a “cache hit”), it’s retrieved quickly. If the data is not in the cache (a “cache miss”), it’s fetched from the slower storage, and a copy is typically stored in the cache for future use.

This process significantly reduces access times for frequently requested data. The effectiveness of a cache is often measured by its “hit rate,” which is the percentage of requests that are satisfied by the cache.
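
To make the hit, miss, and hit-rate terms concrete, here is a minimal Python sketch of a read cache placed in front of a slower lookup. The `ReadCache` class and the `slow_storage` dictionary are illustrative names, not part of any particular library, and a real cache would also bound its size.

```python
class ReadCache:
    """Tiny read cache in front of a slower lookup function (illustrative only)."""

    def __init__(self, load_from_storage):
        self._load = load_from_storage  # hypothetical slow lookup, e.g. a DB query
        self._data = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._data:          # cache hit: serve from the fast copy
            self.hits += 1
            return self._data[key]
        self.misses += 1               # cache miss: go to the slow storage
        value = self._load(key)
        self._data[key] = value        # keep a copy for future requests
        return value

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


slow_storage = {"a": 1, "b": 2}        # stands in for the slower primary storage
cache = ReadCache(slow_storage.__getitem__)
for key in ["a", "b", "a", "a"]:
    cache.get(key)
print(f"hit rate: {cache.hit_rate():.0%}")  # prints "hit rate: 50%"
```

The first request for each key misses and the two repeat requests for `"a"` hit, so two of four requests are served from the cache.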

Common Uses of Caching

Caching is implemented in various parts of computer systems to optimize performance. Several examples demonstrate the wide application of caching.

- CPU Caches: Modern CPUs incorporate multiple levels of cache (L1, L2, L3) to store frequently used instructions and data. This allows the CPU to access information much faster than if it had to fetch it from main memory (RAM). For example, a CPU might access instructions from the L1 cache in a few clock cycles, while accessing data from RAM could take hundreds of clock cycles.

- Web Browsers: Web browsers cache web pages, images, and other content to reduce loading times. When you revisit a website, the browser checks its cache for the necessary resources. If they are present, the page loads much faster than if the browser had to download everything again from the web server.

- Database Systems: Database management systems (DBMS) use caching to store frequently accessed data and query results in memory. This significantly speeds up query processing. For instance, a database might cache the results of a frequently executed query, so subsequent executions can retrieve the results directly from the cache instead of recalculating them.

- Content Delivery Networks (CDNs): CDNs use caching to store content (e.g., videos, images, and web pages) on servers geographically distributed around the world. This allows users to access content from a server that is closer to them, reducing latency and improving download speeds. For example, a user in Europe accessing a video hosted on a CDN will receive the content from a server in Europe, rather than from the original server in the United States.

- Operating Systems: Operating systems employ caching mechanisms to speed up file access, such as caching file system metadata or entire file blocks.

Benefits of Implementing Caching

Caching provides several significant benefits that enhance system performance and user experience. These advantages are essential for modern computing.

- Improved Performance: The primary benefit of caching is improved performance. By storing frequently accessed data in a faster medium, caching reduces access times and speeds up overall system responsiveness.

- Reduced Latency: Caching minimizes latency, which is the delay between a user’s request and the system’s response. This is particularly important for applications that require real-time interaction, such as web browsing and online gaming.

- Reduced Load on Primary Storage: Caching reduces the load on the primary storage (e.g., hard drives, database servers). This can prevent bottlenecks and improve the overall efficiency of the system. For example, by caching database query results, the database server experiences less load and can handle more concurrent requests.

- Increased Scalability: Caching can improve the scalability of a system. By offloading requests to the cache, the primary storage can handle more traffic without becoming overloaded. This allows the system to accommodate more users and handle larger workloads.

- Cost Savings: In some cases, caching can lead to cost savings. For example, by reducing the load on a database server, you might be able to avoid the need to upgrade to a more expensive server. Similarly, by using a CDN, you can reduce bandwidth costs.

Write-Through Caching

Write-through caching is a fundamental strategy in data storage and retrieval, offering a straightforward approach to maintaining data consistency. This method ensures that every write operation is simultaneously applied to both the cache and the underlying storage, guaranteeing that the most recent data is always available in both locations. This contrasts with other caching methods that might delay writes to storage for performance gains, potentially sacrificing immediate data consistency.

Write-Through Caching Method

The write-through caching method operates on a simple principle: every time data is written to the cache, it’s also immediately written to the main storage, such as a hard drive or a database. This dual-write process ensures data consistency, as the latest version of the data is always available in both the cache and the persistent storage. This approach is often favored in scenarios where data integrity is paramount, and the potential performance benefits of delaying writes are secondary.

Write-through is a cache write policy: it governs how writes are propagated to the main storage, not how entries are read or evicted.

Data Flow Process in Write-Through Caching

The data flow in write-through caching is a sequential process that involves both the cache and the main storage. This process unfolds as follows:

- Write Request: A write request is initiated by the application. This request includes the data to be written and the memory address or key where the data should be stored.

- Cache Check: The caching system checks if the data associated with the requested address or key is already present in the cache.

- Cache Hit (Data Exists): If the data is present in the cache (a “cache hit”), the caching system proceeds to write the new data to the cache.

- Simultaneous Write: Simultaneously, the caching system writes the same data to the underlying storage (e.g., hard drive, database). This is the defining characteristic of write-through caching.

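- Cache Miss (Data Does Not Exist): If the data is not present in the cache (a “cache miss”), the caching system can first fetch the existing entry from the main storage. The fetch is only needed when the write changes part of an entry; a write that replaces the whole value can skip it.

- Write to Cache and Storage: The new data is then written to both the cache and the main storage, so the entry is present in the cache for later reads.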

- Confirmation: After both write operations (cache and storage) are complete, the caching system confirms the write operation to the application.
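
The write-through flow above can be sketched in a few lines of Python. This is a simplified illustration, not a production design: `WriteThroughCache` is a hypothetical class, a plain dictionary stands in for the main storage, and writing to the store before the cache is one possible ordering chosen so that the cache never holds a value the store failed to accept.

```python
class WriteThroughCache:
    """Write-through sketch: every write goes to the cache and the backing store."""

    def __init__(self, store):
        self.store = store   # stands in for a database or disk (assumed dict-like)
        self.cache = {}

    def write(self, key, value):
        # Update the backing store first; if this raises, the cache is untouched.
        self.store[key] = value
        self.cache[key] = value
        return True          # confirmation is returned only after both writes

    def read(self, key):
        if key in self.cache:         # cache hit
            return self.cache[key]
        value = self.store[key]       # cache miss: fetch and populate
        self.cache[key] = value
        return value


database = {"sku-1": 10}
inventory = WriteThroughCache(database)
inventory.write("sku-1", 7)
print(database["sku-1"], inventory.read("sku-1"))  # prints "7 7"
```

Because `write` returns only after both updates, the caller pays the latency of the slower store on every write.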

Example Scenario of Write-Through Caching

Consider an e-commerce website managing product inventory. A user updates the quantity of a specific product. A write-through cache can be used to ensure data consistency.

Diagram Description:

The diagram illustrates a simplified system with three components: a web server, a write-through cache, and a database. The web server represents the application layer where user interactions occur. The write-through cache sits between the web server and the database. The database represents the persistent storage for the product inventory data. Arrows show the data flow during a write operation.

The user’s action triggers a write operation that updates a product’s quantity. The cache is checked. If the product data exists in the cache (cache hit), the new quantity is written simultaneously to both the cache and the database. If the data is not in the cache (cache miss), the data is fetched from the database, written to the cache, and the update is written to both the cache and the database.

The web server receives confirmation after both the cache and the database have been updated.

Write-Back Caching

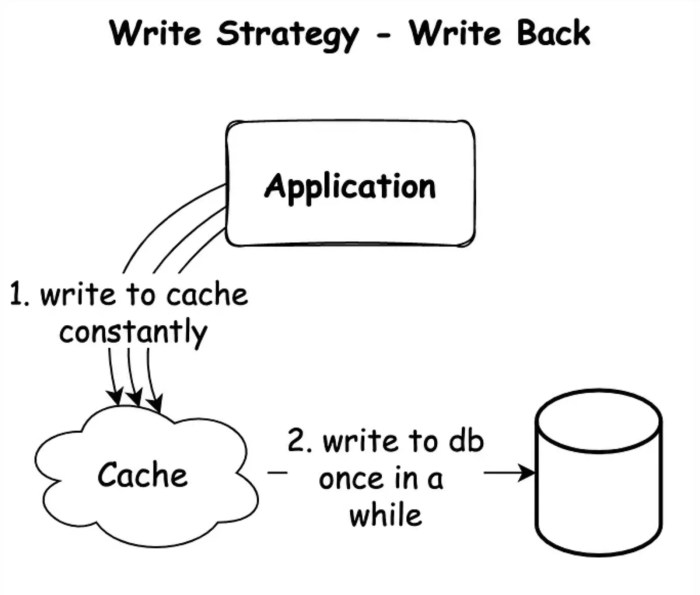

Write-back caching, also known as “write-behind” caching, offers a different approach to managing data writes compared to write-through caching. Instead of writing data to both the cache and the underlying storage simultaneously, write-back caching delays the write to the underlying storage. This strategy aims to optimize performance by minimizing the number of write operations to the slower storage medium, which speeds up write operations as seen by the application.

Write-Back Caching Method

Write-back caching works by storing data modifications in the cache. These changes are initially only made to the cache memory, which is significantly faster than the main storage. The cache then periodically “flushes” or “writes back” these modified blocks of data to the underlying storage (e.g., a hard drive or database). This write-back process is typically triggered by one of several events: a timer expiring, the cache becoming full, or a specific instruction from the operating system or application.

This approach reduces the number of write operations to the slower storage, as multiple writes to the same location can be combined into a single write.

Data Flow Process in Write-Back Caching

The data flow in write-back caching follows a specific process to ensure data consistency and efficiency.

- Read Request: When a read request arrives, the cache is first checked for the requested data. If the data is present (a cache hit), it’s immediately returned to the requesting application. If the data is not in the cache (a cache miss), it’s retrieved from the main storage and stored in the cache.

- Write Request: When a write request occurs, the data is written to the cache. The cache entry is marked as “dirty,” indicating that the cache data has been modified and differs from the data in the main storage.

- Write Back: The “dirty” data in the cache is periodically written back to the main storage. This process can be triggered by several factors, such as the cache reaching its capacity, a timer expiring, or a specific instruction to flush the cache.

- Data Consistency: To maintain data consistency, mechanisms like write buffers and journaling are often employed. Write buffers temporarily hold write operations before they are committed to the main storage. Journaling records changes before they are written, enabling recovery in case of a system failure.
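
As a rough sketch of this flow, the Python class below keeps a set of dirty keys and writes them to the store only when `flush` is called. The names `WriteBackCache`, `dirty`, and `flush` are assumptions made for illustration, and the flush trigger (timer, capacity, explicit call) is left to the caller.

```python
class WriteBackCache:
    """Write-back sketch: writes land in the cache and are flushed later."""

    def __init__(self, store):
        self.store = store   # stands in for the main storage (assumed dict-like)
        self.cache = {}
        self.dirty = set()   # keys whose cached value differs from the store

    def read(self, key):
        if key not in self.cache:              # cache miss: load from the store
            self.cache[key] = self.store[key]
        return self.cache[key]

    def write(self, key, value):
        self.cache[key] = value                # only the cache is updated
        self.dirty.add(key)                    # mark the entry as dirty

    def flush(self):
        """Write every dirty entry back and return how many store writes it took."""
        written = 0
        for key in list(self.dirty):
            self.store[key] = self.cache[key]
            written += 1
        self.dirty.clear()
        return written


database = {"price:42": 100}
cache = WriteBackCache(database)
cache.write("price:42", 90)
cache.write("price:42", 95)
stale = database["price:42"]   # the store still holds the old value here
flushed = cache.flush()
print(stale, flushed, database["price:42"])  # prints "100 1 95"
```

The two writes to the same key reach the store as a single write, which is where the reduction in storage traffic comes from. Until `flush` runs, the store holds the old value, and a crash at that point would lose the update.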

Example Scenario of Write-Back Caching

Consider an e-commerce website managing product inventory. The website uses a write-back cache to store frequently accessed product details.

Diagram Description: The diagram illustrates the data flow in a write-back caching system for product inventory. It consists of three main components: the Application (e-commerce website), the Cache (e.g., Redis or Memcached), and the Main Storage (e.g., a database like PostgreSQL). Arrows represent the flow of data. A two-way arrow indicates a write operation.

Scenario Steps:

- Application Request (Read): A user views a product detail page on the e-commerce website. The application checks the cache for the product details.

- Cache Hit: If the product details are in the cache (a cache hit), they are retrieved and displayed to the user.

- Cache Miss: If the product details are not in the cache (a cache miss), the application fetches them from the database (main storage). The data is then stored in the cache.

- Application Request (Write): A store administrator updates the product’s price. The application sends a write request to the cache.

- Write to Cache: The new price is written to the cache. The cache entry for that product is marked as “dirty.” The database is not immediately updated.

- Periodic Write-Back: At a scheduled interval or when the cache is nearing capacity, the cache controller identifies the “dirty” entries. The modified product price is written back from the cache to the database.

Diagram Elements:

- Application (E-commerce Website): The source and destination of read and write requests.

- Cache (Redis/Memcached): A component that stores frequently accessed product data. The cache is responsible for handling both reads and writes.

- Main Storage (PostgreSQL Database): The persistent storage for the product data.

- Arrows: The arrows represent data flow:

- An arrow from the Application to the Cache represents a read or write request.

- An arrow from the Cache to the Application represents the data retrieved from the cache.

- An arrow from the Main Storage to the Cache represents data fetched from the database on a cache miss.

- An arrow from the Cache to the Main Storage represents the write-back process.

In this scenario, the write-back caching strategy significantly improves performance by reducing the number of write operations to the database. The database only needs to be updated periodically, rather than for every price change, leading to faster response times for users and reduced load on the database server.

Differences

Understanding the distinctions between write-through and write-back caching is crucial for optimizing system performance and ensuring data integrity. Both methods offer solutions for accelerating data access, but they achieve this through different strategies, leading to varying trade-offs. This section compares and contrasts these two approaches, highlighting their key characteristics and implications.

Performance Comparison: Write-Through vs. Write-Back

Performance is a critical factor when evaluating caching strategies. The choice between write-through and write-back significantly impacts read and write operations. Write-through caching typically results in slower write operations because each write must update both the cache and the main storage. However, read operations can be faster if the data is present in the cache. Conversely, write-back caching generally leads to faster write operations since updates are initially made only to the cache.

Read operations can also benefit from write-back if the data is in the cache. However, a read that misses the cache can be delayed when a modified entry must first be written back to the main storage to make room for the requested data.
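
A back-of-the-envelope model can make the write-side difference tangible. The sketch below is a deliberate simplification: it assumes writes are processed one at a time, that write-back flushes once at the end, and that repeated writes to the same key collapse into one store write. All figures are hypothetical and chosen only for illustration.

```python
def write_through_cost_us(num_writes, cache_us, store_us):
    """Time callers spend waiting when every write updates cache and store."""
    return num_writes * (cache_us + store_us)


def write_back_cost_us(num_writes, distinct_keys, cache_us, store_us):
    """Return (foreground, background) time for write-back with one final flush.

    Foreground is what callers wait for; background is the deferred flush,
    where repeated writes to the same key collapse into a single store write.
    """
    foreground = num_writes * cache_us
    background = distinct_keys * store_us
    return foreground, background


# Hypothetical workload: 10,000 writes spread over 500 keys,
# 100 microseconds per cache write and 5,000 per store write.
wt = write_through_cost_us(10_000, 100, 5_000)
wb_foreground, wb_background = write_back_cost_us(10_000, 500, 100, 5_000)

print(wt / 1_000_000)             # 51.0 seconds of caller-visible write time
print(wb_foreground / 1_000_000)  # 1.0 second of caller-visible write time
print(wb_background / 1_000_000)  # 2.5 seconds of deferred store writes
```

Under these assumptions the caller-visible cost of write-back is a small fraction of the write-through cost. The gap narrows as writes spread over more distinct keys or as flushes become more frequent.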

Data Consistency: Write-Through and Write-Back

Data consistency is paramount in any caching system. Different approaches influence how quickly changes are reflected in the main storage and the potential for data loss. Write-through caching provides high data consistency. Every write operation immediately updates both the cache and the main storage. This minimizes the risk of data loss in the event of a system failure because the latest data is always available in the persistent storage.

Write-back caching, on the other hand, offers weaker consistency between the cache and the main storage. Data is written to the cache and held there until it is evicted or explicitly flushed to the main storage. This can lead to data loss if the system crashes before the cached data is written back. However, it also offers significant performance gains.

Advantages and Disadvantages: A Comparative Table

The following table summarizes the advantages and disadvantages of write-through and write-back caching:

| Feature | Write-Through | Write-Back |
|---|---|---|
| Write Performance | Slower. Every write requires an update to both cache and main storage. | Faster. Writes are initially made only to the cache. |
| Read Performance | Faster if data is in the cache. | Faster if data is in the cache. |
| Data Consistency | High. Data is always up-to-date in main storage. | Potentially lower. Data loss is possible if a system failure occurs before data is written back. |
| Complexity | Simpler to implement. | More complex due to the need for mechanisms to manage data flushing and cache coherence. |
| Cache Utilization | Potentially lower, as the cache is frequently updated with data that might not be needed immediately. | Higher, as frequently accessed data is retained in the cache for longer periods. |
| Risk of Data Loss | Low. | Higher. Data in the cache may be lost if not written back before a system failure. |
| Hardware Requirements | Less demanding. | More demanding, requires a more sophisticated cache management system. |

Performance Considerations

Understanding the performance implications of different write strategies is crucial for optimizing caching systems. The choice between write-through and write-back significantly impacts read and write operations, directly influencing the overall performance of the system. Careful consideration of these factors is essential for designing a caching solution that aligns with the specific needs of an application.

Write-Through Caching Performance Impact

Write-through caching presents a straightforward approach to data consistency. However, this simplicity influences performance in predictable ways. Write-through caching affects read and write performance as follows:

- Read Performance: Read performance is generally good. Data is readily available in the cache if it’s a cache hit. In the case of a cache miss, the data must be fetched from the backing store, which can be slower. However, subsequent reads of the same data will be faster due to caching.

- Write Performance: Write performance can be slower. Every write operation requires updating both the cache and the backing store simultaneously. This synchronous update means that write operations are subject to the latency of the backing store, such as a database or disk.

For instance, consider an e-commerce application where a user updates their profile information. Using write-through, the profile data is written to both the cache and the database immediately. This ensures data consistency but increases the time it takes for the write operation to complete.

Write-Back Caching Performance Impact

Write-back caching aims to improve write performance by delaying the write operations to the backing store. This approach, while potentially faster for writes, introduces complexity in terms of data consistency. Write-back caching affects read and write performance as follows:

- Read Performance: Read performance is typically excellent, since data is usually served quickly from the cache. On a cache miss, the data is fetched from the backing store. If making room for it means evicting an entry that has been modified but not yet written back (a “dirty” cache line), the cache must first write that entry to the backing store. This introduces additional complexity and potential latency.

- Write Performance: Write performance is significantly improved. Write operations are usually fast because they only update the cache. The updates to the backing store are deferred, typically batched, and written asynchronously. This reduces the latency of individual write operations. However, if the cache becomes full, new writes can stall while dirty entries are written back, and if a failure occurs before the data is written back, that data is lost.

For example, imagine a social media platform where a user posts a new status update. With write-back caching, the update is initially written to the cache. The database is updated later, potentially in batches, improving the responsiveness of the platform.

Scenario Highlighting Performance Differences

The performance differences between write-through and write-back are most noticeable in scenarios involving a high volume of write operations. Consider a financial trading application. In this scenario:

- Write-Through: Each trade (write operation) immediately updates the cache and the database. This ensures data consistency but leads to higher latency for each trade, potentially impacting the application’s responsiveness. The application’s throughput is limited by the database write speed.

- Write-Back: Trades are initially written to the cache. This results in significantly faster write operations, enabling higher throughput and better responsiveness. The application can handle a much higher volume of trades. However, there is a risk of data loss if the cache fails before the trades are written to the database.

In the financial trading scenario, write-back caching has the advantage on throughput and responsiveness, but only if the potential for data loss is mitigated through careful design, such as using redundant caches and transaction logs. Where losing even a single trade is unacceptable, write-through remains the safer choice.

Data Consistency and Reliability

Data consistency and reliability are critical aspects of caching systems, ensuring that the data presented to users is accurate and that the system can withstand failures without significant data loss. The choice between write-through and write-back caching has a profound impact on how these two properties are managed. Understanding the trade-offs is essential for designing a caching strategy that aligns with the application’s requirements.

Ensuring Data Consistency with Write-Through

Write-through caching inherently provides a high degree of data consistency. Because every write operation simultaneously updates both the cache and the backing store (e.g., a database), the cache always contains the most up-to-date version of the data. This approach minimizes the risk of stale data being served to users.

Challenges of Data Consistency in Write-Back Caching

Write-back caching, while offering performance advantages, introduces challenges to data consistency. Data resides in the cache for a period and is only written back to the backing store periodically or under specific conditions. During this time, the cache’s data is considered the “source of truth,” potentially leading to inconsistencies if the cache is not managed carefully. These inconsistencies can arise from several factors:

- Cache Invalidation: If a separate process modifies the data in the backing store directly (bypassing the cache), the cache may hold outdated information.

- Cache Failures: If the cache server crashes before data is written back to the backing store, the data in the cache is lost.

- Concurrency Issues: Multiple clients writing to the same cached data simultaneously can lead to conflicts and data corruption if not handled correctly.

Maintaining Data Reliability in Write-Back Caching

Maintaining data reliability in write-back caching requires careful consideration of potential failure scenarios and the implementation of strategies to mitigate data loss. Several techniques can be employed:

- Write-Ahead Logging (WAL): Before modifying the cache, the write operation is logged to a durable storage medium (e.g., a disk). This log serves as a record of all changes. If the cache fails, the log can be used to replay the operations and restore the data. This is a crucial technique for ensuring data durability.

- Cache Replication: Replicating the cache across multiple servers provides redundancy. If one cache server fails, another can take over, minimizing data loss. The replication process should ensure that the replicas are synchronized and consistent.

- Periodic Flushing: Regularly flushing (writing) the cache data to the backing store minimizes the potential data loss if a failure occurs. The frequency of flushing depends on the application’s tolerance for data loss and the performance characteristics of the backing store. A shorter flush interval increases reliability but can decrease performance.

- Cache Eviction Policies: Eviction policies such as Least Recently Used (LRU) or Least Frequently Used (LFU) manage the cache’s memory usage by removing the entries least likely to be needed again. In a write-back cache, a dirty entry must be written to the backing store before it is evicted, so the eviction path must never discard modified data.

- Transaction Management: Using transactions can ensure atomicity, consistency, isolation, and durability (ACID) properties for write operations. Transactions group multiple write operations into a single unit of work. If any part of the transaction fails, the entire transaction is rolled back, preserving data integrity.

- Monitoring and Alerting: Monitoring the cache’s health, including its write-back queue, replication status, and storage utilization, and setting up alerts can help detect and address potential issues before they lead to data loss.
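
Of the techniques above, write-ahead logging is the easiest to show in a small example. The following Python sketch is a simplified illustration under several assumptions: the log is a local JSON-lines file, the backing store is a dictionary, and `LoggedWriteBackCache` and `recover` are hypothetical names. A real system would also need log rotation, checksums, and concurrency control.

```python
import json
import os
import tempfile


class LoggedWriteBackCache:
    """Write-back cache that appends each change to a durable log first."""

    def __init__(self, store, log_path):
        self.store = store
        self.log_path = log_path
        self.cache = {}
        self.dirty = set()

    def write(self, key, value):
        # 1. Append the change to the log and force it to disk.
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
            log.flush()
            os.fsync(log.fileno())
        # 2. Only then update the in-memory cache.
        self.cache[key] = value
        self.dirty.add(key)

    def flush(self):
        for key in self.dirty:
            self.store[key] = self.cache[key]
        self.dirty.clear()
        # Every logged change is now in the store, so the log can be emptied.
        open(self.log_path, "w").close()


def recover(store, log_path):
    """Replay logged writes into the store after the cache was lost."""
    if not os.path.exists(log_path):
        return
    with open(log_path, encoding="utf-8") as log:
        for line in log:
            entry = json.loads(line)
            store[entry["key"]] = entry["value"]


log_path = os.path.join(tempfile.mkdtemp(), "cache.wal")
database = {}
cache = LoggedWriteBackCache(database, log_path)
cache.write("order:1", "paid")
del cache                       # simulate losing the cache before any flush
recover(database, log_path)
print(database)                 # prints "{'order:1': 'paid'}"
```

The `fsync` call on every write gives back some of the latency that write-back saves, which is the usual price of durability. Replaying the log is safe to repeat because each entry simply sets a key to a value.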

Implementation Considerations

Implementing caching strategies requires careful planning and execution to ensure optimal performance, data integrity, and system reliability. The choice between write-through and write-back caching significantly impacts the implementation process, influencing factors such as data synchronization, cache eviction policies, and potential for data loss. Understanding these considerations is crucial for successfully integrating a caching solution into an application.

Steps for Implementing Write-Through Caching

Write-through caching simplifies the implementation process by ensuring that data is written to both the cache and the underlying storage simultaneously. This approach offers strong data consistency but can be slower due to the synchronous write operations. The following steps outline the key stages involved in implementing a write-through cache:

- Identify Cacheable Data: Determine which data is frequently accessed and suitable for caching. This involves analyzing application access patterns and identifying read-heavy operations. Consider data volatility and the cost of cache misses.

- Choose a Cache Implementation: Select a caching solution, such as Redis, Memcached, or a custom-built cache. The choice depends on factors like scalability, performance requirements, and the existing infrastructure.

- Integrate Cache into Data Access Layer: Modify the data access layer of the application to interact with the cache. When a read request arrives:

- Check if the data exists in the cache.

- If a cache hit occurs, return the data from the cache.

- If a cache miss occurs, retrieve the data from the underlying storage, store it in the cache, and then return it to the application.

- Implement Write Operations: When a write operation occurs:

- Write the data to both the cache and the underlying storage simultaneously. This can be achieved using atomic operations or transaction management to ensure data consistency.

- Handle potential failures gracefully. If the write to the underlying storage fails, the cache entry should be invalidated or rolled back so that the cache does not serve data the storage never accepted, and the error should be reported to the caller.

- Configure Cache Eviction Policies: Define a cache eviction policy to manage cache capacity. Common policies include:

- Least Recently Used (LRU): Evicts the least recently accessed items.

- Least Frequently Used (LFU): Evicts the least frequently accessed items.

- Time-to-Live (TTL): Evicts items after a specified duration.

- Monitor and Tune: Continuously monitor the cache performance, including cache hit rates, miss rates, and latency. Tune the cache configuration, eviction policies, and data access patterns to optimize performance.
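
Of the eviction policies listed in these steps, LRU is straightforward to sketch with Python’s standard `collections.OrderedDict`. The `LRUCache` class below is a hypothetical, single-threaded illustration; hosted caches such as Redis or Memcached implement eviction internally and are configured rather than coded this way.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()   # oldest entry first, newest last

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)          # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)   # drop the least recently used entry

    def __contains__(self, key):
        return key in self._items


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")        # "a" becomes the most recently used entry
cache.put("c", 3)     # capacity exceeded, so "b" is evicted
print("a" in cache, "b" in cache, "c" in cache)  # prints "True False True"
```

With write-through, an evicted entry can simply be dropped, because the underlying storage already holds the same value.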

Steps for Implementing Write-Back Caching

Write-back caching offers higher performance by deferring writes to the underlying storage. This approach involves writing data only to the cache initially, and later, asynchronously writing it to the storage. However, it introduces complexities related to data consistency and potential data loss. The following steps outline the implementation process for a write-back cache:

- Identify Cacheable Data: Analyze application access patterns and identify data that benefits from write-back caching. Consider data volatility and the acceptable risk of data loss.

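- Choose a Cache Implementation: Select a caching solution that can support write-back behavior. Options include Redis (with persistence configured so that cached writes survive a restart, and with the write-back to the underlying storage typically handled by application code) or custom-built solutions.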

- Integrate Cache into Data Access Layer: Modify the data access layer to interact with the cache.

- On a read request, check the cache. If there is a cache hit, return the data.

- If a cache miss occurs, fetch the data from the underlying storage, store it in the cache, and return it.

- Implement Write Operations:

- When a write operation occurs, write the data only to the cache.

- Mark the cached data as “dirty” or “modified” to indicate that it needs to be written to the underlying storage.

- Implement a Write-Back Mechanism: Implement a mechanism to asynchronously write the “dirty” data from the cache to the underlying storage. This can be achieved through:

- A background process or thread that periodically flushes the cache to the storage.

- A logging mechanism that tracks write operations and replays them to the storage.

- Handle Data Loss: Implement strategies to minimize data loss in case of cache failures or system crashes. This may involve:

- Regularly persisting the cache contents to disk.

- Implementing a write-ahead log (WAL) to record all write operations.

- Using replication to create redundant copies of the cache.

- Configure Cache Eviction Policies: Define eviction policies to manage cache capacity, considering that evicted “dirty” data must be written to the underlying storage before eviction.

- Monitor and Tune: Monitor cache performance, including cache hit rates, write-back latency, and data loss incidents. Tune the cache configuration, write-back frequency, and data access patterns to optimize performance and reliability.
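
The eviction step is where write-back implementations most often go wrong, so it is worth a small sketch. The Python class below is an illustrative, single-threaded example in which `BoundedWriteBackCache` and its method names are assumptions. It evicts in LRU order and writes a dirty entry to the store before dropping it.

```python
from collections import OrderedDict


class BoundedWriteBackCache:
    """Write-back cache with LRU eviction that never drops unwritten data."""

    def __init__(self, store, capacity):
        self.store = store            # stands in for the underlying storage
        self.capacity = capacity
        self._items = OrderedDict()   # oldest entry first
        self._dirty = set()

    def write(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        self._dirty.add(key)
        self._evict_if_needed()

    def read(self, key):
        if key in self._items:
            self._items.move_to_end(key)
            return self._items[key]
        value = self.store[key]       # cache miss: load from the store
        self._items[key] = value
        self._evict_if_needed()
        return value

    def _evict_if_needed(self):
        while len(self._items) > self.capacity:
            key, value = self._items.popitem(last=False)
            if key in self._dirty:    # dirty entries are written back first
                self.store[key] = value
                self._dirty.discard(key)

    def flush(self):
        for key in list(self._dirty):
            self.store[key] = self._items[key]
        self._dirty.clear()


database = {}
cache = BoundedWriteBackCache(database, capacity=2)
cache.write("a", 1)
cache.write("b", 2)
cache.write("c", 3)    # evicts "a", which is dirty, so it is written back first
print(database)        # prints "{'a': 1}"
```

In a deployed system `flush` would typically be called from a background thread or timer. It is left as an explicit call here so that the behavior stays deterministic.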

Choosing Between Write-Through and Write-Back Caching

The decision between write-through and write-back caching depends on specific application requirements, including performance needs, data consistency requirements, and the acceptable risk of data loss. Careful consideration of these factors is essential to select the most appropriate caching strategy.

| Factor | Write-Through | Write-Back |
|---|---|---|
| Performance | Slower write operations due to synchronous writes to storage. | Faster write operations as writes are primarily to the cache, improving write performance. |
| Data Consistency | Strong data consistency; data is always consistent between the cache and the underlying storage. | Potential for data inconsistency if the cache fails before data is written back to the storage. |
| Data Loss Risk | Low risk of data loss, as data is immediately written to the underlying storage. | Higher risk of data loss if the cache fails before the data is written back. |
| Complexity | Simpler to implement, as write operations are straightforward. | More complex to implement, as it requires managing asynchronous writes and data persistence. |
| Use Cases | Applications that prioritize data consistency, such as financial transactions or critical data storage. | Applications that prioritize write performance, such as social media feeds, session management, or content delivery networks (CDNs), where some data loss is acceptable. |

For example, consider an e-commerce application. If the application needs to store customer order information, write-through caching would be preferred. This ensures that every order is immediately recorded in the database, maintaining data integrity and preventing data loss. In contrast, a social media platform displaying user posts might benefit from write-back caching. This allows for faster posting of updates, as the writes are initially handled in the cache.

If the cache fails before a flush, some recent posts might be lost, but for this kind of data the impact on users is usually tolerable. The choice depends on balancing performance gains against the risk of data loss.
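The difference between the two policies can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the class names `WriteThroughCache` and `WriteBackCache` are hypothetical, and the backing store is assumed to be a plain dictionary standing in for a database.

```python
class WriteThroughCache:
    """Every write goes to the cache and the backing store before returning."""

    def __init__(self, store):
        self.store = store  # assumption: a dict stands in for the database
        self.cache = {}

    def write(self, key, value):
        self.cache[key] = value
        self.store[key] = value  # synchronous write to storage

    def read(self, key):
        if key not in self.cache:  # cache miss: load from storage
            self.cache[key] = self.store[key]
        return self.cache[key]


class WriteBackCache:
    """Writes land in the cache only; dirty keys reach storage on flush()."""

    def __init__(self, store):
        self.store = store
        self.cache = {}
        self.dirty = set()  # keys whose cached value is newer than storage

    def write(self, key, value):
        self.cache[key] = value
        self.dirty.add(key)

    def read(self, key):
        if key not in self.cache:
            self.cache[key] = self.store[key]
        return self.cache[key]

    def flush(self):
        for key in self.dirty:
            self.store[key] = self.cache[key]
        self.dirty.clear()


orders_db, posts_db = {}, {}
orders = WriteThroughCache(orders_db)
posts = WriteBackCache(posts_db)

orders.write("order:1", "2 widgets")
posts.write("post:1", "hello")

# If the cache were lost at this point, the order would survive; the post would not.
print("order:1" in orders_db, "post:1" in posts_db)  # True False

posts.flush()
print("post:1" in posts_db)  # True
```

The window between `write` and `flush` is exactly the data-loss exposure described in the table above.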

Write Policies and Cache Coherency

Understanding write policies and cache coherency is crucial for optimizing cache performance and ensuring data integrity, especially in multi-processor systems. While write-through and write-back are fundamental write policies, other strategies exist, and maintaining data consistency across multiple caches necessitates the use of cache coherency protocols.

Alternative Write Policies

Beyond write-through and write-back, other write policies offer different trade-offs between performance and complexity. These policies aim to balance the speed of write operations with the need for data consistency.

- Write-Once: This policy combines aspects of write-through and write-back. The first write to a cache line is handled as write-through, updating both the cache and main memory. Subsequent writes to the same cache line are handled as write-back, updating only the cache until the line is evicted. This can reduce the number of writes to main memory compared to write-through, while still ensuring the initial write is immediately reflected in main memory.

- Write-Around: This policy avoids caching writes altogether. When a write occurs, the data is written directly to main memory, bypassing the cache. This can be useful for data that is unlikely to be read again soon, preventing the cache from being polluted with infrequently used data. However, it can increase the latency of write operations since they must always access main memory.

- Write-Invalidate: This policy, often used in conjunction with other policies, focuses on invalidating copies of data in other caches when a write occurs. When a processor writes to a cache line, all other copies of that cache line in other caches are marked as invalid. This ensures that subsequent reads will go to main memory (or the cache with the valid copy) and retrieve the most up-to-date data.

When write-invalidate is paired with write-back, writes to main memory stay infrequent, because main memory is updated only when a modified cache line is evicted.
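As one illustration of these alternatives, the following sketch models write-around. It is a simplified assumption-laden example: `WriteAroundCache` is a hypothetical name, the backing store is a dictionary, and any cached copy is dropped on write so that later reads cannot return stale data.

```python
class WriteAroundCache:
    """Writes bypass the cache; only reads populate it."""

    def __init__(self, store):
        self.store = store  # assumption: a dict stands in for main memory
        self.cache = {}

    def write(self, key, value):
        self.store[key] = value
        self.cache.pop(key, None)  # drop any stale cached copy

    def read(self, key):
        if key in self.cache:
            return self.cache[key], "hit"
        value = self.store[key]
        self.cache[key] = value  # fill the cache on a miss
        return value, "miss"


store = {}
cache = WriteAroundCache(store)

cache.write("report", "v1")
print(cache.read("report"))  # ('v1', 'miss')
print(cache.read("report"))  # ('v1', 'hit')

cache.write("report", "v2")
print(cache.read("report"))  # ('v2', 'miss')
```

The first read after every write is a miss, which is the cost of keeping rarely re-read data out of the cache.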

Cache Coherency Protocols

Cache coherency protocols are essential in multi-processor systems to ensure that all processors have a consistent view of the data. These protocols manage the state of cache lines and coordinate write operations to prevent data corruption.

These protocols generally rely on one of two primary strategies:

- Snooping Protocols: In snooping protocols, each cache “snoops” on the memory bus to monitor the transactions of other caches. When a cache detects a write operation to a memory location it also has cached, it takes appropriate action, such as invalidating its copy or updating its copy with the new value.

- Directory-Based Protocols: Directory-based protocols use a central directory to track the state of each cache line. The directory stores information about which caches have copies of a particular cache line and their states. When a write operation occurs, the directory is consulted to determine which caches need to be updated or invalidated.

Examples of Cache Coherency Protocols in Action

Cache coherency protocols can be illustrated with examples to understand how they work in practice.

Consider a simplified scenario with two processors, P1 and P2, and a shared memory location X. Initially, both P1 and P2 have a copy of X in their respective caches.

- Scenario: Write-Through with Snooping

P1 writes a new value to X. Since write-through is used, P1’s cache and main memory are updated immediately. P1 also broadcasts a signal on the bus indicating the write. P2, “snooping” on the bus, detects this write and invalidates its copy of X in its cache. Subsequent reads by P2 will fetch the updated value of X from main memory.

- Scenario: Write-Back with Snooping (MESI Protocol)

Both copies of X start in the “Shared” state. P1 writes a new value to X: it first broadcasts an invalidation on the bus, P2 marks its copy “Invalid”, and P1’s cache line for X transitions to the “Modified” state. No write is performed to main memory at this point. P2 then attempts to read X and misses. P1’s cache, snooping the bus, sees a read request for a line it holds in the “Modified” state.

P1 supplies the current data to P2, and both copies transition to the “Shared” state. In basic MESI the modified line is also written back to main memory at this point; had no other processor requested it, the write-back would have happened when P1 evicted the line. If P2 writes to X after this, P1’s copy is invalidated and P2’s copy transitions to the “Modified” state.

- Scenario: Directory-Based Protocol

A directory maintains the state of each cache line. P1 writes to X. The directory is consulted to determine which other caches have a copy of X. The directory sends invalidation messages to those caches, invalidating their copies. The directory also marks the cache line as “modified” and records P1 as its owner.

When P2 attempts to read X, it consults the directory, which either forwards the request to P1’s cache for the updated value or retrieves the value from main memory (if P1 has already written back the value).

These examples demonstrate how cache coherency protocols maintain data consistency in different scenarios, ensuring that all processors have an accurate view of the shared data. The choice of protocol depends on the specific hardware architecture and performance requirements.
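The write-back snooping scenario can be traced with a small state machine. The sketch below is a deliberately simplified model of MESI for a single memory location: the `Cache` and `Bus` classes are hypothetical, each cache line holds one value, and bus arbitration and timing are ignored.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"
    EXCLUSIVE = "E"
    SHARED = "S"
    INVALID = "I"


class Cache:
    def __init__(self, name):
        self.name = name
        self.state = State.INVALID
        self.value = None


class Bus:
    """Connects the caches for one location X and its main-memory copy."""

    def __init__(self, caches, memory_value):
        self.caches = caches
        self.memory = memory_value

    def read(self, requester):
        if requester.state is not State.INVALID:
            return requester.value  # hit: no bus traffic needed
        holders = [c for c in self.caches
                   if c is not requester and c.state is not State.INVALID]
        for other in holders:
            if other.state is State.MODIFIED:
                self.memory = other.value  # owner flushes its dirty line
            other.state = State.SHARED
        requester.value = self.memory
        requester.state = State.SHARED if holders else State.EXCLUSIVE
        return requester.value

    def write(self, requester, value):
        for other in self.caches:
            if other is not requester:
                if other.state is State.MODIFIED:
                    self.memory = other.value  # flush before invalidating
                other.state = State.INVALID
        requester.value = value
        requester.state = State.MODIFIED  # main memory is NOT updated here


p1, p2 = Cache("P1"), Cache("P2")
bus = Bus([p1, p2], memory_value=0)

bus.read(p1)       # P1: Exclusive
bus.read(p2)       # P1 and P2: Shared
bus.write(p1, 42)  # P1: Modified, P2: Invalid, memory still 0
print(p1.state.value, p2.state.value, bus.memory)  # M I 0

print(bus.read(p2))  # 42
print(p1.state.value, p2.state.value, bus.memory)  # S S 42
```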

Real-World Applications

Caching strategies, including write-through and write-back, are implemented across various systems to optimize performance and manage data efficiently. Understanding their practical applications provides insights into their strengths and trade-offs.

Write-Through Caching Applications

Write-through caching is favored in scenarios where data consistency and reliability are paramount, even at the expense of higher write latency. This approach ensures that every write operation updates both the cache and the underlying storage before the write is acknowledged.

- Financial Transactions: Systems processing financial transactions, such as banking or stock trading platforms, often employ write-through caching. Every transaction needs to be immediately and permanently recorded. The immediate write to the database ensures data integrity and prevents loss in case of system failures.

- Content Delivery Networks (CDNs) for Static Content: CDNs cache static assets like images, CSS, and JavaScript files. When a user requests a file, the CDN checks its cache. If the file is present, it serves it. If not, it retrieves the file from the origin server, caches it, and then serves it to the user. Strictly speaking, this fill-on-miss flow is read-through caching rather than a write policy. The write-through analogy applies when a publishing pipeline updates the origin and the CDN copy together, for example by pushing or purging the asset on deploy; otherwise the CDN keeps serving the old copy until it expires.

- Embedded Systems: In certain embedded systems, such as those controlling industrial machinery, write-through caching might be used to ensure that critical control data is consistently written to persistent storage. This reduces the risk of data loss during power failures or system crashes.

- Logging Systems: Logging systems sometimes use write-through caching for critical log entries. This approach ensures that important events are recorded promptly, which is crucial for auditing, debugging, and security purposes.

Write-Back Caching Applications

Write-back caching is advantageous in scenarios where write performance is critical and some delay in persistence or potential data loss is acceptable. This strategy optimizes for speed by deferring writes to the underlying storage.

- Database Systems: Many database systems utilize write-back caching to improve write performance. Modified pages are kept in an in-memory buffer, reads are served from that buffer whenever possible, and writes are batched and written to disk periodically. This can dramatically increase the throughput of write operations.

- Operating System File Systems: Modern operating systems often employ write-back caching for file system operations. Data written to a file is first stored in memory (cache) and written to the disk later. This can significantly improve the perceived performance of file writes, especially for sequential writes.

- Game Consoles: Game consoles frequently use write-back caching to manage game data. Game assets are often loaded into memory and written back to the storage device (e.g., hard drive or SSD) at intervals. This approach improves loading times and gameplay smoothness.

- High-Performance Computing (HPC): HPC environments, where computational speed is critical, can benefit from write-back caching. The delayed write approach allows for optimizing write operations and maximizing the use of available memory.

Database System Scenario with Write-Back Caching

Consider a large e-commerce platform. The platform’s database stores product information, user profiles, and order details. To optimize performance, the database system employs write-back caching.

Scenario Breakdown:

- Data Retrieval: When a user requests a product page, the system first checks the cache for the product information. If the data is in the cache (a cache hit), it’s served directly, which is very fast.

- Data Modification (Write Operations): When a user adds an item to their cart, the update is written to the cache immediately. The database system then batches these write operations and flushes them to the disk periodically (e.g., every few seconds or minutes, or when the cache is full).

Benefits:

- Improved Read Performance: Frequent reads are served from the cache, reducing the load on the database and speeding up page load times.

- Increased Write Throughput: Batching write operations reduces the number of individual disk I/O operations, allowing the system to handle a higher volume of transactions.

- Scalability: Write-back caching can contribute to the scalability of the system. By reducing the load on the database, the system can handle more concurrent users and transactions.

Potential Challenges and Considerations:

While write-back caching offers significant benefits, it also introduces the risk of data loss if the system crashes before the data in the cache is written to disk. To mitigate this, the system can employ:

- Regular Flushing: Configuring the cache to flush data to disk frequently, minimizing the potential for data loss.

- Write-Ahead Logging (WAL): Recording each write in a durable, append-only log before it is applied to the cache and acknowledged, so that changes not yet flushed can be replayed after a failure.

- Redundancy: Implementing redundant systems to provide backup and failover capabilities.
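The write-ahead logging mitigation can be sketched as follows. This is a minimal illustration under stated assumptions: `WalCache` is a hypothetical class, the log is a JSON-lines file in a temporary directory, and a real system would also truncate the log after each successful flush to the database.

```python
import json
import os
import tempfile


class WalCache:
    """Write-back cache that logs every write durably before applying it."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.cache = {}

    def write(self, key, value):
        # 1. Append the change to the log and force it to disk.
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
            log.flush()
            os.fsync(log.fileno())
        # 2. Only then apply it to the in-memory cache.
        self.cache[key] = value

    @classmethod
    def recover(cls, log_path):
        """Rebuild the cache contents by replaying the log in order."""
        recovered = cls(log_path)
        if os.path.exists(log_path):
            with open(log_path, encoding="utf-8") as log:
                for line in log:
                    record = json.loads(line)
                    recovered.cache[record["key"]] = record["value"]
        return recovered


log_path = os.path.join(tempfile.mkdtemp(), "cart.wal")
cart = WalCache(log_path)
cart.write("cart:42", ["book"])
cart.write("cart:42", ["book", "pen"])

del cart  # simulate a crash: the in-memory cache is gone

restored = WalCache.recover(log_path)
print(restored.cache["cart:42"])  # ['book', 'pen']
```

Sequential appends to a log are generally much cheaper than random updates to the main data files, which is why this mitigation keeps most of the write-back performance benefit.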

Advanced Topics: Optimizations

Optimizing caching strategies is crucial for maximizing performance and ensuring data integrity. Various techniques can be employed to enhance both write-through and write-back caching, often tailored to specific application requirements and system architectures. Understanding these optimization strategies allows developers to make informed decisions that lead to more efficient and reliable caching solutions.

Optimizing Write-Through Caching

Write-through caching, while simpler in its implementation, can be optimized to mitigate its inherent performance overhead. These optimizations primarily focus on minimizing the latency associated with writing to both the cache and the backing store.

- Asynchronous Writes: Implementing asynchronous writes allows the cache to acknowledge the write operation to the client immediately, while the write to the backing store happens in the background. This significantly reduces the perceived latency for the client, but it gives up the strict write-through guarantee: until the background write completes, the backing store is stale, so the scheme behaves like write-back (often called write-behind). It therefore requires careful handling of failures in the background write operations. Consider using a durable message queue like Kafka or RabbitMQ to manage asynchronous writes reliably, so that the data is eventually persisted even if the backing store is temporarily unavailable.

- Batching Writes: Instead of writing each individual update to the backing store immediately, batching multiple write operations into a single write can reduce the number of I/O operations. This is particularly effective when dealing with high volumes of small writes. For example, consider an e-commerce platform where users frequently update their shopping carts. Batching these updates, instead of writing each item addition or removal immediately, can substantially reduce the load on the database. Like asynchronous writes, batching delays persistence, so updates still waiting in a batch can be lost if the cache fails.

- Cache Line Optimization: Aligning data within the cache lines can improve the efficiency of data retrieval and modification. This involves organizing data in a way that minimizes the amount of data that needs to be read or written from the backing store. For example, if frequently accessed data is stored in contiguous memory locations, the cache can fetch an entire cache line containing this data, improving performance.

- Write Combining: Write combining is a hardware-level optimization where multiple write operations to the same memory region are combined into a single write operation. This is typically handled by the CPU’s write buffer. It is most effective for small, frequent writes to adjacent addresses.

- Optimized Backing Store: Selecting an appropriate backing store that can handle the write load efficiently is crucial. This may involve choosing a database with high write throughput or optimizing the database schema and indexing. For example, using SSDs instead of HDDs for the backing store can dramatically improve write performance.
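The batching idea from the list above can be sketched in software. This is a hypothetical example: `BatchingWriter` is an illustrative name, the backing store is a dictionary, and repeated writes to the same key are coalesced so only the latest value is sent. Updates held in the buffer are not yet durable, which is the trade-off this optimization makes.

```python
class BatchingWriter:
    """Buffers writes and sends them to the store in batches."""

    def __init__(self, store, batch_size):
        self.store = store            # assumption: a dict stands in for the database
        self.batch_size = batch_size  # distinct keys to collect before flushing
        self.pending = {}             # key -> latest value; repeated keys coalesce
        self.store_writes = 0         # number of batched round trips made

    def write(self, key, value):
        self.pending[key] = value
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self):
        if self.pending:
            self.store.update(self.pending)  # one batched write
            self.store_writes += 1
            self.pending.clear()


db = {}
writer = BatchingWriter(db, batch_size=3)

# Five cart updates touching three distinct carts.
writer.write("cart:1", 1)
writer.write("cart:1", 2)
writer.write("cart:2", 1)
writer.write("cart:1", 3)
writer.write("cart:3", 1)

print(writer.store_writes)  # 1
print(db["cart:1"])         # 3
```

Five logical updates reach the store as a single batched write, and the intermediate values of `cart:1` never touch the database.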

Optimizing Write-Back Caching

Write-back caching offers the potential for higher performance but introduces complexities related to data consistency and potential data loss. Optimization strategies focus on reducing the risk of data loss and improving the efficiency of flushing data to the backing store.

- Efficient Flush Policies: Determining the optimal flush policy is key to write-back caching performance. This involves deciding when and how often to write data from the cache to the backing store. This can be based on various factors, including time intervals, cache usage, or specific events. A good example is a system that flushes dirty cache blocks every 5 seconds, or when the cache reaches a certain capacity threshold.

- Write Buffer Management: Effective management of the write buffer is critical to prevent data loss. This involves strategies such as implementing a write buffer that is large enough to handle bursts of write operations, and using mechanisms to protect the data in the write buffer from system failures, such as using mirrored write buffers or persistent write buffers.

- Cache Coherency Mechanisms: Implementing robust cache coherency protocols ensures data consistency across multiple caches and the backing store. This can involve using protocols like MESI (Modified, Exclusive, Shared, Invalid) or other cache-coherency algorithms to maintain data integrity.

- Prefetching: Anticipating future data needs and proactively loading data into the cache can improve performance. This involves analyzing access patterns and preloading data that is likely to be accessed soon.

- Staging Data: Staging data, where data is initially written to a temporary storage location before being written to the backing store, can help to reduce the impact of write operations on the primary storage. For example, a system could write data to a fast, temporary storage like an in-memory buffer before flushing it to the slower, persistent storage.
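A flush policy that combines a time interval with a capacity threshold, as in the 5-second example above, might look like the sketch below. The class name, the `max_dirty` and `flush_interval` parameters, and the injectable `clock` are all assumptions made for illustration. The policy is only evaluated on writes here; a real system would also run it from a background timer.

```python
import time


class FlushingWriteBackCache:
    """Write-back cache that flushes on a dirty-count or age threshold."""

    def __init__(self, store, max_dirty=100, flush_interval=5.0,
                 clock=time.monotonic):
        self.store = store
        self.max_dirty = max_dirty            # flush when this many keys are dirty
        self.flush_interval = flush_interval  # or when this many seconds have passed
        self.clock = clock                    # injectable for testing
        self.cache = {}
        self.dirty = set()
        self.last_flush = clock()

    def write(self, key, value):
        self.cache[key] = value
        self.dirty.add(key)
        self.maybe_flush()

    def maybe_flush(self):
        too_many = len(self.dirty) >= self.max_dirty
        too_old = self.clock() - self.last_flush >= self.flush_interval
        if self.dirty and (too_many or too_old):
            self.flush()

    def flush(self):
        for key in self.dirty:
            self.store[key] = self.cache[key]
        self.dirty.clear()
        self.last_flush = self.clock()


now = [0.0]  # fake clock so the example is deterministic
store = {}
cache = FlushingWriteBackCache(store, max_dirty=3, flush_interval=5.0,
                               clock=lambda: now[0])

cache.write("a", 1)
cache.write("b", 2)
print(sorted(store))  # []

cache.write("c", 3)   # third dirty key reaches the capacity threshold
print(sorted(store))  # ['a', 'b', 'c']

now[0] = 6.0          # more than 5 seconds since the last flush
cache.write("d", 4)
print(sorted(store))  # ['a', 'b', 'c', 'd']
```

Lowering either threshold shrinks the window of unflushed data at the cost of more frequent writes to the backing store.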

Combining Write-Through and Write-Back Methods

A hybrid approach, combining write-through and write-back caching, can provide a balance between performance and data consistency. This involves selectively applying write-through or write-back strategies based on data characteristics and application needs.

- Selective Write Policies: Applying write-through for critical data that requires immediate consistency, and write-back for less critical data where some data loss is acceptable. For example, in a financial application, account balance updates might use write-through to ensure immediate consistency, while user profile updates might use write-back.

- Tiered Caching: Implementing a tiered caching architecture where multiple cache layers are used, with different write policies applied to each layer. For instance, a system might have a write-back cache as the primary cache, and a write-through cache as a secondary cache.

- Dynamic Policy Switching: The caching system can dynamically switch between write-through and write-back policies based on factors such as system load, data access patterns, or the criticality of the data.

- Data Segmentation: Segmenting data into different categories, and applying different caching strategies to each segment. For example, write-heavy data that can tolerate brief staleness might use write-back, while read-heavy data that changes rarely might use write-through, since its few writes add little overhead.

- Adaptive Caching: Implementing an adaptive caching strategy that automatically adjusts the write policy based on real-time performance metrics and system conditions. This involves monitoring cache hit rates, write latency, and data consistency requirements, and dynamically adjusting the caching strategy to optimize performance.
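A selective write policy can be sketched by choosing the policy per key. In this hypothetical example, `HybridCache` treats keys with a configured prefix as critical and writes them through, while everything else is written back on `flush()`. The prefix convention and the dictionary backing store are assumptions made for illustration.

```python
class HybridCache:
    """Write-through for critical key prefixes, write-back for the rest."""

    def __init__(self, store, write_through_prefixes):
        self.store = store
        self.write_through_prefixes = tuple(write_through_prefixes)
        self.cache = {}
        self.dirty = set()

    def write(self, key, value):
        self.cache[key] = value
        if key.startswith(self.write_through_prefixes):
            self.store[key] = value  # critical data: persist immediately
            self.dirty.discard(key)
        else:
            self.dirty.add(key)      # non-critical data: persist on flush()

    def flush(self):
        for key in self.dirty:
            self.store[key] = self.cache[key]
        self.dirty.clear()


db = {}
cache = HybridCache(db, write_through_prefixes=["balance:"])

cache.write("balance:alice", 100)
cache.write("profile:alice", {"theme": "dark"})
print(db)  # {'balance:alice': 100}

cache.flush()
print(sorted(db))  # ['balance:alice', 'profile:alice']
```

A dynamic or adaptive variant would change the prefix set, or the decision in `write`, at runtime based on load or latency metrics.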

Final Wrap-Up

In conclusion, the selection between write-through and write-back caching hinges on a balance between speed and data integrity. Write-through guarantees immediate data consistency, suitable for applications where data accuracy is paramount. Write-back prioritizes performance by deferring writes, ideal for scenarios where speed is critical and the occasional loss of recent writes is tolerable. By grasping the strengths and weaknesses of each approach, developers can make informed decisions and tune their systems for both performance and reliability.

Key Questions Answered

What is the primary advantage of write-through caching?

The primary advantage of write-through caching is its guaranteed data consistency. Every write operation updates both the cache and the main storage simultaneously, minimizing the risk of data loss.

What is the primary advantage of write-back caching?

The primary advantage of write-back caching is improved performance. By delaying writes to main storage, write-back caching can reduce latency and increase the speed of write operations.

How does write-through caching affect read performance?

Write-through caching typically has a minimal impact on read performance, as reads primarily retrieve data from the cache. However, under heavy write load, the synchronous writes compete with cache-miss reads for access to main storage, which can slow those reads.

What happens to data in write-back caching if there is a system failure?

If a system failure occurs in write-back caching before the data in the cache is written to main storage, the data in the cache may be lost. This is a key consideration when choosing between write-through and write-back.

Which caching method is better for a database system?

The best method depends on the database’s specific needs. Write-back can provide better performance, but write-through ensures greater data consistency. Many databases use a combination of both methods or other techniques to optimize performance and reliability.