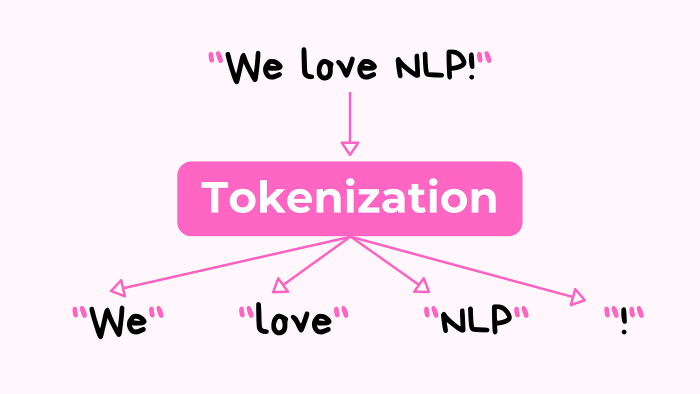

Tokenization in data security is a powerful technique, transforming sensitive data into meaningless substitutes, known as tokens. This process protects confidential information without compromising its usability. Imagine a world where your credit card number is replaced with a random string of characters – that’s tokenization in action.

This approach has evolved significantly over time, becoming a cornerstone of modern data protection strategies. Tokenization provides a robust shield against data breaches, simplifies compliance with stringent regulations, and fosters trust in an increasingly digital landscape. We will explore how tokenization works, its benefits, and its critical role in safeguarding valuable information across various industries.

Introduction to Tokenization in Data Security

Tokenization is a crucial data security process that replaces sensitive data with unique, non-sensitive values called “tokens.” These tokens have no intrinsic meaning and cannot be used to derive the original data. This approach significantly reduces the risk of data breaches by minimizing the exposure of sensitive information. Tokenization is widely used in various industries to protect confidential data like credit card numbers, social security numbers, and other personally identifiable information (PII).

Core Concept of Tokenization and Data Protection

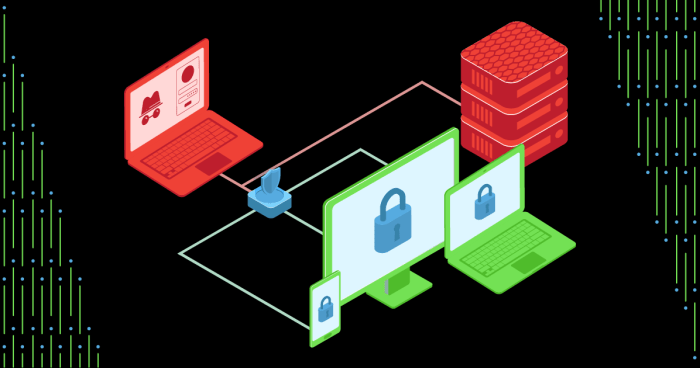

Tokenization works by substituting sensitive data with a token, which is essentially a placeholder. The original data is stored securely in a separate vault or database, and the token is used in its place for everyday operations. This means that if a system containing tokens is compromised, the attacker only gains access to the tokens, not the actual sensitive data.

The tokens are useless without access to the secure vault. The process is reversible, allowing the original data to be retrieved when needed, but only by authorized systems and users.

For example, consider a credit card number. Instead of storing the actual 16-digit number, a system would store a token, such as a random alphanumeric string like “TKN-12345”. The original credit card number would be securely stored in a token vault, and only authorized systems would be able to retrieve it by presenting the correct token.
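To make the idea concrete, here is a minimal Python sketch of a token vault. It assumes an in-memory dictionary stands in for the secure, encrypted store a real vault would use. The class name `TokenVault` and the `TKN-` prefix are illustrative only, and the sketch omits the access controls that would restrict who may call `detokenize`.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault; a real vault is a hardened, encrypted service."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # The token is random, so it carries no information about `value`.
        token = "TKN-" + secrets.token_hex(8)
        while token in self._token_to_value:  # regenerate on the (unlikely) collision
            token = "TKN-" + secrets.token_hex(8)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # The original value can be recovered only through the vault's mapping.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")
print(token)                    # random each run: "TKN-" plus 16 hex digits
print(vault.detokenize(token))  # 4111111111111111
```

Anyone holding only `token` learns nothing about the card number; recovering it requires the vault object itself.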

Brief History of Tokenization in Data Security

The evolution of tokenization is closely tied to the increasing need for robust data security measures in response to rising cyber threats.

- Early 2000s: The concept of tokenization emerged as a way to address the vulnerabilities of storing sensitive data directly. The payment card industry (PCI) was a key driver for the early adoption of tokenization.

- Mid-2000s: The rise of e-commerce and online transactions further accelerated the need for secure payment processing. Tokenization began to be implemented by payment gateways and processors to protect credit card information.

- Late 2000s – Early 2010s: Tokenization technologies matured, and standardization efforts began, leading to more secure and efficient implementations. The Payment Card Industry Data Security Standard (PCI DSS) played a significant role in driving tokenization adoption.

- Present: Tokenization is now a widely accepted and essential security practice across various industries. It continues to evolve, with advancements in encryption, key management, and cloud-based tokenization services.

Tokenization’s history is a testament to the continuous adaptation of data security practices in response to evolving threats and technological advancements. The development of tokenization reflects the ongoing effort to protect sensitive information in an increasingly digital world.

Tokenization Analogy for a Non-Technical Audience

To understand tokenization, imagine you’re checking into a hotel.

- Instead of handing your actual credit card (the sensitive data) to staff throughout your stay, you receive a token from the clerk at check-in: a key card.

- The key card (the token) allows you to access your room, but it doesn’t reveal your credit card number.

- The hotel stores your credit card information separately and securely, linked to the key card.

- If someone steals your key card, they can’t use it to access your credit card details. They only have access to the room (the transaction).

In this analogy:

Your credit card is the sensitive data, the key card is the token, and the hotel’s secure system is the token vault.

This illustrates how tokenization protects sensitive information by replacing it with a safe, non-sensitive substitute.

How Tokenization Works

Tokenization is a data security technique that replaces sensitive data with unique values called tokens, which are usually randomly generated. Because a random token has no mathematical relationship to the data it replaces, it cannot be used to deduce the original value; only the token vault can map it back. This allows organizations to handle sensitive information, such as credit card numbers, without storing the original data in its raw form across their systems.

Token Generation: Creating Unique Identifiers

The creation of tokens is a critical aspect of tokenization. This process involves several key steps to ensure the uniqueness and security of each token. The goal is to create a value that is distinct, non-reversible, and meaningless to anyone without authorized access to de-tokenize it.

- Generation Process: When sensitive data needs to be tokenized, the system generates a unique token. This token is typically a string of characters or numbers. The generation process relies on algorithms and a secure tokenization system.

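- Uniqueness: Each token must map to exactly one piece of original data; if two different values shared a token, records would be confused or the wrong data returned. This is usually achieved with a cryptographically secure random number generator plus a check that the new token has not already been issued, or with a deterministic algorithm that always maps a given input to the same token and never maps two different inputs to the same token.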

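- Irreversibility: It must be infeasible to derive the original sensitive data from the token alone. Randomly generated tokens achieve this because they have no mathematical relationship to the original value. Tokens derived with cryptographic techniques, such as encryption or keyed hashing, are only as strong as the secrecy of their keys; an unkeyed hash of low-entropy data such as a card number can be reversed by trying every possible input.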

- Format Preservation: The token can be designed to preserve the format of the original data. For example, a credit card number token might maintain the same length and format as a real credit card number, which can be useful for certain applications, while still protecting the underlying data.
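The uniqueness and irreversibility rules can be sketched in Python as follows. This assumes the set `issued` stands in for the tokens a vault has already handed out; the alphabet and 16-character length are arbitrary choices for illustration. Uniqueness comes from the collision check, and irreversibility from drawing every character from the operating system's secure random source via the standard `secrets` module, so the token encodes nothing about the input.

```python
import secrets
import string

# Arbitrary illustrative alphabet: uppercase letters and digits.
ALPHABET = string.ascii_uppercase + string.digits


def generate_token(issued: set[str], length: int = 16) -> str:
    """Return a random token that has not been issued before (hypothetical helper)."""
    while True:
        # secrets draws from a cryptographically secure source, unlike `random`.
        token = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if token not in issued:  # uniqueness: never reuse an existing token
            issued.add(token)
            return token


issued: set[str] = set()
tokens = [generate_token(issued) for _ in range(1000)]
print(len(set(tokens)))  # 1000
```

Format preservation is a separate concern; a token shaped like a card number needs a different generator, as discussed under format-preserving tokenization.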

The Role of a Token Vault

The token vault is the central repository where the sensitive data and its corresponding tokens are securely stored. It is a critical component of the tokenization process. This vault is designed to be highly secure, and its management is crucial for the overall security of the system.

- Secure Storage: The primary function of the token vault is to securely store the original sensitive data and its associated tokens. This data is typically encrypted at rest and protected by robust access controls.

- Token Management: The vault manages the tokens, including their creation, storage, and retrieval. It keeps track of which token corresponds to which piece of sensitive data.

- Access Control: Access to the token vault is strictly controlled. Only authorized users and systems have access to the sensitive data. This access is typically based on the principle of least privilege, where users are granted only the minimum necessary access.

- Monitoring and Auditing: The token vault is continuously monitored for any suspicious activity. Audit logs track all access attempts and changes made to the data within the vault.
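The access-control and auditing duties above might look like this in a minimal Python sketch. The `AuditedVault` class, the caller names, and the log fields are hypothetical. A production vault would also encrypt stored values at rest and write its audit trail to tamper-resistant storage, both of which this sketch leaves out.

```python
import secrets
from datetime import datetime, timezone


class AuditedVault:
    """Hypothetical vault with an allow-list for detokenization and an audit trail."""

    def __init__(self, detokenize_allowed: set[str]) -> None:
        self._data: dict[str, str] = {}
        # Least privilege: only callers named here may see original values.
        self._detokenize_allowed = detokenize_allowed
        self.audit_log: list[dict[str, str]] = []

    def _record(self, caller: str, action: str, outcome: str) -> None:
        # Every attempt is logged, whether it succeeds or not.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "caller": caller,
            "action": action,
            "outcome": outcome,
        })

    def tokenize(self, caller: str, value: str) -> str:
        token = secrets.token_urlsafe(12)
        while token in self._data:
            token = secrets.token_urlsafe(12)
        self._data[token] = value
        self._record(caller, "tokenize", "ok")
        return token

    def detokenize(self, caller: str, token: str) -> str:
        if caller not in self._detokenize_allowed:
            self._record(caller, "detokenize", "denied")
            raise PermissionError(f"{caller} may not detokenize")
        if token not in self._data:
            self._record(caller, "detokenize", "unknown token")
            raise KeyError(token)
        self._record(caller, "detokenize", "ok")
        return self._data[token]


vault = AuditedVault(detokenize_allowed={"payment-service"})
t = vault.tokenize("checkout-web", "4111111111111111")
print(vault.detokenize("payment-service", t))  # 4111111111111111
try:
    vault.detokenize("analytics", t)
except PermissionError as exc:
    print(exc)  # analytics may not detokenize
for entry in vault.audit_log:
    print(entry["caller"], entry["action"], entry["outcome"])
```

The final loop prints one line per attempt, including the denied request from `analytics`, which is the kind of evidence monitoring and audits rely on.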

Step-by-Step Procedure for Tokenizing a Credit Card Number

Tokenizing a credit card number is a practical example of how the tokenization process works. This process typically involves several steps to ensure the security and integrity of the data.

- Data Input: A credit card number is provided as input to the tokenization system. This input might come from a customer during an online transaction or from a system processing payment information.

- Token Generation: The tokenization system generates a unique token to replace the credit card number, typically using a cryptographically secure random number generator.

- Data Mapping: The system creates a mapping between the original credit card number and the newly generated token. This mapping is securely stored within the token vault.

- Data Replacement: The original credit card number is replaced with the token in the application or system. From this point forward, the token is used in place of the credit card number for all subsequent processes, such as storage, processing, and transmission.

- Secure Storage: The credit card number and its associated token are stored in the token vault. The credit card number is stored securely, typically encrypted, and access to it is tightly controlled.

- Data Usage: When the credit card number is needed (for example, to authorize a payment), an authorized system presents the token to the token vault and receives the original number. It uses the number for that operation only and then discards it rather than storing it.

For example, consider a customer making an online purchase. Instead of storing the actual credit card number on the e-commerce website, the system tokenizes the number. The token, a randomly generated string, is then used for all subsequent processing, such as submitting the payment to the payment gateway. The original credit card number is securely stored in the token vault, and only authorized systems and personnel can access it. This method significantly reduces the risk of data breaches, as the sensitive credit card data is never stored within the e-commerce system.
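The six steps can be traced in a short Python sketch. It assumes a module-level dictionary `VAULT` stands in for the secured token vault and that `charge` is a hypothetical placeholder for the call that would send the card number to a payment network; the `tok_` prefix and the `Order` fields are illustrative.

```python
import secrets
from dataclasses import dataclass

# Hypothetical stand-in for the secured, encrypted token vault.
VAULT: dict[str, str] = {}


@dataclass
class Order:
    order_id: str
    amount_cents: int
    card: str  # raw card number until step 4, the token afterwards


def tokenize(card_number: str) -> str:
    # Step 2: generate a random token that is not already in use.
    token = "tok_" + secrets.token_hex(8)
    while token in VAULT:
        token = "tok_" + secrets.token_hex(8)
    # Steps 3 and 5: the token-to-card mapping lives only in the vault.
    VAULT[token] = card_number
    return token


def charge(token: str, amount_cents: int) -> str:
    # Step 6: look up the card number only for the duration of this call.
    card_number = VAULT[token]
    # A real system would send card_number to the payment network here;
    # it is never written back to the order.
    return f"charged {amount_cents} cents to card ending {card_number[-4:]}"


# Step 1: data input, for example from a checkout form.
order = Order("A-1001", 2599, "4111111111111111")
# Step 4: data replacement; from here on the application holds only the token.
order.card = tokenize(order.card)
print(order.card.startswith("tok_"))           # True
print(charge(order.card, order.amount_cents))  # charged 2599 cents to card ending 1111
```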

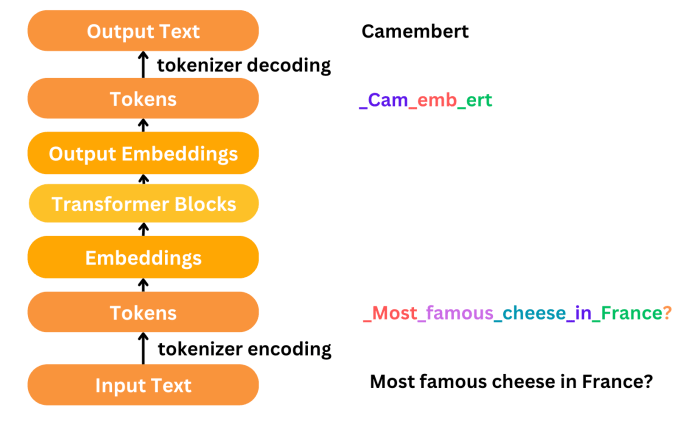

Tokenization vs. Encryption

Both tokenization and encryption are crucial data security techniques, designed to protect sensitive information. While they share the common goal of safeguarding data, they employ different methodologies and offer distinct advantages and disadvantages. Understanding these differences is essential for selecting the most appropriate security solution for a given scenario.

Comparing and Contrasting Tokenization and Encryption

Tokenization and encryption, although both aimed at data protection, differ significantly in their approach. Encryption transforms data into an unreadable form using a cryptographic key, and anyone holding the key can reverse it. Tokenization replaces sensitive data with a non-sensitive substitute, a token, which can keep the original data’s format and can only be mapped back through the token vault. The choice between them depends heavily on the specific security requirements and operational needs.

Strengths and Weaknesses of Each Method in Data Protection

Each method has strengths and weaknesses that make it suitable for different applications. Encryption offers robust security by making data unreadable without the decryption key. However, managing cryptographic keys can be complex, and a compromised key exposes all data encrypted with it. Tokenization, on the other hand, simplifies data handling because randomly generated tokens are not cryptographically linked to the original data, reducing the risk associated with key compromise. Its main trade-off is that the token vault becomes a high-value target that must itself be rigorously protected.

Differences Between Tokenization and Encryption

The following table summarizes the key differences between tokenization and encryption:

| Feature | Tokenization | Encryption | Description |
|---|---|---|---|
| Mechanism | Replaces sensitive data with a non-sensitive token. | Transforms data into an unreadable form using a cryptographic key. | Tokenization uses substitution, while encryption uses a mathematical algorithm. |
| Data Transformation | Can preserve the data format; a format-preserving token resembles the original data. | Usually alters the data format, making it unreadable without the decryption key (format-preserving encryption is an exception). | For example, a credit card number might be replaced by a token in the same 16-digit format, whereas conventional encryption produces an entirely different, unintelligible string of characters. |
| Security Approach | Security through substitution and isolation; the token reveals no sensitive information, and the original data exists only in the vault. | Security through cryptography; relies on the strength of the encryption algorithm and key management. | Tokenization removes the original data from everyday systems, while encryption makes it unreadable wherever it travels. |
| Key Management | Generally simpler; randomly generated tokens are not cryptographically linked to the original data, and any keys are confined to the vault. | Complex; requires secure key generation, storage, and rotation. Key compromise can expose all data encrypted with that key. | Confining keys to the vault simplifies key management compared to the procedures needed for encryption. A breach of a system that holds only tokens exposes only tokens, although the vault itself must still be protected because it holds the original data. |

Benefits of Tokenization

Tokenization offers a multitude of advantages for securing sensitive data, making it a cornerstone of modern data security strategies. By replacing sensitive data with non-sensitive tokens, organizations can significantly reduce their risk exposure and improve their overall security posture. This approach provides several tangible benefits, ranging from reduced compliance burdens to enhanced data breach response capabilities.

Reduced Scope of PCI DSS Compliance

Organizations that handle credit card data are subject to PCI DSS (Payment Card Industry Data Security Standard) requirements, which can be complex and expensive to implement and maintain. Tokenization significantly reduces the scope of PCI DSS compliance, primarily because sensitive data such as credit card numbers (primary account numbers, or PANs) is no longer stored or processed in most of the organization’s environment.

Tokenization minimizes these requirements by:

- Minimizing Data Storage: Since the actual credit card data is replaced with tokens, the organization does not need to store the sensitive information. This eliminates the need for many PCI DSS controls related to data storage, such as secure storage, encryption, and access controls.

- Reducing Data Transmission Requirements: Tokenization often reduces the need for sensitive data to be transmitted across networks. Instead of transmitting the actual card number, only the token is transmitted. This simplifies the requirements for secure transmission protocols and reduces the attack surface.

- Simplifying Processing Environments: Tokenization allows organizations to offload the processing of sensitive data to a token service provider (TSP). This reduces the scope of the organization’s cardholder data environment (CDE), simplifying compliance efforts and potentially reducing the costs associated with PCI DSS audits.

For example, a retail company using tokenization for online transactions can reduce its PCI DSS scope by not storing or directly processing credit card numbers on its servers. The payment gateway handles the actual card data, replacing it with a token that the retailer uses for subsequent transactions, refunds, and other payment-related activities. This shift significantly simplifies the retailer’s PCI DSS compliance efforts, reducing the need for expensive security controls and audits.

Improved Data Breach Response

Tokenization significantly improves an organization’s ability to respond to a data breach. Because the sensitive data is replaced with tokens, the impact of a breach is substantially reduced. Even if a malicious actor gains access to the organization’s systems, they only obtain the tokens, not the actual sensitive data.

The advantages of tokenization in data breach response include:

- Reduced Data Loss: The primary benefit is that the sensitive data, such as credit card numbers or personally identifiable information (PII), is not compromised. The tokens are useless without access to the token vault, which is typically managed by a trusted token service provider.

- Limited Impact of Compromise: If tokens are stolen, the attacker cannot directly use them to access the original data. The tokens are essentially meaningless without access to the token vault, which is a highly secure environment.

- Simplified Breach Notification: Data breach notification laws often require organizations to notify affected individuals when their sensitive data has been compromised. Because the actual sensitive data is not exposed, the notification requirements may be significantly reduced, depending on the specific regulations and the nature of the breach.

- Faster Recovery: With tokenization, the organization can quickly revoke the compromised tokens and issue new ones, minimizing the impact on operations. This swift action can prevent further damage and maintain customer trust.

For instance, consider a healthcare provider that uses tokenization to protect patient records. If a hacker breaches the provider’s database and steals the tokens representing patient data, the attacker cannot directly access the patients’ medical information; they would also need access to the token vault, which in this example is managed by a third-party token service provider. This limits the impact of the breach and simplifies the response process, because, depending on the applicable regulations, the provider may not need to notify patients of a direct exposure of their sensitive data.

This can significantly reduce the legal and reputational damage associated with a data breach.
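The revoke-and-reissue step described under Faster Recovery can be sketched in Python, assuming a simple in-memory vault. The class and method names are hypothetical, and the record identifier is made-up sample data. Revoking removes the stolen token’s mapping, so it can no longer be exchanged for the original value, while the original data stays in the vault under a fresh token.

```python
import secrets


class RevocableVault:
    """Hypothetical in-memory vault that supports revoking and reissuing tokens."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = secrets.token_urlsafe(16)
        while token in self._data:
            token = secrets.token_urlsafe(16)
        self._data[token] = value
        return token

    def detokenize(self, token: str) -> str:
        if token not in self._data:
            raise LookupError("unknown or revoked token")
        return self._data[token]

    def reissue(self, compromised: str) -> str:
        # Revoke: drop the old mapping so the stolen token resolves to nothing.
        value = self._data.pop(compromised)
        # Reissue: the same original value gets a brand-new random token.
        return self.tokenize(value)


vault = RevocableVault()
old = vault.tokenize("MRN-0042")  # sample patient identifier
new = vault.reissue(old)          # e.g. after the old token was stolen
try:
    vault.detokenize(old)
except LookupError as exc:
    print(exc)                    # unknown or revoked token
print(vault.detokenize(new))      # MRN-0042
```

Systems that stored the old token would then be updated to the new one; the attacker’s copy is now worthless.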

Types of Tokenization

Tokenization offers flexibility in how sensitive data is protected. The choice of tokenization type depends on the specific needs of the organization, the types of data being protected, and the systems where the data will be used. Understanding the various types of tokenization is crucial for implementing a robust data security strategy.

Format-Preserving Tokenization

Format-preserving tokenization (FPT) is designed to create tokens that maintain the same format as the original data. This is particularly useful when the data needs to fit within existing systems and applications without requiring significant modifications. For instance, a credit card number token would have the same length and character set as the original credit card number, and may even pass the same Luhn checksum validation.

- Definition: FPT generates tokens that conform to the same format as the original data, including length, data type, and character sets.

- Use Cases:

- Payment Processing: Maintaining the format of credit card numbers is critical for compatibility with payment gateways and processors.

- Legacy Systems: Systems that are not easily updated or modified can use FPT to protect sensitive data without requiring extensive code changes.

- Data Masking: FPT can be used to mask sensitive data in databases while preserving the format for testing or analytics purposes.

- Example: A 16-digit credit card number “1234-5678-9012-3456” could be tokenized to “9876-5432-1098-7654”, maintaining the same length and format.

- Advantages: Compatibility with existing systems, minimal disruption to operations.

- Disadvantages: Tokens can potentially reveal information about the original data if the format is predictable.
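A format-preserving token like the one in the example can be sketched in Python. This is a simplified, assumed approach: it draws random digits with the standard `secrets` module, keeps the original separators, and appends a Luhn check digit so the token passes the same checksum validation as a real card number. Some deployments instead make tokens deliberately fail the Luhn check so they can never be mistaken for real card numbers. A real system would also store the mapping in a vault and check for collisions, which this sketch omits.

```python
import secrets


def luhn_check_digit(payload: str) -> str:
    """Return the Luhn check digit to append to a string of digits."""
    total = 0
    # Walking right to left, every other digit starting with the rightmost
    # payload digit is doubled (the check digit will sit to its right).
    for i, ch in enumerate(reversed(payload)):
        d = int(ch)
        if i % 2 == 0:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return str((10 - total % 10) % 10)


def luhn_valid(number: str) -> bool:
    """Check a number (separators allowed) against the Luhn checksum."""
    digits = [c for c in number if c.isdigit()]
    return luhn_check_digit("".join(digits[:-1])) == digits[-1]


def format_preserving_token(card_number: str) -> str:
    """Random, Luhn-valid token with the same length and separators (hypothetical helper)."""
    digits = "".join(c for c in card_number if c.isdigit())
    while True:
        payload = "".join(secrets.choice("0123456789") for _ in range(len(digits) - 1))
        candidate = payload + luhn_check_digit(payload)
        if candidate != digits:  # never hand back the real number as its own token
            break
    # Put the random digits back into the original layout, keeping the dashes.
    fresh = iter(candidate)
    return "".join(next(fresh) if c.isdigit() else c for c in card_number)


token = format_preserving_token("1234-5678-9012-3456")
print(token)              # random each run, same "####-####-####-####" layout
print(luhn_valid(token))  # True
```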

Non-Format-Preserving Tokenization

Non-format-preserving tokenization (NFPT) generates tokens that do not necessarily adhere to the original data format. This provides more flexibility in the token design and can enhance security by making it more difficult for attackers to infer information about the original data.

- Definition: NFPT creates tokens that do not maintain the format of the original data. The tokens can be of varying lengths and types.

- Use Cases:

- Data Storage: Protecting sensitive data in databases where format compatibility is not a primary concern.

- Compliance: Meeting regulatory requirements for data security, such as PCI DSS.

- Data Anonymization: Creating tokens that are not easily reversible to protect individual privacy.

- Example: A 16-digit credit card number “1234-5678-9012-3456” could be tokenized to a completely different format, such as “ABC123XYZ789”.

- Advantages: Increased security due to the lack of format constraints, potentially easier to implement.

- Disadvantages: May require modifications to existing systems to accommodate the new token format.

Deterministic Tokenization

Deterministic tokenization always generates the same token for the same input data. This is useful for applications where data consistency across different systems is important.

- Definition: Deterministic tokenization always maps the same input data to the same token.

- Use Cases:

- Referential Integrity: Maintaining relationships between data across different databases or systems.

- Data Matching: Matching records across datasets while protecting sensitive information.

- Auditing: Tracking data changes and maintaining data consistency.

- Example: The name “John Smith” would always generate the same token, allowing for consistent matching across different records.

- Advantages: Consistent data representation, enables data matching.

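- Disadvantages: Identical inputs always produce identical tokens, which can reveal patterns such as how often a value occurs, and if the tokenization key or algorithm is compromised, all associated data can be at risk.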
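One way to implement deterministic tokenization, assumed here rather than taken from any particular product, is a keyed hash: HMAC-SHA256 from Python’s standard `hmac` and `hashlib` modules always produces the same output for the same key and input. The sketch keeps a dictionary as a stand-in vault so tokens can still be mapped back. It also illustrates the disadvantage above: anyone who obtains the key can tokenize guessed values and compare the results.

```python
import hashlib
import hmac
import secrets


class DeterministicTokenizer:
    """Hypothetical deterministic tokenizer built on HMAC-SHA256."""

    def __init__(self, key: bytes) -> None:
        self._key = key                   # must be protected like any secret key
        self._vault: dict[str, str] = {}  # stand-in vault for detokenization

    def tokenize(self, value: str) -> str:
        # Same key + same input -> same token, every time.
        token = hmac.new(self._key, value.encode("utf-8"), hashlib.sha256).hexdigest()
        self._vault[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self._vault[token]


tokenizer = DeterministicTokenizer(key=secrets.token_bytes(32))
a = tokenizer.tokenize("John Smith")
b = tokenizer.tokenize("John Smith")
print(a == b)                   # True: records can be matched on the token
print(tokenizer.detokenize(a))  # John Smith
```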

Non-Deterministic Tokenization

Non-deterministic tokenization generates a different token each time the same input data is tokenized. This enhances security and privacy by preventing anyone from linking records that share the same underlying value simply by comparing their tokens.

- Definition: Non-deterministic tokenization generates a different token for the same input data each time.

- Use Cases:

- Enhanced Security: Protecting data against attacks that might exploit predictable token generation.

- Data Privacy: Minimizing the risk of re-identification.

- Compliance: Meeting strict data privacy regulations.

- Example: The name “John Smith” would generate a different token each time it is tokenized.

- Advantages: Increased security, enhanced privacy.

- Disadvantages: Can complicate data matching and relationship maintenance.
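By contrast, a non-deterministic tokenizer can simply draw a fresh random token on every call and rely on the vault for the mapping. In this hypothetical Python sketch, tokenizing the same name twice yields two unrelated tokens that both resolve to the same value, which is exactly why matching records by token no longer works.

```python
import secrets


class RandomTokenizer:
    """Hypothetical non-deterministic tokenizer backed by an in-memory vault."""

    def __init__(self) -> None:
        self._vault: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # A new random token on every call, even for a value seen before.
        token = secrets.token_urlsafe(16)
        while token in self._vault:
            token = secrets.token_urlsafe(16)
        self._vault[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self._vault[token]


tokenizer = RandomTokenizer()
a = tokenizer.tokenize("John Smith")
b = tokenizer.tokenize("John Smith")
print(a == b)                                              # False
print(tokenizer.detokenize(a) == tokenizer.detokenize(b))  # True
```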

Tokenization with and without Encryption

Tokenization can be implemented with or without encryption, depending on the security requirements and the sensitivity of the data.

- Tokenization without Encryption: The token is generated without the use of encryption, typically as a random value linked to the original data only through the vault mapping. Format can still be preserved this way, for example by generating random digits of the original length. However, this approach alone may not be sufficient for high-security environments.

- Tokenization with Encryption: The tokenization process incorporates encryption to add an extra layer of security. This approach is more secure and is often used for highly sensitive data. Encryption can protect the tokens and the underlying data from unauthorized access. The use of encryption with tokenization enhances the overall security posture, providing robust protection against data breaches.

Implementing Tokenization

Implementing tokenization requires careful planning and execution to ensure the security and integrity of sensitive data. It involves integrating a tokenization system into existing infrastructure, selecting a suitable provider, and adhering to security best practices. This process transforms sensitive data into tokens, which can then be used in place of the original data for various operations, significantly reducing the risk of data breaches.

Implementing Tokenization in a Payment System

Implementing tokenization in a payment system streamlines the processing of transactions while enhancing security. The process typically involves several key steps, each crucial for protecting sensitive cardholder data.

- Integration with Payment Gateway: The payment system integrates with a tokenization provider and the existing payment gateway. When a customer enters their payment information, it is sent to the tokenization provider.

- Token Generation: The tokenization provider receives the sensitive data and generates a unique token. This token is a randomly generated string of characters that replaces the original data.

- Data Storage and Transmission: The token, rather than the actual payment information, is stored and transmitted within the payment system and across networks. This includes processing the transaction and sending confirmation.

- Transaction Processing: When a transaction needs to be processed, the token is sent to the payment gateway. The payment gateway, in turn, sends the token to the tokenization provider to retrieve the original payment information (typically the card number) to complete the transaction.

- Compliance and Auditing: The payment system must comply with all relevant regulations, such as PCI DSS, and undergo regular audits to ensure the tokenization system is secure and functioning correctly.

For example, consider an e-commerce website using tokenization. A customer enters their credit card details during checkout. Instead of storing the actual card number, the system sends it to the tokenization provider. The provider generates a token, like “tk_12345abcde,” which is then stored by the e-commerce platform. When the customer makes a purchase, the token is used to authorize the transaction.

The payment gateway sends the token to the tokenization provider to retrieve the actual card number, which is then used to process the payment. This ensures that the e-commerce platform never stores the sensitive card data.
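The division of labor in this e-commerce flow can be sketched in Python. Every class and method name here is hypothetical, the `tk_` prefix mirrors the example token above, and `authorize` returns a stub result where a real gateway would contact the card network. The point is structural: the storefront keeps only tokens, and only the gateway is allowed to exchange a token for the card number.

```python
import secrets


class TokenizationProvider:
    """Hypothetical provider that owns the token vault."""

    def __init__(self, detokenize_allowed: set[str]) -> None:
        self._vault: dict[str, str] = {}
        self._allowed = detokenize_allowed

    def tokenize(self, card_number: str) -> str:
        token = "tk_" + secrets.token_hex(5)
        while token in self._vault:
            token = "tk_" + secrets.token_hex(5)
        self._vault[token] = card_number
        return token

    def detokenize(self, caller: str, token: str) -> str:
        if caller not in self._allowed:
            raise PermissionError(f"{caller} may not detokenize")
        return self._vault[token]


class PaymentGateway:
    def __init__(self, provider: TokenizationProvider) -> None:
        self._provider = provider

    def authorize(self, token: str, amount_cents: int) -> dict[str, object]:
        card_number = self._provider.detokenize("gateway", token)
        # Stub: a real gateway would send card_number to the card network here.
        return {"approved": True, "amount_cents": amount_cents, "last4": card_number[-4:]}


class Storefront:
    def __init__(self, provider: TokenizationProvider, gateway: PaymentGateway) -> None:
        self._provider = provider
        self._gateway = gateway
        self.saved_cards: dict[str, str] = {}  # customer -> token, never the card number

    def save_card(self, customer: str, card_number: str) -> None:
        self.saved_cards[customer] = self._provider.tokenize(card_number)

    def checkout(self, customer: str, amount_cents: int) -> dict[str, object]:
        return self._gateway.authorize(self.saved_cards[customer], amount_cents)


provider = TokenizationProvider(detokenize_allowed={"gateway"})
shop = Storefront(provider, PaymentGateway(provider))
shop.save_card("alice", "4111111111111111")
print(shop.saved_cards["alice"].startswith("tk_"))  # True
print(shop.checkout("alice", 4999))  # {'approved': True, 'amount_cents': 4999, 'last4': '1111'}
```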

Choosing a Tokenization Provider

Selecting the right tokenization provider is a critical decision that significantly impacts the security and efficiency of a tokenization implementation. Several factors must be carefully considered to ensure the chosen provider meets the organization’s specific needs and security requirements.

- Security Certifications and Compliance: The provider should have relevant security certifications, such as PCI DSS compliance, to demonstrate adherence to industry best practices. This indicates that the provider’s systems and processes have been independently assessed and meet rigorous security standards.

- Tokenization Methodologies: Understand the tokenization methods offered, such as format-preserving tokenization or format-preserving encryption, and ensure they align with the organization’s requirements. Different methods offer varying levels of security and functionality.

- Scalability and Performance: The provider should be able to handle the organization’s current and future transaction volumes without impacting performance. The system should be able to scale up or down as needed.

- Integration Capabilities: The provider’s system should integrate seamlessly with the organization’s existing infrastructure, including payment gateways, CRM systems, and other relevant applications.

- Data Protection and Privacy: The provider should have robust data protection measures in place, including data encryption, access controls, and data loss prevention strategies, to protect sensitive information.

- Reporting and Analytics: The provider should offer comprehensive reporting and analytics capabilities to track token usage, monitor security events, and gain insights into transaction patterns.

- Cost and Pricing: Evaluate the provider’s pricing model and ensure it aligns with the organization’s budget and usage patterns. Consider factors such as transaction fees, setup costs, and ongoing maintenance expenses.

- Vendor Reputation and Support: Research the provider’s reputation and track record in the industry. Assess the level of customer support and technical assistance offered.

For instance, a company handling a large volume of online transactions might choose a provider with robust scalability and PCI DSS compliance. Conversely, a smaller organization might prioritize cost-effectiveness and ease of integration. Thorough due diligence is essential to ensure the provider aligns with the organization’s specific needs and risk profile.

Security Best Practices When Implementing Tokenization

Implementing tokenization is not a one-time process but an ongoing effort that requires adherence to security best practices to maintain the integrity and effectiveness of the system. These practices help to mitigate risks and ensure the long-term security of sensitive data.

- Secure Key Management: Implement robust key management practices, including secure key generation, storage, rotation, and access controls. This is critical because the security of the system depends on the keys used to protect the vault contents and, in cryptographic tokenization schemes, to generate the tokens themselves.

- Access Control and Authorization: Restrict access to the tokenization system and sensitive data based on the principle of least privilege. Only authorized personnel should have access to the systems and data required for their roles.

- Data Encryption: Implement encryption at rest and in transit to protect data throughout its lifecycle. This includes encrypting the original data held in the token vault, as well as the keys used to generate and manage the tokens.

- Monitoring and Auditing: Continuously monitor the tokenization system for suspicious activity and regularly audit the system to identify and address any vulnerabilities. This includes logging all system events and access attempts.

- Regular Security Assessments: Conduct regular security assessments, including vulnerability scans and penetration testing, to identify and remediate potential weaknesses in the tokenization system.

- Incident Response Plan: Develop and maintain a comprehensive incident response plan to address any security breaches or data compromises effectively. This plan should outline the steps to be taken in the event of a security incident.

- Employee Training and Awareness: Provide comprehensive training to employees on tokenization security best practices and data protection policies. This helps to ensure that employees understand their roles and responsibilities in maintaining the security of the system.

- Compliance with Regulations: Ensure that the tokenization implementation complies with all relevant regulations, such as PCI DSS, GDPR, and CCPA. This includes maintaining documentation and undergoing regular audits to demonstrate compliance.

For example, a financial institution using tokenization should implement strong key management practices, including regular key rotation, to protect against potential key compromises. They should also implement robust access controls to limit access to sensitive data and systems to authorized personnel only. Regular security assessments, including penetration testing, are essential to identify and address vulnerabilities in the tokenization system. These practices are essential to maintain the integrity of the tokenization system and to protect sensitive data from unauthorized access or disclosure.

Tokenization in Different Industries

Tokenization’s adaptability makes it a valuable security solution across various sectors. Its ability to replace sensitive data with non-sensitive tokens allows organizations to protect confidential information while maintaining operational efficiency. This section explores how tokenization is applied to safeguard sensitive data in healthcare, e-commerce, and the financial sector.

Tokenization in Healthcare

The healthcare industry handles a vast amount of sensitive patient data, making it a prime target for cyberattacks. Tokenization provides a robust method to protect this information and helps organizations meet regulations such as HIPAA (Health Insurance Portability and Accountability Act).

Tokenization in healthcare involves:

- Protecting Patient Records: Tokenization can be used to replace sensitive patient identifiers like names, medical record numbers, and social security numbers with tokens. This protects patient privacy while still allowing healthcare providers to access necessary information for treatment and administrative purposes. For instance, when a patient’s information is accessed for billing, the system uses the token instead of the actual patient details.

- Securing Payment Information: When patients make payments for services, tokenization protects their credit card information. Instead of storing the actual card details, the system stores a token, which is then used for processing payments. This reduces the risk of data breaches and helps healthcare providers comply with PCI DSS (Payment Card Industry Data Security Standard) requirements.

- Enabling Secure Data Sharing: Tokenization allows for secure data sharing between healthcare providers, research institutions, and insurance companies. Sensitive data can be tokenized before being shared, ensuring that only authorized parties with the necessary access rights can view the original data. This is particularly important in research projects involving patient data.
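
To illustrate the data-sharing case, the sketch below replaces identifier fields with keyed-hash (HMAC-SHA256) tokens before a record leaves the provider. It assumes a separate secret key per recipient. The same patient always maps to the same token within one recipient's dataset, so visits can still be linked. However, a research institution and an insurer receive different tokens and cannot join their datasets on them. The field names and the `pseudonymize_record` function are hypothetical. Because these tokens are one-way, a provider that needs to re-identify patients would also keep a token-to-identifier mapping in a secured vault.

```python
import hashlib
import hmac
import secrets

# Fields treated as direct identifiers in this sketch (an assumption, not a regulatory list).
IDENTIFIER_FIELDS = {"name", "mrn", "ssn"}


def pseudonymize_record(record: dict, recipient_key: bytes) -> dict:
    """Replace identifier fields with keyed-hash tokens; pass other fields through."""
    shared = {}
    for field, value in record.items():
        if field in IDENTIFIER_FIELDS:
            # Prefix the field name so equal values in different fields get different tokens.
            mac = hmac.new(recipient_key, f"{field}:{value}".encode(), hashlib.sha256)
            shared[field] = "tok_" + mac.hexdigest()[:32]
        else:
            shared[field] = value
    return shared


# One secret key per recipient, held by the data owner and never shared.
research_key = secrets.token_bytes(32)
insurer_key = secrets.token_bytes(32)

visit = {"name": "Jane Doe", "mrn": "MRN-0042", "ssn": "123-45-6789", "diagnosis": "J45.909"}
for_research = pseudonymize_record(visit, research_key)
for_insurer = pseudonymize_record(visit, insurer_key)
```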

Tokenization in E-commerce

E-commerce businesses handle a significant volume of customer payment information, making them a frequent target for cyberattacks. Tokenization plays a critical role in securing this sensitive data and building customer trust. Tokenization in e-commerce involves:

- Securing Payment Card Data: E-commerce platforms use tokenization to replace credit card numbers with tokens during transactions. When a customer enters their credit card details, the system exchanges them for a token, which is then used for processing the payment. The merchant does not store the actual credit card number, reducing the risk of data breaches.

- Enhancing PCI DSS Compliance: Tokenization helps e-commerce businesses comply with PCI DSS requirements. By not storing sensitive cardholder data, the scope of PCI DSS compliance is significantly reduced, simplifying the compliance process and lowering associated costs.

- Improving Customer Trust: Tokenization provides an added layer of security, which helps build customer trust. Customers are more likely to make purchases on platforms that demonstrate a commitment to protecting their financial information.
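
The card-for-token exchange described under Securing Payment Card Data can be sketched as a small in-memory vault. This is a minimal illustration. It assumes format-preserving tokens built from random digits with Python's `secrets` module, matching the card number's length and keeping the last four digits so receipts can display them. `TokenVault` is a hypothetical class. A production vault would be an encrypted, access-controlled datastore, usually operated by the payment processor rather than the merchant.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault mapping tokens to card numbers (demo only)."""

    def __init__(self) -> None:
        self._token_to_pan: dict[str, str] = {}
        self._pan_to_token: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        if not (pan.isdigit() and 13 <= len(pan) <= 19):
            raise ValueError("expected a 13-19 digit card number")
        if pan in self._pan_to_token:  # reuse the existing token for a returning card
            return self._pan_to_token[pan]
        while True:
            # Random digits replace everything except the last four.
            prefix = "".join(secrets.choice("0123456789") for _ in range(len(pan) - 4))
            token = prefix + pan[-4:]
            # Reject the (unlikely) cases of reproducing the PAN or colliding with a token.
            if token != pan and token not in self._token_to_pan:
                break
        self._token_to_pan[token] = pan
        self._pan_to_token[pan] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the processor's authorized systems would be allowed to call this.
        return self._token_to_pan[token]
```

The merchant keeps only the token, and detokenization happens inside the processor's environment. Some token services also make tokens fail the Luhn check so they cannot be mistaken for real card numbers; that refinement is omitted here.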

Tokenization in the Financial Sector

The financial sector deals with highly sensitive financial data, making it a critical area for tokenization implementation. Banks, credit unions, and other financial institutions leverage tokenization to secure customer information and prevent fraud. Tokenization in the financial sector involves:

- Protecting Cardholder Data: Financial institutions use tokenization to secure cardholder data during transactions, storage, and processing. This includes replacing primary account numbers (PANs) with tokens.

- Securing Mobile Payments: Tokenization is a core component of mobile payment systems like Apple Pay and Google Pay. When a customer adds their credit card to a mobile wallet, the card information is tokenized, and the token is used for transactions. The actual card number is never shared with the merchant.

- Reducing Fraud: Tokenization helps reduce fraud by limiting the exposure of sensitive data. Even if a token is stolen, it does not reveal the card number, and recovering the card number requires access to the token vault.

- Enabling Secure Lending and Investment Platforms: Tokenization can be applied to secure sensitive data related to loans, investments, and other financial products. This helps protect customer information and reduces the risk of data breaches.
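
A stolen payment token is of limited use partly because token services can bind each token to the context it was issued for, such as a single merchant or device. The EMVCo payment tokenisation framework calls these domain restriction controls. The sketch below models the idea with a hypothetical `DomainRestrictedVault` that records the merchant at issuance and refuses the token anywhere else. The class, method names, and merchant IDs are illustrative assumptions, not any card network's API.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenRecord:
    pan: str
    merchant_id: str  # the only merchant allowed to use this token


class DomainRestrictedVault:
    """Hypothetical vault that binds each token to the merchant it was issued for."""

    def __init__(self) -> None:
        self._records: dict[str, TokenRecord] = {}

    def issue(self, pan: str, merchant_id: str) -> str:
        token = secrets.token_hex(16)  # 128 random bits, unrelated to the PAN
        self._records[token] = TokenRecord(pan, merchant_id)
        return token

    def authorize(self, token: str, merchant_id: str) -> str:
        """Return the PAN so the network can route the payment, or raise."""
        record = self._records.get(token)
        if record is None:
            raise PermissionError("unknown token")
        if record.merchant_id != merchant_id:
            # A token stolen from one merchant is rejected everywhere else.
            raise PermissionError("token not valid for this merchant")
        return record.pan
```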

Tokenization Security Risks and Mitigation

Tokenization, while offering significant security advantages, introduces its own set of potential vulnerabilities. Understanding these risks and implementing robust mitigation strategies is crucial for maintaining the integrity and confidentiality of sensitive data within a tokenization system. This section explores the common security threats and the methods employed to safeguard tokenized data.

Potential Security Risks

Tokenization systems, like any security architecture, are susceptible to various threats. These risks can compromise the confidentiality, integrity, and availability of the data.

- Compromised Token Vault: The token vault, which stores the sensitive data and its corresponding tokens, is a primary target. If compromised, attackers gain access to the original data. This could happen through various means, including unauthorized access, malware, or insider threats.

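- Token Reversal Attacks: Tokens are designed so that the original data cannot be derived from the token itself, but weaknesses can undermine this. If tokens are generated predictably or derived directly from the data (for example, an unkeyed hash of a card number), attackers may be able to reverse them and reveal the original data. Sophisticated attacks might also exploit weaknesses in the tokenization algorithm or the vault’s security.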

- Data Breaches in Tokenization Ecosystem: Any component of the tokenization system, including the application interfaces, the tokenization server, and the network infrastructure, can be vulnerable to data breaches. These breaches can expose tokens, the mapping between tokens and data, or even the original data if the security measures are inadequate.

- Insider Threats: Malicious or negligent insiders can pose a significant risk. Individuals with access to the token vault or the tokenization process can potentially misuse their privileges to access or manipulate sensitive data.

- Key Management Vulnerabilities: The security of the cryptographic keys used to encrypt the token vault and, in some schemes, to generate tokens is paramount. Weak key management practices, such as poor key generation, storage, or rotation, can create vulnerabilities that attackers can exploit.

- System Vulnerabilities: Software and hardware vulnerabilities in the tokenization system, such as bugs in the tokenization software or flaws in the underlying infrastructure, can be exploited by attackers. Regular patching and security updates are essential to mitigate these risks.

- Denial-of-Service (DoS) Attacks: Attackers can launch DoS attacks to disrupt the tokenization system’s availability, making it impossible for authorized users to access or process data. Because applications such as payment processing depend on the tokenization service, an outage can halt business operations.
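
Token reversal often succeeds when "tokens" are derived from the data itself, such as an unkeyed SHA-256 hash of a card number. Card numbers carry little entropy once an attacker knows the issuer prefix and the last four digits. The Luhn checksum also eliminates about nine in ten remaining candidates, so the hidden digits can simply be enumerated. The sketch below assumes such a hypothetical weak scheme (`weak_token`) and hides only four middle digits to keep the demonstration fast; the same enumeration over six hidden digits is still cheap. Random tokens kept in a vault, or keyed hashes whose key the attacker lacks, do not have this weakness.

```python
import hashlib
import itertools
from typing import Optional


def weak_token(pan: str) -> str:
    """An UNSAFE token scheme: an unkeyed hash of the card number."""
    return hashlib.sha256(pan.encode()).hexdigest()


def luhn_valid(pan: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(pan)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def reverse_weak_token(token: str, known_prefix: str, known_suffix: str,
                       hidden: int) -> Optional[str]:
    """Enumerate the hidden middle digits until the hash matches."""
    for digits in itertools.product("0123456789", repeat=hidden):
        candidate = known_prefix + "".join(digits) + known_suffix
        # The Luhn filter skips hashing for most candidates.
        if luhn_valid(candidate) and weak_token(candidate) == token:
            return candidate
    return None
```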

Mitigation Strategies

Effective mitigation strategies are essential to address the identified security risks and ensure the secure operation of a tokenization system. These strategies involve a combination of technical controls, administrative policies, and security best practices.

- Robust Access Controls: Implementing strong access controls is crucial to restrict access to the token vault and the tokenization system. This includes:

  - Role-Based Access Control (RBAC): Assigning users specific roles and permissions based on their job responsibilities.

  - Multi-Factor Authentication (MFA): Requiring users to provide multiple forms of authentication, such as a password and a one-time code, to verify their identity.

  - Principle of Least Privilege: Granting users only the minimum necessary access rights to perform their duties.

- Encryption: Encrypting the token vault and any data in transit provides an additional layer of protection: even if an attacker gains unauthorized access, the data remains unreadable without the keys. This includes:

  - Encryption at Rest: Encrypting the token vault using strong encryption algorithms, such as AES-256.

  - Encryption in Transit: Encrypting data transmitted between the tokenization system components using TLS (its predecessor, SSL, is deprecated and should not be used).

- Regular Auditing and Monitoring: Implementing regular audits and continuous monitoring is essential to detect and respond to security incidents. This includes:

  - Security Information and Event Management (SIEM): Employing a SIEM system to collect and analyze security logs from various sources, such as the tokenization server, the database, and the network.

  - Regular Penetration Testing: Conducting penetration tests to identify vulnerabilities in the tokenization system.

  - Vulnerability Scanning: Regularly scanning the tokenization system for known vulnerabilities.

- Key Management: Secure key management practices are critical for protecting the cryptographic keys used in tokenization. This includes:

  - Key Generation: Using strong, cryptographically secure key generation methods.

  - Key Storage: Securely storing keys in hardware security modules (HSMs) or other secure key management systems.

  - Key Rotation: Regularly rotating cryptographic keys to minimize the impact of key compromise.

- Data Masking and Anonymization: Implementing data masking and anonymization techniques can further reduce the risk of data breaches. These techniques involve replacing sensitive data with less sensitive alternatives.

  - Data Masking: Replacing sensitive data with masked values. For example, a credit card number can be displayed as XXXX XXXX XXXX 1234, revealing only the last four digits.

  - Data Anonymization: Removing or altering personally identifiable information (PII) to prevent the identification of individuals.

- Incident Response Plan: Developing and implementing a comprehensive incident response plan is crucial for responding to security incidents effectively. This plan should include:

  - Detection: Identifying security incidents promptly.

  - Containment: Limiting the damage caused by the incident.

  - Eradication: Removing the cause of the incident.

  - Recovery: Restoring the system to its normal operating state.

  - Post-Incident Analysis: Analyzing the incident to prevent future occurrences.
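
The masking and anonymization techniques listed above can be illustrated with two small functions. Masking shows only the last few card digits. The anonymization step drops direct identifiers and generalizes quasi-identifiers, turning an exact age into a ten-year band and keeping only the first three digits of a ZIP code. The field names and generalization rules are assumptions for illustration only. Rules like these do not by themselves guarantee anonymity; choosing safe ones requires analysis of the specific dataset.

```python
def mask_pan(pan: str, visible: int = 4, mask_char: str = "*") -> str:
    """Mask all but the last `visible` digits, ignoring spaces in the input."""
    digits = pan.replace(" ", "")
    return mask_char * (len(digits) - visible) + digits[-visible:]


def anonymize(record: dict) -> dict:
    """Build a new record: direct identifiers (e.g. name) are simply not copied over."""
    low = (record["age"] // 10) * 10
    return {
        "age_band": f"{low}-{low + 9}",        # exact age -> ten-year band
        "zip_prefix": record["zip"][:3],       # five-digit ZIP -> three-digit prefix
        "diagnosis": record["diagnosis"],      # the attribute being analyzed is kept
    }
```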

Flowchart: Tokenization System Security Measures

The following steps outline the security measures necessary to protect a tokenization system, in the order a request passes through them.

1. Data Input: Sensitive data enters the system.

2. Access Control: Is the user authenticated and authorized?

   - Yes: Proceed to step 3.

   - No: Deny access, log the attempt, and alert security.

3. Tokenization Process:

   - Data Encryption: Encrypt the sensitive data.

   - Token Generation: Generate a unique token for the encrypted data.

   - Token Vault Storage: Store the token and encrypted data mapping securely in the token vault.

4. Data Output: The token is provided to the requesting application.

5. Data Usage: The application uses the token instead of the original data.

6. Data Retrieval (Optional): If the original data is needed:

   - Token Authentication: Verify the token’s validity.

   - Access Control: Check user permissions.

   - Data Decryption: Decrypt the data using the appropriate key.

   - Data Access: Provide the decrypted data to the authorized user.

7. Monitoring and Auditing:

   - Security Monitoring: Continuously monitor the system for suspicious activity.

   - Regular Audits: Conduct regular security audits.

   - Logging: Maintain comprehensive logs of all system activities.

8. Incident Response:

   - Incident Detection: Detect and respond to security incidents.

   - Containment: Limit the damage caused by the incident.

   - Recovery: Restore the system to normal operation.
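
Several of these steps can be condensed into a short sketch: the access-control check (step 2), token generation and vault storage (step 3), authorized retrieval (step 6), and audit logging (step 7). To stay within Python's standard library, it makes several assumptions. The role table `PERMISSIONS`, the `Request` type, and the `TokenizationService` class are hypothetical, and MFA is reduced to a boolean flag. The encryption and decryption steps are not implemented, so the vault here holds plaintext. Any real deployment must back it with encrypted storage, for example AES-256 as listed under the mitigation strategies.

```python
import logging
import secrets
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
audit = logging.getLogger("tokenization.audit")  # a SIEM would ingest these records

# Hypothetical role table: which roles may perform which operation (least privilege).
PERMISSIONS = {
    "checkout-service": {"tokenize"},
    "fraud-analyst": {"tokenize", "detokenize"},
}


@dataclass
class Request:
    user: str
    role: str
    mfa_verified: bool  # stand-in for a real MFA check


class TokenizationService:
    def __init__(self) -> None:
        # NOTE: plaintext in memory for illustration; a real vault is encrypted at rest.
        self._vault: dict[str, str] = {}

    def _authorize(self, req: Request, action: str) -> None:
        """Step 2 / step 6: allow only permitted roles with verified MFA; log every decision."""
        allowed = action in PERMISSIONS.get(req.role, set())
        if not (allowed and req.mfa_verified):
            audit.warning("DENIED %s by %s (role=%s)", action, req.user, req.role)
            raise PermissionError(f"{req.user} may not {action}")
        audit.info("ALLOWED %s by %s (role=%s)", action, req.user, req.role)

    def tokenize(self, req: Request, value: str) -> str:
        """Step 3: generate a random token and store the mapping in the vault."""
        self._authorize(req, "tokenize")
        token = "tok_" + secrets.token_urlsafe(16)
        self._vault[token] = value
        return token

    def detokenize(self, req: Request, token: str) -> str:
        """Step 6: check permissions, verify the token exists, return the original value."""
        self._authorize(req, "detokenize")
        if token not in self._vault:
            audit.warning("INVALID token presented by %s", req.user)
            raise KeyError("unknown token")
        return self._vault[token]
```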

Tokenization and Compliance

Tokenization plays a crucial role in helping organizations meet various data protection regulations and industry-specific compliance requirements. By replacing sensitive data with tokens, tokenization minimizes the risk of data breaches and reduces the scope of compliance efforts. This section will delve into how tokenization facilitates compliance with regulations such as GDPR and PCI DSS, along with other industry-specific standards.

Tokenization and GDPR Compliance

Tokenization assists organizations in complying with the General Data Protection Regulation (GDPR) by reducing the risk associated with processing personal data. GDPR aims to protect the personal data of individuals within the European Union. Tokenization aids GDPR compliance in several ways:

- Data Minimization: Tokenization allows organizations to process only the necessary data by replacing sensitive information with tokens. This aligns with the GDPR principle of data minimization, which requires organizations to collect and process only the data needed for a specific purpose.

- Pseudonymization: Tokenization can be considered a form of pseudonymization, a technique explicitly mentioned in GDPR. Pseudonymization replaces personal data with pseudonyms so that individuals cannot be identified without additional information, which must be kept separately. This reduces the risk associated with data breaches and is recognized by GDPR as a security measure. However, pseudonymized data is still personal data under GDPR, so the regulation continues to apply to it.

- Reduced Breach Impact: In the event of a data breach, if sensitive data is tokenized, the impact is significantly reduced. Attackers gain access to tokens, which are meaningless without the corresponding token vault, thereby minimizing the risk of identity theft and financial loss.

- Enhanced Data Security: Tokenization enhances data security by removing sensitive data from the primary systems, reducing the attack surface. This is in line with the GDPR’s emphasis on implementing appropriate technical and organizational measures to ensure data security.

Tokenization and PCI DSS Compliance

Tokenization is a powerful tool for achieving and maintaining Payment Card Industry Data Security Standard (PCI DSS) compliance. PCI DSS is a set of security standards designed to ensure that all companies that process, store, or transmit credit card information maintain a secure environment. Tokenization contributes to PCI DSS compliance in the following ways:

- Reduced Scope: Tokenization significantly reduces the scope of PCI DSS compliance by removing sensitive cardholder data from the organization’s systems. The token replaces the sensitive data, and only the token is stored, processed, and transmitted. This dramatically lowers the number of systems that need to be assessed and secured under PCI DSS.

- Simplified Compliance: By minimizing the exposure of sensitive data, tokenization simplifies the compliance process. Organizations can focus their efforts on securing the token vault and the tokenization process itself, rather than having to secure the entire infrastructure where cardholder data would have been stored.

- Protection of Cardholder Data: Tokenization protects cardholder data by replacing it with tokens. Even if a breach occurs, the tokens are useless without access to the token vault. This significantly reduces the risk of fraud and financial loss.

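- Compliance with Requirement 3.4: PCI DSS v3.2.1 Requirement 3.4 requires the primary account number (PAN) to be rendered unreadable anywhere it is stored and lists index tokens among the acceptable methods. In PCI DSS v4.0, this requirement is numbered 3.5.1. Tokenization directly addresses this requirement by ensuring that the PAN is not stored in its original form.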

Tokenization and Other Industry-Specific Compliance Requirements

Tokenization is adaptable and can be applied to meet various other industry-specific compliance requirements. These requirements often focus on protecting sensitive data within specific sectors. Tokenization’s adaptability makes it useful for:

- Healthcare (HIPAA): In the healthcare industry, tokenization can help organizations comply with the Health Insurance Portability and Accountability Act (HIPAA). By tokenizing protected health information (PHI), healthcare providers can reduce the risk of data breaches and protect patient privacy. Tokenization helps meet HIPAA’s security and privacy rules by minimizing the exposure of PHI.

- Financial Services: Beyond PCI DSS, financial institutions can use tokenization to comply with other regulations, such as the Gramm-Leach-Bliley Act (GLBA) in the United States. GLBA requires financial institutions to protect the privacy of customers’ personal financial information. Tokenization helps secure this data.

- Government and Public Sector: Governments and public sector organizations often handle sensitive citizen data. Tokenization can assist in complying with regulations such as the Federal Information Security Management Act (FISMA) in the United States, ensuring the confidentiality and integrity of government information.

- Other Industries: Tokenization’s benefits extend to any industry that handles sensitive data, including e-commerce, retail, and any sector needing to comply with data privacy regulations. Tokenization helps organizations meet the requirements of various data protection laws and standards, improving overall data security and reducing compliance burdens.

Future Trends in Tokenization

The landscape of data security is constantly evolving, and tokenization is poised to adapt and innovate alongside these changes. As technology advances and new threats emerge, tokenization’s role will become even more critical. This section explores emerging trends, the impact of AI, and the overall future trajectory of tokenization in safeguarding sensitive information.

Tokenization in Cloud Environments

The shift towards cloud computing has significantly altered how organizations store and process data. Tokenization is adapting to this trend, offering a secure solution for protecting data within cloud environments. Tokenization in the cloud offers several advantages:

- Enhanced Data Security: Tokens replace sensitive data, even in the cloud, reducing the risk of data breaches. If a cloud provider’s systems are compromised, the attacker only gains access to tokens, not the original data.

- Compliance with Regulations: Tokenization helps organizations meet compliance requirements like PCI DSS and GDPR by minimizing the exposure of sensitive data in the cloud.

- Scalability and Flexibility: Tokenization solutions can be deployed and scaled easily within cloud environments, allowing organizations to adapt to changing data storage needs. This flexibility is especially crucial for businesses experiencing rapid growth or those dealing with fluctuating data volumes.

- Reduced Attack Surface: By tokenizing data before it enters the cloud, the attack surface is significantly reduced. Even if the cloud environment is breached, the tokenized data is useless without the token vault.

A practical example is the use of tokenization by financial institutions using cloud services for payment processing. Instead of storing sensitive cardholder data in the cloud, the institutions can tokenize the data and store the tokens, thereby reducing the risk of data breaches and ensuring compliance with PCI DSS.

The Role of Tokenization in Protecting Data in the Age of AI

Artificial intelligence (AI) is transforming various industries, but it also introduces new data security challenges. Tokenization plays a crucial role in protecting data in the age of AI. AI’s impact on data security is multifaceted:

- Data Privacy for AI Training: AI models require vast amounts of data for training. Tokenization enables the use of sensitive data for AI training without exposing the original data. For example, healthcare organizations can tokenize patient data and use the tokens for training AI models to improve diagnostics, all while maintaining patient privacy.

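- Protecting AI Pipelines: Tokenization can help protect AI systems by ensuring that models and their input data never contain raw sensitive values, so a tampered or leaked model exposes only tokens. Verifying that a model's output has not been tampered with, however, requires separate integrity controls such as digital signatures or checksums; tokenization alone does not provide this.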

- Securing AI-Generated Data: As AI generates more data, tokenization can be used to secure this data. For instance, in the financial sector, AI might generate reports or predictions based on sensitive financial data. Tokenization can protect this generated data.

- Limiting the Impact of Data Poisoning Attacks: In AI, data poisoning involves injecting malicious data into a model’s training dataset to corrupt it. Tokenization does not prevent poisoning itself, but it can limit the impact of these attacks by ensuring that the original sensitive data remains protected, even if the model is compromised.

For example, consider a retail company using AI to personalize customer recommendations. Tokenizing customer purchase history data allows the AI to analyze the data without revealing sensitive financial or personal information. This approach balances the benefits of AI with the critical need for data privacy.

The Future of Tokenization and its Impact on Data Security

The future of tokenization is promising, with continuous innovation and adaptation expected to address emerging security threats. Here are some key trends and predictions:

- Integration with Blockchain Technology: Tokenization and blockchain are a natural fit. Blockchain can be used to manage and track tokens, providing an immutable record of token transactions and enhancing security. This integration will likely lead to more secure and transparent data management systems.

- Increased Automation and Orchestration: Tokenization solutions will become more automated, with tools that automatically identify and tokenize sensitive data. This automation will streamline implementation and reduce the need for manual intervention.

- Rise of Hardware Security Modules (HSMs): HSMs will continue to play a crucial role in tokenization, providing a tamper-resistant environment for generating, storing, and managing the cryptographic keys that protect token vaults. This will increase the reliability and security of tokenization systems.

- Adoption in New Industries: Tokenization is expected to expand beyond its traditional applications in finance and e-commerce, finding use cases in healthcare, IoT, and other sectors where data privacy is paramount.

- Focus on Usability and Interoperability: Tokenization solutions will become more user-friendly and interoperable, making them easier to integrate into existing systems.

Overall, tokenization is not just a trend; it is an evolving technology that will continue to play a vital role in data security. Its adaptability to new technologies, its ability to address emerging threats, and its potential for innovation make it a cornerstone of secure data management in the future.

Last Word

In conclusion, tokenization stands as a vital pillar in data security, offering a sophisticated method to protect sensitive information. By replacing confidential data with tokens, organizations can mitigate risks, simplify compliance, and build trust. As technology continues to evolve, understanding and implementing tokenization will remain crucial for securing data in an ever-changing digital world. The future of data security will undoubtedly see further innovation and adoption of tokenization strategies.

Helpful Answers

What is the main difference between tokenization and encryption?

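Tokenization replaces sensitive data with tokens. In vault-based tokenization, tokens have no mathematical relationship to the original values, and the mapping is kept in a token vault. Encryption transforms data into an unreadable format using an algorithm and a key, and anyone holding the key can reverse it. Tokens are often generated to preserve the original data format (for example, a 16-digit token for a 16-digit card number). Standard encryption does not preserve format unless a format-preserving encryption mode, such as NIST's FF1, is used.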

Is tokenization a replacement for encryption?

No, tokenization is not a direct replacement for encryption. They are distinct methods with different strengths and weaknesses. They can also be used together to create a layered security approach.

How does tokenization help with PCI DSS compliance?

Tokenization significantly reduces the scope of PCI DSS compliance by removing sensitive cardholder data from systems, so fewer systems are subject to PCI DSS security controls and assessment.

What are the risks associated with tokenization?

Risks include the security of the token vault, potential for token compromise, and ensuring the integrity of the tokenization process itself. Proper access controls, auditing, and robust security measures are essential.

Can tokens be reversed to obtain the original data?

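Not from the token alone. A token does not contain the original data and has no mathematical relationship to it, so the original value cannot be derived from the token itself. Retrieving the original data (detokenization) is possible only through the token vault, and only for authorized systems and users.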