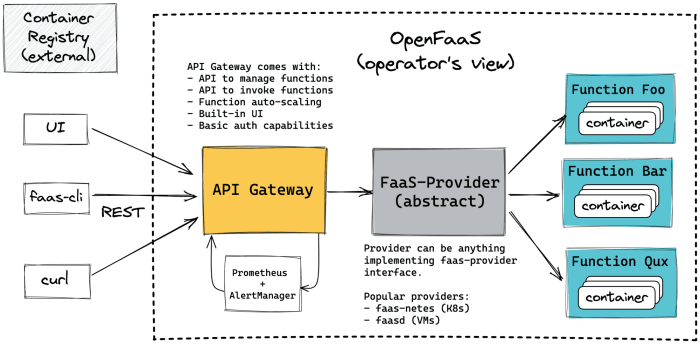

OpenFaaS, an open-source framework, presents a compelling approach to serverless functions, enabling developers to package any process as a function and deploy it with ease. This method offers a streamlined pathway for creating and managing event-driven applications, unlocking new possibilities in cloud-native architectures. The architecture of OpenFaaS simplifies the deployment and operation of functions, making it an attractive choice for both small-scale projects and large enterprise applications.

This exploration delves into the core concepts of OpenFaaS, outlining its architecture, deployment strategies, and management capabilities. We’ll examine the key components, including the gateway, function store, and function runners, providing a clear understanding of their roles in function execution. Furthermore, the guide will walk you through setting up OpenFaaS, creating your first function, and exploring advanced features such as scaling, monitoring, and integration with external tools.

This comprehensive overview will provide you with the knowledge to leverage OpenFaaS effectively.

Introduction to OpenFaaS

OpenFaaS is a framework for building serverless functions on Kubernetes and other container runtimes. Its primary purpose is to simplify the deployment and management of event-driven applications, enabling developers to package code as Docker containers and deploy them without the complexities of managing underlying infrastructure. This approach allows developers to focus on writing code rather than operational overhead.

Core Concept and Purpose

OpenFaaS centers around the concept of “Functions as a Service” (FaaS), which abstracts away the underlying infrastructure. The system enables the execution of small, self-contained pieces of code (functions) in response to events or requests. The purpose of OpenFaaS is to streamline the development and deployment lifecycle for these functions, providing a developer-friendly experience and promoting operational efficiency.

Definition of Serverless Functions and OpenFaaS Implementation

Serverless functions are pieces of code that execute in response to triggers, such as HTTP requests, database updates, or scheduled events, without the developer needing to manage the server infrastructure. OpenFaaS implements serverless functions by:

- Containerization: Each function is packaged as a Docker container, which encapsulates the code and its dependencies, ensuring consistency across different environments.

- Event-Driven Architecture: Functions are designed to react to events, allowing for flexible and scalable application design. This contrasts with traditional applications that often have a monolithic structure.

- Orchestration: OpenFaaS leverages container orchestration platforms, such as Kubernetes, to manage the lifecycle of functions, including scaling, deployment, and monitoring. Kubernetes provides the underlying infrastructure to handle function execution, scaling, and health checks.

- Invocation: Functions are invoked through HTTP requests, enabling easy integration with various services and applications. The OpenFaaS gateway handles the routing of requests to the appropriate function instances.

Key Benefits of Using OpenFaaS

OpenFaaS provides several key benefits, focusing on developer experience and operational efficiency.

- Simplified Deployment: OpenFaaS simplifies the deployment process by allowing developers to package their code as Docker containers and deploy them with a few commands. This reduces the time and effort required to deploy and manage functions.

- Scalability and Elasticity: The platform automatically scales functions based on demand, ensuring that applications can handle fluctuating workloads. Kubernetes, used as the underlying infrastructure, handles scaling based on metrics like CPU utilization or request queue length. For example, during peak traffic, OpenFaaS can automatically create more instances of a function to handle the increased load.

- Developer-Friendly Experience: OpenFaaS provides a CLI (Command Line Interface) and a UI (User Interface) to simplify function creation, deployment, and management. The focus is on providing a seamless experience for developers, allowing them to focus on writing code.

- Cost Optimization: Serverless functions consume resources mainly while they are executing, which can lower costs compared to always-on, server-based applications. OpenFaaS is self-hosted, so there is no per-invocation billing; the savings come from running fewer replicas when demand is low, including scaling idle functions down to zero where that is configured.

- Portability: Functions deployed with OpenFaaS can be easily moved between different environments, such as development, testing, and production, due to the use of containerization. This portability reduces the risk of environment-specific issues.

- Observability and Monitoring: OpenFaaS integrates with monitoring tools like Prometheus and Grafana to provide insights into function performance and health. This enables developers to monitor the performance of their functions and troubleshoot issues quickly.

Core Components of OpenFaaS

OpenFaaS, at its core, is designed to simplify the deployment and management of serverless functions. Understanding its fundamental building blocks is crucial for effectively utilizing its capabilities. This section outlines the key components that orchestrate the execution and lifecycle of functions within an OpenFaaS deployment.

Gateway Component Function

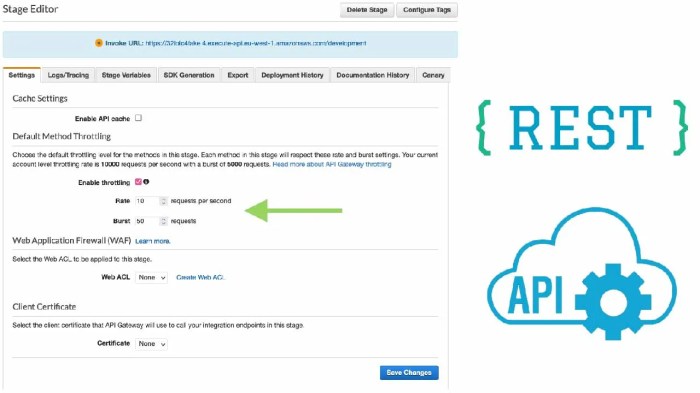

The OpenFaaS Gateway serves as the primary entry point for all function invocations. It acts as an API gateway, handling incoming HTTP requests and routing them to the appropriate function runners. It provides several critical functions.

- Request Routing: The Gateway receives HTTP requests and, based on the request’s path (e.g., `/function/my-function`), determines which function should be invoked. This routing mechanism is central to the system’s operation.

- Authentication and Authorization: The Gateway can be configured to enforce authentication and authorization policies. This can involve checking API keys, verifying user credentials, or implementing other security measures to control access to functions.

- Load Balancing: To distribute incoming requests efficiently, the Gateway employs load balancing across multiple function runners. This ensures that no single runner is overloaded, enhancing performance and reliability.

- Monitoring and Metrics: The Gateway collects and exposes metrics related to function invocations, such as invocation count, latency, and error rates. These metrics are crucial for monitoring the health and performance of the OpenFaaS deployment.

- Request Transformation: Before forwarding requests to the function runners, the Gateway can transform requests, such as adding headers or modifying the payload. This allows for flexibility in how functions are invoked and managed.
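The routing and load-balancing roles described above can be illustrated with a small sketch. This is not the gateway's actual implementation: the `RoundRobinRouter` class, the replica addresses, and the round-robin policy are assumptions chosen for illustration. Only the `/function/<name>` path convention comes from the text.

```python
from itertools import cycle

FUNCTION_PREFIX = "/function/"


def function_name_from_path(path):
    """Return the function name for a path like /function/my-function, else None."""
    if not path.startswith(FUNCTION_PREFIX):
        return None
    # Keep only the first segment after the prefix; anything after it is
    # treated as a sub-path that would be passed on to the function.
    name = path[len(FUNCTION_PREFIX):].split("/", 1)[0]
    return name or None


class RoundRobinRouter:
    """Toy router (hypothetical): picks the next replica of a function in turn."""

    def __init__(self, replicas_by_function):
        # replicas_by_function: {"my-function": ["10.0.0.1:8080", ...]}
        # The addresses are made-up placeholders.
        self._cycles = {
            name: cycle(addresses)
            for name, addresses in replicas_by_function.items()
            if addresses
        }

    def route(self, path):
        name = function_name_from_path(path)
        if name is None or name not in self._cycles:
            return None  # a real gateway would answer with an HTTP error here
        return next(self._cycles[name])
```

Calling `route("/function/my-function")` repeatedly walks through that function's replicas in order, while unknown paths return `None`.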

Function Store Component

The Function Store is a central repository for managing function definitions. It acts as a catalog, providing information about available functions, including their names, descriptions, and associated metadata.

- Function Discovery: The Function Store enables users to discover available functions within the OpenFaaS deployment. Users can browse the catalog to find functions that meet their needs.

- Metadata Management: Each function entry in the Function Store contains metadata, such as the function’s name, description, image name (referencing the container image), and any associated environment variables.

- Function Updates: The Function Store facilitates the deployment of new function versions or updates to existing ones. Users can update function definitions and deploy new container images.

- Integration with Other Tools: The Function Store often integrates with other tools and services, such as CI/CD pipelines and monitoring systems, to automate function deployment and management.

Function Runners Component

Function Runners are responsible for executing the functions. They manage the lifecycle of function containers, including their creation, scaling, and destruction. The runners interact with the Gateway to receive invocations and with the Function Store to obtain function definitions.

- Container Orchestration: Function Runners utilize container orchestration platforms, such as Docker Swarm or Kubernetes, to manage the function containers. They handle the deployment, scaling, and termination of containers.

- Function Execution: When a request arrives from the Gateway, the Function Runner selects an available container (or creates a new one if necessary) and forwards the request to it. The function code then executes within the container.

- Scaling: Function Runners automatically scale the number of function containers based on the demand. When the number of incoming requests increases, the runner creates more containers to handle the load. Conversely, it scales down the number of containers when the demand decreases.

- Health Checks: Function Runners perform health checks on the function containers to ensure that they are running correctly. If a container fails a health check, the runner automatically terminates it and starts a new one.
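The health-check behaviour in the last item can be sketched as a single reconciliation pass. This is only an illustration of the idea, not how OpenFaaS or Kubernetes implements it; the `reconcile` function and its parameters are hypothetical.

```python
def reconcile(replicas, is_healthy, desired_count):
    """One reconciliation pass over a function's containers.

    Returns (kept, to_terminate, to_start), where to_start is the number of
    new containers needed to get back to desired_count.
    """
    kept, to_terminate = [], []
    for replica in replicas:
        # Each replica is probed exactly once per pass.
        (kept if is_healthy(replica) else to_terminate).append(replica)
    to_start = max(desired_count - len(kept), 0)
    return kept, to_terminate, to_start
```

For three replicas where one fails its probe and the desired count is three, the pass keeps two, terminates one, and asks for one replacement.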

Architectural Diagram

The following diagram illustrates the interaction between the core components of OpenFaaS.

Diagram Description: The diagram depicts a simplified OpenFaaS architecture. The user initiates a request via an HTTP client (e.g., a web browser or API client). This request is directed to the OpenFaaS Gateway, which handles incoming traffic. The Gateway, based on the request path, routes the request to a Function Runner. The Function Runner, which utilizes a container orchestration platform (e.g., Docker Swarm or Kubernetes), selects or creates a container that houses the function code.

The function code executes within the container, potentially interacting with external services (not shown). The Function Runner then sends the response back to the Gateway, which, in turn, forwards it to the HTTP client. The Function Store, shown as a separate component, provides information about functions and their associated metadata to both the Gateway and the Function Runners. The Function Store manages the function definitions and their associated metadata, allowing for efficient discovery and management of functions within the system.

Setting Up OpenFaaS

Setting up OpenFaaS is a crucial step in leveraging its capabilities for serverless function deployment and management. This involves installing the necessary components, configuring the environment, and ensuring proper connectivity. This section outlines the setup procedures for different deployment scenarios, including Docker and Kubernetes.

Installing OpenFaaS Using Docker

Docker provides a streamlined approach for installing and running OpenFaaS. The process involves pulling pre-built Docker images and configuring them for use. To install OpenFaaS with Docker, follow these steps:

- Prerequisites: Ensure Docker is installed and running on your system. Verify the Docker installation by running `docker --version` in your terminal.

- Pull OpenFaaS Images: Pull the necessary Docker images for OpenFaaS core components, including the gateway, function watchdog, and UI. Execute the following commands in your terminal:

  ```bash
  docker pull openfaas/gateway:latest
  docker pull openfaas/faas-idler:latest
  docker pull openfaas/function-watchdog:latest
  docker pull openfaas/ui:latest
  ```

- Deploy OpenFaaS: Deploy OpenFaaS using Docker Compose or manually using the Docker CLI. Docker Compose simplifies the deployment process by defining the services in a `docker-compose.yml` file. Create a `docker-compose.yml` file with the necessary configurations for the OpenFaaS services, including ports, environment variables, and volumes. An example of the file would be:

```yaml
version: "3.3"
services:
  gateway:
    image: openfaas/gateway:latest
    ports:
      - "8080:8080"
    environment:
      read_timeout: 60s
      write_timeout: 60s
      faas_url: http://gateway:8080
      basic_auth_password:
    restart: always
    volumes:
      - ./data:/root/.openfaas
  faas-idler:
    image: openfaas/faas-idler:latest
    environment:
      gateway_url: http://gateway:8080
    restart: always
  ui:
    image: openfaas/ui:latest
    ports:
      - "31112:8080"
    environment:
      gateway_url: http://gateway:8080
    restart: always
  prometheus:
    image: prom/prometheus:latest
    ports:
      - "9090:9090"
    volumes:
      - ./prometheus.yml:/etc/prometheus/prometheus.yml
  alertmanager:
    image: prom/alertmanager:latest
    ports:
      - "9093:9093"
    volumes:
      - ./alertmanager.yml:/etc/alertmanager/config.yml
```

- Deploy with Docker Compose: Run `docker-compose up -d` in the directory containing the `docker-compose.yml` file to start the OpenFaaS services. The `-d` flag runs the containers in detached mode.

- Access OpenFaaS: Access the OpenFaaS gateway through your web browser by navigating to `http://localhost:8080` or the appropriate IP address and port if the deployment is not local.
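Once the services are running, a scripted reachability check can be handy. The following is a minimal sketch rather than an official client: it assumes the gateway answers a health probe at `/healthz`, and the function names and the injectable `opener` parameter are hypothetical conveniences.

```python
import urllib.error
import urllib.request


def gateway_health_url(base_url):
    """Build the health-check URL; the /healthz path is an assumption."""
    return base_url.rstrip("/") + "/healthz"


def gateway_is_up(base_url, timeout=3.0, opener=urllib.request.urlopen):
    """Return True if the gateway answers the health check with HTTP 200."""
    try:
        with opener(gateway_health_url(base_url), timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, or a non-2xx HTTP error.
        return False
```

For a local deployment, `gateway_is_up("http://localhost:8080")` would return `True` once the gateway container is accepting requests, under the assumption above.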

Deploying OpenFaaS on a Kubernetes Cluster

Deploying OpenFaaS on a Kubernetes cluster provides scalability, high availability, and efficient resource management. This process involves setting up the necessary Kubernetes resources, such as deployments, services, and ingress controllers. The following steps are involved in deploying OpenFaaS on Kubernetes:

- Prerequisites: Ensure you have a Kubernetes cluster running and `kubectl` is configured to connect to it. You can use tools like Minikube, Kind, or a cloud-based Kubernetes service (e.g., Google Kubernetes Engine, Amazon EKS, Azure AKS).

- Install the OpenFaaS CLI: The OpenFaaS CLI (`faas-cli`) simplifies deploying functions and managing OpenFaaS resources. Follow the instructions in the official OpenFaaS documentation for installing the CLI on your system.

- Deploy OpenFaaS using Helm: Helm is a package manager for Kubernetes that simplifies the deployment of applications. Use Helm to deploy OpenFaaS by adding the OpenFaaS Helm chart repository and installing the chart.

  ```bash
  helm repo add openfaas https://openfaas.github.io/faas-netes/
  helm repo update
  helm install openfaas openfaas/openfaas --namespace openfaas --create-namespace
  ```

- Access the OpenFaaS Gateway: Determine the external IP address or hostname for accessing the OpenFaaS gateway. This may involve using a LoadBalancer service, an Ingress controller, or port forwarding. Check the Kubernetes services to find the gateway’s external endpoint. For example, if you are using a LoadBalancer, the external IP address will be available through the service details.

- Configure DNS (Optional): Configure DNS to map a domain name to the OpenFaaS gateway’s external IP address for easier access.

Configuring the OpenFaaS CLI (faas-cli)

The OpenFaaS CLI (`faas-cli`) is a command-line tool for managing and deploying serverless functions. Configuring `faas-cli` lets you interact with the OpenFaaS gateway, deploy functions, and manage their lifecycle. To configure the OpenFaaS CLI, follow these steps:

- Install the CLI: Install `faas-cli` on your local machine. The installation method varies depending on your operating system. Instructions can be found in the OpenFaaS documentation.

- Configure the Gateway URL: Set the URL of your OpenFaaS gateway so the CLI can communicate with it. This is usually done using the `faas-cli` configuration options or environment variables. To log in, run `faas-cli login -g <gateway-url> -u <username> -p <password>`, replacing the placeholders with your gateway URL and credentials.

- Test the Connection: Verify that the CLI can successfully connect to the OpenFaaS gateway. Run a command like `faas-cli list` to list deployed functions. If the connection is successful, the CLI will display a list of functions (if any).

- Configure Docker Registry (Optional): If you’re using a private Docker registry, configure the CLI to authenticate with the registry. This usually involves providing your registry credentials.

- Set up Function Templates (Optional): `faas-cli` uses templates to create new functions. Configure the CLI to use custom templates or ensure the default templates are available.

Creating Your First Function

Creating functions is the core operation within OpenFaaS, enabling developers to deploy and execute code snippets as serverless functions. This section details the process of creating a basic “Hello World” function, demonstrating the fundamental steps involved in function development, deployment, and testing within the OpenFaaS environment.

“Hello World” Function Creation Process

The process of creating a “Hello World” function in OpenFaaS involves writing the function code, packaging it, and deploying it to the OpenFaaS gateway. This process is streamlined using the `faas-cli`, which simplifies function creation, deployment, and management.

- Code Implementation: The function’s logic is implemented in a supported programming language. This typically involves creating a script or program that receives input, processes it, and returns an output.

- Function Packaging: The function code, along with any necessary dependencies, is packaged into a container image. This containerization ensures portability and consistent execution across different environments.

- Deployment: The container image is deployed to the OpenFaaS gateway. The gateway then manages the function’s lifecycle, including scaling, invocation, and monitoring.

- Testing: Once deployed, the function can be tested by sending requests to the gateway. The gateway forwards the requests to the function, and the function’s response is returned to the client.

Code Examples in Different Programming Languages

Here are “Hello World” function examples in Python, Node.js, and Go, showcasing the versatility of OpenFaaS in supporting multiple programming languages. These examples demonstrate the basic structure of a function and how it interacts with the OpenFaaS environment.

Python:

The Python example uses the `flask` framework to create a simple HTTP endpoint that returns “Hello, World!”. This function is designed to receive requests and respond with the specified message. The use of Flask simplifies the creation of web applications and APIs, which aligns well with the serverless architecture of OpenFaaS.

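```python
from flask import Flask, jsonify

app = Flask(__name__)


@app.route("/", methods=["POST"])
def hello_world():
    # Respond to every POST on "/" with a fixed JSON message.
    return jsonify({"message": "Hello, World!"})


if __name__ == "__main__":
    # Listen on all interfaces so the server is reachable from outside the container.
    app.run(debug=True, host="0.0.0.0", port=8080)
```

Node.js: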

The Node.js example utilizes the `http` module to create a basic HTTP server. This server listens for incoming requests and responds with “Hello, World!”. Node.js’s non-blocking, event-driven architecture makes it suitable for building scalable network applications, which is a valuable characteristic in serverless environments.

```javascript
const http = require('http');

const server = http.createServer((req, res) => {
  res.statusCode = 200;
  res.setHeader('Content-Type', 'application/json');
  res.end(JSON.stringify({ message: 'Hello, World!' }));
});

const port = 8080;
server.listen(port, () => {
  console.log(`Server running at http://localhost:${port}/`);
});
```

Go:

The Go example employs the `net/http` package to create an HTTP server. This server handles incoming requests and responds with “Hello, World!”. Go’s efficiency and concurrency features make it a strong choice for building high-performance serverless functions. The direct integration with the standard library provides simplicity in handling network requests.

```go
package main

import (
	"fmt"
	"net/http"
)

func handler(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintf(w, `{"message": "Hello, World!"}`)
}

func main() {
	http.HandleFunc("/", handler)
	http.ListenAndServe(":8080", nil)
}
```

Deployment and Testing with `faas-cli`

The `faas-cli` simplifies the deployment and testing of functions within OpenFaaS. It streamlines the process of building, pushing, and deploying container images to the OpenFaaS gateway. It also provides commands for invoking functions and viewing logs.

The steps for deploying and testing a function using `faas-cli` typically involve:

- Creating a Function Definition: This involves creating a `yaml` file that defines the function’s name, handler, image, and other configurations. This file provides a declarative way to manage the function’s deployment settings.

- Building the Function: Using the `faas-cli build` command to build the container image for the function. This command leverages Docker to package the function code and dependencies.

- Pushing the Image: Using the `faas-cli push` command to push the container image to a container registry, such as Docker Hub or a private registry. This makes the image available for deployment to the OpenFaaS gateway.

- Deploying the Function: Using the `faas-cli deploy` command to deploy the function to the OpenFaaS gateway. This command creates a deployment in Kubernetes, using the container image from the registry.

- Testing the Function: Using the `faas-cli invoke` command to test the function by sending requests to the gateway and verifying the response. This command allows users to interact with the deployed function and validate its functionality.

Example of a function definition file (e.g., `hello-world.yml`):

```yaml
version: 1.0
provider:
  name: openfaas
  gateway: http://localhost:8080  # Replace with your OpenFaaS gateway address
functions:
  hello-world:
    lang: python3  # or node16, go1.x, etc.
    handler: ./hello-world  # Path to your function code
    image: your-dockerhub-username/hello-world:latest  # Replace with your Docker Hub username and image tag
```

Example `faas-cli` commands:

```bash
# Build the function
faas-cli build -f hello-world.yml

# Push the image to the registry
faas-cli push -f hello-world.yml

# Deploy the function
faas-cli deploy -f hello-world.yml

# Invoke the function
faas-cli invoke hello-world
```

Function Deployment and Management

Managing functions effectively is crucial for the successful operation of any OpenFaaS deployment. This involves not only the initial deployment but also the subsequent updates, scaling, and monitoring of functions. The deployment and management processes ensure that functions are accessible, perform as expected, and can be adapted to changing requirements. OpenFaaS provides a robust set of tools and mechanisms to streamline these tasks.

Deploying Functions to OpenFaaS

The deployment of functions in OpenFaaS leverages Docker containers. This approach allows for consistent execution across different environments. The process involves packaging the function code into a Docker image and then deploying that image to the OpenFaaS gateway.

The deployment process typically involves these steps:

- Build the Docker Image: The function code, along with any dependencies, is packaged into a Docker image. This image contains everything needed to run the function. This often uses a `Dockerfile` which specifies the base image, dependencies, and how to run the function.

- Push the Image to a Registry: The Docker image is pushed to a container registry (e.g., Docker Hub, a private registry). This makes the image accessible for deployment. The image is tagged with a unique identifier, such as the function name and a version number.

- Deploy the Function using the OpenFaaS CLI or UI: The OpenFaaS CLI (`faas-cli`) or the web UI is used to deploy the function. This involves specifying the image name and other deployment parameters, such as resource limits (CPU and memory), environment variables, and scaling options.

- OpenFaaS Gateway Manages Deployment: The OpenFaaS gateway then pulls the Docker image from the registry and creates a deployment in Kubernetes (or the underlying container orchestration system). The gateway manages the lifecycle of the function, including scaling, health checks, and routing requests.

For example, deploying a function named “hello-world” might involve these commands (using the `faas-cli`):

```bash
faas-cli build -f hello-world.yml
faas-cli push -f hello-world.yml
faas-cli deploy -f hello-world.yml
```

The `hello-world.yml` file would contain the function’s configuration, including the image name, function name, and other settings. The `build` command creates the Docker image, the `push` command pushes it to the registry, and the `deploy` command deploys it to OpenFaaS.
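When these three steps are scripted, it helps to stop at the first failure so that a broken build is never pushed or deployed. The sketch below wraps the same `faas-cli` commands with Python's `subprocess` module; the helper names `pipeline_commands` and `run_pipeline` are hypothetical, and it assumes `faas-cli` is on the `PATH`.

```python
import subprocess


def pipeline_commands(stack_file):
    """Return the build, push, and deploy commands in the order they must run."""
    return [
        ["faas-cli", step, "-f", stack_file]
        for step in ("build", "push", "deploy")
    ]


def run_pipeline(stack_file, runner=subprocess.run):
    """Run each step in turn; check=True raises on the first failing command."""
    for command in pipeline_commands(stack_file):
        runner(command, check=True)
```

Calling `run_pipeline("hello-world.yml")` runs the three commands in sequence and raises `subprocess.CalledProcessError` if any of them exits with a non-zero status.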

Updating and Redeploying Functions

Updating functions in OpenFaaS involves a similar process to the initial deployment, but with a focus on minimizing downtime and ensuring a smooth transition. The primary method involves redeploying a new version of the Docker image.

The typical process for updating a function is:

- Modify the Function Code: Make the necessary changes to the function’s code.

- Rebuild the Docker Image: Rebuild the Docker image with the updated code. The image should be tagged with a new version number or a tag indicating it is the latest version.

- Push the Updated Image to the Registry: Push the new Docker image to the container registry.

- Redeploy the Function: Redeploy the function using the `faas-cli` or UI, specifying the updated image name. OpenFaaS can manage rolling updates, replacing old instances with new ones gradually, minimizing downtime.
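Tagging each rebuild with a new version number is easy to automate. The helper below is a minimal sketch of one possible convention (incrementing the last numeric component of the tag); the function name and the versioning scheme are assumptions, not something OpenFaaS prescribes.

```python
def bump_patch_tag(image):
    """Return the image reference with the last numeric part of its tag incremented."""
    repository, separator, tag = image.rpartition(":")
    # A "/" after the last colon means the colon belonged to a registry port,
    # so the reference has no tag at all.
    if not separator or "/" in tag:
        raise ValueError(f"image has no tag: {image!r}")
    parts = tag.split(".")
    if not parts[-1].isdigit():
        raise ValueError(f"tag does not end in a number: {tag!r}")
    parts[-1] = str(int(parts[-1]) + 1)
    return f"{repository}:{'.'.join(parts)}"
```

For example, `your-dockerhub-username/hello-world:1.0.3` becomes `your-dockerhub-username/hello-world:1.0.4`, while a floating tag such as `latest` is rejected so that every deployment points at a distinct image.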

Blue/green deployments are another option. In a blue/green deployment strategy, two identical environments are maintained: the “blue” environment (current live version) and the “green” environment (new version). When the new version (green) is ready, traffic is shifted from the blue to the green environment, either all at once or gradually. This approach allows for zero-downtime deployments and easy rollbacks if issues arise. With OpenFaaS, this usually means deploying the new version as a separate function with `faas-cli` or the UI and switching traffic at the ingress or routing layer in front of the gateway.
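A gradual shift from blue to green can be planned as a list of traffic weights. The sketch below only computes such a schedule; applying the weights is left to whatever routing layer sits in front of the functions, and the function name and equal-step policy are assumptions for illustration.

```python
def traffic_shift_schedule(steps):
    """Return (blue_percent, green_percent) pairs that move traffic to green in equal steps."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    schedule = []
    for i in range(1, steps + 1):
        green = round(100 * i / steps)
        schedule.append((100 - green, green))
    return schedule
```

With four steps the schedule is `[(75, 25), (50, 50), (25, 75), (0, 100)]`; rolling back at any point means returning to `(100, 0)`.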

Deployment Options: Pros and Cons

Various deployment strategies can be employed when deploying functions to OpenFaaS. The best choice depends on factors such as the desired level of automation, the complexity of the function, and the need for zero-downtime deployments. The following table summarizes different deployment options, along with their advantages and disadvantages.

| Deployment Option | Pros | Cons |
|---|---|---|
| Manual Deployment (faas-cli or UI) | Simple to implement for basic deployments; good for initial testing and development; allows for granular control over deployment parameters. | Requires manual steps for each deployment, which can be time-consuming and error-prone; not suitable for automated CI/CD pipelines; can lead to downtime if not managed carefully. |
| Automated Deployment (CI/CD Pipeline) | Highly automated, reducing manual effort; enables rapid and consistent deployments; supports automated testing and rollback capabilities; improves developer productivity. | Requires setup and configuration of a CI/CD pipeline (e.g., Jenkins, GitLab CI, GitHub Actions); can be more complex to configure initially; requires careful management of secrets and credentials. |
| Rolling Updates | Minimizes downtime by gradually replacing old function instances with new ones; allows for zero-downtime deployments; supports easy rollbacks to previous versions. | Requires careful consideration of function compatibility during updates; can lead to temporary performance degradation during the update process; requires the function to be stateless or manage state externally. |
| Blue/Green Deployments | Provides zero-downtime deployments; facilitates easy rollbacks to the previous version; minimizes the risk of deployment failures; allows for testing the new version in production before switching traffic. | Requires double the infrastructure resources (two environments); can be more complex to manage than rolling updates; requires careful planning for data synchronization between the environments. |

Function Invocation and Triggers

OpenFaaS functions, designed to be small and focused, are invoked based on events or requests. This modularity allows for flexible integration into various workflows and architectures. The core of OpenFaaS’s power lies in its ability to handle different invocation methods, enabling it to react to diverse stimuli and integrate with various systems.

Methods for Invoking OpenFaaS Functions

OpenFaaS provides several mechanisms for triggering function execution. These methods enable the functions to respond to different types of events and integrate seamlessly with existing systems.

- HTTP Requests: This is the most common method, where functions are invoked via standard HTTP calls (GET, POST, PUT, DELETE, etc.). The OpenFaaS gateway acts as the endpoint, routing requests to the appropriate function. This approach facilitates easy integration with web applications, APIs, and other HTTP-enabled services.

- Events: OpenFaaS supports event-driven invocation, allowing functions to be triggered by events from various sources. This includes events from message queues (e.g., RabbitMQ, Kafka), cloud services (e.g., AWS SQS, Azure Event Hubs), and webhooks. Event-driven architecture promotes loose coupling and scalability.

- Scheduled Tasks: Functions can be triggered based on a schedule, similar to cron jobs. This allows for automating tasks such as data processing, cleanup operations, and periodic reporting. This is often implemented using tools like the Kubernetes cronjob controller or similar scheduling mechanisms.

Using HTTP Triggers: Examples

HTTP triggers are the fundamental way to interact with OpenFaaS functions. They provide a straightforward mechanism for invoking functions via HTTP requests.

A simple example involves a function that receives a POST request containing JSON data and processes it. This function might be designed to handle data validation or transformation.

Consider a function named `process-data`. The function definition (e.g., in a `handler.py` file for Python) could look like this:

```python
from flask import Flask, request, jsonify

app = Flask(__name__)


@app.route('/', methods=['POST'])
def handle_request():
    data = request.get_json()
    # Process the data
    processed_data = process_data_function(data)
    return jsonify(processed_data)


def process_data_function(data):
    # Your data processing logic here
    return {"status": "success", "processed_data": data}


if __name__ == '__main__':
    app.run(debug=False, host='0.0.0.0', port=8080)
```

To invoke this function, you would send a POST request to the OpenFaaS gateway, specifying the function name and the data in the request body.

Example using `curl`:

```bash
curl -X POST -H "Content-Type: application/json" -d '{"key": "value"}' http://gateway.example.com:8080/function/process-data
```

This sends the JSON payload `{"key": "value"}` to the `process-data` function. The function processes the data and returns a JSON response. This setup allows you to easily create APIs, webhooks, or other HTTP-based integrations with OpenFaaS functions.
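The same invocation can be made from Python using only the standard library. This is a minimal sketch: the helper names `build_invoke_request` and `invoke` are hypothetical, and the gateway address is the example host used above.

```python
import json
import urllib.request


def build_invoke_request(gateway_url, function_name, payload):
    """Build a POST request for <gateway>/function/<name> with a JSON body."""
    url = f"{gateway_url.rstrip('/')}/function/{function_name}"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke(gateway_url, function_name, payload, timeout=10.0):
    """Send the request and decode the JSON response body."""
    request = build_invoke_request(gateway_url, function_name, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `invoke("http://gateway.example.com:8080", "process-data", {"key": "value"})` sends the same payload as the `curl` command. Separating request construction from sending keeps the URL and body logic testable without a running gateway.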

Configuring Triggers from Various Sources

Configuring triggers allows OpenFaaS functions to respond to events from various sources, enabling integration with diverse systems and workflows.

Trigger configuration typically involves defining the event source, the event format, and the function to be invoked. This often uses configuration files, annotations, or dedicated trigger components within the OpenFaaS ecosystem.

- Webhooks: Webhooks allow functions to react to events from external services. When an event occurs in the external service (e.g., a code commit in GitHub, a payment received by Stripe), the service sends an HTTP POST request to a predefined URL (the OpenFaaS function endpoint). The function then processes the data in the webhook payload.

- Message Queues: OpenFaaS can integrate with message queues such as RabbitMQ or Kafka. When a message is published to a specific queue, a configured OpenFaaS function is triggered to process that message. This is useful for building asynchronous, event-driven systems. Configuration typically involves specifying the queue name, the message format, and the function to be invoked.

- Cloud Services: OpenFaaS can be integrated with cloud-specific event sources. For example, in AWS, functions can be triggered by events from services like SQS, SNS, or CloudWatch Events. The configuration involves setting up the cloud service to send events to the OpenFaaS gateway or a dedicated event-processing component, which then triggers the function.
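For queue-based triggers, a connector component typically needs to know which functions care about which topics. The sketch below shows one way such a mapping could be built and used. It assumes each function declares its topics in a comma-separated `topic` annotation; that annotation format and both helper names are assumptions for illustration, not a description of any specific connector.

```python
from collections import defaultdict


def build_topic_map(functions):
    """Map each topic to the functions subscribed to it.

    functions: {"function-name": {"topic": "a,b"}}. The "topic" annotation
    and its comma-separated format are assumptions made for this sketch.
    """
    topic_map = defaultdict(list)
    for name, annotations in sorted(functions.items()):
        for topic in annotations.get("topic", "").split(","):
            topic = topic.strip()
            if topic:
                topic_map[topic].append(name)
    return dict(topic_map)


def dispatch(topic_map, topic, message, invoke):
    """Invoke every function subscribed to the topic; return how many were called."""
    subscribers = topic_map.get(topic, [])
    for function_name in subscribers:
        invoke(function_name, message)
    return len(subscribers)
```

Here `invoke` is any callable that delivers the message to a function, for example an HTTP POST to the gateway. Messages on topics with no subscribers are simply dropped by this sketch.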

Scaling and Autoscaling in OpenFaaS

OpenFaaS is designed to handle fluctuating workloads efficiently by providing mechanisms for scaling and autoscaling functions. This capability ensures that resources are allocated dynamically based on demand, preventing bottlenecks and optimizing resource utilization. Effective scaling is crucial for maintaining application performance, especially in environments with variable traffic patterns.

Function Scaling Mechanisms

OpenFaaS leverages container orchestration to scale functions. When a function receives more requests than its current instances can handle, the platform automatically creates new instances (containers) to distribute the load. Conversely, if the demand decreases, OpenFaaS can scale down by removing underutilized instances. This scaling behavior is governed by several factors, including:

- Request Rate: The number of requests arriving at a function. High request rates trigger scaling events to maintain responsiveness.

- CPU and Memory Utilization: Metrics on resource consumption are monitored to determine if a function is under-provisioned and needs scaling.

- Configuration Settings: Users can configure parameters such as the minimum and maximum number of function instances, as well as the scaling behavior triggers.

The underlying container runtime, typically Kubernetes, plays a critical role in managing the scaling process. OpenFaaS interacts with Kubernetes to deploy, scale, and manage function instances.

Configuring Autoscaling for Functions

On Kubernetes, autoscaling for OpenFaaS functions can be configured with the Horizontal Pod Autoscaler (HPA), as an alternative to the request-based autoscaling that OpenFaaS provides through its Prometheus metrics. The HPA automatically adjusts the number of pod replicas in a deployment based on observed CPU utilization, memory utilization, or custom metrics. To configure autoscaling for an OpenFaaS function, the following steps are generally involved:

- Function Definition: Define the function’s deployment in a YAML file. This file specifies the function’s container image, resource requests and limits (CPU, memory), and other configuration parameters.

- Resource Requests and Limits: Specify appropriate resource requests and limits for the function. Requests define the minimum resources the function needs, while limits set the maximum resources it can consume. Properly configuring these parameters is critical for effective autoscaling.

- HPA Configuration: Create an HPA resource that targets the function’s deployment. The HPA configuration specifies the metrics to monitor (e.g., CPU utilization), the target utilization levels, and the minimum and maximum number of replicas.

- Deployment: Deploy the function and the HPA resource to the Kubernetes cluster.

Here’s an example of an HPA configuration for an OpenFaaS function:

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: my-function-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-function
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 80
```

In this example:

- `scaleTargetRef`: Specifies the deployment to be scaled (in this case, `my-function`).

- `minReplicas`: Sets the minimum number of function instances to 1.

- `maxReplicas`: Sets the maximum number of function instances to 10.

- `targetCPUUtilizationPercentage`: Sets the target average CPU utilization, measured as a percentage of each pod’s CPU request. The HPA adds replicas when usage rises above this value and removes them when it falls below.

The HPA will automatically adjust the number of `my-function` instances to maintain the target CPU utilization.
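To make the scaling decision concrete, the sketch below applies the core rule from the Kubernetes HPA documentation, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, and clamps the result to the configured bounds. It is a simplification: the real controller also applies a tolerance band and stabilization windows, which are omitted here, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent,
                     min_replicas=1, max_replicas=10):
    """Approximate the HPA rule: ceil(current * currentMetric / targetMetric)."""
    if target_cpu_percent <= 0:
        raise ValueError("target_cpu_percent must be positive")
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    # Never go below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, raw))
```

With the settings from the example above (target 80%, 1 to 10 replicas), two replicas averaging 120% CPU would be scaled to three, while eight replicas averaging 160% would be capped at ten.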

Optimizing Function Performance and Resource Utilization

Optimizing function performance and resource utilization is crucial for cost-effectiveness and responsiveness. Several best practices can be employed:

- Resource Allocation: Carefully tune resource requests and limits for each function. Over-provisioning leads to wasted resources, while under-provisioning can cause performance degradation. Monitor function resource usage to identify optimal settings.

- Code Optimization: Optimize the function’s code for efficiency. Reduce the execution time of the function by profiling and addressing bottlenecks. Minimize dependencies and optimize data processing.

- Function Size: Keep functions focused and small. Smaller functions tend to start faster and scale more efficiently. Avoid large functions that perform multiple tasks, which can increase latency and resource consumption.

- Caching: Implement caching strategies where appropriate to reduce the load on functions and backend services. Cache frequently accessed data to improve response times.

- Monitoring and Alerting: Implement robust monitoring and alerting to track function performance and resource utilization. Use tools like Prometheus and Grafana to visualize metrics and set up alerts for performance degradation or resource exhaustion.

- Concurrency Limits: Configure concurrency limits for functions to control the number of concurrent requests they can handle. This can help prevent resource exhaustion and improve overall system stability.

These practices help ensure functions are responsive, efficient, and cost-effective.
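As an illustration of the caching practice, here is a minimal sketch of an in-memory cache with a time-to-live. The class name `TTLCache` is hypothetical, and the approach only helps when the function process stays alive between requests (for example, with a template that runs a long-lived HTTP server); each replica also keeps its own separate cache.

```python
import time


class TTLCache:
    """A tiny in-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = {}

    def get_or_compute(self, key, compute):
        """Return the cached value for `key`, calling `compute()` on a miss."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]
        value = compute()
        self._entries[key] = (now, value)
        return value
```

A handler could create one `TTLCache(ttl_seconds=30)` at module level and wrap an expensive lookup in `cache.get_or_compute(key, lambda: fetch(key))`, where `fetch` stands for whatever backend call the function makes.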

Monitoring and Logging

OpenFaaS provides crucial capabilities for observing the performance and behavior of deployed functions. Effective monitoring and logging are essential for identifying issues, optimizing function performance, and ensuring the overall health of a serverless application. This section delves into OpenFaaS’s built-in monitoring features, integration with external tools, and the setup of logging and tracing mechanisms.

Built-in Monitoring Capabilities of OpenFaaS

OpenFaaS offers built-in monitoring through its Prometheus metrics endpoint, which provides real-time insights into function performance. This allows for immediate visibility into key metrics without requiring external integrations for basic monitoring needs.

- Metrics Provided: OpenFaaS exposes a comprehensive set of metrics, including:

  - Function Invocation Count: The total number of times a function has been executed. This is a fundamental indicator of function usage.

  - Function Execution Duration: The time taken for each function invocation, providing insights into performance bottlenecks.

  - Function Errors: The number of errors encountered during function execution, crucial for identifying and resolving issues.

  - Function Requests per Second (RPS): The rate at which functions are being invoked, reflecting the load on the system.

  - Function Concurrency: The number of concurrent function instances running, indicating resource utilization.

- Prometheus Integration: The OpenFaaS gateway exposes metrics in a format that Prometheus can scrape. This integration is straightforward, requiring only configuration of Prometheus to target the OpenFaaS gateway’s metrics endpoint.

- Dashboarding in the OpenFaaS UI: The OpenFaaS UI provides basic dashboards that visualize these metrics, offering a quick overview of function performance. While useful for initial inspection, these dashboards are typically less flexible than external tools.

Integrating with External Monitoring Tools

Integrating OpenFaaS with external monitoring tools, such as Prometheus and Grafana, significantly enhances the ability to monitor and analyze function performance. This allows for more advanced visualizations, alerting, and long-term data storage.

- Prometheus Setup:

  - Configuration: Prometheus is configured to scrape metrics from the OpenFaaS gateway. This typically involves adding a job to the `prometheus.yml` configuration file, specifying the gateway’s address and port.

  - Data Collection: Prometheus collects metrics at regular intervals, storing them in a time-series database.

  - Alerting Rules: Prometheus can be configured with alerting rules to trigger notifications based on specific metric thresholds. For example, alerts can be set up for high error rates or slow execution times.

- Grafana Integration:

  - Data Source Configuration: Grafana is configured to use Prometheus as a data source.

  - Dashboard Creation: Grafana dashboards are created to visualize the collected metrics. These dashboards can display various metrics, such as function invocation counts, execution durations, and error rates, over time.

  - Customization: Grafana allows for extensive customization of dashboards, including the creation of custom graphs, alerts, and panels to meet specific monitoring requirements.

- Example: Setting up Prometheus and Grafana

To integrate Prometheus and Grafana, first, ensure both tools are installed and running. In the `prometheus.yml` file, add a job to scrape metrics from the OpenFaaS gateway. For example:

```yaml
- job_name: 'openfaas'
  static_configs:
    - targets: ['gateway:8080']
```

In Grafana, add a Prometheus data source, pointing to the Prometheus instance. Create a dashboard and add panels to display metrics such as `gateway_function_invocation_total` (invocation count) and `gateway_functions_seconds` (execution duration). This setup enables comprehensive monitoring of OpenFaaS functions.
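For a quick check without a dashboard, the text that Prometheus scrapes can also be read directly. The sketch below parses exposition-format lines and computes an error ratio for one function. It assumes the gateway exposes an invocation counter named `gateway_function_invocation_total` with `code` and `function_name` labels; verify the names against your gateway’s metrics endpoint. The helper names are hypothetical, and the parser handles only simple labelled samples.

```python
import re

# Matches lines such as: metric_name{label="value",...} 12
_SAMPLE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)$'
)
_LABEL = re.compile(r'(\w+)="([^"]*)"')


def parse_samples(text, metric_name):
    """Yield (labels, value) pairs for one metric from exposition-format text."""
    for line in text.splitlines():
        match = _SAMPLE.match(line.strip())
        if match and match.group("name") == metric_name:
            labels = dict(_LABEL.findall(match.group("labels")))
            yield labels, float(match.group("value"))


def error_ratio(text, function_name,
                metric_name="gateway_function_invocation_total"):
    """Return the share of non-2xx invocations for one function (0.0 if none)."""
    total = errors = 0.0
    for labels, value in parse_samples(text, metric_name):
        if labels.get("function_name") != function_name:
            continue
        total += value
        if not labels.get("code", "").startswith("2"):
            errors += value
    return errors / total if total else 0.0
```

In practice the same ratio is better expressed as a Prometheus query and alert rule; the sketch is only meant to show what the raw counters contain.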

Setting Up Logging and Tracing for Functions

Effective logging and tracing are essential for debugging, understanding function behavior, and identifying performance issues. OpenFaaS supports various logging and tracing configurations to capture and analyze function execution details.

- Logging Configuration:

  - Standard Output/Error Streams: Functions can write logs to standard output (stdout) and standard error (stderr). OpenFaaS captures these streams and makes them accessible.

  - Log Aggregation: For centralized logging, logs can be forwarded to a log aggregation service such as Fluentd, Elasticsearch, or a cloud-based logging service.

  - Structured Logging: Implementing structured logging within functions enhances the ability to parse and analyze logs. This typically involves using a structured logging library to format log messages as JSON or other structured formats.

- Tracing Configuration:

  - OpenTelemetry Integration: OpenFaaS supports integration with OpenTelemetry for distributed tracing. OpenTelemetry allows for tracking requests as they flow through multiple services.

  - Instrumentation: Functions must be instrumented with an OpenTelemetry SDK to generate and export traces. This typically involves adding the SDK to the function’s code and configuring it to export traces to a tracing backend such as Jaeger or Zipkin.

  - Tracing Backends: Tracing backends store and visualize trace data, providing insights into request flows, latency, and dependencies. Jaeger and Zipkin are popular open-source options.

- Steps for Setting up Logging and Tracing:

  - Enable Logging: Within your functions, use standard output and error streams to log relevant information.

  - Configure a Log Aggregator: Set up a log aggregation service (e.g., Fluentd, Loki) and configure OpenFaaS to forward logs. Configure the log aggregator to process and store the logs.

  - Implement Tracing:

    - Add the OpenTelemetry SDK to your function’s code.

    - Configure the SDK to export traces to a tracing backend (e.g., Jaeger, Zipkin).

    - Instrument your function’s code to create spans and track requests.

  - Monitor and Analyze: Use the log aggregator and tracing backend to monitor function logs and traces. Analyze the data to identify issues, optimize performance, and gain insights into function behavior.
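The structured-logging step can be sketched with Python’s standard `logging` module alone. The formatter below writes one JSON object per line to stderr, which keeps log output separate from the response body when a template returns the response on stdout. The names `JsonFormatter` and `build_logger`, and the `fields` convention for extra data, are assumptions of this sketch rather than part of OpenFaaS or the logging API.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record):
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # `extra={"fields": {...}}` is a convention of this sketch only.
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry)


def build_logger(name, stream=sys.stderr):
    """Create a logger that emits JSON lines to `stream`."""
    logger = logging.getLogger(name)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

A function might call `log = build_logger("resize-image")` once at import time and then `log.info("resized", extra={"fields": {"width": 300}})` per request, giving the log aggregator fields it can index directly.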

Advanced OpenFaaS Features

OpenFaaS offers a range of advanced features that enhance its capabilities beyond basic function deployment and execution. These features cater to more complex use cases, enabling secure, scalable, and manageable serverless applications. This section explores key advanced features like secrets management, function chaining, and database integration, alongside practical code examples.

Secrets Management

Managing secrets securely is crucial for any production environment. OpenFaaS integrates with various secret stores, providing a mechanism to inject sensitive information, such as API keys, database credentials, and other confidential data, into functions at runtime. This prevents the hardcoding of secrets within function code, reducing the risk of exposure and improving security posture.

The integration typically involves:

- Secret Storage: Storing secrets in a secure location like Kubernetes Secrets, HashiCorp Vault, or cloud-provider-specific secret management services.

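- Secret Injection: Making secrets available to function containers at deployment time. On Kubernetes, OpenFaaS mounts each secret as a file under `/var/openfaas/secrets/` rather than exposing it as an environment variable.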

- Access Control: Implementing access control mechanisms to restrict which functions can access specific secrets.

This approach isolates secrets from the function code, making it easier to manage and rotate secrets without redeploying the functions.
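Inside a function, reading a secret then amounts to reading a file. The sketch below assumes the secret has been attached to the function and is mounted under `/var/openfaas/secrets/`, the default location on Kubernetes; the helper name `read_secret` and the secret name in the usage note are hypothetical.

```python
from pathlib import Path

# Default mount point for secrets inside an OpenFaaS function container.
SECRETS_DIR = Path("/var/openfaas/secrets")


def read_secret(name, secrets_dir=SECRETS_DIR):
    """Return the contents of a mounted secret file, stripped of whitespace."""
    path = Path(secrets_dir) / name
    try:
        return path.read_text(encoding="utf-8").strip()
    except FileNotFoundError as exc:
        raise RuntimeError(f"secret {name!r} is not mounted at {path}") from exc
```

A handler would call, for example, `read_secret("db-password")` when it opens a connection. Reading the file on each use, rather than caching it at import time, lets a rotated secret be picked up as soon as the mounted file changes.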

Function Chaining

Function chaining allows the creation of complex workflows by linking multiple functions together. The output of one function becomes the input of the next, enabling a series of operations to be performed in a sequential or parallel manner. This promotes modularity and reusability, breaking down complex tasks into smaller, manageable functions.

Function chaining can be implemented using various techniques:

- Sequential Chaining: One function triggers another function after its completion.

- Parallel Chaining: Multiple functions are invoked concurrently, and their results are aggregated.

- Asynchronous Invocation: Functions are invoked without waiting for a response, useful for background tasks.

Function chaining is especially beneficial for tasks involving data processing, ETL (Extract, Transform, Load) pipelines, and microservices architectures. For example, a function could process an image, and then another function could resize the processed image.
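Sequential chaining can be sketched as a small client-side loop that passes each function’s output to the next one through the gateway. The gateway address below is an assumed in-cluster default, the function names in the usage note are hypothetical, and each function is assumed to accept and return JSON.

```python
import json
import urllib.request

GATEWAY_URL = "http://gateway.openfaas:8080"  # assumed in-cluster address


def invoke_via_gateway(function_name, payload, gateway_url=GATEWAY_URL):
    """POST `payload` as JSON to a function and return the decoded JSON reply."""
    request = urllib.request.Request(
        f"{gateway_url}/function/{function_name}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


def run_chain(function_names, payload, invoke=invoke_via_gateway):
    """Call each function in order, feeding every result to the next one."""
    for name in function_names:
        payload = invoke(name, payload)
    return payload
```

For the image example, `run_chain(["process-image", "resize-image"], {"image_url": "..."})` would process the image first and resize the result. Because the loop runs in the caller, a failure in any step raises immediately and the remaining functions are not invoked.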

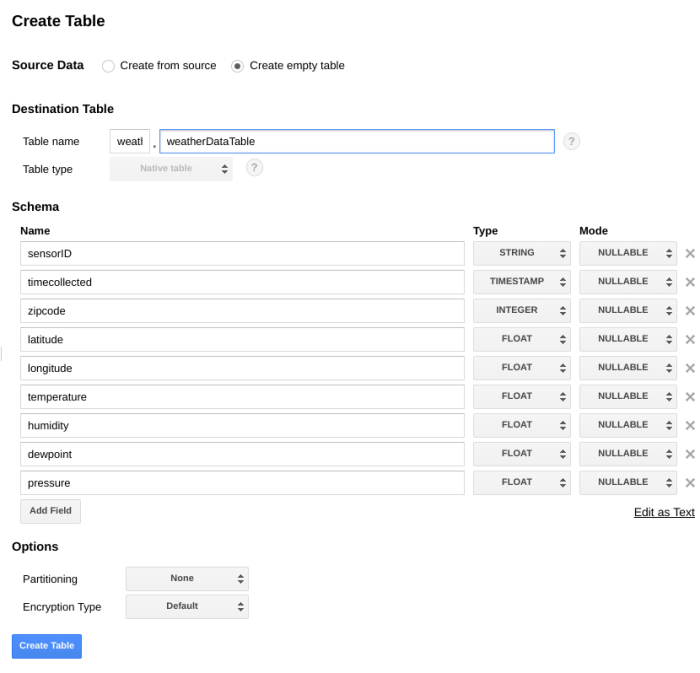

OpenFaaS with a Database

Integrating OpenFaaS functions with databases is a common requirement for building data-driven applications. This involves establishing connections to a database, performing database operations (read, write, update, delete), and returning results.

The process generally involves:

- Database Connection: Establishing a connection to the database from within the function code, using database-specific drivers or libraries.

- Database Operations: Executing SQL queries or database commands to interact with the database.

- Result Handling: Processing the results returned by the database and returning them as the function’s output.

Consider an example of a function that retrieves data from a PostgreSQL database:

```python
import os

import psycopg2


def handler(event, context):
    try:
        # Retrieve database credentials from environment variables
        db_host = os.environ.get("DB_HOST")
        db_name = os.environ.get("DB_NAME")
        db_user = os.environ.get("DB_USER")
        db_password = os.environ.get("DB_PASSWORD")

        # Establish a database connection
        conn = psycopg2.connect(
            host=db_host,
            database=db_name,
            user=db_user,
            password=db_password
        )
        cur = conn.cursor()

        # Execute a SQL query (assumes id and name are the first two columns)
        cur.execute("SELECT * FROM users;")
        rows = cur.fetchall()

        # Process the results
        results = []
        for row in rows:
            results.append({"id": row[0], "name": row[1]})

        # Close the connection
        cur.close()
        conn.close()

        return {"status": "success", "data": results}
    except Exception as e:
        return {"status": "error", "message": str(e)}
```

This example retrieves database connection details from environment variables, connects to a PostgreSQL database, executes a query, and returns the results. The use of environment variables ensures that the database credentials are not hardcoded in the function, improving security.

Environment Variables within a Function

Environment variables provide a flexible way to configure functions without modifying their code. They allow the injection of configuration settings, secrets, and other runtime parameters.

A simple code snippet illustrating the use of environment variables:

```python
import os


def handler(event, context):
    # Retrieve the value of the GREETING environment variable
    greeting = os.environ.get("GREETING", "Hello")
    name = event.get("name", "World")

    # Construct a greeting message
    message = f"{greeting}, {name}!"
    return {"message": message}
```

In this example, the `GREETING` environment variable determines the greeting phrase. If the `GREETING` variable is not set, it defaults to “Hello”. The function can then be invoked with a payload that includes a “name” field, creating a personalized greeting. This illustrates how functions can be made more adaptable to different environments and configurations.

OpenFaaS Use Cases and Examples

OpenFaaS, as a serverless framework, proves particularly valuable across diverse applications. Its ability to execute functions in response to events makes it suitable for a wide range of scenarios, from simple tasks to complex workflows. The framework’s inherent scalability and event-driven architecture facilitate the development of resilient and cost-effective applications.

Common OpenFaaS Use Cases

OpenFaaS finds application in various domains due to its flexibility and efficiency. It’s often employed to streamline processes and reduce operational overhead.

- Image Processing: OpenFaaS can be leveraged to handle image resizing, watermarking, and format conversion. This is especially useful for content delivery networks (CDNs) and applications that require dynamic image manipulation.

- Webhooks: Webhooks are a natural fit for OpenFaaS, allowing developers to respond to events from external services. This is common for integrating with services like GitHub, Slack, and payment gateways.

- Data Transformation: Functions can be created to process and transform data from various sources, such as databases, message queues, and APIs. This is critical for ETL (Extract, Transform, Load) pipelines and data integration.

- API Gateways: OpenFaaS functions can be used to create lightweight API endpoints, acting as a gateway to backend services. This can simplify the API architecture and improve performance.

- IoT Device Management: OpenFaaS can process data streams from IoT devices, triggering actions based on sensor readings or events. This is useful for applications such as smart home automation and industrial monitoring.

Image Resizing Function Example

Building an image resizing function in OpenFaaS illustrates its practical application. The following code block and descriptions detail the process. This example utilizes the Python programming language and the Pillow library for image manipulation.

Step 1: Create a Function Definition (YAML)

Define the function’s metadata, including its name, handler (the code file), and image (Docker image). This YAML file configures the function within OpenFaaS.

```yaml
version: 1.0
provider:
  name: openfaas
  gateway: http://gateway.openfaas.com:8080 # Replace with your OpenFaaS gateway URL
functions:
  resize-image:
    lang: python3
    handler: ./resize-image
    image: your-dockerhub-username/resize-image:latest # Replace with your Docker image
```

Step 2: Write the Function Code (Python)

This Python script uses the Pillow library to resize images. It accepts an image URL as input, downloads the image, resizes it, and returns the resized image as a Base64-encoded PNG inside a JSON response. Pillow and `requests` must be listed in the function’s `requirements.txt`.

```python
import base64
import json
from io import BytesIO

import requests
from PIL import Image


def resize_image(image_url, width=500, height=None):
    # Download the source image; raise on HTTP errors so the caller can report them
    response = requests.get(image_url, timeout=10)
    response.raise_for_status()
    img = Image.open(BytesIO(response.content))

    if height is None:
        # Preserve the aspect ratio when only the width is given
        height = int(img.size[1] * (width / img.size[0]))

    img = img.resize((width, int(height)))
    img_byte_arr = BytesIO()
    img.save(img_byte_arr, format='PNG')  # or JPEG, etc.
    return base64.b64encode(img_byte_arr.getvalue()).decode('utf-8')


def handle(req):
    try:
        payload = json.loads(req)
        image_url = payload.get("image_url")
        width = int(payload.get("width", 500))
        height = payload.get("height")
        resized_image_base64 = resize_image(image_url, width, height)
        return json.dumps({"resized_image": resized_image_base64})
    except Exception as e:
        return json.dumps({"error": str(e)})
```

Step 3: Build and Deploy the Function

Use the OpenFaaS CLI (faas-cli) to build and deploy the function. This involves creating a Docker image and pushing it to a container registry.

```bash
faas-cli build -f deploy.yaml
faas-cli push -f deploy.yaml
faas-cli deploy -f deploy.yaml
```

Step 4: Invoke the Function

Once deployed, invoke the function using a tool like `curl`. This example shows how to send a request with the image URL and desired dimensions.

```bash
curl -X POST http://gateway.openfaas.com:8080/function/resize-image \
  -H "Content-Type: application/json" \
  -d '{"image_url": "https://example.com/image.jpg", "width": 300}'
```
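On the client side, the reply still has to be decoded before the image can be used. The following sketch assumes the function answers with a JSON object containing either a Base64-encoded `resized_image` field or an `error` field, as the handler in Step 2 is meant to; the helper name `save_resized_image` is hypothetical.

```python
import base64
import json


def save_resized_image(response_body, output_path):
    """Decode the function's JSON reply and write the image bytes to disk."""
    reply = json.loads(response_body)
    if "error" in reply:
        raise RuntimeError(f"function reported an error: {reply['error']}")
    image_bytes = base64.b64decode(reply["resized_image"])
    with open(output_path, "wb") as output_file:
        output_file.write(image_bytes)
    # Return the number of bytes written as a simple sanity check.
    return len(image_bytes)
```

The response body from the `curl` call (or from any HTTP client) can be passed in as a string, for example `save_resized_image(body, "resized.png")`.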

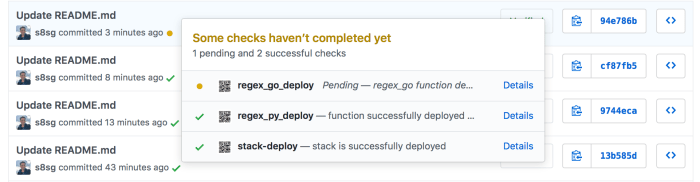

OpenFaaS in a CI/CD Pipeline Example

OpenFaaS can be seamlessly integrated into a CI/CD (Continuous Integration/Continuous Deployment) pipeline to automate function deployments and updates. Consider a scenario where code changes are automatically deployed as serverless functions.

A typical pipeline might involve the following stages:

- Code Commit: A developer commits code changes to a Git repository (e.g., GitHub, GitLab).

- Build Stage: A CI tool (e.g., Jenkins, GitLab CI, Travis CI) triggers a build based on the code commit. This stage might include unit tests, integration tests, and linting.

- Docker Image Build: The CI tool uses the `faas-cli build` command to create a Docker image for the OpenFaaS function.

- Docker Image Push: The built Docker image is pushed to a container registry (e.g., Docker Hub, Amazon ECR).

- Function Deployment: The CI tool uses the `faas-cli deploy` command to deploy the updated function to the OpenFaaS gateway. This updates the function’s code.

- Testing/Verification: Post-deployment tests, such as integration tests and end-to-end tests, are executed to verify the function’s functionality.

- Monitoring and Alerting: Monitoring tools observe the function’s performance, and alerts are configured to notify the team of any issues or errors.

This automated process enables rapid iteration, continuous delivery, and improved efficiency in deploying and managing serverless functions. The CI/CD pipeline ensures that every code change is automatically built, tested, and deployed, providing a faster development cycle and reducing the risk of manual errors. This streamlined approach is beneficial for teams focused on agility and rapid deployment of functions.
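The build, push, and deploy stages can be driven from a short script that a CI job runs after the tests pass. This is a minimal sketch that shells out to the same `faas-cli` commands used earlier; the stack file name and the function names `pipeline_commands` and `run_pipeline` are assumptions, and registry login and gateway authentication are left to the CI environment.

```python
import subprocess


def pipeline_commands(stack_file="deploy.yaml"):
    """Return the faas-cli steps from the pipeline as argument lists."""
    return [
        ["faas-cli", "build", "-f", stack_file],
        ["faas-cli", "push", "-f", stack_file],
        ["faas-cli", "deploy", "-f", stack_file],
    ]


def run_pipeline(stack_file="deploy.yaml", runner=subprocess.run):
    """Run each step in order; check=True stops at the first failing step."""
    for command in pipeline_commands(stack_file):
        runner(command, check=True)
```

Because `check=True` raises `subprocess.CalledProcessError` on a non-zero exit code, a failed build prevents the push and deploy steps from running, which is the behavior a CI stage usually wants.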

Final Thoughts

In conclusion, OpenFaaS emerges as a powerful tool for serverless function deployment, offering significant advantages in developer experience and operational efficiency. From its simple setup to advanced features like autoscaling and monitoring, OpenFaaS provides a robust platform for building scalable and resilient applications. By embracing OpenFaaS, developers can focus on writing code rather than managing infrastructure, accelerating the development lifecycle and enhancing overall productivity.

This guide has equipped you with the foundational knowledge to begin your journey with OpenFaaS, empowering you to build innovative, event-driven solutions.

Essential Questionnaire

What are the primary benefits of using OpenFaaS?

OpenFaaS offers simplified deployment and management of functions, improved developer experience, and operational efficiency. It allows for the use of any programming language, provides autoscaling, and integrates seamlessly with existing infrastructure.

How does OpenFaaS handle scaling?

OpenFaaS uses autoscaling to automatically adjust the number of function instances based on demand. This ensures optimal resource utilization and responsiveness.

Can OpenFaaS be integrated with existing CI/CD pipelines?

Yes, OpenFaaS integrates well with CI/CD pipelines. You can automate the build, testing, and deployment of functions using tools like Jenkins, GitLab CI, or GitHub Actions.

What monitoring and logging tools are compatible with OpenFaaS?

OpenFaaS integrates with popular monitoring and logging tools such as Prometheus, Grafana, and Fluentd. This allows for comprehensive monitoring of function performance and troubleshooting.