Log analysis is the process of examining system logs to understand and interpret the data contained within. This detailed examination reveals insights into system performance, user behavior, and potential security threats. From identifying performance bottlenecks to pinpointing security breaches, log analysis plays a crucial role in various industries and applications. This guide will delve into the intricacies of log analysis, exploring its various facets and practical applications.

Understanding different log types, their formats, and the tools available for analysis is essential. This exploration will encompass techniques such as pattern recognition, anomaly detection, and trend analysis, providing practical examples and illustrative data visualizations. Further, the guide will touch upon log management, security implications, and troubleshooting techniques.

Introduction to Log Analysis

Log analysis is a crucial process for understanding system behavior, identifying potential issues, and ensuring optimal performance. It involves systematically examining logs, which are detailed records of events that occur within a system or application. By analyzing these logs, administrators and developers can gain valuable insights into the workings of their systems and proactively address potential problems before they escalate.

Different types of logs provide various perspectives on system activity. Analyzing these logs in a structured manner helps in troubleshooting, security monitoring, and performance optimization. Understanding the format of these logs is key to extracting meaningful information and effectively utilizing the data.

Types of Logs

Various types of logs offer different perspectives on system activities. Analyzing these logs collectively provides a comprehensive view of the system’s health and performance. System logs document the events occurring within the operating system, while application logs record the activities of specific software applications. Security logs track security-related events, providing insights into potential threats and intrusions.

- System Logs: These logs record events pertaining to the operating system, including boot-up processes, errors, warnings, and other significant actions.

- Application Logs: Application logs focus on the activities and behaviors of specific software applications. They detail actions performed by users, errors encountered, and other relevant application-specific events.

- Security Logs: Security logs are specifically designed to monitor and record security-related events. These logs often contain information about login attempts, access violations, and other potential security breaches.

- Network Logs: These logs capture network-related activities, including connections, disconnections, and data transfer.

Log Formats

Logs are often structured in various formats, each designed to capture and present specific information. The format significantly impacts the efficiency of analysis and the extraction of useful data. Common formats include comma-separated values (CSV), simple text, and structured data formats like JSON.

- Comma-Separated Values (CSV): CSV files store data in rows and columns, separated by commas. This format is widely used for its simplicity and ease of importing into spreadsheets and databases.

- Simple Text: Simple text logs use a straightforward format, typically containing timestamps, event descriptions, and other relevant details.

- Structured Data Formats (e.g., JSON): JSON (JavaScript Object Notation) is a structured format that organizes data into key-value pairs. This structure enables more complex analysis and easier integration with other systems.
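To make the difference between these formats concrete, here is a minimal sketch that parses the same event from a CSV line, a simple text line, and a JSON line. The sample lines, the field names in `FIELDS`, and the `timestamp level service: message` text layout are all assumptions made for illustration; real logs will differ.

```python
import csv
import io
import json

# Hypothetical sample lines describing the same event in three formats.
csv_line = "2024-05-01T12:00:00Z,ERROR,payment,Card declined"
text_line = "2024-05-01T12:00:00Z ERROR payment: Card declined"
json_line = (
    '{"ts": "2024-05-01T12:00:00Z", "level": "ERROR", '
    '"service": "payment", "msg": "Card declined"}'
)

# Assumed column order for the CSV variant (CSV carries no field names here).
FIELDS = ["ts", "level", "service", "msg"]


def parse_csv(line):
    """Parse one CSV record; the csv module also handles quoted commas."""
    row = next(csv.reader(io.StringIO(line)))
    return dict(zip(FIELDS, row))


def parse_text(line):
    """Split an assumed 'ts level service: message' text line."""
    ts, level, rest = line.split(" ", 2)
    service, msg = rest.split(": ", 1)
    return {"ts": ts, "level": level, "service": service, "msg": msg}


def parse_json(line):
    """JSON lines carry their own field names, so no layout is assumed."""
    return json.loads(line)


records = [parse_csv(csv_line), parse_text(text_line), parse_json(json_line)]
```

All three parsers produce the same dictionary, but only the JSON version needs no outside knowledge of column order or delimiters, which is why structured formats tend to be easier to integrate with other systems.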

Common Log File Extensions

The file extension often indicates the type of data contained within the log file. Recognizing these extensions aids in identifying the content and appropriate tools for analysis.

| File Extension | Description |
|---|---|
| .log | Generic log file extension, commonly used for various types of logs. |
| .txt | Plain text log file, often used for simple text-based logs. |
| .csv | Comma-separated values log file, typically used for data that can be easily imported into spreadsheets. |
| .json | JavaScript Object Notation log file, storing data in a structured format. |
| .xml | Extensible Markup Language log file, using tags to define the structure of the data. |

Importance of Log Analysis

Log analysis plays a crucial role in understanding system behavior, identifying potential issues, and ensuring optimal performance. By examining detailed records of events and activities, organizations can gain valuable insights into various aspects of their operations, ranging from application performance to security threats. This allows for proactive measures to address problems and maintain a robust and reliable system environment.

Log analysis provides a comprehensive understanding of system activity. This detailed insight allows organizations to make informed decisions, leading to better performance, improved security, and reduced operational costs. A thorough analysis of log data is essential for effective troubleshooting, proactive security measures, and performance optimization in a wide range of industries.

Benefits Across Various Domains

Log analysis offers a multitude of benefits across different domains, impacting various aspects of an organization. It’s a powerful tool for diagnosing issues, improving security posture, and optimizing performance.

- Troubleshooting: Log files contain detailed records of events, enabling analysts to pinpoint the root cause of problems. For example, if a web application experiences slow response times, logs can reveal bottlenecks in the database queries or network congestion, allowing for targeted solutions.

- Security: Log analysis is vital for detecting and responding to security threats. Suspicious activities, such as unauthorized access attempts or malicious code execution, are often evident in the log data. This allows security teams to investigate, contain, and mitigate potential breaches.

- Performance Optimization: By identifying performance bottlenecks, log analysis helps organizations to improve the efficiency of their systems. For instance, logs can reveal patterns of high CPU usage or disk I/O, enabling developers to optimize code or infrastructure configurations.

Significance in Different Industries

The significance of log analysis varies depending on the industry’s specific needs and the nature of its operations.

- Finance: In the finance industry, log analysis is critical for fraud detection and regulatory compliance. Detailed records of transactions and user activities help identify suspicious patterns that could indicate fraudulent activity, ensuring adherence to financial regulations.

- Healthcare: Log analysis in healthcare ensures the confidentiality and integrity of patient data. It assists in identifying unauthorized access attempts, data breaches, and maintaining compliance with stringent healthcare regulations. This allows healthcare providers to protect sensitive patient information.

- E-commerce: Log analysis in e-commerce enables companies to monitor website performance and customer behavior. Analyzing user activity logs helps to understand customer preferences, identify areas for improvement in the shopping experience, and optimize marketing strategies. This is crucial for improving sales and customer satisfaction.

Role in Preventing and Responding to Security Incidents

Log analysis plays a vital role in both preventing and responding to security incidents.

- Incident Prevention: By analyzing logs, organizations can identify vulnerabilities and potential threats before they escalate into full-blown incidents. For example, frequent failed login attempts from a particular IP address might signal a brute-force attack, prompting security measures to be implemented.

- Incident Response: In the event of a security incident, log analysis is crucial for understanding the nature and extent of the breach. Logs provide valuable information about the attacker’s activities, enabling security teams to respond effectively and limit the damage.
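The brute-force example above can be sketched in a few lines. This is an illustration only: the `(ip, outcome)` event tuples, the `FAILED` marker, and the threshold of five failures are assumptions, and a production rule would normally also consider a time window.

```python
from collections import Counter

# Hypothetical authentication events as (source_ip, outcome) pairs.
events = [("203.0.113.7", "FAILED")] * 6 + [
    ("198.51.100.2", "FAILED"),
    ("198.51.100.2", "OK"),
    ("203.0.113.7", "OK"),
]


def suspicious_ips(events, threshold=5):
    """Return IPs whose failed-login count reaches the (assumed) threshold."""
    failures = Counter(ip for ip, outcome in events if outcome == "FAILED")
    return sorted(ip for ip, count in failures.items() if count >= threshold)


flagged = suspicious_ips(events)
```

Here `flagged` contains only the address with six failures; the address with a single failed attempt followed by a success is left alone.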

Impact on Operational Efficiency

Effective log analysis leads to improved operational efficiency across all domains.

- Reduced Downtime: Troubleshooting issues swiftly through log analysis minimizes downtime and improves overall system availability. Early identification of problems prevents minor issues from escalating into major disruptions.

- Proactive Problem Solving: Log analysis enables proactive identification and resolution of potential problems before they impact users or operations. This proactive approach reduces the need for reactive solutions, improving operational efficiency.

Techniques and Methods of Log Analysis

Log analysis transcends simply reading logs; it’s a systematic process of extracting valuable insights from recorded data. Effective log analysis employs various techniques to transform raw data into actionable intelligence, allowing businesses and organizations to optimize performance, identify potential issues, and enhance decision-making. These techniques enable the identification of patterns, anomalies, and trends, ultimately leading to a deeper understanding of system behavior.

Common Log Analysis Techniques

Log analysis employs a suite of techniques to derive meaningful information. Key among these are pattern recognition, anomaly detection, and trend analysis. These methods, when used in conjunction, offer a comprehensive understanding of system operations and facilitate proactive issue resolution.

- Pattern Recognition: This technique focuses on identifying recurring patterns in logs. These patterns can signal normal operations, user behavior, or potential issues. For instance, a recurring pattern of high CPU usage during specific time periods might indicate a need for resource optimization or application performance tuning. Recognizing these patterns helps in understanding the typical operational behavior of the system.

- Anomaly Detection: This technique seeks deviations from established patterns. Anomalies can signify errors, security breaches, or unexpected system behavior. A sudden spike in error logs, or a dramatic increase in login attempts from an unusual IP address, are examples of anomalies that can trigger alerts and prompt investigation.

- Trend Analysis: This involves examining logs over a period of time to identify trends. Trends can reveal emerging issues, usage patterns, or system performance fluctuations. For example, a gradual increase in log messages related to slow database queries might indicate a need for database optimization or application tuning to maintain system performance.
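As a rough sketch of the last two techniques, the code below flags anomalies with a z-score test and estimates a trend with a least-squares slope. Both are simple stand-ins chosen for illustration, not the only way to do either task; the sample counts and the threshold of 2.0 are assumptions.

```python
from statistics import mean, stdev


def find_anomalies(counts, z_threshold=2.0):
    """Return indexes whose value lies more than z_threshold sample
    standard deviations away from the mean of the series."""
    if len(counts) < 2:
        return []
    mu = mean(counts)
    sigma = stdev(counts)
    if sigma == 0:
        return []
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > z_threshold]


def trend_slope(values):
    """Least-squares slope of values against their index (change per step)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = mean(values)
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    denominator = sum((x - x_mean) ** 2 for x in range(n))
    return numerator / denominator


# Hypothetical data: error entries per hour, and slow-query entries per day.
errors_per_hour = [4, 5, 3, 6, 4, 5, 4, 50, 5, 4]
slow_queries_per_day = [10, 12, 15, 19, 24]

spikes = find_anomalies(errors_per_hour)
slope = trend_slope(slow_queries_per_day)
```

The spike of 50 errors is flagged as an anomaly, while the steadily rising slow-query counts produce a positive slope, the kind of gradual increase that trend analysis is meant to surface before it becomes an outage.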

Log Aggregation Tools

Log aggregation tools are critical for efficient data collection. These tools centralize logs from various sources, simplifying analysis and facilitating the detection of patterns and anomalies. These tools typically offer features like filtering, searching, and alerting, allowing for targeted analysis of specific log entries.

- Data Collection: These tools collect logs from diverse sources like application servers, databases, and network devices, consolidating them into a central repository. This central repository makes the logs readily accessible for analysis.

- Efficiency: Log aggregation streamlines the analysis process by consolidating data, eliminating the need to search through multiple disparate log files. This consolidation improves analysis speed and reduces the likelihood of missing critical information.
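At its core, aggregation means combining several time-ordered streams into one. The following minimal sketch uses Python's `heapq.merge` to do that for three in-memory lists; the sources, messages, and the assumption that every source already emits entries in timestamp order (ISO 8601, same time zone) are illustrative, and real aggregators add transport, buffering, and storage on top.

```python
import heapq

# Hypothetical per-source logs as (timestamp, source, message) tuples,
# each already sorted by timestamp.
app_log = [
    ("2024-05-01T12:00:01Z", "app", "request started"),
    ("2024-05-01T12:00:04Z", "app", "request failed"),
]
db_log = [
    ("2024-05-01T12:00:02Z", "db", "slow query"),
    ("2024-05-01T12:00:03Z", "db", "connection timeout"),
]
net_log = [
    ("2024-05-01T12:00:00Z", "net", "connection opened"),
]

# heapq.merge lazily interleaves sorted inputs; tuples compare by timestamp first.
merged = list(heapq.merge(app_log, db_log, net_log))

for timestamp, source, message in merged:
    print(timestamp, source, message)
```

Reading the merged stream top to bottom shows the connection opening, the database slowing down and timing out, and only then the application request failing, a sequence that is hard to see when each file is inspected separately.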

Log Parsing Techniques

Log parsing involves extracting specific information from logs using tools or scripting languages. This process is often necessary to make log data usable and actionable. Regular expressions and scripting languages like Python or Perl are commonly used for this task.

- Regular Expressions: Regular expressions provide a powerful way to match patterns in text, extracting relevant data points from log entries. They can isolate specific fields like timestamps, IP addresses, or error codes. A regular expression could extract the user ID, event type, and error message from a log entry.

- Scripting Languages: Scripting languages like Python offer flexibility and extensibility for log parsing. Python’s libraries can automate the parsing process, allowing for the extraction of complex information from logs. This automation can involve transforming the log data into a structured format suitable for further analysis or reporting.
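The regular-expression approach described above might look like the sketch below, which pulls the timestamp, user ID, event type, and error message out of a line. The `key=value` line layout and the sample line are assumptions for illustration; the pattern would need adjusting for any real log format.

```python
import re

# Pattern for an assumed layout:
#   YYYY-MM-DD HH:MM:SS user=<id> event=<type> error="<message>"
LINE_RE = re.compile(
    r'(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) '
    r'user=(?P<user_id>\S+) '
    r'event=(?P<event>\S+) '
    r'error="(?P<error>[^"]*)"'
)


def parse_line(line):
    """Return the named fields as a dict, or None if the line does not match."""
    match = LINE_RE.match(line)
    return match.groupdict() if match else None


sample = '2024-05-01 12:00:03 user=alice event=login_failed error="bad password"'
parsed = parse_line(sample)
```

Returning `None` for lines that do not match lets the caller count or set aside unparseable entries instead of failing on the first malformed line.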

Log Analysis Tools

A variety of tools are available for log analysis, each offering different functionalities. Choosing the right tool depends on the specific needs and requirements of the organization.

| Tool | Functionality |
|---|---|
| Splunk | A comprehensive platform for log management, search, and analysis. It provides powerful search capabilities and dashboards for visualizing data. |
| ELK Stack (Elasticsearch, Logstash, Kibana) | An open-source stack for log aggregation, processing, and visualization. It’s a popular choice for its flexibility and scalability. |
| Graylog | A log management and analysis platform focused on speed and ease of use. It is designed for high-volume log data. |
| Fluentd | A data collector and forwarder that collects data from various sources and forwards it to a central repository for analysis. It is often used as part of a larger log management system. |

Log Analysis Tools and Technologies

Log analysis tools are essential for extracting meaningful insights from vast amounts of log data. These tools automate the process of sifting through logs, identifying patterns, and providing actionable intelligence for troubleshooting, performance optimization, and security monitoring. Choosing the right tool depends on factors such as the volume and type of logs being analyzed, the required level of customization, and the budget constraints.

Popular Log Analysis Tools

Several powerful log analysis tools are available, each with unique features and capabilities. Popular choices include Splunk, the ELK stack (Elasticsearch, Logstash, Kibana), and Graylog. Understanding the strengths and weaknesses of each tool is crucial for selecting the most appropriate solution for specific needs.

- Splunk: Splunk is a widely used platform for log management and analysis. It offers robust search capabilities, allowing users to query logs based on various criteria. Splunk’s indexing and data storage capabilities are well-regarded, providing a powerful platform for analyzing large volumes of data. Splunk also features a comprehensive set of dashboards and visualizations, making it easy to understand trends and anomalies in the data. However, Splunk can be expensive, particularly for organizations with large datasets.

- ELK Stack: The ELK stack (Elasticsearch, Logstash, Kibana) is an open-source, flexible, and highly scalable solution. Elasticsearch provides a distributed search and analytics engine, Logstash acts as a data pipeline for collecting and processing logs, and Kibana is a powerful visualization tool. Its open-source nature allows for significant customization, making it suitable for organizations with specific requirements. However, setting up and managing the ELK stack requires expertise in each of its components, so its scalability comes at the cost of a more complex initial setup.

- Graylog: Graylog is another open-source log management solution. It provides a user-friendly interface for searching, analyzing, and visualizing logs. Graylog excels at handling high volumes of log data, particularly in real-time. Graylog’s focus on real-time analysis and alerting makes it valuable for security monitoring. However, its features might not be as comprehensive as those offered by commercial solutions like Splunk.

Advantages and Disadvantages of Each Tool

Each log analysis tool possesses distinct advantages and disadvantages. Carefully considering these factors is crucial for making an informed decision.

| Tool | Advantages | Disadvantages |
|---|---|---|
| Splunk | Robust search capabilities, comprehensive dashboards, good indexing/storage | High cost, can be complex to set up for smaller deployments |
| ELK Stack | Open-source, highly scalable, flexible architecture | Requires expertise for setup and management, potentially more complex than Splunk for basic use cases |
| Graylog | User-friendly interface, handles high volumes of data, strong real-time capabilities | Might not have as comprehensive features as commercial solutions, may require more customization |

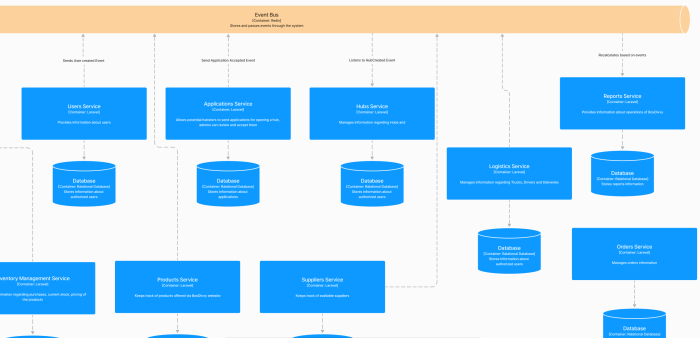

Architecture Comparison

Understanding the architecture of different log management platforms is crucial for selecting the right tool. The ELK stack relies on a distributed architecture: Elasticsearch spreads its indices across multiple nodes, allowing for horizontal scaling and high availability. Splunk can run as a single instance, which offers a simpler setup, or as a distributed deployment in which forwarders, indexers, and search heads are separated so that capacity can grow with data volume. Graylog can likewise be deployed across multiple nodes, with a search backend such as Elasticsearch or OpenSearch storing the log data, enabling efficient handling of high-volume data streams.

Data Visualization in Log Analysis

Data visualization plays a crucial role in transforming raw log data into actionable insights. By presenting log data in a graphical format, analysts can quickly identify patterns, trends, and anomalies that might be missed in textual reports. This visual representation allows for a more intuitive understanding of the underlying processes and behaviors, leading to faster problem resolution and improved system performance.

Visual representations of log data enhance the comprehension of complex patterns and anomalies. A spike or a gradual drift is usually easier to spot on a chart than in thousands of lines of text, which helps analysts grasp relationships between events and identify critical ones more efficiently. This accelerated understanding facilitates quicker problem identification and resolution, ultimately contributing to better system management.

Effective Charts and Graphs for Log Data

Visualizing log data requires appropriate chart and graph selections. The choice depends on the type of data and the insights sought. For example, to display the frequency of error messages over time, a line graph or bar chart is ideal. These visualizations illustrate trends in error rates, aiding in the identification of recurring issues. For displaying the distribution of log events across different categories, a pie chart or a stacked bar chart can be highly effective.

These charts clearly show the relative proportion of events within each category, allowing for a quick overview of the most frequent log types. Scatter plots are useful for identifying correlations between different log attributes, for instance, correlating CPU usage with specific application errors. These visualizations help pinpoint potential relationships between seemingly disparate events. A heatmap can visually display the volume of log entries over time and across different categories, highlighting areas of high activity or unusual patterns.
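Before any chart can be drawn, log entries have to be reduced to a series. As a minimal sketch, the code below buckets error timestamps by hour and prints a crude text bar chart; a plotting library or dashboard tool would render the same series as a line or bar chart. The timestamps are invented sample data.

```python
from collections import Counter
from datetime import datetime

# Hypothetical timestamps of ERROR entries (ISO 8601, no time zone).
error_times = [
    "2024-05-01T09:05:12",
    "2024-05-01T09:47:30",
    "2024-05-01T10:15:02",
    "2024-05-01T11:01:44",
    "2024-05-01T11:20:09",
    "2024-05-01T11:58:51",
]


def errors_per_hour(timestamps):
    """Count events per hour; keys are the start of each hour, in order."""
    buckets = Counter()
    for raw in timestamps:
        ts = datetime.fromisoformat(raw)
        buckets[ts.replace(minute=0, second=0, microsecond=0)] += 1
    return dict(sorted(buckets.items()))


series = errors_per_hour(error_times)
for hour, count in series.items():
    # One '#' per error gives a rough bar chart in the terminal.
    print(f"{hour:%H:%M} {'#' * count} ({count})")
```

The same bucketing step, extended with a second key such as log level or service name, yields the two-dimensional counts that a stacked bar chart or heatmap needs.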

Utilizing Visualization Tools for Trend and Anomaly Detection

Data visualization tools are essential for identifying trends and anomalies in log data. These tools often provide interactive features, allowing users to zoom, filter, and drill down into specific segments of the data. This interactive exploration facilitates the identification of emerging patterns and unusual behaviors. By comparing the current log data to historical data, these tools can help to identify deviations from the expected behavior, flagging potential issues or security threats.

These tools can also automatically generate alerts when anomalies are detected, enabling prompt responses and minimizing the impact of problems. The ability to quickly isolate specific log entries associated with an anomaly is critical in troubleshooting.

Creating Dashboards for Key Log Metrics

Dashboards provide a centralized view of key metrics derived from log analysis. These dashboards typically combine various charts and graphs, providing a comprehensive overview of system performance. For instance, a dashboard could display the number of errors, the average response time, and the CPU utilization rate. Visualizing these metrics in a single, easily digestible format allows for quick monitoring of overall system health.

Dashboards can also be customized to display specific metrics relevant to particular applications or processes. This customization enhances the relevance of the dashboard to the user’s needs. Alert mechanisms within the dashboard can automatically notify administrators of critical events, enabling proactive intervention and minimizing potential disruptions. For example, if the error rate exceeds a predefined threshold, an alert can be triggered, prompting immediate action.
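The metrics and threshold alert described above can be sketched as follows. The request records, the choice to count HTTP status codes of 500 and above as errors, and the 5% threshold are all assumptions for illustration; a real dashboard would compute these from its own data source.

```python
# Hypothetical parsed request records: HTTP status and response time in ms.
requests = [
    {"status": 200, "ms": 100}, {"status": 200, "ms": 120},
    {"status": 500, "ms": 900}, {"status": 200, "ms": 110},
    {"status": 503, "ms": 1500}, {"status": 200, "ms": 90},
    {"status": 200, "ms": 130}, {"status": 200, "ms": 100},
    {"status": 200, "ms": 110}, {"status": 200, "ms": 140},
]


def summarize(records):
    """Compute a few dashboard-style metrics from request records."""
    total = len(records)
    errors = sum(1 for r in records if r["status"] >= 500)
    return {
        "requests": total,
        "errors": errors,
        "error_rate": errors / total if total else 0.0,
        "avg_response_ms": sum(r["ms"] for r in records) / total if total else 0.0,
    }


def alerts(metrics, max_error_rate=0.05):
    """Return alert messages when the error rate exceeds the threshold."""
    if metrics["error_rate"] > max_error_rate:
        return [
            f"error rate {metrics['error_rate']:.0%} exceeds {max_error_rate:.0%}"
        ]
    return []


metrics = summarize(requests)
messages = alerts(metrics)
```

Keeping the metric calculation separate from the alert rule makes it easy to show the same numbers on a dashboard while tuning thresholds independently.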

Log Management and Storage

Effective log management is crucial for extracting actionable insights from log data. Robust storage and retrieval mechanisms are vital for ensuring that valuable information isn’t lost or inaccessible. This involves implementing efficient retention policies and designing scalable systems capable of handling increasing volumes of log data. Proper log management safeguards critical operational data and fosters a data-driven culture within organizations.

Significance of Efficient Log Storage and Retrieval

Efficient log storage and retrieval are essential for maintaining a comprehensive audit trail and enabling rapid troubleshooting. A well-structured log management system ensures easy access to historical data, allowing for the identification of patterns, anomalies, and root causes of issues. This accessibility supports quick responses to incidents, optimized performance tuning, and a better understanding of system behavior. Quick retrieval also significantly reduces the time spent on troubleshooting, saving valuable resources and enhancing operational efficiency.

Best Practices for Log Retention Policies

Effective log retention policies are essential for balancing the need to retain valuable data with storage capacity constraints. These policies should be tailored to the specific needs of each application and organization. Defining retention periods for different log types (e.g., critical errors, informational messages, debug logs) is crucial. This strategy optimizes storage utilization, preventing unnecessary data bloat, and facilitating efficient querying.

Regular reviews of retention policies are recommended to align with evolving business requirements and regulatory compliance standards.

- Categorization of Logs: Differentiating log types (e.g., error logs, access logs, audit logs) allows for tailored retention policies. For example, critical error logs might require longer retention periods for root cause analysis, while less critical logs can be purged more frequently.

- Compliance Considerations: Retention policies must adhere to industry regulations and legal requirements. Compliance regulations often dictate minimum retention periods for specific types of data, such as financial transactions or user activity logs.

- Cost-Effectiveness: Policies should be designed to minimize storage costs without compromising data availability. Strategies include data compression, archiving less frequently accessed logs, and using cloud-based storage solutions.
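A category-based retention policy can be expressed as a small lookup table plus an age check. The sketch below is illustrative only: the categories and retention periods in `RETENTION_DAYS` are invented, and actual periods must come from the organization's own compliance requirements.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per log category, in days.
RETENTION_DAYS = {"audit": 365, "error": 90, "access": 30, "debug": 7}


def is_expired(category, written_at, now):
    """True if a log of this category is older than its retention period.
    Unknown categories are never expired, so nothing is purged by accident."""
    days = RETENTION_DAYS.get(category)
    if days is None:
        return False
    return now - written_at > timedelta(days=days)


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
log_files = [
    ("debug", datetime(2024, 5, 20, tzinfo=timezone.utc)),
    ("error", datetime(2024, 5, 20, tzinfo=timezone.utc)),
    ("audit", datetime(2023, 1, 1, tzinfo=timezone.utc)),
    ("custom", datetime(2020, 1, 1, tzinfo=timezone.utc)),
]

to_purge = [(cat, ts) for cat, ts in log_files if is_expired(cat, ts, now)]
```

Passing `now` in explicitly rather than reading the clock inside the function keeps the rule easy to test and to dry-run against a future date.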

Designing a Scalable Log Management System

Designing a scalable log management system is crucial to accommodate the ever-increasing volume of log data generated by modern applications and infrastructure. The system should be able to handle continuous growth without impacting performance. This involves choosing appropriate storage technologies, employing robust indexing mechanisms, and leveraging cloud-based solutions for scalability and flexibility. Scalable systems ensure consistent access to logs regardless of their volume.

- Scalable Storage Options: Employing cloud storage services (e.g., Amazon S3, Azure Blob Storage) provides a scalable and cost-effective solution for log storage. These services automatically scale storage capacity as needed, minimizing the risk of storage bottlenecks.

- Distributed Logging Architecture: A distributed logging architecture can handle the high volume of log data generated by distributed systems by spreading log collection and processing across multiple nodes. This reduces the load on any single server and, when data is replicated between nodes, helps keep logs available if one node fails.

- Indexing and Query Optimization: Implementing efficient indexing mechanisms is crucial for enabling fast and accurate searches within large log datasets. Advanced search features and query optimization techniques are essential to reduce query latency and improve performance.
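To illustrate why indexing speeds up searches, here is a toy inverted index: each token maps to the set of line numbers that contain it, so a query intersects a few small sets instead of scanning every line. This is a simplified sketch with invented sample lines and a naive tokenizer, not a description of how any particular search engine is implemented.

```python
import re
from collections import defaultdict


def build_index(lines):
    """Map each lowercase token to the set of line numbers containing it."""
    index = defaultdict(set)
    for number, line in enumerate(lines):
        for token in re.findall(r"[a-z0-9_.]+", line.lower()):
            index[token].add(number)
    return index


def search(index, *terms):
    """Return sorted line numbers that contain every search term."""
    if not terms:
        return []
    matches = [index.get(term.lower(), set()) for term in terms]
    return sorted(set.intersection(*matches))


# Hypothetical log lines.
log_lines = [
    "ERROR db timeout on query 42",
    "INFO user alice logged in",
    "ERROR cache miss for user alice",
    "WARN db slow query",
]

index = build_index(log_lines)
hits = search(index, "error", "db")
```

Building the index costs time and memory up front, which is the trade-off that indexing-based platforms make in exchange for fast queries over large datasets.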

Comparison of Log Storage Solutions

Different log storage solutions offer varying features and capabilities. The optimal choice depends on specific requirements and budgetary constraints.

| Storage Solution | Pros | Cons |
|---|---|---|
| Cloud Storage (e.g., S3, Azure Blob Storage) | Scalability, cost-effectiveness, reliability, accessibility | Potential vendor lock-in, security considerations, network dependency |
| On-Premise Storage (e.g., NAS, SAN) | Control over data, high performance for local access | Limited scalability, higher management overhead, potentially higher cost |
| Specialized Log Management Platforms | Advanced features, comprehensive analysis tools, centralized management | Potential cost, learning curve, vendor lock-in |

Security Implications of Log Analysis

Log analysis plays a crucial role in modern cybersecurity. By meticulously examining system logs, organizations can proactively identify potential threats, swiftly respond to incidents, and maintain the integrity of their data. This analysis provides invaluable insights into user behavior, system activity, and potential malicious actions, empowering organizations to fortify their defenses and safeguard sensitive information.

Understanding the intricacies of log analysis, particularly in relation to security, is essential for effectively mitigating risks. This involves recognizing the patterns and anomalies within logs that might indicate unauthorized access, malicious code execution, or other security breaches. By carefully interpreting these patterns, organizations can effectively strengthen their security posture and protect their valuable assets.

Role of Log Analysis in Detecting Security Breaches

Log analysis serves as a critical first line of defense against security breaches. By monitoring system logs for unusual activities, suspicious login attempts, or unusual data access patterns, security analysts can identify potential threats early on. This proactive approach allows for swift intervention and minimizes the impact of a potential attack. For example, a sudden spike in failed login attempts from a specific IP address might indicate a brute-force attack, allowing immediate action to be taken to block the IP address.

Log Analysis in Incident Response and Investigation

Log analysis is indispensable during incident response and investigation. Detailed logs provide crucial information about the events leading up to and during a security incident. This data aids in pinpointing the source of the breach, the methods used, and the extent of the damage. For instance, analyzing logs after a suspected data breach can reveal which users accessed sensitive data, when the access occurred, and the actions taken during the access.

This allows security teams to understand the scope of the breach and implement necessary remediation measures.

Importance of Log Security and Protecting Sensitive Data

Log data itself often contains sensitive information, including user credentials, financial data, and other confidential details. Ensuring the security of these logs is paramount to prevent unauthorized access and data breaches. Protecting log data from unauthorized access, modification, or deletion is crucial to maintain the integrity of the analysis and the organization’s data security posture. Robust encryption and access controls are essential to protect log data from compromise.

Organizations should implement strict access controls for log analysis tools and personnel.

Security Measures for Log Analysis Tools and Platforms

Implementing strong security measures for log analysis tools and platforms is essential. This includes securing the infrastructure hosting the log analysis platform, using robust authentication and authorization mechanisms, and regularly updating software to patch vulnerabilities. Regular security audits of the log analysis tools and platforms are vital to identify potential weaknesses. The use of intrusion detection systems (IDS) and intrusion prevention systems (IPS) for log analysis platforms is also highly recommended.

- Secure Storage: Data storage should adhere to industry best practices. Encryption, access controls, and regular backups are essential. Regular audits of storage systems for vulnerabilities and compliance are recommended.

- Secure Transmission: Logs should be transmitted securely. Secure protocols like HTTPS or SSH should be used for log collection and analysis. Data transmitted over public networks should be encrypted.

- Access Control: Restrict access to log data to authorized personnel only. Implement role-based access controls (RBAC) to limit access to specific log information based on the user’s role and responsibilities.
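Role-based access to log data can be sketched as a mapping from roles to the log categories they may read. The role names, categories, and entries below are hypothetical; a real deployment would rely on the access-control features of its log platform rather than application code like this.

```python
# Hypothetical mapping of roles to the log categories they may view.
ROLE_PERMISSIONS = {
    "security_analyst": {"security", "system", "application"},
    "developer": {"application"},
    "auditor": {"security"},
}


def visible_entries(role, entries):
    """Return only the entries whose category the role is allowed to read.
    Unknown roles get an empty permission set, i.e. deny by default."""
    allowed = ROLE_PERMISSIONS.get(role, set())
    return [entry for entry in entries if entry["category"] in allowed]


entries = [
    {"category": "security", "msg": "failed login for admin"},
    {"category": "application", "msg": "checkout error"},
    {"category": "system", "msg": "disk usage at 91%"},
]

developer_view = visible_entries("developer", entries)
```

The deny-by-default behavior for unknown roles is the important design choice here: a missing or misspelled role results in no access rather than full access.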

Log Analysis in Troubleshooting

Log analysis plays a crucial role in identifying and resolving technical issues within a system. By examining system logs, administrators can pinpoint the root cause of problems, optimize performance, and understand user behavior. This proactive approach to troubleshooting minimizes downtime and enhances overall system stability.

Effective troubleshooting relies on understanding the context provided by logs. Logs record events and actions, enabling analysts to trace the sequence of events leading to a problem. Analyzing these logs helps in diagnosing the specific cause and implementing targeted solutions.

Diagnosing and Resolving Technical Issues

Log files contain detailed information about system activities, errors, and warnings. Analyzing these logs allows administrators to identify specific events that led to an issue, providing a clearer picture of the problem’s origin. This detailed information is vital for developing effective and precise solutions. For instance, if a web application is experiencing slow response times, log files might reveal database query bottlenecks, network latency issues, or resource exhaustion.

Pinpointing the specific source of the problem is key to implementing the appropriate fix.

Identifying Performance Bottlenecks

Logs are invaluable in identifying performance bottlenecks within a system. Performance logs capture metrics like CPU usage, memory consumption, network traffic, and disk I/O. By examining these metrics, administrators can identify periods of high resource utilization and pinpoint the applications or processes responsible. This analysis allows for optimized resource allocation, leading to improved system performance. For example, if a server’s CPU usage consistently spikes during peak hours, logs can pinpoint the application or service causing the overload.
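As a small sketch of this kind of analysis, the code below averages CPU samples per process and ranks the processes from heaviest to lightest. The `(process, cpu_percent)` sample format and the process names are assumptions; real performance logs would first need parsing into this shape.

```python
from collections import defaultdict

# Hypothetical samples taken from a performance log: (process, cpu_percent).
samples = [
    ("report-job", 90.0),
    ("nginx", 10.0),
    ("report-job", 80.0),
    ("postgres", 40.0),
    ("nginx", 20.0),
]


def mean_cpu_by_process(samples):
    """Return (process, mean CPU %) pairs, highest consumer first."""
    totals = defaultdict(lambda: [0.0, 0])  # process -> [sum, count]
    for process, cpu in samples:
        totals[process][0] += cpu
        totals[process][1] += 1
    averages = [(proc, total / count) for proc, (total, count) in totals.items()]
    return sorted(averages, key=lambda pair: pair[1], reverse=True)


ranking = mean_cpu_by_process(samples)
```

Restricting `samples` to a peak-hour time range before calling the function answers the question posed above: which process is responsible for the load during the busy period.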

Understanding User Behavior

Analyzing user activity logs helps understand user behavior within an application or system. These logs track user actions, such as login attempts, page visits, and errors encountered. This information can help administrators understand common user workflows and identify areas where users experience difficulties. This user-centric approach to analysis can lead to improved user experience and more effective system design.

For instance, if a specific feature consistently generates error messages in user logs, it indicates a potential problem with that feature’s implementation, which can be addressed by refining the user interface or backend logic.

Troubleshooting Common Issues Using Log Files

Analyzing log files for common issues involves a structured approach. The following table outlines the steps for troubleshooting common issues using log files.

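| Issue | Troubleshooting Steps |
|---|---|
| Application Crashes | Locate the error and warning entries logged just before the crash; identify the specific events that led to it; apply a targeted fix and confirm the errors stop. |
| Slow Response Times | Check the logs for database query bottlenecks, network latency issues, and resource exhaustion; review CPU, memory, network, and disk I/O metrics for periods of high utilization; pinpoint the application or process responsible. |
| Security Breaches | Review security logs for login attempts, file access, and network traffic; flag unusual login attempts from unknown IP addresses and unauthorized access to sensitive data; investigate the flagged activity. |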

Practical Applications of Log Analysis

Log analysis is a crucial component of modern IT infrastructure management. By examining detailed records of system activity, organizations gain valuable insights into performance, security, and user behavior. This allows for proactive issue resolution, optimized resource allocation, and improved decision-making. Log analysis transcends the realm of simple troubleshooting. It empowers companies to understand intricate system interactions, identify potential vulnerabilities, and enhance overall efficiency.

The practical applications extend across diverse sectors, from e-commerce platforms to financial institutions, demonstrating its ubiquitous value in the digital age.

Website Performance Monitoring

Understanding website performance is paramount for any online business. Log analysis provides critical data for identifying bottlenecks and optimizing user experience. Network latency, server response times, and resource utilization are all reflected in server logs, offering valuable metrics for performance evaluation. For example, a surge in error logs related to database queries might indicate a need for database optimization or increased server capacity.

This analysis allows businesses to proactively address performance issues, preventing disruptions and ensuring a smooth user experience.
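A surge in errors can be detected by counting server errors per minute and comparing each minute with a baseline. The sketch below assumes a simplified access log ("ISO-timestamp path status") and uses the median per-minute count as the baseline; both choices, and the factor of 2, are illustrative rather than recommended defaults.

```python
from collections import Counter
from statistics import median

# Hypothetical access log lines: "ISO-timestamp path status".
ACCESS_LOG = """\
2024-05-01T12:00:05 /cart 200
2024-05-01T12:00:40 /cart 500
2024-05-01T12:01:10 /search 200
2024-05-01T12:01:30 /cart 500
2024-05-01T12:02:02 /cart 500
2024-05-01T12:02:15 /checkout 500
2024-05-01T12:02:31 /cart 503
2024-05-01T12:02:48 /cart 500
"""

def error_surges(log_text, factor=2.0):
    """Return minutes whose 5xx count is at least `factor` times the median per-minute count."""
    per_minute = Counter()
    for line in log_text.splitlines():
        stamp, _path, status = line.split()
        minute = stamp[:16]  # keep "YYYY-MM-DDTHH:MM"
        per_minute[minute] += 0  # register the minute even if it has no errors
        if status.startswith("5"):
            per_minute[minute] += 1
    baseline = median(per_minute.values())
    return [minute for minute, count in sorted(per_minute.items())
            if count > 0 and count >= factor * baseline]

surges = error_surges(ACCESS_LOG)
print(surges)
```

Minutes with no requests at all do not appear in the log and are therefore not part of the baseline here; a production check would likely fill those gaps before computing it.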

Server Monitoring and Resource Management

Server logs are a rich source of information for administrators. Monitoring server resource utilization, such as CPU, memory, and disk space, helps predict potential issues before they impact services. By tracking resource consumption over time, patterns emerge that indicate resource bottlenecks or inefficient processes. This proactive approach minimizes downtime and ensures optimal server performance. For instance, high CPU usage in specific applications might signal the need for code optimization or the addition of more processing power.
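Tracking consumption over time makes simple forecasts possible. As a rough sketch, the code below fits a straight line to hypothetical daily disk-usage readings and estimates how many days remain before a chosen limit is reached. The readings, the 90% limit, and the assumption of linear growth are all illustrative.

```python
# Hypothetical daily disk-usage readings taken from server logs: (day, percent full).
readings = [(0, 61.0), (1, 63.0), (2, 65.0), (3, 67.0), (4, 69.0)]

def fit_line(points):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in points)
    if var_x == 0:
        raise ValueError("need readings from at least two distinct times")
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x

def days_until(points, limit=90.0):
    """Estimate days from the last reading until usage reaches `limit`.

    Returns None when usage is flat or shrinking.
    """
    slope, intercept = fit_line(points)
    if slope <= 0:
        return None
    day_at_limit = (limit - intercept) / slope
    return max(0.0, day_at_limit - points[-1][0])

remaining = days_until(readings)
print(f"Estimated days until 90% full: {remaining:.1f}")
```

A linear fit is the simplest possible model; usage that grows in bursts or follows a weekly cycle would need a longer history and a different approach.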

Network Security

Log analysis is an indispensable tool for maintaining network security. Security logs record events such as login attempts, file access, and network traffic, providing crucial evidence for identifying and responding to security threats. Suspicious patterns, such as unusual login attempts from unknown IP addresses or unauthorized access to sensitive data, can be flagged and investigated. This proactive approach minimizes the impact of potential breaches and safeguards sensitive information.
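The pattern of repeated failed logins from unknown addresses can be sketched as below. The log wording, the `KNOWN_IPS` allowlist, and the threshold of three failures are assumptions for illustration and do not correspond to any particular authentication service's log format.

```python
import re
from collections import Counter

# Hypothetical authentication log lines; the wording is an assumed format.
AUTH_LOG = """\
login failed user=admin ip=203.0.113.7
login failed user=root ip=203.0.113.7
login ok user=alice ip=192.0.2.10
login failed user=admin ip=203.0.113.7
login failed user=alice ip=192.0.2.10
login failed user=test ip=198.51.100.4
"""

KNOWN_IPS = {"192.0.2.10"}  # addresses treated as trusted in this sketch
FAILED = re.compile(r"login failed user=(\S+) ip=(\S+)")

def suspicious_ips(log_text, known_ips, threshold=3):
    """Return {ip: failed_attempts} for unknown IPs at or above the threshold."""
    failures = Counter()
    for line in log_text.splitlines():
        match = FAILED.search(line)
        if match:
            failures[match.group(2)] += 1
    return {ip: count for ip, count in failures.items()
            if ip not in known_ips and count >= threshold}

flagged = suspicious_ips(AUTH_LOG, KNOWN_IPS)
print(flagged)
```

In practice the count would usually be limited to a time window, so that a handful of failures spread over months is not treated the same as a burst within a minute.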

User Activity Tracking

User activity logs provide insights into user behavior, preferences, and usage patterns. Understanding how users interact with a system allows companies to tailor their services and enhance user experience. Analysis of login times, page views, and interactions with specific features can identify trends in user behavior, enabling the creation of targeted marketing campaigns and the development of more user-friendly interfaces.

Furthermore, log data aids in the identification of potential fraud or malicious activity.

Issue Resolution in Complex Systems

Complex systems, such as large-scale applications or enterprise networks, often exhibit intricate interactions. Troubleshooting these systems requires a systematic approach. Log analysis plays a key role in identifying the root cause of issues.

| Event | Log Entry Example | Possible Issue |
|---|---|---|
| High CPU Usage | “Application X consuming 95% CPU” | Code optimization required, or additional processing power needed |
| Network Latency | “Request to server Y timed out” | Network congestion, server overload, or network configuration issue |
| Error in Database Query | “SQL error: Syntax error” | Incorrect SQL statement or database configuration problem |

The process follows a simple flow: a system failure triggers a log entry, the entry is analyzed to identify the source of the issue, a solution is applied, and a final check confirms the resolution.
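The mapping from log entry to possible issue in the table can be expressed as a small set of rules. In the sketch below the regular expressions are illustrative assumptions built from the table's example entries; real systems will phrase these messages differently.

```python
import re

# Each rule: (event name, pattern matched against the entry, possible issue).
# The patterns are assumptions derived from the example entries in the table.
RULES = [
    ("High CPU Usage", re.compile(r"consuming \d+% CPU"),
     "Code optimization required, or additional processing power needed"),
    ("Network Latency", re.compile(r"timed out"),
     "Network congestion, server overload, or network configuration issue"),
    ("Error in Database Query", re.compile(r"SQL error"),
     "Incorrect SQL statement or database configuration problem"),
]

def classify(entry):
    """Return (event, possible_issue) for the first matching rule, else None."""
    for event, pattern, issue in RULES:
        if pattern.search(entry):
            return event, issue
    return None

entries = [
    "Application X consuming 95% CPU",
    "Request to server Y timed out",
    "SQL error: Syntax error",
    "User logged in",
]

for entry in entries:
    result = classify(entry)
    if result is None:
        print(f"{entry!r}: no rule matched")
    else:
        event, issue = result
        print(f"{entry!r}: {event} -> {issue}")
```

Keeping the rules in a list makes the order explicit: the first matching rule wins, so more specific patterns should come before general ones.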

Conclusion

In conclusion, log analysis is a powerful tool for optimizing system performance, enhancing security posture, and improving operational efficiency across diverse industries. The detailed exploration of log types, analysis techniques, and tools, along with best practices for log management and visualization, equips readers with the knowledge necessary to effectively leverage log data. This comprehensive guide offers a solid foundation for understanding and implementing log analysis in various contexts.

Quick FAQs

What are some common log file extensions?

Common log file extensions include .log, .txt, .csv, and .json, among others. The specific extension used depends on the system and application generating the log.

How can log analysis help in security incident response?

Log analysis can identify suspicious patterns, unusual user activity, and potential security breaches, aiding in the rapid detection and response to security incidents. This facilitates the investigation and containment of security threats.

What are the key differences between Splunk, ELK Stack, and Graylog?

Each tool offers a unique approach to log management and analysis. Splunk excels at complex queries and dashboards, while the ELK Stack is known for its open-source nature and flexibility. Graylog provides a robust platform for real-time log ingestion and analysis.

How can log analysis be used to track user behavior?

Analyzing user activity logs can reveal insights into user journeys, preferences, and potential pain points within a system. This understanding allows for informed improvements in the user experience and the design of the system itself.