This article explores Knative as a way of running serverless workloads on Kubernetes. Knative is an open-source platform designed to streamline the creation and management of serverless applications directly within the Kubernetes ecosystem. It turns Kubernetes, a robust container orchestration platform, into an environment that can handle serverless workloads efficiently.

Knative changes how applications are built and deployed on Kubernetes. It simplifies building, deploying, and managing serverless applications, and it offers a consistent experience across Kubernetes environments. Because it builds on Kubernetes’ own capabilities, Knative provides a scalable, portable, and vendor-neutral way to run serverless functions and applications.

Introduction to Knative

Knative provides a set of Kubernetes-based components that simplify the deployment and management of modern serverless workloads. It abstracts away much of the infrastructure management, allowing developers to focus on writing code, and it builds on Kubernetes’ existing capabilities rather than replacing them. In practice, Knative enables serverless computing on Kubernetes by providing the building blocks for deploying, scaling, and managing stateless containerized applications.

It offers a streamlined developer experience, automates operational tasks, and provides features such as autoscaling, traffic management, and eventing.

Core Purpose and Role in the Kubernetes Ecosystem

Knative’s primary purpose is to provide a consistent, standardized platform for building and running serverless applications on Kubernetes. It acts as a layer on top of Kubernetes, offering a higher-level abstraction that simplifies the development, deployment, and operation of containerized workloads through key components such as Knative Serving and Knative Eventing. In this way, Knative extends Kubernetes’ capabilities to support serverless computing.

It addresses the challenges associated with deploying and managing serverless applications, such as autoscaling, request routing, and event-driven architecture. By integrating with Kubernetes, Knative leverages its existing infrastructure and ecosystem, providing a unified platform for both traditional and serverless workloads. Knative is essentially a “batteries-included” approach for serverless on Kubernetes.

Definition of Serverless Computing and Knative’s Enabling Role

Serverless computing is a cloud computing execution model in which the platform dynamically manages the allocation of machine resources. Developers write and deploy code without worrying about server provisioning, scaling, and management, and the platform scales the application with demand, typically charging only for the resources actually consumed. Knative brings this model to Kubernetes by providing the necessary infrastructure components and abstractions.

It allows developers to deploy containerized applications without managing the underlying infrastructure. Knative automatically scales applications based on traffic, handles request routing, and provides event-driven capabilities. Knative’s role in enabling serverless can be summarized as follows:

- Abstraction: Knative abstracts away the complexities of infrastructure management, allowing developers to focus on writing code.

- Autoscaling: Knative automatically scales applications up or down based on traffic, ensuring optimal resource utilization.

- Request Routing: Knative provides advanced request routing capabilities, such as traffic splitting and canary deployments.

- Eventing: Knative enables event-driven architectures, allowing applications to react to events from various sources.

Benefits of Using Knative for Deploying and Managing Applications

Using Knative offers several advantages for deploying and managing applications, particularly those designed for a cloud-native, serverless architecture. These benefits translate into improved developer productivity, reduced operational overhead, and better scalability and efficiency. The main benefits are:

- Simplified Deployment: Knative streamlines the deployment process by automating the creation and management of Kubernetes resources, such as deployments, services, and ingress.

- Automatic Scaling: Knative automatically scales applications based on traffic, ensuring that resources are allocated efficiently and that applications can handle varying workloads.

- Traffic Management: Knative provides advanced traffic management capabilities, such as traffic splitting, canary deployments, and blue-green deployments, allowing for controlled rollouts and easy experimentation.

- Event-Driven Architecture: Knative enables event-driven architectures by providing a robust eventing framework, allowing applications to react to events from various sources, such as message queues, databases, and other services.

- Developer Productivity: Knative simplifies the development and deployment process, allowing developers to focus on writing code and less on infrastructure management. This leads to increased productivity and faster time to market.

- Portability: Applications built with Knative are portable and can be deployed on any Kubernetes cluster, whether it’s on-premises, in the cloud, or a hybrid environment.

- Observability: Knative integrates with various monitoring and logging tools, providing insights into application performance and behavior.

Knative Components

Knative is designed to provide a streamlined experience for deploying and managing serverless workloads on Kubernetes. It achieves this through a set of core components that handle various aspects of the application lifecycle, from building and deploying container images to routing traffic and autoscaling. The following sections will delve into the specific components that make up Knative, starting with Serving.

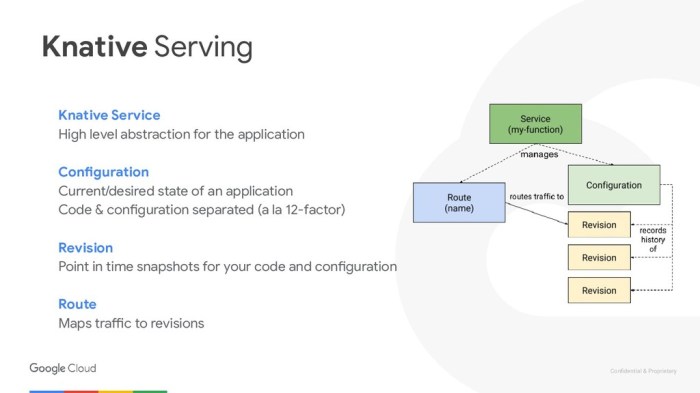

Serving Component Architecture and Functionality

Knative Serving focuses on the core responsibilities of serving serverless applications: managing the lifecycle of containerized applications, routing traffic to the correct revisions, and automatically scaling resources based on demand. Its architecture is designed for high availability and scalability, making it suitable for production workloads. Knative Serving’s architecture comprises several key components:

- Activator: The activator is a crucial component, particularly when a service is scaled down to zero instances. It receives requests for revisions that have no running pods and temporarily buffers them, reporting the pending demand to the autoscaler so that the revision is scaled up. Once a pod is ready, the activator forwards the buffered requests to it. The activator also handles the propagation of request headers, ensuring that tracing and other context-aware features work correctly.

- Autoscaler: The autoscaler continuously monitors the load on each revision and adjusts the number of pod instances to match current demand. By default Knative uses its own Knative Pod Autoscaler (KPA), which scales on request concurrency or requests per second and adds serverless-specific behavior such as scaling to zero and handling cold starts. A revision can instead use the Kubernetes Horizontal Pod Autoscaler (HPA) class to scale on CPU or memory utilization, but the HPA class does not scale to zero.

- Networking Layer (Istio or Kourier): Knative Serving leverages a networking layer, typically Istio or Kourier, to manage traffic routing and provide advanced features like traffic splitting and canary deployments. The networking layer intercepts all incoming traffic and routes it to the appropriate application revisions. Istio provides a robust set of features, including advanced traffic management, security, and observability. Kourier is a lightweight alternative specifically designed for Knative.

- Controller: The controller is responsible for reconciling the desired state of the Knative service with the actual state in the Kubernetes cluster. It monitors the creation, updates, and deletion of Knative resources (like Services and Revisions) and ensures that the underlying infrastructure is configured correctly. The controller interacts with the Kubernetes API server to create and manage deployments, services, and other resources.
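The activator’s buffer-then-forward behavior on the scale-from-zero path can be modeled in a few lines. This is a toy, single-process sketch, not Knative’s implementation: `ToyRevision` and `ToyActivator` are hypothetical names, pods are reduced to a counter, and in real Knative the demand signal goes to the autoscaler, which then asks Kubernetes for pods.

```python
from collections import deque


class ToyRevision:
    """Hypothetical stand-in for a revision's pods: desired vs ready replicas."""

    def __init__(self) -> None:
        self.desired = 0
        self.ready = 0


class ToyActivator:
    """Buffers requests while no replica is ready, then forwards them in order."""

    def __init__(self, revision: ToyRevision) -> None:
        self.revision = revision
        self.buffer = deque()   # requests waiting for a ready pod
        self.forwarded = []     # requests handed to a pod, in order

    def receive(self, request: str) -> None:
        if self.revision.ready == 0:
            # No pod can serve yet: hold the request and signal demand
            # (in Knative this signal goes to the autoscaler).
            self.buffer.append(request)
            self.revision.desired = max(self.revision.desired, 1)
        else:
            self.forwarded.append(request)

    def pod_became_ready(self) -> None:
        # A pod passed its readiness check: drain buffered requests first.
        self.revision.ready += 1
        while self.buffer:
            self.forwarded.append(self.buffer.popleft())


rev = ToyRevision()
activator = ToyActivator(rev)
activator.receive("GET /a")
activator.receive("GET /b")
print(rev.desired, activator.forwarded)  # 1 []
activator.pod_became_ready()
activator.receive("GET /c")
print(activator.forwarded)  # ['GET /a', 'GET /b', 'GET /c']
```

Draining the buffer before accepting new requests keeps arrival order, which is the property that matters to callers during a cold start.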

Request Routing and Autoscaling in Knative Serving

Knative Serving handles request routing and autoscaling through a combination of components. The networking layer directs traffic to the appropriate revisions of the application, while the autoscaler adjusts the number of pod instances based on demand. The request routing process works as follows:

- Ingress: When a request arrives, it first enters the cluster through the ingress controller (e.g., Istio Gateway).

- Virtual Service: The ingress controller uses a VirtualService (in Istio) to route the traffic to the Knative service. The VirtualService is configured to direct traffic to the Knative service’s domain.

- Route: The Knative Service creates a Route, which directs traffic to the appropriate revision(s) of the application. The Route handles traffic splitting, allowing for gradual rollouts of new versions.

- Revision: Each revision represents a specific version of the application code. The traffic is routed to the active revisions.
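A percentage-based traffic split can be pictured as a weighted choice made per request. The sketch below is a simplified model only: in Knative the weights are enforced by the networking layer (Istio, Kourier, and so on), not by application code, and the revision names are hypothetical. It does encode one real rule, namely that the percentages in a traffic block must add up to 100.

```python
import random
from collections import Counter


def validate_traffic(traffic: dict) -> None:
    """A Route's traffic percentages must add up to 100."""
    total = sum(traffic.values())
    if total != 100:
        raise ValueError(f"traffic percentages sum to {total}, expected 100")


def pick_revision(traffic: dict, rng: random.Random) -> str:
    """Choose the revision for a single request, weighted by its percentage."""
    validate_traffic(traffic)
    revisions = list(traffic)
    weights = [traffic[r] for r in revisions]
    return rng.choices(revisions, weights=weights, k=1)[0]


# Hypothetical revision names: 90% stays on the current version, 10% goes to a canary.
split = {"helloworld-00001": 90, "helloworld-00002": 10}
rng = random.Random(42)  # fixed seed so the run is repeatable
counts = Counter(pick_revision(split, rng) for _ in range(10_000))
print(counts)
```

Over 10,000 simulated requests the canary receives roughly 10% of the traffic, which is what a 90/10 split in a Knative traffic block is meant to achieve.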

Autoscaling is a critical feature of Knative Serving, providing efficient resource utilization and responsiveness to changes in traffic patterns. The autoscaling process is driven by the autoscaler component:

- Metric Collection: The autoscaler continuously collects metrics from the application pods, such as request concurrency or requests per second, which are reported by the queue-proxy sidecar that Knative injects into each pod. (CPU utilization is used instead when a revision opts into the HPA class.)

- Scaling Decisions: Based on these metrics, the autoscaler makes scaling decisions. It uses a configurable target concurrency value to determine when to scale up or down.

- Scaling Actions: If the current concurrency exceeds the target, the autoscaler scales up the number of pod instances. If the concurrency is below the target, the autoscaler scales down the number of instances, potentially to zero if there is no traffic.

Knative Serving’s autoscaling capabilities are particularly effective at handling spiky workloads, where traffic can fluctuate rapidly. This dynamic scaling ensures that resources are allocated efficiently, reducing costs and improving performance. For example, if an e-commerce website experiences a sudden surge in traffic during a flash sale, Knative Serving can automatically scale up the application to handle the increased load, preventing performance degradation.
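The scaling decision in the flash-sale scenario is essentially arithmetic. The function below is a simplified sketch in the spirit of the Knative Pod Autoscaler. Its defaults of 100 concurrent requests per pod and 70% target utilization are intended to mirror Knative’s documented defaults as we understand them. The real autoscaler also averages metrics over a stable window (60 seconds by default), has a panic mode for sudden bursts, and rate-limits scaling steps, none of which is modeled here.

```python
import math
from typing import Optional


def desired_replicas(observed_concurrency: float,
                     target_per_pod: float = 100.0,
                     target_utilization: float = 0.7,
                     min_scale: int = 0,
                     max_scale: Optional[int] = None) -> int:
    """Simplified concurrency-based scaling decision in the spirit of Knative's KPA.

    observed_concurrency: in-flight requests across the whole revision.
    target_per_pod * target_utilization: concurrency each pod should carry.
    """
    if observed_concurrency <= 0:
        # No traffic: scale to zero unless a minimum is configured.
        return min_scale
    per_pod = target_per_pod * target_utilization
    wanted = max(math.ceil(observed_concurrency / per_pod), min_scale)
    if max_scale is not None:
        wanted = min(wanted, max_scale)
    return wanted


print(desired_replicas(0))                   # 0: idle, scaled to zero
print(desired_replicas(30))                  # 1: light traffic
print(desired_replicas(1000))                # 15: flash-sale burst, 1000 / 70 rounded up
print(desired_replicas(1000, max_scale=10))  # 10: capped by max_scale
```

Rounding up rather than down is deliberate: under-provisioning during a burst costs latency, while one extra pod costs only a little capacity.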

Deploying a Simple Application Using Knative Serving

Deploying a simple application using Knative Serving involves several steps, from creating a container image to configuring the Knative service. This section will demonstrate the process of deploying a “hello world” application.

1. Prerequisites

Ensure that you have a Kubernetes cluster with Knative Serving installed. You will also need the `kubectl` command-line tool and a container registry (e.g., Docker Hub, Google Container Registry).

2. Create a Container Image

Create a simple “hello world” application, for example a minimal HTTP server in Python using Flask:

```python
import os

from flask import Flask

app = Flask(__name__)


@app.route("/")
def hello():
    return "Hello, Knative!"


if __name__ == "__main__":
    # Knative tells the container which port to listen on via the PORT variable.
    app.run(host="0.0.0.0", port=int(os.environ.get("PORT", 8080)))
```

Build and push the container image to your registry:

```bash
docker build -t
```

3. Create a Knative Service

Create a Knative Service resource using a YAML configuration file. This file defines the application’s container image, the port it listens on, and, optionally, settings such as resource requests and limits.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: helloworld-service
spec:
  template:
    spec:
      containers:
        - image:  # the image you built and pushed in step 2
          ports:
            - containerPort: 8080
```

Apply the configuration to your Kubernetes cluster:

```bash
kubectl apply -f helloworld.yaml
```
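For scripted deployments, the same manifest can be generated from code and piped to `kubectl apply -f -`, because kubectl accepts JSON as well as YAML. This is a minimal sketch: `knative_service` is a hypothetical helper and the image reference is a placeholder. It also shows where resource limits go if you want them.

```python
import json
from typing import Optional


def knative_service(name: str, image: str, port: int = 8080,
                    cpu_limit: Optional[str] = None,
                    memory_limit: Optional[str] = None) -> dict:
    """Build a serving.knative.dev/v1 Service manifest as a plain dict."""
    container: dict = {"image": image, "ports": [{"containerPort": port}]}
    # Only emit resource limits that were actually requested.
    limits = {k: v for k, v in (("cpu", cpu_limit), ("memory", memory_limit)) if v}
    if limits:
        container["resources"] = {"limits": limits}
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"template": {"spec": {"containers": [container]}}},
    }


if __name__ == "__main__":
    # Hypothetical image reference; substitute the one you pushed.
    manifest = knative_service("helloworld-service",
                               "registry.example.com/helloworld:latest",
                               cpu_limit="500m", memory_limit="256Mi")
    # Usage: python gen_service.py | kubectl apply -f -
    print(json.dumps(manifest, indent=2))
```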

4. Access the Application

After a few moments, Knative will deploy the application. You can find its URL by inspecting the Knative service:

```bash
kubectl get ksvc helloworld-service
```

The output will include a `URL` field. You can access the application at this URL, for instance using `curl`:

```bash
curl
```
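If you script deployments, you can wait for the Service to become ready and read its URL from the JSON form of the same resource. The sketch below assumes `kubectl` is on the PATH and relies on the `status.url` field and the `Ready` condition in the Knative Service status. The function names are our own.

```python
import json
import subprocess
from typing import Optional


def is_ready(ksvc: dict) -> bool:
    """True when the Service's Ready condition reports status "True"."""
    for condition in ksvc.get("status", {}).get("conditions", []):
        if condition.get("type") == "Ready":
            return condition.get("status") == "True"
    return False


def service_url(ksvc: dict) -> Optional[str]:
    """Return status.url once the Service is ready, otherwise None."""
    if not is_ready(ksvc):
        return None
    return ksvc["status"].get("url")


def fetch_ksvc(name: str, namespace: str = "default") -> dict:
    """Run `kubectl get ksvc NAME -o json` and parse it (needs cluster access)."""
    result = subprocess.run(
        ["kubectl", "get", "ksvc", name, "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)
```

Against a live cluster, `service_url(fetch_ksvc("helloworld-service"))` returns the same URL that `kubectl get ksvc` prints, or `None` while the Service is still becoming ready.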

Knative Components

Knative provides a powerful platform for building and deploying serverless workloads on Kubernetes. Its modular design allows for the independent operation of its components, offering flexibility and scalability. One of the core components, Knative Eventing, enables the creation of event-driven architectures, crucial for modern, reactive applications.

Knative Eventing Overview

Knative Eventing is a component of Knative that enables the creation, routing, and management of events within a Kubernetes cluster. Its primary purpose is to provide a standardized way to handle events, decoupling event producers from event consumers. This decoupling facilitates the development of loosely coupled, scalable, and resilient applications. Eventing achieves this through a publish-subscribe model, allowing services to subscribe to specific event types and react accordingly. Knative Eventing is designed to address the complexities associated with event-driven architectures.

It simplifies the process of integrating different services and systems by providing a consistent interface for event handling. It also supports various event sources, including cloud providers, message queues, and custom sources, making it versatile and adaptable to diverse application requirements.

Facilitating Event-Driven Architectures

Knative Eventing significantly streamlines the implementation of event-driven architectures. It offers several key features that contribute to this:

- Event Sources: Event sources act as the originators of events. Knative Eventing supports various event sources, including:

  - CloudEvents: Strictly speaking, CloudEvents is not a source but the common format in which Knative Eventing represents all events. It is a CNCF specification for describing event data consistently, so sources and consumers that follow the standard interoperate with Knative and with other platforms that adopt it.

  - Built-in Sources: Knative provides built-in event sources for common scenarios, such as ApiServerSource for watching Kubernetes resources, PingSource for scheduled events, or sources for receiving webhooks. These built-in sources simplify the process of integrating with existing systems.

  - Custom Sources: Developers can create custom event sources to integrate with specific applications or systems that are not supported by the built-in sources. This extensibility allows Knative Eventing to adapt to a wide range of eventing requirements.

- Channels: Channels serve as intermediaries for event delivery, forwarding each event to all of their subscribers. Depending on the implementation, they can also buffer or persist events so that events are not lost while consumers are temporarily unavailable. Channels can be backed by message queues (e.g., Apache Kafka, RabbitMQ) or by the in-memory InMemoryChannel, which is intended for development and testing and does not persist events.

- Subscriptions: Subscriptions connect a Channel to a consumer. They specify where events from the channel should be delivered (the subscriber) and, optionally, where replies from the subscriber should be sent. Subscriptions do not filter events; attribute-based filtering is handled by Triggers.

- Triggers: Triggers are the core mechanism for routing events to consumers in Knative’s Broker model. A Broker is an event hub that receives events from sources; each Trigger subscribes to a Broker and defines which events should be delivered to a specific service. Triggers match event attributes (such as `type` or `source`) against a filter, so that only relevant events reach each consumer.

By leveraging these features, Knative Eventing promotes a reactive programming model, where applications respond to events in real-time. This architecture enhances application responsiveness, scalability, and fault tolerance.
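Trigger filtering can be sketched as a pure function. This sketch models only the exact-match `filter.attributes` form of a Trigger on a Broker. It assumes an event is represented by its CloudEvents attributes as a dict, and the subscriber names and event types are hypothetical.

```python
def trigger_matches(filter_attributes: dict, event_attributes: dict) -> bool:
    """Exact-match semantics of a Trigger's filter.attributes.

    Every attribute named in the filter must be present on the event with the
    same value; an empty filter matches every event.
    """
    return all(event_attributes.get(name) == value
               for name, value in filter_attributes.items())


def route(event_attributes: dict, triggers: dict) -> list:
    """Subscribers whose Trigger matches; a Broker delivers to each of them."""
    return [subscriber for subscriber, flt in triggers.items()
            if trigger_matches(flt, event_attributes)]


# Hypothetical Triggers on one Broker, keyed by subscriber name.
triggers = {
    "order-service": {"type": "payment.succeeded"},
    "fraud-review": {"type": "payment.failed", "source": "/payments/gateway"},
    "audit-log": {},  # empty filter: receives everything
}
event = {"specversion": "1.0", "id": "42",
         "source": "/payments/gateway", "type": "payment.succeeded"}
print(route(event, triggers))  # ['order-service', 'audit-log']
```

Because each Trigger is evaluated independently, one event can fan out to several consumers, which is how the audit log above receives a copy of every event.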

Example: An Event-Driven Application

Consider a scenario involving an e-commerce platform that uses Knative Eventing to manage order processing.

- Event Source: An event source, such as a webhook from a payment gateway, emits an event when a payment is successfully processed. This event is formatted as a CloudEvent, containing information about the order, the payment amount, and the payment method.

- Broker: The event is sent to a Knative Broker, which can be backed by a Channel. The Broker acts as a buffer, helping ensure the event is reliably delivered to subscribers.

- Trigger: A Knative Trigger on that Broker filters events by type (e.g., `payment.succeeded`), so only successful payments reach the order service.

- Subscriber (Service): A Knative Service, which represents a serverless function, is the Trigger’s subscriber. This service is responsible for:

  - Updating the order status in the database to “paid.”

  - Sending a confirmation email to the customer.

  - Initiating the fulfillment process.

This architecture decouples the payment processing service from the order fulfillment service. If the order fulfillment service is temporarily unavailable, a durable Broker or Channel retains the events and Knative retries delivery according to the configured delivery policy, so payment notifications are not lost. Once the service recovers, the pending events are delivered and the orders are processed. This example illustrates how Knative Eventing facilitates building a resilient and scalable event-driven application.
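On the receiving side, the subscriber is an ordinary HTTP service. Knative delivers CloudEvents over HTTP. In the CloudEvents binary content mode, the attributes travel as `ce-`-prefixed headers and the payload is the request body. The sketch below parses such a request and applies the example’s business logic. The `order_id` field and the action strings are hypothetical. Because delivery may be retried, the handler skips events it has already seen, keyed on the (`source`, `id`) pair that the CloudEvents specification uses to identify an event.

```python
import json

REQUIRED_ATTRIBUTES = ("id", "source", "specversion", "type")


def parse_binary_cloudevent(headers: dict, body: bytes) -> dict:
    """Parse a CloudEvent received over HTTP in binary content mode."""
    lowered = {name.lower(): value for name, value in headers.items()}
    # Every "ce-" header carries one CloudEvents attribute.
    attributes = {name[3:]: value for name, value in lowered.items()
                  if name.startswith("ce-")}
    missing = [a for a in REQUIRED_ATTRIBUTES if a not in attributes]
    if missing:
        raise ValueError(f"missing required CloudEvents attributes: {missing}")
    is_json = lowered.get("content-type", "").startswith("application/json")
    data = json.loads(body) if is_json else body
    return {"attributes": attributes, "data": data}


def handle_payment_event(event: dict, seen: set) -> list:
    """Hypothetical order-service logic; returns the actions it would take."""
    attrs = event["attributes"]
    key = (attrs["source"], attrs["id"])
    # Redelivered duplicates and unrelated event types are ignored.
    if key in seen or attrs["type"] != "payment.succeeded":
        return []
    seen.add(key)
    order_id = event["data"]["order_id"]
    return [
        f"set order {order_id} status to paid",
        f"email confirmation for order {order_id}",
        f"start fulfillment for order {order_id}",
    ]


headers = {
    "Ce-Id": "evt-1", "Ce-Source": "/payments/gateway", "Ce-Specversion": "1.0",
    "Ce-Type": "payment.succeeded", "Content-Type": "application/json",
}
seen: set = set()
event = parse_binary_cloudevent(headers, b'{"order_id": "A-1001"}')
print(handle_payment_event(event, seen))  # three actions for order A-1001
print(handle_payment_event(event, seen))  # [] on redelivery
```

Making the handler idempotent matters precisely because of the retry behavior described above: a retried delivery must not send a second confirmation email.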

Knative Build

Knative Build provides a flexible and declarative framework for building container images within a Kubernetes environment. It abstracts the complexities of image building, allowing developers to focus on their application code rather than the intricacies of Dockerfiles or build tools. This facilitates automated builds as part of a CI/CD pipeline, ensuring consistent and reproducible builds across environments. Note that Knative Build has since been deprecated and its functionality continues in the Tekton Pipelines project; the sections below describe the original Knative Build resources.

Role in Application Deployment Pipeline

Knative Build’s primary role is to streamline the image building process within the broader application deployment pipeline. It sits between the source code repository and the container registry, automating the creation of container images from source code. This automation is critical for continuous integration and continuous delivery (CI/CD) workflows, enabling rapid and reliable application updates. The following points highlight the key roles of Knative Build in the application deployment pipeline:

- Source Code Acquisition: Knative Build retrieves source code from various repositories, including Git, and prepares it for the build process.

- Image Building: It executes the build steps, typically using tools like Docker or Kaniko, to create a container image based on the source code and a defined build configuration.

- Image Storage: Knative Build pushes the built container image to a specified container registry, making it available for deployment.

- Automation and Orchestration: It integrates seamlessly with CI/CD systems, triggering builds automatically upon code changes and managing the build process within the Kubernetes cluster.

- Reproducibility: By defining build steps declaratively, Knative Build ensures that the image building process is reproducible, resulting in consistent builds across different environments.

Building Container Images

Knative Build employs a declarative approach to building container images, using custom resources within Kubernetes. The core components are BuildTemplates and Builds: BuildTemplates define the steps required to build an image, while Builds represent an instance of a build based on a specific BuildTemplate. The image building process using Knative Build typically involves these steps:

- Defining a BuildTemplate: A BuildTemplate is created, specifying the build steps. These steps can include pulling source code, running build commands (e.g., `go build`, `npm install`), and pushing the resulting image to a container registry. The template is essentially a blueprint for the build.

- Creating a Build: A Build resource is created, referencing the BuildTemplate and providing any necessary parameters, such as the source code repository URL, the image name, and the container registry credentials.

- Build Execution: Knative Build orchestrates the build process within the Kubernetes cluster. It creates pods to execute the build steps defined in the BuildTemplate. The build process runs inside these pods.

- Image Push: Once the build steps are complete, the resulting container image is pushed to the specified container registry.

- Status Monitoring: Knative Build provides status information, allowing users to monitor the progress of the build and identify any errors. This information is available through Kubernetes APIs and CLI tools.

For instance, a BuildTemplate for a simple Go application might involve the following steps:

```yaml
apiVersion: build.knative.dev/v1alpha1
kind: BuildTemplate
metadata:
  name: go-builder
spec:
  parameters:
    - name: IMAGE
      description: The image tag to build and push
  steps:
    - name: build-and-push
      # Uses a pre-built image with the gcloud CLI
      image: docker.io/google/cloud-sdk:latest
      command: ["gcloud"]
      args:
        - "builds"
        - "submit"
        - "--tag=${IMAGE}"  # template parameter for the image tag
        - "."               # context for the build
```

A corresponding Build would then reference this template and provide the necessary parameters, such as the image name and the source code repository.
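A Build that fills in the template’s parameter might look like the dict below, which can be serialized to JSON for `kubectl apply`. The field names (`source.git`, `template.name`, `template.arguments`) follow the v1alpha1 Knative Build API as we understand it. Since the project is deprecated, treat them as an assumption and check the archived documentation. The repository URL and image are hypothetical. The helper also shows how `${IMAGE}`-style placeholders in a template’s steps are resolved. Python’s `string.Template` happens to use the same `${NAME}` syntax and is used here purely for illustration, not as Knative’s mechanism.

```python
import json
from string import Template


def knative_build(name: str, template: str, git_url: str, revision: str,
                  arguments: dict) -> dict:
    """Build manifest referencing a BuildTemplate by name (v1alpha1 field names assumed)."""
    return {
        "apiVersion": "build.knative.dev/v1alpha1",
        "kind": "Build",
        "metadata": {"name": name},
        "spec": {
            "source": {"git": {"url": git_url, "revision": revision}},
            "template": {
                "name": template,
                "arguments": [{"name": k, "value": v} for k, v in arguments.items()],
            },
        },
    }


def render_step_args(args: list, arguments: dict) -> list:
    """Resolve ${NAME} placeholders in a step's args; unknown names raise KeyError."""
    return [Template(arg).substitute(arguments) for arg in args]


params = {"IMAGE": "registry.example.com/go-app:v1"}  # hypothetical image
build = knative_build("go-app-build", "go-builder",
                      "https://github.com/example/go-app.git", "main", params)
print(json.dumps(build, indent=2))
print(render_step_args(["builds", "submit", "--tag=${IMAGE}", "."], params))
# ['builds', 'submit', '--tag=registry.example.com/go-app:v1', '.']
```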

Designing a Knative Build Pipeline

Designing a Knative Build pipeline involves defining the build steps, integrating with a source code repository, and automating the build and deployment process. This pipeline typically involves the following stages:

- Source Code Management: A Git repository stores the application’s source code. Changes to the code trigger the build process.

- Build Trigger: A trigger, often integrated with a CI/CD system or Knative Eventing, initiates the build process upon code changes (e.g., a push to the Git repository).

- Build Execution: Knative Build, using a defined BuildTemplate, retrieves the source code, builds the container image, and pushes it to a container registry.

- Image Deployment: Knative Serving, or another deployment mechanism, deploys the newly built image to the Kubernetes cluster.

- Testing and Validation: Automated tests and validation steps are executed to ensure the deployed application functions correctly.

- Rollback Strategy: A strategy for rolling back to a previous version in case of deployment failures.
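When Knative Serving is the deployment mechanism, the testing and rollback stages can be expressed as changes to the Service’s `traffic` block. Traffic shifts to the new revision in steps, and if a check fails, 100% goes back to the last known-good revision. The sketch below only computes the traffic blocks; each one could be applied as the Service’s `spec.traffic`, for example with `kubectl patch ksvc` and a merge patch. The `healthy` callback stands in for whatever automated test you run and, like the revision names, is hypothetical.

```python
from typing import Callable, Iterable


def traffic_block(stable: str, candidate: str, candidate_percent: int) -> list:
    """Traffic targets for a Knative Service; zero-percent entries are dropped."""
    targets = [
        {"revisionName": stable, "percent": 100 - candidate_percent},
        {"revisionName": candidate, "percent": candidate_percent},
    ]
    return [t for t in targets if t["percent"] > 0]


def progressive_rollout(stable: str, candidate: str,
                        healthy: Callable[[str], bool],
                        steps: Iterable[int] = (10, 50, 100)) -> list:
    """Return the sequence of traffic blocks applied, ending in a rollback on failure."""
    applied = []
    for percent in steps:
        applied.append(traffic_block(stable, candidate, percent))
        if not healthy(candidate):
            # Roll back: all traffic returns to the known-good revision.
            applied.append(traffic_block(stable, candidate, 0))
            break
    return applied


ok_history = progressive_rollout("app-00001", "app-00002", healthy=lambda rev: True)
print(ok_history[-1])  # [{'revisionName': 'app-00002', 'percent': 100}]
bad_history = progressive_rollout("app-00001", "app-00002", healthy=lambda rev: False)
print(bad_history[-1])  # [{'revisionName': 'app-00001', 'percent': 100}]
```

Because earlier revisions stay available in Knative, a rollback is only a traffic change, not a redeploy. A real pipeline would also wait and observe between steps rather than checking once.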

A simplified example using Knative Eventing could be:

- Event Source: A GitHub event source, configured to listen for `push` events on a specific repository.

- Trigger: A Knative Trigger that receives the GitHub events and filters them. Because a Build is not itself an event consumer, the trigger directs matching events to a service that starts a Knative Build.

- Build: A Knative Build resource, referencing a BuildTemplate, to build the application’s container image.

- Service: A Knative Service to deploy the built image. The Knative Service automatically creates and manages the deployment.

This design enables an automated CI/CD workflow where code changes in the Git repository trigger a build, the resulting image is deployed, and the application is automatically updated. This approach significantly reduces the time to deploy and allows for frequent, reliable updates. The specific tools and configurations depend on the complexity of the application and the desired level of automation.

Advantages of Using Knative

Knative offers a compelling set of advantages for running serverless workloads on Kubernetes, distinguishing itself from other serverless solutions. These advantages stem from its design principles, focusing on portability, vendor independence, and developer experience. Understanding these benefits is crucial for organizations evaluating serverless options.

Portability and Vendor Independence

Knative’s core strength lies in its portability and vendor independence. Unlike many serverless platforms that lock users into a specific cloud provider, Knative runs on any Kubernetes cluster, whether on-premises, in a public cloud, or a hybrid environment. This portability offers significant advantages.

Knative’s architecture allows for deploying serverless functions and applications across different Kubernetes clusters without code modifications, fostering a true “write once, run anywhere” approach. This capability is vital for organizations seeking to avoid vendor lock-in and maintain flexibility in their infrastructure strategy. By abstracting away the underlying infrastructure, Knative enables developers to focus on application logic rather than platform-specific configurations.

This portability also reduces operational overhead, simplifying deployment and management across diverse environments.

Comparison with Other Serverless Platforms

Comparing Knative with other serverless platforms reveals its unique positioning. The following table provides a comparative analysis of Knative against several popular serverless options, focusing on key characteristics:

| Feature | Knative | AWS Lambda | Google Cloud Functions | Azure Functions |
|---|---|---|---|---|
| Infrastructure | Kubernetes-based, runs on any Kubernetes cluster | AWS-managed, proprietary infrastructure | Google Cloud-managed, proprietary infrastructure | Azure-managed, proprietary infrastructure |
| Portability | Highly portable, runs on any Kubernetes cluster | Limited portability, tied to AWS | Limited portability, tied to Google Cloud | Limited portability, tied to Azure |
| Vendor Lock-in | Low, avoids vendor lock-in | High, vendor lock-in to AWS | High, vendor lock-in to Google Cloud | High, vendor lock-in to Azure |
| Cost Model | No per-invocation fee from Knative itself; you pay for the underlying Kubernetes cluster (compute, storage, networking) | Pay-per-use, based on invocation count and resource consumption | Pay-per-use, based on invocation count and resource consumption | Pay-per-use, based on invocation count and resource consumption |
| Customization | Highly customizable through Kubernetes configuration | Limited customization, controlled by AWS | Limited customization, controlled by Google Cloud | Limited customization, controlled by Azure |
| Open Source | Open Source | Proprietary | Proprietary | Proprietary |
| Ecosystem | Growing ecosystem, integrations with Kubernetes tools | Mature ecosystem, extensive integrations with AWS services | Mature ecosystem, extensive integrations with Google Cloud services | Mature ecosystem, extensive integrations with Azure services |

This table highlights the core differentiators. Knative’s Kubernetes-based nature provides the greatest flexibility, enabling developers to avoid vendor lock-in and leverage existing Kubernetes investments.

Knative vs. Traditional Serverless Platforms

Knative presents a compelling alternative to traditional serverless platforms, offering a different paradigm for deploying and managing serverless workloads. This comparison analyzes the key differentiators, focusing on cost, control, and flexibility. The objective is to provide a clear understanding of the trade-offs involved in choosing Knative over established solutions like AWS Lambda and Google Cloud Functions.

Cost Considerations

Cost is a critical factor when evaluating serverless platforms. Traditional serverless offerings often utilize a pay-per-use model, where users are charged based on the duration of function execution, the number of invocations, and resource consumption (memory and CPU). Knative, deployed on Kubernetes, introduces a different cost structure.

Knative’s cost is primarily determined by the underlying Kubernetes infrastructure. This means the user is responsible for the cost of the Kubernetes cluster itself, including the compute resources (virtual machines or bare metal), storage, and networking. However, Knative offers several cost optimization features.

- Resource Efficiency: Knative’s autoscaling capabilities dynamically scale the number of pods based on traffic demands. This can reduce costs by ensuring resources are only allocated when needed.

- Vendor Lock-in Mitigation: Because Knative runs on Kubernetes, it can be deployed on various cloud providers or on-premise infrastructure. This allows users to potentially choose the most cost-effective infrastructure based on their needs and market pricing.

- Cold Start Optimization: A cold start occurs when a request arrives for a revision with no running pods and must wait while a pod starts, adding latency. Knative lets you trade cost against latency per revision: allowing scale to zero saves resources at the price of occasional cold starts, while keeping a minimum number of replicas warm (the `autoscaling.knative.dev/min-scale` annotation) avoids them at the cost of idle capacity.

In contrast, traditional serverless platforms have simpler pricing models but can be less transparent about underlying resource utilization. For example, AWS Lambda costs are clearly defined by memory allocation, execution time, and the number of requests, but users have little direct control over the underlying infrastructure.
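The difference between the two cost models is easiest to see as arithmetic. The functions below compare a fixed cluster bill with a per-invocation bill. Every price and volume in the example is made up for illustration and should be replaced with your provider’s current rates. The model deliberately ignores free tiers, minimum billing increments, and the fact that a Knative cluster can be shared with other workloads.

```python
def cluster_monthly_cost(nodes: int, node_hourly_price: float, hours: float = 730.0) -> float:
    """Knative on Kubernetes: you pay for the nodes whether or not requests arrive."""
    return nodes * node_hourly_price * hours


def per_invocation_monthly_cost(invocations: int, price_per_million: float,
                                avg_duration_s: float, memory_gb: float,
                                price_per_gb_second: float) -> float:
    """Function-as-a-service model: request charge plus compute (GB-seconds)."""
    request_cost = invocations / 1_000_000 * price_per_million
    gb_seconds = invocations * avg_duration_s * memory_gb
    return request_cost + gb_seconds * price_per_gb_second


# Made-up inputs, for illustration only.
cluster = cluster_monthly_cost(nodes=3, node_hourly_price=0.10)
for volume in (1_000_000, 100_000_000):
    faas = per_invocation_monthly_cost(volume, price_per_million=0.20,
                                       avg_duration_s=0.2, memory_gb=0.5,
                                       price_per_gb_second=0.00002)
    print(f"{volume:>11,} requests: cluster {cluster:.2f} vs per-invocation {faas:.2f}")
```

With these made-up inputs the per-invocation bill is far lower at one million requests a month and roughly matches the fixed cluster bill at 100 million. That is the general shape of the trade-off: per-invocation pricing favors low or spiky volume, while a cluster you already run favors sustained load.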

Control and Management

The level of control and management offered by a serverless platform significantly impacts operational flexibility. Knative, due to its Kubernetes foundation, provides a high degree of control over the deployment environment.

Knative provides granular control over resource allocation, networking, and security policies. This includes the ability to configure:

- Networking: Users can define custom ingress controllers, network policies, and service meshes to control how traffic is routed and secured.

- Resource Limits: Users can set resource requests and limits for CPU and memory, ensuring efficient resource utilization and preventing resource exhaustion.

- Deployment Strategies: Knative supports advanced deployment strategies such as blue/green deployments and canary releases, enabling controlled rollouts and reducing the risk of service disruptions.
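These controls live in the Service manifest. The sketch below builds a revision template with resource requests and limits plus three per-revision autoscaling annotations. `autoscaling.knative.dev/min-scale` keeps pods warm to avoid cold starts, `autoscaling.knative.dev/max-scale` caps capacity and cost, and `autoscaling.knative.dev/target` sets the desired concurrency per pod. The annotation keys follow recent Knative documentation; older releases spelled the first two `minScale` and `maxScale`. The helper name, image, and values are hypothetical.

```python
import json


def revision_template(image: str, min_scale: int, max_scale: int,
                      target_concurrency: int, requests: dict, limits: dict) -> dict:
    """Revision template with autoscaling annotations and resource settings."""
    # Knative treats max-scale 0 as "unbounded"; this helper requires an explicit cap.
    if not 0 <= min_scale <= max_scale or max_scale < 1:
        raise ValueError("expected 0 <= min_scale <= max_scale and max_scale >= 1")
    return {
        "metadata": {
            "annotations": {
                # Kubernetes annotation values are always strings.
                "autoscaling.knative.dev/min-scale": str(min_scale),
                "autoscaling.knative.dev/max-scale": str(max_scale),
                "autoscaling.knative.dev/target": str(target_concurrency),
            }
        },
        "spec": {
            "containers": [{
                "image": image,
                # Requests reserve capacity for scheduling; limits cap usage.
                "resources": {"requests": requests, "limits": limits},
            }]
        },
    }


service = {
    "apiVersion": "serving.knative.dev/v1",
    "kind": "Service",
    "metadata": {"name": "helloworld-service"},
    "spec": {"template": revision_template(
        "registry.example.com/helloworld:latest",  # hypothetical image
        min_scale=1, max_scale=10, target_concurrency=50,
        requests={"cpu": "250m", "memory": "128Mi"},
        limits={"cpu": "500m", "memory": "256Mi"})},
}
print(json.dumps(service, indent=2))
```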

Traditional serverless platforms often abstract away much of this control, offering a simpler user experience but potentially limiting customization options. For instance, while AWS Lambda provides a simplified deployment process, users have limited control over the underlying infrastructure.

Flexibility and Portability

Flexibility and portability are essential for building resilient and adaptable applications. Knative, built on Kubernetes, excels in these areas.

Knative’s container-based approach enhances flexibility. Functions are packaged as container images, making them portable across different Kubernetes clusters, regardless of the underlying infrastructure.

- Multi-Cloud Deployments: Knative can be deployed on any Kubernetes cluster, including those hosted on different cloud providers (AWS, Google Cloud, Azure) or on-premise. This enables multi-cloud strategies and reduces vendor lock-in.

- Extensibility: Knative is designed to be extensible. Users can integrate it with other Kubernetes-native tools and services, such as service meshes (Istio, Linkerd), monitoring systems (Prometheus, Grafana), and CI/CD pipelines.

- Customization: Users can customize Knative’s behavior through Kubernetes Custom Resource Definitions (CRDs), allowing them to tailor the platform to their specific needs.

Traditional serverless platforms, while offering a simplified development experience, often tie users to their specific ecosystems. Migrating functions between platforms can be complex and time-consuming due to the proprietary nature of the underlying infrastructure.

Key Feature Differentiators

Several key features distinguish Knative from traditional serverless platforms. These features directly address common challenges in serverless computing.

- Eventing: Knative Eventing provides a robust event-driven architecture, allowing users to build applications that react to events from various sources. It offers a flexible and scalable event delivery system.

- Build: Knative Build enabled the creation of container images from source code directly within the Kubernetes cluster, streamlining the development and deployment process. It has since been superseded by Tekton Pipelines.

- Serving: Knative Serving handles the deployment and scaling of serverless functions, including automatic scaling, request routing, and traffic management. It efficiently manages the lifecycle of serverless applications.

- Portability: Knative’s Kubernetes foundation makes it highly portable. Applications built on Knative can be deployed across different cloud providers and on-premise infrastructure.

These features collectively provide a comprehensive serverless platform that offers greater control, flexibility, and portability than many traditional serverless offerings. For instance, the Knative Eventing system allows for more complex event-driven architectures compared to the eventing capabilities in some traditional serverless platforms.
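To make Serving's traffic management concrete, here is a minimal sketch of a canary rollout that splits requests between two Revisions of one Service. It assumes a Revision named `hello-knative-v1` already exists; the `TARGET` environment variable (read by the helloworld-go sample) is set only to produce a distinct new Revision, and the 90/10 split is an illustrative assumption.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello-knative
  namespace: default
spec:
  template:
    metadata:
      # Explicit Revision name; it must be prefixed with the Service name.
      name: hello-knative-v2
    spec:
      containers:
        - image: gcr.io/knative-samples/helloworld-go:v0.1
          env:
            # Hypothetical change that produces a new Revision.
            - name: TARGET
              value: "v2"
  traffic:
    # Keep most requests on the existing Revision.
    - revisionName: hello-knative-v1
      percent: 90
    # Send a small canary share to the new Revision.
    - revisionName: hello-knative-v2
      percent: 10
```

Once the new Revision looks healthy, shifting the percentages (for example to 0 and 100) completes the rollout without rebuilding or redeploying the container.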

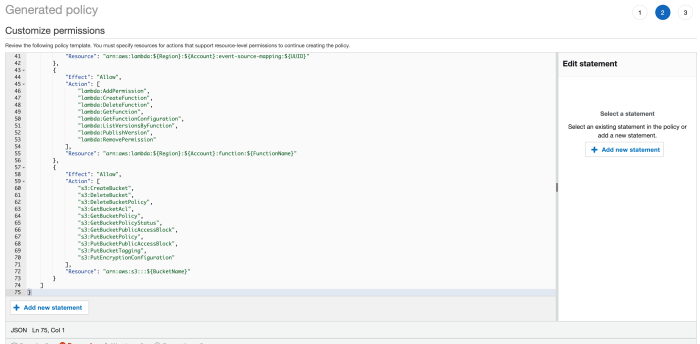

Knative Architecture and Deployment

Knative’s architecture is designed to provide a robust and scalable platform for deploying and managing serverless workloads on Kubernetes. Understanding the components and the deployment process is crucial for leveraging Knative’s capabilities. This section details the architectural elements and provides a step-by-step guide for installation and configuration.

Knative Architectural Components

Knative’s architecture is modular, consisting of several key components that work together to provide the core serverless functionalities. These components are primarily implemented as Kubernetes custom resources and controllers.

- Serving: The Serving component manages the lifecycle of serverless applications. It handles incoming requests, scales pods automatically based on traffic, and routes traffic to different revisions of the application. Key sub-components include:

- Activator: Sits in the request path when a Revision has scaled to zero or lacks enough capacity. It buffers incoming requests while pods start, forwards them once pods are ready, and reports request metrics to the Autoscaler.

- Autoscaler: Monitors traffic and automatically scales the application pods based on configurable metrics like concurrency and request rate.

- Networking Layer: Knative Serving delegates ingress and traffic management to a pluggable networking layer. Supported options include Kourier, Istio, and Contour; whichever is installed handles routing, traffic splitting, and canary deployments, and exposes Knative Services outside the cluster.

- Eventing: The Eventing component provides a flexible eventing system that allows applications to react to events from various sources. It enables the creation of event sources, event brokers, and event consumers.

- Sources: Integrates with various event sources like cloud providers, message queues, and custom sources.

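- Brokers and Channels: Brokers act as event hubs that receive events and deliver them to subscribers; Channels are lower-level intermediaries for event delivery, providing buffering and fan-out capabilities.

- Triggers: Define the routing rules for events, filtering the events that arrive at a Broker by their attributes and specifying which consumers should receive them.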

- Build: The Build component originally enabled the creation of container images from source code. Knative Build has since been deprecated and removed in favor of Tekton Pipelines, but it remains useful for understanding Knative’s historical architecture.

- Build Templates: Define the steps required to build an application, such as fetching source code, building the image, and pushing it to a container registry.

- Builds: Instances of a build template, each representing one execution of the build process.
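Because Serving's networking layer is pluggable, the ingress implementation is selected through the `ingress-class` key of the `config-network` ConfigMap in the `knative-serving` namespace. The sketch below selects Kourier and assumes Kourier has already been installed from its `net-kourier` release; for Istio the value would be `istio.ingress.networking.knative.dev`. Older Knative releases spelled the key `ingress.class`, so check the documentation for your version. In practice this setting is usually applied as a merge patch to the existing ConfigMap rather than by replacing it.

```yaml
# Sketch only: shows just the key that selects the ingress implementation.
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-network
  namespace: knative-serving
data:
  # Route Knative Services through Kourier.
  ingress-class: "kourier.ingress.networking.knative.dev"
```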

Deployment Process of Knative on a Kubernetes Cluster

Deploying Knative involves installing its core components on a Kubernetes cluster. The deployment process typically involves installing the Serving and Eventing components, along with a networking layer for Serving, such as Kourier, Istio, or Contour.

The following illustrates the high-level deployment process:

- Prerequisites: Ensure a running Kubernetes cluster is available. This can be a local cluster like Minikube, Kind, or a managed Kubernetes service like Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), or Azure Kubernetes Service (AKS). Also, ensure you have kubectl configured to interact with the cluster.

- Install a networking layer: Knative Serving needs an ingress implementation for traffic management. Kourier, Istio, and Contour are supported. If you choose Istio and it is not already present, it is typically installed with the `istioctl` command-line tool or the Istio operator.

- Install Knative Serving: Apply the Serving release manifests to the cluster with `kubectl apply -f`, first `serving-crds.yaml` (the custom resource definitions) and then `serving-core.yaml` (the core components), followed by the integration for your chosen networking layer.

- Install Knative Eventing: Similar to Serving, apply the Eventing release manifests, `eventing-crds.yaml` followed by `eventing-core.yaml`.

- Verification: Verify the installation by checking the status of the deployed Knative resources using `kubectl get` commands.

- Configuration (Optional): Configure Knative components, such as autoscaling parameters, eventing sources, and triggers, to customize the behavior of the serverless platform.
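One common configuration step is giving Knative Services a real domain. The following is a minimal sketch, assuming you own the hypothetical domain `example.com` and have pointed a wildcard DNS record at the ingress: an entry with an empty value in the `config-domain` ConfigMap makes it the default domain for all Services.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-domain
  namespace: knative-serving
data:
  # Hypothetical domain. With the default domain template, Services get
  # URLs of the form http://<service>.<namespace>.example.com
  example.com: ""
```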

Step-by-Step Guide for Installing and Configuring Knative

This section provides a detailed step-by-step guide for installing and configuring Knative on a Kubernetes cluster. This guide assumes you have a Kubernetes cluster and `kubectl` installed and configured.

- Install Istio (if using Istio as the networking layer): Knative Serving needs a networking layer, and Istio is one supported option alongside Kourier and Contour. The exact steps vary with the Istio version and your cluster setup; the following is a general example using `istioctl`.

- Download the Istio release.

- Install Istio using `istioctl install --set profile=default -y`.

- Optionally, enable Istio sidecar injection for the namespace where you will deploy your services: `kubectl label namespace default istio-injection=enabled` (replace `default` with the namespace you intend to use).

- Install Knative Serving:

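- Download the Knative Serving release manifests from the `knative/serving` releases on GitHub, as described in the official Knative installation documentation. Serving ships as `serving-crds.yaml` (the custom resource definitions) and `serving-core.yaml` (the core components).

- Apply the manifests, CRDs first: `kubectl apply -f serving-crds.yaml`, then `kubectl apply -f serving-core.yaml`. Then apply the integration for your networking layer (for Istio, `net-istio.yaml` from the `knative/net-istio` releases).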

- Wait for the Knative Serving components to be deployed. You can check the status of the deployments and pods using `kubectl get deployments -n knative-serving` and `kubectl get pods -n knative-serving`.

- Install Knative Eventing:

- Download the Knative Eventing release manifests from the `knative/eventing` releases on GitHub: `eventing-crds.yaml` and `eventing-core.yaml`.

- Apply the manifests, CRDs first: `kubectl apply -f eventing-crds.yaml`, then `kubectl apply -f eventing-core.yaml`.

- Wait for the Knative Eventing components to be deployed. Check the status using `kubectl get deployments -n knative-eventing` and `kubectl get pods -n knative-eventing`.

- Verify the Installation: Verify that all Knative components are running successfully.

- Check the status of the Knative Serving components: `kubectl get pods -n knative-serving`. Ensure all pods are in the `Running` state.

- Check the status of the Knative Eventing components: `kubectl get pods -n knative-eventing`. Ensure all pods are in the `Running` state.

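- Verify the networking layer, for example by checking that the Istio pods in the `istio-system` namespace are running (or the Kourier pods, if you installed Kourier).

- Configure DNS (if needed): Configure DNS to allow external access to your Knative services. This typically involves setting up a wildcard DNS entry that points to the ingress gateway IP address, and the details depend heavily on the cloud provider or environment where Kubernetes is running. For quick tests, Knative also provides a default-domain job that configures an sslip.io “magic DNS” domain, so no real DNS setup is needed.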

- Deploy a Sample Service: Deploy a sample Knative service to test the installation. A simple “Hello World” service can be created using a pre-built container image.

- Create a Knative Service definition (YAML file) that specifies the container image and other configurations. For example:

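```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello-knative
  namespace: default
spec:
  template:
    spec:
      containers:
        # Pre-built sample image that returns a greeting over HTTP.
        - image: gcr.io/knative-samples/helloworld-go:v0.1
          ports:
            # Port the container listens on.
            - containerPort: 8080
```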

- Apply the service definition: `kubectl apply -f hello-knative.yaml`.

- Get the URL of the service: `kubectl get ksvc hello-knative -o jsonpath='{.status.url}'`.

- Access the service using the URL (for example with `curl`). You should see the sample's greeting, such as “Hello World!”.


- Configure Eventing (Optional): Configure event sources and triggers to enable event-driven applications. This involves deploying event sources, a Broker (or Channels with Subscriptions), and Triggers that connect event producers to event consumers. Each component is defined in a YAML file and applied to the cluster using `kubectl apply`.
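As a sketch of the eventing configuration described above, the manifests below create a Broker and a Trigger that forwards events of one CloudEvents type to the `hello-knative` Service from the earlier example. The event type `com.example.greeting` and the resource names are hypothetical, and a Broker implementation (for example the multi-tenant channel-based broker backed by the in-memory channel) is assumed to be installed.

```yaml
apiVersion: eventing.knative.dev/v1
kind: Broker
metadata:
  name: default
  namespace: default
  annotations:
    # Assumes the multi-tenant channel-based broker is installed.
    eventing.knative.dev/broker.class: MTChannelBasedBroker
---
apiVersion: eventing.knative.dev/v1
kind: Trigger
metadata:
  name: hello-trigger
  namespace: default
spec:
  broker: default
  filter:
    attributes:
      # Only events with this (hypothetical) CloudEvents type are delivered.
      type: com.example.greeting
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: hello-knative
```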

Use Cases and Examples of Knative

Knative’s flexibility and scalability make it suitable for a wide range of applications across diverse industries. Its ability to manage serverless workloads on Kubernetes allows organizations to streamline development, improve resource utilization, and accelerate time-to-market. The following sections detail specific use cases and examples, showcasing how Knative is deployed in real-world scenarios.

Event-Driven Applications

Event-driven architectures are a key area where Knative excels. Its Eventing component provides robust capabilities for managing and routing events, enabling the creation of responsive and scalable applications. Organizations leverage Knative Eventing to:

- Process Real-Time Data Streams: Knative can ingest and process data streams from various sources, such as IoT devices, social media feeds, or financial markets. This enables real-time analytics, anomaly detection, and immediate responses to changing conditions. For example, a smart manufacturing company might use Knative to process sensor data from its factory floor, triggering automated alerts or adjustments to production processes based on predefined rules.

- Build Microservices-Based Systems: Knative facilitates the decoupling of services, allowing them to react to events independently. This architecture supports easier updates, scaling, and fault isolation. A retail company, for instance, could employ Knative to handle order processing, inventory updates, and customer notifications as separate microservices, each triggered by specific events such as a new order or a change in stock levels.

- Implement Serverless Workflows: Knative simplifies the creation of complex workflows that react to events. This can automate tasks such as image processing, video transcoding, or data transformation. Consider a media company using Knative to automatically resize and optimize images uploaded by users, triggering these processes based on file upload events.

Web Applications and APIs

Knative’s Serving component provides a streamlined platform for deploying and managing web applications and APIs. It offers features such as autoscaling, traffic management, and versioning, making it ideal for handling dynamic workloads. Examples of Knative in web application and API deployments include:

- Deploying RESTful APIs: Knative allows developers to quickly deploy and scale APIs built using various frameworks. For example, a financial services company might use Knative to deploy APIs that provide access to real-time market data, user account information, or transaction processing services. The autoscaling capabilities of Knative ensure the APIs can handle fluctuating traffic loads efficiently.

- Running Serverless Web Applications: Knative can host the backend of web applications, allowing developers to focus on frontend development and user experience. This approach is particularly useful for applications with variable traffic patterns or those that require quick iteration cycles. A social media platform, for example, could use Knative to handle the backend logic for user profiles, content feeds, and notification services.

- Building and Deploying Microservices: Knative supports the development and deployment of microservices that power web applications. This enables organizations to decompose complex applications into smaller, more manageable components. An e-commerce platform, for instance, might use Knative to deploy microservices for product catalogs, shopping carts, and payment gateways, ensuring each service can be independently scaled and updated.
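For microservices that only other services should call, Knative Serving can keep a Service off the external ingress. In the minimal sketch below, the `inventory-api` name and image are hypothetical; the `networking.knative.dev/visibility: cluster-local` label restricts the Service to in-cluster traffic.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: inventory-api          # hypothetical internal microservice
  namespace: default
  labels:
    # Reachable only from inside the cluster, not via the external ingress.
    networking.knative.dev/visibility: cluster-local
spec:
  template:
    spec:
      containers:
        - image: registry.example.com/inventory-api:1.0   # hypothetical image
```

Other workloads in the cluster can then reach it at its internal address, `http://inventory-api.default.svc.cluster.local`.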

Machine Learning and Data Processing

Knative is also applicable in machine learning and data processing workflows, particularly for tasks that benefit from serverless scaling and event-driven architectures. Organizations use Knative for:

- Serving Machine Learning Models: Knative can host machine learning models, allowing them to be accessed via APIs. This is useful for applications that require real-time predictions or insights. For example, a healthcare provider could deploy a model using Knative to predict patient outcomes based on medical data, providing quick access to critical information.

- Batch Data Processing: Knative can be used to trigger and manage batch data processing jobs. This enables organizations to automate tasks such as data transformation, cleaning, and analysis. A marketing company, for instance, might use Knative to process customer data, generate reports, and segment audiences based on predefined rules.

- Data Pipeline Orchestration: Knative Eventing can orchestrate complex data pipelines, enabling data engineers to build automated workflows that ingest, process, and analyze data from various sources. A financial institution could use Knative to build a data pipeline that processes market data feeds, identifies trading opportunities, and executes trades automatically.
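For scheduled batch work of the kind described above, Knative Eventing's `PingSource` can emit an event on a cron schedule to a Service that performs the processing. This is a hedged sketch: the `report-generator` Service and the JSON payload are hypothetical, and each run must finish within the Revision's request timeout, so very long batch jobs may fit better elsewhere.

```yaml
apiVersion: sources.knative.dev/v1
kind: PingSource
metadata:
  name: nightly-report
  namespace: default
spec:
  # Standard cron syntax: every day at 02:00.
  schedule: "0 2 * * *"
  contentType: "application/json"
  # Hypothetical payload telling the receiver which job to run.
  data: '{"job": "generate-report"}'
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: report-generator   # hypothetical batch-processing Service
```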

Examples of Organizations Using Knative

Several prominent organizations have adopted Knative to enhance their cloud-native deployments. These real-world examples highlight the versatility and benefits of the platform.

- Google: Google launched Knative in 2018 and remains a primary contributor. Its Cloud Run service implements the Knative Serving API, which lets Knative Service definitions run in a fully managed environment.

- VMware: VMware provides support and services for Knative, and also integrates it into its cloud platforms. This allows VMware customers to leverage Knative for their serverless applications.

- IBM: IBM has integrated Knative into its cloud offerings; IBM Cloud Code Engine, for example, is built on Knative and provides tools and services that simplify the deployment and management of Knative applications.

These examples show that major cloud and infrastructure vendors have built products on Knative, making it available to their customers without requiring them to operate it themselves. Adoption continues to grow, driven by Knative’s ability to streamline serverless deployments and improve developer productivity.

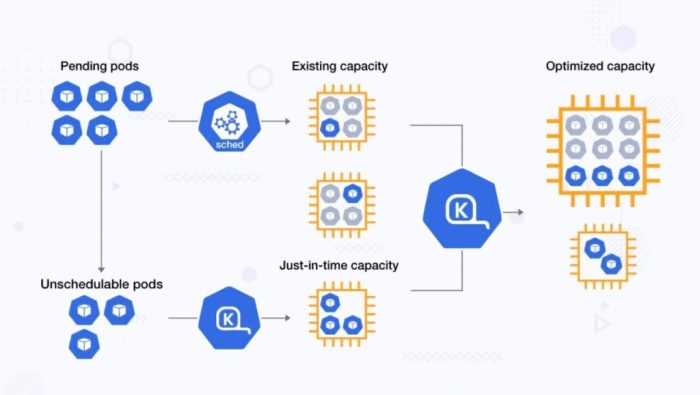

Knative’s Scalability and Performance

Knative’s design prioritizes scalability and performance, critical for serverless applications that must dynamically adapt to fluctuating workloads. Its architecture leverages Kubernetes’ underlying capabilities to provide robust autoscaling and efficient resource utilization. This section delves into Knative’s mechanisms for handling traffic spikes, its performance characteristics, and the tools available for monitoring and optimization.

Autoscaling Based on Traffic

Knative’s autoscaling is primarily driven by the Knative Serving component, which monitors incoming requests and adjusts the number of application instances (pods) accordingly. This dynamic scaling helps allocate resources efficiently, avoiding both over-provisioning and under-provisioning. By default, Knative uses its own Knative Pod Autoscaler (KPA), which scales on concurrency (the number of in-flight requests per pod) or on requests per second (RPS) and supports scale-to-zero. Kubernetes’ Horizontal Pod Autoscaler (HPA) can be selected instead as an autoscaler class for CPU- or memory-based scaling, but it does not support scaling to zero.
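A simplified view of the concurrency-based calculation follows. It is a hedged sketch rather than the autoscaler's exact algorithm, which also applies panic mode, scale-up rate limits, and min/max bounds: the Knative Pod Autoscaler divides the observed concurrency by the per-pod target scaled by a target utilization.

```latex
N_{\text{desired}} = \left\lceil \frac{C_{\text{observed}}}{T \cdot u} \right\rceil
```

Here C_observed is the total number of in-flight requests averaged over the stable window (60 seconds by default), T is the per-pod concurrency target (`container-concurrency-target-default`, 100 by default), and u is the target utilization (70% by default). With 350 concurrent requests and the defaults, N = ceil(350 / (100 × 0.7)) = 5 pods.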

The following points elaborate on how Knative manages autoscaling:

- Concurrency-Based Scaling: Knative monitors the number of concurrent requests being handled by each pod. If the concurrency exceeds a predefined threshold, new pods are automatically created to handle the increased load. This ensures that response times remain consistent, even during peak traffic periods.

- RPS-Based Scaling: Knative also supports scaling based on the rate of requests per second. This is particularly useful for applications where the latency of individual requests is less critical than the overall throughput. The autoscaler adjusts the number of pods based on the RPS, ensuring that the application can handle the required volume of requests.

- Cold Start Handling: Knative’s autoscaling also accounts for cold starts, the delay incurred when a new pod must be created to serve a request. To reduce their frequency, you can set a minimum scale (the `autoscaling.knative.dev/min-scale` annotation) so that a number of pods always stay warm. When traffic rises sharply within a short window, the autoscaler also enters a “panic mode” that scales up more aggressively.

- Scale-to-Zero: When there is no traffic, Knative can scale a service down to zero pods, conserving resources. The first request after scaling to zero triggers a cold start, which, while adding latency, ensures that resources are only used when needed.
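The scaling behavior above is configured per Revision through annotations on the Service template. The following minimal sketch reuses the `hello-knative` example; the target and bounds are illustrative values, not recommendations. Older Knative releases spelled the bounds `minScale` and `maxScale`.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello-knative
  namespace: default
spec:
  template:
    metadata:
      annotations:
        # Knative Pod Autoscaler, the default autoscaler class.
        autoscaling.knative.dev/class: "kpa.autoscaling.knative.dev"
        # Scale on concurrency; "rps" would scale on requests per second.
        autoscaling.knative.dev/metric: "concurrency"
        # Soft target of about 50 in-flight requests per pod.
        autoscaling.knative.dev/target: "50"
        # Keep one pod warm to avoid cold starts; "0" allows scale-to-zero.
        autoscaling.knative.dev/min-scale: "1"
        # Upper bound on the number of pods for this Revision.
        autoscaling.knative.dev/max-scale: "10"
    spec:
      containers:
        - image: gcr.io/knative-samples/helloworld-go:v0.1
```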

Performance Characteristics of Knative Applications

The performance of Knative applications is influenced by several factors, including the underlying Kubernetes cluster, the application code itself, and Knative’s configuration. Several characteristics contribute to the overall performance profile.

- Request Routing: Knative Serving efficiently routes incoming requests to the appropriate application instances. This routing is handled by the configured networking layer (for example Kourier or Istio), which supports traffic splitting between revisions and canary deployments.

- Caching: Knative does not provide a caching layer of its own, but applications can reduce latency with standard techniques such as HTTP caching headers, in-application caches, and content delivery networks (CDNs) placed in front of the ingress.

- Resource Utilization: Knative’s autoscaling and resource management features help optimize resource utilization. By dynamically scaling the number of pods, Knative ensures that resources are efficiently allocated, minimizing waste and improving overall performance.

- Cold Start Latency: Cold starts, as mentioned earlier, can introduce latency. Setting a minimum scale keeps pods warm, while small container images and fast application startup shorten the cold starts that do occur.

The impact of cold starts can be quantified. For example, consider an application deployed on Knative with a cold start latency of 500ms. If the application receives 100 requests per second and 10% of those requests trigger a cold start, the average latency increase due to cold starts would be 50ms. This calculation highlights the importance of minimizing cold start frequency and latency.
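The same estimate can be written out as follows, where p_cold is the fraction of requests that hit a cold start and L_cold is the extra latency each one adds, assuming warm requests incur no additional delay:

```latex
\Delta \bar{L} = p_{\text{cold}} \cdot L_{\text{cold}} = 0.10 \times 500\,\text{ms} = 50\,\text{ms}
```

The request rate (100 requests per second) does not change this average; it only determines how many requests per second pay the cold-start penalty (here, 10).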

Monitoring and Optimizing Knative Deployments

Effective monitoring and optimization are crucial for ensuring the performance and reliability of Knative applications. Knative integrates with various monitoring tools and provides several options for optimizing deployments. Knative applications can be monitored using various tools, including Prometheus, Grafana, and other Kubernetes monitoring solutions. Monitoring provides insights into the performance of applications and identifies areas for optimization.

- Metrics Collection: Knative emits a variety of metrics that can be used to monitor the performance of applications. These metrics include:

- Request rates (RPS)

- Error rates

- Latency (P99, P95, P50)

- Concurrency

- Resource utilization (CPU, memory)

- Alerting: Monitoring tools can be configured to generate alerts based on predefined thresholds. For example, an alert can be triggered if the error rate exceeds a certain percentage or if the latency exceeds a specific value.

- Optimization Techniques:

- Resource Requests and Limits: Properly configuring resource requests and limits for pods is essential for optimizing performance. Setting appropriate values for CPU and memory ensures that pods have the resources they need without consuming excessive resources.

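- Concurrency Configuration: Tuning the concurrency settings can significantly impact performance. Knative offers two related settings: `containerConcurrency` in the Revision spec, a hard limit on the number of concurrent requests a pod will accept, and the `autoscaling.knative.dev/target` annotation, a soft target the autoscaler aims for. Finding the optimal values requires load testing and experimentation.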

- Application Code Optimization: Optimizing the application code itself is crucial for improving performance. This includes techniques such as code profiling, database query optimization, and caching.
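The resource and concurrency settings above can be combined in one Service definition. The following minimal sketch reuses the `hello-knative` example; the CPU, memory, and concurrency values are illustrative assumptions to be replaced with figures from your own load tests.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello-knative
  namespace: default
spec:
  template:
    spec:
      # Hard limit: at most 10 in-flight requests per pod (0 = unlimited).
      containerConcurrency: 10
      containers:
        - image: gcr.io/knative-samples/helloworld-go:v0.1
          resources:
            # Guaranteed resources used for scheduling.
            requests:
              cpu: "100m"
              memory: "128Mi"
            # Ceiling the container may not exceed.
            limits:
              cpu: "500m"
              memory: "256Mi"
```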

By continuously monitoring and optimizing Knative deployments, developers can ensure that their applications are highly scalable, performant, and reliable.

Final Wrap-Up

In conclusion, Knative emerges as a pivotal technology for the evolution of serverless computing. It empowers developers to harness the full potential of Kubernetes, providing a flexible, scalable, and portable platform for building and deploying modern applications. From its core components like Serving and Eventing to its robust architecture and deployment capabilities, Knative is shaping the future of serverless by delivering a powerful and vendor-agnostic solution.

Its ability to handle autoscaling and manage events, combined with build automation through companion projects such as Tekton Pipelines, makes it a compelling choice for organizations seeking to optimize their application development workflows.

FAQ Resource

What are the main components of Knative?

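Knative’s primary components are Serving and Eventing. Serving handles deployment, request routing, and autoscaling, while Eventing facilitates event-driven architectures. Early releases also included a Build component for building container images, which has since been replaced by Tekton Pipelines. Knative Functions, with its `func` CLI, adds a function-oriented developer workflow on top of Serving.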

How does Knative differ from traditional serverless platforms like AWS Lambda?

Unlike traditional serverless platforms, Knative offers greater control, portability, and vendor independence. It runs directly on Kubernetes, allowing users to avoid vendor lock-in and manage their infrastructure. Knative also provides more flexibility in terms of customization and integration with existing Kubernetes tooling.

Is Knative suitable for all types of applications?

Knative is particularly well-suited for event-driven applications, microservices, and workloads that benefit from autoscaling and efficient resource utilization. While it can handle a wide range of applications, it might not be the best fit for applications requiring extremely low latency or those with complex state management requirements.

What are the resource requirements for running Knative?

The resource requirements for Knative depend on the size and complexity of the deployed applications. However, Knative is designed to be lightweight and efficient. It requires a Kubernetes cluster and sufficient resources (CPU, memory) to support the deployed applications and Knative’s control plane components.

How do I monitor Knative applications?

Knative applications can be monitored using standard Kubernetes monitoring tools and services. Knative components expose metrics in Prometheus format, so they can be scraped by Prometheus and visualized in tools such as Grafana, and logs are collected by the cluster’s standard Kubernetes logging setup. This allows for detailed performance analysis, troubleshooting, and optimization of Knative deployments.