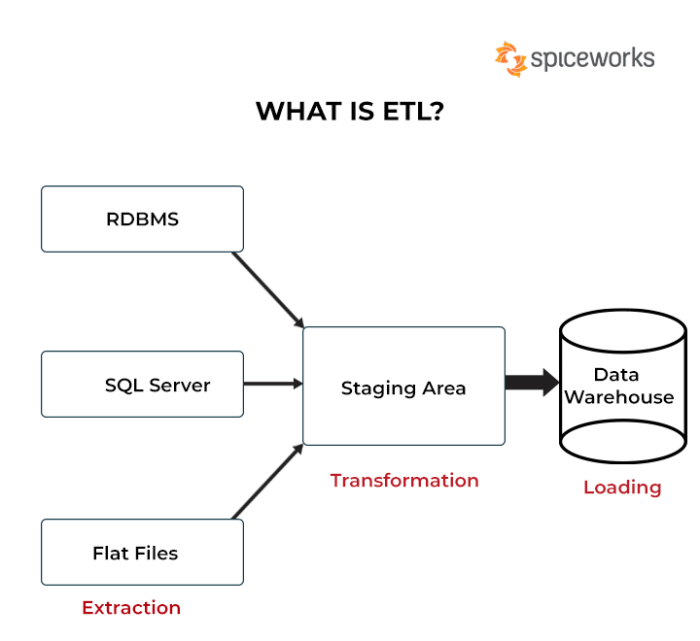

The concept of serverless ETL (Extract, Transform, Load) represents a paradigm shift in data integration, offering a dynamic and cost-effective alternative to traditional methods. This approach leverages cloud-based services to automate and manage data pipelines without the need for provisioning or managing underlying infrastructure. It emphasizes a pay-as-you-go model, allowing for optimized resource allocation and scalability tailored to fluctuating data volumes.

This exploration delves into the intricacies of serverless ETL, examining its core principles, architectural components, and operational benefits. We will analyze its advantages in terms of cost optimization, scalability, and reduced management overhead. Furthermore, we will dissect the key stages of an ETL pipeline within a serverless context, from data extraction and transformation to loading, highlighting best practices and relevant technologies.

Finally, a comparison with traditional ETL approaches will provide a comprehensive understanding of when and where serverless ETL offers the most advantageous solution.

Defining Serverless ETL

Serverless Extract, Transform, Load (ETL) represents a paradigm shift in data integration, moving away from the traditional, infrastructure-managed approach. This architecture leverages cloud-based services to automate and manage the entire ETL pipeline, offering significant advantages in scalability, cost-efficiency, and operational agility. The core principle is to eliminate the need for server provisioning and management, allowing data engineers to focus on the data itself, rather than the underlying infrastructure.

Serverless ETL Concept and Advantages

The fundamental concept of serverless ETL revolves around the execution of ETL processes without the explicit management of servers. Cloud providers offer services that automatically provision, scale, and manage the compute resources required to run ETL workloads. This “serverless” model is characterized by event-driven architectures where compute resources are allocated dynamically in response to events, such as the arrival of new data or scheduled triggers.The advantages of serverless ETL over traditional ETL processes are numerous and compelling:

- Reduced Operational Overhead: Serverless ETL eliminates the need for infrastructure provisioning, maintenance, and scaling. This reduces the operational burden on data engineering teams, freeing them to focus on data quality, transformation logic, and business insights.

- Enhanced Scalability: Serverless platforms automatically scale compute resources based on demand. This ensures that ETL pipelines can handle fluctuating data volumes and processing needs without manual intervention. The scaling happens on-demand, and it can scale up or down automatically.

- Cost Efficiency: Serverless ETL models often employ a pay-per-use pricing structure. Users are charged only for the compute resources consumed during the execution of ETL tasks. This can result in significant cost savings compared to traditional ETL, where resources are often provisioned for peak load, leading to idle capacity and wasted resources.

- Increased Agility: Serverless ETL enables faster development cycles and quicker time-to-market. Data engineers can deploy and update ETL pipelines more rapidly, allowing for quicker responses to changing business requirements.

- Improved Fault Tolerance: Serverless platforms often provide built-in fault tolerance mechanisms. If a component fails, the platform automatically restarts or re-executes the task, ensuring the continuous operation of the ETL pipeline.

Defining “Serverless” in the Context of ETL

In the context of ETL, “serverless” signifies a computing model where the cloud provider automatically manages the underlying infrastructure, including servers, operating systems, and scaling. Data engineers are not required to provision, configure, or maintain any servers. They deploy code (e.g., transformation logic, data loading scripts) and define triggers or schedules for execution. The cloud provider handles the allocation of compute resources, scaling, and management of the infrastructure.

The essential characteristics of “serverless” in ETL are:

- No Server Management: Data engineers do not manage servers, virtual machines, or containers.

- Automatic Scaling: Compute resources scale automatically based on demand, handling fluctuating workloads without manual intervention.

- Pay-per-Use Pricing: Users are charged only for the actual compute time and resources consumed.

- Event-Driven Architecture: ETL processes are often triggered by events, such as data arrival or scheduled times.

- High Availability: Serverless platforms typically offer built-in fault tolerance and high availability.

Key Components of a Serverless ETL Architecture

A typical serverless ETL architecture comprises several key components, each contributing to the overall data integration process. The specific services used may vary depending on the cloud provider and the specific requirements of the ETL pipeline, but the fundamental components remain consistent.

- Data Sources: The origin of the data, which could include databases, files, streaming sources, or other data repositories. Examples include: relational databases (e.g., MySQL, PostgreSQL), NoSQL databases (e.g., MongoDB, Cassandra), cloud storage services (e.g., Amazon S3, Google Cloud Storage), and streaming platforms (e.g., Apache Kafka, Amazon Kinesis).

- Data Ingestion/Ingest: This component is responsible for extracting data from the source systems and making it available for processing. Serverless services, such as AWS Lambda, Azure Functions, and Google Cloud Functions, are frequently used for data ingestion. These functions can be triggered by events (e.g., file uploads) or scheduled to run at specific intervals.

- Data Storage: The location where the extracted and transformed data is stored. Cloud storage services like Amazon S3, Google Cloud Storage, or Azure Blob Storage are common choices for staging and landing zones. Data warehouses like Amazon Redshift, Google BigQuery, or Azure Synapse Analytics are often used for the final storage of processed data.

- Transformation Engine: This component performs the data transformation logic. Serverless functions, container orchestration services (e.g., Kubernetes), or data processing frameworks (e.g., Apache Spark) can be employed for data transformation. The transformation logic may involve cleaning, filtering, aggregating, and enriching the data.

- Orchestration/Workflow Management: This component manages the sequence and dependencies of the ETL tasks. Serverless workflow services such as AWS Step Functions, Azure Logic Apps, and Google Cloud Composer are used to define and orchestrate the ETL pipeline.

- Monitoring and Logging: Services to monitor the performance and health of the ETL pipeline and to log events for debugging and auditing. Cloud providers offer monitoring and logging services (e.g., Amazon CloudWatch, Azure Monitor, Google Cloud Logging) to track the execution of ETL tasks, monitor resource consumption, and identify potential issues.

Key Benefits of Serverless ETL

Serverless ETL (Extract, Transform, Load) offers a compelling alternative to traditional ETL processes by leveraging cloud-based services. This architecture provides significant advantages across various operational and financial dimensions, making it a popular choice for modern data pipelines. This section details the key benefits associated with serverless ETL, focusing on cost optimization, scalability, elasticity, and operational efficiency.

Cost Optimization in Serverless ETL

Serverless ETL inherently promotes cost efficiency compared to traditional ETL approaches. The pay-per-use model eliminates the need for upfront investments in infrastructure and allows for precise resource allocation based on actual usage.The primary driver for cost savings is the elimination of idle resources. Traditional ETL systems often require provisioning of servers that are sized to handle peak workloads. However, these servers are typically underutilized during periods of low activity, leading to wasted resources and unnecessary costs.

Serverless ETL, on the other hand, automatically scales compute and storage resources up or down based on the incoming data volume and processing requirements. This dynamic scaling ensures that users pay only for the resources consumed during the execution of ETL tasks, thereby minimizing costs.Furthermore, serverless platforms often offer cost-effective pricing models for various services involved in ETL, such as data storage, data processing, and orchestration.

For instance, cloud providers typically offer tiered pricing for storage, where the cost per gigabyte decreases as the data volume increases. Similarly, the cost of compute resources may vary based on the duration of the task execution and the amount of memory and processing power required.The utilization of serverless functions, such as AWS Lambda or Azure Functions, for data transformation further contributes to cost optimization.

These functions are only invoked when triggered by an event, such as the arrival of a new data file or a scheduled time. The execution duration of these functions is typically short, and the cost is calculated based on the number of invocations and the compute time consumed. This granular pricing model allows for precise cost control and avoids the inefficiencies associated with continuously running servers.The implementation of serverless ETL can lead to significant cost reductions, particularly for workloads with variable or unpredictable data volumes.

This approach allows organizations to optimize their data processing costs and allocate resources more efficiently.

Scalability and Elasticity in Serverless ETL

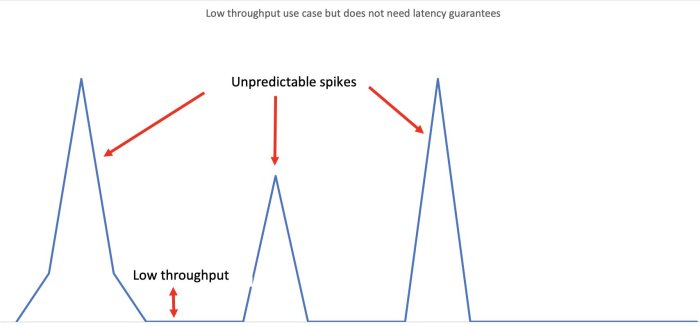

Serverless ETL architectures excel in scalability and elasticity, offering significant advantages over traditional ETL systems. These characteristics are crucial for handling fluctuating data volumes and ensuring consistent performance under varying workloads.Elasticity refers to the ability of a system to automatically scale resources up or down based on demand. Serverless ETL services are inherently elastic, meaning they can dynamically adjust the compute and storage resources to meet the changing needs of the data pipeline.

This is achieved through the use of serverless functions, managed data storage, and event-driven architectures.The scalability of serverless ETL is directly linked to its elastic nature. As data volumes increase, the serverless platform automatically provisions additional resources to handle the increased workload. This scaling is typically managed by the cloud provider, eliminating the need for manual intervention or capacity planning.

For example, when processing a large batch of data, the serverless platform can automatically launch multiple instances of a transformation function to parallelize the processing, significantly reducing the overall execution time.Consider the following scenario: a retail company collects sales data from its online store. During peak shopping seasons, the volume of sales data increases dramatically. With a serverless ETL pipeline, the system automatically scales up the compute resources to handle the increased data volume without impacting the performance of the data pipeline.

The same system can scale down the resources during periods of low activity, thereby optimizing the cost.The elasticity and scalability of serverless ETL provide the following key benefits:

- Improved Performance: By automatically scaling resources, serverless ETL ensures that data processing tasks are completed efficiently, even during peak loads.

- Reduced Operational Overhead: The cloud provider manages the infrastructure, eliminating the need for manual scaling and capacity planning.

- Cost Optimization: Resources are only consumed when needed, leading to cost savings.

- Enhanced Agility: Serverless ETL enables faster development and deployment of data pipelines, allowing organizations to respond quickly to changing business requirements.

The inherent scalability and elasticity of serverless ETL architectures make them well-suited for handling the dynamic demands of modern data processing workloads.

Operational Benefits of Serverless ETL: Reduced Management Overhead

Serverless ETL significantly reduces the operational overhead associated with managing traditional ETL systems. This reduction in management burden translates to increased efficiency, faster development cycles, and a focus on core business objectives.The primary operational benefit is the reduced need for infrastructure management. Traditional ETL systems require dedicated servers, storage, and networking infrastructure. These components need to be provisioned, configured, monitored, and maintained, which consumes significant time and resources.

Serverless ETL, on the other hand, abstracts away the underlying infrastructure, allowing developers to focus on the data transformation logic rather than the infrastructure management.The cloud provider handles the following aspects of infrastructure management:

- Server Provisioning and Management: The cloud provider automatically provisions and manages the servers required to run the ETL tasks.

- Scalability and Availability: The cloud provider ensures that the system can automatically scale to handle fluctuating workloads and provides high availability.

- Security and Compliance: The cloud provider implements security measures and ensures compliance with industry regulations.

- Monitoring and Logging: The cloud provider provides monitoring and logging tools to track the performance of the ETL pipeline.

The use of serverless functions further simplifies operational tasks. Developers can deploy and manage individual functions without having to worry about the underlying infrastructure. The cloud provider handles the execution of the functions, including scaling, security, and monitoring.Consider a scenario where a company needs to process data from multiple sources and load it into a data warehouse. With a traditional ETL system, the company would need to set up and maintain the servers, install and configure the ETL software, and manage the data pipeline.

With serverless ETL, the company can use serverless functions to extract data from the sources, transform the data, and load it into the data warehouse. The cloud provider handles the infrastructure, and the company can focus on developing the data transformation logic.The reduced management overhead associated with serverless ETL results in:

- Faster Development Cycles: Developers can focus on building and deploying data pipelines without the need to manage the underlying infrastructure.

- Increased Efficiency: The cloud provider handles the operational tasks, freeing up IT staff to focus on other critical tasks.

- Improved Agility: Serverless ETL allows organizations to respond quickly to changing business requirements and adapt to new data sources.

- Reduced Operational Costs: The reduced management overhead translates to lower operational costs.

Serverless ETL Architecture Components

The architecture of a serverless ETL pipeline is characterized by its event-driven nature and the utilization of managed services. This design allows for automatic scaling, reduced operational overhead, and pay-per-use pricing. The following sections detail the core components and their interactions within a typical serverless ETL workflow.

Design of a Typical Serverless ETL Pipeline

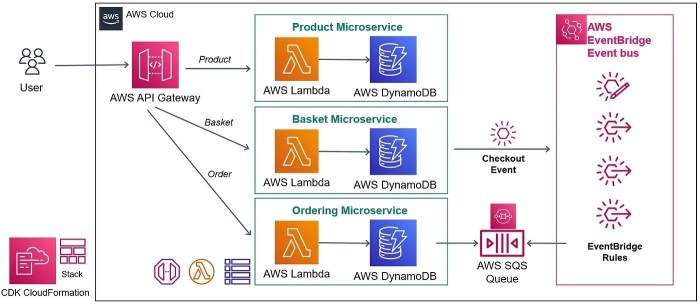

A typical serverless ETL pipeline leverages various cloud-native services to ingest, transform, and load data. The process usually starts with data ingestion from various sources, triggering the ETL workflow. Data transformation, often the most complex stage, involves cleaning, formatting, and enriching the data. Finally, the transformed data is loaded into a data warehouse or data lake for analysis.Here’s a breakdown of the components and their roles:* Data Source: The origin of the data.

This can include databases, file storage, streaming services, or other applications.

Trigger

An event that initiates the ETL process. This can be a new file arriving in a storage bucket, a database change, or a scheduled event.

Ingestion Service

This service captures data from the data source. It can involve reading files, subscribing to streams, or querying databases. Examples include Amazon S3 event notifications, Azure Blob Storage triggers, or Google Cloud Storage triggers.

Compute Service

This is the workhorse of the transformation stage. Serverless functions, like AWS Lambda, Azure Functions, or Google Cloud Functions, are triggered by the ingestion service. These functions execute the transformation logic, such as cleaning, formatting, and aggregating data.

Transformation Logic

This encompasses the code executed within the compute service. It uses libraries and frameworks for data manipulation. Examples include Pandas in Python, Spark in Scala, or specialized data processing tools.

Storage Service (Staging Area)

This provides temporary storage for intermediate data or transformed results. Cloud storage services like Amazon S3, Azure Blob Storage, or Google Cloud Storage are frequently used.

Data Warehouse/Data Lake

The destination for the transformed data. This is where the data is loaded for analysis and reporting. Examples include Amazon Redshift, Azure Synapse Analytics, Google BigQuery, or data lakes built on cloud storage.

Monitoring and Logging

These services provide visibility into the ETL pipeline’s performance and health. CloudWatch (AWS), Azure Monitor, and Google Cloud Operations are commonly used for monitoring and logging.

Orchestration Service (Optional)

For more complex pipelines, an orchestration service can manage the workflow and dependencies between different functions. Examples include AWS Step Functions, Azure Logic Apps, and Google Cloud Composer.The data flows through this pipeline in a series of event-driven steps. For instance, when a new file is uploaded to an S3 bucket, an event notification triggers an AWS Lambda function.

The Lambda function processes the file, transforms the data, and stores the transformed data in a data warehouse. The entire process is automated and scalable.

Common Data Sources in Serverless ETL

Data sources in serverless ETL are diverse, and their selection depends on the specific use case and data requirements. Here is a table showcasing some common data sources used in serverless ETL:

| Data Source | Description | Typical Integration Method | Example Use Case |

|---|---|---|---|

| Databases (SQL/NoSQL) | Relational databases (e.g., MySQL, PostgreSQL) and NoSQL databases (e.g., MongoDB, Cassandra) store structured and unstructured data. | Database triggers, change data capture (CDC) tools, direct query via API or SDK. | Real-time replication of transactional data to a data warehouse for reporting and analytics. |

| File Storage (CSV, JSON, Parquet) | Cloud storage services (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage) store various file formats. | Event-driven triggers (e.g., new file uploaded), scheduled jobs, direct access via API or SDK. | Processing log files, customer data, or any structured data stored in files. |

| Streaming Services (Kafka, Kinesis, Event Hubs) | Real-time data streams from various sources, such as IoT devices, social media, or application logs. | Subscription to data streams via respective client libraries or connectors. | Processing real-time sensor data, social media feeds, or clickstream data for real-time analytics. |

| APIs | Data accessed via REST APIs or other web service interfaces. | API calls using HTTP requests, utilizing API gateways and authentication mechanisms. | Extracting data from SaaS applications, social media platforms, or third-party data providers. |

Common Serverless Compute Services and Their Uses

Serverless compute services are essential for executing the transformation logic within an ETL pipeline. These services offer scalability, cost-effectiveness, and simplified management. Here is a list of common serverless compute services and their typical uses:* AWS Lambda:

Typical Uses

Data transformation, event processing, API backends, and scheduled tasks. AWS Lambda is frequently used for ETL operations due to its integration with various AWS services and its ability to handle diverse workloads.

Example

Processing CSV files uploaded to Amazon S3, transforming the data, and loading it into Amazon Redshift.* Azure Functions:

Typical Uses

Web APIs, event triggers, data processing, and IoT applications. Azure Functions integrates well with other Azure services and offers support for various programming languages.

Example

Transforming data from Azure Event Hubs, cleaning the data, and storing it in Azure Data Lake Storage.* Google Cloud Functions:

Typical Uses

Event-driven functions, API endpoints, and data processing. Google Cloud Functions integrates seamlessly with other Google Cloud services.

Example

Processing data from Google Cloud Storage, transforming the data, and loading it into Google BigQuery.* Cloudflare Workers:

Typical Uses

Edge computing, web application performance, and serverless functions deployed at the edge of the network.

Example

Performing data transformations closer to the end-users, reducing latency, and improving user experience.

Data Sources and Destinations

Serverless ETL pipelines exhibit remarkable flexibility in handling diverse data sources and destinations, enabling seamless integration across various platforms and formats. This capability is crucial for modern data-driven applications, allowing organizations to ingest, process, and output data effectively, regardless of its origin or intended use. The selection of appropriate sources and destinations, alongside the ability to handle different data formats, significantly impacts the overall efficiency and effectiveness of the ETL process.

Data Sources Compatible with Serverless ETL

Serverless ETL processes are designed to be compatible with a wide range of data sources, including both cloud-based and on-premise systems. The choice of data source often dictates the specific services and connectors utilized within the ETL pipeline.

- Cloud Storage: Cloud storage services like Amazon S3, Google Cloud Storage, and Azure Blob Storage are frequently used as data sources. These services provide scalable and cost-effective solutions for storing large volumes of data in various formats. Serverless ETL functions can directly access and process data residing in these storage locations.

- Databases: Databases, both relational and NoSQL, represent a critical data source for many ETL pipelines. Examples include:

- Relational Databases: PostgreSQL, MySQL, SQL Server, and Oracle are commonly used relational databases. Serverless ETL can extract data from these databases using JDBC drivers or database-specific connectors.

- NoSQL Databases: MongoDB, Cassandra, and DynamoDB are examples of NoSQL databases. Serverless ETL pipelines can integrate with these databases through their respective APIs or dedicated connectors.

- Streaming Data Sources: Real-time data streams, such as those generated by IoT devices or social media platforms, are increasingly important. Serverless ETL can ingest streaming data from services like Amazon Kinesis, Google Cloud Pub/Sub, and Azure Event Hubs. This allows for near real-time data processing and analysis.

- APIs: Many applications expose data through APIs. Serverless ETL can connect to these APIs using HTTP requests and parse the returned data. This enables the integration of data from external services and third-party applications.

Data Destinations Integrated with Serverless ETL Pipelines

The choice of data destination depends on the intended use of the processed data. Serverless ETL pipelines offer integration with a variety of destinations, facilitating various data analysis and storage requirements.

- Data Warehouses: Data warehouses, such as Amazon Redshift, Google BigQuery, and Azure Synapse Analytics, are frequently used as data destinations. These platforms are optimized for analytical workloads and provide efficient storage and querying capabilities.

- Data Lakes: Data lakes, often based on cloud storage services like Amazon S3, are used for storing large volumes of raw and processed data. Serverless ETL can transform and load data into data lakes, enabling data scientists and analysts to perform advanced analytics.

- Databases: Processed data can be loaded into databases for operational use. This includes relational databases for structured data and NoSQL databases for flexible data models.

- Business Intelligence (BI) Tools: Serverless ETL can integrate with BI tools such as Tableau, Power BI, and Looker, providing these tools with cleaned and transformed data for reporting and visualization.

- Other Applications: Data can be delivered to other applications via APIs or messaging services. This includes integrating with machine learning models, customer relationship management (CRM) systems, and other operational systems.

Handling Different Data Formats in Serverless ETL

Serverless ETL processes must handle a variety of data formats to accommodate diverse data sources. The ability to parse, transform, and load data in different formats is essential for the overall functionality of the ETL pipeline.

- CSV (Comma Separated Values): CSV is a common format for storing tabular data. Serverless ETL functions can parse CSV files, extract data, and perform transformations. Libraries like Python’s `csv` module or specialized ETL tools provide efficient parsing capabilities.

- JSON (JavaScript Object Notation): JSON is a widely used format for data exchange. Serverless ETL can parse JSON data, extract specific fields, and transform the data into a desired format. Tools often utilize JSON parsing libraries.

- Parquet: Parquet is a columnar storage format optimized for data warehousing and analytics. Serverless ETL pipelines can read and write Parquet files, enabling efficient storage and querying of large datasets. Libraries such as Apache Parquet are commonly used.

- Other Formats: Serverless ETL can also handle other data formats such as XML, Avro, and delimited text files. Custom parsing logic or specialized libraries may be required depending on the specific format.

ETL Processes

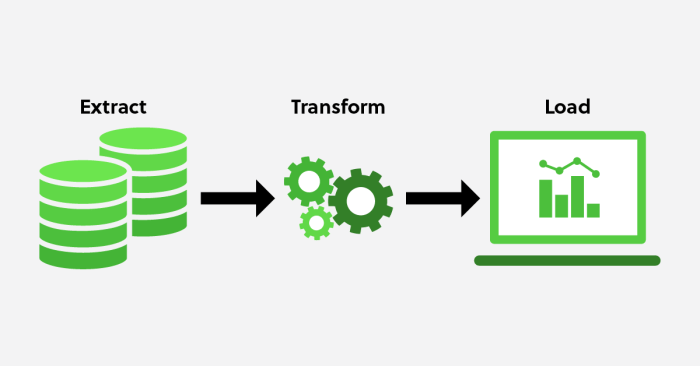

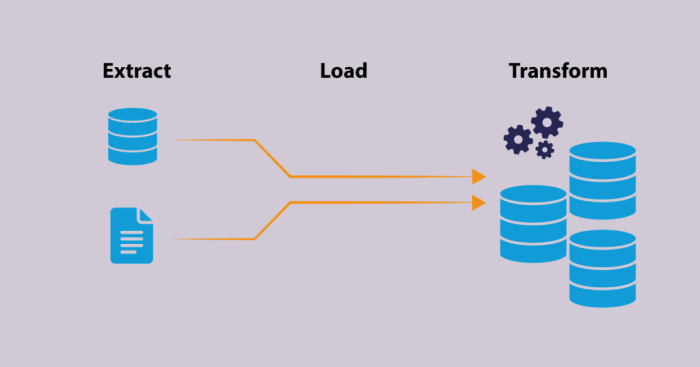

The Extract, Transform, and Load (ETL) process forms the core of data integration within a serverless architecture. This sequence facilitates the movement of data from its source, through a series of manipulations, and finally to its destination, such as a data warehouse or a data lake. Each phase – extraction, transformation, and loading – presents unique challenges and opportunities in a serverless context, leveraging the scalability and cost-effectiveness of cloud-based services.

Extraction Phase

The extraction phase initiates the ETL process by retrieving data from various sources. The choice of extraction method depends on the data source’s nature, format, and accessibility.Data extraction strategies often vary based on the source system’s capabilities. Common methods include:

- Bulk Extraction: This involves extracting the entire dataset from the source system in a single operation. This method is suitable for initial data loads or when the source system doesn’t support incremental extraction. A common example involves extracting a complete database table to a cloud storage service.

- Incremental Extraction: This method retrieves only the changes made to the data since the last extraction. This is more efficient for ongoing ETL processes, reducing the volume of data transferred and the processing time. Techniques include change data capture (CDC) and log-based extraction. CDC captures changes in the source database, and log-based extraction parses transaction logs to identify modified records.

Consider a scenario where a serverless function is triggered by a database’s CDC stream, extracting only new or updated records.

- Real-time Extraction: Data is extracted and processed as it becomes available in real-time. This approach is common for streaming data sources, such as IoT devices or social media feeds. Serverless functions, triggered by data streams, can perform real-time extraction and transformation. For example, extracting and processing clickstream data from a website in real-time to provide immediate analytics.

- API-Based Extraction: Data is extracted by making calls to the source system’s APIs. This method is prevalent when interacting with SaaS applications or cloud services. Serverless functions can be designed to interact with APIs, fetching data in a structured format. A typical case is retrieving data from a CRM system using its REST API.

- File-Based Extraction: Data is extracted from files stored in various formats, such as CSV, JSON, or Parquet. This approach is suitable for processing data from flat files, log files, or data dumps. A serverless function might be triggered by the upload of a CSV file to a cloud storage bucket to extract and process the data.

Transformation Phase

The transformation phase involves cleansing, enriching, and restructuring the extracted data to prepare it for loading into the destination. This stage is crucial for ensuring data quality and compatibility with the target system.Data transformation encompasses a wide range of techniques:

- Data Cleansing: This focuses on correcting or removing errors, inconsistencies, and inaccuracies in the data. Techniques include handling missing values (e.g., imputation), correcting typos, and standardizing data formats. For example, replacing invalid date formats with a standardized format.

- Data Enrichment: This involves adding new information to the data to enhance its value. This could include looking up external data sources, joining data from multiple sources, or calculating new fields. For example, adding geographic coordinates to customer addresses using a geocoding service.

- Data Aggregation: This involves summarizing data to provide insights. Examples include calculating sums, averages, counts, and other statistical measures. This is commonly used to generate reports and dashboards. For instance, calculating the total sales per month from transaction data.

- Data Filtering: This focuses on selecting a subset of data based on specific criteria. This reduces the data volume and focuses on the relevant data. For example, filtering out transactions from a specific region or date range.

- Data Type Conversion: This transforms data from one data type to another. This can include converting strings to numbers, dates to timestamps, etc. For example, converting a text-based date field to a date data type.

- Data Standardization: This involves applying consistent formatting and naming conventions to data. This can include standardizing units of measure, currency formats, and text casing. For example, converting all product names to uppercase.

- Data Deduplication: This involves identifying and removing duplicate records. This ensures data integrity and prevents skewed results. For instance, identifying and removing duplicate customer records based on email address.

The selection of transformation techniques depends on the specific requirements of the data and the target system. Serverless functions provide the flexibility to implement a variety of transformation logic.

Loading Phase

The loading phase involves writing the transformed data into the destination system. The choice of loading method depends on the target system’s capabilities, performance requirements, and data volume.Best practices for data loading into destinations include:

- Batch Loading: This approach involves loading data in batches, which can improve efficiency, especially for large datasets. This method is often used with data warehouses or data lakes.

- Incremental Loading: This loads only the new or changed data, minimizing loading time and resource consumption. This approach is suitable for near real-time analytics and continuous data integration.

- Parallel Loading: This loads data concurrently, utilizing multiple threads or processes to improve performance. This is beneficial when loading data into systems with high I/O capabilities.

- Data Partitioning: This divides the data into smaller, manageable chunks for loading. This can improve performance and scalability. Partitioning can be based on time, geography, or other relevant criteria.

- Error Handling and Logging: Implementing robust error handling and logging mechanisms is essential for monitoring the loading process and identifying and resolving issues.

- Data Validation: Validating the loaded data against predefined rules and constraints ensures data quality and consistency.

- Optimization for Target System: Tailoring the loading process to the specific characteristics of the target system can improve performance. For example, using bulk loading APIs or optimized data formats.

The loading phase should be carefully designed to ensure data integrity, performance, and scalability. Serverless services often provide optimized connectors and APIs for loading data into various destinations.

Tools and Technologies for Serverless ETL

The selection of appropriate tools and technologies is crucial for the successful implementation of serverless ETL processes. These tools must seamlessly integrate with cloud-based services, offer scalability, and provide robust data transformation capabilities. The choice depends on various factors, including the complexity of the data transformations, the data volume, and the specific cloud provider ecosystem.

Popular Serverless ETL Tools

Several popular tools have emerged as leading choices for serverless ETL, each with its strengths and weaknesses. Understanding these options is essential for selecting the best fit for a given project. These tools generally provide features for data ingestion, transformation, and loading, all operating within a serverless framework.

- AWS Glue: AWS Glue is a fully managed ETL service provided by Amazon Web Services (AWS). It offers a visual interface for building ETL workflows, supports various data sources and destinations, and automatically scales resources to handle data processing tasks. Glue uses Apache Spark for its underlying processing engine.

- Azure Data Factory: Azure Data Factory (ADF) is a cloud-based data integration service offered by Microsoft Azure. It allows users to create, schedule, and orchestrate data pipelines for data ingestion, transformation, and loading. ADF supports a wide range of connectors and transformation activities.

- Google Cloud Dataflow: Google Cloud Dataflow is a fully managed, serverless data processing service provided by Google Cloud Platform (GCP). It is based on Apache Beam, a unified programming model for batch and stream data processing. Dataflow automatically scales resources to handle data processing workloads.

- Apache Beam: Apache Beam is an open-source, unified programming model that enables the development of both batch and streaming data processing pipelines. While not a standalone ETL tool, Beam can be used with various runners, including Google Cloud Dataflow, Apache Flink, and Apache Spark, to execute data pipelines in a serverless or managed environment.

Comparison of Serverless ETL Tools

A comparative analysis of serverless ETL tools highlights the differences in features and capabilities. The following table provides a high-level comparison, noting key features and considerations for each tool.

| Feature | AWS Glue | Azure Data Factory | Google Cloud Dataflow | Apache Beam |

|---|---|---|---|---|

| Cloud Provider | AWS | Azure | GCP | Multi-Cloud (with appropriate runners) |

| Programming Model | Visual Interface, Python, Scala | Visual Interface, Python, .NET, PowerShell | Java, Python | Java, Python |

| Underlying Engine | Apache Spark | Various (e.g., Spark, Azure Data Lake Analytics) | Apache Beam (using runners) | Various (using runners: Flink, Spark, Dataflow) |

| Data Source Connectors | Wide range of AWS services, databases, file formats | Extensive connectors for Azure services, databases, and on-premise data sources | Connectors for GCP services, databases, and various file formats | Depends on the runner (e.g., Dataflow provides extensive connectors) |

| Data Transformation Capabilities | Built-in transformations, custom code (Python, Scala) | Wide range of built-in activities, custom code (Python, .NET, etc.) | Apache Beam transforms, custom code | Apache Beam transforms, custom code |

| Pricing Model | Pay-per-use (based on compute time and data processed) | Pay-per-activity (based on data movement and transformation) | Pay-per-use (based on compute time and data processed) | Pay-per-use (based on the chosen runner’s pricing) |

Integration with Event-Driven Architectures

Event-driven architectures enhance serverless ETL processes by enabling real-time data ingestion and processing. This integration allows for immediate responses to events, such as the arrival of new data, changes in existing data, or system notifications. This approach significantly improves the efficiency and responsiveness of data pipelines.

- Example: AWS Glue and Amazon S3 Events: In an event-driven architecture, when a new file is uploaded to an Amazon S3 bucket, an event is triggered. AWS Lambda can be configured to listen for these events. When a new file is detected, Lambda can trigger an AWS Glue job. The Glue job then processes the file, transforms the data, and loads it into a data warehouse or data lake.

This entire process is serverless, with resources scaling automatically based on the workload.

- Example: Azure Data Factory and Azure Event Grid: Azure Data Factory can be integrated with Azure Event Grid to trigger data pipelines based on events. For instance, when a new file is created in Azure Blob Storage, an event is sent to Event Grid. Event Grid then triggers an Azure Data Factory pipeline. The pipeline can then ingest the file, transform the data, and load it into a destination such as Azure Synapse Analytics.

This setup enables real-time data processing and automated workflows.

- Example: Google Cloud Dataflow and Pub/Sub: Google Cloud Dataflow can be integrated with Google Cloud Pub/Sub to process streaming data in real-time. Pub/Sub is a messaging service that allows applications to send and receive messages asynchronously. When a new message arrives in Pub/Sub, it triggers a Dataflow pipeline to process the data. The pipeline can then transform the data and load it into a data warehouse or data lake.

This setup supports real-time data ingestion and processing.

Implementing a Serverless ETL Pipeline

Building a serverless ETL pipeline involves a structured approach, from design and implementation to error handling and monitoring. This section provides a practical guide to setting up a serverless ETL process, addressing key considerations for a robust and scalable data transformation workflow.

Designing a Basic Serverless ETL Pipeline for a Specific Use Case

The design of a serverless ETL pipeline must align with the specific requirements of the use case. Consider a scenario where data from a relational database (e.g., PostgreSQL) needs to be extracted, transformed, and loaded into a data warehouse (e.g., Amazon Redshift) for analytical purposes. The design process should include these steps:

- Data Source Identification: Determine the source database schema, including tables, data types, and data volume. Understanding the data structure is crucial for effective extraction.

- Data Extraction: Implement a serverless function (e.g., AWS Lambda) to extract data from the source database. This function would use database connection libraries (e.g., psycopg2 for Python) to connect to the database and retrieve data based on defined queries.

- Data Transformation: Another serverless function performs data transformations. This includes data cleaning, data type conversions, and data enrichment. The transformation logic should be modular and easily maintainable. For example, converting date formats or joining data from multiple sources.

- Data Loading: A final serverless function loads the transformed data into the data warehouse. This function handles data formatting and bulk loading operations, optimizing for performance.

- Orchestration: Use a serverless orchestration service (e.g., AWS Step Functions) to manage the workflow. Step Functions will coordinate the execution of the Lambda functions in the correct order, handling dependencies and error conditions.

- Storage: Intermediate data storage (e.g., Amazon S3) can be used for staging the extracted and transformed data before loading it into the data warehouse.

This pipeline design allows for scalability, cost-effectiveness, and simplified management.

Step-by-Step Guide for Setting Up a Serverless ETL Process Using a Chosen Cloud Provider

Setting up a serverless ETL process requires a methodical approach, using a cloud provider like AWS. This section provides a detailed, step-by-step guide using AWS services.

- Setting Up the Environment:

- Create an AWS account or use an existing one.

- Configure the AWS CLI and install the necessary SDKs for your chosen programming language (e.g., Python).

- Define the AWS region where the resources will be deployed.

- Creating the Data Source:

- Set up a PostgreSQL database in the chosen region.

- Populate the database with sample data representing the data source.

- Creating the Data Warehouse:

- Set up an Amazon Redshift cluster in the chosen region.

- Create a database schema within Redshift for the transformed data.

- Creating the Lambda Functions:

- Extraction Function:

- Create a Lambda function in Python.

- Write code to connect to the PostgreSQL database, extract data from specified tables, and store it in an S3 bucket as CSV files.

- Include error handling and logging within the function.

- Transformation Function:

- Create a Lambda function in Python.

- Retrieve data from the S3 bucket, perform data transformations (e.g., cleaning, formatting), and store the transformed data in another S3 bucket.

- Implement transformation logic based on the data requirements.

- Loading Function:

- Create a Lambda function in Python.

- Retrieve the transformed data from the second S3 bucket.

- Use the `COPY` command to load data into the Redshift cluster.

- Include error handling and logging.

- Extraction Function:

- Configuring S3 Buckets:

- Create two S3 buckets: one for extracted data and one for transformed data.

- Configure the necessary IAM permissions for the Lambda functions to access the S3 buckets.

- Configuring IAM Roles:

- Create IAM roles for each Lambda function, granting them the necessary permissions: access to the database, access to S3 buckets, and the ability to invoke other services.

- Setting Up Step Functions:

- Create a Step Functions state machine to orchestrate the workflow.

- Define the state machine’s workflow: Extraction function -> Transformation function -> Loading function.

- Configure error handling and retry mechanisms within the state machine.

- Testing and Validation:

- Test the entire pipeline by triggering the Step Functions state machine.

- Verify that data is extracted, transformed, and loaded into the Redshift cluster correctly.

- Monitor the execution logs for errors and performance issues.

- Deployment and Scheduling:

- Deploy the complete solution.

- Schedule the Step Functions state machine to run automatically at defined intervals (e.g., daily, hourly) using CloudWatch Events.

This step-by-step guide provides a clear roadmap for implementing a serverless ETL process on AWS.

Demonstrating How to Handle Errors and Implement Monitoring in a Serverless ETL Pipeline

Error handling and monitoring are crucial for ensuring the reliability and maintainability of a serverless ETL pipeline. Robust error handling prevents data loss and ensures data integrity, while effective monitoring helps identify and resolve issues quickly.

- Error Handling Strategies:

- Function-Level Error Handling: Implement error handling within each Lambda function. Use `try-except` blocks to catch exceptions and log detailed error messages. For example, if a database connection fails, the function should log the error and potentially retry the connection.

- Retry Mechanisms: Implement retry mechanisms for transient errors. AWS Step Functions provides built-in retry capabilities. Configure retries with exponential backoff to handle temporary issues like network problems.

- Dead-Letter Queues (DLQs): Use DLQs (e.g., Amazon SQS) to handle messages that fail processing. Failed data transformations can be sent to a DLQ for manual review and reprocessing.

- Circuit Breakers: Implement circuit breakers to prevent cascading failures. If a service repeatedly fails, a circuit breaker can temporarily stop sending requests to that service.

- Monitoring Implementation:

- CloudWatch Metrics: Use Amazon CloudWatch to monitor key metrics. This includes:

- Lambda Function Metrics: Execution time, invocations, errors, and throttles.

- Step Functions Metrics: Execution time, state transitions, errors, and throttles.

- S3 Metrics: Number of objects, storage size, and data transfer.

- Redshift Metrics: CPU utilization, disk space, and query performance.

- CloudWatch Logs: Enable detailed logging for all Lambda functions and Step Functions. Use structured logging to facilitate analysis and debugging.

- Alerting: Set up CloudWatch alarms to trigger notifications based on specific metrics thresholds. For example, create an alarm if the number of errors in a Lambda function exceeds a certain limit.

- Dashboards: Create CloudWatch dashboards to visualize key metrics and track the overall health of the ETL pipeline.

- Tracing: Implement distributed tracing (e.g., AWS X-Ray) to track the flow of requests through the pipeline. This helps identify performance bottlenecks and pinpoint the source of errors.

- CloudWatch Metrics: Use Amazon CloudWatch to monitor key metrics. This includes:

- Example of Error Handling and Monitoring Implementation:

- Extraction Function: The extraction function attempts to connect to the database. If the connection fails, it logs the error, retries a few times with exponential backoff, and then sends a notification to an SNS topic if the connection persists.

- Transformation Function: The transformation function logs the input data size and the size of the transformed data. If the transformation fails for a specific record, the function logs the error, stores the failed record in a DLQ (e.g., Amazon SQS), and increases the `TransformationErrors` metric in CloudWatch.

- Loading Function: The loading function monitors the Redshift load process. If the load fails, the function logs the error, retries, and increases the `LoadErrors` metric. An alarm is set up in CloudWatch to trigger a notification if the number of load errors exceeds a defined threshold.

Effective error handling and monitoring are vital for a successful and reliable serverless ETL pipeline.

Security Considerations in Serverless ETL

Serverless ETL architectures, while offering significant advantages in scalability and cost-efficiency, introduce unique security challenges. The distributed nature of serverless functions, data storage, and processing services necessitates a proactive and multi-layered security approach. This section Artikels best practices, secret management strategies, and vulnerability mitigation techniques crucial for securing serverless ETL pipelines.

Data Encryption and Access Control

Data encryption and access control are fundamental to protecting sensitive data throughout the ETL lifecycle. Implementing these measures ensures confidentiality and integrity, minimizing the risk of unauthorized access or data breaches.

- Data Encryption: Encryption protects data at rest and in transit.

- Encryption at Rest: Employ server-side encryption (SSE) with managed keys provided by the cloud provider for object storage services (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage). This ensures that data stored within these services is encrypted with keys managed by the provider, simplifying key management.

- Encryption in Transit: Utilize Transport Layer Security (TLS) or Secure Sockets Layer (SSL) to encrypt data during transit between data sources, processing functions, and data destinations. This protects data from eavesdropping and tampering during transmission. For example, use HTTPS for API calls and secure protocols like SFTP for file transfers.

- Access Control: Implement robust access control mechanisms to restrict access to data and resources based on the principle of least privilege.

- Identity and Access Management (IAM): Leverage IAM services (e.g., AWS IAM, Azure Active Directory, Google Cloud IAM) to define roles and permissions. Grant only the necessary permissions to each serverless function and service account. For example, a function responsible for reading data from a source should only have read access to that source.

- Network Security: Configure network security groups or virtual networks to restrict network access to serverless functions and data stores. For example, functions might be configured to only allow access from specific IP ranges or within a virtual private cloud (VPC).

- Data Masking and Anonymization: Implement data masking and anonymization techniques to protect sensitive data during processing. This involves replacing or removing sensitive information like Personally Identifiable Information (PII) before it is stored or shared.

Managing Secrets and Credentials Securely

Securely managing secrets and credentials is critical in serverless ETL environments, where functions and services often require access to sensitive information such as database passwords, API keys, and authentication tokens. Improper handling of secrets can lead to significant security breaches.

- Secret Management Services: Utilize dedicated secret management services provided by cloud providers (e.g., AWS Secrets Manager, Azure Key Vault, Google Cloud Secret Manager). These services provide secure storage, retrieval, and rotation of secrets.

- Example: In AWS, you can store database credentials in Secrets Manager and configure your Lambda function to retrieve them securely. The function would be granted permission to access the secret through an IAM role, ensuring that the credentials are not hardcoded in the function’s code.

- Environment Variables: Store sensitive configuration parameters as environment variables within the serverless function’s configuration. While not as secure as dedicated secret management services, they provide a level of separation between code and configuration.

- Best Practice: Avoid storing highly sensitive information (like passwords) directly in environment variables. Instead, store references to secrets managed by a dedicated secret management service.

- Rotation and Access Control: Implement automated secret rotation to minimize the impact of compromised credentials. Regularly rotate secrets (e.g., database passwords, API keys) to reduce the attack surface. Control access to secrets using IAM roles and policies, ensuring that only authorized functions and services can access them.

Identifying and Mitigating Security Vulnerabilities

Serverless ETL pipelines are susceptible to various security vulnerabilities. Proactive identification and mitigation of these vulnerabilities are crucial for maintaining a secure environment.

- Vulnerability Scanning: Regularly scan serverless functions and dependencies for known vulnerabilities. Use automated scanning tools to identify and address potential security flaws in your code.

- Example: Use tools like OWASP Dependency-Check to scan your project’s dependencies for known vulnerabilities. This helps identify and address vulnerabilities in third-party libraries before they can be exploited.

- Input Validation and Sanitization: Validate and sanitize all inputs to prevent injection attacks (e.g., SQL injection, command injection). Implement input validation at the entry points of your ETL pipeline to ensure that data conforms to expected formats and ranges.

- Example: If your ETL pipeline processes data from a web form, validate the data before it is used in database queries or other operations.

Sanitize user-provided input to prevent malicious code from being injected.

- Example: If your ETL pipeline processes data from a web form, validate the data before it is used in database queries or other operations.

- Monitoring and Logging: Implement comprehensive monitoring and logging to detect and respond to security incidents.

- Logging: Log all relevant events, including authentication attempts, access to sensitive resources, and errors. Centralize logs for analysis and alerting.

- Monitoring: Monitor metrics such as function invocation counts, error rates, and network traffic. Set up alerts to notify you of suspicious activity.

- Example: Use a logging service (e.g., AWS CloudWatch Logs, Azure Monitor, Google Cloud Logging) to collect and analyze logs from your serverless functions. Configure alerts to notify you of unusual activity, such as a sudden spike in function invocations or unauthorized access attempts.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify and address vulnerabilities in your serverless ETL pipeline.

- Purpose: Security audits and penetration tests help identify weaknesses in your security posture and provide recommendations for improvement. This ensures that your security controls are effective and up-to-date.

Monitoring and Logging in Serverless ETL

Effective monitoring and logging are critical components of any serverless ETL pipeline. Serverless architectures, by their nature, are distributed and ephemeral, making traditional debugging and performance analysis methods challenging. Robust monitoring and logging practices provide visibility into pipeline execution, enabling proactive identification and resolution of issues, optimization of performance, and ensuring data quality. Without these, troubleshooting becomes significantly more difficult, potentially leading to data inconsistencies, missed deadlines, and increased operational costs.

Importance of Monitoring and Logging

Monitoring and logging are essential for maintaining the health, performance, and reliability of serverless ETL pipelines. They provide the necessary insights to understand how the pipeline is functioning, identify bottlenecks, and address potential problems before they impact data processing.

- Real-time Visibility: Monitoring dashboards provide real-time views of pipeline execution, including the status of individual tasks, data volumes processed, and resource utilization. This allows for immediate detection of anomalies or performance degradation.

- Performance Optimization: By analyzing logs and monitoring metrics, you can identify performance bottlenecks, such as slow-running functions or inefficient data transformations. This information can be used to optimize the pipeline for improved throughput and reduced latency.

- Error Detection and Troubleshooting: Comprehensive logging captures detailed information about errors and failures, including stack traces, error messages, and timestamps. This information is invaluable for quickly diagnosing and resolving issues.

- Data Quality Assurance: Monitoring can track data validation failures, data inconsistencies, and other data quality issues. This enables proactive measures to ensure data integrity and accuracy.

- Compliance and Auditing: Logs provide an audit trail of all pipeline activities, which is essential for compliance with regulatory requirements and internal auditing processes.

Implementing Logging and Monitoring with Cloud Provider Tools

Cloud providers offer a variety of tools and services specifically designed for monitoring and logging in serverless environments. These tools provide centralized logging, real-time monitoring, and alerting capabilities.

- AWS CloudWatch (AWS): CloudWatch is a comprehensive monitoring and logging service offered by AWS. It allows you to collect, analyze, and visualize logs, metrics, and events from your serverless ETL pipelines.

- Logging: AWS Lambda functions, which are commonly used in serverless ETL, automatically send logs to CloudWatch Logs. You can also explicitly log messages from your code using the `console.log()` function in Node.js or the `print()` function in Python. These logs include timestamps, log levels (e.g., INFO, WARNING, ERROR), and custom messages that provide context about the pipeline’s execution.

- Metrics: CloudWatch automatically collects metrics for Lambda functions, such as invocation count, duration, errors, and throttles. You can also define custom metrics to track specific aspects of your pipeline’s performance, such as the number of records processed or the size of the data being transformed.

- Dashboards: CloudWatch allows you to create dashboards to visualize metrics and logs in real-time. These dashboards provide a consolidated view of your pipeline’s health and performance, allowing you to quickly identify issues and trends. A typical dashboard might display the number of invocations, the average duration, and the error rate of a Lambda function that processes incoming data.

- Log Insights: CloudWatch Log Insights enables you to query and analyze your logs using a powerful query language. This allows you to search for specific events, filter logs based on criteria, and identify patterns in your data. For example, you could use Log Insights to find all errors that occurred within a specific time period or to identify the functions that are generating the most logs.

- Azure Monitor (Azure): Azure Monitor is the monitoring service for Azure resources. It provides a centralized platform for collecting, analyzing, and acting on telemetry data from your serverless ETL pipelines.

- Application Insights: Application Insights is a feature of Azure Monitor that provides application performance monitoring (APM) capabilities. It automatically collects telemetry data from your Azure Functions, including requests, dependencies, exceptions, and performance counters. This data is used to generate dashboards, alerts, and visualizations that provide insights into your pipeline’s performance.

- Log Analytics: Log Analytics is a powerful log management service within Azure Monitor. It allows you to collect and analyze logs from various sources, including Azure Functions, storage accounts, and other Azure services. You can use Log Analytics to query your logs, create custom dashboards, and set up alerts.

- Metrics Explorer: Metrics Explorer allows you to visualize metrics collected by Azure Monitor. You can create charts and dashboards to track the performance of your Azure Functions, such as the number of invocations, the average duration, and the error rate.

- Google Cloud Monitoring (GCP): Google Cloud Monitoring (formerly Stackdriver) is a comprehensive monitoring service for Google Cloud Platform (GCP) resources. It provides real-time monitoring, alerting, and logging capabilities.

- Cloud Logging: Cloud Logging is a centralized logging service that collects logs from various GCP services, including Cloud Functions, Cloud Storage, and BigQuery. You can use Cloud Logging to search, filter, and analyze your logs. Logs include timestamps, log levels, and custom messages that provide context about the pipeline’s execution.

- Cloud Monitoring: Cloud Monitoring allows you to create dashboards, set up alerts, and visualize metrics from your GCP resources. It automatically collects metrics for Cloud Functions, such as invocation count, duration, and errors. You can also define custom metrics to track specific aspects of your pipeline’s performance.

- Cloud Trace: Cloud Trace provides distributed tracing capabilities, allowing you to track the flow of requests through your serverless ETL pipeline. This is particularly useful for identifying performance bottlenecks and understanding the dependencies between different components.

Setting Up Alerts and Notifications

Proactive alerting is essential for quickly identifying and responding to pipeline failures or performance issues. Cloud providers offer various alerting mechanisms that can be configured to trigger notifications based on specific conditions.

- Defining Alerting Rules: Define alert rules based on critical metrics and log patterns. For example, you might set up an alert to trigger when the error rate of a Lambda function exceeds a certain threshold, or when the latency of a data transformation step exceeds a predefined value.

- Notification Channels: Configure notification channels to receive alerts. This can include email, SMS, Slack, PagerDuty, or other communication channels.

- Example: AWS CloudWatch Alarm: In AWS CloudWatch, you can create an alarm that triggers when the `Errors` metric of a Lambda function exceeds a specific threshold. The alarm can then be configured to send a notification to an SNS topic, which in turn can send an email or trigger other actions.

For example, to set up an alarm for a Lambda function named “MyETLFunction” with a threshold of 10 errors in a 5-minute period, you would configure a CloudWatch alarm with the following settings:

- Metric: `Errors`

- Namespace: `AWS/Lambda`

- Dimension Name: `FunctionName`

- Dimension Value: `MyETLFunction`

- Statistic: `Sum`

- Period: `300 seconds` (5 minutes)

- Threshold: `>` 10

- Example: Azure Monitor Alert: In Azure Monitor, you can create an alert rule that triggers when the `FunctionExecutionErrors` metric of an Azure Function exceeds a certain threshold. The alert can then be configured to send a notification to an email address or trigger other actions, such as creating a support ticket.

- Example: Google Cloud Monitoring Alert: In Google Cloud Monitoring, you can create an alert policy that triggers when the `function/execution_count` metric for a Cloud Function drops below a specified threshold, which might indicate a failure in triggering or running the function. This policy can then notify via email or other channels.

Serverless ETL vs. Traditional ETL: A Comparison

Comparing serverless ETL with traditional ETL approaches reveals significant differences in scalability, cost, and operational overhead. The choice between these two paradigms depends heavily on specific project requirements, data volumes, and the organization’s existing infrastructure and expertise. Understanding the nuances of each approach is crucial for making informed decisions about data integration strategies.

Scalability and Cost Comparison

The primary differentiators between serverless and traditional ETL lie in their scalability and cost models. Traditional ETL often requires upfront investment in hardware and software, leading to fixed costs regardless of actual data processing needs. Serverless ETL, on the other hand, offers on-demand scalability and a pay-per-use pricing structure, allowing resources to scale up or down automatically based on the workload.

This flexibility can translate into significant cost savings, especially for workloads with fluctuating data volumes.

To illustrate these differences, consider a table comparing the pros and cons of both approaches:

| Feature | Serverless ETL | Traditional ETL |

|---|---|---|

| Scalability | Highly scalable; automatically adjusts to changing workloads. Resources are provisioned on-demand, eliminating the need for manual scaling. | Scalability is often limited by hardware capacity and the need for manual configuration. Scaling can be time-consuming and expensive. |

| Cost | Pay-per-use model. Costs are directly proportional to resource consumption (e.g., compute time, data processed, storage used). Reduced operational overhead. | Fixed costs associated with hardware, software licenses, and infrastructure maintenance. Costs are incurred regardless of actual data processing needs. Higher operational overhead. |

| Operational Overhead | Minimal. The cloud provider manages infrastructure, reducing the need for IT staff to manage servers and software updates. Focus on data transformation logic. | Significant. Requires dedicated IT staff to manage servers, software, and infrastructure. Involves tasks like patching, backups, and capacity planning. |

| Implementation Complexity | Potentially lower, especially for simple data pipelines. Requires familiarity with cloud services and serverless architectures. Can be complex for intricate data transformations. | Can be complex, depending on the tools and techniques used. Requires expertise in ETL tools and infrastructure management. |

Suitability for Different Scenarios

The suitability of serverless ETL versus traditional ETL depends on several factors, including data volume, processing complexity, and budget constraints. Serverless ETL excels in scenarios characterized by fluctuating data volumes, unpredictable workloads, and the need for rapid deployment and scalability. Traditional ETL remains a viable option for organizations with existing infrastructure investments, highly complex transformation requirements, and strict compliance or security needs.

- Serverless ETL is Most Suitable for:

- Data Lakes and Data Warehouses: Processing and integrating data from various sources into a data lake or data warehouse, particularly when data volumes fluctuate. For example, a retail company using cloud-based ETL to ingest sales data, web logs, and social media feeds to analyze customer behavior and improve marketing campaigns.

- Real-time Data Processing: Processing streaming data in real-time for applications like fraud detection, anomaly detection, or real-time dashboards. For instance, a financial institution using serverless ETL to analyze transaction data in real-time to identify and prevent fraudulent activities.

- Event-Driven Architectures: Building data pipelines triggered by events, such as file uploads or database updates. For example, an e-commerce company using serverless ETL to automatically process customer orders, update inventory, and trigger email notifications.

- Small to Medium-Sized Datasets: Processing datasets where the compute requirements are manageable.

- Traditional ETL is Most Suitable for:

- High Data Volumes and Complex Transformations: Handling massive datasets and performing intricate data transformations where optimized performance is critical. An example would be a large telecommunications company processing terabytes of call detail records daily for billing and network optimization.

- On-Premises Infrastructure: Organizations with significant investments in on-premises hardware and software, where migrating to the cloud is not feasible or desirable.

- Strict Security and Compliance Requirements: Industries with stringent data security and compliance regulations, such as healthcare or finance, where data residency and control are paramount. For example, a healthcare provider using on-premises ETL to process patient data while adhering to HIPAA regulations.

- Legacy Systems Integration: Integrating data from legacy systems that are not easily migrated to the cloud.

Ultimate Conclusion

In conclusion, serverless ETL provides a robust and efficient framework for modern data integration challenges. By leveraging the inherent benefits of serverless computing, organizations can achieve greater agility, reduce operational costs, and improve data processing performance. The scalability and flexibility offered by this approach make it ideally suited for handling the ever-increasing volumes and velocity of data. While the choice between serverless and traditional ETL depends on specific use cases and organizational requirements, serverless ETL stands out as a powerful solution for organizations seeking to optimize their data pipelines and derive maximum value from their data assets.

Question & Answer Hub

What is the primary advantage of serverless ETL regarding cost?

The primary cost advantage lies in the pay-per-use model, where you only pay for the compute resources consumed during data processing, eliminating the need for idle infrastructure.

How does serverless ETL handle data transformation?

Serverless ETL utilizes compute services (e.g., AWS Lambda, Azure Functions) to execute transformation logic on incoming data. This can include cleaning, formatting, and aggregating data based on defined rules.

What are some common data destinations for serverless ETL pipelines?

Common destinations include data warehouses (e.g., Amazon Redshift, Google BigQuery), data lakes (e.g., Amazon S3, Azure Data Lake Storage), and databases (e.g., PostgreSQL, MySQL).

What security measures are typically employed in serverless ETL?

Security best practices include data encryption, access control using IAM roles, secure secret management, and regular security audits to protect data integrity.