The realm of serverless computing offers a paradigm shift in software architecture, and at its core lies the serverless API gateway proxy integration. This model provides a crucial bridge between client applications and backend services, enabling a highly scalable, cost-effective, and secure approach to API management. This discussion delves into the intricacies of this integration, exploring its core components, comparing it to direct integration, and showcasing its advantages across various use cases.

We will analyze the technical aspects of this architectural pattern, focusing on its practical implications and strategic benefits.

This integration essentially acts as a sophisticated intermediary, handling incoming API requests and routing them to the appropriate backend service. This intermediary also manages crucial aspects such as authentication, authorization, request transformation, and response handling. This design enables developers to focus on building the core business logic of their applications without the burden of managing infrastructure, leading to faster development cycles and improved resource utilization.

We’ll examine the components involved, including the API gateway and the backend services, and illustrate how they interact to facilitate seamless communication.

Introduction to Serverless API Gateway Proxy Integration

Serverless API gateway proxy integration represents a paradigm shift in how APIs are designed, deployed, and managed. This approach leverages the scalability and cost-effectiveness of serverless computing to create a highly available and resilient system for handling API requests. It allows developers to focus on business logic without managing the underlying infrastructure, leading to faster development cycles and reduced operational overhead.

The core concept involves using an API gateway to act as a front-end for backend serverless functions. The gateway receives incoming API requests, authenticates and authorizes them, and then proxies these requests to the appropriate serverless functions. The serverless functions execute the required business logic and return the response, which is then passed back through the gateway to the client.

Definition of Serverless API Gateway Proxy Integration

Serverless API gateway proxy integration is a method of connecting client applications to backend services using a serverless API gateway. The gateway acts as an intermediary, receiving client requests, managing routing, authentication, authorization, and then forwarding the requests to serverless functions. These functions process the requests and return responses, which the gateway then delivers back to the client. This entire process is designed to be managed without the need for provisioning or managing servers.

Benefits of Serverless API Gateway Proxy Integration

Serverless API gateway proxy integration offers several advantages that contribute to its increasing popularity. These benefits encompass aspects such as cost efficiency, scalability, and ease of management.

- Cost-Effectiveness: Serverless architectures, including API gateway proxy integrations, are often more cost-effective than traditional server-based approaches. The pay-per-use model of serverless functions means that you only pay for the compute time your functions consume. This can lead to significant cost savings, especially for applications with fluctuating traffic patterns. For example, consider an e-commerce website that experiences a surge in traffic during a holiday sale. With a traditional server setup, you’d need to provision enough capacity to handle the peak load, even if it’s only needed for a short period. Serverless allows you to scale up automatically during the sale and then scale back down when the traffic subsides, avoiding unnecessary costs.

- Scalability: Serverless API gateways automatically scale to handle the volume of requests. The underlying infrastructure scales up or down dynamically based on demand, ensuring that your API remains responsive and available even during peak loads. This scalability is often achieved through the use of managed services that handle the underlying infrastructure. For example, AWS API Gateway, Google Cloud API Gateway, and Azure API Management automatically scale to accommodate increasing traffic.

- Reduced Operational Overhead: Serverless architectures reduce the operational burden associated with managing servers. Developers do not need to provision, configure, or maintain servers. This allows them to focus on writing code and building features rather than managing infrastructure. The API gateway handles tasks such as security, routing, and monitoring, freeing up developers to concentrate on the core business logic.

- Faster Development Cycles: The combination of serverless functions and API gateways accelerates development cycles. Developers can deploy and update API endpoints quickly, without having to worry about infrastructure. This leads to faster time-to-market for new features and improvements. The ability to quickly iterate and deploy changes is a significant advantage in today’s fast-paced development environment.

- Increased Availability and Resilience: Serverless API gateways are typically designed for high availability. They are often deployed across multiple availability zones and handle many infrastructure failures automatically, so the API can remain available even if an underlying component fails. For example, if one instance of a serverless function fails, the platform can serve subsequent requests from other instances of that function.

Core Components of the Integration

The serverless API gateway proxy integration relies on a well-defined architecture where distinct components interact to facilitate request routing and processing. Understanding these components and their interactions is crucial for grasping the operational dynamics of this architecture. The core components work together seamlessly to provide a scalable, efficient, and cost-effective solution for managing API traffic.

API Gateway

The API gateway is the central entry point for all client requests. Its primary responsibilities involve receiving incoming requests, routing them to the appropriate backend services, and handling various aspects of API management. The gateway acts as a single point of contact, shielding the backend services from direct client interaction.

The API gateway’s key functions are:

- Request Routing: The gateway analyzes incoming requests based on the URL path, HTTP method, headers, and other parameters. It then forwards the requests to the corresponding backend service or function. This routing mechanism is typically configured using rules or mappings that define the relationship between API endpoints and backend resources. For example, a request to `/users` might be routed to a serverless function that retrieves user data.

- Authentication and Authorization: The gateway enforces security policies by authenticating and authorizing incoming requests. It validates user credentials (e.g., API keys, OAuth tokens) and verifies that the user has the necessary permissions to access the requested resources. This ensures that only authorized clients can access the backend services.

- Request Transformation: The gateway can transform incoming requests to match the format expected by the backend service. This may involve modifying headers, body content, or query parameters. For instance, the gateway might translate a request from JSON to XML or add default values to missing parameters.

- Response Transformation: Similarly, the gateway can transform the responses from the backend service before sending them back to the client. This might involve formatting the response, masking sensitive data, or adding additional information.

- Rate Limiting and Throttling: The gateway implements rate limiting and throttling mechanisms to protect the backend services from overload. It limits the number of requests a client can make within a specified time window. This helps prevent denial-of-service (DoS) attacks and ensures fair resource allocation.

- Monitoring and Logging: The gateway monitors API traffic, collects performance metrics, and logs all requests and responses. This data is essential for troubleshooting, performance analysis, and capacity planning. It allows developers to gain insights into API usage patterns and identify potential issues.
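
To make the rate limiting function above more concrete, here is a minimal sketch of a token bucket, one common way to cap requests per client over time. The class name, parameters, and the injectable `now` argument are assumptions made for illustration; managed gateways implement throttling internally and expose it as configuration rather than code.

```python
import time
from typing import Optional


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, now: Optional[float] = None):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.updated = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        """Consume one token and return True if the request may proceed."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(float(self.capacity), self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # a gateway would typically answer 429 Too Many Requests
```

In this sketch a gateway would keep one bucket per API key or client and reject the request whenever `allow()` returns `False`.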

Backend Service

The backend service, often implemented as a serverless function, is responsible for processing the actual business logic and responding to client requests. The backend service focuses on executing the core functionality of the API. Serverless functions, such as those provided by AWS Lambda, Google Cloud Functions, or Azure Functions, are a common choice for the backend service in a serverless architecture. These functions execute code in response to events, such as HTTP requests.

The backend service’s core functions are:

- Business Logic Execution: The backend service executes the code that handles the specific task requested by the client. This includes retrieving data from databases, processing data, performing calculations, and interacting with other services. For example, a backend service might process a request to create a new user account by validating input data, storing the user information in a database, and sending a confirmation email.

- Data Access: The backend service interacts with databases, file storage, and other data sources to retrieve and store data. It uses appropriate APIs and SDKs to connect to these data sources and perform data operations. For instance, a backend service might query a database to retrieve a list of products or upload a file to a cloud storage service.

- External Service Integration: The backend service can integrate with other external services, such as payment gateways, notification services, and third-party APIs. This allows the API to perform more complex tasks and provide richer functionality. For example, a backend service might use a payment gateway to process a credit card transaction or send a notification to a user via an SMS service.

- Response Generation: The backend service generates the response to be sent back to the client. This includes formatting the data, setting the appropriate HTTP status code, and adding any necessary headers. The response is then returned to the API gateway.
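
As an illustration of response generation, the sketch below shows a backend function written in the style of an AWS Lambda handler behind a proxy integration, where the function returns a status code, headers, and a string body. The handler name, the `id` path parameter, and the hard-coded user record are assumptions for this example; a real function would query a data store.

```python
import json


def _response(status: int, payload: dict) -> dict:
    """Build the response shape a Lambda proxy integration relays to the client."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),  # the body is sent as a string
    }


def handler(event: dict, context) -> dict:
    """Return a user record for the `id` path parameter, or 400 if it is missing."""
    params = event.get("pathParameters") or {}
    user_id = params.get("id")
    if not user_id:
        return _response(400, {"error": "missing path parameter 'id'"})
    # Hypothetical lookup: stands in for a database query.
    user = {"id": user_id, "name": "example"}
    return _response(200, user)
```

Keeping the response construction in one helper means every code path returns the same shape, which matters because the gateway passes the result through without reformatting it.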

Communication Flow

The communication flow between the API gateway and the backend service follows a well-defined sequence of steps. The process begins when a client initiates a request.

The communication flow steps are:

- Client Request: The client sends an HTTP request to the API gateway. The request includes the URL, HTTP method, headers, and body.

- API Gateway Receives Request: The API gateway receives the request and performs authentication, authorization, and any necessary transformations.

- Request Routing: The API gateway uses routing rules to determine the appropriate backend service to handle the request.

- Request Forwarding: The API gateway forwards the request to the selected backend service. This typically involves sending the request to the serverless function.

- Backend Service Processing: The backend service receives the request, executes the business logic, and interacts with data sources or other services as needed.

- Response Generation: The backend service generates a response, including the data and HTTP status code.

- Response Return: The backend service returns the response to the API gateway.

- Response Transformation (Optional): The API gateway can optionally transform the response before sending it back to the client.

- Client Response: The API gateway sends the response back to the client.

The integration enables a decoupled architecture, allowing for independent scaling and management of the API gateway and backend services. The serverless nature of the backend services ensures automatic scaling based on demand, providing high availability and cost efficiency.
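
The flow described above can be sketched as a single in-process function. This is a simplified model, not how any managed gateway is implemented: the request and response dictionaries, the `x-api-key` check, the `ROUTES` table, and the `x-gateway` header are all assumptions chosen for illustration.

```python
# Hypothetical API keys and routes, for illustration only.
VALID_API_KEYS = {"demo-key"}


def list_users(request: dict) -> dict:
    """Backend service: runs the business logic and generates the response."""
    return {"status": 200, "headers": {}, "body": '[{"id": 1}]'}


ROUTES = {("GET", "/users"): list_users}


def gateway(request: dict) -> dict:
    """Model of the gateway: authenticate, route, forward, then transform."""
    # Authentication happens before anything reaches a backend.
    if request.get("headers", {}).get("x-api-key") not in VALID_API_KEYS:
        return {"status": 401, "headers": {}, "body": "unauthorized"}

    # Request routing: pick a backend from the method and path.
    backend = ROUTES.get((request["method"], request["path"]))
    if backend is None:
        return {"status": 404, "headers": {}, "body": "not found"}

    # Request forwarding and response return.
    response = backend(request)

    # Optional response transformation before replying to the client.
    response["headers"] = {**response["headers"], "x-gateway": "sketch"}
    return response
```

Because the backend only sees requests that already passed authentication and routing, it can stay focused on business logic, which is the decoupling the section describes.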

Proxying vs. Direct Integration

This section analyzes the contrasting approaches to integrating serverless API gateways: proxy integration and direct integration. Understanding the nuances of each method is crucial for making informed architectural decisions that optimize performance, security, and maintainability. The choice between proxy and direct integration significantly impacts the overall design and operational characteristics of a serverless API.

Comparing Proxy Integration with Direct Integration

The core distinction between proxy and direct integration lies in how the API gateway interacts with the backend services. In proxy integration, the gateway acts as a pass-through intermediary that forwards requests to the backend and relays responses to the client. In direct integration, the gateway invokes the backend function itself through the service’s native integration capabilities. The gateway remains the client-facing entry point in both cases.

| Feature | Proxy Integration | Direct Integration | Notes |
|---|---|---|---|
| Request Handling | API Gateway receives the request, forwards it to the backend service (e.g., a Lambda function), and then relays the response back to the client. | API Gateway directly invokes the backend service (e.g., a Lambda function) using the service’s native integration capabilities. | The key difference is the level of involvement of the API gateway in processing the request and response. |
| Advantages | | | The advantages are context-dependent, with each approach excelling in different scenarios. |
| Disadvantages | | | The disadvantages highlight the trade-offs inherent in each integration method. |
| Use Cases | | | Understanding the specific use case is key to selecting the appropriate integration strategy. |

Benefits of Serverless API Gateway Proxy Integration

Serverless API Gateway proxy integration offers a compelling set of advantages for modern application development, significantly improving various aspects of the development lifecycle. This architectural pattern provides substantial benefits in terms of scalability, cost-effectiveness, security, and developer productivity, leading to more efficient and resilient applications.

Scalability Advantages

The inherent scalability of serverless infrastructure, combined with the capabilities of an API Gateway, allows applications to handle fluctuating traffic demands with ease. This is achieved through automatic scaling, resource allocation, and request handling, ensuring optimal performance and availability.

- Automatic Scaling: Serverless platforms, such as AWS Lambda, Azure Functions, and Google Cloud Functions, automatically scale the underlying compute resources based on the incoming request volume. The API Gateway, acting as the entry point, dynamically provisions and manages the necessary resources to handle the load, without requiring manual intervention or capacity planning. This is particularly crucial for applications experiencing unpredictable traffic spikes. For example, a retail website during a flash sale can seamlessly handle a surge in requests without performance degradation.

- Resource Optimization: The proxy integration enables efficient resource utilization. The API Gateway intelligently routes requests to the appropriate backend services. Serverless functions are invoked only when needed, optimizing compute time and minimizing idle resources. This leads to cost savings and improved overall efficiency. For instance, a microservices architecture can leverage the API Gateway to selectively activate specific functions based on the incoming request, reducing unnecessary resource consumption.

- High Availability: Serverless platforms are designed for high availability and fault tolerance. The API Gateway, typically deployed across multiple availability zones, provides redundancy at the entry point. If an instance of a backend function becomes unavailable, subsequent requests can be served by other instances, minimizing downtime. Failover for stateful dependencies is a separate concern: in an application that uses multiple database instances, switching between them when one fails is usually handled by the backend code or the database service rather than by the gateway.

Cost-Effectiveness Benefits

The serverless model, combined with the proxy integration pattern, offers significant cost advantages compared to traditional infrastructure-based approaches. This cost efficiency stems from a pay-per-use model, reduced operational overhead, and optimized resource utilization.

- Pay-per-use Pricing: Serverless platforms charge only for the compute time and resources consumed by the backend functions. This eliminates the need to provision and pay for idle resources, resulting in substantial cost savings, especially for applications with variable workloads. For instance, an application that experiences peak usage during specific hours of the day only incurs costs during those periods, avoiding the expense of maintaining provisioned resources around the clock.

- Reduced Operational Overhead: Serverless platforms handle the underlying infrastructure management, including server provisioning, patching, and scaling. This reduces the operational burden on development teams, allowing them to focus on writing code and building features. The API Gateway further simplifies operations by handling tasks such as request routing, authentication, and authorization. The reduced overhead translates to lower operational costs and increased developer productivity.

- Optimized Resource Consumption: As mentioned earlier, the proxy integration facilitates efficient resource utilization. Backend functions are only invoked when necessary, and resources are allocated dynamically based on demand. This optimization minimizes wasted resources and further reduces costs. The API Gateway’s caching capabilities can also reduce the load on backend services, further lowering costs.
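
The pay-per-use reasoning above can be turned into a small worked calculation. The formula below assumes a common billing shape (a per-request fee plus a fee per GB-second of compute); the prices passed in are placeholders, not any provider's published rates, and the function name is hypothetical.

```python
def estimate_monthly_cost(
    requests: int,
    avg_duration_ms: float,
    memory_gb: float,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Pay-per-use estimate: per-request fee plus a fee for compute time used."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    gb_seconds = requests * (avg_duration_ms / 1000) * memory_gb
    return request_cost + gb_seconds * price_per_gb_second


# Placeholder prices chosen only to show how cost tracks traffic.
quiet_month = estimate_monthly_cost(1_000_000, 100, 0.5, 0.20, 0.00002)
busy_month = estimate_monthly_cost(20_000_000, 100, 0.5, 0.20, 0.00002)
print(f"quiet: {quiet_month:.2f}, busy: {busy_month:.2f}")
```

Under these assumptions the bill grows in proportion to request volume, so a quiet month costs a fraction of a busy one instead of paying for peak capacity all year.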

Security Enhancements

Serverless API Gateway proxy integration enhances the security posture of applications by providing a centralized point for security enforcement, implementing robust access controls, and mitigating potential threats.

- Centralized Security Enforcement: The API Gateway acts as a central point for security policies, such as authentication, authorization, and rate limiting. This centralized approach simplifies security management and ensures consistency across all API endpoints. This is crucial for maintaining a secure and compliant environment. For example, all API requests can be routed through the API Gateway, where they can be authenticated using a common authentication provider.

- Robust Access Controls: The API Gateway enables granular access control, allowing developers to define specific permissions for each API endpoint and user role. This ensures that only authorized users can access sensitive data and functionality. This control prevents unauthorized access and mitigates the risk of data breaches. Consider an API that exposes user profiles; the API Gateway can restrict access to specific profile fields based on user roles.

- Threat Mitigation: The API Gateway provides built-in features for threat mitigation, such as request validation, input sanitization, and protection against common attacks like denial-of-service (DoS) attacks. The API Gateway can also integrate with web application firewalls (WAFs) to provide additional protection. This helps to secure the application from various threats. For example, the API Gateway can automatically block requests that exceed a defined rate limit, preventing a DoS attack.
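
As one example of centralized enforcement, the sketch below follows the response shape used by token-based Lambda authorizers on AWS API Gateway REST APIs: a principal identifier plus an IAM policy that allows or denies `execute-api:Invoke` on the requested method. The `TOKENS` table is a stand-in assumed for illustration; a real authorizer would verify a signed token or call an identity provider.

```python
# Hypothetical token store, for illustration only.
TOKENS = {"allow-me": "user-123"}


def authorizer(event: dict, context) -> dict:
    """Map the caller's token to an Allow or Deny policy for the requested method."""
    token = event.get("authorizationToken", "")
    principal = TOKENS.get(token)
    effect = "Allow" if principal else "Deny"
    return {
        "principalId": principal or "anonymous",
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Action": "execute-api:Invoke",
                    "Effect": effect,
                    "Resource": event["methodArn"],
                }
            ],
        },
    }
```

Because the decision is made at the gateway, backend functions never see requests that were denied, which keeps authentication logic out of each individual service.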

Improved Development Workflow

Serverless API Gateway proxy integration streamlines the development process, leading to faster development cycles, improved developer productivity, and increased agility.

- Faster Development Cycles: Serverless platforms enable rapid prototyping and deployment. Developers can focus on writing code and building features without the overhead of managing infrastructure. The API Gateway simplifies API management tasks, such as routing, versioning, and monitoring. This accelerates the development process. For example, a developer can quickly deploy a new API endpoint by defining it in the API Gateway and connecting it to a serverless function.

- Increased Developer Productivity: Serverless platforms automate many infrastructure management tasks, freeing up developers to focus on their core responsibilities. The API Gateway simplifies API management, reducing the time and effort required to manage APIs. This increases developer productivity and allows them to deliver features faster. The reduction in operational overhead empowers developers to concentrate on coding and innovation.

- Enhanced Agility: The serverless architecture promotes agility and flexibility. Developers can easily adapt to changing requirements and deploy updates quickly. The API Gateway facilitates seamless integration with various backend services, allowing developers to easily integrate new services and functionalities. This enhances the application’s ability to respond to evolving business needs. Consider the ability to add new features or services without significant infrastructure changes.

Use Cases and Applications

The serverless API gateway proxy integration model finds applicability across a wide spectrum of scenarios, streamlining application development, enhancing security, and optimizing resource utilization. Its inherent flexibility and scalability make it a compelling choice for various industries and use cases, especially those characterized by dynamic workloads and fluctuating traffic patterns. The following sections delineate specific application areas and illustrative examples.

Common Use Cases

The proxy integration pattern is frequently adopted to address several common architectural requirements. These include, but are not limited to, enhancing API security, simplifying microservices communication, and enabling seamless integration with legacy systems.

- API Security and Access Control: A proxy acts as a central point for managing API access. This includes authentication (verifying user identity), authorization (determining what a user can access), and rate limiting (controlling the number of requests). This approach simplifies security configuration and enforcement, allowing for consistent security policies across all backend services. For instance, an e-commerce platform might use a proxy to authenticate user requests before they reach the product catalog or payment processing services. This isolates the backend services from the complexities of authentication and authorization, improving their security posture.

- Microservices Communication: In a microservices architecture, services often need to communicate with each other. A proxy can mediate these interactions, handling service discovery (locating services), load balancing (distributing traffic across multiple instances of a service), and request routing. This simplifies the communication logic within the microservices, allowing them to focus on their core functionalities. A financial services company, for example, could use a proxy to route requests between its user authentication service, transaction processing service, and reporting service.

- Legacy System Integration: A proxy can serve as a bridge between modern applications and legacy systems that may not have native API capabilities. The proxy translates requests and responses between the modern API format and the legacy system’s interface. This allows organizations to modernize their applications without having to completely rewrite their legacy infrastructure. A healthcare provider, for example, could use a proxy to integrate a modern patient portal with its existing electronic health record (EHR) system, allowing patients to access their medical information through a modern and user-friendly interface.

- Traffic Management and Monitoring: Proxies can provide valuable insights into API usage patterns by logging and monitoring request and response data. This information can be used to optimize API performance, identify bottlenecks, and detect potential security threats. API traffic monitoring can also provide crucial data for capacity planning and resource allocation. A software-as-a-service (SaaS) provider, for instance, could monitor API traffic to identify peak usage times and scale its infrastructure accordingly, ensuring optimal performance for its customers.

Industries Benefiting from this Approach

Several industries benefit significantly from serverless API gateway proxy integration due to the advantages it offers in terms of scalability, cost efficiency, and operational agility. These industries typically have dynamic workloads, require robust security measures, and seek to modernize their IT infrastructure without incurring high operational costs.

- E-commerce: E-commerce platforms benefit from the scalability and flexibility of serverless API gateway proxy integration. This allows them to handle peak traffic during sales events and provide a secure and reliable user experience. The proxy can handle authentication, authorization, and rate limiting, protecting the backend services from malicious attacks. For example, during a flash sale, the proxy can dynamically scale to handle a surge in requests, ensuring that the website remains responsive and available to customers.

- Financial Services: Financial institutions can leverage serverless API gateway proxy integration to secure their APIs and ensure compliance with regulatory requirements. The proxy can enforce strong authentication and authorization policies, protect against denial-of-service (DoS) attacks, and provide detailed audit logs. For instance, a banking application could use a proxy to securely expose its payment processing APIs, ensuring that all transactions are authenticated and authorized before being processed.

- Healthcare: Healthcare providers can use this approach to integrate their legacy systems with modern applications, such as patient portals and electronic health record (EHR) systems. The proxy can translate requests and responses between the modern API format and the legacy system’s interface, allowing for seamless data exchange. Furthermore, the proxy can help to ensure compliance with HIPAA regulations by enforcing secure access controls and logging all API requests.

- Media and Entertainment: Media companies can use serverless API gateway proxy integration alongside their content delivery networks (CDNs) to provide a consistent user experience across different devices. The proxy can handle request routing, caching, and content transformation for API traffic, which helps optimize the delivery of multimedia content. For example, a streaming service could rely on a proxy that scales automatically during peak viewing times so that requests from users around the world are still served efficiently.

- Software as a Service (SaaS): SaaS providers can use this integration to manage their APIs, handle user authentication and authorization, and provide a consistent user experience across all of their services. The proxy can also be used to monitor API usage, identify bottlenecks, and optimize API performance. For example, a project management platform could use a proxy to manage its API, allowing users to access their projects and tasks from different devices and applications.

Implementation Methods and Procedures

Implementing a serverless API Gateway proxy integration involves several methods, each with its own set of advantages and considerations. The choice of method often depends on the specific requirements of the application, the complexity of the API, and the existing infrastructure. This section details the primary implementation approaches, outlining the steps and configuration options associated with each.

Methods for Implementing Proxy Integration

Several methods can be employed to implement a serverless API Gateway proxy integration, catering to different architectural preferences and technical requirements. Each method offers a distinct approach to forwarding requests and responses between the API Gateway and the backend service.

- Custom Authorizers: This method utilizes custom authorizers, typically implemented as Lambda functions, to authenticate and authorize requests before they reach the backend. This approach allows for fine-grained control over access to the backend service. The authorizer function can validate tokens, check permissions, and return an allow-or-deny decision, optionally with context values that the gateway passes along to the backend. An authorizer is usually combined with one of the integration types below rather than used on its own.

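- Lambda Proxy Integration: This is a common method where the API Gateway invokes a Lambda function and passes it the full request (method, path, headers, query parameters, and body) as a single event. The function returns the status code, headers, and body, which the gateway relays to the client. The function can handle the request itself or act as a proxy that forwards it to another backend service, which gives it flexibility and control over request transformation and response handling.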

- HTTP Proxy Integration: In this method, the API Gateway directly forwards requests to an HTTP endpoint, such as a backend service hosted on a server or another cloud service. The API Gateway handles the request and response lifecycle, making this a straightforward approach.

- AWS AppSync Integration: For GraphQL APIs, AWS AppSync can be integrated as a proxy. AppSync handles the resolution of GraphQL queries and mutations by proxying requests to various data sources, including Lambda functions, HTTP endpoints, and databases.

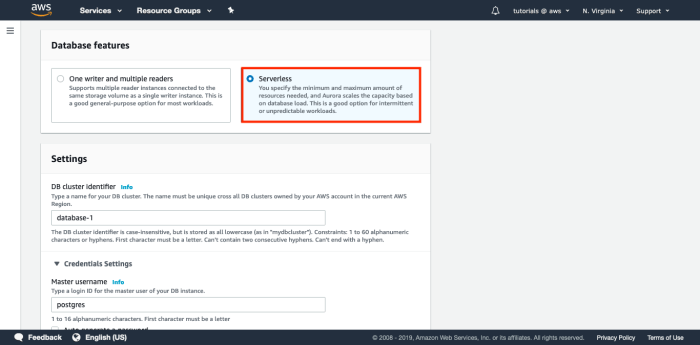

Typical Steps for Setting Up a Proxy

Setting up a serverless API Gateway proxy involves a series of sequential steps. The exact steps may vary slightly depending on the chosen implementation method and the specific API Gateway service (e.g., AWS API Gateway, Azure API Management, Google Cloud API Gateway), but the general process remains consistent.

- Define the API: Begin by defining the API’s structure, including the resources, methods (GET, POST, PUT, DELETE, etc.), and request/response models. This definition typically involves specifying the API’s endpoints, request parameters, and expected response formats (e.g., JSON).

- Choose the Integration Type: Select the appropriate integration type based on the backend service and the desired level of control. This could be a Lambda proxy integration, an HTTP proxy integration, or a custom authorizer.

- Configure the Backend: Configure the backend service (e.g., Lambda function, HTTP endpoint) to handle the incoming requests. This includes deploying the Lambda function code, configuring the HTTP endpoint, and ensuring the backend is accessible from the API Gateway.

- Configure the Integration: Within the API Gateway, configure the integration settings to connect the API’s methods to the backend service. This involves specifying the backend endpoint and, where the integration does not simply pass requests through unchanged, request and response mapping templates.

- Configure Request and Response Transformations (if needed): If necessary, define request and response mapping templates to transform the request and response data between the API Gateway and the backend service. This can involve modifying headers, body content, or query parameters.

- Deploy and Test: Deploy the API Gateway configuration and test the API endpoints to ensure that requests are correctly proxied to the backend service and responses are returned as expected. This includes testing different scenarios, such as valid and invalid requests.

- Configure Security and Monitoring: Implement security measures, such as API keys, authentication, and authorization, to protect the API. Also, set up monitoring and logging to track API usage, performance, and errors.
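
The first few steps above (define the API, choose the integration type, configure the integration) are often captured in a single API definition. The sketch below builds an OpenAPI document for AWS API Gateway using its `x-amazon-apigateway-integration` extension with the `aws_proxy` type. It assumes AWS as the provider; the function name, the `/users` path, and the ARN in the usage lines are placeholders, not values from any real account.

```python
import json


def proxy_api_definition(title: str, region: str, lambda_arn: str) -> dict:
    """Build an OpenAPI document that wires GET /users to a Lambda proxy integration."""
    uri = (
        f"arn:aws:apigateway:{region}:lambda:path/2015-03-31/functions/"
        f"{lambda_arn}/invocations"
    )
    integration = {
        "type": "aws_proxy",   # pass the whole request to the function
        "httpMethod": "POST",  # Lambda is invoked with POST regardless of the API method
        "uri": uri,
    }
    return {
        "openapi": "3.0.1",
        "info": {"title": title, "version": "1.0"},
        "paths": {
            "/users": {
                "get": {
                    "responses": {"200": {"description": "OK"}},
                    "x-amazon-apigateway-integration": integration,
                }
            }
        },
    }


# Placeholder ARN for illustration only.
definition = proxy_api_definition(
    "demo-api", "us-east-1", "arn:aws:lambda:us-east-1:123456789012:function:demo"
)
print(json.dumps(definition, indent=2))
```

Keeping the definition in a file under version control makes the deploy-and-test step repeatable, since the same document can be imported into each environment.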

Common Configuration Options

Serverless API Gateway proxy integrations offer a range of configuration options to tailor the API’s behavior and performance. These options provide flexibility in managing the API’s functionality, security, and scalability.

- Request Mapping Templates: These templates transform incoming requests before they are sent to the backend service. They can be used to modify headers, body content, and query parameters. For example, a request mapping template can add an API key to the request headers.

- Response Mapping Templates: These templates transform the responses received from the backend service before they are returned to the client. They can be used to modify the response body, headers, and status codes. For example, a response mapping template can filter sensitive information from the response body.

- API Keys and Usage Plans: API keys and usage plans control access to the API and manage API usage. API keys are unique identifiers that clients use to access the API. Usage plans define the rate limits and quotas for API requests.

- Authentication and Authorization: Implement authentication and authorization mechanisms to secure the API. This can include using API keys, OAuth 2.0, or custom authorizers.

- Caching: Enable caching to improve API performance and reduce the load on the backend service. Caching stores frequently accessed responses, so they can be retrieved quickly without hitting the backend.

- Rate Limiting and Throttling: Implement rate limiting and throttling to protect the backend service from overload. Rate limiting restricts the number of requests a client can make within a specific time period. Throttling limits the overall number of requests the API can handle.

- Monitoring and Logging: Configure monitoring and logging to track API usage, performance, and errors. This includes logging API requests and responses, monitoring API latency, and setting up alerts for errors.
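To make the rate limiting option more concrete, here is a minimal token bucket sketch. Managed gateways provide this behavior as configuration, so the class below is purely illustrative; the `TokenBucket` name, its parameters, and the injectable `clock` are assumptions made for the example.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity` while sustaining `rate` requests per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the behavior can be tested deterministically
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        """Return True if a request may proceed, consuming one token."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per API key or client, and answer with HTTP 429 when `allow()` returns `False`.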

Security Considerations and Best Practices

Securing a serverless API Gateway proxy integration is paramount to protect sensitive data, ensure service availability, and maintain the integrity of the system. This involves a multi-layered approach that considers both the API Gateway itself and the backend services it proxies. Failure to adequately address security vulnerabilities can lead to data breaches, denial-of-service attacks, and reputational damage.

API Gateway Security Hardening

The API Gateway serves as the primary point of entry for all requests, making it a critical component for security. Proper configuration and management of the gateway are essential to mitigate potential threats.

- Authentication and Authorization: Implement robust authentication mechanisms, such as API keys, OAuth 2.0, or JWT (JSON Web Tokens), to verify the identity of clients. Define clear authorization policies to control which clients can access specific API endpoints and resources. This prevents unauthorized access and enforces the principle of least privilege.

- Input Validation and Sanitization: Validate and sanitize all incoming requests to prevent injection attacks, such as SQL injection and cross-site scripting (XSS). This involves checking the format, length, and content of all inputs, and rejecting or transforming malicious payloads.

- Rate Limiting and Throttling: Implement rate limiting and throttling to protect against denial-of-service (DoS) attacks. These mechanisms restrict the number of requests a client can make within a specific timeframe, preventing a single client from overwhelming the backend services.

- Web Application Firewall (WAF): Integrate a WAF to filter malicious traffic and protect against common web application vulnerabilities. A WAF can detect and block attacks such as SQL injection, cross-site scripting, and bot attacks.

- Encryption in Transit: Enforce HTTPS (TLS/SSL) for all API traffic to encrypt data in transit. This protects sensitive information from being intercepted and compromised during communication between the client, API gateway, and backend services.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify and address vulnerabilities. This involves simulating attacks to assess the effectiveness of security controls and identify areas for improvement.

- Logging and Monitoring: Implement comprehensive logging and monitoring to track API usage, detect suspicious activity, and provide insights into security incidents. This includes logging all API requests and responses, as well as monitoring for anomalies and potential threats.
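Input validation is easier to reason about with an example. The sketch below checks a hypothetical "create product" request body against an allow-list of fields and formats; the field names, the length limit, and the `validate_product` function are assumptions, not part of any gateway's API.

```python
import re

# Hypothetical rule: product names are short and use a restricted character set.
NAME_PATTERN = re.compile(r"[A-Za-z0-9 _-]{1,64}")
ALLOWED_FIELDS = {"name", "price"}


def validate_product(payload):
    """Return a list of validation errors; an empty list means the payload is acceptable."""
    if not isinstance(payload, dict):
        return ["body must be a JSON object"]

    errors = []
    name = payload.get("name")
    if not isinstance(name, str) or not NAME_PATTERN.fullmatch(name):
        errors.append("name must be 1-64 characters: letters, digits, space, _ or -")

    price = payload.get("price")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(price, bool) or not isinstance(price, (int, float)) or price < 0:
        errors.append("price must be a non-negative number")

    unexpected = set(payload) - ALLOWED_FIELDS
    if unexpected:
        errors.append("unexpected fields: " + ", ".join(sorted(unexpected)))
    return errors
```

Rejecting anything outside the allow-list, rather than trying to strip known-bad patterns, tends to be the more robust choice. The same rules could also be expressed as a JSON Schema where the gateway supports request validation.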

Backend Service Security Measures

Securing the backend services behind the API Gateway is equally crucial. Even with a secure gateway, vulnerabilities in the backend can expose sensitive data and compromise the entire system.

- Secure Coding Practices: Adhere to secure coding practices to prevent vulnerabilities such as buffer overflows, cross-site request forgery (CSRF), and insecure direct object references. This includes using secure libraries, validating input, and properly handling errors.

- Database Security: Protect databases by implementing strong authentication and authorization mechanisms, encrypting sensitive data at rest, and regularly backing up data. Limit access to databases based on the principle of least privilege.

- Network Segmentation: Segment the network to isolate backend services from the public internet and other potentially compromised systems. This limits the impact of a security breach.

- Regular Patching and Updates: Keep all software, including operating systems, libraries, and frameworks, up to date with the latest security patches and updates. This mitigates known vulnerabilities.

- Secrets Management: Securely store and manage sensitive credentials, such as API keys, database passwords, and encryption keys. Use a secrets management service or vault to protect these secrets from unauthorized access.

- Monitoring and Alerting: Implement monitoring and alerting to detect and respond to security incidents. This includes monitoring for suspicious activity, such as unauthorized access attempts, unusual network traffic, and system errors.

- Service-to-Service Authentication: When backend services communicate with each other, implement service-to-service authentication to verify their identities and ensure that only authorized services can access specific resources. This can be achieved using mutual TLS or other authentication mechanisms.
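Besides mutual TLS, one of the "other authentication mechanisms" for service-to-service calls is request signing with a shared secret. The following is a minimal sketch using HMAC-SHA256 from the Python standard library. The message layout, the function names, and the five-minute skew window are assumptions chosen for illustration, and in practice the secret would come from a secrets management service rather than from code.

```python
import hashlib
import hmac


def sign_request(secret, method, path, body, timestamp):
    """Compute a hex HMAC-SHA256 signature over the parts of the request that matter."""
    message = "\n".join([method.upper(), path, str(timestamp), body])
    return hmac.new(secret, message.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_request(secret, method, path, body, timestamp, signature, now, max_skew=300):
    """Check the signature and reject stale timestamps to limit replay."""
    if abs(now - timestamp) > max_skew:
        return False
    expected = sign_request(secret, method, path, body, timestamp)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, signature)
```

The calling service would send the timestamp and signature as headers, and the receiving service would recompute the signature from the request it actually received.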

Best Practices Summary

The following table summarizes key security best practices for serverless API Gateway proxy integrations:

| Area | Best Practice | Description |
|---|---|---|
| API Gateway | Authentication & Authorization | Implement robust authentication and authorization mechanisms to control access to API endpoints. |
| API Gateway | Input Validation | Validate and sanitize all incoming requests to prevent injection attacks. |
| API Gateway | Rate Limiting & Throttling | Protect against DoS attacks by limiting the number of requests. |
| API Gateway | WAF Integration | Use a WAF to filter malicious traffic. |
| API Gateway | HTTPS Enforcement | Encrypt all API traffic using HTTPS. |
| API Gateway | Regular Audits | Conduct security audits and penetration testing. |
| API Gateway | Logging & Monitoring | Implement comprehensive logging and monitoring. |
| Backend Services | Secure Coding | Adhere to secure coding practices. |
| Backend Services | Database Security | Protect databases with strong security measures. |
| Backend Services | Network Segmentation | Segment the network to isolate backend services. |
| Backend Services | Patching & Updates | Keep all software up to date. |
| Backend Services | Secrets Management | Securely manage sensitive credentials. |
| Backend Services | Monitoring & Alerting | Implement monitoring and alerting. |
| Backend Services | Service-to-Service Authentication | Implement authentication between backend services. |

Monitoring and Logging Strategies

Effective monitoring and logging are critical for maintaining the health, performance, and security of a serverless API gateway proxy integration. They provide insights into API behavior, enable rapid troubleshooting, and support compliance requirements. Without robust monitoring and logging, detecting and resolving issues becomes significantly more challenging, potentially leading to service disruptions and security vulnerabilities.

Importance of Monitoring and Logging

Monitoring and logging play distinct but complementary roles in ensuring a reliable and secure API gateway proxy integration. Monitoring provides real-time visibility into the performance and availability of the API, allowing for proactive identification of issues. Logging, on the other hand, captures detailed information about API requests and responses, enabling in-depth analysis and auditing. The synergy between the two is crucial for comprehensive system management.

- Proactive Issue Detection: Monitoring systems continuously track key performance indicators (KPIs) such as latency, error rates, and throughput. This allows for the early detection of anomalies and performance degradation, enabling timely intervention before they impact users.

- Rapid Troubleshooting: When issues arise, logging provides the detailed information needed to diagnose the root cause. Logs contain request details, response codes, error messages, and timestamps, enabling developers to pinpoint the source of problems quickly.

- Performance Optimization: Analyzing performance metrics gathered through monitoring and logging can identify bottlenecks and areas for optimization. For example, identifying slow-performing API endpoints can inform decisions about caching, code optimization, or infrastructure scaling.

- Security Auditing and Compliance: Logs are essential for security audits and compliance requirements. They provide a record of all API interactions, including user authentication, authorization, and data access. This information can be used to detect and investigate security incidents, track user activity, and demonstrate compliance with regulations.

- Business Intelligence: Analyzing API usage patterns can provide valuable business insights. Logs can be used to track which APIs are most popular, identify user demographics, and understand how users are interacting with the API. This information can inform product development, marketing strategies, and business decisions.

Strategies for Monitoring API Performance

Monitoring API performance involves tracking a range of metrics to assess the health and efficiency of the API gateway proxy integration. These metrics should be collected and analyzed continuously to identify potential problems and optimize performance.

- Latency: Measures the time it takes for an API request to be processed and a response to be returned. High latency can indicate performance bottlenecks. Latency can be broken down further to show how long the request spends in each step (e.g., DNS resolution, connection establishment, time to first byte, content download).

- Error Rates: The percentage of API requests that result in errors. High error rates indicate issues with the API, such as incorrect configurations, failing dependencies, or code bugs. Monitoring different error codes (e.g., 4xx, 5xx) provides more granular insights into the nature of the errors.

- Throughput: The number of API requests processed per unit of time (e.g., requests per second, transactions per minute). Low throughput can indicate performance limitations or resource constraints. Throughput monitoring is crucial for capacity planning and scaling.

- Availability: The percentage of time the API is operational and accessible. Low availability can result from service outages or other disruptions. Monitoring availability ensures that the API is consistently available to users.

- Traffic Volume: The number of API requests received over a specific period. Tracking traffic volume helps understand API usage patterns and detect spikes in traffic that could indicate denial-of-service attacks.

- Resource Utilization: Monitoring resource usage, such as CPU, memory, and network bandwidth, helps identify resource bottlenecks. For example, if CPU utilization is consistently high, it might indicate a need for scaling or code optimization.

- External Dependencies: Monitor the performance of any external services or databases that the API depends on. Slow performance in these dependencies can directly impact the API’s overall performance.

Monitoring tools such as Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring provide comprehensive solutions for collecting, analyzing, and visualizing these metrics. These tools offer dashboards, alerts, and automated responses to ensure prompt action when issues arise.
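To show how several of these metrics relate to raw request data, the sketch below derives throughput, error rate, and latency percentiles from a list of request records. The record shape (`latency_ms`, `status`) and the function names are assumptions. Hosted monitoring services compute comparable statistics for you, so this is only meant to clarify the definitions.

```python
import math


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize(records, window_seconds):
    """Summarize request records shaped like {"latency_ms": float, "status": int}."""
    if not records:
        return {"throughput_rps": 0.0, "error_rate": 0.0, "p50_ms": None, "p95_ms": None}
    latencies = sorted(r["latency_ms"] for r in records)
    # Only 5xx responses are counted here; 4xx could be tracked separately.
    server_errors = sum(1 for r in records if r["status"] >= 500)
    return {
        "throughput_rps": len(records) / window_seconds,
        "error_rate": server_errors / len(records),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
    }
```

Percentiles such as p95 are usually more informative than averages for alerting, because a small share of very slow requests can hide behind a healthy mean.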

Setting up Logging for Debugging and Auditing

Setting up effective logging involves configuring the API gateway proxy integration to capture relevant information about each API request and response. The logs should be detailed enough to facilitate debugging and auditing while maintaining data privacy and security.

- Log Levels: Implement different log levels (e.g., DEBUG, INFO, WARN, ERROR, FATAL) to control the amount of information captured. DEBUG logs are typically used for detailed troubleshooting, while ERROR logs capture critical errors that require immediate attention.

- Request and Response Details: Log the following information for each API request:

  - Timestamp

  - Request method (e.g., GET, POST)

  - Request URL

  - Request headers (e.g., User-Agent, Content-Type)

  - Request body (carefully, considering sensitive data)

  - Client IP address

  - User identifier (if authenticated)

  - Response status code

  - Response headers

  - Response body (carefully, considering sensitive data)

  - Processing time

- Error Handling and Logging: Implement robust error handling and logging to capture error messages, stack traces, and context information. This is crucial for diagnosing and resolving issues.

- Security Considerations:

  - Data Masking: Mask or redact sensitive data (e.g., passwords, credit card numbers) from logs to protect user privacy.

  - Access Control: Implement access controls to restrict who can view and access the logs.

  - Encryption: Encrypt logs at rest and in transit to protect them from unauthorized access.

- Centralized Logging: Aggregate logs from all components of the API gateway proxy integration into a centralized logging system (e.g., Amazon CloudWatch Logs, Azure Monitor Logs, Google Cloud Logging). This makes it easier to search, analyze, and correlate logs from different sources.

- Log Retention: Define a log retention policy that aligns with compliance requirements and business needs. Determine how long logs should be stored and archived.

- Log Analysis and Alerting: Set up log analysis and alerting to automatically detect anomalies, security threats, and performance issues. For example, configure alerts to be triggered when error rates exceed a certain threshold or when suspicious activity is detected.

The implementation of logging varies with the serverless platform and API gateway used. For example, Node.js functions on AWS Lambda can write logs with `console.log()`, and the output is sent automatically to CloudWatch Logs. Azure Functions and Google Cloud Functions offer similar logging capabilities that integrate with their respective logging services. Structured logging formats (e.g., JSON) make it easier to parse and analyze logs programmatically.
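The structured logging and data masking points above can be combined in a small helper. This is a sketch using only the Python standard library; the `SENSITIVE_KEYS` list, the field names, and the function names are hypothetical and would need to match your own data.

```python
import json
import logging

SENSITIVE_KEYS = {"password", "authorization", "card_number"}  # hypothetical list

logger = logging.getLogger("api")


def redact(value):
    """Recursively replace values of sensitive keys so they never reach the logs."""
    if isinstance(value, dict):
        return {
            k: "***" if k.lower() in SENSITIVE_KEYS else redact(v)
            for k, v in value.items()
        }
    if isinstance(value, list):
        return [redact(v) for v in value]
    return value


def format_log_entry(level, message, **fields):
    """Render one log entry as a single JSON line."""
    entry = {"level": level, "message": message}
    entry.update(redact(fields))
    return json.dumps(entry, sort_keys=True)


def log_request(method, path, status, duration_ms, headers):
    """Emit one structured entry per completed request."""
    logger.info(format_log_entry(
        "INFO", "request completed",
        method=method, path=path, status=status,
        duration_ms=duration_ms, headers=headers,
    ))
```

Emitting one JSON object per line keeps each entry searchable by field in a centralized logging system, and redacting before serialization means masked values never leave the function.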

Advanced Topics and Considerations

Serverless API Gateway proxy integrations offer a powerful architecture for managing and scaling APIs. However, their implementation necessitates careful consideration of advanced concepts to optimize performance, security, and maintainability. This section delves into these advanced topics, providing insights into request transformation, data mapping, and illustrating the request/response flow within this architecture.

Request Transformation and Data Mapping

Effective API gateway proxy integrations often require transforming incoming requests and mapping data between the client, the API gateway, and the backend services. This is crucial for ensuring compatibility, security, and the efficient processing of data.

Data mapping and request transformation generally involve several key operations:

- Request Header Manipulation: Modifying headers like `Content-Type`, `Authorization`, or custom headers to adapt requests for the backend service. This might involve adding security tokens, adjusting content negotiation preferences, or passing contextual information. For example, a proxy might add an `X-Forwarded-For` header to pass the client’s IP address to the backend service.

- Request Body Transformation: Converting the request body’s format or structure. This could include converting XML to JSON, validating the request body against a schema, or masking sensitive data. Consider a scenario where a legacy backend service expects XML, while the API receives JSON. The API gateway would perform the necessary transformation.

- URL Path Rewriting: Modifying the URL path to route requests correctly to the backend service. This is frequently used when the client’s perceived URL structure differs from the backend service’s actual structure. For instance, the API gateway might map `/api/v1/products` to the backend’s `/internal/products`.

- Response Header Manipulation: Adjusting the response headers from the backend service before sending them to the client. This can involve adding CORS headers, modifying caching directives, or masking internal server details.

- Response Body Transformation: Transforming the response body, such as converting data formats, filtering data, or aggregating data from multiple backend services. This is critical for adapting the backend’s response to the client’s needs or for security purposes. An example would be stripping out sensitive data from a response before it is sent to the client.

The complexity of data mapping and request transformation can vary significantly. Simple transformations can be handled using built-in features of the API gateway. More complex transformations may require the use of scripting languages (e.g., JavaScript, Python) or integration with external services. For example, API gateways often support JavaScript-based transformations to handle complex data manipulations. The choice of approach depends on the specific requirements and the capabilities of the API gateway.
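Two of the operations above, header manipulation and URL path rewriting, can be sketched in a few lines. The request is modeled as a plain dictionary and `transform_request` is a hypothetical helper, not a gateway API. The path mapping reuses the `/api/v1/products` to `/internal/products` example from the list.

```python
def transform_request(request, client_ip, path_map):
    """Return a new request dict adapted for the backend; the input is not modified."""
    headers = dict(request.get("headers", {}))
    # Append the caller's address, preserving any proxies already recorded.
    prior = headers.get("X-Forwarded-For")
    headers["X-Forwarded-For"] = f"{prior}, {client_ip}" if prior else client_ip

    path = request["path"]
    # Rewrite the longest matching public prefix to its internal equivalent.
    for public, internal in sorted(path_map.items(), key=lambda kv: -len(kv[0])):
        if path == public or path.startswith(public + "/"):
            path = internal + path[len(public):]
            break

    return {**request, "headers": headers, "path": path}
```

Matching on whole path segments (`public + "/"`) avoids accidentally rewriting unrelated paths that merely share a prefix, such as `/api/v1/products-archive`.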

Simplified Request/Response Flow Illustration

The following walkthrough provides a simplified view of the request/response flow in a serverless API gateway proxy integration, with annotated steps. It clarifies how the components interact and how requests and responses are processed.

In this flow, a client (e.g., a web browser or mobile app) interacts with a serverless API gateway, which in turn proxies requests to a backend service (e.g., a serverless function or a microservice).

Step 1: Client Request

The client initiates an HTTP request (e.g., a GET request to `/api/products`) to the API gateway’s endpoint. This request includes headers and, potentially, a request body.

Step 2: API Gateway Processing (Request)

The API gateway receives the request and performs several actions:

- Authentication and Authorization: Validates the client’s credentials and verifies the client’s permissions to access the requested resource.

- Request Transformation: Modifies the request headers, body, or URL as required by the backend service. For instance, it might add an `Authorization` header or rewrite the URL.

- Routing: Determines the appropriate backend service based on the request’s path, method, and other criteria.

Step 3: Backend Service Processing

The API gateway forwards the transformed request to the selected backend service. The backend service processes the request, potentially interacting with databases or other resources.

Step 4: Backend Service Response

The backend service generates an HTTP response, including headers, a response body (containing the data), and a status code (e.g., 200 OK, 404 Not Found).

Step 5: API Gateway Processing (Response)

The API gateway receives the response from the backend service and performs the following:

- Response Transformation: Modifies the response headers and/or body, if necessary. This could involve converting data formats, filtering data, or adding CORS headers.

- Monitoring and Logging: Records the request and response details for monitoring and troubleshooting purposes.

Step 6: Client Response

The API gateway forwards the transformed response to the client. The client receives the response and displays the data or takes appropriate action.

This simplified illustration shows the core flow. In reality, the process can be more complex, involving multiple backend services, caching, and other features. However, this flow captures the fundamental interactions between the client, the API gateway, and the backend services in a serverless API gateway proxy integration.
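The six steps can also be traced in a toy, in-process model. Everything here is hypothetical: the routing table, the API key check, and the `backend_products` function stand in for real gateway configuration and a real backend, and a production gateway performs these steps as managed infrastructure rather than application code.

```python
import json


def backend_products(request):
    """Hypothetical backend service (steps 3 and 4)."""
    return {"status": 200, "headers": {}, "body": json.dumps([{"id": 1, "name": "widget"}])}


ROUTES = {("GET", "/api/products"): backend_products}  # hypothetical routing table
VALID_KEYS = {"demo-key"}  # hypothetical credential store
access_log = []


def handle(request):
    """Walk one request (step 1) through gateway steps 2 to 6."""
    # Step 2: authenticate, then route.
    if request.get("headers", {}).get("x-api-key") not in VALID_KEYS:
        response = {"status": 401, "headers": {}, "body": json.dumps({"message": "Unauthorized"})}
    else:
        backend = ROUTES.get((request["method"], request["path"]))
        if backend is None:
            response = {"status": 404, "headers": {}, "body": json.dumps({"message": "Not found"})}
        else:
            # Steps 3 and 4: forward the request and collect the backend response.
            response = backend(request)
    # Step 5: transform the response (here, add a CORS header) and log the exchange.
    response["headers"]["Access-Control-Allow-Origin"] = "*"
    access_log.append((request["method"], request["path"], response["status"]))
    # Step 6: return the response to the client.
    return response
```

Note that unauthenticated requests never reach the backend, which is the main reason for placing authentication at the gateway.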

Ending Remarks

In conclusion, the serverless API gateway proxy integration presents a compelling solution for modern API management, offering enhanced scalability, cost optimization, and improved security. By understanding the core components, comparing it to alternative approaches, and recognizing its practical applications, developers can leverage this integration pattern to build robust, efficient, and scalable applications. From streamlined development workflows to optimized resource utilization, this approach empowers organizations to embrace the full potential of serverless computing, fostering innovation and agility in a rapidly evolving technological landscape.

Applying these concepts deliberately, particularly around security, monitoring, and request transformation, will matter for any project that adopts this pattern.

Q&A

What are the primary benefits of using a serverless API gateway proxy integration?

Key benefits include scalability (automatic scaling based on demand), cost-effectiveness (pay-per-use model), improved security (centralized security management), and simplified development workflows (reduced infrastructure management).

How does a serverless API gateway handle authentication and authorization?

The API gateway can handle authentication (verifying user identity) and authorization (determining user permissions) using various methods like API keys, OAuth, or JWT. This centralized approach simplifies security management.

What are the potential drawbacks of using a serverless API gateway proxy integration?

Potential drawbacks include increased latency (due to the added hop), vendor lock-in (depending on the chosen API gateway provider), and the need for careful monitoring and logging to troubleshoot issues.

How does request transformation work in a serverless API gateway?

The API gateway can transform incoming requests (e.g., changing headers, modifying payloads) to match the format expected by the backend service. This simplifies integration with different backend systems.

What tools are commonly used to implement a serverless API gateway proxy integration?

Popular tools include Amazon API Gateway, Azure API Management, Google Cloud API Gateway, and the Serverless Framework. These tools provide features for defining APIs, managing security, and monitoring performance.