In today’s fast-paced digital world, website and application performance is paramount. Slow loading times can lead to frustrated users and lost opportunities. One of the most effective strategies for enhancing performance and ensuring a seamless user experience is implementing a distributed cache. This technology stores frequently accessed data across multiple servers, allowing for faster retrieval and reduced strain on the primary data sources.

This exploration delves into the core concepts of distributed caching, examining its architecture, benefits, and various use cases. We will uncover how distributed caches significantly improve scalability, performance, and overall cost efficiency, making them a critical component for modern web applications and database systems. Furthermore, this comprehensive guide will highlight the key components, data distribution strategies, and implementation technologies, providing a solid understanding of how to leverage distributed caching for optimal performance.

Introduction to Distributed Caching

Distributed caching is a crucial technique in modern software architecture, designed to enhance application performance and scalability. It involves storing data across multiple servers, creating a cache cluster that can handle significantly higher workloads than a single-server solution. This approach minimizes the load on backend databases and improves response times for end-users.

Core Concept and Architecture

The fundamental concept behind a distributed cache is to distribute the storage and retrieval of cached data across a network of servers. This architecture offers several key advantages, including increased capacity, improved fault tolerance, and enhanced scalability. The architecture typically involves the following components:

- Cache Nodes: These are individual servers or instances that store cached data. They form the cluster.

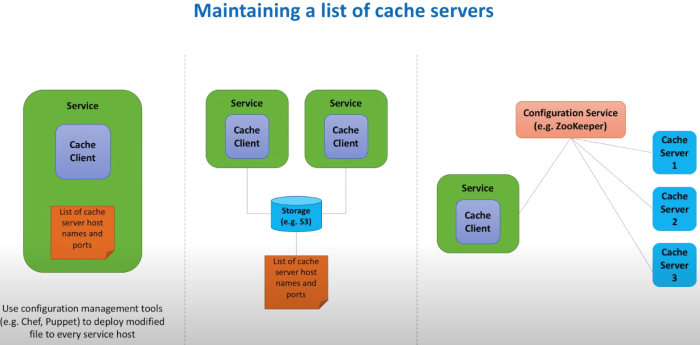

- Cache Client: This is the application component that interacts with the cache. It sends requests to retrieve or store data.

- Cache Manager (or Coordinator): This component manages the cache cluster, handling tasks such as data distribution, node discovery, and failure detection. Not all distributed caches have a dedicated manager; some use distributed consensus algorithms.

- Network: The network infrastructure that connects the cache nodes and the cache clients.

The cache client typically uses a hashing algorithm to determine which cache node holds a particular piece of data. This allows for efficient data retrieval and distribution across the cluster. For example, consistent hashing is often employed to minimize data movement when nodes are added or removed from the cluster.

Definition and Purpose

A distributed cache is a system that stores data across multiple servers to improve the performance and scalability of applications. Its primary purpose is to reduce the load on backend data stores, such as databases, by caching frequently accessed data in memory. This results in faster response times and improved user experience. It also allows the backend database to handle fewer requests, which can improve its performance and reduce costs.

Distributed Cache vs. Local Cache

A crucial distinction exists between distributed caches and local caches. Understanding this difference is essential for choosing the appropriate caching strategy.

- Local Cache: A local cache resides within the same process or on the same server as the application. It’s fast but limited in capacity and susceptible to data loss if the application or server crashes. Examples include in-memory caches within a Java application or a browser cache.

- Distributed Cache: A distributed cache resides on a separate cluster of servers, offering significantly larger capacity, fault tolerance, and scalability. If one cache server fails and the cache replicates its data, the data can be retrieved from another server in the cluster; without replication, the lost entries are reloaded from the primary data source. Examples include Memcached, Redis, and Hazelcast.

The key difference lies in the scope and resilience. A local cache is suitable for small-scale applications or caching data that is specific to a single instance. A distributed cache is preferred for larger applications that require high availability, scalability, and the ability to handle a large volume of data. For instance, consider an e-commerce website. A local cache might be used to cache user session data on each web server, while a distributed cache could be used to cache product catalogs and frequently accessed user profiles across the entire infrastructure.

This distributed approach ensures that the website can handle a large number of concurrent users and maintain fast response times even during peak traffic.

Architectural Components

A distributed cache system’s effectiveness stems from its architectural design. Understanding the key components and their interactions is crucial for grasping how these systems operate and deliver performance benefits. The architecture typically involves several interconnected elements, each playing a specific role in caching data efficiently and reliably.

Cache Nodes

Cache nodes are the fundamental building blocks of a distributed cache. They are individual servers or instances that store the cached data.

- Data Storage: Each node is responsible for storing a portion of the cached data. This data is typically stored in memory (RAM) for fast access, but can also be persisted to disk for durability.

- Key-Value Store: Cache nodes function as key-value stores, where data is accessed using unique keys. When a client requests data, it provides a key, and the cache node retrieves the corresponding value if it exists.

- Capacity and Scalability: The capacity of a cache node determines the amount of data it can store. To handle increasing data volumes and user traffic, cache nodes can be scaled horizontally by adding more nodes to the cluster.

- Example: Imagine a web application caching product details. Each cache node might store details for a specific range of product IDs, allowing for efficient retrieval based on the product key.

Cache Clients

Cache clients are applications or services that interact with the distributed cache to store and retrieve data.

- Data Requests: Clients initiate requests to the cache for data retrieval. They provide the key associated with the desired data.

- Data Updates: Clients also update the cache by storing new data or modifying existing data.

- Communication: Clients communicate with the cache nodes through a network, typically using protocols like TCP or UDP.

- Libraries and SDKs: Client libraries and software development kits (SDKs) often provide an abstraction layer, simplifying interaction with the cache and handling tasks like connection management and data serialization.

- Example: A mobile app might use a cache client to retrieve user profile data from the cache. If the data isn’t in the cache (a cache miss), the client would fetch it from the primary data source (e.g., a database) and then store it in the cache for future use.
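The cache-miss flow in the example above is often called the cache-aside pattern. The following is a minimal sketch of it, assuming a plain dictionary as a stand-in for the remote cache; `CacheAsideClient`, `load_profile`, and `FAKE_DB` are hypothetical names used only for illustration.

```python
from typing import Callable, Dict, Optional


class CacheAsideClient:
    """Minimal cache-aside client. The dict stands in for a remote cache cluster."""

    def __init__(self, load_from_source: Callable[[str], Optional[dict]]):
        self._cache: Dict[str, dict] = {}   # stand-in for the distributed cache
        self._load = load_from_source       # hypothetical loader for the primary store
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[dict]:
        # Cache hit: serve directly from the cache.
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        # Cache miss: fall back to the primary source, then populate the cache.
        self.misses += 1
        value = self._load(key)
        if value is not None:
            self._cache[key] = value
        return value


# Hypothetical primary data source and a log of how often it is queried.
FAKE_DB = {"user:42": {"name": "Ada", "plan": "pro"}}
db_calls = []


def load_profile(key: str) -> Optional[dict]:
    db_calls.append(key)
    return FAKE_DB.get(key)


client = CacheAsideClient(load_profile)
first = client.get("user:42")    # miss: loaded from FAKE_DB
second = client.get("user:42")   # hit: served from the cache
```

In this sketch only the first lookup reaches the primary source; a real client library would add network calls, serialization, and expiry on top of the same flow.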

Cache Manager / Coordinator

The cache manager or coordinator is a critical component responsible for managing the overall cache cluster and ensuring data consistency and availability.

- Data Distribution: It manages how data is distributed across the cache nodes, often using techniques like consistent hashing to determine which node is responsible for a particular key.

- Node Monitoring: It monitors the health and status of the cache nodes, detecting failures and taking corrective actions like data replication or failover.

- Data Replication: To ensure data durability and availability, the cache manager often replicates data across multiple nodes. If a node fails, the replicated data can be served from another node.

- Cache Eviction: It implements eviction policies to remove data from the cache when it reaches its capacity or when data becomes stale. Common capacity-based policies include Least Recently Used (LRU) and Least Frequently Used (LFU); Time-to-Live (TTL) expiration additionally removes entries once they exceed a fixed lifetime.

- Example: A cache manager might use consistent hashing to distribute data across 10 cache nodes. When a client requests data with key “product_123”, the cache manager uses a hash function to determine which node is responsible for storing and serving that data. The manager also ensures that if a node goes down, the data is still available from a replicated copy on another node.
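To make the eviction bullet above concrete, here is a small sketch of Least Recently Used (LRU) eviction on a single node. It is an illustration built on Python's `collections.OrderedDict`, not the eviction code of any particular cache product; `LRUCache` is a hypothetical name.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()  # oldest entry first, newest last

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used entry

    def keys(self):
        return list(self._items)


cache = LRUCache(capacity=2)
cache.put("product_1", "A")
cache.put("product_2", "B")
cache.get("product_1")        # touch product_1, so product_2 is now the oldest
cache.put("product_3", "C")   # over capacity: product_2 is evicted
```

After these calls the cache holds `product_1` and `product_3`; `product_2` was evicted because it was the least recently touched entry.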

Communication Layer

The communication layer facilitates the interaction between cache clients, cache nodes, and the cache manager. It defines the protocols and mechanisms for data transfer and management.

- Protocols: This layer uses protocols like TCP, UDP, or HTTP for communication. The choice of protocol depends on factors like performance requirements, network characteristics, and the complexity of the data being transferred.

- Data Serialization: It handles the serialization and deserialization of data, converting data structures into a format suitable for transmission over the network. Common serialization formats include JSON, Protocol Buffers, and MessagePack.

- Network Topology: It manages the network topology and ensures that clients and nodes can communicate effectively, often using techniques like load balancing and service discovery.

- Example: When a cache client requests data, the communication layer might use HTTP to send the request to the cache manager, which then forwards the request to the appropriate cache node. The data is serialized into JSON format before being transmitted over the network.
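As a rough illustration of the serialization step, the sketch below converts a cache value to JSON bytes for transmission and back again. The helper names `encode_entry` and `decode_entry` are assumptions for this example; real client libraries typically hide this step behind their own API.

```python
import json


def encode_entry(value: dict) -> bytes:
    """Serialize a cache value to compact, key-sorted JSON bytes."""
    return json.dumps(value, separators=(",", ":"), sort_keys=True).encode("utf-8")


def decode_entry(payload: bytes) -> dict:
    """Deserialize bytes received from a cache node back into a dict."""
    return json.loads(payload.decode("utf-8"))


session = {"user_id": 123, "cart": ["sku-1", "sku-2"]}
wire_bytes = encode_entry(session)   # what would travel over the network
restored = decode_entry(wire_bytes)  # what the receiving side reconstructs
```

JSON is easy to inspect but relatively verbose; binary formats such as Protocol Buffers or MessagePack trade readability for smaller payloads.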

Data Storage (Within Nodes)

Within each cache node, data is stored using specific data structures and storage mechanisms.

- In-Memory Storage: The most common approach is to store data in RAM for fast access. This is typically achieved using hash tables or other data structures optimized for lookups.

- Disk-Based Storage: For larger datasets or when persistence is required, data can be stored on disk. This often involves using a database or a file system.

- Data Structures: The choice of data structures depends on the data access patterns. Hash tables are ideal for key-value lookups, while other structures like sorted sets or Bloom filters may be used for specific use cases.

- Example: A cache node might use a hash table to store cached user session data. The key would be the user’s session ID, and the value would be the user’s session information. This allows for very fast retrieval of user session data based on the session ID.

Data Distribution Strategies

Distributing data effectively across the nodes of a distributed cache is crucial for performance, scalability, and fault tolerance. The choice of a data distribution strategy significantly impacts how data is located, retrieved, and managed within the cache. Several methods exist, each with its own trade-offs in terms of complexity, efficiency, and resilience to node failures.

Data Distribution Methods

Various methods are employed to distribute data across cache nodes, each with different characteristics and implications. These strategies aim to ensure data is spread evenly, minimizing hotspots and maximizing cache efficiency.

- Hashing-based Distribution: This is a common approach where a hash function is used to map data keys to specific cache nodes. The hash function takes the key as input and produces a hash value, which is then used to determine the node responsible for storing that data. The simplest form is to use the modulo operator (%) with the number of nodes. For example, if there are three nodes, the key’s hash value is calculated, and the result modulo 3 determines the node.

- Range-based Distribution: In this strategy, the data is divided into ranges, and each range is assigned to a specific cache node. This is often used in conjunction with a directory service or a lookup table that maps data ranges to node addresses. This method can be efficient for range queries but can be less flexible than hashing-based approaches when nodes are added or removed.

- Directory-based Distribution: A central directory or metadata store maintains information about the location of each data item. When a client needs to retrieve data, it first queries the directory to find the appropriate node. This approach offers flexibility in managing data distribution but introduces a single point of failure and can become a bottleneck if the directory is not designed for high performance and scalability.

- Consistent Hashing: Consistent hashing is a more advanced technique designed to minimize the impact of node additions and removals. It maps both data keys and cache nodes to the same hash space (usually a circular space). When a node is added or removed, only a small fraction of the data needs to be remapped, reducing the overhead associated with these operations.
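The weakness of plain modulo hashing can be seen with a short experiment. The sketch below assigns keys to nodes with `hash % node_count` and counts how many keys change nodes when the cluster grows from three nodes to four. The key names and the use of MD5 as a stable hash are assumptions made for illustration.

```python
import hashlib


def stable_hash(key: str) -> int:
    """Deterministic hash (Python's built-in hash() is randomized for strings)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def node_for(key: str, node_count: int) -> int:
    """Modulo placement: node index in the range [0, node_count)."""
    return stable_hash(key) % node_count


keys = [f"user:{i}" for i in range(10_000)]

# Count keys whose node changes when a fourth node is added.
moved = sum(1 for k in keys if node_for(k, 3) != node_for(k, 4))
moved_fraction = moved / len(keys)
print(f"keys remapped going from 3 to 4 nodes: {moved_fraction:.1%}")
```

With a well-mixed hash, roughly three quarters of the keys land on a different node, which is the large-scale remapping that consistent hashing is designed to avoid.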

Comparing Data Distribution Strategies

Each data distribution strategy presents different advantages and disadvantages. Choosing the right one depends on the specific requirements of the caching system, including data size, access patterns, and the need for high availability.

| Strategy | Advantages | Disadvantages |
|---|---|---|
| Hashing-based | Simple to implement, good data distribution (if the hash function is good). | Adding or removing a node remaps most keys, potential for uneven distribution with poor hash functions. |
| Range-based | Efficient for range queries. | Less flexible when nodes are added or removed, can lead to hotspots. |
| Directory-based | Provides flexibility in data placement. | Single point of failure, potential bottleneck. |
| Consistent Hashing | Minimizes data remapping on node changes, good for dynamic environments. | More complex to implement than simple hashing. |

Scenario: Locating Data with Consistent Hashing

Consistent hashing is a robust strategy that minimizes data movement when nodes are added or removed. Let’s illustrate how it works with an example.

Setup: Imagine a distributed cache with three nodes (Node A, Node B, and Node C). A consistent hash ring is created, where both the nodes and the data keys are mapped to points on the ring. The hash function distributes both the nodes and data keys evenly around the ring.

Data Placement: A data key, “user:123”, is hashed to a point on the ring. The cache node responsible for storing “user:123” is the first node encountered clockwise from the key’s position on the ring. For example, if “user:123” hashes to a point between Node B and Node C, then Node C will store the data.

Node Addition: Suppose a new node, Node D, is added to the cache. Node D is also hashed and placed on the ring. Only the data keys whose positions fall between Node D’s counterclockwise neighbor and Node D itself need to be remapped to Node D; they previously belonged to the next node clockwise from D (e.g., Node C). The vast majority of data remains unaffected, minimizing disruption.

Node Removal: If Node B fails, the keys stored on Node B are remapped to the next node clockwise from B (Node C in the original three-node ring). Keys owned by other nodes do not move. Unless the entries were replicated, the reassigned keys are repopulated from the primary data source as they are requested again.

Illustrative Example:

Imagine the hash ring with nodes A, B, and C positioned in that order clockwise. Key “user:123” hashes to a point between B and C, so it’s stored on C. When D is added between B and C, only the keys that fall between B and D are remapped from C to D; keys between D and C stay on C, and all other data remains on its original node. If B then fails, the keys assigned to B are reassigned to the next node clockwise from B, which is now D (it would have been C before D joined).

This scenario demonstrates how consistent hashing minimizes data migration during node changes, ensuring high availability and performance in a dynamic distributed cache environment. The example shows a simplified version, and in real-world implementations, virtual nodes (several ring positions per physical node) are often used to improve data distribution. Not every system uses a hash ring, however. Redis Cluster, for instance, takes a related but different approach: it maps each key to one of 16,384 fixed hash slots (CRC16 of the key modulo 16384) and assigns slots to nodes, which likewise limits data movement during resharding and supports automatic failover.
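The ring scenario above can be sketched in a few lines. This is a minimal illustration, assuming MD5 as the ring hash and 100 virtual nodes per physical node; `HashRing` and the node names are hypothetical, and production implementations differ in many details.

```python
import bisect
import hashlib


def ring_hash(value: str) -> int:
    """Map a string to a position on the ring (a 64-bit integer)."""
    return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Consistent hash ring with virtual nodes."""

    def __init__(self, nodes=(), vnodes: int = 100):
        self.vnodes = vnodes
        self._points = []   # sorted ring positions
        self._owner = {}    # ring position -> physical node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each physical node occupies several positions to even out the load.
        for i in range(self.vnodes):
            point = ring_hash(f"{node}#{i}")
            self._owner[point] = node
            bisect.insort(self._points, point)

    def remove_node(self, node: str) -> None:
        self._points = [p for p in self._points if self._owner[p] != node]
        self._owner = {p: n for p, n in self._owner.items() if n != node}

    def node_for(self, key: str) -> str:
        if not self._points:
            raise LookupError("ring is empty")
        # First position clockwise from the key, wrapping around at the end.
        idx = bisect.bisect_right(self._points, ring_hash(key)) % len(self._points)
        return self._owner[self._points[idx]]


keys = [f"user:{i}" for i in range(10_000)]
ring = HashRing(["node-a", "node-b", "node-c"])
before = {k: ring.node_for(k) for k in keys}

ring.add_node("node-d")
after = {k: ring.node_for(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
print(f"keys moved after adding node-d: {len(moved) / len(keys):.1%}")
```

In this sketch every key that moves ends up on the new node, and the moved share is close to one quarter rather than the much larger share that modulo placement would remap. Removing `node-d` again restores the original assignment.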

Benefits

Distributed caching offers significant advantages, particularly in enhancing system scalability and performance. By strategically distributing data across multiple nodes, distributed caching architectures can accommodate increasing workloads and reduce the time it takes to retrieve information. This leads to a more responsive and efficient system.

Scalability and Performance

Distributed caching fundamentally improves a system’s ability to scale and perform efficiently. It does this by distributing the cache across multiple servers, which enables the system to handle a larger volume of requests and data. This architecture is designed to meet the evolving needs of modern applications.

Scalability

Distributed caching provides superior scalability by distributing the caching load across multiple servers. This means that as the demand for data increases, more servers can be added to the cache cluster, thereby increasing the overall capacity and processing power of the system. This horizontal scaling approach contrasts with the limitations of single-server caching, which can quickly become a bottleneck as the workload grows.

Performance Enhancement

Distributed caching dramatically enhances performance, primarily by reducing latency and improving throughput. By storing frequently accessed data closer to the users or applications, the time required to retrieve this data is significantly reduced. This leads to faster response times and a more responsive user experience.

For example, consider a social media platform. Without distributed caching, each request for a user’s profile information might require querying a database. With distributed caching, the profile information is stored in the cache, allowing for much faster retrieval.

To illustrate the performance gains, let’s compare read and write operations with and without distributed caching:

| Operation | Without Distributed Caching | With Distributed Caching | Explanation |
|---|---|---|---|
| Read Operation (e.g., retrieving user profile) | Database query (e.g., 100ms) | Cache lookup (e.g., 10ms) | With distributed caching, the data is retrieved from the cache, which is significantly faster than querying the database. |
| Write Operation (e.g., updating user status) | Database write (e.g., 50ms) | Cache update (e.g., 10ms) + Database write (e.g., 50ms, asynchronous) | Distributed caching can improve write performance by updating the cache first and then asynchronously updating the database. |

The table uses illustrative figures, but it shows where the gains come from. Reads benefit most: a cache hit replaces a database query with a much faster lookup. Writes benefit only when the cache is updated first and the database is updated asynchronously (a write-behind strategy), because the client then waits for the cache update rather than the database write. The total work does not shrink, and write-behind introduces a window in which a cache failure can lose updates that have not yet reached the database. The reduction in perceived latency still contributes directly to improved application performance and a better user experience.
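How much reads improve depends on the cache hit ratio. The sketch below computes the average read latency from the table's illustrative figures (10 ms cache lookup, 100 ms database query), assuming that a miss pays for both the failed cache lookup and the database query. The function name and the cost model are assumptions for illustration.

```python
def expected_read_latency_ms(hit_ratio: float,
                             cache_ms: float = 10.0,
                             db_ms: float = 100.0) -> float:
    """Average read latency for a given cache hit ratio.

    A hit costs one cache lookup; a miss costs the cache lookup
    plus the database query (simplified model).
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    miss_cost = cache_ms + db_ms
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * miss_cost


for ratio in (0.0, 0.5, 0.9, 0.99):
    print(f"hit ratio {ratio:.2f}: {expected_read_latency_ms(ratio):.1f} ms")
```

Under this model a 90% hit ratio gives an average of about 20 ms, while a cache that never hits is slower than no cache at all (110 ms versus 100 ms), which is why the hit ratio is worth monitoring.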

Benefits

Distributed caching offers a multitude of advantages, significantly enhancing system performance, scalability, and resilience. Beyond improved speed and reduced latency, these systems provide critical benefits related to availability and fault tolerance, ensuring continuous operation even in the face of failures.

High Availability and Fault Tolerance

Distributed caches are engineered to provide high availability and fault tolerance, crucial characteristics for maintaining continuous service and data integrity. They achieve this through redundancy, replication, and intelligent failover mechanisms. These features ensure that the system can withstand component failures without significant disruption.

High availability is primarily achieved through data replication and the distribution of cache nodes. Data is often replicated across multiple nodes, meaning that if one node fails, the data is still accessible from other nodes. This redundancy eliminates single points of failure. Load balancing distributes client requests across multiple cache nodes, further preventing any single node from becoming overloaded and failing. The distributed nature of the cache allows the system to continue operating even if some nodes are unavailable.

Fault tolerance is a key design principle in distributed caching. It is achieved through a combination of techniques, including:

- Data Replication: Data is replicated across multiple nodes. This ensures that if one node fails, the data is still available on other nodes. Replication strategies can vary, including synchronous replication (where a write is acknowledged only after the replicas have applied it) and asynchronous replication (where the write is acknowledged first and reaches the replicas after a short delay).

- Automatic Failover: If a node fails, the system automatically redirects requests to a healthy node. This process is often managed by a cluster management system that monitors the health of each node.

- Node Monitoring: Systems continuously monitor the health of cache nodes. If a node becomes unresponsive or exhibits performance issues, the system can take proactive measures, such as removing it from the active pool or triggering a failover.

- Data Partitioning: Data is partitioned across multiple nodes, so each node is responsible for a subset of the overall data. This reduces the impact of a single node failure, as only a portion of the data is affected.

Consider a scenario where a distributed cache, implemented with Redis, has three nodes (Node A, Node B, and Node C) and uses a master-slave replication setup. Data is replicated from the master (Node A) to the slaves (Node B and Node C).

- Initial State: All three nodes are operational, and Node A is the master, handling all write operations. Nodes B and C are slaves, replicating data from Node A.

- Node Failure: Node A, the master, experiences a hardware failure and becomes unavailable.

- Detection and Failover: The monitoring system detects the failure of Node A. The system then promotes one of the slave nodes (e.g., Node B) to become the new master.

- Client Redirection: Clients that were previously connected to Node A are automatically redirected to Node B (the new master).

- Data Consistency: Node B, now the master, continues to serve client requests. Data is still available due to replication. Depending on the replication strategy, there might be a small window of data loss if asynchronous replication was used, but the system continues to operate.

- Recovery: Once Node A is repaired, it can be re-integrated into the cluster as a slave node, re-establishing the replication setup.
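The failover sequence above can be modeled with a toy simulation. This sketch is not how Redis implements replication or failover; it assumes synchronous copying to every healthy node and a trivial promotion rule, and `ReplicatedCache` is a hypothetical class. The terms primary and replica correspond to master and slave in the scenario.

```python
class ReplicatedCache:
    """Toy primary/replica group used to illustrate failover."""

    def __init__(self, node_names):
        self.stores = {name: {} for name in node_names}  # per-node data
        self.healthy = set(node_names)
        self.primary = node_names[0]

    def write(self, key, value):
        if self.primary not in self.healthy:
            raise RuntimeError("no primary available")
        # Simplifying assumption: every healthy node is updated synchronously.
        for name in self.healthy:
            self.stores[name][key] = value

    def read(self, key):
        return self.stores[self.primary].get(key)

    def mark_failed(self, name):
        """Called by a (hypothetical) monitor when a node stops responding."""
        self.healthy.discard(name)
        if name == self.primary:
            self._promote()

    def _promote(self):
        candidates = sorted(self.healthy)
        if not candidates:
            raise RuntimeError("no healthy replica to promote")
        self.primary = candidates[0]  # trivial rule: first healthy node by name

    def rejoin(self, name):
        # A repaired node copies the current primary's data and returns as a replica.
        self.stores[name] = dict(self.stores[self.primary])
        self.healthy.add(name)


group = ReplicatedCache(["node-a", "node-b", "node-c"])
group.write("user:1", "alice")
group.mark_failed("node-a")      # primary fails, node-b is promoted
group.write("user:2", "bob")     # writes continue on the new primary
group.rejoin("node-a")           # node-a returns as a replica
```

Because the sketch replicates synchronously, no write is lost at failover. With asynchronous replication, writes acknowledged by the old primary but not yet copied would be missing on the promoted node, which is the small window of data loss mentioned above.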

Benefits

Distributed caching offers several advantages that can significantly improve application performance, scalability, and resilience. These benefits extend beyond performance, encompassing cost efficiency, which is a crucial factor for businesses of all sizes.

Cost Efficiency

Implementing a distributed cache can lead to substantial cost savings in infrastructure. By reducing the load on primary data stores and optimizing resource utilization, businesses can avoid unnecessary expenses. The factors that contribute to cost efficiency include:

- Reduced Database Load: Caching frequently accessed data minimizes the number of requests to the primary database. This, in turn, reduces the need for expensive database resources, such as more powerful servers, additional database instances, and increased storage capacity. The less strain on the database, the less you need to spend on it.

- Lower Server Costs: By caching data closer to the application, the application servers can handle a greater volume of requests with fewer resources. This can lead to a reduction in the number of application servers required, thereby lowering hardware, hosting, and operational costs.

- Optimized Resource Utilization: Distributed caches allow for more efficient use of existing infrastructure. By offloading tasks from the primary data stores and application servers, businesses can make better use of their existing resources and avoid unnecessary upgrades.

- Decreased Bandwidth Costs: Caching frequently accessed data reduces the amount of data that needs to be transferred across the network. This can lead to significant savings in bandwidth costs, especially for applications that handle large volumes of data.

To illustrate the cost savings, consider a simplified comparison of infrastructure costs with and without a distributed cache:

| Component | Without Distributed Cache | With Distributed Cache | Cost Difference |
|---|---|---|---|
| Database Server (e.g., CPU, RAM, Storage) | High (due to increased load) | Lower (reduced load) | Significant Savings |
| Application Servers (Number and Resources) | High (to handle increased database load) | Lower (cached data reduces server load) | Savings |
| Bandwidth Usage | High (more data transferred from database) | Lower (cached data reduces transfer) | Savings |
| Overall Infrastructure Costs | Higher | Lower | Significant Savings |

For example, a large e-commerce platform, using a distributed cache like Redis, might experience a 30-40% reduction in database server costs and a 15-25% reduction in application server costs, depending on the application’s specific usage patterns and data access frequency. This translates into substantial savings over time. This kind of cost efficiency makes distributed caching an attractive solution for businesses looking to optimize their infrastructure spending.

Use Cases

Distributed caching plays a pivotal role in enhancing the performance, scalability, and overall user experience of web applications. By storing frequently accessed data closer to the users, distributed caching reduces latency, minimizes database load, and ensures a more responsive application. Its application spans a wide array of scenarios, making it a fundamental component of modern web application architectures.

Web Application Performance Improvement Examples

Web applications often experience performance bottlenecks due to the high volume of requests they handle. Distributed caching mitigates these issues by storing frequently accessed data in a distributed cache, thereby reducing the load on backend databases and accelerating response times.

For example, consider an e-commerce website. Without caching, every time a user browses a product page, the application might need to query the database for product details, pricing, and availability. This can be slow, especially during peak traffic periods. However, with distributed caching, the product information can be stored in the cache. When a user requests a product page, the application first checks the cache. If the information is available (a cache hit), it is served directly from the cache, resulting in significantly faster loading times. If the information is not available (a cache miss), the application retrieves it from the database and then stores it in the cache for future requests.

Another example involves news websites. News articles, especially popular ones, are accessed frequently. Storing these articles in a distributed cache allows the website to serve them quickly to a large number of users, without overwhelming the database. This leads to a better user experience and can handle large traffic spikes without performance degradation.

Caching Frequently Accessed Data Implementation

Implementing caching for frequently accessed data like user profiles and session information is crucial for web application performance. Here’s how it’s typically done:

- User Profiles: User profile data, including details like name, email, preferences, and recent activity, is frequently accessed.

  - Cache Strategy: Cache user profile data using the user’s unique identifier (e.g., user ID) as the key. The cache entry can be updated whenever the user profile is modified.

  - Implementation: Upon user login, fetch the user profile from the database and store it in the distributed cache. Subsequent requests for the user profile can be served directly from the cache. When the user updates their profile, update the cache entry with the new information.

- Session Information: Session information, such as user authentication tokens and shopping cart contents, is essential for maintaining user state.

  - Cache Strategy: Store session data in the distributed cache, using the session ID as the key. This ensures that user sessions are maintained even when the application scales across multiple servers.

  - Implementation: When a user logs in, generate a session ID and store the session data (e.g., authentication token) in the cache. For each subsequent request, the application retrieves the session data from the cache using the session ID. This eliminates the need to repeatedly query the database for session information. Expire the session data after a certain period of inactivity.

- Data Freshness Considerations:

  - Cache Invalidation: Implement cache invalidation strategies to ensure data freshness. When data in the database changes, the corresponding cache entries must be invalidated (removed or updated).

  - Time-to-Live (TTL): Set a time-to-live (TTL) for cache entries. After the TTL expires, the cache entry is automatically removed, and the next request will fetch the data from the database and refresh the cache.
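The two freshness mechanisms above, TTL expiry and explicit invalidation, can be sketched together. This is a single-process illustration with a hypothetical `TTLCache` class; the injectable `clock` parameter is an assumption that makes expiry easy to demonstrate without waiting.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after a fixed lifetime."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self._clock = clock   # injectable so expiry can be simulated
        self._items = {}      # key -> (value, expires_at)

    def set(self, key, value):
        self._items[key] = (value, self._clock() + self.ttl)

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]   # expired: behave like a cache miss
            return None
        return value

    def invalidate(self, key):
        """Remove an entry immediately, e.g. after the database row changes."""
        self._items.pop(key, None)


now = [0.0]                                  # simulated clock, in seconds
cache = TTLCache(ttl_seconds=30, clock=lambda: now[0])

cache.set("user:7", {"name": "Lin"})
fresh = cache.get("user:7")                  # within the TTL: returned

now[0] = 31.0
expired = cache.get("user:7")                # past the TTL: treated as a miss

cache.set("user:7", {"name": "Lin"})
cache.invalidate("user:7")                   # profile changed in the database
after_invalidate = cache.get("user:7")       # miss, so the next read reloads it
```

TTL bounds how stale an entry can become even if an invalidation is missed, while explicit invalidation removes known-stale entries right away; many deployments combine both.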

Use Cases

Distributed caching finds significant application across various domains, offering substantial performance improvements and cost savings. One particularly impactful area is database optimization, where caching strategies can dramatically alter the efficiency and responsiveness of database systems.

Databases

Databases are often the bottleneck in application performance. Distributed caching provides a powerful mechanism to alleviate this, significantly enhancing both read and write operations.

Caching databases helps reduce the load on the database servers and improve response times for users. This is achieved by storing frequently accessed data in the cache, which is much faster to access than retrieving it from the database. The database then only needs to handle requests for data not present in the cache or for write operations, reducing its workload.

Here’s a scenario where a distributed cache is used to cache database query results:

A popular e-commerce website experiences high traffic, especially during peak hours. Users frequently browse product catalogs and view product details. The database, storing product information, becomes a performance bottleneck. To address this, a distributed cache is implemented in front of the database. When a user requests product details, the application first checks the cache.

- If the product details are found in the cache (a cache hit), the application retrieves the data from the cache and returns it to the user, which is extremely fast.

- If the product details are not in the cache (a cache miss), the application queries the database. The database returns the product details.

- The application then stores the query result in the cache, setting an appropriate time-to-live (TTL) to ensure data freshness. This way, subsequent requests for the same product details will be served from the cache.

This caching strategy significantly reduces the load on the database. As the cache is populated with frequently accessed product data, the database experiences fewer queries, resulting in faster response times for all users. This leads to a better user experience, increased sales, and improved overall system performance. For example, a study by a large e-commerce company showed that implementing a distributed cache reduced database query times by up to 80% and increased website throughput by 30% during peak traffic.

Implementation Technologies

Implementing a distributed cache involves choosing from a variety of technologies, each with its strengths and weaknesses. The selection process hinges on factors such as performance requirements, data consistency needs, and operational complexity. This section explores popular distributed caching technologies, compares their features, and provides a practical guide to setting up a basic cache.

Popular Distributed Caching Technologies

Several technologies dominate the distributed caching landscape, offering varying levels of performance, scalability, and feature sets. Understanding the characteristics of each technology allows for informed decision-making based on specific application needs.

- Redis: Redis is an in-memory data store often used as a distributed cache. It supports a wide range of data structures, including strings, hashes, lists, sets, and sorted sets. Redis is known for its high performance and low latency, making it suitable for caching frequently accessed data. It also provides features like replication, persistence, and clustering.

- Memcached: Memcached is a high-performance, distributed memory object caching system. It’s designed to be simple and easy to use, focusing primarily on key-value storage. Memcached is well-suited for caching data that doesn’t require complex data structures or persistence. It excels in scenarios demanding high throughput and low latency.

- Apache Ignite: Apache Ignite is an in-memory computing platform that provides distributed caching, data grid, and streaming capabilities. It supports ACID transactions, SQL queries, and continuous queries. Ignite is a good choice for applications that require complex data processing and high availability.

- Hazelcast: Hazelcast is a distributed in-memory data grid that offers caching, data processing, and messaging features. It’s known for its ease of use and scalability. Hazelcast supports various data structures and provides features like data partitioning and failover.

Comparing Caching Technologies

Choosing the right caching technology requires a comparison of their features and capabilities. This comparison considers key aspects such as data structures supported, performance characteristics, and management overhead.

| Feature | Redis | Memcached | Apache Ignite | Hazelcast |
|---|---|---|---|---|
| Data Structures | Strings, Hashes, Lists, Sets, Sorted Sets | Key-Value | Key-Value, SQL-based Data Grid | Key-Value, Collections, Maps |
| Persistence | Yes (RDB and AOF) | No | Yes (optional) | Yes (optional) |
| Clustering | Yes | Yes (client-side) | Yes | Yes |
| Performance | High | Very High | High | High |
| Complexity | Moderate | Low | High | Moderate |
| Transactions | Yes (limited) | No | Yes (ACID) | Yes |

The table provides a high-level comparison; specific performance characteristics will depend on the hardware, data size, and workload.

Setting up a Basic Distributed Cache with Redis

Setting up a basic distributed cache using Redis involves several steps. This guide assumes a basic understanding of command-line interfaces and network configuration.

- Installation: Install Redis on a server. This typically involves downloading the Redis package and following the installation instructions for the operating system. For example, on Debian/Ubuntu, you can use `sudo apt-get update && sudo apt-get install redis-server`.

- Configuration: Configure Redis. The default configuration often works well for basic setups. However, consider modifying the `redis.conf` file to adjust settings like the port number, the maximum memory usage, and persistence options (RDB or AOF).

- Start the Redis Server: Start the Redis server using the appropriate command for your operating system. Typically, this involves running `redis-server` or using a service manager like systemd (e.g., `sudo systemctl start redis-server`).

- Connect to Redis: Connect to the Redis server using the Redis CLI (command-line interface). You can connect by running `redis-cli` in your terminal.

- Set and Get Data: Use the Redis CLI to set and retrieve data. For example, to set a key-value pair: `SET mykey "Hello Redis"`. To retrieve the value: `GET mykey`.

- Integrate with Application: Integrate Redis with your application code. Use a Redis client library specific to your programming language (e.g., `redis-py` for Python, `Jedis` for Java). The client library provides APIs to connect to the Redis server, set, get, and manage data.
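As a rough sketch of the integration step, the helpers below store and read JSON values through any client that exposes `get(name)` and `set(name, value, ex=...)`, which are the method shapes redis-py's `Redis` class provides. So that the snippet runs without a server, it uses `InMemoryClient`, a hypothetical stand-in; `cache_json` and `read_json` are likewise names invented for this example.

```python
import json


class InMemoryClient:
    """Hypothetical stand-in exposing the two client methods used below."""

    def __init__(self):
        self._data = {}

    def get(self, name):
        return self._data.get(name)

    def set(self, name, value, ex=None):
        # A real Redis server would expire the key after `ex` seconds;
        # this stand-in accepts the argument but does not expire anything.
        self._data[name] = value
        return True


def cache_json(client, key, obj, ttl_seconds=60):
    """Serialize obj to JSON and store it under key with a TTL."""
    client.set(key, json.dumps(obj), ex=ttl_seconds)


def read_json(client, key):
    """Return the decoded object, or None when the key is absent."""
    raw = client.get(key)
    return None if raw is None else json.loads(raw)
```

With redis-py installed and a server running locally, passing `redis.Redis(host="localhost", port=6379)` in place of `InMemoryClient()` should behave the same way, with the server enforcing the expiry.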

Challenges and Considerations

Implementing and managing a distributed cache presents a unique set of challenges. Successfully navigating these requires careful planning, consideration of various factors, and adherence to best practices. This section outlines the key challenges, crucial considerations, and best practices for maintaining data consistency.

Challenges of Distributed Caching

Several complexities arise when deploying and managing a distributed caching system. These challenges can significantly impact performance, reliability, and operational overhead.

Data Consistency

Ensuring data consistency across multiple cache nodes is a fundamental challenge. Maintaining the same data across all caches while handling updates and deletions requires sophisticated strategies to avoid stale data.

Cache Invalidation

Efficiently invalidating outdated cache entries is crucial. Incorrect or delayed invalidation can lead to users receiving stale information. Implementations must balance timeliness and the overhead of invalidation.

Network Latency

Distributed caches are inherently affected by network latency. Communication between cache nodes and clients can introduce delays, potentially impacting application performance. Network topology and proximity of cache nodes to clients are critical considerations.

Cache Misses

Managing cache misses, which occur when data is not found in the cache, is essential. Frequent cache misses can degrade performance as the system must retrieve data from the underlying data store. Effective cache sizing and pre-warming strategies are crucial.

Fault Tolerance

Ensuring the cache system is resilient to node failures is paramount. Design considerations must include redundancy and failover mechanisms to maintain availability and prevent data loss.

Scalability

Scaling a distributed cache to meet increasing traffic demands is a constant challenge. Systems must be designed to scale horizontally, allowing for the addition of new cache nodes without significant downtime or performance degradation.

Complexity

Implementing and managing a distributed cache is inherently more complex than a single-node cache. The distributed nature of the system introduces additional operational overhead, including monitoring, maintenance, and troubleshooting.

Security

Securing the cache against unauthorized access is vital. Implementing appropriate authentication, authorization, and encryption mechanisms is necessary to protect sensitive data stored in the cache.

Considerations for Choosing a Distributed Caching Solution

Selecting the appropriate distributed caching solution involves careful evaluation of several factors to ensure it aligns with the specific needs of the application and infrastructure.

Performance Requirements

The performance requirements of the application, including read and write speeds, must be considered. Different caching solutions offer varying performance characteristics.

Data Size and Volume

The size and volume of data to be cached influence the choice of solution. Solutions must be capable of handling the expected data volume and scale accordingly.

Data Consistency Needs

The level of data consistency required determines the choice of caching strategy. Stronger consistency models often come at the cost of performance.

Scalability Requirements

The anticipated growth in traffic and data volume dictates the scalability requirements of the caching solution. The chosen solution should support horizontal scaling.

Fault Tolerance Requirements

The need for high availability and fault tolerance influences the selection. Solutions should provide built-in redundancy and failover mechanisms.

Cost Considerations

The total cost of ownership, including hardware, software, and operational expenses, must be evaluated. Open-source solutions may offer cost advantages over proprietary solutions.

Integration Capabilities

The ease of integration with existing infrastructure and applications is essential. Consider the available APIs, client libraries, and compatibility with existing systems.

Maintenance and Operational Overhead

The complexity of managing and maintaining the caching solution should be considered. Simpler solutions often reduce operational overhead.

Security Requirements

Security requirements, including data encryption, access control, and compliance considerations, must be evaluated.

Best Practices for Maintaining Data Consistency

Maintaining data consistency is critical for the reliability of a distributed cache. The following best practices help ensure data accuracy across the system.

Use a Consistent Hashing Algorithm

Employ a consistent hashing algorithm for data distribution across cache nodes. This helps minimize data movement during node additions or removals.
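To make the idea concrete, here is a minimal consistent hash ring, offered as a sketch rather than a reference implementation. The class name `ConsistentHashRing`, the use of SHA-256, and the default of 100 virtual nodes per server are all assumptions made for illustration; real client libraries differ in these details.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Maps keys to nodes so that adding a node only moves keys onto it."""

    def __init__(self, nodes=(), vnodes=100):
        self._vnodes = vnodes  # virtual nodes per server smooth the distribution
        self._ring = []        # sorted list of (hash, node) points on the ring
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value):
        digest = hashlib.sha256(value.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    def add_node(self, node):
        for i in range(self._vnodes):
            bisect.insort(self._ring, (self._hash(f"{node}#{i}"), node))

    def remove_node(self, node):
        self._ring = [(h, n) for h, n in self._ring if n != node]

    def get_node(self, key):
        if not self._ring:
            raise LookupError("hash ring has no nodes")
        # Find the first ring point at or after the key's hash, wrapping around.
        idx = bisect.bisect_left(self._ring, (self._hash(key),))
        return self._ring[idx % len(self._ring)][1]
```

When a fourth node joins a three-node ring, only the keys that now fall on the new node's ring points change owner; with simple modulo hashing (`hash(key) % node_count`), most keys would be remapped.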

Implement Cache Invalidation Strategies

Utilize effective cache invalidation strategies, such as time-to-live (TTL) and write-through/write-behind approaches, to ensure data freshness.
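One widely used invalidation approach is to delete the cached entry whenever the underlying record is written, so the next read repopulates it. The sketch below assumes plain dictionaries as stand-ins for both the data store and the cache; `update_product` and the `product:<id>` key format are hypothetical.

```python
def update_product(product_id, new_fields, db, cache):
    """Write to the source of truth first, then drop the cached copy."""
    current = db.get(product_id, {})
    db[product_id] = {**current, **new_fields}
    # Deleting (rather than updating) the entry means the next read
    # reloads the record from the data store and re-caches it.
    cache.pop(f"product:{product_id}", None)
```

Combining this with a TTL gives a safety net: if an invalidation is ever missed, the stale entry still expires on its own.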

Employ a Write-Through or Write-Behind Cache

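A write-through cache writes each update to both the cache and the underlying data store synchronously, so the write is acknowledged only after both succeed. A write-behind cache writes to the cache first and updates the data store asynchronously, which lowers write latency but risks losing queued updates if a cache node fails before they are flushed.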
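The difference between the two write policies can be illustrated with a small sketch. Both classes below are hypothetical, use a dictionary as the backing store, and leave out error handling; in a real write-behind setup the `flush` step would typically run on a background worker rather than being called by hand.

```python
from collections import deque


class WriteThroughCache:
    """Every put reaches the backing store before it returns."""

    def __init__(self, store):
        self.store = store
        self.cache = {}

    def put(self, key, value):
        self.store[key] = value  # synchronous write to the data store
        self.cache[key] = value


class WriteBehindCache:
    """Puts update the cache immediately and the store later."""

    def __init__(self, store):
        self.store = store
        self.cache = {}
        self._pending = deque()  # writes not yet applied to the store

    def put(self, key, value):
        self.cache[key] = value
        self._pending.append((key, value))

    def flush(self):
        """Apply queued writes in order and return how many were written."""
        flushed = 0
        while self._pending:
            key, value = self._pending.popleft()
            self.store[key] = value
            flushed += 1
        return flushed
```

Until `flush` runs, the write-behind cache and the store disagree, which is exactly the window in which a node failure could lose data.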

Use Versioning

Implement data versioning to track changes and ensure that the most up-to-date version of the data is served.
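A simple way to apply versioning is to store a version number next to each value and reject writes that carry an older one. The following is a sketch under the assumption that the data store supplies a monotonically increasing version per record; `VersionedCache` and `put_if_newer` are illustrative names.

```python
class VersionedCache:
    """Keeps only the newest version of each entry."""

    def __init__(self):
        self._entries = {}  # key -> (version, value)

    def put_if_newer(self, key, version, value):
        """Store value unless an equal or newer version is already cached."""
        current = self._entries.get(key)
        if current is not None and current[0] >= version:
            return False  # stale or duplicate update is ignored
        self._entries[key] = (version, value)
        return True

    def get(self, key):
        entry = self._entries.get(key)
        return None if entry is None else entry[1]
```

This protects against out-of-order updates, for example when a slow request tries to cache data it read before a newer write landed.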

Monitor Cache Health and Data Consistency

Continuously monitor the cache’s health, including cache hit rates, miss rates, and data consistency metrics.
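The hit rate is simply hits divided by total lookups. A minimal counter such as the hypothetical `CacheStats` below could be updated on every cache read and exported to whatever monitoring system is in use.

```python
class CacheStats:
    """Counts cache hits and misses and derives the hit ratio."""

    def __init__(self):
        self.hits = 0
        self.misses = 0

    def record(self, hit):
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    @property
    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

A falling hit ratio is often an early sign that the cache is undersized, that TTLs are too short, or that the access pattern has shifted.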

Implement Replication

Replicate data across multiple cache nodes to provide redundancy and improve availability.

Use Transactions (if applicable)

For operations that require atomicity, use transactions to ensure that all changes are applied consistently.

Regularly Review and Refine Consistency Strategies

Continuously evaluate and refine the consistency strategies based on performance metrics and application requirements.

Avoid Cache Stampedes

Prevent cache stampedes, where many requests simultaneously miss the cache and overload the data store, by using techniques such as a mutex or a distributed lock.
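The mutex technique can be sketched with a per-key lock: the first request that misses loads the value while the others wait, then read it from the cache. This example uses `threading.Lock`, so it only coordinates threads inside one process; coordinating several application servers would need a distributed lock instead. `SingleFlightLoader` and `load_from_store` are hypothetical names.

```python
import threading


class SingleFlightLoader:
    """Ensures only one caller per key loads a missing value."""

    def __init__(self, load_from_store):
        self._load = load_from_store
        self._cache = {}
        self._locks = {}
        self._locks_guard = threading.Lock()  # protects the lock table

    def _lock_for(self, key):
        with self._locks_guard:
            return self._locks.setdefault(key, threading.Lock())

    def get(self, key):
        value = self._cache.get(key)
        if value is not None:
            return value  # fast path: no locking on a hit
        with self._lock_for(key):
            # Re-check: another thread may have loaded it while we waited.
            value = self._cache.get(key)
            if value is None:
                value = self._load(key)
                self._cache[key] = value
            return value
```

The re-check inside the lock is what prevents the waiting requests from each querying the data store once the lock is released.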

Test Thoroughly

Conduct thorough testing to validate the data consistency strategies and ensure they meet the required levels of accuracy.

Summary

In conclusion, distributed caching emerges as a powerful solution for optimizing application performance, enhancing scalability, and reducing costs. By understanding its architecture, benefits, and implementation strategies, businesses can significantly improve user experience and achieve their performance goals. Embracing distributed caching is not just a technical advantage; it’s a strategic move toward building robust, efficient, and user-friendly digital experiences that can lead to business success.

Frequently Asked Questions

What is the main difference between a distributed cache and a CDN (Content Delivery Network)?

A distributed cache primarily focuses on caching dynamic content closer to the application servers, reducing database load and improving application response times. A CDN focuses on caching static content (images, CSS, JavaScript) at edge locations geographically closer to users to reduce latency.

How does a distributed cache handle data consistency?

Data consistency in a distributed cache is maintained through various strategies, including cache invalidation, time-to-live (TTL) settings, and versioning. When data changes, the cache entries are either updated or invalidated to ensure users receive the most up-to-date information.

What are the common pitfalls to avoid when implementing a distributed cache?

Common pitfalls include improperly configured cache invalidation, inadequate monitoring, and choosing the wrong caching strategy for your application. It is important to design for scalability, fault tolerance, and data consistency from the outset.

Can distributed caching replace a database?

No, a distributed cache is not a replacement for a database. It serves as a layer to improve database performance by storing frequently accessed data, reducing the load on the database. The database remains the source of truth for the data.