Serverless computing, popularized by AWS Lambda, lets developers run code without managing servers. Lambda’s automatic scaling and pay-per-use pricing have made it a cornerstone of many modern architectures. However, relying on a single cloud provider raises concerns about vendor lock-in and cost, and leaves teams with limited control over the underlying infrastructure.

This analysis examines the alternatives to AWS Lambda, their capabilities, and their suitability for different use cases.

It goes beyond feature comparisons to look at the architecture of each alternative, covering open-source platforms, other cloud providers’ offerings, container-based serverless solutions, and serverless frameworks. Cost optimization, performance, and security considerations are discussed along the way, so that developers and architects can make informed decisions about their serverless deployments.

Introduction: Understanding the Need for Alternatives to AWS Lambda

AWS Lambda is a serverless compute service that runs code without requiring developers to provision or manage servers. This introduction covers Lambda’s core functionality, its strengths, the challenges users face, and the scenarios in which alternatives become desirable.

Core Functionalities of AWS Lambda

AWS Lambda functions operate based on an event-driven model. These functions are triggered by various events from other AWS services or external sources.

- Event Sources: Lambda supports a wide array of event sources, including:

  - Amazon S3 (object creation/deletion)

  - Amazon DynamoDB (table updates)

  - Amazon API Gateway (HTTP requests)

  - Amazon Kinesis (data streams)

  - Amazon SNS (notifications)

  - Amazon CloudWatch (scheduled events and metrics)

  These event sources trigger the execution of Lambda functions, enabling a reactive and automated architecture.

- Execution Environment: Lambda provides a managed runtime environment. It supports multiple programming languages, including:

  - Node.js

  - Python

  - Java

  - Go

  - .NET

  - Ruby

  Developers can upload their code as a package (typically a ZIP archive or container image) and Lambda handles the underlying infrastructure, including scaling, patching, and security.

- Scalability and Concurrency: Lambda scales automatically with incoming events, provisioning and managing the compute resources needed to meet demand. It can run many executions concurrently, subject to per-account concurrency quotas.

- Cost Model: Lambda follows a pay-per-use model. Users are charged per request and for the compute time their functions consume, which is metered in milliseconds and scaled by the memory allocated. This can result in significant cost savings compared to traditional server-based deployments, particularly for applications with intermittent workloads.
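To make the event-driven model more concrete, here is a minimal sketch of a Node.js handler that inspects an incoming event and branches on the service that produced it. The record fields used (`eventSource`, `s3.bucket.name`, `Sns.Message`, `httpMethod`) follow the event shapes AWS documents for these sources; the `describeRecord` helper and the returned strings are purely illustrative assumptions.

```javascript
// Classify a single event record by the service that produced it.
// S3, DynamoDB and Kinesis records use `eventSource`; SNS uses `EventSource`.
function describeRecord(record) {
  const source = record.eventSource || record.EventSource;
  switch (source) {
    case "aws:s3":
      return `s3 object ${record.s3.bucket.name}/${record.s3.object.key}`;
    case "aws:sns":
      return `sns message ${record.Sns.Message}`;
    case "aws:dynamodb":
      return `dynamodb ${record.eventName}`;
    default:
      return `unhandled source ${source}`;
  }
}

// Lambda-style entry point: the triggering event arrives as a plain object.
const handler = async (event) => {
  if (Array.isArray(event.Records)) {
    // Batch-style sources deliver one or more records per invocation.
    return event.Records.map(describeRecord);
  }
  if (event.httpMethod) {
    // API Gateway (REST proxy integration) delivers one request per event.
    return [`http ${event.httpMethod} ${event.path}`];
  }
  return ["unknown event shape"];
};

exports.handler = handler;
```

In a real function the branches would call application logic rather than return strings, and many teams prefer one function per event source instead of a single dispatcher.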

Strengths of AWS Lambda

Lambda’s design offers several advantages that contribute to its popularity.

- Serverless Architecture: Lambda abstracts away the underlying infrastructure, allowing developers to focus on writing code rather than managing servers. This reduces operational overhead and simplifies deployment processes.

- Automatic Scaling: Lambda automatically scales based on demand, eliminating the need for manual capacity planning. This ensures that applications can handle fluctuations in traffic and maintain optimal performance.

- Pay-per-Use Pricing: Lambda’s pay-per-use pricing model minimizes costs by charging only for the compute time consumed. This is particularly beneficial for applications with unpredictable workloads or infrequent usage.

- Integration with AWS Services: Lambda seamlessly integrates with other AWS services, such as S3, DynamoDB, API Gateway, and CloudWatch. This facilitates the creation of event-driven architectures and simplifies the development of complex applications.

- Rapid Deployment and Iteration: Lambda enables rapid deployment and iteration cycles. Developers can quickly deploy code changes and test their functions without waiting for server provisioning or configuration.

Challenges with AWS Lambda

Despite its benefits, Lambda presents challenges for some users.

- Vendor Lock-in: Using Lambda ties applications to the AWS ecosystem. Migrating to another cloud provider or on-premise infrastructure can be complex and time-consuming due to the specific architecture and integrations.

- Cold Starts: Lambda functions can experience cold starts: the delay incurred when a new execution environment has to be initialized, for example when a function is invoked for the first time or after a period of inactivity. This can impact the responsiveness of applications, especially those with low latency requirements.

- Cost Concerns: While Lambda’s pay-per-use pricing can be cost-effective, it can also become expensive for applications with high traffic volumes or long-running functions. Monitoring and optimizing function performance and resource utilization are crucial to control costs.

- Limited Execution Time: Lambda functions have a maximum execution time limit (currently 15 minutes), which can restrict the types of workloads that can be supported.

- Complexity in Debugging and Monitoring: Debugging and monitoring Lambda functions can be challenging due to the distributed nature of serverless applications. Tools and techniques are required to trace execution flows, analyze logs, and identify performance bottlenecks.
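One common way to observe cold starts from inside a function is to rely on the fact that module-level code runs once per execution environment, while the handler runs once per invocation. The sketch below assumes a Node.js runtime; the field names in the returned object are hypothetical and would normally be written to logs or metrics instead.

```javascript
// Module scope runs once per execution environment, so this flag is true
// only for the first invocation served by a freshly started environment.
let isColdStart = true;
const environmentStartedAt = Date.now();

const handler = async (event) => {
  const coldStart = isColdStart;
  isColdStart = false; // every later invocation in this environment is warm

  return {
    coldStart,
    // How long this environment has been alive when the invocation ran.
    environmentAgeMs: Date.now() - environmentStartedAt,
  };
};

exports.handler = handler;
```

Logging the `coldStart` flag alongside request latency makes it possible to see how often cold starts occur for a given traffic pattern before deciding whether they justify moving to an alternative.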

Scenarios Where Alternatives to Lambda Become Attractive

Alternatives to Lambda become attractive in specific scenarios.

- Vendor Lock-in Avoidance: When organizations want to avoid vendor lock-in and maintain the flexibility to migrate to different cloud providers or on-premise infrastructure.

- High Performance and Low Latency Requirements: When applications require consistently low latency and fast response times, Lambda’s cold start times might not be acceptable.

- Long-Running Tasks: For applications that involve long-running processes or computationally intensive workloads that exceed Lambda’s execution time limits.

- Cost Optimization: When Lambda’s cost model is not cost-effective for the specific workload, alternative solutions might offer better pricing.

- Specific Language or Framework Requirements: When the required programming language or framework is not natively supported by Lambda, or the supported versions are outdated.

Open Source Serverless Platforms

Open-source serverless platforms are a compelling alternative to provider-specific services like AWS Lambda. They offer flexibility and control that managed services often lack, allowing deeper customization and direct visibility into the underlying infrastructure. This section explores the benefits of self-hosting serverless applications and examines several prominent open-source options.

Benefits of Open-Source Serverless Platforms

The primary advantage of opting for an open-source serverless platform lies in the increased flexibility and control it provides. This control extends to several key areas, including infrastructure, code execution environment, and deployment strategies.

- Vendor Independence: Avoiding vendor lock-in is a significant benefit. Self-hosted solutions allow users to migrate their applications between different cloud providers or even on-premises infrastructure without being tied to a specific platform’s ecosystem. This mitigates the risks associated with price changes, service outages, or changes in service offerings.

- Customization: Open-source platforms enable extensive customization of the runtime environment, libraries, and deployment pipelines. This is particularly valuable for specialized workloads or applications requiring specific dependencies or configurations that may not be readily available in managed services. For instance, a research team might need to incorporate a custom machine learning library that isn’t pre-installed in a managed serverless environment.

- Cost Control: While self-hosting can involve upfront infrastructure costs, it can potentially lead to greater cost control in the long run. Users have more direct visibility into resource utilization and can optimize their infrastructure to match their specific needs, potentially reducing costs compared to the often-opaque pricing models of managed serverless offerings.

- Enhanced Security and Compliance: Self-hosting allows for greater control over security policies and compliance requirements. Users can implement custom security measures, audit logs, and access controls that align with their specific organizational policies and regulatory needs. For example, organizations operating in highly regulated industries (e.g., finance, healthcare) often require stringent control over data security and compliance, which is often easier to achieve with self-hosted solutions.

Prominent Open-Source Serverless Platforms

Several open-source serverless platforms offer a viable alternative to AWS Lambda. These platforms provide varying feature sets and are designed to cater to diverse needs and use cases.

- Apache OpenWhisk: Apache OpenWhisk is a distributed serverless platform that supports multiple programming languages and triggers. It uses a container-based architecture, enabling developers to deploy functions as Docker containers. OpenWhisk is known for its scalability and its ability to handle high-volume workloads. A key feature is its support for event-driven programming, allowing functions to be triggered by various events, such as HTTP requests, database changes, or message queue updates.

- Kubeless: Kubeless is a Kubernetes-native serverless framework that simplifies the deployment and management of serverless functions on Kubernetes clusters. It leverages Kubernetes’ existing features, such as resource management, scaling, and networking, to provide a scalable serverless environment. Kubeless supports multiple programming languages and offers a straightforward deployment process. The primary advantage is its tight integration with Kubernetes, allowing users to reuse existing Kubernetes infrastructure and tooling. Note that the Kubeless repository has been archived and is no longer actively maintained, which matters when evaluating it for new projects.

- OpenFaaS: OpenFaaS is a serverless framework that simplifies the deployment of functions as Docker containers. It focuses on ease of use and developer productivity, supports multiple programming languages, and runs on various cloud providers as well as on-premises infrastructure. A key feature is its built-in API gateway and event connectors, which simplify the creation of serverless applications.
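As an illustration of what a function looks like on one of these platforms, the following is a minimal sketch of an Apache OpenWhisk action written for the Node.js runtime, where an action is a file that defines a `main` function taking a parameters object and returning a JSON-serializable object. The `name` parameter and the greeting text are assumptions made for the example.

```javascript
// OpenWhisk Node.js action: `main` receives the invocation parameters
// as an object and returns an object that becomes the action result.
function main(params) {
  const name = params.name || "world"; // hypothetical input parameter
  return { payload: `Hello, ${name}!` };
}
```

Assuming the file is saved as `hello.js` and the `wsk` CLI is configured against a running OpenWhisk deployment, it could be registered with `wsk action create hello hello.js` and invoked with `wsk action invoke hello --result --param name Ada`.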

Deployment and Management Comparison

The deployment process and management overhead vary considerably among open-source serverless platforms. Understanding these differences is crucial for selecting the platform that best aligns with specific requirements and available resources.

| Platform | Deployment Process | Management Overhead | Key Considerations |
|---|---|---|---|
| Apache OpenWhisk | Functions are packaged as Docker containers or using pre-built runtimes and deployed using the OpenWhisk CLI or API. Deployment often involves defining triggers and actions. | Requires managing the underlying infrastructure, including the database, message queue, and container orchestration system. Monitoring and logging require dedicated tools and configuration. | Scalability and distributed nature. Needs careful planning and configuration to optimize performance and resource utilization. |
| Kubeless | Functions are deployed directly to a Kubernetes cluster using the Kubeless CLI or Kubernetes manifests. Deployment leverages Kubernetes’ existing features for networking, scaling, and resource management. | Leverages Kubernetes’ existing infrastructure, reducing some of the management overhead. Requires expertise in Kubernetes administration and configuration. | Integration with Kubernetes offers significant advantages for users already familiar with Kubernetes. Requires a functional Kubernetes cluster. |
| OpenFaaS | Functions are deployed as Docker containers through the OpenFaaS CLI or UI. Deployment typically involves creating a function definition and packaging the code as a Docker image. | Relatively low management overhead compared to OpenWhisk, but still requires managing the underlying Docker container platform. Monitoring and logging are often simplified through built-in integrations. | Focus on ease of use and developer productivity. Simplified deployment and management compared to other platforms. Suitable for smaller projects and teams. |

Cloud Provider Alternatives

Lambda is not the only managed serverless platform: other cloud providers offer directly competing services. This section compares those alternatives in terms of feature sets, pricing models, and performance characteristics, so that developers and organizations can choose based on their specific needs and priorities.

Evaluating Competing Services

Several cloud providers offer serverless computing platforms that directly compete with AWS Lambda. These services provide similar functionality, enabling developers to execute code without managing servers. Understanding the nuances of each service is critical for selecting the optimal platform.

- Google Cloud Functions: Google Cloud Functions allows developers to execute event-driven functions. It integrates seamlessly with other Google Cloud services, such as Cloud Storage, Cloud Pub/Sub, and Cloud Firestore. It supports multiple programming languages, including Node.js, Python, Go, Java, .NET, and Ruby. A key feature is its tight integration with Google Cloud’s ecosystem, making it a strong choice for projects already leveraging these services.

- Azure Functions: Azure Functions, provided by Microsoft Azure, is a serverless compute service that enables developers to run event-triggered code without managing infrastructure. It supports a wide range of programming languages, including C#, F#, Node.js, Python, Java, and PowerShell. Azure Functions offers robust integration with other Azure services, such as Azure Blob Storage, Azure Cosmos DB, and Azure Event Hubs. A significant advantage is its strong support for the .NET ecosystem and its integration with Visual Studio.

- Oracle Functions: Oracle Functions is a fully managed, serverless Functions-as-a-Service (FaaS) platform built on the open-source Fn Project. It allows developers to deploy code without managing servers or infrastructure. It supports Java, Python, Node.js, Go, and Ruby. Oracle Functions offers integration with other Oracle Cloud Infrastructure (OCI) services. A noteworthy aspect is its focus on portability, leveraging the open-source Fn Project.

Comparing Pricing Models

Pricing models for serverless computing services vary significantly across providers. Understanding these differences is crucial for cost optimization. This section compares the pricing models of AWS Lambda, Google Cloud Functions, and Azure Functions. Oracle Functions is not included in the table; its costs also vary with usage and region.

| Feature | AWS Lambda | Google Cloud Functions | Azure Functions |
|---|---|---|---|
| Invocation Pricing | First 1 million requests free per month, then $0.0000002 per request | First 2 million invocations free per month, then $0.0000004 per invocation | First 1 million executions free per month, then $0.0000002 per execution |
| Compute Time Pricing | Based on duration (in milliseconds) and memory allocated | Based on compute time (in milliseconds), memory allocated, and CPU usage | Based on execution time (in seconds) and memory allocated |
| Free Tier | 1 million free requests per month, 400,000 GB-seconds of compute time | 2 million invocations, 400,000 GB-seconds of compute time | 1 million free executions, 400,000 GB-seconds of compute time |
| Other Charges | Data transfer, storage, and other service usage | Data transfer, storage, and other service usage | Data transfer, storage, and other service usage |

The pricing models often include free tiers and are based on a pay-per-use model, making them cost-effective for sporadic workloads. However, the specific costs can vary significantly depending on factors like region, memory allocation, and execution duration. It is essential to monitor usage and optimize code to minimize costs.
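The arithmetic behind these pricing models can be sketched as a small estimator. The request price and free-tier defaults below mirror the AWS Lambda column of the table; the per-GB-second compute price is not listed in the table, so it is a required input here and any value passed in is an assumption to be replaced with the provider's current regional rate. The function name and parameter names are hypothetical.

```javascript
// Estimate a monthly bill from request count, average duration and memory.
// GB-seconds = invocations x duration in seconds x memory in GB.
function estimateMonthlyCost({
  invocations,
  avgDurationMs,
  memoryMb,
  pricePerGbSecond, // assumption: look this up for your provider and region
  pricePerRequest = 0.0000002, // Lambda column of the table above
  freeRequests = 1_000_000,
  freeGbSeconds = 400_000,
}) {
  const gbSeconds = invocations * (avgDurationMs / 1000) * (memoryMb / 1024);

  // Only usage beyond the free tier is billed.
  const billableRequests = Math.max(0, invocations - freeRequests);
  const billableGbSeconds = Math.max(0, gbSeconds - freeGbSeconds);

  const requestCost = billableRequests * pricePerRequest;
  const computeCost = billableGbSeconds * pricePerGbSecond;
  return { gbSeconds, requestCost, computeCost, total: requestCost + computeCost };
}
```

For example, 3 million invocations per month at 200 ms and 512 MB come to 300,000 GB-seconds, which stays inside the 400,000 GB-second free tier, so only the 2 million requests beyond the free million are billed (about $0.40 at the listed request price). Doubling the memory or duration pushes the workload past the free tier, at which point the compute price dominates.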

Comparative Analysis of Scalability and Performance

Scalability and performance are critical factors in serverless computing. These characteristics determine how well a service can handle varying workloads and the speed at which functions execute. This section examines the scalability and performance of AWS Lambda, Google Cloud Functions, and Azure Functions.

- Scalability: All three services are designed to scale automatically based on demand. They can handle a large number of concurrent requests without requiring manual intervention.

- Performance: Performance can vary based on factors such as code optimization, memory allocation, and the underlying infrastructure.

- Cold Starts: Cold starts, the time it takes for a function to initialize when invoked for the first time, can impact performance. The frequency and duration of cold starts vary across providers and can be influenced by the programming language, function size, and other factors.

Benchmarking and real-world testing are essential to determine the performance characteristics of each service for a specific workload. The choice of programming language and the optimization of the code also significantly influence performance. For example, Java functions often experience longer cold start times compared to Node.js or Python functions.
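A benchmark of this kind can be as simple as calling the deployed function repeatedly and summarizing the observed latencies. The sketch below is one possible harness, not a rigorous load test: `invoke` is a hypothetical async function supplied by the caller (for example, one that sends an HTTP request to the function's endpoint), and the percentile uses the nearest-rank method.

```javascript
const { performance } = require("perf_hooks");

// Nearest-rank percentile over an ascending-sorted array of numbers.
function percentile(sorted, p) {
  if (sorted.length === 0) throw new Error("no samples");
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// Call `invoke` sequentially `runs` times and summarize the latencies.
async function benchmark(invoke, runs = 20) {
  const samples = [];
  for (let i = 0; i < runs; i++) {
    const start = performance.now();
    await invoke();
    samples.push(performance.now() - start);
  }
  const sorted = [...samples].sort((a, b) => a - b);
  return {
    firstCallMs: samples[0], // after idle time this often includes a cold start
    p50Ms: percentile(sorted, 50),
    p95Ms: percentile(sorted, 95),
    maxMs: sorted[sorted.length - 1],
  };
}
```

Comparing `firstCallMs` with `p50Ms` after the function has been idle gives a rough view of cold start overhead, and running the same harness against each provider with the same function code keeps the comparison like for like.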

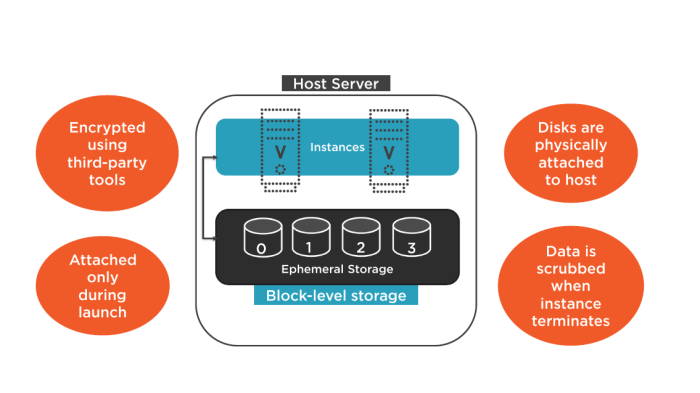

Container-Based Serverless

Container-based serverless architectures offer a compelling alternative to traditional Function-as-a-Service (FaaS) platforms like AWS Lambda. By leveraging containerization technologies, developers gain greater control over their application’s runtime environment, portability, and operational consistency. This approach is particularly beneficial for complex applications or those requiring specific dependencies and configurations not easily supported by standard FaaS offerings.

Advantages of Containerization for Serverless Workloads

Containerization, particularly with technologies like Docker and orchestration platforms like Kubernetes, presents several advantages for serverless workloads. These benefits enhance flexibility, portability, and operational efficiency compared to traditional serverless approaches.

- Enhanced Portability: Containers encapsulate the application and its dependencies into a single, portable unit. This allows serverless functions to run consistently across different environments, including local development machines, various cloud providers, and on-premise infrastructure. This portability mitigates the “works on my machine” problem and simplifies deployment.

- Dependency Management: Containerization simplifies dependency management. Developers can specify all required libraries and runtime versions within the container image, ensuring that functions always have the correct dependencies available. This eliminates compatibility issues and simplifies the build process.

- Resource Isolation: Containers provide strong resource isolation. Each container runs in its own isolated environment, preventing conflicts between different functions or applications running on the same infrastructure. This isolation enhances security and stability.

- Customization and Control: Containerization provides greater control over the function’s runtime environment. Developers can customize the operating system, install specific software packages, and configure network settings within the container image. This level of control is particularly useful for applications with complex dependencies or specific requirements.

- Improved Scalability and Efficiency: Container orchestration platforms, like Kubernetes, can automatically scale containerized serverless functions based on demand. This dynamic scaling ensures that resources are used efficiently and that the application can handle fluctuating workloads.

Process of Deploying Serverless Functions Within Containers

Deploying serverless functions within containers typically involves several key steps, from building the container image to deploying and managing the function on a container orchestration platform. The process ensures the application is packaged, portable, and ready for execution in a scalable manner.

- Container Image Creation: The process begins with creating a container image. This involves writing a Dockerfile that defines the function’s runtime environment, dependencies, and entry point. The Dockerfile specifies the base image (e.g., a specific Linux distribution with a runtime like Node.js or Python), installs necessary packages, copies the function code into the image, and sets the command to execute the function when the container starts.

For example:

```dockerfile
FROM node:18-alpine
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
CMD ["node", "index.js"]
```

This Dockerfile creates an image based on Node.js, installs dependencies, and runs the `index.js` file.

- Image Building and Tagging: After creating the Dockerfile, the container image is built using the Docker build command. The image is then tagged with a name and version number to identify it. This tag is crucial for referencing the image during deployment. For instance:

```shell
docker build -t my-function:1.0 .
```

This command builds an image named `my-function` with the tag `1.0`.

- Image Registry Storage: The built container image is then pushed to a container registry, such as Docker Hub, Amazon Elastic Container Registry (ECR), or Google Container Registry (GCR). The registry acts as a central repository for storing and managing container images, making them accessible for deployment across different environments. Pushing the image to a registry ensures its availability for deployment:

```shell
docker tag my-function:1.0 registry.example.com/my-function:1.0
docker push registry.example.com/my-function:1.0
```

The image name must include the registry (or Docker Hub namespace) prefix for the push to reach it; `registry.example.com` is a placeholder for your own registry host.

- Deployment to Container Orchestration Platform: The container image is deployed to a container orchestration platform, such as Kubernetes or serverless platforms like Knative. This involves creating deployment configurations that specify how the function should be scaled, exposed, and managed. These platforms handle the lifecycle management of the containers, including scaling, health checks, and updates. Deployment configurations are typically written in YAML format, specifying the image, resource requests, and scaling parameters.

- Function Invocation and Management: Once deployed, the serverless function can be invoked through various triggers, such as HTTP requests, message queues, or scheduled events. The container orchestration platform manages the function’s execution, automatically scaling the number of container instances based on the workload. Monitoring and logging tools are used to track the function’s performance and identify any issues.
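The Dockerfile above starts the container with `CMD ["node", "index.js"]`, so the function itself has to be wrapped in a process that accepts requests. The following is a minimal sketch of what such an `index.js` might look like using only Node's built-in `http` module. The `handle` function and its greeting are hypothetical, and the sketch assumes the platform supplies the listening port through a `PORT` environment variable (as Knative does); with plain Docker it would need to be passed explicitly, for example with `docker run -e PORT=8080`.

```javascript
const http = require("http");

// Hypothetical business logic: the "function" this container serves.
function handle(payload) {
  const name = payload.name || "world";
  return { message: `Hello, ${name}!` };
}

// Wrap the function in a small HTTP server so the platform can route to it.
const server = http.createServer((req, res) => {
  let raw = "";
  req.on("data", (chunk) => (raw += chunk));
  req.on("end", () => {
    let payload = {};
    try {
      if (raw) payload = JSON.parse(raw);
    } catch {
      res.writeHead(400, { "Content-Type": "application/json" });
      return res.end(JSON.stringify({ error: "invalid JSON" }));
    }
    res.writeHead(200, { "Content-Type": "application/json" });
    res.end(JSON.stringify(handle(payload)));
  });
});

// Assumption: the platform (or `docker run -e PORT=8080`) provides PORT.
// Without it the process does not start listening.
if (process.env.PORT) {
  server.listen(Number(process.env.PORT));
}
```

Keeping `handle` separate from the HTTP wiring means the same logic can be unit tested without a container and moved between platforms with only the thin server wrapper changing.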

Integration of Container-Based Serverless with CI/CD Pipelines

Integrating container-based serverless functions with CI/CD (Continuous Integration/Continuous Deployment) pipelines streamlines the development and deployment process, enabling faster release cycles and improved application reliability. This integration automates building, testing, and deploying containerized serverless functions.

- Source Code Management: The process begins with a source code repository (e.g., Git) where the function’s code and Dockerfile are stored. Every code change triggers the CI/CD pipeline.

- Continuous Integration (CI): The CI phase typically involves the following steps:

  - Code Compilation and Testing: The CI pipeline compiles the code (if applicable) and runs automated tests to ensure the code changes are valid and do not introduce regressions. These tests can include unit tests, integration tests, and end-to-end tests.

  - Container Image Building: The CI pipeline uses the Dockerfile to build a new container image. The image is tagged with a unique identifier, often based on the commit hash or build number, to track different versions.

  - Vulnerability Scanning: The CI pipeline scans the container image for known vulnerabilities using tools like Trivy or Clair. This helps to identify and address security risks before deployment.

- Continuous Deployment (CD): The CD phase automates the deployment of the container image to the target environment:

  - Image Push to Registry: The CD pipeline pushes the newly built container image to a container registry (e.g., Docker Hub, ECR).

  - Deployment to Orchestration Platform: The CD pipeline updates the deployment configuration on the container orchestration platform (e.g., Kubernetes) to use the new container image. This triggers a rolling update, where the old container instances are gradually replaced with the new ones, minimizing downtime. Tools like Helm can automate this process.

  - Post-Deployment Testing: The CD pipeline runs post-deployment tests to verify that the function is working correctly in the new environment. These tests can include functional tests, performance tests, and security tests.

  - Monitoring and Alerting: The CD pipeline configures monitoring and alerting systems to track the function’s performance and health. This ensures that any issues are detected and addressed quickly.

- Automation Tools: Tools like Jenkins, GitLab CI, CircleCI, and GitHub Actions are commonly used to orchestrate the CI/CD pipeline. These tools provide features for defining pipelines, running tests, building container images, and deploying to various environments.
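The essential control flow of such a pipeline is that stages run in order and a failure in any stage prevents the later ones from running, so an image that fails tests or scanning is never pushed or deployed. The sketch below models only that flow; real pipelines are defined in the CI tool's own configuration, and the stage objects, `runPipeline`, and `imageRef` shown here are hypothetical names used for illustration.

```javascript
// Build an image reference tagged with the short commit hash, so every
// build is traceable to the commit that produced it.
function imageRef(repository, commitSha) {
  return `${repository}:${commitSha.slice(0, 7)}`;
}

// Run stages in order and stop at the first failure, so later stages
// (push, deploy) never run for a build that failed tests or scanning.
async function runPipeline(stages) {
  const completed = [];
  for (const stage of stages) {
    try {
      await stage.run();
      completed.push(stage.name);
    } catch (err) {
      return { ok: false, completed, failedStage: stage.name, error: err.message };
    }
  }
  return { ok: true, completed, failedStage: null, error: null };
}
```

A caller would pass an array such as `[{ name: "test", run: ... }, { name: "build", run: ... }, { name: "scan", run: ... }, { name: "push", run: ... }, { name: "deploy", run: ... }]`, where each `run` is an async function that shells out to the relevant tool.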

Serverless Frameworks: Streamlining Development and Deployment

Serverless frameworks offer a crucial layer of abstraction, simplifying the complexities of managing serverless applications. They provide tools for development, deployment, and monitoring, thereby reducing the operational overhead and accelerating the development lifecycle. These frameworks are designed to improve developer productivity and facilitate portability across different cloud providers.

Popular Serverless Frameworks and Multi-Cloud Support

Several serverless frameworks have gained popularity due to their comprehensive feature sets and multi-cloud capabilities. These frameworks aim to streamline the process of building and deploying serverless applications, offering a consistent developer experience regardless of the underlying cloud infrastructure.

- Serverless Framework: This is one of the most widely adopted frameworks, known for its extensive support for multiple cloud providers, including AWS, Azure, Google Cloud Platform (GCP), and others through plugins. It utilizes YAML configuration files to define serverless applications, including functions, events, and resources.

- AWS SAM (Serverless Application Model): Developed by Amazon Web Services, AWS SAM is specifically designed for building serverless applications on AWS. It targets AWS only, so multi-cloud deployments require pairing it with other tools. SAM simplifies the definition of serverless resources and provides features for local testing and debugging.

- Pulumi: Pulumi is an infrastructure-as-code platform that supports serverless deployments across multiple cloud providers. It allows developers to define infrastructure using familiar programming languages like Python, TypeScript, JavaScript, Go, and C#. This approach enables greater flexibility and control over the infrastructure compared to YAML-based configuration.

- Terraform: While primarily an infrastructure-as-code tool, Terraform can also be used to manage serverless resources across multiple cloud providers. Terraform uses a declarative configuration language (HCL) to define infrastructure components. It provides a consistent way to provision and manage resources across different cloud platforms, including functions, APIs, and databases.

Step-by-Step Procedure for Deploying a Simple Function

Deploying a simple function with a serverless framework generally involves three steps: writing the function’s code, configuring the deployment, and deploying the application to the chosen cloud provider. This example uses the Serverless Framework for demonstration.

- Installation and Setup: Install the Serverless Framework using npm or yarn:

```shell
npm install -g serverless
```

Configure your cloud provider credentials (e.g., AWS access keys) to allow the framework to deploy resources on your behalf.

- Project Initialization: Create a new project using the framework’s template:

```shell
serverless create --template aws-nodejs --path my-service
```

This command generates a basic project structure with a sample function.

- Code Implementation: Modify the function code (e.g., `handler.js`) to perform a specific task. For example, create a simple “hello world” function:

```javascript
module.exports.hello = async (event) => {
  return {
    statusCode: 200,
    body: JSON.stringify({ message: 'Hello, world!' }),
  };
};
```

- Configuration: Edit the `serverless.yml` file to configure the function, events (e.g., HTTP endpoints, scheduled events), and other resources. Specify the provider (e.g., `aws`) and the function’s configuration, such as the handler and runtime.

- Deployment: Deploy the function to the cloud provider using the framework’s deployment command:

```shell
serverless deploy
```

The framework will package the code, upload it to the cloud provider, and configure the necessary infrastructure.

- Testing and Verification: After deployment, test the function by invoking the endpoint or triggering the event specified in the configuration. Verify that the function executes successfully and returns the expected results.
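Before (or in addition to) testing the deployed endpoint, the `hello` handler from the walkthrough can be exercised locally by calling it with a fake event. The sketch below repeats the handler so it is self-contained and checks that the response has the shape an HTTP integration expects: an integer status code and a string body. The `checkResponse` and `verify` helpers and the sample event are hypothetical.

```javascript
// Same handler as in the walkthrough, repeated so this file runs on its own.
const hello = async (event) => {
  return {
    statusCode: 200,
    body: JSON.stringify({ message: "Hello, world!" }),
  };
};

// Collect problems instead of throwing, so all issues are reported at once.
function checkResponse(response) {
  const problems = [];
  if (!Number.isInteger(response.statusCode)) {
    problems.push("statusCode must be an integer");
  }
  if (typeof response.body !== "string") {
    problems.push("body must be a string");
  } else {
    try {
      JSON.parse(response.body); // this handler promises a JSON body
    } catch {
      problems.push("body is not valid JSON");
    }
  }
  return problems;
}

// Invoke the handler with a minimal fake HTTP event and validate the result.
async function verify() {
  const response = await hello({ httpMethod: "GET", path: "/hello" });
  return { response, problems: checkResponse(response) };
}
```

The Serverless Framework CLI also provides `serverless invoke local --function hello`, which runs the handler on the local machine using the project's configuration.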

Benefits and Drawbacks of Using Serverless Frameworks

Serverless frameworks offer several advantages, but also come with certain limitations. Understanding these trade-offs is essential for making informed decisions about adopting these frameworks.

- Benefits:

  - Simplified Development: Frameworks abstract away the complexities of managing serverless infrastructure, allowing developers to focus on writing code.

  - Faster Deployment: Automates the deployment process, reducing the time and effort required to deploy serverless applications.

  - Infrastructure as Code: Enables infrastructure to be defined as code, promoting version control, repeatability, and collaboration.

  - Multi-Cloud Support: Facilitates the deployment of applications across multiple cloud providers, enhancing portability and avoiding vendor lock-in.

  - Reduced Operational Overhead: Handles the underlying infrastructure management, such as scaling and monitoring, reducing the operational burden.

- Drawbacks:

  - Vendor Lock-in (Partial): While some frameworks support multiple providers, the underlying cloud provider’s specific features and services might still lead to vendor lock-in.

  - Abstraction Limitations: The abstraction provided by frameworks can sometimes limit control over low-level configurations and optimizations.

  - Debugging Complexity: Debugging serverless applications, especially across multiple cloud providers, can be more complex than traditional applications.

  - Framework Learning Curve: Learning a new framework and its configuration language can introduce an initial learning curve.

  - Cost Considerations: Although serverless is generally cost-effective, the framework’s overhead or the cloud provider’s pricing model can influence overall costs. Proper resource management and monitoring are crucial.

FaaS (Function-as-a-Service) Platforms

Function-as-a-Service (FaaS) platforms provide a serverless computing model that allows developers to execute code without managing the underlying infrastructure. These platforms abstract away server management, scaling, and resource provisioning, enabling developers to focus solely on writing and deploying code. The core principle revolves around executing small, independent functions triggered by events, offering a highly scalable and cost-effective approach to application development.

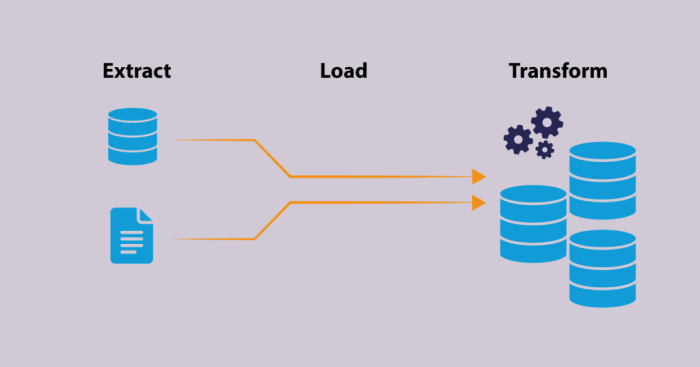

Fundamental Architecture of FaaS Platforms

The architecture of a FaaS platform typically comprises several key components working together to manage function execution. At the heart of the system is an event trigger, which can be an HTTP request, a database update, a message from a queue, or any other supported event source. This trigger activates the function execution process. Once triggered, the platform’s control plane, a critical component, handles the routing of the event to the appropriate function.

It then provisions the necessary resources, such as CPU, memory, and network connectivity, for the function’s execution environment. This environment is often a container or a sandboxed environment, ensuring isolation and security. The function code, along with its dependencies, is then executed within this environment. Upon completion, the platform collects logs, metrics, and other relevant data, making it available to the developer.

Finally, the resources allocated to the function are released, optimizing resource utilization and cost.
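The flow described above (trigger, routing, execution, telemetry collection) can be sketched with a toy in-memory dispatcher. This is only an illustration: `ToyControlPlane`, `register`, `dispatch`, and the `http.request` event type are hypothetical names, and a real platform would run each function in an isolated container or sandbox rather than in-process.

```python
import time
from typing import Any, Callable, Dict, List


class ToyControlPlane:
    """Hypothetical in-memory stand-in for a FaaS control plane."""

    def __init__(self) -> None:
        self._routes: Dict[str, Callable[[dict], Any]] = {}
        self.logs: List[dict] = []

    def register(self, event_type: str, function: Callable[[dict], Any]) -> None:
        """Bind an event type (the trigger) to a function."""
        self._routes[event_type] = function

    def dispatch(self, event: dict) -> Any:
        """Route the event, run the function, and record basic telemetry."""
        function = self._routes[event["type"]]  # routing step
        started = time.perf_counter()
        status = "error"
        try:
            result = function(event)  # execution step
            status = "ok"
            return result
        finally:
            # Telemetry is recorded whether the function succeeded or failed.
            self.logs.append({
                "type": event["type"],
                "status": status,
                "duration_ms": (time.perf_counter() - started) * 1000,
            })


def greet(event: dict) -> str:
    """Example function: builds a greeting from the event payload."""
    return f"hello, {event['name']}"


plane = ToyControlPlane()
plane.register("http.request", greet)
print(plane.dispatch({"type": "http.request", "name": "world"}))
```

Resource provisioning and release are left out here because they have no meaningful in-process equivalent.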

Execution Environments Supported by FaaS Platforms

FaaS platforms support various execution environments to provide flexibility and cater to different application requirements. These environments are designed to isolate functions, manage resources efficiently, and offer security.

- Containers: Many FaaS platforms utilize containers, such as Docker, to package and run functions. Containers provide a consistent and portable environment, allowing developers to define the function’s dependencies and runtime environment. This approach ensures that the function behaves the same way regardless of the underlying infrastructure.

- Sandboxed Environments: Some platforms use sandboxed environments, which provide a more lightweight execution environment compared to containers. Sandboxes offer a high level of isolation and security while minimizing overhead. They typically involve the use of operating system-level virtualization or other isolation techniques.

- Virtual Machines (VMs): While less common due to the increased overhead, some platforms might use VMs to provide isolation and security. VMs offer a more complete isolation but can result in slower startup times and higher resource consumption.

- Runtime Environments: FaaS platforms often provide pre-configured runtime environments, such as Node.js, Python, Java, and Go, eliminating the need for developers to manage the underlying operating system and runtime installation. This streamlines the deployment process and reduces operational complexity.

Programming Languages and Runtimes Across FaaS Platforms

The support for various programming languages and runtimes is a crucial factor when selecting a FaaS platform. Different platforms offer varying levels of support, influencing the developer’s choice based on their preferred language and the availability of required libraries and frameworks.

The following table illustrates a comparison of supported programming languages and runtimes across several FaaS platforms:

| Platform | Supported Languages | Supported Runtimes |
|---|---|---|
| AWS Lambda | Node.js, Python, Java, Go, C#, Ruby, PowerShell, C++ (via custom runtime) | Node.js (14.x, 16.x, 18.x, 20.x), Python (3.7, 3.8, 3.9, 3.10, 3.11, 3.12), Java (8, 11, 17, 21), Go (1.x), C# (.NET 6, .NET 8), Ruby (2.7, 3.2), PowerShell (7.x), C++ (via custom runtime) |
| Google Cloud Functions | Node.js, Python, Java, Go, .NET, PHP, Ruby, TypeScript | Node.js (10, 12, 14, 16, 18, 20), Python (3.7, 3.8, 3.9, 3.10, 3.11), Java (8, 11, 17, 21), Go (1.x), .NET (6, 7, 8), PHP (7.4, 8.0, 8.1, 8.2), Ruby (2.7, 3.0, 3.1), TypeScript |
| Azure Functions | C#, JavaScript, F#, Java, Python, PowerShell, TypeScript | Node.js, Python, Java, .NET (Core), PowerShell, TypeScript |
| Cloudflare Workers | JavaScript, WebAssembly | JavaScript (ECMAScript modules), WebAssembly |
| IBM Cloud Functions (Apache OpenWhisk) | Node.js, Python, Java, Swift, PHP, Go, Ruby, .NET | Node.js, Python, Java, Swift, PHP, Go, Ruby, .NET |

It’s important to note that the availability of runtimes can change over time as platforms evolve. Developers should consult the official documentation of each FaaS platform for the most up-to-date information.

Event-Driven Architectures

Event-driven architectures (EDAs) are a fundamental design pattern in modern software development, particularly relevant in the context of serverless computing. They offer a powerful way to build scalable, resilient, and loosely coupled systems. This section explores the role of EDAs within serverless, providing examples and design considerations for implementing them using alternative FaaS platforms.

Role of Event-Driven Architectures in Serverless Computing

Serverless computing and event-driven architectures are naturally complementary. Serverless functions are inherently reactive, meaning they are designed to respond to events. This reactive nature makes them ideal for building event-driven systems. In an EDA, components communicate by producing and consuming events, which are signals of state changes or occurrences. This contrasts with more traditional request-response models.

The benefits of combining serverless and EDAs include improved scalability, fault tolerance, and agility. The loose coupling inherent in EDAs allows for independent scaling of different components and reduces the impact of failures. The event-driven approach also enables easier integration with various services and facilitates rapid development cycles.

Event Sources and Triggers for Serverless Functions

A crucial aspect of event-driven systems is the identification of event sources and the definition of triggers that activate serverless functions. Numerous event sources can be used to trigger serverless functions, allowing for a wide range of applications.

- Message Queues: Services like Apache Kafka or RabbitMQ act as event sources. When a message is published to a queue, it triggers a serverless function. This is common for processing data streams, handling asynchronous tasks, and decoupling components.

- Object Storage Events: Object storage services, such as MinIO (a popular alternative to AWS S3), can trigger functions when objects are created, updated, or deleted. This is frequently used for image processing, file analysis, and data archiving.

- Database Events: Changes in a database, such as new entries, updates, or deletions, can trigger functions. This is valuable for real-time data synchronization, data validation, and triggering notifications. Examples include using triggers within databases like PostgreSQL or MySQL.

- API Gateway Events: When an API request is received by an API gateway, such as Kong or Tyk (alternatives to AWS API Gateway), it can trigger a function. This enables event-driven API design, where certain API calls initiate background processes or trigger other actions.

- Scheduling Events: Scheduled tasks, implemented using tools like cron or the schedulers built into FaaS platforms, trigger functions at predetermined intervals. This is useful for periodic data processing, report generation, and maintenance tasks.

- Webhooks: Webhooks, essentially user-defined HTTP callbacks, can trigger functions when an external service generates an event. This allows for integration with third-party services, such as payment gateways or social media platforms.

Designing Event-Driven Systems with Alternative FaaS Platforms

Designing an event-driven system with alternative FaaS platforms requires careful consideration of event sources, triggers, and function orchestration. The specific implementation details will vary depending on the chosen platform, but the general principles remain consistent.

Consider the following example, a system for processing image uploads to an object store (MinIO, for instance):

- Event Source: MinIO, configured to send events on object creation.

- Trigger: The MinIO event triggers a serverless function.

- Function: The function receives the event data, retrieves the uploaded image from MinIO, performs image processing (e.g., resizing, format conversion), and stores the processed image back to MinIO.

- Platform: Choose a FaaS platform, like OpenFaaS or Knative, to host the function. The platform manages the function’s deployment, scaling, and execution.

The system’s workflow could be visualized as follows:

The image is uploaded to MinIO. MinIO emits an “object created” event. This event triggers a function within the FaaS platform. The function retrieves the uploaded image, performs transformations, and then stores the transformed image back in MinIO. The function execution is triggered by the event, demonstrating the core principle of event-driven architecture.
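A minimal sketch of the function side of this workflow is shown below. It assumes the event arrives in the S3-compatible notification format (a `Records` list with `s3.bucket.name` and a URL-encoded `s3.object.key`). The `processed/` prefix and the helper names are hypothetical, and the actual download, transformation, and upload steps are omitted.

```python
from typing import List, Tuple
from urllib.parse import unquote_plus


def extract_objects(event: dict) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs from an S3-style notification payload."""
    objects = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys are URL-encoded in S3-style notifications.
        objects.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return objects


def processed_key(key: str, prefix: str = "processed/") -> str:
    """Hypothetical naming rule for the transformed image."""
    return prefix + key


def handle(event: dict) -> List[str]:
    """Plan the output location for every newly created object."""
    outputs = []
    for bucket, key in extract_objects(event):
        if key.startswith("processed/"):
            continue  # skip our own output so the function does not retrigger itself
        # Download, transform, and upload would happen here (omitted).
        outputs.append(f"{bucket}/{processed_key(key)}")
    return outputs


sample_event = {
    "Records": [
        {
            "eventName": "s3:ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "uploads"},
                "object": {"key": "cats/my+photo.png"},
            },
        }
    ]
}
print(handle(sample_event))
```

Skipping keys that already carry the output prefix matters when the processed image is written back to the same bucket; otherwise each write would emit a new "object created" event and trigger the function again.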

Key considerations for design:

- Idempotency: Ensure that functions are idempotent, meaning they can be executed multiple times without unintended side effects. This is critical for handling potential event duplicates.

- Error Handling: Implement robust error handling and retry mechanisms to gracefully manage failures. Dead-letter queues (DLQs) can be used to handle events that repeatedly fail processing.

- Monitoring and Logging: Implement comprehensive monitoring and logging to track function execution, identify performance bottlenecks, and troubleshoot issues.

- Platform Specific Configuration: The specific configurations for event sources, triggers, and function deployments will vary between FaaS platforms. For example, OpenFaaS uses its own event connectors and triggers, while Knative leverages Kubernetes eventing components.
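The idempotency and error-handling considerations can be combined in one small wrapper, sketched below. It assumes every event carries a unique `id` field. The in-memory set and list are stand-ins for a durable deduplication store and a real dead-letter queue, and all names are hypothetical.

```python
from typing import Callable, List, Set


class IdempotentProcessor:
    """Wraps a handler with deduplication, bounded retries, and a dead-letter list."""

    def __init__(self, handler: Callable[[dict], None], max_attempts: int = 3) -> None:
        self.handler = handler
        self.max_attempts = max_attempts
        self.seen: Set[str] = set()          # stand-in for a durable dedup store
        self.dead_letters: List[dict] = []   # stand-in for a dead-letter queue

    def process(self, event: dict) -> str:
        event_id = event["id"]
        if event_id in self.seen:
            return "duplicate"  # already handled, so a redelivery is a no-op
        for _attempt in range(self.max_attempts):
            try:
                self.handler(event)
            except Exception:
                continue  # a real system would back off before retrying
            else:
                self.seen.add(event_id)
                return "processed"
        self.dead_letters.append(event)
        return "dead-lettered"


def make_flaky_handler(failures: int) -> Callable[[dict], None]:
    """Hypothetical handler that fails `failures` times, then succeeds."""
    state = {"calls": 0}

    def handler(event: dict) -> None:
        state["calls"] += 1
        if state["calls"] <= failures:
            raise RuntimeError("transient failure")

    return handler


processor = IdempotentProcessor(make_flaky_handler(failures=2), max_attempts=3)
print(processor.process({"id": "evt-1"}))  # succeeds on the third attempt
print(processor.process({"id": "evt-1"}))  # redelivery is detected
```

The event ID is recorded only after the handler succeeds, so a crash mid-processing leads to a retry rather than a silently dropped event. The handler itself still needs to tolerate being run more than once.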

By adopting these principles, developers can build scalable, resilient, and flexible event-driven systems using alternative FaaS platforms, offering significant advantages over traditional architectures.

Cost Optimization Strategies: Managing Expenses with Alternatives

Effectively managing costs is crucial when adopting serverless architectures, regardless of the platform chosen. Understanding the nuances of pricing models, resource utilization, and deployment strategies allows for significant cost savings. This section details various techniques for optimizing serverless function costs across different platforms, compares the cost-efficiency of various FaaS platforms, and outlines the key factors influencing the overall cost of serverless solutions.

Techniques for Optimizing Cost of Serverless Functions

Several strategies can be employed to minimize the expenses associated with serverless functions. These techniques focus on efficient resource allocation, optimized code execution, and strategic platform selection.

- Resource Allocation and Scaling: Properly configuring memory allocation for functions is essential. Over-provisioning leads to unnecessary costs, while under-provisioning can cause performance degradation. Dynamic scaling, which automatically adjusts resources based on demand, should be leveraged to avoid paying for idle capacity. For example, AWS Lambda allows you to configure memory from 128 MB to 10 GB, and the price per unit of execution time scales linearly with the memory allocated.

- Code Optimization and Execution Time: Optimizing function code to reduce execution time directly impacts costs. Faster execution consumes fewer resources and reduces the duration for which you are billed. This includes efficient code, minimizing dependencies, and leveraging language-specific performance characteristics. A Python function that runs in 100ms will cost less than an identical function that runs in 500ms, given that the pricing is based on execution time and memory usage.

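- Cold Start Mitigation: Cold starts, the extra latency incurred when a function is invoked after a period of inactivity and a new execution environment has to be initialized, can affect both user experience and costs. Strategies to mitigate cold starts include pre-warming functions, keeping functions warm by periodically invoking them (which itself adds billable invocations), or using container-based serverless solutions, some of which can keep a minimum number of instances warm.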

- Monitoring and Alerting: Implementing robust monitoring and alerting systems is vital. Monitoring function invocations, execution times, and error rates allows you to identify and address cost-intensive issues. Setting up alerts for unusual patterns, such as unexpected spikes in invocations or prolonged execution times, can prevent runaway costs.

- Batch Processing and Event Aggregation: Grouping multiple events into a single invocation can reduce the number of function invocations and associated costs. Batch processing is particularly effective when dealing with event-driven architectures where numerous small events trigger functions. For instance, instead of processing each message from a queue individually, aggregate them into batches for processing.

- Choosing the Right Region: Serverless platform pricing can vary by region. Consider the pricing differences between regions, especially if your application can tolerate slightly higher latency. Selecting a region with lower costs can lead to significant savings, particularly for high-volume workloads.

- Lifecycle Policies for Storage: If your serverless functions interact with storage services (e.g., object storage), implement lifecycle policies to automatically manage data. These policies can move infrequently accessed data to cheaper storage tiers, further reducing costs.
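The batching technique from the list above can be sketched as a small helper that groups messages before handing them to a function. The batch size of 10 and the `process_batch` placeholder are illustrative assumptions. On managed platforms, batching is usually configured on the event source rather than written by hand.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly smaller, batch


def process_batch(messages: List[str]) -> int:
    """Placeholder for one function invocation that handles many messages."""
    return len(messages)


messages = [f"msg-{i}" for i in range(25)]
handled_per_invocation = [process_batch(b) for b in batched(messages, 10)]
print(len(handled_per_invocation), handled_per_invocation)  # 3 [10, 10, 5]
```

Here 25 messages are handled in 3 invocations instead of 25. The trade-off is that a failure in one batch may require reprocessing every message in it.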

Comparison of Cost-Efficiency of Different FaaS Platforms

The cost-efficiency of FaaS platforms varies based on several factors, including pricing models, resource allocation, and execution characteristics. A comparative analysis reveals the strengths and weaknesses of each platform, helping to make informed decisions.

Cost comparison is complex because it depends heavily on the specific workload, but a general comparison can be made based on pricing models:

- AWS Lambda: AWS Lambda has a pay-per-use pricing model, charging for the compute time and memory allocated. It also offers a free tier. The cost-efficiency is high for variable workloads but can become expensive for consistently high-volume or long-running functions.

- Google Cloud Functions: Google Cloud Functions also offers a pay-per-use pricing model, with charges based on execution time, memory usage, and the number of invocations. It offers a free tier as well. Its cost-efficiency is similar to AWS Lambda, with competitive pricing.

- Azure Functions: Azure Functions also uses a pay-per-use model and provides a free tier. It has various pricing plans, including consumption plans (pay-per-use) and premium plans for dedicated resources. Azure Functions’ cost-efficiency depends on the chosen plan and workload patterns.

- OpenFaaS: OpenFaaS, being an open-source solution, does not have direct costs for the platform itself. However, the cost is tied to the underlying infrastructure on which it runs (e.g., Kubernetes). The cost-efficiency depends on the efficiency of the infrastructure management and the scaling capabilities.

- Knative: Knative, similar to OpenFaaS, relies on the underlying infrastructure, typically Kubernetes. Its cost-efficiency is highly dependent on the efficiency of the Kubernetes cluster and the scaling strategies implemented.

Illustrative Example: Consider two scenarios:

- Scenario 1: Spiky Workload. A function that is invoked infrequently, but with occasional spikes in demand. AWS Lambda, Google Cloud Functions, and Azure Functions are typically cost-effective in this scenario due to their pay-per-use pricing.

- Scenario 2: Consistent, High-Volume Workload. A function that is invoked thousands of times per minute, consistently. Dedicated infrastructure (e.g., using Azure Functions Premium Plan or a self-managed Kubernetes cluster with Knative or OpenFaaS) might be more cost-effective due to the ability to optimize resource allocation and potentially benefit from reserved instances or discounts.
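The two scenarios can be compared with a rough break-even calculation, sketched below. The prices, the 200 ms / 512 MB function profile, and the 150.00 monthly cost for dedicated infrastructure are placeholder assumptions chosen for illustration, not quoted rates. Free tiers, data transfer, and the operational effort of running a cluster are ignored.

```python
# Illustrative placeholder prices; check the provider's pricing page for real values.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_REQUEST = 0.20 / 1_000_000


def cost_per_invocation(duration_ms: float, memory_mb: int) -> float:
    """Pay-per-use cost of one invocation: compute (GB-seconds) plus request fee."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000)
    return gb_seconds * PRICE_PER_GB_SECOND + PRICE_PER_REQUEST


def monthly_pay_per_use(invocations: int, duration_ms: float, memory_mb: int) -> float:
    """Total monthly pay-per-use cost for a given invocation count."""
    return invocations * cost_per_invocation(duration_ms, memory_mb)


def break_even_invocations(fixed_monthly_cost: float, duration_ms: float, memory_mb: int) -> float:
    """Monthly invocations above which a fixed-cost setup becomes cheaper."""
    return fixed_monthly_cost / cost_per_invocation(duration_ms, memory_mb)


spiky = monthly_pay_per_use(invocations=500_000, duration_ms=200, memory_mb=512)
threshold = break_even_invocations(fixed_monthly_cost=150.0, duration_ms=200, memory_mb=512)
print(f"spiky workload: {spiky:.2f} per month")
print(f"break-even at roughly {threshold / 1_000_000:.1f} million invocations per month")
```

Under these assumptions the spiky workload costs well under the fixed fee, while a workload sustaining thousands of invocations per minute passes the break-even point within a month.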

Factors Influencing the Overall Cost of Serverless Solutions

The overall cost of serverless solutions is determined by a combination of factors, including platform pricing, resource utilization, and architectural choices. Understanding these factors is crucial for effective cost management.

- Function Invocations: The number of function invocations is a primary driver of cost, as most platforms charge per invocation. Optimizing the number of invocations through batching, event aggregation, and efficient event handling can significantly reduce costs.

- Execution Time: The duration for which a function runs directly impacts costs. Faster execution times translate to lower costs. Code optimization, efficient resource allocation, and choosing the right language runtime can minimize execution time.

- Memory Allocation: The amount of memory allocated to a function affects both performance and cost. Over-provisioning leads to higher costs, while under-provisioning can affect performance. Finding the optimal memory configuration is critical.

- Network Transfers: Data transfer costs, particularly for data transferred between functions and external services, can contribute significantly to the overall cost. Optimizing data transfer patterns, using local caching, and choosing cost-effective network configurations are important.

- Storage Costs: If serverless functions interact with storage services (e.g., object storage, databases), the storage costs must be considered. Selecting appropriate storage tiers, implementing lifecycle policies, and optimizing data access patterns can help reduce storage costs.

- Monitoring and Logging Costs: Monitoring and logging services, such as Amazon CloudWatch, also incur costs. Optimizing logging levels and using cost-effective monitoring solutions can reduce these expenses.

- Development and Operational Costs: While not direct platform costs, the time and resources spent on development, deployment, and operations (e.g., debugging, monitoring, and maintenance) influence the overall cost of serverless solutions. Using serverless frameworks, automated CI/CD pipelines, and infrastructure-as-code tools can improve efficiency and reduce these costs.

- Vendor Lock-in: Choosing a specific FaaS provider may lead to vendor lock-in. Consider the long-term implications of being tied to a particular platform. Open-source alternatives or platform-agnostic architectures may offer more flexibility and prevent cost implications associated with vendor lock-in.
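Because execution time and memory allocation are billed together, the cheapest memory setting is not always the smallest one. The sketch below picks the setting with the lowest GB-seconds per invocation from a set of measurements. The durations are made-up sample values, and in practice they would come from load tests of the actual function.

```python
from typing import Dict, Tuple


def gb_seconds(memory_mb: int, duration_ms: float) -> float:
    """Billable compute for one invocation, in GB-seconds."""
    return (memory_mb / 1024) * (duration_ms / 1000)


def cheapest_memory(measurements: Dict[int, float]) -> Tuple[int, float]:
    """Given {memory_mb: average_duration_ms}, return the cheapest setting and its GB-seconds."""
    return min(
        ((memory, gb_seconds(memory, duration)) for memory, duration in measurements.items()),
        key=lambda pair: pair[1],
    )


# Hypothetical measurements: more memory often means more CPU and shorter runs.
measured = {128: 2400.0, 256: 1100.0, 512: 520.0, 1024: 300.0}
best_memory, best_cost = cheapest_memory(measured)
print(best_memory, round(best_cost, 3))  # 512 0.26
```

In this sample the 512 MB setting is both cheaper and faster than 128 MB, which is the kind of result that only shows up when memory and duration are measured together.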

Performance and Scalability: Evaluating Speed and Capacity

Serverless platforms are inherently designed for scalability, but the mechanisms by which they achieve this vary significantly. Understanding these differences is crucial for selecting the right platform for a given workload. Performance metrics, such as cold start times and execution duration, directly impact user experience and operational costs. This section will delve into the performance characteristics of various serverless options, focusing on how they handle scalability, methods for measuring performance, and comparative cold start times.

Scalability and Concurrency Handling

Scalability in serverless environments is primarily achieved through automatic resource allocation. The underlying mechanisms, however, differ across platforms, impacting how concurrency is managed.

- AWS Lambda: AWS Lambda scales by automatically provisioning and managing the underlying execution environments based on incoming requests. Concurrency is managed through “reserved concurrency” and “provisioned concurrency.” Reserved concurrency sets aside part of the account’s concurrency for a function and also caps that function’s maximum number of concurrent invocations, while provisioned concurrency keeps a configured number of function instances initialized to minimize cold start times. This allows for predictable scaling, especially important for latency-sensitive applications.

- Google Cloud Functions: Google Cloud Functions utilizes a similar autoscaling approach. Google’s infrastructure dynamically adjusts the number of function instances based on traffic. The platform leverages Google’s global network to distribute function invocations across regions, improving both performance and availability. Concurrency is handled implicitly, with the platform managing the allocation of resources to meet demand.

- Azure Functions: Azure Functions scales automatically based on the number of incoming events. The platform provisions compute resources dynamically, managing concurrency based on the configured scaling settings and the underlying Azure infrastructure. Azure Functions supports various hosting plans, including consumption plans (pay-per-use) and dedicated app service plans, each influencing the scalability model.

- Open Source Serverless Platforms (e.g., Knative, OpenFaaS): Open-source platforms often rely on Kubernetes for scaling. Knative, for instance, leverages Kubernetes’ autoscaling capabilities to scale function instances based on metrics like request rate and CPU utilization. This offers greater control over the scaling behavior, allowing for customization. OpenFaaS also uses a Kubernetes-based approach, providing similar flexibility. The scalability is directly influenced by the configuration of the underlying Kubernetes cluster.

- Container-Based Serverless (e.g., AWS Fargate, Google Cloud Run): These platforms offer fine-grained control over resource allocation. Fargate and Cloud Run automatically scale the underlying container infrastructure. Concurrency is managed through the number of container instances. These platforms provide granular control over CPU and memory resources, which is beneficial for resource-intensive applications.
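Many of these platforms size the number of instances from the observed load. The sketch below shows a simplified concurrency-based rule: enough instances so that each handles at most a target number of in-flight requests, clamped between a minimum and a maximum. It is an assumption-laden illustration of the idea, not the actual algorithm of any platform named above, and the parameter names are hypothetical.

```python
import math


def desired_instances(
    in_flight_requests: int,
    target_concurrency: int,
    min_instances: int = 0,
    max_instances: int = 100,
) -> int:
    """Instances needed so each one serves at most `target_concurrency` requests."""
    if target_concurrency < 1:
        raise ValueError("target_concurrency must be at least 1")
    wanted = math.ceil(in_flight_requests / target_concurrency)
    return max(min_instances, min(max_instances, wanted))


print(desired_instances(0, 10))                   # 0: scale to zero when idle
print(desired_instances(25, 10))                  # 3
print(desired_instances(5000, 10))                # 100: capped by max_instances
print(desired_instances(0, 10, min_instances=1))  # 1: one instance kept warm
```

Setting `min_instances` above zero trades idle cost for fewer cold starts, while `max_instances` protects downstream services and the budget from unbounded scale-out.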

Methods for Measuring Serverless Function Performance

Accurate performance measurement is essential for optimizing serverless functions and comparing different platforms. Several key metrics and techniques are used.

- Cold Start Time: This is the time it takes for a function to start running when it hasn’t been recently invoked. Cold start times can significantly impact user experience, particularly for latency-sensitive applications. Measuring cold start times involves invoking the function after it has been idle long enough for its instances to be reclaimed (or right after a new deployment), repeating this several times, and averaging the results. Tools like the `serverless` framework and specialized performance testing tools can automate this process.

- Execution Duration: This is the time it takes for a function to complete its execution, from the start of the invocation to its completion. Execution duration is a critical metric for cost optimization, as serverless platforms often charge based on the duration of function invocations. Measurement involves logging the start and end times within the function code.

- Memory Usage: This measures the amount of memory a function consumes during its execution. Excessive memory usage can lead to performance bottlenecks and increased costs. Monitoring tools provide insights into memory consumption patterns, enabling optimization through code adjustments or resource allocation changes.

- Throughput: This measures the number of requests a function can handle within a given time frame. It’s often expressed as requests per second (RPS). Tools like ApacheBench or JMeter are frequently used to generate load and measure throughput.

- Error Rate: The percentage of function invocations that result in errors. A high error rate indicates issues with the function code, dependencies, or underlying infrastructure. Monitoring tools collect and report error rates, allowing for proactive troubleshooting.

- Tools for Measurement:

- CloudWatch (AWS): Provides detailed metrics, logs, and monitoring capabilities for AWS Lambda functions.

- Cloud Monitoring (Google Cloud): Offers similar monitoring and logging features for Google Cloud Functions.

- Azure Monitor (Azure): Provides comprehensive monitoring for Azure Functions, including metrics, logs, and alerts.

- OpenTelemetry: An open-source observability framework for instrumenting, generating, collecting, and exporting telemetry data (metrics, logs, and traces).

- Third-party APM (Application Performance Monitoring) Tools (e.g., Datadog, New Relic): These tools offer advanced monitoring, tracing, and performance analysis capabilities.
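Where a platform's built-in metrics are not enough, execution duration and error rate can also be recorded from inside the function code. The sketch below uses a decorator that times each call and a summary helper. The names `timed` and `summarize` and the nearest-rank 95th percentile are illustrative choices, not part of any tool listed above.

```python
import statistics
import time
from functools import wraps
from typing import Callable, Dict, List


def timed(samples: List[dict]) -> Callable:
    """Decorator factory: append duration and outcome of each call to `samples`."""
    def decorator(fn: Callable) -> Callable:
        @wraps(fn)
        def wrapper(*args, **kwargs):
            started = time.perf_counter()
            ok = False
            try:
                result = fn(*args, **kwargs)
                ok = True
                return result
            finally:
                samples.append({
                    "duration_ms": (time.perf_counter() - started) * 1000,
                    "ok": ok,
                })
        return wrapper
    return decorator


def summarize(samples: List[dict]) -> Dict[str, float]:
    """Mean, nearest-rank p95 duration, and error rate for recorded calls."""
    durations = sorted(s["duration_ms"] for s in samples)
    count = len(durations)
    p95_index = (95 * count + 99) // 100 - 1  # integer ceil(0.95 * count) - 1
    errors = sum(1 for s in samples if not s["ok"])
    return {
        "count": count,
        "mean_ms": statistics.fmean(durations),
        "p95_ms": durations[p95_index],
        "error_rate": errors / count,
    }


recorded: List[dict] = []


@timed(recorded)
def handler(event: dict) -> int:
    return len(event)


handler({"a": 1})
print(summarize(recorded)["count"])  # 1
```

Reporting a high percentile next to the mean is useful because cold starts show up as a small number of slow invocations that an average tends to hide.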

Cold Start Time Comparison of Serverless Options

Cold start times vary significantly across serverless platforms and depend on several factors, including the programming language, function size, and platform configuration. This table provides an illustrative comparison. These values are estimates, and actual results may vary based on specific configurations and regional infrastructure.

| Platform | Programming Language | Typical Cold Start Time (ms) | Factors Influencing Cold Start |
|---|---|---|---|
| AWS Lambda | Node.js | 200 – 800 | Function size, VPC configuration, dependencies. |
| AWS Lambda | Python | 300 – 1000 | Function size, dependency imports, environment variables. |
| Google Cloud Functions | Node.js | 150 – 600 | Function size, region, container image size. |
| Google Cloud Functions | Python | 250 – 800 | Function size, initialization code, dependencies. |
| Azure Functions | Node.js | 300 – 1200 | Function size, consumption plan vs. dedicated plan, region. |
| Azure Functions | Python | 400 – 1500 | Function size, package size, configuration. |
| Knative (Kubernetes-based) | Various (Containerized) | 500 – 2000+ | Container image size, Kubernetes cluster resources, networking. |
| Cloud Run | Various (Containerized) | 100 – 500 | Container image size, CPU and memory allocation. |

As demonstrated by the table, cold start times vary considerably. Containerized serverless platforms, such as Knative, may exhibit longer cold start times due to the overhead of container initialization. Programming languages like Python, which often have larger dependencies, can also contribute to increased cold start times. Choosing the right platform and optimizing function code and dependencies are essential for minimizing cold start latency.

Using provisioned concurrency or warm-up mechanisms (where available) can mitigate cold start issues for latency-sensitive applications.
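To see how often cold starts actually occur, a function can tag its own invocations. The sketch below relies on a common pattern: module-level code runs once per execution environment, so a module-level flag is true only for the first invocation in that environment. The handler signature and field names are assumptions and would need adapting to the platform in use.

```python
import time

# Module-level code runs once per execution environment (during the cold start).
_INIT_STARTED = time.perf_counter()
# Expensive imports and client setup would normally happen here.
_is_cold = True


def handler(event, context=None):
    """Report whether this invocation was the first in its environment."""
    global _is_cold
    cold = _is_cold
    _is_cold = False  # every later invocation in this environment is warm
    return {
        "cold_start": cold,
        "seconds_since_init": time.perf_counter() - _INIT_STARTED,
    }
```

Logging the `cold_start` flag with each invocation makes it possible to compute the share of cold invocations from the logs and to check whether a warm-up mechanism is having the intended effect.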

Security Considerations: Protecting Serverless Applications

The adoption of serverless architectures necessitates a robust security posture to safeguard against potential vulnerabilities. Serverless applications, due to their distributed nature and reliance on third-party services, present unique security challenges. Understanding and implementing appropriate security measures are critical for maintaining the confidentiality, integrity, and availability of data and functionality.

Access Control and Data Protection

Implementing robust access control mechanisms and data protection strategies is fundamental to securing serverless applications. This involves defining and enforcing policies that govern who can access what resources and how data is handled.

- Identity and Access Management (IAM): IAM is crucial for managing access to serverless resources. It involves creating and managing user identities, assigning permissions, and implementing multi-factor authentication (MFA). Proper IAM configuration minimizes the attack surface by restricting access to only the necessary resources. For example, a function designed to process image uploads should only have permissions to read from the upload bucket and write to the processing bucket, preventing unauthorized access to other sensitive data.

- Principle of Least Privilege: Granting only the minimum necessary permissions to each function and user significantly reduces the impact of potential security breaches. This principle limits the damage a compromised function or user can inflict. For instance, a function responsible for database updates should only have write access to specific tables and columns, preventing unauthorized modifications.

- Data Encryption: Encrypting data both in transit and at rest is a critical data protection measure. This involves encrypting data stored in databases, object storage, and other data stores. Encryption in transit ensures that data is protected while being transmitted between functions and services. Encryption at rest protects data even if the underlying storage is compromised.

- Input Validation and Sanitization: Serverless functions are often triggered by external events, making them vulnerable to malicious input. Validating and sanitizing all input data is essential to prevent common attacks like SQL injection, cross-site scripting (XSS), and command injection.

- Regular Security Audits and Penetration Testing: Conducting regular security audits and penetration tests helps identify vulnerabilities in serverless applications. These assessments should be performed by qualified security professionals to identify weaknesses in the application’s code, infrastructure, and configuration. The results of these tests should be used to remediate identified vulnerabilities and improve the overall security posture.
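The image-processing example from the IAM bullet can be expressed as a least-privilege policy document. The sketch below builds one in the AWS IAM JSON policy format, using the `s3:GetObject` and `s3:PutObject` actions, and adds a simple check for wildcard grants. The bucket names and helper functions are hypothetical, and other platforms use different policy formats.

```python
import json
from typing import List, Union


def image_processor_policy(upload_bucket: str, processed_bucket: str) -> dict:
    """Read-only access to uploads, write-only access to processed output."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadUploads",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{upload_bucket}/*"],
            },
            {
                "Sid": "WriteProcessed",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{processed_bucket}/*"],
            },
        ],
    }


def _as_list(value: Union[str, List[str]]) -> List[str]:
    return value if isinstance(value, list) else [value]


def uses_wildcards(policy: dict) -> bool:
    """Flag statements that allow every action of a service or every resource."""
    for statement in policy["Statement"]:
        actions = _as_list(statement["Action"])
        resources = _as_list(statement["Resource"])
        if any(a == "*" or a.endswith(":*") for a in actions) or "*" in resources:
            return True
    return False


# Bucket names are placeholders for illustration.
policy = image_processor_policy("example-uploads", "example-processed")
print(json.dumps(policy, indent=2))
print(uses_wildcards(policy))  # False
```

A check like `uses_wildcards` can run in a CI pipeline so that overly broad grants are caught before deployment.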

Security Features Offered by Different Serverless Platforms

Various serverless platforms offer built-in security features to assist developers in securing their applications. These features vary depending on the platform but generally include capabilities for access control, encryption, monitoring, and logging.

- AWS Lambda: AWS Lambda integrates with various AWS security services, including IAM, KMS (Key Management Service) for encryption, and CloudWatch for monitoring and logging. AWS also provides features like VPC (Virtual Private Cloud) integration for enhanced network security and Lambda layers for managing dependencies securely.

- Azure Functions: Azure Functions leverages Microsoft Entra ID (formerly Azure Active Directory) for identity and access management. It offers built-in encryption for data at rest and in transit, and integrates with Azure Monitor for logging and monitoring. Azure Functions also supports features like network security groups (NSGs) for controlling network traffic.

- Google Cloud Functions: Google Cloud Functions integrates with Google Cloud IAM for access control. It provides encryption options for data stored in Google Cloud Storage and Cloud SQL. Google Cloud also offers Cloud Logging and Cloud Monitoring for comprehensive logging and monitoring capabilities.

- Platform-Specific Security Features: Other serverless platforms, such as Cloudflare Workers, Knative, and OpenFaaS, also offer security features, which can include built-in authentication, authorization, and support for secure communication protocols. These features vary significantly between platforms.

Implementing Secure Communication Between Serverless Functions and Other Services

Secure communication between serverless functions and other services is essential for protecting sensitive data and maintaining the integrity of the application. This can be achieved using various methods, including secure protocols, encryption, and authentication mechanisms.

- HTTPS for API Calls: Utilizing HTTPS for all API calls ensures that data transmitted between functions and external services is encrypted in transit. This prevents eavesdropping and man-in-the-middle attacks. The use of HTTPS requires the use of SSL/TLS certificates, which can be managed by the serverless platform or obtained from a trusted certificate authority.

- API Gateway Authentication and Authorization: Employing an API gateway with authentication and authorization capabilities helps control access to functions. This allows for the enforcement of access policies and the validation of user identities. API gateways often support various authentication methods, such as API keys, OAuth, and OpenID Connect.

- Service-to-Service Authentication: For communication between internal services, service-to-service authentication is crucial. This can involve using mutual TLS (mTLS) or other mechanisms to verify the identity of each service before allowing communication.

- Encryption for Data Storage and Transfer: As mentioned previously, encrypting data at rest and in transit is critical. This includes encrypting data stored in databases, object storage, and other data stores. Encryption can be implemented using platform-specific features, such as the provider's key management service (KMS), or third-party encryption libraries.

- Example: Consider a scenario where a serverless function needs to access data from a database. The function should connect to the database server over a TLS-encrypted connection (or over HTTPS if the database exposes an HTTP API), and the database server should be configured to accept only authenticated connections. The function could authenticate using a service account with restricted permissions, and the database credentials or connection string should be stored in a secret management service rather than in code or plain configuration.
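The database scenario above can be sketched in Python using only the standard library. This is a minimal sketch under stated assumptions, not a definitive implementation: the setting names DB_HOST, DB_USER, and DB_PASSWORD are hypothetical, and it assumes the platform's secret management service injects them into the function's environment at deploy time. The resulting TLS context would then be handed to whichever database driver the function uses.

```python
import os
import ssl


def build_tls_context(client_cert=None, client_key=None):
    """Return a client-side TLS context that verifies the server certificate."""
    # The default context checks the certificate chain and the hostname.
    ctx = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if client_cert and client_key:
        # Presenting a client certificate is what enables mutual TLS (mTLS).
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx


def load_db_settings(env=os.environ):
    """Collect connection settings from the environment.

    DB_HOST, DB_USER and DB_PASSWORD are hypothetical names, assumed to be
    injected by a secret manager rather than hard-coded in the function.
    """
    required = ("DB_HOST", "DB_USER", "DB_PASSWORD")
    missing = [name for name in required if not env.get(name)]
    if missing:
        # Fail fast instead of connecting with partial or empty credentials.
        raise RuntimeError("missing required settings: " + ", ".join(missing))
    return {
        "host": env["DB_HOST"],
        "user": env["DB_USER"],
        "password": env["DB_PASSWORD"],
        "ssl_context": build_tls_context(),
    }
```

Failing fast on a missing setting keeps a misconfigured deployment from silently falling back to an unauthenticated or unencrypted connection. Passing a client certificate and key to build_tls_context covers the mTLS case described for service-to-service authentication.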

Outcome Summary

In conclusion, the serverless landscape offers a diverse array of alternatives to AWS Lambda, each with its own strengths and weaknesses. The options range from open-source platforms that offer extensive control over infrastructure to rival cloud providers' services with competitive pricing and features. By carefully evaluating factors such as cost, performance, scalability, and security, organizations can select the solution that best fits their specific needs.

The shift towards containerization and the utilization of serverless frameworks further enhance flexibility and streamline the development process. As the serverless paradigm continues to evolve, understanding these alternatives is crucial for maximizing efficiency, reducing costs, and building resilient, scalable applications.

Expert Answers

What are the primary benefits of using open-source serverless platforms compared to AWS Lambda?

Open-source platforms offer increased flexibility, control over infrastructure, and the ability to avoid vendor lock-in. They also allow for customization and the potential for cost savings, particularly for workloads with predictable resource usage.

How do container-based serverless solutions, such as using Docker with a serverless framework, differ from traditional Lambda deployments?

Containerization provides greater portability and consistency across different environments. It allows developers to package dependencies and configurations within containers, ensuring consistent execution and simplifying deployment pipelines. This approach also supports more complex application architectures.

What are the key considerations when comparing the pricing models of different FaaS platforms?

Key considerations include the cost per execution, memory allocation, execution duration, and the number of requests. Analyzing these factors, along with the specific workload characteristics, is crucial for optimizing costs. Some providers offer free tiers or discounts for sustained usage.
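The way these factors combine can be sketched as a small estimator. This is an illustrative model only: it assumes the common pattern of a per-request charge plus a compute charge measured in GB-seconds, and every rate in the usage note is a placeholder rather than any provider's actual price. Real billing may differ, for example by rounding durations up or charging for CPU separately.

```python
def estimate_monthly_cost(requests, avg_duration_ms, memory_mb,
                          price_per_million_requests, price_per_gb_second,
                          free_requests=0, free_gb_seconds=0.0):
    """Estimate a monthly FaaS bill from request count, duration and memory.

    All prices are caller-supplied; nothing here reflects a real price list.
    """
    # Compute usage: seconds of execution multiplied by allocated memory in GB.
    gb_seconds = requests * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    # Subtract any free tier, never going below zero.
    billable_requests = max(0, requests - free_requests)
    billable_gb_seconds = max(0.0, gb_seconds - free_gb_seconds)
    request_cost = billable_requests / 1_000_000 * price_per_million_requests
    compute_cost = billable_gb_seconds * price_per_gb_second
    return request_cost + compute_cost


# Placeholder workload and rates, chosen only to make the arithmetic easy to follow.
example = estimate_monthly_cost(
    requests=5_000_000, avg_duration_ms=200, memory_mb=512,
    price_per_million_requests=0.20, price_per_gb_second=0.00001,
)
print(f"estimated monthly cost: {example:.2f}")
```

With these placeholder values the workload consumes 500,000 GB-seconds, so the estimate is roughly 5.00 for compute plus 1.00 for requests. Running the same function with each candidate platform's published rates and free tier gives a like-for-like comparison for a given workload.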

How do serverless frameworks streamline the development and deployment process?

Serverless frameworks automate infrastructure provisioning, simplify code deployment, and provide tools for managing dependencies, events, and triggers. They abstract away much of the underlying complexity, allowing developers to focus on writing code.

What are the main security considerations when using serverless functions?

Security considerations include access control, data protection, and secure communication between functions and other services. It’s crucial to implement proper authentication, authorization, and encryption to protect sensitive data and prevent unauthorized access.