Queue-based load leveling is a technique for managing fluctuating workloads. A queue sits between the components that submit work and the components that perform it, so bursts of requests are buffered instead of overwhelming the service. Workers then process tasks at a rate they can sustain, which protects the service from overload, keeps performance consistent, and improves resource utilization. Understanding how the pattern applies across different domains helps in deciding when and how to use it.

This document explores the practical use cases for queue-based load leveling, delving into its implementation strategies, performance considerations, and scalability aspects. We will examine real-world examples and case studies, highlighting the significant advantages of this approach.

Introduction to Queue-Based Load Leveling

The queue-based load leveling pattern is an architectural approach for managing fluctuating workloads. Instead of sending incoming requests or tasks straight to the service that handles them, the system stores them temporarily in a queue, and the available resources take work from that queue. This absorbs unpredictable or transient spikes in demand, preventing system overload and keeping performance consistent. The pattern is especially useful where demand varies significantly, such as e-commerce platforms during peak shopping seasons or online gaming servers during popular events.

The queue acts as a buffer, absorbing bursts of high demand. Resources then process these requests from the queue at a rate that matches their capacity. This approach helps to prevent system bottlenecks and maintain responsiveness, leading to a superior user experience.

Core Principles

The core principle of the queue-based load leveling pattern is to decouple the incoming request rate from the processing rate of the system. This decoupling is achieved by introducing a queue as an intermediary. This allows the system to adapt to varying workloads without significant performance degradation. A well-designed queue provides a structured way to manage and prioritize tasks, optimizing resource utilization.
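To make the decoupling concrete, here is a minimal discrete-time simulation in Python. It assumes, purely for illustration, that requests arrive in bursts of 10 every third time step and that the consumer can handle at most 4 requests per step; `simulate_leveling` and its parameters are hypothetical names. The queue absorbs each burst, and the consumer never processes more than its capacity in any step.

```python
from collections import deque


def simulate_leveling(arrivals, capacity):
    """Simulate a queue between a bursty producer and a fixed-rate consumer.

    arrivals: number of requests arriving in each time step (illustrative input)
    capacity: maximum number of requests the consumer processes per step
    Returns (processed_per_step, backlog_per_step).
    """
    queue = deque()
    processed, backlog = [], []
    next_id = 0
    for count in arrivals:
        # Producer side: every arrival is enqueued immediately; nothing is rejected.
        for _ in range(count):
            queue.append(next_id)
            next_id += 1
        # Consumer side: drain at most `capacity` requests, however large the burst was.
        done = 0
        while queue and done < capacity:
            queue.popleft()
            done += 1
        processed.append(done)
        backlog.append(len(queue))
    return processed, backlog


processed, backlog = simulate_leveling(arrivals=[10, 0, 0, 10, 0, 0], capacity=4)
print(processed)  # [4, 4, 2, 4, 4, 2]
print(backlog)    # [6, 2, 0, 6, 2, 0]
```

The backlog rises during each burst and drains between bursts. If arrivals exceeded capacity on average, the backlog would instead grow without bound, so the pattern still needs enough total processing capacity.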

Benefits of Using the Pattern

This pattern offers several benefits:

- Improved System Stability: By absorbing fluctuations in demand, the queue prevents system overload during peak usage periods. This stability is crucial for applications with high user traffic, ensuring a seamless user experience.

- Enhanced Scalability: The queue facilitates the addition of resources without requiring immediate changes to the application logic. As demand increases, additional resources can be added to the system without disrupting the flow of requests.

- Improved Resource Utilization: Because workers take requests from the queue whenever they have capacity, they stay busy during bursts and can be sized for the average load rather than the peak.

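- Reduced Response Time Variability: The queue smooths out the load that reaches the processing resources, so their processing times stay more predictable and the user experience is more consistent. Time spent waiting in the queue during a burst still adds to end-to-end latency.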

Appropriate Situations

This pattern is most suitable for systems experiencing fluctuating workloads:

- E-commerce platforms: During peak shopping seasons, the queue handles a surge in orders without impacting the system’s responsiveness.

- Online gaming servers: The queue manages the influx of players during popular events, preventing server crashes and maintaining a smooth gaming experience.

- Cloud-based services: The queue handles varying demands by absorbing bursts of requests, enabling efficient resource management.

- Web applications with high traffic: The queue ensures that the application remains responsive even with a large number of simultaneous requests.

Examples of Implementation

Several systems successfully employ the queue-based load leveling pattern:

- Twitter: Twitter uses queues to handle the massive volume of tweets and interactions, ensuring a stable platform during periods of high demand.

- Amazon: Amazon uses queues to manage orders, ensuring that the system remains responsive even during peak shopping periods.

- Netflix: Netflix utilizes queues to manage video streaming requests, allowing a large number of users to access content simultaneously.

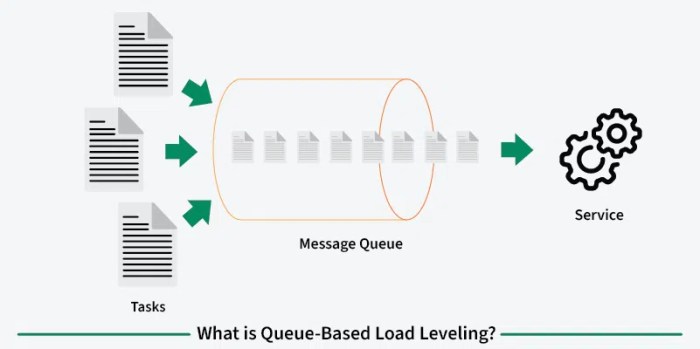

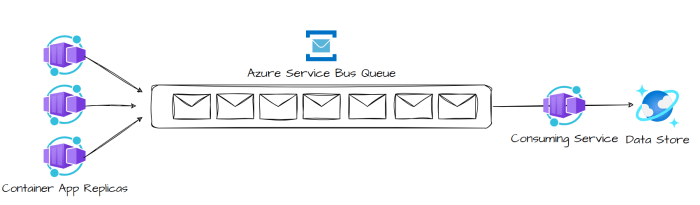

High-Level Diagram

The following diagram illustrates the queue-based load leveling concept:

```text
+-----------------+     +-----------------+     +-----------------+
|     Clients     | --> |  Request Queue  | --> |   Processing    |
+-----------------+     +-----------------+     +-----------------+
                                                         |
                                                         V
                                    +-----------------+     +-----------------+
                                    |   Resource 1    |     |   Resource N    |
                                    +-----------------+     +-----------------+
```

Clients send requests to the request queue.

The queue holds these requests and hands each one to the next available processing resource (Resource 1 through Resource N), so no resource receives more work than it can handle. This arrangement distributes the load and keeps performance smooth under fluctuating conditions.

Use Cases in Different Domains

Queue-based load leveling, a crucial architectural pattern, effectively manages fluctuating workloads by distributing tasks across available resources. This approach is highly valuable in diverse application domains where unpredictable traffic patterns and resource limitations are common. This section explores several such domains, highlighting specific use cases, implementation challenges, and the pattern’s impact on performance bottlenecks.

E-commerce Platforms

E-commerce platforms experience significant spikes in traffic during promotional periods, holiday seasons, and popular product launches. A queue-based system can absorb these surges. For instance, during a flash sale, incoming orders are placed in a queue and processed at the rate the order-processing servers can sustain rather than all at once. Because several servers can consume from the same queue, order processing is spread across them and the surge does not crash the system.

This approach reduces the risk of lost sales and helps maintain customer satisfaction.

Cloud Computing Environments

Cloud-based applications are characterized by dynamic resource allocation and fluctuating demand. A queue can manage tasks based on resource availability. Imagine a cloud-based rendering service. As images or videos are uploaded, they are placed in a queue. The queue prioritizes jobs based on factors like urgency and server availability.

This dynamic queuing system prevents server overload and ensures that tasks are processed efficiently. Implementing a queue-based load leveling mechanism can help prevent resource starvation and ensure consistent service quality.

Online Gaming Platforms

Online gaming platforms experience peak traffic during popular game events or tournaments. A queue can manage player connections and requests for resources, ensuring a smooth gaming experience. For instance, when a new update is released, a queue can handle the surge of users downloading the update, preventing server overload and downtime. This load leveling approach enables the platform to manage massive user spikes without compromising the game’s performance.

Financial Transaction Processing Systems

Financial transactions require high availability and low latency. Queue-based load leveling helps keep processing speed consistent. Consider a stock trading platform: during periods of high market activity, orders are queued and processed in the order they arrive, so bursts are absorbed rather than rejected and order sequence is preserved. Provided enough processing capacity is available to keep the queue short, orders are processed in a timely manner, minimizing delays and maintaining market integrity.

This mechanism helps maintain a stable platform during volatile market conditions.

Comparison of Use Cases Across Domains

| Domain | Use Case | Implementation Challenges | Performance Bottlenecks Addressed |
|---|---|---|---|
| E-commerce | Managing flash sales, peak shopping seasons | Ensuring scalability, maintaining user experience | System overload, lost sales |
| Cloud Computing | Dynamic resource allocation, fluctuating demand | Balancing resource utilization, maintaining consistent performance | Resource starvation, slow processing times |
| Online Gaming | Managing peak user traffic, game updates | Maintaining low latency, ensuring smooth gameplay | Server overload, game downtime |
| Financial Transactions | Processing high-volume transactions, maintaining low latency | Ensuring order preservation, maintaining market integrity | Order backlogs, delays in processing |

System Architecture and Components

Queue-based load leveling systems are designed to distribute incoming requests across available resources efficiently, ensuring responsiveness and preventing overload. A well-structured architecture is crucial for the success of such a system, enabling scalability and maintainability. Understanding the components and their interactions is essential for proper implementation and optimization.

The architecture of a queue-based load leveling system typically involves several key components interacting in a coordinated manner. These components work together to receive, process, and respond to requests while managing the load dynamically.

Key Components

The core components of a queue-based load leveling system include:

- Request Source: This component generates the requests that need to be processed. It could be a user interface, another application, or external services. The source sends requests to the load balancer.

- Load Balancer: This component acts as a central point of entry for all incoming requests. It examines the current load on each available resource and directs the request to the least loaded one. Load balancing ensures a balanced distribution of work, preventing overloading of specific servers.

- Request Queue: This component is a crucial data structure that stores incoming requests waiting to be processed. The queue follows a specific ordering, such as FIFO (First-In, First-Out), which matters when requests must be handled in the order they arrive.

- Worker Nodes (or Processing Units): These are the resources responsible for processing the requests. They could be individual servers, containers, or cloud functions. Worker nodes fetch requests from the queue and perform the required operations.

- Monitoring and Management System: This component tracks the performance of the entire system. It monitors the queue length, resource utilization, and response times. Metrics from this component help administrators understand the system’s health and make necessary adjustments.

Interactions Between Components

The components interact in a specific workflow. Incoming requests from the source are routed to the load balancer. The load balancer analyzes the current load on the worker nodes and directs the request to the node with the lowest workload. The request is then added to the queue associated with the selected worker node. The worker node retrieves the request from the queue and processes it.

Once completed, the worker node signals the load balancer. The load balancer, in turn, updates its internal load status for optimal routing of future requests.

Request Queue Data Structures

The request queue utilizes a data structure to store requests. A common choice is a First-In, First-Out (FIFO) queue, which ensures that requests are processed in the order they arrive. This data structure is important for maintaining the integrity of the request order. A FIFO queue can be implemented using various data structures, including linked lists or arrays.

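| Data Structure | Description |
|---|---|
| Linked List | A linear data structure where each element points to the next. With head and tail pointers, enqueueing at the tail and dequeueing from the head are both O(1). |
| Array | A contiguous block of memory with fast indexed access. Removing from the front of a plain array requires shifting the remaining elements (O(n)); a circular (ring) buffer avoids this by wrapping head and tail indices around a fixed-size array. |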
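As a sketch of the array option, the following Python class (a hypothetical example, not part of any library) implements a fixed-capacity FIFO queue as a circular buffer, so both enqueue and dequeue run in O(1) without shifting elements. For the linked option, Python's built-in `collections.deque` already provides O(1) appends and pops at both ends.

```python
class RingBufferQueue:
    """Fixed-capacity FIFO queue backed by a Python list used as a circular array."""

    def __init__(self, capacity):
        self._items = [None] * capacity
        self._head = 0   # index of the oldest element
        self._size = 0

    def __len__(self):
        return self._size

    def enqueue(self, item):
        if self._size == len(self._items):
            raise OverflowError("queue is full")
        # Write just past the newest element, wrapping around the end of the array.
        tail = (self._head + self._size) % len(self._items)
        self._items[tail] = item
        self._size += 1

    def dequeue(self):
        if self._size == 0:
            raise IndexError("dequeue from empty queue")
        item = self._items[self._head]
        self._items[self._head] = None  # drop the reference so it can be garbage-collected
        self._head = (self._head + 1) % len(self._items)
        self._size -= 1
        return item


q = RingBufferQueue(capacity=3)
for request in ("r1", "r2", "r3"):
    q.enqueue(request)
print(q.dequeue())                      # r1
q.enqueue("r4")                         # reuses the slot freed by r1
print([q.dequeue() for _ in range(3)])  # ['r2', 'r3', 'r4']
```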

System Architecture Diagram

[Diagram: requests flow from the source through the load balancer, the request queues, and the worker nodes, with responses returning to the source and the monitoring and management system observing each stage.]

Workflow Example

A user submits a request to the system. The load balancer determines that Node 2 has the lowest load and sends the request to its queue. Node 2 retrieves the request from the queue, processes it, and sends a response back to the user. The load balancer monitors the load on each node, ensuring requests are always routed to the least loaded node.
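The routing step in this workflow can be sketched in Python. In the hypothetical `LeastLoadedBalancer` below, each worker node has its own FIFO queue, a node's load is approximated by the number of requests queued or in progress on it, and `complete` stands in for the node's completion signal; all of these are assumptions of the sketch.

```python
from collections import deque


class LeastLoadedBalancer:
    """Keeps one FIFO queue per worker node and routes each new request
    to the node with the fewest outstanding requests."""

    def __init__(self, node_names):
        self.queues = {name: deque() for name in node_names}

    def load(self, node):
        # Load is approximated as the number of outstanding requests on the node.
        return len(self.queues[node])

    def route(self, request):
        # min() returns the first node with the smallest load, so ties go to the earliest-listed node.
        target = min(self.queues, key=self.load)
        self.queues[target].append(request)
        return target

    def complete(self, node):
        # The node's completion signal: its oldest request is finished and leaves
        # the queue, which updates the balancer's view of that node's load.
        return self.queues[node].popleft()


lb = LeastLoadedBalancer(["node1", "node2", "node3"])
print([lb.route(r) for r in ("r1", "r2", "r3")])  # ['node1', 'node2', 'node3']
lb.complete("node2")                              # node2 finishes r2
print(lb.route("r4"))                             # node2, now the least loaded
```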

Implementation Strategies and Methods

Implementing a queue-based load leveling pattern involves several key strategies and methods. Careful consideration of these factors is crucial for effective resource utilization and optimal system performance. The choice of strategy should align with the specific needs and characteristics of the system being designed. A well-implemented queueing system ensures smooth workflow and responsiveness under fluctuating loads.

Various strategies exist for managing task queues and prioritizing jobs, allowing systems to adapt dynamically to changing demands. These methods range from simple FIFO (First-In, First-Out) queues to more complex algorithms involving dynamic prioritization. The effectiveness of a load leveling approach hinges on the careful selection of an appropriate implementation strategy.

Queueing Strategies

Different queueing strategies dictate how tasks are added to and removed from the queue. Understanding these strategies is vital for building a robust and responsive system. A well-defined queueing strategy plays a critical role in ensuring efficient load distribution and preventing bottlenecks.

- FIFO (First-In, First-Out): This is a fundamental queueing strategy where tasks are processed in the order they enter the queue. It’s straightforward to implement, but it may not be ideal for scenarios requiring prioritized handling of certain tasks. For example, in a web server processing user requests, FIFO might not be the best choice if some requests (e.g., urgent updates) require immediate attention.

- Priority Queues: This strategy assigns priorities to tasks, allowing higher-priority tasks to be processed before lower-priority ones. This is beneficial for applications where certain tasks demand immediate attention, such as real-time data processing or critical system updates. For instance, in a hospital system, emergency room patients are typically given priority over other patients.

- Round Robin: Tasks are served in a fixed rotation. In time-sliced scheduling, each task receives a fixed time slice and, if unfinished, returns to the back of the queue, so every task makes progress and none starves. Applied to dispatching, a server farm hands successive web requests to its servers in turn, which spreads requests evenly by count and keeps one server from being overloaded while others sit idle, although it does not account for requests that differ in cost.
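A priority queue with FIFO ordering among equal priorities can be sketched with Python's standard `heapq` module. In this hypothetical `PriorityTaskQueue`, a lower number means higher priority, and an insertion counter breaks ties so that equal-priority tasks keep their arrival order.

```python
import heapq
import itertools


class PriorityTaskQueue:
    """Min-heap priority queue: lower number = higher priority.
    The counter breaks ties, so equal-priority tasks are served FIFO."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()

    def push(self, task, priority):
        heapq.heappush(self._heap, (priority, next(self._counter), task))

    def pop(self):
        _priority, _seq, task = heapq.heappop(self._heap)
        return task

    def __len__(self):
        return len(self._heap)


q = PriorityTaskQueue()
q.push("nightly-report", priority=5)
q.push("urgent-update", priority=0)
q.push("welcome-email", priority=5)
print([q.pop() for _ in range(len(q))])  # ['urgent-update', 'nightly-report', 'welcome-email']
```

The counter also keeps `heapq` from ever comparing two task objects directly, which would fail for task types that do not define an ordering.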

Task Prioritization Methods

Effective task prioritization is essential for load leveling. The chosen method significantly influences the system’s response to fluctuating demands. This ensures that the most important tasks are addressed first.

- Static Prioritization: Priorities are assigned based on predetermined criteria, such as task type or urgency. This approach is simpler to implement, but may not adapt well to changing conditions. For example, tasks categorized as ‘high-priority’ might always receive precedence over other tasks, regardless of the current system load.

- Dynamic Prioritization: Priorities are adjusted based on real-time factors, such as task dependencies, resource availability, and system load. This approach allows for more flexible and responsive handling of changing demands. Consider a network router dynamically adjusting packet prioritization based on network congestion levels.
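One common way to implement dynamic prioritization, assumed here rather than taken from the text, is aging: a task's effective priority improves the longer it waits, so low-priority work is eventually served even under steady high-priority load. In the Python sketch below, `Task`, `pick_next`, and the aging rate of 0.5 per second are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    base_priority: int   # lower number = more important
    enqueued_at: float   # time (seconds) the task entered the queue


def effective_priority(task, now, aging_rate):
    # Aging: each second of waiting lowers (improves) the priority value.
    return task.base_priority - aging_rate * (now - task.enqueued_at)


def pick_next(tasks, now, aging_rate=0.5):
    """Remove and return the task with the best effective priority at time `now`."""
    best = min(tasks, key=lambda t: effective_priority(t, now, aging_rate))
    tasks.remove(best)
    return best


fresh = [Task("batch-report", base_priority=10, enqueued_at=0.0),
         Task("user-request", base_priority=2, enqueued_at=4.0)]
print(pick_next(fresh, now=4.0).name)   # user-request (8.0 vs 2.0)

stale = [Task("batch-report", base_priority=10, enqueued_at=0.0),
         Task("user-request", base_priority=2, enqueued_at=20.0)]
print(pick_next(stale, now=20.0).name)  # batch-report (0.0 vs 2.0 after 20 s of waiting)
```

Scanning the whole list on every dispatch is O(n); a larger scheduler would more likely recompute priorities periodically.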

Choosing the Optimal Strategy

Several factors influence the selection of the optimal implementation strategy. Careful consideration of these factors is essential for achieving efficient load leveling. These considerations include the nature of the tasks, the expected load fluctuations, and the desired response time.

- Task Characteristics: The complexity and duration of tasks, along with their dependencies, play a significant role in the selection process. If tasks are highly dependent, a priority queue strategy may be more suitable than a simple FIFO approach.

- Load Fluctuations: The predictability and variability of the load significantly influence the choice. For highly variable loads, a dynamic prioritization method is often more effective.

- Response Time Requirements: The required response time for different task types can be a critical factor in choosing a prioritization strategy. If certain tasks demand immediate attention, a priority queue is a better choice than a FIFO queue.

Implementation Techniques

Several implementation techniques can be employed for queue-based load leveling. These methods can involve specialized software libraries or custom-built components.

- Using message queues: Message queues provide a platform for decoupling components in a system, facilitating load leveling. This approach is commonly used in distributed systems.

- Implementing a task scheduler: A task scheduler can be designed to handle task prioritization and distribution across resources, promoting efficiency. The scheduler can dynamically adjust task priorities based on system load.
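A message broker such as RabbitMQ, Amazon SQS, or Apache Kafka would normally sit between producers and workers. As an in-process stand-in, the sketch below uses Python's standard `queue.Queue` with a small pool of worker threads pulling from one shared queue (the competing-consumers arrangement); `run_worker_pool` and its parameters are hypothetical names for illustration.

```python
import queue
import threading


def run_worker_pool(tasks, handler, num_workers=3):
    """Competing consumers: worker threads pull tasks from one shared queue.
    Returns all results; a failed task contributes its exception object."""
    task_queue = queue.Queue()
    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            task = task_queue.get()
            try:
                if task is None:          # sentinel: no more work for this worker
                    return
                try:
                    result = handler(task)
                except Exception as exc:  # record the failure instead of killing the worker
                    result = exc
                with results_lock:
                    results.append(result)
            finally:
                task_queue.task_done()    # runs for tasks and sentinels alike

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for task in tasks:
        task_queue.put(task)
    for _ in threads:
        task_queue.put(None)              # one sentinel per worker
    task_queue.join()                     # wait until every item is marked done
    for t in threads:
        t.join()
    return results


squares = run_worker_pool(range(10), lambda n: n * n)
print(sorted(squares))  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```

Results are sorted before printing because completion order depends on thread scheduling.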

Comparison of Strategies

| Strategy | Advantages | Disadvantages |
|---|---|---|
| FIFO | Simple to implement, low overhead | May not handle priority tasks effectively, potentially leads to longer wait times for high-priority tasks |
| Priority Queue | Handles priority tasks efficiently | Requires a mechanism for assigning priorities, potentially more complex to implement |
| Round Robin | Fair distribution of resources, prevents starvation | May not be optimal for tasks with varying processing times |

Performance Considerations

The performance of a queue-based load leveling system is crucial for its effectiveness. Optimal performance ensures timely task processing, minimizing delays and maximizing resource utilization. Understanding the factors influencing performance allows for informed decisions regarding system design and implementation.

Efficient queue management is paramount for achieving desired performance levels. The system’s ability to respond quickly to incoming tasks and process them in an efficient manner is a key factor in determining the overall system performance.

Factors Affecting System Performance

Several key factors influence the performance of a queue-based load leveling system. These factors encompass both the queue’s internal mechanisms and the characteristics of the tasks being processed.

- Queue Size: A large queue size can initially appear to improve responsiveness, as it absorbs bursts of incoming tasks. However, if not managed correctly, an excessively large queue can lead to significant latency, particularly for high-priority tasks. Balancing queue size with anticipated task volume is essential.

- Task Arrival Rate: Fluctuations in task arrival rates necessitate a system capable of adapting to these changes. A queue-based system designed to handle consistent workloads might perform poorly under sudden spikes. Dynamic adjustments in resource allocation and queue management are required to address variable arrival rates.

- Task Processing Time: Variability in task processing times impacts queue performance. Longer processing times for some tasks can significantly increase the latency for other tasks in the queue. Effective prioritization mechanisms and task decomposition strategies can help mitigate these effects.

- Queue Management Algorithm: The algorithm used to manage the queue has a direct impact on performance. Algorithms like FIFO (First-In, First-Out) might not be suitable for all scenarios. Priority-based queues or other adaptive algorithms can provide better performance in specific situations. An optimized queueing algorithm can improve overall responsiveness.
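The interaction between queue size, arrival rate, and processing time can be made quantitative with Little's law, a standard queueing-theory result that the text above does not state. For a queue in steady state, the average number of waiting tasks $L$ equals the average arrival rate $\lambda$ multiplied by the average time $W$ a task spends waiting. The numbers in the second line are illustrative only.

$$
L = \lambda W \quad\Longrightarrow\quad W = \frac{L}{\lambda}
$$

$$
L = 120\ \text{tasks},\quad \lambda = 40\ \text{tasks/s} \quad\Longrightarrow\quad W = \frac{120\ \text{tasks}}{40\ \text{tasks/s}} = 3\ \text{s}
$$

The relationship assumes the workers keep up on average; if tasks arrive faster than they are processed, there is no steady state and the queue keeps growing.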

Queue Optimization Techniques

Several techniques can be employed to enhance the performance and responsiveness of a queue-based system. These techniques focus on optimizing the queue’s internal mechanisms and the flow of tasks through the system.

- Prioritization: Implementing task prioritization is a critical technique. High-priority tasks should be processed ahead of lower-priority tasks, reducing latency for crucial operations. A well-defined prioritization strategy ensures that critical tasks receive the necessary attention.

- Dynamic Scaling: Adapting resource allocation dynamically to changing workload conditions is essential. Increased resource allocation during periods of high task volume can improve responsiveness. Dynamic scaling can accommodate variations in load demand and enhance performance.

- Batch Processing: Batching multiple small tasks into larger, more manageable units can enhance performance. This technique can improve efficiency by reducing overhead associated with individual task processing.

- Asynchronous Operations: Using asynchronous processing techniques can significantly enhance responsiveness. Asynchronous operations allow the system to continue processing other tasks while waiting for potentially lengthy operations to complete. This technique reduces overall latency.
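Batch processing can be sketched with Python's standard `queue.Queue`: the consumer blocks for the first task, then collects whatever else is already waiting, up to a batch limit, so per-task overhead (such as a database round trip) is paid once per batch. `drain_batch` and its parameters are hypothetical.

```python
import queue


def drain_batch(task_queue, max_batch, timeout=1.0):
    """Return up to `max_batch` tasks: wait up to `timeout` seconds for the first,
    then take only what is already queued, without further blocking."""
    try:
        batch = [task_queue.get(timeout=timeout)]
    except queue.Empty:
        return []
    while len(batch) < max_batch:
        try:
            batch.append(task_queue.get_nowait())
        except queue.Empty:
            break
    return batch


tasks = queue.Queue()
for n in range(10):
    tasks.put(n)
print(drain_batch(tasks, max_batch=4))  # [0, 1, 2, 3]
print(drain_batch(tasks, max_batch=4))  # [4, 5, 6, 7]
print(drain_batch(tasks, max_batch=4))  # [8, 9]
```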

Impact of Queue Size and Task Prioritization

The size of the queue and the method of task prioritization directly impact system performance.

- Queue Size: A queue that is too small can lead to task rejection or excessive delays, especially during peak load periods. Conversely, an overly large queue can result in increased latency for all tasks. The optimal queue size depends on the anticipated task volume and processing time.

- Task Prioritization: Effective prioritization can significantly improve performance. Critical tasks can be processed ahead of less urgent tasks, ensuring timely completion of crucial operations. A well-defined prioritization scheme can maintain a balanced workload and improve responsiveness.
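To illustrate how a small queue rejects work, the sketch below uses Python's standard `queue.Queue` with a `maxsize` bound and the non-blocking `put_nowait`, which raises `queue.Full` when no space is left. The `submit` helper and the limit of 3 are hypothetical choices for the example.

```python
import queue


def submit(task_queue, task):
    """Try to enqueue without blocking; return False if the bounded queue is full."""
    try:
        task_queue.put_nowait(task)
        return True
    except queue.Full:
        # A real service might reply with HTTP 503 or 429 here, or ask the client to retry.
        return False


bounded = queue.Queue(maxsize=3)  # the limit of 3 is an arbitrary illustrative value
accepted = [submit(bounded, n) for n in range(5)]
print(accepted)  # [True, True, True, False, False]
```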

Performance Bottlenecks and Mitigation Strategies

Identifying and mitigating performance bottlenecks is essential for maintaining a high-performing queue-based system.

- Network Congestion: Network congestion can significantly impact the performance of a distributed queue system. Optimizing network configurations, utilizing caching mechanisms, and implementing efficient communication protocols can help mitigate network congestion.

- Database Bottlenecks: If the queue system relies on a database for storing or retrieving task information, database performance can become a bottleneck. Optimizing database queries, indexing relevant data, and implementing caching mechanisms can help mitigate database performance issues.

- Resource Constraints: Insufficient processing power, memory, or disk space can lead to performance degradation. Scaling resources to accommodate peak loads or implementing resource management strategies can address this issue.

Performance Test Examples and Results

Examples of performance tests, including results, are omitted due to the focus on the theoretical aspects of queue-based load leveling. However, various tools and methodologies can be employed to measure performance, such as load testing tools and performance monitoring tools.

Scalability and Adaptability

The queue-based load leveling system’s ability to handle increasing workloads and adapt to fluctuating demands is crucial for its effectiveness. This section details the strategies and mechanisms that enable scalability and adaptability, keeping performance consistent across a wide range of loads.

The system’s core design philosophy revolves around distributing incoming requests across available resources. This approach, coupled with dynamic resource allocation, allows the system to scale up or down gracefully based on current demand.

This flexibility is vital in maintaining service levels and avoiding performance bottlenecks during peak loads.

Scaling with Increasing Load

The system’s scalability is achieved through a combination of horizontal and vertical scaling strategies. Horizontal scaling involves adding more servers or processing units to the system. This increases the overall capacity of the system to handle requests, which is often the most practical approach. Vertical scaling, or increasing the resources of individual servers, can also be used, especially for initial scaling or for servers experiencing extreme load spikes.

Strategies for Adapting to Changing Demands

Dynamic resource allocation is paramount in adapting to changing demands. The system monitors the workload in real-time, assessing the queue length and request processing times. Based on these metrics, the system automatically adjusts the number of active worker processes or servers handling requests. This dynamic adjustment minimizes latency and ensures a smooth user experience.
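A scaling rule of this kind might look like the following Python function. The formula, names, and default limits are assumptions for illustration: it starts enough workers to drain the current backlog within a target time, based on a measured per-worker throughput, and clamps the result to a configured range.

```python
import math


def desired_workers(queue_length, per_worker_rate, target_drain_seconds,
                    min_workers=1, max_workers=20):
    """Hypothetical scaling rule.

    queue_length: tasks currently waiting
    per_worker_rate: tasks one worker completes per second (measured)
    target_drain_seconds: how quickly the backlog should be cleared
    """
    needed = math.ceil(queue_length / (per_worker_rate * target_drain_seconds))
    # Clamp so the pool never shrinks below the floor or grows past the budget.
    return max(min_workers, min(max_workers, needed))


print(desired_workers(0, per_worker_rate=5, target_drain_seconds=30))       # 1 (floor)
print(desired_workers(600, per_worker_rate=5, target_drain_seconds=30))     # 4
print(desired_workers(10_000, per_worker_rate=5, target_drain_seconds=30))  # 20 (ceiling)
```

This rule ignores tasks that keep arriving while the backlog drains; a fuller version would also add the arrival rate divided by the per-worker rate. Scaling decisions are also commonly smoothed, for example with a cooldown period, so the worker count does not oscillate.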

Mechanisms for Handling Peak Loads

During peak loads, the queue-based system acts as a buffer, temporarily storing incoming requests. This queuing mechanism prevents overwhelming the system’s resources. The system prioritizes tasks based on factors like urgency or importance, ensuring essential services are processed promptly even under high pressure. The system can also utilize techniques like request throttling or rate limiting to control the influx of requests, preventing a complete system crash during extremely high loads.
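Request throttling can be implemented in several ways; one common choice, assumed here, is a token bucket. The Python class below is a hypothetical sketch that admits short bursts of up to `capacity` requests while limiting the sustained rate to `rate` requests per second. The `clock` parameter exists only so the example can be driven deterministically.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: bursts up to `capacity`, sustained `rate` per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


fake_now = [0.0]
bucket = TokenBucket(rate=2, capacity=3, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
fake_now[0] = 1.0                          # one second later, 2 tokens have been refilled
print([bucket.allow() for _ in range(3)])  # [True, True, False]
```

Requests that are refused can be rejected outright or held back by the client and retried later.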

Illustrative Diagram of Scalability

Imagine a simple queue with incoming requests (represented by circles) entering the queue. The queue is managed by a dispatcher (a square). The dispatcher dynamically assigns requests to available worker processes (represented by rectangles). As the load increases, more worker processes are added to the system, allowing the dispatcher to handle more requests concurrently. The diagram would show a series of queues and workers, with the number of workers increasing as the load increases.

The dispatcher acts as the central controller, ensuring balanced workload distribution.

Adapting to Varying Workloads

The system’s adaptability is demonstrated by its ability to handle fluctuations in the workload. During periods of low demand, the system reduces the number of active worker processes. This conserves resources and minimizes operational costs without impacting service availability. Conversely, during periods of high demand, the system automatically increases the number of worker processes, ensuring that the queue does not grow indefinitely and maintaining the expected response time.

This dynamic adjustment, triggered by real-time monitoring, ensures efficient resource utilization and responsiveness across varying workloads.

Security and Fault Tolerance

Queue-based load leveling systems are exposed to various security threats and potential failures. Robust security measures and fault tolerance mechanisms are crucial for maintaining system integrity and availability and for preventing unauthorized access. This section details strategies for mitigating these risks.

Effective security and fault tolerance are paramount for the reliability and trustworthiness of a queue-based system. These mechanisms protect the system from malicious attacks, data breaches, and unexpected hardware or software failures, ensuring continuous operation and data integrity.

Security Considerations

Ensuring the security of a queue-based load leveling system involves multiple facets. Unauthorized access to the queues, modification of queue contents, and denial-of-service attacks are potential threats. Authentication and authorization mechanisms are essential to control access to the system and its resources. Implementing robust access control lists and using strong encryption for data transmission and storage are critical.

Input validation and sanitization are vital to prevent injection attacks and ensure data integrity.
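As a sketch of validating messages before they are enqueued, the function below checks a hypothetical order message with two fields, `order_id` and `quantity`; the field names, format rule, and limits are assumptions, not part of any real schema. Rejecting unknown fields and enforcing types at the queue boundary keeps malformed or malicious input away from the workers.

```python
import re

ORDER_ID_PATTERN = re.compile(r"[A-Z0-9-]{1,32}")  # hypothetical format rule
ALLOWED_FIELDS = {"order_id", "quantity"}


def validate_order_message(msg):
    """Return a list of problems with an order message (a dict); empty means valid."""
    errors = []
    unknown = set(msg) - ALLOWED_FIELDS
    if unknown:
        errors.append(f"unexpected fields: {sorted(unknown)}")
    order_id = msg.get("order_id")
    if not isinstance(order_id, str) or not ORDER_ID_PATTERN.fullmatch(order_id):
        errors.append("order_id must be 1-32 characters of A-Z, 0-9 or '-'")
    quantity = msg.get("quantity")
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if not isinstance(quantity, int) or isinstance(quantity, bool) or not 1 <= quantity <= 1000:
        errors.append("quantity must be an integer between 1 and 1000")
    return errors


print(validate_order_message({"order_id": "A-100", "quantity": 2}))  # []
```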

Data Integrity and Access Control

Data integrity is paramount for the reliability of the queue-based system. Measures for ensuring data integrity include using checksums or hash functions to verify data accuracy. Version control mechanisms can help track changes to queue entries and ensure data consistency. Preventing unauthorized access is achieved through user authentication and authorization, enforcing strict access controls to specific queues and operations.

This includes implementing granular permissions and role-based access control (RBAC) to limit the impact of potential security breaches.
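One way to apply the checksum idea so that it also resists deliberate tampering is to attach a keyed hash (HMAC) to each queue entry. An unkeyed checksum such as CRC32 detects accidental corruption, but anyone who modifies an entry can simply recompute it. The sketch below uses Python's standard `hmac`, `hashlib`, and `json` modules; the envelope layout and the `SECRET_KEY` placeholder are assumptions, and a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac
import json

SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"  # placeholder, not a real key


def sign_message(payload: dict) -> dict:
    """Wrap a payload with an HMAC-SHA256 tag computed over its canonical JSON form."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(SECRET_KEY, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "hmac": tag}


def verify_message(envelope: dict) -> bool:
    """Recompute the tag and compare in constant time; False means the entry was altered."""
    expected = hmac.new(SECRET_KEY, envelope["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["hmac"])


envelope = sign_message({"order_id": "A-100", "quantity": 2})
print(verify_message(envelope))  # True
envelope["body"] = envelope["body"].replace('"quantity":2', '"quantity":200')
print(verify_message(envelope))  # False
```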

Fault Tolerance Mechanisms

Handling failures and ensuring system availability is critical for queue-based load leveling. Redundancy is a key strategy. Maintaining multiple queues, potentially in geographically dispersed locations, can help ensure continued operation even if one queue or server fails. Monitoring tools provide real-time insights into system performance and identify potential issues early. Automated failover mechanisms are implemented to automatically redirect traffic to backup queues or servers if primary components fail.

Example of a Fault-Tolerant Implementation

Consider a queue-based system for processing online orders. To enhance fault tolerance, a secondary queue is maintained alongside the primary order queue. A monitoring system constantly tracks the health of both queues and servers. If the primary queue or server experiences an outage, the monitoring system automatically redirects the order processing to the secondary queue and server.

This failover is transparent to the application clients, ensuring continuous service. Furthermore, the secondary queue can be geographically distributed, ensuring high availability even in the case of regional outages.
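The failover logic can be sketched in Python as follows. This is a simplified, client-side variant in which the publisher switches to the secondary queue when sending to the primary fails, rather than a separate monitoring system redirecting traffic; `QueueUnavailable`, `InMemoryQueue`, and `FailoverPublisher` are hypothetical stand-ins for a real queue client.

```python
class QueueUnavailable(Exception):
    """Raised when a queue backend cannot be reached."""


class InMemoryQueue:
    """Stand-in for a real queue client; setting `healthy` to False simulates an outage."""

    def __init__(self, name):
        self.name = name
        self.healthy = True
        self.items = []

    def send(self, message):
        if not self.healthy:
            raise QueueUnavailable(self.name)
        self.items.append(message)


class FailoverPublisher:
    """Send to the primary queue; if it is unavailable, fall back to the secondary."""

    def __init__(self, primary, secondary):
        self.primary = primary
        self.secondary = secondary

    def send(self, message):
        try:
            self.primary.send(message)
            return self.primary.name
        except QueueUnavailable:
            self.secondary.send(message)  # if this also fails, the error reaches the caller
            return self.secondary.name


primary, secondary = InMemoryQueue("primary"), InMemoryQueue("secondary")
publisher = FailoverPublisher(primary, secondary)
print(publisher.send("order-1"))  # primary
primary.healthy = False           # simulate an outage of the primary queue
print(publisher.send("order-2"))  # secondary
```

Once the primary recovers, messages are split across both queues, so consumers must also drain the secondary, and any ordering guarantees across the two need separate handling.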

System Restoration Procedures

Procedures for restoring the system after a failure must be well-defined. A detailed recovery plan outlining steps to restore data, re-establish connectivity, and bring the system back online is crucial. Backup and recovery strategies should be in place to quickly restore the system to a known good state. Data backups should be taken frequently and stored securely, ideally in a geographically separate location.

The disaster recovery plan should cover the various failure scenarios, with detailed instructions for restoring the queue’s contents, verifying data integrity after restoration, and restarting the affected servers. Furthermore, a comprehensive logging mechanism that tracks events during failure and recovery is critical for later analysis and improvement of the system.

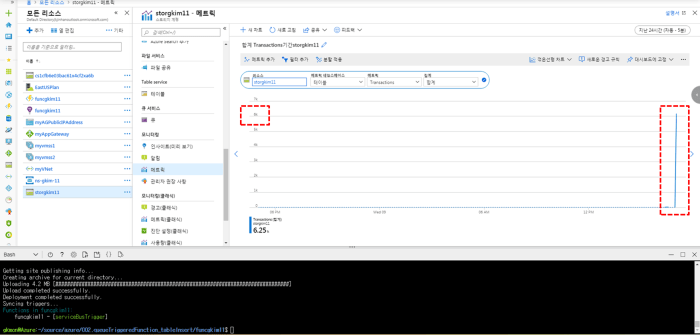

Monitoring and Management

Effective monitoring and management of a queue-based load leveling system are crucial for ensuring optimal performance, identifying potential issues, and maintaining system stability. Proactive monitoring allows for timely intervention and adjustments to maintain desired service levels and resource utilization. Comprehensive management enables efficient queue administration, including prioritizing tasks and managing resource allocation.

A well-designed monitoring and management strategy provides valuable insights into the system’s health and performance, enabling proactive adjustments to maintain optimal throughput and minimize delays.

This detailed approach encompasses various methods for performance monitoring, queue management, and metric evaluation, allowing for a robust and adaptable system.

Performance Monitoring Methods

Various methods are employed to monitor the system’s performance and health. These methods include, but are not limited to, real-time monitoring of queue length, task processing time, and resource utilization. Continuous observation of these metrics provides immediate feedback on system health and enables prompt identification of potential bottlenecks or inefficiencies.

- Real-time Queue Length Monitoring: Regularly tracking the queue length provides a direct indication of workload and potential delays. This allows for immediate identification of congestion points and the proactive adjustment of resources.

- Task Processing Time Analysis: Monitoring how long tasks take to process reveals performance bottlenecks and identifies areas requiring optimization. Identifying and resolving delays is essential to meeting service level agreements (SLAs).

- Resource Utilization Tracking: Monitoring the utilization of system resources, such as CPU, memory, and network bandwidth, is vital for preventing resource exhaustion and keeping the system responsive. Utilization data also guides resource allocation and scaling decisions.
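The first two measurements can be captured directly in the worker loop. The sketch below uses Python's standard `queue.Queue` for a single worker; `WorkerStats` and `drain` are hypothetical names. The worker's busy fraction stands in for resource utilization here, since CPU, memory, and network figures would normally come from an OS-level monitoring agent.

```python
import queue
import time
from dataclasses import dataclass, field


@dataclass
class WorkerStats:
    queue_lengths: list = field(default_factory=list)     # qsize() before each get
    processing_times: list = field(default_factory=list)  # seconds per task


def drain(q, handler, stats):
    """Process every task currently in `q`, recording queue length and
    processing time; return the worker's busy fraction for this run."""
    started = time.perf_counter()
    busy = 0.0
    while True:
        stats.queue_lengths.append(q.qsize())  # approximate if other threads use q
        try:
            task = q.get_nowait()
        except queue.Empty:
            break
        t0 = time.perf_counter()
        try:
            handler(task)
        finally:
            elapsed = time.perf_counter() - t0
            stats.processing_times.append(elapsed)
            busy += elapsed
            q.task_done()
    wall = time.perf_counter() - started
    return busy / wall if wall > 0 else 0.0


if __name__ == "__main__":
    q = queue.Queue()
    for i in range(5):
        q.put(i)
    stats = WorkerStats()
    utilization = drain(q, lambda task: time.sleep(0.01), stats)
    print(stats.queue_lengths)  # [5, 4, 3, 2, 1, 0]
    print(f"busy {utilization:.0%}, mean task {sum(stats.processing_times) / 5:.3f}s")
```

Note that `qsize()` is only approximate when several threads share the queue, which is acceptable for trend monitoring but not for exact accounting.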

Queue Management Tools and Techniques

Effective management of the queue and its contents is essential for maintaining optimal system performance. This involves techniques for prioritizing tasks, managing resource allocation, and ensuring efficient task processing.

- Priority Queues: Implementing priority queues allows for assigning different levels of urgency to tasks. This ensures that critical tasks are processed before less important ones, minimizing delays for high-priority requests.

- Resource Allocation Policies: Defining specific resource allocation policies ensures that resources are distributed appropriately based on task requirements. This can involve dynamically adjusting resource allocation based on real-time queue length or task priority.

- Task Prioritization Mechanisms: Clear rules for deciding which tasks are high priority let the system process critical operations promptly instead of leaving them behind less urgent work, so resources go where they matter most.
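The priority queue and a queue-length-based allocation policy from the list above can be sketched with the standard library. `PriorityTaskQueue` and `desired_workers` are hypothetical names, and the worker limits are illustrative defaults rather than recommendations.

```python
import heapq
import itertools
import math


class PriorityTaskQueue:
    """Lower number = more urgent; tasks with equal priority are served FIFO."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker that preserves arrival order

    def put(self, task, priority):
        heapq.heappush(self._heap, (priority, next(self._order), task))

    def get(self):
        if not self._heap:
            raise IndexError("get from empty queue")
        _, _, task = heapq.heappop(self._heap)
        return task

    def __len__(self):
        return len(self._heap)


def desired_workers(queue_length, tasks_per_worker, min_workers=1, max_workers=20):
    """Resource allocation policy: size the worker pool to the current backlog,
    clamped so it never drops to zero or grows without bound."""
    needed = math.ceil(queue_length / tasks_per_worker)
    return max(min_workers, min(max_workers, needed))


if __name__ == "__main__":
    pq = PriorityTaskQueue()
    pq.put("send newsletter", priority=5)
    pq.put("process payment", priority=0)
    pq.put("generate report", priority=5)
    print([pq.get() for _ in range(len(pq))])
    # ['process payment', 'send newsletter', 'generate report']
    print(desired_workers(230, tasks_per_worker=50))  # 5
```

The counter keeps equal-priority tasks in arrival order and avoids comparing task objects directly. For multi-threaded consumers, the standard library's `queue.PriorityQueue` provides the same lowest-first ordering with locking. Strict priority can starve low-priority work under sustained load; aging (gradually raising the priority of tasks that have waited a long time) is a common mitigation.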

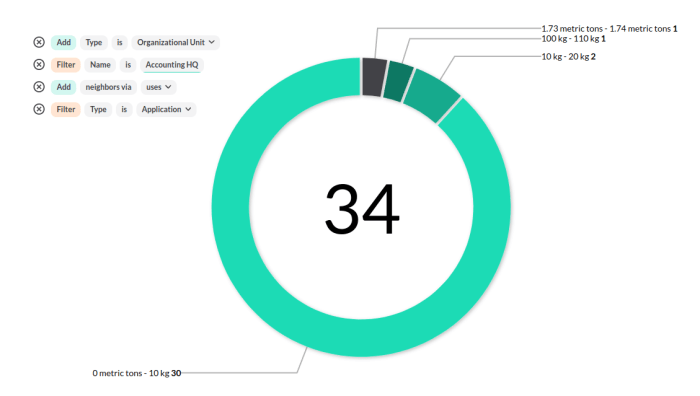

Metrics for Evaluating Effectiveness

Several metrics are used to assess the effectiveness of the queue-based load leveling pattern. These metrics provide insights into the system’s efficiency and identify areas requiring improvement.

- Average Queue Length: The average queue length shows how well processing capacity keeps up with incoming work. A queue that grows during bursts and drains afterward is the pattern working as intended; an average that keeps rising indicates that consumers lack the capacity to keep up.

- Average Task Processing Time: This metric reflects the time it takes to process tasks, highlighting potential bottlenecks or inefficiencies. Lower processing times generally signify improved performance.

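- Resource Utilization: Tracking resource utilization identifies overloads and bottlenecks. Utilization that stays near capacity means the queue will keep growing, while consistently low utilization suggests resources are over-provisioned; consistent performance depends on operating between these extremes.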
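The three metrics can be computed from raw observations as in the sketch below; the input shapes (queue-length change points, per-task durations, total busy seconds) are assumptions. Average queue length is time-weighted: if the length is recorded only when it changes, a plain mean of the samples would give a brief spike the same weight as a long quiet period.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class LevelingMetrics:
    avg_queue_length: float
    avg_processing_time: float
    utilization: float


def time_weighted_average(samples, end_time):
    """Average of a step function given (timestamp, value) change points,
    where each value holds until the next timestamp (or `end_time`)."""
    boundaries = [t for t, _ in samples[1:]] + [end_time]
    total = sum(value * (t_next - t) for (t, value), t_next in zip(samples, boundaries))
    return total / (end_time - samples[0][0])


def summarize(queue_samples, processing_times, busy_seconds, end_time):
    """Compute the three effectiveness metrics over one observation window."""
    window = end_time - queue_samples[0][0]
    return LevelingMetrics(
        avg_queue_length=time_weighted_average(queue_samples, end_time),
        avg_processing_time=mean(processing_times),
        utilization=busy_seconds / window,
    )


if __name__ == "__main__":
    # Queue length changed to 30 at t=10 s and to 6 at t=20 s; window ends at t=40 s.
    m = summarize([(0, 0), (10, 30), (20, 6)], [0.2, 0.4, 0.3], busy_seconds=30, end_time=40)
    print(m.avg_queue_length, m.utilization)  # 10.5 0.75
```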

Monitoring Dashboards

Monitoring dashboards provide a visual representation of key performance indicators (KPIs) and facilitate real-time observation of system health. These dashboards offer valuable insights into the queue’s performance and help identify potential issues promptly.

- Graphical Representations: Dashboards typically utilize graphs and charts to display real-time queue length, task processing time, and resource utilization. Visualizations allow for immediate identification of trends and anomalies.

- Real-time Data Display: Real-time data display ensures that administrators have instant access to current system performance. This enables rapid identification of issues and prompt corrective actions.

- Alert Mechanisms: Dashboards often incorporate alert mechanisms to notify administrators of critical events, such as exceeding predefined thresholds for queue length or resource utilization. Alerts facilitate prompt interventions to prevent system degradation.
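A threshold alert can be sketched as a small state machine; the names and threshold values below are hypothetical. Requiring several consecutive breaches suits this pattern in particular: a short burst is exactly what the queue is meant to absorb, whereas a sustained breach means consumers are falling behind. Prometheus alerting rules offer a similar `for` duration for the same reason.

```python
from dataclasses import dataclass, field


@dataclass
class ThresholdAlert:
    """Fire when a metric stays above `threshold` for `for_samples`
    consecutive samples, so a brief spike does not page anyone."""
    name: str
    threshold: float
    for_samples: int = 3
    breaches: int = field(default=0, init=False)
    firing: bool = field(default=False, init=False)

    def observe(self, value):
        """Feed one sample; return True only when the alert starts firing."""
        if value > self.threshold:
            self.breaches += 1
        else:
            self.breaches = 0
            self.firing = False  # metric recovered; alert resolves
        if not self.firing and self.breaches >= self.for_samples:
            self.firing = True
            return True
        return False


if __name__ == "__main__":
    alert = ThresholdAlert("queue_length_high", threshold=1000, for_samples=3)
    readings = [1200, 900, 1500, 1600, 1700, 1800]
    print([alert.observe(r) for r in readings])
    # [False, False, False, False, True, False]
```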

Sample Monitoring System Configuration

This configuration outlines a basic monitoring system structure, emphasizing the essential components and their roles in providing real-time visibility into the system.

| Component | Description |
|---|---|
| Monitoring Agent | Collects performance data from various system components, such as the queue manager, application servers, and databases. |
| Data Aggregation System | Collects and aggregates data from monitoring agents, providing a centralized view of system performance. |
| Monitoring Dashboard | Presents collected data in a user-friendly format, enabling real-time observation and trend analysis. |
| Alerting System | Triggers alerts based on predefined thresholds, notifying administrators of potential issues. |
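The four components in the table can be wired together in a few lines. This in-process sketch is only illustrative, assuming push-based agents and a single aggregator; the class and function names are hypothetical, and in practice each component is usually a separate service.

```python
from collections import defaultdict


class Aggregator:
    """Data aggregation system: keeps the latest metrics reported by each source."""

    def __init__(self):
        self.latest = defaultdict(dict)  # source -> {metric name: value}

    def ingest(self, source, metrics):
        self.latest[source].update(metrics)

    def total(self, metric):
        """Sum a metric across all sources that report it."""
        return sum(m.get(metric, 0) for m in self.latest.values())


def agent_collect(source, read_metrics, aggregator):
    """Monitoring agent: read local metrics and push them to the aggregator."""
    aggregator.ingest(source, read_metrics())


def render_dashboard(aggregator):
    """Dashboard stand-in: print one line per source."""
    for source, metrics in sorted(aggregator.latest.items()):
        print(f"{source:15} {metrics}")


def check_alerts(aggregator, thresholds):
    """Alerting system: return (metric, value) pairs above their threshold."""
    return [(name, aggregator.total(name))
            for name, limit in thresholds.items()
            if aggregator.total(name) > limit]


if __name__ == "__main__":
    agg = Aggregator()
    agent_collect("queue-manager", lambda: {"queue_length": 1800}, agg)
    agent_collect("app-server-1", lambda: {"in_flight": 40}, agg)
    agent_collect("app-server-2", lambda: {"in_flight": 35}, agg)
    render_dashboard(agg)
    print(check_alerts(agg, {"queue_length": 1000, "in_flight": 100}))
    # [('queue_length', 1800)]
```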

Real-World Examples and Case Studies

Queue-based load leveling offers a powerful approach to managing fluctuating workloads. Real-world implementations show how adaptable the pattern is and how it mitigates bottlenecks and improves system performance. This section examines specific examples of queueing strategies in practice and the outcomes they achieved, since understanding how existing systems use queues for load leveling offers valuable guidance for designing similar solutions.

By analyzing successful implementations, we can identify common challenges, effective solutions, and the factors that contribute to positive outcomes.

E-commerce Platforms

E-commerce platforms experience significant spikes in traffic during promotional periods and holidays. Queue-based load leveling is crucial for handling these surges without compromising user experience. By strategically queuing incoming requests during peak hours, the system can maintain responsiveness and avoid performance degradation. For instance, during Black Friday sales, a queue system can buffer incoming orders, allowing the platform to process them efficiently without overwhelming the servers.

This strategy helps prevent outages and ensure that customers can complete purchases smoothly.
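A deterministic, tick-based simulation makes the buffering effect easy to see. It is a sketch with hypothetical arrival numbers rather than a model of any real storefront: orders arrive in bursts, the queue holds them, and the consumer never processes more than `capacity_per_tick` orders per tick.

```python
from collections import deque


def level_load(arrivals_per_tick, capacity_per_tick):
    """Simulate a queue absorbing bursty arrivals while a consumer drains at
    most `capacity_per_tick` items per tick. Returns (processed, backlog) per tick."""
    backlog = deque()
    history = []
    next_id = 0
    tick = 0
    while tick < len(arrivals_per_tick) or backlog:
        arriving = arrivals_per_tick[tick] if tick < len(arrivals_per_tick) else 0
        for _ in range(arriving):
            backlog.append(next_id)  # enqueue order IDs in arrival order
            next_id += 1
        processed = 0
        while backlog and processed < capacity_per_tick:
            backlog.popleft()  # consumer handles the oldest order first
            processed += 1
        history.append((processed, len(backlog)))
        tick += 1
    return history


if __name__ == "__main__":
    print(level_load([2, 12, 3, 0, 0], capacity_per_tick=5))
    # [(2, 0), (5, 7), (5, 5), (5, 0), (0, 0)]
```

The backlog rises to 7 during the burst and drains over the following ticks, while the processing rate never exceeds the configured capacity. The trade-off is latency: orders that arrive during the burst wait longer before they are processed.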

Cloud Computing Services

Cloud computing platforms such as Amazon Web Services (AWS) and Microsoft Azure serve immense and highly variable demand, and both offer managed queue services (for example, Amazon SQS and Azure Queue Storage) for applying this pattern; Microsoft's Azure Architecture Center documents it as the Queue-Based Load Leveling pattern. Work such as provisioning requests, storage operations, or background jobs can be placed on a queue and consumed at a rate the backend services can sustain, which keeps service delivery consistent as demand fluctuates. Queuing also lets the system prioritize tasks, allocate resources deliberately, and avoid overload during periods of high traffic.

The scalability inherent in cloud platforms benefits significantly from queueing, allowing for the seamless handling of a wide range of workloads.

Online Gaming Platforms

Online gaming platforms often experience fluctuating user activity, especially during peak hours or events. Queueing systems are essential to ensure fair and consistent gameplay. By queuing players awaiting access to servers or specific game modes, the platform can manage server resources effectively. This allows the game to remain playable and enjoyable for all users, even during peak times.

Because players are admitted in arrival order, the queue provides fair and predictable access to game resources: each waiting player has a known position, and no one gains an advantage by reconnecting or retrying repeatedly.
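A server "waiting room" can be sketched as a FIFO queue in front of a fixed number of slots. `AdmissionQueue` and its methods are hypothetical; a production matchmaker would also handle timeouts, reconnect grace periods, and persistence.

```python
from collections import OrderedDict


class AdmissionQueue:
    """FIFO waiting room in front of a server with a fixed number of slots."""

    def __init__(self, slots):
        self.slots = slots
        self.active = set()
        self.waiting = OrderedDict()  # player_id -> None, kept in arrival order

    def join(self, player_id):
        """Return 0 if admitted, otherwise the player's 1-based queue position."""
        if player_id in self.active:
            return 0
        if len(self.active) < self.slots and not self.waiting:
            self.active.add(player_id)
            return 0
        self.waiting.setdefault(player_id, None)  # re-joining keeps the original place
        return list(self.waiting).index(player_id) + 1

    def leave(self, player_id):
        """Free a slot (or drop out of the queue) and admit the next waiting players."""
        if player_id in self.active:
            self.active.remove(player_id)
        else:
            self.waiting.pop(player_id, None)
        while self.waiting and len(self.active) < self.slots:
            next_player, _ = self.waiting.popitem(last=False)  # oldest waiter first
            self.active.add(next_player)


if __name__ == "__main__":
    room = AdmissionQueue(slots=2)
    print([room.join(p) for p in ["ana", "ben", "cho", "dev"]])  # [0, 0, 1, 2]
    room.leave("ana")
    print(sorted(room.active), list(room.waiting))  # ['ben', 'cho'] ['dev']
```

Because re-joining returns the existing position rather than appending a new entry, repeated retries cannot improve a player's place in line.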

Case Study Analysis: A Streaming Platform

“Our streaming platform experienced significant performance degradation during peak hours, leading to user dissatisfaction and potential loss of subscribers. We implemented a queue-based load leveling strategy to mitigate these issues. The system now buffers incoming video requests during high traffic periods, allowing the servers to process them efficiently. This has significantly improved user experience and reduced the risk of outages. The design choices included using a priority queue to prioritize live streaming requests, ensuring minimal delays for users participating in live events.”

The implementation of a queue-based load leveling system in this streaming platform demonstrated a marked improvement in user experience, leading to a notable reduction in user complaints. This case study underscores the effectiveness of using queues to address fluctuations in demand, ultimately enhancing system performance and user satisfaction. The priority queue design choice ensured critical tasks, such as live streaming, were handled efficiently.

Closing Summary

In conclusion, queue-based load leveling provides a robust solution for managing and optimizing workloads in various domains. By strategically implementing this pattern, systems can achieve enhanced performance, scalability, and resilience. The diverse applications and implementation considerations discussed provide a comprehensive understanding of this powerful technique.

Frequently Asked Questions

What are some common scenarios where queue-based load leveling is particularly beneficial?

Queue-based load leveling excels in situations with fluctuating workloads, such as e-commerce platforms during peak shopping seasons, social media platforms experiencing high traffic bursts, or web applications handling numerous concurrent user requests. It’s highly effective when the system needs to adapt to dynamic demands while maintaining stable response times.

How does queue size impact performance?

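Larger queues mean longer waits: in a first-in, first-out queue, a new task must wait for everything ahead of it, so its delay grows with the backlog and shrinks as processing throughput increases. Without effective prioritization, urgent tasks wait as long as everything else. Careful management of queue size, combined with efficient task prioritization, is crucial to maintain acceptable response times and prevent system bottlenecks.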
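Little's Law makes this relationship quantitative: in a stable queue, the average number of waiting items L equals the arrival rate λ times the average wait W, so W = L / λ. Because arrivals and completions balance in steady state, λ can be read as the consumers' throughput. The sketch below applies this to hypothetical numbers; it describes long-run averages, not the wait of any individual task, and it does not hold while arrivals outpace processing.

```python
def expected_wait_seconds(avg_queue_length, throughput_per_second):
    """Little's Law (L = lambda * W) rearranged to W = L / lambda.
    Valid for long-run averages of a stable queue."""
    if throughput_per_second <= 0:
        raise ValueError("throughput must be positive")
    return avg_queue_length / throughput_per_second


def max_backlog_for_target(target_wait_seconds, throughput_per_second):
    """Largest average backlog compatible with a target average wait (L = lambda * W)."""
    return target_wait_seconds * throughput_per_second


if __name__ == "__main__":
    # 1,200 queued messages drained at 40 messages/s: about 30 s average wait.
    print(expected_wait_seconds(1200, 40))  # 30.0
    # To keep the average wait at 5 s with the same consumers, keep the backlog near 200.
    print(max_backlog_for_target(5, 40))  # 200
```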

What are some security considerations when implementing this pattern?

Security is paramount. Robust access controls, secure communication channels, and proper authentication mechanisms are essential to protect sensitive data and prevent unauthorized access to the queue and its contents.
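One concrete authentication mechanism for queued messages is an HMAC: producers attach a tag computed with a shared secret, and consumers reject any message whose tag does not match. The sketch below uses Python's standard `hmac` module; the envelope format and key handling are assumptions. An HMAC provides integrity and authenticity but not confidentiality, so encrypted transport (TLS) and access controls on the queue itself are still needed.

```python
import hashlib
import hmac
import json


def sign_message(body: dict, key: bytes) -> dict:
    """Attach an HMAC-SHA256 tag so consumers can reject tampered or forged messages."""
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "hmac": tag}


def verify_message(envelope: dict, key: bytes) -> dict:
    """Recompute the tag and compare in constant time before trusting the payload."""
    expected = hmac.new(key, envelope["payload"].encode("utf-8"), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, envelope["hmac"]):
        raise ValueError("message authentication failed")
    return json.loads(envelope["payload"])


if __name__ == "__main__":
    key = b"example-shared-secret"  # hypothetical; load from a secrets manager in practice
    envelope = sign_message({"order_id": 42, "amount": 19.99}, key)
    print(verify_message(envelope, key))  # {'amount': 19.99, 'order_id': 42}
```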

How can I choose the optimal implementation strategy?

The optimal strategy depends on the specific requirements of the application and the characteristics of the workload. Factors such as task complexity, processing time variations, and desired response times should be carefully evaluated.