Welcome to a detailed exploration of Kubernetes Services and Ingress, two fundamental components for managing and exposing applications within a Kubernetes cluster. These tools are essential for any developer or operations professional looking to effectively deploy, scale, and manage containerized applications. Understanding their roles and how they interact is crucial for building resilient and accessible applications.

We’ll begin by demystifying Kubernetes Services, exploring their different types (ClusterIP, NodePort, LoadBalancer, and ExternalName) and their respective use cases. You’ll learn how Services act as an abstraction layer, providing a stable endpoint for accessing your Pods, regardless of their underlying infrastructure. Following this, we’ll delve into Kubernetes Ingress, which offers a more sophisticated approach to traffic management, including routing, SSL termination, and load balancing.

Through practical examples and clear explanations, this guide will equip you with the knowledge to confidently utilize these powerful Kubernetes features.

Introduction to Kubernetes Services

Kubernetes Services are a fundamental concept in the platform, designed to provide a stable and reliable way to access applications running within a Kubernetes cluster. They abstract away the complexities of the underlying Pods, enabling seamless communication and management of your applications. This introduction covers the purpose of Services, their role in application communication, and the various types available. Kubernetes Services act as a crucial abstraction layer, simplifying how applications interact with each other and the outside world.

Without Services, applications would need to know the constantly changing IP addresses and port numbers of individual Pods, which are subject to rescheduling and scaling. Services solve this problem by providing a consistent endpoint, regardless of the underlying Pods.

Purpose of Kubernetes Services and Application Communication

The primary purpose of Kubernetes Services is to enable communication between different parts of an application, or between an application and the outside world. Services achieve this by providing a single, stable IP address and DNS name that can be used to access a set of Pods. This abstraction decouples the application from the underlying infrastructure, making it more resilient and easier to manage.

Services also facilitate load balancing, distributing traffic across the Pods that back them. This improves performance and availability.

Analogy for Understanding Services as an Abstraction Layer for Pods

Imagine a restaurant. The restaurant’s address and phone number represent a Kubernetes Service. The kitchen staff, who actually prepare the food, are like the Pods. The restaurant’s address and phone number remain the same, even if the kitchen staff changes (e.g., due to shift changes or staff additions). Customers (other applications or external users) only need to know the restaurant’s address to order food.

They don’t need to know the names or locations of the individual cooks. Similarly, a Service provides a stable point of contact, shielding users from the dynamic nature of the underlying Pods.

Different Types of Kubernetes Services

Kubernetes offers several Service types, each designed for a specific purpose and networking scenario. Understanding these types is crucial for designing and deploying applications effectively.

- ClusterIP: This is the default Service type. It provides a virtual IP address that is only accessible from within the cluster. It’s ideal for internal communication between different parts of an application. For instance, a frontend Pod might use a ClusterIP Service to access a backend Pod.

- NodePort: This type exposes the Service on each Node’s IP address at a static port. It makes the Service accessible from outside the cluster by using `NodeIP:NodePort`. This is often used for testing or development, but it’s generally not recommended for production deployments due to its limitations, such as requiring a static port and potentially exposing internal services to the public.

For example, a developer might use a NodePort to test a web application running in a cluster from their local machine.

- LoadBalancer: This type provisions a load balancer from the cloud provider (in clusters that have a cloud provider integration), which then forwards traffic to the Service. It provides an external IP address and load balances traffic across the Pods. It is a common choice for exposing applications to the internet in a production environment. For instance, a web application might use a LoadBalancer Service to handle traffic from external users, distributing the load across multiple Pods for high availability.

A real-world example would be using a LoadBalancer service on Google Cloud Platform (GCP) to expose a web application to the internet.

- ExternalName: This Service type maps the Service to an external DNS name. It does not create any endpoints within the cluster. Instead, it returns a CNAME record with the external name. This is useful for accessing external services from within the cluster. For example, an application within the cluster might use an ExternalName Service to access a database service hosted outside of the cluster.

ClusterIP Services

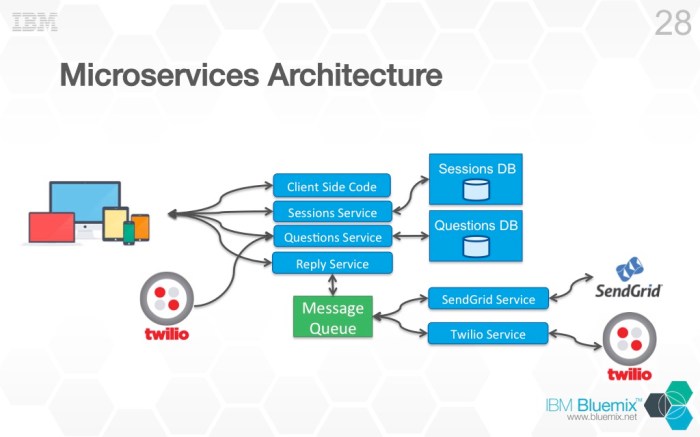

ClusterIP Services are a fundamental component of Kubernetes networking, enabling communication between pods within a cluster. They provide a stable internal IP address and DNS name for a set of pods, allowing other pods in the cluster to reliably access them. This internal routing mechanism is essential for microservices architectures and for managing the lifecycle of application deployments.

Function and Use Cases of ClusterIP Services

ClusterIP Services serve as a vital internal load balancer within a Kubernetes cluster. They expose a service on a cluster-internal IP, making the service accessible only from within the cluster. This is a common configuration for services that do not need to be directly accessed from the outside world. Here are some key use cases:

- Internal API Communication: Microservices often communicate with each other through APIs. ClusterIP Services provide a stable endpoint for these internal API calls.

- Database Access: Database services can be exposed internally using ClusterIP, ensuring that only applications within the cluster can access the database.

- Backend Processing: Services performing background tasks or data processing can be made accessible only to other services within the cluster, ensuring they are not directly exposed to external users.

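- Application Deployment and Updates: Because clients address the Service rather than individual Pods, the Pods behind it can be replaced without clients changing anything. Combined with a Deployment’s rolling updates and readiness checks, this allows new versions to be rolled out with zero downtime: traffic shifts to new Pods as they become ready, while the old Pods are removed.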

Creating a ClusterIP Service with YAML Configuration

Creating a ClusterIP Service involves defining a YAML configuration file. This file specifies the service’s metadata, selector (which pods the service targets), and the port mapping. Below is an example YAML configuration file for a simple web application:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-web-service
  labels:
    app: my-web-app
spec:
  selector:
    app: my-web-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: ClusterIP
```

In this configuration:

- `apiVersion: v1` specifies the Kubernetes API version.

- `kind: Service` indicates that this is a service definition.

- `metadata.name: my-web-service` defines the name of the service.

- `metadata.labels: app: my-web-app` defines labels for the service.

- `spec.selector: app: my-web-app` specifies the label selector. This selects pods with the label `app: my-web-app`. The service will forward traffic to pods matching this selector.

- `spec.ports` defines the port mapping. Here, the service listens on port 80 and forwards traffic to port 8080 on the selected pods.

- `spec.type: ClusterIP` explicitly sets the service type to ClusterIP. This is the default value, but explicitly defining it is good practice.

To create the service, apply this YAML file with the `kubectl apply -f` command, passing the path to the file.
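The Service forwards traffic only to ready Pods labeled `app: my-web-app`. As a minimal sketch of a workload that could back it, here is a Deployment; the image `example.com/my-web-app:1.0` and the `/healthz` path are hypothetical placeholders, and the replica count is arbitrary. The readiness probe and rolling-update settings illustrate how a Service and a Deployment together support the zero-downtime updates described earlier: new Pods receive traffic only once they report ready, and old Pods are removed gradually.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-web-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-web-app
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0   # never drop below the desired replica count during an update
      maxSurge: 1         # start at most one extra Pod at a time
  template:
    metadata:
      labels:
        app: my-web-app   # must match the Service's spec.selector
    spec:
      containers:
        - name: web
          image: example.com/my-web-app:1.0   # hypothetical image
          ports:
            - containerPort: 8080             # matches the Service's targetPort
          readinessProbe:
            httpGet:
              path: /healthz                  # hypothetical health endpoint
              port: 8080
            periodSeconds: 5
```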

Accessing a ClusterIP Service from Within the Kubernetes Cluster

Once a ClusterIP Service is created, it can be accessed from within the Kubernetes cluster using its service name or its cluster IP address. Here are the methods for accessing the service:

- Using the Service Name: Each Service is assigned a DNS name in the format `<service-name>.<namespace>.svc.cluster.local` (where `cluster.local` is the default cluster domain). Within the same namespace, you can usually access the service using just the service name (e.g., `my-web-service`).

- Using the Cluster IP Address: Kubernetes assigns a cluster IP address to each ClusterIP service. This address can be used to access the service, but using the service name is generally preferred: it is more human-readable, and it stays the same even if the Service is deleted and recreated with a different cluster IP. You can find the cluster IP address using `kubectl get svc`.

For example, if you have a pod running within the cluster and want to access the `my-web-service` created above, you can simply make an HTTP request to `http://my-web-service` (assuming the pod has a tool like `curl` or `wget` installed). Kubernetes’ internal DNS will resolve the service name to the cluster IP address and then forward the traffic to one of the pods selected by the service.
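To try this out, you could run a short-lived Pod that calls the Service by name. This is a sketch under stated assumptions: the Pod name `svc-client` is hypothetical, the Service is assumed to live in the `default` namespace, the cluster is assumed to use the default `cluster.local` domain, and the public `curlimages/curl` image supplies `curl`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: svc-client          # hypothetical name
  namespace: default
spec:
  restartPolicy: Never
  containers:
    - name: curl
      image: curlimages/curl
      # The short name resolves within the same namespace; the fully
      # qualified name works from any namespace (assumes cluster.local).
      command: ["sh", "-c"]
      args:
        - curl -sS http://my-web-service/ && curl -sS http://my-web-service.default.svc.cluster.local/
```

After the Pod completes, `kubectl logs svc-client` shows the responses returned through the Service.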

NodePort Services

NodePort Services provide a straightforward way to expose a service to the outside world by opening a specific port on each node in your Kubernetes cluster. This allows external traffic to reach your service. While simple to configure, NodePort Services have limitations, especially in production environments. They are primarily used for testing, development, and for scenarios where direct access to a node’s IP address is acceptable or even desired.

Functionality of NodePort Services and Use Cases

NodePort Services work by opening a port on all nodes in your cluster. When a request arrives on that port on any node, Kubernetes forwards the traffic to the service and subsequently to a pod that is part of that service. This functionality allows external clients to access the service by using any node’s IP address and the specified NodePort.

- Port Assignment: When you create a NodePort Service, Kubernetes automatically assigns a port number within a configurable range (typically 30000-32767). This port is opened on all nodes in the cluster.

- Traffic Routing: When a request hits a node’s IP address on the NodePort, Kubernetes’ internal proxy (kube-proxy) intercepts the request and forwards it to one of the pods selected by the service. This selection is based on the service’s selector, which matches labels on the pods.

- Accessibility: NodePort Services are accessible from outside the cluster using any node’s public or private IP address and the assigned NodePort. This makes them easy to test and use for simple applications.

- Use Cases: NodePort Services are most appropriate for:

  - Development and Testing: Quickly exposing services for testing and debugging.

  - Simple Applications: Applications with low traffic volumes or that do not require sophisticated routing or load balancing.

  - Learning and Demonstration: A straightforward way to understand how Kubernetes services work.

  - Exposing Services in a Local Cluster: Useful for accessing services running on a local Kubernetes cluster from your host machine.

NodePort Service Configuration and Port Exposure

Configuring a NodePort Service involves defining a service resource with the `type: NodePort` field in your Kubernetes manifest. The service’s selector determines which pods are part of the service. In the `ports` section, `port` is the port the service exposes inside the cluster, `targetPort` is the port on the pods that receives the traffic, and the optional `nodePort` field explicitly specifies the port that will be opened on every node for external access.

If not specified, Kubernetes automatically assigns a port from the configured range.

Here’s an example YAML configuration:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nodeport-service
spec:
  type: NodePort
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
      nodePort: 31000
```

In this example:

- `type: NodePort` indicates a NodePort service.

- `selector: app: my-app` targets pods with the label `app: my-app`.

- `port: 80` defines the service’s internal port (the port that clients will connect to within the cluster).

- `targetPort: 8080` is the port on the pods that the service forwards traffic to.

- `nodePort: 31000` specifies the port that will be opened on all nodes in the cluster. External clients will access the service using `node_ip:31000`.

The effect of this configuration is:

- Any pod with the label `app: my-app` will be part of the service.

- The service will listen on port 80 internally.

- Traffic arriving on port 31000 of any node will be forwarded to port 8080 on the selected pods.

- Clients can access the service by using the IP address of any node in the cluster, along with port 31000. For example, if a node’s IP is `192.168.1.10`, the service can be accessed via `192.168.1.10:31000`.
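If you omit `nodePort`, Kubernetes picks a free port from the configured range instead. A minimal variant of the manifest above, where the name `my-nodeport-auto` is hypothetical:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nodeport-auto    # hypothetical name
spec:
  type: NodePort
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80              # cluster-internal Service port
      targetPort: 8080      # container port on the selected Pods
      # nodePort omitted: allocated automatically (default range 30000-32767)
```

After applying it, the allocated port appears in the PORT(S) column of `kubectl get service my-nodeport-auto`, in the form `80:<nodePort>/TCP`.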

Procedure for Accessing a NodePort Service from Outside the Cluster

Accessing a NodePort Service from outside the cluster is a straightforward process, as long as the nodes are reachable from the external network. The procedure involves determining the node’s IP address and the assigned NodePort.

- Identify the Node IP Address: Determine the IP address of any node in your Kubernetes cluster. This could be a public IP address if your cluster is running in the cloud or a private IP address if the cluster is behind a firewall. You can use the `kubectl get nodes -o wide` command to list all nodes and their IP addresses.

- Determine the NodePort: Find the NodePort assigned to your service. Use the command `kubectl get service <service-name> -o wide` to view the service details, including the assigned NodePort.

- Access the Service: Use the node’s IP address and the NodePort to access the service. The format is typically `node_ip:node_port`. For example, if the node IP is `192.168.1.10` and the NodePort is `31000`, access the service by browsing to `http://192.168.1.10:31000` (assuming the service is serving HTTP traffic). You can also use tools like `curl` to test the connection from your local machine.

- Firewall Considerations: Ensure that any firewalls or security groups allow traffic on the NodePort. For example, if using a cloud provider, you must configure your security groups to permit inbound traffic on the NodePort.

- Load Balancing Considerations: If your cluster has multiple nodes, consider how clients will spread their traffic across them. A NodePort Service balances traffic across its backing pods, but it does not distribute client traffic across nodes: each client connects to whichever node IP it was given. If your application requires high availability or performance, you might place an external load balancer in front of the nodes. That load balancer would direct traffic to the NodePort on the nodes, which would then forward it to the service.

LoadBalancer Services

LoadBalancer Services provide an external IP address that directs traffic to your Kubernetes service. They are particularly useful in cloud environments where managed load balancers are readily available. These services simplify external access and traffic distribution, offering a robust solution for exposing applications to the internet.

LoadBalancer Service Operation in Cloud Environments

LoadBalancer Services leverage the infrastructure of the cloud provider. When a LoadBalancer Service is created, Kubernetes (through its cloud provider integration) calls the provider’s API to provision a load balancer. This load balancer is then configured to forward traffic to the cluster’s nodes, which route it on to the pods associated with the service. The cloud provider handles much of the complexity of load balancing, such as health checks, traffic distribution, and the availability of the load balancer itself.

LoadBalancer Service Configuration Example

Here’s an example of a LoadBalancer Service configuration defined in YAML:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-loadbalancer-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: LoadBalancer
```

This configuration specifies a service named `my-loadbalancer-service`. It selects pods with the label `app: my-app`. It exposes port 80 and forwards traffic to port 8080 on the selected pods. The `type: LoadBalancer` directive instructs Kubernetes to provision a load balancer. Once applied, the cloud provider will assign an external IP address (on some providers, a DNS hostname) to the service, allowing external clients to access the application.

The external IP address is usually visible in the `kubectl get service` output.
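How the provisioned load balancer reaches Pods can be tuned with the Service field `externalTrafficPolicy`. With the default, `Cluster`, any node may accept traffic and forward it to a Pod on another node, which hides the client’s source IP. Setting it to `Local`, as in this sketch, makes each node deliver traffic only to Pods running on that node, preserves the client source IP, and has Kubernetes allocate a health-check node port so the load balancer can skip nodes without ready Pods. How the cloud load balancer uses that health check depends on the provider integration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-loadbalancer-service
spec:
  type: LoadBalancer
  externalTrafficPolicy: Local   # only nodes hosting ready Pods receive traffic; client IP is kept
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

The trade-off is that traffic can be spread unevenly if the Pods are not evenly distributed across nodes.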

Comparison of LoadBalancer Services and NodePort Services

LoadBalancer Services and NodePort Services both provide external access to applications running within a Kubernetes cluster, but they differ in their implementation and capabilities.

- Implementation: NodePort Services open a port on each node in the cluster and forward traffic to the service. LoadBalancer Services, on the other hand, leverage the cloud provider’s load balancing infrastructure, creating a dedicated load balancer that sits in front of the cluster.

- Complexity: NodePort Services are simpler to configure and manage, especially for small-scale deployments. LoadBalancer Services involve interaction with the cloud provider, which can add a layer of complexity.

- Cost: NodePort Services typically don’t incur any additional costs beyond the resources used by the application. LoadBalancer Services, however, often come with associated costs from the cloud provider for the provisioned load balancer and the data transfer. The exact cost depends on the cloud provider, the type of load balancer, and usage such as traffic volume.

- Features: Depending on the cloud provider, LoadBalancer Services can offer features such as health checks, SSL/TLS termination (often configured through provider-specific annotations), and provider-managed traffic distribution. NodePort Services provide basic external access but lack these features.

- Scalability and High Availability: LoadBalancer Services are generally more scalable and provide higher availability because the cloud provider manages the load balancer, distributing traffic across multiple nodes and failing over automatically. With NodePort Services, clients connect to individual nodes, so a client that targets a single node’s IP loses access if that node fails; this is a particular risk in smaller clusters.

For instance, consider an e-commerce website. With a LoadBalancer Service, a managed cloud load balancer can scale with traffic demands and spread requests across healthy nodes, helping the website remain responsive even during peak shopping seasons like Black Friday. With a NodePort Service, by contrast, clients would have to target individual node IPs, so traffic would not be spread across nodes automatically and a failed node would cut off the clients pointed at it. In this scenario, the advantages of a LoadBalancer Service are evident in terms of performance and scalability.

ExternalName Services

ExternalName Services provide a way to access services outside of your Kubernetes cluster, making it simple to integrate external resources into your applications. They are a powerful tool for connecting to databases, APIs, or other services hosted elsewhere, without needing to create complex network configurations.

Function of ExternalName Services

ExternalName Services serve as a DNS alias. Instead of directing traffic to a pod within the cluster, they return a CNAME record that points to the external service’s DNS name. This allows pods within the cluster to access the external service using a consistent and manageable name, simplifying service discovery and reducing the need for hardcoded IP addresses or complex routing rules.

Configuration of an ExternalName Service

Configuring an ExternalName Service involves defining a service resource with `spec.type` set to `ExternalName` and the `spec.externalName` field set to the DNS name of the external service. This tells Kubernetes to resolve the service name to the specified external domain. Here’s an example of a YAML configuration:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-external-service
  namespace: default
spec:
  type: ExternalName
  externalName: api.example.com
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
```

In this example:

- `name: my-external-service`: This defines the name by which pods within the cluster will access the external service.

- `type: ExternalName`: This specifies the service type as an ExternalName service.

- `externalName: api.example.com`: This sets the external DNS name to which the service resolves. The cluster DNS answers lookups for `my-external-service` with a CNAME record pointing to `api.example.com`, so clients connect to that host directly.

- `ports`: Defines port mappings. For an ExternalName Service these have no effect on routing: Kubernetes does not proxy the traffic, so no port remapping takes place, and clients connect to `api.example.com` on whatever port they dial.

After applying this configuration to your Kubernetes cluster, any pod within the `default` namespace can reach the external service simply by using the DNS name `my-external-service` (pods in other namespaces can use `my-external-service.default.svc.cluster.local`). Because clients use the alias name, protocols that carry the hostname, such as HTTP (the `Host` header) and HTTPS (TLS SNI and certificate checks), may not work as expected unless the external server also accepts that name.

Beneficial Scenarios for Using ExternalName Services

ExternalName Services are particularly useful in several scenarios:

- Accessing External Databases: You can use an ExternalName Service to provide a consistent endpoint for accessing a database hosted outside the Kubernetes cluster, such as a cloud-based database service (e.g., Amazon RDS, Google Cloud SQL). This allows you to change the database instance without modifying your application’s configuration.

- Integrating with External APIs: If your application needs to interact with external APIs, an ExternalName Service simplifies the integration process. You can create a service that points to the API’s DNS name, allowing your pods to easily make API calls. This promotes a more organized and manageable approach to external service dependencies.

- Connecting to Legacy Services: For applications that need to communicate with legacy services running outside of Kubernetes, ExternalName Services provide a bridge. You can expose these legacy services through an ExternalName Service, allowing your Kubernetes-managed applications to access them seamlessly.

- Simplifying Service Discovery: When you want to abstract the underlying infrastructure of external services, ExternalName Services can be employed. Instead of hardcoding the external service’s DNS name, applications can use the service’s name within the cluster, and Kubernetes handles the resolution. This enhances flexibility and reduces the risk of application downtime.
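For the database scenario above, a sketch might look like this; `orders-db` and `orders.db.example.com` are hypothetical names. Applications connect to `orders-db` (or `orders-db.default.svc.cluster.local` from other namespaces), and moving to a different database endpoint means changing only `externalName`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: orders-db                        # hypothetical; the name applications use
  namespace: default
spec:
  type: ExternalName
  externalName: orders.db.example.com    # hypothetical external database host
```

Cluster DNS answers lookups for `orders-db` with a CNAME to `orders.db.example.com`; nothing is proxied, so clients still connect on the database’s own port. Database clients that verify the server’s TLS certificate against the hostname they were given may reject the alias name, so they may need to be configured with the real hostname for verification.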

Understanding Kubernetes Ingress

Kubernetes Ingress is a critical component for managing external access to services running within a Kubernetes cluster. It acts as an entry point, routing external traffic to the appropriate services based on rules defined in an Ingress resource. This approach provides a more sophisticated and flexible way to expose services compared to directly exposing them using NodePort or LoadBalancer services.

Purpose and Relationship to Services

Ingress serves as a single point of entry for all incoming traffic, allowing for centralized management of routing rules. It operates at the application layer (Layer 7) of the OSI model, enabling routing based on more complex criteria like hostnames and paths within a URL. Ingress relies on Services to actually forward traffic to the pods that implement the application.

In essence, Ingress defines *how* traffic should be routed, while Services define *where* the traffic should be routed within the cluster.

Ingress Controller Traffic Routing

Ingress controllers are responsible for implementing the rules defined in Ingress resources; an Ingress resource on its own has no effect unless a controller is running in the cluster. Controllers watch the Kubernetes API for changes to Ingress resources and configure a reverse proxy or load balancer (e.g., Nginx, Traefik, HAProxy) to route traffic accordingly. For example, consider a scenario with two services: `web-service` and `api-service`. The `web-service` handles the website’s frontend, accessible at the root path `/`, while `api-service` handles the backend API, accessible at the path `/api`.

An Ingress resource could be defined as follows (simplified YAML):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
spec:
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web-service
                port:
                  number: 80
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: api-service
                port:
                  number: 8080
```

In this example:

- When a user accesses `example.com/` (or any path not matched by a more specific rule), the Ingress controller routes the traffic to the `web-service` on port 80.

- When a user accesses `example.com/api` (or a path beneath it, such as `/api/orders`), the Ingress controller routes the traffic to the `api-service` on port 8080, because the longest matching path takes precedence.

The Ingress controller uses this configuration to update the underlying load balancer. When a request arrives, the load balancer inspects the hostname and path to determine which service to forward the request to, and then directs the traffic to the pods behind that service. The process can be summarized as follows:

- A user sends a request to `example.com/`.

- The request reaches the Ingress controller.

- The Ingress controller examines the request based on the rules defined in the Ingress resource.

- The Ingress controller determines the target service (e.g., `web-service`).

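- The Ingress controller forwards the request to the `web-service` backend.

- The request is delivered to one of the pods behind `web-service`. (Many controllers, including the NGINX Ingress Controller, look up the service’s endpoints and send traffic to those pods directly rather than through the service’s cluster IP.)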

- The pod processes the request and sends a response back to the user, following the reverse path.

Benefits of Using Ingress

Using Ingress offers several advantages over directly exposing Services via NodePort or LoadBalancer services.

- Single Entry Point: Ingress consolidates external access through a single IP address or hostname, simplifying management and reducing the number of exposed ports.

- Hostname-based Routing: Ingress enables routing based on hostnames, allowing multiple applications to be served from the same IP address. This is essential for hosting multiple websites or applications on a single cluster.

- Path-based Routing: Ingress supports path-based routing, allowing different parts of a single website or application to be served by different services. This enables complex application architectures.

- SSL/TLS Termination: Ingress controllers can handle SSL/TLS termination, offloading this task from the application and simplifying certificate management.

- Centralized Configuration: Ingress resources provide a declarative way to configure routing rules, making it easier to manage and update access policies.

- Load Balancing: Ingress controllers often integrate load balancing features, distributing traffic across multiple pods to improve performance and availability.

- Improved Security: By acting as a single point of entry, Ingress allows for centralized security policies; depending on the controller, these can include authentication, authorization, and rate limiting.
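As a sketch of TLS termination, the Ingress below adds a `tls` section that references a Secret holding the certificate and private key. The Ingress name `my-tls-ingress` and the Secret name `example-com-tls` are hypothetical; the Secret must be of type `kubernetes.io/tls` and live in the same namespace as the Ingress. The controller terminates HTTPS for `example.com` and forwards plain HTTP to `web-service`.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-tls-ingress              # hypothetical name
spec:
  tls:
    - hosts:
        - example.com
      secretName: example-com-tls   # hypothetical Secret of type kubernetes.io/tls
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web-service
                port:
                  number: 80
```

Assuming the certificate and key are in local files named `tls.crt` and `tls.key`, such a Secret can be created with `kubectl create secret tls example-com-tls --cert=tls.crt --key=tls.key`.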

For example, consider a company that needs to expose two websites: `website1.example.com` and `website2.example.com`. Using NodePort, they would need to expose each website on a different port, which is cumbersome and difficult to manage. Using Ingress, they can define rules based on the hostname, allowing both websites to be served from the same IP address on port 80 or 443.
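A minimal sketch of that setup follows. The Ingress name and the Service names `website1-service` and `website2-service` are hypothetical; each rule matches on the request’s `Host` header, so both sites can share the controller’s single IP address.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: websites-ingress                 # hypothetical name
spec:
  rules:
    - host: website1.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: website1-service   # hypothetical Service
                port:
                  number: 80
    - host: website2.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: website2-service   # hypothetical Service
                port:
                  number: 80
```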

This approach significantly simplifies the deployment and management of the websites.

As another example, consider a large e-commerce platform. Ingress could route traffic to different services based on the URL path. For example, requests to `/products` could be routed to a product catalog service, requests to `/cart` to a shopping cart service, and requests to `/checkout` to a payment processing service.

This allows the platform to be built using microservices, each responsible for a specific function, and managed by Ingress for external access.
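The e-commerce routing could be expressed along these lines. The host `shop.example.com`, the Ingress name, and the three Service names are hypothetical, and the ports assume each Service listens on 80. With `pathType: Prefix`, matching is done on whole path segments, so `/cart` matches `/cart` and `/cart/items` but not `/cartography`.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: shop-ingress                     # hypothetical name
spec:
  rules:
    - host: shop.example.com             # hypothetical host
      http:
        paths:
          - path: /products
            pathType: Prefix
            backend:
              service:
                name: catalog-service    # hypothetical
                port:
                  number: 80
          - path: /cart
            pathType: Prefix
            backend:
              service:
                name: cart-service       # hypothetical
                port:
                  number: 80
          - path: /checkout
            pathType: Prefix
            backend:
              service:
                name: payment-service    # hypothetical
                port:
                  number: 80
```

Requests to any other path match no rule and go to the controller’s default backend (often a 404 response), unless the Ingress sets `spec.defaultBackend`.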

Ingress Controllers

Ingress controllers are essential components in Kubernetes that provide the functionality to manage external access to services within a cluster. They act as a reverse proxy and traffic routing mechanism, enabling external clients to reach services based on defined rules. This section will explore the core concepts, popular implementations, and features of Ingress controllers.

Popular Ingress Controllers

Several Ingress controllers are available, each offering different features and capabilities. Choosing the right controller depends on the specific requirements of the application and the environment.

- Nginx Ingress Controller: One of the most widely used Ingress controllers, built on the Nginx web server. It supports a comprehensive set of features, including SSL termination, traffic shaping, and various routing rules. It’s known for its performance and stability.

- Traefik: A cloud-native edge router that is designed to be simple to deploy and configure. Traefik automatically discovers and configures itself based on the services running in the cluster. It supports dynamic configuration, making it easy to adapt to changes in the application environment.

- HAProxy Ingress Controller: Based on the HAProxy load balancer, this controller provides high performance and reliability. It’s well-suited for environments requiring advanced load balancing capabilities and security features.

- Contour: An Ingress controller based on Envoy proxy. It is designed for ease of use and provides advanced features such as automatic TLS certificate management and support for multiple namespaces.

- Ambassador: Another Ingress controller that utilizes Envoy. It focuses on developer experience and provides features such as traffic management, observability, and authentication.

Role of an Ingress Controller and Processing Ingress Rules

An Ingress controller’s primary role is to fulfill the rules defined in Ingress resources. These rules specify how external traffic should be routed to services within the cluster.

Ingress controllers operate as reverse proxies, receiving external traffic and forwarding it to the appropriate services based on the rules.

The process typically involves the following steps:

- Monitoring Ingress Resources: The controller constantly monitors the Kubernetes API for changes in Ingress resources.

- Configuring the Proxy: When an Ingress resource is created or updated, the controller configures the underlying proxy (e.g., Nginx, Envoy, HAProxy) based on the Ingress rules. This configuration defines how traffic is routed.

- Receiving Traffic: The proxy receives external traffic and applies the configured rules.

- Routing Traffic: Based on the rules (e.g., hostnames, paths), the proxy routes the traffic to the correct services.

- Handling TLS Termination: Many controllers can handle TLS termination, decrypting incoming HTTPS traffic and forwarding it to the backend services.
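A cluster can run more than one controller, so each Ingress is usually tied to a specific one through an IngressClass, which the Ingress names in `spec.ingressClassName`. This sketch assumes the community NGINX Ingress Controller (ingress-nginx), which claims classes whose `controller` field is `k8s.io/ingress-nginx`; the class name `nginx` follows that project’s convention, and the Ingress name `class-demo` is hypothetical. Controller installations often create the IngressClass for you.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx
spec:
  controller: k8s.io/ingress-nginx   # identifies the controller that handles this class
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: class-demo                   # hypothetical name
spec:
  ingressClassName: nginx            # only the matching controller processes this Ingress
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web-service
                port:
                  number: 80
```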

Common Ingress Controller Features and Functionalities

Ingress controllers offer a wide array of features to manage external access to services. The following table provides a comparison of common features and functionalities across different controllers. Note that feature availability may vary depending on the specific version and configuration.

| Feature | Nginx Ingress Controller | Traefik | HAProxy Ingress Controller | Contour |
|---|---|---|---|---|
| HTTP/HTTPS Routing | Yes | Yes | Yes | Yes |
| TLS Termination | Yes | Yes | Yes | Yes |
| Path-based Routing | Yes | Yes | Yes | Yes |
| Hostname-based Routing | Yes | Yes | Yes | Yes |
| Load Balancing | Yes | Yes | Yes | Yes |
| SSL Certificate Management (e.g., Let’s Encrypt) | Yes (via annotations) | Yes (automatic) | Yes (via annotations) | Yes (automatic) |
| Web Application Firewall (WAF) Integration | Yes (via third-party modules) | Yes (via plugins) | Yes (via configuration) | No |
| Traffic Shaping/Rate Limiting | Yes | Yes | Yes | Yes |
| Customizable Configuration | Yes (via annotations, configmaps) | Yes (via dynamic configuration) | Yes (via annotations, configmaps) | Yes (via configuration) |
| Support for gRPC | Yes | Yes | No | Yes |

Ingress Resources

Ingress resources are a crucial component of Kubernetes for managing external access to services within a cluster. They define how traffic from outside the cluster is routed to services. Ingress simplifies the process of exposing services, allowing for more flexible and controlled access compared to directly using NodePorts or LoadBalancer services.

Structure of an Ingress Resource and Its Components

An Ingress resource is defined using a YAML file and comprises several key components that specify how external traffic should be directed to services within the Kubernetes cluster. Understanding these components is essential for configuring Ingress effectively. The main components of an Ingress resource are:

- apiVersion: Specifies the API version of the Ingress resource (e.g., `networking.k8s.io/v1`).

- kind: Defines the resource type, which is always `Ingress`.

- metadata: Contains metadata about the Ingress resource, such as its name, labels, and annotations. Annotations are used to configure specific features supported by the Ingress controller.

- spec: This section is the core of the Ingress resource and defines how traffic is routed. It includes:

  - ingressClassName: (Optional) Names the IngressClass, and therefore the Ingress controller, that should handle this Ingress resource. If omitted, the cluster's default IngressClass is used when one is marked as default; older setups select the controller with the `kubernetes.io/ingress.class` annotation instead.

  - rules: A list of routing rules. Each rule associates a host and/or paths with a backend service.

    - host: (Optional) Specifies the hostname for which the rule applies. If no host is specified, the rule applies to all inbound HTTP traffic that reaches the controller.

    - http: Contains routing information for HTTP traffic, including:

      - paths: A list of path-based routing rules. Each path associates a URL path with a backend service.

        - path: The URL path to match (e.g., `/app1`).

        - pathType: Specifies how the path should be matched (`Prefix`, `Exact`, or `ImplementationSpecific`).

        - backend: Specifies the service and port to which traffic should be forwarded.

  - tls: (Optional) Specifies TLS configuration for secure connections, including:

    - hosts: A list of hostnames for which TLS is enabled.

    - secretName: The name of the Kubernetes Secret containing the TLS certificate and key.
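Putting those fields together, a skeleton Ingress might look like the following. It is an illustrative sketch: the hostname, Secret, and Service names are hypothetical, and `nginx` is assumed to be an installed IngressClass. It also contrasts `Exact` with `Prefix` matching and shows that a backend port can be referenced by number or by name.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress                    # hypothetical name
  annotations: {}                          # controller-specific settings go here
spec:
  ingressClassName: nginx                  # assumed IngressClass name
  tls:
    - hosts:
        - shop.example.com                 # hypothetical host
      secretName: shop-example-com-tls     # Secret of type kubernetes.io/tls
  rules:
    - host: shop.example.com               # omit host to match every hostname
      http:
        paths:
          - path: /checkout
            pathType: Exact                # matches /checkout only, not /checkout/
            backend:
              service:
                name: checkout-service     # hypothetical Service
                port:
                  number: 8080
          - path: /
            pathType: Prefix               # matches / and every path below it
            backend:
              service:
                name: storefront-service   # hypothetical Service
                port:
                  name: http               # a named Service port also works
```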

Creating an Ingress Resource Configuration with Host-Based Routing

Host-based routing allows you to route traffic to different services based on the hostname in the incoming request. This is useful when you want to host multiple applications behind the same IP address. Here’s an example of an Ingress resource that uses host-based routing. This example assumes you have two services, service-app1 and service-app2, running in your cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: host-based-routing-ingress
spec:
  ingressClassName: nginx # Specify the Ingress controller
  rules:
    - host: app1.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: service-app1
                port:
                  number: 80
    - host: app2.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: service-app2
                port:
                  number: 80
```

In this example:

- The `ingressClassName: nginx` line specifies that the Nginx Ingress controller should handle this Ingress resource. The specific Ingress controller to use needs to be installed and configured in your Kubernetes cluster.

- Two routing rules are defined under the `rules` section.

- The first rule routes traffic for `app1.example.com` to the `service-app1` service on port 80.

- The second rule routes traffic for `app2.example.com` to the `service-app2` service on port 80.

- The `pathType: Prefix` on the root path (`/`) matches every path, so requests to `app1.example.com/anything` are routed to `service-app1`.

To apply this configuration, you would save it as a YAML file (e.g., host-based-ingress.yaml) and run kubectl apply -f host-based-ingress.yaml. Make sure your DNS is configured to point app1.example.com and app2.example.com to the external IP address of your Ingress controller (or the LoadBalancer service created by the Ingress controller).
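If many subdomains should reach the same backend, `host` also accepts a wildcard in its first DNS label. In this sketch the `tenant-router` Service is hypothetical. A rule for `*.example.com` matches `app1.example.com` but neither `example.com` itself nor `a.b.example.com`, because the wildcard covers exactly one label.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: wildcard-host-ingress
spec:
  ingressClassName: nginx
  rules:
    - host: "*.example.com"       # quoted: a bare leading * would be read as a YAML alias
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: tenant-router   # hypothetical Service
                port:
                  number: 80
```

As with the rules above, DNS must point the matching names (typically through a wildcard record) at the Ingress controller.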

Demonstrating How to Configure Path-Based Routing in an Ingress Resource

Path-based routing allows you to route traffic to different services based on the URL path in the incoming request. This is useful when you want to serve different parts of an application or different applications from the same domain. Here’s an example of an Ingress resource that uses path-based routing and strips the path prefix before forwarding the request. This example also assumes you have two services, service-app1 and service-app2, running in your cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: path-based-routing-ingress
  annotations:
    # ingress-nginx: forward only what follows the /app1 or /app2 prefix (capture group 2)
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx # Specify the Ingress controller
  rules:
    - host: example.com
      http:
        paths:
          - path: /app1(/|$)(.*)
            pathType: ImplementationSpecific # regex paths use this type in ingress-nginx
            backend:
              service:
                name: service-app1
                port:
                  number: 80
          - path: /app2(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: service-app2
                port:
                  number: 80
```

In this example:

- The `ingressClassName: nginx` line specifies that the Nginx Ingress controller should handle this Ingress resource.

- The Ingress resource uses a single rule that applies to `example.com`.

- The `paths` section defines the path-based routing rules. Each path is a regular expression, which ingress-nginx expects together with `pathType: ImplementationSpecific`.

- The first path routes `example.com/app1` (and any path starting with `/app1/`, e.g., `example.com/app1/resource`) to the `service-app1` service on port 80.

- The second path routes `example.com/app2` (and any path starting with `/app2/`, e.g., `example.com/app2/anotherresource`) to the `service-app2` service on port 80.

- The annotation `nginx.ingress.kubernetes.io/rewrite-target: /$2` rewrites the URL path before forwarding the request to the backend service. `(/|$)` is the first capture group and `(.*)` the second, so `/$2` keeps only what follows the prefix: `/app1/resource` becomes `/resource`, and `/app1` becomes `/`. Without this annotation, `service-app1` would receive requests with the path `/app1/resource`, which may not be what you want.

To apply this configuration, save it as a YAML file (e.g., path-based-ingress.yaml) and run kubectl apply -f path-based-ingress.yaml. Ensure your DNS is configured to point example.com to the external IP address of your Ingress controller.

Ingress Annotations

Ingress annotations provide a mechanism to configure advanced features and customize the behavior of an Ingress resource. They allow for fine-grained control over how traffic is managed and routed to the services behind the Ingress. Annotations are key-value pairs added to the `metadata` section of the Ingress resource definition, allowing you to tailor the Ingress to meet specific requirements of your application or environment.

These annotations are interpreted by the Ingress controller, which then configures the underlying load balancer or proxy accordingly.

Customization Capabilities of Ingress Annotations

Ingress annotations enable a wide range of customization options, allowing for extensive control over Ingress behavior. They are specific to the Ingress controller in use; therefore, the available annotations and their functionality vary depending on the controller implementation (e.g., Nginx, Traefik, HAProxy).

- Traffic Management: Annotations can extend routing beyond the host and path rules defined in the spec, for example with path rewriting or header- and cookie-based canary routing. This enables complex routing rules based on various criteria.

- Load Balancing: They can influence load-balancing algorithms, session affinity, and other load-balancing parameters, ensuring optimal distribution of traffic across backend services.

- Security: Annotations allow for the configuration of security features such as Web Application Firewall (WAF) integration, HTTP-to-HTTPS redirects, and rate limiting to protect applications from various threats. The TLS certificates themselves are declared in the `spec.tls` section.

- Caching: Some annotations support caching mechanisms, improving performance by caching frequently accessed content.

- Request and Response Modification: Annotations can modify request and response headers, enabling tasks such as adding security headers or modifying the content served to the client.

- Monitoring and Logging: Annotations can be used to configure logging and monitoring, providing insights into traffic patterns and application performance.

Commonly Used Ingress Annotations

The specific annotations available depend on the Ingress controller used. However, some annotations are widely supported and used for common tasks.

- `nginx.ingress.kubernetes.io/rewrite-target`: This annotation, commonly used with the Nginx Ingress controller, rewrites the target URL before forwarding the request to the backend service. This is useful when the backend service expects a different path than the one exposed by the Ingress.

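For example, if the Ingress exposes the path `/app` but the backend service expects requests at `/`, the annotation `nginx.ingress.kubernetes.io/rewrite-target: /` would rewrite the path. Note that a fixed target replaces the whole request path, so `/app/foo` would also become `/`; to keep the remainder of the path, use a regular-expression path with a capture group, as in the Rewrite Rules section.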

- `nginx.ingress.kubernetes.io/proxy-body-size`: Sets the maximum allowed size of the client request body (for example, `"8m"`). Requests that exceed it are rejected with HTTP 413, which limits the impact of oversized or abusive uploads.

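- `kubernetes.io/ingress.class`: Specifies the Ingress controller class that should handle the Ingress resource. This is particularly useful when multiple Ingress controllers are deployed in the same cluster. The annotation is deprecated in favor of the `spec.ingressClassName` field (available since Kubernetes 1.18), although many controllers still honor it.

For example, `kubernetes.io/ingress.class: nginx` directs the Ingress to be handled by the Nginx Ingress controller; the current equivalent is `ingressClassName: nginx` under `spec`.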

- `cert-manager.io/cluster-issuer`: Used in conjunction with cert-manager, this annotation specifies the issuer that should be used to obtain a TLS certificate.

- `traefik.ingress.kubernetes.io/router.middlewares`: Specifies middleware configurations in Traefik. Middlewares can be used for various functionalities, such as rate limiting, authentication, and header modifications.
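As a sketch of how these annotations sit in a manifest, the Ingress below raises the request-body limit for an upload endpoint and selects the controller through `spec.ingressClassName`. The names `uploads.example.com` and `upload-service` and the `8m` limit are illustrative assumptions, and the annotation is understood only by ingress-nginx; other controllers ignore it.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: upload-ingress                # hypothetical name
  annotations:
    # ingress-nginx only: bodies larger than 8 MiB are rejected with HTTP 413
    nginx.ingress.kubernetes.io/proxy-body-size: "8m"
spec:
  ingressClassName: nginx             # preferred over the kubernetes.io/ingress.class annotation
  rules:
    - host: uploads.example.com       # hypothetical host
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: upload-service  # hypothetical Service
                port:
                  number: 80
```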

Configuring TLS Termination in an Ingress Resource Using Annotations

TLS termination, also known as SSL termination, is the process of decrypting encrypted traffic (HTTPS) at the Ingress controller. This allows the backend services to receive unencrypted HTTP traffic. The certificate and the hosts it covers are declared in the Ingress `spec.tls` section; annotations complement this by automating certificate issuance and controlling behavior such as HTTP-to-HTTPS redirects. The specific annotations used vary based on the Ingress controller and on add-ons such as cert-manager.

- Using `cert-manager` and annotations: This is a popular approach. Cert-manager automates the process of obtaining and managing TLS certificates. You typically annotate the Ingress resource to indicate that you want to use `cert-manager` to obtain a certificate.

Example:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod # or letsencrypt-staging for testing
spec:
  ingressClassName: nginx # replaces the deprecated kubernetes.io/ingress.class annotation
  tls:
    - hosts:
        - example.com
      secretName: example-com-tls
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80
```

In this example, the `cert-manager.io/cluster-issuer` annotation instructs `cert-manager` to obtain a certificate from the specified issuer (`letsencrypt-prod` in this case) and store it in a Kubernetes secret named `example-com-tls`.

The `tls` section specifies the hosts for which TLS is enabled and the name of the secret containing the TLS certificate.

- Using Ingress controller-specific annotations: Some Ingress controllers provide their own annotations for TLS configuration.

Example (Nginx Ingress Controller):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true" # Redirect HTTP to HTTPS
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - example.com
      secretName: example-com-tls
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80
```

In this example, the `nginx.ingress.kubernetes.io/ssl-redirect: "true"` annotation configures the Nginx Ingress controller to redirect HTTP requests for the hosts in the `tls` section to HTTPS. ingress-nginx already does this by default once an Ingress has TLS configured, so the annotation mainly makes the behavior explicit; setting it to `"false"` turns the redirect off.

The `tls` section specifies the TLS configuration, including the host and the secret containing the TLS certificate. The secret, `example-com-tls`, needs to be created and populated with a valid certificate and key, either manually or using a tool like `cert-manager`.

- Storing Certificates: The TLS certificates and keys are typically stored in Kubernetes secrets. The Ingress controller reads the secret and uses the certificate to decrypt the TLS traffic.
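When a certificate is not issued by cert-manager, you create the Secret yourself. A minimal sketch follows; the values are placeholders for base64-encoded PEM data, not real content. The same Secret can be created directly from files with `kubectl create secret tls example-com-tls --cert=tls.crt --key=tls.key`. The Secret must live in the same namespace as the Ingress that references it; `default` here is an assumption.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: example-com-tls   # must match spec.tls[].secretName in the Ingress
  namespace: default      # same namespace as the Ingress (assumed here)
type: kubernetes.io/tls   # this type requires both the tls.crt and tls.key keys
data:
  tls.crt: <base64-encoded PEM certificate chain>   # placeholder
  tls.key: <base64-encoded PEM private key>         # placeholder
```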

Differences between Services and Ingress

Kubernetes Services and Ingress are both crucial components for exposing applications running within a Kubernetes cluster, but they address different aspects of network access. Understanding their distinct roles and how they complement each other is essential for effective Kubernetes deployments. This section will compare and contrast these two key concepts, highlighting their advantages, disadvantages, and practical applications.

Comparing Kubernetes Services and Ingress

Kubernetes Services primarily focus on abstracting the underlying Pods and providing a stable IP address and DNS name for accessing them within the cluster. Ingress, on the other hand, operates at the application layer (Layer 7 of the OSI model) and manages external HTTP and HTTPS access to Services, often including features like routing, SSL termination, and load balancing. Here’s a table summarizing the key differences:

| Feature | Service | Ingress |
|---|---|---|
| Layer of Operation | Layer 4 (TCP/UDP) | Layer 7 (HTTP/HTTPS) |
| Purpose | Abstracts a set of Pods behind a stable IP address and DNS name. | Manages external access and routing to Services. |
| Functionality | Load balancing across Pods, service discovery. | Routing based on hostnames and paths, SSL termination, load balancing across multiple Services. |
| Configuration | Defined using a Service manifest. | Defined using an Ingress resource and implemented by an Ingress Controller. |
| Scope | Internal by default (ClusterIP); NodePort and LoadBalancer types add external access. | External access to the cluster. |

Advantages and Disadvantages of Services

Services offer several benefits for managing internal application access. However, they also have limitations, particularly when it comes to external exposure. The advantages of using Kubernetes Services include:

- Service Discovery: Services provide a consistent DNS name and IP address, enabling Pods to discover and communicate with each other, regardless of their underlying IP addresses.

- Load Balancing: Services distribute traffic across multiple Pods, ensuring high availability and improved performance within the cluster.

- Abstraction: Services abstract the complexity of Pod management, allowing for scaling and updates without impacting application access.

- Internal Access: Services facilitate communication between different parts of the application within the cluster.

The disadvantages of using Kubernetes Services include:

- Limited External Exposure: Services like NodePort and LoadBalancer can expose applications externally, but they often lack advanced routing and management features.

- Port Conflicts: Each NodePort Service reserves a port from a limited range (30000-32767 by default) on every node, so ports must be coordinated across Services, which adds management overhead.

- No Host-Based Routing: Services, by themselves, do not support routing based on hostnames or paths.

Advantages and Disadvantages of Ingress

Ingress provides powerful features for managing external access to applications, but it also has its own set of considerations. The advantages of using Kubernetes Ingress include:

- Host and Path-Based Routing: Ingress allows routing based on hostnames and paths, enabling multiple applications to share the same IP address.

- SSL Termination: Ingress can handle SSL termination, simplifying certificate management and improving security.

- Load Balancing: Ingress controllers often provide load balancing capabilities, distributing traffic across multiple Services.

- Centralized Configuration: Ingress consolidates external access configuration, making it easier to manage and update.

The disadvantages of using Kubernetes Ingress include:

- Dependency on Ingress Controller: Ingress functionality relies on an Ingress Controller, which needs to be deployed and configured separately.

- Complexity: Configuring Ingress can be more complex than configuring a simple Service.

- Vendor Lock-in (Potential): While Ingress is a Kubernetes resource, specific features and implementations can vary between Ingress Controllers.

Using Services and Ingress Together: A Practical Scenario

Services and Ingress are often used in conjunction to provide a robust and flexible application architecture. Consider a scenario where you have a web application deployed in a Kubernetes cluster, consisting of a frontend, a backend API, and a database. Here’s how Services and Ingress might be used:

- Backend API Service (ClusterIP): A ClusterIP Service is created for the backend API. This Service provides an internal IP address and DNS name for the frontend Pods to communicate with the API. A ClusterIP Service has no external address of its own, so the API is reachable from outside the cluster only through routes you deliberately add to the Ingress.

- Frontend Service (ClusterIP): Another ClusterIP Service is created for the frontend application, giving both other Pods and the Ingress controller a stable target.

- Database Service (ClusterIP): A ClusterIP Service is also created for the database, allowing backend Pods to connect to it. Because no Ingress rule points at it, the database is not exposed outside the cluster.

- Ingress Resource: An Ingress resource is created, using an Ingress Controller like Nginx or Traefik. The Ingress resource defines rules for routing external traffic.

- Routing Rules: The Ingress resource defines routing rules based on hostnames and paths. For example:

- Traffic to `www.example.com/api` is routed to the backend API Service.

- Traffic to `www.example.com/` is routed to the frontend Service.

- SSL Termination (Optional): The Ingress resource can be configured to handle SSL termination, providing secure HTTPS access to the application.

In this scenario, the ClusterIP Services provide internal access and load balancing within the cluster, while the Ingress resource manages external access, routing, and potentially SSL termination. This combination allows for a scalable, secure, and manageable application deployment. For example, if the frontend application is scaled to 3 pods, the Ingress controller would automatically distribute the traffic across all 3 pods, based on the defined rules.
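The scenario could be expressed roughly as follows. This is a sketch under stated assumptions: the Service names, the `app` labels, the container ports (8080 and 3000), the TLS Secret name, and the `nginx` IngressClass are all hypothetical, and the database Service is omitted because no Ingress rule points at it. Because Kubernetes gives precedence to the longest matching path, `/api/...` requests reach the backend API even though `/` also matches them.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend-api
spec:
  type: ClusterIP            # the default; reachable only inside the cluster
  selector:
    app: backend-api         # must match the labels on the backend Pods (assumed)
  ports:
    - port: 80               # port the Service exposes
      targetPort: 8080       # port the API container listens on (assumed)
---
apiVersion: v1
kind: Service
metadata:
  name: frontend
spec:
  type: ClusterIP
  selector:
    app: frontend            # assumed Pod label
  ports:
    - port: 80
      targetPort: 3000       # assumed container port
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-app-ingress
spec:
  ingressClassName: nginx
  tls:                       # optional SSL termination at the controller
    - hosts:
        - www.example.com
      secretName: www-example-com-tls   # assumed Secret holding the certificate
  rules:
    - host: www.example.com
      http:
        paths:
          - path: /api       # longer prefix wins over / for /api and /api/...
            pathType: Prefix
            backend:
              service:
                name: backend-api
                port:
                  number: 80
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend
                port:
                  number: 80
```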

Advanced Ingress Configurations

Ingress resources in Kubernetes offer a powerful way to manage external access to services within a cluster. Beyond basic routing, they provide advanced features to handle complex requirements such as SSL termination, traffic shaping, and request modification. This section delves into these advanced capabilities, offering practical examples and configurations.

SSL Termination

SSL termination, or TLS termination, is the process of decrypting SSL/TLS encrypted traffic at the Ingress controller. This offloads the encryption/decryption workload from the backend services, improving performance and simplifying certificate management. This can be especially beneficial for services that don’t inherently handle SSL. To configure SSL termination, you need to provide the Ingress controller with the necessary SSL/TLS certificates and keys.

These can be stored as Kubernetes Secrets. The Ingress resource then specifies the certificate and key to be used for the domain. Here’s an example of an Ingress resource configuration for SSL termination:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true" # Optional: Redirect HTTP to HTTPS
spec:
  tls:
    - hosts:
        - example.com
      secretName: example-tls-secret # Reference to a Secret containing the certificate and key
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-service
                port:
                  number: 80
```

In this example:

- The `tls` section defines the SSL/TLS configuration.

- `hosts` specifies the domain name (`example.com`) for which SSL termination is enabled.

- `secretName` references a Kubernetes Secret named `example-tls-secret`, which should contain the `tls.crt` (certificate) and `tls.key` (private key).

The `nginx.ingress.kubernetes.io/ssl-redirect: "true"` annotation (specific to the Nginx Ingress controller) automatically redirects HTTP traffic to HTTPS.

Load Balancing

Ingress controllers also act as load balancers, distributing traffic across multiple pods of a service. The Ingress resource itself doesn’t define the load balancing algorithm; this is handled by the Ingress controller’s underlying implementation (e.g., Nginx, Traefik). Common load balancing algorithms include round-robin, least connections, and IP hash. Depending on the controller, the algorithm can be changed through annotations on the Ingress resource or through the controller’s global configuration (for ingress-nginx, its ConfigMap). The load balancing behavior is determined by the Ingress controller and its configuration.

The Ingress resource directs traffic to the service, and the Ingress controller then distributes the traffic across the available pods of that service. Consider this example, where the service `my-app-service` has multiple pods:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app-ingress
spec:
  rules:
    - host: myapp.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app-service
                port:
                  number: 80
```

The Ingress controller will distribute traffic across all available pods associated with `my-app-service`, based on its default load balancing configuration (round-robin for ingress-nginx).
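Where clients need to stay on the same Pod (for example, because session state lives in memory), ingress-nginx can add cookie-based session affinity through annotations. A sketch, assuming ingress-nginx; the cookie name `route` and the one-hour lifetime are illustrative choices, and other controllers configure stickiness differently.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app-ingress
  annotations:
    # ingress-nginx: pin each client to one Pod using a cookie the controller issues
    nginx.ingress.kubernetes.io/affinity: "cookie"
    nginx.ingress.kubernetes.io/session-cookie-name: "route"     # hypothetical cookie name
    nginx.ingress.kubernetes.io/session-cookie-max-age: "3600"   # cookie lifetime in seconds
spec:
  ingressClassName: nginx
  rules:
    - host: myapp.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app-service
                port:
                  number: 80
```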

Rewrite Rules

Rewrite rules allow modification of the incoming request before it’s forwarded to the backend service. This is useful for tasks like:

- Changing the path of the request.

- Adding or modifying request headers.

- Redirecting requests to a different URL.

These rules are typically implemented through annotations specific to the Ingress controller being used. For example, the Nginx Ingress controller provides annotations for path rewriting. Here’s an example of using the Nginx Ingress controller to rewrite the path:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rewrite-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
  rules:
    - host: example.com
      http:
        paths:
          - path: /app(/|$)(.*) # Matches /app or /app/anything
            pathType: ImplementationSpecific # regex paths use this type in ingress-nginx
            backend:
              service:
                name: backend-service
                port:
                  number: 80
```

In this example:

- The Ingress controller is configured to rewrite the path using the `nginx.ingress.kubernetes.io/rewrite-target` annotation.

- The `path` is a regular expression with two capture groups: `(/|$)` matches the slash after `/app` (or the end of the path), and `(.*)` captures everything after it.

- The rewrite target `/$2` replaces the original path with a slash followed by the second captured group, effectively removing `/app` from the URL before forwarding the request to `backend-service`. So, a request to `/app/foo` will be rewritten to `/foo`, and a request to `/app` to `/`.
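For the third task listed above, redirecting to a different URL, ingress-nginx offers a `permanent-redirect` annotation. The sketch below assumes a hypothetical legacy host `old.example.com` whose clients should be sent to `https://www.example.com`; the backend is still required by the Ingress schema even though redirected requests are answered by the controller.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: legacy-redirect-ingress
  annotations:
    # ingress-nginx: answer requests for this host with a redirect to the given URL
    nginx.ingress.kubernetes.io/permanent-redirect: https://www.example.com
    # Optional: status code to send instead of the default 301
    nginx.ingress.kubernetes.io/permanent-redirect-code: "308"
spec:
  ingressClassName: nginx
  rules:
    - host: old.example.com          # hypothetical legacy host
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app-service # required by the schema
                port:
                  number: 80
```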

Implementing HTTP to HTTPS Redirection

Redirecting HTTP traffic to HTTPS is a common security practice. It ensures that all communication with your application is encrypted. This can be achieved using Ingress annotations. Here’s a configuration for HTTP to HTTPS redirection using the Nginx Ingress controller:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: https-redirect-ingress
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true" # Enables HTTP to HTTPS redirection
spec:
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app-service
                port:
                  number: 80
  tls: # ssl-redirect only takes effect for hosts listed here
    - hosts:
        - example.com
      secretName: tls-secret
```

In this example, the `nginx.ingress.kubernetes.io/ssl-redirect: "true"` annotation tells the Nginx Ingress controller to redirect HTTP traffic to HTTPS. The `tls` section defines the SSL/TLS configuration for the domain, using a Kubernetes Secret to store the certificate and key, and ingress-nginx applies `ssl-redirect` only when TLS is configured for the Ingress. If you are not terminating TLS at the Ingress controller (for example, because an external load balancer does it), omit the `tls` section and use `nginx.ingress.kubernetes.io/force-ssl-redirect: "true"` instead.
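When TLS is terminated before traffic reaches the controller, for example at a cloud load balancer, ingress-nginx provides `force-ssl-redirect`, which redirects even when the Ingress has no `tls` section. A sketch, assuming ingress-nginx. In this setup the controller can only tell HTTP from HTTPS if the load balancer forwards the original scheme (typically in `X-Forwarded-Proto`), and ingress-nginx generally trusts that header only when `use-forwarded-headers` is enabled in its ConfigMap.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: force-https-ingress
  annotations:
    # ingress-nginx: redirect to HTTPS although this Ingress has no tls section,
    # because TLS is assumed to be terminated by a load balancer in front of the controller
    nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
spec:
  ingressClassName: nginx
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app-service
                port:
                  number: 80
```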

Routing Traffic Based on Headers

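Ingress resources can be used to route traffic based on various criteria, including HTTP headers. This allows for sophisticated traffic management, such as A/B testing, canary deployments, and routing requests to different services based on user agents or other header values. The Ingress specification itself only matches hosts and paths, so header-based routing is a controller-specific extension. With the Nginx Ingress controller, the supported approach is the canary annotations: a second Ingress for the same host and path is marked as a canary and selected when a request header has a given value. Here’s an example that routes requests carrying a custom header to a different service:

```yaml
# Primary Ingress: requests go to service-c unless a canary rule matches
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: header-based-routing
spec:
  ingressClassName: nginx
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: service-c
                port:
                  number: 80
---
# Canary Ingress: same host and path, chosen when X-Custom-Header equals value1
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: header-based-routing-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "X-Custom-Header"
    nginx.ingress.kubernetes.io/canary-by-header-value: "value1"
spec:
  ingressClassName: nginx
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: service-a
                port:
                  number: 80
```

In this example:

- The first Ingress is the primary route: requests to `example.com` go to `service-c` by default.

- The second Ingress repeats the same host and path and is marked with `nginx.ingress.kubernetes.io/canary: "true"`.

- `canary-by-header` and `canary-by-header-value` tell the controller to send a request to `service-a` when its `X-Custom-Header` header equals `value1`.

- If the header is missing or has any other value, traffic falls through to `service-c`.

ingress-nginx applies at most one canary Ingress per host and path, so routing a second header value (such as `value2`) to yet another service (`service-b`) requires a controller with richer header matching or a separate host or path. Custom `if`/`proxy_pass` logic in a `nginx.ingress.kubernetes.io/configuration-snippet` annotation is not a reliable substitute, and recent ingress-nginx releases disable snippet annotations by default.

This approach provides flexibility in directing traffic based on header values, enabling features like user-specific routing or testing different versions of an application.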
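For percentage-based canary releases (the A/B and canary use cases mentioned above), ingress-nginx also supports weight-based canaries. The sketch assumes a primary, non-canary Ingress already routes `example.com/` to the stable Service, and `service-a-v2` is a hypothetical new version. Because ingress-nginx applies at most one canary Ingress per host and path, a weighted canary should not be added alongside another canary Ingress for the same route; a single canary Ingress can combine `canary-by-header` with `canary-weight`, in which case the header rule is checked first.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: weighted-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    # Send roughly 10% of requests for this host and path to the canary backend
    nginx.ingress.kubernetes.io/canary-weight: "10"
spec:
  ingressClassName: nginx
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: service-a-v2   # hypothetical new version of the application
                port:
                  number: 80
```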

Final Summary

In summary, this guide has provided a comprehensive overview of Kubernetes Services and Ingress, from their basic functionalities to advanced configurations. We’ve explored the nuances of each Service type, the advantages of using Ingress, and how to configure both to optimize your application deployments. By mastering these concepts, you’ll be well-equipped to build scalable, accessible, and robust applications within your Kubernetes environment.

Continuous learning and experimentation with these tools will further enhance your Kubernetes expertise, paving the way for more efficient and effective cloud-native application management.

Query Resolution

What is the primary difference between a Service and an Ingress?

A Service provides a stable IP address and DNS name for a set of Pods, acting as an internal load balancer within the cluster. Ingress, on the other hand, provides external access to these Services, often with features like routing, SSL termination, and load balancing.

Can I use an Ingress without a Service?

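In practice, no. An Ingress backend normally references a Service, and the controller uses that Service to find the Pods that receive the traffic. The Service can be created before or after the Ingress, but until it exists, requests matching that route cannot be served. (The `networking.k8s.io/v1` API also allows a `resource` backend that points at another kind of object, but support for it is controller-specific.)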

What are Ingress controllers, and why are they important?

Ingress controllers are implementations of the Ingress specification. They watch for changes to Ingress resources and configure a load balancer (like Nginx or Traefik) to route traffic according to the Ingress rules. They are essential for actually applying the Ingress configurations.

How do I choose an Ingress controller?

The choice of Ingress controller depends on your specific needs and environment. Consider factors such as features, performance, community support, and integration with your cloud provider. Popular choices include Nginx Ingress Controller, Traefik, and others, each offering different strengths.