The concept of infinite bandwidth, while appealing in theory, often proves to be a fallacy in real-world systems and processes. This comprehensive guide explores the pitfalls of assuming unlimited capacity and provides practical strategies to mitigate the inherent limitations. Understanding and addressing these constraints is crucial for successful project management, efficient system design, and optimized resource utilization.

This exploration delves into the complexities of finite bandwidth, examining how its limitations manifest across various domains, from software development to network design and data management. We’ll uncover the consequences of ignoring these limitations and provide actionable strategies for planning, implementing, and maintaining systems that account for realistic bandwidth constraints.

Defining the Fallacy

The “infinite bandwidth” fallacy, prevalent in various fields, arises from an oversimplified view of capacity and resource availability. It assumes that systems and processes can handle an unlimited flow of data or requests without experiencing performance degradation or bottlenecks. This assumption often leads to unrealistic expectations and potentially problematic design choices.

The core concept hinges on the belief that resources, like communication channels or processing power, can accommodate an arbitrarily large volume of information or tasks. This is a theoretical ideal, rarely reflecting reality. Practical systems inevitably face constraints. Understanding these limitations is critical for designing robust and efficient systems.

Assumptions and Limitations

The infinite bandwidth fallacy rests on several key assumptions that are frequently inaccurate in the real world. These assumptions often lead to underestimation of the true costs and challenges in designing and maintaining systems. A critical misunderstanding is the failure to account for the inherent limitations in processing, transmission, and storage capacities.

- Unbounded Capacity: Systems are often portrayed as possessing an infinite ability to handle requests or data flows. This is a false assumption. In reality, all systems have finite resources, including network bandwidth, storage capacity, and processing power.

- Constant Performance: The fallacy frequently overlooks the potential for performance degradation under high load. Systems are not designed to operate flawlessly under all conditions. Performance is dependent on the level of demand, and as load increases, so does the probability of errors and slowdowns.

- Ignoring Latency: The fallacy often ignores the delay associated with transmitting, processing, and responding to requests. These delays can accumulate, potentially causing unacceptable response times and system instability under heavy load.

- Uniform Resource Availability: It frequently overlooks the possibility of uneven resource distribution. In a distributed system, some parts might experience significant load while others remain relatively idle. This disparity can lead to bottlenecks in specific components.

Examples of the Fallacy

The infinite bandwidth fallacy manifests in various contexts, impacting design and operational decisions. These examples highlight the potential for problems when the limitations are not considered.

- Network Design: Network designers might overestimate the capacity of a network, neglecting the need for adequate routing and buffering to prevent congestion. This can lead to slowdowns and even complete network outages during peak hours.

- Software Design: Software applications are often designed around a fixed number of users or transactions. Without proper scaling mechanisms, these applications may become unresponsive or crash when the actual load exceeds the capacity they were designed for.

- Cloud Computing: Cloud-based services can appear to offer unlimited bandwidth. However, actual bandwidth capacity is limited by the physical infrastructure. Overestimating capacity can lead to unexpected costs and performance issues, especially during periods of high demand.

Theoretical vs. Practical Bandwidth

The distinction between theoretical and practical bandwidth is crucial in understanding the fallacy. Theoretical bandwidth represents the maximum possible data transfer rate, often stated in specifications. Practical bandwidth, however, is the rate achievable in a real-world setting, often significantly lower due to various factors.

- Theoretical Bandwidth: Represents the maximum possible data transfer rate under ideal conditions. This is often a calculated value and does not account for practical limitations.

- Practical Bandwidth: The actual rate of data transfer in a real-world scenario. This is influenced by factors like network congestion, hardware limitations, and software overhead. It’s often considerably lower than theoretical bandwidth.
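The gap between the two figures can be estimated with simple arithmetic. The sketch below is illustrative only: the function names, the 5% overhead default, and the example link are assumptions, not values from this guide. It relies on one well-known bound, namely that a single TCP flow cannot move more than one window of data per round trip.

```python
def tcp_throughput_ceiling_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound for one TCP flow: at most one window per round trip."""
    return window_bytes * 8 / rtt_seconds


def practical_bandwidth_bps(link_bps: float, window_bytes: int,
                            rtt_seconds: float,
                            overhead_fraction: float = 0.05) -> float:
    """Rough estimate: the smaller of the usable link rate and the window bound.

    overhead_fraction is an assumed allowance for headers and framing.
    """
    usable_link = link_bps * (1.0 - overhead_fraction)
    return min(usable_link, tcp_throughput_ceiling_bps(window_bytes, rtt_seconds))


# Hypothetical case: 1 Gbit/s link, 64 KiB window, 50 ms round-trip time.
estimate = practical_bandwidth_bps(1e9, 65_535, 0.05)
print(f"{estimate / 1e6:.1f} Mbit/s achievable on a 1000 Mbit/s link")
```

In this hypothetical case the window bound, not the link, is the limiting factor, which is the kind of constraint a specification sheet does not show.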

Consequences of Ignoring Limitations

Failing to acknowledge bandwidth limitations can have significant repercussions. These consequences range from minor performance issues to complete system failures.

- Performance Degradation: Systems may experience slowdowns, increased latency, and reduced responsiveness under high load. Users may encounter delays and frustration.

- System Instability: In extreme cases, ignoring bandwidth constraints can lead to system crashes, data loss, and security vulnerabilities.

- Increased Costs: Overestimating capacity can lead to unnecessary infrastructure investments. Underestimating it can necessitate costly upgrades to maintain performance levels.

- Security Risks: When systems are not properly scaled, they may become vulnerable to denial-of-service attacks. A system that cannot absorb or shed a flood of requests may crash or become unavailable to legitimate users, leading to downtime.

Recognizing the Fallacy in Practice

The infinite bandwidth fallacy, while seemingly benign in theoretical discussions, manifests significantly in practical applications, particularly in software development, business processes, and infrastructure planning. Understanding these manifestations is crucial for avoiding unrealistic expectations and managing projects effectively. The core problem lies in the tendency to underestimate the complexity and resources required to deliver systems with seemingly boundless capacity.

Software Development Manifestations

Software development projects frequently suffer from the infinite bandwidth fallacy. Teams might underestimate the time needed for complex integrations, unforeseen edge cases, or extensive testing. This can lead to rushed development cycles, compromised code quality, and ultimately, systems that are less reliable and robust than anticipated. A common example is estimating the time to develop a feature without considering the necessary design, coding, testing, and deployment phases.

Furthermore, neglecting the potential for unforeseen issues and their resolution results in overly optimistic timelines.

Impact on Project Timelines and Budgets

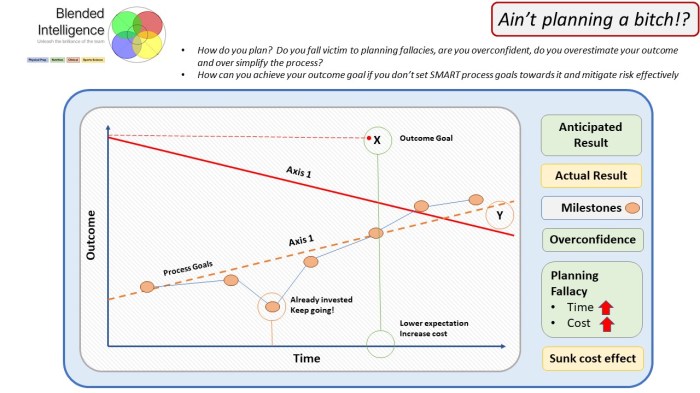

The fallacy often leads to unrealistic project timelines and budgets. Teams, driven by the illusion of unlimited resources, might overpromise on delivery dates and underestimate the cost of unforeseen challenges. This frequently results in project delays, cost overruns, and ultimately, reduced stakeholder satisfaction. For instance, a project aiming to integrate multiple legacy systems might underestimate the time required to address data discrepancies and migration issues.

Business Process Applications

Beyond software development, the infinite bandwidth fallacy impacts business processes. Businesses might assume they can seamlessly integrate new technologies or processes without considering the training, retraining, or cultural changes required. For example, a company adopting a new CRM system might underestimate the time needed to train employees on the new system and adapt their workflows. This can lead to inefficiencies, frustration, and ultimately, a failure to realize the benefits of the new system.

Network Design and Infrastructure Planning

The infinite bandwidth fallacy is also prominent in network design and infrastructure planning. Network architects might overestimate the capacity of current infrastructure or underestimate the impact of future growth. This can lead to insufficient bandwidth for expected traffic, causing performance bottlenecks and service disruptions. For instance, a company might assume their current network can handle the increasing demand of remote workers without upgrading their hardware or bandwidth allocation.

Realistic vs. Idealized Bandwidth Scenarios

| Characteristic | Realistic Bandwidth | Idealized Bandwidth |
|---|---|---|
| Initial Capacity | Limited by existing infrastructure, potentially requiring upgrades | Unlimited, accommodating all foreseen and unforeseen demands |
| Growth Capacity | Dependent on planned upgrades and scalability | Automatically adjusts to accommodate future demands |
| Traffic Patterns | Variable and potentially unpredictable, with peak loads | Uniform and predictable, with consistent demand |
| Maintenance | Requires ongoing monitoring and potential maintenance | Automatically managed and requires no maintenance |
| Cost | High upfront costs for upgrades and ongoing maintenance | Low ongoing costs with automated scaling |

This table illustrates the key differences between realistic and idealized bandwidth scenarios. Real-world scenarios involve variable traffic, limited initial capacity, and the need for ongoing maintenance, which are often neglected when the infinite bandwidth fallacy is applied.

Strategies for Mitigation

Addressing the infinite bandwidth fallacy requires a proactive and adaptable approach in software development. Ignoring finite bandwidth limitations can lead to project delays, cost overruns, and user frustration. Effective strategies for mitigation involve careful planning, efficient communication, and proactive problem-solving. These strategies ensure projects remain within reasonable timeframes and resource constraints.

Software development projects often assume unlimited bandwidth, which leads to unrealistic estimations and subsequent issues. Practical mitigation strategies recognize the limitations of real-world network conditions and incorporate them into the project lifecycle. This proactive approach ensures projects are not only feasible but also deliver value within defined constraints.

Design Strategies for Finite Bandwidth

Careful consideration of network conditions during the design phase is crucial. This includes optimizing data structures for efficient transmission and minimizing the volume of data exchanged. Utilizing techniques such as compression, caching, and message queuing can significantly reduce bandwidth consumption. Employing a modular design allows for incremental deployment and testing, enabling easier management of bandwidth needs. Data serialization and protocol selection should also be carefully considered to minimize the size of transferred data.

Planning and Managing Projects with Realistic Bandwidth Constraints

Realistic bandwidth constraints must be incorporated into project planning. This involves accurate estimations of data transfer rates, network latency, and potential congestion points. Project timelines should be adjusted to account for bandwidth-related delays. Agile methodologies, emphasizing iterative development and frequent testing, can help adapt to unexpected bandwidth limitations. Clearly defining bandwidth requirements and dependencies early in the project lifecycle will aid in successful implementation.
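A back-of-the-envelope estimate is often enough to test a plan against bandwidth constraints. The sketch below is a simplification under stated assumptions: the function names, the 1.5 safety factor, and the example figures are hypothetical, and the model ignores congestion and retransmissions.

```python
def estimated_transfer_seconds(payload_bytes: int, bandwidth_bps: float,
                               rtt_seconds: float = 0.0,
                               round_trips: int = 1) -> float:
    """Latency cost plus serialization time for one transfer."""
    return round_trips * rtt_seconds + payload_bytes * 8 / bandwidth_bps


def fits_in_window(payload_bytes: int, bandwidth_bps: float,
                   window_seconds: float, safety_factor: float = 1.5) -> bool:
    """True if the transfer, padded by an assumed safety factor, fits the window."""
    padded = estimated_transfer_seconds(payload_bytes, bandwidth_bps) * safety_factor
    return padded <= window_seconds


# Hypothetical case: 500 MB over a 100 Mbit/s link with 80 ms round-trip time.
seconds = estimated_transfer_seconds(500_000_000, 100_000_000, rtt_seconds=0.08)
```

Replacing the assumed bandwidth with a measured figure from the target environment makes the estimate considerably more useful.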

Improving Communication Efficiency

Efficient communication is vital for navigating bandwidth limitations. Strategies such as using asynchronous communication channels (e.g., email, message queues) can reduce the demand on real-time connections. Prioritizing essential communication channels and avoiding unnecessary data transmission can significantly improve efficiency. Optimizing communication protocols for reduced bandwidth usage, and utilizing data compression techniques when applicable, are also key. Regular communication about bandwidth limitations and potential impact on project timelines ensures alignment among team members.

Anticipating and Addressing Potential Bottlenecks

Anticipating potential bottlenecks is critical for successful project completion. Analyzing network traffic patterns, identifying potential congestion points, and proactively implementing solutions is essential. Testing different network configurations and scenarios allows for early identification of potential issues. Implementing load balancing strategies distributes traffic across multiple servers, reducing the strain on any single point of access. Thorough monitoring of network performance during testing and deployment phases is critical for proactive management of bottlenecks.

Common Pitfalls and Preventative Measures

| Pitfall | Preventative Measure |
|---|---|
| Ignoring network latency | Incorporate latency estimations in project timelines and design for asynchronous operations. |
| Insufficient data compression | Implement robust data compression algorithms and protocols. |
| Over-reliance on real-time communication | Employ asynchronous communication channels wherever possible. |
| Lack of bandwidth monitoring | Implement proactive network monitoring tools and establish regular performance reviews. |
| Unrealistic bandwidth estimations | Utilize historical data and real-world scenarios for more accurate estimations. |

Impact on System Design

Designing robust systems capable of handling fluctuating bandwidth is crucial for reliable performance. Ignoring the potential for bandwidth limitations can lead to system instability and poor user experience. Effective system design anticipates varying bandwidth conditions, ensuring smooth operation under stress and preventing catastrophic failure.

Bandwidth-Adaptive System Design

A key aspect of bandwidth-adaptive design is incorporating mechanisms that allow the system to adjust its resource allocation and operation in response to changing bandwidth conditions. This can involve dynamic scaling of resources, such as increasing server capacity during peak demand or decreasing it during lulls. Furthermore, systems should be able to prioritize critical tasks, ensuring essential services continue even when bandwidth is constrained.

Adaptive routing and load balancing strategies are vital in this context.

Queuing Systems for Bandwidth Fluctuations

Queuing systems are fundamental to managing bandwidth fluctuations. They allow the system to buffer incoming requests during periods of high bandwidth utilization. By storing requests in a queue, the system can process them sequentially, avoiding the risk of overwhelming the system’s processing capacity when bandwidth is limited. This approach allows the system to maintain responsiveness even during transient bandwidth bottlenecks.

Proper queue management is crucial; implementing prioritization schemes and managing queue lengths to prevent system lock-up is essential.
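Prioritization and bounded queue length can be combined in one small structure. The following is a minimal sketch, not a production queue: the class name and the reject-when-full policy are assumptions chosen for illustration, and other policies (such as dropping the lowest-priority entry) are equally valid.

```python
import heapq
import itertools


class BoundedPriorityQueue:
    """Buffers requests up to max_size; a lower priority number is served first."""

    def __init__(self, max_size: int):
        self.max_size = max_size
        self._heap = []
        # Tie-breaker so equal-priority requests leave in arrival order.
        self._counter = itertools.count()

    def offer(self, priority: int, request) -> bool:
        """Queue the request, or return False when the queue is full."""
        if len(self._heap) >= self.max_size:
            return False  # caller can retry later or report "busy"
        heapq.heappush(self._heap, (priority, next(self._counter), request))
        return True

    def poll(self):
        """Return the most urgent request, or None when the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

Rejecting new work when the queue is full keeps memory use bounded and gives callers an explicit signal to back off, rather than letting latency grow without limit.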

Load Balancing for Mitigating Issues

Load balancing is a vital strategy for distributing traffic across multiple servers or resources. This ensures that no single component is overwhelmed by a sudden surge in traffic. By distributing the load, the system can maintain responsiveness and stability even during peak demand. This prevents bottlenecks and ensures the system remains accessible and responsive under variable bandwidth conditions.

Fail-Safes for Bandwidth Limitations

Implementing fail-safes is essential to protect against complete system failure in the face of bandwidth limitations. This might include mechanisms to temporarily reduce the number of active requests or to prioritize essential services. Thresholds should be set to trigger these fail-safes. For example, if bandwidth falls below a certain level, the system might gracefully degrade by temporarily disabling non-essential features.
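Threshold-based degradation can be expressed as a simple lookup. In the sketch below, the feature names and threshold values are hypothetical placeholders; a real system would derive them from its own measurements and priorities.

```python
# Hypothetical feature tiers: a feature stays enabled only while measured
# bandwidth is at or above its threshold (in Mbit/s).
FEATURE_THRESHOLDS_MBPS = {
    "core_api": 0.0,
    "image_thumbnails": 2.0,
    "video_previews": 10.0,
}


def enabled_features(measured_mbps: float,
                     thresholds: dict = FEATURE_THRESHOLDS_MBPS) -> set:
    """Return the set of features that should remain active."""
    return {name for name, minimum in thresholds.items()
            if measured_mbps >= minimum}
```

A production version would likely add hysteresis (separate on and off thresholds) so that features do not flap when bandwidth hovers around a boundary.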

Table of Load Balancing Strategies

| Load Balancing Strategy | Description | Effectiveness |
|---|---|---|
| Round Robin | Distributes traffic evenly across servers by sequentially assigning requests. | Good for simple scenarios, but can lead to uneven load if servers have varying processing speeds. |
| Least Connections | Sends requests to the server with the fewest active connections. | Effective in preventing overload on specific servers. |
| Weighted Round Robin | Assigns weights to servers based on their capacity or performance. | More adaptable to variations in server performance and capacity. |
| IP Hashing | Uses the client’s IP address to determine which server handles the request. | Maintains consistent traffic routing for a given client. Can be problematic with dynamic client IP addresses. |
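The four strategies in the table can each be sketched in a few lines. These are simplified illustrations with assumed function names and server labels, not the algorithms of any particular load balancer; in particular, the weighted variant is the naive form that repeats each server according to its weight.

```python
import hashlib
import itertools


def round_robin(servers):
    """Yield servers in order, wrapping around indefinitely."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """weights maps server -> positive integer; heavier servers appear more often."""
    expanded = [server for server, weight in weights.items()
                for _ in range(weight)]
    return itertools.cycle(expanded)


def least_connections(active_connections):
    """Pick the server with the fewest active connections."""
    return min(active_connections, key=active_connections.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server so the same client always lands on the same one."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


picker = round_robin(["a", "b"])
first_three = [next(picker) for _ in range(3)]
```

Note that the simple modulo mapping in `ip_hash` reassigns many clients whenever the server list changes; consistent hashing is a common way to reduce that churn.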

Data Management and Bandwidth

Effective data management is crucial for mitigating the infinite bandwidth fallacy. Optimizing storage, retrieval, and processing strategies directly impacts system performance and cost-effectiveness. Efficient data handling becomes paramount when dealing with large datasets and high-throughput demands. This section details strategies to optimize data management for bandwidth limitations.

Optimizing Data Storage and Retrieval Strategies

Efficient data storage and retrieval are vital to minimize bandwidth consumption. Techniques like data partitioning and sharding can distribute data across multiple storage units, reducing the load on any single point of access. This distributed approach allows for parallel retrieval requests, significantly enhancing overall performance. Appropriate indexing mechanisms enable quick and targeted data searches, reducing the amount of data transferred over the network.

Choosing appropriate storage mediums, such as SSDs or cloud storage solutions optimized for read/write speeds, can also substantially improve retrieval efficiency.
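Hash-based sharding is one common way to decide which storage unit holds a given key. The sketch below assumes string keys and a fixed shard count; the function names are illustrative.

```python
import zlib
from collections import defaultdict


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard index in the range [0, shard_count)."""
    # crc32 is stable across processes, unlike Python's built-in hash() for str.
    return zlib.crc32(key.encode("utf-8")) % shard_count


def group_by_shard(keys, shard_count: int) -> dict:
    """Group keys by shard so each shard can be queried once, in parallel."""
    groups = defaultdict(list)
    for key in keys:
        groups[shard_for(key, shard_count)].append(key)
    return dict(groups)
```

Grouping a batch of lookups by shard turns many small requests into one request per shard, which reduces round trips as well as load on any single node.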

Data Compression Strategies

Data compression techniques are essential for reducing the volume of data transmitted over networks. Different compression algorithms offer varying degrees of compression ratios and computational overhead. Lossless compression methods, such as gzip or bzip2, preserve the original data integrity, which is critical for applications requiring precise data replication. Lossy compression, on the other hand, discards some data to achieve higher compression ratios, which is suitable for applications where some data loss is tolerable, such as image or audio files.

Proper selection of the compression algorithm is paramount, balancing compression ratio against computational cost and, for lossy methods, the acceptable loss in data fidelity.
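Because results depend heavily on the data, it is worth measuring candidate algorithms on a representative sample. The sketch below uses three lossless codecs from the Python standard library; the sample payload is an invented log line repeated many times, so its ratios will be far better than those of typical real data.

```python
import bz2
import gzip
import lzma


def compressed_sizes(data: bytes) -> dict:
    """Return the byte size of the input under each lossless codec."""
    return {
        "original": len(data),
        "gzip": len(gzip.compress(data)),
        "bz2": len(bz2.compress(data)),
        "lzma": len(lzma.compress(data)),
    }


# Illustrative, highly repetitive payload (not real measurements).
sample = b"timestamp=2024-01-01 level=INFO msg=request served\n" * 2000
sizes = compressed_sizes(sample)

for name, size in sizes.items():
    print(f"{name:>8}: {size} bytes")
```

Timing each call alongside the size gives the other half of the trade-off, since stronger compression generally costs more CPU time.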

Data Caching Strategies

Caching frequently accessed data locally or in intermediary servers dramatically reduces the bandwidth burden on the primary data source. Implementing a caching layer can significantly speed up data retrieval, reducing latency and response times. Eviction policies, such as Least Recently Used (LRU) or First In First Out (FIFO), determine which entries are discarded when the cache is full, ensuring efficient use of available resources.

Properly configuring cache invalidation mechanisms is critical to prevent outdated data from being served.
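An LRU cache with explicit invalidation can be sketched with the standard library alone. The class below is a minimal single-threaded illustration with assumed method names; it omits time-based expiry and locking.

```python
from collections import OrderedDict


class LRUCache:
    """Keeps at most `capacity` entries, evicting the least recently used one."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used entry

    def invalidate(self, key) -> None:
        """Remove an entry, for example after the source data has changed."""
        self._items.pop(key, None)
```

Calling `invalidate` whenever the underlying record is updated is the simplest way to avoid serving stale data, at the cost of one extra fetch on the next read.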

Optimizing Data Pipelines and Workflows

Efficient data pipelines are essential for moving data from source to destination with minimal overhead. Streamlining the data flow, including minimizing redundant steps and optimizing data transformations, is crucial for minimizing bandwidth consumption. Employing asynchronous processing techniques can further reduce the strain on bandwidth, allowing the pipeline to continue functioning without waiting for individual tasks to complete. Monitoring data pipeline performance is essential to identify bottlenecks and optimize the workflow for maximum efficiency.
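Asynchronous processing with a cap on in-flight work keeps a pipeline busy without flooding the network. The sketch below uses `asyncio` from the standard library; the `transform` step is a stand-in for real I/O, and the limit of four concurrent tasks is an arbitrary assumption.

```python
import asyncio


async def transform(record: int) -> int:
    """Placeholder for a network or disk operation on one record."""
    await asyncio.sleep(0)  # yields control, as real I/O would
    return record * 2


async def run_pipeline(records, max_in_flight: int = 4) -> list:
    """Process records concurrently, with at most max_in_flight at a time."""
    limit = asyncio.Semaphore(max_in_flight)

    async def guarded(record):
        async with limit:
            return await transform(record)

    # gather returns results in the same order as the inputs.
    return await asyncio.gather(*(guarded(r) for r in records))


results = asyncio.run(run_pipeline([1, 2, 3]))
```

The semaphore is the bandwidth-relevant part: it bounds how many transfers compete for the link at once, regardless of how many records are waiting.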

Data Compression Techniques

| Compression Technique | Description | Typical Size Reduction (data-dependent) | Use Cases |
|---|---|---|---|
| GZIP | A lossless compression algorithm widely used for general-purpose data compression. | 50-70% | General data archiving, text files, web content |
| BZIP2 | Another lossless compression algorithm that generally provides higher compression ratios than GZIP, at the cost of slower compression. | 60-80% | Archiving large files, backup data, source code |
| LZMA | A lossless compression algorithm known for its high compression ratio; compression is typically slower than GZIP. | 70-90% | Archiving, backup, software distribution |
| PNG | Lossless compression for images. | 50-80% | Web graphics, images |
| JPEG | Lossy compression for images. | 70-95% | Photographs, images where some loss is acceptable |

Network Design Considerations

Addressing the finite nature of bandwidth necessitates careful network design. Effective strategies for mitigating the infinite bandwidth fallacy in network design involve understanding and implementing appropriate network topologies, segmentation techniques, and proactive monitoring and management. This section will delve into these crucial considerations.

Network architecture must reflect the limitations of bandwidth to ensure efficient data transfer and optimal performance. Proper topology selection and proactive bandwidth management are key to preventing bottlenecks and ensuring responsiveness in today’s demanding network environments.

Network Topologies for Optimal Bandwidth Utilization

Careful consideration of network topology is essential for maximizing bandwidth utilization. Different topologies offer varying degrees of scalability, resilience, and performance characteristics.

- Star Topology: This topology, where all devices connect to a central hub, is relatively simple to implement and manage. However, a single point of failure at the central hub can disrupt the entire network. Bandwidth capacity is often shared, meaning individual device bandwidth can be affected by network traffic.

- Bus Topology: A linear arrangement of devices connected to a common cable. While simple to implement, this topology suffers from a single point of failure and contention for the shared bandwidth. Any device on the bus can interrupt data transmission for others. This makes it less efficient for high-bandwidth applications.

- Ring Topology: Data travels in a circular path through the network. This topology provides a degree of redundancy and can potentially handle more traffic than a bus topology, but a break in the ring can halt transmission.

- Mesh Topology: Multiple connections between devices provide redundancy and multiple pathways for data transmission. This is generally more robust than other topologies, and better suited for high-bandwidth applications. However, it often requires more cabling and setup complexity.

Network Segmentation to Improve Performance

Network segmentation divides the network into smaller, more manageable segments. This approach can significantly improve performance by isolating traffic and preventing congestion in one part of the network from impacting other parts.

- Improved Security: Isolating sensitive data on separate segments enhances security by limiting the impact of a breach. Attackers can be contained within a smaller segment.

- Enhanced Performance: Reducing network congestion on a specific segment prevents performance issues for devices in other segments.

- Increased Scalability: Adding new devices or services to a segmented network doesn’t impact other segments’ performance.

Proactive Monitoring and Management of Network Bandwidth

Proactive monitoring and management of network bandwidth are critical for optimizing performance. Tools and techniques can be used to identify and address bandwidth bottlenecks before they impact users or applications.

- Real-time Monitoring Tools: Tools for monitoring network traffic and bandwidth utilization provide insights into bottlenecks and areas of congestion. These insights can inform adjustments to network configurations.

- Traffic Shaping: Rate-limiting or delaying lower-priority traffic so that latency-sensitive traffic gets through can improve overall network performance. For example, video conferencing might be prioritized over file transfers.

- Bandwidth Allocation: Assigning specific bandwidth limits to different applications or users ensures that no single entity monopolizes the network.
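A token bucket is one widely used mechanism for enforcing a per-user or per-application bandwidth limit while still allowing short bursts. The sketch below is illustrative: the class and parameter names are assumptions, and the caller supplies the timestamp (for example from `time.monotonic()`) so the behavior is easy to test.

```python
class TokenBucket:
    """Allows rate_bytes_per_s on average, with bursts up to burst_bytes."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float):
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start with a full bucket
        self.last = 0.0

    def allow(self, nbytes: int, now: float) -> bool:
        """Return True and spend tokens if nbytes may be sent at time `now`."""
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, never beyond the burst size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False
```

A sender that receives `False` can either drop the data (policing) or wait and retry (shaping); which one is appropriate depends on the traffic type.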

Illustrative Network Topologies and Bandwidth Implications

The following table summarizes different network topologies and their bandwidth implications. Bandwidth utilization is impacted by the number of devices, the type of traffic, and the topology itself.

| Topology | Bandwidth Implications | Example Use Case |
|---|---|---|
| Star | Central hub can become a bottleneck, sharing bandwidth. | Small office network |
| Bus | Shared bandwidth, prone to contention. | Older LAN setups |
| Ring | Can handle more traffic than a bus, but a break in the ring halts transmission. | Early token ring networks |
| Mesh | Multiple pathways for data transmission, higher resilience. | Large enterprise networks, high-bandwidth applications |

Process Improvement and Efficiency

Minimizing bandwidth consumption is crucial for system stability and cost-effectiveness. Efficient processes and streamlined workflows are key to achieving this goal. Optimizing these areas allows systems to operate with reduced strain on network resources, enhancing overall performance and reliability.

Streamlining Processes to Minimize Bandwidth Usage

Effective strategies for minimizing bandwidth usage require a systematic approach to process optimization. Identifying and eliminating redundant steps, consolidating data transfer points, and implementing data compression techniques are vital. For example, consolidating multiple data transfer points into a single, centralized hub can significantly reduce the overall bandwidth required for data exchange.

Automating Tasks to Reduce Manual Bandwidth Consumption

Automation of tasks reduces manual intervention, which often involves unnecessary data transfers and communications. Automating data backups, report generation, and other routine tasks can substantially decrease bandwidth usage. Consider using scripting languages or dedicated automation tools to streamline these operations. For instance, automating the generation of weekly reports can eliminate the need for manual uploads, significantly reducing bandwidth consumption.

Improving Communication Protocols for Enhanced Efficiency

Optimizing communication protocols is another critical aspect of process improvement. Using more efficient protocols, such as HTTP/2 (which multiplexes requests over a single connection and compresses headers) or WebSockets for real-time communication (which avoid repeating HTTP headers on every message), can decrease the amount of data transmitted. Employing compression algorithms to reduce the size of data packets transmitted over the network is also effective.

Prioritizing Tasks Based on Bandwidth Availability

Bandwidth prioritization strategies are essential for managing network traffic effectively. Establishing a system for classifying tasks based on their urgency and bandwidth requirements is crucial. This prioritization can be based on factors such as real-time requirements, the sensitivity of the data, and the impact of delays on downstream processes. Implementing a queuing system based on these priorities can effectively manage bandwidth allocation.

Optimized Process Workflow

| Task | Description | Bandwidth Impact | Action |
|---|---|---|---|
| Data Collection | Gathering data from various sources. | High | Optimize data collection methods (e.g., batch processing). |
| Data Processing | Processing collected data. | Moderate | Utilize efficient algorithms and minimize intermediate data transfers. |
| Data Transfer | Transferring processed data. | High | Prioritize based on urgency and implement compression techniques. |
| Data Storage | Storing the data. | Low | Utilize efficient storage methods (e.g., optimized databases). |

Communication Protocols and Bandwidth

Choosing appropriate communication protocols is crucial for minimizing bandwidth consumption and ensuring efficient data transmission. Effective selection depends on factors such as data type, transmission speed requirements, and network infrastructure. Properly implemented protocols can dramatically improve overall system performance and reduce costs associated with excessive bandwidth usage.

Selecting and implementing optimal communication protocols directly impacts the efficiency and effectiveness of data transfer within a system. Understanding the nuances of various protocols, including their strengths and weaknesses regarding bandwidth utilization, is essential for making informed decisions. Strategies for data compression and transmission prioritization, alongside a comprehensive understanding of different protocol characteristics, are vital for mitigating the infinite bandwidth fallacy.

Choosing Appropriate Communication Protocols

Effective communication protocols are paramount for efficient data transfer. Factors like the nature of the data, desired transmission speed, and the existing network infrastructure are essential considerations in protocol selection. Careful evaluation ensures the chosen protocol effectively manages bandwidth usage and meets performance requirements. Selecting the most appropriate protocol based on these factors minimizes potential issues related to bandwidth limitations and promotes optimal performance.

Data Compression Strategies

Data compression techniques play a critical role in reducing bandwidth usage. Various methods exist, ranging from simple lossless compression algorithms to sophisticated lossy compression methods. Lossless compression, such as gzip or bzip2, maintains the original data integrity, while lossy compression, like JPEG or MP3, sacrifices some data fidelity for significant size reduction. The optimal compression method depends on the sensitivity of the data to loss and the desired level of compression.

Carefully choosing the compression algorithm preserves the level of data fidelity the application requires while significantly reducing bandwidth consumption.

Prioritizing Data Transmission

Prioritizing data transmission based on bandwidth availability is a crucial aspect of optimizing network performance. Different data types may have varying priorities. Real-time data, such as video conferencing or online gaming, might require higher priority than non-critical data, like backups or scheduled reports. Dynamic prioritization mechanisms can adapt to changing bandwidth conditions, ensuring critical data transmission is not affected by lower priority data.
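Strict-priority allocation is the simplest form of this idea: the most important flows are served first, and whatever capacity remains goes to the rest. The sketch below assumes hypothetical flow names and figures, and uses a lower number to mean higher priority.

```python
def allocate_bandwidth(available_mbps, demands):
    """Grant bandwidth in priority order until capacity runs out.

    demands is a list of (name, priority, requested_mbps) tuples;
    a lower priority number is served first.
    """
    allocation = {}
    remaining = available_mbps
    for name, _priority, requested in sorted(demands, key=lambda d: d[1]):
        granted = min(requested, remaining)
        allocation[name] = granted
        remaining -= granted
    return allocation


# Hypothetical demands on a 10 Mbit/s link.
plan = allocate_bandwidth(10, [
    ("backup", 3, 8),
    ("video_call", 1, 4),
    ("reports", 2, 3),
])
```

Re-running the allocation whenever the measured capacity changes gives a basic form of dynamic prioritization. Strict priority can starve low-priority flows entirely, so weighted schemes are often preferred when every flow needs some minimum share.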

Comparison of Communication Protocols

Different communication protocols exhibit varying bandwidth efficiency. Understanding these differences is crucial for choosing the optimal protocol for a given application. A detailed comparison allows for the selection of the most appropriate protocol, enabling efficient data transmission.

Bandwidth Efficiency Comparison Table

| Protocol | Description | Bandwidth Efficiency (General Ranking) | Use Cases |
|---|---|---|---|
| TCP (Transmission Control Protocol) | Reliable, connection-oriented protocol with error checking and retransmission. | Moderate | File transfer, web browsing, email |
| UDP (User Datagram Protocol) | Connectionless protocol, faster than TCP but less reliable. | High | Streaming media, online gaming, DNS lookups |
| HTTP (Hypertext Transfer Protocol) | Application-layer protocol for transferring hypermedia documents. | Moderate | Web browsing, accessing web resources |
| MQTT (Message Queuing Telemetry Transport) | Lightweight publish/subscribe protocol for IoT applications. | Very High | Internet of Things (IoT) devices, sensor data transmission |
| FTP (File Transfer Protocol) | Connection-oriented protocol designed for file transfer. | Moderate | File transfer over networks |

Note: Bandwidth efficiency is a relative measure and can vary depending on implementation details and network conditions. The table provides a general comparison.
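Part of the difference between TCP and UDP comes from fixed header sizes, which matter most for small messages. The sketch below uses the minimum header lengths (20 bytes for IPv4, 20 for TCP, 8 for UDP, all without options) and ignores link-layer framing, acknowledgements, and retransmissions, so it shows only one component of real-world efficiency.

```python
IPV4_HEADER_BYTES = 20  # minimum, without options
TCP_HEADER_BYTES = 20   # minimum, without options
UDP_HEADER_BYTES = 8


def payload_efficiency(payload_bytes: int, transport_header_bytes: int) -> float:
    """Fraction of each IP packet that carries application payload."""
    total = payload_bytes + transport_header_bytes + IPV4_HEADER_BYTES
    return payload_bytes / total


small_udp = payload_efficiency(100, UDP_HEADER_BYTES)
small_tcp = payload_efficiency(100, TCP_HEADER_BYTES)
large_tcp = payload_efficiency(1400, TCP_HEADER_BYTES)
```

For a 100-byte payload the header difference is noticeable, while for payloads near a typical packet size it becomes minor, which is why batching small messages is often as effective as switching protocols.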

Measuring and Monitoring Bandwidth Usage

Accurately measuring and monitoring bandwidth usage is critical for identifying bottlenecks, optimizing network performance, and allocating resources efficiently. Effective monitoring surfaces potential issues early, allowing timely adjustments before they turn into service disruptions. Understanding bandwidth consumption patterns is also essential for making informed decisions about network infrastructure upgrades, application deployments, and resource allocation.

Together, these measurements give administrators a comprehensive view of bandwidth utilization, so they can pinpoint areas of high consumption and tune the network accordingly.

Methods for Measuring Bandwidth Consumption

Various methods exist for measuring bandwidth consumption across different systems. Network interface cards (NICs) often provide real-time bandwidth usage data. Dedicated monitoring tools and software provide comprehensive metrics, including inbound and outbound traffic, over specific time periods. Specialized network analyzers can dissect traffic at a deeper level, capturing packet-by-packet information to pinpoint bottlenecks and performance issues. Furthermore, cloud-based services and platforms offer robust monitoring solutions for analyzing bandwidth usage in cloud environments.
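
Most of these methods reduce to the same arithmetic: sample a cumulative byte counter twice and divide the difference by the elapsed time. The sketch below assumes the two counter readings have already been obtained from some source (for example, interface statistics exposed by the operating system or a monitoring agent). The function name and the sample values are hypothetical.

```python
def throughput_bps(bytes_start: int, bytes_end: int, seconds: float) -> float:
    """Average throughput in bits per second between two counter samples."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    if bytes_end < bytes_start:
        # A cumulative counter that went backwards has wrapped or been reset;
        # the sample pair cannot be trusted.
        raise ValueError("counter reset or wraparound detected")
    return (bytes_end - bytes_start) * 8 / seconds


# Hypothetical readings of an interface's received-bytes counter, 10 s apart.
rate = throughput_bps(bytes_start=1_000_000, bytes_end=2_250_000, seconds=10)
print(f"{rate / 1_000_000:.2f} Mbit/s")
```

Shorter sampling intervals reveal bursts that longer averages hide, at the cost of noisier data.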

Tools and Techniques for Monitoring Bandwidth Usage Over Time

Monitoring tools and techniques play a vital role in tracking bandwidth usage over time. These tools often include graphical representations of bandwidth consumption, providing a clear visual representation of trends and anomalies. Real-time dashboards and reporting features are key components for continuous monitoring. Comprehensive logging and data collection allow for detailed analysis and trend identification. Furthermore, these tools can be configured to alert administrators of exceeding thresholds, allowing for immediate action to prevent service disruptions.
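
Threshold alerting can be sketched in a few lines. To avoid alerting on a single momentary spike, the example below only reports runs where utilization stays at or above the threshold for several consecutive samples. The function name, the 90% threshold, and the sample series are illustrative assumptions; real monitoring tools expose their own configuration for this.

```python
def sustained_breaches(samples, threshold, min_consecutive=3):
    """Return (start_index, end_index) pairs for each run of samples that
    stays at or above `threshold` for at least `min_consecutive` samples."""
    runs = []
    run_start = None
    for index, value in enumerate(samples):
        if value >= threshold:
            if run_start is None:
                run_start = index
        else:
            if run_start is not None and index - run_start >= min_consecutive:
                runs.append((run_start, index - 1))
            run_start = None
    # Close a run that extends to the end of the series.
    if run_start is not None and len(samples) - run_start >= min_consecutive:
        runs.append((run_start, len(samples) - 1))
    return runs


# Hypothetical utilization percentages, one sample per minute.
utilization = [40, 95, 50, 92, 96, 99, 60, 91, 93, 97]
alerts = sustained_breaches(utilization, threshold=90, min_consecutive=3)
print(alerts)
```

Here the isolated spike at index 1 is ignored, while the two three-sample runs would each trigger an alert.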

Identifying Bottlenecks and Performance Issues

Identifying bottlenecks and performance issues related to bandwidth requires a multi-faceted approach. Analyzing bandwidth usage patterns across different applications and network segments is crucial. High bandwidth consumption from a specific application or device can point to a potential issue. Network performance monitoring tools often provide insights into network latency and packet loss, indicators of bandwidth-related bottlenecks. Careful analysis of traffic patterns can highlight specific times of high consumption, potentially revealing recurring issues or problems related to specific applications or users.

Strategies for Tracking and Analyzing Bandwidth Trends

Tracking and analyzing bandwidth trends involves the use of historical data and forecasting tools. Historical data provides insights into bandwidth consumption patterns over time, enabling the identification of seasonal trends or usage spikes. Predictive modeling can help anticipate future bandwidth demands, enabling proactive resource allocation and infrastructure planning. Furthermore, analyzing bandwidth trends across different user groups and applications provides a granular understanding of usage patterns and allows for targeted optimization strategies.
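
As a deliberately simple illustration of predictive modeling, the sketch below fits a straight line to historical usage by ordinary least squares and extrapolates it forward. This assumes roughly linear growth and ignores seasonality, so it is a starting point rather than a forecasting method to rely on. The function names and the monthly figures are hypothetical.

```python
def fit_linear_trend(values):
    """Least-squares line through (0, values[0]), (1, values[1]), ...

    Returns (slope, intercept).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(values, periods_ahead):
    """Extrapolate the fitted trend `periods_ahead` steps past the last sample."""
    slope, intercept = fit_linear_trend(values)
    return intercept + slope * (len(values) - 1 + periods_ahead)


# Hypothetical peak usage in Mbit/s for four consecutive months.
monthly_peak_mbps = [100, 110, 120, 130]
projected = forecast(monthly_peak_mbps, periods_ahead=2)
print(f"Projected peak in two months: {projected:.0f} Mbit/s")
```

Comparing the projection against provisioned capacity gives an early indication of when an upgrade may be needed.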

Dashboard Design for Visualizing Bandwidth Usage Trends

A well-designed dashboard is crucial for visualizing bandwidth usage trends. The dashboard should incorporate interactive charts and graphs to display real-time and historical bandwidth consumption. Key performance indicators (KPIs), such as average bandwidth usage, peak bandwidth usage, and bandwidth utilization percentage, should be prominently displayed. Filtering options for different time periods and network segments are essential to allow for a granular analysis of usage patterns. Color-coding can effectively highlight areas of high consumption and potential bottlenecks.

| KPI | Description | Visualization |
|---|---|---|
| Average Bandwidth Usage | The average bandwidth consumption over a specified period. | Line chart |
| Peak Bandwidth Usage | The highest bandwidth consumption observed during a period. | Bar chart |
| Bandwidth Utilization Percentage | The percentage of available bandwidth currently in use. | Gauge chart |

A well-designed dashboard facilitates quick identification of bandwidth-related issues, enabling proactive adjustments to network configuration.
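
The three KPIs in the table are straightforward to compute from a series of throughput samples. The sketch below is one possible way to derive them before handing the values to a charting layer. The function name, the dictionary keys, and the 1000 Mbit/s link capacity are assumptions made for illustration.

```python
def bandwidth_kpis(samples_mbps, capacity_mbps):
    """Compute dashboard KPIs from throughput samples (oldest first)."""
    if not samples_mbps:
        raise ValueError("samples_mbps must not be empty")
    if capacity_mbps <= 0:
        raise ValueError("capacity_mbps must be positive")
    return {
        "average_mbps": sum(samples_mbps) / len(samples_mbps),
        "peak_mbps": max(samples_mbps),
        # Utilization reflects the most recent sample, i.e. "currently in use".
        "utilization_pct": samples_mbps[-1] * 100 / capacity_mbps,
    }


# Hypothetical samples on a link assumed to have 1000 Mbit/s of capacity.
kpis = bandwidth_kpis([200, 400, 600], capacity_mbps=1000)
print(kpis)
```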

Case Studies and Real-World Examples

The infinite bandwidth fallacy, while often a convenient simplifying assumption, can cause significant problems in real-world systems if left unexamined. Seeing how organizations have navigated bandwidth limitations, and what happened when others ignored them, provides valuable insight into practical mitigation strategies. This section presents case studies of both kinds: successful approaches to managing bandwidth constraints and failures that resulted from assuming capacity was unlimited.

Illustrative Case Studies of Successful Bandwidth Management

Organizations often experience considerable improvements in system performance and efficiency when they actively address bandwidth limitations. Effective strategies, implemented with a focus on data management, network design, and process optimization, are key to achieving these positive outcomes.

- A large e-commerce platform, recognizing the limitations of its existing network infrastructure, invested in a tiered network architecture. This involved separating high-priority traffic (e.g., customer order fulfillment) from lower-priority traffic (e.g., marketing materials). The result was a substantial improvement in order processing time and a reduction in customer complaints related to website responsiveness. This exemplifies how strategic network design, based on understanding bandwidth needs, can improve application performance.

- A social media company, facing increasing video streaming demands, implemented a content delivery network (CDN). The CDN strategically cached popular videos across various servers globally, reducing the strain on their central servers and significantly improving the user experience. This demonstrates the effectiveness of distributed caching in handling fluctuating bandwidth demands and ensuring application reliability.

- A financial institution, facing a potential bandwidth bottleneck during peak trading hours, optimized its database queries. By reducing unnecessary data retrieval and implementing efficient caching strategies, they significantly reduced the bandwidth required for transaction processing, enhancing system stability during periods of high demand. This highlights how process improvements and optimization can significantly impact bandwidth consumption.

Examples of Problems Arising from the Infinite Bandwidth Fallacy

Ignoring bandwidth limitations can lead to significant issues, ranging from performance degradation to system failures.

- A streaming service, initially relying on a single data center, experienced severe performance issues during peak hours. This was attributed to insufficient bandwidth to handle the massive increase in concurrent video streams, resulting in buffering and a poor user experience. The service had underestimated the dynamic nature of bandwidth demand, and the issue could have been avoided by implementing a CDN.

- A cloud-based application, deployed without adequate bandwidth planning, experienced unpredictable latency and downtime during periods of high usage. This underscored the importance of proactively anticipating bandwidth requirements, including future growth and scalability needs. The lack of proactive planning resulted in a poor user experience and potential revenue loss.

Comparison of Bandwidth Management Strategies

Different organizations have adopted various approaches to manage bandwidth challenges. These approaches vary in their complexity, cost, and effectiveness.

- Some organizations focus on improving network infrastructure, such as upgrading routers and switches or implementing CDNs. Others prioritize optimizing application code and data management strategies. Still others combine both network and application optimization strategies for a holistic approach.

- A key difference lies in the proactive vs. reactive nature of these approaches. Proactive strategies involve anticipating future needs and implementing solutions before problems arise, while reactive strategies address issues as they occur. Proactive strategies are generally more cost-effective in the long run.

Summary of Case Studies

| Case Study | Bandwidth Challenge | Mitigation Strategy | Outcome |
|---|---|---|---|
| E-commerce Platform | Slow order processing | Tiered network architecture | Improved order processing time, reduced customer complaints |
| Social Media Company | High video streaming demand | Content Delivery Network (CDN) | Improved user experience, reduced strain on central servers |
| Financial Institution | Bandwidth bottleneck during peak hours | Database query optimization, caching | Enhanced system stability, reduced bandwidth consumption |
| Streaming Service | Performance issues during peak hours | None in place (single data center, no CDN) | Buffering, poor user experience |
| Cloud-based Application | Unpredictable latency and downtime | None in place (insufficient bandwidth planning) | Poor user experience, potential revenue loss |

Final Conclusion

In conclusion, recognizing and addressing the infinite bandwidth fallacy is paramount for achieving optimal performance and efficiency in any system or process. By implementing the strategies outlined in this guide, organizations and individuals can move beyond theoretical ideals and embrace the practical realities of finite bandwidth. This approach fosters more realistic project timelines, improved communication, and ultimately, greater success in the face of real-world constraints.

Top FAQs

What are some common misconceptions about bandwidth?

Many believe bandwidth is a limitless resource, overlooking the inherent constraints and potential bottlenecks in real-world systems. This leads to unrealistic expectations and potentially costly project delays.

How can I accurately assess my system’s bandwidth needs?

Careful planning and analysis, including historical data, anticipated growth, and peak usage patterns, are crucial for estimating realistic bandwidth requirements. Load testing and performance monitoring tools can help validate these assessments.

What are the most effective methods for prioritizing data transmission?

Prioritization strategies, often based on urgency and importance, can help optimize data transmission and ensure critical data is delivered efficiently while non-critical data is handled appropriately.

What are the implications of ignoring bandwidth limitations in network design?

Ignoring bandwidth limitations can lead to network congestion, slow response times, and ultimately a degraded user experience and poorer application performance.