Embark on a journey into the world of asynchronous communication, where message queues stand as the unsung heroes of modern software architecture. This guide unveils the power of message queues, exploring their fundamental concepts, historical roots, and the transformative advantages they bring to building scalable and resilient systems. We’ll delve into the core components that make these systems tick, from producers and consumers to brokers, and discover how they orchestrate seamless data exchange.

Message queues enable different parts of an application to communicate without being directly connected, leading to increased flexibility, improved performance, and enhanced fault tolerance. This approach is particularly valuable in complex applications where components need to operate independently and handle large volumes of data. Throughout this exploration, we’ll uncover practical implementations, best practices, and real-world use cases to empower you with the knowledge to leverage message queues effectively.

Introduction to Message Queues

Message queues are a cornerstone of modern software architecture, facilitating asynchronous communication between different parts of a system. They act as intermediaries, enabling applications to exchange messages without requiring immediate processing. This decoupling enhances system resilience, scalability, and overall efficiency.

Fundamental Concept and Purpose of Message Queues

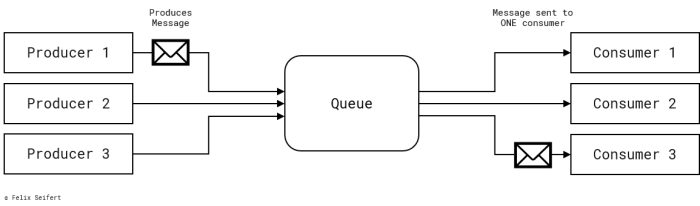

Message queues provide a robust mechanism for inter-process communication (IPC). Their primary purpose is to store messages until they are processed by a consumer. This store-and-forward approach decouples the message producer (sender) from the message consumer (receiver), allowing them to operate independently.

The core functionality can be summarized as:

- Message Producers: Applications or services that create and send messages to the queue. They don’t need to wait for the message to be processed immediately.

- Message Queue: A central repository that holds messages until they are retrieved by consumers. Depending on its configuration, it can persist messages and support reliable delivery.

- Message Consumers: Applications or services that retrieve messages from the queue and process them. They work independently of the producer.

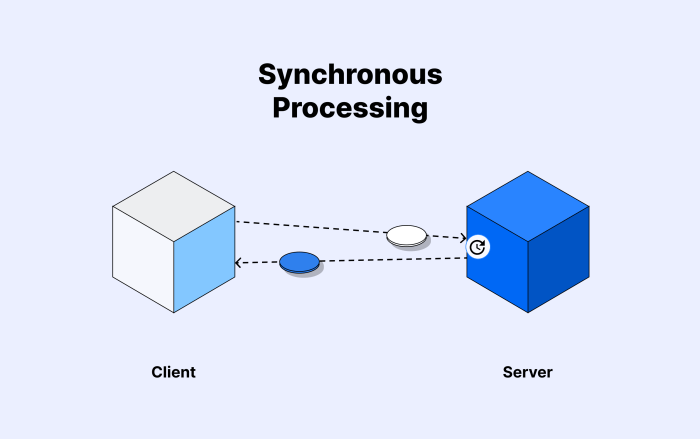

This asynchronous communication model offers significant advantages over synchronous communication, where the sender waits for a response before continuing.
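The producer, queue, and consumer roles described above can be sketched with Python's standard library alone. This is a minimal in-process illustration, not a real broker: `queue.Queue` stands in for the message queue, and the `None` sentinel used to stop the consumer is an assumption made for this example.

```python
import queue
import threading

# In-process stand-in for a broker-managed queue (illustrative only).
message_queue = queue.Queue()
processed = []


def producer(count):
    """Enqueue messages and return immediately, without waiting for processing."""
    for i in range(count):
        message_queue.put(f"message-{i}")


def consumer():
    """Pull messages one at a time until the sentinel value None arrives."""
    while True:
        message = message_queue.get()
        if message is None:
            break
        processed.append(message.upper())


worker = threading.Thread(target=consumer)
worker.start()

producer(3)
message_queue.put(None)  # sentinel: tells the consumer to stop
worker.join()

print(processed)
```

The producer never calls the consumer directly; the only thing the two share is the queue.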

History of Message Queues

The concept of message queues has evolved significantly over time. Early implementations focused on providing reliable communication in distributed systems.

Some notable milestones include:

- Early Implementations (1970s-1980s): Pioneering work in distributed computing led to the development of early message-oriented middleware (MOM) systems. These systems aimed to provide reliable and asynchronous communication in environments where network failures were common.

- IBM MQSeries (1990s): IBM’s MQSeries (later WebSphere MQ, now IBM MQ) was a prominent commercial message queue implementation. It offered robust features for message management, security, and reliability, and became a standard in enterprise environments.

- Open Source Initiatives (2000s-Present): The rise of open-source message queues, such as RabbitMQ and Apache ActiveMQ, provided accessible and flexible solutions for various use cases. These systems often built upon the principles established by earlier commercial implementations, but with a focus on open standards and community contributions.

- Cloud-Native Solutions (Present): Modern message queue services, such as Amazon SQS, Google Cloud Pub/Sub, and Azure Service Bus, are offered as managed services. These services provide scalability, high availability, and integration with cloud platforms.

These historical developments have shaped the landscape of message queuing, leading to the robust and versatile systems we use today.

Advantages of Using Message Queues for Asynchronous Communication

Employing message queues for asynchronous communication offers a multitude of benefits that improve system performance, scalability, and maintainability.

Key advantages include:

- Decoupling: Message queues decouple different components of an application, allowing them to operate independently. Producers and consumers don’t need to know about each other’s implementation details or availability.

- Improved Performance: By offloading tasks to a queue, producers can continue processing requests without waiting for immediate processing. This results in faster response times and improved overall system performance.

- Scalability: Message queues enable systems to scale independently. You can scale the number of consumers based on the message processing load, without affecting the producers.

- Reliability: Message queues typically provide features like message persistence and acknowledgment mechanisms to ensure reliable message delivery, even in the event of failures.

- Fault Tolerance: If a consumer fails, the messages remain in the queue and can be processed by another consumer. This enhances system resilience and fault tolerance.

- Flexibility: Message queues support various messaging patterns, such as point-to-point and publish-subscribe, allowing you to tailor communication to specific requirements.

For instance, consider an e-commerce platform. When a customer places an order, the order processing service can send a message to a queue. This message might contain order details. A separate fulfillment service, consuming messages from the queue, can then process the order, handle inventory updates, and coordinate shipping. The order processing service does not have to wait for the fulfillment service to complete its tasks.

This decoupling allows the system to handle a large number of orders concurrently, ensuring a responsive user experience, even during peak traffic.

Core Components of a Message Queue System

Understanding the core components of a message queue system is crucial for effectively utilizing this powerful technology. These components work in concert to enable asynchronous communication, decoupling applications and improving overall system resilience and scalability. Each component plays a specific role in the process of sending, storing, and delivering messages.

Producers

Producers are the applications or services that create and send messages to the message queue. They are responsible for formatting the data into a message and publishing it to a designated queue. Producers don’t need to know anything about the consumers; their sole responsibility is to generate and submit messages.

Consumers

Consumers are the applications or services that receive and process messages from the message queue. They subscribe to specific queues and are notified when new messages arrive. Consumers process these messages, performing actions based on the message content.

Broker

The broker, also known as the message queue server or message-oriented middleware (MOM), is the central component of the system. It acts as an intermediary between producers and consumers. The broker receives messages from producers, stores them in queues, and delivers them to consumers. The broker manages the queues, ensures message persistence (if configured), and handles message delivery guarantees.

Queue

A queue is a storage area within the broker where messages are temporarily held until they are consumed by consumers. Each queue has a specific name, and producers send messages to a particular queue based on the intended recipient (consumer). Consumers subscribe to one or more queues to receive messages. The queue’s characteristics, such as message persistence and ordering, are often configurable.

Interaction Example

Imagine a system where users upload images. Here’s how the components interact:

- Producer (Image Upload Service): A user uploads an image. The Image Upload Service (the producer) creates a message containing the image file path and other relevant metadata.

- Broker (Message Queue): The producer sends the message to the “image_processing” queue on the message queue broker.

- Queue (image_processing): The broker stores the message in the “image_processing” queue.

- Consumer (Image Processing Service): The Image Processing Service (the consumer) subscribes to the “image_processing” queue. When a new message arrives, the consumer retrieves it.

- Consumer (Image Processing Service): The Image Processing Service processes the image (e.g., resizing, generating thumbnails).

- Consumer (Image Processing Service): After processing, the consumer sends a confirmation message (e.g., to a “notification” queue) or updates a database.
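The interaction above can be traced end to end in a few lines. The sketch below is an in-memory approximation: the `broker` dictionary, the helper function names, and the thumbnail naming rule are all hypothetical stand-ins, and no real image processing takes place.

```python
import json
import queue

# Hypothetical in-memory "broker": a dictionary of named queues.
broker = {
    "image_processing": queue.Queue(),
    "notification": queue.Queue(),
}


def upload_image(path, user_id):
    """Producer: publish a message describing the uploaded image."""
    message = {"path": path, "user_id": user_id}
    broker["image_processing"].put(json.dumps(message))


def process_next_image():
    """Consumer: take one message, 'process' it, and publish a confirmation."""
    message = json.loads(broker["image_processing"].get_nowait())
    # Placeholder for real work such as resizing or thumbnail generation.
    thumbnail = message["path"].rsplit(".", 1)[0] + "_thumb.jpg"
    confirmation = {"user_id": message["user_id"], "thumbnail": thumbnail}
    broker["notification"].put(json.dumps(confirmation))


upload_image("/uploads/cat.png", user_id=42)
process_next_image()
result = json.loads(broker["notification"].get_nowait())
print(result)
```

Only the message contents cross the boundary between the two services, which is why the path and metadata are serialized rather than passed as objects.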

Common Message Queue Implementations

Message queues are powerful tools, but their effectiveness hinges on the specific implementation chosen. Various message queue systems exist, each with its strengths and weaknesses. Understanding these implementations is crucial for selecting the right tool for a given task. The choice depends on factors like the required scale, performance needs, and specific features.

Popular Message Queue Systems

Several popular message queue systems are widely used in modern software architectures. These systems provide robust solutions for asynchronous communication, offering different trade-offs in terms of features, scalability, and operational complexity. The following are some of the most prominent:

- RabbitMQ: RabbitMQ is a widely adopted open-source message broker. It implements the Advanced Message Queuing Protocol (AMQP) and offers a flexible and reliable platform for message passing. RabbitMQ is known for its ease of use and robust features, making it suitable for a variety of applications.

- Apache Kafka: Kafka is a distributed streaming platform designed for high-throughput, fault-tolerant data pipelines. It is particularly well-suited for handling large volumes of data in real-time. Kafka excels at providing scalability and durability, making it a good choice for applications with demanding data processing requirements.

- Amazon Simple Queue Service (SQS): Amazon SQS is a fully managed message queuing service offered by Amazon Web Services (AWS). It provides a simple and scalable solution for decoupling applications and services. SQS integrates seamlessly with other AWS services and offers a pay-as-you-go pricing model.

Comparing Message Queue Implementations

Choosing the right message queue system involves evaluating various factors. Each system has unique characteristics, making it suitable for different use cases. A comparison based on features, scalability, and use cases will provide a clearer picture.

The following table provides a comparative overview of RabbitMQ, Apache Kafka, and Amazon SQS, highlighting key features and their implications.

| Feature | RabbitMQ | Apache Kafka | Amazon SQS |
|---|---|---|---|
| Protocol | AMQP, STOMP, MQTT | Custom binary protocol over TCP | HTTP/HTTPS |
| Message Ordering | Per queue (FIFO) | Per partition (FIFO) | Best-effort on standard queues; strict ordering on FIFO queues |
| Scalability | Vertically scalable, clustered for high availability | Horizontally scalable, designed for high throughput | Highly scalable, managed by AWS |
| Durability | Guaranteed message delivery with persistence options | Highly durable, with replication and fault tolerance | Durable, with message retention options |
| Use Cases | General-purpose messaging, task queues, microservices communication | Real-time data streaming, event processing, log aggregation | Decoupling applications, background jobs, serverless architectures |
| Management | Flexible management through web UI, CLI, and APIs | Complex, requires dedicated operations expertise | Fully managed by AWS, easy to set up and use |
| Open Source/Managed | Open Source | Open Source | Managed Service |

Producers and Consumers

In the realm of message queues, the roles of producers and consumers are fundamental. They represent the two primary actors in the asynchronous communication paradigm. Producers are responsible for generating and publishing messages, while consumers are tasked with retrieving and processing those messages. Understanding their design and implementation, including best practices for robustness, is crucial for building reliable and scalable systems.

The Role of Producers in Publishing Messages

Producers are the message originators. They create messages containing data or instructions and then publish these messages to a message queue. The message queue acts as an intermediary, decoupling the producers from the consumers. This decoupling allows producers to continue operating without waiting for consumers to process the messages immediately.

The primary responsibilities of a producer include:

- Message Creation: The producer formats the data into a message. This may involve serialization (e.g., JSON, Protocol Buffers) to convert data structures into a format suitable for transmission.

- Queue Selection: The producer determines which queue to publish the message to. This decision is often based on the message’s type or the intended consumer.

- Message Publishing: The producer sends the message to the selected queue. The message queue then stores the message until it can be delivered to a consumer.

- Error Handling: Producers should implement robust error handling to deal with potential issues, such as network failures or queue unavailability.

A simplified example of a producer in Python using the RabbitMQ client library (pika) might look like this:

```python
import pika

# Connect to a RabbitMQ broker running on the local machine.
connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
channel = connection.channel()

# Declare the queue. Declaring is idempotent, so it is safe if the queue already exists.
channel.queue_declare(queue='my_queue')

message = 'Hello, message queue!'

# Publish through the default exchange (''), which routes by queue name.
channel.basic_publish(exchange='', routing_key='my_queue', body=message)
print(f" [x] Sent '{message}'")

connection.close()
```

In this example, the producer connects to a RabbitMQ server, declares a queue named 'my_queue', and publishes a simple text message to that queue.

The Role of Consumers in Retrieving and Processing Messages

Consumers are the message recipients. They subscribe to a message queue and retrieve messages from it for processing. Consumers are typically designed to handle messages asynchronously, which means they do not block the producer. This asynchronous nature is a key benefit of message queues.

The primary responsibilities of a consumer include:

- Queue Subscription: The consumer connects to the message queue and subscribes to one or more queues.

- Message Retrieval: The consumer retrieves messages from the queue.

- Message Processing: The consumer processes the retrieved message, which might involve updating a database, sending an email, or performing other tasks.

- Acknowledgement: After successfully processing a message, the consumer typically sends an acknowledgement back to the queue. This signals that the message can be safely removed.

- Error Handling: Consumers must also handle errors, such as processing failures or data corruption. They might implement retry mechanisms or move failed messages to a dead-letter queue.

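Continuing the previous example, here’s a simplified consumer in Python using pika:

```python
import pika

# Connect to the same local RabbitMQ broker as the producer.
connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
channel = connection.channel()

# Declare the queue here too, so the consumer can start before the producer.
channel.queue_declare(queue='my_queue')


def callback(ch, method, properties, body):
    """Called by pika for every message delivered from the queue."""
    print(f" [x] Received {body.decode()}")


channel.basic_consume(queue='my_queue', on_message_callback=callback, auto_ack=True)

print(' [*] Waiting for messages. To exit press CTRL+C')
channel.start_consuming()
```

This consumer connects to RabbitMQ, subscribes to 'my_queue', and defines a callback function to handle incoming messages. With `auto_ack=True`, the broker treats each message as acknowledged as soon as it is delivered, before the callback has finished, so a consumer that crashes mid-processing loses that message.

In a real-world scenario, `auto_ack` is often set to `False` and the consumer calls `basic_ack` explicitly once processing succeeds, which gives greater control over message acknowledgement.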

Best Practices for Designing Robust Producers and Consumers

Designing robust producers and consumers is essential for building resilient and scalable message queue-based systems. Implementing best practices in both areas significantly improves the reliability and maintainability of the application.

Key best practices include:

- Idempotency: Design consumers to handle messages idempotently. This means that processing the same message multiple times has the same effect as processing it once. This is critical in case of consumer failures and retries.

- Error Handling and Retries: Implement robust error handling in both producers and consumers. Producers should handle network errors and queue unavailability. Consumers should handle processing failures. Use retry mechanisms with exponential backoff to handle transient errors. Consider using a dead-letter queue for messages that repeatedly fail.

- Message Validation: Validate messages on both the producer and consumer sides. Producers should validate data before publishing. Consumers should validate data before processing. This helps to prevent bad data from entering the system.

- Monitoring and Logging: Implement comprehensive monitoring and logging. Monitor queue lengths, consumer health, and error rates. Log all significant events, including message publishes, receives, and processing failures.

- Configuration Management: Use configuration management to store connection details, queue names, and other settings. Avoid hardcoding these values in your code.

- Scalability: Design producers and consumers to be scalable. Producers should be able to handle increased message volumes. Consumers should be able to scale horizontally to handle increased processing loads.

- Security: Secure the message queue and the communication between producers and consumers. Use authentication, authorization, and encryption to protect sensitive data.
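As one illustration of the message validation practice above, a consumer might check a message body before acting on it. The sketch below assumes JSON-encoded order messages with two hypothetical fields, `order_id` and `quantity`; the field names and rules are invented for the example and are not tied to any particular broker.

```python
import json

# Hypothetical schema for an order message: field name -> expected Python type.
REQUIRED_FIELDS = {"order_id": str, "quantity": int}


def validation_errors(raw_body):
    """Return a list of problems; an empty list means the message is valid."""
    try:
        message = json.loads(raw_body)
    except json.JSONDecodeError:
        return ["body is not valid JSON"]
    if not isinstance(message, dict):
        return ["message must be a JSON object"]

    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in message:
            errors.append(f"missing field: {field}")
        elif not isinstance(message[field], expected_type):
            errors.append(f"field {field} must be of type {expected_type.__name__}")

    # Business rule checked only when the field has the right type.
    if isinstance(message.get("quantity"), int) and message["quantity"] <= 0:
        errors.append("quantity must be positive")
    return errors


print(validation_errors('{"order_id": "A1", "quantity": 2}'))
print(validation_errors('{"order_id": "A1"}'))
```

A consumer could route any message with a non-empty error list straight to a dead-letter queue instead of retrying it, since retrying malformed input will not make it valid.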

Example of Error Handling and Retries (Consumer-side):

Imagine a consumer that processes orders. If a consumer fails to process an order (e.g., due to a database connection issue), the system should retry the processing.

```python
import time

import pika


def process_order(order_data):
    """Simulate processing an order; return True on success, False on failure."""
    try:
        # Attempt to write to a database (example)
        print(f"Processing order: {order_data}")
        # ... database write code here ...
        print("Order processed successfully.")
        return True  # Success
    except Exception as e:
        print(f"Error processing order: {e}")
        return False  # Failure


def consume_messages():
    connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
    channel = connection.channel()
    channel.queue_declare(queue='orders_queue')

    def callback(ch, method, properties, body):
        order_data = body.decode()
        max_retries = 3
        retry_delay = 2  # seconds

        for attempt in range(max_retries):
            if process_order(order_data):
                ch.basic_ack(delivery_tag=method.delivery_tag)  # Acknowledge success
                print("Message acknowledged.")
                return
            if attempt < max_retries - 1:
                print(f"Retrying message after {retry_delay} seconds "
                      f"(attempt {attempt + 1}/{max_retries})...")
                time.sleep(retry_delay)
                retry_delay *= 2  # Exponential backoff

        # All retries failed: reject without requeueing. The broker dead-letters
        # the message only if the queue has a dead-letter exchange configured;
        # otherwise the message is discarded.
        print("Message failed to process after multiple retries. Sending to dead-letter queue.")
        ch.basic_nack(delivery_tag=method.delivery_tag, requeue=False)

    # auto_ack defaults to False, so messages must be acknowledged explicitly.
    channel.basic_consume(queue='orders_queue', on_message_callback=callback)
    channel.start_consuming()


consume_messages()
```

This example shows a consumer that retries processing an order up to a fixed number of times. If processing still fails after all retries, the message is rejected without requeueing. When the queue is configured with a dead-letter exchange, the broker then moves the message to a dead-letter queue, allowing for manual investigation and correction. Exponential backoff increases the delay between retries, preventing the system from being overwhelmed by constant retry attempts. Note that `time.sleep` inside the callback blocks the connection's I/O loop, so this in-callback retry is only suitable for short delays. The use of `basic_ack` and `basic_nack` for acknowledgement is critical for ensuring messages are handled correctly, even in failure scenarios.

Message Formats and Serialization

Message formats and serialization are critical aspects of message queue systems. They determine how data is structured and encoded when messages are sent between producers and consumers. Choosing the right format and serialization method can significantly impact performance, efficiency, and interoperability.

Common Message Formats

Various message formats exist, each with its own strengths and weaknesses. The choice of format depends on factors such as the complexity of the data, the need for human readability, and the desired performance characteristics.

- JSON (JavaScript Object Notation): JSON is a lightweight, human-readable data format based on a subset of JavaScript. It’s widely used due to its simplicity and ease of use. JSON represents data as key-value pairs, making it relatively easy to understand and parse.

- XML (Extensible Markup Language): XML is a markup language designed to store and transport data. It uses tags to define data elements and attributes. XML is more verbose than JSON and offers features like schema validation.

- Protocol Buffers (Protobuf): Protocol Buffers are a binary format developed by Google for structured data. They offer a more compact and efficient representation of data compared to JSON and XML. Protobuf requires defining a schema for the data, which enables strong typing and versioning.

- Avro: Avro is a data serialization system that uses a schema to define data structures. It is designed for large-scale data processing and is often used with Apache Kafka. Avro provides features like schema evolution and supports both binary and JSON representations.

Importance of Message Serialization and Deserialization

Serialization and deserialization are essential processes in message queue systems. Serialization converts data structures into a format suitable for transmission over the network, while deserialization converts the received data back into usable data structures. The efficiency of these processes directly impacts the overall performance of the system.

Serialization: The process of converting data structures or objects into a format that can be stored or transmitted.

Deserialization: The reverse process of converting serialized data back into data structures or objects.

The choice of serialization method affects:

- Message Size: Smaller messages require less bandwidth and reduce network latency.

- Processing Speed: Efficient serialization and deserialization can significantly improve the speed at which messages are processed.

- Compatibility: The serialization format must be compatible with both the producer and the consumer.
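The size difference between a text format and a binary format can be seen with a small round trip. This sketch uses only the standard library: `struct` here is a stand-in for schema-based binary formats such as Protocol Buffers or Avro, not an implementation of either, and the sensor reading is made-up sample data.

```python
import json
import struct

reading = {"sensor_id": 17, "temperature": 21.5}  # made-up sample message

# Text serialization: self-describing, field names travel with every message.
json_bytes = json.dumps(reading).encode("utf-8")
decoded_json = json.loads(json_bytes.decode("utf-8"))

# Fixed binary layout agreed on in advance by producer and consumer:
# "<Id" = little-endian unsigned 32-bit integer followed by a 64-bit float.
LAYOUT = "<Id"
binary_bytes = struct.pack(LAYOUT, reading["sensor_id"], reading["temperature"])
sensor_id, temperature = struct.unpack(LAYOUT, binary_bytes)
decoded_binary = {"sensor_id": sensor_id, "temperature": temperature}

print(len(json_bytes), len(binary_bytes))
```

The binary form is smaller because the field names and types live in the shared layout rather than in each message, which is also why both sides must agree on that layout (the compatibility point above).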

Advantages and Disadvantages of JSON and Protocol Buffers for Message Serialization

JSON and Protocol Buffers are popular choices for message serialization, each with its own trade-offs.

- JSON
  - Advantages:
    - Human-readable and easy to understand.
    - Widely supported across various programming languages and platforms.
    - Simple to implement.
  - Disadvantages:
    - More verbose than binary formats, resulting in larger message sizes.
    - Slower serialization and deserialization compared to binary formats.
    - No built-in schema enforcement, leading to potential data inconsistencies if not carefully managed.

- Protocol Buffers
  - Advantages:
    - Compact and efficient, resulting in smaller message sizes.
    - Fast serialization and deserialization.
    - Supports schema definition, providing data validation and versioning capabilities.
  - Disadvantages:
    - Not human-readable in its binary form.
    - Requires a schema definition, adding an extra step to the development process.
    - May have a steeper learning curve compared to JSON.

Message Routing and Exchange Patterns

Message routing is a crucial aspect of message queue systems, dictating how messages are directed from producers to consumers. The efficiency and flexibility of a message queue heavily rely on the routing strategies employed. Different exchange patterns provide various mechanisms for routing messages, enabling complex communication scenarios.

Different Message Routing Strategies

Several message routing strategies are available, each designed to handle specific communication requirements. The choice of strategy depends on the application’s needs, including the desired level of message delivery, the number of consumers, and the complexity of the routing logic.

- Direct Exchange: This is the simplest routing strategy. Messages are routed to queues based on an exact match between the routing key of the message and the binding key of the queue.

- Topic Exchange: Topic exchanges route messages to queues based on pattern matching. The routing key of the message, a sequence of words separated by dots, is matched against the binding keys of the queues, which can include the wildcards “*” (matches exactly one word) and “#” (matches zero or more words). This enables flexible routing based on the structure of the routing key.

- Fanout Exchange: Fanout exchanges broadcast messages to all queues bound to the exchange, regardless of the routing key. This is useful for scenarios where every consumer needs to receive a copy of the message, such as logging or monitoring.

Direct Exchange Design Example

Direct exchanges are suitable for scenarios where messages need to be delivered to specific queues based on a precise routing key.

Imagine a system for processing customer orders. A direct exchange, named “order_exchange”, is used. Two queues are bound to this exchange:

- “payment_queue” is bound with the routing key “payment”.

- “shipping_queue” is bound with the routing key “shipping”.

When a message with the routing key “payment” is sent to “order_exchange”, it is routed exclusively to the “payment_queue”. Similarly, messages with the routing key “shipping” go to the “shipping_queue”.

Image Description: The image depicts a Direct Exchange named “order_exchange” in the center. Two queues, “payment_queue” and “shipping_queue”, are connected to the exchange. A message producer sends a message with the routing key “payment” to the exchange. The exchange, due to the binding, directs this message exclusively to the “payment_queue”. Another message producer sends a message with the routing key “shipping” to the exchange, which directs the message to “shipping_queue”. Arrows indicate the message flow from producer to exchange and then to the respective queues. The diagram clearly illustrates the one-to-one mapping between routing keys and queues.
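The exact-match rule of the order example can be modelled in a few lines. The `DirectExchange` class below is a toy written for this article, not part of any client library; it only mimics the routing decision, and the message bodies are invented.

```python
from collections import defaultdict


class DirectExchange:
    """Toy direct exchange: exact match between routing key and binding key."""

    def __init__(self):
        self.bindings = defaultdict(list)  # binding key -> list of queue names
        self.queues = defaultdict(list)    # queue name -> delivered messages

    def bind(self, queue_name, binding_key):
        self.bindings[binding_key].append(queue_name)

    def publish(self, routing_key, body):
        # Only queues whose binding key equals the routing key get the message.
        for queue_name in self.bindings.get(routing_key, []):
            self.queues[queue_name].append(body)


order_exchange = DirectExchange()
order_exchange.bind("payment_queue", "payment")
order_exchange.bind("shipping_queue", "shipping")

order_exchange.publish("payment", "charge order 1001")
order_exchange.publish("shipping", "ship order 1001")
order_exchange.publish("refund", "no queue is bound to this key")

print(dict(order_exchange.queues))
```

The third message matches no binding, so this toy exchange silently drops it.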

Topic Exchange Design Example

Topic exchanges are ideal for flexible routing based on patterns. This is particularly useful when messages have hierarchical or categorized routing keys.

Consider a news publication system. A topic exchange, named “news_exchange”, is used. Queues are bound to this exchange using different binding keys, allowing for categorized news distribution.

- A queue named “sports_news_queue” is bound with the binding key “sports.*”.

- A queue named “world_news_queue” is bound with the binding key “world.#”.

- A queue named “weather_news_queue” is bound with the binding key “weather.europe.*”.

If a message with the routing key “sports.football” is sent to “news_exchange”, it is routed to the “sports_news_queue”. If a message with the routing key “world.asia.china” is sent, it is routed to the “world_news_queue”. If a message with the routing key “weather.europe.london” is sent, it is routed to the “weather_news_queue”.

Image Description: The image shows a Topic Exchange called “news_exchange” at the center. Three queues are connected: “sports_news_queue”, “world_news_queue”, and “weather_news_queue”. The “sports_news_queue” is connected with the binding key “sports.*”. The “world_news_queue” is connected with the binding key “world.#”. The “weather_news_queue” is connected with the binding key “weather.europe.*”. Arrows indicate the message flow. Messages with routing keys like “sports.football” (to sports queue), “world.asia.china” (to world queue), and “weather.europe.london” (to weather queue) are sent to the exchange. The exchange routes each message to the appropriate queue based on the matching binding keys and routing keys using wildcard matching rules.
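The wildcard rules can be made concrete with a small matcher. The function below is a sketch of AMQP-style topic matching written for illustration (the name `topic_matches` is hypothetical); a real broker performs this matching internally.

```python
def topic_matches(binding_key, routing_key):
    """Return True if routing_key matches binding_key.

    Keys are dot-separated words. In the binding key, '*' matches exactly
    one word and '#' matches zero or more words.
    """

    def match(pattern, words):
        if not pattern:
            return not words  # pattern exhausted: match only if no words remain
        head, rest = pattern[0], pattern[1:]
        if head == "#":
            # '#' may absorb anywhere from zero to all of the remaining words.
            return any(match(rest, words[i:]) for i in range(len(words) + 1))
        if not words:
            return False
        if head == "*" or head == words[0]:
            return match(rest, words[1:])
        return False

    return match(binding_key.split("."), routing_key.split("."))


print(topic_matches("sports.*", "sports.football"))
print(topic_matches("sports.*", "sports.football.scores"))
print(topic_matches("world.#", "world.asia.china"))
```

The second call shows the difference between the wildcards: “sports.*” does not match a three-word key, while “world.#” accepts any number of trailing words.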

Fanout Exchange Design Example

Fanout exchanges are suitable for scenarios requiring messages to be broadcast to all connected consumers.

Consider a monitoring system. A fanout exchange, named “log_exchange”, is used to distribute log messages to multiple consumers.

- A queue named “file_log_queue” is bound to “log_exchange”.

- A queue named “database_log_queue” is bound to “log_exchange”.

- A queue named “alert_log_queue” is bound to “log_exchange”.

When a log message is sent to “log_exchange”, it is replicated and delivered to all three queues: “file_log_queue”, “database_log_queue”, and “alert_log_queue”. Each consumer connected to these queues receives a copy of the log message, enabling simultaneous logging to different destinations.

Image Description: The image illustrates a Fanout Exchange called “log_exchange”. Three queues are connected to the exchange: “file_log_queue”, “database_log_queue”, and “alert_log_queue”. A message producer sends a single log message to the “log_exchange”. The exchange then duplicates the message and delivers a copy to each of the three connected queues. The diagram depicts the message flow as a single input splitting into three outputs, one for each queue, emphasizing the broadcasting nature of the fanout exchange. All consumers connected to these queues receive the message.
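The broadcast behaviour can be sketched the same way. The `FanoutExchange` class below is a toy model for this article, not a client-library class; it accepts a routing key only to show that the key plays no part in the routing decision.

```python
class FanoutExchange:
    """Toy fanout exchange: every bound queue receives a copy of each message."""

    def __init__(self):
        self.queues = {}  # queue name -> delivered messages

    def bind(self, queue_name):
        self.queues.setdefault(queue_name, [])

    def publish(self, body, routing_key=""):
        # The routing key is accepted but deliberately ignored.
        for messages in self.queues.values():
            messages.append(body)


log_exchange = FanoutExchange()
for name in ("file_log_queue", "database_log_queue", "alert_log_queue"):
    log_exchange.bind(name)

log_exchange.publish("disk usage at 91%", routing_key="ignored")
print(log_exchange.queues)
```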

Message Delivery Guarantees

Message delivery guarantees are critical aspects of message queue systems, defining the level of assurance regarding message delivery between producers and consumers. These guarantees dictate how a message queue system handles potential failures, such as network interruptions, consumer crashes, or broker outages. The choice of a specific guarantee depends on the application’s requirements for data integrity and fault tolerance. Different guarantees offer varying trade-offs between performance, complexity, and the potential for message loss or duplication.

At-Most-Once Delivery

At-most-once delivery ensures that a message is delivered to a consumer at most one time. This guarantee is the simplest to implement but offers the least reliability.

To implement at-most-once delivery, the message queue system typically:

- Delivers a message to the consumer.

- Does not retry delivery if the consumer fails to acknowledge the message.

The primary trade-off is the potential for message loss. If a consumer crashes or the network fails after receiving a message but before acknowledging it, the message will be lost. This delivery guarantee is suitable for applications where message loss is acceptable, such as logging non-critical events or real-time data streams where occasional omissions are tolerable. It offers the highest performance because it minimizes overhead associated with persistence and acknowledgment handling.

An example is streaming sensor data where missing a single data point is not critical.
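The loss behaviour can be simulated without a broker. In this sketch, the function name, the sample readings, and the simulated crash are all assumptions for illustration; the point is that the message leaves the queue before it is handled and is never retried.

```python
from collections import deque


def deliver_at_most_once(messages, handler):
    """Remove each message from the queue before handling it; never retry."""
    pending = deque(messages)
    handled = []
    while pending:
        message = pending.popleft()  # the message is gone from the queue here
        try:
            handled.append(handler(message))
        except Exception:
            pass  # failure after removal: the message is simply lost
    return handled


def flaky_handler(reading):
    """Hypothetical consumer that crashes on one particular reading."""
    if reading == "reading-2":
        raise RuntimeError("simulated consumer crash")
    return reading


result = deliver_at_most_once(["reading-1", "reading-2", "reading-3"], flaky_handler)
print(result)
```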

At-Least-Once Delivery

At-least-once delivery guarantees that a message will be delivered to a consumer at least one time. This guarantee aims to prevent message loss but may result in message duplication.

Implementing at-least-once delivery typically involves:

- The producer sending a message to the message queue.

- The message queue persisting the message (e.g., writing it to disk).

- The message queue delivering the message to the consumer.

- The consumer acknowledging receipt of the message.

- If the consumer doesn’t acknowledge, the message queue redelivers the message after a timeout.

The primary trade-off is the possibility of message duplication. If the consumer acknowledges the message, but the acknowledgment is lost, the message queue might redeliver the message. This guarantee is suitable for applications where message loss is unacceptable, even if it means dealing with potential duplicates. For instance, in financial transactions, the risk of losing a transaction is significantly higher than the risk of processing a transaction twice, which can be handled through deduplication mechanisms.

To make duplicates harmless, consumers must be designed to handle repeated deliveries of the same message, often by tracking unique message IDs and making processing idempotent.
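The redelivery loop and an idempotent consumer can be sketched as follows. Everything here is a simplified assumption for illustration: `AtLeastOnceQueue` and `IdempotentConsumer` are hypothetical classes, the handler's return value stands in for an acknowledgment, and a returned `False` simulates an acknowledgment that never reached the queue.

```python
from collections import deque


class AtLeastOnceQueue:
    """Toy queue that keeps a message until the consumer acknowledges it."""

    def __init__(self):
        self._pending = deque()

    def publish(self, message_id, body):
        self._pending.append((message_id, body))

    def deliver(self, handler):
        """Hand out the next message; requeue it unless the handler returns True."""
        if not self._pending:
            return False
        message_id, body = self._pending.popleft()
        acked = handler(message_id, body)
        if not acked:
            self._pending.append((message_id, body))  # redeliver later
        return True


class IdempotentConsumer:
    """Applies each message ID at most once, however often it is delivered."""

    def __init__(self, lose_first_ack_for=None):
        self.seen_ids = set()
        self.applied = []
        self._lose_ack_for = lose_first_ack_for

    def handle(self, message_id, body):
        if message_id not in self.seen_ids:  # skip duplicates
            self.seen_ids.add(message_id)
            self.applied.append(body)
        if message_id == self._lose_ack_for:
            self._lose_ack_for = None  # simulate exactly one lost acknowledgment
            return False
        return True


def run_payment_demo():
    queue = AtLeastOnceQueue()
    queue.publish("tx-1", 100)
    queue.publish("tx-2", 250)
    consumer = IdempotentConsumer(lose_first_ack_for="tx-1")
    deliveries = 0
    while queue.deliver(consumer.handle):
        deliveries += 1
    return deliveries, consumer.applied
```

In this run the queue performs three deliveries for two messages, yet each amount is applied only once because the consumer remembers the IDs it has already handled.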

Exactly-Once Delivery

Exactly-once delivery guarantees that a message will be delivered to a consumer exactly one time. This is the strongest guarantee and the most complex to implement.

Implementing exactly-once delivery typically involves:

- Message persistence by the message queue.

- Transactional message processing.

- Deduplication mechanisms on the consumer side.

The message queue system ensures that each message takes effect once, even in the face of failures. This often involves transactions to coordinate message sending, processing, and acknowledgment. The consumer uses techniques like unique message identifiers and idempotent operations to prevent processing the same message multiple times. The message queue broker can utilize techniques like two-phase commit to ensure message delivery and acknowledgment are atomic.

The primary trade-off is increased complexity and lower performance.

This guarantee is the most resource-intensive due to the need for transaction management, persistent storage, and deduplication logic. Exactly-once delivery is suitable for applications where data integrity is paramount, such as financial systems, order processing, and any system where message duplication or loss is unacceptable. A practical example is an e-commerce platform processing orders, where ensuring each order is processed precisely once is critical to avoid financial discrepancies or customer dissatisfaction.
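One common way to approximate this on the consumer side is to record the message ID and apply the business change in the same database transaction, so that a redelivered message is rejected as a duplicate. The sketch below uses Python's built-in `sqlite3` module; the table names, `open_store`, and `process_once` are hypothetical and only illustrate the idea, not a particular broker's transactional API.

```python
import sqlite3


def open_store():
    """Create an in-memory database with a dedup table and a business table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE processed (message_id TEXT PRIMARY KEY)")
    conn.execute("CREATE TABLE orders (order_id TEXT, amount INTEGER)")
    conn.commit()
    return conn


def process_once(conn, message_id, order_id, amount):
    """Record the message ID and apply the order in one transaction.

    Returns True if the order was applied, False if the message was a duplicate.
    """
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "INSERT INTO processed (message_id) VALUES (?)", (message_id,)
            )
            conn.execute(
                "INSERT INTO orders (order_id, amount) VALUES (?, ?)",
                (order_id, amount),
            )
        return True
    except sqlite3.IntegrityError:
        # The primary key on message_id rejected a repeat delivery,
        # and the rollback means no second order row was written.
        return False
```

Because both inserts commit or roll back together, a crash between them cannot leave an order applied without its message ID recorded, which is the property that makes redelivery safe.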

Monitoring and Management of Message Queues

Effective monitoring and management are crucial for maintaining the health, performance, and reliability of a message queue system. Proactive monitoring allows for early detection of issues, preventing potential failures and ensuring optimal message processing. Robust management tools provide the means to diagnose problems, optimize resource allocation, and maintain overall system stability.

Strategies for Monitoring Message Queue Performance and Health

Implementing a comprehensive monitoring strategy involves a multi-faceted approach. This includes continuous collection of key metrics, setting up alerts for anomalies, and establishing processes for investigating and resolving issues. The following strategies contribute to effective monitoring:

- Real-time Monitoring Dashboards: Utilizing dashboards that provide a real-time view of the system’s performance is essential. These dashboards should display key metrics, enabling quick identification of trends and potential problems.

- Alerting Systems: Configuring alerts based on predefined thresholds for critical metrics is vital. Alerts should notify administrators of any anomalies, such as high queue sizes, slow consumer processing, or connection failures.

- Log Aggregation and Analysis: Centralized log aggregation and analysis are crucial for identifying the root cause of issues. Tools like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk can be employed to collect, analyze, and visualize logs.

- Proactive Capacity Planning: Monitoring resource utilization (CPU, memory, disk I/O) helps in planning for future capacity needs. This involves analyzing historical data to predict future demand and scale resources accordingly.

- Regular Health Checks: Implementing automated health checks to verify the functionality of the message queue system and its components is a good practice. These checks can include testing message publishing and consumption, verifying connection availability, and validating data integrity.

- Performance Testing: Conducting regular performance tests under simulated load conditions provides insights into the system’s ability to handle peak loads and identify bottlenecks.
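As a rough illustration of the alerting strategy above, the following sketch compares a snapshot of metrics against predefined thresholds. The metric names, limits, and the `Threshold` and `evaluate_alerts` helpers are assumptions made for this example; a real deployment would normally rely on the alerting rules of its monitoring system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    higher_is_worse: bool = True  # False for metrics such as throughput


def evaluate_alerts(samples, thresholds):
    """Return alert strings for metrics that breach their limit or have no data."""
    alerts = []
    for threshold in thresholds:
        value = samples.get(threshold.metric)
        if value is None:
            alerts.append(f"{threshold.metric}: no data")
            continue
        if threshold.higher_is_worse:
            breached = value > threshold.limit
        else:
            breached = value < threshold.limit
        if breached:
            alerts.append(
                f"{threshold.metric}: {value} breaches limit {threshold.limit}"
            )
    return alerts
```

Treating a missing metric as an alert is a deliberate choice in this sketch: a collector that stops reporting is often the first sign of a problem.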

Common Metrics to Monitor

Several key metrics should be monitored to gain a comprehensive understanding of the message queue system’s performance. These metrics provide insights into various aspects of the system, including message throughput, queue health, and consumer behavior.

- Queue Size: This indicates the number of messages currently residing in a queue. High queue sizes can indicate a backlog of messages, potentially caused by slow consumers or by producers publishing faster than consumers can keep up. Monitoring queue size helps in detecting processing delays.

- Message Throughput: Measuring the rate at which messages are published and consumed (messages per second) provides insight into the system’s processing capacity. Significant drops in throughput can indicate performance degradation.

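- Consumer Lag: This represents how far consumers are behind producers. Depending on the system, it is expressed as the time difference between when a message is published and when it is consumed, or as the number of messages not yet consumed (for example, the offset gap in Kafka). High consumer lag indicates that consumers are falling behind, potentially due to slow processing or insufficient resources.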

- Message Delivery Latency: This measures the time it takes for a message to be delivered from the producer to the consumer. High latency can indicate network issues, processing delays, or other bottlenecks.

- Error Rates: Monitoring the number of failed message deliveries, rejected messages, and other errors provides insights into the system’s reliability. A high error rate indicates potential problems with message formats, consumer code, or the message queue system itself.

- Resource Utilization: Monitoring CPU usage, memory consumption, disk I/O, and network traffic provides insights into resource bottlenecks. This information is crucial for capacity planning and resource optimization.

- Connection Counts: Monitoring the number of active connections between producers, consumers, and the message queue system provides insights into system load and potential connection-related issues.
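To make a few of these metrics concrete, the sketch below derives queue size, consume throughput, average delivery latency, and the age of the oldest waiting message from per-message timestamps. The `MessageRecord` structure and `queue_metrics` function are hypothetical; real brokers expose these numbers through their own management or metrics interfaces.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MessageRecord:
    published_at: float                  # seconds on some shared clock
    consumed_at: Optional[float] = None  # None while the message is still queued


def queue_metrics(records, now, window_seconds):
    """Compute basic queue metrics over the last window_seconds."""
    waiting = [r for r in records if r.consumed_at is None]
    recent = [
        r for r in records
        if r.consumed_at is not None and now - r.consumed_at <= window_seconds
    ]
    latencies = [r.consumed_at - r.published_at for r in recent]
    return {
        "queue_size": len(waiting),
        "consume_rate": len(recent) / window_seconds,  # messages per second
        "avg_latency": sum(latencies) / len(latencies) if latencies else 0.0,
        "oldest_waiting_age": max(
            (now - r.published_at for r in waiting), default=0.0
        ),
    }
```

The age of the oldest waiting message is included as a simple time-based view of consumer lag.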

Example Dashboard

The following example presents a monitoring dashboard for a message queue system. It displays key metrics with their thresholds and statuses, giving a quick view of the system’s health and performance. The values are simulated for illustration.

Message Queue System Dashboard

Overall System Status: Healthy

| Metric | Value | Threshold | Status |
| --- | --- | --- | --- |
| Queue Size (Orders Queue) | 1500 messages | < 2000 messages | Warning |
| Message Throughput (Orders Queue – Publish) | 500 messages/second | > 400 messages/second | OK |
| Message Throughput (Orders Queue – Consume) | 450 messages/second | > 400 messages/second | OK |
| Consumer Lag (Orders Queue) | 5 seconds | < 10 seconds | OK |
| Message Delivery Latency (Orders Queue) | 100 milliseconds | < 200 milliseconds | OK |
| Error Rate (All Queues) | 0.1% | < 1% | OK |
| CPU Utilization (Message Broker) | 60% | < 80% | OK |

Notes: This dashboard provides a high-level overview of the system’s performance. Further investigation may be required based on the status of individual metrics. For example, the warning status for queue size indicates a need to investigate the consumers or publishers of the ‘Orders Queue’. Historical trends are also available, but not displayed here for brevity.

Security Considerations in Message Queues

Securing message queue systems is crucial for maintaining data integrity, confidentiality, and availability. Implementing robust security measures protects against unauthorized access, data breaches, and denial-of-service attacks. This section details best practices for securing message queues, focusing on authentication, authorization, encryption, and other vital security considerations.

Security Best Practices for Message Queue Systems

Securing message queue systems requires a multi-layered approach. This involves implementing various security controls to protect data at rest and in transit, as well as ensuring the overall integrity of the system.

- Authentication: Verify the identity of users, applications, and services attempting to access the message queue. Implement strong authentication mechanisms such as:

- Username and Password: A fundamental method. Ensure strong password policies are enforced, including complexity requirements and regular password rotation.

- API Keys: Unique identifiers used to authenticate applications. These keys should be treated with care and rotated periodically.

- Multi-Factor Authentication (MFA): Add an extra layer of security by requiring users to provide more than one authentication factor, such as a password and a code from a mobile app or hardware token.

- OAuth/OpenID Connect: Utilize these standards for delegated authorization, allowing users to grant access to applications without sharing their credentials directly.

- Authorization: Control what authenticated users and applications can do within the message queue system. Implement role-based access control (RBAC) to define permissions based on roles, limiting access to only the resources and operations required.

- Encryption: Protect data confidentiality by encrypting messages both in transit and at rest.

- Encryption in Transit: Use Transport Layer Security (TLS) to encrypt communication between clients and the message queue server. TLS is the successor to Secure Sockets Layer (SSL), whose protocol versions are deprecated and should be disabled.

- Encryption at Rest: Encrypt the message queue data stored on disk using encryption features provided by the message queue implementation or the underlying storage system.

- Network Security: Secure the network infrastructure surrounding the message queue system.

- Firewalls: Configure firewalls to restrict access to the message queue server from unauthorized networks and hosts.

- Virtual Private Networks (VPNs): Use VPNs to create secure connections between clients and the message queue server, especially when accessing the queue over public networks.

- Network Segmentation: Isolate the message queue system within its own network segment to limit the impact of potential security breaches.

- Regular Security Audits and Monitoring: Regularly audit the message queue system to identify vulnerabilities and security misconfigurations. Implement comprehensive monitoring to detect and respond to security incidents.

- Security Information and Event Management (SIEM): Integrate the message queue logs with a SIEM system to collect, analyze, and correlate security events.

- Intrusion Detection Systems (IDS) and Intrusion Prevention Systems (IPS): Deploy IDS/IPS to detect and prevent malicious activity.

- Patch Management: Keep the message queue software and all related dependencies up-to-date with the latest security patches. Regularly scan for vulnerabilities and address them promptly.

- Least Privilege Principle: Grant users and applications only the minimum necessary permissions to perform their tasks. Avoid providing excessive access rights.
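Role-based access control combined with least privilege can be sketched as a deny-by-default lookup. The role names, queue name, and actions below are invented for illustration and do not correspond to any specific broker's permission model.

```python
# Each role lists only the (queue, action) pairs it needs: least privilege.
ROLE_PERMISSIONS = {
    "order-producer": {("orders", "publish")},
    "order-consumer": {("orders", "consume")},
    "admin": {("orders", "publish"), ("orders", "consume"), ("orders", "purge")},
}


def is_allowed(user_roles, queue, action):
    """Return True only if one of the user's roles explicitly grants the action."""
    return any(
        (queue, action) in ROLE_PERMISSIONS.get(role, set())
        for role in user_roles
    )
```

Unknown roles and unlisted actions fall through to a denial, so a misconfigured client gets less access rather than more.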

Securing Messages in Transit and at Rest

Securing messages both in transit and at rest is paramount to prevent unauthorized access and data breaches. The following approaches provide a detailed strategy for achieving this.

- Securing Messages in Transit: Implement encryption to protect messages as they travel between producers, consumers, and the message queue server.

- Transport Layer Security (TLS/SSL): Use TLS/SSL to encrypt the network connection between clients and the message queue server. This protects against eavesdropping and man-in-the-middle attacks. Configure the message queue server to require TLS connections and verify client certificates.

- Mutual TLS (mTLS): Implement mTLS to verify the identity of both the client and the server. This enhances security by ensuring that only authorized clients can connect to the message queue.

- Configuration Example (RabbitMQ): In RabbitMQ, you would enable TLS in the `rabbitmq.conf` file by setting a TLS listener port (`listeners.ssl.default`) and the certificate paths (`ssl_options.cacertfile`, `ssl_options.certfile`, `ssl_options.keyfile`). The client applications would then be configured to use TLS when connecting to the server, often by specifying the `amqps` protocol and providing the necessary certificates.

- Securing Messages at Rest: Protect messages stored on disk from unauthorized access by encrypting the data.

- Encryption at Rest: Utilize encryption features provided by the message queue implementation or the underlying storage system. This prevents unauthorized access to message data if the storage medium is compromised.

- Message Queue-Specific Encryption: Some message queue systems offer built-in encryption features. For instance, you might be able to encrypt messages before they are stored in the queue.

- Storage-Level Encryption: If the message queue system uses a database or file system, leverage the encryption features provided by the storage system. For example, encrypt the hard drives or volumes where the message queue data is stored.

- Configuration Example (Kafka): Apache Kafka does not encrypt its log files itself, so encryption at rest is typically achieved by pointing the `log.dirs` setting at a directory on an encrypted volume. Encryption of data in transit is a separate feature that Kafka supports via TLS.

- Key Management: Securely manage the cryptographic keys used for encryption.

- Key Generation: Generate strong, unique keys using a cryptographically secure random number generator.

- Key Storage: Store keys securely, such as in a hardware security module (HSM) or a dedicated key management system (KMS). Avoid storing keys in the same location as the data they protect.

- Key Rotation: Regularly rotate encryption keys to minimize the impact of a potential key compromise.

- Data Masking and Tokenization: Protect sensitive data within messages by masking or tokenizing it.

- Data Masking: Replace sensitive data with masked values. For example, replace a credit card number with a masked version.

- Tokenization: Replace sensitive data with tokens that represent the original data. The tokens can be stored in the message queue, while the original data is stored in a secure data store.
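Masking and tokenization, as described in the last two items, can be sketched in a few lines. `mask_card_number` and `TokenVault` are hypothetical helpers; the in-memory dictionary only stands in for the secure data store, which in practice would be a separate, access-controlled service.

```python
import secrets


def mask_card_number(card_number):
    """Replace all but the last four digits with asterisks."""
    digits = [c for c in card_number if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


class TokenVault:
    """Stand-in for a secure store that maps tokens back to original values."""

    def __init__(self):
        self._values = {}

    def tokenize(self, value):
        token = "tok_" + secrets.token_hex(8)  # random, carries no card data
        self._values[token] = value
        return token

    def detokenize(self, token):
        return self._values[token]


def build_payment_message(order_id, card_number, vault):
    """Build a queue message that never contains the raw card number."""
    return {
        "order_id": order_id,
        "card_display": mask_card_number(card_number),
        "card_token": vault.tokenize(card_number),
    }
```

Only a service with access to the vault can turn `card_token` back into the card number; every other consumer sees the masked display value at most.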

Configuring Security Features for a Specific Message Queue Implementation

The specific steps for configuring security features vary depending on the message queue implementation. The following examples illustrate how to configure security for two popular message queue systems: RabbitMQ and Apache Kafka.

- RabbitMQ Security Configuration:

- Authentication: RabbitMQ supports several authentication mechanisms, including:

- Username and Password: Create users and assign them permissions using the RabbitMQ management interface or the `rabbitmqctl` command-line tool.

- TLS Client Certificates: Enable mTLS to authenticate clients based on their certificates. Configure the server to require client certificates and specify the certificate authority (CA) that issued the certificates.

- OAuth 2.0: Integrate RabbitMQ with an OAuth 2.0 provider to delegate authentication and authorization.

- Authorization: Use RabbitMQ’s permission system to control access to resources. Grant or deny permissions to users based on their roles.

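- Permissions: Permissions are granted per user and per virtual host as `configure`, `write`, and `read` patterns that are matched against exchange and queue names.

- Roles: RabbitMQ expresses roles as user tags (for example `administrator`, `monitoring`, or `management`), which mainly control access to the management interface.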

- Encryption in Transit: Enable TLS/SSL for client connections.

- Configuration: In the `rabbitmq.conf` file, set `listeners.ssl.default` to the TLS port and use the `ssl_options` settings to specify the certificate paths.

- Certificate Management: Obtain and configure SSL/TLS certificates from a trusted certificate authority.

- Example (rabbitmq.conf):

      listeners.ssl.default = 5671
      ssl_options.cacertfile = /path/to/cacert.pem
      ssl_options.certfile = /path/to/servercert.pem
      ssl_options.keyfile = /path/to/serverkey.pem

- Encryption at Rest: RabbitMQ does not provide built-in encryption at rest for message content. Consider using disk encryption features provided by the underlying operating system or storage system.

- Apache Kafka Security Configuration:

- Authentication: Kafka supports several authentication mechanisms:

- SASL/PLAIN: Use usernames and passwords for authentication.

- SASL/SCRAM: Implement a more secure authentication method using SCRAM (Salted Challenge Response Authentication Mechanism).

- TLS Client Certificates: Use client certificates for authentication.

- Kerberos: Integrate Kafka with Kerberos for authentication.

- Authorization: Kafka uses an authorization plugin to control access to topics, consumer groups, and other resources. Configure access control lists (ACLs) to grant or deny permissions to users and groups.

- ACLs: Define ACLs to specify which users or groups can perform specific actions on Kafka resources.

- Super Users: Designate super users with full access to all resources.

- Encryption in Transit: Enable TLS/SSL for client connections.

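- Configuration: In the `server.properties` file, add an `SSL` entry to the `listeners` setting to specify the TLS port, and set the `ssl.keystore.*` and `ssl.truststore.*` properties to specify the keystore and truststore locations and passwords.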

- Certificate Management: Obtain and configure SSL/TLS certificates from a trusted certificate authority.

- Example (server.properties):

      listeners=PLAINTEXT://:9092,SSL://:9093
      security.inter.broker.protocol=SSL
      ssl.keystore.location=/path/to/kafka.server.keystore.jks
      ssl.keystore.password=kafka.server.keystore.password
      ssl.key.password=kafka.server.key.password
      ssl.truststore.location=/path/to/kafka.server.truststore.jks
      ssl.truststore.password=kafka.server.truststore.password

- Encryption at Rest:

- Configuration: Kafka has no dedicated setting for encrypting its log files, so configure the `log.dirs` setting in the `server.properties` file to point to a directory on an encrypted volume.

- Storage-Level Encryption: Utilize encryption features provided by the underlying storage system.
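On the client side, connecting to a TLS-enabled broker usually starts with a properly configured TLS context. The sketch below uses only Python's standard `ssl` module; `build_client_tls_context` and its parameters are hypothetical, and how the resulting context is handed to a RabbitMQ or Kafka client depends on the client library, so check its documentation.

```python
import ssl


def build_client_tls_context(ca_file=None, cert_file=None, key_file=None):
    """Build a TLS context for a broker client.

    ca_file: CA bundle that signed the broker certificate (system CAs if None).
    cert_file, key_file: client certificate and key, needed only for mutual TLS.
    """
    # create_default_context enables certificate and hostname verification.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # Refuse legacy protocol versions such as SSLv3, TLS 1.0, and TLS 1.1.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file is not None:
        # Present a client certificate so the broker can authenticate the client.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context
```

Leaving verification enabled is the important part: a client that skips certificate checks still encrypts traffic but can no longer detect a man-in-the-middle.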

Use Cases of Message Queues

Message queues offer a powerful mechanism for enabling asynchronous communication between different parts of an application or across multiple applications. This asynchronous nature allows systems to remain responsive, scalable, and resilient. They are widely adopted across various industries to solve complex problems related to data processing, task management, and system integration.

E-commerce Order Processing

Message queues play a critical role in handling the high volume of transactions common in e-commerce.

- Order Placement: When a customer places an order, the order details are published to a message queue. This prevents the user from waiting for all subsequent processes (payment verification, inventory update, shipping notification) to complete before receiving confirmation. The system responds quickly, enhancing the user experience.

- Payment Processing: A separate service consumes the order message and processes the payment. This service might communicate with a payment gateway. If the payment is successful, a confirmation message is sent back to the order processing service.

- Inventory Management: Another service consumes the order message and updates the inventory levels. If an item is out of stock, a backorder process can be initiated.

- Shipping Notifications: Once the order is shipped, a service sends shipping notifications to the customer. This service can integrate with various shipping providers.

This decoupled architecture allows for scalability. For example, the payment processing service can scale independently based on the volume of payment transactions without impacting other services. If the inventory service experiences issues, it doesn’t prevent order placement.
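The order flow above can be sketched with a toy fan-out broker in which each service has its own queue and receives its own copy of every order message. `FanoutBroker`, `place_order`, and the service names are hypothetical; a real system would use the broker's exchanges, topics, or consumer groups instead.

```python
from collections import defaultdict, deque


class FanoutBroker:
    """Toy broker: every subscriber gets its own queue and a copy of each message."""

    def __init__(self):
        self._queues = defaultdict(dict)  # topic -> {subscriber name: deque}

    def subscribe(self, topic, name):
        self._queues[topic][name] = deque()

    def publish(self, topic, message):
        for queue in self._queues[topic].values():
            queue.append(message)

    def drain(self, topic, name, handler):
        """Let one subscriber process everything waiting in its own queue."""
        queue = self._queues[topic][name]
        while queue:
            handler(queue.popleft())


def place_order(broker, order):
    """Publish the order and confirm immediately, without waiting for consumers."""
    broker.publish("orders", order)
    return {"order_id": order["order_id"], "status": "accepted"}
```

Because each service drains its own queue, the inventory service can be slow or temporarily down without delaying the confirmation returned by `place_order` or the work of the payment service.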

Financial Transactions

Message queues are crucial for ensuring the reliability and efficiency of financial systems.

- Transaction Processing: Financial transactions, such as money transfers or stock trades, can be processed asynchronously. When a transaction is initiated, it’s added to a queue. Separate services then handle tasks like account validation, ledger updates, and notifications.

- Risk Management: Risk assessment services can consume messages related to transactions to evaluate potential risks in real-time.

- Fraud Detection: Message queues allow for the real-time processing of transaction data for fraud detection. Suspicious transactions can be flagged and investigated without blocking the core transaction flow.

The use of message queues improves system resilience. If one component fails, the rest of the system can continue to operate, ensuring the integrity of financial transactions.

Healthcare Systems

Message queues facilitate the exchange of information between different healthcare systems, such as Electronic Health Records (EHRs) and billing systems.

- Patient Data Synchronization: When a patient’s information is updated in one system (e.g., an EHR), a message is sent to a queue. Other systems (e.g., billing, lab results) then consume this message to update their records. This ensures data consistency across the healthcare ecosystem.

- Appointment Scheduling: Appointment scheduling systems can use message queues to notify other systems (e.g., pharmacy, lab) about upcoming appointments.

- Lab Results Delivery: When lab results are available, they can be published to a message queue. This allows different systems to consume the results, such as the EHR, the patient portal, and the doctor’s office.

Message queues enhance interoperability between healthcare systems, improving the quality of care and reducing administrative overhead.

Log Aggregation and Analysis

Message queues are used to collect and process logs from various sources within an application or across a distributed system.

- Log Collection: Log messages from different application components are sent to a message queue.

- Log Processing: Separate services consume these log messages to perform tasks like log aggregation, analysis, and storage.

- Alerting: Based on log analysis, alerts can be generated and sent to monitoring systems or operators.

This approach enables real-time monitoring, performance analysis, and anomaly detection.
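Within a single Python process, the same collect-then-process pattern is available in the standard library through `logging.handlers.QueueHandler` and `QueueListener`. The sketch below is an in-process analogy, not a distributed setup: `ListHandler`, `build_pipeline`, and `component_logger` are hypothetical helpers, and the in-memory list stands in for aggregation or storage.

```python
import logging
import logging.handlers
import queue


class ListHandler(logging.Handler):
    """Collects formatted log lines in memory; stands in for storage or analysis."""

    def __init__(self):
        super().__init__()
        self.lines = []

    def emit(self, record):
        self.lines.append(self.format(record))


def build_pipeline():
    """Create the shared queue, the processing sink, and the listener thread."""
    log_queue = queue.Queue()
    sink = ListHandler()
    sink.setFormatter(logging.Formatter("%(name)s %(levelname)s %(message)s"))
    listener = logging.handlers.QueueListener(log_queue, sink)
    return log_queue, sink, listener


def component_logger(name, log_queue):
    """Return a logger whose records are only placed on the queue."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(logging.handlers.QueueHandler(log_queue))
    return logger
```

A typical run starts the listener with `listener.start()`, logs from any number of components, and calls `listener.stop()` at shutdown, which processes the records still in the queue before returning.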

Social Media Platforms

Social media platforms utilize message queues for managing user activities and processing large amounts of data.

- Post Publishing: When a user publishes a post, the post data is added to a queue. Different services can then handle tasks like updating the user’s feed, sending notifications, and storing the post in a database.

- Notification Delivery: Message queues efficiently handle sending notifications (e.g., likes, comments) to users.

- Search Indexing: Posts are sent to a queue to be indexed for search functionality.

Message queues help manage the high volume of data and user interactions in social media platforms, providing a smooth user experience.

IoT Device Data Processing

Message queues are well-suited for handling data from IoT devices.

- Data Ingestion: Data from IoT devices (sensors, wearables) is sent to a message queue.

- Data Processing: Separate services consume the data to perform tasks like data aggregation, analysis, and storage.

- Real-time Analytics: Message queues enable real-time analytics on IoT data, allowing for immediate insights and actions.

This allows for efficient processing of the massive amounts of data generated by IoT devices, enabling real-time monitoring and control.

Mobile Application Backend

Message queues improve the performance and scalability of mobile application backends.

- Push Notifications: Message queues are used to manage and deliver push notifications to mobile devices.

- Background Tasks: Tasks like image processing, data synchronization, and sending emails can be offloaded to background workers consuming messages from a queue.

- User Authentication: Message queues can be used to handle user authentication requests.

Message queues ensure a responsive user experience and efficient use of server resources.

Recommendation Systems

Message queues are integral to recommendation systems.

- User Activity Tracking: User interactions (e.g., product views, clicks) are added to a queue.

- Recommendation Generation: Separate services consume these events to generate personalized recommendations.

- Recommendation Delivery: The generated recommendations are then delivered to users through a queue.

This architecture enables real-time recommendations based on user behavior.

Advanced Topics and Considerations

Implementing message queues effectively involves understanding not only the core concepts but also advanced techniques to ensure scalability, reliability, and resilience. This section delves into sophisticated aspects such as clustering, high availability, disaster recovery, scaling strategies, and the crucial considerations for selecting the appropriate message queue implementation. These topics are essential for building robust and production-ready systems that can handle significant workloads and remain operational even in challenging circumstances.

Message Queue Clustering, High Availability, and Disaster Recovery

Message queue systems often require clustering to achieve high availability and fault tolerance. Clustering involves distributing the message queue brokers across multiple servers, allowing the system to continue operating even if one or more brokers fail. High availability (HA) ensures that the system is always accessible, while disaster recovery (DR) focuses on restoring operations after a significant outage.

- Clustering: A clustered message queue system distributes the workload across multiple nodes. When a node fails, the remaining nodes take over its responsibilities. This architecture prevents a single point of failure and ensures continuous operation. Different clustering strategies exist, including active-active and active-passive configurations. In an active-active setup, all nodes are actively processing messages, while in an active-passive setup, one or more nodes act as backups and take over if the active node fails.

For instance, Apache Kafka uses a distributed architecture where data is replicated across multiple brokers within a cluster, ensuring data durability and availability.

- High Availability: HA is achieved through features such as automatic failover, data replication, and load balancing. When a broker fails, the system automatically detects the failure and redirects traffic to a healthy broker. Data replication ensures that messages are stored on multiple brokers, so even if one broker fails, the messages are not lost. Load balancing distributes the message processing load across the available brokers, preventing any single broker from becoming overloaded.

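For example, RabbitMQ supports HA by replicating queue contents across multiple nodes. Older releases did this with mirrored classic queues; current releases use quorum queues, which replicate messages using the Raft consensus algorithm, and classic queue mirroring was removed in RabbitMQ 4.0.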

- Disaster Recovery: DR involves having a plan and infrastructure in place to recover from a major outage, such as a data center failure. DR strategies include replicating the message queue system to a secondary data center or using cloud-based services for backup and recovery. Regular backups of message queue data and configurations are crucial. Testing the DR plan periodically ensures that the recovery process works as expected.

Consider a scenario where a company’s primary data center experiences a catastrophic event. With a well-defined DR plan, the message queue system can be quickly restored in a secondary data center, minimizing downtime and data loss.

Scaling Message Queue Systems for High-Volume Workloads

Scaling a message queue system involves increasing its capacity to handle a growing volume of messages and a larger number of consumers and producers. Effective scaling ensures that the system can meet the demands of high-volume workloads without performance degradation or message loss. Several techniques can be employed for horizontal and vertical scaling.

- Horizontal Scaling: This involves adding more brokers to the message queue cluster. As the message volume increases, more brokers can be added to distribute the load. This is often achieved by partitioning the message queues across multiple brokers. For example, Kafka’s partitioning mechanism allows messages to be distributed across multiple brokers based on a key, enabling parallel processing and horizontal scalability.

- Vertical Scaling: This involves increasing the resources (CPU, memory, storage) of the existing brokers. Vertical scaling can improve the performance of individual brokers, but it is limited by the hardware’s capacity. For example, increasing the RAM or the processing power of the servers that host the brokers.

- Load Balancing: Load balancing distributes the message processing load across multiple brokers or consumers. This prevents any single broker or consumer from becoming overloaded. Load balancing can be implemented at various levels, such as at the broker level, the consumer level, or using a dedicated load balancer.

- Message Partitioning: Partitioning involves dividing a queue into multiple partitions, each of which can be processed independently. This allows for parallel processing of messages, significantly improving throughput. For instance, in Kafka, topics are divided into partitions. Within a consumer group, each partition is read by exactly one consumer at a time, so different partitions are processed in parallel by different consumers, and the partition count sets the upper bound on parallelism within a group.

- Optimizing Consumer Performance: The performance of consumers is critical to overall system performance. Optimizing consumer performance involves tuning consumer configurations, such as batch size, prefetch count, and acknowledgement mechanisms. Consumers should be designed to process messages efficiently and avoid bottlenecks.

- Monitoring and Tuning: Continuous monitoring of the message queue system is essential to identify performance bottlenecks and areas for optimization. Monitoring tools provide insights into message throughput, latency, and resource utilization. Based on the monitoring data, the system can be tuned to improve performance and scalability.
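Key-based partitioning and the assignment of partitions to consumers can be sketched as follows. The SHA-256 hash is a stand-in chosen for this example and is not the partitioner Kafka itself uses; `partition_for` and `assign_partitions` are hypothetical helpers.

```python
import hashlib


def partition_for(key, num_partitions):
    """Map a message key to a partition with a stable hash.

    The same key always lands on the same partition, which preserves
    per-key ordering while spreading different keys across partitions.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def assign_partitions(num_partitions, consumers):
    """Round-robin assignment: each partition goes to exactly one consumer."""
    assignment = {consumer: [] for consumer in consumers}
    for partition in range(num_partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment
```

With six partitions and three consumers, each consumer owns two partitions; adding a seventh consumer to a six-partition queue would leave it idle, which is why the partition count is usually chosen with future consumer counts in mind.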

Choosing the Right Message Queue Implementation for Specific Project Requirements

Selecting the appropriate message queue implementation is crucial for the success of a project. Different message queue systems offer varying features, performance characteristics, and trade-offs. The choice depends on factors such as the project’s requirements, the expected message volume, the need for reliability, the desired level of scalability, and the existing infrastructure.

- Performance Requirements: Assess the expected message throughput and latency requirements, including the volume of messages that the system will need to handle. Some message queues, like Kafka, are designed for high throughput, while others, like RabbitMQ, prioritize reliability and advanced routing features.

- Reliability Requirements: Determine the importance of message delivery guarantees. Some message queues offer strong guarantees, such as at-least-once or exactly-once delivery, while others provide weaker guarantees. Consider the potential impact of message loss on the application.

- Scalability Requirements: Evaluate the need for horizontal scalability. Some message queues are designed for easy horizontal scaling, while others may require more complex configuration. Consider the expected growth in message volume and the number of consumers and producers.

- Feature Set: Evaluate the features offered by each message queue implementation. Some message queues provide advanced routing capabilities, message transformation features, and built-in monitoring tools.

- Integration with Existing Infrastructure: Consider the compatibility of the message queue implementation with the existing infrastructure, including the programming languages, operating systems, and cloud platforms used in the project.

- Community and Support: Consider the size of the community, the availability of documentation, and the level of vendor support. A large and active community can provide valuable assistance and resources.

- Cost: Consider the cost of the message queue implementation, including the cost of software licenses, hardware resources, and operational overhead. Evaluate the total cost of ownership (TCO) over the lifecycle of the project.

Final Summary

In conclusion, mastering the art of message queues is essential for building robust, scalable, and responsive applications. We’ve traversed the landscape of message queue systems, from their core components and diverse implementations to the critical aspects of message formats, routing, and security. By understanding the nuances of delivery guarantees, monitoring strategies, and advanced considerations, you’re well-equipped to harness the full potential of message queues.

Embrace the asynchronous revolution and transform the way you build and deploy your applications, ensuring they can handle the demands of today’s dynamic environments.

Quick FAQs

What are the primary benefits of using message queues?

Message queues provide several advantages, including improved application performance, enhanced scalability, increased fault tolerance, and decoupled components, making systems more flexible and easier to maintain.

How do message queues handle failures?

Message queues often incorporate mechanisms like message retries, dead-letter queues, and acknowledgments to handle failures. Producers can resend messages, and consumers can acknowledge successful processing, ensuring messages are not lost.
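A minimal sketch of retries combined with a dead-letter queue is shown below. The function name, the attempt limit, and the use of an in-memory list as the dead-letter queue are assumptions for illustration; real brokers provide their own retry and dead-lettering configuration.

```python
from collections import deque


def consume_with_retries(messages, handler, max_attempts=3):
    """Process messages, requeue failures, and dead-letter them after max_attempts."""
    queue = deque((message, 1) for message in messages)
    dead_letters = []
    while queue:
        message, attempt = queue.popleft()
        try:
            handler(message)
        except Exception as error:
            if attempt >= max_attempts:
                # Give up: park the message with the failure reason for inspection.
                dead_letters.append((message, str(error)))
            else:
                queue.append((message, attempt + 1))  # retry later
    return dead_letters
```

Messages that keep failing end up in the returned list instead of blocking the queue, so healthy messages continue to flow while the failures wait for investigation.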

What is the difference between a message queue and a message broker?

A message queue is a specific data structure, while a message broker is the software that implements and manages the message queues. The broker handles the routing, storage, and delivery of messages.

Are message queues suitable for all types of applications?

Message queues are particularly well-suited for applications with asynchronous communication needs, such as background processing, event-driven architectures, and decoupling of services. They may not be the best choice for real-time, synchronous operations.

How do I choose the right message queue implementation for my project?

The choice depends on your specific requirements, including scalability needs, performance expectations, and the features offered by each implementation. Consider factors like ease of use, community support, and integration capabilities.