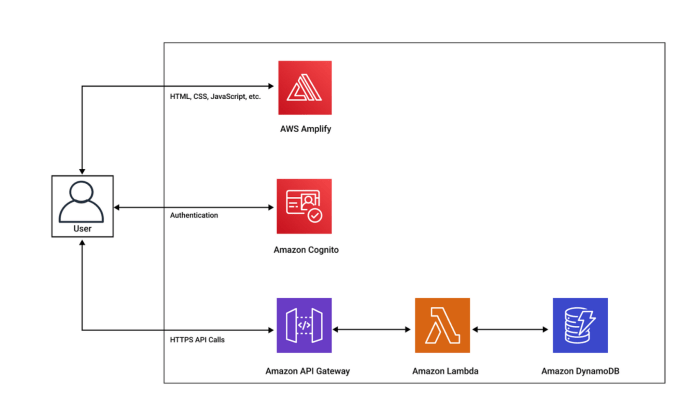

Serverless architectures are valued for their scalability and cost-effectiveness, but they make state management harder. Function instances are ephemeral, so state cannot be relied upon to persist in memory or on local disk between invocations, and traditional stateful approaches do not fit. DynamoDB, a fully managed NoSQL database service, fills this gap by providing a scalable store for state data that any invocation can read and write. This article covers how to use DynamoDB for state management in serverless applications, from fundamental concepts to advanced techniques.

It covers the core components of DynamoDB (table design, primary keys, and secondary indexes) with practical examples using the AWS CLI and SDKs. It then shows how to store session data securely, use atomic operations to prevent data corruption, and handle concurrency. Finally, it explores advanced techniques such as transactions and batch operations, with real-world use cases and strategies for performance and scalability.

Introduction to DynamoDB for Serverless State Management

DynamoDB serves as a critical component for state management in serverless architectures, addressing the inherent statelessness of these systems. By providing a fully managed NoSQL database, DynamoDB allows serverless applications to persist and retrieve data, enabling them to maintain state across invocations. This capability is essential for building complex, interactive applications that require data persistence and user session management.

Fundamental Role of DynamoDB in Managing State

DynamoDB’s primary function within a serverless environment is to store and retrieve application state. Serverless functions, by design, are stateless; each invocation operates independently, without retaining any information from previous executions. To overcome this limitation, data must be externalized and persisted. DynamoDB acts as this external store, allowing functions to save and retrieve information related to user sessions, application configurations, and any other data necessary for the application’s operation.

The database facilitates the management of complex states. For instance, consider an e-commerce application where user shopping carts, order histories, and product inventories need to be tracked. DynamoDB provides the storage and retrieval capabilities required to handle these data-intensive operations efficiently and reliably.

Overview of Serverless Architecture and Stateless Nature

Serverless architecture is characterized by its event-driven nature and the use of managed services, such as AWS Lambda, to execute code. The key characteristic is the statelessness of individual function executions. Each function invocation is independent, meaning that the function does not retain any information about previous executions. This statelessness simplifies scaling and allows for efficient resource utilization, as functions are only active when triggered by an event.

However, this also necessitates the use of external services for state management. Data persistence is crucial for storing information about users, sessions, and application configurations. DynamoDB provides a scalable and highly available solution for managing this state.

Benefits of Using DynamoDB for State Data Storage and Retrieval

DynamoDB offers several key benefits for serverless state management:

- Scalability: DynamoDB scales to handle varying workloads. In on-demand capacity mode, it accommodates growing data volumes and request traffic without capacity planning; in provisioned mode, auto scaling adjusts read and write capacity within limits you set. This lets serverless applications grow without significant infrastructure management, and it is made possible by a distributed architecture that partitions data across many servers and can serve a very large number of reads and writes per second.

- High Availability and Durability: DynamoDB replicates data across multiple Availability Zones within a region. This keeps the data accessible, and minimizes the risk of data loss, even if a failure occurs in one zone.

- Cost-Effectiveness: DynamoDB's pricing aligns well with the on-demand nature of serverless applications. In on-demand mode you pay per read and write request plus storage; in provisioned mode you pay for the capacity you provision, whether or not you use it. On-demand mode can significantly reduce costs compared to traditional database solutions for applications with variable or unpredictable traffic, because charges fall with usage during periods of low traffic.

- Fully Managed Service: As a fully managed service, DynamoDB eliminates the operational overhead of running a database. AWS handles tasks such as hardware provisioning, software patching, and replication, so developers can focus on building and deploying their applications rather than managing infrastructure. On-demand backups and point-in-time recovery (which must be enabled per table) provide additional data protection and disaster recovery options.

- Performance: DynamoDB provides consistent, low-latency performance. The database is optimized for fast read and write operations, making it suitable for applications that require real-time data access. DynamoDB uses solid-state drives (SSDs) for storage and employs a distributed architecture to minimize latency.

DynamoDB’s ability to manage state effectively is critical for the successful implementation of serverless applications, enabling complex functionalities that would otherwise be challenging to achieve in a stateless environment.

Core Concepts

Understanding the fundamental components of Amazon DynamoDB is crucial for effective state management in serverless applications. This involves grasping how data is organized, accessed, and indexed within DynamoDB. This section will delve into the core concepts of tables, items, and attributes, providing a foundation for designing a DynamoDB schema optimized for storing and retrieving state information.

Designing a DynamoDB Table Schema for State Management

Designing a DynamoDB table schema requires careful consideration of access patterns and data relationships. The goal is a schema that enables efficient reads and writes while minimizing costs. A well-designed schema anticipates the queries that will be performed and optimizes for those access patterns, which typically involves choosing the correct primary key and, when necessary, defining secondary indexes. To illustrate, consider a table designed to manage user session data for a web application.

The table should store information about logged-in users, their session identifiers, authentication tokens, and any relevant session-specific data, such as the user's current page or shopping cart contents.

Primary Key Selection

The primary key is the unique identifier for each item in the table, and choosing the right one is paramount for performance. For user session data, a common approach is a composite primary key consisting of a partition key and a sort key.

Partition Key

DynamoDB hashes the partition key to determine which physical partition stores an item. A good choice for the partition key in our example is the `UserID`. This ensures that all session data for a specific user is stored together, facilitating efficient retrieval of a user's session information.

Sort Key

The sort key further organizes the data within each partition. In our example, the sort key could be the `SessionID`. This allows us to store multiple sessions for the same user (e.g., across different devices or browsers).

Data Modeling

The table structure should reflect the data’s structure. Attributes should be chosen to represent the necessary information about the user’s session.

- `UserID` (String): The unique identifier for the user (partition key).

- `SessionID` (String): The unique identifier for the session (sort key).

- `AuthToken` (String): The authentication token associated with the session.

- `LastAccessed` (Number): A Unix timestamp indicating the last time the session was accessed.

- `UserAgent` (String): The user agent string from the browser.

- `SessionData` (Map): Session-specific data, such as the user's current page or shopping cart contents.

This structure supports retrieving a specific session with a `GetItem` call that supplies both `UserID` and `SessionID`. It also supports retrieving all sessions for a user with a `Query` whose key condition specifies only `UserID`. If session IDs carry a meaningful prefix (for example, a device type), a `begins_with` condition on `SessionID` in the key condition expression can narrow the results further.
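Fetching every session for one user needs only an equality condition on the partition key. Because a single `Query` call returns at most 1 MB of data, the caller should follow `LastEvaluatedKey` until it is absent. The sketch below assumes a boto3 `Table` resource for this session table is passed in; the helper names `build_session_query` and `query_all_sessions` are hypothetical.

```python
def build_session_query(user_id, start_key=None):
    """Build Table.query() keyword arguments for all sessions of one user."""
    kwargs = {
        # Equality on the partition key selects the user's item collection
        "KeyConditionExpression": "UserID = :uid",
        "ExpressionAttributeValues": {":uid": user_id},
    }
    if start_key is not None:
        # Resume where the previous page stopped
        kwargs["ExclusiveStartKey"] = start_key
    return kwargs


def query_all_sessions(table, user_id):
    """Collect every session item for user_id, following pagination."""
    items, start_key = [], None
    while True:
        response = table.query(**build_session_query(user_id, start_key))
        items.extend(response.get("Items", []))
        start_key = response.get("LastEvaluatedKey")
        if start_key is None:
            return items
```

Usage would look like `query_all_sessions(boto3.resource("dynamodb").Table(name), "user123")`, where `name` is whatever the session table is called.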

Purpose of Primary Keys and Secondary Indexes in Efficient Data Access

Primary keys and secondary indexes are essential for optimizing data access in DynamoDB. They provide different ways to locate and retrieve data efficiently, improving query performance and reducing read costs. Using them correctly is critical for building scalable and performant serverless applications.

Primary Keys

The primary key uniquely identifies each item in a DynamoDB table. It consists of either a partition key alone or a partition key and a sort key (a composite primary key). DynamoDB uses the partition key to determine the physical location of the data, and the sort key orders items within a partition, enabling range queries and efficient filtering.

Example

In the user session example, the composite primary key (`UserID`, `SessionID`) enables quick retrieval of a specific user session based on both the user ID and the session ID. The `UserID` directs DynamoDB to the partition containing the user’s data, and the `SessionID` helps locate the specific session within that partition.

Secondary Indexes

Secondary indexes provide alternative ways to query data based on attributes other than the primary key. There are two types: global secondary indexes (GSIs) and local secondary indexes (LSIs).

Global Secondary Indexes (GSIs)

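GSIs allow you to query data using a partition key (and optional sort key) different from the table's primary key. Because a GSI spans all partitions of the base table, it is useful for queries across the entire table. Reads from a GSI are eventually consistent only.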

Example

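Suppose we want to find all sessions active within the last 15 minutes. A GSI with `LastAccessed` as its partition key would not work: a key condition on a partition key supports only equality, so a time window cannot be expressed. Instead, `LastAccessed` belongs in the GSI's sort key, paired with a partition key whose values are known in advance, such as a per-day bucket attribute written with each session. A `Query` on the index can then apply a range condition (for example, `BETWEEN`) to `LastAccessed` without scanning the entire table. The GSI maintains its own storage, and DynamoDB propagates base-table changes to it automatically and asynchronously.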
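A time-window query of this kind can be sketched as follows. It assumes a hypothetical GSI named `ActiveSessionsByTime`, whose partition key is a hypothetical `ActivityDate` string attribute holding the UTC day (e.g. `"2023-03-15"`) and whose sort key is `LastAccessed`. A window that crosses midnight touches two day buckets, so the helper returns one set of `Table.query()` arguments per bucket.

```python
from datetime import datetime, timezone


def day_bucket(epoch_seconds):
    """Map a Unix timestamp to the hypothetical per-day GSI partition value."""
    return datetime.fromtimestamp(epoch_seconds, tz=timezone.utc).strftime("%Y-%m-%d")


def build_recent_session_queries(now, window_seconds=900):
    """Return Table.query() kwargs covering sessions active in the last window."""
    start = now - window_seconds
    # One bucket normally; two when the window spans UTC midnight
    buckets = sorted({day_bucket(start), day_bucket(now)})
    return [
        {
            "IndexName": "ActiveSessionsByTime",  # hypothetical GSI name
            # Equality on the GSI partition key, range condition on its sort key
            "KeyConditionExpression": "ActivityDate = :day AND LastAccessed BETWEEN :start AND :now",
            "ExpressionAttributeValues": {":day": day, ":start": start, ":now": now},
        }
        for day in buckets
    ]
```

A single day bucket concentrates all of the index's writes on one partition key value, so a high-traffic table would likely need to shard it further (for example, by appending a small random suffix and querying each shard).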

Local Secondary Indexes (LSIs)

LSIs allow you to query data using an alternative sort key while keeping the table's partition key. They must be defined when the table is created and cannot be added afterwards.

Example

Our session table has no natural candidate for an LSI, but suppose it also stored a `DeviceType` attribute (e.g., "mobile", "desktop"). An LSI with the same partition key (`UserID`) and `DeviceType` as its sort key would let us quickly filter a given user's sessions by device type.

Trade-offs

The use of secondary indexes can improve query performance but also introduces costs. Each index adds storage costs and increases write costs because DynamoDB must update the index whenever the base table is updated. Therefore, the decision to use secondary indexes should be based on the access patterns of the application and the trade-off between read performance and write costs.

Designing a Table with Attributes for User Session Data

A well-designed table schema for user session data includes several attributes that store critical information about each session. The following table summarizes these attributes, their data types, and their roles:

| Attribute Name | Data Type | Description | Example Value | Primary Key Role |
|---|---|---|---|---|
| UserID | String | Unique identifier for the user. | "user123" | Partition Key |
| SessionID | String | Unique identifier for the session. | "session456" | Sort Key |
| AuthToken | String | Authentication token for the session. | "abcdef123456" | |
| LastAccessed | Number | Timestamp of the last session activity (Unix timestamp, seconds). | 1678886400 | |
| UserAgent | String | User agent string of the browser. | "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36" | |
| SessionData | Map | A map to store session-specific data. | {"currentPage": "/home", "cartItems": ["item1", "item2"]} | |

This table design allows efficient retrieval of session data. The `UserID` and `SessionID` combination forms the primary key, ensuring quick access to specific sessions. The `LastAccessed` attribute is crucial for session management, because it identifies inactive sessions for cleanup. `AuthToken` holds the session's authentication token, so it must be protected as described under the security considerations, and `SessionData` stores application-specific data associated with the session.

The `UserAgent` attribute helps identify the device and browser used for the session. Timestamps are stored as Numbers so they can be compared in range conditions, and free-form session state uses a Map so that individual fields can be read or updated through document paths.

Setting up DynamoDB

Setting up DynamoDB is a fundamental step in leveraging its capabilities for serverless state management. This section details the process of configuring DynamoDB using both the AWS Command Line Interface (CLI) and Software Development Kits (SDKs), providing practical examples for common operations. The focus is on enabling developers to interact with DynamoDB efficiently and securely.

Creating DynamoDB Tables with AWS CLI

The AWS CLI offers a command-line interface for managing AWS services, including DynamoDB. Creating a table involves specifying the table name, the primary key schema, and the capacity mode (provisioned throughput or on-demand). This approach is particularly useful for automation and scripting. The `create-table` command takes several parameters that define the table's characteristics:

- `--table-name`: Specifies the name of the table to be created.

- `--attribute-definitions`: Defines the attributes that make up the table's primary key (and any index keys) and their data types.

- `--key-schema`: Describes the primary key structure, specifying the attribute names and their roles (`HASH` for the partition key, `RANGE` for the sort key).

- `--provisioned-throughput`: Configures the read and write capacity units for the table. To use on-demand capacity instead, omit it and pass `--billing-mode PAY_PER_REQUEST`.

For example, to create a table named "Users" with a partition (HASH) key "UserId" (String), a sort (RANGE) key "RegistrationDate" (Number), and provisioned read and write capacity of 5 units each, use the following command:

```bash
aws dynamodb create-table \
    --table-name Users \
    --attribute-definitions \
        AttributeName=UserId,AttributeType=S \
        AttributeName=RegistrationDate,AttributeType=N \
    --key-schema \
        AttributeName=UserId,KeyType=HASH \
        AttributeName=RegistrationDate,KeyType=RANGE \
    --provisioned-throughput ReadCapacityUnits=5,WriteCapacityUnits=5
```

This command sends a `CreateTable` request to the DynamoDB service.

The response describes the new table, whose initial status is `CREATING`, or reports any errors encountered. The table is usable once its status changes to `ACTIVE`; `aws dynamodb wait table-exists --table-name Users` blocks until then.

This setup is a basic example. Real-world applications often require more complex configurations, including secondary indexes and global tables, depending on the specific use case and access patterns.
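The same table definition can be created from code. The sketch below builds the `create_table` arguments for the session table designed earlier (`UserID` partition key, `SessionID` sort key) using on-demand capacity, then waits for the table to become active. It assumes a boto3 low-level client (`boto3.client("dynamodb")`) is passed in; the table name `SessionsByUser` and both helper names are hypothetical.

```python
def build_session_table_request(table_name="SessionsByUser"):
    """Keyword arguments for DynamoDB.Client.create_table (session table sketch)."""
    return {
        "TableName": table_name,
        # Only key attributes are declared; all other attributes are schemaless
        "AttributeDefinitions": [
            {"AttributeName": "UserID", "AttributeType": "S"},
            {"AttributeName": "SessionID", "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": "UserID", "KeyType": "HASH"},      # partition key
            {"AttributeName": "SessionID", "KeyType": "RANGE"},  # sort key
        ],
        # On-demand capacity, so no ProvisionedThroughput block is needed
        "BillingMode": "PAY_PER_REQUEST",
    }


def create_session_table(client, table_name="SessionsByUser"):
    """Create the table, then block until DynamoDB reports it ACTIVE."""
    client.create_table(**build_session_table_request(table_name))
    client.get_waiter("table_exists").wait(TableName=table_name)
```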

CRUD Operations with Python (Boto3) and Node.js (AWS SDK)

Performing Create, Read, Update, and Delete (CRUD) operations on DynamoDB items is a core aspect of data management. Both Python (using the Boto3 library) and Node.js (using the AWS SDK for JavaScript) provide robust SDKs for interacting with DynamoDB. The following code snippets illustrate basic CRUD operations against the "Users" table created above.

- Python (Boto3):

```python
import boto3

# Initialize a DynamoDB resource and bind it to the Users table
dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('Users')

# Composite primary key: partition key plus a Unix-timestamp sort key
key = {'UserId': 'user123', 'RegistrationDate': 1678886400}

# Create
table.put_item(Item={**key, 'Username': 'john.doe'})

# Read
response = table.get_item(Key=key)
item = response.get('Item')  # None if the item does not exist
print(item)

# Update
table.update_item(
    Key=key,
    UpdateExpression='SET Username = :val1',
    ExpressionAttributeValues={':val1': 'john.updated'},
)

# Delete
table.delete_item(Key=key)
```

- Node.js (AWS SDK for JavaScript v2):

```javascript
const AWS = require('aws-sdk');

// Configure the AWS SDK
AWS.config.update({ region: 'us-east-1' }); // Replace with your region

// The DocumentClient converts between native JavaScript types and DynamoDB types
const docClient = new AWS.DynamoDB.DocumentClient();
const tableName = 'Users';
const key = { UserId: 'user456', RegistrationDate: 1678972800 }; // Unix timestamp sort key

async function main() {
  // Create
  await docClient.put({ TableName: tableName, Item: { ...key, Username: 'jane.doe' } }).promise();
  console.log('Added item');

  // Read
  const { Item } = await docClient.get({ TableName: tableName, Key: key }).promise();
  console.log('GetItem succeeded:', JSON.stringify(Item, null, 2));

  // Update
  const updated = await docClient
    .update({
      TableName: tableName,
      Key: key,
      UpdateExpression: 'SET Username = :val1',
      ExpressionAttributeValues: { ':val1': 'jane.updated' },
      ReturnValues: 'UPDATED_NEW',
    })
    .promise();
  console.log('UpdateItem succeeded:', JSON.stringify(updated.Attributes, null, 2));

  // Delete
  await docClient.delete({ TableName: tableName, Key: key }).promise();
  console.log('DeleteItem succeeded');
}

// Each call is awaited, so the operations run strictly in order
main().catch((err) => {
  console.error('DynamoDB operation failed:', JSON.stringify(err, null, 2));
  process.exitCode = 1;
});
```

These code snippets demonstrate fundamental CRUD operations. The Python example uses the `boto3` library to interact with DynamoDB, while the Node.js example uses version 2 of the AWS SDK for JavaScript and awaits each call so the operations run in sequence. New projects should prefer version 3 of the SDK (for example, the `@aws-sdk/lib-dynamodb` package).

Both examples first initialize a DynamoDB client. The create operation (`put_item` in Python, `put` in Node.js) adds a new item to the table. The read operation (`get_item` in Python, `get` in Node.js) retrieves an item based on its primary key. The update operation (`update_item` in Python, `update` in Node.js) modifies an existing item. Finally, the delete operation (`delete_item` in Python, `delete` in Node.js) removes an item from the table.

These are basic examples, and real-world scenarios often involve more complex operations, such as batch operations, querying, and filtering.
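As one example of the batch operations mentioned above, boto3's `Table.batch_writer()` buffers `put_item` calls, sends them as `BatchWriteItem` requests of up to 25 items, and resends unprocessed items automatically. The sketch below assumes the `UserID`/`SessionID` session table from earlier; `put_sessions` is a hypothetical helper.

```python
def put_sessions(table, sessions):
    """Write many session items through boto3's batching helper."""
    # overwrite_by_pkeys drops earlier buffered items with the same key, since
    # BatchWriteItem rejects a request containing duplicate keys
    with table.batch_writer(overwrite_by_pkeys=["UserID", "SessionID"]) as batch:
        for session in sessions:
            batch.put_item(Item=session)
```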

Configuring AWS Credentials

Properly configuring AWS credentials is essential for secure, authorized access to DynamoDB. The right method depends on where the code runs (e.g., a local development machine, an Amazon EC2 instance, or an AWS Lambda function). The most common methods are:

- Environment Variables: A common practice for local development and containerized environments. The AWS CLI and SDKs automatically read `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`; the region is read from `AWS_REGION` or `AWS_DEFAULT_REGION`, depending on the tool. For example:

```bash
export AWS_ACCESS_KEY_ID=YOUR_ACCESS_KEY
export AWS_SECRET_ACCESS_KEY=YOUR_SECRET_KEY
export AWS_REGION=us-east-1
export AWS_DEFAULT_REGION=us-east-1
```

- The AWS CLI `configure` Command: Running the following command prompts for the access key ID, secret access key, default region, and output format:

```bash
aws configure
```

The keys are saved to `~/.aws/credentials` and the region and output format to `~/.aws/config`, where the SDKs also find them automatically.

- Shared Configuration Files: The SDKs and CLI search several places for credentials, including these shared files, whose locations can be overridden with the `AWS_SHARED_CREDENTIALS_FILE` and `AWS_CONFIG_FILE` environment variables. Credentials and settings live in separate files:

```
# ~/.aws/credentials
[default]
aws_access_key_id = YOUR_ACCESS_KEY
aws_secret_access_key = YOUR_SECRET_KEY

# ~/.aws/config
[default]
region = us-east-1
```

- IAM Roles (for EC2 and Lambda): When running code on EC2 instances or in Lambda functions, IAM roles are the recommended approach. The instance or function is assigned a role with the necessary DynamoDB permissions, and the SDKs automatically obtain the role's temporary, short-lived credentials.

These methods allow the AWS SDKs and CLI to authenticate and authorize requests to DynamoDB. IAM roles are generally the most secure choice, particularly in production, because no long-term keys need to be distributed. Never hardcode credentials in code, and choose the configuration method based on the deployment environment and security requirements.

Storing Session Data with DynamoDB

DynamoDB provides a robust and scalable solution for managing user session data within serverless applications. This is crucial for maintaining user context across requests, enabling features like authentication, personalization, and tracking user activity. Efficiently storing and retrieving session information directly impacts application performance and user experience.

Storing User Session Information

User session information, encompassing data such as authentication tokens, user preferences, and session timestamps, can be effectively stored within DynamoDB tables. This approach offers several advantages, including scalability, high availability, and the ability to handle a large volume of concurrent users. The structure of the DynamoDB table is critical for efficient access and management of this data.

- Table Design: A well-designed DynamoDB table is essential for storing session data. The primary key typically consists of a unique identifier, such as a session ID or user ID. The session ID, generated at the start of a user’s session, uniquely identifies the session. The user ID, linked to the user’s account, can be used for data organization and retrieval.

The choice depends on the specific access patterns required by the application. The table should also include attributes to store session data, such as:

- Authentication tokens (e.g., JWTs or access tokens)

- User preferences (e.g., language, theme)

- Session timestamps (e.g., last activity, creation time)

- Any other relevant session-specific data

- Data Serialization: DynamoDB can store nested session data natively in Map and List attributes, which keeps individual fields addressable in update and condition expressions. Objects that don't map cleanly onto these types can be serialized first, for example as a JSON string or as MessagePack bytes in a Binary attribute. JSON is readable and widely supported, while MessagePack typically produces smaller payloads, which reduces storage and the read/write capacity consumed by frequently accessed items. Choose the format with both performance and storage efficiency in mind.

- Example: Consider a table named `UserSessions` with the following attributes:

- `SessionId` (String, Partition Key): A unique identifier for the session.

- `UserId` (String): The ID of the user associated with the session.

- `AccessToken` (String): The authentication token.

- `Preferences` (Map): A map of user preferences (for example, language and theme).

- `LastActivity` (Number): A timestamp indicating the last time the session was accessed.

- `ExpirationTime` (Number): A timestamp indicating when the session expires (used with TTL).

- Access Patterns: The application should retrieve and update session data through efficient access patterns. Because `SessionId` is the partition key, fetching a session is a direct `GetItem` operation. Looking sessions up by `UserId` would require a global secondary index on that attribute, since it is not part of the table's key. Updating `LastActivity` on each request (together with `ExpirationTime`, when sessions expire through TTL) keeps the session active.
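The two access patterns above, a direct lookup and a per-request refresh, might look like the following sketch against the `UserSessions` table. It assumes a boto3 `Table` resource is passed in and a 30-minute idle timeout; `get_session` and `touch_session` are hypothetical helper names. The condition prevents a refresh from recreating a session that was already deleted, in which case boto3 raises a `ConditionalCheckFailedException` error.

```python
import time


def get_session(table, session_id):
    """Fetch one session by its partition key; returns None if absent."""
    response = table.get_item(
        Key={"SessionId": session_id},
        ConsistentRead=True,  # see the latest write, e.g. right after login
    )
    return response.get("Item")


def touch_session(table, session_id, ttl_seconds=1800, now=None):
    """Refresh LastActivity and push ExpirationTime forward for an existing session."""
    now = int(time.time()) if now is None else now
    return table.update_item(
        Key={"SessionId": session_id},
        UpdateExpression="SET LastActivity = :now, ExpirationTime = :exp",
        # Only update sessions that still exist (not logged out or deleted)
        ConditionExpression="attribute_exists(SessionId)",
        ExpressionAttributeValues={":now": now, ":exp": now + ttl_seconds},
    )
```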

Security Considerations

Security is paramount when storing sensitive data like authentication tokens and user preferences. Several security measures should be implemented to protect session data from unauthorized access and manipulation.

- Encryption: Sensitive data, such as authentication tokens, should be encrypted both in transit and at rest.

- Encryption in Transit: Utilize HTTPS (TLS/SSL) to encrypt data during transmission between the client and the server.

- Encryption at Rest: DynamoDB encrypts all table data at rest by default. For more control over the encryption key, configure the table to use an AWS managed key or a customer managed key in AWS KMS (Key Management Service), and restrict access to that key to authorized principals.

- Access Control: Implement strict access control policies to limit who can access the DynamoDB table and its contents.

- IAM Roles: Use AWS IAM roles to grant the serverless functions (e.g., Lambda functions) only the necessary permissions to access the DynamoDB table. Avoid using overly permissive IAM policies.

- Least Privilege Principle: Grant only the minimum necessary permissions. For example, a function that retrieves session data should only have `dynamodb:GetItem` permission, and not `dynamodb:PutItem` or `dynamodb:DeleteItem` unless absolutely required.

- Data Validation and Sanitization: Validate and sanitize all data before storing it in DynamoDB to prevent injection attacks and ensure data integrity.

- Input Validation: Validate all user inputs to prevent malicious data from being stored in the session.

- Output Encoding: Encode data before displaying it to prevent cross-site scripting (XSS) attacks.

- Token Management: Securely manage authentication tokens to prevent their compromise.

- Token Storage: Never store user secrets such as passwords in session data. Store only the tokens the application actually needs, and prefer short-lived tokens so that a leaked item is of limited use.

- Token Revocation: Implement a mechanism to revoke tokens if a user’s account is compromised or if a token is suspected of being misused. This can involve deleting the session data from DynamoDB or marking the token as invalid.

- Auditing and Monitoring: Implement auditing and monitoring to detect and respond to security incidents.

- CloudTrail: Enable AWS CloudTrail to log all API calls made to DynamoDB.

- Monitoring Metrics: Monitor DynamoDB metrics (e.g., consumed read/write capacity units, error rates) using Amazon CloudWatch to identify potential security issues or performance bottlenecks.
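To make the least-privilege principle concrete, the sketch below builds an IAM policy document that allows only `dynamodb:GetItem` on a single table, as for a function that only reads sessions. The policy is built as a Python dict and serialized to JSON; the table ARN uses the documentation placeholder account ID `123456789012`, and `read_only_session_policy` is a hypothetical helper.

```python
import json


def read_only_session_policy(table_arn):
    """Least-privilege IAM policy for a function that only reads sessions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem"],  # no PutItem, UpdateItem, or DeleteItem
                "Resource": table_arn,
            }
        ],
    }


policy_json = json.dumps(
    read_only_session_policy(
        "arn:aws:dynamodb:us-east-1:123456789012:table/UserSessions"  # placeholder ARN
    ),
    indent=2,
)
```

A function that also refreshes sessions would add `dynamodb:UpdateItem` to `Action` rather than granting `dynamodb:*`.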

Using TTL (Time-to-Live) for Session Expiration

DynamoDB’s Time-to-Live (TTL) feature provides a simple and effective way to automatically expire session data. TTL automatically deletes items from a table after a specified time, reducing the need for manual session cleanup and minimizing storage costs.

- TTL Attribute: To use TTL, enable it on the table and name the attribute that holds each item's expiration time (for example, `ExpirationTime`). Because DynamoDB is schemaless, only items that contain this attribute are eligible for expiry. The attribute must be a Number holding a Unix epoch timestamp in seconds (seconds since January 1, 1970, 00:00:00 UTC).

- Setting the TTL Attribute: When creating or updating a session, set the TTL attribute to a future timestamp indicating when the session should expire. For example, to expire a session after 30 minutes, add 1800 seconds (30 minutes × 60 seconds/minute) to the current time: `ExpirationTime = CurrentTimestamp + 1800`.

- TTL Process: A background process deletes items whose TTL timestamp has passed. It runs asynchronously, so deletion is not instantaneous: depending on the table's size and activity, expired items are typically deleted within a few days of their expiration time.

- Benefits of TTL:

- Automatic Cleanup: Automatically removes expired sessions, reducing the need for manual cleanup tasks.

- Cost Savings: Reduces storage costs by deleting inactive session data, and TTL deletions do not consume write capacity.

- Smaller Tables: Prevents the table and each user's item collection from accumulating stale sessions, so scans and queries read fewer items.

- Considerations:

- Asynchronous Deletion: The deletion process is asynchronous, so there might be a delay before expired items are removed.

- TTL Attribute Updates: You can update the TTL attribute to extend or shorten the session duration. For instance, updating `LastActivity` will usually be accompanied by updating the `ExpirationTime`.

- Error Handling: Because expired items can remain until the background process removes them, the application should not treat an item's existence as proof that the session is valid. Compare `ExpirationTime` with the current time when reading a session (or use a filter expression in queries and scans) and reject expired sessions gracefully.
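The TTL workflow above can be sketched in three small pieces: enabling TTL on the `ExpirationTime` attribute, creating a session item that expires in 30 minutes, and rejecting expired sessions at read time even if TTL has not deleted them yet. The sketch assumes a boto3 low-level client for `enable_session_ttl` and items shaped like the `UserSessions` example; all helper names are hypothetical.

```python
import time

SESSION_TTL_SECONDS = 30 * 60  # 30 minutes × 60 seconds/minute = 1800


def enable_session_ttl(client, table_name="UserSessions"):
    """Tell DynamoDB which attribute holds each item's expiry (epoch seconds)."""
    client.update_time_to_live(
        TableName=table_name,
        TimeToLiveSpecification={"Enabled": True, "AttributeName": "ExpirationTime"},
    )


def new_session_item(session_id, user_id, now=None):
    """Build a UserSessions item whose ExpirationTime is 30 minutes from now."""
    now = int(time.time()) if now is None else now
    return {
        "SessionId": session_id,
        "UserId": user_id,
        "LastActivity": now,
        "ExpirationTime": now + SESSION_TTL_SECONDS,  # Number, seconds since epoch
    }


def is_session_valid(item, now=None):
    """Reject sessions past ExpirationTime even if TTL has not deleted them yet."""
    if item is None:
        return False
    now = int(time.time()) if now is None else now
    # boto3 returns numbers as Decimal; int() handles both Decimal and int
    return int(item["ExpirationTime"]) > now
```

In a query or scan, the same check can be pushed to DynamoDB with a filter expression such as `ExpirationTime > :now`, although filtered-out items still consume read capacity.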

Implementing Atomic Operations

In serverless architectures, concurrent access to shared state is a common challenge. Without proper synchronization mechanisms, multiple function invocations can attempt to modify the same data simultaneously, leading to data corruption, inconsistencies, and unpredictable behavior. Atomic operations provide a solution by ensuring that operations on data are indivisible and execute as a single, uninterruptible unit, maintaining data integrity in the face of concurrency.

Conditional Writes for Data Integrity

Conditional writes are a fundamental DynamoDB feature for keeping data consistent under concurrency. A write that includes a condition expression succeeds only if that expression, evaluated against the item's current attributes, is true; otherwise DynamoDB rejects it with a `ConditionalCheckFailedException`, preventing conflicting updates. The check and the write happen atomically, which makes conditional writes a key tool for preventing race conditions when multiple processes modify the same data.

Consider a serverless application that maintains a website's view counter. Multiple users accessing the website concurrently trigger Lambda invocations that increment a counter stored in a DynamoDB table. If each invocation reads the counter, computes the new value in application code, and writes it back unconditionally, two invocations can read the same value and one increment is lost. A conditional write makes this read-modify-write safe. The following steps are involved:

- Retrieve the current counter value: Before attempting to update the counter, the application first retrieves the current value from the DynamoDB table. This is essential for building the conditional expression.

- Construct the conditional expression: The conditional expression checks if the current counter value in DynamoDB matches the value retrieved in the previous step.

- Perform the update: The application uses the `UpdateItem` API operation in DynamoDB, providing the conditional expression. The update operation will only proceed if the current counter value in the table matches the value that was retrieved.

- Handle potential failures: If the conditional expression evaluates to false (meaning the counter value has been changed by another concurrent update), the write operation fails. The application can then retry the operation, fetch the latest counter value, and re-attempt the increment with the updated condition.

The following Python code demonstrates how to use condition expressions to increment the `views` attribute:

```python
import time

import boto3
from botocore.exceptions import ClientError

dynamodb = boto3.resource('dynamodb')
table_name = 'WebsiteViewCounter'
table = dynamodb.Table(table_name)


def get_current_views(website_id):
    """Return the current view count, or None if the item has no 'views' attribute yet."""
    response = table.get_item(Key={'website_id': website_id}, ConsistentRead=True)
    return response.get('Item', {}).get('views')


def increment_counter(website_id, max_retries=5):
    for attempt in range(max_retries):
        current_views = get_current_views(website_id)
        if current_views is None:
            # First view: succeed only if no other writer has created the attribute meanwhile
            condition = 'attribute_not_exists(#views)'
            values = {':val': 1}
        else:
            condition = '#views = :current_views'
            values = {':val': 1, ':current_views': current_views}
        try:
            response = table.update_item(
                Key={'website_id': website_id},
                UpdateExpression='ADD #views :val',
                ConditionExpression=condition,
                ExpressionAttributeNames={'#views': 'views'},  # Placeholder avoids reserved-word clashes
                ExpressionAttributeValues=values,
                ReturnValues='UPDATED_NEW',
            )
            return response['Attributes']['views']  # Returns updated view count
        except ClientError as e:
            if e.response['Error']['Code'] != 'ConditionalCheckFailedException':
                print(f"Error updating counter: {e}")
                return None
            # Another process updated the counter first: back off, re-read, and retry
            print("Conditional check failed. Retrying...")
            time.sleep(0.05 * 2 ** attempt)
    return None  # Gave up after max_retries conflicting attempts


# Example usage
website_id = 'mywebsite'
new_view_count = increment_counter(website_id)
if new_view_count is not None:
    print(f"View count updated to: {new_view_count}")
```

In this code:

- The `increment_counter` function attempts to increment the `views` attribute of an item identified by `website_id`.

- The `UpdateExpression` adds 1 to the `views` attribute.

- The `ConditionExpression` checks if the current value of the `views` attribute matches the `current_views` value retrieved before the update.

- `ExpressionAttributeValues` provide the values used in the update and condition expressions.

- If the conditional check fails, the code catches the `ConditionalCheckFailedException` and either retries the operation or handles the conflict, ensuring data integrity.

This approach ensures that no increment is silently lost, even with multiple concurrent requests. If another process has already updated the counter, the conditional write fails, and the function can re-read the most recent value and reapply the increment. Note that for a plain increment like this one, `ADD` by itself is already applied atomically on the server; the read-then-condition pattern matters most when the new value depends on what was read. By utilizing conditional writes, the serverless application maintains data consistency and prevents data corruption. This is especially critical in scenarios with high concurrency, such as handling website traffic, processing financial transactions, or managing user sessions.

Handling Concurrency and Consistency

In serverless applications, where numerous concurrent requests interact with shared resources, managing concurrency and ensuring data consistency in DynamoDB is crucial. Choosing the right consistency model and implementing strategies to handle concurrent updates directly impacts the performance, reliability, and data integrity of the application. This section explores the consistency models offered by DynamoDB, the trade-offs between performance and consistency, and practical methods for handling concurrent updates.

DynamoDB Consistency Models

DynamoDB offers two primary consistency models: eventually consistent reads and strongly consistent reads. Understanding the differences between these models is vital for making informed decisions about data access patterns in serverless applications.

- Eventually Consistent Reads:

By default, DynamoDB provides eventually consistent reads. This means that the read operation might not reflect the most recent write. Data is typically propagated across all replicas within a second. This model prioritizes performance and availability over strict consistency. For instance, if a user updates their profile, an eventually consistent read might show the old profile information for a short period before the updated data is available.

This approach reduces latency and improves the throughput of read operations, making it suitable for scenarios where a slight delay in reflecting updates is acceptable, such as displaying social media feeds or product catalogs.

- Strongly Consistent Reads:

Strongly consistent reads return a result that reflects all writes that received a successful response before the read. This model prioritizes data accuracy over performance. For example, in a banking application, strongly consistent reads are essential when displaying account balances to ensure the information is current.

While they guarantee the latest data, strongly consistent reads may have higher latency and consume more read capacity units (RCUs) than eventually consistent reads, which can affect the application’s scalability and cost. They are also not supported on global secondary indexes. The choice of model depends on the application’s requirements for data accuracy and its acceptable latency.
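In boto3 the consistency model is chosen per request rather than per table. The following minimal sketch assumes a boto3 `Table` resource for a hypothetical `UserProfiles` table whose partition key is `user_id`; it shows that a strongly consistent read is the same `get_item` call with `ConsistentRead=True`.

```python
def get_profile(table, user_id, strongly_consistent=False):
    """Fetch one profile item from a boto3 Table resource.

    DynamoDB reads are eventually consistent unless ConsistentRead=True is passed.
    """
    response = table.get_item(
        Key={'user_id': user_id},  # 'user_id' is a hypothetical partition key name
        ConsistentRead=strongly_consistent,
    )
    return response.get('Item')  # None if no item has this key


# Usage (requires AWS credentials and an existing table):
#   import boto3
#   table = boto3.resource('dynamodb').Table('UserProfiles')
#   feed_view = get_profile(table, 'user-123')                               # eventually consistent
#   balance_view = get_profile(table, 'user-123', strongly_consistent=True)  # strongly consistent
```

Keeping the flag as a parameter lets each call site decide: a profile page can accept slightly stale data, while a balance check can pay for the stronger guarantee.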

Performance and Consistency Trade-offs

The choice between eventually consistent and strongly consistent reads involves a trade-off between performance and consistency. This trade-off is a critical consideration in serverless applications, where performance and cost-efficiency are paramount.

- Performance Implications:

Eventually consistent reads can be served by any replica of the data, so they do not wait on the replica that holds the latest acknowledged writes. Strongly consistent reads must be served from a replica that has all acknowledged writes, so they may have higher latency, and during a network delay or outage DynamoDB may return a server error (HTTP 500) for them instead. The difference matters most under heavy load, where the lower latency of eventually consistent reads can help prevent bottlenecks.

- Cost Implications:

Strongly consistent reads consume twice as many read capacity units (RCUs) as eventually consistent reads: one RCU covers one strongly consistent read per second, or two eventually consistent reads per second, for an item up to 4 KB. For applications with high read volumes, this difference can be substantial, so using eventually consistent reads wherever slightly stale data is acceptable can significantly reduce costs as the application scales.

- Application Design Considerations:

The choice between consistency models depends on the specific requirements of the application. For example, a real-time chat application might prioritize performance over strict consistency, making eventually consistent reads suitable. A financial application, however, would prioritize data accuracy, requiring strongly consistent reads for critical operations like transaction processing. Developers should carefully analyze the application’s data access patterns and performance requirements to make the optimal choice.
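The cost rule above can be made concrete with a small calculation. This sketch covers a single `GetItem` and assumes 4 KB means 4,096 bytes; item sizes are rounded up to the next 4 KB increment, and an eventually consistent read costs half as much as a strongly consistent one.

```python
import math


def read_capacity_units(item_size_bytes: int, strongly_consistent: bool) -> float:
    """RCUs consumed by one GetItem of an item of the given size.

    Reads are metered in 4 KB increments, rounded up (at least one increment).
    A strongly consistent read costs one RCU per increment; an eventually
    consistent read costs half of that.
    """
    increments = max(1, math.ceil(item_size_bytes / 4096))
    return float(increments) if strongly_consistent else increments / 2


# A 6,000-byte item spans two 4 KB increments.
print(read_capacity_units(6000, strongly_consistent=True))   # 2.0
print(read_capacity_units(6000, strongly_consistent=False))  # 1.0
```

At 1,000 reads per second of such 6,000-byte items, that is 2,000 RCUs with strongly consistent reads versus 1,000 RCUs with eventually consistent reads.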

Handling Concurrent Updates

Concurrent updates to the same item in DynamoDB can lead to data inconsistencies if not managed correctly. Implementing strategies to handle these updates is crucial for maintaining data integrity.

- Using Conditional Writes:

Conditional writes are a powerful mechanism for handling concurrent updates. They let you specify a condition that must be met before the write operation is executed, such as a check on an attribute’s existing value. For example, to update a counter, you can use a conditional write that only updates the counter if its current value matches the expected value.

This prevents one writer from silently overwriting another writer’s change, which is known as the “lost update” problem.

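Consider the following example in Python (boto3):

```python
import boto3
from botocore.exceptions import ClientError

table = boto3.resource('dynamodb').Table('Counters')  # Hypothetical table with partition key 'item_id'
item_id = 'page-1'

# Before the update: read the current value of the counter
current_value = table.get_item(Key={'item_id': item_id})['Item']['counter']

try:
    # Write current_value + 1 only if the stored counter still equals the value we read
    table.update_item(
        Key={'item_id': item_id},
        UpdateExpression='SET #c = :new_value',
        ConditionExpression='#c = :expected_value',
        ExpressionAttributeNames={'#c': 'counter'},
        ExpressionAttributeValues={':new_value': current_value + 1, ':expected_value': current_value},
    )
except ClientError as e:
    if e.response['Error']['Code'] != 'ConditionalCheckFailedException':
        raise
    # Handle the condition failure (e.g., retry or log)
    print('Counter changed concurrently; retry or log the conflict')
```

- Implementing Error Handling and Retries: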

When a conditional write fails because another concurrent update modified the item, DynamoDB rejects it with a `ConditionalCheckFailedException`. Implement robust error handling and retry logic for these failures, re-reading the item before each attempt so that the condition reflects the latest state. Exponential backoff is a common retry strategy: the wait time between retries grows exponentially, which helps avoid overwhelming the system during periods of high contention. In a serverless environment, Lambda can also retry failed invocations automatically for asynchronous and event-source-driven invocations, further improving resilience.

For instance, consider the following example:

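```python
import time

from botocore.exceptions import ClientError


def conditional_write_with_retries(perform_conditional_write, max_attempts=4, base_delay=1.0):
    """Call perform_conditional_write() (which should re-read the item and rebuild its
    condition each time) until it succeeds or max_attempts is reached."""
    for attempt in range(max_attempts):
        try:
            return perform_conditional_write()  # Perform conditional write
        except ClientError as e:
            if e.response['Error']['Code'] != 'ConditionalCheckFailedException':
                raise  # Other errors (e.g., permissions, validation) propagate to the caller
            # Condition failed: wait 1 s, 2 s, 4 s, ... before the next attempt
            if attempt < max_attempts - 1:
                time.sleep(base_delay * 2 ** attempt)
    raise RuntimeError('Conditional write still failing after retries')
```

- Using Atomic Counters: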

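DynamoDB supports atomic counters: an `UpdateItem` call that uses `ADD` (or `SET counter = counter + :n`) to increment or decrement a numeric attribute on the server, without a prior read. Because each such update is applied atomically, concurrent increments are never lost, so simple increment or decrement operations need no conditional write or conflict retry. This makes atomic counters the preferred approach for counters, tallies, and other numeric values that are frequently modified. One caveat: the update is not idempotent, so if a request is retried after a timeout whose original attempt actually succeeded, the counter may be incremented twice.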

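For example, to increment a counter with boto3 (assuming `table` is a `Table` resource and `item_id` holds the item’s key):

```python
table.update_item(Key={'item_id': item_id}, UpdateExpression='ADD #c :inc',
                  ExpressionAttributeNames={'#c': 'counter'}, ExpressionAttributeValues={':inc': 1})
```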

- Optimistic Locking with Versioning:

Another approach is optimistic locking. This involves adding a version attribute to your items and incrementing it with each update. Every update carries a condition expression requiring the stored version to equal the version the writer originally read. If it does, the item is updated and the version incremented in the same write. If it doesn’t, another update has occurred in the meantime: the write fails with a `ConditionalCheckFailedException`, and you handle the conflict, typically by re-reading the item and retrying.

This approach provides a way to detect and resolve concurrent updates.

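Consider this example in Python (boto3):

```python
import boto3
from botocore.exceptions import ClientError

table = boto3.resource('dynamodb').Table('Documents')  # Hypothetical table keyed by 'item_id'
item_id, new_data = 'doc-1', {'title': 'Updated title'}

# Get the item and its current version
item = table.get_item(Key={'item_id': item_id}, ConsistentRead=True)['Item']
expected_version = item['version']

try:
    # Succeeds only if no other writer has bumped the version since our read
    table.update_item(
        Key={'item_id': item_id},
        UpdateExpression='SET #d = :new_data, #v = :new_version',
        ConditionExpression='#v = :expected_version',
        ExpressionAttributeNames={'#d': 'data', '#v': 'version'},  # 'data' is a reserved word
        ExpressionAttributeValues={
            ':new_data': new_data,
            ':new_version': expected_version + 1,
            ':expected_version': expected_version,
        },
    )
except ClientError as e:
    if e.response['Error']['Code'] != 'ConditionalCheckFailedException':
        raise
    # Handle the condition failure (e.g., re-read and retry, or log)
    print('Version conflict: re-read the item and retry')
```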
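The exponential retry schedule described above (1 second, 2 seconds, 4 seconds, and so on) can be computed by a small helper. This is a minimal sketch: the helper name, the upper cap, and the optional "full jitter" variant, which randomizes each delay so that many conflicting Lambda invocations do not retry in lockstep, are illustrative additions rather than anything DynamoDB prescribes.

```python
import random


def backoff_delays(base=1.0, attempts=5, cap=20.0, jitter=False, rng=random.random):
    """Seconds to wait before each retry: base * 2**n, never more than `cap`.

    With jitter=True, each delay is drawn uniformly from [0, capped delay)
    ("full jitter"), spreading out retries from many concurrent callers.
    """
    delays = []
    for n in range(attempts):
        delay = min(cap, base * 2 ** n)
        delays.append(delay * rng() if jitter else delay)
    return delays


print(backoff_delays(attempts=4))  # [1.0, 2.0, 4.0, 8.0]
```

A retry loop would sleep for each value in turn, re-reading the item and rebuilding the condition before every attempt.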

Advanced Techniques

DynamoDB offers advanced features that significantly enhance its capabilities for serverless state management. These techniques are crucial for building robust, scalable, and reliable applications, particularly when dealing with complex data relationships and performance optimization. Understanding and implementing these features allows developers to overcome limitations and maximize the potential of DynamoDB.

Transactions and Atomic Operations

Transactions in DynamoDB allow developers to perform multiple operations as a single, atomic unit. This ensures that either all operations within the transaction succeed, or none of them do, maintaining data consistency and integrity. This is particularly important in scenarios where multiple updates are interdependent.

To understand the benefits of transactions, consider a simple e-commerce application. When a customer places an order, several actions need to occur atomically: decrementing the inventory count for the purchased items, creating an order record, and updating the customer’s order history.

Without transactions, there is a risk of partial failures. For instance, the inventory could be decremented, but the order record might fail to be created, leading to inconsistencies. Transactions address these challenges by providing atomicity, consistency, isolation, and durability (ACID) properties, ensuring data integrity.

DynamoDB transactions are implemented using the `TransactWriteItems` and `TransactGetItems` APIs. `TransactWriteItems` groups multiple write actions (Put, Update, Delete, and ConditionCheck) into a single all-or-nothing request, and `TransactGetItems` reads multiple items atomically. Here’s an example demonstrating a transaction in Python using the AWS SDK for Python (boto3):

```python
import uuid
from datetime import datetime, timezone

import boto3
from botocore.exceptions import ClientError

dynamodb = boto3.client('dynamodb')


def process_order(customer_id, item_id, quantity):
    try:
        response = dynamodb.transact_write_items(
            TransactItems=[
                {
                    'Update': {
                        'TableName': 'Inventory',
                        'Key': {'item_id': {'S': item_id}},
                        'UpdateExpression': 'SET quantity = quantity - :val',
                        'ConditionExpression': 'quantity >= :val',  # Prevent overselling
                        'ExpressionAttributeValues': {':val': {'N': str(quantity)}},
                        'ReturnValuesOnConditionCheckFailure': 'ALL_OLD',
                    }
                },
                {
                    'Put': {
                        'TableName': 'Orders',
                        'Item': {
                            'order_id': {'S': str(uuid.uuid4())},  # Generate a unique order ID
                            'customer_id': {'S': customer_id},
                            'item_id': {'S': item_id},
                            'quantity': {'N': str(quantity)},
                            'order_date': {'S': datetime.now(timezone.utc).isoformat()},
                        },
                    }
                },
                {
                    'Update': {
                        'TableName': 'Customers',
                        'Key': {'customer_id': {'S': customer_id}},
                        'UpdateExpression': 'ADD order_count :val',
                        'ExpressionAttributeValues': {':val': {'N': '1'}},
                    }
                },
            ]
        )
        print("Order processed successfully:", response)
        return True
    except ClientError as e:
        if e.response['Error']['Code'] == 'TransactionCanceledException':
            print("Transaction failed:", e.response['Error']['Message'])
            # Analyze the cancellation reasons (one per action, in order) to understand the failure
            for reason in e.response.get('CancellationReasons', []):
                print(f"Cancellation reason: {reason}")
            return False
        print("An unexpected error occurred:", e)
        return False
```

In this example:

- The code attempts to update the `Inventory` table to decrement the quantity, create a new order in the `Orders` table, and increment the `order_count` for the customer in the `Customers` table, all within a single transaction.

- The `ConditionExpression` on the `Inventory` update ensures that the transaction only proceeds if there’s enough inventory. This prevents overselling.

- If any operation within the transaction fails (e.g., insufficient inventory), the entire transaction is rolled back, and no changes are committed. The `TransactionCanceledException` provides detailed information about the failure, including the reasons for the cancellation, which is useful for debugging.

Transactions are also useful in social media applications. For instance, consider the scenario of a user liking a post. The action requires updating the post’s like count and also updating the user’s profile to reflect the like. Both updates must occur atomically.
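A minimal sketch of the like scenario follows, assuming hypothetical `Posts` (key `post_id`) and `Users` (key `user_id`) tables and a `liked_posts` string-set attribute on the user item. The function only builds the `TransactItems` list, so it can be inspected without AWS access; it would be passed to `boto3.client('dynamodb').transact_write_items(TransactItems=...)`. The condition on the user update cancels the whole transaction if the user already liked the post, so the counter cannot be bumped twice.

```python
def build_like_transaction(user_id: str, post_id: str) -> list:
    """TransactItems that bump a post's like count and record the like on the user, atomically."""
    return [
        {
            'Update': {
                'TableName': 'Posts',
                'Key': {'post_id': {'S': post_id}},
                'UpdateExpression': 'ADD like_count :one',
                'ConditionExpression': 'attribute_exists(post_id)',  # Don't create phantom posts
                'ExpressionAttributeValues': {':one': {'N': '1'}},
            }
        },
        {
            'Update': {
                'TableName': 'Users',
                'Key': {'user_id': {'S': user_id}},
                'UpdateExpression': 'ADD liked_posts :post_set',
                # Cancels the whole transaction if this user already liked the post
                'ConditionExpression': 'attribute_not_exists(liked_posts) OR NOT contains(liked_posts, :post_id)',
                'ExpressionAttributeValues': {
                    ':post_set': {'SS': [post_id]},
                    ':post_id': {'S': post_id},
                },
            }
        },
    ]
```

Storing likes in a set on the user item keeps the example short; for users with very many likes, a separate likes table avoids DynamoDB's 400 KB item size limit.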

Batch Operations

Batch operations in DynamoDB let you process multiple items in a single API call. This reduces the number of round trips to the DynamoDB service, which improves throughput and latency when dealing with a large number of items; each item still consumes the same read or write capacity it would as an individual request. DynamoDB provides two main batch operations: `BatchGetItem` for retrieving multiple items and `BatchWriteItem` for writing (Put or Delete) multiple items. Unlike transactions, batch operations are not atomic: individual requests can succeed or fail independently, and any that DynamoDB could not process are returned as `UnprocessedKeys` or `UnprocessedItems`.

The `BatchGetItem` operation retrieves multiple items from one or more tables: up to 100 items per call, with a maximum total size of 16 MB. The `BatchWriteItem` operation can write up to 25 items per call (Put or Delete requests, across one or more tables), with a maximum total size of 16 MB; it does not support updates. Here’s an example using `BatchGetItem` to retrieve multiple items from a table:

```python
import time

import boto3
from botocore.exceptions import ClientError

dynamodb = boto3.client('dynamodb')


def get_multiple_items(table_name, keys):
    items = []
    request = {table_name: {'Keys': keys, 'ConsistentRead': True}}  # ConsistentRead is optional
    try:
        while request:
            response = dynamodb.batch_get_item(RequestItems=request)
            items.extend(response.get('Responses', {}).get(table_name, []))
            # Keys DynamoDB could not process (e.g., due to throttling) come back in
            # UnprocessedKeys; request them again after a short pause.
            request = response.get('UnprocessedKeys') or {}
            if request:
                time.sleep(0.1)
    except ClientError as e:
        print(f"Error retrieving items: {e}")
    return items


# Example usage:
keys_to_get = [
    {'id': {'S': 'item1'}},
    {'id': {'S': 'item2'}},
    {'id': {'S': 'item3'}},
]
items = get_multiple_items('MyTable', keys_to_get)
print(items)
```

In this example:

- The `get_multiple_items` function retrieves multiple items from the `MyTable` table using the provided keys.

- The `RequestItems` parameter specifies the table name, the keys of the items to retrieve, and the read consistency setting.

- The function returns a list of the retrieved items.

Here’s an example using `BatchWriteItem` to write multiple items to a table:

```python
import boto3
from botocore.exceptions import ClientError

dynamodb = boto3.client('dynamodb')


def write_multiple_items(table_name, items):
    try:
        response = dynamodb.batch_write_item(
            RequestItems={
                table_name: [{'PutRequest': {'Item': item}} for item in items]
            }
        )
        return response  # Any entries in response['UnprocessedItems'] must be resent
    except ClientError as e:
        print(f"Error writing items: {e}")
        return None


# Example usage:
items_to_write = [
    {'id': {'S': 'item4'}, 'name': {'S': 'Product 4'}},
    {'id': {'S': 'item5'}, 'name': {'S': 'Product 5'}},
]
result = write_multiple_items('MyTable', items_to_write)
if result is not None and not result.get('UnprocessedItems'):
    print("Items written successfully.")
```

In this example:

- The `write_multiple_items` function writes multiple items to the `MyTable` table using the `BatchWriteItem` operation.

- The `RequestItems` parameter includes a list of `PutRequest` objects, each containing an item to be written.

- The function returns the response from the DynamoDB service.

Batch operations offer significant performance advantages, especially in scenarios such as:

- Data Migration: When migrating data from another data source to DynamoDB, batch operations can significantly speed up the process.

- Bulk Data Loading: Loading a large dataset into DynamoDB can be optimized using batch operations.

- Data Aggregation: Retrieving multiple related items for aggregation and reporting.
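Because `BatchWriteItem` accepts at most 25 put or delete requests per call, bulk loads have to be split into chunks. This minimal sketch (helper names are hypothetical) builds one `RequestItems` payload per chunk of low-level items; each payload would be passed to `boto3.client('dynamodb').batch_write_item(RequestItems=payload)`, with any `UnprocessedItems` resent.

```python
from typing import Iterator, List


def chunked(items: List[dict], size: int = 25) -> Iterator[List[dict]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_put_batches(table_name: str, items: List[dict]) -> List[dict]:
    """One RequestItems payload per chunk of at most 25 PutRequests."""
    return [
        {table_name: [{'PutRequest': {'Item': item}} for item in batch]}
        for batch in chunked(items)
    ]


items = [{'id': {'S': f'item{i}'}} for i in range(60)]
batches = build_put_batches('MyTable', items)
print([len(b['MyTable']) for b in batches])  # [25, 25, 10]
```

With the boto3 resource API, `Table.batch_writer()` performs this chunking and resends unprocessed items automatically, which is usually the simpler choice for bulk loading.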

Scenarios Where Transactions Are Essential

Transactions are essential for maintaining data integrity in various scenarios, particularly when multiple operations are interdependent or when consistency is critical. Consider these examples:

- Financial Transactions: In banking or financial applications, transactions are crucial for ensuring that account balances are updated correctly and that funds are transferred atomically. For example, when transferring money from one account to another, both accounts must be updated, and the transaction must succeed or fail as a whole to prevent data corruption.

- Inventory Management: In e-commerce or supply chain management, transactions are vital for managing inventory levels. When an order is placed, the inventory must be reduced, and the order must be recorded. Transactions ensure that both operations succeed together or are rolled back to maintain data consistency.

- Social Media Applications: In social media, transactions are required when users interact with content, such as liking a post, commenting, or following another user. These actions require updating multiple data points (post likes, user profile, follower lists) atomically.

- Gaming Applications: In gaming, transactions are essential for managing player inventories, in-game currency, and player stats. When a player purchases an item or receives a reward, the player’s inventory and currency must be updated consistently.

- Complex Data Updates: In any application where multiple related data entities must be updated simultaneously, transactions are crucial. For example, when updating a user profile, the profile information, settings, and related data might need to be updated atomically.

Without transactions, these scenarios are susceptible to partial failures and data inconsistencies. Transactions provide atomicity, consistency, isolation, and durability (ACID) properties, thereby safeguarding data integrity and preventing data corruption.
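The money-transfer case can be sketched as two conditional updates in one transaction. Assumptions: a hypothetical `Accounts` table keyed by `account_id` with a numeric `balance` attribute; the function only builds the `TransactItems` list for `boto3.client('dynamodb').transact_write_items(TransactItems=...)`. The debit's condition rejects overdrafts, and the same-account guard exists because a single DynamoDB transaction cannot contain two operations on the same item.

```python
from decimal import Decimal


def build_transfer_transaction(from_account: str, to_account: str, amount: Decimal) -> list:
    """TransactItems that move `amount` between two accounts, or do nothing at all."""
    if amount <= 0:
        raise ValueError('amount must be positive')
    if from_account == to_account:
        # A transaction cannot include multiple operations on one item
        raise ValueError('source and destination accounts must differ')
    amount_value = {':amount': {'N': str(amount)}}
    return [
        {'Update': {
            'TableName': 'Accounts',
            'Key': {'account_id': {'S': from_account}},
            'UpdateExpression': 'SET balance = balance - :amount',
            'ConditionExpression': 'balance >= :amount',  # No overdraft
            'ExpressionAttributeValues': dict(amount_value),
        }},
        {'Update': {
            'TableName': 'Accounts',
            'Key': {'account_id': {'S': to_account}},
            'UpdateExpression': 'SET balance = balance + :amount',
            'ConditionExpression': 'attribute_exists(account_id)',  # Destination must exist
            'ExpressionAttributeValues': dict(amount_value),
        }},
    ]
```

If either condition fails, DynamoDB cancels the transaction with a `TransactionCanceledException` and neither balance changes.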

Monitoring and Troubleshooting DynamoDB Usage

Effectively monitoring and troubleshooting DynamoDB is crucial for maintaining application performance, ensuring data integrity, and optimizing costs. This section details the key metrics available for monitoring, provides guidance on identifying and resolving common issues, and outlines a strategy for managing provisioned capacity.

CloudWatch Metrics for DynamoDB Monitoring

Understanding the available CloudWatch metrics is fundamental to proactively monitoring DynamoDB performance and identifying potential bottlenecks. These metrics provide insights into various aspects of table operations, allowing for informed decisions regarding capacity planning and optimization.

- ConsumedReadCapacityUnits and ConsumedWriteCapacityUnits: These metrics track the number of read and write capacity units consumed by your table. High values, especially when approaching provisioned limits, indicate potential performance issues. Monitoring these metrics allows you to determine if your table is under-provisioned or if your application’s read/write patterns are changing.

- ProvisionedReadCapacityUnits and ProvisionedWriteCapacityUnits: These metrics show the provisioned read and write capacity units for your table. Comparing these values with the consumed capacity units helps determine if you are adequately provisioned. Maintaining a balance between cost and performance is essential; under-provisioning can lead to throttling, while over-provisioning leads to unnecessary costs.

- ThrottledRequests: This metric counts requests that DynamoDB throttled because they exceeded the provisioned throughput of a table or index. A high number of throttled requests directly impacts application performance, increasing latency and potentially frustrating users. This metric is a key indicator of under-provisioning.

- SuccessfulRequestLatency: Measures the time taken to process successful requests. Higher latency values can signal performance issues, which can stem from factors such as high contention, inefficient queries, or insufficient capacity. This metric is crucial for identifying performance degradation.

- ReturnedItemCount: The number of items returned by Query, Scan, and PartiQL select operations. This metric can be used to understand data access patterns and to identify inefficient queries that are retrieving unnecessary data.

- SystemErrors: The number of requests that resulted in an HTTP 500 (internal server) error from DynamoDB. Occasional errors are usually absorbed by the AWS SDKs’ built-in retries, but a sustained increase can indicate an issue within the service itself that may warrant checking the AWS Health Dashboard or contacting AWS Support. This is a critical metric for assessing the overall health of your DynamoDB tables.

- UserErrors: This metric counts requests that resulted in an HTTP 400 error, such as malformed requests or missing permissions. It is aggregated across the AWS account and Region rather than reported per table. Analyzing user errors can provide insights into application behavior and potential client-side problems.
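When comparing consumed and provisioned capacity, note that CloudWatch reports `ConsumedReadCapacityUnits` and `ConsumedWriteCapacityUnits` as totals per period, so the `Sum` statistic divided by the period length in seconds gives the average units consumed per second. The helper names below are hypothetical; the sketch only does that arithmetic.

```python
def average_consumed_per_second(consumed_sum: float, period_seconds: int) -> float:
    """Average capacity units per second from a CloudWatch Sum over one period."""
    return consumed_sum / period_seconds


def utilization_percent(consumed_sum: float, period_seconds: int, provisioned_units: float) -> float:
    """Average consumed capacity as a percentage of provisioned capacity."""
    return 100.0 * average_consumed_per_second(consumed_sum, period_seconds) / provisioned_units


# A 5-minute Sum of 24,000 RCUs against 100 provisioned RCUs:
print(utilization_percent(24000, 300, 100))  # 80.0
```

Because this is an average over the period, short spikes can still cause throttling even when utilization looks moderate, which is why `ThrottledRequests` is worth watching alongside it.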

Identifying and Resolving Common DynamoDB-Related Issues

Proactive troubleshooting requires a systematic approach to identify and resolve issues. This section provides guidance on addressing common problems encountered when using DynamoDB.

- Throttling: The most common issue is throttling, caused by exceeding provisioned read or write capacity. To resolve this:

  - Increase Provisioned Capacity: The simplest solution is to increase the provisioned read and/or write capacity units for the table. This is often a quick fix but increases costs.

  - Optimize Queries: Review and optimize queries to reduce the number of capacity units consumed per request. This can involve using efficient query patterns and indexes, and avoiding full table scans.

  - Implement Backoff and Retry Logic: Implement retry mechanisms with exponential backoff in your application code to handle throttled requests gracefully. This can help mitigate the impact of throttling on user experience.

  - Use Auto Scaling: Consider using DynamoDB Auto Scaling to automatically adjust provisioned capacity based on traffic patterns. This can help to maintain performance while minimizing costs.

- High Latency: High latency can be caused by a variety of factors. To resolve this:

  - Insufficient Capacity: Insufficient provisioned capacity leads to throttled and retried requests, which increases latency. Increase the provisioned capacity as needed.

  - Inefficient Queries: Inefficient queries, such as those that scan the entire table or retrieve excessive data, can increase latency. Optimize queries to improve efficiency.

  - Data Contention: Heavy traffic on a few frequently accessed items (hot keys) can lead to increased latency and throttling. Consider spreading writes across several partition key values (write sharding), and use optimistic locking to detect conflicting updates rather than letting them silently overwrite each other.

  - Network Issues: Network latency between your application and DynamoDB can also contribute to increased latency. Ensure your application runs in the same AWS Region as your DynamoDB table, and reuse SDK clients and their HTTP connections across Lambda invocations by creating them outside the handler.

- Data Consistency Issues: Data consistency problems can arise due to various factors. To resolve this:

  - Choose the Right Consistency Model: Understand the trade-offs between eventually consistent and strongly consistent reads. Choose the appropriate consistency model based on your application’s requirements.

  - Use Transactions: Use DynamoDB transactions for operations that require atomicity and consistency across multiple items or tables.

  - Implement Versioning: Add a version attribute and check it with conditional writes (optimistic locking) so that conflicting updates are detected instead of silently overwriting each other.

- Cost Overruns: DynamoDB costs can become a concern. To control them:

  - Optimize Provisioned Capacity: Carefully provision capacity to meet your application’s needs without over-provisioning. Regularly monitor capacity utilization and adjust as needed.

  - Use On-Demand Mode: Consider using DynamoDB on-demand mode for workloads with unpredictable traffic patterns. This eliminates the need to provision capacity; you pay per request instead.

  - Optimize Queries: Optimize queries to reduce the number of read and write capacity units consumed.

  - Use Reserved Capacity (for Provisioned Mode): If you have a predictable workload, consider purchasing reserved capacity to reduce costs.
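Write sharding for a hot counter can be sketched as follows. This is an illustrative technique built from ordinary DynamoDB operations, not a DynamoDB API: the `#n` key-suffix scheme, the shard count, and the helper names are hypothetical. Each writer applies its increment (for example `ADD views :one`) to one randomly chosen shard item, spreading load across partition key values, and a reader fetches every shard (for example with `BatchGetItem`) and sums them.

```python
import random


def sharded_key(base_key: str, shard_count: int, rng=random.randrange) -> str:
    """Pick one of `shard_count` partition key values for a write, e.g. 'page-1#3'."""
    return f"{base_key}#{rng(shard_count)}"


def all_shard_keys(base_key: str, shard_count: int) -> list:
    """Every key a reader must fetch to rebuild the total."""
    return [f"{base_key}#{n}" for n in range(shard_count)]


def total_from_shards(shard_items: list, attribute: str = 'views') -> int:
    """Sum the per-shard counters; shards without the attribute count as zero."""
    return sum(int(item.get(attribute, 0)) for item in shard_items)


print(all_shard_keys('page-1', 3))                             # ['page-1#0', 'page-1#1', 'page-1#2']
print(total_from_shards([{'views': 5}, {'views': 7}, {}]))     # 12
```

The trade-off is read cost: a total now requires reading every shard, so the shard count should be only as large as the write rate demands.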

Analyzing and Adjusting DynamoDB Provisioned Capacity

Proper capacity planning is essential for optimizing performance and cost-efficiency. This section provides a guide for analyzing DynamoDB provisioned capacity and adjusting it as needed.

- Monitor Capacity Utilization: Continuously monitor the `ConsumedReadCapacityUnits` and `ConsumedWriteCapacityUnits` metrics in CloudWatch. Analyze the percentage of provisioned capacity being used. A high utilization rate (e.g., consistently above 70-80%) indicates a need for increased capacity.

- Analyze Traffic Patterns: Identify peak traffic times and patterns. Use this information to determine the appropriate provisioned capacity for your table. If traffic is highly variable, consider using DynamoDB Auto Scaling or On-Demand mode.

- Use CloudWatch Alarms: Set up CloudWatch alarms to alert you when capacity utilization exceeds predefined thresholds. This enables you to proactively address potential performance issues. Configure alarms for `ConsumedReadCapacityUnits` and `ConsumedWriteCapacityUnits` exceeding a certain percentage of the provisioned capacity, and for `ThrottledRequests`.

- Adjust Provisioned Capacity: Adjust the provisioned read and write capacity units based on your analysis of capacity utilization and traffic patterns. Use the AWS Management Console, the AWS CLI, or the AWS SDK to make these adjustments.

  - Scaling Up: When capacity utilization is consistently high, increase the provisioned capacity. Start with a small increase and monitor the results.

  - Scaling Down: If capacity utilization is consistently low, reduce the provisioned capacity to optimize costs. Be cautious when scaling down; ensure that you have sufficient capacity to handle potential traffic spikes.

- Leverage DynamoDB Auto Scaling: Implement DynamoDB Auto Scaling to automatically adjust provisioned capacity based on traffic patterns. This can help to maintain performance while minimizing costs. Configure Auto Scaling with target utilization metrics and scaling policies.

- Consider On-Demand Mode: For workloads with unpredictable traffic patterns, consider using DynamoDB On-Demand mode. This eliminates the need to provision capacity and allows you to pay only for what you use. Evaluate your application’s traffic patterns to determine if On-Demand mode is a cost-effective option.

- Example: Consider a table that stores session data for a web application. Initially, the table is provisioned with 100 read capacity units and 100 write capacity units. After a week of monitoring, CloudWatch metrics reveal that the table is consistently consuming 80 read capacity units and 60 write capacity units, with no throttling. This suggests that the table is adequately provisioned.

However, during a flash sale event, the application experiences a significant increase in traffic. The CloudWatch metrics show that the table is now consuming 120 read capacity units and 150 write capacity units, and throttling is occurring. To resolve this, the provisioned read capacity units are increased to 150 and the write capacity units are increased to 200. This increase in capacity helps to accommodate the surge in traffic and prevent throttling.

After the event, the capacity can be scaled down to optimize costs.
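DynamoDB Auto Scaling is configured through the Application Auto Scaling service. The following sketch builds the two requests a target-tracking setup needs; the table name, capacity bounds, and 70% target are illustrative assumptions, and the helper name is hypothetical. The dictionaries are meant to be passed as `client.register_scalable_target(**target)` and `client.put_scaling_policy(**policy)` on `boto3.client('application-autoscaling')`.

```python
def build_autoscaling_requests(table_name, capacity='Read', min_units=5, max_units=200,
                               target_percent=70.0):
    """Request kwargs for target-tracking auto scaling of a table's read or write capacity."""
    if capacity not in ('Read', 'Write'):
        raise ValueError("capacity must be 'Read' or 'Write'")
    resource_id = f'table/{table_name}'
    dimension = f'dynamodb:table:{capacity}CapacityUnits'
    target = {
        'ServiceNamespace': 'dynamodb',
        'ResourceId': resource_id,
        'ScalableDimension': dimension,
        'MinCapacity': min_units,
        'MaxCapacity': max_units,
    }
    policy = {
        'PolicyName': f'{table_name}-{capacity.lower()}-target-tracking',
        'ServiceNamespace': 'dynamodb',
        'ResourceId': resource_id,
        'ScalableDimension': dimension,
        'PolicyType': 'TargetTrackingScaling',
        'TargetTrackingScalingPolicyConfiguration': {
            'TargetValue': target_percent,  # Keep utilization near this percentage
            'PredefinedMetricSpecification': {
                'PredefinedMetricType': f'DynamoDB{capacity}CapacityUtilization',
            },
        },
    }
    return target, policy
```

For the session table in the example above, configuring both read and write dimensions this way would let capacity rise during a flash sale and fall afterwards without manual adjustments.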

Example Use Cases

DynamoDB’s flexibility and scalability make it a powerful tool for state management across various domains. Its ability to handle high-volume, low-latency operations is particularly advantageous in applications where data consistency and responsiveness are critical. This section explores specific use cases in e-commerce, gaming, and the Internet of Things (IoT), highlighting how DynamoDB can effectively manage state in each context.

E-commerce Applications

E-commerce platforms require robust state management to handle user sessions, shopping carts, order processing, and product catalogs. DynamoDB’s characteristics make it a suitable choice for several critical functions within an e-commerce environment.

For managing shopping carts, DynamoDB offers several advantages:

- Scalability: As the number of concurrent users and shopping cart items grows, DynamoDB can scale to accommodate the increased load, automatically in on-demand mode or with Auto Scaling in provisioned mode.

- Availability: DynamoDB’s multi-AZ replication ensures high availability, minimizing downtime and ensuring users can access their shopping carts.

- Data Consistency: When carts are read with strongly consistent reads, users always see the most up-to-date state of their shopping carts.

DynamoDB can be utilized to store and manage various e-commerce data, including:

- Shopping Cart Data: This includes the items added to a cart, quantities, prices, and any associated discounts. Each cart can be represented as an item in a DynamoDB table, with the user’s ID as the primary key.

- User Session Data: Information about logged-in users, such as their session ID, authentication tokens, and preferences, can be stored to maintain user context across requests.

- Order Data: Once a user completes a purchase, the order details, including items purchased, shipping information, and payment status, can be stored in a DynamoDB table.

- Product Catalogs: Although more commonly handled by search engines, DynamoDB can be used for product catalog data, providing fast retrieval for product details.

Gaming Applications

The gaming industry demands high performance and low latency for a seamless player experience. DynamoDB’s capabilities are well-suited for managing player profiles, game state, and leaderboards.

DynamoDB’s benefits for gaming include:

- Low Latency: DynamoDB’s performance allows for real-time updates to player profiles and game state, crucial for a responsive gaming experience.

- Scalability: Games can scale to accommodate millions of players without performance degradation.

- Data Durability: DynamoDB ensures that player progress and game data are protected from data loss.

Specific use cases in gaming include:

- Player Profiles: Store player statistics, achievements, inventory, and preferences. The player’s unique ID serves as the primary key, allowing for quick retrieval of profile data.

- Game State: Manage the state of a game, including the positions of players, the status of game objects, and the current game level.

- Leaderboards: Implement leaderboards to track player rankings and display them in real-time. DynamoDB’s global secondary indexes can be used to efficiently sort and retrieve leaderboard data.

- Session Management: Manage player sessions, tracking when players log in and out, and storing session-related information.
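For the leaderboard case, a query against a GSI whose sort key is the score can return the top entries directly. The sketch below only builds low-level `Query` parameters; it assumes a hypothetical table `PlayerScores` with a hypothetical GSI `GameScoreIndex` (partition key `game_id`, numeric sort key `score`).

```python
def top_scores_query(game_id, limit=10):
    """Build low-level Query parameters for a leaderboard GSI.

    Assumes a hypothetical table "PlayerScores" with a GSI "GameScoreIndex"
    whose partition key is game_id (string) and sort key is score (number).
    """
    return {
        "TableName": "PlayerScores",
        "IndexName": "GameScoreIndex",
        "KeyConditionExpression": "game_id = :g",
        "ExpressionAttributeValues": {":g": {"S": game_id}},
        # Descending order on the sort key (score) puts the highest scores first.
        "ScanIndexForward": False,
        "Limit": limit,
    }


params = top_scores_query("space-race", limit=5)
# With boto3: boto3.client("dynamodb").query(**params)
```

GSI reads are always eventually consistent, so a score written a moment ago may not appear immediately. Because every score for one game shares a single index partition key value, a very popular game can also concentrate traffic on that key.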

IoT Scenarios

The Internet of Things (IoT) generates massive amounts of data from connected devices. DynamoDB can be utilized to store device data, configuration, and sensor readings. DynamoDB’s relevance in IoT includes:

- Ingestion of Time-Series Data: Efficiently store time-series data from IoT devices, such as sensor readings, device health metrics, and operational status.

- Configuration Management: Manage device configurations, including firmware versions, settings, and other device-specific parameters.

- Scalability and Availability: Accommodate the large volume of data generated by numerous connected devices.

Illustrative applications include:

- Sensor Data Storage: For example, a smart home system can use DynamoDB to store temperature readings, humidity levels, and energy consumption data from various sensors. Each reading could be stored as an item in a DynamoDB table, with the device ID as the partition key and the timestamp as the sort key.

- Device Configuration: Store the configuration settings for each device. These settings can be retrieved and updated as needed, allowing for remote management and control of IoT devices.

- Device State Management: Track the state of devices, such as their online/offline status, battery level, and any error conditions.
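A sensor reading keyed by device ID and timestamp might look like the item below, in the low-level attribute-value format. This is a sketch under assumed names (`device_id`, `ts`, `temperature_c` are hypothetical). It stores the timestamp as a fixed-width ISO 8601 UTC string because DynamoDB orders string sort keys by their UTF-8 bytes, so such strings sort chronologically within each device.

```python
from datetime import datetime, timezone


def sensor_reading_item(device_id, taken_at, temperature_c):
    """Build a low-level PutItem item for one sensor reading.

    Hypothetical schema: device_id is the partition key and ts (an ISO 8601
    UTC string) is the sort key, so readings sort chronologically per device.
    """
    ts = taken_at.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return {
        "device_id": {"S": device_id},
        "ts": {"S": ts},
        # The low-level format transmits numbers as strings.
        "temperature_c": {"N": str(temperature_c)},
    }


early = sensor_reading_item(
    "thermo-7", datetime(2024, 1, 9, 23, 0, tzinfo=timezone.utc), 21.5)
late = sensor_reading_item(
    "thermo-7", datetime(2024, 1, 10, 8, 30, tzinfo=timezone.utc), 19.0)
# With boto3: boto3.client("dynamodb").put_item(TableName="SensorReadings", Item=early)
```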

Performance Optimization Strategies

Optimizing DynamoDB performance is critical for serverless applications, directly impacting application responsiveness, cost efficiency, and scalability. This section outlines key strategies for maximizing DynamoDB’s efficiency, ensuring optimal performance and minimizing potential bottlenecks.

Choosing the Right Primary Key and Using Indexes Effectively

The selection of the primary key and the strategic use of indexes are fundamental to DynamoDB performance. These choices dictate how data is stored and retrieved, significantly influencing read and write speeds.

The primary key design directly impacts query efficiency. A well-designed primary key distributes data evenly across partitions, avoiding “hot” partitions that can lead to throttling. Composite primary keys, which combine a partition key and a sort key, offer flexibility in data organization and querying.

Partition Key Considerations

The partition key determines the partition where the item is stored. It is essential to choose a partition key that distributes data evenly, because uneven distribution can lead to throttling on heavily accessed partitions.

Examples of effective partition keys include high-cardinality values such as user IDs or account numbers, depending on the access patterns.

Avoid keys that concentrate traffic, such as a timestamp value (for example, the current date) that receives every write made during a short period, or a single user ID that owns a large share of the dataset.
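When a naturally hot key such as the current date cannot be avoided, a commonly used mitigation is write sharding: appending a suffix so that writes for one logical key spread over several partition key values. The sketch below is illustrative only; the `#`-separated key format and the shard count of 8 are assumptions, and the trade-off is that readers must query every suffix and merge the results.

```python
import zlib


def sharded_partition_key(base_key, item_id, shard_count):
    """Spread writes for one logical key across shard_count partition key values.

    Deterministic: the same item_id always maps to the same shard, so the item
    can later be fetched directly without querying every shard.
    """
    shard = zlib.crc32(item_id.encode("utf-8")) % shard_count
    return f"{base_key}#{shard}"


def all_shard_keys(base_key, shard_count):
    """Partition key values a reader must query to see every item for base_key."""
    return [f"{base_key}#{shard}" for shard in range(shard_count)]


key = sharded_partition_key("2024-01-10", "order-42", shard_count=8)
```

Hashing a stable attribute (rather than picking a random suffix) keeps point lookups cheap, since the reader can recompute the shard from the item ID.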

Sort Key Benefits

The sort key orders items within a partition, enabling efficient range queries: a query can retrieve only the items whose sort key falls within a given range, already in sorted order.

Example

With the user ID as the partition key and the order date as the sort key, a single query can retrieve all orders a user placed within a specific date range. Choosing the right sort key is especially valuable for time-series data.
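A date-range query of this kind might be expressed with a `BETWEEN` key condition on the sort key. This sketch only builds low-level `Query` parameters and assumes a hypothetical `Orders` table with partition key `user_id` and sort key `order_date` stored as a `YYYY-MM-DD` string.

```python
def orders_in_range_query(user_id, start_date, end_date):
    """Build low-level Query parameters for one user's orders in a date range.

    Hypothetical schema: table "Orders" with partition key user_id and sort key
    order_date stored as a YYYY-MM-DD string, so BETWEEN selects the range
    inclusively and results come back in date order.
    """
    return {
        "TableName": "Orders",
        "KeyConditionExpression": "user_id = :u AND order_date BETWEEN :start AND :end",
        "ExpressionAttributeValues": {
            ":u": {"S": user_id},
            ":start": {"S": start_date},
            ":end": {"S": end_date},
        },
    }


params = orders_in_range_query("user-123", "2024-01-01", "2024-03-31")
# With boto3: boto3.client("dynamodb").query(**params)
```

Because the condition applies to the key, DynamoDB reads only the matching items rather than filtering the user's whole order history.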

Indexes and Their Impact

Indexes improve query performance, particularly for non-primary key attributes.

Global Secondary Indexes (GSIs)

Enable querying based on attributes other than the primary key. They provide flexibility in accessing data but incur additional write costs.

Local Secondary Indexes (LSIs)

Allow querying the table with an alternative sort key. An LSI shares the base table’s partition key but uses a different attribute as its sort key, offering efficient queries within a partition. LSIs must be defined when the table is created.

Creating indexes increases storage costs and write costs due to the need to update the index with each write operation.

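Analyze query patterns to determine the necessary indexes. Avoid creating unnecessary indexes, since every write to the base table must also be applied to each affected index. When designing GSIs, consider throughput requirements: in provisioned mode, each GSI has its own read and write capacity settings, and a GSI without enough write capacity can throttle writes to the base table.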
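To make the LSI/GSI distinction concrete, the `CreateTable` parameters below define one of each. This is a sketch with hypothetical names: the LSI `ByTotal` keeps the table's partition key (`user_id`) and swaps in `order_total` as the sort key, while the GSI `ByOrderId` uses an entirely different key so any order can be looked up by ID across users. On-demand billing is assumed, so no `ProvisionedThroughput` is given for the table or the GSI.

```python
def orders_table_definition():
    """CreateTable parameters showing one LSI and one GSI (names hypothetical)."""
    return {
        "TableName": "Orders",
        "BillingMode": "PAY_PER_REQUEST",
        # Only attributes used in some key schema are declared here.
        "AttributeDefinitions": [
            {"AttributeName": "user_id", "AttributeType": "S"},
            {"AttributeName": "order_date", "AttributeType": "S"},
            {"AttributeName": "order_total", "AttributeType": "N"},
            {"AttributeName": "order_id", "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": "user_id", "KeyType": "HASH"},
            {"AttributeName": "order_date", "KeyType": "RANGE"},
        ],
        # LSI: same partition key, alternative sort key; only creatable with the table.
        "LocalSecondaryIndexes": [{
            "IndexName": "ByTotal",
            "KeySchema": [
                {"AttributeName": "user_id", "KeyType": "HASH"},
                {"AttributeName": "order_total", "KeyType": "RANGE"},
            ],
            "Projection": {"ProjectionType": "KEYS_ONLY"},
        }],
        # GSI: independent key; ALL copies every attribute, while KEYS_ONLY or
        # INCLUDE would cut index storage and write costs.
        "GlobalSecondaryIndexes": [{
            "IndexName": "ByOrderId",
            "KeySchema": [{"AttributeName": "order_id", "KeyType": "HASH"}],
            "Projection": {"ProjectionType": "ALL"},
        }],
    }


definition = orders_table_definition()
# With boto3: boto3.client("dynamodb").create_table(**definition)
```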

The choice of primary key and index strategy directly affects the efficiency of read and write operations. A poorly designed schema can lead to significant performance degradation and increased costs.

Managing Provisioned Throughput and Avoiding Throttling

DynamoDB’s provisioned throughput model requires careful management to ensure applications can handle the expected load without throttling. Throttling occurs when an application exceeds the provisioned read or write capacity, resulting in failed requests and reduced performance.

Understanding Provisioned Capacity

DynamoDB’s provisioned capacity is defined in terms of read capacity units (RCUs) and write capacity units (WCUs).

1 RCU allows for one strongly consistent read per second of up to 4 KB, or two eventually consistent reads per second of up to 4 KB.

1 WCU allows for one write per second of up to 1 KB.

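Capacity units are consumed based on item size and consistency requirements: read sizes are rounded up to the next 4 KB and write sizes to the next 1 KB, and transactional reads and writes consume twice as many units.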
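The capacity rules above reduce to a small calculation. The helper names below are hypothetical; the sketch treats 1 KB as 1,024 bytes and charges at least one block per request.

```python
import math

KB = 1024  # treating 1 KB as 1,024 bytes


def read_units(item_bytes, strongly_consistent=False, transactional=False):
    """RCUs consumed by reading one item of item_bytes."""
    blocks = max(1, math.ceil(item_bytes / (4 * KB)))  # rounded up to 4 KB blocks
    if transactional:
        return 2 * blocks
    if strongly_consistent:
        return blocks
    return blocks / 2  # eventually consistent reads cost half


def write_units(item_bytes, transactional=False):
    """WCUs consumed by writing one item of item_bytes."""
    blocks = max(1, math.ceil(item_bytes / KB))  # rounded up to 1 KB blocks
    return 2 * blocks if transactional else blocks
```

For example, reading a 6 KB item 100 times per second with strong consistency needs `100 * read_units(6 * KB, strongly_consistent=True)`, or 200 RCUs; the same load with eventually consistent reads needs 100.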

Monitoring and Capacity Planning

Regularly monitor DynamoDB metrics to identify potential throttling issues and optimize capacity planning.

Use CloudWatch metrics such as `ConsumedReadCapacityUnits`, `ConsumedWriteCapacityUnits`, and `ThrottledRequests` to monitor capacity consumption and throttled requests.

Set up CloudWatch alarms to alert when capacity utilization exceeds predefined thresholds.

Capacity planning should consider peak load scenarios and anticipated growth.

Consider using Auto Scaling to automatically adjust provisioned capacity based on traffic patterns.
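An alarm on capacity utilization needs a threshold expressed in the metric's own terms. `ConsumedWriteCapacityUnits` is typically alarmed on with the `Sum` statistic, so the per-second provisioned limit is multiplied by the period length. The sketch below builds parameters for CloudWatch `PutMetricAlarm`; the 80% utilization, 60-second period, and five evaluation periods are illustrative assumptions, and the alarm name format is hypothetical.

```python
def write_capacity_alarm(table_name, provisioned_wcu, utilization_pct=80, period_s=60):
    """PutMetricAlarm parameters that fire when write usage stays above a
    percentage of provisioned WCUs.

    ConsumedWriteCapacityUnits is summed over each period, so the per-second
    limit is multiplied by the period length to form the threshold.
    """
    threshold = provisioned_wcu * period_s * utilization_pct / 100
    return {
        "AlarmName": f"{table_name}-write-capacity-{utilization_pct}pct",  # hypothetical naming
        "Namespace": "AWS/DynamoDB",
        "MetricName": "ConsumedWriteCapacityUnits",
        "Dimensions": [{"Name": "TableName", "Value": table_name}],
        "Statistic": "Sum",
        "Period": period_s,
        "EvaluationPeriods": 5,  # arbitrary choice: five consecutive minutes
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
    }


params = write_capacity_alarm("ShoppingCarts", provisioned_wcu=100)
# With boto3: boto3.client("cloudwatch").put_metric_alarm(**params)
```

If auto scaling changes the provisioned capacity, a fixed threshold like this one drifts out of date and would need to be recomputed.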

Optimizing Read Operations

Optimize read operations to minimize RCU consumption.

Use eventually consistent reads when possible, as they consume fewer RCUs than strongly consistent reads.

Use the `GetItem` API for single-item reads and `BatchGetItem` to read up to 100 items in one request; any keys DynamoDB could not process are returned in `UnprocessedKeys` and must be retried.

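Use projection expressions (`ProjectionExpression`) to retrieve only the required attributes. This reduces the amount of data returned, although read capacity is still calculated from the full item size.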

Implement caching, for example with DynamoDB Accelerator (DAX) or an in-process cache, to reduce the number of reads from DynamoDB.
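The read-side advice can be combined in a small sketch: a `GetItem` request that asks only for the attributes it needs with an eventually consistent read, plus a tiny time-bounded in-process cache. The table, key, and attribute names and the 30-second lifetime are hypothetical. In Lambda, a module-level cache like this survives only while an execution environment stays warm and is not shared between environments, and it can serve data up to `ttl_seconds` old.

```python
import time


def profile_get_params(user_id):
    """Low-level GetItem parameters fetching only two attributes.

    "name" is a DynamoDB reserved word, so it goes through a #placeholder.
    Projection trims the response, not the RCUs charged. Names are hypothetical.
    """
    return {
        "TableName": "PlayerProfiles",
        "Key": {"user_id": {"S": user_id}},
        "ProjectionExpression": "#n, avatar_url",
        "ExpressionAttributeNames": {"#n": "name"},
        "ConsistentRead": False,  # eventually consistent: half the RCUs
    }


class TTLCache:
    """Minimal in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (value, expires_at)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # fresh hit: no DynamoDB read
        value = loader(key)
        self._entries[key] = (value, now + self.ttl_seconds)
        return value


# Module level, so warm invocations reuse it, e.g.:
#   profile_cache.get_or_load(uid, lambda k: client.get_item(**profile_get_params(k)))
profile_cache = TTLCache(ttl_seconds=30)
```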

Optimizing Write Operations

Optimize write operations to minimize WCU consumption.

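Use the `PutItem` API for single-item writes and `BatchWriteItem` to put or delete up to 25 items in one request. `BatchWriteItem` does not support updates, and any items it could not process are returned in `UnprocessedItems` and must be retried.

Use transactions for atomic operations involving multiple items, ensuring data consistency. Transactional writes consume twice the WCUs of standard writes.

Optimize item size to reduce WCU consumption, since writes are metered in 1 KB increments.

Batching writes reduces the number of network round trips, which can significantly improve write throughput, although each item still consumes the same WCUs as an individual write.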
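Because `BatchWriteItem` caps each call at 25 requests, a larger set of items has to be split first. The sketch below only builds the request payloads; the `SensorReadings` table name and item shape are hypothetical, and resubmitting any `UnprocessedItems` from each response is left to the caller.

```python
def batch_write_requests(table_name, items, batch_size=25):
    """Split low-level items into BatchWriteItem payloads of at most 25 puts.

    Any UnprocessedItems returned for a payload must be resubmitted by the
    caller, ideally with backoff.
    """
    if not 1 <= batch_size <= 25:
        raise ValueError("BatchWriteItem accepts at most 25 requests per call")
    payloads = []
    for start in range(0, len(items), batch_size):
        chunk = items[start:start + batch_size]
        payloads.append({
            "RequestItems": {
                table_name: [{"PutRequest": {"Item": item}} for item in chunk]
            }
        })
    return payloads


# Hypothetical readings with unique keys (a batch may not repeat a key).
readings = [
    {"device_id": {"S": "thermo-7"}, "ts": {"S": f"2024-01-10T00:00:{s:02d}Z"}}
    for s in range(60)
]
payloads = batch_write_requests("SensorReadings", readings)
# With boto3: for p in payloads: response = client.batch_write_item(**p)
```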

Effective capacity planning, combined with proactive monitoring and optimization, is crucial for maintaining optimal DynamoDB performance and preventing throttling.

Checklist for Ensuring Optimal DynamoDB Performance in Serverless Applications

This checklist provides a structured approach to ensure optimal DynamoDB performance in serverless applications. Following these steps helps prevent common performance pitfalls and maintain application responsiveness.

Schema Design

Choose an appropriate primary key strategy.

Design the primary key to distribute data evenly.

Select the right attributes to serve as sort keys.

Create indexes for frequently queried attributes.

Avoid over-indexing, as it can increase write costs.

Capacity Planning and Provisioning

Estimate the expected read and write traffic.

Provision sufficient RCUs and WCUs.

Enable Auto Scaling to dynamically adjust capacity.

Monitor CloudWatch metrics for throttling.

Set up CloudWatch alarms for capacity utilization thresholds.

Query Optimization

Use eventually consistent reads where appropriate.

Use `GetItem` for single-item reads.

Use `BatchGetItem` for multiple item reads.

Use projections to retrieve only the necessary attributes.

Optimize queries to use indexes effectively.

Implement caching to reduce DynamoDB read load.

Write Optimization

Use `BatchWriteItem` for batch writes.

Use transactions for atomic operations.

Optimize item size to reduce WCU consumption.

Code Optimization

Handle potential throttling errors gracefully.

Implement exponential backoff and retry mechanisms.

Optimize the size of data being written to and read from DynamoDB.

Monitoring and Alerting

Monitor DynamoDB metrics in CloudWatch.

Set up CloudWatch alarms for capacity utilization and throttling.

Regularly review and adjust provisioned capacity based on usage patterns.

Implementing this checklist helps ensure that DynamoDB performs optimally, contributing to the overall performance and scalability of serverless applications. Regular review and adjustment of these settings are essential for long-term performance.
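The "exponential backoff and retry" item in the checklist can be sketched as a wrapper that retries only throttling errors, with a capped exponential delay and full jitter (a random wait between zero and the cap). The AWS SDKs already retry throttled calls with backoff by default, so a wrapper like this mainly matters when an application wants its own retry budget. It detects throttling from the `response["Error"]["Code"]` shape that botocore's `ClientError` carries; the delay values and function names are illustrative assumptions.

```python
import random
import time

# Error codes DynamoDB returns when a request is throttled.
THROTTLE_CODES = {
    "ProvisionedThroughputExceededException",
    "ThrottlingException",
    "RequestLimitExceeded",
}


def is_throttle(exc):
    """True for botocore-style errors whose response carries a throttling code."""
    code = getattr(exc, "response", {}).get("Error", {}).get("Code")
    return code in THROTTLE_CODES


def retry_with_backoff(operation, max_attempts=5, base_delay=0.05, max_delay=2.0,
                       sleep=time.sleep):
    """Call operation(), retrying throttling errors with capped exponential
    backoff and full jitter; other errors and the final failure propagate."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception as exc:
            if not is_throttle(exc) or attempt == max_attempts - 1:
                raise
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))
```

Jitter spreads retries from many concurrent functions over time, so they do not all hit the table again at the same moment.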

Closure

In conclusion, DynamoDB is an indispensable tool for managing state in serverless applications. From fundamental CRUD operations to sophisticated transaction management, DynamoDB provides the necessary features to build resilient, scalable, and cost-effective serverless systems. By understanding the core concepts, mastering advanced techniques, and adhering to performance optimization strategies, developers can harness the full potential of DynamoDB to create robust and efficient serverless solutions across diverse application domains, from e-commerce to IoT.

Detailed FAQs

What is the difference between eventually consistent and strongly consistent reads in DynamoDB?

Eventually consistent reads consume half the read capacity of strongly consistent reads but may return stale data. Strongly consistent reads return the most up-to-date data but cost twice as much, can have higher latency, and are not supported on global secondary indexes.

How does TTL (Time-to-Live) work in DynamoDB?

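TTL lets you designate a table attribute that holds an expiration time as a Unix epoch timestamp in seconds. Once that time passes, DynamoDB deletes the item automatically in the background without consuming write capacity. Deletion typically happens within a few days of expiry rather than at the exact moment, so applications should treat items past their expiration time as expired. This is particularly useful for managing session data or temporary information and reducing storage costs.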
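A session item with a TTL attribute might be built as below. This sketch assumes TTL has been enabled on a hypothetical attribute `expires_at`, for instance with `client.update_time_to_live(TableName=..., TimeToLiveSpecification={"Enabled": True, "AttributeName": "expires_at"})`. Because deletion lags expiry, it also checks expiry on read.

```python
import time


def session_item(session_id, user_id, lifetime_s, now=None):
    """Low-level item for a session that expires lifetime_s seconds from now.

    Assumes TTL is enabled on the hypothetical attribute "expires_at", which
    must hold a Unix epoch timestamp in seconds stored as a Number.
    """
    now = time.time() if now is None else now
    return {
        "session_id": {"S": session_id},
        "user_id": {"S": user_id},
        "expires_at": {"N": str(int(now + lifetime_s))},
    }


def is_expired(item, now=None):
    """TTL deletion is not immediate, so check expiry whenever an item is read."""
    now = time.time() if now is None else now
    return int(item["expires_at"]["N"]) <= now
```

For queries, a filter such as `FilterExpression="expires_at > :now"` achieves the same effect server-side, although filtered-out items still consume read capacity.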

What are conditional writes, and why are they important?

Conditional writes allow you to update an item only if a specific condition is met, such as the existing value of an attribute. This is crucial for preventing data corruption due to concurrent updates and ensuring data integrity.
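One common use of conditional writes is optimistic locking with a version number. The sketch below builds low-level `UpdateItem` parameters for saving game state only if the stored version still matches what the caller read; the `GameSessions` table and the `state`/`version` attributes are hypothetical. On a mismatch, DynamoDB rejects the write with `ConditionalCheckFailedException` and the caller should re-read and retry. The very first write of an item would instead use a condition such as `attribute_not_exists(game_id)`.

```python
def save_game_state_if_version(game_id, state_json, expected_version):
    """UpdateItem parameters that succeed only if the stored version matches.

    Placeholders (#st, #v) are used for attribute names so reserved words
    never break the expressions. Names are hypothetical.
    """
    return {
        "TableName": "GameSessions",
        "Key": {"game_id": {"S": game_id}},
        "UpdateExpression": "SET #st = :state, #v = #v + :one",
        "ConditionExpression": "#v = :expected",
        "ExpressionAttributeNames": {"#st": "state", "#v": "version"},
        "ExpressionAttributeValues": {
            ":state": {"S": state_json},
            ":one": {"N": "1"},
            ":expected": {"N": str(expected_version)},
        },
    }


params = save_game_state_if_version("game-1", '{"level": 3}', expected_version=7)
# With boto3: boto3.client("dynamodb").update_item(**params)
```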

How do I monitor the performance of my DynamoDB tables?

CloudWatch provides a range of metrics for monitoring DynamoDB performance, including consumed read/write capacity units, throttled requests, and latency. These metrics are essential for identifying performance bottlenecks and optimizing table configurations.

What are DynamoDB transactions, and when should I use them?

DynamoDB transactions let you group multiple actions into a single all-or-nothing operation: `TransactWriteItems` combines Put, Update, Delete, and ConditionCheck actions, and `TransactGetItems` reads multiple items atomically. Either all actions succeed or none do. Transactions are essential for maintaining data consistency in scenarios involving multiple related items or tables.
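A checkout flow is a typical fit: record the order, decrement stock only if enough remains, and clear the cart, all or nothing. The sketch below builds `TransactWriteItems` parameters for that; the `Orders`, `Inventory`, and `ShoppingCarts` tables and their attributes are hypothetical. `ClientRequestToken` makes a retried call with the same token idempotent.

```python
def checkout_transaction(user_id, order_date, sku, quantity, client_token):
    """TransactWriteItems parameters for an all-or-nothing checkout.

    Tables and attribute names are hypothetical.
    """
    return {
        "ClientRequestToken": client_token,  # idempotent retries of this call
        "TransactItems": [
            {"Put": {
                "TableName": "Orders",
                "Item": {
                    "user_id": {"S": user_id},
                    "order_date": {"S": order_date},
                    "sku": {"S": sku},
                    "quantity": {"N": str(quantity)},
                },
                # Fail instead of overwriting an existing order with the same key.
                "ConditionExpression": "attribute_not_exists(user_id)",
            }},
            {"Update": {
                "TableName": "Inventory",
                "Key": {"sku": {"S": sku}},
                "UpdateExpression": "SET #stock = #stock - :qty",
                # Cancels the whole transaction if stock would go negative.
                "ConditionExpression": "#stock >= :qty",
                "ExpressionAttributeNames": {"#stock": "stock"},
                "ExpressionAttributeValues": {":qty": {"N": str(quantity)}},
            }},
            {"Delete": {
                "TableName": "ShoppingCarts",
                "Key": {"user_id": {"S": user_id}},
            }},
        ],
    }


params = checkout_transaction("user-123", "2024-01-10", "sku-1", 2, "checkout-user-123-1")
# With boto3: boto3.client("dynamodb").transact_write_items(**params)
```

If any condition fails, DynamoDB cancels the entire transaction, so the order is never recorded without the matching stock decrement.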