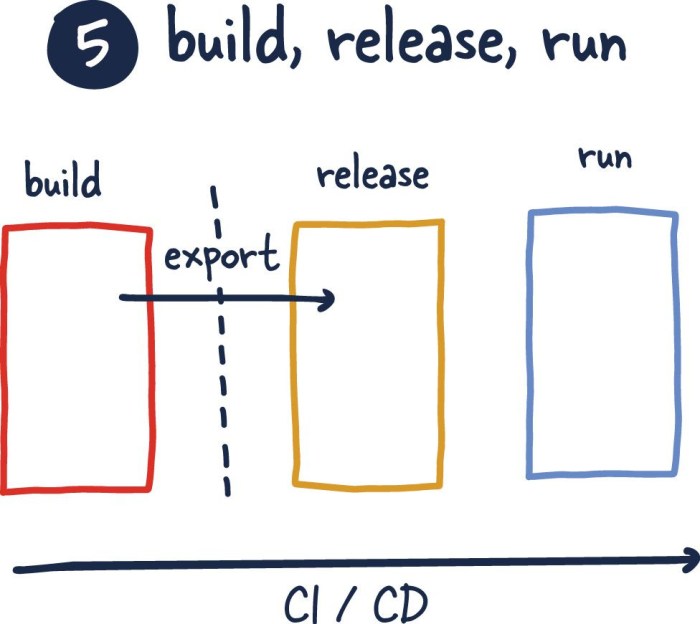

Strictly separating the Build, Release, and Run stages (Factor V of the Twelve-Factor App methodology) improves the efficiency, reliability, and security of software delivery. The separation streamlines the development lifecycle, which leads to faster deployments, fewer errors, and better overall system stability. Any development team that wants dependable deployments needs to understand and implement these distinct stages.

This guide explores each stage, from artifact creation in the Build stage to execution and monitoring in the Run stage. It explains why strict separation matters, covering both the risks of poor practices and the benefits of a well-defined process. It then turns to the practical aspects of automation, versioning, configuration management, rollback strategies, and security that make a delivery pipeline robust.

Defining the Build, Release, and Run Stages

The Build, Release, and Run stages are the subject of Factor V, which emphasizes separating concerns in the software delivery lifecycle. This separation promotes efficiency, reliability, and maintainability. Understanding the specific activities and purpose of each stage is crucial for effective software development and deployment.

Defining the Build Stage

The Build stage encompasses the activities required to transform source code into an executable artifact. This stage focuses on compilation, testing, and packaging the software. The primary goal is to produce a deployable unit that is ready for release.

Key activities within the Build stage include:

- Code Compilation: This involves translating source code written in a high-level programming language (e.g., Java, C++) into machine-readable instructions or bytecode using a compiler. For interpreted languages such as Python, this step may be limited to bytecode generation or skipped entirely.

- Unit Testing: Individual components or modules of the software are tested to ensure they function correctly in isolation. This helps to identify and fix bugs early in the development process.

- Integration Testing: Different modules are tested together to verify that they interact correctly. This helps to identify issues related to the interaction between different parts of the software.

- Packaging: The compiled code, along with any necessary dependencies and configuration files, is packaged into a deployable artifact. This could be a JAR file, a Docker image, or a platform-specific installer.

- Static Code Analysis: Tools are used to analyze the code for potential issues such as code style violations, security vulnerabilities, and performance bottlenecks.

Defining the Release Stage

The Release stage focuses on preparing the built artifact for deployment. This stage involves activities such as configuration, environment setup, and approval processes. The main objective is to make the software available for use in a specific environment.

Key activities within the Release stage include:

- Environment Configuration: Setting up the target environment (e.g., staging, production) with the necessary infrastructure, such as servers, databases, and networking.

- Configuration Management: Configuring the application with environment-specific settings, such as database connection strings and API keys. This often involves using configuration management tools or environment variables.

- Testing in Release Environments: Performing final testing in environments that closely resemble the production environment. This helps to identify any issues that may arise during deployment.

- Approval Processes: Obtaining necessary approvals from stakeholders before deploying the software to production. This may involve security reviews, performance testing, and business sign-offs.

- Deployment Preparation: Preparing the deployment process, including scripting the deployment steps and defining rollback procedures.

Defining the Run Stage

The Run stage is concerned with the execution and operation of the deployed software. This stage focuses on monitoring, logging, and maintaining the application in a live environment. The primary goal is to ensure the software is running reliably and efficiently, and to provide support to users.

Key activities within the Run stage include:

- Deployment: Deploying the software artifact to the target environment. This may involve copying files, configuring servers, and restarting services.

- Monitoring: Continuously monitoring the application’s performance, health, and resource usage. This involves using monitoring tools to collect metrics and detect anomalies.

- Logging: Logging application events, errors, and user activity to provide insights into the application’s behavior and to facilitate troubleshooting.

- Incident Management: Responding to and resolving any incidents that occur in the production environment. This may involve investigating errors, implementing workarounds, and coordinating with other teams.

- Performance Tuning: Optimizing the application’s performance by identifying and addressing bottlenecks, such as slow database queries or inefficient code.

- User Support: Providing support to users, including answering questions, resolving issues, and collecting feedback.

The Importance of Strict Separation

Strictly separating the Build, Release, and Run stages is a cornerstone of modern software development, enabling efficient and reliable software delivery. This separation ensures each stage focuses on its specific responsibilities, minimizing risks and maximizing the benefits of continuous integration and continuous delivery (CI/CD) pipelines.

Risks of Poorly Separated Stages

Failing to strictly separate the Build, Release, and Run stages introduces numerous risks that can significantly impact the software development lifecycle. These risks often manifest as increased errors, prolonged deployment times, and difficulty in troubleshooting.

- Increased Errors and Bugs: When stages are intertwined, changes in one stage can inadvertently impact others. For example, if code is compiled or patched directly on a running server, the deployed code no longer corresponds to any known build. This can lead to runtime errors, unexpected behavior, and difficulty in identifying the root cause of issues.

- Prolonged Deployment Times: Poorly separated stages often result in manual steps and dependencies between stages. This can significantly increase deployment times. For instance, if the build process relies on manual intervention or if the release process involves extensive testing and validation before deployment, the overall deployment cycle becomes lengthy.

- Increased Risk of Rollback Failures: A poorly defined release process can make it difficult to roll back to a previous version if a deployment fails. If the release process directly modifies the running environment, a failed deployment can leave the system in an unstable state. This increases the risk of downtime and data loss.

- Difficulties in Troubleshooting: When stages are not clearly separated, it becomes challenging to pinpoint the source of problems. If an issue arises in production, it can be difficult to determine whether it stems from the build process, the release process, or the runtime environment. This can significantly increase the mean time to recovery (MTTR).

- Reduced Auditability and Compliance: Intertwined stages can make it difficult to track changes and maintain an audit trail. This can pose challenges for regulatory compliance and security audits.

Benefits of Well-Separated Stages

Well-separated Build, Release, and Run stages offer significant advantages in terms of deployment frequency, lead time for changes, and mean time to recovery. These improvements are critical for achieving agility, responsiveness, and overall software development efficiency.

- Increased Deployment Frequency: A well-defined CI/CD pipeline, where each stage is independent, facilitates more frequent deployments. Automating the build and release processes enables developers to deploy code changes quickly and safely. For example, teams that adopt CI/CD practices commonly report moving from roughly monthly releases to multiple deployments per day.

- Reduced Lead Time for Changes: Lead time, the time it takes from code commit to production, is significantly reduced with strict stage separation. Automated testing, pre-release validation, and efficient deployment processes minimize the time required to get changes into production. This enables faster feedback loops and allows teams to respond quickly to user needs and market demands.

- Improved Mean Time to Recovery (MTTR): When stages are separated, troubleshooting and recovery become much easier. Clear separation allows teams to quickly identify the source of issues. For example, if a problem arises in production, it can be quickly determined if it is a build artifact issue, a release configuration problem, or a runtime environment issue. Automation and rollback strategies also contribute to faster recovery. According to the 2023 Accelerate State of DevOps Report, high-performing teams consistently achieve significantly lower MTTR compared to low-performing teams.

- Enhanced Stability and Reliability: Strict separation promotes the creation of immutable infrastructure. The build process generates immutable artifacts, the release process configures the environment, and the run stage executes the application. This approach minimizes the risk of configuration drift and ensures consistency across environments, leading to increased stability and reliability.

- Improved Collaboration and Team Efficiency: When stages are clearly defined, different teams can focus on their specific areas of expertise. The build team can focus on creating artifacts, the release team on deployment and configuration, and the operations team on managing the runtime environment. This division of responsibilities improves collaboration and increases overall team efficiency.

Build Stage

The Build stage is a critical phase in the software development lifecycle, focusing on transforming source code into deployable artifacts. This stage emphasizes automation, repeatability, and consistency, laying the foundation for reliable releases and efficient operations. It is where the raw materials of software development – source code, dependencies, and configurations – are processed and transformed into ready-to-use components.

Artifact Creation Responsibilities

The primary responsibilities of the Build stage encompass several key activities, all geared toward producing deployable artifacts. These activities are performed automatically by build systems and build tools. The following points outline the main duties of this stage:

- Compilation: This involves translating the source code, written in a human-readable programming language, into machine-executable code or intermediate representations. The compiler checks for syntax errors and ensures the code adheres to the programming language’s rules.

- Dependency Management: This involves identifying and incorporating all necessary libraries, frameworks, and other components that the software depends on. Dependency management tools automate the process of fetching, managing versions, and linking these dependencies.

- Testing: Various tests, including unit tests, integration tests, and potentially end-to-end tests, are executed to verify the correctness and functionality of the code. Testing ensures that the software behaves as expected and that any issues are identified early in the process.

- Artifact Creation: This involves packaging the compiled code, dependencies, and any necessary configuration files into a deployable format, such as a binary, package, or container image. The artifact is the output of the build stage.

- Artifact Storage: The created artifacts are stored in a designated repository or artifact management system. This allows for versioning, access control, and easy retrieval of artifacts for deployment.

- Metadata Generation: Metadata, such as version numbers, build timestamps, and commit hashes, is generated and associated with the artifacts. This information is crucial for traceability, debugging, and auditing.
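The metadata step can be sketched in a few lines. This is only an illustration, assuming the artifact is available as bytes; the function name `build_metadata` and the field names are hypothetical and not taken from any particular build tool.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_metadata(artifact_bytes: bytes, version: str, commit: str) -> dict:
    """Return traceability metadata for one artifact (hypothetical field names)."""
    return {
        "version": version,
        "commit": commit,
        # Timestamp in UTC so builds from different machines are comparable.
        "built_at": datetime.now(timezone.utc).isoformat(),
        # Content digest lets later stages confirm they received this exact artifact.
        "sha256": hashlib.sha256(artifact_bytes).hexdigest(),
    }


metadata = build_metadata(b"example artifact contents", "1.4.2", "3f9c2ab")
print(json.dumps(metadata, indent=2))
```

Storing this record next to the artifact in the repository is one way to answer "which commit produced this file?" during debugging or an audit.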

Artifact Types and Characteristics

Different types of artifacts are produced during the Build stage, each with its own characteristics and intended use. The choice of artifact type depends on the application’s requirements, deployment environment, and technology stack. The following table summarizes some common artifact types and their key features:

| Artifact Type | Description | Characteristics | Deployment Considerations |
|---|---|---|---|
| Binaries (e.g., Executables, Libraries) | Compiled code ready to be executed directly by the operating system. | Platform-specific, tightly coupled with the operating system, may require installation. | Requires matching OS, potential dependency conflicts, versioning is critical. |
| Packages (e.g., .deb, .rpm, .msi) | Bundled software components, dependencies, and installation instructions. | Designed for easy installation and management on specific operating systems, includes metadata. | Utilize package managers for installation and updates, dependency resolution is automated. |
| Containers (e.g., Docker Images) | Self-contained, isolated environments that package code, dependencies, and runtime. | Portable across different platforms, consistent execution environment, promotes isolation. | Requires a container runtime (e.g., Docker), allows for easy scaling and deployment. |
| Archives (e.g., .zip, .tar.gz) | Compressed files containing code, resources, and configuration files. | Simple to create and deploy, may require manual setup, less sophisticated dependency management. | Suitable for simpler applications or as a staging mechanism before further processing. |

Compilation, Testing, and Artifact Creation Process

The process of compiling source code, running tests, and creating deployable artifacts is typically automated through a build pipeline. The specific steps and tools used will vary depending on the project’s technology stack and requirements, but the general flow remains consistent.

The process generally involves the following steps:

- Source Code Retrieval: The build system retrieves the latest version of the source code from a version control system (e.g., Git).

- Dependency Resolution: The build system identifies and downloads all required dependencies, such as libraries and frameworks, often using a dependency management tool (e.g., Maven, npm, pip).

- Compilation: The source code is compiled into machine code or an intermediate representation. The compiler checks for syntax errors and ensures the code adheres to the programming language’s rules.

- Testing: Automated tests are executed to verify the correctness and functionality of the code. Unit tests, integration tests, and potentially end-to-end tests are run. Test results are collected and analyzed.

- Artifact Packaging: The compiled code, dependencies, and any necessary configuration files are packaged into a deployable artifact. This may involve creating a binary, a package, or a container image.

- Artifact Versioning: The artifact is assigned a version number, and metadata (e.g., build timestamp, commit hash) is associated with it.

- Artifact Storage: The created artifact is stored in an artifact repository or management system.

For example, in a Java project, the build process might use Maven. The Maven build process automatically manages dependencies, compiles the Java code, runs unit tests, and packages the compiled code into a `.jar` or `.war` file. Similarly, in a Node.js project, tools like npm or yarn are used to manage dependencies, transpile the code (if necessary), run tests, and create a distributable package.

These automated processes are crucial for creating reliable and repeatable builds.
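The step sequence above can be modeled as an ordered list of actions that stops at the first failure. The sketch below is a toy model, not the behavior of Maven, npm, or any CI tool; `run_pipeline` and the placeholder lambdas are assumptions made for illustration.

```python
from typing import Callable, List, Tuple

# A step is a name plus an action that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first failing step.

    Returns the names of the steps that completed successfully.
    """
    completed = []
    for name, action in steps:
        if not action():
            print(f"build failed at step: {name}")
            break
        completed.append(name)
    return completed


# Placeholder actions; a real pipeline would invoke version control,
# a compiler, a test runner, and a packaging tool here.
steps = [
    ("retrieve source", lambda: True),
    ("resolve dependencies", lambda: True),
    ("compile", lambda: True),
    ("test", lambda: False),  # simulate a failing test run
    ("package", lambda: True),
    ("version", lambda: True),
    ("store", lambda: True),
]

completed = run_pipeline(steps)
```

Because the simulated test step fails, packaging, versioning, and storage never run, so no artifact from a failing build reaches the repository.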

Release Stage

The Release stage is a critical phase that bridges the gap between the completed Build stage and the actual deployment of the software. It prepares the built artifacts for deployment, ensuring they are configured correctly for the target environment and meet all necessary pre-deployment criteria. This stage is designed to minimize risks and facilitate a smooth transition from development to production.

The success of the Release stage directly impacts the reliability, security, and performance of the deployed software.

Purpose and Relationship to the Build Stage

The Release stage’s primary purpose is to transform the output from the Build stage into a deployable package. The Build stage produces the raw, compiled code and related assets. The Release stage takes these artifacts and prepares them for the specific deployment environment. This involves configuring the application, setting up the environment, and performing necessary checks. The Release stage is dependent on the Build stage; it receives its inputs from the Build stage and transforms them into a ready-to-deploy format.

The artifacts from the Build stage are considered immutable at this point; the Release stage does not modify the core code but instead configures and adapts it for deployment.
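One way to picture this relationship is to treat a release as an immutable pairing of one build with one set of configuration. The sketch below assumes that model; the class names, fields, and sample values (`Build`, `Release`, `create_release`, the artifact name, the database URLs) are all hypothetical.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Mapping


@dataclass(frozen=True)
class Build:
    """Immutable output of the Build stage (hypothetical fields)."""
    artifact: str  # e.g. a JAR name or container image reference
    commit: str    # source revision the artifact was built from


@dataclass(frozen=True)
class Release:
    """A build combined with environment-specific configuration."""
    release_id: str
    build: Build
    config: Mapping[str, str]


def create_release(release_id: str, build: Build, config: dict) -> Release:
    # Copy the config into a read-only mapping so the release cannot be
    # altered after creation; any change requires a new release.
    return Release(release_id, build, MappingProxyType(dict(config)))


build = Build(artifact="shop-1.4.2.jar", commit="3f9c2ab")
staging = create_release("v101", build, {"DATABASE_URL": "postgres://staging-db/shop"})
production = create_release("v102", build, {"DATABASE_URL": "postgres://prod-db/shop"})
```

Both releases point at the same build object and differ only in configuration, which is the property that makes a staging test meaningful for production.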

Activities in the Release Stage

Several key activities occur during the Release stage to prepare the software for deployment. These activities ensure that the application is configured correctly for the target environment and that all necessary checks are completed before deployment.

- Configuration: This involves tailoring the application’s settings to match the specific environment where it will be deployed. Configuration often includes setting database connection strings, API keys, and other environment-specific parameters. For example, different settings are used for development, staging, and production environments.

- Environment Setup: The environment where the software will run needs to be set up. This includes provisioning servers, setting up databases, and configuring any required services. For example, using infrastructure-as-code tools to automate server provisioning and configuration.

- Pre-Deployment Checks: These checks ensure that the application is ready for deployment and that potential issues are identified before they impact users. This often includes running tests (unit, integration, and end-to-end), verifying dependencies, and validating configuration files.

- Artifact Packaging: The release stage may involve packaging the application and its dependencies into a deployable format, such as a Docker image, a ZIP file, or an executable.

- Security Hardening: This involves applying security best practices to the deployable package. It includes tasks such as vulnerability scanning, code signing, and implementing access controls.

Role of Configuration Management

Configuration management plays a vital role in the Release stage. It ensures that the application is configured consistently and reliably across different environments. Effective configuration management reduces the risk of errors and makes it easier to manage deployments.

- Centralized Configuration: Centralized configuration management systems allow for storing and managing configuration data in a single, accessible location. This ensures that all deployments use the same configuration settings.

- Environment-Specific Configurations: Configuration management systems allow defining environment-specific configurations. This enables developers to use different settings for development, staging, and production environments without modifying the core code.

- Version Control: Configuration files should be version-controlled, just like the code. This allows tracking changes, rolling back to previous configurations, and ensuring consistency across deployments.

- Automation: Automating the configuration process is essential. Configuration management tools can be integrated with deployment pipelines to automatically apply the correct configuration settings during the Release stage.
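As a minimal sketch of environment-specific configuration, the example below reads settings from environment variables and fails fast when a required one is missing. The variable names (`DATABASE_URL`, `API_KEY`, `DEBUG`) and the `AppConfig` class are assumptions for illustration, not a prescribed schema.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    api_key: str
    debug: bool


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Build the config from environment variables, failing fast if one is missing."""
    missing = [key for key in ("DATABASE_URL", "API_KEY") if key not in env]
    if missing:
        raise KeyError(f"missing required settings: {', '.join(missing)}")
    return AppConfig(
        database_url=env["DATABASE_URL"],
        api_key=env["API_KEY"],
        # Optional setting with a safe default.
        debug=env.get("DEBUG", "false").lower() == "true",
    )


# The same code yields different settings per environment.
staging = load_config(
    {"DATABASE_URL": "postgres://staging-db/shop", "API_KEY": "staging-key", "DEBUG": "true"}
)
production = load_config(
    {"DATABASE_URL": "postgres://prod-db/shop", "API_KEY": "prod-key"}
)
```

Passing the mapping as a parameter keeps the loader testable; in a deployed process the default `os.environ` would be used instead of the literal dictionaries shown here.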

Run Stage

The Run stage is the final phase of the software delivery pipeline, where the built and released application is deployed, executed, and actively monitored in a production or staging environment. This stage is critical for ensuring the application functions correctly, meets performance requirements, and provides a positive user experience. Effective management of the Run stage is essential for identifying and resolving issues quickly, maintaining system stability, and supporting ongoing application improvements.

Execution and Monitoring of the Application

The Run stage encompasses the deployment, execution, and continuous monitoring of the application. This involves several key activities to ensure the application’s smooth operation and optimal performance.

The deployment process involves transferring the released application artifacts to the target environment and configuring the necessary infrastructure components. This often includes setting up servers, databases, and other dependencies. Execution involves starting the application and making it accessible to users.

Continuous monitoring is crucial during this stage, as it involves tracking the application’s performance, identifying potential issues, and ensuring the application is meeting its service-level agreements (SLAs).

- Deployment: The process of transferring and configuring the application in the target environment. This can involve various strategies, such as rolling deployments, blue/green deployments, or canary deployments, to minimize downtime and risk.

- Execution: The actual running of the application, making it available to users and processing requests. This involves managing application processes, handling user interactions, and ensuring the application is responsive and available.

- Monitoring: The continuous observation of the application’s performance, health, and behavior. This includes tracking key performance indicators (KPIs) such as response times, error rates, and resource utilization.

- Scaling: The ability to adjust the application’s resources (e.g., server instances, database connections) dynamically based on demand. This ensures the application can handle varying workloads and maintain optimal performance.

- Maintenance: Ongoing activities to keep the application running smoothly, including patching, updates, and routine checks. This can also involve proactive measures like performance tuning and capacity planning.

Simple Monitoring Dashboard Design

A well-designed monitoring dashboard provides a centralized view of the application’s health and performance, enabling operations teams to quickly identify and address issues. This section describes the components of a basic monitoring dashboard that visualizes key performance indicators (KPIs).

The dashboard should present critical metrics in a clear and easily understandable format. This usually includes graphical representations of data trends and real-time status indicators. A typical dashboard contains several sections, each focusing on a specific aspect of the application’s performance.

A monitoring dashboard should display the following key performance indicators:

- Application Response Time: A graph showing the average time it takes for the application to respond to user requests. This is often measured in milliseconds or seconds.

- Error Rate: A graph displaying the percentage of requests that result in errors (e.g., 500 Internal Server Errors). High error rates can indicate serious issues with the application.

- Throughput: A graph showing the number of requests the application processes per unit of time (e.g., requests per second). This indicates how efficiently the application is handling traffic.

- CPU Utilization: A graph showing the percentage of CPU resources used by the application servers. High CPU utilization may indicate performance bottlenecks.

- Memory Utilization: A graph showing the percentage of memory used by the application servers. High memory usage can lead to performance degradation or crashes.

- Database Connection Pool Size: A graph displaying the number of active database connections. This metric can reveal potential issues with database performance.

- User Activity: A graph showing the number of active users or sessions. This helps to understand application usage patterns.

- System Status: Indicators showing the overall status of critical services and components (e.g., green for healthy, red for issues).

The dashboard could use a simple grid layout, with each KPI displayed in a dedicated panel. The panels would show real-time data visualizations, such as line graphs for time-series data, gauges for percentage values, and numerical values for counts. Color-coding (e.g., green for good, yellow for warning, red for critical) would be used to quickly highlight potential issues. Alerts could be configured to trigger notifications when certain thresholds are exceeded.

For example, an alert could be sent when the application’s error rate exceeds 5% or when CPU utilization consistently remains above 80%.

Logging and Alerting Importance

Effective logging and alerting are essential components of the Run stage, providing critical insights into the application’s behavior and enabling timely responses to issues. Proper logging helps to diagnose problems, while effective alerting ensures that critical issues are brought to the attention of the operations team immediately.

Logging involves capturing detailed information about the application’s operations, including events, errors, and performance metrics.

Alerting involves automatically notifying the operations team when specific conditions are met, such as high error rates or performance degradation.

- Logging: The process of recording events, errors, and other relevant information about the application’s behavior. Logging provides a detailed record of the application’s operations, which is crucial for troubleshooting issues, identifying performance bottlenecks, and understanding user behavior.

- Alerting: The process of automatically notifying the operations team when specific conditions are met. Alerts can be triggered based on predefined thresholds or patterns in the logs. Alerting ensures that critical issues are addressed promptly.

- Log Levels: Logs should be categorized by severity levels (e.g., DEBUG, INFO, WARN, ERROR, FATAL) to enable filtering and prioritization. This helps to focus on the most critical issues.

- Log Aggregation: Centralized log aggregation allows for the collection and analysis of logs from multiple sources, providing a unified view of the application’s behavior.

- Alerting Thresholds: Setting appropriate thresholds for alerts is crucial to avoid alert fatigue. Thresholds should be based on historical data and application performance characteristics.

- Notification Channels: Alerts can be delivered through various channels, such as email, SMS, and messaging platforms. The choice of channel depends on the severity of the issue and the team’s preferences.

For example, consider an e-commerce website that experiences a sudden spike in 500 errors during a flash sale. Without proper logging, the cause of the errors might be difficult to determine, potentially resulting in significant revenue loss. With detailed logging, the operations team can quickly identify the root cause (e.g., a database connection issue) and take corrective action.

Similarly, if the website’s response time suddenly increases, an alert can be triggered, allowing the team to investigate and resolve the issue before it affects a large number of users. The combination of logging and alerting allows for proactive issue detection and faster resolution times, leading to improved application reliability and a better user experience.
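The interplay of log levels and alert thresholds can be sketched with Python's standard `logging` module. The logger name, the function names, and the use of HTTP status codes as input are assumptions; the 5% value is an illustrative threshold, and a real system would route the alert to a notification channel rather than only logging it.

```python
import logging

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s %(message)s",
)
log = logging.getLogger("shop.monitor")  # hypothetical logger name

ERROR_RATE_THRESHOLD = 0.05  # alert above 5% errors (illustrative value)


def error_rate(status_codes: list) -> float:
    """Fraction of responses with a 5xx status code."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if 500 <= code <= 599)
    return errors / len(status_codes)


def check_error_rate(status_codes: list) -> bool:
    """Log at ERROR level and return True when the threshold is exceeded."""
    rate = error_rate(status_codes)
    if rate > ERROR_RATE_THRESHOLD:
        log.error("error rate %.1f%% exceeds threshold", rate * 100)
        return True
    log.info("error rate %.1f%% is within threshold", rate * 100)
    return False


healthy = check_error_rate([200] * 99 + [500])          # 1% errors
flash_sale = check_error_rate([200] * 80 + [500] * 20)  # 20% errors
```

Only the second call crosses the threshold, so only it produces an ERROR-level record that an alerting rule could act on.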

Automation in Each Stage

Automation is critical for the successful implementation of strictly separated build, release, and run stages. It streamlines processes, reduces errors, and significantly improves efficiency, enabling faster development cycles and more reliable deployments. Each stage benefits from automation, from code compilation and testing to deployment and monitoring.

Automating the Build Stage

The build stage, responsible for transforming source code into deployable artifacts, is highly amenable to automation. Tools like Jenkins, GitLab CI, and GitHub Actions excel at automating this phase.

The following are key aspects of automating the build stage:

- Code Compilation: Automated build systems compile the source code into executable binaries or deployable packages. This process is often triggered by code commits to a version control system. For instance, a commit to a Git repository can automatically initiate a build in a tool like Jenkins, which then compiles the code, links libraries, and creates the final application artifact.

- Unit Testing: Unit tests are automatically executed to verify the functionality of individual code components. Tools like JUnit (for Java), pytest (for Python), or Jest (for JavaScript) are commonly integrated into the build pipeline. If any unit tests fail, the build is typically marked as unsuccessful, preventing the release of potentially faulty code.

- Integration Testing: Integration tests verify the interaction between different software modules. These tests are also automated and run as part of the build process. Failure of integration tests, similar to unit tests, will halt the build.

- Static Code Analysis: Static code analysis tools, such as SonarQube or ESLint, are employed to identify potential code quality issues, security vulnerabilities, and adherence to coding standards. These tools analyze the source code without executing it. Reports generated from static code analysis can flag areas for improvement, enhancing code maintainability and security.

- Artifact Creation: Once the code has been compiled and tested successfully, the build system creates the necessary artifacts, such as JAR files, WAR files, Docker images, or other deployment packages. These artifacts are then stored in a repository, like Nexus or Artifactory, ready for the release stage.

Automating the Release Stage

The release stage focuses on preparing and approving the artifacts for deployment. Automation here ensures consistency and reduces the risk of human error during the release process.

Automated tasks that can be performed in the release stage include:

- Artifact Validation: Validate the integrity and correctness of the artifacts produced in the build stage. This might involve checksum verification, code signing, and vulnerability scanning. For example, a release pipeline could automatically verify the checksum of a Docker image against a known-good value to ensure that the image hasn’t been tampered with.

- Configuration Management: Configure the application for the target environment. This involves setting environment variables, updating configuration files, and applying necessary infrastructure-as-code (IaC) changes. Tools like Ansible, Chef, or Puppet can be used to automate these configuration tasks.

- Release Approval: Implement automated approval workflows to ensure that the release meets specific criteria. This might involve manual approvals from stakeholders or automated checks based on pre-defined conditions, such as successful test results or security scans.

- Version Tagging: Tag the artifacts with appropriate version numbers to facilitate traceability and rollback capabilities. This is crucial for tracking releases and reverting to previous versions if necessary.

- Release Notes Generation: Automatically generate release notes summarizing the changes included in the release. This improves communication and keeps stakeholders informed about the updates.
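The checksum verification mentioned under Artifact Validation can be sketched with the standard library. This assumes the build stage recorded a SHA-256 digest alongside the artifact; the helper names `sha256_of` and `verify_artifact` are hypothetical.

```python
import hashlib
import hmac


def sha256_of(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the artifact contents."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_sha256: str) -> bool:
    """Return True when the artifact's digest matches the recorded one."""
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(sha256_of(data), expected_sha256.lower())


artifact = b"example artifact contents"
recorded = sha256_of(artifact)  # would be stored by the build stage

ok = verify_artifact(artifact, recorded)
tampered = verify_artifact(artifact + b"!", recorded)
```

A release pipeline could refuse to proceed whenever the check returns False, since that means the artifact is not the one the build stage produced.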

Automating Deployment and Monitoring in the Run Stage

The run stage, encompassing deployment and ongoing operation, is heavily reliant on automation for efficiency, scalability, and reliability.

Methods for automating deployment and monitoring in the run stage include:

- Automated Deployment: Use deployment tools like Kubernetes, Docker Compose, or cloud-specific services (e.g., AWS Elastic Beanstalk, Google Cloud Run) to automatically deploy the application to the target environment. These tools handle tasks such as provisioning infrastructure, configuring servers, and deploying the application artifacts.

- Rolling Updates: Implement rolling updates to minimize downtime during deployments. This involves gradually updating the application across multiple instances or servers, ensuring that at least some instances are always available.

- Health Checks and Monitoring: Implement automated health checks and monitoring to proactively detect and address issues. Tools like Prometheus, Grafana, and cloud-specific monitoring services (e.g., AWS CloudWatch, Google Cloud Monitoring) can monitor application performance, resource utilization, and error rates.

- Automated Scaling: Configure automatic scaling to dynamically adjust resources based on demand. For example, a Kubernetes cluster can automatically scale the number of pods running a specific service based on CPU usage or other metrics.

- Log Aggregation and Analysis: Implement centralized log aggregation and analysis to collect and analyze logs from various sources. Tools like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk can help identify and resolve issues.

- Incident Response Automation: Automate incident response procedures, such as automatically restarting failing services, sending alerts, and escalating issues to the appropriate teams.
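To make the Automated Scaling item more concrete, the sketch below computes a replica count in proportion to how far an observed metric is from its target, which is the core ratio used by the Kubernetes Horizontal Pod Autoscaler. It is a simplified illustration, not the full autoscaler behaviour: the function name, the default bounds, and the sample numbers are assumptions.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale replicas in proportion to metric/target, clamped to the bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target -> scale out.
print(desired_replicas(4, current_metric=90.0, target_metric=60.0))  # 6
# 4 replicas at 30% average CPU with a 60% target -> scale in.
print(desired_replicas(4, current_metric=30.0, target_metric=60.0))  # 2
```

Clamping to a minimum and maximum keeps a noisy metric from scaling the service to zero or to an unbounded size.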

Versioning and Tagging

Versioning and tagging are critical components of a robust Build, Release, and Run pipeline. They provide a mechanism for tracking changes, ensuring reproducibility, and facilitating rollbacks. Proper versioning allows teams to identify and manage specific versions of software artifacts, while tagging provides a way to mark significant points in the development lifecycle.

Importance of Versioning Artifacts

Versioning artifacts created during the Build stage is essential for several reasons, impacting the integrity, traceability, and manageability of the software. It allows for a controlled and repeatable build process, which is crucial for debugging, auditing, and compliance.

- Reproducibility: Versioning enables the recreation of any previous build. By knowing the specific version of the source code, dependencies, and build tools used, the same artifact can be rebuilt at any time. This is critical for debugging and fixing issues.

- Traceability: Version numbers provide a clear link between the built artifact and the source code, dependencies, and build configurations. This allows for easy tracing of the origin and evolution of the artifact.

- Rollback Capability: If a new release introduces problems, versioning allows for a quick and reliable rollback to a previous, stable version. This minimizes downtime and the impact on users.

- Release Management: Versioning helps to manage multiple releases simultaneously. It allows for tracking which versions are deployed in different environments (e.g., development, staging, production).

- Auditing and Compliance: Versioning provides a record of all changes made to the software, making it easier to meet regulatory requirements and conduct audits.

Designing a Simple Versioning Scheme

A well-defined versioning scheme is crucial for managing software artifacts effectively. Semantic Versioning (SemVer) is a widely adopted and recommended approach.

Semantic Versioning (SemVer) uses the format MAJOR.MINOR.PATCH.

- MAJOR: Represents incompatible API changes. Incrementing the MAJOR version indicates that significant changes have been made, and older versions are not compatible with the new version.

- MINOR: Represents added functionality in a backward-compatible manner. Incrementing the MINOR version indicates that new features have been added without breaking existing functionality.

- PATCH: Represents backward-compatible bug fixes. Incrementing the PATCH version indicates that only bug fixes have been made, and there are no new features or breaking changes.

For example, a version number of 1.2.3 would mean:

- MAJOR version is 1 (indicating initial major release or incompatible changes).

- MINOR version is 2 (indicating the addition of new features).

- PATCH version is 3 (indicating bug fixes).

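A release that contains only backward-compatible bug fixes increments the patch version (e.g., 1.2.3 to 1.2.4). A release that adds a backward-compatible feature increments the minor version and resets the patch version to zero (e.g., 1.2.4 to 1.3.0). If there are breaking changes, the major version is incremented and the minor and patch versions are reset (e.g., 1.3.0 to 2.0.0).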
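The increment rules can be expressed as a small helper. This is a minimal sketch that handles only plain MAJOR.MINOR.PATCH strings (no pre-release or build metadata); the `Version` class and its method names are illustrative, not part of any particular library.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        """Parse a plain 'MAJOR.MINOR.PATCH' string."""
        major, minor, patch = (int(part) for part in text.split("."))
        return cls(major, minor, patch)

    def bump(self, change: str) -> "Version":
        """Return the next version for a 'major', 'minor', or 'patch' change."""
        if change == "major":
            return Version(self.major + 1, 0, 0)
        if change == "minor":
            return Version(self.major, self.minor + 1, 0)
        if change == "patch":
            return Version(self.major, self.minor, self.patch + 1)
        raise ValueError(f"unknown change type: {change}")

    def __str__(self) -> str:
        return f"{self.major}.{self.minor}.{self.patch}"


print(Version.parse("1.2.3").bump("patch"))  # 1.2.4
print(Version.parse("1.2.4").bump("minor"))  # 1.3.0
print(Version.parse("1.3.0").bump("major"))  # 2.0.0
```

Because `Version` is a tuple, instances compare in the expected order, so `Version(1, 10, 0) > Version(1, 9, 3)` holds.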

Using Tags in Version Control Systems

Tags in version control systems like Git provide a mechanism to mark specific points in the repository’s history. They are often used to identify releases or significant milestones.

- Creating Tags: In Git, tags can be created using the `git tag` command. For example, `git tag v1.0.0` would create a tag named `v1.0.0` at the current commit.

- Tagging Specific Commits: It is possible to tag a specific commit by appending the commit hash, as in `git tag v1.0.0 <commit-hash>`. This allows tagging older commits.

- Listing Tags: The `git tag` command, without any arguments, lists all tags in the repository. `git tag -l "v1.*"` can be used to list all tags matching a specific pattern.

- Pushing Tags: By default, tags are not pushed to the remote repository automatically. The command `git push origin --tags` pushes all local tags to the remote repository.

- Benefits of Tagging: Tags provide a clear, human-readable way to reference specific versions of the software. They enable easy navigation to the code associated with a particular release. They also help in automating the release process.
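Release automation often needs to pick the newest or the previous release tag, and plain string sorting puts `v1.10.0` before `v1.9.3`. The following sketch shows one way to order tag names numerically. It assumes tags follow a `vMAJOR.MINOR.PATCH` convention and that the tag names have already been obtained (for example from the output of `git tag`); the sample list is made up.

```python
import re

TAG_PATTERN = re.compile(r"^v(\d+)\.(\d+)\.(\d+)$")


def release_tags(tags):
    """Return valid vMAJOR.MINOR.PATCH tags sorted oldest to newest."""
    parsed = []
    for tag in tags:
        match = TAG_PATTERN.match(tag)
        if match:  # ignore tags that are not release versions
            parsed.append((tuple(int(g) for g in match.groups()), tag))
    return [tag for _, tag in sorted(parsed)]


tags = ["v1.10.0", "v1.2.0", "nightly", "v1.9.3", "v2.0.0"]
ordered = release_tags(tags)
print(ordered)      # ['v1.2.0', 'v1.9.3', 'v1.10.0', 'v2.0.0']
print(ordered[-1])  # v2.0.0
```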

Configuration Management and Environments

Managing configurations effectively across different environments is crucial for the success of the build, release, and run stages. It ensures consistency, reproducibility, and the ability to deploy applications reliably in development, staging, and production environments. This section explores the strategies and tools involved in this process.

Managing Configurations for Different Environments

Configuration management across different environments involves tailoring application settings and infrastructure specifications to suit each environment’s unique requirements. Development environments typically prioritize rapid iteration and experimentation, while staging environments mirror production to facilitate testing and validation. Production environments focus on stability, security, and performance. The core principle is to avoid hardcoding environment-specific settings within the application code. Instead, configurations are externalized and managed separately.
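As a minimal sketch of externalized configuration, the snippet below builds a settings object from environment variables instead of hardcoded values. The variable names (`DATABASE_URL`, `LOG_LEVEL`, `DEBUG`), the defaults, and the example URL are assumptions chosen for illustration.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    database_url: str
    log_level: str
    debug: bool


def load_settings(env=None) -> Settings:
    """Build settings from environment variables; fail fast if a required one is missing."""
    env = os.environ if env is None else env
    try:
        database_url = env["DATABASE_URL"]
    except KeyError:
        raise RuntimeError("DATABASE_URL must be set") from None
    return Settings(
        database_url=database_url,
        log_level=env.get("LOG_LEVEL", "INFO"),
        debug=env.get("DEBUG", "false").lower() == "true",
    )


# A dict stands in for the process environment so the example is reproducible.
staging = load_settings({"DATABASE_URL": "postgres://staging-db/app", "DEBUG": "true"})
print(staging.log_level, staging.debug)  # INFO True
```

The same build artifact can then run in development, staging, and production, with only the environment differing between them.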

Configuration Management Tools

Several tools streamline configuration management, enabling automation and consistency across environments. These tools often utilize declarative approaches, where the desired state of the system is specified, and the tool ensures that state is achieved.

Ansible

Ansible is an open-source automation tool that uses a simple, human-readable language (YAML) to describe system configurations. It operates agentlessly, connecting to target machines over SSH. Ansible is well-suited for managing infrastructure, deploying applications, and automating repetitive tasks.

Example

An Ansible playbook might define the installation of a specific version of a web server, the configuration of its settings, and the deployment of application code. The playbook can then be executed across development, staging, and production environments, with environment-specific variables controlling the settings.

Chef

Chef is a configuration management tool that uses a client-server architecture. The Chef client runs on target machines and receives configuration instructions from a Chef server. Chef uses Ruby-based recipes to define configurations.

Example

A Chef recipe might define the installation and configuration of a database server. The recipe can include steps for setting up users, creating databases, and configuring network access. The Chef client on each server will execute the recipe to ensure the server meets the defined configuration.

Puppet

Puppet is another popular configuration management tool that also uses a client-server architecture. Puppet uses a declarative language to describe the desired state of a system.

Example

A Puppet manifest could define the installation of a monitoring agent and the configuration of its settings. Puppet’s agent on each server periodically checks its configuration and makes any necessary changes to match the defined state.

Best Practices for Environment-Specific Configuration

Implementing best practices for environment-specific configuration is essential for maintaining a robust and reliable deployment pipeline. These practices help ensure that applications behave predictably and consistently across different environments.

- Externalize Configuration: Store configurations outside of the application code. This can be done using environment variables, configuration files (e.g., JSON, YAML, properties files), or a dedicated configuration management service.

- Use Environment Variables: Environment variables are a convenient way to inject configuration values into applications at runtime. They are particularly useful for secrets, such as database passwords and API keys.

- Configuration Files: Configuration files allow for more complex configuration structures. These files can be version-controlled and managed alongside the application code.

- Configuration Management Tools: Leverage tools like Ansible, Chef, or Puppet to automate configuration deployment and ensure consistency across environments.

- Templating: Use templating engines (e.g., Jinja2, ERB) to generate environment-specific configuration files from a common template.

- Secrets Management: Securely store and manage sensitive information, such as passwords and API keys, using a secrets management solution (e.g., HashiCorp Vault, AWS Secrets Manager).

- Version Control: Store configuration files and templates in a version control system (e.g., Git) to track changes, enable rollbacks, and facilitate collaboration.

- Testing: Test configuration changes in a staging environment before deploying them to production.

- Immutable Infrastructure: Consider using immutable infrastructure, where servers are replaced rather than modified in place. This simplifies configuration management and reduces the risk of configuration drift.

- Least Privilege: Grant only the necessary permissions to users and services to access configuration resources.
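The Templating practice above can be illustrated without any third-party engine. The sketch below uses Python's standard-library `string.Template` as a stand-in for Jinja2 or ERB, which offer far richer syntax; the template fields, host names, and values are hypothetical.

```python
from string import Template

# One shared template; only the values differ per environment.
TEMPLATE = Template(
    "server_name: $host\n"
    "workers: $workers\n"
    "log_level: $log_level\n"
)

ENVIRONMENTS = {
    "staging": {"host": "staging.example.com", "workers": 2, "log_level": "DEBUG"},
    "production": {"host": "www.example.com", "workers": 8, "log_level": "WARNING"},
}


def render(environment: str) -> str:
    """Render the shared template; substitute() raises KeyError on a missing value."""
    return TEMPLATE.substitute(ENVIRONMENTS[environment])


print(render("staging"))
```

Using `substitute()` rather than `safe_substitute()` is a deliberate choice here: a missing value fails the render instead of silently leaving a placeholder in a deployed file.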

Rollback Strategies

Rollback strategies are crucial for ensuring the stability and reliability of software deployments. They provide a safety net, allowing teams to quickly revert to a previous, known-good state in the event of a deployment failure, thereby minimizing downtime and mitigating the impact on users. Implementing well-defined rollback procedures is a critical component of a robust and resilient deployment pipeline.

Importance of Rollback Strategies

Rollback strategies are essential for maintaining application availability and user satisfaction. They are a fundamental part of any deployment process, offering the ability to recover from issues introduced during a release.

- Minimize Downtime: Rollbacks rapidly restore a functional version of the application, reducing the time users are affected by bugs or performance issues.

- Reduce Risk: They provide a safety net, mitigating the risks associated with deploying new code, configurations, or infrastructure changes.

- Improve User Experience: By quickly resolving deployment failures, rollbacks prevent negative user experiences caused by broken functionality or degraded performance.

- Increase Team Confidence: Knowing a rollback is possible empowers teams to deploy more frequently and confidently, accelerating the delivery of new features and improvements.

- Support Continuous Delivery: Rollbacks are a key enabler for continuous delivery practices, allowing for automated and frequent deployments with minimal risk.

Simple Rollback Procedure Design

A basic rollback procedure involves a few key steps to ensure a swift return to a previous working state. The exact steps will depend on the application and infrastructure.

- Detection of Failure: Implement monitoring and alerting to detect deployment failures. This includes monitoring application logs, performance metrics (e.g., response times, error rates), and user reports.

- Initiation of Rollback: When a failure is detected, the rollback process should be initiated automatically or with minimal manual intervention.

- Reversion to Previous Version: The application should be reverted to the last known-good version. This can involve restoring the previous code, configuration, and data.

- Verification: After the rollback, verify that the application is functioning correctly. This includes running automated tests and performing manual checks.

- Communication: Communicate the rollback and its impact to users and stakeholders.
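The detection, reversion, and verification steps can be sketched as follows. This is a simplified in-memory model under stated assumptions: the `ReleaseHistory` class, the `healthy` callback, and the release identifiers are all hypothetical, and a real system would call its deployment tooling and monitoring instead.

```python
from typing import Callable, List, Optional


class ReleaseHistory:
    """Tracks deployed release identifiers, newest last."""

    def __init__(self) -> None:
        self._releases: List[str] = []

    @property
    def current(self) -> Optional[str]:
        return self._releases[-1] if self._releases else None

    def deploy(self, release_id: str, healthy: Callable[[str], bool]) -> str:
        """Deploy a release; if its health check fails, revert to the previous one."""
        previous = self.current
        self._releases.append(release_id)
        if healthy(release_id):                 # detection of failure
            return release_id
        self._releases.pop()                    # reversion to last known-good
        if previous is None:
            raise RuntimeError("deployment failed and no previous release exists")
        if not healthy(previous):               # verification after rollback
            raise RuntimeError(f"rollback target {previous} is unhealthy")
        return previous


history = ReleaseHistory()
bad_releases = {"v1.3.0"}                       # simulated broken release
check = lambda release_id: release_id not in bad_releases

history.deploy("v1.2.4", check)
print(history.deploy("v1.3.0", check))  # v1.2.4
print(history.current)                  # v1.2.4
```

The sketch reverts only the application version; reverting data or schema changes usually needs separate handling.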

Examples of Rollback Strategies

Several strategies can be employed to facilitate rollbacks, each with its own advantages and disadvantages. The choice of strategy depends on the application architecture, infrastructure, and risk tolerance.

- Blue/Green Deployments: This strategy involves maintaining two identical environments: blue (live) and green (staging). The new version is deployed to the green environment and tested. Once verified, traffic is switched from blue to green. If a problem occurs, traffic can be quickly switched back to the blue environment. This strategy minimizes downtime and provides a clean rollback path.

- Canary Releases: A small subset of users (the “canary”) receives the new version while the majority continues to use the existing version. If the canary deployment is successful, the new version is gradually rolled out to all users. If issues are detected, the canary deployment can be quickly rolled back, limiting the impact. This strategy allows for testing in production with minimal risk.

- Rolling Deployments: In a rolling deployment, the new version is deployed to a subset of servers or instances, while the remaining servers continue to serve the existing version. As the new version is deployed to more servers, the old version is removed from the servers. Rollback involves redeploying the previous version to the affected servers. This strategy allows for gradual updates and rollbacks, but it can be more complex to manage.

- Snapshot and Restore: This strategy involves taking snapshots of the application’s state (code, data, configuration) before a deployment. In case of failure, the system can be restored from the latest snapshot. This is a simple approach, but it can lead to data loss if the snapshot is not recent enough.

- Feature Flags/Toggles: Feature flags enable or disable specific features in the application. New features can be deployed to production but disabled by default. If a problem arises, the feature can be disabled using the flag, effectively rolling back the change. This is a flexible approach that allows for fine-grained control over features.

“Blue/green deployments typically require infrastructure duplication, which can be more expensive.”

“Canary releases require careful monitoring and traffic management to ensure the canary users’ experience is not significantly impacted.”

“Rolling deployments can be more complex to manage, as they require careful orchestration of updates and rollbacks across multiple servers.”
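For canary releases, one common way to pick the subset of users is deterministic hashing, so that the same user always sees the same version and widening the rollout only adds users. The sketch below is one possible approach, not a prescribed one; the function names and user identifiers are hypothetical.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Deterministically place a user in the canary group for the given percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < percent


def version_for(user_id: str, percent: int) -> str:
    """Route canary users to the new version and everyone else to the stable one."""
    return "new" if in_canary(user_id, percent) else "stable"


users = [f"user-{i}" for i in range(1000)]
share = sum(in_canary(u, 10) for u in users) / len(users)
print(f"canary share: {share:.1%}")  # roughly 10%; the exact value depends on the hash
```

Setting `percent` back to 0 routes every user to the stable version, which serves as the rollback path for this strategy.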

Security Considerations

Implementing strict separation between the build, release, and run stages is crucial not only for operational efficiency but also for bolstering security. Each stage presents unique vulnerabilities that must be addressed to prevent security breaches and ensure the integrity of the software. A well-defined security strategy across all stages minimizes the attack surface and protects against various threats.

Understanding the security risks associated with each stage, and implementing appropriate safeguards, is essential for maintaining a secure software development lifecycle.

This section details the specific security considerations for each stage, along with best practices to mitigate risks.

Identifying Security Risks in Each Stage

Each stage in the build, release, and run process carries distinct security risks that, if left unaddressed, can compromise the entire system. Recognizing these risks is the first step toward establishing effective security measures.

- Build Stage: The build stage is vulnerable to threats like:

  - Supply Chain Attacks: Malicious actors can compromise dependencies (libraries, packages) used in the build process. If a compromised dependency is used, the resulting software will also be compromised.

  - Source Code Vulnerabilities: Security flaws within the source code, such as SQL injection, cross-site scripting (XSS), and buffer overflows, can be introduced during development.

  - Build Environment Tampering: The build environment itself (e.g., build servers, build tools) can be targeted, allowing attackers to inject malicious code or alter the build process.

- Release Stage: The release stage introduces risks such as:

  - Unauthorized Access: Access to the release process and artifacts can be exploited to deploy unauthorized or malicious software versions.

  - Configuration Errors: Incorrect configuration settings, such as improperly configured database credentials or open ports, can expose vulnerabilities.

  - Artifact Tampering: Attackers may attempt to modify or replace release artifacts (e.g., binaries, packages) to inject malicious code.

- Run Stage: The run stage presents the following security challenges:

  - Runtime Exploits: Vulnerabilities in the deployed application or its dependencies can be exploited by attackers to gain control of the system or steal sensitive data.

  - Data Breaches: Attackers may gain unauthorized access to sensitive data stored within the application or its associated databases.

  - Denial-of-Service (DoS) Attacks: Attackers can overwhelm the application’s resources, making it unavailable to legitimate users.

Security Best Practices for the Build Stage

The build stage is the foundation for software security. Implementing robust security practices during this phase ensures that only secure and verified code is packaged and released.

- Dependency Scanning: Regularly scan all project dependencies (libraries, packages) for known vulnerabilities. Use tools like Snyk, OWASP Dependency-Check, or similar solutions to identify and remediate vulnerabilities in dependencies.

Regular dependency scanning helps to detect and prevent the introduction of known vulnerabilities from third-party components.

- Code Analysis: Implement static and dynamic code analysis to identify security vulnerabilities in the source code. Static analysis tools (e.g., SonarQube, Fortify) analyze code without executing it, while dynamic analysis tools (e.g., OWASP ZAP) test the application during runtime.

- Secure Build Environment: Secure the build environment by:

  - Using hardened build servers.

  - Restricting access to the build servers.

  - Regularly updating build tools and operating systems.

  - Implementing strong authentication and authorization mechanisms.

- Secrets Management: Avoid hardcoding secrets (e.g., API keys, database passwords) in the source code. Instead, use a secrets management system (e.g., HashiCorp Vault, AWS Secrets Manager) to store and manage sensitive information securely.

- Code Signing: Sign the built artifacts (e.g., binaries, packages) with a digital signature to verify their authenticity and integrity. This helps ensure that the software has not been tampered with during the build and release process.

- Input Validation and Sanitization: Implement robust input validation and sanitization to prevent common vulnerabilities like SQL injection and cross-site scripting (XSS).
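As one concrete instance of the SQL injection defence listed above, the sketch below uses a bound query parameter instead of string formatting. It relies on Python's standard-library `sqlite3` module with an in-memory database; the table, column names, and sample rows are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "admin"), ("bob", "viewer")],
)


def find_role(connection, name: str):
    """Look up a role using a bound parameter, never string formatting."""
    row = connection.execute(
        "SELECT role FROM users WHERE name = ?", (name,)
    ).fetchone()
    return row[0] if row else None


print(find_role(conn, "alice"))         # admin
# The injection attempt is treated as a literal name and matches nothing.
print(find_role(conn, "x' OR '1'='1"))  # None
```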

Security Best Practices for the Release and Run Stages

The release and run stages are critical for ensuring the security of the deployed application. These stages focus on controlling access, monitoring for threats, and implementing strategies to quickly respond to security incidents.

- Access Control: Implement strict access control mechanisms to restrict access to the release process and the running application. Use role-based access control (RBAC) to grant users only the necessary permissions.

- Configuration Management: Use a configuration management system to manage application configurations securely. Implement version control for configuration files and encrypt sensitive configuration data.

- Artifact Integrity Verification: Verify the integrity of release artifacts (e.g., binaries, packages) before deployment. This can be done by comparing checksums or using digital signatures.

- Monitoring and Logging: Implement comprehensive monitoring and logging to detect and respond to security incidents. Collect logs from all components of the application and use a security information and event management (SIEM) system to analyze logs for suspicious activity.

Effective monitoring provides real-time insights into system behavior, enabling prompt detection of security threats.

- Intrusion Detection and Prevention Systems (IDS/IPS): Deploy IDS/IPS to detect and prevent malicious activity in the runtime environment. IDS monitors network traffic and system activity for suspicious behavior, while IPS actively blocks malicious traffic.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify vulnerabilities and assess the effectiveness of security controls.

- Rollback Strategies: Implement robust rollback strategies to quickly revert to a previous, known-good version of the application in case of a security incident or deployment failure.

- Vulnerability Scanning: Regularly scan the running application and its dependencies for vulnerabilities. Use tools like Nessus, OpenVAS, or similar vulnerability scanners.

- Incident Response Plan: Develop and maintain an incident response plan to handle security incidents effectively. The plan should include steps for detection, containment, eradication, recovery, and post-incident analysis.
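The role-based access control mentioned under Access Control can be reduced to a mapping from roles to permissions. This is a minimal sketch with hypothetical role and permission names; production systems would normally rely on the RBAC features of their CI/CD platform or orchestrator rather than custom code.

```python
# Hypothetical roles and permissions for a release pipeline.
ROLE_PERMISSIONS = {
    "developer": {"build:trigger", "release:view"},
    "release-manager": {"release:view", "release:approve", "deploy:staging"},
    "operator": {"release:view", "deploy:staging", "deploy:production"},
}


def is_allowed(roles, permission: str) -> bool:
    """Grant access only if one of the user's roles carries the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed(["developer"], "deploy:production"))             # False
print(is_allowed(["developer", "operator"], "deploy:production"))  # True
```

Unknown roles map to an empty permission set, so access is denied by default.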

Tools and Technologies

The selection of appropriate tools and technologies is crucial for the effective implementation of the build, release, and run stages. These tools streamline processes, automate tasks, and improve the overall efficiency and reliability of the software delivery pipeline. Choosing the right combination of tools depends on project requirements, team expertise, and organizational preferences.

Tools for Build, Release, and Run Stages

The following table provides an overview of popular tools and technologies used in each stage, categorizing them for clarity. This compilation aims to offer a comprehensive look at the tools commonly employed across different aspects of software development and deployment.

| Build Stage | Release Stage | Run Stage | General (Applicable to Multiple Stages) |
|---|---|---|---|
| | | | |

Containerization in the Run Stage

Containerization, using technologies like Docker and Kubernetes, offers significant benefits in the run stage, contributing to improved portability, scalability, and resource utilization. It packages applications and their dependencies into isolated units, ensuring consistent behavior across different environments.

- Portability: Containers encapsulate the application and its dependencies, allowing them to run consistently across various infrastructures, from development machines to cloud environments. This “write once, run anywhere” capability minimizes environment-specific issues.

- Scalability: Container orchestration platforms, such as Kubernetes, enable automated scaling. They can dynamically adjust the number of container instances based on demand, ensuring optimal resource allocation and responsiveness to user traffic. For example, a retail website during a holiday sale might automatically scale up its containerized application instances to handle increased user load.

- Resource Efficiency: Containers share the host operating system’s kernel, making them lightweight compared to virtual machines. This leads to better resource utilization, allowing more applications to run on the same infrastructure. A real-world example is the use of containers to run microservices, which allows for efficient allocation of resources to each service based on its needs.

- Isolation: Containers provide isolation, preventing conflicts between applications. If one container crashes, it does not affect other containers running on the same host. This isolation enhances system stability.

- Simplified Deployment: Container images provide a standardized way to package and deploy applications. This simplifies the deployment process, making it faster and more reliable.

The Role of a CI/CD Pipeline

A Continuous Integration and Continuous Delivery (CI/CD) pipeline automates the software delivery process, enabling faster and more reliable releases. It integrates code changes frequently, tests them thoroughly, and automates the steps required to deploy these changes to various environments.

A CI/CD pipeline generally includes the following stages:

- Code Commit: Developers commit code changes to a version control system (e.g., Git).

- Build: The CI/CD system automatically triggers a build process, which compiles the code, runs unit tests, and packages the application into an artifact (e.g., a JAR file, a Docker image).

- Test: Automated tests, including unit tests, integration tests, and potentially end-to-end tests, are executed to ensure the quality of the build. Failure at this stage prevents the release.

- Release: If the tests pass, the artifact is released. This often involves tagging the code in the version control system and storing the artifact in a repository.

- Deploy: The artifact is deployed to a target environment (e.g., staging, production). This step may involve configuration management and orchestration tools.

- Monitor: After deployment, the application is monitored for performance and errors. This allows for rapid detection and resolution of issues.
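The gating behaviour of these stages, where a failure stops everything after it, can be sketched as a simple runner. The stage names mirror the list above, while the callables are placeholders that a real CI/CD system would replace with actual build, test, and deploy jobs.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order; stop at the first failure and return the stages that passed."""
    completed = []
    for name, step in stages:
        if not step():
            print(f"{name} failed; later stages skipped")
            break
        completed.append(name)
    return completed


pipeline = [
    ("build", lambda: True),
    ("test", lambda: False),  # simulated test failure
    ("release", lambda: True),
    ("deploy", lambda: True),
]
print(run_pipeline(pipeline))  # ['build']
```

Because the release and deploy steps never run after a failed test, a broken build cannot become a release.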

“A well-designed CI/CD pipeline can significantly reduce the time it takes to release new features, improve software quality, and increase team productivity.”

A commonly reported outcome of adopting CI/CD is higher deployment frequency. Organizations frequently report moving from monthly or quarterly releases to daily or even hourly releases. This faster feedback loop allows for quicker identification of problems and accelerates the delivery of value to users.

End of Discussion

In summary, the strict separation of Build, Release, and Run stages, as defined by Factor V, is a cornerstone of modern software development. By understanding the purpose and activities within each stage, automating processes, and implementing robust security measures, development teams can significantly improve their deployment frequency, lead time for changes, and mean time to recovery. Embracing these principles will not only enhance the development process but also contribute to delivering high-quality software with greater confidence.

Helpful Answers

What is the primary goal of separating the Build, Release, and Run stages?

The primary goal is to improve the efficiency, reliability, and security of the software development process by creating distinct and independent stages, each with specific responsibilities and activities.

How does strict separation impact deployment frequency?

Strict separation allows for more frequent and reliable deployments because changes in one stage are less likely to impact the others. This leads to a faster feedback loop and quicker delivery of new features.

What are the common tools used for automating the Build stage?

Popular tools include Jenkins, GitLab CI, GitHub Actions, and Travis CI, which automate tasks like compiling code, running tests, and creating artifacts.

How can I manage configurations for different environments?

Configuration management tools like Ansible, Chef, and Puppet can be used. Best practices include storing configurations separately from code, using environment variables, and templating configurations.

What are some key security considerations in the Run stage?

Key considerations include access control, regular security audits, vulnerability scanning, and robust monitoring and logging to detect and respond to security incidents.