Data lakes, vast repositories of raw data, are transforming how organizations analyze information and drive insights. However, with the immense value they hold, data lakes have become prime targets for cyberattacks and data breaches. Therefore, understanding how to secure a data lake environment is no longer optional; it’s a critical imperative for protecting sensitive information and maintaining regulatory compliance.

This guide delves into the multifaceted aspects of data lake security, from foundational principles to advanced techniques. We will explore the essential components of a robust security posture, including access control, encryption, network security, auditing, and compliance. Our aim is to equip you with the knowledge and strategies needed to build a secure and resilient data lake environment, safeguarding your valuable data assets.

Data Lake Security Fundamentals

Securing a data lake environment is paramount for protecting sensitive information and ensuring data integrity. This involves implementing robust security measures across various aspects of the data lake architecture. The following sections detail the core security principles, data states, access control mechanisms, and encryption techniques essential for a secure data lake.

Core Security Principles

Several fundamental security principles underpin a secure data lake environment. These principles, when implemented collectively, create a strong defense against unauthorized access, data breaches, and other security threats.

- Confidentiality: Ensures that data is accessible only to authorized individuals or systems. This involves implementing access controls, encryption, and data masking techniques to protect sensitive information.

- Integrity: Guarantees the accuracy and consistency of data throughout its lifecycle. This is achieved through data validation, version control, and audit trails to detect and prevent data tampering or corruption.

- Availability: Ensures that data is accessible and usable when needed. This involves implementing redundancy, disaster recovery plans, and performance monitoring to maintain system uptime and data accessibility.

- Authentication: Verifies the identity of users or systems attempting to access the data lake. This involves using strong passwords, multi-factor authentication, and identity management systems.

- Authorization: Defines the level of access granted to authenticated users or systems. This is achieved through role-based access control (RBAC) and fine-grained permissions to restrict access to specific data or functionalities.

Data States and Security Considerations

Data within a data lake exists in various states, each requiring specific security considerations to ensure its protection. Understanding these states and their associated risks is crucial for designing effective security controls.

- Data at Rest: This refers to data stored within the data lake, such as in object storage (e.g., Amazon S3, Azure Data Lake Storage) or data warehouses. Security considerations include:

  - Encryption: Encrypting data at rest protects it from unauthorized access if the storage media is compromised. This can be achieved through server-side encryption or client-side encryption.

  - Access Control: Implementing strict access controls to restrict access to the storage location and data objects.

  - Data Masking and Anonymization: Masking or anonymizing sensitive data to reduce the risk of exposure in case of a breach.

- Data in Transit: This refers to data being transmitted between different components of the data lake or between the data lake and external systems. Security considerations include:

  - Encryption: Encrypting data in transit using protocols like TLS/SSL to protect it from eavesdropping and man-in-the-middle attacks.

  - Network Security: Implementing firewalls, intrusion detection systems, and other network security measures to protect data during transmission.

  - Secure Protocols: Using secure protocols such as HTTPS, SFTP, and SSH for data transfer.

- Data in Use: This refers to data being processed or accessed by applications or users within the data lake. Security considerations include:

  - Access Control: Implementing fine-grained access control to restrict data access based on user roles and permissions.

  - Data Masking and Tokenization: Masking or tokenizing sensitive data during processing to minimize exposure.

  - Auditing and Monitoring: Monitoring data access and usage to detect and respond to suspicious activity.

Access Control and Authorization

Access control and authorization are critical components of data lake security, ensuring that only authorized users and systems can access specific data and functionalities. Implementing robust access control mechanisms minimizes the risk of unauthorized access and data breaches.

- Role-Based Access Control (RBAC): RBAC assigns permissions based on user roles, simplifying access management and ensuring consistency. Users are assigned roles, and roles are granted permissions to access specific data or perform specific actions.

- Attribute-Based Access Control (ABAC): ABAC uses attributes of users, data, and the environment to determine access permissions. This provides a more flexible and granular approach to access control.

- Fine-Grained Permissions: Granting permissions at the object or column level to restrict access to specific data elements.

- Identity and Access Management (IAM): Integrating with an IAM system to manage user identities, authentication, and authorization centrally.

- Regular Auditing and Review: Regularly auditing access permissions and reviewing user access to ensure that they are appropriate and up-to-date.
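To make the difference between role-based and attribute-based decisions concrete, here is a minimal ABAC sketch. The attribute names (`classification`, `owner_department`, `from_corporate_network`) and the policy itself are assumptions chosen for illustration, not part of any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    role: str                     # user attribute
    department: str               # user attribute
    classification: str           # data attribute: "public" or "restricted"
    owner_department: str         # data attribute
    from_corporate_network: bool  # environment attribute


def abac_allows(req: Request) -> bool:
    """Hypothetical policy: anyone may read public data; restricted data is
    readable only by analysts in the owning department on the corporate network."""
    if req.classification == "public":
        return True
    return (
        req.role == "analyst"
        and req.department == req.owner_department
        and req.from_corporate_network
    )
```

Unlike a pure role check, the decision here changes with the data's classification and the caller's network location even when the user's role stays the same.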

Encryption in Securing Data

Encryption plays a crucial role in protecting data at rest and in transit, rendering it unreadable to unauthorized individuals. Implementing encryption throughout the data lake architecture significantly enhances data security.

- Encryption at Rest:

  - Server-Side Encryption: The storage provider encrypts the data at rest. Examples include Amazon S3 server-side encryption and Azure Storage Service Encryption.

  - Client-Side Encryption: Data is encrypted by the client before being stored in the data lake. This gives the client more control over the encryption keys.

  - Key Management: Securely managing encryption keys is critical. Consider using a key management service (KMS) to generate, store, and manage encryption keys.

- Encryption in Transit:

  - Transport Layer Security (TLS/SSL): Encrypts data in transit between the client and the data lake components.

  - Virtual Private Network (VPN): Creates a secure tunnel for data transmission over a public network.

  - Secure File Transfer Protocol (SFTP): A secure protocol for transferring files.

- Key Management Best Practices:

  - Key Rotation: Regularly rotating encryption keys to minimize the impact of a potential key compromise.

  - Key Storage: Securely storing encryption keys using a KMS or hardware security module (HSM).

  - Key Access Control: Implementing strict access controls to encryption keys to prevent unauthorized access.

Access Control and Identity Management

Effective access control and identity management are paramount for securing a data lake environment. They ensure that only authorized users and systems can access sensitive data, preventing unauthorized access, data breaches, and compliance violations. Implementing robust access controls, coupled with a well-defined identity management strategy, is a cornerstone of data lake security.

Implementing Role-Based Access Control (RBAC)

Role-Based Access Control (RBAC) simplifies access management by assigning permissions based on user roles rather than individual users. This approach streamlines administration, reduces errors, and improves security posture. RBAC implementation in a data lake environment involves several best practices:

- Define Roles Clearly: Identify and define distinct roles based on job functions and responsibilities within the organization. Examples include Data Engineers, Data Scientists, Analysts, and Auditors. Each role should reflect a specific set of responsibilities and data access requirements.

- Assign Permissions to Roles: Grant specific permissions to each role, such as read, write, execute, and delete, based on the data and resources the role requires access to. Ensure the principle of least privilege is applied, granting only the minimum necessary permissions for each role to perform its duties.

- Assign Users to Roles: Assign users to the appropriate roles based on their job functions. Regularly review and update role assignments as users’ responsibilities change.

- Implement RBAC across all Data Lake Components: Ensure RBAC is consistently applied across all components of the data lake, including storage, processing engines, and data governance tools. This ensures a unified access control model.

- Regularly Review and Audit Roles and Permissions: Conduct periodic reviews of roles, permissions, and user assignments to identify and address any potential security vulnerabilities or compliance issues. This includes removing access for users who no longer require it.
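The role, permission, and user assignments described above can be sketched as two lookup tables and one check. The role names, user names, and permission strings below are hypothetical; a real data lake would hold these in its IAM or governance layer rather than in application code.

```python
# Permissions are granted to roles, never directly to users.
ROLE_PERMISSIONS = {
    "data_engineer": {"read", "write", "execute", "delete"},
    "data_analyst": {"read", "execute"},
    "auditor": {"read_audit_logs"},
}

# Users are assigned to roles based on job function.
USER_ROLES = {
    "alice": {"data_engineer"},
    "bob": {"data_analyst"},
}


def is_allowed(user: str, action: str) -> bool:
    """Default deny: unknown users or roles get no permissions."""
    roles = USER_ROLES.get(user, set())
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Because the check defaults to deny, removing a user from `USER_ROLES` revokes all access in one step, which keeps periodic reviews simple.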

Using Identity Providers (IdPs) for Authentication and Authorization

Identity Providers (IdPs) play a crucial role in streamlining user authentication and authorization within a data lake environment. By integrating with an IdP, such as Microsoft Entra ID (formerly Azure Active Directory), Google Workspace, or Okta, the data lake can leverage existing user identities and authentication mechanisms, simplifying user management and enhancing security. The use of IdPs involves:

- Centralized Authentication: Users authenticate through the IdP, which verifies their identity using credentials like usernames and passwords, multi-factor authentication (MFA), or other authentication methods. This centralizes the authentication process, reducing the risk of credential theft and unauthorized access.

- Federated Authorization: Once authenticated, the IdP provides authorization information, such as group memberships or claims, to the data lake. The data lake then uses this information to determine the user’s access rights based on the defined RBAC model.

- Single Sign-On (SSO): IdPs enable Single Sign-On (SSO), allowing users to access multiple data lake components and applications with a single set of credentials. This improves user experience and reduces the need for users to manage multiple passwords.

- Simplified User Management: User accounts and their attributes are managed centrally within the IdP. This simplifies user provisioning, de-provisioning, and access control updates, reducing administrative overhead.

- Enhanced Security: IdPs often provide enhanced security features, such as MFA, password policies, and threat detection capabilities, which can strengthen the overall security posture of the data lake.
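Federated authorization usually comes down to translating the group claims an IdP issues into the data lake's own roles. The sketch below assumes the token has already been validated (signature, expiry, audience) and that the IdP puts group names in a `groups` claim; both the claim name and the group names are illustrative assumptions.

```python
# Hypothetical mapping from IdP group names to data lake roles.
GROUP_TO_ROLE = {
    "dl-engineers": "data_engineer",
    "dl-analysts": "data_analyst",
}


def roles_from_claims(claims: dict) -> set:
    """Map the (already validated) token's group claims to roles.
    Unknown groups are ignored, so they grant nothing."""
    groups = claims.get("groups", [])
    return {GROUP_TO_ROLE[g] for g in groups if g in GROUP_TO_ROLE}
```

Keeping the mapping in one place means de-provisioning a user in the IdP removes their data lake roles at the next sign-in without touching the data lake itself.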

Managing and Monitoring User Permissions

Effective management and monitoring of user permissions are essential for maintaining a secure data lake environment. This involves implementing processes and tools to track, control, and audit user access rights. Here are key considerations:

- Establish a Permission Management Workflow: Define a clear workflow for requesting, approving, and granting user permissions. This workflow should include documented procedures, approval processes, and audit trails.

- Utilize Access Control Lists (ACLs) and Policies: Implement ACLs and policies to control access to specific data objects and resources within the data lake. These policies should align with the defined RBAC model and grant permissions based on user roles.

- Regularly Review User Permissions: Conduct periodic reviews of user permissions to ensure that they are still appropriate and aligned with the user’s current job responsibilities. Revoke any unnecessary permissions.

- Monitor Access Logs: Implement comprehensive logging of all user access attempts and activities within the data lake. Analyze these logs to identify suspicious activity, potential security breaches, and unauthorized access attempts.

- Implement Alerting and Notifications: Configure alerts and notifications to be triggered when unusual or potentially malicious activity is detected, such as excessive failed login attempts or access to sensitive data by unauthorized users.

- Use Data Governance Tools: Leverage data governance tools to automate permission management, track data lineage, and monitor data access. These tools can help streamline access control processes and improve overall data security.

Designing a System for Regularly Reviewing and Auditing Access Privileges

A system for regularly reviewing and auditing access privileges is crucial for maintaining a secure and compliant data lake environment. This system should include scheduled reviews, automated reporting, and defined remediation processes. Here’s a suggested approach:

- Establish a Review Schedule: Define a regular schedule for reviewing access privileges, such as quarterly or semi-annually, based on the sensitivity of the data and the risk profile of the organization.

- Automate Access Reviews: Implement automated tools and processes to facilitate access reviews. This may include generating reports that list all users, their assigned roles, and their associated permissions.

- Involve Data Owners and Business Stakeholders: Engage data owners and business stakeholders in the access review process. They can provide valuable insights into the appropriateness of user permissions and identify any potential access control issues.

- Document Review Findings: Document the findings of each access review, including any identified issues, corrective actions taken, and the rationale behind any changes made.

- Implement a Remediation Process: Establish a clear process for addressing any identified access control issues. This may involve revoking unnecessary permissions, updating role assignments, or implementing additional security controls.

- Conduct Regular Audits: Conduct regular audits of access control processes to ensure that they are being followed correctly and that the system is functioning as intended.

- Use Automated Reporting: Configure automated reporting to track access privilege changes over time. This provides an audit trail and facilitates compliance reporting.
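One way to automate part of an access review is to flag grants that have not been exercised recently so that data owners can confirm or revoke them. The record layout (`user`, `role`, `last_used`) and the 90-day threshold below are assumptions for illustration.

```python
from datetime import date, timedelta


def stale_grants(grants, today, max_idle_days=90):
    """Return grants never used, or last used before the cutoff date."""
    cutoff = today - timedelta(days=max_idle_days)
    return [
        g for g in grants
        if g["last_used"] is None or g["last_used"] < cutoff
    ]


# Hypothetical export of current role assignments with last-use dates.
grants = [
    {"user": "alice", "role": "data_engineer", "last_used": date(2024, 5, 20)},
    {"user": "bob", "role": "data_analyst", "last_used": date(2023, 11, 2)},
    {"user": "carol", "role": "auditor", "last_used": None},
]
review_list = stale_grants(grants, today=date(2024, 6, 1))
```

The output is a candidate list for human review, not an automatic revocation; the remediation step still belongs to the documented process.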

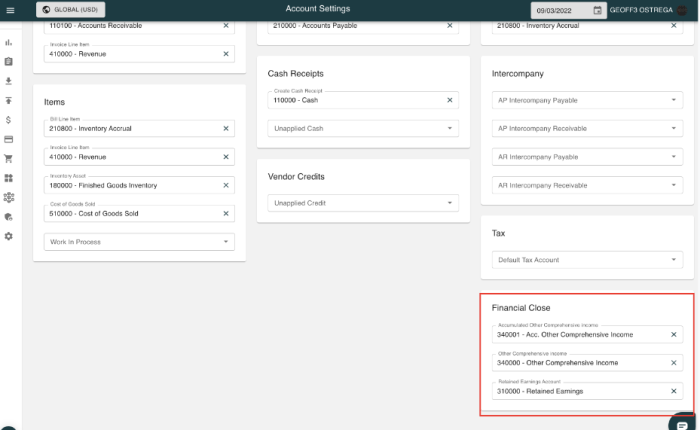

User Roles and Associated Permissions Table

The following table illustrates a sample of user roles and their associated permissions within a data lake environment. Note that the specific permissions and data access rights will vary depending on the organization’s specific needs and data classification.

| User Role | Description | Permissions | Data Access |
|---|---|---|---|
| Data Engineer | Responsible for building and maintaining the data lake infrastructure and data pipelines. | Create, read, write, execute, delete, manage storage, manage compute resources. | Access to all data for pipeline development and testing, access to infrastructure components. |
| Data Scientist | Responsible for analyzing data, building machine learning models, and generating insights. | Read, execute, access data processing tools. | Access to data for analysis and model development, potentially limited by data classification. |
| Data Analyst | Responsible for querying data, generating reports, and providing business insights. | Read, execute, access reporting tools. | Access to curated datasets and reports, access limited based on data classification and business need. |
| Auditor | Responsible for auditing data access and ensuring compliance with security policies. | Read access to audit logs, view data access policies. | Access to audit logs and metadata for compliance monitoring. |
| Data Steward | Responsible for data quality, data governance, and data catalog management. | Read, write (metadata), manage data catalog. | Access to metadata and data quality metrics, potential access to data based on data governance policies. |
| Security Administrator | Responsible for managing access control, user identities, and security configurations. | Manage users, manage roles, manage permissions, access security logs. | Access to security configurations, user management tools, and security logs. |

Network Security for Data Lakes

Network security is a crucial component of a robust data lake environment, providing the first line of defense against unauthorized access and potential data breaches. Implementing comprehensive network security measures ensures that data within the lake remains protected from external threats and internal vulnerabilities. This section focuses on network segmentation, firewall configurations, secure protocols, and remote access strategies to fortify your data lake.

Network Segmentation Strategies

Network segmentation divides the data lake’s network into isolated segments, limiting the impact of a security breach. This approach restricts lateral movement within the network, preventing attackers from easily accessing all data and resources. Here are several network segmentation strategies for data lakes:

- VLANs (Virtual LANs): VLANs logically separate network traffic, allowing administrators to group devices and resources based on function or security requirements. For example, you could create separate VLANs for data ingestion, processing, and storage.

- Subnetting: Dividing the network into subnets creates logical boundaries, limiting broadcast domains and controlling traffic flow. Each subnet can be configured with specific access controls and security policies.

- Security Zones: Security zones categorize network segments based on their sensitivity and the level of trust associated with them. Common zones include a public zone (for external access), a processing zone, and a secure data storage zone.

- Micro-segmentation: This advanced approach isolates individual workloads or applications, providing granular control over network traffic. It minimizes the attack surface by allowing only necessary communication between specific components.
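A segmentation plan can be checked mechanically before it is rolled out. The sketch below uses Python's standard `ipaddress` module with three hypothetical subnets (the CIDR ranges and zone names are assumptions) to confirm that zones do not overlap and to look up which zone an address belongs to.

```python
import ipaddress

# Hypothetical zone plan: one subnet per data lake function.
ZONES = {
    "ingestion": ipaddress.ip_network("10.0.1.0/24"),
    "processing": ipaddress.ip_network("10.0.2.0/24"),
    "storage": ipaddress.ip_network("10.0.3.0/24"),
}


def zone_of(ip: str):
    """Return the zone name containing this address, or None."""
    addr = ipaddress.ip_address(ip)
    for name, network in ZONES.items():
        if addr in network:
            return name
    return None


def zones_overlap() -> bool:
    """True if any two zone subnets share addresses (a planning error)."""
    nets = list(ZONES.values())
    return any(a.overlaps(b) for i, a in enumerate(nets) for b in nets[i + 1:])
```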

Firewall Configurations for Securing Data Lake Access

Firewalls act as gatekeepers, controlling network traffic based on predefined rules. Properly configured firewalls are essential for protecting the data lake from unauthorized access. Firewall configurations should include:

- Ingress and Egress Filtering: Implement rules to allow only necessary inbound and outbound traffic. This prevents unauthorized connections and limits the potential for data exfiltration.

- Stateful Inspection: Enable stateful inspection to track the state of network connections and allow only legitimate traffic to pass.

- Application-Layer Filtering: Configure firewalls to inspect traffic at the application layer, identifying and blocking malicious activity, such as SQL injection attempts.

- Intrusion Detection and Prevention Systems (IDS/IPS): Integrate IDS/IPS to detect and block suspicious network activity, providing an additional layer of security.

- Regular Rule Reviews: Regularly review and update firewall rules to ensure they remain effective and aligned with evolving security threats.

For example, consider a data lake deployed on a cloud platform. The firewall configuration might involve:

- Allowing inbound traffic only from specific IP addresses or CIDR ranges representing trusted sources, such as internal users or authorized partners.

- Blocking all other inbound traffic by default.

- Allowing outbound traffic only to necessary destinations, such as external APIs or data sources.

- Implementing application-layer filtering to block potentially malicious requests, such as those containing suspicious payloads.
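The allowlist-plus-default-deny logic in this example can be sketched as a small rule evaluator. This only models the decision; real enforcement happens in the cloud provider's firewall or security groups. The CIDR ranges and ports are placeholders (203.0.113.0/24 is a documentation range standing in for a trusted partner).

```python
import ipaddress

# Hypothetical ingress allowlist: (source network, destination port).
ALLOWED_INGRESS = [
    (ipaddress.ip_network("10.0.0.0/8"), 443),      # internal users, HTTPS
    (ipaddress.ip_network("10.0.0.0/8"), 22),       # internal admins, SSH
    (ipaddress.ip_network("203.0.113.0/24"), 443),  # authorized partner, HTTPS
]


def ingress_allowed(src_ip: str, dst_port: int) -> bool:
    """Allow only traffic matching a rule; everything else is denied."""
    src = ipaddress.ip_address(src_ip)
    return any(src in net and dst_port == port for net, port in ALLOWED_INGRESS)
```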

Implementing Secure Network Protocols

Secure network protocols encrypt data in transit, protecting it from eavesdropping and tampering. Implementing these protocols is critical for securing data lake communications. Here are essential secure network protocols:

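- TLS/SSL (Transport Layer Security/Secure Sockets Layer): Use TLS to encrypt communication between clients and the data lake, including web applications, APIs, and data transfer protocols. SSL is the deprecated predecessor of TLS; although the name "SSL" is still widely used, the SSL protocol versions themselves should be disabled.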

- HTTPS (Hypertext Transfer Protocol Secure): Employ HTTPS for all web-based access to the data lake, ensuring data is encrypted during transit.

- SFTP (Secure File Transfer Protocol): Utilize SFTP for secure file transfers, encrypting data and authenticating users.

- SSH (Secure Shell): Employ SSH for secure remote access to data lake servers, encrypting the communication channel.

For instance, when accessing a data lake through a web browser, ensure that the connection uses HTTPS. The browser will display a padlock icon in the address bar, indicating a secure, encrypted connection. This protects sensitive data, such as user credentials and data queries, from being intercepted.
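For programmatic clients, the same guarantee can be enforced in code. As a minimal sketch using Python's standard `ssl` module, the context below verifies server certificates and hostnames and refuses anything older than TLS 1.2; the choice of TLS 1.2 as the floor is an assumption that should follow your own policy.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Client-side TLS context: certificate and hostname verification on,
    protocol versions below TLS 1.2 rejected."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The returned context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=strict_tls_context())`.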

Secure Tunnels for Remote Data Lake Access

VPNs (Virtual Private Networks) and other secure tunnels provide a secure, encrypted connection for remote users accessing the data lake. This is particularly important for users who need to access the data lake from outside the organization’s network. Here are methods for secure remote access:

- VPN (Virtual Private Network): VPNs create an encrypted tunnel between a remote user’s device and the data lake network, protecting data in transit.

- Site-to-Site VPN: This connects two networks securely, allowing resources to be shared between them.

- SSH Tunneling: SSH can be used to create secure tunnels for specific applications or services, encrypting traffic through an SSH connection.

- Remote Desktop Protocol (RDP) with Security: If using RDP, ensure it’s configured with strong encryption and authentication. Consider using a VPN to secure the RDP connection.

For example, an employee working remotely could use a VPN client to connect to the data lake network. All traffic between the employee’s device and the data lake would be encrypted, ensuring secure access to data and resources.

Network Security Measures for Data Lakes

Implementing a layered approach to network security provides comprehensive protection for data lakes. Here are the network security measures:

- Network Segmentation using VLANs, subnets, and security zones.

- Firewall configurations with ingress/egress filtering, stateful inspection, and application-layer filtering.

- Intrusion Detection and Prevention Systems (IDS/IPS) to monitor and block suspicious activity.

- Secure network protocols, including TLS/SSL, HTTPS, SFTP, and SSH.

- VPNs and secure tunnels for remote access.

- Regular security audits and vulnerability assessments to identify and address weaknesses.

- Monitoring network traffic and logs to detect and respond to security incidents.

Encryption and Data Protection

Protecting sensitive data within a data lake environment is paramount. Encryption and data protection mechanisms are crucial for maintaining confidentiality, integrity, and compliance with regulatory requirements. This section explores various methods to secure data at rest and in transit, manage encryption keys, implement data masking and anonymization, and utilize data loss prevention tools.

Encryption Methods for Data at Rest and in Transit

Data encryption is a fundamental aspect of data lake security, ensuring that data remains unreadable to unauthorized individuals. Encryption methods are applied to data both when it is stored (at rest) and when it is moving between locations (in transit).

- Data at Rest Encryption: This protects data stored within the data lake’s storage systems, such as object storage (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage) or file systems. The encryption can be implemented at the storage level, the file system level, or the application level.

  - Server-Side Encryption (SSE): The storage provider encrypts the data at rest using encryption keys managed by the provider. Examples include SSE-S3 for Amazon S3, Azure Storage Service Encryption for Azure Blob Storage, and the default Google-managed encryption in Google Cloud Storage. This method is relatively simple to implement.

  - Client-Side Encryption (CSE): The data is encrypted by the client (e.g., application or user) before it is uploaded to the data lake. The client manages the encryption keys. This provides greater control over the encryption process.

- Data in Transit Encryption: This protects data as it moves between the data lake and other systems or users. This is typically achieved through secure communication protocols.

  - Transport Layer Security (TLS/SSL): TLS/SSL encrypts the communication channel between the client and the data lake services (e.g., API calls, web interfaces). This ensures that data transmitted over the network is protected from eavesdropping and tampering.

  - IPsec: Internet Protocol Security (IPsec) can be used to encrypt network traffic at the IP layer. It provides secure communication between networks or hosts, often used for site-to-site VPN connections to the data lake.

Secure Management of Encryption Keys

Secure key management is critical to the overall security of the data lake. Compromised keys can render encryption ineffective. The following best practices are essential for managing encryption keys.

- Key Generation and Storage: Generate strong, random encryption keys using a cryptographically secure random number generator (CSPRNG). Store keys securely in a hardware security module (HSM) or a cloud-based key management service (KMS). Never store keys directly in the application code or configuration files.

- Key Rotation: Regularly rotate encryption keys to limit the impact of a potential key compromise. Establish a key rotation schedule based on industry best practices and regulatory requirements.

- Access Control: Implement strict access control policies to restrict access to encryption keys. Only authorized personnel should have access to the keys. Use role-based access control (RBAC) to manage key access.

- Auditing and Monitoring: Audit all key management operations, including key creation, rotation, and access. Monitor key usage for any suspicious activity. Implement alerts to notify security teams of potential key compromise.

- Key Destruction: Implement a secure key destruction process when keys are no longer needed. This should include securely wiping the keys from all storage locations.
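Two of these practices, CSPRNG-based key generation and scheduled rotation, are easy to illustrate with the standard library. This is only a sketch: in production the key material would normally be generated and held inside a KMS or HSM rather than in application memory, and the 365-day rotation period is an assumed policy value.

```python
import secrets
from datetime import date, timedelta


def generate_key(num_bytes: int = 32) -> bytes:
    """Generate key material from the OS CSPRNG (32 bytes = 256 bits)."""
    return secrets.token_bytes(num_bytes)


def rotation_due(created: date, today: date, max_age_days: int = 365) -> bool:
    """True once the key has reached the maximum allowed age."""
    return today - created >= timedelta(days=max_age_days)
```

Note the use of `secrets` rather than `random`: the latter is not suitable for cryptographic keys.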

Data Masking and Anonymization Techniques

Data masking and anonymization are techniques used to protect sensitive data by obfuscating or removing it, thereby reducing the risk of data breaches while still enabling data analysis and testing.

- Data Masking: This involves replacing sensitive data with realistic but fictitious values. Masking techniques can be applied to various data types, such as names, addresses, and credit card numbers.

  - Substitution: Replacing actual values with similar but fictitious ones. For example, replacing a name with another name.

  - Shuffling: Randomly reordering the values within a column.

  - Redaction: Removing portions of data. For example, partially masking a credit card number (e.g., XXXX-XXXX-XXXX-1234).

  - Data Generation: Generating synthetic data to replace the original values.

- Data Anonymization: This involves removing or altering data so that the original data subject can no longer be identified.

  - Pseudonymization: Replacing identifying information with pseudonyms (e.g., unique identifiers). Because the original data can be linked back to the identifiers using a key, pseudonymized data remains re-identifiable, and the key must be securely managed.

  - Generalization: Replacing specific values with broader categories. For example, replacing a specific age with an age range.

  - Suppression: Removing specific data points.

  - Aggregation: Combining data into groups or summaries to hide individual data points.
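Several of these techniques fit in a few lines each. The functions below are illustrative sketches, not a vetted masking library. In particular, the pseudonymization shown is one possible approach (a keyed HMAC, so the same input and key always yield the same pseudonym and records stay joinable), and the function names are hypothetical.

```python
import hashlib
import hmac
import random


def redact_card(number: str) -> str:
    """Redaction: keep only the last four digits."""
    digits = [c for c in number if c.isdigit()]
    return "XXXX-XXXX-XXXX-" + "".join(digits[-4:])


def pseudonymize(value: str, key: bytes) -> str:
    """Pseudonymization via keyed hash; the key must be protected."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def generalize_age(age: int, width: int = 10) -> str:
    """Generalization: replace an exact age with a range."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def shuffle_column(values, seed=None):
    """Shuffling: reorder a column's values without changing the set."""
    shuffled = list(values)
    random.Random(seed).shuffle(shuffled)
    return shuffled
```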

Use of Data Loss Prevention (DLP) Tools

Data Loss Prevention (DLP) tools are designed to monitor, detect, and prevent the unauthorized movement of sensitive data. Implementing DLP in a data lake environment helps to identify and mitigate data exfiltration risks.

- Data Discovery and Classification: DLP tools scan the data lake to identify and classify sensitive data based on predefined rules, regular expressions, and data dictionaries.

- Policy Enforcement: DLP tools enforce security policies to prevent data leakage. These policies can include blocking the transfer of sensitive data outside the data lake or encrypting data before it is moved.

- Monitoring and Alerting: DLP tools monitor data activity and generate alerts when suspicious activity is detected. This can include unauthorized access attempts, data downloads, and data transfers to untrusted locations.

- Integration with Other Security Tools: DLP tools can integrate with other security tools, such as SIEM (Security Information and Event Management) systems and incident response platforms, to provide a comprehensive security posture.
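The discovery and classification step is typically built from pattern matching plus validation to cut false positives. As a rough sketch of that idea (the regular expressions are deliberately simple and the label names are assumptions), the classifier below tags text containing email addresses or card-like numbers that pass the Luhn checksum.

```python
import re

CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(text: str) -> set:
    """Return the set of sensitive-data labels found in the text."""
    labels = set()
    if EMAIL_RE.search(text):
        labels.add("email")
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            labels.add("payment_card")
    return labels
```

A policy engine could then block or encrypt any object whose label set is non-empty before it leaves the data lake.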

Comparison of Encryption Algorithms

Different encryption algorithms offer varying levels of security and performance. Selecting the appropriate algorithm depends on the specific security requirements, performance considerations, and regulatory compliance needs.

| Algorithm | Type | Strengths | Weaknesses |
|---|---|---|---|
| AES (Advanced Encryption Standard) | Symmetric | High security, widely adopted, fast performance, available in multiple key lengths (128, 192, 256 bits) | Requires secure key exchange; key management is critical. |
| Triple DES (3DES) | Symmetric | Widely supported in legacy systems, relatively simple to implement. | Slow performance; meet-in-the-middle attacks reduce its effective key strength to about 112 bits, and its 64-bit block size is weak against modern attacks on large data volumes. Deprecated by NIST and not recommended for new systems. |
| RSA (Rivest-Shamir-Adleman) | Asymmetric | Public key cryptography, supports digital signatures, used for key exchange. | Slower performance compared to symmetric algorithms, requires careful key size selection to maintain security. |
| ECC (Elliptic Curve Cryptography) | Asymmetric | Offers strong security with smaller key sizes compared to RSA, suitable for resource-constrained environments. | More complex to implement than RSA, requires careful selection of curve parameters. |
| SHA-256 (Secure Hash Algorithm 256-bit) | Hashing | Widely used for data integrity verification, one-way function, resistant to collision attacks. | Not an encryption algorithm: it provides integrity verification, not confidentiality. |
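To illustrate the integrity-only role of SHA-256 in the last row, the sketch below computes a digest for an object and later verifies it. The function names are hypothetical; where the expected digest is stored (for example, in object metadata or a catalog) is left as an assumption.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex digest of the data; identical input always gives the same digest."""
    return hashlib.sha256(data).hexdigest()


def verify_integrity(data: bytes, expected_hex: str) -> bool:
    """Compare digests in constant time to detect tampering or corruption."""
    return hmac.compare_digest(sha256_hex(data), expected_hex)
```

A plain hash detects accidental corruption, but anyone who can modify the data can also recompute the hash, so the stored digest needs its own protection (or a keyed construction such as HMAC).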

Auditing and Monitoring

Auditing and monitoring are crucial for maintaining the security posture of a data lake. They provide visibility into activities, enable the detection of anomalies, and facilitate incident response. A robust auditing and monitoring strategy helps organizations meet compliance requirements, identify potential threats, and ensure the integrity of the data stored within the data lake environment.

Key Data Lake Activities for Auditing

Auditing specific activities within a data lake is essential for comprehensive security. This helps in tracking user behavior, identifying potential security breaches, and ensuring compliance with regulatory requirements.

- Data Access and Modification: Track all data access attempts, including successful and failed logins, data reads, data writes, and data modifications. Log the user identity, timestamp, data accessed, and the operation performed. For example, log all SELECT, INSERT, UPDATE, and DELETE statements executed against the data lake.

- Data Ingestion: Monitor the process of data ingestion to ensure data integrity and identify potential malicious uploads. This includes logging the source of the data, the user who initiated the ingestion, the time of ingestion, and any transformations applied. For instance, if a data lake ingests data from a third-party vendor, auditing this process is critical.

- Configuration Changes: Audit all configuration changes made to the data lake environment, including changes to access control lists (ACLs), security policies, and network settings. Record the user who made the change, the time of the change, and the specific modifications made. This allows for quick identification of unauthorized changes.

- User and Role Management: Track user account creation, modification, and deletion activities, as well as changes to user roles and permissions. This includes logging the user who performed the action, the affected user or role, and the time of the action. For example, audit any changes to user privileges that could inadvertently grant access to sensitive data.

- System Events: Monitor system-level events, such as server restarts, software updates, and security-related events (e.g., firewall rule changes). This provides insights into the overall health and security of the data lake infrastructure.

- Security Policy Violations: Log any instances where security policies are violated, such as unauthorized access attempts, failed login attempts, or attempts to access restricted data. This information is crucial for detecting and responding to security incidents.
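
As a minimal sketch of the fields listed above (user identity, timestamp, data accessed, operation), an audit record could be written as one JSON line per event. The `AuditEvent` class, its field names, and the sample values are hypothetical illustrations, not a schema taken from any particular data lake platform.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    """One audit record; all field names are illustrative assumptions."""
    user: str        # identity that performed the action
    operation: str   # e.g. "SELECT", "INSERT", "ACL_CHANGE"
    resource: str    # dataset, table, or object path that was touched
    success: bool    # False for denied or failed attempts
    timestamp: str   # ISO 8601 string in UTC


def make_event(user: str, operation: str, resource: str, success: bool) -> AuditEvent:
    now = datetime.now(timezone.utc).isoformat()
    return AuditEvent(user, operation, resource, success, now)


def to_log_line(event: AuditEvent) -> str:
    # sort_keys gives a stable field order, which simplifies downstream parsing
    return json.dumps(asdict(event), sort_keys=True)


event = make_event("alice", "SELECT", "sales.orders", True)
print(to_log_line(event))
```

Recording failed attempts with the same structure as successful ones keeps both searchable with a single query.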

Tools and Techniques for Monitoring Data Lake Security Events

Effective monitoring involves leveraging various tools and techniques to collect, analyze, and respond to security events within the data lake environment. The selection of tools should align with the specific needs and architecture of the data lake.

- Log Aggregation and Management: Utilize a centralized log management system to collect logs from various sources within the data lake. This includes logs from the data lake platform (e.g., Hadoop, Spark, cloud-based data lakes), operating systems, network devices, and security tools. Tools like Splunk, ELK Stack (Elasticsearch, Logstash, Kibana), and Sumo Logic are commonly used for log aggregation and analysis.

- Security Information and Event Management (SIEM): Implement a SIEM system to correlate security events from different sources, detect anomalies, and generate alerts. SIEM tools provide real-time visibility into security threats and enable faster incident response.

- Real-time Monitoring and Alerting: Set up real-time monitoring to detect suspicious activities as they occur. This involves configuring alerts based on predefined rules and thresholds. For example, an alert could be triggered when an unusual number of failed login attempts occur within a short period.

- Behavioral Analysis: Implement user and entity behavior analytics (UEBA) to establish a baseline of normal user behavior and identify deviations that may indicate a security threat. UEBA tools can detect insider threats, compromised accounts, and other malicious activities.

- Data Loss Prevention (DLP): Deploy DLP solutions to monitor data movement and prevent sensitive data from leaving the data lake. This involves scanning data for sensitive information and enforcing policies to prevent unauthorized data exfiltration.

- Network Intrusion Detection and Prevention Systems (IDS/IPS): Utilize IDS/IPS to monitor network traffic for malicious activity and prevent network-based attacks. This helps protect the data lake from external threats.

Implementing Security Information and Event Management (SIEM) Systems

Implementing a SIEM system is a critical step in enhancing the security posture of a data lake. It involves selecting a suitable SIEM solution, configuring data sources, establishing correlation rules, and integrating the SIEM with other security tools.

- SIEM Selection: Choose a SIEM solution that aligns with the data lake’s requirements, considering factors such as scalability, integration capabilities, and cost. Popular SIEM solutions include Splunk, IBM QRadar, and Microsoft Sentinel.

- Data Source Configuration: Configure the SIEM to collect logs from various data sources within the data lake, including the data lake platform, operating systems, network devices, and security tools. This may involve installing agents, configuring log forwarding, and establishing secure connections.

- Correlation Rule Development: Develop correlation rules to detect security threats and anomalies. These rules should be based on known attack patterns, security best practices, and the specific threats relevant to the data lake environment. For example, create a rule to detect multiple failed login attempts from the same IP address.

- Alerting and Reporting: Configure the SIEM to generate alerts when suspicious activities are detected. Customize the alerts to provide relevant information, such as the type of event, the affected user or resource, and the severity of the threat. Develop reports to track security events, monitor compliance, and identify trends.

- Integration with Other Tools: Integrate the SIEM with other security tools, such as incident response platforms and threat intelligence feeds, to streamline incident response and enhance threat detection capabilities.
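
The correlation rule mentioned above (multiple failed logins from the same IP address) can be sketched as a sliding-window counter. This is an illustration of the logic only, not the rule syntax of any SIEM product; the class name, threshold, and window length are assumptions, and timestamps are assumed to arrive in non-decreasing order.

```python
from collections import defaultdict, deque


class FailedLoginRule:
    """Flags an IP once it reaches `threshold` failures within `window_seconds`."""

    def __init__(self, threshold: int = 5, window_seconds: float = 60.0):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # ip -> timestamps of recent failures

    def observe(self, ip: str, timestamp: float) -> bool:
        """Record one failed login; return True if the rule fires."""
        recent = self._failures[ip]
        recent.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) >= self.threshold


rule = FailedLoginRule(threshold=3, window_seconds=60)
for t in (0.0, 10.0, 20.0):
    fired = rule.observe("203.0.113.7", t)
print(fired)
```

Keeping one deque per IP bounds memory to the failures inside the window, which matters when the rule runs against a high-volume log stream.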

Responding to and Investigating Security Incidents within the Data Lake

A well-defined incident response process is essential for effectively addressing security incidents within the data lake. This involves identifying, containing, eradicating, recovering from, and learning from security breaches.

- Incident Detection: Detect security incidents through real-time monitoring, SIEM alerts, and user reports.

- Incident Analysis and Validation: Investigate the incident to determine its scope, impact, and root cause. This may involve analyzing logs, examining system configurations, and interviewing affected users.

- Containment: Take steps to contain the incident and prevent further damage. This may involve isolating compromised systems, disabling compromised accounts, and blocking malicious network traffic.

- Eradication: Remove the root cause of the incident and eliminate any malicious software or artifacts. This may involve patching vulnerabilities, removing malware, and restoring compromised data.

- Recovery: Restore affected systems and data to a known good state. This may involve restoring data from backups, re-imaging compromised systems, and verifying data integrity.

- Post-Incident Activities: Conduct a post-incident review to identify lessons learned and improve the incident response process. This may involve updating security policies, strengthening security controls, and providing additional security training.

Steps for Setting Up an Auditing Process

Setting up an effective auditing process involves several key steps to ensure comprehensive coverage and efficient management of audit data. This structured approach helps maintain data lake security and compliance.

- Define Audit Objectives: Clearly define the objectives of the auditing process, such as meeting compliance requirements, detecting security breaches, and monitoring user activity.

- Identify Audit Scope: Determine the scope of the audit, including the data lake components, activities, and data sources to be audited.

- Select Audit Tools: Choose appropriate auditing tools based on the data lake platform, security requirements, and budget.

- Configure Audit Logging: Configure audit logging to capture relevant events and data. This includes specifying the data to be logged, the logging frequency, and the storage location.

- Establish Audit Retention Policies: Define retention policies for audit logs to comply with regulatory requirements and organizational policies. Consider the storage capacity and the need for long-term data analysis.

- Review and Analyze Audit Logs: Regularly review and analyze audit logs to identify security threats, anomalies, and policy violations. This may involve using SIEM tools, log analysis tools, and manual review.

- Implement Alerting and Reporting: Configure alerts to notify security teams of suspicious activities. Generate reports to track security events, monitor compliance, and identify trends.

- Establish Incident Response Procedures: Develop and document incident response procedures to address security incidents detected through auditing.

- Regularly Review and Update: Periodically review and update the auditing process to ensure its effectiveness and adapt to changes in the data lake environment and security threats.
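
To make the logging and retention steps above concrete, here is a small sketch using Python's standard `logging` module. The logger name, the 90-day retention value, and the temporary directory are assumptions chosen for illustration; a real deployment would write to a protected location and take its retention period from policy.

```python
import logging
import logging.handlers
import os
import tempfile


def build_audit_logger(log_dir: str, retention_days: int = 90) -> logging.Logger:
    """Return a logger that rotates daily and keeps `retention_days` old files."""
    logger = logging.getLogger("datalake.audit")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep audit records out of the application log
    if not logger.handlers:  # avoid attaching duplicate handlers on repeat calls
        handler = logging.handlers.TimedRotatingFileHandler(
            os.path.join(log_dir, "audit.log"),
            when="midnight",
            backupCount=retention_days,  # older rotated files are deleted
            utc=True,
        )
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(message)s")
        )
        logger.addHandler(handler)
    return logger


log_dir = tempfile.mkdtemp()  # stand-in for a protected log location
audit = build_audit_logger(log_dir)
audit.info("user=alice action=ACL_CHANGE target=sales.orders")
```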

Data Governance and Compliance

Data governance and compliance are critical components of a secure data lake environment. They establish the policies, processes, and controls necessary to manage data effectively and ensure adherence to legal and regulatory requirements. Without robust data governance, data lakes can become breeding grounds for security vulnerabilities, compliance violations, and data quality issues. This section explores the role of data governance, common compliance requirements, and best practices for managing data within a data lake, ensuring its security and integrity.

The Role of Data Governance in Securing a Data Lake

Data governance provides the framework for managing data assets throughout their lifecycle, from ingestion to disposal. It defines the roles, responsibilities, and processes for data management, ensuring data is accurate, consistent, and secure. Implementing a strong data governance program helps to mitigate risks and maintain compliance.

Key aspects of data governance in a data lake environment include:

- Data Ownership: Establishing clear ownership of data assets helps to define accountability for data quality, security, and compliance. Data owners are responsible for defining data policies, ensuring data is used appropriately, and managing access controls.

- Data Quality: Implementing data quality standards and processes helps to ensure the accuracy, completeness, and consistency of data. This includes data validation, cleansing, and monitoring to identify and correct errors.

- Metadata Management: Creating and maintaining metadata about data assets helps users understand the data’s meaning, structure, and usage. Metadata management also supports data discovery, lineage tracking, and impact analysis.

- Data Security: Data governance includes policies and procedures for data security, such as access control, encryption, and data masking. These measures protect sensitive data from unauthorized access and use.

- Data Lifecycle Management: Managing the data lifecycle includes defining policies for data retention, archival, and disposal. This ensures data is stored for the appropriate period and disposed of securely when no longer needed.

- Compliance: Data governance helps to ensure compliance with relevant regulations, such as GDPR and HIPAA. This includes implementing controls for data privacy, security, and breach notification.

Compliance Requirements Relevant to Data Lakes

Data lakes often store sensitive data, making them subject to various compliance regulations. Understanding and adhering to these requirements is essential to avoid legal penalties and maintain data integrity.

Common compliance requirements include:

- General Data Protection Regulation (GDPR): GDPR applies to organizations that collect, process, or store personal data of individuals within the European Union (EU). Key requirements include establishing a lawful basis for processing (consent is one such basis), honoring data subject rights (e.g., access, rectification, erasure), and implementing data security measures. For the most serious infringements, a company can be fined up to €20 million or 4% of its annual global turnover, whichever is higher. For example, in 2021, Amazon was fined €746 million by the Luxembourg data protection authority for GDPR violations.

- Health Insurance Portability and Accountability Act (HIPAA): HIPAA regulates the use and disclosure of protected health information (PHI) in the United States. Covered entities (e.g., healthcare providers, health plans) must implement administrative, physical, and technical safeguards to protect the confidentiality, integrity, and availability of PHI. Non-compliance can lead to significant financial penalties and criminal charges.

- California Consumer Privacy Act (CCPA) and California Privacy Rights Act (CPRA): These laws grant California residents specific rights regarding their personal information, including the right to know, the right to delete, and the right to opt-out of the sale of their personal information. Organizations must comply with these requirements if they do business in California and meet certain thresholds related to revenue, data volume, or data selling.

- Payment Card Industry Data Security Standard (PCI DSS): PCI DSS applies to any organization that processes, stores, or transmits payment card (cardholder) data. It sets standards for securing cardholder data, including requirements for network security, access control, and data encryption.

Best Practices for Data Classification and Labeling

Data classification and labeling are crucial for identifying and protecting sensitive data within a data lake. This involves categorizing data based on its sensitivity and assigning appropriate labels to guide security controls.

Best practices include:

- Define Data Sensitivity Levels: Establish a clear classification scheme that categorizes data based on its sensitivity (e.g., public, internal, confidential, restricted). Define the criteria for each level, such as the potential impact of unauthorized disclosure.

- Identify and Label Data: Apply labels to data assets based on their classification. This can be done manually, automatically using data scanning tools, or through a combination of both. Labels should be consistent and easily recognizable.

- Implement Data Governance Policies: Establish policies that govern the handling of data at each sensitivity level. These policies should address access controls, encryption, data masking, and data retention.

- Automate Data Discovery and Classification: Utilize data discovery and classification tools to automate the process of identifying and labeling sensitive data. These tools can scan data lakes for sensitive information, such as personally identifiable information (PII) or financial data, and automatically assign appropriate labels.

- Train Users: Educate users about data classification policies and their responsibilities for handling sensitive data. Training should cover the different sensitivity levels, labeling conventions, and appropriate security controls.
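
Automated classification, as described above, often starts with pattern matching. The sketch below assigns the most sensitive label whose pattern matches a piece of text. The two regular expressions, the label mapping, and the four-level ranking are deliberately simplistic assumptions; production classifiers need broader, validated rules.

```python
import re

# Illustrative patterns only; real classifiers need broader, validated rules.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical mapping from detected data type to sensitivity label.
LABEL_FOR = {"us_ssn": "restricted", "email": "confidential"}
RANK = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


def classify(text: str, default: str = "internal") -> str:
    """Return the most sensitive label whose pattern matches the text."""
    label = default
    for name, pattern in PATTERNS.items():
        if pattern.search(text) and RANK[LABEL_FOR[name]] > RANK[label]:
            label = LABEL_FOR[name]
    return label


print(classify("contact: jane@example.com"))
print(classify("ssn 123-45-6789, jane@example.com"))
print(classify("quarterly totals by region"))
```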

Implementing Data Retention and Disposal Policies

Data retention and disposal policies are essential for managing the lifecycle of data within a data lake. They ensure data is stored for the required period and securely disposed of when no longer needed, minimizing risk and maintaining compliance.

Key steps include:

- Define Retention Periods: Establish retention periods for different types of data based on legal, regulatory, and business requirements. These periods should be documented in a data retention policy.

- Automate Data Archiving: Implement automated processes for archiving data that is no longer actively used but needs to be retained for compliance or historical purposes. Archived data should be stored securely and accessible when needed.

- Secure Data Disposal: Define procedures for securely disposing of data when it reaches the end of its retention period. This should include data sanitization methods (e.g., data wiping, physical destruction of storage media) to prevent data breaches.

- Monitor and Audit: Regularly monitor and audit data retention and disposal processes to ensure compliance with policies and regulations. This includes verifying that data is being retained and disposed of correctly.

- Use Data Loss Prevention (DLP) Tools: Implement DLP tools to monitor and control the movement of sensitive data within the data lake and prevent unauthorized access or exfiltration. These tools can identify and block attempts to copy, share, or transmit sensitive data outside of the approved channels.
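
The retention periods described above can be enforced by a scheduled job that selects expired records for disposal. The following is a sketch under assumed values: the categories, the periods in `RETENTION_DAYS`, and the record tuples are all hypothetical, and actual periods must come from legal and regulatory requirements.

```python
from datetime import date, timedelta

# Hypothetical retention periods in days, keyed by data category.
RETENTION_DAYS = {"access_logs": 365, "transactions": 7 * 365, "temp_exports": 30}


def is_due_for_disposal(category: str, created: date, today: date) -> bool:
    """True once a record has outlived its category's retention period."""
    period = timedelta(days=RETENTION_DAYS[category])
    return today - created > period


def select_for_disposal(records, today: date):
    """Yield the IDs of records whose retention period has expired."""
    for record_id, category, created in records:
        if is_due_for_disposal(category, created, today):
            yield record_id


records = [
    ("r1", "temp_exports", date(2024, 1, 1)),
    ("r2", "access_logs", date(2024, 1, 1)),
]
print(list(select_for_disposal(records, today=date(2024, 3, 1))))
```

Passing `today` explicitly rather than reading the clock inside the function makes the selection reproducible, which helps when the disposal run itself has to be audited.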

Compliance Requirements and Associated Controls

The following table outlines common compliance requirements and associated controls relevant to data lakes. This table is not exhaustive, and specific controls may vary depending on the organization’s context and the specific regulations that apply.

| Compliance Requirement | Associated Controls |
|---|---|
| GDPR – Data Subject Rights | Data access and rectification processes, data erasure procedures, consent management mechanisms, data portability features. |
| GDPR – Data Security | Encryption of data at rest and in transit, access controls (least privilege), data masking and anonymization, regular security audits. |
| HIPAA – Privacy Rule | Policies and procedures for protecting PHI, patient consent mechanisms, business associate agreements, access controls. |
| HIPAA – Security Rule | Risk assessments, security awareness training, access controls, encryption, audit logs, data backup and recovery. |
| CCPA/CPRA – Data Subject Rights | Data access and deletion mechanisms, opt-out mechanisms for data sales, data minimization practices. |
| PCI DSS – Cardholder Data Security | Network segmentation, access controls, encryption of cardholder data, regular vulnerability scanning, secure configuration of systems. |

Data Lake Security Tools and Technologies

Securing a data lake requires a multifaceted approach, and a crucial element of this is the implementation of specialized security tools. These tools automate and streamline many security tasks, providing enhanced protection against threats and ensuring compliance with regulatory requirements. This section will delve into the leading security tools available for data lakes, their features, benefits, and integration strategies.

Leading Security Tools for Data Lakes

A variety of tools are available to address the specific security challenges of data lakes. These tools encompass various functionalities, from access control and data encryption to vulnerability scanning and threat detection. The choice of tools will depend on the specific needs and architecture of the data lake environment.

- Data Loss Prevention (DLP) Tools: DLP tools are designed to prevent sensitive data from leaving the data lake. They typically monitor data movement, identify sensitive data based on predefined rules or data classification, and enforce policies to prevent unauthorized access or exfiltration.

- Security Information and Event Management (SIEM) Systems: SIEM systems collect and analyze security-related events from various sources within the data lake environment. They provide real-time monitoring, threat detection, and incident response capabilities, helping security teams identify and respond to potential security breaches.

- Vulnerability Scanning Tools: These tools automatically scan the data lake infrastructure, including servers, applications, and network devices, for known vulnerabilities. They identify weaknesses that could be exploited by attackers and provide recommendations for remediation.

- Data Encryption Tools: Encryption tools are essential for protecting data at rest and in transit within the data lake. They use cryptographic algorithms to encrypt sensitive data, rendering it unreadable to unauthorized users.

- Access Control and Identity Management Tools: These tools manage user identities, roles, and permissions within the data lake. They enforce the principle of least privilege, ensuring that users only have access to the data and resources they need to perform their jobs.

- Data Catalog and Metadata Management Tools: While not strictly security tools, these tools play a vital role in security by providing visibility into the data stored in the data lake. They help identify sensitive data, track data lineage, and enforce data governance policies.

Features and Benefits of Each Tool

Each tool offers a unique set of features and benefits, contributing to the overall security posture of the data lake. Understanding these features is crucial for selecting the right tools for a given environment.

- DLP Tools: Features include data classification, policy enforcement, and data monitoring. Benefits include preventing data breaches, ensuring compliance with regulations, and protecting sensitive information. For example, a DLP tool can be configured to block the transfer of Personally Identifiable Information (PII) outside the data lake.

- SIEM Systems: Features include log aggregation, event correlation, threat detection, and incident response. Benefits include real-time monitoring, early threat detection, and faster incident response times. For instance, a SIEM system can detect suspicious activity, such as an unusual number of failed login attempts, and alert security teams.

- Vulnerability Scanning Tools: Features include vulnerability identification, reporting, and remediation recommendations. Benefits include proactive identification of security weaknesses, reduced attack surface, and improved security posture. An example is Nessus, a popular vulnerability scanner, which can identify misconfigurations and outdated software on servers within the data lake.

- Data Encryption Tools: Features include data encryption at rest and in transit, key management, and data masking. Benefits include protecting sensitive data from unauthorized access, ensuring data confidentiality, and complying with data privacy regulations. A common example is using encryption keys managed by a Key Management System (KMS) to encrypt data stored in cloud storage.

- Access Control and Identity Management Tools: Features include user authentication, authorization, role-based access control (RBAC), and multi-factor authentication (MFA). Benefits include controlling user access to data, enforcing the principle of least privilege, and reducing the risk of unauthorized data access.

- Data Catalog and Metadata Management Tools: Features include data discovery, data lineage tracking, and data classification. Benefits include improved data visibility, enhanced data governance, and better security. By knowing where sensitive data resides, security teams can focus their efforts on protecting the most critical assets.

Integrating Security Tools into the Data Lake Environment

Effective integration is critical to maximize the value of security tools within the data lake. This involves planning, configuration, and ongoing maintenance.

- Planning and Assessment: Start by assessing the existing security posture and identifying specific security requirements. Define the scope of each tool and how it will integrate with other components of the data lake.

- Tool Selection: Choose tools that are compatible with the data lake’s architecture, data formats, and cloud provider (if applicable). Consider factors such as scalability, performance, and integration capabilities.

- Configuration and Deployment: Configure each tool according to best practices and the specific security requirements. Deploy the tools in a phased approach to minimize disruption.

- Integration with Existing Systems: Integrate the security tools with existing security infrastructure, such as SIEM systems and monitoring tools. This enables centralized monitoring and incident response.

- Automation: Automate security tasks whenever possible, such as vulnerability scanning and data encryption. Automation reduces manual effort and improves efficiency.

- Training and Documentation: Provide training to security teams and other relevant personnel on how to use and maintain the security tools. Document the configuration, usage, and maintenance procedures.

- Monitoring and Maintenance: Continuously monitor the performance and effectiveness of the security tools. Regularly update the tools and adjust configurations as needed to address evolving threats.

Demonstrating the Use of Specific Tools for Vulnerability Scanning

Vulnerability scanning is a critical step in securing a data lake. Tools like Nessus can be used to identify and address security weaknesses. The process typically involves scanning the data lake infrastructure, analyzing the results, and remediating identified vulnerabilities.

Example:

1. Scanning

A vulnerability scanner, such as Nessus, is configured to scan the data lake’s infrastructure. The scanner identifies all the hosts in the environment and begins scanning them to identify potential vulnerabilities. The scanner’s configuration includes the IP ranges to scan and the credentials required to access the hosts.

2. Analysis

The vulnerability scanner generates a report detailing the vulnerabilities found. The report includes the vulnerability’s severity, description, and potential impact. The report also provides remediation recommendations.

3. Remediation

Based on the vulnerability report, security teams take steps to remediate the identified vulnerabilities. This may involve patching software, changing configurations, or implementing security controls.

4. Verification

After remediation, the vulnerability scanner is run again to verify that the vulnerabilities have been addressed.

The following is a simplified example using Nessus:

“Nessus is configured to scan the data lake’s compute instances. After the scan completes, Nessus generates a report. This report identifies a critical vulnerability: a missing security patch on a server. The report provides detailed information about the vulnerability and recommends installing the latest security patch. The security team installs the patch and then re-runs the Nessus scan to verify that the vulnerability is resolved. This process is repeated regularly to maintain the security posture of the data lake.”
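
The verification step can be sketched as a comparison of findings before and after remediation. The code below does not call any scanner API; it assumes the findings have already been exported into simple `(host, finding_id)` pairs, and the hosts and identifiers shown are made up for illustration.

```python
def compare_scans(before: set, after: set) -> dict:
    """Compare two sets of (host, finding_id) pairs from consecutive scans."""
    return {
        "resolved": before - after,   # present before, gone after remediation
        "remaining": before & after,  # still present, needs follow-up
        "new": after - before,        # introduced or newly detected
    }


before = {("10.0.1.5", "missing-patch-A"), ("10.0.1.9", "weak-tls-config")}
after = {("10.0.1.9", "weak-tls-config")}

result = compare_scans(before, after)
print(sorted(result["resolved"]))
print(sorted(result["remaining"]))
```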

Securing Data Ingestion and Processing

Data ingestion and processing are critical components of any data lake environment. These stages are particularly vulnerable to security threats because they involve the movement, transformation, and storage of data from various sources. Implementing robust security measures at this stage is crucial to protect sensitive information and maintain the integrity of the data lake. This section outlines the key security considerations, best practices, and implementation strategies for securing data ingestion and processing pipelines.

Security Considerations for Data Ingestion Pipelines

Data ingestion pipelines are the gateways through which data enters the data lake. Their security directly impacts the overall security posture of the data lake. Several security considerations must be addressed when designing and implementing these pipelines.

- Data Source Authentication and Authorization: Verify the identity of data sources and ensure they have the necessary permissions to ingest data. This prevents unauthorized access and data injection. For example, utilize secure APIs with authentication tokens or certificates to control access.

- Data Validation and Sanitization: Implement rigorous data validation to ensure data conforms to expected formats and ranges. Sanitize data to remove or neutralize malicious content, such as SQL injection attempts or cross-site scripting (XSS) attacks.

- Data Encryption: Encrypt data in transit with TLS (the successor to the now-deprecated SSL) and at rest using encryption keys managed by a secure key management system (KMS). This protects data confidentiality.

- Data Integrity Checks: Implement checksums or cryptographic hashes to verify data integrity during transmission and storage. This ensures data hasn’t been tampered with.

- Monitoring and Logging: Monitor data ingestion pipelines for suspicious activity, errors, and performance issues. Log all events for auditing and troubleshooting purposes. Utilize security information and event management (SIEM) systems for centralized log analysis.

- Pipeline Infrastructure Security: Secure the infrastructure supporting the ingestion pipelines, including servers, networks, and storage. This includes patching vulnerabilities, configuring firewalls, and implementing access controls.

- Compliance with Data Governance Policies: Ensure data ingestion pipelines comply with all relevant data governance policies, including data retention, data masking, and data lineage requirements.
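
The integrity check described above can be sketched with Python's standard `hashlib` and `hmac` modules. A plain SHA-256 checksum detects accidental or in-transit modification; the keyed HMAC variant additionally shows that the sender held a shared secret. The function names and the sample payload are illustrative assumptions.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def verify_checksum(data: bytes, expected_hex: str) -> bool:
    # compare_digest runs in constant time, avoiding timing side channels
    return hmac.compare_digest(sha256_hex(data), expected_hex)


def sign(data: bytes, key: bytes) -> str:
    """HMAC-SHA256 tag: unlike a plain hash, it also authenticates the sender."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


payload = b"id,amount\n1,100\n"
checksum = sha256_hex(payload)  # computed by the source before sending
print(verify_checksum(payload, checksum))                # unchanged payload
print(verify_checksum(payload + b"2,999\n", checksum))   # tampered payload
```

A checksum sent alongside the data only helps if an attacker cannot replace both, which is why the keyed `sign` variant is the safer choice for untrusted channels.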

Securing Data Processing Frameworks

Data processing frameworks, such as Apache Spark and Hadoop, are responsible for transforming and analyzing the data within the data lake. These frameworks require specific security measures to protect the data and the processing environment.

- Authentication and Authorization: Configure strong authentication mechanisms, such as Kerberos, to verify the identities of users and services accessing the framework. Implement role-based access control (RBAC) to limit access to data and resources based on user roles.

- Network Security: Isolate the data processing cluster within a secure network segment. Implement firewalls and intrusion detection systems (IDS) to monitor and control network traffic.

- Data Encryption: Encrypt data in transit between nodes within the cluster using TLS. Encrypt data at rest within the storage layer, such as HDFS, using encryption keys managed by a KMS.

- Secure Configuration: Harden the configuration of the data processing framework by disabling unnecessary services, regularly patching security vulnerabilities, and implementing secure configuration settings.

- Resource Management: Implement resource management and isolation mechanisms, such as YARN in Hadoop, to prevent resource exhaustion attacks.

- Monitoring and Auditing: Monitor the data processing framework for suspicious activity, errors, and performance issues. Log all events for auditing and compliance purposes.

- Data Masking and Anonymization: Implement data masking or anonymization techniques within the processing jobs to protect sensitive data during analysis and transformation.

Best Practices for Validating and Sanitizing Data During Ingestion

Data validation and sanitization are critical steps in protecting the data lake from malicious data and ensuring data quality.

- Define Data Schemas: Establish clear data schemas that define the expected format, data types, and ranges for incoming data.

- Input Validation: Validate all incoming data against the defined schemas. Reject or quarantine data that fails validation.

- Data Type Validation: Verify that data conforms to the expected data types (e.g., integers, strings, dates).

- Range Validation: Ensure numerical data falls within acceptable ranges.

- Format Validation: Verify data formats, such as email addresses, phone numbers, and dates, against predefined patterns.

- Sanitization: Remove or neutralize potentially harmful content from the data. This includes:

  - HTML Tag Removal: Strip or encode HTML tags to prevent cross-site scripting (XSS) attacks.

  - SQL Injection Prevention: Escape or parameterize user inputs to prevent SQL injection attacks.

  - Malicious Code Removal: Identify and remove malicious code, such as JavaScript or shell commands.

- Regular Expressions: Use regular expressions to validate and sanitize data against complex patterns.

- Logging and Alerting: Log all validation and sanitization events. Configure alerts to notify security teams of suspicious activity or data validation failures.
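
The practices above can be combined in a short sketch: type, range, and format validation, HTML encoding, and a parameterized insert. The record fields, the age bounds, the email pattern, and the in-memory SQLite table are assumptions chosen to keep the example self-contained.

```python
import html
import re
import sqlite3

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def validate(record: dict) -> list:
    """Return a list of validation errors; an empty list means the record passes."""
    errors = []
    if not isinstance(record.get("user_id"), int):
        errors.append("user_id must be an integer")
    age = record.get("age")
    if not isinstance(age, int) or not 0 <= age <= 130:
        errors.append("age must be an integer between 0 and 130")
    email = record.get("email")
    if not isinstance(email, str) or not EMAIL_RE.fullmatch(email):
        errors.append("email has an invalid format")
    return errors


def sanitize(text: str) -> str:
    # Encode HTML metacharacters so the text renders inertly downstream.
    return html.escape(text)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (user_id INTEGER, email TEXT, note TEXT)")

record = {"user_id": 1, "age": 42, "email": "a@example.com",
          "note": "<script>alert(1)</script>"}
if not validate(record):
    # Placeholders keep values out of the SQL text, preventing injection.
    conn.execute("INSERT INTO users VALUES (?, ?, ?)",
                 (record["user_id"], record["email"], sanitize(record["note"])))
print(conn.execute("SELECT note FROM users").fetchone()[0])
```

Records that fail `validate` are simply skipped here; in a pipeline they would be routed to a quarantine location and logged.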

Implementing Security Measures Within Data Processing Jobs

Security measures should be integrated into the data processing jobs themselves to protect data during transformation and analysis.

- Secure Coding Practices: Follow secure coding practices when developing data processing jobs. This includes:

  - Input Validation: Validate all inputs to prevent injection attacks.

  - Output Encoding: Encode all outputs to prevent cross-site scripting (XSS) attacks.

  - Principle of Least Privilege: Grant jobs only the necessary permissions to access data and resources.

- Data Masking and Anonymization: Implement data masking or anonymization techniques to protect sensitive data during processing. This includes techniques like:

  - Data Masking: Replacing sensitive data with masked values.

  - Data Redaction: Removing sensitive data from the dataset.

  - Data Pseudonymization: Replacing sensitive data with pseudonyms.

- Encryption: Encrypt data in transit between processing nodes and at rest within intermediate storage.

- Access Control: Enforce access control policies within the processing jobs to restrict access to sensitive data.

- Audit Logging: Implement detailed audit logging to track all data access and processing events.

- Secure Libraries and Dependencies: Use secure, up-to-date libraries and dependencies in the processing jobs. Regularly scan for and address vulnerabilities.
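
To make the difference between masking, redaction, and pseudonymization concrete, the following sketch shows one possible form of each. The function names and record layout are hypothetical, and the keyed hash (HMAC-SHA256) is only one of several ways to derive pseudonyms; in practice the key would come from a secrets manager rather than being passed around in code.

```python
import hashlib
import hmac


def mask_card_number(card_number):
    """Masking: hide all but the last four digits."""
    digits = [c for c in card_number if c.isdigit()]
    if len(digits) <= 4:
        # Too short to reveal anything safely, so mask everything.
        return "*" * len(digits)
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


def redact(record, sensitive_fields):
    """Redaction: drop sensitive fields from the record entirely."""
    return {k: v for k, v in record.items() if k not in sensitive_fields}


def pseudonymize(value, secret_key):
    """Pseudonymization: a keyed hash maps the same input to the same pseudonym."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
```

Because the pseudonym is stable for a given key, records can still be joined on the pseudonymized field, while anyone without the key cannot recompute the mapping from candidate values.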

Best Practices Summary

The following table summarizes the best practices for securing data ingestion and processing.

| Area | Best Practice | Description |
|---|---|---|
| Data Source Authentication | Secure Authentication Mechanisms | Use strong authentication methods (e.g., certificates, tokens) to verify the identity of data sources. |
| Data Validation | Schema Validation | Validate data against predefined schemas to ensure data integrity and format compliance. |
| Data Sanitization | Input Sanitization | Sanitize data to remove or neutralize malicious content (e.g., SQL injection, XSS). |
| Data Encryption | Encryption in Transit and at Rest | Encrypt data in transit (TLS/SSL) and at rest (KMS managed keys) to protect confidentiality. |
| Network Security | Network Segmentation | Isolate data processing clusters within secure network segments and use firewalls. |
| Access Control | Role-Based Access Control (RBAC) | Implement RBAC to limit access to data and resources based on user roles. |
| Monitoring and Logging | Comprehensive Monitoring and Logging | Monitor all activities, log all events, and use SIEM systems for centralized log analysis. |
| Secure Configuration | Hardening Data Processing Frameworks | Harden the configuration of data processing frameworks by disabling unnecessary services and applying security patches. |
| Data Governance | Compliance with Data Governance Policies | Ensure data ingestion and processing pipelines comply with all relevant data governance policies, including data retention, masking, and lineage. |

Physical Security and Infrastructure

Securing the physical infrastructure that underpins a data lake is crucial for maintaining data integrity, availability, and confidentiality. Physical security encompasses the measures taken to protect the hardware, facilities, and supporting infrastructure from unauthorized access, damage, and environmental hazards. This layer of security is often overlooked but forms the foundation upon which all other security controls are built.

Securing the Underlying Cloud or On-Premise Infrastructure

Protecting the physical infrastructure involves a multifaceted approach that considers both cloud and on-premise environments. The specific measures employed will vary depending on the deployment model, but the underlying principles remain consistent.

For cloud environments:

- Data Center Security: Cloud providers invest heavily in physical security for their data centers. This includes multi-factor authentication, biometric scanners, surveillance systems, and stringent access controls. These measures are designed to prevent unauthorized physical access to servers and storage devices.

- Geographic Redundancy: Leveraging multiple availability zones or regions provides geographic redundancy. In the event of a disaster affecting one location, data and services can be automatically failed over to another, ensuring business continuity. For example, AWS offers availability zones within a region and regions spread across the globe.

- Compliance Certifications: Ensure the cloud provider holds relevant certifications (e.g., ISO 27001, SOC 2) that validate their adherence to industry-standard security practices. These certifications provide an independent assessment of their security posture.

- Vendor Management: Carefully vet and manage third-party vendors who may have access to the cloud environment. Implement strong access controls and regularly audit their activities.

For on-premise environments:

- Controlled Access: Implement strict access controls to data center facilities, including physical barriers (e.g., fences, security gates), access badges, and visitor logs.

- Surveillance Systems: Deploy video surveillance systems with 24/7 monitoring to deter unauthorized access and record any incidents.

- Environmental Controls: Maintain appropriate temperature, humidity, and fire suppression systems to protect hardware from environmental hazards.

- Power and Cooling: Implement redundant power supplies (e.g., UPS systems) and cooling systems to ensure continuous operation in the event of a power outage or equipment failure.

- Server Room Security: Secure server rooms with locked doors, restricted access, and environmental monitoring systems.

Best Practices for Disaster Recovery and Business Continuity Planning

A robust disaster recovery (DR) and business continuity (BC) plan is essential for minimizing downtime and data loss when a disruption occurs. This plan should cover a range of potential threats, including natural disasters, human error, and cyberattacks.

- Risk Assessment: Conduct a comprehensive risk assessment to identify potential threats and vulnerabilities that could impact the data lake.

- Recovery Objectives: Define Recovery Time Objectives (RTOs) and Recovery Point Objectives (RPOs). The RTO is the maximum acceptable downtime after a disaster. The RPO is the maximum acceptable data loss in the event of a disaster, usually expressed as a window of time.

- Data Backup and Replication: Implement a robust data backup and replication strategy. This includes regular backups of data to a geographically separate location and replicating critical data in real time or near real time.

- Failover Procedures: Document and test failover procedures to ensure that data and services can be quickly and efficiently restored in the event of a disaster.

- Regular Testing: Regularly test the DR/BC plan to validate its effectiveness and identify any weaknesses.

- Communication Plan: Establish a clear communication plan to keep stakeholders informed during a disaster.

- Training: Train personnel on the DR/BC plan and their roles and responsibilities.
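
When testing the plan, the recovery objectives can be checked mechanically against the timestamps recorded during a drill. The sketch below assumes the RPO is measured from the last recoverable backup to the moment of failure, and the RTO from the failure to the moment service is restored; the function names are hypothetical.

```python
from datetime import datetime, timedelta


def rpo_met(last_backup, failure_time, rpo):
    """True if the data lost since the last backup fits within the RPO window."""
    return failure_time - last_backup <= rpo


def rto_met(failure_time, restored_time, rto):
    """True if service was restored within the RTO window."""
    return restored_time - failure_time <= rto


# Example drill: backup at 11:30, failure at 12:00, service restored at 14:00.
last_backup = datetime(2024, 1, 1, 11, 30)
failure = datetime(2024, 1, 1, 12, 0)
restored = datetime(2024, 1, 1, 14, 0)

print(rpo_met(last_backup, failure, timedelta(hours=1)))  # 30 minutes lost vs 1 hour allowed
print(rto_met(failure, restored, timedelta(hours=1)))     # 2 hours down vs 1 hour allowed
```

In this drill the RPO is met but the RTO is not, which would point to the restore procedure rather than the backup schedule as the weak spot.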

Implementing Redundancy and Failover Mechanisms

Redundancy and failover mechanisms are critical for ensuring high availability and minimizing downtime. These mechanisms involve duplicating critical components and automatically switching to a backup component in the event of a failure.

- Data Replication: Replicate data across multiple storage locations or availability zones.

- Load Balancing: Distribute traffic across multiple servers to prevent overload and ensure that services remain available even if one server fails.

- Database Clustering: Implement database clustering to provide high availability and automatic failover for database services. For example, a database cluster might automatically switch to a backup node if the primary node fails.

- Network Redundancy: Utilize redundant network connections and devices to ensure that network connectivity remains available in the event of a hardware failure.

- Automated Failover: Implement automated failover mechanisms to automatically switch to a backup component or service in the event of a failure.

- Regular Testing and Monitoring: Continuously monitor the infrastructure and test failover mechanisms to ensure they are functioning correctly.
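
At the application level, automated failover often reduces to trying replicas in a fixed order until one answers. The following is a minimal sketch of that idea, assuming each replica is represented by a name and a read function that raises `ConnectionError` when the replica is unavailable; real deployments would usually add health checks, timeouts, and retry limits.

```python
def read_with_failover(replicas, key):
    """Try each (name, read_fn) replica in order; return the first successful read."""
    last_error = None
    for name, read_fn in replicas:
        try:
            return name, read_fn(key)
        except ConnectionError as exc:
            # Remember the failure and fall through to the next replica.
            last_error = exc
    raise RuntimeError("all replicas failed") from last_error


# Hypothetical replicas: the primary is down, the secondary holds a copy of the data.
def primary_read(key):
    raise ConnectionError("primary unreachable")


secondary_store = {"report.parquet": b"replicated bytes"}


def secondary_read(key):
    return secondary_store[key]


source, data = read_with_failover(
    [("primary", primary_read), ("secondary", secondary_read)],
    "report.parquet",
)
```

Returning the name of the replica that served the request makes failover events visible to monitoring, so a silent switch to the backup does not go unnoticed.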

Descriptive Text for an Image Illustrating the Infrastructure Security Measures

The image depicts a data center environment, emphasizing physical security and infrastructure protection. The data center is enclosed by a high, secure perimeter fence with barbed wire, indicating a first line of defense against unauthorized physical access. Surveillance cameras are strategically placed around the perimeter and within the facility, ensuring continuous monitoring and recording of activities. Inside the data center, access is strictly controlled, with biometric scanners and card readers at entry points, preventing unauthorized entry.

Servers are housed in locked racks, and the data center maintains climate control systems to regulate temperature and humidity, protecting the hardware from environmental damage. Redundant power supplies (UPS) and generators are in place to ensure continuous operation even during power outages. The image also highlights the presence of fire suppression systems and clearly marked emergency exits, emphasizing the preparedness for various types of incidents.

The overall impression is one of robust physical security and a commitment to protecting the data lake infrastructure from a range of threats.

Threat Modeling and Vulnerability Management

Data lake environments, due to their centralized nature and the sensitivity of the data they store, are attractive targets for malicious actors. A proactive approach to security is essential, and this includes robust threat modeling and vulnerability management practices. By understanding potential threats and weaknesses, organizations can implement appropriate safeguards to protect their data assets.

The Process of Threat Modeling for Data Lake Environments

Threat modeling is a systematic process used to identify, analyze, and mitigate potential security threats within a system or environment. For data lakes, this involves understanding the specific architecture, data flows, and user access patterns to pinpoint potential vulnerabilities.

The steps involved in a threat modeling process are:

- Define the Scope: Clearly define the boundaries of the data lake environment, including all components, data sources, and user roles. Identify the critical assets that need protection, such as sensitive data, processing infrastructure, and access control mechanisms.

- Understand the Architecture: Document the data lake’s architecture, including the technologies used (e.g., Hadoop, Spark, cloud services), data ingestion pipelines, data storage locations, processing engines, and access control mechanisms. Create a data flow diagram to visualize data movement.

- Identify Threats: Use an established threat modeling methodology (e.g., STRIDE, PASTA) to identify potential threats. Consider the full range of threat actors, including external attackers, malicious insiders, and negligent internal users. Analyze potential attack vectors, such as data breaches, denial-of-service attacks, and unauthorized access.

- Analyze Vulnerabilities: Assess the vulnerabilities associated with each identified threat. This involves identifying weaknesses in the system’s design, implementation, or configuration that could be exploited by attackers. Consider vulnerabilities related to data storage, access control, data processing, and network security.

- Develop Mitigation Strategies: Develop mitigation strategies to address identified threats and vulnerabilities. These strategies may involve implementing security controls, such as access controls, encryption, intrusion detection systems, and data loss prevention measures. Prioritize mitigation efforts based on the severity and likelihood of the threat.

- Implement Mitigation Strategies: Implement the selected mitigation strategies based on the established priorities. This may involve configuring security tools, updating software, and training personnel on security best practices.

- Validate and Test: Test the effectiveness of the implemented mitigation strategies through penetration testing, vulnerability assessments, and security audits. Ensure that the security controls are functioning as intended and that vulnerabilities are effectively addressed.

- Monitor and Maintain: Continuously monitor the data lake environment for security threats and vulnerabilities. Regularly review security logs, analyze security incidents, and update the threat model as the environment evolves.
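
The prioritization step can be illustrated with a small risk register. This sketch assumes a simple scoring scheme in which risk is the product of severity and likelihood, each rated from 1 to 5; the scheme, the class, and the example threats are illustrative assumptions, not part of STRIDE or PASTA themselves.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    stride_category: str  # e.g. "Spoofing", "Tampering", "Information disclosure"
    severity: int         # 1 (minor) to 5 (critical), assumed scale
    likelihood: int       # 1 (rare) to 5 (frequent), assumed scale

    @property
    def risk_score(self):
        """Assumed scoring: severity multiplied by likelihood."""
        return self.severity * self.likelihood


def prioritize(threats):
    """Order threats from highest to lowest risk score."""
    return sorted(threats, key=lambda t: t.risk_score, reverse=True)


# Hypothetical entries for a data lake threat model.
register = [
    Threat("Stolen ingestion credentials", "Spoofing", severity=4, likelihood=3),
    Threat("Public storage bucket", "Information disclosure", severity=5, likelihood=4),
    Threat("Query flood on processing cluster", "Denial of service", severity=3, likelihood=2),
]

ranked = prioritize(register)
```

Keeping the register as data makes it easy to re-rank when the environment changes, which supports the ongoing monitoring and maintenance step.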

Common Data Lake Vulnerabilities and Attack Vectors

Data lakes are susceptible to a variety of vulnerabilities and attack vectors. Understanding these is crucial for effective security planning.

Some common vulnerabilities and attack vectors include:

- Insufficient Access Controls: Weak or misconfigured access controls can allow unauthorized users to access sensitive data. This includes improperly configured role-based access control (RBAC) and overly permissive permissions.

- Data Breaches: Data breaches can occur through various means, such as SQL injection, cross-site scripting (XSS), and phishing attacks. These attacks can lead to the exposure of sensitive data, such as personally identifiable information (PII) or financial data.

- Malware and Ransomware: Data lakes can be targeted by malware and ransomware attacks. Malware can be introduced through compromised user accounts, malicious data uploads, or vulnerabilities in data processing tools. Ransomware can encrypt data and demand a ransom for its release.

- Denial-of-Service (DoS) Attacks: DoS attacks can disrupt data lake operations by overwhelming the system with traffic. This can prevent legitimate users from accessing data and services.

- Insider Threats: Malicious or negligent insiders can pose a significant threat to data lake security. This includes employees or contractors who intentionally or unintentionally misuse their access privileges.

- Vulnerabilities in Data Processing Tools: Data processing tools, such as Spark and Hadoop, may contain vulnerabilities that attackers can exploit. Regularly updating these tools and applying security patches is crucial.

- Unsecured APIs: APIs used to access data lake resources can be vulnerable to attacks if not properly secured. This includes vulnerabilities like authentication bypass, authorization issues, and injection attacks.
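
Overly permissive permissions, the first item in the list above, are among the easier weaknesses to detect automatically. The sketch below scans a hypothetical, simplified policy format for allow statements that grant a wildcard action or resource. The dictionary layout is an assumption for illustration and does not match any specific cloud provider's policy schema.

```python
def find_overly_permissive(policies):
    """Return (policy name, statement index) for each allow statement using a wildcard."""
    findings = []
    for policy in policies:
        for index, stmt in enumerate(policy["statements"]):
            if stmt["effect"] != "allow":
                continue
            # A bare "*" grants every action or every resource.
            if "*" in stmt["actions"] or "*" in stmt["resources"]:
                findings.append((policy["name"], index))
    return findings


# Hypothetical policies in the assumed format.
policies = [
    {
        "name": "analyst-read",
        "statements": [
            {"effect": "allow", "actions": ["read"], "resources": ["lake/curated/sales"]},
        ],
    },
    {
        "name": "etl-admin",
        "statements": [
            {"effect": "allow", "actions": ["*"], "resources": ["lake/raw"]},
            {"effect": "deny", "actions": ["delete"], "resources": ["*"]},
        ],
    },
]

findings = find_overly_permissive(policies)
```

A check like this only catches the most obvious cases; narrower but still excessive grants need review against what each role actually requires.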