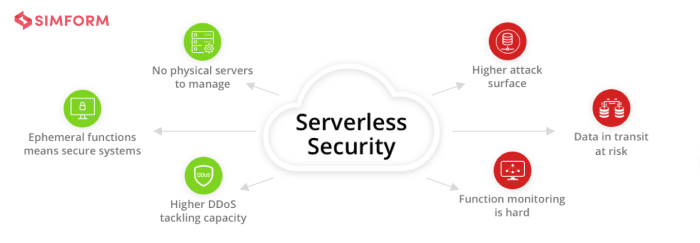

The advent of serverless computing has revolutionized application development, offering unprecedented scalability and agility. However, this paradigm shift introduces unique security challenges. Serverless architectures, by their ephemeral and distributed nature, present new attack surfaces, necessitating a proactive and vigilant approach to security monitoring. Understanding these threats and implementing robust monitoring strategies are crucial to safeguarding serverless applications and data.

This document delves into the critical aspects of monitoring for security threats within serverless environments. It explores common attack vectors, essential monitoring tools and techniques, and best practices for log aggregation, real-time threat detection, network security, identity and access management (IAM), code security, and compliance. Through a detailed examination of these areas, we aim to provide a comprehensive guide for securing serverless deployments.

Understanding Serverless Security Threats

Serverless architectures, while offering significant advantages in terms of scalability and cost-efficiency, introduce a unique set of security challenges. The distributed nature and reliance on third-party services create new attack surfaces that differ significantly from traditional infrastructure models. Understanding these threats is crucial for implementing effective security measures and protecting sensitive data.

Common Attack Vectors Targeting Serverless Architectures

Serverless functions, often triggered by various events, are vulnerable to attacks targeting the event triggers, the function code itself, and the underlying infrastructure. Exploiting these vulnerabilities can lead to data breaches, denial-of-service (DoS) attacks, and unauthorized access.

- Injection Attacks: Serverless functions can be susceptible to injection attacks, such as SQL injection or command injection, if user-supplied data is not properly sanitized before being used in database queries or system commands. For example, a malicious actor could inject a SQL query that extracts sensitive information from a database.

- Denial-of-Service (DoS) Attacks: Attackers may try to exhaust the resources of a function or of the underlying cloud infrastructure. They typically do this by flooding the function with requests or invoking it repeatedly until it becomes unavailable. Serverless platforms scale automatically, so such floods may instead hit the account's concurrency limit, which throttles other functions that share it. They can also drive up usage costs, an effect sometimes called "denial of wallet".

- Function Code Vulnerabilities: Vulnerabilities in the function code itself, such as insecure coding practices or the use of outdated libraries, can be exploited by attackers. This can lead to remote code execution (RCE) or other security breaches.

- Event Trigger Manipulation: Attackers can manipulate the events that trigger serverless functions, such as by sending malicious payloads or by triggering the function at an unexpected time. This can lead to unexpected behavior and security vulnerabilities.

- Supply Chain Attacks: Serverless functions often rely on third-party libraries and dependencies. Attackers can compromise these dependencies, injecting malicious code that is executed when the function is invoked.
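To make the injection risk concrete, the following minimal Python sketch contrasts a query built by string formatting with a parameterized query. It uses the standard-library `sqlite3` module purely for illustration. The `users` table and the function names are hypothetical, and the same principle applies to any database driver that supports bound parameters.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # VULNERABLE: user input is spliced into the SQL text, so a value such as
    # "x' OR '1'='1" changes the meaning of the WHERE clause.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # SAFE: the driver binds the value to the ? placeholder as data,
    # so it is never parsed as SQL.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


def make_demo_db() -> sqlite3.Connection:
    # Hypothetical in-memory table with two users.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])
    return conn


if __name__ == "__main__":
    db = make_demo_db()
    payload = "x' OR '1'='1"
    print("unsafe:", find_user_unsafe(db, payload))
    print("safe:", find_user_safe(db, payload))
```

With the payload `x' OR '1'='1`, the unsafe version returns every row in the table. The parameterized version returns none, because the driver treats the entire payload as a literal username.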

Examples of Data Breaches Specific to Serverless Functions

Several real-world incidents highlight the vulnerabilities of serverless architectures and the potential for data breaches. These examples illustrate the importance of proactive security measures and the consequences of neglecting them.

- Misconfigured Permissions: In 2019, a misconfiguration involving an AWS S3 bucket allowed unauthorized access to sensitive data. The incident illustrates how improperly scoped permissions lead to data leaks. A function that only needed to write to the bucket had been granted full read/write access, so an attacker who compromised it could read and modify the stored data.

- Insecure Function Code: Vulnerabilities within function code, such as the use of hardcoded credentials or insecure input validation, have resulted in data breaches. For instance, a function that lacked proper input validation allowed an attacker to inject malicious code, compromising sensitive information.

- Compromised Dependencies: Supply chain attacks, where malicious code is injected into third-party libraries, have been used to compromise serverless functions. When the function calls the compromised library, the attacker gains access to the function’s resources.

Impact of Misconfigurations on Serverless Security

Misconfigurations are a significant cause of security vulnerabilities in serverless environments. These errors can expose sensitive data, enable unauthorized access, and lead to various other security breaches.

- Excessive Permissions: Granting serverless functions excessive permissions, such as allowing them to access more resources than they need, increases the attack surface. An attacker who compromises a function with excessive permissions can potentially gain access to a wider range of resources.

- Insecure Storage Configurations: Misconfigured storage buckets, such as those that allow public access to sensitive data, can lead to data leaks. This is a common issue when data is placed in cloud storage without properly configured access controls.

- Weak Authentication and Authorization: Inadequate authentication and authorization mechanisms can allow unauthorized users to access and execute serverless functions. This can be particularly problematic if the functions handle sensitive data or perform critical operations.

- Lack of Input Validation: Failure to validate user input can lead to injection attacks, where attackers can inject malicious code or commands. This can compromise the integrity and confidentiality of the data.

- Insufficient Logging and Monitoring: Without proper logging and monitoring, security incidents are difficult to detect and respond to. This lets attackers operate undetected, potentially for extended periods.
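Excessive permissions and public storage access often show up as wildcards in policy documents. The Python sketch below scans an IAM-style JSON policy for `Allow` statements that contain a wildcard action, a wildcard resource, or a public `"*"` principal. It is an illustrative heuristic only and is no substitute for a full policy analyzer such as AWS IAM Access Analyzer. The function names and the example policy are hypothetical.

```python
from __future__ import annotations

from typing import Any


def _as_list(value: Any) -> list:
    """IAM policy fields may hold a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_risky_statements(policy: dict) -> list[str]:
    """Return human-readable findings for overly broad Allow statements."""
    findings: list[str] = []
    for i, stmt in enumerate(_as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements only narrow access
        actions = _as_list(stmt.get("Action"))
        if any(a == "*" or a.endswith(":*") for a in actions):
            findings.append(f"statement {i}: wildcard action {actions}")
        if "*" in _as_list(stmt.get("Resource")):
            findings.append(f"statement {i}: wildcard resource")
        principal = stmt.get("Principal")
        if principal == "*" or (
            isinstance(principal, dict) and "*" in _as_list(principal.get("AWS"))
        ):
            findings.append(f"statement {i}: public principal")
    return findings


if __name__ == "__main__":
    function_role_policy = {
        "Version": "2012-10-17",
        "Statement": [
            # Over-broad: any S3 action on any resource.
            {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
            # Scoped: write-only access to one (hypothetical) bucket.
            {"Effect": "Allow", "Action": ["s3:PutObject"],
             "Resource": "arn:aws:s3:::example-bucket/*"},
        ],
    }
    for finding in find_risky_statements(function_role_policy):
        print(finding)
```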

Monitoring Tools and Techniques

Effectively monitoring a serverless environment is crucial for identifying and mitigating security threats. The ephemeral nature of serverless functions and the distributed architecture necessitate a proactive and comprehensive approach to monitoring. This involves utilizing specialized tools and integrating security monitoring into existing workflows to maintain a robust security posture.

Identifying Monitoring Tools

Several tools are available for monitoring serverless environments, each offering unique capabilities and integrations. These tools provide visibility into function execution, resource utilization, and security events. The selection of tools depends on the specific cloud provider, application requirements, and security goals.

- Cloud Provider Native Tools: Most major cloud providers offer native monitoring services designed specifically for serverless applications. For example, AWS provides CloudWatch, which allows for monitoring of logs, metrics, and events generated by AWS Lambda functions and other services. Google Cloud Platform (GCP) offers Cloud Monitoring and Cloud Logging, while Azure provides Azure Monitor and Application Insights. These tools typically offer seamless integration with other cloud services, making it easier to collect and analyze data. They also often include pre-built dashboards and alerts for common serverless security concerns, such as function errors and unauthorized access attempts.

- Third-Party Monitoring Solutions: Several third-party vendors offer specialized monitoring solutions tailored for serverless environments. These tools often provide advanced features, such as automated threat detection, vulnerability scanning, and custom dashboards. Examples include Datadog, New Relic, and Dynatrace, which offer comprehensive monitoring capabilities, including support for various cloud providers and programming languages. These solutions often provide features like anomaly detection, allowing users to automatically identify unusual behavior that might indicate a security breach.

- Security Information and Event Management (SIEM) Systems: SIEM systems, such as Splunk, Sumo Logic, and Elastic Security, can aggregate and analyze security logs from various sources, including serverless functions, network devices, and security tools. These systems provide a centralized view of security events, enabling security teams to correlate events, identify patterns, and respond to threats effectively. SIEMs are crucial for threat detection and incident response in serverless environments, as they provide the context needed to understand the scope and impact of security incidents. They also support compliance reporting by providing an audit trail of security events.

- Infrastructure as Code (IaC) Integration: Integrating monitoring tools with Infrastructure as Code (IaC) tools, such as Terraform or AWS CloudFormation, allows for the automated provisioning and configuration of monitoring resources alongside the serverless infrastructure. This ensures that monitoring is enabled from the outset and that changes to the infrastructure are automatically reflected in the monitoring setup. This approach helps maintain consistency and reduces the risk of misconfigurations that could compromise security. For example, IaC can be used to define the configuration of CloudWatch alarms that trigger when a Lambda function exceeds a specific execution time or generates a certain number of errors.
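The Python sketch below illustrates the CloudWatch alarm example. It generates a CloudFormation template fragment with two `AWS::CloudWatch::Alarm` resources. One watches the Lambda `Duration` metric, which is reported in milliseconds, and the other watches the `Errors` metric. The function name, thresholds, and periods are hypothetical placeholders. A real template would also set `AlarmActions` (for example, to an SNS topic) so that the alarms actually notify someone.

```python
import json


def lambda_alarm_resources(function_name: str, max_duration_ms: int, max_errors: int) -> dict:
    """Build CloudFormation resources that alarm on a Lambda function's duration and errors."""

    def alarm(metric: str, statistic: str, period: int, threshold: int,
              operator: str, description: str) -> dict:
        return {
            "Type": "AWS::CloudWatch::Alarm",
            "Properties": {
                "AlarmDescription": description,
                "Namespace": "AWS/Lambda",
                "MetricName": metric,
                "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
                "Statistic": statistic,
                "Period": period,  # seconds per evaluation window
                "EvaluationPeriods": 1,
                "Threshold": threshold,
                "ComparisonOperator": operator,
                "TreatMissingData": "notBreaching",  # no invocations is not an alarm
            },
        }

    return {
        "LambdaDurationAlarm": alarm(
            "Duration", "Maximum", 60, max_duration_ms, "GreaterThanThreshold",
            f"{function_name} ran longer than {max_duration_ms} ms"),
        "LambdaErrorsAlarm": alarm(
            "Errors", "Sum", 300, max_errors, "GreaterThanOrEqualToThreshold",
            f"{function_name} reported {max_errors} or more errors in 5 minutes"),
    }


if __name__ == "__main__":
    template = {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": lambda_alarm_resources("orders-handler", max_duration_ms=5000, max_errors=5),
    }
    print(json.dumps(template, indent=2))
```

Because the alarms are declared in the same template as the function, they are created, updated, and deleted together with it.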

Benefits of Logging and Monitoring Dashboards

Logging and monitoring dashboards provide a centralized view of the serverless environment, enabling efficient threat detection, incident response, and performance optimization. They transform raw data into actionable insights, facilitating informed decision-making and proactive security measures.

- Real-time Visibility: Dashboards provide real-time visibility into function executions, resource utilization, and security events. This allows security teams to quickly identify and respond to suspicious activity. For example, a dashboard might display a graph of function invocations over time, allowing administrators to detect spikes in activity that could indicate a denial-of-service attack.

- Centralized Log Aggregation: Logging dashboards aggregate logs from various sources, including function executions, API gateways, and other services. This centralization simplifies the process of analyzing logs and identifying security threats. For instance, logs from multiple Lambda functions can be aggregated and filtered to identify a specific user attempting to access restricted resources.

- Customizable Alerts and Notifications: Monitoring dashboards enable the creation of custom alerts and notifications based on specific criteria, such as function errors, unauthorized access attempts, or unusual resource usage. These alerts notify security teams of potential threats, enabling prompt investigation and remediation. For example, an alert can be configured to trigger when a Lambda function attempts to access a database without proper authentication.

- Performance Monitoring and Optimization: Dashboards provide insights into the performance of serverless functions, including execution time, memory usage, and cold start times. This information can be used to optimize function performance, reduce costs, and improve the user experience. For instance, a dashboard might show that a specific function is experiencing high latency due to excessive database queries, prompting developers to optimize the function’s code or database interactions.

- Incident Response and Forensics: Detailed logs and metrics provided by dashboards are invaluable during incident response and forensic investigations. They provide the context needed to understand the scope of a security incident, identify the root cause, and prevent future occurrences. For example, logs can be used to track the actions of a compromised user, identify the compromised resources, and determine the extent of the damage.

Integrating Security Monitoring with CI/CD Pipelines

Integrating security monitoring into CI/CD pipelines ensures that security checks are performed throughout the software development lifecycle. This proactive approach helps identify and address security vulnerabilities early in the development process, reducing the risk of deploying insecure code to production.

- Automated Security Scanning: Integrate automated security scanning tools, such as static analysis tools and vulnerability scanners, into the CI/CD pipeline. These tools analyze code for security vulnerabilities and identify potential weaknesses before deployment. For example, a static analysis tool can identify insecure coding practices, such as hardcoded credentials or SQL injection vulnerabilities. Vulnerability scanners can detect known vulnerabilities in third-party libraries and dependencies.

- Security Testing as Part of the Build Process: Incorporate security testing as part of the build process. This includes unit tests, integration tests, and end-to-end tests that specifically focus on security aspects, such as authentication, authorization, and data validation. For example, integration tests can verify that a Lambda function correctly authenticates users and authorizes access to protected resources.

- Automated Deployment and Configuration of Monitoring Resources: Automate the deployment and configuration of monitoring resources as part of the CI/CD pipeline. This ensures that monitoring is enabled from the outset and that changes to the infrastructure are automatically reflected in the monitoring setup. For example, the pipeline can automatically configure CloudWatch alarms and dashboards when deploying a new Lambda function.

- Real-time Monitoring and Alerting: Integrate real-time monitoring and alerting into the CI/CD pipeline. This allows for the detection of security threats and performance issues during deployment. For example, the pipeline can trigger alerts if a new Lambda function generates a high number of errors or experiences unexpected latency.

- Feedback Loops and Iterative Improvement: Establish feedback loops to incorporate security findings and monitoring data into the development process. This enables developers to learn from security incidents and improve the security posture of the application over time. For example, security teams can review the results of security scans and testing, and provide feedback to developers on how to address identified vulnerabilities.
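The automated scanning step can start as simply as the Python sketch below, which searches source files for two illustrative patterns. The first matches strings shaped like long-term AWS access key IDs (`AKIA` followed by 16 uppercase letters or digits). The second matches literal `password = "..."` assignments. The patterns, the script name, and the exit-code convention are assumptions made for illustration. Dedicated secret scanners and static analysis tools cover far more cases.

```python
from __future__ import annotations

import re
import sys
from pathlib import Path

# Illustrative patterns only; dedicated scanners ship many more rules.
SECRET_PATTERNS = {
    # Long-term AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Literal assignments such as password = "hunter2" or DB_PASSWORD='x'.
    "hardcoded_password": re.compile(r"""(?i)password\s*=\s*["'][^"']+["']"""),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern name) for every line that matches a pattern."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


def main(paths: list[str]) -> int:
    """Scan each file and return a non-zero exit status if anything was found."""
    found = False
    for path in paths:
        for lineno, name in scan_text(Path(path).read_text(errors="ignore")):
            print(f"{path}:{lineno}: possible {name}")
            found = True
    return 1 if found else 0


if __name__ == "__main__":
    status = main(sys.argv[1:])
    if status:
        sys.exit(status)  # a non-zero exit fails the CI job
```

A pipeline stage could run it as `python scan_secrets.py $(git ls-files '*.py')` (the script name is hypothetical) and fail the build whenever the exit code is non-zero.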

Log Aggregation and Analysis

Centralized log aggregation and robust analysis are fundamental to effective security monitoring in serverless environments. The ephemeral nature of serverless functions and the distributed architecture necessitate a system that can collect, store, and analyze logs from various sources in a unified manner. This allows security teams to identify anomalies, track potential threats, and respond proactively to security incidents.

Design of a Centralized Log Aggregation System

Designing a centralized log aggregation system for serverless applications requires careful consideration of scalability, cost-effectiveness, and ease of integration. The system must be capable of handling the high volume of logs generated by numerous function invocations and diverse services. A typical architecture involves several key components:

- Log Sources: These are the origins of the logs. In a serverless context, they include:

  - AWS Lambda functions (and their execution logs)

  - API Gateway logs

  - CloudWatch logs (or their equivalents on other cloud providers)

  - Application logs generated by the functions themselves

- Log Collection Agents: These agents gather logs from the sources. In a serverless architecture, they can be:

  - Native cloud provider logging integrations (e.g., AWS CloudWatch Logs)

  - Custom log shippers (e.g., Fluentd, Logstash, or custom-built solutions) running inside the functions or alongside them in a sidecar-like role (for example, as AWS Lambda extensions)

- Log Transport: This component transmits logs securely and reliably from the collection agents to central storage. Considerations include:

  - Encrypting logs in transit with TLS (for example, over HTTPS).

  - Buffering logs and retrying failed transmissions.

  - Using a message queue or streaming service (e.g., Amazon Kinesis, Apache Kafka) for high-throughput log ingestion.

- Log Storage: This component provides a durable, scalable repository for the aggregated logs. Options include:

  - Cloud-native storage solutions (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage)

  - Dedicated log management platforms (e.g., Splunk, Sumo Logic, Elastic Stack)

  - Search and analytics engines optimized for log data (e.g., Amazon OpenSearch Service, formerly Amazon Elasticsearch Service)

- Log Analysis and Visualization: This component analyzes the logs and presents the results in a meaningful way. Its capabilities include:

  - Log parsing and indexing

  - Querying and filtering

  - Dashboards and visualizations for monitoring and alerting
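Parsing and indexing are much easier when functions emit structured application logs. The following Python sketch uses only the standard `logging` module to write one JSON object per line to standard output. AWS Lambda forwards a function's standard output to CloudWatch Logs. The field names, the `orders-handler` label, and the handler itself are hypothetical. `aws_request_id` is read from the Lambda context object when one is supplied.

```python
import json
import logging
import sys
import time


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime(record.created)),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed as logger.info(..., extra={"fields": {...}}).
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry, default=str)


def get_logger(name: str = "app") -> logging.Logger:
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid stacking handlers on warm invocations
        stream = logging.StreamHandler(sys.stdout)
        stream.setFormatter(JsonFormatter())
        logger.addHandler(stream)
        logger.setLevel(logging.INFO)
        logger.propagate = False  # keep the root logger from emitting a second copy
    return logger


def lambda_handler(event: dict, context=None) -> dict:
    log = get_logger()
    log.info(
        "request received",
        extra={"fields": {
            "request_id": getattr(context, "aws_request_id", None),
            "function_name": "orders-handler",
            "source_ip": event.get("source_ip"),
        }},
    )
    return {"statusCode": 200}
```

Each line is a self-describing JSON object. A central system can therefore index fields such as `request_id` and `source_ip` directly instead of extracting them with fragile text patterns.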

Methods for Analyzing Logs to Detect Suspicious Activities

Analyzing logs effectively is crucial for detecting and responding to security threats. This process combines automated analysis techniques with manual review, focusing on identifying anomalous patterns and behaviors. Several methods are employed:

- Anomaly Detection: This involves identifying deviations from the baseline behavior of the system. It can be achieved through:

  - Statistical analysis: Calculating measures such as the mean, standard deviation, and percentiles of key metrics (e.g., request volume, error rates, latency) and flagging values that fall far outside them.

  - Machine learning: Training models to detect unusual patterns in log data, for example by using clustering algorithms to group similar log events or classification models to identify malicious activity.

- Rule-Based Detection: This involves defining rules that trigger alerts when specific patterns are detected in the logs. Examples include:

  - Identifying failed login attempts.

  - Detecting unauthorized access to sensitive resources.

  - Monitoring for unusual network traffic patterns.

- Threat Intelligence Integration: Integrating threat intelligence feeds to identify known malicious actors, IP addresses, and domains. This allows for proactive blocking and alerting on known threats.

- Behavioral Analysis: This involves analyzing the behavior of users, applications, and infrastructure over time to identify deviations from expected patterns. For example, tracking the frequency and timing of API calls or monitoring changes to configuration settings.

- Log Correlation: Correlating events from different log sources to gain a comprehensive view of the security posture. This helps to identify complex attacks that may span multiple systems or services.
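The statistical approach to anomaly detection can be sketched in Python with a simple z-score test. A new observation, here the requests per minute for one function, is flagged when it lies more than a chosen number of standard deviations from the historical mean. The three-standard-deviation threshold and the sample numbers are illustrative assumptions. Production systems usually also account for seasonality and use rolling baselines.

```python
from __future__ import annotations

from statistics import mean, stdev


def is_anomalous(history: list[float], value: float, threshold: float = 3.0) -> bool:
    """Return True if `value` is more than `threshold` standard deviations from the mean of `history`."""
    if len(history) < 2:
        return False  # too little data to establish a baseline
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        return value != mu  # perfectly flat baseline: any change is unusual
    return abs(value - mu) / sigma > threshold


# Hypothetical baseline: requests per minute for one function (mean 100, stdev 2).
baseline = [100, 98, 103, 101, 97, 102, 99, 100]
print(is_anomalous(baseline, 104))  # False: within normal variation
print(is_anomalous(baseline, 950))  # True: a spike worth investigating
```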

Examples of Log Query Structures to Identify Anomalies

Effective log queries are essential for extracting valuable insights from aggregated log data. The structure of these queries should be tailored to the specific threat being investigated and to the log management platform in use. The examples below use a conceptual, SQL-like query language over a hypothetical logs table. Adapt the field names and time functions to your platform. Each example is followed by its interpretation:

- Detecting Excessive Error Rates:

```sql
SELECT function_name,
       COUNT(*) AS error_count,
       AVG(latency) AS avg_latency
FROM logs
WHERE status_code >= 500
  AND timestamp BETWEEN now() - INTERVAL '5 minutes' AND now()
GROUP BY function_name
ORDER BY error_count DESC
LIMIT 10;
```

This query returns the ten functions with the most server errors (status code 500 or greater) in the last 5 minutes, together with the average latency of those failed requests. It can help identify functions that are experiencing performance issues or are under attack.

- Identifying Suspicious Login Attempts:

```sql
SELECT COUNT(*) AS failed_attempts, user_ip, user_agent
FROM logs
WHERE event_type = 'login_failed'
  AND timestamp BETWEEN now() - INTERVAL '1 hour' AND now()
GROUP BY user_ip, user_agent
ORDER BY failed_attempts DESC
LIMIT 10;
```

This query lists the IP address and user-agent combinations with the most failed login attempts in the last hour. It can help detect brute-force attacks or other attempts to compromise user accounts.

- Monitoring for Unauthorized Resource Access:

```sql
SELECT user_id, resource_accessed, action, COUNT(*) AS access_count
FROM logs
WHERE event_type = 'access_denied'
  AND timestamp BETWEEN now() - INTERVAL '24 hours' AND now()
GROUP BY user_id, resource_accessed, action
ORDER BY access_count DESC;
```

This query counts, for each user, the denied attempts to perform each action on each resource over the last 24 hours. Repeated denials can reveal privilege escalation attempts or other unauthorized access attempts.

- Detecting Unusual API Call Patterns:

```sql
SELECT api_endpoint, COUNT(*) AS call_count, AVG(latency) AS avg_latency
FROM logs
WHERE api_key = 'suspicious_api_key'
  AND timestamp BETWEEN now() - INTERVAL '1 hour' AND now()
GROUP BY api_endpoint
ORDER BY call_count DESC;
```

This query shows which API endpoints a suspicious API key called in the last hour, along with call counts and average latency. It can help detect malicious activity such as data exfiltration or unauthorized API usage. Here, 'suspicious_api_key' is a placeholder for the key under investigation, such as one suspected of being compromised.
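To try the failed-login query locally, the Python sketch below loads a few hypothetical log rows into an in-memory SQLite table and runs an equivalent query. SQLite has no `now()` function or `INTERVAL` syntax, so the time filter is expressed with `datetime('now', '-1 hour')` instead. The table layout and the sample data are assumptions made for illustration.

```python
from __future__ import annotations

import sqlite3

FAILED_LOGINS_SQL = """
SELECT COUNT(*) AS failed_attempts, user_ip, user_agent
FROM logs
WHERE event_type = 'login_failed'
  AND timestamp >= datetime('now', '-1 hour')
GROUP BY user_ip, user_agent
ORDER BY failed_attempts DESC
LIMIT 10
"""


def build_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE logs (timestamp TEXT, event_type TEXT, user_ip TEXT, user_agent TEXT)")
    rows = (
        # Four recent failures from one client: a possible brute-force attempt.
        [("-5 minutes", "login_failed", "198.51.100.7", "curl/8.0")] * 4
        + [
            ("-10 minutes", "login_failed", "203.0.113.9", "Mozilla/5.0"),
            ("-3 hours", "login_failed", "203.0.113.9", "Mozilla/5.0"),  # outside the window
            ("-2 minutes", "login_success", "192.0.2.1", "Mozilla/5.0"),  # not a failure
        ]
    )
    # The first column is a relative-time modifier evaluated by SQLite's datetime().
    conn.executemany("INSERT INTO logs VALUES (datetime('now', ?), ?, ?, ?)", rows)
    return conn


def top_failed_logins(conn: sqlite3.Connection) -> list[tuple]:
    return conn.execute(FAILED_LOGINS_SQL).fetchall()


if __name__ == "__main__":
    for row in top_failed_logins(build_demo_db()):
        print(row)
```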

Real-time Threat Detection

Implementing real-time threat detection is crucial for swiftly identifying and responding to security incidents within a serverless environment. This proactive approach allows security teams to mitigate potential damage before threats escalate. The effectiveness of this detection relies on the ability to analyze incoming data streams, establish baselines, and promptly trigger alerts when deviations occur.

Real-time Alerting Systems for Security Incidents

Real-time alerting systems are essential components of a robust security posture. These systems continuously monitor various data sources, such as logs, metrics, and network traffic, to identify potential threats. The key is to configure these systems to respond rapidly and accurately to suspicious activities.

- System Architecture: Real-time alerting systems typically comprise data ingestion pipelines, processing engines, and notification mechanisms. Data ingestion collects raw data from diverse sources. The processing engine analyzes the data, applying detection rules and anomaly detection algorithms. Finally, the notification mechanism delivers alerts to the appropriate stakeholders, such as security analysts or automated incident response systems.

- Data Sources: Comprehensive monitoring involves integrating various data sources. These sources include:

  - Cloud Provider Logs: AWS CloudTrail, Azure Monitor, and Google Cloud Audit Logs provide detailed information about user activity, API calls, and resource changes.

  - Application Logs: Application logs contain valuable insights into application behavior, errors, and potential vulnerabilities.

  - Network Traffic: Analyzing network traffic can reveal malicious activities, such as port scans, unauthorized access attempts, and data exfiltration.

  - Security Information and Event Management (SIEM) Systems: SIEM systems aggregate and correlate data from multiple sources, providing a centralized view of security events and facilitating advanced threat detection.

- Alerting Mechanisms: Effective alerting systems employ various notification methods. These include:

  - Email Notifications: Sending email alerts to security teams provides a direct and timely communication channel.

  - SMS Notifications: SMS alerts can be used for critical incidents, ensuring immediate notification.

  - Integration with Incident Management Systems: Integrating alerts with incident management systems streamlines the incident response process.

  - Chat Notifications: Sending alerts to collaboration platforms like Slack or Microsoft Teams allows for real-time communication and collaboration among security teams.
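One way to wire these notification methods together is a severity-based routing table. The Python sketch below maps each alert severity to a list of channels and calls one notifier function per channel. The severity levels, channel names, and routing choices are hypothetical. Real notifiers would call an email, SMS, chat, or incident-management API instead of the printing placeholders shown here.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Alert:
    title: str
    severity: str  # assumed levels: "low", "medium", "high", "critical"
    source: str    # which log source or detector raised the alert


# Hypothetical routing policy: more severe alerts reach more immediate channels.
ROUTES: dict[str, list[str]] = {
    "critical": ["sms", "incident_management", "chat"],
    "high": ["incident_management", "chat"],
    "medium": ["chat", "email"],
    "low": ["email"],
}


def dispatch(alert: Alert, notifiers: dict[str, Callable[[Alert], None]]) -> list[str]:
    """Send `alert` to every configured channel for its severity; return the channels used."""
    sent = []
    for channel in ROUTES.get(alert.severity, ["email"]):  # unknown severity: email only
        notify = notifiers.get(channel)
        if notify is not None:
            notify(alert)
            sent.append(channel)
    return sent


if __name__ == "__main__":
    # Placeholder notifiers that only print.
    notifiers = {
        name: (lambda a, name=name: print(f"[{name}] {a.severity.upper()}: {a.title}"))
        for name in ("sms", "incident_management", "chat", "email")
    }
    dispatch(Alert("API call from unknown IP", "critical", "CloudTrail"), notifiers)
```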

Automated Incident Response Triggers

Automated incident response (AIR) plays a critical role in responding to security incidents rapidly and consistently. AIR systems automate predefined actions based on specific triggers, minimizing the time required to contain and remediate threats. This automation improves the overall security posture.

- Examples of Triggers:

  - Unauthorized API Calls: Detection of API calls from unknown IP addresses or unusual locations can trigger automatic actions, such as blocking the IP address or revoking API keys.

  - Unusual Resource Access: Unusual access patterns to sensitive resources, such as database instances or storage buckets, can initiate automated responses, such as isolating the affected resource or terminating the access.

  - Malware Detection: The detection of malware on serverless functions or within storage buckets can trigger automated actions, such as quarantining the infected files or disabling the function.

  - Failed Login Attempts: A high number of failed login attempts from a specific IP address can trigger automated actions, such as blocking the IP address or enforcing multi-factor authentication.

- Automated Actions:

  - Isolation: Isolating compromised resources, such as serverless functions or database instances, prevents further lateral movement and limits the impact of a security incident.

  - Containment: Containing the spread of a threat involves actions such as blocking malicious IP addresses, revoking compromised credentials, and disabling affected services.

  - Remediation: Remediation actions aim to eliminate the threat and restore the system to a secure state. This may involve patching vulnerabilities, removing malware, or restoring data from backups.

  - Notification: Automatically notifying security teams or relevant stakeholders about the incident ensures prompt awareness and facilitates manual investigation and intervention.
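The failed-login trigger can be sketched in Python as a per-IP sliding window. When an address accumulates a threshold number of failures within the window, the monitor emits a containment action once. The thresholds are illustrative, and timestamps are assumed to arrive in non-decreasing order for each address. The returned `block_ip:` string is a hypothetical placeholder for a real action, such as adding the address to a web application firewall block list.

```python
from __future__ import annotations

from collections import defaultdict, deque


class FailedLoginMonitor:
    """Track failed logins per IP in a sliding time window and emit a block action."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 300.0) -> None:
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures: dict[str, deque[float]] = defaultdict(deque)
        self.blocked: set[str] = set()

    def record_failure(self, ip: str, ts: float) -> str | None:
        window = self._failures[ip]
        window.append(ts)
        # Drop failures that have aged out of the window.
        while window and ts - window[0] > self.window_seconds:
            window.popleft()
        if len(window) >= self.max_failures and ip not in self.blocked:
            self.blocked.add(ip)
            return f"block_ip:{ip}"  # hand off to the automated response step
        return None


if __name__ == "__main__":
    monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
    for t in (0, 10, 20, 25):
        print(t, monitor.record_failure("198.51.100.7", t))
```

Emitting the action only once per address keeps a single noisy client from flooding the response pipeline with duplicate block requests.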

Strategies for Minimizing False Positives in Alert Systems

Minimizing false positives is critical for maintaining the effectiveness of real-time threat detection systems. False positives can lead to alert fatigue, decreased responsiveness, and wasted resources. Implementing strategies to reduce false positives improves the accuracy and reliability of the system.

- Baseline Behavior: Establishing a baseline of normal behavior is essential. This involves analyzing historical data to understand typical patterns of activity. Deviations from the baseline can then be flagged as potential threats.

- Contextual Analysis: Adding context to alerts enhances accuracy. This includes correlating events from multiple data sources to gain a comprehensive understanding of the situation. For example, combining information about user activity, network traffic, and application logs can provide a more accurate assessment of a potential threat.

- Rule Tuning: Regularly tuning detection rules is necessary to minimize false positives. This involves adjusting the sensitivity of rules based on feedback from security analysts and observed behavior.

- Whitelisting: Implementing whitelisting allows for identifying trusted sources or activities that should be excluded from alerting. For example, whitelisting known IP addresses or specific user agents can reduce false positives.

- Machine Learning: Utilizing machine learning techniques can improve the accuracy of threat detection. Machine learning algorithms can identify subtle patterns and anomalies that may be missed by rule-based systems.

- Alert Prioritization: Prioritizing alerts based on severity and potential impact allows security teams to focus on the most critical incidents first. This can be achieved by assigning risk scores to alerts or using threat intelligence feeds to enrich the alerts.
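Whitelisting and alert prioritization combine naturally. The Python sketch below drops alerts from whitelisted sources and sorts the remaining alerts by a simple additive risk score. The score rewards severity, asset sensitivity, and a threat-intelligence match. The whitelist entry, the weights, and the field names are hypothetical and would need tuning against an organization's own alert history.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical values; tune them against your own alert history.
WHITELISTED_IPS = {"192.0.2.10"}  # e.g. an approved internal vulnerability scanner
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 7, "critical": 10}


@dataclass
class SecurityAlert:
    rule: str
    severity: str
    source_ip: str
    sensitive_asset: bool = False     # touches data or systems marked as sensitive
    threat_intel_match: bool = False  # source matched a threat-intelligence feed


def risk_score(alert: SecurityAlert) -> int:
    score = SEVERITY_WEIGHT.get(alert.severity, 1)
    if alert.sensitive_asset:
        score += 5
    if alert.threat_intel_match:
        score += 10
    return score


def triage(alerts: list[SecurityAlert]) -> list[SecurityAlert]:
    """Suppress whitelisted sources, then order the remaining alerts by descending risk."""
    kept = [a for a in alerts if a.source_ip not in WHITELISTED_IPS]
    return sorted(kept, key=risk_score, reverse=True)
```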

Network Security Monitoring

Network security monitoring in serverless environments requires a fundamentally different approach compared to traditional infrastructure. The ephemeral nature of serverless functions, coupled with the lack of direct control over the underlying network infrastructure, presents significant challenges. Effective monitoring necessitates a shift from traditional network-based tools to solutions that integrate with serverless platform services and leverage event-driven architectures. This ensures visibility into network traffic and security events within the dynamic and rapidly changing serverless landscape.

Implementing Network Security Monitoring

Implementing network security monitoring in a serverless environment involves a multi-faceted approach, focusing on collecting, analyzing, and responding to network-related events. This requires careful consideration of the serverless platform’s capabilities and integration with security tools.

- Leveraging Platform-Provided Logs: Serverless platforms, such as AWS Lambda, Azure Functions, and Google Cloud Functions, log function invocations, errors, and other relevant events. Network-level detail in these logs is usually limited. Outbound traffic typically becomes visible only through additional sources, such as VPC flow logs for functions attached to a VPC. Analyzing these logs is the first step in understanding network behavior and identifying potential security threats.

- Integrating with Security Information and Event Management (SIEM) Systems: SIEM systems provide a centralized platform for collecting, analyzing, and correlating security events from various sources. Integrating serverless logs with a SIEM allows for comprehensive threat detection and incident response.

- Utilizing API Gateway Logs: API gateways often act as the entry point for serverless applications. Their logs reveal request patterns, traffic volume, and potentially malicious activity, and show how the API is actually being used.

- Employing Network-Level Security Tools (Where Applicable): While direct access to the underlying network infrastructure is limited, some serverless platforms offer features like VPC (Virtual Private Cloud) support or network security groups. These features can be used to apply network-level security controls and monitor traffic.

- Implementing Intrusion Detection and Prevention Systems (IDS/IPS): Some serverless platforms support integration with IDS/IPS solutions. These systems can analyze network traffic for malicious activity and automatically block or alert on suspicious events.

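- Monitoring DNS Requests: Monitoring DNS requests can reveal lookups of malicious domains, such as command-and-control or data exfiltration endpoints. In serverless environments, the underlying network is largely hidden from the user. DNS logs are therefore one of the few direct views of the outbound connections a function attempts.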
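DNS monitoring can start with a simple classification of each queried name against a threat-intelligence block list and an egress allowlist, as in the Python sketch below. Where the query names come from depends on the platform; one example is Amazon Route 53 Resolver query logs for functions running in a VPC. The domain lists are hypothetical. A name classified as `unexpected` is a candidate for an alert rather than an automatic block.

```python
from __future__ import annotations

# Hypothetical lists; in practice these come from threat-intelligence feeds and
# from the set of services the application is known to call.
BLOCKLIST = {"malicious.example"}
ALLOWED_SUFFIXES = ("amazonaws.com", "example.com")


def _matches(domain: str, suffix: str) -> bool:
    """True if `domain` equals `suffix` or is a subdomain of it."""
    return domain == suffix or domain.endswith("." + suffix)


def classify_query(query_name: str) -> str:
    domain = query_name.rstrip(".").lower()  # DNS logs often include the trailing root dot
    if any(_matches(domain, bad) for bad in BLOCKLIST):
        return "blocked"
    if any(_matches(domain, ok) for ok in ALLOWED_SUFFIXES):
        return "allowed"
    return "unexpected"


if __name__ == "__main__":
    for name in ("dynamodb.us-east-1.amazonaws.com.", "c2.malicious.example.", "notamazonaws.com."):
        print(name, classify_query(name))
```

Matching on whole labels (the `"." + suffix` check) prevents a look-alike name such as `notamazonaws.com` from being treated as trusted.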

Challenges of Monitoring Network Traffic

Monitoring network traffic in a serverless setup presents several challenges due to the dynamic and ephemeral nature of the environment. These challenges necessitate a proactive and adaptable approach to security monitoring.

- Ephemeral Nature of Functions: Serverless functions are typically short-lived, making it difficult to capture and analyze network traffic associated with a specific function invocation.

- Lack of Direct Network Control: Serverless providers manage the underlying network infrastructure, limiting direct access for network monitoring tools.

- Scalability and Volume of Data: Serverless applications can scale rapidly, generating a large volume of log data that needs to be processed and analyzed in real-time.

- Complexity of Distributed Architectures: Serverless applications often involve complex architectures with multiple functions, APIs, and services, making it challenging to correlate network events across different components.

- Data Privacy and Compliance: Serverless environments often handle sensitive data, requiring robust security measures to protect data privacy and comply with regulations.

Comparing Network Security Monitoring Solutions

Several network security monitoring solutions are available for serverless environments, each with its strengths and weaknesses. The choice of solution depends on the specific requirements and the serverless platform being used.

- Cloud Provider Native Solutions: Cloud providers like AWS, Azure, and Google Cloud offer native monitoring services, such as Amazon CloudWatch, Azure Monitor, and Google Cloud Observability (formerly the Google Cloud Operations suite). These services provide built-in logging, monitoring, and alerting capabilities. Because they are part of the platform, they integrate with serverless functions with little additional setup.

- Third-Party SIEM and Security Tools: Third-party SIEM and security tools, such as Splunk, Sumo Logic, and Datadog, provide more advanced features for log aggregation, analysis, and threat detection. These solutions often offer integrations with multiple cloud providers and serverless platforms.

- API Gateway-Specific Solutions: Solutions designed specifically for API gateways, such as those offered by API security vendors, provide detailed insight into API traffic patterns, security vulnerabilities, and potential threats. They work at the point where most external requests enter a serverless application.

- Custom Solutions: In some cases, organizations may need to develop custom solutions to meet their specific security monitoring needs. This may involve building custom log aggregation pipelines, threat detection rules, and alerting mechanisms.

Identity and Access Management (IAM) Monitoring

Effective monitoring of Identity and Access Management (IAM) is crucial in serverless environments to mitigate the risk of unauthorized access and data breaches. IAM controls govern access to resources, and therefore, any misconfiguration or malicious activity related to IAM can have severe consequences. This section details methods for monitoring IAM roles and permissions, procedures for detecting unauthorized access attempts, and best practices for IAM auditing.

Monitoring IAM Roles and Permissions

Monitoring IAM roles and permissions requires a multi-faceted approach, incorporating continuous assessment of configurations and activity. This includes scrutinizing role definitions, permission assignments, and the actual use of those permissions.

- Regular Audits of Role Configurations: This involves periodic reviews of IAM roles, including their attached policies and trust relationships. Ensure roles are defined with the principle of least privilege, granting only the necessary permissions. This process should involve comparing current configurations against established security baselines. For instance, an audit might reveal that a specific role has unnecessary access to a sensitive database, which should be immediately addressed.

- Analyzing Permission Usage: Monitor how the permissions granted to each role are actually used. This involves tracking which API calls each role makes and comparing them with its assigned permissions. Audit-logging services such as AWS CloudTrail record these calls, and IAM last-accessed information shows which services a role has actually used. If a role consistently uses only a small subset of its assigned permissions, it is probably over-privileged and its policies should be narrowed.

- Monitoring for Policy Changes: Implement alerts for any modifications to IAM policies, including both changes to existing policies and the creation of new ones. Unapproved changes to policies can introduce vulnerabilities. A notification system should trigger an alert to the security team whenever a policy is altered, enabling immediate review and validation.

- Analyzing Trust Relationships: Closely examine the trust relationships defined for each role. Trust relationships determine which entities (e.g., AWS accounts, services) can assume a role. Ensure that trust relationships are configured correctly and limit the scope of entities that can assume a role to only those that need it. For example, if a role is trusted by an external account, verify that this trust is absolutely necessary and that the external account is properly secured.
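On AWS, one approach to the policy-change alerts described above is to filter CloudTrail records for IAM API calls that modify permissions. The sketch below assumes the records are already parsed into dicts, for example by an EventBridge rule or a log subscription. The event names are real IAM API actions, but the set is deliberately partial, and the alert format is hypothetical.

```python
# IAM API actions that create, change, attach, or detach permissions policies,
# plus UpdateAssumeRolePolicy, which changes a role's trust relationship.
# Deliberately partial: extend it to match your own alerting policy.
POLICY_CHANGE_EVENTS = {
    "CreatePolicy", "CreatePolicyVersion", "DeletePolicy", "DeletePolicyVersion",
    "SetDefaultPolicyVersion", "AttachRolePolicy", "DetachRolePolicy",
    "PutRolePolicy", "DeleteRolePolicy", "AttachUserPolicy", "PutUserPolicy",
    "UpdateAssumeRolePolicy",
}


def policy_change_alerts(records: list[dict]) -> list[dict]:
    """Return a summary for every CloudTrail record that changes an IAM policy."""
    alerts = []
    for record in records:
        if (
            record.get("eventSource") == "iam.amazonaws.com"
            and record.get("eventName") in POLICY_CHANGE_EVENTS
        ):
            alerts.append(
                {
                    "time": record.get("eventTime"),
                    "action": record["eventName"],
                    "actor": record.get("userIdentity", {}).get("arn", "unknown"),
                    "source_ip": record.get("sourceIPAddress"),
                    # Failed attempts are kept as well; they deserve review too.
                    "succeeded": "errorCode" not in record,
                }
            )
    return alerts
```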

Detecting Unauthorized Access Attempts

Detecting unauthorized access attempts involves establishing mechanisms to identify suspicious activity, such as attempts to assume roles without authorization, the use of compromised credentials, or unusual access patterns.

- Monitoring for Role Assumption Failures: Implement monitoring for failed attempts to assume IAM roles. Such failures can indicate a malicious actor attempting to gain unauthorized access. Audit logs such as AWS CloudTrail record these failures, along with details such as the source IP address and the identity that made the request.

- Analyzing Unusual Login Patterns: Monitor for unusual login patterns, such as logins from unexpected locations or at unusual times. This requires establishing a baseline of normal login behavior. Any deviation from this baseline should trigger an alert. For example, a sudden login from a geographic location that is not typical for the organization warrants immediate investigation.

- Monitoring for Unauthorized API Calls: Track API calls made by IAM users and roles. This includes identifying calls to sensitive APIs that should only be accessed by authorized users or roles. If a role is making API calls to delete data or modify security settings without authorization, an alert should be generated immediately.

- Implementing Anomaly Detection: Employ anomaly detection techniques to identify unusual activity that may indicate a security breach. This involves using machine learning algorithms to analyze log data and identify deviations from established patterns. These systems can identify suspicious behavior, such as unusual API call frequency or the use of privileged commands by an unexpected user or role.
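The first detection above can be sketched by counting denied `AssumeRole` calls per source IP in a batch of CloudTrail records. CloudTrail typically records such failures with an `eventSource` of `sts.amazonaws.com`, an `eventName` of `AssumeRole`, and an `errorCode` of `AccessDenied`. The threshold is illustrative, and so is the assumption that each batch covers a fixed time window (say, 15 minutes).

```python
from collections import Counter

# Hypothetical threshold: denied attempts from one source within the window.
FAILURE_THRESHOLD = 5


def is_failed_assume_role(record: dict) -> bool:
    """True for a CloudTrail record of an AssumeRole call that was denied."""
    return (
        record.get("eventSource") == "sts.amazonaws.com"
        and record.get("eventName") == "AssumeRole"
        and record.get("errorCode") == "AccessDenied"
    )


def suspicious_sources(records: list[dict], threshold: int = FAILURE_THRESHOLD) -> dict[str, int]:
    """Map source IP to failure count for sources at or above the threshold."""
    failures = Counter(
        record.get("sourceIPAddress", "unknown")
        for record in records
        if is_failed_assume_role(record)
    )
    return {ip: count for ip, count in failures.items() if count >= threshold}
```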

Best Practices for IAM Auditing

IAM auditing is essential for maintaining a secure serverless environment. Regular audits help to identify vulnerabilities, ensure compliance with security policies, and verify the effectiveness of IAM controls.

- Establish a Regular Audit Schedule: Conduct IAM audits on a regular basis. The frequency of audits should be determined by the sensitivity of the data and the criticality of the resources being protected. More critical environments should be audited more frequently.

- Define Audit Scope: Clearly define the scope of each audit. This should include the specific IAM resources, policies, and configurations that will be reviewed. The scope should align with the organization’s security policies and compliance requirements.

- Use Automated Tools: Utilize automated tools to streamline the auditing process. These tools can automate tasks such as reviewing IAM policies, identifying over-privileged roles, and detecting potential misconfigurations. Automated tools save time and improve the accuracy of audits.

- Document Audit Findings: Document all audit findings, including any identified vulnerabilities, misconfigurations, and recommended remediation steps. The documentation should include details such as the date of the audit, the auditors involved, and the specific findings.

- Implement Remediation Procedures: Establish procedures for remediating any identified vulnerabilities or misconfigurations. These procedures should include assigning responsibility for remediation tasks, setting timelines for completion, and verifying that the remediation steps have been implemented successfully.

- Maintain Audit Trails: Preserve detailed audit trails of all IAM-related activities. These trails should include information such as who made changes, what changes were made, and when the changes were made. Audit trails are essential for forensic investigations and compliance purposes.

- Train Personnel: Provide regular training to personnel on IAM best practices and security policies. Training should cover topics such as the principle of least privilege, role-based access control, and the importance of secure credential management.
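Managed services such as IAM Access Analyzer go further, but one simple automated audit check might look like the sketch below. It flags `Allow` statements that grant wildcard actions or resources. It examines only `Action` and `Resource`, ignoring `NotAction`, `NotResource`, and conditions, so treat it as a first-pass filter rather than a complete policy analyzer.

```python
def _as_list(value):
    """IAM policy elements may be a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def find_wildcard_grants(policy: dict) -> list[str]:
    """Return human-readable findings for over-broad Allow statements."""
    findings = []
    for i, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements restrict access, so wildcards there are fine
        for action in _as_list(stmt.get("Action", [])):
            # "*" grants every action; "s3:*" grants every action in a service.
            if action == "*" or action.endswith(":*"):
                findings.append(f"Statement {i}: wildcard action '{action}'")
        if "*" in _as_list(stmt.get("Resource", [])):
            findings.append(f"Statement {i}: wildcard resource '*'")
    return findings
```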

Code Security and Vulnerability Scanning

Securing serverless code is paramount to maintaining a robust and trustworthy application. Due to the distributed nature of serverless architectures, traditional security approaches are often insufficient. Identifying and mitigating vulnerabilities in the code itself is a critical step in protecting against attacks. This section explores techniques for scanning serverless function code, integrating vulnerability scanning into the development lifecycle, and applying security best practices.

Techniques for Scanning Serverless Function Code for Vulnerabilities

Various techniques can be employed to scan serverless function code for vulnerabilities. These techniques range from static analysis to dynamic analysis and depend on the programming languages used and the complexity of the application.

- Static Application Security Testing (SAST): SAST involves analyzing the source code without executing it. This method identifies potential vulnerabilities like code injection, cross-site scripting (XSS), and insecure coding practices. SAST tools examine the code for patterns that match known vulnerabilities. For example, a SAST tool might flag a function that directly concatenates user input into an SQL query, a classic example of a SQL injection vulnerability.

Several open-source and commercial SAST tools are available, such as SonarQube, Semmle (whose technology is now GitHub CodeQL), and Coverity. These tools can be integrated into the development pipeline to provide continuous feedback on code quality and security.

- Dynamic Application Security Testing (DAST): DAST, also known as black-box testing, involves testing the application from the outside, as a user would. DAST tools interact with the running application to identify vulnerabilities by sending malicious input and observing the application’s response. DAST tools can detect vulnerabilities such as cross-site scripting (XSS), SQL injection, and other input validation flaws. DAST tools are particularly useful for identifying vulnerabilities that are difficult to detect through static analysis.

Examples of DAST tools include OWASP ZAP and Burp Suite. These tools simulate attacks to test the application’s resilience.

- Software Composition Analysis (SCA): SCA focuses on identifying and managing open-source components and dependencies used in the application. SCA tools scan dependency manifests and packaged code for third-party libraries and frameworks and compare them against vulnerability databases. This helps identify vulnerabilities in dependencies, such as outdated versions with known security flaws. SCA tools also provide information about license compliance. Examples of SCA tools include Snyk, WhiteSource (now Mend), and Black Duck.

These tools are crucial in serverless environments, where the use of third-party libraries is common.

- Fuzzing: Fuzzing involves providing invalid, unexpected, or random data as input to the application to detect crashes, unhandled exceptions, memory leaks, and other vulnerabilities. It is a dynamic technique: the code must execute, either as a deployed function or as a handler invoked locally in a test harness. Static analysis results can still help aim the fuzzer at risky input paths. Fuzzing tools generate a large volume of inputs to test the application’s robustness. This makes them particularly effective at uncovering flaws in complex code or in areas where input validation is weak, flaws that other methods may miss.
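The SAST example above, where user input is concatenated into a SQL query, is easy to reproduce. The sketch below uses Python's built-in `sqlite3` module as a stand-in for whatever database a function actually talks to; the table and data are hypothetical. `find_user_unsafe` shows the pattern a SAST tool would flag, and `find_user_safe` shows the parameterized fix.

```python
import sqlite3


def setup_db() -> sqlite3.Connection:
    """Create a small in-memory database with hypothetical sample data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is concatenated directly into the SQL text.
    query = "SELECT email FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized: the driver passes `name` as data, never as SQL.
    return conn.execute("SELECT email FROM users WHERE name = ?", (name,)).fetchall()
```

With the input `' OR '1'='1`, the unsafe version returns every row because the payload rewrites the `WHERE` clause. The safe version treats the same string as a literal name and returns no rows.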

Designing a Process for Integrating Vulnerability Scanning into the Development Lifecycle

Integrating vulnerability scanning into the development lifecycle ensures that security is addressed from the outset. This proactive approach helps to identify and fix vulnerabilities early in the process, reducing the cost and effort required to address them later. The process should include the following steps.

- Requirements Gathering and Design Phase: Begin by defining security requirements and identifying potential threats. This involves analyzing the application’s functionality, data flows, and user interactions to determine the security needs. Design the application architecture with security in mind, including secure coding practices and input validation strategies.

- Development Phase: Integrate SAST tools into the development environment to provide real-time feedback on code quality and security. Developers should use secure coding practices, such as input validation, output encoding, and avoiding hardcoded secrets. Regularly scan the code with SAST tools to identify and address vulnerabilities.

- Testing Phase: Implement DAST and SCA tools to test the application’s security. Conduct penetration testing to simulate real-world attacks and identify vulnerabilities that may not be detected by automated tools. SCA tools should be used to identify vulnerabilities in open-source dependencies.

- Deployment Phase: Before deploying the application, ensure that all identified vulnerabilities have been addressed. Perform a final security review to verify that all security measures are in place. Automate security checks as part of the deployment pipeline to ensure consistency.

- Monitoring and Maintenance Phase: Continuously monitor the application for security threats. Use real-time threat detection and alerting systems to identify and respond to security incidents. Regularly update dependencies and apply security patches to address new vulnerabilities.
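Automating security checks in the deployment pipeline usually means adding a gate that fails the build when a scanner reports serious findings. The sketch below assumes a hypothetical JSON report of the form `{"findings": [{"id": ..., "severity": ..., "location": ...}]}`. Real scanners emit their own formats (SARIF is a common standard), so the parsing step would need adapting.

```python
import json
import sys

# Severities that should stop a deployment; adjust to your own risk policy.
BLOCKING_SEVERITIES = {"CRITICAL", "HIGH"}


def blocking_findings(findings: list[dict]) -> list[dict]:
    """Return findings whose severity is in the blocking set (case-insensitive)."""
    return [
        f for f in findings
        if str(f.get("severity", "")).upper() in BLOCKING_SEVERITIES
    ]


def gate(report_json: str) -> int:
    """Return a process exit code: 1 if any blocking finding exists, else 0."""
    findings = json.loads(report_json).get("findings", [])
    blocked = blocking_findings(findings)
    for f in blocked:
        print(
            f"BLOCKING {f.get('severity')}: {f.get('id', '?')} in {f.get('location', '?')}",
            file=sys.stderr,
        )
    return 1 if blocked else 0
```

A pipeline step could call `sys.exit(gate(pathlib.Path("scan-report.json").read_text()))` so that a non-zero exit code stops the deployment; the file name is a placeholder.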

Examples of Security Best Practices for Serverless Code

Implementing security best practices in serverless code is essential for protecting against various threats. These practices cover different aspects of the code, from input validation to access control.

- Input Validation: Validate all inputs to ensure they conform to expected formats and ranges. This reduces exposure to attacks such as SQL injection, cross-site scripting (XSS), and command injection. It works best alongside parameterized queries and output encoding, not as a replacement for them. Use allowlists, regular expressions, and schema validation to reject unexpected input.

- Output Encoding: Encode output for the context in which it will be rendered (HTML body, HTML attribute, JavaScript, or URL) so that user-supplied data cannot execute as script in a user’s browser. This is the primary defense against XSS attacks.

- Least Privilege: Grant serverless functions only the necessary permissions to perform their tasks. Avoid using broad permissions that could expose the application to unnecessary risks. Use IAM roles and policies to define the function’s access to other resources.

- Secure Secrets Management: Store sensitive information, such as API keys and database credentials, securely. Avoid hardcoding secrets directly into the code. Use a secrets management service, such as AWS Secrets Manager or HashiCorp Vault, to store and manage secrets securely.

- Dependency Management: Use SCA tools to identify and track open-source components, and update dependencies regularly so that known vulnerabilities are patched promptly.

- Logging and Monitoring: Implement comprehensive logging and monitoring to track function execution, errors, and security events. Monitor logs for suspicious activity and set up alerts to notify administrators of potential threats.

- Code Signing: Sign code to verify its integrity and authenticity. This helps ensure that the code has not been tampered with and comes from a trusted source. Code signing provides a layer of protection against malicious code injection.

- Rate Limiting: Implement rate limiting to prevent abuse and denial-of-service (DoS) attacks. Limit the number of requests a function can handle within a specific time period. Rate limiting helps to protect the application’s resources and availability.

- Error Handling: Implement robust error handling to prevent sensitive information from being exposed. Avoid displaying detailed error messages to users that could reveal internal system information. Instead, provide generic error messages and log detailed error information for debugging purposes.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and address vulnerabilities. These audits should be performed by security professionals to ensure that the application is secure.
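The error-handling practice can be sketched as follows, assuming an AWS Lambda function behind an API Gateway proxy integration (hence the `statusCode`/`body` response shape). The `process` function is a hypothetical stand-in for business logic. Full details, including the stack trace, go to the function's logs. The caller receives only a generic message plus an error ID that support staff can use to find the matching log entry.

```python
import json
import logging
import uuid

logger = logging.getLogger("handler")


def process(event: dict) -> dict:
    # Hypothetical business logic; raises KeyError if fields are missing.
    return {"total": event["quantity"] * event["unit_price"]}


def handler(event: dict, context=None) -> dict:
    try:
        result = process(event)
        return {"statusCode": 200, "body": json.dumps(result)}
    except Exception:
        # Log full details (including the traceback) server-side only.
        error_id = str(uuid.uuid4())
        logger.exception("Unhandled error %s", error_id)
        # Return a generic message that reveals nothing about internals.
        return {
            "statusCode": 500,
            "body": json.dumps({"error": "Internal error", "errorId": error_id}),
        }
```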

Compliance and Auditing

Achieving and maintaining compliance in a serverless environment is crucial for demonstrating adherence to industry standards, regulatory requirements, and internal security policies. This involves a systematic approach that encompasses defining compliance objectives, implementing necessary controls, and regularly auditing the environment to verify their effectiveness. A robust compliance strategy not only mitigates risks but also builds trust with stakeholders.

Steps for Achieving Compliance in a Serverless Environment

The process of achieving compliance in a serverless environment involves a series of well-defined steps. Each step contributes to a comprehensive compliance posture, ensuring that the serverless applications and infrastructure align with relevant regulations and standards.

- Define Compliance Scope and Objectives: Begin by identifying the specific compliance frameworks and regulations that apply to the organization and the serverless applications. This may include standards like PCI DSS, HIPAA, GDPR, or specific industry regulations. Define clear compliance objectives based on these frameworks, specifying the controls and measures required. For example, if complying with PCI DSS, the objectives might include securing cardholder data, implementing access controls, and regularly monitoring for security threats.

- Assess the Current State: Conduct a thorough assessment of the current serverless environment. This involves evaluating existing security controls, identifying gaps, and understanding the architecture. Tools like security scanners and vulnerability assessments can be used to identify weaknesses. This assessment serves as a baseline for future improvements.

- Implement Necessary Controls: Implement the security controls required to meet the defined compliance objectives. This includes configuring IAM roles and policies to enforce the principle of least privilege, enabling logging and monitoring, and implementing security best practices for code development and deployment. Consider using Infrastructure as Code (IaC) to automate the deployment and configuration of these controls.

- Automate Security Checks and Monitoring: Implement automated security checks and monitoring to continuously verify the effectiveness of the implemented controls. This can include automated vulnerability scanning, compliance checks, and security monitoring tools that alert on potential security incidents or compliance violations.

- Establish Incident Response Plan: Develop and document an incident response plan that outlines the procedures for responding to security incidents and compliance violations. This plan should include steps for containment, eradication, recovery, and post-incident analysis. Regularly test the incident response plan to ensure its effectiveness.

- Maintain Documentation: Document all compliance-related activities, including the compliance scope, objectives, implemented controls, assessment results, and incident response procedures. This documentation is essential for demonstrating compliance to auditors and stakeholders.

- Regularly Review and Update: Continuously review and update the compliance strategy and implemented controls to adapt to changes in regulations, technology, and the threat landscape. This involves periodic audits, vulnerability assessments, and penetration testing.
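Automated compliance checks are often expressed as rules evaluated against resource configurations; managed services such as AWS Config rules work on this principle. The sketch below shows the idea in miniature. The configuration keys (`encrypted`, `logging_enabled`, `public`) and rule names are hypothetical. A real implementation would read configurations from the provider's APIs or from Infrastructure as Code templates.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    resource: str
    rule: str


# Each rule pairs a name with a predicate that returns True when compliant.
# The configuration keys are hypothetical placeholders.
RULES = [
    ("encryption-at-rest-enabled", lambda cfg: cfg.get("encrypted") is True),
    ("access-logging-enabled", lambda cfg: cfg.get("logging_enabled") is True),
    ("no-public-access", lambda cfg: cfg.get("public") is not True),
]


def evaluate(resources: dict[str, dict]) -> list[Finding]:
    """Return one Finding per (resource, rule) pair that is non-compliant."""
    findings = []
    for name, cfg in resources.items():
        for rule_name, is_compliant in RULES:
            if not is_compliant(cfg):
                findings.append(Finding(name, rule_name))
    return findings
```

Running such checks on every deployment, and on a schedule, turns the periodic review described above into continuous verification.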

Framework for Conducting Security Audits of Serverless Applications

A well-defined framework is essential for conducting effective security audits of serverless applications. This framework provides a structured approach to assess the security posture, identify vulnerabilities, and verify compliance. The framework ensures that the audit is comprehensive, consistent, and aligned with industry best practices.

- Planning and Scope Definition: Define the scope of the audit, including the specific serverless applications, services, and infrastructure to be assessed. Identify the applicable compliance requirements and security standards. Develop an audit plan that outlines the objectives, scope, methodology, and timeline of the audit.

- Information Gathering: Gather information about the serverless environment, including the architecture, configuration, security controls, and operational procedures. This involves reviewing documentation, interviewing stakeholders, and using tools to gather data. Examples of information to gather include IAM policies, network configurations, logging configurations, and deployment pipelines.

- Assessment of Security Controls: Evaluate the effectiveness of the security controls implemented in the serverless environment. This includes assessing IAM configurations, network security, data encryption, code security, and logging and monitoring capabilities. The assessment should determine if the controls are properly implemented, configured, and maintained.

- Vulnerability Scanning and Penetration Testing: Conduct vulnerability scanning and penetration testing to identify vulnerabilities in the serverless applications and infrastructure. Vulnerability scanning involves using automated tools to identify known vulnerabilities. Penetration testing simulates real-world attacks to assess the effectiveness of security controls.

- Review of Logs and Monitoring Data: Review logs and monitoring data to identify potential security incidents, anomalies, and compliance violations. Analyze logs from various sources, such as function and application logs, platform audit logs (for example, AWS CloudTrail), and API gateway and network logs. Verify that monitoring alerts are properly configured and that incidents are promptly addressed.

- Compliance Verification: Verify that the serverless environment complies with the applicable compliance requirements and security standards. This involves reviewing documentation, assessing the implementation of security controls, and testing their effectiveness.

- Reporting and Remediation: Prepare a comprehensive audit report that summarizes the findings, identifies vulnerabilities and weaknesses, and provides recommendations for remediation. Prioritize the identified issues based on their severity and impact. Develop a remediation plan to address the identified vulnerabilities and weaknesses.
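One simple way to prioritize audit findings is to combine severity with exposure. Under this scheme, a high-severity issue on an internet-facing function outranks a critical one in an internal batch job. The weights and the 1-3 exposure scale below are hypothetical and serve only to illustrate the ranking. Many teams use an established scoring system such as CVSS instead.

```python
from dataclasses import dataclass

# Hypothetical weights for each severity level.
SEVERITY_WEIGHT = {"critical": 4, "high": 3, "medium": 2, "low": 1}


@dataclass
class AuditFinding:
    title: str
    severity: str   # one of the SEVERITY_WEIGHT keys
    exposure: int   # hypothetical scale: 3 = internet-facing, 1 = internal only

    @property
    def priority(self) -> int:
        """Higher values should be remediated first."""
        return SEVERITY_WEIGHT[self.severity] * self.exposure


def prioritize(findings: list[AuditFinding]) -> list[AuditFinding]:
    """Sort findings from highest to lowest priority."""
    return sorted(findings, key=lambda f: f.priority, reverse=True)
```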

Importance of Documenting Security Measures

Comprehensive documentation of security measures is a critical component of a robust serverless security strategy. Documentation serves multiple purposes, including facilitating compliance, enabling effective incident response, and promoting knowledge sharing. Without proper documentation, it becomes difficult to demonstrate adherence to security standards and maintain a strong security posture.

- Demonstrating Compliance: Documentation provides evidence of compliance with regulatory requirements, industry standards, and internal security policies. It allows auditors to verify that security measures are in place and effective. For instance, documenting the implementation of multi-factor authentication, access controls, and data encryption demonstrates adherence to PCI DSS requirements.

- Facilitating Incident Response: Documentation helps in the rapid and effective response to security incidents. Incident response plans, configuration details, and system diagrams provide the information needed to contain, eradicate, and recover from security breaches.

- Enabling Knowledge Sharing and Training: Documentation promotes knowledge sharing and facilitates training for team members. It provides a central repository of information about the serverless environment, security controls, and operational procedures.

- Supporting Change Management: Documentation supports effective change management by providing a baseline of the current state of the serverless environment. When changes are made, the documentation can be updated to reflect the new configurations and procedures.

- Improving Security Posture: Documentation forces a structured approach to security, which helps identify gaps and weaknesses in the security posture. The process of creating and maintaining documentation also encourages continuous improvement of security practices.

Serverless Function Security Best Practices

Securing serverless functions is paramount to protect the application and its data from potential threats. Implementing robust security measures is essential, given the distributed nature of serverless architectures and the inherent reliance on external services. This involves a multifaceted approach that encompasses code, configuration, and operational practices.

Function Security Best Practices

Adhering to best practices significantly enhances the security posture of serverless functions. These practices mitigate risks associated with code execution, data handling, and access control.

- Principle of Least Privilege: Grant functions only the necessary permissions required for their operation. Avoid broad, unrestricted access to resources. For example, a function that only reads data from a database should not have write access. This minimizes the potential damage from a compromised function.

- Input Validation and Sanitization: Validate and sanitize all input data to prevent injection attacks (e.g., SQL injection, cross-site scripting). Implement robust input validation schemas to ensure data conforms to expected formats and ranges. For instance, when accepting user input for a product ID, validate that it is an integer within a specific range.

- Secure Dependencies: Regularly update function dependencies to patch vulnerabilities. Use a dependency management tool to track and manage all dependencies. Automated scanning tools can identify and flag vulnerable dependencies, allowing for prompt remediation. For example, a security audit might reveal a vulnerable version of a library; updating the library is crucial.

- Code Signing and Integrity Checks: Implement code signing to verify the integrity of function code and prevent tampering. Use checksums or digital signatures to ensure the code executed is the authorized version. This safeguards against malicious code injection.

- Encryption at Rest and in Transit: Encrypt sensitive data both when stored (at rest) and when transmitted (in transit). Manage the encryption keys securely, preferably with a key management service. For example, enable encryption for data stored in a database and use HTTPS for all API calls. This protects data confidentiality even if storage or network traffic is exposed.

- Rate Limiting and Throttling: Implement rate limiting and throttling to protect functions from denial-of-service (DoS) attacks. Define limits on the number of requests a function can handle within a specific timeframe. This prevents malicious actors from overwhelming the function with requests.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration tests to identify vulnerabilities and weaknesses. Simulate real-world attacks to assess the effectiveness of security controls. These tests help to proactively address security gaps.

- Monitor Function Execution: Implement comprehensive monitoring and logging to detect and respond to security incidents. Collect logs related to function invocations, errors, and security events. This allows for timely detection of suspicious activity.

- Secure Secrets Management: Never hardcode sensitive information (e.g., API keys, database credentials) in function code. Use a secrets management service to store and manage secrets securely. This minimizes the risk of exposing sensitive data.

- Use Serverless-Specific Security Tools: Leverage security tools specifically designed for serverless environments. These tools can automate security tasks such as vulnerability scanning, dependency management, and configuration analysis.
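The product ID example from the input-validation practice can be made concrete. This sketch accepts only an integer or a string of ASCII digits and then enforces a range. The bounds and the `ValidationError` type are hypothetical. It follows an allowlist approach: anything that is not plainly a number is rejected, rather than having dangerous characters stripped out.

```python
MIN_PRODUCT_ID = 1
MAX_PRODUCT_ID = 999_999  # hypothetical upper bound


class ValidationError(ValueError):
    """Raised when user input fails validation."""


def parse_product_id(raw) -> int:
    """Return a validated product ID or raise ValidationError."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(raw, bool):
        raise ValidationError("product_id must be an integer")
    if isinstance(raw, int):
        value = raw
    elif isinstance(raw, str) and raw.isascii() and raw.isdigit():
        # Only plain ASCII digits: no signs, spaces, or SQL fragments.
        value = int(raw)
    else:
        raise ValidationError("product_id must be an integer")
    if not MIN_PRODUCT_ID <= value <= MAX_PRODUCT_ID:
        raise ValidationError(
            f"product_id must be between {MIN_PRODUCT_ID} and {MAX_PRODUCT_ID}"
        )
    return value
```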

Securing Sensitive Data Within Functions

Protecting sensitive data within serverless functions requires careful consideration of storage, access, and handling practices. Secure coding practices and the use of specialized services are crucial.

- Secret Storage: Store sensitive data, such as API keys, database credentials, and other secrets, in a secure secret management service. Avoid hardcoding secrets directly into function code or configuration files. Examples of secret management services include AWS Secrets Manager, Azure Key Vault, and Google Cloud Secret Manager. This ensures that secrets are not exposed in the codebase.

- Encryption: Encrypt sensitive data at rest and in transit. For data stored in databases, such as customer records, use the encryption provided by the database service. For data transmitted over the network, use HTTPS.

- Data Masking and Tokenization: Mask or tokenize sensitive data to reduce the risk of exposure by replacing it with non-sensitive substitutes. For instance, display only the last four digits of a credit card number, or tokenize personally identifiable information (PII).

- Input Validation and Sanitization: Validate and sanitize all input data to prevent injection attacks and data breaches. Ensure that all user-supplied data conforms to expected formats and ranges.

- Least Privilege Access: Grant functions only the necessary permissions to access data. Avoid giving functions excessive access rights.

- Data Residency and Compliance: Ensure that data is stored and processed in compliance with relevant regulations (e.g., GDPR, HIPAA). Consider data residency requirements and select regions that meet compliance needs.

- Secure Logging and Monitoring: Avoid logging sensitive data in plain text. Implement proper logging and monitoring to detect unauthorized access or data breaches.
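Masking and log redaction can be combined in a logging filter. The sketch below masks anything that looks like a 13- to 16-digit card number before a log record is written. The regular expression is an approximation: it does not run a Luhn check and may mask other long digit runs. It also covers only card numbers; other PII would need its own patterns or, better, should never reach the logger at all.

```python
import logging
import re

# Approximate pattern: 13-16 digits, optionally separated by single spaces
# or hyphens. It can also match digit runs that are not card numbers.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def mask_card_number(card: str) -> str:
    """Keep only the last four digits, replacing the rest with '*'."""
    digits = re.sub(r"\D", "", card)
    if len(digits) <= 4:
        return "*" * len(digits)
    return "*" * (len(digits) - 4) + digits[-4:]


class RedactCardNumbers(logging.Filter):
    """Logging filter that masks card-like numbers before a record is emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg and args first
        record.msg = CARD_PATTERN.sub(lambda m: mask_card_number(m.group()), message)
        record.args = ()  # args are already merged into msg
        return True
```

Attach the filter to a handler, for example `handler.addFilter(RedactCardNumbers())`. Filters attached to a logger do not apply to records propagated from its child loggers, so the handler is the more reliable place.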

Securing Function Triggers and Event Sources

Securing function triggers and event sources is crucial to ensure that functions are invoked only by authorized sources and in a controlled manner. This involves securing the configuration and access control mechanisms associated with triggers.

- Authentication and Authorization: Implement robust authentication and authorization mechanisms to control access to function triggers. Require authentication for all external requests to the function, for example with API keys, JSON Web Tokens (JWTs), or OAuth 2.0.

- Trigger Configuration: Configure triggers securely. For example, when using an API Gateway trigger, ensure that the API Gateway is configured with appropriate security settings, such as authentication and authorization.

- Event Source Validation: Validate the event source that triggers the function. Ensure that events originate from trusted sources. Implement input validation to verify the data in the event.

- Access Control Policies: Define and enforce strict access control policies for function triggers, so that only the intended sources can invoke a function and the function can reach only the resources it needs. For example, use IAM policies to restrict a function’s access to specific S3 buckets.

- Monitoring and Auditing: Monitor function invocations and audit access to triggers. Detect and respond to unauthorized access attempts or suspicious activity.

- Rate Limiting and Throttling: Implement rate limiting and throttling on function triggers to prevent abuse and denial-of-service attacks. Limit the number of invocations from a specific source within a given time frame.

- Network Security: Secure the network environment in which the function operates. For example, configure VPCs and subnets to restrict access to function resources.

- API Gateway Security: When using API Gateway, configure API keys, usage plans, and rate limiting to protect APIs. Use authentication and authorization mechanisms to control access to API endpoints.

- Event Source Filtering: Filter events based on their content to prevent unwanted function invocations. This reduces the attack surface.
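For event sources that can sign their payloads, such as webhook providers, source validation can be as simple as checking an HMAC. This sketch is loosely modeled on schemes that several providers use. The sender signs a timestamp plus the raw request body with a shared secret. The function recomputes the signature and rejects stale timestamps to limit replay. The message layout, the five-minute window, and the parameter names are assumptions; in practice, follow the provider's documented scheme.

```python
import hashlib
import hmac
import time

MAX_SKEW_SECONDS = 300  # hypothetical replay window (five minutes)


def compute_signature(secret: bytes, timestamp: str, body: bytes) -> str:
    """Sign the timestamp together with the raw body so neither can be altered."""
    message = timestamp.encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_event(secret: bytes, timestamp: str, body: bytes, signature: str, now=None) -> bool:
    """Return True only for a fresh event carrying a valid signature."""
    now = time.time() if now is None else now
    try:
        ts = int(timestamp)
    except ValueError:
        return False
    if abs(now - ts) > MAX_SKEW_SECONDS:
        return False  # too old (or too far in the future): possible replay
    expected = compute_signature(secret, timestamp, body)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode(), signature.encode())
```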

Incident Response Planning

Developing a robust incident response plan is crucial for mitigating the impact of security breaches in serverless environments. The dynamic and distributed nature of serverless architectures presents unique challenges for incident response, necessitating a proactive and well-defined approach. A comprehensive plan enables rapid detection, containment, and remediation, minimizing downtime, data loss, and reputational damage.

Creating an Incident Response Plan for Serverless Security Incidents

An effective incident response plan outlines the procedures and responsibilities for handling security incidents. This plan must be tailored to the specific characteristics of the serverless environment, including the use of managed services, ephemeral resources, and event-driven architectures. The plan should encompass all phases of incident response: preparation, identification, containment, eradication, recovery, and post-incident activity.

Preparation involves establishing security policies, implementing security controls, and training personnel. Identification entails detecting and analyzing potential security incidents. Containment focuses on limiting the scope and impact of the incident. Eradication removes the cause of the incident. Recovery restores systems to their normal operational state. Post-incident activity includes analyzing the incident, documenting lessons learned, and improving the incident response plan.

- Define Roles and Responsibilities: Clearly delineate roles and responsibilities for each member of the incident response team. This includes defining the incident commander, technical leads, communications lead, and other relevant roles. Each role should have a documented set of responsibilities and contact information.

- Establish Communication Channels: Define communication protocols for internal and external stakeholders. This includes establishing secure communication channels for the incident response team and procedures for communicating with legal counsel, public relations, and regulatory bodies.

- Develop Incident Classification and Prioritization: Classify incidents based on their severity and potential impact. Establish a prioritization scheme to ensure that the most critical incidents are addressed first. This should include metrics to assess the potential impact on data confidentiality, integrity, and availability.

- Create Playbooks: Develop detailed playbooks for common incident types. Playbooks should provide step-by-step instructions for containment, eradication, and recovery. These playbooks should be regularly updated and tested.

- Document and Maintain an Inventory of Assets: Maintain an up-to-date inventory of all serverless resources, including functions, APIs, databases, and storage buckets. This inventory should include the location, owner, and security classification of each asset.

- Implement Automated Alerting and Monitoring: Configure automated alerting and monitoring to detect security incidents in real-time. This includes monitoring logs, network traffic, and other relevant data sources. Alerting systems should be integrated with the incident response plan.

- Test and Exercise the Plan: Regularly test and exercise the incident response plan through simulations and tabletop exercises. This helps to identify weaknesses in the plan and ensure that the incident response team is prepared to respond effectively.
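One way to make the classification and prioritization step concrete is to score each incident by its impact on confidentiality, integrity, and availability. The sketch below assumes a hypothetical four-level impact scale and a SEV1 to SEV4 mapping based on the worst single impact. Both are illustrative choices, not a standard, and should be replaced by your organization's own scheme.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List


class Impact(IntEnum):
    """Hypothetical impact scale used for each of confidentiality, integrity, availability."""
    NONE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Incident:
    incident_id: str
    confidentiality: Impact
    integrity: Impact
    availability: Impact
    detected_at: float  # epoch seconds


def severity(incident: Incident) -> str:
    """Classify by the worst single CIA impact (an assumed, illustrative scheme)."""
    worst = max(incident.confidentiality, incident.integrity, incident.availability)
    return {Impact.HIGH: "SEV1", Impact.MEDIUM: "SEV2", Impact.LOW: "SEV3"}.get(worst, "SEV4")


def prioritize(incidents: List[Incident]) -> List[Incident]:
    """Order most critical first: by severity, then total impact, then oldest first."""
    def key(i: Incident):
        total = i.confidentiality + i.integrity + i.availability
        return (severity(i), -total, i.detected_at)
    return sorted(incidents, key=key)
```

Sorting on the severity label works here only because the labels SEV1 to SEV4 sort lexicographically in the intended order.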

Steps to Contain and Remediate a Security Breach

The containment and remediation phases are critical for minimizing the damage caused by a security breach. Containment focuses on preventing further damage, while remediation aims to eliminate the root cause of the breach and restore the affected systems to a secure state. These steps must be performed rapidly and methodically to reduce the attack surface and prevent recurrence.

- Containment:

- Isolate Affected Resources: Immediately isolate compromised serverless functions, databases, and other resources to prevent further compromise. This might involve disabling the function, changing access permissions, or temporarily removing the affected resource from the network.

- Disable Compromised Credentials: Revoke or rotate any compromised credentials, such as API keys, access keys, and passwords, including those used by service accounts. Enforce multi-factor authentication (MFA) for all human user accounts.

- Implement Network Segmentation: If possible, segment the network to limit the lateral movement of attackers. This can involve creating separate virtual private clouds (VPCs) or using network access control lists (ACLs) to restrict network traffic.

- Block Malicious Traffic: Block malicious traffic identified through analysis of logs and network traffic. This can involve updating web application firewalls (WAFs) or implementing intrusion detection and prevention systems (IDPS).

- Eradication:

- Identify the Root Cause: Conduct a thorough investigation to identify the root cause of the security breach. This might involve analyzing logs, network traffic, and code to determine how the attacker gained access to the system.

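- Remove Malware and Malicious Code: Remove any malware, malicious code, or backdoors that the attacker installed. In a serverless environment this typically means redeploying function code and dependencies from a trusted source; for any server-based components, it might involve re-imaging compromised servers, patching vulnerabilities, or cleaning up infected files.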

- Patch Vulnerabilities: Apply security patches to address any vulnerabilities that were exploited by the attacker. This includes patching operating systems (where you manage them), language runtimes, libraries, and application code.

- Review and Strengthen Security Configurations: Review and strengthen security configurations to prevent similar incidents from occurring in the future. This includes reviewing access control policies, security group configurations, and other security settings.

- Recovery:

- Restore Data from Backups: Restore data from backups to recover from data loss or corruption. Verify the integrity of the backups before restoring data.

- Rebuild Compromised Resources: Rebuild compromised serverless functions, databases, and other resources from a known good state. This helps to ensure that the systems are free from malware and other malicious code.

- Verify System Integrity: Verify the integrity of the restored systems and data. This includes performing security scans and penetration tests to ensure that the systems are secure.

- Resume Normal Operations: Once the systems are secure and operational, resume normal operations. Monitor the systems closely for any signs of further compromise.
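For AWS Lambda specifically, isolating a compromised function (the first containment step above) can be approximated without deleting anything, which preserves the code and configuration for the root-cause investigation. The sketch assumes `lambda_client` is a boto3 Lambda client; `put_function_concurrency`, `list_event_source_mappings`, and `update_event_source_mapping` are existing boto3 Lambda operations, while `contain_function` is a hypothetical helper. Setting reserved concurrency to 0 throttles new invocations from any trigger, and disabling event source mappings stops pollers such as SQS or Kinesis from delivering further records. It does not stop invocations already in progress, and it does not revoke the function's IAM role, which should be handled separately.

```python
from typing import Any, Dict, List


def contain_function(lambda_client: Any, function_name: str) -> Dict[str, Any]:
    """Stop new invocations of a compromised Lambda function without deleting it.

    `lambda_client` is assumed to be a boto3 Lambda client, e.g. boto3.client("lambda").
    Code and configuration are left untouched so they remain available for forensics.
    """
    # Reserved concurrency of 0 throttles every new invocation, whatever the trigger.
    lambda_client.put_function_concurrency(
        FunctionName=function_name, ReservedConcurrentExecutions=0
    )

    # Disable poll-based triggers (SQS, Kinesis, DynamoDB Streams, ...) so they stop
    # delivering records; results are paginated via NextMarker/Marker.
    disabled: List[str] = []
    kwargs: Dict[str, Any] = {"FunctionName": function_name}
    while True:
        page = lambda_client.list_event_source_mappings(**kwargs)
        for mapping in page.get("EventSourceMappings", []):
            lambda_client.update_event_source_mapping(UUID=mapping["UUID"], Enabled=False)
            disabled.append(mapping["UUID"])
        marker = page.get("NextMarker")
        if not marker:
            break
        kwargs["Marker"] = marker

    return {"function": function_name, "disabled_mappings": disabled}
```

A typical call would be `contain_function(boto3.client("lambda"), "orders-fn")`, where `orders-fn` is a hypothetical function name. Returning the disabled mapping UUIDs makes it straightforward to re-enable exactly those mappings during recovery.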

Communication Protocols During a Security Incident

Effective communication is essential during a security incident to ensure that all stakeholders are informed and that the incident is managed effectively. Communication protocols should be established in advance and followed consistently during the incident. These protocols should include internal and external communication procedures.

- Internal Communication:

- Incident Commander: The incident commander is responsible for coordinating the incident response and communicating with the incident response team.

- Technical Leads: Technical leads are responsible for providing technical expertise and guidance to the incident response team.

- Communication Lead: The communication lead is responsible for managing internal and external communications.

- Regular Updates: Provide regular updates to the incident response team on the status of the incident, the actions taken, and the next steps.

- Secure Communication Channels: Use secure communication channels, such as encrypted email or secure messaging applications, to protect sensitive information.

- External Communication:

- Legal Counsel: Consult with legal counsel to ensure that all communications comply with legal and regulatory requirements.

- Public Relations: Work with public relations to develop a communication plan for external stakeholders, such as customers, partners, and the media.

- Regulatory Bodies: Notify regulatory bodies, such as data protection authorities, of the security incident as required by law.

- Customers and Partners: Communicate with customers and partners to inform them of the incident and the steps being taken to address it. Provide clear and concise information about the impact of the incident and the actions that they need to take.

- Media: Respond to media inquiries in a timely and professional manner. Provide accurate and factual information about the incident.

- Documentation:

- Document All Communications: Record all internal and external communications to provide a complete record of the incident response.

- Maintain a Communication Log: Maintain a communication log to track all communications, including the date, time, sender, recipient, and content of the communication.
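A communication log with the fields listed above (date and time, sender, recipient, and content) can be kept as an append-only record. The sketch below is a minimal in-memory illustration: `CommunicationLog` and `CommEntry` are hypothetical names, timestamps are normalized to UTC ISO 8601 strings as an assumed convention, and a real log would need durable, access-controlled storage.

```python
import csv
import io
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class CommEntry:
    timestamp: str  # date and time, ISO 8601 in UTC
    sender: str
    recipient: str
    content: str


class CommunicationLog:
    """In-memory log that exposes methods to add and read entries, but none to edit or remove them."""

    FIELDS = ["timestamp", "sender", "recipient", "content"]

    def __init__(self) -> None:
        self._entries: List[CommEntry] = []

    def record(self, sender: str, recipient: str, content: str,
               when: Optional[datetime] = None) -> CommEntry:
        when = when or datetime.now(timezone.utc)
        # astimezone() converts aware datetimes to UTC; naive ones are treated as local time.
        entry = CommEntry(when.astimezone(timezone.utc).isoformat(), sender, recipient, content)
        self._entries.append(entry)
        return entry

    def entries(self) -> List[CommEntry]:
        return list(self._entries)  # a copy, so callers cannot alter the log

    def to_csv(self) -> str:
        """Export all entries as CSV, e.g. for the post-incident report."""
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=self.FIELDS)
        writer.writeheader()
        for entry in self._entries:
            writer.writerow(asdict(entry))
        return buf.getvalue()
```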

Monitoring Performance and Availability

Monitoring performance and availability is critical for maintaining the operational health and reliability of serverless applications. Proactive monitoring allows for the identification of performance bottlenecks, resource constraints, and potential service disruptions, enabling timely intervention and optimization. This proactive approach ensures optimal user experience, prevents service degradation, and facilitates cost-effective resource allocation.

Monitoring Serverless Function Performance

Monitoring serverless function performance involves tracking various metrics that provide insights into function execution characteristics. These metrics help identify areas for optimization, such as inefficient code, resource over-utilization, or latency issues. Comprehensive monitoring ensures that functions operate efficiently and meet performance expectations.

- Execution Time: The duration a function takes to complete its execution. Tracking execution time reveals performance trends and potential slowdowns. For instance, a function that consistently takes longer to execute might indicate an inefficient algorithm or resource contention.

- Invocation Count: The number of times a function is executed. Monitoring invocation count helps understand function usage patterns and scale resources accordingly. An unexpected surge in invocations could indicate a denial-of-service (DoS) attempt or a misconfigured application.

- Error Rate: The percentage of function invocations that result in errors. A high error rate signals potential code defects, infrastructure problems, or issues with external service dependencies. Investigating the root causes of errors is crucial for improving application reliability.

- Cold Starts: The time it takes for a function to initialize a new execution environment. Cold starts can introduce latency and affect user experience. Monitoring cold start times helps identify the frequency and impact of cold starts and optimize function configuration (e.g., increasing memory allocation) or application design (e.g., pre-warming functions).

- Memory Usage: The amount of memory consumed by a function during execution. Monitoring memory usage is vital for preventing out-of-memory errors and optimizing resource allocation. If a function consistently consumes a large amount of memory, it may indicate memory leaks or inefficient resource management.

- Concurrency: The number of function instances running at the same time. Monitoring concurrency shows how well the function handles simultaneous requests and scales. High concurrency might indicate that a function is successfully handling increased traffic, while concurrency that stays flat as demand rises (for example, alongside throttling errors) could point to concurrency limits or scaling issues.
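The per-function metrics above can be computed from individual invocation records. The sketch assumes each record has already been extracted from the platform's logs (on AWS Lambda, for instance, the `REPORT` log line reports duration and max memory used, and includes an init duration on cold starts); the `Invocation` record type and `summarize` helper are hypothetical. It uses the nearest-rank method for the 99th percentile, which is one of several common percentile definitions. Concurrency is not derived here because it needs invocation start and end times rather than per-invocation totals.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Invocation:
    """One function invocation, as parsed from platform logs (hypothetical shape)."""
    duration_ms: float
    error: bool
    cold_start: bool
    max_memory_mb: float


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(invocations: List[Invocation]) -> Dict[str, float]:
    """Aggregate execution time, error rate, cold starts, and memory for one window."""
    n = len(invocations)
    if n == 0:
        return {"invocations": 0}
    durations = [i.duration_ms for i in invocations]
    return {
        "invocations": n,
        "avg_duration_ms": sum(durations) / n,
        "p99_duration_ms": percentile(durations, 99),
        "error_rate_pct": 100 * sum(i.error for i in invocations) / n,
        "cold_start_pct": 100 * sum(i.cold_start for i in invocations) / n,
        "max_memory_mb": max(i.max_memory_mb for i in invocations),
    }
```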

Monitoring Serverless Service Availability

Monitoring serverless service availability focuses on ensuring that the services are accessible and functioning as expected. This involves tracking service uptime, identifying service disruptions, and measuring the impact of outages. Proactive availability monitoring is critical for maintaining user trust and preventing business losses.

- Uptime Monitoring: Continuously checking the availability of serverless services. Uptime monitoring uses external probes to simulate user requests and verify service responsiveness.

- Error Tracking: Capturing and analyzing errors that occur within the serverless environment. Centralized error logging and alerting are crucial for identifying and resolving service disruptions promptly.

- Health Checks: Regularly verifying the health of serverless components. Health checks assess the status of function dependencies, databases, and external services.

- Alerting and Notifications: Configuring automated alerts based on predefined thresholds for performance and availability metrics. Real-time notifications allow operations teams to respond quickly to service issues.

- Service Level Objectives (SLOs) and Service Level Agreements (SLAs): Defining specific performance targets and contractual agreements for service availability. Monitoring SLOs and SLAs helps ensure that the service meets the required performance levels.
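An SLO translates into an error budget: the amount of downtime the target permits over a measurement window. For example, a 99.9% availability SLO over a 30-day window (43,200 minutes) allows about 43.2 minutes of downtime. The sketch below derives availability and remaining budget from uptime-probe results, assuming (hypothetically) that each probe result represents one fixed interval of the window and that a failed probe means the whole interval was down. `SloStatus` and `slo_status` are illustrative names.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SloStatus:
    availability_pct: float   # measured over the window
    budget_minutes: float     # downtime the SLO allows in the window
    remaining_minutes: float  # negative means the SLO has been breached

    @property
    def breached(self) -> bool:
        return self.remaining_minutes < 0


def slo_status(probe_ok: Sequence[bool], interval_minutes: float, slo_pct: float) -> SloStatus:
    """Each probe result is assumed to represent one `interval_minutes` slice of the window."""
    if not probe_ok:
        raise ValueError("need at least one probe result")
    window = len(probe_ok) * interval_minutes
    downtime = sum(1 for ok in probe_ok if not ok) * interval_minutes
    budget = window * (1 - slo_pct / 100)
    return SloStatus(
        availability_pct=100 * (window - downtime) / window,
        budget_minutes=budget,
        remaining_minutes=budget - downtime,
    )
```

Alerting on the rate at which the budget is consumed, rather than only on individual failed probes, is one common way to connect the SLO to the alerting thresholds described above.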

Key Performance Indicators (KPIs) for Serverless Environments

The following table summarizes key performance indicators (KPIs) for serverless environments. Each KPI is listed with its description, its significance, and example thresholds.

| KPI | Description | Significance | Example Thresholds |
|---|---|---|---|
| Function Execution Time | The duration a function takes to complete its execution, measured in milliseconds. | Indicates function efficiency and performance. High execution times can lead to increased latency and costs. | Average: < 500 ms; 99th percentile: < 1000 ms |
| Error Rate | The percentage of function invocations that result in errors. | Reflects the reliability of the function and its dependencies. A high error rate indicates potential code issues or infrastructure problems. | < 1% |
| Cold Start Time | The time it takes for a function to initialize a new execution environment, measured in milliseconds. | Impacts user experience and overall function performance. High cold start times can cause noticeable latency. | < 200 ms (goal); < 500 ms (acceptable) |
| Invocation Count | The number of times a function is executed over a specific period (e.g., per minute or per hour). | Reflects the function's usage and workload. Monitoring invocation count helps in capacity planning and cost optimization. | No fixed threshold; track trends and monitor for unexpected spikes. |
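The example thresholds in the table can be checked automatically as part of an alerting rule. In this sketch the threshold values come from the table, while the metric names, the `breaches` and `invocation_spike` helpers, and the 3x-baseline spike rule are hypothetical choices for illustration. Cold start time is checked against the "acceptable" ceiling rather than the goal.

```python
from typing import Dict, List

# Example thresholds from the KPI table; each value is an exclusive upper bound.
THRESHOLDS: Dict[str, float] = {
    "avg_duration_ms": 500,
    "p99_duration_ms": 1000,
    "error_rate_pct": 1.0,
    "cold_start_ms": 500,  # "acceptable" ceiling; 200 ms is the goal
}


def breaches(metrics: Dict[str, float], thresholds: Dict[str, float] = THRESHOLDS) -> List[str]:
    """Return one message per metric at or above its threshold; missing metrics are skipped."""
    out = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is not None and value >= limit:
            out.append(f"{name}={value} (threshold: < {limit})")
    return out


def invocation_spike(history: List[int], current: int, factor: float = 3.0) -> bool:
    """Flag `current` if it exceeds `factor` times the mean of recent per-period counts."""
    if not history:
        return False  # no baseline yet
    baseline = sum(history) / len(history)
    return current > factor * baseline
```

Because invocation count has no fixed threshold, the spike check compares against a trailing baseline instead; the window length and factor would need tuning per function.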

Last Point