The digital landscape is increasingly defined by multi-cloud agility. For many organizations, migrating workloads between cloud providers is no longer a question of “if” but of “how” to do it efficiently and effectively. This process, which involves strategic planning, meticulous execution, and continuous optimization, can deliver significant benefits, including cost savings, better performance, and reduced vendor lock-in. It requires a deep understanding of infrastructure, application dependencies, and the nuances of each cloud environment.

This comprehensive analysis delves into the multifaceted aspects of cloud migration, exploring the motivations behind such transitions, the critical phases involved, and the diverse strategies available. From assessing current infrastructure to selecting appropriate migration methods and optimizing post-migration performance, we will dissect each step, providing insights into best practices, potential pitfalls, and the tools necessary for a successful migration. The goal is to equip readers with the knowledge to navigate this complex process confidently.

Introduction to Cloud Migration

Cloud migration, the process of moving digital assets, including applications, data, and infrastructure, from one environment to another, is a strategic undertaking driven by a complex interplay of technical, financial, and business considerations. This often involves shifting workloads between different cloud providers, on-premises infrastructure, or a combination of both. Understanding the motivations behind such migrations and the types of workloads involved is crucial for developing effective migration strategies. Cloud migration offers several strategic advantages, including increased flexibility, scalability, and cost optimization.

It’s a dynamic process, constantly evolving to meet the changing demands of modern businesses.

Motivations for Cloud Migration

The decision to migrate workloads across cloud providers is rarely made lightly. Several key drivers typically motivate organizations to undertake this complex process.

- Cost Optimization: One of the primary motivations is the potential for reducing operational expenditures. This can be achieved by leveraging more cost-effective pricing models offered by different cloud providers, optimizing resource utilization, and eliminating the need for on-premises hardware maintenance and associated costs. For example, a company might move its data storage to a provider with lower storage costs, or run interruption-tolerant, compute-intensive batch jobs on spot instances to take advantage of discounted pricing.

- Enhanced Scalability and Performance: Cloud providers offer elastic scalability, allowing organizations to adjust resources dynamically based on demand. This helps maintain performance and responsiveness, especially during peak traffic periods. Migrating to a provider with a geographically distributed infrastructure can also improve latency and user experience for global users. For instance, an e-commerce company might migrate its application to a cloud provider with robust content delivery network (CDN) capabilities to improve website loading times and handle sudden traffic surges during sales events.

- Business Agility and Innovation: Cloud migration can significantly enhance an organization’s ability to respond quickly to market changes and adopt new technologies. Cloud providers often offer a wide range of services, such as machine learning, artificial intelligence, and serverless computing, which can accelerate innovation and enable the development of new products and services. A software development company might migrate its development and testing environments to a cloud provider to access these advanced services and streamline its development lifecycle.

- Vendor Lock-in Mitigation: Multi-cloud strategies, involving the use of multiple cloud providers, are increasingly popular. Migrating workloads between providers helps to avoid vendor lock-in, reducing dependence on a single vendor and increasing flexibility. This allows organizations to choose the best services and pricing for their specific needs and avoid being constrained by a single provider’s limitations. A company could distribute its workloads across different providers, using one for compute-intensive tasks and another for data storage, optimizing for both performance and cost.

- Disaster Recovery and Business Continuity: Cloud providers offer robust disaster recovery and business continuity solutions, enabling organizations to protect their data and applications from outages and disasters. Migrating to a cloud provider with geographically diverse data centers can ensure that business operations can continue even if one region experiences an outage. A financial institution might migrate its critical applications to a cloud provider with redundant infrastructure to minimize downtime and ensure compliance with regulatory requirements.

Workload Types Suitable for Migration

Various workload types can be migrated to the cloud, each with its specific considerations and requirements. The suitability of a workload for migration depends on factors such as its complexity, dependencies, and performance requirements.

- Virtual Machines (VMs): VMs are a common workload type and are often among the most straightforward to migrate. Cloud providers’ infrastructure-as-a-service (IaaS) offerings allow organizations to provision and manage VMs in the cloud, and a migrated VM carries its operating system, software, and associated configurations with it. Tools like AWS VM Import/Export, or VMware vMotion when moving between VMware-based environments, can facilitate the migration process.

- Databases: Databases are critical for many applications, and migrating them to the cloud can offer significant benefits, including improved scalability, performance, and availability. Cloud providers offer managed database services, such as Amazon RDS, Azure SQL Database, and Google Cloud SQL, which simplify database management and reduce operational overhead. Database migration often involves data replication, schema conversion, and application code adjustments.

- Applications: Applications of various types, from web applications to enterprise resource planning (ERP) systems, can be migrated to the cloud. This involves migrating the application code, data, and dependencies to a cloud environment. Application migration can be a complex process, often requiring refactoring or re-architecting the application to take advantage of cloud-native services.

- Data Storage: Data storage workloads, including object storage, file storage, and block storage, can be migrated to the cloud to benefit from scalability, cost-effectiveness, and data durability. Cloud providers offer various storage services, such as Amazon S3, Azure Blob Storage, and Google Cloud Storage, that provide different storage classes and pricing options.

- Containers: Containerized applications, built using technologies like Docker and Kubernetes, are well-suited for cloud migration. Cloud providers offer container orchestration services, such as Amazon ECS, Azure Kubernetes Service (AKS), and Google Kubernetes Engine (GKE), that simplify the deployment and management of containerized applications.

Benefits of Multi-Cloud Strategies

Multi-cloud strategies involve using multiple cloud providers to host workloads. These strategies offer several advantages that can significantly enhance an organization’s cloud adoption journey.

- Avoiding Vendor Lock-in: A primary benefit is mitigating the risk of vendor lock-in. By distributing workloads across multiple providers, organizations reduce their dependence on a single vendor and gain greater flexibility. This allows them to switch providers if necessary or leverage the best services and pricing from different vendors.

- Improved Availability and Resilience: Multi-cloud deployments can enhance application availability and resilience. By deploying workloads across multiple geographically diverse cloud regions or providers, organizations can protect against outages and ensure business continuity. If one cloud provider experiences an outage, the application can continue to run on another provider.

- Optimized Cost and Performance: Multi-cloud strategies enable organizations to optimize costs and performance. They can choose the most cost-effective services and pricing models from different providers for specific workloads. For example, they might use one provider for compute-intensive tasks and another for data storage, optimizing for both performance and cost.

- Enhanced Security and Compliance: Multi-cloud deployments can help organizations meet security and compliance requirements, although each additional provider also adds controls to manage. Organizations can choose providers that meet specific security and compliance requirements. They can also implement security measures across multiple providers, such as centralized identity and access management (IAM) and data encryption, to protect sensitive data and applications.

- Increased Innovation and Flexibility: Multi-cloud strategies promote innovation and flexibility. Organizations can leverage the unique services and capabilities of different cloud providers, accelerating the development of new products and services. They can also adapt to changing business needs and market demands more quickly.

Assessment and Planning Phase

The Assessment and Planning phase is a critical stage in cloud migration, serving as the foundation for a successful transition. This phase involves a thorough evaluation of the existing infrastructure, strategic provider selection, and the development of a detailed migration plan. A well-executed assessment and planning phase minimizes risks, optimizes resource allocation, and ensures a smooth migration process.

Identifying Infrastructure Readiness

Determining infrastructure readiness involves a comprehensive analysis of the current IT environment to identify potential challenges and opportunities for migration. This analysis should consider various aspects of the existing infrastructure.

- Discovery and Inventory: This step focuses on creating a detailed inventory of all existing IT assets, including hardware, software, network configurations, and dependencies. Agent-based or agentless discovery tools can automate this process, providing a comprehensive view of the infrastructure. The inventory should include the operating system, installed applications, resource utilization (CPU, memory, storage, network), and interdependencies between components.

For instance, a detailed inventory might reveal that a critical application relies on a specific version of a database server, which will influence the choice of cloud provider and migration strategy.

- Application Dependency Mapping: Understanding the relationships between different applications and services is crucial. Dependency mapping identifies how applications interact with each other, databases, and external services. Tools like application performance monitoring (APM) solutions and network traffic analysis can help visualize these dependencies. A complex application might depend on multiple microservices, databases, and message queues. This information is essential for planning the migration order and ensuring application functionality post-migration.

- Performance and Capacity Analysis: Evaluating the performance characteristics of the existing infrastructure is vital for ensuring that the migrated workloads perform optimally in the cloud. This involves analyzing CPU usage, memory consumption, disk I/O, and network bandwidth. Monitoring tools can provide historical data on resource utilization, which helps in determining the appropriate cloud instance sizes and configurations. For example, if a database server consistently experiences high CPU utilization, the cloud instance should be provisioned with sufficient CPU cores to handle the load.

- Security and Compliance Assessment: Evaluating the current security posture and compliance requirements is a critical step. This involves assessing the existing security controls, such as firewalls, intrusion detection systems, and access controls, and identifying any gaps that need to be addressed in the cloud environment. Compliance requirements, such as HIPAA, PCI DSS, or GDPR, must be considered when selecting a cloud provider and designing the migration strategy.

This might influence the choice of cloud regions and the implementation of specific security controls.

- Cost Analysis: A detailed cost analysis of the current on-premises infrastructure is essential for comparing it with the projected costs in the cloud. This involves calculating the costs of hardware, software licenses, power, cooling, and IT staff. Understanding these costs helps in making informed decisions about cloud provider selection and migration strategies. Cloud cost optimization tools can be used to estimate the cloud costs based on the existing resource utilization.
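The sizing step described under Performance and Capacity Analysis can be made concrete with a small calculation. The Python sketch below assumes utilization has been collected as absolute values (CPU cores in use, memory in GiB). It takes the 95th percentile rather than the peak, adds 20% headroom, and picks the smallest entry from a hypothetical instance catalog. The catalog names and sizes, the percentile, and the headroom are illustrative assumptions, not provider recommendations.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float


# Hypothetical catalog; real instance names, sizes, and prices vary by provider and region.
CATALOG = [
    InstanceType("small", 2, 4.0),
    InstanceType("medium", 4, 16.0),
    InstanceType("large", 8, 32.0),
    InstanceType("xlarge", 16, 64.0),
]


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank - 1, 0)]


def rightsize(cpu_cores_used, memory_gib_used, pct=95, headroom=0.2):
    """Smallest catalog entry covering the pct-th percentile of observed
    usage plus headroom, or None if nothing in the catalog is large enough."""
    need_cpu = percentile(cpu_cores_used, pct) * (1 + headroom)
    need_mem = percentile(memory_gib_used, pct) * (1 + headroom)
    for inst in sorted(CATALOG, key=lambda i: (i.vcpus, i.memory_gib)):
        if inst.vcpus >= need_cpu and inst.memory_gib >= need_mem:
            return inst
    return None  # consider scaling out or a larger instance family


cpu = [1.5, 2.0, 2.2, 3.1, 2.8, 1.9, 2.5, 3.0, 2.7, 2.4]
mem = [10.0, 11.0, 12.0, 12.5, 11.8, 10.5, 12.2, 13.0, 12.9, 11.1]
print(rightsize(cpu, mem).name)  # medium
```

With only ten samples, the nearest-rank 95th percentile equals the maximum (3.1 cores, 13 GiB), so the sketch requires about 3.72 cores and 15.6 GiB and selects `medium`. Sizing to a percentile rather than the absolute peak avoids paying for one-off spikes, at the cost of occasionally running close to capacity.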

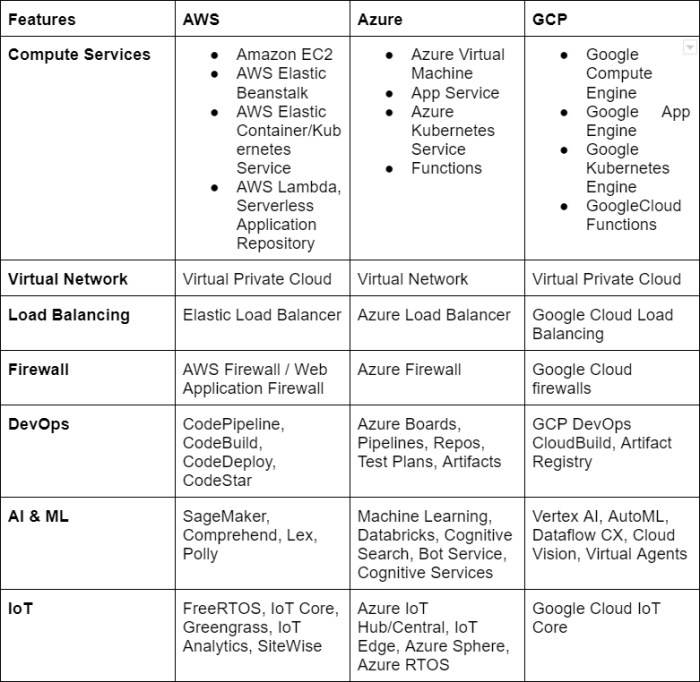

Choosing the Right Cloud Provider

Selecting the appropriate cloud provider for each workload requires careful consideration of various factors to ensure optimal performance, cost-effectiveness, and compliance. This process involves a comparative analysis of different providers based on specific requirements.

- Cost Considerations: Cloud providers offer different pricing models, including pay-as-you-go, reserved instances, and spot instances. The choice of pricing model depends on the workload’s characteristics and usage patterns. A workload with predictable resource requirements might benefit from reserved instances, while a workload that can tolerate interruptions might be suitable for spot instances. Comparing the costs of different providers for the same workload is crucial.

For example, Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) have varying pricing structures for compute, storage, and networking resources. A cost analysis tool can help compare these costs based on the workload’s specific requirements.

- Performance Requirements: The performance requirements of a workload, such as latency, throughput, and scalability, are critical factors in provider selection. Different cloud providers offer varying levels of performance for compute, storage, and networking resources. For example, a high-performance computing (HPC) workload might require specialized compute instances with high CPU and memory capacity. Benchmarking the performance of different providers for the specific workload can help in making an informed decision.

For instance, a database workload might perform better on a provider that offers optimized database services with low latency and high throughput.

- Compliance and Security: Compliance with industry regulations and security requirements is a crucial factor, especially for workloads that handle sensitive data. Cloud providers offer different compliance certifications and security features. The provider’s security features, such as encryption, access controls, and threat detection, should align with the workload’s security requirements. For example, a healthcare application might require a provider that offers HIPAA-eligible services and will sign a business associate agreement (BAA).

Understanding the provider’s security policies, data residency options, and compliance certifications is essential.

- Service Availability and Reliability: The availability and reliability of the cloud provider’s services are critical for ensuring business continuity. The provider’s service level agreements (SLAs) should commit to the required uptime; SLAs typically compensate for missed targets with service credits rather than guaranteeing them outright. The provider’s infrastructure, including data centers and network connectivity, should be robust and reliable. For example, a critical application might require a provider that offers high availability and disaster recovery options.

Evaluating the provider’s historical uptime, incident response procedures, and disaster recovery capabilities is important.

- Vendor Lock-in and Portability: Vendor lock-in refers to the difficulty of migrating workloads from one cloud provider to another. The extent of vendor lock-in can be assessed by examining the provider’s proprietary services and APIs. Portability refers to the ability to move workloads between different cloud providers or on-premises environments. Using open standards and technologies can reduce vendor lock-in and increase portability. For example, using containerization technologies like Docker and Kubernetes can improve portability.
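One common way to combine the criteria above is a weighted scoring matrix. In this minimal Python sketch, the weights, the 1 to 5 scores, and the provider names (`provider_a`, `provider_b`) are hypothetical placeholders. In practice, the scores would come from the cost analysis, benchmarks, and compliance review described above.

```python
# Hypothetical weights for the criteria discussed above; they should sum to 1.0.
WEIGHTS = {
    "cost": 0.30,
    "performance": 0.25,
    "compliance": 0.20,
    "availability": 0.15,
    "portability": 0.10,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine per-criterion scores (1 = poor, 5 = excellent) into one number."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weight * scores[criterion] for criterion, weight in weights.items())


def rank_providers(candidates, weights=WEIGHTS):
    """Return (provider, score) pairs sorted from best to worst."""
    ranked = [(name, weighted_score(scores, weights)) for name, scores in candidates.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical scores for two unnamed providers, for illustration only.
candidates = {
    "provider_a": {"cost": 4, "performance": 3, "compliance": 5, "availability": 4, "portability": 3},
    "provider_b": {"cost": 3, "performance": 5, "compliance": 4, "availability": 4, "portability": 4},
}
for name, score in rank_providers(candidates):
    print(name, round(score, 2))
```

Here `provider_b` edges out `provider_a` (about 3.95 versus 3.85) because performance carries more weight than compliance. A matrix like this makes trade-offs explicit and easy to revisit, but it is only as good as its inputs. Hard requirements such as data residency are better applied as a filter before scoring than as a weight.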

Designing a Comprehensive Migration Plan

A well-defined migration plan is essential for a successful cloud migration. This plan outlines the steps, resources, and timelines required to migrate the workloads to the cloud, and it should address the following aspects of the migration process.

- Migration Strategy Selection: Choosing the appropriate migration strategy depends on the specific requirements of each workload and the overall business objectives. Common migration strategies include:

  - Rehosting (Lift-and-Shift): This involves migrating the workload to the cloud without making significant changes to the application.

  - Replatforming: This involves migrating the workload to the cloud and making some changes to optimize it for the cloud environment.

  - Refactoring: This involves redesigning and rewriting the application to take full advantage of cloud-native features.

  - Repurchasing: This involves replacing the existing application with a cloud-based Software-as-a-Service (SaaS) solution.

  - Retiring: This involves decommissioning the application if it is no longer needed.

The choice of strategy depends on factors such as the complexity of the application, the desired level of optimization, and the available resources.

- Workload Prioritization and Grouping: Prioritizing the workloads based on business value and dependencies is essential for creating an efficient migration plan. Grouping workloads based on their dependencies and migration requirements can streamline the migration process. For example, a group of interconnected applications might be migrated together to minimize downtime and ensure functionality.

- Timeline and Milestones: Developing a detailed timeline with specific milestones is crucial for tracking progress and managing the migration process. The timeline should include the start and end dates for each migration phase, such as assessment, planning, migration, and validation. Milestones, such as completing the migration of a specific workload or achieving a certain level of cost savings, should be defined to measure the success of the migration.

- Resource Allocation: Identifying and allocating the necessary resources, including personnel, tools, and budget, is essential for executing the migration plan. This includes the cloud infrastructure, migration tools, and the skills required to manage the migration. The budget should include the costs of cloud resources, migration tools, and professional services.

- Risk Management: Identifying potential risks and developing mitigation strategies is essential for minimizing the impact of unforeseen issues. Common risks include data loss, application downtime, security breaches, and cost overruns. Mitigation strategies might include creating backup and recovery plans, implementing security controls, and monitoring costs.

- Communication Plan: A communication plan should be developed to keep stakeholders informed about the progress of the migration. This plan should include regular updates, status reports, and communication channels. Clear communication is crucial for managing expectations and addressing any issues that arise during the migration process.
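The prioritization and grouping step can be sketched as a topological sort over the dependency map built during the assessment phase. The Python sketch below assumes a hypothetical `depends_on` dictionary that maps each workload to the workloads that must already be running in the target cloud. It uses Kahn's algorithm to emit migration waves, and it reports a dependency cycle as an error, which often signals that those workloads belong in the same group.

```python
from collections import defaultdict


def migration_waves(depends_on):
    """Group workloads into waves so that each workload is placed after all
    of its dependencies (Kahn's algorithm, processed layer by layer).

    Raises ValueError on cyclic dependencies.
    """
    nodes = set(depends_on)
    for deps in depends_on.values():
        nodes.update(deps)
    # Dependencies not yet migrated, per workload.
    remaining = {n: set(depends_on.get(n, ())) for n in nodes}
    dependents = defaultdict(set)
    for node, deps in remaining.items():
        for dep in deps:
            dependents[dep].add(node)

    waves = []
    ready = sorted(n for n, deps in remaining.items() if not deps)
    while ready:
        waves.append(ready)
        next_ready = set()
        for done in ready:
            for node in dependents[done]:
                remaining[node].discard(done)
                if not remaining[node]:
                    next_ready.add(node)
        ready = sorted(next_ready)

    if sum(len(w) for w in waves) != len(nodes):
        stuck = sorted(n for n, deps in remaining.items() if deps)
        raise ValueError(f"cyclic dependencies among: {stuck}")
    return waves


# Hypothetical application landscape.
plan = migration_waves({
    "web": ["api"],
    "api": ["db", "queue"],
    "reports": ["db"],
    "db": [],
    "queue": [],
})
print(plan)  # [['db', 'queue'], ['api', 'reports'], ['web']]
```

Dependencies-first is only one ordering policy. Teams often move tightly coupled tiers in the same wave instead, to avoid cross-cloud latency between an application and its database during the transition.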

Choosing Migration Strategies

Selecting the appropriate migration strategy is a critical decision in cloud migration projects. This choice directly impacts the cost, time, and complexity of the migration process, as well as the overall performance and efficiency of the migrated workloads. A careful evaluation of the existing infrastructure, application architecture, business requirements, and desired outcomes is essential to determine the most suitable approach.

This section delves into various migration strategies, examining their characteristics, advantages, and suitability for different scenarios.

Rehosting (Lift-and-Shift) Migration Strategy

Rehosting, often referred to as “lift-and-shift,” is a migration strategy that involves moving applications and their associated infrastructure to the cloud with minimal changes. This approach typically involves replicating the existing on-premises environment, including virtual machines, operating systems, and applications, in the cloud.

The advantages of rehosting include:

- Speed and Simplicity: Rehosting is generally the fastest and simplest migration strategy, as it requires minimal code changes. This can significantly reduce the time to migrate workloads to the cloud.

- Reduced Risk: Since the application code remains largely unchanged, the risk of introducing new bugs or compatibility issues is minimized.

- Cost-Effectiveness: Compared to more complex strategies like refactoring, rehosting can be more cost-effective in the short term, especially for large-scale migrations.

- Familiarity: IT teams can leverage their existing skills and knowledge of the on-premises environment, reducing the learning curve associated with cloud adoption.

Rehosting is best suited for:

- Applications with tight deadlines: Where speed to cloud is a priority.

- Applications with complex dependencies: Minimizing changes reduces the risk of breaking dependencies.

- Applications that are not easily refactored: Legacy applications or applications where the source code is unavailable or difficult to modify.

Re-platforming Migration Strategy

Re-platforming involves making some modifications to an application to take advantage of cloud-native features while maintaining the core application architecture. This approach often involves optimizing the application for the cloud environment, such as migrating to a managed database service or utilizing cloud-specific storage solutions.

Re-platforming is suitable for:

- Applications that can benefit from cloud services: Applications that can leverage cloud-native features like managed databases, object storage, or content delivery networks.

- Applications where performance and scalability improvements are desired: Re-platforming allows for optimization of application performance and scalability by utilizing cloud infrastructure.

- Applications where some code changes are acceptable: This strategy requires more effort than rehosting, but less than refactoring.

Compared to rehosting, re-platforming requires more effort and planning. However, it can offer significant benefits in terms of performance, scalability, and cost optimization. For example, migrating a database from an on-premises SQL Server to a cloud-managed database service like Amazon RDS or Azure SQL Database is a common re-platforming activity.

Refactoring and Other Migration Strategies

Refactoring is the most comprehensive and time-consuming migration strategy. It involves redesigning and rewriting the application to fully leverage cloud-native features and architectures. This often includes breaking down monolithic applications into microservices, adopting serverless computing, and using cloud-native development tools.

Other migration strategies include:

- Repurchasing: Replacing an existing application with a cloud-based SaaS (Software-as-a-Service) solution.

- Retiring: Discontinuing the use of an application and its associated infrastructure.

- Retaining: Keeping an application in its current environment, whether on-premises or with the current provider.

The following table provides a comparison of various migration strategies, outlining their pros and cons.

| Migration Strategy | Pros | Cons | Suitable Scenarios |
|---|---|---|---|
| Rehosting (Lift-and-Shift) | Fastest migration, minimal changes, reduced risk. | May not optimize for cloud, potential for increased operational costs, may not fully utilize cloud features. | Applications with tight deadlines, complex dependencies, or limited resources for code changes. |
| Re-platforming | Improved performance and scalability, leverages cloud-native features, optimized for the cloud environment. | Requires more effort and planning than rehosting, some code changes are required. | Applications that can benefit from cloud services, where performance and scalability improvements are desired. |
| Refactoring | Fully leverages cloud-native features, optimized for scalability, performance, and cost efficiency. | Most time-consuming and expensive, requires significant code changes, highest risk. | Applications where significant performance and scalability improvements are required, and the benefits outweigh the costs. |
| Repurchasing | Reduces IT burden, access to latest features, eliminates the need for application management. | Loss of control, vendor lock-in, may not meet all specific requirements. | When a suitable SaaS solution is available and meets the business requirements. |
| Retiring | Simplifies infrastructure, reduces costs, eliminates maintenance. | Loss of functionality, potential impact on business processes. | Applications that are no longer needed or used. |
| Retaining | No migration effort required, avoids potential risks. | Does not benefit from cloud advantages, may incur higher operational costs, potential for security and compliance issues. | Applications that are not suitable for the cloud due to technical or compliance constraints. |
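For a large application portfolio, the table’s last column can be turned into a first-pass triage rule. The Python sketch below is a hypothetical heuristic: the `Workload` attributes and the order in which the rules are checked are assumptions made for illustration. Each suggestion should still be validated by the per-workload assessment described earlier.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    # Hypothetical attributes distilled from the "Suitable Scenarios" column.
    still_needed: bool = True
    must_stay_put: bool = False          # technical or compliance constraint
    saas_replacement_fits: bool = False
    tight_deadline: bool = False
    code_changes_allowed: bool = True
    needs_cloud_native_redesign: bool = False


def suggest_strategy(w: Workload) -> str:
    """First matching rule wins; a starting point for discussion only."""
    if not w.still_needed:
        return "Retire"
    if w.must_stay_put:
        return "Retain"
    if w.saas_replacement_fits:
        return "Repurchase"
    if w.tight_deadline or not w.code_changes_allowed:
        return "Rehost"
    if w.needs_cloud_native_redesign:
        return "Refactor"
    return "Re-platform"


print(suggest_strategy(Workload(tight_deadline=True)))  # Rehost
print(suggest_strategy(Workload()))  # Re-platform
```

Rule order encodes priorities: a workload that is no longer needed is retired before any other question is asked, and a hard deadline overrides the desire to refactor.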

Data Migration Techniques

Migrating data between cloud providers is a critical aspect of workload migration, demanding careful consideration of methods, tools, security, and compliance. The choice of data migration technique significantly impacts migration time, cost, and data integrity. Selecting the appropriate strategy depends on factors such as data volume, network bandwidth, downtime tolerance, and security requirements. This section details the different data migration techniques, tools, and crucial considerations for a successful migration.

Online Data Migration

Online data migration involves transferring data while the source system remains operational, minimizing downtime. This approach is suitable for scenarios where minimal interruption is essential. Several methods exist, each with its own advantages and limitations.

- Database Replication: This technique involves replicating database changes from the source cloud provider to the target cloud provider in near real time. Services such as AWS Database Migration Service (DMS), Azure Database Migration Service, and Google Cloud Database Migration Service facilitate this process. The main advantage is minimal downtime. The main considerations are compatibility between the source and target database engines (heterogeneous migrations, such as Oracle to PostgreSQL, also need a schema conversion step) and the additional network bandwidth consumed during replication.

- Change Data Capture (CDC): CDC identifies and captures data changes in near real time, typically by reading the database’s transaction log, and applies them to the target database. A common implementation pairs Debezium, which captures the changes, with Apache Kafka, which transports them. CDC is usually combined with an initial full load: the bulk copy runs while the source stays live, and CDC then replays the changes made in the meantime, which shrinks the final cutover window. However, it requires careful planning and configuration to handle data conflicts and ensure data consistency.

- Streaming Data Pipelines: This involves using streaming technologies like Apache Kafka or Apache Beam to move data continuously from the source to the target. Data is processed and transformed in transit. The advantage is real-time data transfer and the ability to handle large volumes of data. It demands expertise in stream processing technologies and careful design to ensure data integrity and fault tolerance.
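The core of CDC replay, applying an ordered stream of changes on top of an initial full load, can be illustrated without any external infrastructure. This Python sketch uses a simplified, hypothetical event shape (not Debezium’s actual message format) with a log sequence number (`lsn`) on each change. The target records the last applied position, so events already covered by the snapshot or redelivered after a restart are skipped, which makes replay idempotent.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int                     # position in the source log; increases monotonically
    op: str                      # "insert", "update", or "delete"
    key: str
    row: Optional[dict] = None   # new row image; None for deletes


class TargetTable:
    """In-memory stand-in for a target table plus its replication checkpoint."""

    def __init__(self, snapshot, snapshot_lsn):
        self.rows = dict(snapshot)       # result of the initial full load
        self.checkpoint = snapshot_lsn   # last source position already reflected

    def apply(self, events):
        for ev in sorted(events, key=lambda e: e.lsn):
            if ev.lsn <= self.checkpoint:
                continue  # already applied: part of the snapshot or a redelivery
            if ev.op in ("insert", "update"):
                self.rows[ev.key] = ev.row  # upsert keeps replays harmless
            elif ev.op == "delete":
                self.rows.pop(ev.key, None)
            else:
                raise ValueError(f"unknown op {ev.op!r}")
            self.checkpoint = ev.lsn
```

In a real pipeline the checkpoint would be persisted durably (for example, alongside the target data or as Kafka consumer offsets) so that a restarted consumer resumes from the correct position.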

Offline Data Migration

Offline data migration transfers data while the source system is quiesced or taken out of service, or moves it outside the network on physical devices; either way, it usually requires a downtime or data-freeze window. This method is often chosen for large datasets where online migration is not feasible due to bandwidth limitations or cost considerations.

- Bulk Data Transfer: This technique involves exporting the data from the source system and importing it into the target system. Tools like AWS Snowball, Azure Data Box, and Google Transfer Appliance are designed for physical data transfer. The advantage is speed for large datasets, bypassing network limitations. The disadvantages include downtime during the export/import process and the need for physical device handling.

- Backup and Restore: Backups are taken from the source cloud provider and restored in the target cloud provider. This approach is straightforward, especially for virtual machines and file systems. The primary disadvantage is the downtime required for the restore process. It’s crucial to ensure that the backup and restore tools and the operating systems are compatible.

- File Transfer Protocols (FTP/SFTP): For file-based data, protocols like FTP and SFTP can be used to transfer data between cloud providers. These methods suit smaller datasets or cases where direct network connectivity is available. Plain FTP sends data and credentials unencrypted, so SFTP (or FTPS) is preferable for anything sensitive, and transfer speed is bounded by network bandwidth.
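Whether to move a large dataset over the network or on a physical appliance (the Bulk Data Transfer option above) is largely arithmetic. The Python sketch below estimates network transfer time from data volume, link speed, and an assumed sustained efficiency of 80%. The appliance turnaround is a hypothetical input covering shipping and loading, not a published figure for any service.

```python
SECONDS_PER_DAY = 86_400


def network_transfer_days(data_tb, link_gbps, efficiency=0.8):
    """Estimated days to move data_tb decimal terabytes (10**12 bytes) over a
    link of link_gbps gigabits per second, sustaining `efficiency` of line rate."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_tb * 10**12 * 8
    seconds = bits / (link_gbps * 10**9 * efficiency)
    return seconds / SECONDS_PER_DAY


def prefer_appliance(data_tb, link_gbps, appliance_days, efficiency=0.8):
    """True if the network estimate exceeds the assumed appliance turnaround."""
    return network_transfer_days(data_tb, link_gbps, efficiency) > appliance_days


print(round(network_transfer_days(100, 1, efficiency=1.0), 1))  # 9.3
print(round(network_transfer_days(100, 1), 1))  # 11.6
```

For example, 100 TB over a 1 Gbps link takes 800,000 seconds (about 9.3 days) even at full line rate, and about 11.6 days at 80% efficiency. Against an assumed one-week appliance turnaround, the appliance wins; for 1 TB, the network finishes in under three hours.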

Data Transfer Tools and Services

Cloud providers offer a range of tools and services to facilitate data migration. These tools streamline the migration process, providing features like data compression, encryption, and automated transfer scheduling.

- AWS Data Transfer Services: AWS offers several services for data migration, including AWS DataSync for online data transfer, AWS Snowball for offline bulk data transfer, and AWS DMS for database migration.

- Azure Data Transfer Services: Azure provides Azure Data Box for offline data transfer, Azure Data Factory for data integration and migration, and Azure Database Migration Service for database migration.

- Google Cloud Data Transfer Services: Google Cloud offers Storage Transfer Service for moving data into Cloud Storage from other clouds (such as Amazon S3 and Azure Blob Storage) or between storage locations, Transfer Appliance for offline data transfer, and Database Migration Service for database migration.

Data Security and Compliance

Data security and compliance are paramount during data migration. Protecting data confidentiality, integrity, and availability is crucial throughout the process.

- Encryption: Data should be encrypted both in transit and at rest. Use TLS for data in transit, and encrypt data at rest with keys managed by the cloud provider or by a dedicated key management service.

- Access Control: Implement robust access controls to restrict access to data during the migration process. This includes using appropriate IAM (Identity and Access Management) policies and adhering to the principle of least privilege.

- Data Loss Prevention (DLP): Employ DLP measures to identify and prevent sensitive data from being exposed during the migration. This may involve data masking, anonymization, or tokenization.

- Compliance: Ensure that the data migration process complies with relevant regulations, such as GDPR, HIPAA, and PCI DSS. This involves understanding the compliance requirements and implementing appropriate security controls.

- Data Integrity Verification: Implement checksums and data validation techniques to ensure data integrity during transfer. Compare the data at the source and the target to verify that no data has been lost or corrupted.
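The integrity check described above can be as simple as building a checksum manifest on each side and comparing the two. This Python sketch hashes every file under a directory with SHA-256 and reports missing, unexpected, and mismatched paths. It assumes both copies are reachable as file systems (for example, staged exports or mounted volumes), which is a simplification for object storage.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large files need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root):
    """Map each file's path (relative to root, with / separators) to its digest."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare_manifests(source, target):
    """Report files lost, added, or altered between source and target."""
    common = set(source) & set(target)
    return {
        "missing": sorted(set(source) - set(target)),
        "unexpected": sorted(set(target) - set(source)),
        "mismatched": sorted(k for k in common if source[k] != target[k]),
    }
```

For object storage, computing your own hashes is safer than relying on provider metadata you have not verified: an Amazon S3 ETag, for instance, is not a plain MD5 of the object when it was uploaded in multiple parts.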

Consider the example of a retail company migrating its customer database from AWS to Google Cloud. To ensure data security, the company would encrypt the data at rest using AWS KMS before exporting it. During transfer using a service like AWS DataSync, it would ensure data encryption using TLS. Upon arrival at Google Cloud, the data would be decrypted and re-encrypted using Google Cloud KMS. Throughout the process, the company would meticulously monitor access logs and implement data loss prevention policies to prevent any unauthorized data access.

Application Migration

Application migration is a complex undertaking that involves transferring software applications, their associated data, and configurations from one cloud environment to another. This process necessitates careful planning and execution to minimize downtime, ensure data integrity, and maintain application functionality. Successful application migration is critical for achieving the benefits of cloud portability, including cost optimization, improved scalability, and enhanced disaster recovery capabilities.

Steps in Application Migration

Migrating applications involves a series of interconnected steps that must be carefully managed to ensure a smooth transition. These steps, when executed correctly, mitigate risks and pave the way for a successful migration.

- Assessment and Planning: This initial phase involves a comprehensive evaluation of the application, its dependencies, and the target cloud environment. Understanding the application’s architecture, resource utilization, and interdependencies is crucial. Planning includes selecting the appropriate migration strategy (e.g., rehosting, replatforming, refactoring), estimating costs, and defining a migration timeline. The application should be inventoried and documented, including its components (e.g., web servers, databases, application servers), configurations, and network dependencies.

- Dependency Mapping: Identify all dependencies, including libraries, frameworks, and external services. This includes both internal and external dependencies. Internal dependencies might include other applications or shared resources within the same environment. External dependencies may encompass third-party APIs, databases, or cloud services.

- Environment Preparation: Set up the target cloud environment to match the application’s requirements. This involves provisioning virtual machines, configuring networking, and installing necessary software and libraries. This is where you create the infrastructure that the application will run on after migration. The target environment should be designed to mirror the source environment as closely as possible to reduce compatibility issues.

- Code and Configuration Migration: Migrate the application’s code and configuration files. This might involve modifying the code to be compatible with the new environment. Configuration files often need to be updated to reflect the new cloud environment’s settings, such as database connection strings and API endpoints. Configuration management tools can automate and streamline this process.

- Data Migration: Migrate the application’s data, which might involve migrating databases, file storage, and other data stores. The data migration strategy must be carefully chosen to ensure data integrity and minimal downtime. Consider the size of the data, the acceptable downtime window, and the required performance levels.

- Testing: Thoroughly test the migrated application in the target environment. Testing should include functional testing, performance testing, security testing, and integration testing. The goal is to verify that the application functions correctly, meets performance requirements, and is secure. User acceptance testing (UAT) should be performed to validate that the application meets the needs of end-users.

- Cutover and Go-Live: Execute the cutover plan to switch from the source environment to the target environment. This might involve a phased rollout or a big-bang approach, depending on the application and the business requirements. Monitoring the application’s performance and availability after the cutover is essential.

- Post-Migration Optimization: Once the application is live in the target environment, optimize its performance and cost. This might involve right-sizing resources, optimizing database queries, and leveraging cloud-native services. Continuous monitoring and optimization are essential to ensure the application runs efficiently and cost-effectively.
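The Dependency Mapping step feeds directly into sequencing. As a minimal sketch, assuming the dependency map has been captured as a dictionary from each component to the components it relies on (the component names below are hypothetical), a topological sort (Kahn's algorithm) can group components into migration waves so that nothing moves before the services it depends on. Dependencies-first is only one possible ordering; teams sometimes move tightly coupled components together instead, which is exactly what a detected cycle signals.

```python
from collections import defaultdict


def migration_waves(depends_on: dict[str, set[str]]) -> list[list[str]]:
    """Group components into waves; each component appears after everything it depends on.

    depends_on maps a component to the components it needs (e.g. "web" -> {"api"}).
    Raises ValueError if the dependency graph contains a cycle.
    """
    # Include components that only ever appear as dependencies.
    nodes = set(depends_on) | {d for deps in depends_on.values() for d in deps}
    remaining = {n: set(depends_on.get(n, ())) for n in nodes}
    dependents = defaultdict(set)
    for node, deps in remaining.items():
        for dep in deps:
            dependents[dep].add(node)

    waves = []
    ready = sorted(n for n, deps in remaining.items() if not deps)
    while ready:
        waves.append(ready)
        next_ready = set()
        for done in ready:
            for node in dependents[done]:
                remaining[node].discard(done)
                if not remaining[node]:  # all of this node's dependencies have moved
                    next_ready.add(node)
        ready = sorted(next_ready)

    if sum(len(w) for w in waves) != len(nodes):
        raise ValueError("dependency cycle: these components must be migrated together")
    return waves


if __name__ == "__main__":
    # Hypothetical components and dependencies, for illustration only.
    print(migration_waves({"web": {"api"}, "api": {"db", "cache"}, "batch": {"db"}}))
    # → [['cache', 'db'], ['api', 'batch'], ['web']]
```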

Handling Application Downtime

Minimizing downtime is a critical consideration during application migration. Several strategies can be employed to reduce downtime and maintain business continuity.

- Choosing the Right Migration Strategy: The chosen migration strategy significantly impacts downtime. For example, rehosting (lift-and-shift) may result in shorter downtime compared to refactoring, which requires significant code changes.

- Phased Migration: Migrate the application in phases, starting with non-critical components or user groups. This allows for testing and validation in a controlled manner, reducing the impact of any issues.

- Blue/Green Deployment: Deploy the new application version (green) alongside the existing one (blue). Once the green environment is validated, switch traffic to it. Because the switch itself is quick, downtime is minimal, and if issues arise, traffic can be switched back to the blue environment just as quickly.

- Database Replication: Replicate the database to the target environment before the cutover. This allows for a quick switch to the target database during the cutover, minimizing downtime.

- Load Balancing: Utilize load balancers to distribute traffic between the source and target environments during the migration. Shifting traffic gradually in this way limits how many users are affected if a problem appears during the cutover.

- Automated Rollback: Develop a rollback plan that can be executed quickly if issues arise during the migration. This might involve reverting to the previous environment or rolling back to a previous version of the application.

- Communication and Planning: Clearly communicate the migration plan to all stakeholders, including users, IT staff, and management. Ensure a detailed cutover plan, including a schedule, roles, and responsibilities.
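Several of these techniques (load balancing, phased migration, and automated rollback) can be combined into a stepwise traffic shift. The sketch below is illustrative only: `set_target_weight` and `observe_error_rate` are hypothetical hooks you would wire to your own tooling, for example weighted DNS records or load balancer target weights, and a monitoring query run after each step has had time to soak. The step sizes and the 1% error-rate limit are assumptions, not recommendations.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CutoverResult:
    completed: bool
    final_target_weight: int  # percent of traffic on the target environment
    history: list[tuple[int, float]] = field(default_factory=list)  # (weight, observed error rate)


def shift_traffic(
    set_target_weight: Callable[[int], None],   # hypothetical hook: route N% of traffic to target
    observe_error_rate: Callable[[], float],    # hypothetical hook: error rate after the step soaks
    steps: tuple[int, ...] = (5, 25, 50, 100),
    max_error_rate: float = 0.01,
) -> CutoverResult:
    """Move traffic to the target in steps; roll back to 0% if the error rate exceeds the limit."""
    history = []
    for weight in steps:
        set_target_weight(weight)
        error_rate = observe_error_rate()
        history.append((weight, error_rate))
        if error_rate > max_error_rate:
            set_target_weight(0)  # automated rollback: send all traffic back to the source
            return CutoverResult(False, 0, history)
    return CutoverResult(True, steps[-1], history)
```

Keeping the rollback inside the same loop that advances the weights means the decision to retreat is made by the same code, with the same thresholds, every time, rather than by hand during a stressful cutover.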

Preparing Applications for Migration: A Step-by-Step Guide

Preparing an application for migration requires a systematic approach. The following steps provide a structured guide for preparing an application for successful migration.

- Analyze Application Architecture: Thoroughly document the application’s architecture, including its components, dependencies, and data flow. Identify any potential bottlenecks or complexities that could impact the migration.

- Assess Compatibility: Evaluate the application’s compatibility with the target cloud environment. This includes assessing the operating system, programming languages, frameworks, and libraries.

- Review and Update Code: Review the application’s code for compatibility issues. Make necessary code changes to ensure it runs correctly in the target environment. This may involve updating code to use cloud-native services or adjusting for differences in operating systems or libraries.

- Configure Application: Configure the application to run in the target environment. This involves updating configuration files, setting up environment variables, and configuring networking.

- Test and Validate: Perform thorough testing to validate the application’s functionality and performance in the target environment. This includes functional testing, performance testing, and security testing.

- Plan Data Migration: Develop a detailed data migration plan, including the data migration strategy, the data migration tools, and the cutover plan. The plan should address data integrity, downtime, and data security.

- Document and Rehearse: Document the migration plan and the cutover plan. Rehearse the migration process to identify and address any potential issues before the actual migration.
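For the Configure Application step, one common approach is to keep environment-specific settings, such as the database connection strings and API endpoints mentioned earlier, out of the code and read them from environment variables, so the same build can run in either cloud. The following is a minimal sketch; the variable names (`APP_DATABASE_URL` and so on) and the `AppConfig` fields are hypothetical. Failing fast at startup when a variable is missing surfaces configuration gaps during testing rather than after cutover.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    api_endpoint: str
    storage_bucket: str


# Hypothetical mapping from config field to the environment variable that supplies it.
REQUIRED = {
    "database_url": "APP_DATABASE_URL",
    "api_endpoint": "APP_API_ENDPOINT",
    "storage_bucket": "APP_STORAGE_BUCKET",
}


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Build the config from environment variables, failing fast if any are missing or empty."""
    missing = [var for var in REQUIRED.values() if not env.get(var)]
    if missing:
        raise RuntimeError(f"missing required environment variables: {', '.join(missing)}")
    return AppConfig(**{name: env[var] for name, var in REQUIRED.items()})
```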

Networking and Connectivity Considerations

Migrating workloads between cloud providers necessitates careful planning and execution of network configurations to ensure seamless connectivity and data transfer. This involves establishing secure and reliable communication channels between the source and target environments, minimizing downtime, and maintaining application performance. Effective network design is crucial for the success of any cloud migration strategy.

Configuring Networking and Connectivity Between Source and Target Cloud Environments

Establishing connectivity between different cloud environments requires a multifaceted approach, considering various factors such as security, performance, and cost. The specific configuration depends on the chosen migration strategy and the characteristics of the applications being migrated.

- IP Addressing and Subnetting: Careful planning of IP address ranges and subnetting is essential to avoid conflicts between the source and target environments. It’s crucial to ensure that the source and target networks do not overlap. If overlapping is unavoidable, Network Address Translation (NAT) can be used, but this adds complexity and potential performance overhead. Proper subnetting facilitates efficient routing and segmentation of network traffic.

- Firewall Configuration: Firewalls play a critical role in securing the network. During migration, firewall rules must be carefully reviewed and updated to allow necessary traffic flow between the source and target environments. Rules should be as granular as possible, specifying source and destination IP addresses, ports, and protocols to minimize the attack surface. Inconsistent firewall configurations can lead to security vulnerabilities or prevent applications from functioning correctly after migration.

- Routing Configuration: Routing configurations must be established to direct traffic between the source and target networks. This may involve configuring static routes or using dynamic routing protocols like Border Gateway Protocol (BGP) if the cloud providers support it. The routing configuration must be consistent across both environments to ensure that traffic can reach its intended destination.

- DNS Configuration: Domain Name System (DNS) plays a vital role in resolving domain names to IP addresses. During migration, DNS records must be updated to point to the new IP addresses of the migrated workloads. This process can be complex, particularly if the application uses many internal DNS records. Lowering the time-to-live (TTL) on the affected records well before cutover shortens how long resolvers and clients keep using the old addresses from cache, which reduces disruption during DNS propagation.

- Network Performance Optimization: Network performance can significantly impact application performance. During migration, it’s essential to monitor and optimize network performance. This includes bandwidth utilization, latency, and packet loss. Techniques like Quality of Service (QoS) can be used to prioritize critical traffic.
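Overlapping address ranges are easy to check before any connectivity is built. This minimal sketch uses Python's standard `ipaddress` module; the input lists are assumed to come from your own inventory of source and target subnets.

```python
import ipaddress
from itertools import product


def find_overlaps(source_cidrs: list[str], target_cidrs: list[str]) -> list[tuple[str, str]]:
    """Return every (source, target) pair of CIDR blocks whose address ranges overlap."""
    src = [ipaddress.ip_network(c) for c in source_cidrs]
    dst = [ipaddress.ip_network(c) for c in target_cidrs]
    return [
        (str(s), str(t))
        for s, t in product(src, dst)
        if s.version == t.version and s.overlaps(t)  # IPv4 never overlaps IPv6
    ]


if __name__ == "__main__":
    # The subnets from the topology diagram below do not overlap.
    print(find_overlaps(["10.0.1.0/24"], ["10.1.1.0/24"]))  # → []
    # A broad source range would collide with the target subnet.
    print(find_overlaps(["10.0.0.0/8"], ["10.1.1.0/24"]))  # → [('10.0.0.0/8', '10.1.1.0/24')]
```

Running a check like this while planning, rather than after VPN tunnels are up, is what allows NAT to remain a last resort.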

Virtual Private Networks (VPNs) and Other Connectivity Options

Various connectivity options are available to establish secure and reliable communication between cloud environments. The choice of connectivity method depends on the specific requirements of the migration, including security, bandwidth, latency, and cost.

- Virtual Private Networks (VPNs): VPNs create secure, encrypted tunnels over the public internet. They are a common choice for establishing connectivity between cloud environments, especially when sensitive data is being transferred. The managed site-to-site VPN gateways offered by AWS, Azure, and Google Cloud use IPsec; OpenVPN is more commonly found in client VPNs or self-managed gateways. VPNs provide a relatively inexpensive way to connect networks, but their performance can be affected by internet congestion and latency.

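- Direct Connect: AWS Direct Connect, and its counterparts Azure ExpressRoute and Google Cloud Dedicated Interconnect, provide a dedicated, private connection between a customer's network and the cloud provider. Linking two clouds this way usually means meeting both providers at a shared colocation facility or through a connectivity partner. This option offers higher bandwidth and more consistent latency than VPNs, and traffic stays off the public internet. However, it is generally more expensive, requires a physical connection, and is not encrypted by default, so sensitive data usually still needs encryption (such as IPsec or MACsec) on top. Direct Connect is suitable for high-performance applications that require low latency and high throughput.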

- Cross-Cloud Interconnect: Some providers offer managed private connections directly to other clouds, such as Google Cloud's Cross-Cloud Interconnect, which links Google Cloud to networks in other providers, including AWS and Azure. These services are similar to Direct Connect but are built specifically for connectivity between cloud platforms. This is an increasingly popular option for multi-cloud strategies, enabling high-throughput data transfer and application integration across different cloud environments.

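- Peering: Peering connects two virtual networks directly so they can exchange traffic using private IP addresses. Providers offer it within their own platforms (for example, AWS VPC peering, Azure VNet peering, and Google Cloud VPC Network Peering), where it provides high-performance, low-latency connectivity. It does not link networks in different providers, so during a cross-cloud migration it is mainly useful for connecting networks inside the target cloud. Peered networks must not have overlapping address ranges, and the connection must be set up or accepted on both sides.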

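- Connectivity for specific services: Some cloud providers offer connectivity options for particular managed services. For example, AWS PrivateLink, Azure Private Link, and Google Cloud Private Service Connect expose databases, storage, and other managed services on private IP addresses inside a virtual network, so traffic to them does not traverse the public internet.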

Network Topology Diagram

The following diagram illustrates the network topology before, during, and after migration. This visualization helps to understand the changes in network configuration throughout the migration process.

[Diagram: Source Cloud (AWS) and Target Cloud (Azure) side by side. Each contains an Application (e.g., Web Server) in a Virtual Network (a VPC with Subnet A, 10.0.1.0/24, on AWS; a VNet with Subnet B, 10.1.1.0/24, on Azure), protected by firewall rules (Security Groups on AWS, a Network Security Group on Azure). During migration, the two virtual networks are joined by a link labeled "VPN/Direct Connect (Encrypted Tunnel)", and each environment also connects to the Internet. After migration, application traffic follows new paths to the Users/Clients accessing the app.]

- Before Migration: The diagram shows the source cloud environment (AWS) and the target cloud environment (Azure) as separate entities. Each environment has its own virtual network, subnets, and security groups/network security groups. Application instances reside within these virtual networks, accessible through defined security rules. Connectivity between the source and target environments is either non-existent or minimal.

- During Migration: A secure connection is established between the source and target environments, typically a site-to-site IPsec VPN or a dedicated private link (for example, AWS Direct Connect paired with Azure ExpressRoute through a shared colocation facility or partner). This link carries data and traffic between the two clouds, enabling data synchronization and testing before cutover.

- After Migration: After the migration is complete, the application traffic is routed through the target cloud environment (Azure). The source cloud environment (AWS) may be decommissioned, or the connection may be maintained for disaster recovery or hybrid cloud scenarios. The security groups and network security groups in the target environment are configured to allow the application to function correctly. Users and clients now access the application through the target cloud environment.

Cost Optimization Strategies During Migration

Cloud migration, while offering significant benefits, can be a costly undertaking. Effective cost optimization is crucial to maximize the return on investment (ROI) and avoid unexpected expenses. This section focuses on strategies to minimize costs throughout the migration process, encompassing planning, execution, and post-migration operations.

Factors Impacting Cloud Migration Costs

Several factors significantly influence the total cost of a cloud migration project. Understanding these elements is essential for accurate budgeting and cost control.

- Assessment and Planning: The initial assessment phase can reveal hidden complexities and potential cost drivers. Inadequate planning can lead to inaccurate resource allocation, delays, and increased costs. Thoroughly evaluating existing infrastructure, application dependencies, and data volumes is vital.

- Migration Strategy: The chosen migration strategy (e.g., rehosting, replatforming, refactoring) directly impacts cost. Rehosting (lift-and-shift) is often the fastest and least expensive approach initially, but might not be optimal for long-term cost efficiency. Replatforming and refactoring involve more upfront investment but can lead to significant cost savings through optimized resource utilization and improved application performance.

- Data Transfer: Moving large datasets between clouds can incur significant costs, particularly over public internet connections. Inbound transfer is usually free, but the source provider typically charges egress fees based on the volume of data transferred out, with rates that vary by destination and method. Choosing cost-effective transfer methods, such as dedicated connections (which usually carry lower egress rates than the public internet) or offline data transfer services, is essential.

- Resource Utilization: Inefficient resource allocation, such as over-provisioning virtual machines (VMs) or using underutilized storage, leads to unnecessary costs. Right-sizing resources based on actual workload demands and implementing automated scaling can optimize resource utilization and reduce expenses.

- Ongoing Operations: Post-migration, operational costs, including compute, storage, networking, and data egress, contribute to the overall cloud bill. Continuously monitoring resource usage, optimizing application performance, and leveraging cost-saving features offered by cloud providers are crucial for long-term cost management.

- Skillset and Training: Lack of in-house expertise in cloud technologies can lead to reliance on external consultants, increasing project costs. Investing in training and upskilling internal teams can reduce dependency on expensive external resources and improve overall efficiency.

- Unexpected Issues: Unforeseen challenges, such as compatibility issues, performance bottlenecks, or security vulnerabilities, can arise during the migration process, leading to delays and increased costs. Comprehensive testing, robust contingency plans, and proactive issue resolution are essential to mitigate these risks.
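Because egress is usually billed per gigabyte, often in volume tiers, a rough transfer estimate is simple arithmetic. The sketch below assumes tiered per-GB pricing; the tier sizes and rates in `EXAMPLE_TIERS` are placeholders, not any provider's current prices, so substitute the figures from your provider's pricing page.

```python
def tiered_cost(gb: float, tiers: list[tuple[float, float]]) -> float:
    """Price a transfer volume against ordered tiers of (tier_size_gb, price_per_gb).

    Each tier bills up to its size before the next tier applies; give the last tier
    a size of float("inf") so every gigabyte is priced.
    """
    if gb < 0:
        raise ValueError("volume must be non-negative")
    cost, remaining = 0.0, gb
    for size_gb, price_per_gb in tiers:
        billed = min(remaining, size_gb)
        cost += billed * price_per_gb
        remaining -= billed
        if remaining <= 0:
            break
    return cost


# Placeholder tiers (size in GB, price per GB); NOT real provider prices.
EXAMPLE_TIERS = [
    (10_240, 0.09),        # first 10 TB
    (40_960, 0.085),       # next 40 TB
    (float("inf"), 0.07),  # everything beyond 50 TB
]

if __name__ == "__main__":
    for volume_gb in (1_000, 20_480, 102_400):
        print(f"{volume_gb:>7,} GB -> {tiered_cost(volume_gb, EXAMPLE_TIERS):,.2f}")
```

With these placeholder tiers, 20 TB (20,480 GB) costs about 1,792: 10,240 GB at 0.09 plus 10,240 GB at 0.085. Repeating the estimate with a dedicated-connection rate or an offline-transfer quote makes the trade-off between methods concrete.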

Tips for Optimizing Costs During and After Migration

Implementing specific strategies can help minimize costs throughout the migration lifecycle. These tips focus on practical actions to achieve cost efficiency.

- Choose the Right Migration Strategy: Select the migration approach that aligns with business goals and budget constraints. While rehosting may seem cost-effective initially, assess the long-term benefits of more sophisticated strategies like replatforming or refactoring. Consider the trade-offs between speed, cost, and application modernization.

- Optimize Data Transfer: Employ cost-effective data transfer methods. For very large datasets, consider provider services built for bulk transfer, such as AWS Snowball or Google Cloud Transfer Appliance, which can be faster and cheaper than sending the data over the network. Schedule large transfers during off-peak hours so they do not compete with production traffic for bandwidth.

- Right-Size Resources: Accurately assess resource requirements and right-size VMs and storage. Monitor resource utilization and adjust resource allocations dynamically to match workload demands. Avoid over-provisioning resources, which leads to unnecessary costs. Leverage cloud provider tools for automated scaling and resource optimization.

- Leverage Reserved Instances and Savings Plans: Cloud providers offer various pricing models, including reserved instances and savings plans, to provide discounts for long-term resource commitments. Evaluate these options to secure discounted pricing for predictable workloads. For example, AWS Reserved Instances can offer up to 72% discount compared to on-demand pricing, depending on the instance type and commitment period.

- Automate Infrastructure Management: Automate infrastructure provisioning, configuration, and management tasks using Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation. Automation reduces manual effort, minimizes errors, and improves efficiency, leading to cost savings.

- Monitor and Analyze Costs: Implement robust cost monitoring and analysis tools to track cloud spending in near real time. Identify cost drivers, optimize resource usage, and detect anomalies. Utilize cloud provider-specific cost management tools and third-party solutions to gain insights into spending patterns.

- Implement a FinOps Culture: Foster a FinOps culture within the organization, involving finance, operations, and engineering teams. Promote cost awareness, collaboration, and continuous optimization efforts. Establish clear cost allocation and reporting mechanisms to track spending and identify areas for improvement.

- Decommission Unused Resources: Regularly identify and decommission unused resources, such as idle VMs, storage volumes, and network resources. Unused resources incur unnecessary costs. Implement automated resource decommissioning policies to ensure resources are terminated when no longer needed.

- Optimize Storage Costs: Choose the appropriate storage tier based on data access frequency. Utilize cheaper storage tiers for infrequently accessed data, such as archival storage. Optimize data lifecycle management policies to automatically move data between different storage tiers based on access patterns.
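The advice on reserved instances and savings plans comes down to a break-even calculation: a commitment is billed for every hour of its term, while on-demand capacity is billed only for the hours it runs. The sketch below assumes you have already converted the commitment into an effective hourly rate (any upfront payment amortized over the term plus any hourly fee); it ignores other factors such as automatic sustained use discounts, exchange or resale options, and spot capacity.

```python
def breakeven_utilization(on_demand_hourly: float, commit_hourly: float) -> float:
    """Share of hours a resource must run for a commitment to cost the same as on-demand.

    commit_hourly is the effective hourly cost of the commitment: any upfront payment
    amortized over the term plus any recurring hourly fee. It is paid for every hour
    of the term, while on-demand is paid only for hours the resource actually runs.
    """
    if on_demand_hourly <= 0:
        raise ValueError("on-demand price must be positive")
    return commit_hourly / on_demand_hourly


def cheaper_option(on_demand_hourly: float, commit_hourly: float, utilization: float) -> str:
    """Return which pricing model has the lower expected cost at the given utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    on_demand_cost = on_demand_hourly * utilization  # billed only while running
    return "commitment" if commit_hourly < on_demand_cost else "on-demand"
```

With a hypothetical on-demand rate of 0.10 per hour and an effective commitment rate of 0.06 per hour, the break-even utilization is 0.6, so a resource expected to run more than about 60% of the time is cheaper on the commitment. This is why commitments suit the predictable workloads mentioned above.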

Cloud Provider Cost Models Comparison

Cloud providers offer various pricing models, each with its characteristics. Understanding these models is crucial for selecting the most cost-effective options. The following table compares the primary cost models offered by major cloud providers:

| Cost Model | AWS | Azure | Google Cloud |
|---|---|---|---|
| On-Demand | Pay-as-you-go pricing; no upfront commitment. Suitable for short-term workloads or unpredictable usage. | Pay-as-you-go pricing; no upfront commitment. Suitable for short-term workloads or unpredictable usage. | Pay-as-you-go pricing; no upfront commitment. Suitable for short-term workloads or unpredictable usage. |
| Reserved Instances/Committed Use Discounts | Significant discounts for committing to a specific instance type for a specific duration (1 or 3 years). | Significant discounts for committing to specific resources for a specific duration (1 or 3 years). | Sustained Use Discounts: applied automatically to eligible VMs that run for a large share of the month. Committed Use Discounts: discounts for committing to specific resource usage for a specific duration (1 or 3 years). |
| Spot Instances/Preemptible VMs | Significant discounts on spare compute capacity. Instances can be reclaimed with a two-minute interruption notice. | Significant discounts on spare compute capacity. VMs can be evicted with as little as 30 seconds' notice. | Spot VMs (successor to Preemptible VMs) offer significant discounts on spare compute capacity. VMs can be preempted with 30 seconds' notice. |
| Savings Plans | Flexible pricing model offering discounts in exchange for a commitment to a consistent amount of compute spend per hour (1 or 3 years), applicable across instance families. | Savings plan for compute: commit to a fixed hourly spend for 1 or 3 years; discounts apply across eligible VM sizes and regions, making it more flexible than reservations, usually with smaller discounts. | No product by this name; spend-based (flexible) committed use discounts serve a similar purpose, applying across eligible machine types in exchange for a committed hourly spend. |
| Pay-as-you-go Storage | Pay for storage used, with different storage tiers based on access frequency. | Pay for storage used, with different storage tiers based on access frequency. | Pay for storage used, with different storage tiers based on access frequency. |
| Data Transfer | Inbound transfer is generally free; outbound (egress) transfer to the internet or other clouds is charged per GB. Transfer within the same region is often free or low-cost. | Inbound transfer is generally free; outbound (egress) transfer to the internet or other clouds is charged per GB. Transfer within the same region is often free or low-cost. | Inbound transfer is generally free; outbound (egress) transfer to the internet or other clouds is charged per GB. Transfer within the same region is often free or low-cost. |

This table provides a general overview of the cost models. Specific pricing details and discounts can vary. It is essential to consult the cloud provider’s official pricing documentation for the most accurate and up-to-date information. For example, AWS Savings Plans can provide up to 72% savings compared to on-demand pricing, and Azure Reserved Instances can offer up to 70% savings.

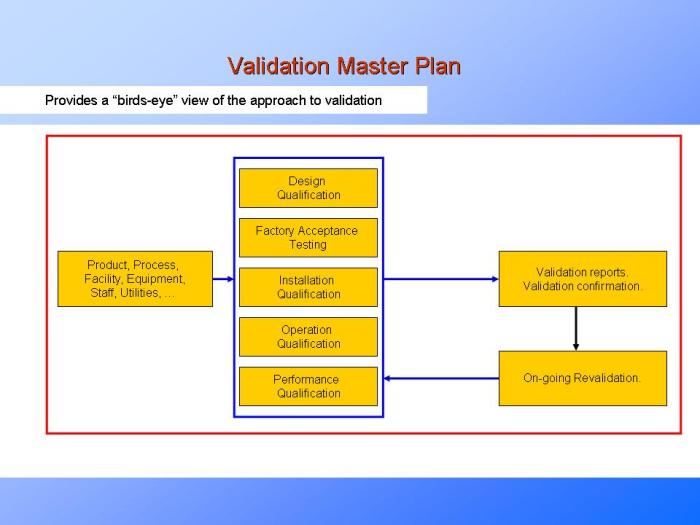

Testing and Validation Procedures

Thorough testing and validation are critical phases in cloud migration, ensuring that migrated workloads function correctly, perform adequately, and maintain their security posture in the new environment. These procedures minimize risks, surface potential issues early, and substantially improve the chances of a successful migration. Neglecting these steps can lead to service disruptions, data loss, and security vulnerabilities, undermining the benefits of cloud migration.

Testing Procedures for Validating Migrated Workloads

The validation process involves a series of tests designed to assess various aspects of the migrated workloads. These tests are typically conducted in a phased approach, progressing from basic functionality checks to comprehensive performance and security assessments.

- Functional Testing: This verifies that the migrated applications and services perform as expected and deliver the intended functionality.

  - Functional tests focus on the core features and functionalities of the application, ensuring they operate correctly in the new environment.

  - Test cases should cover all critical business processes, user workflows, and data interactions.

  - Examples include testing login functionality, data input and output, reporting capabilities, and integration with other systems.

  - Functional testing is often performed through a combination of manual testing and automated test suites.

- Performance Testing: Performance testing evaluates the responsiveness, stability, and scalability of the migrated workloads under various load conditions.

  - Performance tests assess the system's ability to handle expected and peak traffic volumes, ensuring that the application can maintain acceptable response times and throughput.

  - Tests typically include load testing, stress testing, and endurance testing.

    - Load testing simulates realistic user traffic to identify performance bottlenecks under normal operating conditions.

    - Stress testing pushes the system beyond its normal capacity to determine its breaking point and identify areas for optimization.

    - Endurance testing evaluates the system's performance over extended periods to identify memory leaks or other long-term issues.

  - Tools like Apache JMeter, LoadRunner, and Gatling are commonly used for performance testing.

- Security Testing: Security testing assesses the security posture of the migrated workloads, ensuring that they are protected against vulnerabilities and threats.

  - Security testing includes vulnerability scanning, penetration testing, and security audits.

    - Vulnerability scanning identifies known security weaknesses in the application code, infrastructure, and configurations.

    - Penetration testing simulates real-world attacks to assess the effectiveness of security controls.

    - Security audits review the security policies, procedures, and configurations to ensure compliance with security standards and regulations.

  - Tools like Nessus, OpenVAS, and Burp Suite are frequently used for security testing.

- Integration Testing: Integration testing verifies that the migrated application or service integrates correctly with other systems and services in the cloud environment.

  - Integration tests ensure that data flows seamlessly between different components and that the overall system functions as a cohesive unit.

  - This may involve testing API calls, data synchronization, and communication protocols.

- User Acceptance Testing (UAT): UAT involves end-users testing the migrated application or service to ensure it meets their requirements and expectations.

  - UAT provides the final validation before the workload is released to production.

  - Users execute predefined test cases and provide feedback on usability, functionality, and overall performance.
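After a load test with a tool such as JMeter or Gatling, raw response times are typically compared against latency targets at several percentiles. The minimal sketch below uses the nearest-rank percentile definition; load-testing tools may interpolate between samples, so their reported figures can differ slightly. The thresholds passed in are assumptions, to be replaced with your own SLA values.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank into the sorted samples
    return ordered[rank - 1]


def check_latency_slo(samples_ms: list[float], thresholds_ms: dict[float, float]) -> dict[float, bool]:
    """Map each percentile (e.g. 95.0) to whether the measured latency is within its threshold."""
    return {pct: percentile(samples_ms, pct) <= limit for pct, limit in thresholds_ms.items()}
```

Running the same check against measurements from the source environment gives a baseline, so a regression after migration shows up as a percentile that passed before and fails now.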

Importance of Functional, Performance, and Security Testing

Each type of testing plays a crucial role in ensuring a successful cloud migration. The combined results provide a holistic view of the migrated workload’s readiness for production.

- Functional Testing Significance:

  - Functional testing ensures that the core business logic and features operate as intended in the new environment.

  - It validates that user interactions, data processing, and integrations with other systems work seamlessly.

  - Failure to perform adequate functional testing can lead to significant disruptions, user dissatisfaction, and potentially financial losses.

- Performance Testing Significance:

  - Performance testing ensures that the migrated workload can handle the expected traffic and user load without performance degradation.

  - It identifies bottlenecks and performance issues that could impact user experience and business operations.

  - Without proper performance testing, migrated applications may become slow, unresponsive, or even unavailable during peak usage, leading to a poor user experience and potential business losses.

- Security Testing Significance:

  - Security testing is essential to protect sensitive data and prevent unauthorized access or malicious attacks.

  - It identifies vulnerabilities and weaknesses in the application and infrastructure, allowing for remediation before the workload is exposed to production traffic.

  - Failing to conduct security testing can expose the migrated workload to significant security risks, including data breaches, ransomware attacks, and regulatory non-compliance.

Process of Creating a Comprehensive Test Plan and Key Components

A well-defined test plan is essential for ensuring a structured and effective testing process. The plan outlines the scope, objectives, and methodology for testing the migrated workloads.

- Defining Test Objectives: The first step is to clearly define the objectives of the testing process.

- These objectives should align with the business requirements and goals of the cloud migration.

- Examples include verifying functionality, ensuring performance targets are met, and validating security controls.

- Identifying Test Scope: Define the scope of testing, including the specific components, features, and functionalities to be tested.

- This involves identifying the critical business processes and user workflows that must be validated.

- Consider the different environments that will be tested, such as development, staging, and production.

- Developing Test Cases: Create detailed test cases that specify the steps to be performed, the expected results, and the criteria for success.

- Test cases should cover all aspects of the application, including functionality, performance, and security.

- Each test case should have a clear purpose, specific inputs, and defined outputs.

- Prioritize test cases based on their criticality and risk.

- Selecting Testing Tools: Choose the appropriate testing tools based on the type of testing required and the specific technologies used in the migrated workload.

- Examples include tools for functional testing, performance testing, security scanning, and automation.

- Consider factors such as cost, ease of use, and integration capabilities when selecting testing tools.

- Establishing Test Environment: Set up the necessary test environments that accurately reflect the production environment.

- The test environment should include all the required hardware, software, and network configurations.

- Ensure that the test environment is isolated from the production environment to prevent any unintended impact.

- Executing Tests: Execute the test cases and document the results.

- Track the progress of testing and identify any issues or defects.

- Prioritize and address defects based on their severity and impact.

- Analyzing Test Results: Analyze the test results to identify any patterns, trends, or areas of concern.

- Use the results to determine whether the migrated workload meets the defined objectives.

- Generate reports summarizing the test results and providing recommendations for improvement.

- Key Components of a Test Plan: A comprehensive test plan should include the following components:

- Test Objectives: Clearly defined goals and objectives for the testing process.

- Test Scope: Definition of the specific areas, features, and functionalities to be tested.

- Test Environment: Description of the test environment, including hardware, software, and network configurations.

- Test Cases: Detailed test cases with steps, expected results, and pass/fail criteria.

- Test Data: Description of the data used for testing, including its source and format.

- Test Schedule: Timeline for executing the tests and reporting the results.

- Testing Tools: List of the testing tools to be used.

- Roles and Responsibilities: Assignment of roles and responsibilities for each team member involved in the testing process.

- Defect Management: Procedures for reporting, tracking, and resolving defects.

- Entry and Exit Criteria: Defined criteria for starting and completing the testing process.

- Risk Assessment: Identification of potential risks and mitigation strategies.
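The steps above (prioritizing test cases by criticality and risk, executing them, and checking exit criteria) can be sketched in Python. This is a minimal illustration under stated assumptions, not a prescribed tool. The `MigrationTestCase` class, the 1 to 5 criticality and risk scales, and the exit criteria (every criticality-5 case passes and at least 95% of all cases pass) are hypothetical choices. Each `check` callable stands in for a real functional, performance, or security test.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class MigrationTestCase:
    case_id: str
    purpose: str
    criticality: int           # hypothetical scale: 1 (low) to 5 (business-critical)
    risk: int                  # hypothetical scale: 1 (unlikely to fail) to 5 (likely to fail)
    check: Callable[[], bool]  # True when the actual output matches the expected output


def prioritize(cases: List[MigrationTestCase]) -> List[MigrationTestCase]:
    """Highest criticality x risk first; ties broken by criticality."""
    return sorted(cases, key=lambda c: (c.criticality * c.risk, c.criticality), reverse=True)


def execute(cases: List[MigrationTestCase]) -> Dict[str, bool]:
    """Run cases in priority order and record pass/fail for each case id."""
    results: Dict[str, bool] = {}
    for case in prioritize(cases):
        try:
            results[case.case_id] = bool(case.check())
        except Exception:
            # An unexpected error is recorded as a failure so it enters defect triage.
            results[case.case_id] = False
    return results


def exit_criteria_met(cases: List[MigrationTestCase], results: Dict[str, bool],
                      min_pass_rate: float = 0.95, critical_level: int = 5) -> bool:
    """Hypothetical exit criteria: all critical cases pass and the overall pass rate meets a threshold."""
    critical_ok = all(results[c.case_id] for c in cases if c.criticality >= critical_level)
    pass_rate = sum(results.values()) / len(results) if results else 0.0
    return critical_ok and pass_rate >= min_pass_rate


# Usage with three hypothetical test cases.
cases = [
    MigrationTestCase("TC-01", "user can log in after cutover", 5, 4, lambda: True),
    MigrationTestCase("TC-02", "monthly report exports as CSV", 2, 2, lambda: True),
    MigrationTestCase("TC-03", "checkout p95 latency under 200 ms", 5, 5, lambda: 180 < 200),
]
results = execute(cases)
print([c.case_id for c in prioritize(cases)])  # ['TC-03', 'TC-01', 'TC-02']
print(exit_criteria_met(cases, results))       # True
```

Scoring by the product of criticality and risk is one simple convention; a weighted sum or a lookup matrix would serve equally well as long as the plan states which one it uses.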

Post-Migration Optimization and Management

Following a successful cloud migration, the focus shifts from the mechanics of moving workloads to the ongoing management and optimization of the migrated environment. This phase is critical for realizing the full benefits of cloud adoption, including improved performance, cost efficiency, and operational agility. Effective post-migration strategies ensure that the cloud environment continues to meet business requirements and evolves to support future growth.

Optimizing Performance After Migration

Post-migration performance optimization is a continuous process that aims to ensure migrated workloads operate efficiently and meet service level agreements (SLAs). This involves several key steps, focusing on resource allocation, application tuning, and infrastructure configuration.

- Resource Allocation and Scaling: Optimizing resource allocation is a foundational step. It involves right-sizing virtual machines (VMs) and other cloud resources based on actual workload demands. Monitoring tools provide insights into resource utilization, identifying bottlenecks and underutilized resources.

- Right-sizing: Analyzing CPU, memory, and storage usage to determine the optimal resource configuration for each workload. For example, a web server that consistently experiences high CPU utilization during peak hours may require a larger instance size or auto-scaling to handle the load.

- Auto-scaling: Implementing auto-scaling policies that automatically adjust the number of running instances based on demand. This ensures that applications can handle fluctuations in traffic without manual intervention. Auto-scaling can be configured based on various metrics, such as CPU utilization, network traffic, or queue depth.

- Application Tuning: Optimizing the application code and configuration is crucial for performance. This involves identifying and addressing performance bottlenecks within the application itself.

- Code Optimization: Reviewing application code for inefficiencies, such as poorly written database queries, inefficient algorithms, or excessive resource usage. Profiling tools can help identify performance hotspots in the code.

- Database Optimization: Optimizing database performance by tuning database configurations, indexing tables appropriately, and optimizing query execution plans. This can significantly improve application response times.

- Caching: Implementing caching mechanisms to reduce the load on backend systems and improve response times. Caching can be applied at various levels, including the application layer, database layer, and content delivery network (CDN).

- Infrastructure Configuration: Proper configuration of the underlying infrastructure is essential for optimal performance. This includes network configuration, storage optimization, and security settings.

- Network Optimization: Tuning the network configuration to provide sufficient bandwidth, low latency, and high throughput for communication between application components and users. Implementing content delivery networks (CDNs) can improve content delivery performance for geographically distributed users.

- Storage Optimization: Selecting the appropriate storage type and configuring storage settings to optimize performance. For example, using SSD-based storage for applications with high I/O requirements can significantly improve performance.

- Load Balancing: Distributing traffic across multiple instances of an application to improve performance, availability, and scalability. Load balancers can also provide health checks to automatically route traffic away from unhealthy instances.

- Performance Testing and Monitoring: Continuous performance testing and monitoring are essential for identifying and addressing performance issues proactively.

- Load Testing: Simulating realistic user traffic to identify performance bottlenecks and ensure that the application can handle peak loads.

- Performance Monitoring: Monitoring key performance indicators (KPIs), such as response times, error rates, and resource utilization, to identify performance issues and trends.
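The right-sizing and auto-scaling steps can be made concrete with a little arithmetic. The Python sketch below uses hypothetical names and defaults (`right_size_vcpus`, a 60% utilization target, the 95th percentile) and assumes CPU demand scales linearly with vCPU count, which is only a first approximation. The scaling function applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), without the tolerance and stabilization windows that production autoscalers add.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def right_size_vcpus(cpu_samples: Sequence[float], current_vcpus: int,
                     target_util: float = 60.0, pct: float = 95.0) -> int:
    """Suggest a vCPU count that keeps the pct-th percentile utilization at or below target_util.

    Assumes CPU demand scales linearly with vCPU count (a simplifying assumption).
    """
    busy_vcpus = percentile(cpu_samples, pct) / 100 * current_vcpus
    return max(1, math.ceil(busy_vcpus / (target_util / 100)))


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional rule ceil(current * metric / target), clamped to the allowed range."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return min(max(desired, min_replicas), max_replicas)


# Hypothetical hourly CPU utilization (%) for an 8-vCPU web server.
cpu = [20, 25, 30, 22, 28, 35, 40, 24, 26, 30]
print(right_size_vcpus(cpu, current_vcpus=8))                    # 6
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```

With a 95th-percentile utilization of 40% on 8 vCPUs, about 3.2 vCPUs are busy, so 6 vCPUs keep that peak at or below 60%. The same proportional logic scales 4 replicas running at 90% CPU up to 6 and shrinks them to 2 when CPU drops to 20%.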
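Caching at the application layer often follows the cache-aside pattern: check the cache first, and on a miss load from the backend and store the result with an expiry time. The Python class below is an illustrative, single-process sketch (not thread-safe, no size limit). `TTLCache` and the `load_product` loader are hypothetical names; a production system would more likely use a managed cache service or an established caching library.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Cache-aside helper with per-entry expiry (illustrative; not thread-safe, unbounded)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable clock so expiry can be tested deterministically
        self._store: Dict[Any, Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: Any, loader: Callable[[Any], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Miss or expired entry: query the backend and cache the result.
        self.misses += 1
        value = loader(key)
        self._store[key] = (now + self.ttl, value)
        return value


calls = []


def load_product(product_id):
    """Hypothetical stand-in for a database query."""
    calls.append(product_id)
    return {"id": product_id}


fake_now = [0.0]
cache = TTLCache(ttl_seconds=30, clock=lambda: fake_now[0])
cache.get_or_load(1, load_product)  # miss: goes to the backend
cache.get_or_load(1, load_product)  # hit: served from the cache
fake_now[0] = 31.0
cache.get_or_load(1, load_product)  # expired: goes to the backend again
print(len(calls), cache.hits, cache.misses)  # 2 1 2
```

The TTL bounds how stale a cached value can be; shorter TTLs reduce staleness at the cost of more backend load.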
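Load balancing with health checks can be illustrated with a round-robin balancer that skips any backend whose most recent check failed. This Python sketch is a simplification under stated assumptions: the backend addresses and the `health_check` callable are hypothetical, and a single pass/fail result replaces the consecutive-success and consecutive-failure thresholds that managed load balancers typically apply.

```python
import itertools
from typing import Callable, Dict, List


class RoundRobinBalancer:
    """Round-robin over backends, skipping those that failed the last health check."""

    def __init__(self, backends: List[str], health_check: Callable[[str], bool]):
        self.backends = list(backends)
        self.health_check = health_check
        self.healthy: Dict[str, bool] = {b: True for b in self.backends}
        self._cycle = itertools.cycle(self.backends)

    def run_health_checks(self) -> None:
        # In a real balancer this runs periodically (e.g. an HTTP probe per backend).
        for backend in self.backends:
            self.healthy[backend] = self.health_check(backend)

    def next_backend(self) -> str:
        # Consider each backend at most once per request.
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if self.healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy backends")


down = {"10.0.0.2"}  # hypothetical failing instance
lb = RoundRobinBalancer(["10.0.0.1", "10.0.0.2", "10.0.0.3"], lambda b: b not in down)
lb.run_health_checks()
picks = [lb.next_backend() for _ in range(4)]
print(picks)  # ['10.0.0.1', '10.0.0.3', '10.0.0.1', '10.0.0.3']
```

Traffic automatically returns to `10.0.0.2` once a later health check passes, which is the behavior the load-balancing bullet describes.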

Checklist for Managing Migrated Workloads and Ensuring Operational Efficiency

Managing migrated workloads requires a systematic approach to ensure ongoing operational efficiency and reliability. This checklist provides a structured framework for managing the cloud environment.

- Monitoring and Alerting:

- Implement comprehensive monitoring of all migrated workloads and infrastructure components.

- Configure alerts for critical events and performance thresholds.

- Regularly review and refine monitoring configurations to ensure accuracy and relevance.

- Cost Management:

- Regularly monitor cloud spending and identify cost optimization opportunities.

- Implement cost allocation tags to track spending by department, project, or application.

- Utilize cloud provider cost management tools to set budgets and receive alerts.

- Security Management:

- Implement security best practices, including access controls, encryption, and vulnerability scanning.

- Regularly review and update security configurations to address new threats and vulnerabilities.

- Monitor security logs and events for suspicious activity.

- Backup and Disaster Recovery:

- Implement a robust backup and disaster recovery plan to protect against data loss and downtime.

- Regularly test backup and recovery procedures to ensure they function correctly.

- Automate backup and recovery processes to minimize manual intervention.

- Change Management:

- Establish a formal change management process to control changes to the cloud environment.

- Document all changes and ensure proper testing before implementation.

- Maintain a change log to track all changes and their impact.

- Performance Tuning:

- Continuously monitor application and infrastructure performance.

- Identify and address performance bottlenecks through optimization and resource adjustments.

- Regularly review and refine performance tuning configurations.

- Documentation:

- Maintain up-to-date documentation of the cloud environment, including architecture diagrams, configuration settings, and operational procedures.

- Ensure documentation is easily accessible and readily available to relevant personnel.

- Regularly review and update documentation to reflect changes in the environment.
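Cost allocation tags pay off when spending is grouped by tag and compared against budgets. The Python sketch below works on hypothetical billing line items (resource id, tags, cost) rather than calling any provider API; in practice the rows would come from the provider's billing export or cost management tooling. The `project` tag key, the budget amounts, and the 80% and 100% alert thresholds are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# Hypothetical billing line item: (resource id, tags, month-to-date cost in USD).
LineItem = Tuple[str, Dict[str, str], float]


def cost_by_tag(items: List[LineItem], tag_key: str) -> Dict[str, float]:
    """Sum cost per tag value; resources missing the tag are grouped as 'untagged'."""
    totals: Dict[str, float] = defaultdict(float)
    for _resource_id, tags, cost in items:
        totals[tags.get(tag_key, "untagged")] += cost
    return dict(totals)


def budget_alerts(totals: Dict[str, float], budgets: Dict[str, float],
                  thresholds: Sequence[float] = (0.8, 1.0)) -> List[Tuple[str, float]]:
    """Return (group, highest threshold crossed) for groups whose spend crossed a budget fraction."""
    alerts = []
    for group, spent in sorted(totals.items()):
        budget = budgets.get(group)
        if not budget:
            continue  # no budget defined for this group
        crossed = [t for t in thresholds if spent >= t * budget]
        if crossed:
            alerts.append((group, max(crossed)))
    return alerts


items = [
    ("vm-web-1", {"project": "checkout"}, 420.0),
    ("vm-web-2", {"project": "checkout"}, 410.0),
    ("db-1", {"project": "analytics"}, 300.0),
    ("bucket-tmp", {}, 45.5),
]
totals = cost_by_tag(items, "project")
alerts = budget_alerts(totals, {"checkout": 1000.0, "analytics": 250.0})
print(totals)  # {'checkout': 830.0, 'analytics': 300.0, 'untagged': 45.5}
print(alerts)  # [('analytics', 1.0), ('checkout', 0.8)]
```

The explicit `untagged` bucket makes gaps in tagging discipline visible, which is often the first cost-management finding after a migration.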
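Testing a backup means actually restoring it and confirming that the restored data matches the original. The Python sketch below demonstrates only that verification step, using local files and SHA-256 checksums; the directories stand in for real backup targets such as volume snapshots or object storage, and `backup_and_verify_restore` is a hypothetical helper, not a provider API.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Stream the file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify_restore(source: Path, backup_dir: Path, restore_dir: Path) -> bool:
    """Copy source to backup_dir, restore it into restore_dir, and compare checksums."""
    backup_copy = Path(shutil.copy2(source, backup_dir / source.name))
    restored = Path(shutil.copy2(backup_copy, restore_dir / source.name))
    return sha256_of(source) == sha256_of(restored)


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ("data", "backup", "restore"):
        (root / name).mkdir()
    src = root / "data" / "orders.csv"  # hypothetical data file
    src.write_text("id,total\n1,9.99\n")
    ok = backup_and_verify_restore(src, root / "backup", root / "restore")
    print(ok)  # True
```

Automating a check like this on a schedule turns "regularly test backup and recovery procedures" into a recurring, auditable job rather than a manual task.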

Monitoring Tools and Their Roles in Maintaining Health and Performance

Effective monitoring is essential for maintaining the health and performance of migrated workloads. Various monitoring tools provide insights into different aspects of the cloud environment, enabling proactive identification and resolution of issues.

- Cloud Provider Native Monitoring Tools: These tools are provided by the cloud provider and offer comprehensive monitoring capabilities for resources within their platform.

- Amazon CloudWatch (AWS): Provides real-time monitoring, logging, and alerting for AWS resources and applications. It monitors metrics such as CPU utilization, network traffic, and disk I/O. CloudWatch also offers custom metrics and dashboards for application-specific monitoring.

- Azure Monitor (Azure): Provides a comprehensive monitoring solution for Azure resources and applications. It collects and analyzes data from various sources, including logs, metrics, and Application Insights telemetry. Azure Monitor also offers alerting and automated remediation capabilities.

- Google Cloud Monitoring (GCP): Offers monitoring and alerting for Google Cloud resources and applications. It provides detailed metrics and dashboards for monitoring performance, health, and availability, and integrates with other Google Cloud services, such as Cloud Logging and Cloud Trace.

- Application Performance Monitoring (APM) Tools: APM tools provide detailed insights into application performance, helping to identify and resolve performance bottlenecks within the application code.

- New Relic: Offers a comprehensive APM solution that provides real-time monitoring, alerting, and analytics for applications. It monitors application performance, infrastructure, and end-user experience. New Relic provides detailed insights into code-level performance, database queries, and external service calls.

- Dynatrace: Provides AI-powered monitoring and analytics for applications and infrastructure. It automatically discovers and monitors all components of the application stack, providing real-time insights into performance and health. Dynatrace uses AI to identify and prioritize performance issues.

- AppDynamics: Offers an APM solution that provides real-time monitoring, alerting, and analytics for applications. It monitors application performance, infrastructure, and business transactions. AppDynamics provides detailed insights into code-level performance, database queries, and end-user experience.

- Infrastructure Monitoring Tools: These tools focus on monitoring the underlying infrastructure, including servers, networks, and storage.

- Prometheus: An open-source monitoring and alerting toolkit. It collects metrics from various sources and provides a powerful query language for analyzing data. Prometheus is well-suited for monitoring containerized environments.

- Grafana: An open-source data visualization and monitoring tool. It integrates with various data sources, including Prometheus, and provides customizable dashboards for visualizing metrics and alerts.

- Nagios: A popular open-source monitoring system that monitors servers, services, and applications. It provides alerting and notification capabilities and can be extended with plugins to monitor various aspects of the infrastructure.

- Log Management Tools: Log management tools collect, analyze, and visualize logs from various sources, helping to identify and troubleshoot issues.

- Splunk: A powerful log management and analytics platform that collects, indexes, and analyzes machine data. It provides advanced search and reporting capabilities and can be used to monitor security events, application logs, and infrastructure performance.

- ELK Stack (Elasticsearch, Logstash, Kibana): An open-source log management solution. Elasticsearch is a search and analytics engine, Logstash is a data processing pipeline, and Kibana is a data visualization tool. The ELK stack is used to collect, process, and visualize logs from various sources.

- Network Monitoring Tools: These tools focus on monitoring network performance, identifying network bottlenecks, and troubleshooting network-related issues.

- SolarWinds Network Performance Monitor: Provides comprehensive network monitoring capabilities, including monitoring of network devices, bandwidth usage, and application performance.

- Wireshark: A network protocol analyzer that captures and analyzes network traffic. It is used to troubleshoot network issues and analyze network performance.
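Alerting rules in tools such as these commonly require a condition to hold across several recent datapoints rather than a single sample, which avoids alerting on a brief spike. The Python class below sketches that evaluation, modeled loosely on Amazon CloudWatch's "M out of N" alarms (datapoints to alarm out of evaluation periods). The class name, the two-state OK/ALARM model (CloudWatch also has an INSUFFICIENT_DATA state), the greater-than comparison, and the sample values are assumptions for illustration.

```python
from collections import deque
from typing import Deque


class ThresholdAlarm:
    """ALARM when at least `datapoints_to_alarm` of the last `evaluation_periods`
    datapoints are above the threshold; otherwise OK (simplified two-state model)."""

    def __init__(self, threshold: float, evaluation_periods: int, datapoints_to_alarm: int):
        if not 1 <= datapoints_to_alarm <= evaluation_periods:
            raise ValueError("need 1 <= datapoints_to_alarm <= evaluation_periods")
        self.threshold = threshold
        self.datapoints_to_alarm = datapoints_to_alarm
        # Sliding window of breach flags; the oldest flag drops off automatically.
        self.window: Deque[bool] = deque(maxlen=evaluation_periods)
        self.state = "OK"

    def observe(self, value: float) -> str:
        self.window.append(value > self.threshold)  # greater-than comparison assumed
        breaches = sum(self.window)
        self.state = "ALARM" if breaches >= self.datapoints_to_alarm else "OK"
        return self.state


# Hypothetical CPU utilization datapoints (%), alarming on 3 of the last 5 above 80%.
alarm = ThresholdAlarm(threshold=80.0, evaluation_periods=5, datapoints_to_alarm=3)
states = [alarm.observe(v) for v in [70, 85, 90, 60, 95, 50, 40, 30]]
print(states)  # ['OK', 'OK', 'OK', 'OK', 'ALARM', 'ALARM', 'OK', 'OK']
```

The alarm only fires at the fifth datapoint, when the third breach lands inside the five-sample window, and clears once older breaches slide out.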

Final Conclusion

Migrating workloads between cloud providers is a strategic undertaking that demands careful planning, rigorous execution, and continuous monitoring. By understanding the various migration strategies, mastering data transfer techniques, and optimizing costs, organizations can harness the full potential of multi-cloud environments. This approach not only provides greater flexibility and resilience but also positions businesses to leverage the unique strengths of each cloud provider.

The journey, while complex, is ultimately rewarding, offering enhanced agility, cost-effectiveness, and a competitive edge in today’s dynamic digital landscape.

Common Queries

What are the primary reasons for migrating workloads to a different cloud provider?

Primary motivations include cost optimization (seeking lower prices), performance improvements (better services), compliance requirements (meeting specific regulations), disaster recovery (improving redundancy), and avoiding vendor lock-in (gaining flexibility).

What are the common challenges faced during cloud migration?

Common challenges include application downtime, data transfer complexities, security concerns, compatibility issues, cost overruns, and the need for specialized skills and expertise.

How do I choose the right migration strategy for my workload?

The optimal strategy depends on the workload type, complexity, and business requirements. Consider factors like the application’s architecture, desired level of change, budget constraints, and required downtime. Options include rehosting (lift-and-shift), re-platforming, refactoring, and re-architecting.

What is the importance of testing after migration?