Ensuring system reliability often depends on Factor IX, which focuses on the balance between fast startup and graceful shutdown. This article explores how to optimize both so that a system stays robust and resilient under real operational demands.

This guide explores the core principles of Factor IX: the metrics that define fast startup, the essential requirements for graceful shutdown, and the techniques needed to implement both effectively. It also covers fault tolerance, monitoring, and testing, providing a roadmap for building systems that are both efficient and dependable.

Understanding Factor IX and its Role in System Reliability

Factor IX, called “Disposability” in the Twelve-Factor App methodology, holds that processes can be started or stopped at a moment’s notice. In system design terms, it focuses on ensuring that a system is resilient and can maintain its functionality even when facing unexpected events or failures. It’s a crucial aspect of building robust and dependable systems, especially in environments where downtime or data loss is unacceptable. Understanding Factor IX helps developers and architects create systems that can withstand various challenges and provide consistent service.

Function of Factor IX in a System Context

Factor IX encompasses several key aspects, including rapid startup, graceful shutdown, and fault tolerance. Together, these elements let the system recover from errors and continue operating effectively.

It focuses on preventing cascading failures and minimizing the impact of individual component malfunctions.

Definition of System Robustness

System robustness refers to a system’s ability to function correctly and reliably under various conditions, including adverse circumstances and unexpected inputs. A robust system can handle errors, recover from failures, and adapt to changing environments without compromising its core functionality. It’s about more than just avoiding errors; it’s about designing a system that can gracefully degrade its performance or continue operating in a limited capacity when faced with challenges.

Robustness is often achieved through redundancy, fault tolerance mechanisms, and careful error handling.

Implications of Factor IX Failures

Failing to adhere to Factor IX principles can have significant consequences for a system, ranging from minor inconveniences to catastrophic events. For example, a system that lacks graceful shutdown can corrupt data when it is stopped in the middle of a write, such as during a deployment or restart. Without rapid startup, the system may be unavailable for extended periods following a failure, leading to significant business disruption. Without fault tolerance, a single component failure could bring down the entire system.

These failures can result in financial losses, reputational damage, and a loss of user trust. A lack of focus on Factor IX often manifests as increased downtime, data loss, and a higher cost of maintenance.

The core principles of Factor IX are:

- Fault Tolerance: The system should be designed to withstand component failures without complete system failure. This often involves redundancy and automatic failover mechanisms.

- Rapid Startup: The system should be able to quickly recover from failures and resume normal operation.

- Graceful Shutdown: The system should be able to shut down cleanly and preserve data integrity, minimizing data loss and preventing corruption.

- Error Handling: The system should be designed to anticipate and handle errors gracefully, preventing them from cascading and causing larger problems.

Defining Fast Startup

Understanding and optimizing system startup time is crucial for achieving high availability and a positive user experience. A slow startup can lead to frustration, wasted time, and potential operational inefficiencies. Conversely, a fast startup contributes significantly to system responsiveness, allowing services to become available quickly after an outage or restart. This section delves into the key metrics, trade-offs, and strategies involved in defining and achieving a fast startup.

Critical Metrics for Measuring Startup Time

Accurately measuring startup time requires the use of several key metrics. These metrics provide a comprehensive view of the startup process, allowing for targeted optimization efforts.

- Time to First Byte (TTFB): In a startup context, this metric measures the time from launching the process until the server returns the first byte of a response to its first request. It is a crucial indicator of initial system readiness and responsiveness. A low TTFB signifies that the system is quickly processing and serving requests.

- Time to Service Availability: This metric quantifies the duration from the initiation of the startup process until all essential services are fully operational and ready to accept and process requests. It includes the time required for initialization of all dependencies.

- Time to Full Functionality: This metric measures the time it takes for all components and features of the system to be fully loaded and operational. It is often more relevant for complex applications with numerous modules or features.

- Resource Utilization During Startup: Tracking CPU, memory, and disk I/O utilization during startup is crucial. High resource utilization can indicate bottlenecks that slow down the process. Monitoring resource usage helps identify areas for optimization, such as parallelizing tasks or optimizing I/O operations.
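A minimal sketch of how these metrics might be captured in-process, assuming the startup sequence can be split into named phases. `StartupTimer` and the phase names are hypothetical; `time.monotonic()` is used because it is not affected by wall-clock adjustments.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator, Optional


class StartupTimer:
    """Hypothetical helper that records per-phase startup durations."""

    def __init__(self) -> None:
        self.t0 = time.monotonic()                 # when startup began
        self.phases: Dict[str, float] = {}         # phase name -> seconds
        self.ready_after: Optional[float] = None   # seconds until service is ready

    @contextmanager
    def phase(self, name: str) -> Iterator[None]:
        start = time.monotonic()
        try:
            yield
        finally:
            self.phases[name] = time.monotonic() - start

    def mark_ready(self) -> None:
        # Time to Service Availability: elapsed time until requests can be accepted.
        self.ready_after = time.monotonic() - self.t0


timer = StartupTimer()
with timer.phase("load_config"):
    time.sleep(0.01)   # stand-in for reading configuration files
with timer.phase("connect_db"):
    time.sleep(0.02)   # stand-in for opening database connections
timer.mark_ready()

for name, secs in timer.phases.items():
    print(f"{name}: {secs * 1000:.1f} ms")
print(f"time to service availability: {timer.ready_after * 1000:.1f} ms")
```

In a real service these numbers would typically be exported to the monitoring system rather than printed, so that startup regressions show up across deployments.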

Trade-offs Between Startup Speed and Resource Utilization

Optimizing for fast startup often involves trade-offs, primarily between speed and resource utilization. Balancing these considerations is essential for creating a robust and efficient system.

- Caching vs. Initialization: Caching frequently accessed data can significantly reduce startup time by avoiding costly operations such as database queries. However, caching consumes memory. The trade-off involves deciding what data to cache and the amount of memory to allocate.

- Lazy Loading vs. Eager Loading: Eager loading, where components are loaded immediately, lengthens the initial startup process and raises resource consumption, particularly memory, but every component is ready on first use. Lazy loading, which delays loading until a component is needed, speeds up startup and conserves resources but may slow down the first use of that component.

- Parallelization vs. Contention: Parallelizing startup tasks can reduce overall startup time. However, excessive parallelization can lead to resource contention, such as competing for CPU time or disk I/O, which can negate the benefits of parallelism.

- Precompilation vs. On-Demand Compilation: Precompiling code, such as with ahead-of-time (AOT) compilation, can speed up startup by removing the need to compile at runtime. However, it can increase build times and the size of the deployed artifacts. On-demand (just-in-time) compilation can keep deployed artifacts smaller, but it may delay the first execution of each code path.
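The lazy-versus-eager trade-off can be illustrated with Python’s `functools.cached_property`, which defers an expensive initializer until first access and then reuses the result. The `ReportService` class and its `templates` attribute are hypothetical stand-ins for a costly component.

```python
import functools
import time


class ReportService:
    def __init__(self) -> None:
        self.load_count = 0  # how many times the expensive load actually ran

    @functools.cached_property
    def templates(self) -> dict:
        # Lazy: runs on first access, not during startup.
        self.load_count += 1
        time.sleep(0.05)  # stand-in for parsing many template files
        return {"invoice": "<h1>Invoice</h1>"}


svc = ReportService()      # construction is cheap: nothing is loaded yet
print(svc.load_count)      # 0
svc.templates["invoice"]   # first use pays the loading cost
svc.templates["invoice"]   # later uses hit the cached value
print(svc.load_count)      # 1
```

To switch to eager loading, access `svc.templates` once during startup: startup becomes slower, but the first request no longer pays the loading cost.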

Comparison of Startup Strategies

Various startup strategies can be employed, each with its own advantages and disadvantages. The best approach depends on the specific system requirements and constraints. The following table compares several common strategies.

| Startup Strategy | Description | Advantages | Disadvantages |
|---|---|---|---|
| Sequential Startup | Components are started one after another, in a predefined order. | Simple to implement; easier to debug and troubleshoot. | Slowest startup time; prone to bottlenecks. |
| Parallel Startup | Multiple components are started simultaneously. | Faster startup time; utilizes multiple cores. | More complex to implement; requires careful dependency management to avoid conflicts. |
| Lazy Loading | Components are loaded only when they are needed. | Reduces initial resource consumption; shortens the time until the core system is usable. | Slower first use of components; can lead to performance degradation if not managed carefully. |
| Caching and Preloading | Frequently accessed data and components are loaded into memory during startup. | Faster access to cached data; improves responsiveness. | Increased memory consumption; longer startup if preloading is extensive; requires careful management of cache invalidation. |

Impact of Hardware Configurations on Startup Performance

The hardware configuration significantly influences startup performance. The choice of hardware components can either accelerate or decelerate the startup process.

- CPU: A faster CPU with more cores can significantly improve startup time by letting CPU-bound startup tasks run truly in parallel. For example, a multi-core processor can initialize multiple services simultaneously, whereas a single-core system can only interleave them.

- Memory (RAM): Sufficient RAM is crucial. Insufficient RAM can lead to excessive swapping to disk, severely slowing down startup. Consider the memory footprint of all running processes.

- Storage (SSD vs. HDD): Solid-state drives (SSDs) provide significantly faster read/write speeds than traditional hard disk drives (HDDs). An SSD can reduce the time required to load operating systems, applications, and data, thus dramatically improving startup time. For instance, an operating system installed on an SSD often boots in seconds, whereas the same system on an HDD may take minutes.

- Network Interface: The network interface card (NIC) speed influences the time required to establish network connections and load network-dependent services. Faster NICs facilitate quicker access to network resources during startup.

Implementing Fast Startup Techniques

Optimizing startup speed is crucial for enhancing system responsiveness and user experience. By implementing specific techniques, you can significantly reduce the time it takes for your system to become operational. This section will explore various methods to achieve faster startup times, focusing on component loading, process parallelization, and caching strategies.

Optimizing the Loading of Critical Components

Prioritizing the loading of essential components is a key strategy for accelerating startup. This involves identifying the core elements required for initial functionality and ensuring they are loaded promptly.

- Identifying Critical Components: Determine the absolute minimum set of components needed for the system to be usable. This might include core libraries, essential drivers, and the primary user interface elements. For example, in a web application, the initial HTML, CSS, and JavaScript files required for rendering the basic page structure and interactive elements should be considered critical.

- Lazy Loading of Non-Critical Components: Defer the loading of less essential components until after the core system is operational. This can significantly reduce initial startup time. Consider a desktop application: features like advanced settings panels or secondary plugins can be loaded on demand.

- Optimized Code and Data Structures: Ensure that critical components are built with efficiency in mind. This includes optimized code, minimal dependencies, and efficient data structures. Using algorithms with lower time complexity (e.g., O(log n) instead of O(n)) can substantially speed up component initialization.

- Pre-compilation and Code Generation: Pre-compiling code or generating code at build time can reduce runtime overhead. For example, a build step can generate serialization code or precompute lookup tables so that no reflection or parsing is needed at startup.
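A minimal sketch of loading critical components first, assuming a service whose readiness depends only on a core component while an optional plugin set can finish in the background. The function names are hypothetical; `threading.Event` objects signal when each part is done.

```python
import threading
import time
from typing import List

ready = threading.Event()           # set once critical components are loaded
plugins_loaded = threading.Event()  # set once optional plugins finish loading
loaded: List[str] = []


def load_core() -> None:
    loaded.append("core")           # stand-in for config, DB pool, HTTP routes


def load_plugins() -> None:
    time.sleep(0.05)                # stand-in for slow, optional work
    loaded.append("plugins")
    plugins_loaded.set()


def start() -> None:
    load_core()                     # critical path: done synchronously
    ready.set()                     # the service can start accepting requests now
    # Non-critical work continues on a daemon thread so it never blocks readiness.
    threading.Thread(target=load_plugins, daemon=True).start()


start()
print(ready.is_set(), loaded)       # plugins are usually still loading at this point
plugins_loaded.wait()
print(loaded)                       # ['core', 'plugins']
```

A readiness check would report healthy as soon as `ready` is set, while requests that need a plugin either wait on `plugins_loaded` or return a temporary "not available yet" response.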

Best Practices for Parallelizing Startup Processes

Parallelizing startup processes involves executing multiple tasks concurrently, rather than sequentially. This approach can drastically reduce overall startup time by leveraging multi-core processors.

- Identify Independent Tasks: Analyze the startup sequence to identify tasks that can be executed independently without dependencies. For example, initializing different modules or services that do not rely on each other can be done in parallel.

- Use Multithreading or Multiprocessing: Implement multithreading or multiprocessing to execute independent tasks concurrently. Threads work well for I/O-bound tasks; for CPU-bound tasks, multiprocessing is often preferred in runtimes where threads cannot execute code in parallel, such as CPython with its global interpreter lock.

- Task Scheduling and Synchronization: Implement a robust task scheduler to manage the parallel execution of tasks. Use synchronization mechanisms (e.g., mutexes, semaphores) to coordinate tasks that share resources or have dependencies.

- Monitoring and Tuning: Continuously monitor the performance of parallel startup processes and tune the system to optimize resource utilization and minimize contention. Tools like profilers can help identify bottlenecks in parallel execution.
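The practices above can be combined into a dependency-aware parallel startup. This sketch assumes hypothetical service names and a hypothetical dependency graph, and that each start is I/O-bound so threads are appropriate. It uses the standard library’s `graphlib.TopologicalSorter` (Python 3.9+) to release a service only when all of its dependencies have finished, and a `ThreadPoolExecutor` to start independent services concurrently.

```python
import graphlib
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from typing import Dict, List, Set

# Hypothetical services mapped to the services they depend on.
DEPENDENCIES: Dict[str, Set[str]] = {
    "config": set(),
    "database": {"config"},
    "cache": {"config"},
    "http": {"database", "cache"},
}

started: List[str] = []


def start_service(name: str) -> str:
    # Stand-in for real initialization work (I/O-bound, so threads are fine).
    started.append(name)
    return name


def parallel_startup(deps: Dict[str, Set[str]], max_workers: int = 4) -> None:
    sorter = graphlib.TopologicalSorter(deps)
    sorter.prepare()                        # raises graphlib.CycleError on cycles
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        pending = {}
        while sorter.is_active():
            # Submit every service whose dependencies have all finished.
            for name in sorter.get_ready():
                pending[pool.submit(start_service, name)] = name
            done, _ = wait(pending, return_when=FIRST_COMPLETED)
            for fut in done:
                fut.result()                # re-raise any startup failure
                sorter.done(pending.pop(fut))


parallel_startup(DEPENDENCIES)
print(started)  # "config" first, "http" last; "database" and "cache" in either order
```

Because `sorter.done()` is called only after a service’s future completes, `http` cannot start until both `database` and `cache` have finished, while those two run at the same time.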

The Role of Caching in Accelerating Startup

Caching involves storing frequently accessed data in a readily accessible location, such as memory or a local disk. This can significantly reduce the time required to load data during startup.

- Caching Static Resources: Cache static resources such as configuration files, libraries, and data that are frequently accessed during startup. This eliminates the need to read these resources from slower storage each time the system starts.

- Pre-Fetching Data: Proactively fetch data that is likely to be needed during startup and store it in the cache. For example, a game might pre-fetch level data or textures.

- Warm-up Phase: Implement a warm-up phase during startup where the system performs initial operations, such as establishing database connections or pre-compiling shaders, to populate caches and connection pools before the first real request arrives.

- Cache Invalidation Strategies: Implement appropriate cache invalidation strategies to ensure that cached data remains up-to-date. This can involve time-based invalidation, event-based invalidation, or manual invalidation.
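A minimal sketch of a warm-up phase combined with time-based invalidation. `TTLCache`, `load_setting`, and the 60-second TTL are hypothetical; the injectable clock exists only so the example can simulate expiry without waiting. The cache is not thread-safe.

```python
import time
from typing import Any, Callable, Dict, Iterable, List, Tuple


class TTLCache:
    """Tiny cache with time-based invalidation (illustrative, not thread-safe)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._data: Dict[str, Tuple[float, Any]] = {}  # key -> (expiry time, value)

    def put(self, key: str, value: Any) -> None:
        self._data[key] = (self.clock() + self.ttl, value)

    def get(self, key: str, loader: Callable[[str], Any]) -> Any:
        entry = self._data.get(key)
        if entry is not None and entry[0] > self.clock():
            return entry[1]          # fresh hit
        value = loader(key)          # miss or expired entry: reload
        self.put(key, value)
        return value

    def warm_up(self, keys: Iterable[str], loader: Callable[[str], Any]) -> None:
        # Pre-fetch keys known to be needed right after startup.
        for key in keys:
            self.put(key, loader(key))


calls: List[str] = []


def load_setting(key: str) -> str:
    calls.append(key)                # stand-in for a slow config or database read
    return key.upper()


now = [0.0]
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])  # fake clock for the demo
cache.warm_up(["region", "currency"], load_setting)
cache.get("region", load_setting)    # served from the warm cache, no new load
now[0] = 61.0                        # simulate the TTL elapsing
cache.get("region", load_setting)    # expired, so it is reloaded
print(calls)                         # ['region', 'currency', 'region']
```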

Potential Bottlenecks During Startup and How to Avoid Them

Several factors can create bottlenecks during startup, slowing down the process. Identifying and addressing these bottlenecks is crucial for achieving fast startup times.

- I/O Operations: Excessive I/O operations, such as reading from disk, can significantly slow down startup.
  - Solution: Minimize disk reads by caching data, pre-fetching data, and optimizing data storage formats.

- Network Latency: Network-dependent operations, such as connecting to external services, can introduce delays.
  - Solution: Minimize network requests, use connection pooling, and implement timeouts and retries.

- Database Connections: Establishing database connections can be time-consuming.
  - Solution: Use connection pooling, optimize database queries, and cache frequently accessed data.

- Unnecessary Dependencies: Excessive dependencies on external libraries or services can slow down startup.
  - Solution: Minimize dependencies, load dependencies lazily, and use dependency injection to manage dependencies efficiently.

- Slow Component Initialization: Slow initialization of individual components can contribute to overall startup time.
  - Solution: Optimize component initialization code, use efficient algorithms, and parallelize initialization tasks where possible.

- Lack of Parallelization: Failing to parallelize startup tasks can lead to a sequential startup process.
  - Solution: Identify independent tasks and execute them concurrently using multithreading or multiprocessing.
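For network-dependent startup steps, "timeouts and retries" can be sketched as a small helper that retries transient errors with capped exponential backoff and random jitter. `retry_with_backoff`, `connect`, and the default limits are hypothetical; full jitter is one common choice among several backoff strategies.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    op: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Call op(), retrying transient failures with capped exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return op()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error so startup fails loudly
            delay = min(max_delay, base_delay * 2 ** attempt)
            time.sleep(random.uniform(0, delay))  # jitter spreads out retry bursts
    raise AssertionError("not reached")


failures = iter([ConnectionError("refused"), ConnectionError("refused")])


def connect() -> str:
    # Hypothetical dependency that fails twice, then succeeds.
    err = next(failures, None)
    if err is not None:
        raise err
    return "connected"


result = retry_with_backoff(connect, base_delay=0.01)
print(result)  # connected
```

Retries should be paired with a per-call timeout (for example, a socket or client timeout) so that a single hung connection attempt cannot stall startup indefinitely.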

Understanding Graceful Shutdown

A graceful shutdown is a critical process for maintaining system reliability and data integrity. It involves a controlled sequence of operations designed to bring a system down in a predictable and safe manner. This contrasts sharply with abrupt shutdowns, which can lead to data loss, corruption, and prolonged downtime. This section will delve into the core aspects of graceful shutdowns, outlining their goals, consequences of failure, and essential implementation requirements.

Goals and Requirements of a Graceful Shutdown

The primary objective of a graceful shutdown is to prevent data loss and ensure system stability during the transition to an inactive state. Achieving this requires careful planning and execution. The goals of a graceful shutdown process are multifaceted and interconnected:

- Data Integrity: To ensure that all in-flight transactions are completed and that data is written to persistent storage, preventing data loss or corruption. This includes ensuring that all buffered data is flushed to disk and that open files are properly closed.

- System Consistency: To maintain the consistency of the system’s state. This involves releasing resources, closing connections, and ensuring that the system is in a known and stable state before shutdown.

- Preventing Corruption: To prevent corruption of the file system or other critical system components. This involves unmounting file systems and shutting down services in a specific order to avoid conflicts.

- Minimizing Downtime: To expedite the shutdown process as much as possible without compromising data integrity or system stability.

- Facilitating Restart: To ensure that the system can be restarted smoothly and efficiently after the shutdown. This includes saving the system’s state and configuration to allow for a quick recovery.

An abrupt shutdown, such as a power failure or a forced system halt, can have severe consequences:

- Data Loss: Unsaved data in volatile memory (RAM) is lost, and partially written data on persistent storage can become corrupted.

- Data Corruption: File systems and databases can be corrupted, leading to data inconsistencies and potential data loss.

- System Instability: The system may become unstable and unable to restart properly, requiring manual intervention to recover.

- Increased Downtime: The time required to recover from an abrupt shutdown can be significantly longer than a graceful shutdown, resulting in service interruptions and lost productivity.

- Hardware Damage: In extreme cases, abrupt shutdowns can potentially damage hardware components, especially if the power supply is not properly protected.

Implementing a graceful shutdown requires adherence to several essential requirements:

- Shutdown Signals: The system must be able to receive and respond to shutdown signals from various sources, such as the operating system, a user, or a monitoring system.

- Service Ordering: Services and applications must be shut down in an order that respects their dependencies. For example, applications should be shut down before the database server they use, so they can complete or roll back their work while the database is still available.

- Resource Management: Resources such as files, network connections, and memory must be properly released during the shutdown process.

- Transaction Completion: Any in-flight transactions must be completed or rolled back to ensure data consistency.

- Data Flushing: Data in buffers and caches must be flushed to persistent storage.

- Configuration Saving: The system’s state and configuration must be saved to allow for a quick recovery.

- Error Handling: Robust error handling mechanisms are needed to handle unexpected issues during the shutdown process.
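The "Shutdown Signals" requirement can be sketched for a Python process on a POSIX-style system. The handler only records the request in a `threading.Event`; the main loop notices it and moves on to the remaining shutdown steps. `handle_shutdown` and `main_loop` are hypothetical names, and `signal.raise_signal` (Python 3.8+) is used only to simulate a process manager sending SIGTERM.

```python
import signal
import threading

shutdown_requested = threading.Event()


def handle_shutdown(signum, frame) -> None:
    # Keep the handler small: record the request and let the main loop do the work.
    print(f"received {signal.Signals(signum).name}, starting graceful shutdown")
    shutdown_requested.set()


# SIGTERM is what most process managers and container runtimes send first;
# SIGINT is sent by Ctrl+C in a terminal.
signal.signal(signal.SIGTERM, handle_shutdown)
signal.signal(signal.SIGINT, handle_shutdown)


def main_loop() -> None:
    while not shutdown_requested.is_set():
        # ... serve one unit of work here ...
        shutdown_requested.wait(timeout=0.1)
    print("loop exited; run the remaining shutdown steps next")


signal.raise_signal(signal.SIGTERM)  # simulate the process manager
main_loop()
```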

The stages of a typical graceful shutdown can be summarized as follows:

| Stage | Action | Description |
|---|---|---|
| Initiation | Receive shutdown signal. | The shutdown process begins when the system receives a shutdown signal from the operating system, a user, or an external system. |
| Preparation | Prepare for shutdown. | This stage involves preparing the system for shutdown, such as disabling new connections, completing in-flight transactions, and flushing caches to disk. |
| Shutdown | Shutdown services and release resources. | Services are shut down in a predefined order to ensure dependencies are met. Resources, such as files and network connections, are released. Data is saved and configuration is stored. |

Implementing Graceful Shutdown Procedures

Implementing a robust graceful shutdown process is critical for maintaining data integrity and ensuring system stability. A well-designed shutdown sequence minimizes data loss, prevents corruption, and allows for a controlled transition to a stopped state. This section provides a comprehensive guide to implementing such procedures.

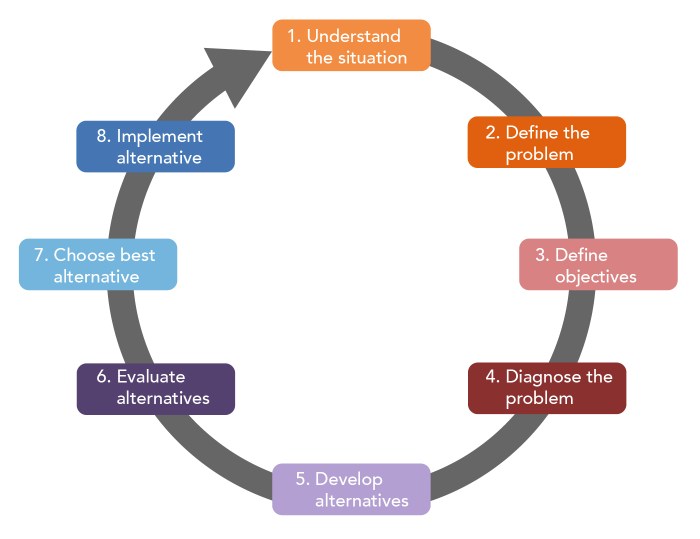

Step-by-Step Guide for Implementing a Graceful Shutdown

A systematic approach is crucial for ensuring a clean and predictable shutdown. The following steps outline a typical graceful shutdown procedure:

- Initiate Shutdown Signal: The process begins with the system receiving a shutdown signal. This can be triggered manually by an administrator, automatically by the operating system, or through a monitoring service.

- Prevent New Connections: Once the shutdown signal is received, the system should immediately stop accepting new connections or requests. This prevents new work from being started while the shutdown process is underway.

- Allow Existing Processes to Complete: Existing processes should be allowed to finish their current tasks. This often involves setting a timeout to prevent indefinite blocking. Implement mechanisms to identify and handle long-running operations.

- Flush Data to Persistent Storage: Any data held in memory that needs to be saved should be flushed to persistent storage (e.g., disk, database). This is critical for data integrity.

- Close Connections and Release Resources: All open connections (e.g., database connections, network sockets) should be closed, and resources (e.g., file handles, memory) should be released.

- Stop Dependent Services: If the system manages subordinate services of its own, such as background workers or schedulers, stop them in a controlled manner, typically in reverse order of dependency.

- Finalize and Exit: After all operations are completed, the system can finalize any remaining tasks and exit. This might include writing final logs or performing a last-minute cleanup.
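The procedure above can be sketched for a simple in-process worker. `Worker`, its in-memory `buffer`, and the `persisted` list (standing in for disk or a database) are hypothetical; comments name the step each line corresponds to, and "Stop Dependent Services" is omitted because this worker manages no subordinate services.

```python
import queue
import threading
import time
from typing import List


class Worker:
    """Hypothetical in-process worker that follows the shutdown steps above."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.accepting = True
        self.jobs: "queue.Queue" = queue.Queue()
        self.buffer: List[str] = []      # results not yet persisted
        self.persisted: List[str] = []   # stand-in for disk or a database
        self.thread = threading.Thread(target=self._run, daemon=True)
        self.thread.start()

    def submit(self, job: str) -> bool:
        with self._lock:
            if not self.accepting:       # Prevent New Connections
                return False
            self.jobs.put(job)
            return True

    def _run(self) -> None:
        while True:
            job = self.jobs.get()
            if job is None:              # sentinel: every job queued before it is done
                break
            time.sleep(0.01)             # stand-in for real processing
            self.buffer.append(job.upper())

    def shutdown(self, timeout: float = 5.0) -> None:
        with self._lock:
            self.accepting = False       # Prevent New Connections
            self.jobs.put(None)          # sentinel lands after all accepted jobs
        self.thread.join(timeout)        # Allow Existing Processes to Complete
        if self.thread.is_alive():
            print("shutdown timeout: abandoning unfinished jobs")
        # Flush Data to Persistent Storage (swap first so the flushed batch is a snapshot).
        pending, self.buffer = self.buffer, []
        self.persisted.extend(pending)
        # Close Connections and Release Resources would go here (files, sockets, pools).
        print("shutdown complete")       # Finalize and Exit: final log line


w = Worker()
for job in ["a", "b", "c"]:
    w.submit(job)
w.shutdown(timeout=2.0)
print(w.submit("d"))   # False: new work is refused once shutdown has begun
print(w.persisted)     # ['A', 'B', 'C']
```

The lock ensures that no job can be queued after the sentinel, so every accepted job is either processed or, if the timeout expires, explicitly reported as abandoned.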

Examples of Handling Active Transactions During Shutdown

Managing active transactions during shutdown is a key aspect of data integrity. Different strategies are used depending on the nature of the transactions and the system’s architecture.

- Transaction Rollback: For transactional databases, incomplete transactions should be rolled back to ensure data consistency. This means undoing any changes made by the transaction, returning the database to its previous state. For example, if a user is in the middle of an order, the order should be cancelled, and the inventory quantities returned to their original values.

- Transaction Commit (if possible): If a transaction is close to completion and can be safely committed within a reasonable timeframe, this can be done to avoid data loss. However, this must be carefully managed to prevent the shutdown process from being blocked.

- Queuing and Retrying: Some systems use message queues to handle transactions. During shutdown, the system can stop pulling new messages, finish the messages it is already processing, and return any unfinished ones to the queue so they are retried later, possibly by another instance. This ensures that the work is completed even if the system experiences an interruption. For instance, an e-commerce platform that processes orders from a queue can hand back in-progress orders during shutdown instead of dropping them.

- Timeout and Abandonment: In cases where transactions cannot be completed within a reasonable time, a timeout can be set. After the timeout expires, the transaction is abandoned, and any partially completed operations are rolled back. This prevents the shutdown process from hanging indefinitely.
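The rollback strategy can be sketched with Python’s built-in `sqlite3` module. The `inventory` table, the order scenario, and the `shutdown_db` helper are hypothetical; other database drivers typically follow the same commit/rollback pattern through their own APIs.

```python
import os
import sqlite3
import tempfile

path = os.path.join(tempfile.mkdtemp(), "shop.db")

conn = sqlite3.connect(path)
conn.execute("CREATE TABLE inventory (sku TEXT PRIMARY KEY, qty INTEGER)")
conn.execute("INSERT INTO inventory VALUES ('widget', 10)")
conn.commit()

# Shutdown arrives mid-order: stock has been reserved, but the order is not committed.
conn.execute("UPDATE inventory SET qty = qty - 3 WHERE sku = 'widget'")


def shutdown_db(connection: sqlite3.Connection) -> None:
    # Roll back anything uncommitted so no half-finished order is persisted.
    # (sqlite3's close() alone also discards uncommitted changes, but an explicit
    # rollback makes the intent clear.)
    if connection.in_transaction:
        connection.rollback()
    connection.close()


shutdown_db(conn)

# After a restart, the inventory shows the last committed state.
check = sqlite3.connect(path)
qty = check.execute("SELECT qty FROM inventory WHERE sku = 'widget'").fetchone()[0]
check.close()
print(qty)  # 10
```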

Methods for Ensuring Data Integrity During Shutdown

Protecting data integrity is paramount during a graceful shutdown. Several techniques are employed to minimize the risk of data loss or corruption.

- Atomic Operations: Utilize atomic operations for critical data updates. Atomic operations guarantee that a set of operations either all succeed or all fail, preventing partial updates. For example, when transferring money between two bank accounts, use an atomic transaction so that the debit from one account and the credit to the other are completed together.

- Data Replication: Implement data replication strategies, such as database mirroring or clustering. If one system fails, the replicated data ensures that data is available in another system.

- Regular Backups: Perform regular backups of the system’s data. Backups provide a means to restore the system to a previous state in case of data corruption or loss. This is a fundamental practice.

- Write-Ahead Logging (WAL): Employ write-ahead logging, where all changes are written to a log file before being applied to the main data store. This allows the system to replay the log and recover data in case of a crash. Many databases use this technique, such as PostgreSQL (its WAL) and MySQL’s InnoDB storage engine (its redo log).

- Checksums and Data Validation: Use checksums and data validation techniques to verify the integrity of data during shutdown. Checksums can detect corruption, and validation checks ensure that data meets the defined constraints.
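Atomicity also matters for plain files, such as saved state or configuration written during shutdown. A common technique, sketched here assuming a POSIX-like file system, is to write to a temporary file, `fsync` it, and then rename it over the target. `atomic_write` is a hypothetical helper name; `os.replace` and `os.fsync` are standard library calls.

```python
import os
import tempfile


def atomic_write(path: str, data: bytes) -> None:
    """Replace `path` so readers see the old or the new contents, never a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-")
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
            tmp.flush()
            os.fsync(tmp.fileno())   # force the bytes to stable storage
        # Atomic on POSIX when source and target are on the same file system.
        os.replace(tmp_path, path)
    except BaseException:
        os.unlink(tmp_path)          # remove the partial temporary file
        raise
    # Making the rename itself durable requires fsync on the directory (POSIX only).
    if hasattr(os, "O_DIRECTORY"):
        dir_fd = os.open(directory, os.O_RDONLY | os.O_DIRECTORY)
        try:
            os.fsync(dir_fd)
        finally:
            os.close(dir_fd)


target = os.path.join(tempfile.mkdtemp(), "state.json")
atomic_write(target, b'{"last_order_id": 41}')
atomic_write(target, b'{"last_order_id": 42}')
with open(target, "rb") as f:
    print(f.read())  # b'{"last_order_id": 42}'
```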

Common Shutdown Errors and Prevention:

- Error: Data corruption due to incomplete transactions.
  - Prevention: Use the transaction management features of the database, and roll back any unfinished transactions.

- Error: Resource leaks, such as unclosed file handles or database connections.
  - Prevention: Ensure all resources are explicitly released during shutdown, utilizing `finally` blocks or equivalent mechanisms.

- Error: Deadlocks caused by conflicting resource access.
  - Prevention: Implement a strict ordering of resource acquisition and release, or use timeout mechanisms to resolve deadlocks.

- Error: Data loss due to un-flushed buffers.
  - Prevention: Ensure that all in-memory buffers are flushed to persistent storage before shutting down.
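In Python, the `finally` mechanism is often expressed through context managers. `contextlib.ExitStack` releases every registered resource in reverse order of acquisition, even when an exception escapes, which also gives the strict release ordering mentioned above. `Resource` is a hypothetical stand-in for a file, socket, or database connection.

```python
import contextlib
from typing import List

released: List[str] = []


class Resource:
    """Hypothetical resource that must be closed on shutdown."""

    def __init__(self, name: str) -> None:
        self.name = name

    def close(self) -> None:
        released.append(self.name)


def run_service() -> None:
    with contextlib.ExitStack() as stack:
        # Acquire in dependency order; ExitStack closes them in reverse (LIFO) order.
        stack.enter_context(contextlib.closing(Resource("db")))
        stack.enter_context(contextlib.closing(Resource("cache")))
        stack.enter_context(contextlib.closing(Resource("http")))
        raise RuntimeError("simulated crash during operation")


try:
    run_service()
except RuntimeError:
    pass
print(released)  # ['http', 'cache', 'db']
```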

Optimizing Factor IX for Fault Tolerance

Fault tolerance is crucial for system reliability, especially for the two concerns of Factor IX: fast startup and graceful shutdown. A fault-tolerant system continues to operate correctly despite the failure of some of its components. This section explores strategies for designing systems that can withstand failures related to startup and shutdown processes, ensuring high availability and minimizing downtime.

Minimizing Single Points of Failure in System Design

To design a robust system, identifying and eliminating single points of failure (SPOFs) is paramount. An SPOF is a component whose failure can bring down the entire system. Careful design and implementation are key to mitigating this risk.

- Redundancy: Implement redundant components for critical functionalities. If one component fails, a backup can seamlessly take over, preventing service interruption. For example, in a distributed database system, having multiple database servers ensures that if one server goes down, others can continue to serve requests.

- Load Balancing: Distribute workloads across multiple servers or resources. This prevents any single resource from being overwhelmed and also provides a level of fault tolerance. If one server becomes unavailable, the load balancer automatically redirects traffic to the remaining servers.

- Data Replication: Replicate data across multiple storage locations. This protects against data loss in case of a storage failure. Data replication is a cornerstone of fault tolerance in database systems and distributed file systems.

- Modular Design: Break down the system into independent modules. This limits the impact of a failure to a specific module, preventing it from cascading and affecting the entire system. Each module should be designed to function independently and communicate with other modules through well-defined interfaces.

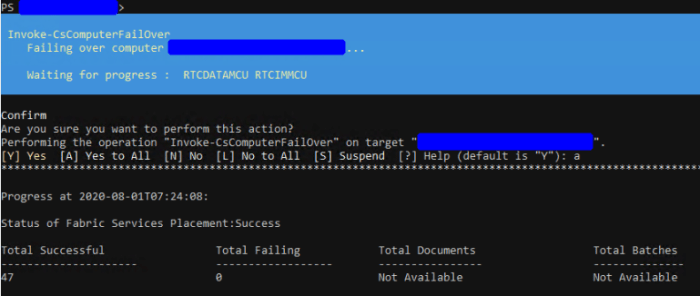

- Failover Mechanisms: Implement automatic failover mechanisms to detect failures and switch to backup components. This minimizes downtime and ensures that the system continues to operate even when a component fails. For example, a system can use a heartbeat mechanism to monitor the health of a primary server and automatically switch to a secondary server if the primary server becomes unresponsive.
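The heartbeat-based failover described above can be sketched as a selector that routes to the highest-priority server whose last heartbeat is recent enough. The server names, the 3-second timeout, and the `FailoverSelector` class are hypothetical; the injectable clock only lets the example simulate time passing.

```python
import time
from typing import Callable, Dict, List, Optional


class FailoverSelector:
    """Routes to the first healthy server in priority order (illustrative only)."""

    def __init__(self, servers: List[str], timeout: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.servers = servers       # priority order: primary first
        self.timeout = timeout       # seconds without a heartbeat = considered down
        self.clock = clock
        self.last_seen: Dict[str, float] = {s: clock() for s in servers}

    def heartbeat(self, server: str) -> None:
        # Called whenever a server reports in (e.g. a periodic ping succeeded).
        self.last_seen[server] = self.clock()

    def active(self) -> Optional[str]:
        now = self.clock()
        for server in self.servers:
            if now - self.last_seen[server] <= self.timeout:
                return server
        return None                  # every server missed its heartbeat


now = [0.0]
sel = FailoverSelector(["db-primary", "db-secondary"], timeout=3.0, clock=lambda: now[0])
print(sel.active())               # db-primary
now[0] = 5.0
sel.heartbeat("db-secondary")     # only the secondary is still reporting
print(sel.active())               # db-secondary
```

A production failover system must also prevent two nodes from both acting as primary (split-brain), which is commonly handled with quorum-based election or fencing rather than a single selector like this.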

Methods for Detecting and Handling Errors Related to Factor IX

Effective error detection and handling are essential for ensuring fault tolerance in startup and shutdown processes. Implementing robust monitoring and error-handling mechanisms allows the system to identify and respond to issues quickly.

- Health Checks: Implement health checks to monitor the status of critical components during startup and shutdown. These checks can verify that components are initialized correctly and that dependencies are available. For instance, a web server might check if it can connect to its database during startup.

- Logging and Monitoring: Comprehensive logging and monitoring are vital for detecting errors. Logs should capture detailed information about startup and shutdown events, including errors, warnings, and informational messages. Monitoring systems should track key metrics and alert operators to potential problems.

- Error Handling and Retries: Implement error-handling strategies, such as retrying failed operations. For example, if a service fails to start, the system could retry the startup process a few times before escalating the issue.

- Circuit Breakers: Use circuit breakers to prevent cascading failures. A circuit breaker monitors the success rate of calls to a dependent service. If the failure rate exceeds a threshold, the circuit breaker “trips” and temporarily blocks calls to that service, so callers fail fast instead of wasting resources on a failing dependency, and the struggling service gets time to recover.

- Idempotent Operations: Design operations to be idempotent, meaning that they can be executed multiple times without unintended side effects. This is particularly important during startup and shutdown, where operations might be retried.
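A circuit breaker can be sketched in a few lines. For simplicity this version trips after a number of consecutive failures rather than a failure rate, and it is not thread-safe; `CircuitBreaker`, `CircuitOpenError`, and the thresholds are hypothetical.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised instead of calling the dependency while the breaker is open."""


class CircuitBreaker:
    """Minimal consecutive-failure circuit breaker (illustrative sketch)."""

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None        # None means the circuit is closed

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("dependency marked unavailable")  # fail fast
            # Half-open: allow one trial call; a single failure re-opens the circuit.
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()   # trip the breaker
            raise
        self.failures = 0            # a success closes the circuit
        return result


now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_after=10.0, clock=lambda: now[0])
calls = [0]


def flaky() -> str:
    calls[0] += 1
    raise ConnectionError("downstream unavailable")


for _ in range(4):
    try:
        breaker.call(flaky)
    except (ConnectionError, CircuitOpenError):
        pass
print(calls[0])                      # 2: after two failures, flaky() is no longer called
now[0] = 11.0                        # the reset window has passed
print(breaker.call(lambda: "ok"))    # ok: the trial call succeeds and the circuit closes
```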

Importance of Redundancy in Achieving High Availability

Redundancy is the cornerstone of high availability, ensuring that systems remain operational even when components fail. Implementing redundancy across critical components protects against outages and maintains service levels.

- Hardware Redundancy: Implement redundant hardware, such as servers, network devices, and storage systems. For example, using a redundant power supply unit (PSU) in a server ensures that the server can continue to operate even if one PSU fails.

- Software Redundancy: Use redundant software components, such as multiple instances of a service running on different servers. If one instance fails, the others can continue to serve requests.

- Network Redundancy: Implement redundant network connections and devices. This ensures that the system can continue to communicate even if a network link or device fails.

- Data Redundancy: Replicate data across multiple storage locations to protect against data loss. This ensures that the data is available even if one storage location fails.

- Geographical Redundancy: Deploy systems across multiple geographic locations. This protects against outages caused by natural disasters or other localized events. For example, a company might have its primary data center in one region and a backup data center in another region.

Impact of Fault Tolerance Mechanisms: An Example Scenario

Consider a scenario where an e-commerce platform relies on a distributed database. During scheduled maintenance, the database servers are taken down one at a time using a graceful shutdown procedure. The cluster is configured with redundancy: three servers host the data, and the data is replicated across all of them. When a server’s turn comes, the system first stops routing new transactions to it.

Then, it waits for that server’s active transactions to complete and ensures that its data is synchronized with the other replicas. Finally, it shuts the server down. If one of the servers encounters an error during this sequence, perhaps a corrupted file or a network issue preventing synchronization, the fault-tolerance mechanisms take over.

The system detects the failure through its monitoring and health checks. If the failing server was acting as the primary, an automatic failover mechanism promotes one of the remaining healthy replicas to primary, keeping the data continuously available. The graceful shutdown of the faulty server is then retried, or the server is isolated for troubleshooting, without affecting the platform's ability to process customer orders.

Customers remain unaware of the underlying issue. The platform's administrators receive alerts about the failed shutdown, allowing them to investigate the problem and to restore full redundancy once the faulty server is repaired and brought back online. The scenario illustrates how redundancy and failover, combined with graceful shutdown, keep the platform operating with minimal disruption, preventing potential losses in sales and customer trust.
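The scenario can be simulated in a few lines. This is a minimal, single-threaded Python sketch under stated assumptions: `DbServer`, `promote_new_primary`, and `rolling_maintenance` are hypothetical, "waiting for transactions and syncing replicas" is reduced to resetting a counter, and the fault is injected through a `fail_on_shutdown` flag. Retrying the failed shutdown is omitted; the faulty server is simply isolated and an alert is recorded.

```python
from dataclasses import dataclass


@dataclass
class DbServer:
    """In-memory stand-in for one replicated database server (hypothetical)."""
    name: str
    is_primary: bool = False
    fail_on_shutdown: bool = False  # injected fault for the example
    accepting: bool = True
    active_txns: int = 0
    state: str = "running"

    def graceful_shutdown(self) -> None:
        self.accepting = False          # 1. stop accepting new transactions
        self.active_txns = 0            # 2. stand-in for "wait for active txns and sync replicas"
        if self.fail_on_shutdown:       # 3. shut down, possibly hitting a fault
            raise RuntimeError(f"{self.name}: replica sync failed during shutdown")
        self.state = "stopped"


def promote_new_primary(cluster: list[DbServer], leaving: DbServer) -> DbServer:
    """Failover: make the first healthy, running replica the new primary."""
    for candidate in cluster:
        if candidate is not leaving and candidate.state == "running":
            leaving.is_primary = False
            candidate.is_primary = True
            return candidate
    raise RuntimeError("no healthy replica available for promotion")


def rolling_maintenance(cluster: list[DbServer], alerts: list[str]) -> None:
    """Take servers down one at a time so the cluster stays available."""
    for server in cluster:
        try:
            server.graceful_shutdown()
        except RuntimeError as exc:
            server.state = "isolated"   # keep it out of service for troubleshooting
            alerts.append(str(exc))     # notify administrators
        if server.is_primary:
            promote_new_primary(cluster, server)
        if server.state == "stopped":
            # ... maintenance work would happen here ...
            server.state, server.accepting = "running", True


cluster = [DbServer("db1", is_primary=True, fail_on_shutdown=True), DbServer("db2"), DbServer("db3")]
alerts: list[str] = []
rolling_maintenance(cluster, alerts)
print([(s.name, s.state, s.is_primary) for s in cluster])
# [('db1', 'isolated', False), ('db2', 'running', True), ('db3', 'running', False)]
print(alerts)
# ['db1: replica sync failed during shutdown']
```

Because servers are processed strictly one at a time, at least two replicas remain in service at every step, and exactly one server ends up as primary.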

Monitoring and Logging for Robustness

Robustness in a system relies heavily on proactive monitoring and comprehensive logging. Effective monitoring allows for real-time assessment of system health and performance, enabling rapid identification of issues. Logging provides a detailed audit trail for troubleshooting, facilitating root cause analysis and preventing future occurrences. This section will explore key metrics, effective logging strategies, and the use of monitoring tools to enhance system robustness.

Key Metrics to Monitor for System Health and Performance

Monitoring the right metrics is crucial for maintaining a healthy and robust system. These metrics provide insights into various aspects of the system’s operation, allowing for early detection of anomalies and potential failures.

- CPU Utilization: Tracking CPU usage helps identify performance bottlenecks. High CPU utilization can indicate resource exhaustion or inefficient code.

- Memory Usage: Monitoring memory usage is essential for detecting memory leaks and ensuring that sufficient resources are available for operations. Sudden or steadily growing memory consumption can signal a problem.

- Disk I/O: Disk Input/Output (I/O) performance impacts application responsiveness. Monitoring I/O metrics reveals potential disk saturation, which can degrade performance.

- Network Traffic: Network monitoring helps identify network congestion, latency issues, and potential security threats. Tracking bandwidth usage and error rates is key.

- Application Response Time: Measuring the time it takes for applications to respond to requests is crucial for user experience. Slow response times can indicate performance degradation.

- Error Rates: Monitoring error rates across all components helps identify and address software bugs or configuration problems. An increase in error rates often signals a critical issue.

- Database Performance: For systems that rely on databases, monitoring query performance, connection pools, and database server resources is vital. Slow database operations can significantly impact overall system performance.

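- System Load Average: The load average reports the average number of processes that are running or waiting to run (on Linux, this also counts processes in uninterruptible I/O wait), typically over 1-, 5-, and 15-minute windows. A load average that stays above the number of CPU cores suggests that the system is overloaded.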

- Number of Active Connections: Tracking the number of active connections, especially in networking applications, is essential to ensure resource availability and prevent connection exhaustion.
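Some of these metrics can be read directly from the operating system. As a minimal sketch, this Python snippet reads the load average with `os.getloadavg()` (available on Unix-like systems only) and normalises it by the core count from `os.cpu_count()`. The helper names and the 1.0-per-core threshold are assumptions for illustration. In practice these values would be exported to a monitoring system rather than printed.

```python
import os


def load_per_core(load: float, cores: int) -> float:
    """Normalise a load average by the number of CPU cores."""
    return load / max(cores, 1)


def is_overloaded(load: float, cores: int, threshold: float = 1.0) -> bool:
    """Flag overload when load per core exceeds `threshold` (an assumed cut-off)."""
    return load_per_core(load, cores) > threshold


try:
    one_min, five_min, _fifteen_min = os.getloadavg()
except (AttributeError, OSError):  # missing on Windows; may fail in some sandboxes
    print("load average not available on this platform")
else:
    cores = os.cpu_count() or 1
    print(f"1-min load per core: {load_per_core(one_min, cores):.2f}")
    # Judge overload on the 5-minute average to avoid alerting on brief spikes.
    print("overloaded" if is_overloaded(five_min, cores) else "ok")
```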

Implementing Effective Logging Strategies for Troubleshooting

Effective logging is a cornerstone of robust system design. Well-structured logs provide a detailed history of system events, making troubleshooting and root cause analysis significantly easier.

- Structured Logging: Employ structured logging formats (e.g., JSON) to facilitate automated parsing and analysis. This allows for easier querying and filtering of log data.

- Log Levels: Utilize different log levels (e.g., DEBUG, INFO, WARN, ERROR, FATAL) to categorize log messages based on severity. This enables filtering of logs based on the context of the issue.

- Contextual Information: Include relevant contextual information in each log entry, such as timestamps, user IDs, request IDs, and component names. This helps to correlate events across different parts of the system.

- Centralized Logging: Implement a centralized logging system to collect logs from all system components in a single location. This simplifies log management and analysis.

- Log Rotation: Implement log rotation policies to manage log file size and prevent disk space exhaustion. This ensures that logs are periodically archived or deleted.

- Error Tracking: Integrate error tracking tools to automatically capture and report exceptions, errors, and warnings. This allows for quick identification and resolution of issues.

- Security Considerations: Protect sensitive information in logs, and ensure that logging practices comply with relevant security regulations. This involves avoiding logging sensitive data, such as passwords or personal information.
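Several of these practices (structured output, log levels, contextual fields, and redaction) fit in a single formatter. The following is a minimal sketch using Python's standard `logging` module. The `JsonFormatter` class, the `ctx` convention for passing context through `extra`, and the `SENSITIVE_KEYS` set are assumptions made for this example, not a standard API.

```python
import json
import logging
import sys

SENSITIVE_KEYS = {"password", "token", "ssn"}  # assumed list of fields never to log


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "component": record.name,
            "message": record.getMessage(),
        }
        # Contextual fields passed via `extra={"ctx": {...}}`, with secrets redacted.
        ctx = getattr(record, "ctx", {})
        entry.update({k: ("[REDACTED]" if k in SENSITIVE_KEYS else v) for k, v in ctx.items()})
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
log = logging.getLogger("checkout-service")
log.addHandler(handler)
log.setLevel(logging.INFO)  # DEBUG records are filtered out

log.info("order placed", extra={"ctx": {"request_id": "r-123", "user_id": 42, "password": "hunter2"}})
```

For log rotation, the same formatter can be attached to `logging.handlers.RotatingFileHandler(path, maxBytes=..., backupCount=...)` instead of a stream handler. A centralized logging agent can then ship the JSON lines without any custom parsing.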

Utilizing Monitoring Tools to Proactively Identify Potential Issues

Monitoring tools automate the process of collecting and analyzing system metrics and logs, enabling proactive identification of potential issues. They provide real-time insights into system behavior and trigger alerts when predefined thresholds are exceeded.

- Alerting Systems: Configure alerting systems to notify administrators when critical metrics exceed predefined thresholds. These alerts should provide sufficient context for quick investigation.

- Dashboarding: Create dashboards to visualize key metrics and system health indicators. Dashboards provide a comprehensive overview of system performance and allow for quick identification of anomalies.

- Anomaly Detection: Implement anomaly detection algorithms to automatically identify unusual patterns in system behavior. This helps detect problems that might not be immediately apparent through traditional monitoring.

- Performance Monitoring: Use performance monitoring tools to track application response times, resource utilization, and other performance-related metrics. This helps identify performance bottlenecks and optimize system performance.

- Log Analysis Tools: Utilize log analysis tools to automatically parse, analyze, and correlate log data. These tools can help identify patterns, trends, and potential issues in logs.

- Infrastructure Monitoring: Implement infrastructure monitoring tools to monitor the health and availability of underlying infrastructure components, such as servers, networks, and storage devices.
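Anomaly detection does not have to start with machine learning. As a simple stand-in for the algorithms mentioned above, this Python sketch flags samples whose rolling z-score exceeds a threshold. `ZScoreDetector` is a hypothetical helper; the window size, the five-sample warm-up, and the threshold of 3.0 are assumed values that would need tuning against real traffic.

```python
from collections import deque
from statistics import mean, pstdev


class ZScoreDetector:
    """Flag samples that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 30, threshold: float = 3.0) -> None:
        self.samples: deque = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        """Record `value` and return True if it looks anomalous."""
        anomalous = False
        if len(self.samples) >= 5:  # need a minimal baseline before judging
            mu, sigma = mean(self.samples), pstdev(self.samples)
            if sigma > 0:
                anomalous = abs(value - mu) / sigma > self.threshold
            else:
                anomalous = value != mu  # flat baseline: any change stands out
        self.samples.append(value)
        return anomalous


detector = ZScoreDetector(window=10, threshold=3.0)
latencies_ms = [102, 98, 101, 99, 100, 103, 97, 100, 450, 101]
anomalies = [(i, v) for i, v in enumerate(latencies_ms) if detector.observe(v)]
print(anomalies)  # [(8, 450)]
```

Each flagged sample would feed the alerting system. Note that the outlier itself enters the baseline, which widens it for the following samples; a more robust detector might exclude flagged values or use the median absolute deviation instead.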

Correlating Monitoring Data with Factor IX Events

Correlating monitoring data with Factor IX events is essential for understanding how system behaviors affect robustness. This helps in identifying the root cause of issues and preventing future occurrences.

| Monitoring Metric | Factor IX Event | Correlation | Impact |
|---|---|---|---|
| High CPU Utilization | Unresponsive Application | CPU exhaustion leads to slow processing of requests. | Degraded user experience; potential service outage. |
| Increased Error Rates | Failed Graceful Shutdown | Errors during operation might prevent the system from shutting down cleanly. | Data loss or corruption; potential for extended downtime. |
| Slow Database Query Performance | Delayed Fast Startup | Slow database access can delay the loading of critical data. | Extended startup time; potential for initial service unavailability. |
| High Disk I/O | Slow Shutdown | Disk I/O bottlenecks can slow down the writing of data and the cleanup process. | Prolonged shutdown time; increased risk of data inconsistency. |

Testing and Validation of Startup and Shutdown Processes

Ensuring the robustness of startup and shutdown procedures is paramount for system reliability. Rigorous testing and validation are crucial steps to identify and mitigate potential issues before they impact system availability and data integrity. This section focuses on the critical aspects of testing these processes, including test examples, failure simulation techniques, and various testing methodologies.

Test Examples to Validate Startup and Shutdown Procedures

Thorough testing requires a diverse set of test cases designed to cover various scenarios. These examples illustrate how to validate startup and shutdown behavior under different conditions.

- Successful Startup Test: This test verifies that the system starts up correctly under normal operating conditions. It checks that all services and components initialize as expected, and that the system is ready to accept requests. The test should confirm the successful loading of configurations, the establishment of network connections, and the availability of all required resources. For instance, in a web server environment, this test would ensure that the web server process starts, listens on the correct port, and serves static content.

- Graceful Shutdown Test: This test validates that the system shuts down gracefully. It ensures that all running processes terminate in an orderly fashion, data is saved, and resources are released. For example, in a database system, this test verifies that in-flight transactions are either committed or cleanly rolled back and that the database server shuts down without data corruption. A successful test shows no errors in the logs and leaves the system in a clean shutdown state.

- Startup After Unexpected Shutdown Test: Simulates a scenario where the system crashes or is abruptly shut down. The test then verifies that the system can recover and restart without data loss or corruption. This test checks the integrity of persistent data and the ability to resume operations from the last known good state. For instance, a file system might be tested to ensure that it recovers from a power failure without losing or corrupting data that had already been flushed to disk.

- Startup with Dependency Failures Test: Checks the system's behavior when one or more of its dependencies are unavailable during startup. This test validates that the system either continues in a degraded mode or fails cleanly. For example, if a system depends on a network service, the test would simulate the unavailability of that service during startup and confirm that the system either continues to operate without it (if possible) or fails gracefully with informative error messages.

- Shutdown Under High Load Test: Evaluates the shutdown process under heavy load. This test assesses whether the shutdown process can complete successfully even when the system is handling a large number of requests or transactions. The test checks for proper resource management during shutdown, ensuring that no data is lost or corrupted, even under stress.
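The graceful-shutdown and unexpected-shutdown tests can be expressed as ordinary unit tests. The sketch below uses Python's `unittest` against a hypothetical `BufferedStore` that buffers writes in memory and persists them on shutdown. It writes to a temporary file and then renames it with `os.replace`, so a crash leaves either the old file or the new one, never a half-written file. Skipping `shutdown()` is only a rough stand-in for a crash; killing a real process (for example with SIGKILL) is a more faithful simulation.

```python
import json
import os
import tempfile
import unittest


class BufferedStore:
    """Hypothetical component that buffers writes and persists them on shutdown."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.buffer: dict = {}
        self.data: dict = {}
        if os.path.exists(path):  # startup: recover the last persisted state
            with open(path, encoding="utf-8") as f:
                self.data = json.load(f)

    def put(self, key: str, value: str) -> None:
        self.buffer[key] = value

    def get(self, key: str):
        return self.buffer.get(key, self.data.get(key))

    def shutdown(self) -> None:
        """Graceful shutdown: flush the buffer atomically, then release it."""
        self.data.update(self.buffer)
        tmp = self.path + ".tmp"
        with open(tmp, "w", encoding="utf-8") as f:
            json.dump(self.data, f)
            f.flush()
            os.fsync(f.fileno())  # make sure the bytes reach the disk
        os.replace(tmp, self.path)  # atomic rename: old or new file, never partial
        self.buffer.clear()


class StartupShutdownTest(unittest.TestCase):
    def setUp(self) -> None:
        self.dir = tempfile.TemporaryDirectory()
        self.path = os.path.join(self.dir.name, "store.json")

    def tearDown(self) -> None:
        self.dir.cleanup()

    def test_graceful_shutdown_persists_data(self) -> None:
        store = BufferedStore(self.path)
        store.put("order-1", "paid")
        store.shutdown()
        self.assertEqual(BufferedStore(self.path).get("order-1"), "paid")

    def test_restart_after_abrupt_stop_keeps_last_good_state(self) -> None:
        store = BufferedStore(self.path)
        store.put("order-1", "paid")
        store.shutdown()
        store.put("order-2", "pending")  # never flushed: simulates a crash
        restarted = BufferedStore(self.path)
        self.assertEqual(restarted.get("order-1"), "paid")
        self.assertIsNone(restarted.get("order-2"))
```

Run the tests with `python -m unittest <module_name>`. Note that the second test documents an explicit trade-off: writes that were never flushed are lost, but the persisted state stays consistent.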

Best Practices for Simulating Failure Scenarios During Testing

Simulating failure scenarios is critical for identifying weaknesses in startup and shutdown procedures. The following practices enhance the effectiveness of these simulations.

- Power Cycle Simulation: Simulate a sudden power loss during various stages of startup and shutdown. This can be achieved by using power interruption tools or by manually cutting power to the system. This tests the system’s ability to recover from unexpected shutdowns.

- Dependency Failure Simulation: Simulate the failure of external dependencies such as databases, network services, or other critical components. This can be achieved by stopping or isolating these dependencies during startup or shutdown to test the system’s reaction to unavailability.

- Resource Exhaustion Simulation: Simulate resource exhaustion, such as memory pressure or a full disk, to test the system's ability to handle these conditions gracefully. This can be achieved by deliberately consuming memory or filling up disk space during startup or shutdown.

- Network Partitioning Simulation: Simulate network partitions to test the system’s resilience to network outages. This can be achieved by using network simulation tools or by manually disconnecting network connections.

- Data Corruption Simulation: Introduce data corruption to test the system’s ability to detect and recover from data integrity issues. This can be achieved by modifying data on disk or in memory to simulate data corruption.
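Dependency failures are easy to simulate in-process by swapping a real client for a fake one. This Python sketch uses a hypothetical `FlakyDependency` that refuses its first N connection attempts, together with a hypothetical `start_with_retry` helper that retries with exponential backoff before failing with an informative error. The attempt count and delays are assumed values. Injecting `sleep` lets a test run instantly and inspect the backoff schedule.

```python
import time
from typing import Callable


class FlakyDependency:
    """Hypothetical fake dependency that fails its first `failures` connection attempts."""

    def __init__(self, failures: int) -> None:
        self.remaining_failures = failures
        self.attempts = 0

    def connect(self) -> str:
        self.attempts += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("dependency unavailable")
        return "connected"


def start_with_retry(connect: Callable[[], str], attempts: int = 4,
                     base_delay: float = 0.01,
                     sleep: Callable[[float], None] = time.sleep) -> str:
    """Retry a startup dependency with exponential backoff, then fail with a clear error."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return connect()
        except ConnectionError as exc:
            if attempt == attempts - 1:
                raise RuntimeError(
                    f"startup aborted: dependency still unavailable after {attempts} attempts"
                ) from exc
            sleep(base_delay * 2 ** attempt)  # 0.01 s, 0.02 s, 0.04 s, ...
    raise AssertionError("unreachable")


# Simulate a dependency that is down for the first two attempts.
delays: list = []
dep = FlakyDependency(failures=2)
print(start_with_retry(dep.connect, sleep=delays.append))  # connected
print(dep.attempts, delays)  # 3 [0.01, 0.02]
```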

Importance of Thorough Testing in Ensuring System Robustness

Thorough testing is indispensable for building robust systems. The following points highlight the critical role of comprehensive testing.

- Early Defect Detection: Testing helps identify and fix defects early in the development lifecycle, reducing the cost and effort required to address them later.

- Improved System Stability: Testing ensures that the system can handle unexpected events and failures gracefully, improving its overall stability.

- Enhanced Data Integrity: Testing verifies that data is protected during startup and shutdown, minimizing the risk of data loss or corruption.

- Increased Uptime: By identifying and resolving potential issues, testing helps to increase system uptime and availability.

- Compliance with Requirements: Testing ensures that the system meets all the required performance, security, and reliability standards.

Testing Methods for Startup and Shutdown Procedures

Various testing methods can be employed to validate startup and shutdown procedures.

- Unit Testing: Testing individual components or modules in isolation to ensure they function correctly during startup and shutdown. This involves writing tests for each function or method that handles startup or shutdown tasks.

- Integration Testing: Testing the interaction between different components or modules to ensure they work together seamlessly during startup and shutdown. This tests the dependencies and interactions between various parts of the system.

- System Testing: Testing the entire system to ensure it meets the required specifications for startup and shutdown. This involves testing the system as a whole, including all its components and dependencies.

- Load Testing: Testing the system under various load conditions to ensure that startup and shutdown procedures can handle high volumes of traffic or transactions.

- Performance Testing: Measuring the time it takes for the system to start up and shut down under different conditions to optimize performance.

- Fault Injection Testing: Deliberately introducing faults or failures into the system to test its ability to handle them gracefully.

- Chaos Engineering: Experimenting on a system in production to build confidence in the system’s capability to withstand turbulent conditions. This helps to proactively identify weaknesses in the system.

- Regression Testing: Re-running tests after code changes to ensure that existing functionality is not broken and that the changes have not introduced new issues in startup or shutdown.
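Performance testing of startup can be as simple as timing the startup routine several times and failing the build when it exceeds a budget. This is a minimal Python sketch. `fake_startup` is a hypothetical stand-in for real initialization, and the 0.5-second budget is an assumed value; a real budget would come from service-level objectives or orchestrator timeouts.

```python
import statistics
import time
from typing import Callable


def measure_startup(start: Callable[[], None], runs: int = 5) -> dict:
    """Time `start()` several times and summarise the durations in seconds."""
    durations = []
    for _ in range(runs):
        t0 = time.perf_counter()
        start()
        durations.append(time.perf_counter() - t0)
    return {"median": statistics.median(durations), "max": max(durations)}


def fake_startup() -> None:
    """Hypothetical stand-in for loading config and warming caches."""
    time.sleep(0.02)


STARTUP_BUDGET_S = 0.5  # assumed budget
stats = measure_startup(fake_startup)
print(f"median {stats['median'] * 1000:.1f} ms, max {stats['max'] * 1000:.1f} ms")
assert stats["max"] < STARTUP_BUDGET_S, "startup exceeded its time budget"
```

Reporting the median alongside the maximum separates typical startup time from occasional outliers. Running the same check after every change turns it into a regression test as well.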

Configuration Management and Factor IX

Configuration management is crucial for maintaining system stability, especially in the context of fast startup and graceful shutdown (Factor IX). Effective configuration practices ensure that systems consistently operate as intended, minimizing the risk of errors during critical operations. A well-defined configuration strategy is essential for a robust and reliable system.

The Role of Configuration Management in System Stability

Configuration management plays a pivotal role in system stability by controlling the settings and parameters that define a system’s behavior. It ensures consistency across environments, from development to production.

- Configuration management helps to maintain system stability by minimizing the risk of human error during deployment and updates.

- It allows for the quick and reliable restoration of a system to a known good state in case of failures.

- Centralized configuration management enables consistent behavior across all instances of an application, regardless of the environment.

- Effective configuration management facilitates easier debugging and troubleshooting by providing a clear understanding of the system’s settings.

Methods for Managing and Deploying Configuration Changes

Several methods can be used to manage and deploy configuration changes, each with its own strengths and weaknesses. The choice of method depends on the complexity of the system, the size of the team, and the specific requirements of the environment.

- Version Control Systems: Using version control systems (e.g., Git) allows tracking of configuration changes over time. This provides the ability to revert to previous configurations and understand the history of changes. Configuration files are treated like code.

- Configuration Management Tools: Tools like Ansible, Chef, Puppet, and Terraform automate the deployment and management of configurations across multiple servers or environments. These tools ensure that configurations are consistently applied.

- Infrastructure as Code (IaC): This approach treats infrastructure and configuration as code, enabling automated, version-controlled provisioning and configuration management with greater control and consistency.

- Centralized Configuration Stores: Systems like Consul, etcd, or ZooKeeper can store configurations centrally, allowing applications to retrieve and update configurations dynamically. This promotes flexibility and ease of management.

- Immutable Infrastructure: Building and deploying immutable infrastructure involves creating new instances with the required configuration each time changes are needed, rather than modifying existing instances. This approach reduces configuration drift.

The Impact of Incorrect Configurations on Startup and Shutdown

Incorrect configurations can have significant negative impacts on both system startup and shutdown processes, potentially leading to instability, data loss, and security vulnerabilities.

- Startup Failures: Incorrect configurations can cause services to fail to start, preventing the system from becoming fully operational. For example, a database connection string pointing to the wrong server will prevent a database-dependent application from starting.

- Shutdown Issues: Incorrect shutdown configurations might result in data loss or corruption. For example, if the shutdown script doesn’t properly flush caches or save data, important information can be lost.

- Performance Degradation: Suboptimal configuration settings can lead to performance issues during both startup and runtime. For instance, an improperly configured memory allocation can cause the system to run slowly.

- Security Vulnerabilities: Incorrectly configured security settings can expose the system to security risks. For example, a misconfigured firewall rule could allow unauthorized access.
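One practical defence against these failures is to validate all configuration once at startup and fail fast, reporting every problem together, before any connection is opened. The following is a minimal Python sketch. The variable names `DATABASE_URL`, `PORT`, and `SHUTDOWN_TIMEOUT_S` and their defaults are assumptions; the 25-second default is chosen to fit inside a typical 30-second termination grace period. Validation can only catch malformed values. A well-formed URL that points at the wrong server still needs a connectivity check during startup.

```python
from dataclasses import dataclass
from typing import Mapping


class ConfigError(ValueError):
    """Raised at startup when configuration is missing or invalid."""


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    port: int
    shutdown_timeout_s: float


def _parse_number(env, name, default, cast, problems):
    """Convert env[name] with `cast`, recording a problem instead of raising."""
    raw = env.get(name, default)
    try:
        return cast(raw)
    except ValueError:
        problems.append(f"{name} must be a number, got {raw!r}")
        return None


def load_config(env: Mapping[str, str]) -> AppConfig:
    """Validate every setting up front and report all problems at once."""
    problems = []
    database_url = env.get("DATABASE_URL", "")
    if "://" not in database_url:
        problems.append("DATABASE_URL is missing or not a URL")
    port = _parse_number(env, "PORT", "8080", int, problems)
    if port is not None and not 1 <= port <= 65535:
        problems.append("PORT must be between 1 and 65535")
    timeout = _parse_number(env, "SHUTDOWN_TIMEOUT_S", "25", float, problems)
    if timeout is not None and timeout <= 0:
        problems.append("SHUTDOWN_TIMEOUT_S must be positive")
    if problems:
        raise ConfigError("; ".join(problems))
    return AppConfig(database_url, port, timeout)


# In a real service: config = load_config(os.environ)
print(load_config({"DATABASE_URL": "postgresql://db.internal/shop", "PORT": "8080"}))
# AppConfig(database_url='postgresql://db.internal/shop', port=8080, shutdown_timeout_s=25.0)
```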

Comparison of Configuration Management Strategies

| Strategy | Description | Pros | Cons |
|---|---|---|---|
| Version Control (Git) | Configuration files are stored and managed in a version control system, enabling tracking and rollback. | Simple to implement; provides a clear history of changes; good for small-scale projects. | Manual deployment required; does not automate configuration application across multiple servers; may not be suitable for complex deployments. |
| Configuration Management Tools (Ansible, Chef, Puppet) | Automates configuration management and deployment across multiple servers using declarative or imperative configurations. | Highly automated; ensures consistency; supports infrastructure as code; scales well. | Requires learning a new tool and its syntax; can be complex to set up initially; potential for vendor lock-in. |
| Infrastructure as Code (IaC) | Infrastructure and configuration are defined as code, enabling automation and version control of the entire system. | Provides full automation; enables consistent deployments; improves reproducibility; enhances collaboration. | Requires a strong understanding of infrastructure as code principles; can be complex to manage; requires a robust testing strategy. |
| Centralized Configuration Stores (Consul, etcd) | Configurations are stored centrally and accessed by applications at runtime, enabling dynamic configuration updates. | Allows for dynamic configuration updates; simplifies management of distributed systems; improves agility. | Requires a dedicated configuration store; can introduce a single point of failure; requires careful security management. |

Real-World Examples and Case Studies

The principles of Factor IX, encompassing fast startup and graceful shutdown, are crucial for building reliable and resilient systems. Examining real-world applications provides invaluable insights into the practical application of these concepts, highlighting both successes and the challenges encountered during implementation. This section explores concrete examples, analyzes difficulties, and distills crucial lessons learned from diverse scenarios.

Case Study: Cloud-Based Application with Fast Startup and Graceful Shutdown

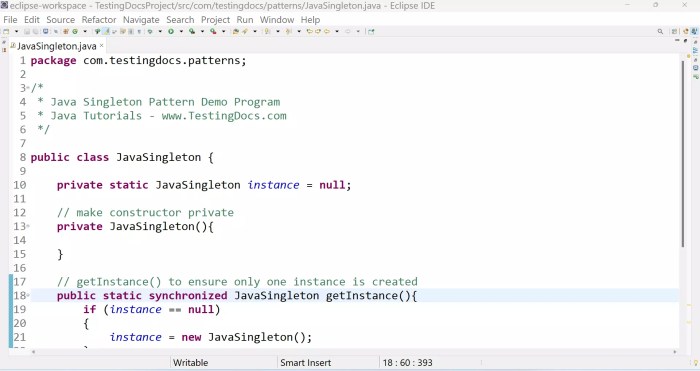

Consider a large-scale, cloud-based e-commerce platform. This platform relies heavily on its ability to rapidly scale resources up and down based on user demand, so fast startup and graceful shutdown are essential in this dynamic environment. The platform's architecture is designed for horizontal scalability, meaning it can add or remove instances of its application servers as needed. The application servers are containerized using Docker and orchestrated using Kubernetes, which facilitates rapid deployment and scaling.

Fast startup is achieved through several key techniques:

- Optimized Container Images: The Docker images are built to be as small as possible, reducing download and instantiation times. This involves using a minimal base image, pre-caching dependencies, and only including necessary components.

- Connection Pooling: Database connections are established and pooled during startup, minimizing the overhead of creating new connections when handling user requests.

- Lazy Loading of Resources: Components that are not immediately required are loaded on-demand, allowing the application to become responsive quickly.

- Health Checks: Kubernetes readiness probes ensure that an instance is added to the load balancer's pool (the Service's endpoints) only after it has fully initialized and reports that it can handle requests.
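The fast-startup techniques above can be combined in one component. This Python sketch opens pooled connections eagerly, defers a heavy component with `functools.cached_property`, and reports readiness only once startup has finished. `App`, `FakeConnection`, the `recommendations` data, and the `/ready` endpoint mentioned in the comments are hypothetical. In Kubernetes, the readiness probe would be configured to call such an endpoint.

```python
import queue
import threading
from functools import cached_property


class FakeConnection:
    """Hypothetical stand-in for a database connection."""


class App:
    def __init__(self, pool_size: int = 4) -> None:
        self.pool_size = pool_size
        self.pool: "queue.Queue[FakeConnection]" = queue.Queue(maxsize=pool_size)
        self.ready = threading.Event()  # what a readiness endpoint would report

    def start(self) -> None:
        # Eagerly open pooled connections so the first requests don't pay for them.
        for _ in range(self.pool_size):
            self.pool.put(FakeConnection())
        # `recommendations` is deliberately not touched here; it loads lazily.
        self.ready.set()

    @cached_property
    def recommendations(self) -> dict:
        # Lazy loading: built on first access, then cached on the instance.
        print("loading recommendation model...")
        return {"sku-1": ["sku-2", "sku-3"]}

    def readiness_probe(self) -> tuple:
        # Status code a hypothetical /ready HTTP handler would return.
        return (200, "ready") if self.ready.is_set() else (503, "starting")


app = App()
print(app.readiness_probe())  # (503, 'starting')
app.start()
print(app.readiness_probe())  # (200, 'ready')
print(app.recommendations["sku-1"])
```

Which components to load eagerly is a trade-off: anything needed by nearly every request (such as the connection pool) belongs in startup, while rarely used components can be deferred so the instance becomes ready sooner.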

Graceful shutdown is implemented to prevent data loss and ensure a smooth user experience:

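- Signal Handling: The application servers handle termination signals (e.g., SIGTERM) gracefully. When Kubernetes stops a pod, it sends SIGTERM and follows up with SIGKILL if the process has not exited within the pod's termination grace period (30 seconds by default), so the shutdown sequence must complete within that window.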

- Connection Draining: When a termination signal is received, the application stops accepting new requests but allows existing requests to complete.

- Data Persistence: Critical data is saved to persistent storage before shutdown. This might involve flushing caches, committing transactions, and ensuring data integrity.

- Resource Release: The application releases all allocated resources (e.g., database connections, file handles) during shutdown.
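The signal-handling and connection-draining steps can be sketched with Python's `signal` and `threading` modules. `GracefulServer` is hypothetical, and request handling is simulated with `time.sleep`. The drain timeout is an assumed value that should stay below the orchestrator's grace period. The SIGTERM handler only starts a thread, so the slow drain does not run inside the signal handler itself.

```python
import signal
import threading
import time


class GracefulServer:
    """Sketch of SIGTERM-driven draining; request handling is simulated."""

    def __init__(self, drain_timeout_s: float = 20.0) -> None:
        self.drain_timeout_s = drain_timeout_s  # keep below the grace period
        self.accepting = True
        self.in_flight = 0
        self.cond = threading.Condition()
        self.resources_released = False

    def handle(self, work_s: float) -> bool:
        with self.cond:
            if not self.accepting:
                return False  # reject: a real server would answer 503
            self.in_flight += 1
        try:
            time.sleep(work_s)  # simulated request processing
            return True
        finally:
            with self.cond:
                self.in_flight -= 1
                self.cond.notify_all()

    def shutdown(self) -> bool:
        """Stop accepting, drain in-flight requests, then release resources."""
        with self.cond:
            self.accepting = False
            drained = self.cond.wait_for(lambda: self.in_flight == 0,
                                         timeout=self.drain_timeout_s)
        # Flush caches and commit transactions here, then close pools and file handles.
        self.resources_released = True
        return drained  # False means the drain timed out

    def install_sigterm_handler(self) -> None:
        # Call once from the main thread at startup.
        signal.signal(signal.SIGTERM,
                      lambda signum, frame: threading.Thread(target=self.shutdown).start())


server = GracefulServer(drain_timeout_s=5)
worker = threading.Thread(target=server.handle, args=(0.2,))
worker.start()
time.sleep(0.05)  # let the request start
print(server.shutdown())  # True: waited for the in-flight request
print(server.handle(0.0))  # False: new requests are rejected
worker.join()
```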

This architecture, with its focus on rapid startup and graceful shutdown, allows the e-commerce platform to handle sudden spikes in traffic during peak shopping seasons while minimizing downtime and ensuring data consistency.

Challenges Faced When Implementing Factor IX Principles

Implementing Factor IX principles is not without its difficulties. Several challenges commonly arise during the development and deployment of robust systems.

- Dependency Management: Managing dependencies, especially in complex systems, can be challenging. Ensuring that all dependencies are available and correctly configured during startup and shutdown requires careful planning and testing.

- Data Consistency: Maintaining data consistency during startup and shutdown is crucial. This often involves implementing transaction management, ensuring that data is written to persistent storage before shutdown, and handling potential failures.

- Complexity: Implementing fast startup and graceful shutdown can add complexity to the system, particularly when dealing with distributed systems or microservices architectures.

- Testing: Thoroughly testing startup and shutdown procedures is essential. This includes testing under various failure scenarios, such as network outages or hardware failures. Automated testing is critical for ensuring that these processes function correctly.

- Resource Cleanup: Ensuring that resources are properly released during shutdown is critical. Failure to do so can lead to resource leaks and system instability.

Lessons Learned from Real-World Scenarios

Experience gained from implementing Factor IX principles in various systems has yielded valuable insights. These lessons are essential for building robust and reliable systems.

- Prioritize Simplicity: Design the system with simplicity in mind. Avoid unnecessary complexity, which can make startup and shutdown procedures more difficult to manage.

- Automate Everything: Automate as much of the startup and shutdown process as possible. This includes building, testing, and deploying the application. Automation reduces the risk of human error and increases efficiency.

- Monitor and Log: Implement comprehensive monitoring and logging to track the startup and shutdown processes. This allows for the early detection of problems and facilitates troubleshooting.

- Test Extensively: Thoroughly test the system under various failure scenarios. Simulate network outages, hardware failures, and other potential issues to ensure that the system can handle them gracefully.

- Embrace Iteration: Factor IX implementation is an iterative process. Continuously refine the startup and shutdown procedures based on feedback and experience.

Illustrative Architecture of a Robust System

A robust system, designed with Factor IX principles in mind, typically features a well-defined architecture that supports fast startup and graceful shutdown. Consider a simplified example of a microservices-based application.

Description of the architectural components and their roles:

This diagram illustrates a simplified microservices architecture. The system is composed of several interconnected microservices, each responsible for a specific function.

The architecture includes the following key components:

- Load Balancer: Distributes incoming traffic across the available instances of each microservice. It uses health checks to ensure that traffic is only routed to healthy instances.

- Microservices: These are the individual application components, such as a user service, product service, and order service. Each microservice is designed to be independent and scalable. They are typically containerized and deployed using a container orchestration platform like Kubernetes.

- Service Registry: A central repository that maintains a list of available microservice instances and their locations. This allows microservices to discover and communicate with each other.

- Database: Provides persistent storage for the application’s data. The database is designed for high availability and data consistency.

- Message Queue: Used for asynchronous communication between microservices. It allows microservices to decouple their operations and handle events in a reliable manner.

Fast Startup Implementation:

Each microservice utilizes techniques for fast startup. The Docker images are optimized to be small, reducing the time to download and instantiate them. Database connections are established during startup and pooled for efficient use. Components are initialized in parallel where possible, and lazy loading is employed to defer the initialization of non-critical components. Health checks are implemented to ensure that each service is fully initialized and ready to serve requests before being added to the load balancer’s pool.
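Parallel initialization only helps when components are independent and their startup work is I/O-bound. The following minimal Python sketch uses `concurrent.futures.ThreadPoolExecutor`. The three initializers are hypothetical stand-ins that use `time.sleep` to simulate network or disk waits. CPU-bound initialization in Python would not speed up this way because of the global interpreter lock.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def init_cache() -> str:
    time.sleep(0.2)  # simulated I/O-bound warm-up
    return "cache"


def init_db_pool() -> str:
    time.sleep(0.2)
    return "db_pool"


def init_message_queue_client() -> str:
    time.sleep(0.2)
    return "mq_client"


def start_service() -> list:
    """Run independent initializers concurrently; fail startup if any of them fails."""
    initializers = [init_cache, init_db_pool, init_message_queue_client]
    with ThreadPoolExecutor(max_workers=len(initializers)) as pool:
        futures = [pool.submit(fn) for fn in initializers]
        # .result() re-raises an initializer's exception, aborting startup.
        return [f.result() for f in futures]


t0 = time.perf_counter()
components = start_service()
elapsed = time.perf_counter() - t0
print(components, f"{elapsed:.2f}s")  # roughly 0.2 s rather than 0.6 s sequentially
```

Components with ordering constraints (for example, a cache that is warmed from the database) would need to be started in dependency order instead.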

Graceful Shutdown Implementation:

When a microservice receives a shutdown signal, it initiates a graceful shutdown sequence. It first deregisters itself from the service registry so that no new requests are routed to it, then stops accepting new requests while allowing existing requests to complete. All in-flight transactions are completed, and data is flushed to persistent storage. Finally, the service releases all allocated resources, such as database connections and file handles.

Benefits of the architecture:

This architecture is designed to be resilient and highly available. It allows the system to scale quickly and handle failures gracefully. The use of microservices, containerization, and orchestration enables fast deployment and updates. The combination of fast startup and graceful shutdown ensures that the system can recover quickly from failures and maintain data consistency. This architectural approach, incorporating Factor IX principles, enhances the overall reliability and responsiveness of the system.

Epilogue

In conclusion, mastering fast startup and graceful shutdown (Factor IX) is paramount for maximizing robustness and achieving high availability and system stability. By understanding the underlying principles, implementing best practices, and continuously monitoring performance, we can create systems that are resilient, efficient, and capable of meeting the demands of any environment. Embracing Factor IX is not just about technical proficiency; it's about building trust and ensuring a seamless user experience.

Popular Questions

What are the primary benefits of a graceful shutdown?

A graceful shutdown ensures data integrity by allowing in-flight transactions to complete, preventing data corruption, and providing a clean state for the next startup.

How does caching impact startup time?

Caching frequently accessed data and components can significantly reduce startup time by minimizing the need to load resources from slower storage or external sources.

What are some common causes of startup bottlenecks?

Bottlenecks can arise from slow disk I/O, excessive network requests, inefficient code, or dependencies on external services that are slow to respond. Addressing these areas can improve startup performance.

What testing methods are crucial for validating startup and shutdown procedures?

Unit tests, integration tests, and end-to-end tests are crucial. Simulating failure scenarios (e.g., power loss during shutdown) is also important to validate the robustness of the processes.

How can I minimize single points of failure in my system?

Employing redundancy (e.g., multiple servers, data replication), using load balancing, and implementing automated failover mechanisms are essential to minimize single points of failure and improve overall system resilience.