The shift towards microservices architectures offers incredible benefits, from increased agility to enhanced scalability. However, with this architectural style comes inherent complexity. Managing this complexity is crucial for realizing the full potential of microservices and avoiding the pitfalls of a distributed system that’s difficult to understand, maintain, and scale. This guide will explore the key aspects of effectively managing microservices complexity.

We’ll delve into essential topics, starting with decomposing monolithic applications and progressing through communication patterns, service discovery, API gateways, and the critical areas of monitoring, tracing, and security. We’ll also cover versioning, deployment strategies, configuration management, and service meshes, providing a holistic view of the challenges and solutions in this dynamic environment. This structured approach will equip you with the knowledge to build and operate resilient, scalable microservices applications.

Decomposition Strategies for Microservices

Microservices architecture, while offering significant benefits in terms of scalability, agility, and resilience, introduces complexity in managing the interactions and dependencies between these independent services. One of the critical steps in adopting a microservices architecture is the decomposition of a monolithic application. Choosing the right decomposition strategy is paramount to ensuring the success of the migration. This involves carefully analyzing the existing application and identifying the most appropriate way to break it down into smaller, manageable services.

Decomposition by Business Capability

Decomposition by business capability focuses on organizing microservices around the core functions of the business. This approach aligns the technical architecture with the business domain, making it easier to understand and maintain the system. Each microservice encapsulates a specific business capability, such as user management, order processing, or product catalog.

- Advantages: This strategy promotes high cohesion within each service, as related functionalities are grouped together. It also facilitates independent development and deployment, allowing teams to work on specific business areas without affecting other parts of the system. Furthermore, it simplifies scaling, as services can be scaled independently based on the demand for specific business capabilities.

- Disadvantages: Identifying and defining business capabilities can be challenging, especially in complex organizations. This approach might lead to tight coupling between services if the capabilities are not well-defined, or if dependencies are not managed carefully. It can also result in duplicated code or data across multiple services if the business capabilities overlap.

Decomposition by Subdomain

Decomposition by subdomain leverages the principles of Domain-Driven Design (DDD). It involves breaking down the application based on the different subdomains that make up the overall business domain. Each subdomain represents a specific area of expertise or responsibility within the business.

- Advantages: This strategy promotes a deep understanding of the business domain and facilitates the creation of highly cohesive services. It helps to reduce complexity by isolating different areas of the business. It also enables the creation of bounded contexts, which are independent areas of responsibility with their own models and data.

- Disadvantages: This approach requires a strong understanding of DDD and the business domain. Identifying and defining subdomains can be complex, and it may require significant upfront analysis. It might also lead to increased communication overhead between services, as different subdomains often need to interact with each other.

Decomposition by Verb (or Task)

Decomposition by verb, also known as decomposition by task, focuses on breaking down the application based on the actions or tasks that it performs. This approach is often used for simpler applications or for specific functionalities within a larger system.

- Advantages: This strategy can be relatively easy to implement, especially for applications with well-defined tasks. It allows for the creation of highly specialized services that perform specific functions. It can also facilitate the use of different technologies for different tasks.

- Disadvantages: This approach can lead to services that are highly coupled and dependent on each other. It might also result in a lack of cohesion within each service, as related functionalities might be spread across multiple services. It can be difficult to scale services independently if they are tightly coupled.

Decomposition by Entity

Decomposition by entity involves breaking down the application based on the core entities or data objects that it manages. This approach is suitable for applications that are heavily data-driven, such as e-commerce platforms or content management systems.

- Advantages: This strategy can lead to a clear separation of concerns and a well-defined data model. It allows for the creation of independent services that manage specific entities. It can also facilitate the use of different data storage technologies for different entities.

- Disadvantages: This approach might lead to tight coupling between services if the entities are highly related. It can also result in a lack of cohesion within each service, as related functionalities might be spread across multiple services. It can be challenging to manage data consistency across multiple services.

Hypothetical Scenario: E-commerce Platform Decomposition

Consider an e-commerce platform currently implemented as a monolithic application. We can illustrate the decomposition process using a table to showcase the different strategies. The platform includes functionalities such as user management, product catalog, order processing, payment processing, and shipping.

| Decomposition Strategy | Microservices Examples | Advantages and Considerations |
|---|---|---|
| By Business Capability | | |
| By Subdomain | | |
| By Entity | | |

The choice of the best decomposition strategy depends on the specific requirements and characteristics of the application and the business. A combination of strategies might also be appropriate. The goal is to create a system that is modular, scalable, and maintainable.

Communication Patterns Between Microservices

Managing communication between microservices is a crucial aspect of building a resilient and scalable system. The choice of communication pattern significantly impacts performance, fault tolerance, and overall system complexity. Selecting the right pattern depends on the specific requirements of each interaction, balancing the need for real-time updates with the ability to handle failures gracefully.

Synchronous and Asynchronous Communication

Synchronous communication involves a direct request-response interaction between services, where the calling service waits for a response before continuing its operation. Asynchronous communication, on the other hand, decouples the services, allowing the calling service to send a message and continue its operation without waiting for an immediate response.

Synchronous communication is best suited for scenarios where immediate responses are essential, such as retrieving data needed for a user interface or completing a critical transaction. For example, an e-commerce application might use synchronous communication to verify a user’s credit card details during checkout, because the checkout process cannot proceed until the payment is authorized.

Asynchronous communication excels in situations where immediate responses are not critical and where decoupling services can improve scalability and fault tolerance. A good example is sending an email notification after a user registers. The registration service can send a message to an email service and continue processing the registration without waiting for the email to be sent. If the email service fails, the registration process is not blocked, and the email can be retried later. Another example is processing a large batch of data: a service can queue the processing tasks, and workers pick them up and process them asynchronously.
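The registration and email example above can be sketched in a few lines. This is a minimal illustration only: an in-process `queue.Queue` stands in for a real message broker, and the names `register_user`, `email_worker`, and `sent_emails` are hypothetical.

```python
import queue
import threading

# Assumption: an in-process queue stands in for a real broker (RabbitMQ, SQS, ...).
email_queue = queue.Queue()
sent_emails = []  # records what the hypothetical email service has "sent"


def email_worker():
    """Consume queued addresses until a None sentinel arrives."""
    while True:
        address = email_queue.get()
        if address is None:
            break
        sent_emails.append(f"welcome email sent to {address}")


def register_user(address):
    """Enqueue the notification and return without waiting for delivery."""
    email_queue.put(address)
    return f"registered {address}"


worker = threading.Thread(target=email_worker, daemon=True)
worker.start()

result = register_user("ada@example.com")  # returns immediately
email_queue.put(None)  # tell the worker to stop once the queue is drained
worker.join()
```

The caller gets its result as soon as the message is enqueued; whether the email succeeds, fails, or is retried is the consumer's concern.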

Message Queues and Event-Driven Architectures

Message queues and event-driven architectures are two common approaches to implementing asynchronous communication between microservices. They offer different levels of flexibility and suit different use cases.

Message queues, such as RabbitMQ, Kafka, or Amazon SQS, act as intermediaries between services. A service publishes a message to the queue, and other services subscribe to the queue to receive and process the messages. Message queues offer features like message persistence, guaranteed delivery, and load balancing, making them suitable for handling high volumes of messages and ensuring reliability.

Event-driven architectures build upon the concept of message queues: services publish events (messages representing significant occurrences), and other services react to these events by subscribing to them. This architecture promotes loose coupling and allows services to react to changes in other services without direct dependencies.

Message queues are well-suited for scenarios requiring reliable message delivery and decoupling of services, such as order processing, background tasks, or data synchronization. Event-driven architectures are ideal for building highly scalable and responsive systems where services need to react to events in real time, such as real-time dashboards, notifications, or complex business processes.

For instance, consider an e-commerce platform.

- Message Queue Use Case: When a customer places an order, the order service can publish a message to a message queue. A fulfillment service then consumes this message to process the order, and a shipping service consumes it to ship the order.

- Event-Driven Architecture Use Case: When an item’s stock level changes, the inventory service emits an “item.stock.updated” event. This event can trigger updates to the product catalog and the recommendations engine, and can alert the purchasing department if the stock level falls below a certain threshold.

The choice between message queues and event-driven architectures depends on the complexity of the system and the desired level of decoupling. Message queues offer a simpler approach for basic asynchronous communication, while event-driven architectures provide more flexibility and scalability for complex systems.
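To make the publish/subscribe idea concrete, here is a small in-process sketch of the stock-level scenario. The `EventBus` class, the handler names, and the threshold value are assumptions made for illustration; a production system would publish through a broker rather than call handlers directly.

```python
from collections import defaultdict


class EventBus:
    """Hypothetical in-process event bus: maps event types to handlers."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        # The publisher does not know who is listening, or how many.
        for handler in self._subscribers[event_type]:
            handler(payload)


LOW_STOCK_THRESHOLD = 5  # assumed value for the example
catalog = {}
alerts = []


def update_catalog(event):
    catalog[event["sku"]] = event["stock"]


def alert_purchasing(event):
    if event["stock"] < LOW_STOCK_THRESHOLD:
        alerts.append(f"reorder {event['sku']}")


bus = EventBus()
bus.subscribe("item.stock.updated", update_catalog)
bus.subscribe("item.stock.updated", alert_purchasing)

# The inventory service emits one event; both subscribers react independently.
bus.publish("item.stock.updated", {"sku": "sku-42", "stock": 3})
```

Adding a third consumer, such as a recommendations engine, would only require another `subscribe` call; the publisher stays unchanged.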

Best Practices for Inter-Service Communication

Implementing effective inter-service communication requires careful consideration of several factors. Following these best practices can improve the reliability, performance, and maintainability of a microservices architecture.

- Choose the Right Communication Pattern: Select synchronous or asynchronous communication based on the specific needs of each interaction, considering factors like response time requirements and fault tolerance.

- Use API Gateways: Implement API gateways to manage external access to microservices, providing features like authentication, authorization, rate limiting, and request routing.

- Employ Service Discovery: Use service discovery mechanisms to dynamically locate and connect to services, especially in dynamic environments where service instances may change frequently. Examples include Consul, etcd, or Kubernetes DNS.

- Implement Circuit Breakers: Implement circuit breakers to prevent cascading failures. If a service becomes unavailable or unresponsive, the circuit breaker can temporarily stop sending requests to that service, allowing the system to recover.

- Use Idempotent Operations: Design operations to be idempotent, meaning that executing the same operation multiple times has the same effect as executing it once. This is crucial for handling retries in asynchronous communication.

- Implement Retry Mechanisms: Implement retry mechanisms with exponential backoff to handle transient failures. When a service fails, the calling service should retry the request after a delay, increasing the delay with each retry.

- Monitor and Log Effectively: Implement comprehensive monitoring and logging to track service performance, identify errors, and diagnose issues. Centralized logging and distributed tracing are essential for troubleshooting.

- Use a Standardized Communication Format: Choose a standardized communication format, such as JSON or Protocol Buffers, for exchanging data between services. This ensures interoperability and simplifies data serialization/deserialization.

- Consider API Versioning: Implement API versioning to allow for backward compatibility and manage changes to service APIs without breaking existing clients.

- Secure Communication: Secure communication between services using techniques like TLS/SSL encryption and authentication mechanisms such as OAuth or JWT.
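Two of the practices above, retries with exponential backoff and circuit breakers, can be sketched together. This is a simplified illustration under stated assumptions: `CircuitBreaker`, `CircuitOpenError`, and `retry_with_backoff` are hypothetical names, the thresholds are arbitrary, and production code would usually add random jitter to the delays and rely on a maintained resilience library.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is skipped."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call skipped")
            # Timeout elapsed: let one trial call through (half-open state).
            self._opened_at = None
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # a success closes the circuit fully
        return result


def retry_with_backoff(func, max_attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call func, doubling the delay after each failure."""
    for attempt in range(max_attempts):
        try:
            return func()
        except CircuitOpenError:
            raise  # retrying is pointless while the breaker is open
        except Exception:
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))
```

The two pieces compose naturally: wrapping `breaker.call(request)` in `retry_with_backoff` retries transient errors but stops immediately once the breaker opens. Retries are only safe when the retried operation is idempotent.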

Service Discovery and Registration

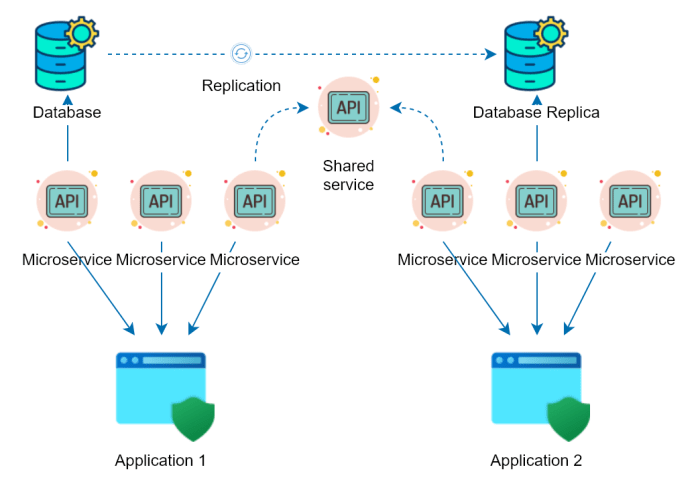

In a microservices architecture, services are designed to be independent and often deployed dynamically. This dynamism necessitates a robust mechanism for services to locate and communicate with each other. Service discovery and registration provide this critical functionality, enabling services to find and interact with other services without hardcoded addresses or dependencies. This section delves into the importance of service discovery, explores common mechanisms, demonstrates implementation, and addresses associated challenges.

Importance of Service Discovery in a Microservices Architecture

Service discovery is a fundamental aspect of microservices architecture, facilitating the seamless operation and scalability of distributed systems. Its significance stems from the inherent characteristics of microservices, which are often deployed dynamically, scaled independently, and may experience frequent updates.

- Dynamic Service Locations: Microservices often run on different hosts, containers, or even cloud instances, with their network locations (IP addresses and ports) subject to change. Service discovery ensures that services can locate each other regardless of these changes.

- Scalability and Resilience: When a service needs to scale, new instances are created. Service discovery allows other services to automatically discover and utilize these new instances, improving overall system performance and resilience. Similarly, if a service instance fails, service discovery mechanisms can route traffic to healthy instances, maintaining service availability.

- Loose Coupling: Service discovery promotes loose coupling between services. Services don’t need to know the specific network locations of other services; they only need to know the logical service name. This reduces dependencies and makes it easier to update and deploy services independently.

- Improved Fault Tolerance: Service discovery systems often incorporate health checks. This means that they can detect service failures and automatically remove unhealthy instances from the service registry, preventing traffic from being routed to unavailable services. This improves the overall fault tolerance of the system.

- Simplified Deployment and Management: Service discovery simplifies deployment and management tasks. Services can be deployed and scaled without requiring manual configuration changes in other services. This automation streamlines the deployment process and reduces the potential for human error.

Common Service Discovery Mechanisms

Several technologies are available for implementing service discovery. These tools provide the infrastructure for service registration, health checks, and service lookup.

- Consul: Consul is a service mesh solution that provides service discovery, health checks, and key-value storage. It uses a distributed, highly available architecture and offers a rich set of features, including DNS and HTTP interfaces for service discovery. Consul is often used in cloud-native environments due to its flexibility and ease of use.

- etcd: etcd is a distributed key-value store that can be used for service discovery. It’s designed to be highly available and consistent, making it suitable for storing service registration information. etcd is a core component of Kubernetes and is frequently used in containerized environments.

- Kubernetes Service Discovery: Kubernetes provides built-in service discovery through its Service resources. A Kubernetes Service acts as an abstraction layer over a set of pods, providing a stable IP address and DNS name that other pods can use to access the service. Kubernetes also offers health checks and load balancing features.

- ZooKeeper: ZooKeeper, while originally designed for coordination in distributed systems, can also be used for service discovery. It provides a hierarchical namespace for storing service information and offers features like ephemeral nodes for representing service instances. However, ZooKeeper is less commonly used for service discovery compared to Consul and etcd.

Implementing Service Registration and Discovery using Consul (Example)

Consul is a popular choice for service discovery due to its ease of use and comprehensive features. The following demonstrates how to implement service registration and discovery using Consul, assuming Consul is already running.

1. Service Registration:

Each microservice registers itself with Consul. This involves providing Consul with information about the service, such as its name, IP address, port, and health check endpoint.

Example (using a fictional ‘auth-service’ written in Python):

    import os

    import consul  # third-party client, e.g. the python-consul package

    # Consul configuration
    CONSUL_HOST = os.environ.get('CONSUL_HOST', 'localhost')
    CONSUL_PORT = int(os.environ.get('CONSUL_PORT', 8500))

    SERVICE_NAME = 'auth-service'
    SERVICE_ID = 'auth-service-1'  # Unique ID for each service instance
    SERVICE_ADDRESS = '192.168.1.10'  # Replace with the actual IP address
    SERVICE_PORT = 8000
    HEALTH_CHECK_URL = f"http://{SERVICE_ADDRESS}:{SERVICE_PORT}/health"

    # Connect to Consul
    c = consul.Consul(host=CONSUL_HOST, port=CONSUL_PORT)

    # Register the service
    try:
        c.agent.service.register(
            name=SERVICE_NAME,
            service_id=SERVICE_ID,
            address=SERVICE_ADDRESS,
            port=SERVICE_PORT,
            check=consul.Check.http(HEALTH_CHECK_URL, interval='10s')
        )
        print(f"Service '{SERVICE_NAME}' registered successfully.")
    except Exception as e:
        print(f"Error registering service: {e}")

This Python code registers the ‘auth-service’ with Consul. It specifies the service name, a unique service ID, the service’s IP address, port, and a health check endpoint. The health check is crucial; it allows Consul to monitor the service’s health and keep unhealthy instances out of discovery results.

2. Service Discovery:

Other services discover the ‘auth-service’ by querying Consul for its information.

Example (in a hypothetical ‘user-service’ also written in Python):

    import os

    import consul  # third-party client, e.g. the python-consul package
    import requests

    # Consul configuration
    CONSUL_HOST = os.environ.get('CONSUL_HOST', 'localhost')
    CONSUL_PORT = int(os.environ.get('CONSUL_PORT', 8500))
    SERVICE_NAME = 'auth-service'

    # Connect to Consul
    c = consul.Consul(host=CONSUL_HOST, port=CONSUL_PORT)

    # Discover the service
    try:
        # passing=True returns only instances whose health checks pass
        index, services = c.health.service(SERVICE_NAME, passing=True)
        if services:
            # Assuming only one instance is needed.
            service_address = services[0]['Service']['Address']
            service_port = services[0]['Service']['Port']
            auth_service_url = f"http://{service_address}:{service_port}"
            print(f"Found auth-service at: {auth_service_url}")

            # Example: Making a request to the auth-service
            try:
                response = requests.get(f"{auth_service_url}/api/authenticate", timeout=5)  # Example endpoint
                response.raise_for_status()  # Raise an exception for bad status codes
                print(f"Authentication successful. Response: {response.text}")
            except requests.exceptions.RequestException as e:
                print(f"Error communicating with auth-service: {e}")
        else:
            print("auth-service not found.")
    except Exception as e:
        print(f"Error discovering service: {e}")

This code queries Consul for the ‘auth-service’. It retrieves the service’s address and port and then constructs the service URL. The code includes an example of making a request to the discovered service, demonstrating how other services can utilize the information obtained through service discovery.

3. Health Checks:

Health checks are vital for ensuring service availability. Consul periodically checks the health of registered services. If a health check fails, Consul marks the instance as critical and excludes it from health-filtered lookups, such as the `passing=True` query above; the instance is deregistered only if a deregistration timeout is configured for the check.

The example above uses an HTTP health check: `check=consul.Check.http(HEALTH_CHECK_URL, interval='10s')`

This tells Consul to make an HTTP GET request to the specified URL (e.g., /health) every 10 seconds. Any 2xx response passes the check, a 429 is treated as a warning, and any other status fails it. Other check types include TCP checks and script-based checks.
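On the service side, the health endpoint can be very small. The sketch below uses only the Python standard library; the `HealthHandler` class, the `make_server` helper, and the JSON body are assumptions for illustration, and a real service would typically expose the endpoint through its existing web framework and check its own dependencies before reporting healthy.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Answers GET /health with 200 and a small JSON body."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep the example quiet; real services should log requests


def make_server(host="127.0.0.1", port=8000):
    """Build (but do not start) an HTTP server exposing /health."""
    return HTTPServer((host, port), HealthHandler)
```

Calling `make_server().serve_forever()` starts the endpoint on port 8000, matching the port used in the registration example.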

Potential Challenges and Solutions for Service Discovery

Implementing service discovery in a dynamic environment presents certain challenges. Effective strategies can mitigate these issues and ensure the reliability of the system.

- Network Latency: Service discovery introduces network latency. Services must query the service discovery mechanism to locate other services.

  - Solution: Employ caching mechanisms. Services can cache the discovered service information for a short period to reduce the frequency of queries to the service discovery system. Configure appropriate timeouts and retry mechanisms to handle potential network issues.

- Consistency: Ensuring consistency across the service registry is critical. Stale or inconsistent data can lead to communication failures.

  - Solution: Use a strongly consistent service discovery system (e.g., etcd). Implement health checks to quickly detect and remove unhealthy service instances. Employ eventual consistency strategies with appropriate data reconciliation mechanisms to handle transient inconsistencies.

- Health Check Reliability: Health checks must be reliable. False positives or negatives can lead to service outages or unnecessary traffic redirection.

  - Solution: Implement robust health checks that accurately reflect the service’s health. Consider using multiple health checks from different perspectives (e.g., application-level and infrastructure-level checks). Implement circuit breakers to protect services from failing dependencies.

- Security: Service discovery systems can be vulnerable to security threats. Attackers could potentially manipulate the service registry.

  - Solution: Secure the service discovery system with authentication and authorization mechanisms. Encrypt communication between services and the service discovery system. Implement network segmentation to restrict access to the service discovery system.

- Scalability of the Service Discovery System: The service discovery system itself needs to be scalable to handle the increasing number of services and instances.

  - Solution: Choose a service discovery system that is designed for scalability (e.g., Consul, etcd). Deploy the service discovery system in a highly available configuration. Monitor the performance of the service discovery system and scale it as needed.
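The caching approach suggested for network latency might look like the following sketch. `CachedResolver` and its `lookup` callable are hypothetical: `lookup` stands for whatever function queries the registry (for example, a wrapper around a Consul health query), and the five-second TTL is an arbitrary choice that trades freshness against query volume.

```python
import time


class CachedResolver:
    """Caches registry lookups for a short time-to-live (TTL)."""

    def __init__(self, lookup, ttl_seconds=5.0, clock=time.monotonic):
        self._lookup = lookup      # callable: service name -> list of instances
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache = {}           # service name -> (expires_at, instances)

    def resolve(self, name):
        now = self._clock()
        entry = self._cache.get(name)
        if entry is not None and entry[0] > now:
            return entry[1]        # still fresh: skip the registry round trip
        instances = self._lookup(name)
        self._cache[name] = (now + self._ttl, instances)
        return instances
```

A short TTL keeps the window small in which a caller might still use an instance that has since failed its health check, which is one reason to pair caching with retries or a circuit breaker.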

API Gateway Implementation and Management

Managing the complexity of microservices requires careful consideration of how clients interact with the underlying services. An API gateway acts as a single entry point for all client requests, simplifying the interaction and providing a layer of abstraction. This approach decouples the client from the microservices, allowing for independent evolution and scaling of each service without impacting the client.

Role of an API Gateway in a Microservices Architecture

The API gateway is a crucial component in a microservices architecture, serving several key functions. Its primary role is to act as a reverse proxy, receiving all client requests and routing them to the appropriate microservices. This central point of contact simplifies the client-side interaction and provides several advantages.

- Request Routing: The API gateway directs incoming requests to the correct microservices based on the request’s URL, headers, or other criteria. This routing logic ensures that each request reaches the appropriate service responsible for handling it.

- Authentication and Authorization: The API gateway handles authentication, verifying the identity of the client, and authorization, determining whether the client has permission to access a specific resource. This centralized security layer simplifies security management and enforcement across all microservices.

- Rate Limiting and Throttling: The API gateway can implement rate limiting to protect microservices from being overwhelmed by excessive traffic. It can also throttle requests to ensure fair usage and prevent denial-of-service attacks.

- Monitoring and Logging: The API gateway provides a central point for monitoring and logging all API traffic. This enables detailed tracking of API usage, performance, and errors, providing valuable insights for troubleshooting and optimization.

- Transformation and Aggregation: The API gateway can transform request and response formats to accommodate different client needs. It can also aggregate data from multiple microservices into a single response, simplifying the client’s interaction with the backend services.

- Service Discovery: The API gateway can integrate with a service discovery mechanism to dynamically locate the instances of microservices. This allows the gateway to adapt to changes in the service landscape, such as scaling or service failures.
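Rate limiting, one of the gateway functions listed above, is often implemented with a token bucket. The sketch below is one common way to do it, not a description of how any particular gateway works; the `TokenBucket` class and its parameters are assumptions for illustration.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity` and a sustained `rate` of requests per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._tokens = float(capacity)  # start with a full bucket
        self._clock = clock
        self._last = clock()

    def allow(self):
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False  # caller would typically answer with HTTP 429
```

A gateway would usually keep one bucket per client or API key, so that one noisy consumer cannot exhaust the budget of the others.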

API Gateway Architecture Design

Designing an effective API gateway architecture involves considering several key components and their interactions. A well-designed gateway ensures performance, scalability, and security.

The core components of an API gateway typically include:

- Client Interface: This is the entry point for all client requests, typically exposed via HTTPS.

- Routing Engine: This component determines the appropriate microservice to forward each request to, based on predefined rules (e.g., URL paths, HTTP methods, headers).

- Authentication and Authorization Module: This module verifies the identity of the client (authentication) and ensures the client has the necessary permissions to access the requested resources (authorization). Common authentication methods include API keys, OAuth 2.0, and JWT.

- Rate Limiting and Throttling Module: This module controls the rate at which clients can access the API, protecting the backend services from overload.

- Transformation Engine: This component can transform requests and responses between the client and the microservices, for example, converting data formats or aggregating data from multiple services.

- Monitoring and Logging Module: This module collects metrics about API usage, performance, and errors, providing valuable insights for monitoring and troubleshooting. It also logs all requests and responses for auditing purposes.

- Service Discovery Integration: This allows the gateway to dynamically discover and route requests to the available instances of microservices, improving resilience and scalability.

A typical request flow through an API gateway might look like this:

- The client sends a request to the API gateway.

- The routing engine determines the appropriate microservice based on the request’s criteria.

- The authentication and authorization module verifies the client’s identity and permissions.

- The request is forwarded to the selected microservice.

- The microservice processes the request and sends a response back to the API gateway.

- The transformation engine (if configured) transforms the response.

- The API gateway sends the response back to the client.
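The request flow above can be condensed into a toy handler. Everything here is hypothetical and simplified: the route table, the API key store, and the `backends` callables stand in for real routing rules, a credential store, and HTTP calls to the microservices.

```python
# Hypothetical routing rules: path prefix -> logical service name.
ROUTES = {
    "/api/v1/orders": "order-service",
    "/api/v1/users": "user-service",
}

# Hypothetical credential store: API key -> client name.
API_KEYS = {"demo-key": "my-client"}


def route(path):
    """Return the service whose prefix is the longest match for the path."""
    matches = [p for p in ROUTES if path == p or path.startswith(p + "/")]
    return ROUTES[max(matches, key=len)] if matches else None


def handle(path, headers, backends):
    """Route, authenticate, forward, and transform one request."""
    service = route(path)                        # routing engine
    if service is None:
        return 404, {"error": "no route"}
    if headers.get("apikey") not in API_KEYS:    # authentication module
        return 401, {"error": "invalid API key"}
    status, body = backends[service](path)       # forward to the microservice
    return status, {"service": service, "data": body}  # response transformation
```

Passing the backends in as callables keeps the sketch testable without a network; in a real gateway this step would be an HTTP or gRPC call to an instance found through service discovery.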

Example of an API Gateway Configuration

Let’s consider an example using Kong, a popular open-source API gateway. This configuration demonstrates how to route traffic to a backend service and require an API key for access.

First, you would need to install and configure Kong. Then, you would define a route to forward traffic to your backend service. This is done using the Kong Admin API, often accessed via `curl` or a similar tool.

1. Define a Service:

This creates a service representing your backend microservice.

    curl -X POST http://localhost:8001/services \
      --data "name=my-backend-service" \
      --data "url=http://backend-service:8080"

2. Create a Route:

This defines a route that maps a specific path to the backend service.

    curl -X POST http://localhost:8001/services/my-backend-service/routes \
      --data "paths[]=/api/v1/data"

3. Apply Authentication (API Key):

First, create a consumer (representing a client). Then, create an API key for that consumer.

    # Create a consumer
    curl -X POST http://localhost:8001/consumers \
      --data "username=my-client"

    # Create an API key for the consumer
    curl -X POST http://localhost:8001/consumers/my-client/key-auth

4. Apply the API Key Plugin:

Enable the `key-auth` plugin on the service. This enforces API key authentication for every route of the service, including the `/api/v1/data` path.

    curl -X POST http://localhost:8001/services/my-backend-service/plugins \
      --data "name=key-auth"

Now, when a client makes a request to `/api/v1/data`, they must include the API key in the `apikey` header or query parameter.

For example:

    curl -H "apikey: YOUR_API_KEY" http://localhost:8000/api/v1/data

This configuration illustrates a basic setup. Kong offers a wide array of plugins for features like rate limiting, request transformation, and more, which can be configured using the Admin API or through declarative configuration files.

API Gateway Solutions Comparison

Choosing the right API gateway solution depends on your specific needs and requirements. The following table compares several popular options based on features, performance, and cost.

Disclaimer: The information provided below is based on publicly available data and may be subject to change. Performance metrics can vary based on hardware, configuration, and workload. Always refer to the official documentation for the most up-to-date information. The ‘Cost’ column refers to the general pricing model and may vary depending on usage and specific needs.

| Feature | Kong | Apigee | AWS API Gateway | Tyk |
|---|---|---|---|---|
| Open Source | Yes | No (Freemium model) | No | Yes (with Enterprise version) |
| Routing | Advanced routing based on path, headers, and more. | Flexible routing based on various criteria. | Path-based routing with limited header support. | Routing based on path, host, and more. |
| Authentication | API Keys, OAuth 2.0, JWT, Basic Auth, and more via plugins. | API Keys, OAuth 2.0, JWT, SAML, and more. | API Keys, OAuth 2.0, JWT, and custom authorizers. | API Keys, OAuth 2.0, JWT, and more. |
| Rate Limiting | Built-in rate limiting with various algorithms. | Advanced rate limiting and quota management. | Built-in rate limiting and throttling. | Rate limiting with different algorithms. |
| Transformation | Request/response transformation via plugins (e.g., request-transformer). | Extensive transformation capabilities. | Request/response mapping and transformation. | Request/response transformation. |
| Monitoring & Logging | Detailed metrics and logging with integrations. | Comprehensive monitoring and logging dashboards. | Monitoring and logging through CloudWatch. | Built-in monitoring and logging. |
| Service Discovery | Integrations with service discovery tools (e.g., Consul, Kubernetes). | Integration with service discovery mechanisms. | Integration with AWS services. | Service discovery integrations. |
| Performance (Requests/Second) | High, depending on configuration. Can handle 10,000+ requests per second. | Very high, optimized for performance. | Scales to handle high traffic volumes. | High, can handle thousands of requests per second. |
| Cost | Free (Open Source), Enterprise version with paid features. | Freemium model, with paid tiers based on features and usage. | Pay-as-you-go based on API calls and data transfer. | Free (Open Source), Enterprise version with paid features. |
| Ease of Use | Moderate, requires configuration. | Easy to use with a comprehensive UI. | Easy to set up and manage, integrated with AWS services. | Moderate, requires configuration. |
| Scalability | Highly scalable, can be deployed in a clustered environment. | Highly scalable, designed for enterprise workloads. | Scales automatically with AWS infrastructure. | Scalable, supports clustering. |

Monitoring and Observability of Microservices

In a microservices architecture, the distributed nature of the system introduces significant complexity. Effectively monitoring and observing these services is crucial for maintaining system health, identifying issues, and ensuring optimal performance. Without robust monitoring and observability practices, troubleshooting becomes significantly more challenging, potentially leading to extended downtime and degraded user experience.

Significance of Monitoring and Observability

Observability provides insights into the internal states of a system by collecting data about its behavior. Monitoring, on the other hand, focuses on collecting and analyzing metrics to track the performance and health of a system. Together, they form the foundation for understanding, diagnosing, and improving the behavior of microservices. Effective monitoring and observability are essential for several reasons: they facilitate proactive issue detection, enabling teams to identify and resolve problems before they impact users; they accelerate root cause analysis by providing detailed data about service interactions and performance bottlenecks; and they support performance optimization by identifying areas for improvement, such as slow API calls or inefficient resource utilization.

Observability also enhances business decision-making by providing insights into how users interact with the system and how different services contribute to overall business goals.

Key Metrics to Monitor

Monitoring key metrics is essential for gaining insights into the health and performance of microservices. These metrics provide valuable data for identifying issues, optimizing performance, and ensuring a positive user experience. Several categories of metrics are critical for comprehensive monitoring:

- Latency: This measures the time it takes for a service to respond to a request. High latency can indicate performance bottlenecks, slow database queries, or network issues. Monitoring latency at different percentiles (e.g., P50, P90, P99) provides a comprehensive view of response times. For example, a P99 latency of 500ms indicates that 99% of requests are served within 500 milliseconds.

- Error Rates: This tracks the frequency of errors within a service. A high error rate can indicate code defects, dependency issues, or resource exhaustion. Monitoring error rates helps identify services that are failing and require attention. Error rates are typically expressed as a percentage of requests that result in an error.

- Throughput: This measures the number of requests processed by a service over a specific period. Monitoring throughput helps assess the service’s capacity and identify potential performance limitations. Throughput is often measured in requests per second (RPS) or transactions per second (TPS).

- Saturation: This indicates how close a service is to its resource limits, such as CPU, memory, and disk I/O. Monitoring saturation helps prevent resource exhaustion and ensures services can handle peak loads. Saturation levels are typically expressed as a percentage of resource utilization.

- Resource Utilization: Tracking the consumption of resources like CPU, memory, disk I/O, and network bandwidth provides insights into the efficiency of a service. High resource utilization can indicate inefficiencies in the code or the need for scaling.

- Dependencies: Monitoring the health and performance of external dependencies (e.g., databases, message queues, other services) is crucial. Failures in dependencies can cascade and affect the performance of the entire system.
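To make the latency, error-rate, and throughput definitions above concrete, here is a minimal sketch that derives them from a batch of request records. The `RequestRecord` type, the nearest-rank percentile method, and the sample data are assumptions chosen for illustration; a real system would normally let its metrics library compute these.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    duration_ms: float  # time taken to serve the request
    status_code: int    # HTTP status returned to the caller


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(records, window_seconds):
    """Summarize one observation window of requests."""
    durations = [r.duration_ms for r in records]
    errors = sum(1 for r in records if r.status_code >= 500)
    return {
        "p50_ms": percentile(durations, 50),
        "p90_ms": percentile(durations, 90),
        "p99_ms": percentile(durations, 99),
        "error_rate_pct": 100 * errors / len(records),
        "throughput_rps": len(records) / window_seconds,
    }


# Hypothetical sample: 100 requests seen in a 10-second window,
# with durations of 1..100 ms and every 20th request failing.
records = [
    RequestRecord(duration_ms=float(i), status_code=500 if i % 20 == 0 else 200)
    for i in range(1, 101)
]
summary = summarize(records, window_seconds=10)
```

Percentiles are computed over a window rather than averaged, because averaging hides the slow tail that P99 is meant to expose.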

Best Practices for Implementing Distributed Tracing

Distributed tracing is a crucial technique for understanding how requests flow through a microservices architecture. It enables the tracking of individual requests as they traverse multiple services, providing visibility into performance bottlenecks and error propagation. Implementing distributed tracing effectively involves several key practices:

- Instrumentation: Instrumenting each service to generate and propagate trace data is the foundation of distributed tracing. This involves adding code to capture relevant information about each request, such as timestamps, service names, and operation names.

- Trace Context Propagation: Ensuring that trace context is propagated across service boundaries is essential. This involves passing a unique trace identifier and other relevant information in the request headers. Popular standards like the W3C Trace Context provide a consistent way to propagate trace context.

- Trace Collection and Storage: A centralized system for collecting and storing trace data is necessary. Tools like Jaeger, Zipkin, and AWS X-Ray can be used to collect, store, and visualize traces.

- Data Analysis and Visualization: Analyzing trace data to identify performance bottlenecks and errors is critical. Visualization tools allow developers to see the flow of requests, identify slow operations, and understand how errors propagate.

- Sampling: Implementing sampling to manage the volume of trace data is important, as tracing every request can generate a significant amount of data. Sampling allows you to capture a representative subset of traces while minimizing the impact on performance.

- Contextual Logging: Enriching logs with trace identifiers allows for correlating logs with specific traces, providing a more comprehensive view of the system’s behavior.
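Trace context propagation can be sketched with the W3C Trace Context `traceparent` header, which carries a version, a 32-hex-digit trace ID, a 16-hex-digit parent span ID, and a flags byte whose lowest bit marks the trace as sampled. The helper names below are hypothetical, and the sketch only handles version `00` of the header.

```python
import re
import secrets

# version - trace-id - parent-id - trace-flags, all lowercase hex
_TRACEPARENT = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled=True):
    """Start a new trace at the edge of the system."""
    flags = "01" if sampled else "00"
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-{flags}"


def parse_traceparent(header):
    """Return the trace context as a dict, or None if the header is invalid."""
    match = _TRACEPARENT.match(header.strip())
    if not match:
        return None
    version, trace_id, parent_id, flags = match.groups()
    # Version ff and all-zero IDs are invalid according to the specification.
    if version == "ff" or set(trace_id) == {"0"} or set(parent_id) == {"0"}:
        return None
    return {
        "trace_id": trace_id,
        "parent_id": parent_id,
        "sampled": bool(int(flags, 16) & 1),
    }


def outgoing_headers(incoming_headers):
    """Continue the caller's trace if there is one, otherwise start a new trace."""
    context = parse_traceparent(incoming_headers.get("traceparent", ""))
    if context is None:
        return {"traceparent": new_traceparent()}
    flags = "01" if context["sampled"] else "00"
    # Same trace ID, fresh span ID for the call this service is about to make.
    return {"traceparent": f"00-{context['trace_id']}-{secrets.token_hex(8)}-{flags}"}
```

Keeping the trace ID unchanged while minting a new span ID per hop is what lets a tracing backend stitch the hops back into a single request tree. Carrying the sampled flag forward keeps the sampling decision consistent across services.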

Tools and Techniques for Logging and Alerting

Effective logging and alerting are essential for proactive issue detection and incident response in a microservices environment. This involves collecting, analyzing, and responding to log data to identify and address problems.

- Centralized Logging: Implementing a centralized logging system to collect logs from all services is critical. This enables a unified view of the system’s behavior and simplifies troubleshooting. Popular tools include the ELK stack (Elasticsearch, Logstash, Kibana), Splunk, and Sumo Logic.

- Structured Logging: Using structured logging formats (e.g., JSON) makes it easier to parse and analyze log data. Structured logs allow for efficient querying and filtering of log events.

- Log Aggregation and Analysis: Aggregating and analyzing log data to identify patterns, trends, and anomalies is important. This can involve using log analysis tools to search for specific error messages, monitor performance metrics, and detect unusual activity.

- Alerting: Setting up alerts based on log data to notify teams of critical issues is essential. Alerting systems can be configured to trigger notifications when specific error conditions are met, when performance metrics exceed thresholds, or when unusual activity is detected.

- Log Rotation and Retention: Implementing log rotation and retention policies to manage log data storage is necessary. This ensures that logs are stored efficiently and that old logs are automatically deleted or archived.

- Tools: Several tools facilitate logging and alerting in microservices environments. These include:

  - ELK Stack (Elasticsearch, Logstash, Kibana): A popular open-source stack for collecting, processing, and visualizing logs.

  - Splunk: A commercial platform for log management and analysis.

  - Sumo Logic: A cloud-based log management and analytics platform.

  - Prometheus: An open-source monitoring and alerting toolkit, often used for collecting and analyzing metrics.

  - Grafana: A data visualization and monitoring tool that integrates with various data sources, including Prometheus and Elasticsearch.

  - PagerDuty: A platform for incident management and alerting.
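The alerting idea above, triggering when a metric exceeds a threshold, can be sketched as a sliding-window error-rate rule. The class name, window size, and thresholds are assumptions for illustration; tools such as Prometheus express the same idea declaratively in their own rule languages.

```python
from collections import deque


class ErrorRateAlert:
    """Fires when the error rate over a sliding time window exceeds a threshold."""

    def __init__(self, window_seconds, threshold_pct, min_requests=10):
        self.window_seconds = window_seconds
        self.threshold_pct = threshold_pct
        self.min_requests = min_requests  # avoid alerting on a handful of requests
        self._events = deque()            # (timestamp, is_error), oldest first

    def record(self, timestamp, is_error):
        self._events.append((timestamp, is_error))
        # Drop events that have fallen out of the window.
        while self._events and self._events[0][0] <= timestamp - self.window_seconds:
            self._events.popleft()

    def should_alert(self):
        total = len(self._events)
        if total < self.min_requests:
            return False
        errors = sum(1 for _, is_error in self._events if is_error)
        return 100 * errors / total > self.threshold_pct
```

The `min_requests` guard is a design choice: without it, a single failed request during a quiet period would register as a 100% error rate and page someone.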

Distributed Tracing and Logging

Understanding the behavior of microservices is crucial for effective management and troubleshooting. Distributed tracing and centralized logging are indispensable tools in achieving this. They provide insights into request flows and system behavior, enabling faster debugging and performance optimization.

Understanding Request Flows with Distributed Tracing

Distributed tracing provides a comprehensive view of a request’s journey as it traverses multiple microservices. It allows developers to pinpoint bottlenecks, identify service dependencies, and understand the impact of individual service performance on the overall system. Distributed tracing works by assigning a unique trace ID to each request as it enters the system. This trace ID is then propagated along with the request as it moves through various microservices.

Each service, upon receiving the request, adds its own span, which contains information about the operation performed, its start and end times, and any relevant metadata. This data is then collected and visualized, allowing for a detailed understanding of the request’s path and performance characteristics.
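The trace and span model described above can be sketched without any tracing library. This is a toy illustration, not the API of Jaeger or Zipkin: the `Span` fields, the `start_span` helper, the `collected` list standing in for a tracing backend, and the service names are all assumptions.

```python
import secrets
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    trace_id: str             # shared by every span of one request
    span_id: str              # unique to this operation
    parent_id: Optional[str]  # None for the root span
    service: str
    operation: str
    start: float = 0.0
    end: float = 0.0
    tags: dict = field(default_factory=dict)


collected = []  # stand-in for a tracing backend


@contextmanager
def start_span(service, operation, parent=None):
    span = Span(
        trace_id=parent.trace_id if parent else secrets.token_hex(16),
        span_id=secrets.token_hex(8),
        parent_id=parent.span_id if parent else None,
        service=service,
        operation=operation,
        start=time.monotonic(),
    )
    try:
        yield span
    finally:
        # Record the end time and report the span even if the operation failed.
        span.end = time.monotonic()
        collected.append(span)


# One request passing through two hypothetical services.
with start_span("api-gateway", "GET /orders") as root:
    with start_span("order-service", "list_orders", parent=root) as child:
        child.tags["db.rows"] = 3
```

The parent and child share a trace ID, and the child's `parent_id` points at the root span. That link is what a visualization uses to draw the request as a tree with timings.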

Implementing Distributed Tracing with Jaeger and Zipkin

Several tools facilitate distributed tracing, with Jaeger and Zipkin being prominent examples. They offer similar functionality but differ in implementation and community support. Both support instrumentation through the OpenTracing API, which has since been superseded by OpenTelemetry.

Here’s an example of how to instrument a simple Python service using Jaeger:

```python
import time

from flask import Flask
from jaeger_client import Config

app = Flask(__name__)


def init_tracer(service_name):
    """Configure the Jaeger client and return a tracer for this service."""
    config = Config(
        config={
            'sampler': {
                'type': 'const',  # constant sampler
                'param': 1,       # 1 = sample every request
            },
            'logging': True,
        },
        service_name=service_name,
        validate=True,
    )
    return config.initialize_tracer()


tracer = init_tracer('my-python-service')


@app.route('/hello')
def hello():
    # One span covers the handling of this endpoint.
    with tracer.start_span('hello-endpoint') as span:
        span.log_kv({'event': 'request received'})
        # Simulate some work
        time.sleep(0.1)
        span.log_kv({'event': 'work completed'})
        return "Hello, World!"


if __name__ == '__main__':
    app.run(debug=True)
```

This code snippet demonstrates the basic setup:

- Initialization: The `init_tracer` function configures the Jaeger client, specifying the sampling strategy and logging settings.

- Span Creation: The `tracer.start_span()` method creates a new span for the `/hello` endpoint.

- Span Logging: The `span.log_kv()` method adds key-value pairs to the span, providing context and information about events.

- Propagation: Trace context (trace ID, span ID) crosses service boundaries when the caller writes it into the outgoing request with `tracer.inject()` and the receiver reads it with `tracer.extract()`. Instrumentation libraries for common HTTP clients and message queues can make these calls for you.

The visualization of the trace data in Jaeger or Zipkin would show the sequence of operations performed by `my-python-service`, including the start and end times of each operation, allowing developers to pinpoint performance bottlenecks.

Using Zipkin requires similar instrumentation steps, but with a Zipkin client library. The core concept of creating spans, logging events, and propagating context remains consistent.

Best Practices for Centralized Logging

Centralized logging is essential for aggregating and analyzing logs from all microservices. It provides a single point of access for troubleshooting, auditing, and performance analysis. Implementing centralized logging effectively requires careful consideration of several factors.

- Consistent Log Format: Define a consistent log format (e.g., JSON) across all services. This simplifies parsing and analysis.

- Unique Identifiers: Include unique identifiers, such as trace IDs and request IDs, in each log entry to correlate logs across services.

- Log Levels: Utilize appropriate log levels (e.g., DEBUG, INFO, WARN, ERROR) to categorize log messages based on their severity.

- Asynchronous Logging: Implement asynchronous logging to avoid blocking service operations.

- Centralized Log Aggregation: Use a centralized logging system (e.g., Elasticsearch, Splunk, or the ELK stack) to collect, store, and analyze logs.

- Log Rotation and Retention: Implement log rotation and retention policies to manage storage space and comply with regulatory requirements.
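Asynchronous logging from the list above can be sketched with the standard library's `QueueHandler` and `QueueListener`: the service thread only puts records on a queue, and a background thread passes them to the slow handler. The `ListHandler` class is a hypothetical stand-in for a handler that ships logs over the network.

```python
import logging
import logging.handlers
import queue


class ListHandler(logging.Handler):
    """Stand-in for a slow handler, e.g. one that ships logs to a central system."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(record.getMessage())


log_queue = queue.Queue(-1)  # unbounded queue between the service and the listener
slow_handler = ListHandler()

logger = logging.getLogger("user-service")
logger.setLevel(logging.INFO)
logger.propagate = False
# The service thread only enqueues records; it never waits on the slow handler.
logger.addHandler(logging.handlers.QueueHandler(log_queue))

# A background thread drains the queue and calls the slow handler.
listener = logging.handlers.QueueListener(log_queue, slow_handler)
listener.start()

logger.info("User login successful")
logger.debug("dropped: below the configured INFO level")

listener.stop()  # processes everything still queued, then joins the thread
```

Calling `listener.stop()` during shutdown matters, because records still sitting in the queue would otherwise be lost when the process exits.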

Designing a Sample Log Structure and Aggregation

A well-designed log structure enhances the usefulness of logs. Consider a JSON-based log structure that includes the following fields:

```json
{
  "timestamp": "2024-10-27T10:00:00.123Z",
  "service": "user-service",
  "level": "INFO",
  "trace_id": "abc-123-xyz",
  "span_id": "456",
  "request_id": "req-789",
  "message": "User login successful",
  "data": {
    "user_id": "123",
    "username": "john.doe"
  }
}
```

This structure includes:

- `timestamp`: The time the log event occurred.

- `service`: The name of the service that generated the log.

- `level`: The log level (e.g., INFO, WARN, ERROR).

- `trace_id`: The unique identifier for the trace, used for distributed tracing.

- `span_id`: The identifier for the specific span within a trace.

- `request_id`: A unique identifier for the request.

- `message`: A descriptive message about the event.

- `data`: Additional contextual information (e.g., user ID, username).

To aggregate logs from multiple services, you can use a centralized logging system like the ELK stack (Elasticsearch, Logstash, and Kibana).

1. Logstash Configuration: Configure Logstash to receive logs from all services. It parses the JSON log messages and extracts relevant fields.

2. Elasticsearch Indexing: Configure Logstash to index the parsed logs into Elasticsearch.

3. Kibana Visualization: Use Kibana to create dashboards and visualizations to analyze the logs. You can filter logs by service, level, trace ID, request ID, and other fields to identify issues and monitor system behavior.

For example, to find all log entries related to a specific request, you can search for the `request_id` in Kibana. Similarly, to identify performance bottlenecks, you can correlate logs with trace data using the `trace_id` and `span_id`.

This integrated approach allows for efficient troubleshooting and performance optimization in a microservices environment.
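A service could emit log lines in the JSON structure described in this section with a custom formatter for Python's standard `logging` module. This is a sketch under assumptions: the `JsonFormatter` and `filter_entries` names are hypothetical, and the trace, span, and request identifiers are expected to be passed through the `extra` argument of each logging call.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def __init__(self, service):
        super().__init__()
        self.service = service

    def format(self, record):
        created = datetime.fromtimestamp(record.created, tz=timezone.utc)
        entry = {
            "timestamp": created.isoformat(timespec="milliseconds").replace("+00:00", "Z"),
            "service": self.service,
            "level": record.levelname,
            # Correlation fields arrive via logger.info(..., extra={...}).
            "trace_id": getattr(record, "trace_id", None),
            "span_id": getattr(record, "span_id", None),
            "request_id": getattr(record, "request_id", None),
            "message": record.getMessage(),
            "data": getattr(record, "data", {}),
        }
        return json.dumps(entry)


def filter_entries(lines, **fields):
    """Return the parsed entries whose fields all match, e.g. request_id='req-789'."""
    entries = [json.loads(line) for line in lines if line.strip()]
    return [e for e in entries if all(e.get(k) == v for k, v in fields.items())]


# Write to an in-memory stream here; a real service would write to stdout or a file.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter("user-service"))
logger = logging.getLogger("json-log-demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

logger.info(
    "User login successful",
    extra={
        "trace_id": "abc-123-xyz",
        "span_id": "456",
        "request_id": "req-789",
        "data": {"user_id": "123", "username": "john.doe"},
    },
)
matches = filter_entries(stream.getvalue().splitlines(), request_id="req-789")
```

The `filter_entries` helper mirrors, in miniature, the kind of field-based search that Kibana performs over the indexed logs.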

Versioning and Deployment Strategies

Managing microservices effectively hinges on robust versioning and deployment strategies. These practices ensure that changes can be rolled out safely and efficiently, minimizing downtime and mitigating the risk of disruptions. Careful planning in these areas is essential for maintaining system stability and facilitating continuous delivery.

Importance of Versioning in Microservices

Versioning is a critical aspect of microservices architecture, enabling teams to manage changes in a controlled manner. It allows for the independent evolution of individual services without impacting the entire system. Without proper versioning, updating a service could potentially break dependencies with other services or client applications.

Versioning helps achieve the following:

- Backward Compatibility: Allows newer versions of a service to work with older versions of its consumers, minimizing disruption during updates.

- Rollback Capability: Enables reverting to a previous stable version in case of issues with a new deployment.

- Independent Deployment: Facilitates the deployment of individual services without coordinating with the entire system.

- Clear Communication: Provides a clear way to communicate changes and dependencies between services.

- Testing and Debugging: Simplifies testing and debugging by allowing teams to target specific versions of services.

Versioning typically follows semantic versioning (SemVer), which uses a three-part number (MAJOR.MINOR.PATCH) to indicate changes:

- MAJOR: Indicates incompatible API changes.

- MINOR: Indicates added functionality in a backward-compatible manner.

- PATCH: Indicates backward-compatible bug fixes.

For example, if a service’s current version is 1.2.3, a bug fix might result in version 1.2.4, adding a new feature would lead to 1.3.0, and an API-breaking change would necessitate version 2.0.0.
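The bump rules in the example above can be written as a small helper. This is a simplified sketch: it handles only plain `MAJOR.MINOR.PATCH` strings and ignores the pre-release and build-metadata suffixes that the full SemVer specification allows. The function names are hypothetical.

```python
def parse(version):
    """Split 'MAJOR.MINOR.PATCH' into a tuple of integers."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def bump(version, change):
    """Return the next version for a 'major', 'minor', or 'patch' change."""
    major, minor, patch = parse(version)
    if change == "major":
        return f"{major + 1}.0.0"  # incompatible API change resets minor and patch
    if change == "minor":
        return f"{major}.{minor + 1}.0"  # new backward-compatible feature resets patch
    if change == "patch":
        return f"{major}.{minor}.{patch + 1}"  # backward-compatible bug fix
    raise ValueError(f"unknown change type: {change!r}")


def is_compatible_upgrade(current, candidate):
    """True if candidate is newer than or equal to current and keeps the same MAJOR version."""
    return parse(candidate)[0] == parse(current)[0] and parse(candidate) >= parse(current)
```

Comparing parsed tuples rather than raw strings avoids the classic mistake of ordering `1.10.0` before `1.9.0`.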

Deployment Strategies

Deployment strategies determine how new versions of microservices are rolled out to production. The choice of strategy depends on factors like the complexity of the service, the risk tolerance, and the desired level of downtime.

Here are some commonly used deployment strategies:

- Blue/Green Deployment: Involves maintaining two identical environments: Blue (current live version) and Green (new version). Traffic is switched from Blue to Green after testing, providing a near-instantaneous rollback if needed. This strategy minimizes downtime. A load balancer is used to manage the traffic switch.

- Canary Release: A small subset of users is directed to the new version (the “canary”) while the rest continue to use the existing version. This allows for testing in a real-world environment before a full rollout. If the canary performs well, the rollout is expanded gradually. This strategy allows for detecting issues early.

- Rolling Update: New instances of a service are deployed alongside existing ones, gradually replacing the old instances. This strategy provides continuous availability, but it requires careful management of dependencies and potential issues with concurrent versions.

- A/B Testing: Similar to canary releases, A/B testing involves directing different user segments to different versions of a service to compare their performance and user experience. This strategy is commonly used for testing UI changes and new features.

- Recreate Deployment: Involves shutting down the old version of a service and then deploying the new version. This strategy is simple to implement but results in downtime during the deployment.
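The traffic split behind a canary release can be sketched as a deterministic, hash-based assignment of users to versions. In practice this decision usually lives in a load balancer, API gateway, or service mesh. The function names and the choice of SHA-256 for bucketing are assumptions for illustration.

```python
import hashlib


def bucket(user_id, buckets=100):
    """Map a user ID to a stable bucket in the range [0, buckets)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def choose_version(user_id, canary_percent):
    """Send roughly canary_percent of users to the canary, the rest to stable."""
    return "canary" if bucket(user_id) < canary_percent else "stable"
```

Hashing the user ID, rather than picking randomly per request, keeps each user on one version for the whole rollout. Raising `canary_percent` only ever moves users from stable to canary, never back, so a gradual expansion does not flip anyone between versions.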

Managing Breaking Changes and Backward Compatibility

Breaking changes in microservices can disrupt the functionality of dependent services and client applications. Careful planning and execution are essential to mitigate these risks.

Here’s how to manage breaking changes and ensure backward compatibility:

- Semantic Versioning: Use SemVer to clearly communicate the nature of changes. MAJOR version increments signal breaking changes.

- API Versioning: Include the API version in the URL (e.g., /api/v1/resource) or in the request headers to allow clients to specify the desired version.

- Deprecation: Announce and support deprecated features for a period before removing them. Provide clear warnings and guidance for migration.

- Consumer-Driven Contracts: Ensure that changes to a service do not break existing contracts with its consumers. Use tools like Pact to define and verify these contracts.

- Data Migration: If schema changes are required, plan for data migration to ensure compatibility between old and new versions.

- Feature Flags: Use feature flags to enable or disable new features without deploying a new version. This allows for gradual rollouts and easy rollbacks.

Consider a scenario where a payment service needs to change the format of the payment ID. Instead of immediately changing the ID format in the current version, the service could introduce a new API version (e.g., /api/v2/payments). The old API (v1) would continue to support the old ID format, while the new API (v2) would support the new format. Clients could then migrate to the new API at their own pace.
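The payment scenario above can be sketched as two handlers served side by side and selected by the version segment of the URL. The concrete ID formats (an integer in v1, a `pay_`-prefixed string in v2), the in-memory `store`, and the handler names are hypothetical, since the original scenario does not specify them.

```python
import re


def get_payment_v1(payment):
    """v1 keeps the old ID format (assumed here to be a plain integer)."""
    return {"id": payment["legacy_id"], "amount": payment["amount"]}


def get_payment_v2(payment):
    """v2 exposes the new ID format (assumed here to be a prefixed string)."""
    return {"id": f"pay_{payment['legacy_id']:08d}", "amount": payment["amount"]}


HANDLERS = {"v1": get_payment_v1, "v2": get_payment_v2}
_ROUTE = re.compile(r"^/api/(v\d+)/payments/(\d+)$")


def handle(path, store):
    """Dispatch a GET request to the handler for the requested API version."""
    match = _ROUTE.match(path)
    if not match:
        return 404, {"error": "not found"}
    version, raw_id = match.groups()
    handler = HANDLERS.get(version)
    if handler is None:
        return 404, {"error": f"unsupported API version {version}"}
    payment = store.get(int(raw_id))
    if payment is None:
        return 404, {"error": "payment not found"}
    return 200, handler(payment)


# Both versions read the same underlying record.
store = {42: {"legacy_id": 42, "amount": 1999}}
```

Both versions are thin views over the same stored record. This keeps the old contract alive without duplicating business logic, and v1 can be retired by removing one entry from `HANDLERS` once clients have migrated.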

Considerations for Rolling Back Deployments

Rolling back to a previous version is a crucial capability in microservices deployments. It provides a safety net in case of issues with a new release.

The following are key considerations for rolling back deployments:

- Automation: Automate the rollback process to ensure speed and consistency.

- Monitoring: Implement comprehensive monitoring to detect issues early.

- Configuration Management: Manage configurations separately from the code to allow for quick changes during rollback.

- Data Consistency: Ensure data consistency during rollback. Consider using techniques like data migration scripts or transaction management.

- Rollback Strategy: Define a clear rollback strategy before deploying.

- Testing: Regularly test the rollback process to ensure it works as expected.

- Logging: Maintain detailed logs to help diagnose issues and track the rollback process.

For instance, if a new version of a service introduces a critical bug, a rollback can be initiated. If using a blue/green deployment, the traffic is switched back to the blue environment (the previous version). With canary releases, the traffic to the canary is removed, and the full rollout is halted. In any case, thorough monitoring and rapid response are essential for a successful rollback.
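An automated blue/green rollback can be sketched as a router that remembers the previous environment, plus a health check that switches back when the error rate crosses a threshold. The class, the function, and the 1% threshold are assumptions for illustration. In a real deployment the switch would be a load balancer or DNS change.

```python
class BlueGreenRouter:
    """Tracks which environment receives live traffic."""

    def __init__(self, live="blue"):
        self.live = live
        self.previous = None

    def switch_to(self, environment):
        # Remember the old environment so the switch can be undone.
        self.previous, self.live = self.live, environment

    def rollback(self):
        if self.previous is None:
            raise RuntimeError("no previous environment to roll back to")
        self.live, self.previous = self.previous, None


def evaluate_release(router, error_rate_pct, threshold_pct=1.0):
    """Roll back automatically if the new release is unhealthy."""
    if error_rate_pct > threshold_pct:
        router.rollback()
        return "rolled back"
    return "healthy"
```

A release would call `router.switch_to("green")` and then feed the monitored error rate into `evaluate_release` at regular intervals. Because the blue environment keeps running, the rollback only redirects traffic and needs no redeployment.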

Configuration Management and Service Mesh

Microservices architectures thrive on agility and independent deployment, but this introduces complexities in managing configurations across numerous services. Centralized configuration management and the implementation of a service mesh are crucial for addressing these challenges. They provide mechanisms to control service behavior, streamline updates, and enhance overall operational efficiency.

Centralized Configuration Management Importance

Centralized configuration management is vital for maintaining consistency, enabling rapid updates, and simplifying the operational overhead of microservices. It offers a single source of truth for service configurations, allowing for easier management and reduced errors.

- Consistency: Centralized management ensures all service instances use the same configuration, reducing discrepancies and potential bugs. This consistency is critical for predictable behavior across the entire system.

- Simplified Updates: Changes to configurations can be propagated quickly and reliably to all relevant services. This allows for faster deployments and quicker responses to issues.

- Reduced Errors: By centralizing configuration, the likelihood of manual errors is minimized. Automated processes ensure configurations are applied correctly every time.

- Enhanced Security: Centralized management provides a secure location to store sensitive information, such as API keys and database credentials. This enhances the security posture of the system.

- Improved Observability: Centralized configuration management systems often provide audit trails, allowing you to track changes and understand the history of your configurations. This aids in debugging and troubleshooting.

Configuration Management Tools and Features

Several tools are available for managing configurations in a microservices environment, each with its own strengths and features. These tools typically offer features like versioning, encryption, and dynamic updates.

- Consul: Consul, developed by HashiCorp, combines service discovery, health checks, a key-value store, and service mesh capabilities. The key-value store makes it well-suited for managing configurations alongside service discovery in distributed systems.

- etcd: etcd is a distributed key-value store designed for highly available systems. It’s often used for service discovery, configuration management, and coordination in distributed systems. It provides a reliable and consistent way to store and retrieve configuration data.

- ZooKeeper: ZooKeeper, originally developed at Yahoo!, is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. It is a robust solution for managing distributed applications.

- Spring Cloud Config: Spring Cloud Config is a configuration management tool specifically designed for Spring Boot applications. It provides a centralized server for managing externalized configuration properties for applications across all environments.

- Apollo: Apollo is a distributed configuration management platform developed by Ctrip. It offers features such as versioning, real-time updates, and a user-friendly interface. It is designed to be highly available and scalable.
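The features these tools share (a single source of truth, versioning, and dynamic updates) can be illustrated with a toy in-memory store. This is not the API of Consul, etcd, ZooKeeper, Spring Cloud Config, or Apollo. The `ConfigStore` class and its methods are hypothetical and only model the behavior.

```python
class ConfigStore:
    """Toy centralized configuration store with versioning and change notifications."""

    def __init__(self):
        self._history = {}   # key -> list of values, oldest first
        self._watchers = {}  # key -> list of callbacks

    def put(self, key, value):
        """Store a new version of a key and notify watchers; returns the version number."""
        versions = self._history.setdefault(key, [])
        versions.append(value)
        for callback in self._watchers.get(key, []):
            callback(key, value, len(versions))
        return len(versions)

    def get(self, key, version=None):
        """Return the latest value, or a specific 1-based version for audits and rollbacks."""
        versions = self._history[key]
        return versions[-1] if version is None else versions[version - 1]

    def watch(self, key, callback):
        """Register a callback that runs whenever the key changes."""
        self._watchers.setdefault(key, []).append(callback)


store = ConfigStore()
seen = []
store.watch("orders/timeout_ms", lambda key, value, version: seen.append((value, version)))
store.put("orders/timeout_ms", 500)
store.put("orders/timeout_ms", 750)
```

The watcher is what allows a running service to pick up a changed value without a redeploy. The retained history is what makes it possible to inspect or restore an earlier configuration.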

Service Mesh Role in Managing Microservices

A service mesh is a dedicated infrastructure layer that facilitates service-to-service communication. It handles tasks like service discovery, traffic management, security, and observability, which are essential for microservices architectures. It decouples these concerns from the application code, allowing developers to focus on business logic.

- Traffic Management: Service meshes control how traffic flows between services, enabling features like load balancing, traffic splitting, and circuit breaking.

- Security: They provide features like mutual TLS (mTLS) for secure communication between services, ensuring that all traffic is encrypted.

- Observability: Service meshes provide detailed metrics, logs, and tracing data, which help in monitoring and troubleshooting microservices.

- Service Discovery: They automatically discover and route traffic to service instances, simplifying service interactions.

- Resilience: Service meshes implement features like retries, timeouts, and circuit breakers to enhance the resilience of the system.
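A service mesh applies resilience features such as circuit breaking in its proxies, outside the application. The logic itself can still be sketched in-process to show how it behaves. The class below is a simplified illustration with assumed names and thresholds, not the implementation used by any particular mesh.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock      # injectable so the behavior can be tested
        self._failures = 0
        self._opened_at = None   # None means the circuit is closed

    @property
    def state(self):
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"   # allow one trial call through
        return "open"

    def call(self, func, *args, **kwargs):
        if self.state == "open":
            raise CircuitOpenError("circuit is open; failing fast")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # Open on reaching the threshold, or re-open after a failed trial call.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Failing fast while the circuit is open protects both sides: callers stop waiting on timeouts, and the struggling downstream service gets room to recover before the next trial call.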

Service Mesh Solutions Comparison

| Feature | Istio | Linkerd |
|---|---|---|
| Control Plane | Complex and feature-rich, supporting a wide range of functionalities. | Simple and lightweight, focusing on core service mesh features. |
| Data Plane | Uses Envoy proxy as its data plane, offering a wide array of features. | Uses a lightweight, purpose-built proxy (Linkerd2-proxy) optimized for performance. |
| Ease of Use | Steeper learning curve due to its complexity. | Generally easier to set up and manage, with a focus on simplicity. |
| Performance | Can have higher resource consumption due to the complexity of Envoy. | Optimized for low latency and resource efficiency. |
| Extensibility | Highly extensible, supporting a vast range of integrations and features. | Extensible through plugins, but with a more focused feature set. |
| Security | Provides robust security features, including mTLS and policy enforcement. | Offers strong security features, including mTLS and policy enforcement, with a focus on ease of use. |
| Community | Large and active community with extensive documentation and support. | Smaller, but growing community with a focus on user experience and ease of use. |

Security Considerations for Microservices

Microservices architectures, while offering numerous benefits, introduce unique security challenges. The distributed nature of microservices increases the attack surface, making it crucial to implement robust security measures at every level. This section explores specific threats, communication security, authentication, authorization, and best practices for securing microservices.

Security Threats Specific to Microservices Architectures

Microservices architectures face several security threats that differ from monolithic applications. These threats stem from the distributed nature of the system, the increased number of communication points, and the diverse technologies used.

- Increased Attack Surface: The distributed nature of microservices increases the attack surface. Each microservice, API gateway, and communication channel represents a potential entry point for attackers. This contrasts with monolithic applications where the attack surface is generally more contained.

- Inter-Service Communication Vulnerabilities: Communication between microservices is often over a network, making it vulnerable to eavesdropping, man-in-the-middle attacks, and data tampering. Securing this communication is critical.

- Dependency on External Services: Microservices often rely on external services and APIs. Security vulnerabilities in these external dependencies can expose the entire system. This requires careful vetting and monitoring of third-party services.

- Configuration Management Issues: Misconfigured services, exposed secrets, and insecure default settings can lead to significant security breaches. Effective configuration management is crucial for maintaining a secure environment.

- Data Exposure and Leakage: Microservices handle sensitive data, and improper handling can lead to data breaches. Secure data storage, encryption, and access controls are essential to prevent data exposure.

- Distributed Denial of Service (DDoS) Attacks: The distributed nature of microservices can make them more vulnerable to DDoS attacks. Attackers can target individual services or the entire system, causing service disruption.

Methods for Securing Inter-Service Communication

Securing communication between microservices is paramount. Several methods can be employed to protect data in transit and ensure the integrity of messages.

- Mutual TLS (mTLS): Mutual TLS provides strong authentication and encryption for inter-service communication. Both the client and server authenticate each other using digital certificates, ensuring that only authorized services can communicate. This prevents eavesdropping and man-in-the-middle attacks.

- Service Mesh: Service meshes like Istio and Linkerd offer built-in security features, including mTLS, traffic encryption, and access control. They manage communication between services, providing a centralized point for security policies.

- API Gateway Security: API gateways can enforce security policies, such as authentication, authorization, and rate limiting. They act as a single point of entry, protecting internal services from direct exposure.

- Encryption in Transit: All communication should be encrypted using TLS (the successor to the now-deprecated SSL protocols) to protect data in transit. This prevents eavesdropping and ensures data confidentiality.

- Message Signing and Validation: Services can sign their messages with a digital signature to ensure data integrity and prevent tampering. The receiving service can then validate the signature to verify the message’s authenticity.
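Message signing and validation can be sketched with the standard library. Note the substitution: the text above describes digital signatures, which use asymmetric keys, whereas this sketch uses an HMAC with a shared secret. An HMAC also detects tampering, but it requires both services to hold the same key. The function names and message envelope are assumptions.

```python
import hashlib
import hmac
import json


def sign(payload, key):
    """Serialize the payload canonically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "signature": tag}


def verify(message, key):
    """Return the payload if the tag matches, otherwise raise ValueError."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking, through timing, how many characters matched.
    if not hmac.compare_digest(expected, message["signature"]):
        raise ValueError("signature mismatch: message was altered or the key is wrong")
    return json.loads(message["body"])
```

The tag is computed over the exact serialized bytes that are sent, and the receiver verifies those same bytes before parsing them. This avoids mismatches caused by two services serializing the same data differently.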

Examples of Implementing Authentication and Authorization in a Microservices Environment

Implementing authentication and authorization in a microservices environment requires careful planning. The chosen approach should be scalable, secure, and easy to manage.

- Centralized Authentication Server: A centralized authentication server (e.g., Keycloak, Auth0, or a custom solution) can handle user authentication and issue access tokens. Microservices can then validate these tokens to authorize access to resources. This approach provides a single source of truth for user identities.

- JSON Web Tokens (JWTs): JWTs are commonly used for authentication and authorization in microservices. The authentication server issues JWTs, which contain user claims and permissions. Microservices can then verify the JWT signature and extract the necessary information to authorize requests.

- Role-Based Access Control (RBAC): RBAC defines roles and assigns permissions to those roles. Users are then assigned to roles, granting them access to resources based on their assigned roles. This simplifies access management and ensures consistent authorization policies across services.

- Attribute-Based Access Control (ABAC): ABAC allows for more fine-grained access control based on attributes of the user, the resource, and the environment. This provides greater flexibility and control over access permissions.

- Example: Using JWTs with Spring Security:

Consider a scenario where a user authenticates through an API gateway. The gateway authenticates the user and issues a JWT. Subsequent requests to microservices include this JWT in the `Authorization` header. Each microservice uses Spring Security with a JWT library (the legacy `spring-security-jwt` module or, in current Spring Security releases, the built-in OAuth 2.0 resource server support) to:

- Validate the JWT signature using a shared secret or public key.

- Extract user claims (e.g., username, roles) from the JWT.

- Use these claims to authorize access to specific resources or operations within the service.

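Because each service validates the token locally, no per-request call to the authentication server is needed, which keeps the approach scalable across many microservices. Keep token lifetimes short, since a signed JWT is normally accepted until it expires.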
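The three steps above (validate the signature, extract the claims, authorize) can be sketched without any framework. The following is an illustrative Python version using only the standard library and the HS256 (shared secret) algorithm. It is not Spring Security code, and a Spring service would delegate these steps to the framework. The function names, the `roles` claim, and the policy of rejecting tokens without `exp` are assumptions made for the example.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_decode(segment: str) -> bytes:
    # JWT segments omit base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def issue_token(claims: dict, secret: bytes) -> str:
    """Stand-in for the gateway: build an HS256-signed JWT (demo only)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signing_input = f"{header}.{payload}".encode("ascii")
    signature = hmac.new(secret, signing_input, hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def verify_token(token: str, secret: bytes) -> dict:
    """Validate signature and expiry, then return the claims.

    Raises ValueError for malformed, forged, or expired tokens.
    """
    header_b64, payload_b64, signature_b64 = token.split(".")
    header = json.loads(_b64url_decode(header_b64))
    # Pin the algorithm: never let the token decide how it is verified.
    if header.get("alg") != "HS256":
        raise ValueError("unexpected signing algorithm")
    signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("invalid signature")
    claims = json.loads(_b64url_decode(payload_b64))
    # Assumption for this sketch: a token without "exp" counts as expired.
    if claims.get("exp", 0) <= time.time():
        raise ValueError("token expired")
    return claims


def require_role(claims: dict, role: str) -> None:
    """Authorize using the (assumed) "roles" claim; raise if it is missing."""
    if role not in claims.get("roles", []):
        raise PermissionError(f"role {role!r} required")
```

A request handler would call `verify_token` on the bearer token and then `require_role(claims, "orders:read")` (a hypothetical role name) before touching the resource. With a public/private key pair instead of a shared secret, only the issuer can sign tokens while every service can still verify them.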

Best Practices for Securing Microservices

Securing microservices requires a comprehensive approach that covers various aspects of the system.

- Implement a Zero Trust Architecture: Assume no trust by default. Verify every request, regardless of its origin, and enforce least privilege access.

- Secure Inter-Service Communication: Use mTLS, service meshes, and encryption to protect communication between services.

- Implement Strong Authentication and Authorization: Utilize centralized authentication servers, JWTs, and RBAC/ABAC to control access to resources.

- Secure Configuration Management: Store secrets securely, use environment variables or configuration management tools, and avoid hardcoding sensitive information.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify and address vulnerabilities.

- Automate Security Practices: Automate security checks, code analysis, and deployment processes to ensure consistency and reduce human error.

- Monitor and Log Everything: Implement comprehensive monitoring and logging to detect and respond to security incidents.

- Keep Dependencies Updated: Regularly update all dependencies, including libraries and frameworks, to patch security vulnerabilities.

- Follow the Principle of Least Privilege: Grant services and users only the minimum necessary permissions to perform their tasks.

- Use API Gateways: Use API gateways to manage and secure access to microservices.
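As one small illustration of the secure configuration practice in the list above, a service can read its secrets from the environment at startup and fail fast when one is missing, rather than falling back to a hardcoded default. This is a minimal sketch; `load_secret`, `MissingSecretError`, and the variable name `DB_PASSWORD` are hypothetical, and a real deployment might read from a dedicated secret manager instead.

```python
import os
from typing import Mapping


class MissingSecretError(RuntimeError):
    """Raised at startup when a required secret is not configured."""


def load_secret(name: str, environ: Mapping[str, str] = os.environ) -> str:
    """Return the named secret from the environment, or fail fast."""
    value = environ.get(name, "").strip()
    if not value:
        # Name the variable, but never log or echo a secret's value.
        raise MissingSecretError(f"required secret {name} is not set")
    return value
```

Calling `load_secret("DB_PASSWORD")` during startup stops a misconfigured service immediately, instead of letting it fail later on its first database call. The `environ` parameter exists so that tests can pass a plain dictionary.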

Conclusion

Successfully managing microservices complexity demands a thoughtful approach that encompasses architecture, communication, operational practices, and security. By understanding the nuances of decomposition, service discovery, API gateways, and the importance of observability, you can build a robust and scalable system. Embracing best practices for distributed tracing, versioning, and security will further fortify your microservices architecture. The journey to microservices is challenging, but with the right knowledge and strategies, you can unlock its transformative power.

Top FAQs

What is the biggest challenge in microservices?

The biggest challenge is managing the inherent complexity of a distributed system. This includes communication overhead, data consistency, service discovery, and operational complexities like monitoring and tracing.

How do you choose the right communication pattern?

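The choice depends on the requirements. Synchronous communication (such as HTTP or gRPC request/response) suits operations where the caller needs an immediate answer. Asynchronous communication, using message queues or event streams, is better for decoupling services, absorbing high-volume traffic, and handling work that does not need an immediate response.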

What is the role of an API gateway?

An API gateway acts as a single entry point for clients, handling routing, authentication, rate limiting, and other cross-cutting concerns, simplifying client interactions with microservices.

How can I monitor the performance of my microservices?

Monitor key metrics like latency, error rates, and throughput. Implement distributed tracing to track requests across services and use centralized logging and alerting tools.

How can I secure my microservices?

Implement centralized authentication with token-based authorization, encrypt inter-service communication with TLS (or mutual TLS), grant each service only the permissions it needs, and keep secrets and dependencies under regular review.