The fan-out pattern distributes a single incoming event to multiple workers that process it in parallel, which improves scalability and throughput in distributed systems. It is well suited to scenarios that demand high throughput and responsiveness, such as processing large datasets, handling multiple API requests concurrently, or distributing tasks across microservices.

The fan-out pattern, when implemented with serverless technologies, offers significant advantages over traditional approaches. By leveraging serverless services, developers can eliminate the complexities of infrastructure management, reduce operational costs, and achieve unparalleled scalability. This guide delves into the intricacies of designing, implementing, and optimizing a serverless fan-out architecture, providing a clear path for harnessing its potential.

Introduction to the Fan-Out Pattern with Serverless

The fan-out pattern, in a serverless architecture, is a design strategy that involves distributing a single incoming event or request to multiple downstream services or functions concurrently. This approach leverages the scalability and concurrency inherent in serverless platforms, allowing for parallel processing and significantly improved performance compared to sequential execution. It is particularly well-suited for scenarios where a task can be logically broken down into independent sub-tasks that can be executed in parallel.

Core Concept of the Fan-Out Pattern in a Serverless Context

The core concept revolves around an event trigger that initiates the fan-out process. This trigger, often a message queue or an API Gateway, receives an initial event. The fan-out function then analyzes this event and dispatches it to multiple other serverless functions, known as workers or consumers. Each worker processes a portion of the original request independently. The pattern’s efficiency lies in the parallel execution of these worker functions, dramatically reducing the overall processing time.

The fan-out function may optionally aggregate the results from the worker functions, providing a consolidated response.
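The dispatch-then-aggregate idea can be tried locally before any cloud service is involved. The following is only a minimal sketch: the three worker functions are hypothetical stand-ins for independent serverless functions, and a thread pool plays the role of the platform running them in parallel.

```python
from concurrent.futures import ThreadPoolExecutor


def resize(image: str) -> str:
    # Hypothetical worker: stands in for an independent serverless function.
    return f"{image}:resized"


def thumbnail(image: str) -> str:
    return f"{image}:thumbnail"


def extract_metadata(image: str) -> str:
    return f"{image}:metadata"


WORKERS = [resize, thumbnail, extract_metadata]


def fan_out(event: str) -> list[str]:
    """Dispatch one event to every worker in parallel, then aggregate the results."""
    with ThreadPoolExecutor(max_workers=len(WORKERS)) as pool:
        futures = [pool.submit(worker, event) for worker in WORKERS]
        # Collect results in submission order so the output is deterministic.
        return [future.result() for future in futures]


print(fan_out("photo.jpg"))
```

In a real deployment the workers would not share a process, so the aggregation step usually reads results from a data store or a response queue instead of from in-memory futures.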

Real-World Scenarios Benefiting from the Fan-Out Pattern

The fan-out pattern finds application in a variety of real-world scenarios, particularly those involving data processing, content creation, and event-driven architectures.

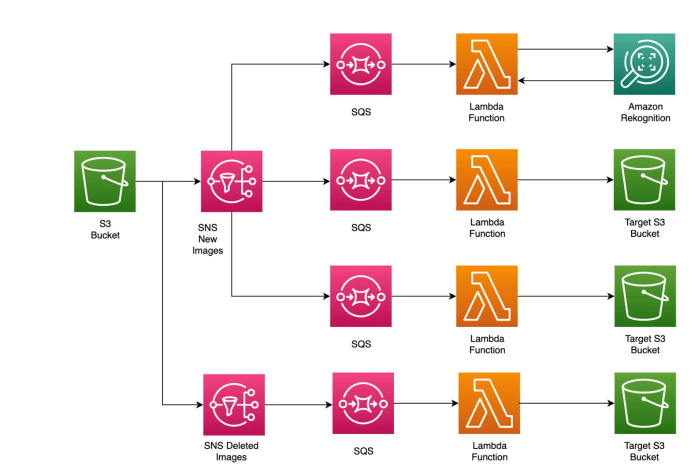

- Image Processing: When a user uploads an image, the fan-out function can trigger multiple worker functions to perform tasks such as resizing the image for different resolutions, generating thumbnails, and extracting metadata. Each of these tasks can be performed concurrently, significantly reducing the time required to process the image compared to sequential processing.

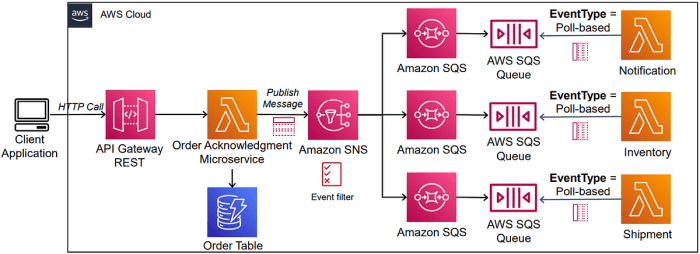

- Order Processing: In an e-commerce application, a new order event can trigger a fan-out process. This process can dispatch events to functions responsible for tasks like inventory updates, payment processing, and sending notifications to the customer and the fulfillment center. Parallel execution ensures quicker order fulfillment.

- Data Analysis and Reporting: When analyzing a large dataset, the fan-out pattern can be used to distribute the data across multiple functions, each responsible for processing a subset of the data. This parallel processing accelerates the analysis and allows for faster generation of reports and insights.

- Notification Systems: For applications that need to send notifications to multiple channels (e.g., email, SMS, push notifications), the fan-out pattern can be used to dispatch notification events to different worker functions, each handling a specific channel. This ensures that notifications are delivered efficiently across all channels.

Advantages of Using the Fan-Out Pattern Over Traditional Methods

The fan-out pattern offers several advantages over traditional methods, particularly in terms of scalability, cost-efficiency, and fault tolerance.

- Scalability: Serverless platforms automatically scale the number of function instances based on the incoming workload. The fan-out pattern inherently benefits from this auto-scaling, allowing the system to handle a high volume of events without manual intervention. In contrast, traditional systems often require manual scaling of resources, which can be time-consuming and inefficient.

- Concurrency: By executing tasks in parallel, the fan-out pattern significantly reduces processing time. This is especially beneficial for tasks that can be broken down into independent sub-tasks. Traditional methods often process tasks sequentially, leading to longer processing times.

- Cost Efficiency: Serverless platforms typically offer a pay-per-use pricing model, so workers cost nothing while idle and no capacity has to be provisioned for peak load. Note that parallelism shortens wall-clock time rather than billed compute time: total cost still scales with the number of invocations and their combined duration.

- Fault Tolerance: In a fan-out pattern, if one worker function fails, it does not necessarily affect the other worker functions. The platform often provides mechanisms for retrying failed function invocations. This inherent fault tolerance improves the reliability of the system compared to traditional systems where a single point of failure can bring down the entire process.

- Simplified Development: Serverless platforms provide managed services for event triggers, message queues, and function execution, simplifying the development and deployment of fan-out patterns. Developers can focus on writing the business logic of the worker functions without managing the underlying infrastructure.

Serverless Technologies for Fan-Out Implementation

Implementing the fan-out pattern with serverless technologies leverages the inherent benefits of these platforms, such as automatic scaling and pay-per-use pricing. The choice of serverless platform significantly impacts the performance, cost, and scalability of the solution. Selecting the appropriate service involves understanding the nuances of each platform, considering factors like cold start times, concurrency limits, and integration capabilities with other services.

This section delves into the suitability of various serverless offerings for fan-out implementations, compares their cost structures, and analyzes their scalability characteristics.

Suitable Serverless Services for Fan-Out

Several serverless platforms provide the necessary functionality for effectively implementing the fan-out pattern. The primary services used for this purpose are function-as-a-service (FaaS) offerings, which handle the core processing logic, and message queuing or event streaming services, which manage the distribution of events to the functions.

- AWS Lambda: Amazon Web Services (AWS) Lambda is a widely adopted FaaS platform. It integrates seamlessly with other AWS services, such as Simple Queue Service (SQS), Simple Notification Service (SNS), and EventBridge, making it well-suited for fan-out implementations. Lambda’s event-driven architecture allows it to be triggered by various sources, including messages from queues or events from event buses.

- Azure Functions: Microsoft Azure Functions provides a similar FaaS offering to AWS Lambda. It supports various trigger types, including queues (Azure Storage Queues, Service Bus Queues), and event hubs, and integrates well with other Azure services. Azure Functions is a viable alternative for fan-out implementations, especially within the Azure ecosystem.

- Google Cloud Functions: Google Cloud Functions is Google Cloud’s FaaS offering. It supports triggers from Cloud Pub/Sub, Cloud Storage, and other Google Cloud services. Cloud Functions is designed to be highly scalable and can be a suitable choice for fan-out scenarios within the Google Cloud environment.

- EventBridge/SNS/SQS (AWS): These AWS services, when used in conjunction with Lambda, facilitate the fan-out pattern. EventBridge acts as an event bus, routing events to multiple targets, including Lambda functions. SNS provides a publish-subscribe mechanism for distributing messages to subscribers (e.g., Lambda functions), and SQS allows for decoupling components by queuing messages.

- Azure Event Grid/Service Bus/Storage Queues: Similar to AWS, Azure offers a suite of services that work together for fan-out. Event Grid acts as an event routing service. Service Bus and Storage Queues provide message queuing capabilities.

- Cloud Pub/Sub: Google Cloud Pub/Sub is a fully managed, real-time messaging service. It is designed to handle high-volume message ingestion and distribution. It can be used as a central component for the fan-out pattern, distributing messages to multiple Cloud Functions instances.

Cost Implications of Serverless Services

The cost of serverless services is primarily determined by the number of function invocations, the duration of execution, and the resources consumed (e.g., memory). Each platform employs a different pricing model, which can influence the overall cost of a fan-out implementation.

- AWS Lambda: AWS Lambda’s pricing is based on the number of requests and the duration of each execution. There is a free tier that covers a certain number of requests and compute time per month. Costs are incurred for compute time (measured in milliseconds) and the amount of memory allocated to the function. Pricing can be optimized by choosing the right memory allocation and optimizing the function code for faster execution.

- Azure Functions: Azure Functions pricing also follows a pay-per-use model. It offers a consumption plan, where you pay only for the compute resources your functions use, and a premium plan, which provides dedicated resources and higher execution limits. The cost depends on the number of executions, execution time, and memory consumption.

- Google Cloud Functions: Google Cloud Functions’ pricing is based on the number of invocations, compute time, and memory consumption. Google Cloud offers a free tier for a certain number of invocations and compute time per month. Pricing is calculated per GB-second, and optimizing the code for faster execution directly impacts the cost.

- Message Queuing Services: Services like SQS, Azure Service Bus, and Cloud Pub/Sub have their own pricing models. These often involve costs per message, storage costs, and transfer costs. Choosing the right queueing service and configuring it appropriately can influence the overall cost of the fan-out solution. For example, using a FIFO (First-In, First-Out) queue in SQS might incur additional charges compared to a standard queue.

- Cost Optimization Strategies:

  - Function Optimization: Optimizing the function code to reduce execution time is critical. This includes techniques such as efficient code design, optimized libraries, and minimizing external dependencies.

  - Memory Allocation: Choosing the appropriate memory allocation for each function is essential. Over-allocating memory increases costs, while under-allocating can lead to performance issues.

  - Batching: For queue-based triggers, batching multiple messages into a single function invocation can reduce the number of invocations and potentially lower costs.

  - Monitoring and Alerting: Implementing monitoring and alerting helps to identify and address cost anomalies or unexpected usage patterns.
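To make the pricing model concrete, the sketch below estimates the cost of one function from invocations, duration, and memory. It assumes a simple "GB-seconds plus per-request fee" model and ignores free tiers; the rates are parameters on purpose, and the numbers in the example call are placeholders rather than any provider's actual prices.

```python
def estimate_monthly_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Estimate compute + request cost for one function, ignoring any free tier."""
    # GB-seconds = invocations x duration in seconds x memory in GB
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = (invocations / 1_000_000) * price_per_million_requests
    return compute_cost + request_cost


# Placeholder rates for illustration only; look up current prices for your platform.
cost = estimate_monthly_cost(
    invocations=1_000_000,
    avg_duration_ms=200,
    memory_mb=512,
    price_per_gb_second=0.00002,
    price_per_million_requests=0.25,
)
print(f"estimated cost: {cost:.2f}")
```

Because the compute term multiplies duration by memory, doubling memory only pays off if it cuts execution time by more than half.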

Scalability Characteristics for Fan-Out Scenarios

Scalability is a crucial factor in fan-out implementations, as the system needs to handle a potentially large volume of incoming events and distribute them to multiple processing functions. Serverless platforms are designed with scalability in mind, but there are nuances to consider.

- AWS Lambda: AWS Lambda automatically scales by provisioning more instances of the function to handle the incoming load. The scalability is generally excellent, but there are account-level concurrency limits that can be adjusted. Lambda’s scaling is often triggered by the arrival of events from the trigger source, such as SQS or SNS.

- Azure Functions: Azure Functions also provides automatic scaling. The platform dynamically allocates resources to handle the workload. Azure Functions’ scaling behavior is dependent on the chosen plan (Consumption, Premium, or App Service plan). The Consumption plan provides the most automatic scaling, while the Premium and App Service plans offer more control over the scaling behavior.

- Google Cloud Functions: Google Cloud Functions scales automatically based on the incoming traffic. Google Cloud manages the scaling of the function instances to match the demand. Cloud Functions offers high scalability and can handle significant workloads.

- Concurrency Limits: Each serverless platform has concurrency limits that define the maximum number of function instances that can run simultaneously. Understanding these limits is crucial for designing a scalable fan-out solution. For example, AWS Lambda has a default concurrency limit per region, which can be increased upon request.

- Cold Starts: Cold starts, where a function instance needs to be initialized before processing an event, can impact performance. The duration and frequency of cold starts depend on factors such as function code size, the selected runtime, and the platform’s caching mechanisms. Minimizing cold start times is important for achieving low-latency processing.

- Queueing Service Scalability: The scalability of the message queueing or event streaming service is also crucial. Services like SQS, Azure Service Bus, and Cloud Pub/Sub are designed to handle high throughput. They can scale to accommodate the volume of events, ensuring that the fan-out process does not become a bottleneck.
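A quick way to check a design against a concurrency limit is to estimate how many worker executions are in flight at once: arrival rate multiplied by average duration. The sketch below applies that rule to a fan-out; the function names, the 20% headroom, and the limit used in the example are assumptions for illustration.

```python
import math


def required_concurrency(events_per_second: float, fan_out_factor: int, avg_duration_s: float) -> int:
    """In-flight executions = worker invocations per second x average duration."""
    worker_invocations_per_second = events_per_second * fan_out_factor
    return math.ceil(worker_invocations_per_second * avg_duration_s)


def fits_within_limit(needed: int, concurrency_limit: int, headroom: float = 0.2) -> bool:
    """Keep a safety margin (headroom) below the account or function limit."""
    return needed <= concurrency_limit * (1 - headroom)


# 50 events/s, each fanned out to 4 workers that run about 0.5 s each.
needed = required_concurrency(events_per_second=50, fan_out_factor=4, avg_duration_s=0.5)
print(needed, fits_within_limit(needed, concurrency_limit=1000))
```

If the estimate approaches the limit, placing a queue between the fan-out function and the workers lets the backlog absorb bursts instead of causing throttled invocations.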

Designing the Fan-Out Architecture

Designing a robust fan-out architecture is crucial for efficiently distributing tasks and processing data in a serverless environment. This section details the architectural components and their interactions, focusing on the event flow, error handling, and retry mechanisms necessary for reliable operation. A well-designed architecture ensures scalability, fault tolerance, and optimal resource utilization.

Basic Fan-Out Architecture Diagram

The fundamental fan-out architecture leverages several serverless components to orchestrate the event distribution and processing. The diagram illustrates the flow of events from a trigger to individual processing functions. The architecture typically comprises these components:

- Trigger: The entry point for the event. This can be an API Gateway, an EventBridge rule, an S3 event, or any other service that initiates the workflow.

- EventBridge/SNS Topic: The event bus that receives events from the trigger and distributes them to multiple consumers. EventBridge offers advanced filtering and routing capabilities, while SNS provides a simpler publish/subscribe model.

- Processing Functions (Lambda Functions): Individual functions that perform specific tasks based on the received event. Each function operates independently, allowing for parallel processing.

- Data Storage (e.g., DynamoDB, S3): Stores the processed data or intermediate results.

- Monitoring and Logging (e.g., CloudWatch): Collects metrics and logs, and provides insights into the system’s performance and health.

The flow of events begins when the trigger activates. The trigger publishes an event to EventBridge or SNS. EventBridge, based on configured rules, routes the event to multiple Lambda functions concurrently. SNS directly publishes the event to subscribed Lambda functions. Each Lambda function processes the event independently and potentially stores the results in data storage. Monitoring and logging are integrated to track the overall process.

Flow of Events from Trigger to Processing Functions

Understanding the event flow is key to designing an efficient and reliable fan-out system. The following steps outline the event’s journey from the trigger to the individual processing functions. The event flow follows these stages:

1. Event Initiation: The trigger, such as an API Gateway receiving an HTTP request or an S3 object creation event, initiates the process. The event contains the data to be processed.

2. Event Publication: The trigger publishes the event to the event bus (EventBridge or SNS). The event bus acts as a central point for distributing events to subscribers.

3. Event Routing/Subscription: EventBridge uses rules to route events based on their content (e.g., event type, data fields). SNS sends the event to all subscribed endpoints.

4. Function Invocation: The event bus triggers the execution of multiple Lambda functions concurrently. Each function receives a copy of the event.

5. Parallel Processing: Each Lambda function processes its assigned portion of the data independently. This parallel execution is the core benefit of the fan-out pattern, enabling faster processing times.

6. Data Storage and Completion: Each Lambda function processes its data and stores the results in a data store.

7. Monitoring and Logging: Throughout the process, metrics and logs are collected to monitor performance, identify errors, and track the progress of the overall operation.
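The publish, route, and invoke stages can be illustrated with a toy in-memory event bus. This is a sketch for reasoning about the flow only: the `InMemoryEventBus` class is hypothetical, is not an AWS API, and delivers events one after another rather than concurrently.

```python
from typing import Callable

Event = dict
Rule = Callable[[Event], bool]
Handler = Callable[[Event], None]


class InMemoryEventBus:
    """Toy stand-in for an event bus with content-based routing rules."""

    def __init__(self) -> None:
        self._routes: list[tuple[Rule, Handler]] = []

    def subscribe(self, rule: Rule, handler: Handler) -> None:
        self._routes.append((rule, handler))

    def publish(self, event: Event) -> int:
        """Deliver a copy of the event to every handler whose rule matches."""
        delivered = 0
        for rule, handler in self._routes:
            if rule(event):
                handler(dict(event))  # each consumer gets its own copy
                delivered += 1
        return delivered


inventory_events: list[Event] = []
all_events: list[Event] = []

bus = InMemoryEventBus()
# Rule-based routing: only order events reach the inventory consumer.
bus.subscribe(lambda e: e.get("type") == "order.created", inventory_events.append)
# Subscribe-to-everything routing: this consumer receives every event.
bus.subscribe(lambda e: True, all_events.append)

print(bus.publish({"type": "order.created", "order_id": "A1"}))
print(bus.publish({"type": "user.signed_up", "user_id": "U7"}))
```

The first subscription mirrors rule-based routing on event content, while the second mirrors a topic that forwards every message to each subscriber.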

Logical Flow Diagram for Error Handling and Retries

Implementing robust error handling and retry mechanisms is critical for building a resilient fan-out architecture. The following diagram illustrates the logical flow for handling errors and retries. The error handling and retry process encompasses the following stages:

1. Function Execution: Each Lambda function attempts to process the event.

2. Error Detection: If an error occurs during function execution (e.g., an exception, network issue), the function signals an error.

3. Error Handling: The function catches the error and logs the details, including the event data, error type, and timestamp.

4. Retry Mechanism: Based on the error type, a retry mechanism is triggered. This can be implemented using the Lambda function’s built-in retry behavior, a Dead Letter Queue (DLQ) with a retry policy, or custom retry logic within the function.

   - Lambda Function Retries: Lambda functions have built-in retry mechanisms. If an error occurs, the function can be retried automatically, depending on the error type and configured retry settings.

   - Dead Letter Queue (DLQ): If the function fails repeatedly, the event is sent to a DLQ. The DLQ can be processed separately, allowing for manual intervention or further analysis.

   - Custom Retry Logic: Custom retry logic can be implemented within the function, allowing for more sophisticated retry strategies, such as exponential backoff.

5. Event Persistence: The event data is persisted to ensure that the event is not lost. This may involve storing the event data in a data store or using a DLQ.

6. Retry Delay: A delay is implemented between retries to avoid overwhelming downstream services. This delay can be fixed or follow an exponential backoff strategy.

7. Success or Failure: If the function succeeds after retries, the process is considered successful. If retries are exhausted, the event is considered a failure, and the failure is logged.

8. Monitoring and Alerting: Monitoring and alerting are used to track the error rates, retry attempts, and overall health of the fan-out process. Alerts can be configured to notify operators of critical errors.

This architecture ensures that transient errors are handled gracefully and that critical events are not lost, thereby increasing the reliability and robustness of the serverless fan-out system.
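The custom retry path (retry with a growing delay, then hand the event to a dead-letter destination) can be sketched in a few lines. The names below are hypothetical: `handler` stands for the worker logic, `dead_letter` for whatever persists failed events, and `sleep` is injectable so the backoff can be tested without waiting.

```python
import time
from typing import Callable


def process_with_retries(
    event: dict,
    handler: Callable[[dict], None],
    dead_letter: Callable[[dict, Exception], None],
    max_attempts: int = 3,
    base_delay_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Return True on success; after max_attempts failures, dead-letter the event."""
    for attempt in range(1, max_attempts + 1):
        try:
            handler(event)
            return True
        except Exception as exc:  # real code should retry only transient error types
            print(f"attempt {attempt} failed: {exc!r}")
            if attempt == max_attempts:
                # Retries exhausted: persist the event so it is not lost.
                dead_letter(event, exc)
                return False
            # Exponential backoff: base, 2x base, 4x base, ...
            sleep(base_delay_s * 2 ** (attempt - 1))
    return False
```

When the platform's built-in retries and DLQ configuration are sufficient, they are usually preferable to in-function sleeping, because time spent waiting inside a function is billed.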

Event Triggering Mechanisms

Event triggering mechanisms are the foundation upon which a serverless fan-out architecture operates. The choice of trigger significantly impacts the system’s responsiveness, scalability, and cost-efficiency. Selecting the appropriate trigger type requires careful consideration of the application’s specific requirements, including the expected event volume, latency sensitivity, and the nature of the source events. This section delves into various event triggers suitable for initiating a fan-out process, detailing their configurations and providing practical code examples.

HTTP Requests

HTTP requests serve as a direct and synchronous trigger for fan-out processes. This approach is ideal when the initiating event is a user action, an API call, or any situation requiring an immediate response. However, the synchronous nature implies that the initiating service waits for the fan-out process to complete, potentially impacting response times.

To configure an HTTP request trigger, a serverless function (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) needs to be exposed via an HTTP endpoint. This typically involves:

- API Gateway Configuration: Setting up an API gateway (e.g., AWS API Gateway, Azure API Management, Google Cloud Endpoints) to receive incoming HTTP requests. This gateway handles routing, authentication, authorization, and rate limiting.

- Function Association: Configuring the API gateway to invoke the serverless function upon receiving a request. The function receives the request payload, headers, and other relevant information.

- Request Handling: Within the serverless function, the incoming request is parsed, and the fan-out logic is initiated. This might involve publishing messages to a message queue, triggering other serverless functions, or writing data to a database.

Here’s an example of configuring an HTTP trigger using AWS CloudFormation:

```yaml
Resources:
  # Queue that receives the fan-out messages (referenced by the IAM policy below)
  MyQueue:
    Type: AWS::SQS::Queue

  MyFanOutFunction:
    Type: AWS::Lambda::Function
    Properties:
      FunctionName: MyFanOutFunction
      Handler: index.handler
      Runtime: nodejs18.x
      Role: !GetAtt LambdaExecutionRole.Arn
      Code:
        # Placeholder handler; replace with the real fan-out logic
        ZipFile: |
          exports.handler = async () => ({ statusCode: 200, body: "ok" });

  LambdaExecutionRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service: lambda.amazonaws.com
            Action: sts:AssumeRole
      Policies:
        - PolicyName: root
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - logs:CreateLogGroup
                  - logs:CreateLogStream
                  - logs:PutLogEvents
                Resource: 'arn:aws:logs:*:*:*'
              - Effect: Allow
                Action:
                  - sqs:SendMessage
                Resource: !GetAtt MyQueue.Arn

  MyApiGateway:
    Type: AWS::ApiGateway::RestApi
    Properties:
      Name: MyFanOutApi

  MyApiGatewayResource:
    Type: AWS::ApiGateway::Resource
    Properties:
      ParentId: !GetAtt MyApiGateway.RootResourceId
      PathPart: fanout
      RestApiId: !Ref MyApiGateway

  MyApiGatewayMethod:
    Type: AWS::ApiGateway::Method
    Properties:
      HttpMethod: POST
      ResourceId: !Ref MyApiGatewayResource
      RestApiId: !Ref MyApiGateway
      AuthorizationType: NONE
      Integration:
        Type: AWS_PROXY
        IntegrationHttpMethod: POST
        Uri: !Sub "arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${MyFanOutFunction.Arn}/invocations"

  # Allows API Gateway to invoke the function
  ApiGatewayInvokePermission:
    Type: AWS::Lambda::Permission
    Properties:
      Action: lambda:InvokeFunction
      FunctionName: !Ref MyFanOutFunction
      Principal: apigateway.amazonaws.com
      SourceArn: !Sub "arn:aws:execute-api:${AWS::Region}:${AWS::AccountId}:${MyApiGateway}/*/POST/fanout"

  MyApiGatewayDeployment:
    Type: AWS::ApiGateway::Deployment
    DependsOn: MyApiGatewayMethod
    Properties:
      RestApiId: !Ref MyApiGateway

  MyApiGatewayStage:
    Type: AWS::ApiGateway::Stage
    Properties:
      DeploymentId: !Ref MyApiGatewayDeployment
      RestApiId: !Ref MyApiGateway
      StageName: prod

Outputs:
  ApiEndpoint:
    Description: "API Endpoint"
    Value: !Sub "https://${MyApiGateway}.execute-api.${AWS::Region}.amazonaws.com/prod/fanout"
```

This CloudFormation template defines a Lambda function, an IAM role for the function, an SQS queue the function is allowed to send messages to, and an API Gateway with permission to invoke the function. The API Gateway exposes a POST endpoint at `/fanout` that triggers the Lambda function. The inline handler is only a placeholder: the real `MyFanOutFunction` would contain the logic to parse the incoming request and initiate the fan-out process, such as sending messages to the SQS queue. The `Outputs` section provides the endpoint URL for accessing the API.
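One way to keep an HTTP-triggered fan-out responsive is to enqueue the work and answer with `202 Accepted` rather than waiting for the workers. The sketch below assumes a Lambda proxy-style event whose JSON body contains an `items` list; `make_handler` and the `enqueue` callable are hypothetical names, with `enqueue` standing in for a call that puts one task on a queue or topic.

```python
import json
from typing import Callable


def make_handler(enqueue: Callable[[dict], None]):
    """Build a Lambda-style handler around a hypothetical `enqueue` hook."""

    def handler(event: dict, context: object = None) -> dict:
        try:
            payload = json.loads(event.get("body") or "")
            items = payload["items"]
        except (json.JSONDecodeError, KeyError, TypeError):
            # Reject malformed requests before any work is fanned out.
            return {"statusCode": 400, "body": json.dumps({"error": "invalid request body"})}

        for item in items:
            enqueue(item)

        # 202 Accepted: the work was queued, not completed.
        return {"statusCode": 202, "body": json.dumps({"queued": len(items)})}

    return handler


queued: list[dict] = []
handler = make_handler(queued.append)
print(handler({"body": json.dumps({"items": [{"item_id": 1}, {"item_id": 2}]})}))
```

Injecting `enqueue` keeps the handler testable without cloud credentials; in a deployment it would wrap the queue or topic client.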

Message Queues

Message queues, such as Amazon SQS, Azure Service Bus, or Google Cloud Pub/Sub, offer an asynchronous and highly scalable trigger mechanism. This approach decouples the event producer from the consumer (the fan-out process), allowing for independent scaling and improved fault tolerance.

Setting up a message queue trigger involves:

- Queue Creation: Creating a message queue within the chosen cloud provider.

- Message Publishing: Configuring the event producer (e.g., another serverless function, a batch process) to publish messages to the queue. These messages contain the data needed for the fan-out process.

- Function Subscription: Configuring a serverless function to subscribe to the queue. The function is triggered whenever a new message arrives.

- Message Processing: Within the serverless function, the message is retrieved, and the fan-out logic is executed. This might involve processing the message payload and invoking other serverless functions or services.

Here’s an example using Terraform to configure an SQS queue and a Lambda function triggered by it:

```terraform
resource "aws_sqs_queue" "fanout_queue" {
  name                       = "fanout-queue"
  visibility_timeout_seconds = 300
}

resource "aws_iam_role" "lambda_role" {
  name = "lambda_role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17",
    Statement = [
      {
        Action    = "sts:AssumeRole",
        Principal = { Service = "lambda.amazonaws.com" },
        Effect    = "Allow",
        Sid       = ""
      }
    ]
  })
}

# Grants CloudWatch Logs access plus the SQS receive/delete permissions
# that the event source mapping needs to poll the queue
resource "aws_iam_role_policy_attachment" "lambda_policy" {
  role       = aws_iam_role.lambda_role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaSQSQueueExecutionRole"
}

resource "aws_lambda_function" "fanout_lambda" {
  function_name = "fanout-lambda"
  handler       = "index.handler"
  runtime       = "nodejs18.x"
  role          = aws_iam_role.lambda_role.arn
  filename      = "lambda_function_payload.zip" # Assuming a zip file containing your Lambda code
}

resource "aws_lambda_event_source_mapping" "sqs_trigger" {
  function_name    = aws_lambda_function.fanout_lambda.function_name
  event_source_arn = aws_sqs_queue.fanout_queue.arn
  batch_size       = 10 # Deliver up to 10 messages per invocation
}
```

This Terraform configuration creates an SQS queue (`fanout_queue`), a Lambda function (`fanout_lambda`), and the necessary IAM role and permissions. The `aws_lambda_event_source_mapping` resource establishes the link between the SQS queue and the Lambda function: Lambda polls the queue and invokes the function when messages are available. The `batch_size` parameter sets the maximum number of messages delivered to the function in a single invocation. The `lambda_function_payload.zip` file would contain the code to process the messages from the queue and perform the fan-out operations.
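On the consuming side, a function triggered by SQS receives a batch of records under the `Records` key. The sketch below processes each record and reports only the failed ones back, so that successful messages in the same batch are not retried. It assumes partial batch responses (`ReportBatchItemFailures`) are enabled on the event source mapping, which the configuration above does not set; `process_task` is hypothetical business logic.

```python
import json


def process_task(task: dict) -> None:
    # Hypothetical business logic; raises to signal a failed task.
    if task.get("quantity", 0) <= 0:
        raise ValueError(f"invalid quantity in task {task}")


def handler(event: dict, context: object = None) -> dict:
    """Process each SQS record and report the failed ones back to Lambda."""
    failures = []
    for record in event.get("Records", []):
        try:
            process_task(json.loads(record["body"]))
        except Exception as exc:
            print(f"record {record['messageId']} failed: {exc!r}")
            failures.append({"itemIdentifier": record["messageId"]})
    # An empty list means the whole batch succeeded.
    return {"batchItemFailures": failures}


sample_event = {
    "Records": [
        {"messageId": "m-1", "body": json.dumps({"item_id": "sku-1", "quantity": 2})},
        {"messageId": "m-2", "body": json.dumps({"item_id": "sku-2", "quantity": 0})},
    ]
}
print(handler(sample_event))
```

Without partial batch responses, a single failing record causes the whole batch to become visible on the queue again, so workers should be idempotent either way.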

Scheduled Events

Scheduled events, often implemented using a cron-like syntax, provide a mechanism to trigger fan-out processes at regular intervals. This approach is suitable for tasks that need to be performed periodically, such as batch processing, data aggregation, or report generation.

Configuring scheduled events typically involves:

- Scheduler Configuration: Using a scheduler service (e.g., AWS CloudWatch Events/EventBridge, Azure Logic Apps, Google Cloud Scheduler) to define the schedule (e.g., every hour, daily at midnight).

- Target Definition: Specifying the target to be invoked when the schedule triggers. This is usually a serverless function.

- Event Payload (Optional): Defining an optional event payload to be passed to the target function. This payload can contain configuration parameters or data required for the scheduled task.

Here’s an example using Azure Resource Manager (ARM) templates to set up a scheduled function trigger:

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "functionAppName": {
      "type": "string",
      "defaultValue": "myfanoutfunctionapp",
      "metadata": {
        "description": "Name of the Function App"
      }
    },
    "functionName": {
      "type": "string",
      "defaultValue": "FanOutFunction",
      "metadata": {
        "description": "Name of the function to trigger"
      }
    },
    "scheduleCronExpression": {
      "type": "string",
      "defaultValue": "0 0 * * * *",
      "metadata": {
        "description": "Cron expression for the schedule (default runs every hour at the top of the hour)"
      }
    }
  },
  "resources": [
    {
      "type": "Microsoft.Web/sites",
      "apiVersion": "2022-03-01",
      "name": "[parameters('functionAppName')]",
      "location": "[resourceGroup().location]",
      "kind": "functionapp",
      "properties": {
        "reserved": true,
        "siteConfig": {
          "appSettings": [
            {
              "name": "FUNCTIONS_EXTENSION_VERSION",
              "value": "~4"
            },
            {
              "name": "WEBSITE_RUN_FROM_PACKAGE",
              "value": "1"
            }
          ]
        }
      }
    },
    {
      "type": "Microsoft.Web/sites/functions",
      "apiVersion": "2022-03-01",
      "name": "[concat(parameters('functionAppName'), '/', parameters('functionName'))]",
      "location": "[resourceGroup().location]",
      "dependsOn": [
        "[resourceId('Microsoft.Web/sites', parameters('functionAppName'))]"
      ],
      "properties": {
        "config": {
          "bindings": [
            {
              "type": "timerTrigger",
              "direction": "in",
              "name": "myTimer",
              "schedule": "[parameters('scheduleCronExpression')]",
              "runOnStartup": false
            }
          ]
        }
      }
    }
  ]
}
```

This ARM template defines an Azure Function App and a function within it. The function is configured with a timer trigger (`timerTrigger`) using the `scheduleCronExpression` parameter, which specifies the cron schedule. The function code itself (not shown here) would contain the fan-out logic to be executed at the scheduled intervals. The template deploys the infrastructure, and the function is automatically triggered according to the cron expression. For example, the default `0 0 * * * *`, written in the six-field NCRONTAB format that Azure Functions timer triggers use (seconds first), runs the function every hour at the top of the hour.
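The fan-out logic that a scheduled function runs often amounts to planning independent tasks for the period that just ended and dispatching them. The following Python sketch shows one possible shape; the region list, the task fields, and the `dispatch` callable are all hypothetical, and the platform-specific timer binding is deliberately left out.

```python
from datetime import date, timedelta
from typing import Callable


def build_report_tasks(run_date: date, regions: list[str]) -> list[dict]:
    """Plan one independent task per region, covering the day before run_date."""
    report_date = (run_date - timedelta(days=1)).isoformat()
    return [{"report_date": report_date, "region": region} for region in regions]


def on_schedule(run_date: date, regions: list[str], dispatch: Callable[[dict], None]) -> int:
    """Called by the timer trigger: fan the planned tasks out and return the count."""
    tasks = build_report_tasks(run_date, regions)
    for task in tasks:
        dispatch(task)
    return len(tasks)


sent: list[dict] = []
print(on_schedule(date(2024, 3, 1), ["eu", "us"], sent.append))
print(sent)
```

Deriving the task list from the run date alone keeps a scheduled run repeatable: re-running it for the same date produces the same tasks.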

Implementing the Fan-Out Logic

The core of the fan-out pattern lies in efficiently distributing a single incoming event to multiple downstream processes. This section focuses on the practical implementation of this logic, detailing the methods for event splitting, task distribution, and providing code examples in different programming languages to illustrate the concepts. The objective is to ensure that events are processed concurrently and reliably, maximizing the benefits of serverless architectures.

Splitting Incoming Events into Parallel Tasks

Breaking down an incoming event into smaller, independent tasks is the first step in the fan-out process. This typically involves parsing the event data and identifying the individual units of work that need to be performed. The granularity of these tasks depends on the specific application and the nature of the event.

The following considerations are crucial when splitting an event:

- Event Structure Analysis: Understanding the structure of the incoming event is paramount. This involves identifying the relevant data fields and their relationships. For example, an event representing an order might contain information about multiple line items, each of which could be processed as a separate task.

- Task Definition: Define the individual tasks based on the event data. Each task should be self-contained and independent of the others. This allows for parallel processing and simplifies error handling. For instance, each line item in an order could be a task to update inventory, calculate taxes, or send a notification.

- Task Serialization: The process of converting the extracted data into a format suitable for the worker functions is essential. This may involve creating separate messages, data structures, or payloads that can be easily consumed by the downstream services. The format should be consistent and easy to parse on the receiving end.
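The three considerations above (analyze the structure, define self-contained tasks, serialize them) can be sketched for the order example as follows. The field names and the `task_id` scheme are assumptions for illustration, not a required format.

```python
import json


def split_order(order: dict) -> list[str]:
    """Turn one order event into one serialized, self-contained task per line item."""
    tasks = []
    for index, item in enumerate(order["items"]):
        task = {
            # Hypothetical ID scheme; a stable ID helps workers detect duplicates.
            "task_id": f"{order['order_id']}-{index}",
            "order_id": order["order_id"],
            "item_id": item["item_id"],
            "quantity": item["quantity"],
        }
        # sort_keys gives a consistent wire format that is easy to compare and parse.
        tasks.append(json.dumps(task, sort_keys=True))
    return tasks


order_event = {
    "order_id": "A100",
    "items": [
        {"item_id": "sku-1", "quantity": 2},
        {"item_id": "sku-2", "quantity": 1},
    ],
}
for serialized_task in split_order(order_event):
    print(serialized_task)
```

Each task carries everything a worker needs, so no worker has to read the original order or coordinate with its siblings.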

Methods for Distributing Tasks to Individual Worker Functions

Once the event is split into tasks, the next step is to distribute these tasks to the worker functions. Serverless platforms offer various mechanisms for achieving this, each with its own advantages and trade-offs. The choice of distribution method depends on factors such as the scale of the application, the desired level of concurrency, and the specific serverless platform being used.

Here are the common methods for distributing tasks:

- Message Queues: Using a message queue (e.g., Amazon SQS, Azure Service Bus, Google Cloud Pub/Sub) is a popular approach. The fan-out function places each task as a message on the queue. Worker functions then subscribe to the queue and consume the messages. This provides asynchronous processing and decouples the fan-out function from the worker functions.

- Event Bridges/Event Routers: Event routers (e.g., Amazon EventBridge, Azure Event Grid, Google Cloud Eventarc) can be used to route events to multiple destinations. The fan-out function publishes an event, and the router delivers it to multiple worker functions based on predefined rules. This is useful when the same event needs to trigger different actions.

- Direct Function Invocation: Some serverless platforms allow direct invocation of functions. The fan-out function can invoke multiple worker functions in parallel, passing the task data as input. This is simpler to implement but can be less scalable than using a message queue or event bridge. It’s suitable for cases where the number of worker functions is relatively small.
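For the message queue method, sending one message per API call becomes slow as the number of tasks grows. The sketch below batches tasks before sending them, assuming Amazon SQS and a boto3 SQS client passed in as `sqs_client`. The helper names `chunked` and `enqueue_tasks` are hypothetical; `send_message_batch` is the boto3 call, which accepts at most 10 entries per request and can partially fail.

```python
import json


def chunked(items, size):
    """Yield consecutive slices of `items` with at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def enqueue_tasks(sqs_client, queue_url, tasks):
    """Send tasks to SQS in batches; return the tasks that SQS rejected.

    `sqs_client` is assumed to be a boto3 SQS client (or any object with a
    compatible `send_message_batch` method).
    """
    failed = []
    # SQS accepts at most 10 entries per SendMessageBatch call.
    for batch in chunked(tasks, 10):
        entries = [
            {"Id": str(index), "MessageBody": json.dumps(task)}
            for index, task in enumerate(batch)
        ]
        response = sqs_client.send_message_batch(QueueUrl=queue_url, Entries=entries)
        # A batch call can partially fail, so inspect the "Failed" list.
        for failure in response.get("Failed", []):
            failed.append(batch[int(failure["Id"])])
    return failed
```

Returning the rejected tasks lets the caller decide whether to retry them or report an error, instead of silently dropping work.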

Code Examples of Fan-Out Logic Implementation

The following code examples demonstrate the implementation of the fan-out logic using different programming languages and approaches. These examples illustrate the key steps involved in splitting events, distributing tasks, and handling potential errors.

Python Example (using AWS Lambda and SQS)

This example demonstrates a Python-based fan-out function that processes an order event and sends messages to an SQS queue for individual order line items.

```python
import json
import boto3

sqs = boto3.client('sqs')
queue_url = 'YOUR_SQS_QUEUE_URL'  # Replace with your SQS queue URL


def lambda_handler(event, context):
    try:
        order_data = json.loads(event['body'])  # Assuming event body contains JSON order data
        for item in order_data['items']:
            message = {
                'order_id': order_data['order_id'],
                'item_id': item['item_id'],
                'quantity': item['quantity']
            }
            sqs.send_message(
                QueueUrl=queue_url,
                MessageBody=json.dumps(message)
            )
        return {
            'statusCode': 200,
            'body': json.dumps('Order processing initiated.')
        }
    except Exception as e:
        print(f"Error processing event: {e}")
        return {
            'statusCode': 500,
            'body': json.dumps('Error processing order.')
        }
```

This code receives a JSON payload, parses it, and iterates through the items, creating individual messages for each item. Each message contains relevant information for downstream workers. The `boto3` library is used to interact with the AWS SQS service.

Node.js Example (using AWS Lambda and EventBridge)

This example shows a Node.js fan-out function that uses EventBridge to distribute events to different targets based on rules.

```javascript
// Uses the AWS SDK for JavaScript v2. Newer Lambda Node.js runtimes bundle
// only v3, so v2 may need to be packaged with the function.
const AWS = require('aws-sdk');
const eventbridge = new AWS.EventBridge();

exports.handler = async (event) => {
  try {
    const orderData = JSON.parse(event.body);
    // Build one putEvents call per order item.
    const promises = orderData.items.map(item => {
      const params = {
        Entries: [
          {
            Source: 'com.example.order', // Replace with your source
            DetailType: 'OrderItem',
            Detail: JSON.stringify({
              order_id: orderData.order_id,
              item_id: item.item_id,
              quantity: item.quantity
            })
          }
        ]
      };
      return eventbridge.putEvents(params).promise();
    });
    // Wait until every event has been submitted.
    await Promise.all(promises);
    return {
      statusCode: 200,
      body: JSON.stringify('Order processing initiated.')
    };
  } catch (error) {
    console.error('Error:', error);
    return {
      statusCode: 500,
      body: JSON.stringify('Error processing order.')
    };
  }
};
```

This code parses the order data and, for each item, constructs an event to be sent to EventBridge. The `putEvents` method sends the event to EventBridge, which then routes it to the appropriate targets (e.g., Lambda functions) based on configured rules.

Java Example (using AWS Lambda and SQS)

This example demonstrates a Java-based fan-out function that utilizes the AWS SDK for Java to interact with SQS.

```java
import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.RequestHandler;
import com.amazonaws.services.sqs.AmazonSQS;
import com.amazonaws.services.sqs.AmazonSQSClientBuilder;
import com.amazonaws.services.sqs.model.SendMessageRequest;
import com.fasterxml.jackson.databind.JsonNode;
import com.fasterxml.jackson.databind.ObjectMapper;
import java.util.Map;

public class OrderFanOutHandler implements RequestHandler
```

Worker Function Design and Implementation

Designing effective worker functions is crucial for the successful implementation of the fan-out pattern. These functions are the workhorses of the architecture, responsible for processing individual tasks dispatched by the fan-out logic. Their design directly impacts performance, scalability, fault tolerance, and overall system reliability. Careful consideration of various factors is necessary to optimize their behavior.

Considerations for Designing Worker Functions

The design of worker functions involves several key considerations to ensure they are efficient, resilient, and scalable. These factors influence the function’s ability to handle tasks effectively and contribute to the overall system’s robustness.

- Idempotency: Worker functions should ideally be idempotent. This means that running the same task multiple times should produce the same result as running it once. This is crucial for handling retries and preventing data corruption or inconsistent states. Implement mechanisms to check if a task has already been completed before executing it again. For instance, if a worker function updates a database record, it should first check if the update has already been applied by querying the database for the relevant record’s current state.

- Statelessness: Worker functions should strive to be stateless. This means they should not rely on state left over from previous invocations to produce a correct result. Any data required for processing a task should be provided as input or read from an external store. This design principle enhances scalability and fault tolerance. Clients and connections created outside the handler may be reused across warm invocations as an optimization, but the function must still work correctly when it starts cold with none of them available.

- Input Validation: Thorough input validation is essential to prevent unexpected behavior and potential security vulnerabilities. Worker functions should validate the input data they receive to ensure it conforms to the expected format and constraints. For example, if a function expects a numerical value, it should validate that the input is indeed a number and falls within an acceptable range.

- Resource Management: Efficient resource management is vital for performance and cost optimization. Functions should release any resources they acquire as soon as they are no longer needed. This includes closing database connections, releasing memory, and terminating any background processes. Consider using connection pooling to manage database connections efficiently, avoiding the overhead of establishing a new connection for each invocation.

- Error Handling: Robust error handling is critical for resilience. Worker functions should be designed to anticipate and handle potential errors gracefully. This includes catching exceptions, logging errors with relevant context, and implementing retry mechanisms. Implement specific error handling logic based on the nature of the potential failures.

- Concurrency: Understand the concurrency model of the serverless platform. While serverless functions can handle multiple concurrent invocations, be mindful of resource limitations. Avoid excessive resource consumption within a single function invocation that could impact performance or trigger throttling. Consider using asynchronous operations and optimized code.
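Two of the considerations above, input validation and idempotency, can be sketched together. This is an illustration under stated assumptions: `validate_task` and `process_task` are hypothetical names, and the `completed` and `inventory` dictionaries stand in for durable stores such as database tables. In a real worker the "already done?" check and the update would need to be atomic, for example through a conditional write.

```python
def validate_task(task: dict) -> None:
    """Raise ValueError if the task does not match the expected shape."""
    for field in ("task_id", "item_id", "quantity"):
        if field not in task:
            raise ValueError(f"missing field: {field}")
    quantity = task["quantity"]
    # bool is a subclass of int, so exclude it explicitly.
    if not isinstance(quantity, int) or isinstance(quantity, bool) or quantity <= 0:
        raise ValueError("quantity must be a positive integer")


def process_task(task: dict, completed: dict, inventory: dict) -> str:
    """Apply a task once; repeated deliveries return the recorded result.

    `completed` and `inventory` are stand-ins for durable stores.
    """
    validate_task(task)
    task_id = task["task_id"]
    if task_id in completed:
        return completed[task_id]  # already done: no side effects

    # Side effect: decrement stock for the item exactly once per task_id.
    inventory[task["item_id"]] = inventory.get(task["item_id"], 0) - task["quantity"]
    completed[task_id] = "done"
    return completed[task_id]
```

Delivering the same task twice leaves the inventory unchanged after the first call, which is the property that makes retries safe.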

Handling Task Failures and Retries

Implementing robust failure handling and retry mechanisms is critical for the resilience of the fan-out architecture. When a worker function fails, the system must be able to detect the failure, potentially retry the task, and ultimately ensure that the task is either completed successfully or handled appropriately.

- Error Detection: Implement mechanisms to detect failures within the worker functions. This can include catching exceptions, checking return codes, and monitoring resource usage. Utilize structured logging to capture detailed information about the failure, including timestamps, error messages, and context data.

- Retry Mechanisms: Implement retry mechanisms to automatically re-attempt failed tasks. Retry strategies can be designed to adapt to the nature of the failure. Implement exponential backoff with jitter to prevent overwhelming dependent services during retries. Consider implementing a dead-letter queue (DLQ) for tasks that repeatedly fail after a defined number of retries.

- Error Reporting and Alerting: Establish mechanisms for reporting errors and generating alerts. Integrate with monitoring and alerting systems to notify operators of critical failures. Configure alerts based on the frequency and severity of errors.

- Idempotent Operations (Again): Idempotency matters here as well. Because retries are inherent in failure handling, worker functions *must* be idempotent to avoid data corruption or unintended side effects.

- Example: Exponential Backoff with Jitter: The exponential backoff strategy with jitter is commonly employed to avoid overwhelming a failing service during retries. The backoff interval increases exponentially with each retry attempt, while jitter adds a random element to the interval to prevent multiple workers from retrying simultaneously.

$$\text{Retry Interval} = \left(\text{Base} \times 2^{\text{Attempt}}\right) + \text{Random}(0, \text{Jitter})$$

Where:

- `Base`: Initial delay (e.g., 1 second).

- `Attempt`: Number of retry attempts (starting from 0).

- `Jitter`: Maximum random delay (e.g., 1 second).
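The formula translates directly into code. The sketch below is one possible reading of it: `retry_interval` computes the delay from `Base`, `Attempt`, and `Jitter`, and the hypothetical helper `call_with_retries` applies it around an arbitrary operation. It retries on any exception for brevity; a real worker would normally retry only errors known to be transient.

```python
import random
import time


def retry_interval(attempt, base=1.0, jitter=1.0, rng=random):
    """Return Base * 2^Attempt + Random(0, Jitter), in seconds."""
    return base * (2 ** attempt) + rng.uniform(0, jitter)


def call_with_retries(operation, max_attempts=4, base=1.0, jitter=1.0, sleep=time.sleep):
    """Call `operation` until it succeeds or `max_attempts` calls have failed."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of retries: let the caller (or a DLQ) handle it
            sleep(retry_interval(attempt, base, jitter))
```

With the defaults, the delays fall in the ranges 1 to 2 seconds, 2 to 3 seconds, and 4 to 5 seconds before the fourth and final attempt. Passing `sleep` as a parameter keeps the helper easy to test without waiting.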

Strategy for Logging and Monitoring

Effective logging and monitoring are essential for gaining insights into the performance and behavior of worker functions. This enables troubleshooting, performance optimization, and proactive identification of potential issues.

- Structured Logging: Implement structured logging to capture relevant information in a consistent and searchable format. Include timestamps, function identifiers, request IDs, and context-specific data in each log entry. Utilize a logging framework that supports structured logging, such as JSON format.

- Log Levels: Use appropriate log levels (e.g., DEBUG, INFO, WARN, ERROR) to categorize log messages based on their severity. Use DEBUG for detailed information during development and troubleshooting, INFO for general operational events, WARN for potential issues, and ERROR for critical failures.

- Monitoring Metrics: Collect key performance metrics to monitor the health and performance of worker functions. Metrics can include:

- Invocation count

- Execution time

- Error rates

- Resource utilization (memory, CPU)

- Integration with Monitoring Systems: Integrate logging and monitoring with a centralized monitoring system. This enables centralized log aggregation, analysis, and alerting. Use monitoring tools to create dashboards and set up alerts based on specific metrics or error patterns.

- Tracing: Implement distributed tracing to track the flow of requests across multiple worker functions and services. This enables the identification of performance bottlenecks and the diagnosis of issues that span multiple components.

- Example: Log Entry in JSON Format:

{"timestamp": "2024-10-27T10:00:00.000Z", "function_name": "process_task", "request_id": "abc-123-def-456", "log_level": "ERROR", "message": "Failed to process task", "error_message": "Database connection timeout", "task_id": "12345"}

Orchestration and Coordination

The fan-out pattern, while distributing tasks effectively, introduces complexities in managing the overall process. Effective orchestration and coordination are crucial for ensuring tasks are executed correctly, dependencies are handled, and the system remains resilient to failures. This section delves into the tools and techniques that enable the seamless management of the fan-out process, focusing on how to design and implement robust workflows.

Orchestration Tools and Techniques

Several serverless orchestration services provide robust capabilities for managing fan-out processes. These services allow for defining workflows, handling dependencies, and monitoring the execution of tasks. Choosing the right tool depends on the specific requirements of the application, including complexity, scalability needs, and the cloud provider being used.

- AWS Step Functions: Step Functions is a fully managed, serverless orchestration service provided by Amazon Web Services. It enables the creation of state machines that coordinate the execution of distributed applications.

- Step Functions utilizes a state machine definition, typically written in JSON, to describe the workflow. This definition specifies the sequence of steps, transitions, and error handling logic.

- Step Functions supports various state types, including task states, choice states (for conditional branching), parallel states (for fanning out to a fixed set of branches), map states (for fanning out over the items of a list), and wait states.

- The service offers built-in integration with other AWS services, such as Lambda functions, SQS queues, and SNS topics, streamlining the integration of different components within the fan-out architecture.

- Step Functions provides comprehensive monitoring and logging capabilities through CloudWatch, allowing developers to track the execution of state machines, identify errors, and optimize performance.

- Example: Imagine a system processing image uploads. A Step Function could be designed to:

- Receive an event indicating a new image upload.

- Start a parallel state to invoke multiple Lambda functions for different image processing tasks (e.g., resizing, thumbnail generation, content analysis). This is the fan-out part.

- Wait for all the parallel tasks to complete.

- Aggregate the results and store them.

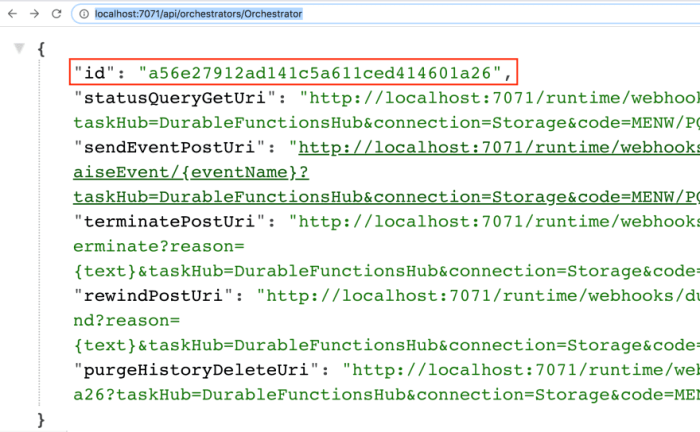

- Azure Durable Functions: Durable Functions, part of Azure Functions, provides a serverless orchestration framework for building stateful functions. It extends the capabilities of Azure Functions by allowing developers to write long-running, stateful, and complex workflows.

- Durable Functions employs a programming model based on orchestrator functions and activity functions. Orchestrator functions define the workflow, while activity functions perform the actual work.

- Durable Functions supports various patterns, including function chaining, fan-out/fan-in, asynchronous HTTP APIs, and human interaction.

- The service manages the state of the workflow internally, ensuring reliability and fault tolerance.

- Durable Functions integrates seamlessly with other Azure services, such as Azure Storage, Azure Event Hubs, and Azure Service Bus.

- Example: Consider a system for processing orders. Durable Functions could be used to:

- Receive an order request.

- Start an orchestrator function.

- Use activity functions to: validate the order, check inventory, process payment, and send confirmation emails. The inventory check and payment processing could be performed in parallel (fan-out).

- Wait for all the activities to complete.

- Update the order status.

- Google Cloud Workflows: Google Cloud Workflows is a fully managed orchestration service that allows users to automate and integrate services by defining workflows. It is designed to orchestrate serverless applications and APIs, providing a scalable and reliable way to manage complex processes.

- Workflows uses a YAML-based language to define workflows, making them easy to read and maintain.

- It supports a wide range of integrations with other Google Cloud services, including Cloud Functions, Cloud Run, and various APIs.

- Workflows offers features like error handling, retries, and conditional branching to ensure the resilience of the workflows.

- The service provides detailed monitoring and logging capabilities through Cloud Logging and Cloud Monitoring.

- Example: Imagine a system for processing customer data. Cloud Workflows could be designed to:

- Receive an event indicating new customer data.

- Call a Cloud Function to validate the data.

- If the data is valid, call a Cloud Function to enrich the data (e.g., look up demographic information).

- Fan-out to multiple Cloud Functions to perform various data analysis tasks.

- Aggregate the results and store them in BigQuery.
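All three examples share the same shape: fan out one event to several tasks, wait for every task to finish, then aggregate. That shape can be sketched locally with Python's standard `concurrent.futures` module, using the image-processing example. This is only an in-process illustration of the control flow, not a replacement for a managed orchestrator; the worker functions `resize`, `make_thumbnail`, and `analyze_content` are hypothetical placeholders.

```python
from concurrent.futures import ThreadPoolExecutor


def resize(image_id):
    return {"task": "resize", "image": image_id}


def make_thumbnail(image_id):
    return {"task": "thumbnail", "image": image_id}


def analyze_content(image_id):
    return {"task": "analysis", "image": image_id}


def process_upload(image_id, workers=(resize, make_thumbnail, analyze_content)):
    """Fan out one event to every worker, wait for all, then aggregate."""
    with ThreadPoolExecutor(max_workers=len(workers)) as pool:
        # Fan-out: start every worker for the same event.
        futures = [pool.submit(worker, image_id) for worker in workers]
        # Fan-in: block until each worker finishes; a worker's exception
        # is re-raised here by result().
        results = [future.result() for future in futures]
    return {"image": image_id, "results": results}
```

A managed orchestrator adds what this sketch lacks: durable state between steps, per-task retries, and visibility into each execution.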

Comparison of Orchestration Services

The choice of orchestration service should be based on several factors, including features, cost, ease of use, and integration capabilities.

| Feature | AWS Step Functions | Azure Durable Functions | Google Cloud Workflows |
|---|---|---|---|
| Programming Model | State Machine (JSON) | Orchestrator/Activity Functions (Code-based) | YAML-based |
| Integration | Extensive AWS Services | Extensive Azure Services | Extensive Google Cloud Services |
| State Management | Managed internally | Managed internally | Managed internally |
| Error Handling | Built-in, configurable | Built-in, configurable | Built-in, configurable |
| Monitoring & Logging | CloudWatch | Azure Monitor | Cloud Logging, Cloud Monitoring |
| Ease of Use | Steeper learning curve initially | Requires understanding of function programming | Easy to learn for simple workflows |

Workflow for Managing Dependencies

Managing dependencies is critical in the fan-out process. Orchestration services enable defining dependencies between tasks, ensuring that tasks are executed in the correct order and that the system handles failures gracefully.

- Defining Task Dependencies:

- Orchestration services allow specifying the order in which tasks should be executed. This can be done through the state machine definition (Step Functions), orchestrator function code (Durable Functions), or the workflow definition (Cloud Workflows).

- Dependencies can be defined based on the output of one task being used as input for another.

- Dependencies can also be defined using wait states or other mechanisms to ensure that tasks are not started before their dependencies are met.

- Error Handling and Retries:

- Orchestration services provide mechanisms for handling errors and retrying failed tasks.

- This is crucial for ensuring the reliability of the fan-out process, as individual tasks may fail due to various reasons.

- Retries can be configured with different strategies, such as exponential backoff, to avoid overwhelming downstream services.

- Compensation Actions:

- In complex workflows, it might be necessary to implement compensation actions to undo the effects of failed tasks.

- For example, if a task to process a payment fails, a compensation action might be required to refund the customer.

- Orchestration services provide mechanisms for defining and executing compensation actions.
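The compensation idea can be sketched independently of any orchestration service. In the hypothetical helper below, each step is a pair of callables: an action and the compensation that undoes it. When an action fails, the compensations of the steps that already succeeded run in reverse order. Managed orchestrators express the same idea through their own error-handling constructs.

```python
def run_with_compensation(steps):
    """Run (action, compensation) pairs in order.

    If an action raises, the compensations of the steps that already
    succeeded run in reverse order, and the original error is re-raised.
    """
    undo_stack = []
    try:
        for action, compensation in steps:
            action()
            # Register the undo step only after the action succeeded.
            undo_stack.append(compensation)
    except Exception:
        for compensation in reversed(undo_stack):
            compensation()
        raise
```

For the payment example, a workflow that reserves inventory, charges the customer, and then fails while booking shipping would first refund the payment and then release the reservation.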

Message Queues and Buffering

Message queues are fundamental components in the fan-out pattern, acting as intermediaries to facilitate asynchronous communication and decouple different parts of the system. They provide a robust mechanism for handling bursts of events, managing failures, and scaling the system independently. By using message queues, the producer of events doesn’t need to know the specifics of the consumers, leading to greater flexibility and resilience.

Role of Message Queues in Fan-Out

Message queues serve as a central hub for event distribution within the fan-out architecture. Their primary function is to receive events from the event source (e.g., an API gateway, a database change event), store them, and then deliver them to multiple consumer functions. This decoupling is crucial. The event source only needs to publish messages to the queue; the consumers subscribe to the queue and process the messages independently.

- Asynchronous Communication: Message queues enable asynchronous processing. The event source can publish a message to the queue and immediately continue processing other tasks without waiting for the consumers to complete their work.

- Decoupling: The event source and consumers are decoupled, meaning changes to one component do not directly impact the others. This increases the maintainability and scalability of the system.

- Reliability: Message queues provide reliability through features like message persistence, which ensures that messages are not lost even if a consumer fails. They also offer retry mechanisms to handle temporary failures.

- Scalability: Message queues can handle a large volume of messages, allowing the system to scale horizontally. The number of consumers can be adjusted independently of the event source.

Buffering and Decoupling with Message Queues

Message queues excel at buffering and decoupling components, crucial aspects of the fan-out pattern. Buffering helps to absorb sudden spikes in event traffic, preventing the consumer functions from being overwhelmed. Decoupling, as previously mentioned, insulates components from each other, making the system more resilient and easier to manage.

- Buffering: When events are generated at a rate that exceeds the processing capacity of the consumers, the message queue acts as a buffer. Messages accumulate in the queue until consumers are available to process them. This prevents backpressure from impacting the event source.

- Decoupling: The event source only needs to put messages into the queue. Consumers subscribe to the queue and process the messages at their own pace. The event source does not need to know about the consumers, and the consumers do not need to know about the event source.

- Error Handling: Message queues often include mechanisms for handling errors. If a consumer fails to process a message, the message can be retried or moved to a dead-letter queue for further analysis.

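- Rate Limiting: Queues make it possible to control how fast messages reach downstream systems, typically by capping consumer concurrency or batch size rather than by throttling inside the queue itself. This helps prevent consumers, and the services they call, from being overwhelmed and is particularly useful during periods of high traffic.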

Comparison of Message Queue Services

Different message queue services offer varying features and capabilities. Selecting the appropriate service depends on the specific requirements of the fan-out implementation, considering factors such as message size limits, throughput, cost, and features.

| Feature | Amazon SQS | Azure Service Bus | Google Cloud Pub/Sub |
|---|---|---|---|
| Message Size Limit | 1 MiB (256 KB before a 2025 increase) | 256 KB (Standard tier); up to 100 MB (Premium tier) | 10 MB |
| Throughput | Nearly unlimited for standard queues; FIFO queues have per-queue limits | Depends on tier; Premium scales with messaging units | Scales automatically, subject to regional quotas |
| Cost | Pay-per-use, based on requests and data transfer | Pay-per-use, based on operations and tier | Pay-per-use, based on data volume and usage |
| Message Retention | Up to 14 days | Configurable time-to-live (14-day maximum on the Basic tier) | Configurable; 7 days by default |
| Message Ordering | FIFO queues available | Available with sessions | Available with ordering keys |
| Delivery Guarantees | At-least-once (standard); exactly-once processing (FIFO) | At-least-once (peek-lock), at-most-once (receive-and-delete) | At-least-once; exactly-once delivery optional for pull subscriptions |
| Dead Letter Queue | Supported | Supported | Supported (dead-letter topics) |

Error Handling and Monitoring

Robust error handling and comprehensive monitoring are critical components of a successful serverless fan-out implementation. These elements ensure system resilience, provide insights into performance bottlenecks, and facilitate rapid identification and resolution of issues. Without these measures, the system’s reliability and ability to process events effectively are significantly compromised.

Strategies for Handling Errors

Effective error handling within a fan-out architecture requires a multi-faceted approach, addressing potential failures at various stages of the event processing pipeline. This includes identifying the sources of errors, implementing retry mechanisms, and providing a safety net for unrecoverable failures.

- Identifying Error Sources: Errors can originate from various points within the fan-out process, including the event source, the event trigger, the fan-out logic, and the worker functions. Detailed logging and tracing are essential for pinpointing the source of an error. This involves logging relevant information at each stage of the event processing, such as event IDs, timestamps, function invocations, and any error messages.

Consider using distributed tracing tools to visualize the flow of events and identify the exact point where a failure occurred.

- Implementing Retry Mechanisms: Transient errors, such as temporary network issues or brief service unavailability, can often be resolved by retrying the failed operation. Implement retry mechanisms with exponential backoff to avoid overwhelming downstream services. Exponential backoff involves increasing the delay between retries, which can prevent cascading failures. For example, if a worker function fails to process an event, the system could retry the function after a delay of 1 second, then 2 seconds, then 4 seconds, and so on.

- Utilizing Dead-Letter Queues (DLQs): For events that consistently fail after multiple retries, a dead-letter queue provides a mechanism to isolate these problematic events. Events in the DLQ are not processed by the normal event processing pipeline. This prevents these events from blocking the processing of other, healthy events. The DLQ allows for manual inspection and troubleshooting of the failed events. Events can be analyzed to determine the root cause of the failure and potentially reprocessed after the issue is resolved.

For instance, if a worker function consistently fails due to a data validation error, the event can be placed in a DLQ for manual review and correction of the data before reprocessing.

- Implementing Circuit Breakers: Circuit breakers are a pattern that can prevent cascading failures by monitoring the health of downstream services. If a downstream service repeatedly fails, the circuit breaker “trips,” preventing further requests from being sent to that service. This protects the upstream service from being overwhelmed and allows the downstream service time to recover. Circuit breakers can be implemented using libraries or managed services that automatically monitor service health and control the flow of requests.
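The circuit breaker described above can be sketched in a few lines. The class below is a simplified illustration with hypothetical names (`CircuitBreaker`, `CircuitOpenError`): after `failure_threshold` consecutive failures it rejects calls for `reset_timeout` seconds, then lets one trial call through. Its state lives in memory, so in a serverless setting each function instance would have its own breaker unless the state is moved to a shared store.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, operation):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("downstream service is marked unhealthy")
            # Timeout elapsed: allow one trial call (half-open state).
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

A worker would wrap each call to a fragile dependency in `breaker.call(...)` and treat `CircuitOpenError` as a signal to fail fast and let the message be retried later.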

Implementing Retry Mechanisms and Dead-Letter Queues

The implementation of retry mechanisms and dead-letter queues requires careful consideration of the specific serverless technologies being used and the characteristics of the events being processed.

- Retry Implementation Examples:

- AWS Lambda with SQS: When SQS is the event source, Lambda polls the queue and retries are driven by the queue itself. If the function fails to process a message, the message is not deleted and becomes visible again once the visibility timeout expires, at which point it is delivered again. The visibility timeout is configured on the queue, and the maximum number of receives is set through `maxReceiveCount` in the queue's redrive policy.

- Azure Functions with Event Grid: Azure Event Grid provides retry policies for event delivery. Event Grid automatically retries failed event deliveries based on the configured retry policy.

- Google Cloud Functions with Cloud Pub/Sub: Cloud Pub/Sub offers retry policies for message delivery. When a Cloud Function fails to acknowledge a message, Pub/Sub retries the delivery based on the configured retry policy.

- Dead-Letter Queue Implementation Examples:

- AWS SQS DLQ: Configure a DLQ for an SQS queue. If a message fails to be processed by a Lambda function after the maximum number of retries, SQS automatically moves the message to the DLQ.

- Azure Service Bus DLQ: Azure Service Bus also supports DLQs. Messages that fail to be processed after the maximum number of retries are moved to the DLQ.

- Google Cloud Pub/Sub DLQ: Cloud Pub/Sub allows you to configure a DLQ. If a message delivery fails after retries, it can be routed to a dead-letter topic.

- Configuration and Monitoring: The retry and DLQ configurations must be monitored to ensure they are functioning as expected. Monitoring the DLQ for accumulated messages is critical to identify issues and prevent data loss.
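For the Lambda and SQS combination, one refinement is worth sketching: by default a single failed message causes the whole batch to be retried. The handler below assumes the event source mapping has the `ReportBatchItemFailures` response type enabled, in which case Lambda retries only the messages listed under `batchItemFailures`. The function `handle_task` is a hypothetical placeholder for the real business logic.

```python
import json


def handle_task(task):
    """Hypothetical business logic; raises on failure."""
    if task.get("quantity", 0) <= 0:
        raise ValueError("quantity must be positive")


def lambda_handler(event, context):
    """Process an SQS batch and report only the failed messages."""
    failures = []
    for record in event["Records"]:
        try:
            handle_task(json.loads(record["body"]))
        except Exception as error:
            print(f"Task {record['messageId']} failed: {error}")
            failures.append({"itemIdentifier": record["messageId"]})
    # Only the listed messages become visible again for retry; the rest
    # of the batch is treated as successfully processed.
    return {"batchItemFailures": failures}
```

Messages that keep failing are received repeatedly until the queue's redrive policy moves them to the DLQ, so successful messages in the same batch are not reprocessed along the way.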

Designing a Monitoring Strategy

A comprehensive monitoring strategy provides real-time visibility into the performance and health of the fan-out system, enabling proactive identification and resolution of issues.

- Key Metrics to Monitor:

- Event Processing Latency: Measure the time it takes for events to be processed from the event source to the completion of all worker functions. High latency can indicate performance bottlenecks.

- Worker Function Execution Times: Track the execution time of individual worker functions. Identify slow-performing functions that may require optimization.

- Error Rates: Monitor the rate of errors in worker functions and the fan-out logic. A high error rate indicates potential problems with the system.

- Retry Counts: Track the number of retries for failed events. An increasing number of retries suggests underlying issues that need investigation.

- DLQ Message Counts: Monitor the number of messages in the dead-letter queues. An increasing number of messages in the DLQ indicates events that are consistently failing and require attention.

- Queue Lengths: Monitor the length of any message queues used in the system. Growing queue lengths may indicate that the system is not processing events fast enough.

- Tools and Techniques:

- CloudWatch (AWS): Utilize CloudWatch for logging, metrics, and alerting. Create custom metrics to track specific aspects of the fan-out system.

- Azure Monitor (Azure): Use Azure Monitor for monitoring, logging, and alerting. Create custom dashboards to visualize key metrics.

- Cloud Monitoring (Google Cloud): Employ Cloud Monitoring for metrics, logging, and alerting. Define custom metrics and dashboards.

- Distributed Tracing: Implement distributed tracing to track the flow of events across different services and identify performance bottlenecks.

- Alerting: Configure alerts based on key metrics, such as high error rates, long event processing times, and increasing DLQ message counts. These alerts should notify the appropriate teams to investigate and resolve issues promptly.

- Dashboards and Visualization: Create dashboards to visualize key metrics in real-time. These dashboards should provide a comprehensive overview of the system’s health and performance. A well-designed dashboard allows for quick identification of issues and facilitates effective troubleshooting.
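Custom metrics such as worker execution time can be published from inside a function by writing structured log lines. The sketch below assumes the worker runs on AWS Lambda, where standard output is sent to CloudWatch Logs, and uses the CloudWatch embedded metric format (EMF). The helper name `emit_metric` and the namespace and dimension values in the usage note are hypothetical.

```python
import json
import time


def emit_metric(namespace, name, value, unit, dimensions):
    """Print one CloudWatch embedded metric format (EMF) log line."""
    record = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since epoch
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [list(dimensions)],  # dimension names only
                "Metrics": [{"Name": name, "Unit": unit}],
            }],
        },
        name: value,  # the metric value lives at the top level
    }
    record.update(dimensions)  # dimension values live at the top level too
    line = json.dumps(record)
    print(line)
    return line
```

A worker could call `emit_metric("FanOutDemo", "TaskDurationMs", 42, "Milliseconds", {"FunctionName": "process_task"})` at the end of each invocation, and alerts or dashboards can then be built on the resulting metric.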

Security Considerations

Implementing a serverless fan-out pattern introduces several security challenges that must be addressed to protect sensitive data and ensure the integrity of the system. Securing event triggers, worker functions, and the overall architecture is crucial to prevent unauthorized access, data breaches, and denial-of-service attacks. Proper security measures are essential for maintaining trust and compliance with industry regulations.

Securing Event Triggers and Worker Functions

Securing event triggers and worker functions involves multiple layers of protection to mitigate potential vulnerabilities. This includes authentication, authorization, and input validation. The choice of security mechanisms often depends on the specific serverless platform and the nature of the events and worker functions.

- Authentication of Event Sources: Verify the origin of events. This is essential to prevent malicious actors from injecting false events into the system.

- Authorization of Event Triggers: Implement role-based access control (RBAC) to restrict which entities can trigger events.

- Input Validation: Validate all incoming event data to prevent injection attacks, such as SQL injection or cross-site scripting (XSS).

- Function Access Control: Restrict access to worker functions using IAM roles and permissions. Ensure that functions have only the necessary permissions to access resources.

- Encryption: Encrypt data at rest and in transit. This protects data from unauthorized access if a breach occurs.

- Regular Security Audits: Conduct regular security audits to identify and address vulnerabilities. This includes penetration testing and code reviews.

- Example: Securing an API Gateway Trigger (AWS): An API Gateway trigger can be secured with user authentication (using Amazon Cognito or another identity provider), IAM authorization, and request validation. API keys can be added for usage plans and throttling, but they identify a client rather than authenticate it, so they should not be the only control. With IAM authorization, API Gateway restricts access to specific routes based on the caller’s identity.

- Example: Securing an S3 Event Trigger (AWS): An S3 event trigger can be secured with bucket policies that restrict access to specific users or IAM roles. The bucket can also be configured to encrypt data at rest, protecting the objects stored in it.
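The authentication and input validation points above can be sketched in a few lines of Python. This is a minimal illustration, not a platform API: the event fields (`event_type`, `object_key`), the allowed event types, and the idea that the producer signs the body with a shared HMAC secret are all assumptions made for the example.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical allow-list and size limit, chosen for illustration only.
ALLOWED_EVENT_TYPES = {"image.uploaded", "order.created"}
MAX_KEY_LENGTH = 1024


@dataclass(frozen=True)
class ValidatedEvent:
    event_type: str
    object_key: str


def verify_signature(body: bytes, signature_hex: str, secret: bytes) -> bool:
    """Check that the body was signed by a producer holding the shared secret."""
    expected = hmac.new(secret, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature_hex)


def validate_event(raw: object) -> ValidatedEvent:
    """Reject events that do not match the expected shape before fanning out."""
    if not isinstance(raw, dict):
        raise ValueError("event must be a JSON object")
    event_type = raw.get("event_type")
    object_key = raw.get("object_key")
    if not isinstance(event_type, str) or event_type not in ALLOWED_EVENT_TYPES:
        raise ValueError(f"unsupported event_type: {event_type!r}")
    if not isinstance(object_key, str) or not object_key:
        raise ValueError("object_key must be a non-empty string")
    if len(object_key) > MAX_KEY_LENGTH or ".." in object_key.split("/"):
        raise ValueError("object_key is too long or contains path traversal")
    return ValidatedEvent(event_type, object_key)
```

Running both checks in the fan-out function, before any worker is invoked, keeps malformed or unsigned events from being multiplied across the whole system.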

Managing Access Control and Authentication in a Serverless Fan-Out Architecture

Effective access control and authentication are paramount in a serverless fan-out architecture to ensure that only authorized users and services can interact with the system. This involves implementing a robust identity and access management (IAM) strategy.

- IAM Roles: Use IAM roles to define the permissions for each worker function. Roles should grant the minimum necessary privileges to perform their tasks (principle of least privilege).

- Authentication Services: Integrate with identity providers (e.g., Amazon Cognito, Google Identity Platform, Microsoft Entra ID, formerly Azure Active Directory) for user authentication.

- Authorization Policies: Define authorization policies that determine which users or services are allowed to access specific resources or trigger events. These policies can be based on roles, groups, or individual user identities.

- API Keys and Tokens: Use API keys or tokens for authenticating service-to-service communication. These keys should be securely managed and rotated regularly.

- Secure Configuration Management: Store sensitive configuration data (e.g., API keys, database credentials) securely using a secrets management service (e.g., AWS Secrets Manager, Azure Key Vault, Google Cloud Secret Manager).

- Network Security: Implement network security measures, such as virtual private clouds (VPCs) and security groups, to control network traffic and restrict access to resources.

- Monitoring and Logging: Implement comprehensive monitoring and logging to detect and respond to security incidents. Log all access attempts and actions performed by worker functions.

- Example: IAM Role for a Worker Function (AWS): A worker function that processes image uploads to an S3 bucket should be assigned an IAM role that allows it to read from the source bucket and write to a destination bucket. The role should not have any other permissions, adhering to the principle of least privilege. The IAM role would include a policy that looks similar to the following (in JSON format):

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject"
      ],
      "Resource": "arn:aws:s3:::source-bucket/*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:::destination-bucket/*"
    }
  ]
}

Performance Optimization

Optimizing the performance of a serverless fan-out implementation is critical for achieving low latency, high throughput, and cost-effectiveness. Several techniques can be applied at different stages of the fan-out process, from event triggering to worker function execution and message queue management. Careful consideration of these optimizations is essential to handle increasing workloads efficiently and maintain a responsive system.

Event Triggering Optimization

Optimizing event triggering is vital for initiating the fan-out process promptly and efficiently. The choice of trigger mechanism significantly impacts performance.

- Choose the Right Trigger: Select event triggers suited to the expected event volume and frequency. For example, placing Amazon SQS in front of workers buffers high-volume, asynchronous events and prevents downstream services from being overloaded. A service like Amazon EventBridge provides advanced filtering and routing, so that only relevant events reach each target.

- Batch Processing: Configure triggers to batch events when possible. Batching allows the processing of multiple events within a single function invocation, reducing the overhead associated with individual function calls. AWS Lambda supports batching for services like Amazon SQS and Amazon Kinesis.

- Event Filtering: Implement event filtering at the trigger level to reduce unnecessary invocations. Filtering prevents the function from being triggered by irrelevant events, improving performance and reducing costs. Services like Amazon EventBridge offer sophisticated filtering capabilities.

- Optimize Trigger Configuration: Configure trigger parameters such as concurrency limits, batch sizes, and dead-letter queues appropriately. Properly configured triggers ensure efficient resource utilization and graceful handling of errors.
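As a rough sketch of batch processing, the handler below consumes a batch of SQS records in one invocation and reports only the failed messages back to Lambda, so the successful ones are not retried. It assumes the event source mapping has the `ReportBatchItemFailures` response type enabled; `process_message` and the `task_id` field are hypothetical placeholders for real work.

```python
import json


def process_message(payload: dict) -> None:
    """Hypothetical per-message work; raises when the payload is unusable."""
    if "task_id" not in payload:
        raise ValueError("missing task_id")


def handler(event: dict, context: object) -> dict:
    """Process every record in the batch and report failures individually."""
    failures = []
    for record in event.get("Records", []):
        try:
            process_message(json.loads(record["body"]))
        except Exception:
            # Only this message returns to the queue (and eventually the DLQ).
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Without partial batch responses, a single bad message causes the whole batch to be retried, which is one reason batch size and dead-letter queue settings need to be tuned together.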

Fan-Out Logic Optimization

Optimizing the fan-out logic itself ensures events are distributed efficiently to worker functions.

- Minimize Fan-Out Latency: Reduce the time spent within the fan-out function. Optimize code for speed and efficiency, avoiding unnecessary operations.

- Asynchronous Operations: Utilize asynchronous calls to worker functions. Do not wait for each worker function to complete before invoking the next. This parallel execution significantly reduces overall latency.

- Efficient Data Transformation: Perform any necessary data transformations efficiently within the fan-out function. Minimize the amount of data transferred to worker functions to reduce processing time.

- Consider Concurrency: Carefully manage the concurrency of the fan-out function to avoid throttling and ensure that the system can handle the expected load.
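The asynchronous dispatch and concurrency points above might look like the following sketch. `invoke_worker` is a stand-in for whatever asynchronous call actually reaches a worker (an SDK invocation, a queue publish, an HTTP request); it and the `task_id` field are assumptions for illustration.

```python
import asyncio


async def invoke_worker(task: dict) -> dict:
    """Hypothetical stand-in for an asynchronous worker invocation."""
    await asyncio.sleep(0.01)  # simulates network latency
    return {"task_id": task["task_id"], "status": "dispatched"}


async def fan_out(tasks: list, max_concurrency: int = 10) -> list:
    """Dispatch all tasks concurrently, with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(task: dict) -> dict:
        async with semaphore:
            return await invoke_worker(task)

    # return_exceptions=True keeps one failed dispatch from cancelling the rest;
    # failed entries come back as exception objects in the result list.
    return await asyncio.gather(
        *(bounded(task) for task in tasks), return_exceptions=True
    )


if __name__ == "__main__":
    results = asyncio.run(fan_out([{"task_id": i} for i in range(25)], max_concurrency=5))
    print(len(results), "tasks dispatched")
```

The semaphore is the knob that trades dispatch speed against the risk of throttling downstream services; the right value depends on the platform's concurrency limits.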

Worker Function Optimization

Worker functions are where the actual processing of the events occurs. Optimizing these functions is essential for overall system performance.

- Code Optimization: Write efficient code within worker functions. Optimize algorithms, reduce computational complexity, and minimize resource usage.

- Resource Allocation: Configure appropriate memory and compute resources for worker functions. Over-provisioning can be wasteful, while under-provisioning can lead to performance bottlenecks.

- Caching: Implement caching mechanisms where appropriate to reduce the need to fetch data repeatedly. This can significantly improve the speed of worker function execution. For instance, caching frequently accessed data in a service like Amazon ElastiCache can drastically reduce latency.

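- Connection Pooling: Reuse database connections across invocations by creating them outside the handler, avoiding the overhead of establishing a new connection on every call. Because many function instances can run at once, a pooling proxy (for example, Amazon RDS Proxy) helps keep the total number of database connections within limits.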

- Minimize Dependencies: Reduce the number of external dependencies to minimize cold start times and improve execution speed.

Message Queue Optimization

Message queues play a crucial role in buffering and distributing events. Optimizing queue usage is critical for performance.

- Choose the Right Queue Type: Select the appropriate queue type based on the requirements. For example, Amazon SQS offers standard and FIFO queues. FIFO queues preserve message order and deduplicate messages, while standard queues offer higher throughput with at-least-once delivery and best-effort ordering.

- Queue Configuration: Configure queue parameters such as visibility timeout, message retention period, and dead-letter queue appropriately. These settings impact message processing and error handling.

- Message Size: Keep messages small to reduce transfer times. Compress message payloads if necessary, or store large payloads in object storage and pass only a reference in the message.

- Queue Consumption: Optimize the consumption of messages from the queue. Use parallel consumers to process messages concurrently and increase throughput.
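The message-size advice can be sketched with the standard library alone. The helpers below compress a JSON payload before it is placed on a queue and group messages into batches of ten, which is the entry limit of an SQS `SendMessageBatch` call. The function names are illustrative, and the actual send call is left out.

```python
import base64
import gzip
import json


def encode_payload(payload: dict) -> str:
    """Serialise compactly, gzip, and base64-encode so the body stays text-safe."""
    raw = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    return base64.b64encode(gzip.compress(raw)).decode("ascii")


def decode_payload(body: str) -> dict:
    """Reverse encode_payload inside the worker function."""
    return json.loads(gzip.decompress(base64.b64decode(body)))


def chunk_for_batch_send(items: list, size: int = 10) -> list:
    """Split messages into groups no larger than the batch-send limit."""
    return [items[i:i + size] for i in range(0, len(items), size)]
```

Compression pays off for large or repetitive payloads; for very small messages the gzip header and base64 overhead can make the body larger, so it is worth measuring before enabling it everywhere.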

Orchestration and Coordination Optimization

Orchestration and coordination mechanisms, such as state machines or workflows, also require optimization.

- Minimize State Transitions: Reduce the number of state transitions in the workflow to minimize latency.

- Parallel Execution: Where possible, execute steps in parallel to reduce overall execution time.

- Idempotency: Make operations idempotent so that a retried or duplicated invocation has the same effect as a single execution. This is crucial for handling transient failures and at-least-once delivery.

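- Optimized State Management: Use a managed orchestration service like AWS Step Functions rather than hand-rolled state tracking, and pick the workflow type to match the workload: Express workflows suit high-volume, short-lived executions, while Standard workflows suit long-running ones.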
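A minimal way to picture idempotency is a store keyed by an idempotency key that remembers the result of the first execution. The class below is an in-memory stand-in written for illustration; a real deployment would need a durable store with conditional writes, and this sketch is not safe against two concurrent first attempts.

```python
from typing import Callable, Dict


class IdempotencyStore:
    """In-memory stand-in for a durable store with conditional writes."""

    def __init__(self) -> None:
        self._results: Dict[str, object] = {}

    def run_once(self, key: str, operation: Callable[[], object]) -> object:
        """Run operation for a new key; return the saved result for a repeat."""
        if key in self._results:
            return self._results[key]
        result = operation()
        self._results[key] = result
        return result


if __name__ == "__main__":
    store = IdempotencyStore()
    charges = []

    def charge_order() -> dict:
        charges.append("order-42")
        return {"charged": True}

    store.run_once("order-42", charge_order)
    store.run_once("order-42", charge_order)  # simulated retry
    print(len(charges))  # the side effect ran a single time
```

The key should come from the event itself (a message ID or a business identifier) so that a retry of the same event maps to the same entry.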

Monitoring and Logging Optimization

Effective monitoring and logging are essential for identifying and addressing performance issues.

- Detailed Metrics: Implement detailed monitoring and logging to capture key performance indicators (KPIs) such as latency, throughput, error rates, and resource utilization.

- Alerting: Set up alerts to notify you of performance degradation or errors.

- Performance Profiling: Use profiling tools to identify performance bottlenecks in your code.

- Log Aggregation: Aggregate logs from different components to provide a comprehensive view of the system’s performance. Services like Amazon CloudWatch Logs can be used for this purpose.
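To make the metrics above easy to aggregate, each component can emit one structured log line per call. The decorator below is a sketch using only the standard library; the logger name, the field names, and the `resize_image` worker are assumptions, and the log output would normally be picked up by whichever log service the platform provides.

```python
import json
import logging
import time
from functools import wraps

logger = logging.getLogger("fanout")


def timed(component: str):
    """Log duration and outcome of each call as a single JSON line."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                duration_ms = (time.perf_counter() - start) * 1000
                logger.info(json.dumps({
                    "component": component,
                    "function": func.__name__,
                    "status": status,
                    "duration_ms": round(duration_ms, 3),
                }))
        return wrapper
    return decorator


@timed("worker")
def resize_image(task: dict) -> dict:
    """Hypothetical worker body."""
    return {"task_id": task["task_id"], "resized": True}
```

Because every line is valid JSON with the same fields, latency percentiles and error rates per component can be derived with simple log queries rather than custom parsing.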

Final Thoughts

In summary, the fan-out pattern with serverless is a practical approach to building scalable, resilient, and cost-effective applications. This guide has covered selecting appropriate serverless services, designing efficient architectures, implementing robust error handling, securing the system, and optimizing performance. Applied together, these practices turn complex tasks into manageable, parallel processes.

Frequently Asked Questions

What is the primary benefit of using the fan-out pattern?

The primary benefit is parallel processing, which dramatically increases the throughput and reduces the latency of processing large volumes of data or events.

How does the fan-out pattern improve scalability?

By distributing tasks across multiple worker functions, the fan-out pattern allows the system to scale horizontally, absorbing increased workloads up to the platform’s concurrency limits without a proportional increase in processing time.

What are the common event triggers used in a fan-out implementation?

Common triggers include HTTP requests, message queues (like SQS or Pub/Sub), and scheduled events (e.g., cron jobs).

How do I handle errors in a fan-out process?

Implement retry mechanisms, dead-letter queues, and robust logging to capture and address failures within worker functions and the overall orchestration process.

What are the cost considerations when choosing serverless services for fan-out?

Costs vary based on the provider (AWS, Azure, GCP), the number of invocations, execution time, and memory consumption. Comparing pricing models and optimizing function configurations are crucial.