Logging, monitoring, and tracing are three core practices of modern software development. This guide explains how they work together to give developers the insight needed to build robust, resilient, and high-performing applications, from the nuances of each practice to its practical implementation.

This guide will navigate through the core concepts, best practices, and essential tools. You’ll learn to capture vital application data, establish proactive monitoring systems, and implement tracing for performance optimization. This will enable you to transform raw data into actionable intelligence, enhancing your ability to diagnose issues, optimize performance, and ultimately, deliver superior software solutions.

Introduction to Logging, Monitoring, and Tracing

Implementing robust logging, monitoring, and tracing practices is crucial for the health, stability, and performance of any software system. These three pillars work together to provide valuable insights into application behavior, enabling developers and operations teams to proactively identify and resolve issues, optimize performance, and ensure a positive user experience. They are essential components of a comprehensive observability strategy.

Core Concepts of Logging, Monitoring, and Tracing

Each of these practices plays a distinct role in understanding a system’s inner workings:

- Logging: This involves recording discrete events that occur within an application. Logs capture information about what happened, when it happened, and potentially why it happened. They provide a chronological record of events, useful for debugging, auditing, and understanding application behavior over time. Logs often include timestamps, severity levels (e.g., DEBUG, INFO, WARNING, ERROR), and contextual information.

- Monitoring: This involves collecting and analyzing metrics to observe the system’s overall health and performance. Metrics are quantitative measurements that can be tracked over time. Monitoring systems use these metrics to identify trends, detect anomalies, and trigger alerts when predefined thresholds are breached. Common metrics include CPU utilization, memory usage, response times, and error rates.

- Tracing: This focuses on tracking individual requests as they flow through a distributed system. Tracing provides a detailed view of the path a request takes, including the different services it interacts with and the time spent in each service. This allows for identifying performance bottlenecks and understanding the dependencies between services. Traces typically consist of spans, each representing a unit of work within a service.

Benefits of Implementing Logging, Monitoring, and Tracing

The adoption of these practices offers several significant advantages throughout the software development lifecycle:

- Faster Debugging and Issue Resolution: Logging provides detailed information about errors and unexpected behavior, allowing developers to quickly identify the root cause of problems. Monitoring alerts teams to issues as they arise, enabling proactive responses. Tracing helps pinpoint performance bottlenecks in complex distributed systems.

- Improved Performance and Optimization: Monitoring provides insights into resource utilization and performance bottlenecks. Tracing reveals areas where requests are taking excessive time. By analyzing this data, developers can optimize code, improve resource allocation, and enhance overall system performance.

- Enhanced System Reliability and Stability: Proactive monitoring allows for early detection of potential issues, enabling teams to take corrective actions before they impact users. Comprehensive logging provides a detailed audit trail for troubleshooting and understanding system failures.

- Better User Experience: By improving performance, reducing errors, and ensuring system stability, logging, monitoring, and tracing contribute directly to a better user experience. Faster response times, fewer errors, and a more reliable system lead to increased user satisfaction.

- Compliance and Auditing: Logging is crucial for meeting compliance requirements and providing an audit trail for security and regulatory purposes. Detailed logs can be used to track user activity, identify security breaches, and demonstrate compliance with industry standards.

- Data-Driven Decision Making: The data generated by logging, monitoring, and tracing provides valuable insights that can be used to inform decisions about system design, resource allocation, and feature development. By analyzing trends and patterns, teams can make more informed decisions that lead to better outcomes.

Relationship Between Logging, Monitoring, and Tracing

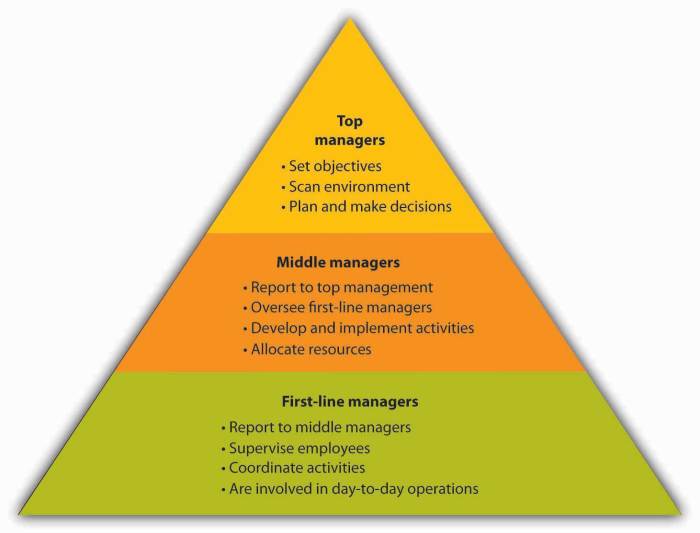

These three practices are interconnected and complementary. While they serve distinct purposes, they work together to provide a holistic view of the system. Here’s how they relate to each other.

The diagram illustrates the relationship. It depicts a software system with multiple components (e.g., microservices, databases). Arrows represent the flow of requests through the system.

- Logging is represented as individual events occurring within each component. Each component generates log entries that capture details about operations.

- Monitoring is depicted as a central system collecting metrics from all components. These metrics are aggregated and visualized on dashboards, providing a high-level overview of system health and performance. Alerts are triggered based on metric thresholds.

- Tracing is shown as a request traversing the system. The trace spans multiple components, showing the path of the request and the time spent in each component. This provides a detailed view of the request’s journey. The trace data is then used to understand how requests are processed across different services.

The diagram visually demonstrates how logging provides the granular details, monitoring offers the aggregated view, and tracing shows the flow of requests through the system, each contributing to a comprehensive understanding of the software system.

Logging Best Practices

Effective logging is crucial for understanding application behavior, troubleshooting issues, and ensuring system reliability. Adhering to established best practices streamlines the process of collecting, analyzing, and utilizing log data. This section outlines key principles for designing a robust and informative logging strategy.

Implementing these practices ensures that logs are not only created but are also easily searchable, understandable, and actionable. This ultimately improves the maintainability and reliability of the software system.

Log Levels

Choosing the appropriate log level for each message is essential for efficient log management. Different levels of severity help categorize events, allowing developers and operations teams to quickly identify and address critical issues.

Here’s a breakdown of common log levels and their intended uses:

- DEBUG: Used for detailed information, typically for development and debugging purposes. These logs are often very verbose and are usually disabled in production environments to reduce storage and performance overhead.

- INFO: Used to confirm that things are working as expected. These logs provide general information about the application’s operation, such as successful user logins or data processing completion.

- WARN: Indicates potential problems or situations that are not necessarily errors but may require attention. Examples include deprecated API usage or unusual conditions.

- ERROR: Used to log errors that prevent the successful completion of a task. These indicate a significant issue that needs investigation, such as a failed database connection.

- FATAL/CRITICAL: Used to log critical errors that cause the application to terminate or become unusable. These logs typically signal unrecoverable failures.

Properly utilizing log levels enables filtering and prioritization of log messages, allowing for focused analysis and faster troubleshooting. For example, in a production environment, only ERROR and FATAL logs might be actively monitored to alert on critical issues, while DEBUG logs are reviewed during development.
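The filtering described above can be sketched with Python's standard `logging` module. This is a minimal illustration, not a production configuration: the logger name `demo.prod`, the helper `build_logger`, and the sample messages are all made up for the example. Note that Python names its highest built-in level CRITICAL rather than FATAL.

```python
import io
import logging


def build_logger(name: str, level: int, stream) -> logging.Logger:
    """Return a logger that writes records at `level` or above to `stream`."""
    logger = logging.getLogger(name)
    logger.setLevel(level)
    logger.propagate = False  # keep demo output away from the root logger
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


# Simulate a production-style configuration: only ERROR and above are emitted.
prod_stream = io.StringIO()
prod_log = build_logger("demo.prod", logging.ERROR, prod_stream)

prod_log.debug("cache lookup key=user:123")        # dropped
prod_log.info("user login successful")             # dropped
prod_log.warning("deprecated endpoint called")     # dropped
prod_log.error("failed to connect to database")    # kept
prod_log.critical("out of memory, shutting down")  # kept

prod_lines = prod_stream.getvalue().splitlines()
print(prod_lines)
```

Passing `logging.DEBUG` to the same helper in a development environment would emit all five messages, which is the usual way to vary verbosity per environment without touching the call sites.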

Message Formatting

Consistent and well-structured log message formatting is vital for readability and efficient log analysis. A standardized format enables automated parsing, filtering, and aggregation of log data.

Here’s a summary of key aspects of effective message formatting:

- Structured Logging: Using structured formats like JSON or key-value pairs instead of plain text allows for easier parsing and querying of log data. Each piece of information is stored in a specific field, making it easier to search, filter, and analyze.

- Clear and Concise Messages: Messages should be brief but informative, providing enough context to understand the event without being overly verbose. Avoid jargon and use plain language where possible.

- Consistency: Maintaining a consistent format across all log messages simplifies analysis and reduces the learning curve for anyone reviewing the logs. This includes consistent naming conventions for fields and the overall structure of the message.

- Avoid Sensitive Data: Never log sensitive information such as passwords, API keys, or personally identifiable information (PII). Implement data masking or redaction techniques to protect sensitive data.

Adopting a structured logging approach with a consistent format improves the efficiency of log analysis. This approach facilitates the use of tools like Elasticsearch, Splunk, or Graylog for searching, filtering, and visualizing log data.
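One way to get structured output from Python's standard `logging` module is a custom formatter that serializes each record to JSON. The sketch below is only one possible approach: the class name `JsonFormatter` and the convention of passing fields under an `extra={"context": {...}}` key are assumptions made for this example, not part of the `logging` API.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Hypothetical convention: callers pass extra={"context": {...}}
        # and those fields are merged into the top-level JSON object.
        payload.update(getattr(record, "context", {}))
        return json.dumps(payload)


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("demo.json")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.handlers.clear()
logger.addHandler(handler)

logger.info(
    "User login successful",
    extra={"context": {"user_id": "user123", "request_id": "abc-123-xyz"}},
)

entry = json.loads(stream.getvalue())
print(entry["level"], entry["message"], entry["user_id"])
```

Because every field has a stable name, a log aggregation tool can index `user_id` or `request_id` directly instead of extracting them from free text with regular expressions.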

Sensitive Data Handling

Protecting sensitive data within logs is paramount for maintaining data privacy and security. Implementing appropriate measures to prevent the exposure of confidential information is a crucial aspect of logging best practices.

Here are important considerations for sensitive data handling:

- Data Masking/Redaction: Masking or redacting sensitive data before logging involves replacing sensitive information with placeholder values or removing it entirely. For example, a credit card number can be masked so that only the last four digits are shown, and a password can be redacted entirely.

- Data Encryption: Encrypting sensitive data before logging can protect it from unauthorized access. This is particularly important when storing logs in a central location or sharing them with third parties.

- Access Control: Implement strict access controls to limit who can view log data containing sensitive information. This includes using role-based access control (RBAC) to restrict access based on job function and responsibilities.

- Data Minimization: Log only the information needed to diagnose problems, and avoid logging sensitive data unnecessarily. The principle of data minimization helps to reduce the risk of data breaches.

- Compliance with Regulations: Adhere to relevant data privacy regulations, such as GDPR and CCPA, which mandate the protection of sensitive data, and ensure that logging practices comply with their requirements.

Protecting sensitive data is not just a technical requirement; it is a legal and ethical obligation. Failure to handle sensitive data properly can lead to significant legal and reputational damage.
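Masking and redaction can be applied centrally with a `logging.Filter`, so that individual call sites do not have to remember to scrub their messages. The following is a rough sketch under simplifying assumptions: the two regular expressions (`CARD_RE`, `PASSWORD_RE`) and the class name `RedactingFilter` are illustrative only, and real systems would need patterns matched to their own data formats.

```python
import io
import logging
import re

# Illustrative patterns only: a 16-digit card number (optionally separated by
# spaces or dashes) and a "password=<value>" pair.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12}(\d{4})\b")
PASSWORD_RE = re.compile(r"(password=)\S+", re.IGNORECASE)


class RedactingFilter(logging.Filter):
    """Mask card numbers and passwords before a record is written."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()
        message = CARD_RE.sub(r"****\1", message)            # keep last 4 digits
        message = PASSWORD_RE.sub(r"\1[REDACTED]", message)  # drop the value
        record.msg, record.args = message, ()
        return True  # never drop the record, only rewrite it


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(RedactingFilter())

logger = logging.getLogger("demo.redact")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.handlers.clear()
logger.addHandler(handler)

logger.info("payment with card %s password=%s accepted",
            "4111 1111 1111 1111", "hunter2")

redacted = stream.getvalue().strip()
print(redacted)
```

Pattern-based redaction is a safety net rather than a guarantee; the data minimization principle above (not passing the secret to the logger in the first place) remains the stronger control.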

Context in Log Messages

Providing sufficient context within log messages is essential for effective troubleshooting and analysis. Contextual information allows developers and operations teams to understand the environment in which an event occurred.

Here’s a breakdown of crucial context elements:

- User IDs: Including the user ID associated with an action or event helps trace user-specific activities and identify potential issues related to a particular user.

- Request IDs: A unique request ID allows you to track a specific request across multiple services and components. This is especially important in microservices architectures.

- Timestamps: Accurate timestamps are essential for correlating events and understanding the order in which they occurred. They allow for time-based analysis and troubleshooting.

- Application Version: Including the application version in log messages helps identify the specific version of the code that generated the log. This is useful when troubleshooting issues that may be related to a specific release.

- Environment Information: Logging environment details, such as the environment (e.g., production, staging, development), server name, and deployment ID, provides valuable context for understanding where the event occurred.

- Correlation IDs: Correlation IDs, similar to request IDs, are used to link related events across different components or services. They provide a means of tracing a transaction or workflow.

By incorporating relevant context, logs become significantly more valuable for understanding system behavior, identifying the root cause of issues, and improving overall system maintainability. For example, if a user reports an error, including their user ID in the logs makes it much easier to find related events and diagnose the problem.
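A common way to attach a request ID to every log line without threading it through each function call is to store it in a context variable and inject it with a logging filter. The sketch below uses only the standard library; `request_id_var`, `RequestContextFilter`, and `handle_request` are hypothetical names chosen for the example.

```python
import contextvars
import io
import logging
import uuid

# Holds the ID of the request currently being processed ("-" outside a request).
request_id_var = contextvars.ContextVar("request_id", default="-")


class RequestContextFilter(logging.Filter):
    """Copy the current request ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id_var.get()
        return True


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(RequestContextFilter())
handler.setFormatter(
    logging.Formatter("%(levelname)s request_id=%(request_id)s %(message)s")
)

logger = logging.getLogger("demo.context")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.handlers.clear()
logger.addHandler(handler)


def handle_request(user_id: str) -> str:
    """Process one (simulated) request and return the ID assigned to it."""
    request_id = str(uuid.uuid4())
    token = request_id_var.set(request_id)
    try:
        logger.info("order placed user_id=%s", user_id)
        logger.info("payment authorized user_id=%s", user_id)
    finally:
        request_id_var.reset(token)  # do not leak the ID into the next request
    return request_id


rid = handle_request("user123")
context_lines = stream.getvalue().splitlines()
print("\n".join(context_lines))
```

Searching the logs for a single `request_id` value then returns every event produced while that request was being handled.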

Sample Log Message Format

The following table shows sample log messages, demonstrating how the discussed best practices can be applied across different programming languages. It illustrates the use of log levels, context, and message formatting.

| Language | Example Message | Log Level | Context |
|---|---|---|---|
| Python | `{"timestamp": "2024-02-29T10:30:00Z", "level": "INFO", "message": "User login successful", "user_id": "user123", "request_id": "abc-123-xyz"}` | INFO | User ID, Request ID, Timestamp |
| Java | `{"timestamp": "2024-02-29T10:30:01Z", "level": "ERROR", "message": "Failed to connect to database", "error_code": "DB_CONN_ERROR", "request_id": "def-456-uvw", "server": "app-server-01"}` | ERROR | Request ID, Server, Error Code, Timestamp |
| JavaScript (Node.js) | `{"timestamp": "2024-02-29T10:30:02Z", "level": "WARN", "message": "Deprecated API usage detected", "api_endpoint": "/v1/old_api", "user_agent": "Mozilla/5.0", "request_id": "ghi-789-rst"}` | WARN | Request ID, User Agent, API Endpoint, Timestamp |
| Go | `{"timestamp": "2024-02-29T10:30:03Z", "level": "DEBUG", "message": "Processing request", "request_id": "jkl-012-mno", "method": "GET", "path": "/users"}` | DEBUG | Request ID, Method, Path, Timestamp |

The example messages are formatted as JSON, which is a common and versatile format for structured logging. Each message includes a timestamp, log level, a descriptive message, and relevant context, such as user IDs, request IDs, and error codes. This consistent format allows for easy parsing and analysis using log aggregation and analysis tools. This approach makes it simple to filter, search, and correlate events, improving the efficiency of troubleshooting and monitoring.
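To illustrate why this format is easy to analyze, the sketch below parses the four sample messages and filters them by field. It assumes each message is stored as one JSON object per line, which is a common convention but not something the samples themselves require; `parse_lines` and `filter_entries` are hypothetical helper names.

```python
import json

# The four sample messages from the table, one JSON object per line.
RAW_LOGS = """\
{"timestamp": "2024-02-29T10:30:00Z", "level": "INFO", "message": "User login successful", "user_id": "user123", "request_id": "abc-123-xyz"}
{"timestamp": "2024-02-29T10:30:01Z", "level": "ERROR", "message": "Failed to connect to database", "error_code": "DB_CONN_ERROR", "request_id": "def-456-uvw", "server": "app-server-01"}
{"timestamp": "2024-02-29T10:30:02Z", "level": "WARN", "message": "Deprecated API usage detected", "api_endpoint": "/v1/old_api", "user_agent": "Mozilla/5.0", "request_id": "ghi-789-rst"}
{"timestamp": "2024-02-29T10:30:03Z", "level": "DEBUG", "message": "Processing request", "request_id": "jkl-012-mno", "method": "GET", "path": "/users"}
"""


def parse_lines(raw: str) -> list:
    """Parse newline-delimited JSON into a list of dictionaries."""
    return [json.loads(line) for line in raw.splitlines() if line.strip()]


def filter_entries(entries: list, **criteria) -> list:
    """Return the entries whose fields equal every given key/value pair."""
    return [
        entry
        for entry in entries
        if all(entry.get(key) == value for key, value in criteria.items())
    ]


entries = parse_lines(RAW_LOGS)
errors = filter_entries(entries, level="ERROR")
by_request = filter_entries(entries, request_id="ghi-789-rst")

print(errors[0]["message"])
print(by_request[0]["api_endpoint"])
```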

Monitoring Techniques

Monitoring is crucial for maintaining the health, performance, and security of software systems. It provides real-time insights into system behavior, enabling proactive identification and resolution of issues. Effective monitoring helps to ensure a positive user experience, optimize resource utilization, and reduce downtime. This section will delve into various monitoring techniques and their applications.

Application Performance Monitoring (APM)

Application Performance Monitoring (APM) focuses on the performance of software applications. It provides detailed insights into the application’s internal workings, including code execution, database interactions, and external service calls. APM tools help identify performance bottlenecks, errors, and areas for optimization.

APM typically involves the following:

- Transaction Tracing: Tracks the flow of requests through the application, providing visibility into the time spent in different components. This allows developers to pinpoint slow operations and identify the root causes of performance issues.

- Code Profiling: Analyzes the application’s code to identify performance bottlenecks at the code level. Profiling tools highlight slow methods, inefficient algorithms, and memory leaks.

- Error Tracking: Captures and analyzes application errors, providing information about the error type, frequency, and impact. Error tracking tools often integrate with bug tracking systems to streamline the debugging process.

- Dependency Mapping: Visualizes the relationships between application components and external services. This helps to understand the impact of dependencies on application performance and identify potential points of failure.

- Real User Monitoring (RUM): Collects performance data from actual user interactions with the application. This provides insights into the user experience and helps to identify performance issues that affect real users.
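Commercial and open-source APM agents do this instrumentation automatically, but the core idea behind transaction timing and error tracking can be sketched in a few lines. The decorator `timed`, the in-memory `timings` and `errors` stores, and the two sample operations below are all invented for illustration; a real agent would export this data rather than keep it in process memory.

```python
import functools
import time
from collections import defaultdict

timings = defaultdict(list)  # operation name -> durations in seconds
errors = defaultdict(int)    # operation name -> number of failed calls


def timed(operation: str):
    """Record how long each call takes and whether it raised."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                errors[operation] += 1
                raise
            finally:
                # Runs for both successful and failed calls.
                timings[operation].append(time.perf_counter() - start)

        return wrapper

    return decorator


@timed("load_profile")
def load_profile(user_id: str) -> dict:
    time.sleep(0.01)  # stand-in for a database query
    return {"user_id": user_id}


@timed("charge_card")
def charge_card(amount: float) -> None:
    raise ValueError("card declined")  # stand-in for a failing external call


load_profile("user123")
try:
    charge_card(42.0)
except ValueError:
    pass  # the failure has still been counted and timed

for operation, samples in timings.items():
    print(operation, f"calls={len(samples)}", f"errors={errors[operation]}")
```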

Infrastructure Monitoring

Infrastructure monitoring focuses on the underlying hardware and software resources that support applications. It monitors the health and performance of servers, networks, databases, and other infrastructure components. Effective infrastructure monitoring is essential for ensuring the availability and stability of the application.

Infrastructure monitoring typically encompasses:

- Server Monitoring: Tracks key server metrics such as CPU usage, memory utilization, disk I/O, and network traffic. This helps to identify resource constraints and potential performance bottlenecks.

- Network Monitoring: Monitors network devices and traffic to identify network congestion, latency, and other network-related issues. This ensures that network connectivity is available and that data can flow between components.

- Database Monitoring: Monitors database performance, including query execution times, connection usage, and storage capacity. This helps to identify slow queries and optimize database performance.

- Container Monitoring: Monitors the performance and resource utilization of containerized applications. This helps to ensure that containers are running efficiently and that resources are allocated appropriately.

- Log Monitoring: Analyzes logs from infrastructure components to identify errors, warnings, and other events. This helps to detect and diagnose issues.

User Experience Monitoring

User Experience (UX) monitoring focuses on the user’s experience of the application. It measures key metrics that reflect how users perceive the application’s performance, usability, and overall satisfaction. This helps to ensure a positive user experience and identify areas for improvement.

UX monitoring typically involves:

- Page Load Time: Measures the time it takes for a web page to load completely. This is a critical metric for user experience, as slow page load times can lead to user frustration and abandonment.

- Response Time: Measures the time it takes for the application to respond to user actions, such as clicking a button or submitting a form. This reflects the application’s responsiveness and perceived performance.

- Error Rate: Tracks the frequency of errors encountered by users. High error rates can indicate usability issues or underlying application problems.

- Conversion Rate: Measures the percentage of users who complete a desired action, such as making a purchase or signing up for a service. This is a key metric for business success.

- User Engagement: Measures user activity, such as time spent on the site, pages visited, and features used. This provides insights into user behavior and preferences.

Proactive vs. Reactive Monitoring Strategies

Monitoring strategies can be broadly classified as proactive or reactive. Each approach has its strengths and weaknesses.

- Proactive Monitoring: This involves actively monitoring systems and applications to identify potential issues before they impact users. It focuses on predicting and preventing problems. This includes setting up alerts based on thresholds and trends. For example, if CPU usage consistently rises above 80% during peak hours, an alert can be triggered to investigate and scale resources. This is the preferred approach.

- Reactive Monitoring: This involves responding to issues after they have already occurred. It relies on users reporting problems or on alerts triggered by critical events. This approach is less desirable as it often results in downtime and a negative user experience. For example, a reactive approach would be responding to user complaints about slow performance or application crashes.
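The proactive CPU example above (alerting when usage stays above 80%) can be sketched as a simple sliding-window rule. This is an illustrative toy, not how any particular monitoring product evaluates alerts; the class name `ThresholdAlert`, the window size of three samples, and the readings are assumptions made for the example.

```python
from collections import deque


class ThresholdAlert:
    """Fire when the last `window` samples are all above `threshold`."""

    def __init__(self, threshold: float, window: int):
        self.threshold = threshold
        self.samples = deque(maxlen=window)  # oldest sample drops off automatically

    def observe(self, value: float) -> bool:
        """Record one sample and report whether the alert condition holds."""
        self.samples.append(value)
        window_full = len(self.samples) == self.samples.maxlen
        return window_full and all(s > self.threshold for s in self.samples)


cpu_alert = ThresholdAlert(threshold=80.0, window=3)
readings = [72.0, 85.0, 91.0, 79.0, 83.0, 88.0, 95.0]  # CPU usage in percent

# Indices of the readings at which the alert would fire.
fired_at = [i for i, value in enumerate(readings) if cpu_alert.observe(value)]
print(fired_at)
```

Requiring several consecutive samples above the threshold, rather than a single one, is a common way to avoid alerting on short spikes: here the brief dip to 79% resets the condition, and the alert fires only once usage has stayed high for three readings in a row.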

Key Metrics to Monitor

Monitoring various metrics across different areas is crucial for a comprehensive understanding of system health and performance.

- Application Performance:

  - Response Times: Time taken for the application to respond to requests.

  - Error Rates: Percentage of requests that result in errors.

  - Throughput: Number of requests processed per unit of time.

  - Transaction Success Rate: Percentage of successful transactions.

  - Database Query Times: Time taken for database queries to execute.

- Infrastructure Performance:

  - CPU Usage: Percentage of CPU resources being utilized.

  - Memory Usage: Amount of memory being used.

  - Disk I/O: Rate of data transfer to and from disk.

  - Network Latency: Delay in network communication.

  - Network Throughput: Amount of data transferred over the network.

- User Experience:

  - Page Load Time: Time taken for a web page to load.

  - Click Through Rate (CTR): Percentage of users who click on a specific element.

  - Conversion Rate: Percentage of users who complete a desired action.

  - Bounce Rate: Percentage of users who leave a website after viewing only one page.

  - Session Duration: Average time users spend on a website.

- Security:

  - Failed Login Attempts: Number of unsuccessful login attempts.

  - Unauthorized Access Attempts: Attempts to access restricted resources.

  - Intrusion Detection System (IDS) Alerts: Alerts generated by the IDS system.

  - Security Incident Response Time: Time taken to respond to security incidents.

  - Vulnerability Scan Results: Results from vulnerability scans.
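Several of the application performance metrics above can be derived from the same raw request records. The sketch below computes throughput, error rate, and response-time percentiles from a small made-up sample; the `RequestRecord` type, the nearest-rank percentile method, and the choice to count only 5xx statuses as errors are assumptions for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    duration_ms: float
    status: int  # HTTP status code


def percentile(values: list, pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(records: list, window_seconds: float) -> dict:
    """Compute throughput, error rate, and latency percentiles for one window."""
    durations = [r.duration_ms for r in records]
    failures = sum(1 for r in records if r.status >= 500)
    return {
        "throughput_rps": len(records) / window_seconds,
        "error_rate_pct": 100 * failures / len(records),
        "p50_ms": percentile(durations, 50),
        "p95_ms": percentile(durations, 95),
    }


# Ten made-up requests observed over a five-second window.
records = [
    RequestRecord(duration_ms, status)
    for duration_ms, status in [
        (120, 200), (80, 200), (95, 200), (300, 500), (110, 200),
        (105, 200), (90, 200), (2000, 503), (100, 200), (85, 200),
    ]
]

summary = summarize(records, window_seconds=5)
print(summary)
```

The gap between the median (100 ms) and the 95th percentile (2000 ms) in this sample shows why percentiles are usually tracked alongside, or instead of, averages: a single slow request is invisible in the median but dominates the tail.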

Tracing Implementation

Tracing is crucial for understanding the flow of requests through a distributed system. It provides a comprehensive view of how different services interact, allowing for the identification of performance bottlenecks and the debugging of complex issues. This section delves into the implementation of tracing, particularly in microservices architectures, and outlines the practical steps involved.

Distributed Tracing and Performance Bottlenecks

Distributed tracing plays a vital role in microservices architectures, offering a detailed view of request paths across multiple services. Microservices, by their nature, involve requests traversing various components. Tracing enables developers to track these requests, understand their journey, and pinpoint where delays occur.

The benefits of distributed tracing include:

- Identifying Performance Bottlenecks: Tracing pinpoints services or operations taking excessive time, enabling optimization efforts. For instance, if a specific database query consistently takes longer than expected, tracing can highlight this, leading to database query optimization.

- Debugging Complex Issues: When an error arises in a microservices environment, tracing reveals the exact path of the request, showing which services failed and why. This makes debugging significantly faster than examining individual service logs.

- Understanding Service Dependencies: Tracing reveals how services interact, highlighting dependencies and potential points of failure. This understanding is essential for designing resilient systems.

- Optimizing Resource Allocation: By understanding the performance characteristics of each service, resources can be allocated more efficiently, preventing over-provisioning and reducing costs.

Implementing Tracing with Jaeger and Zipkin

Jaeger and Zipkin are popular open-source tools for implementing distributed tracing. They share similar functionalities but have distinct implementation details. Both tools offer features for collecting, storing, and visualizing trace data.

Jaeger, originally developed by Uber, offers:

- Scalability: Designed to handle large volumes of trace data, making it suitable for high-traffic applications.

- Multiple Storage Backends: Supports various storage backends, including Cassandra, Elasticsearch, and memory.

- UI for Visualization: Provides a user-friendly interface for exploring traces, filtering, and analyzing performance.

Zipkin, developed by Twitter, offers:

- Simplicity: Known for its ease of setup and use.

- Integration with Various Libraries: Supports a wide range of instrumentation libraries, making it easy to integrate into different technology stacks.

- Trace Visualization: Provides a web UI to visualize traces and understand the flow of requests.

Both tools generally follow a similar architecture:

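- Instrumentation: Code is instrumented to generate trace data. This often involves using a vendor-neutral library such as OpenTelemetry, or libraries specific to the chosen tracing tool (e.g., Zipkin libraries or the older Jaeger client libraries, which have since been deprecated in favor of OpenTelemetry).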

- Data Collection: The instrumented code sends trace data to a collector. The collector receives spans (units of work) and organizes them into traces.

- Data Storage: The collector stores the trace data in a backend database (e.g., Cassandra, Elasticsearch, or memory).

- Visualization: A UI allows users to query the stored trace data and visualize the traces, including service interactions, timing information, and any errors.
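To make the span and trace terminology concrete, the following standard-library sketch models the instrumentation and collection stages in a single process. It is a teaching toy, not how Jaeger or Zipkin are implemented: `Span`, `Collector`, and `start_span` are hypothetical names, spans are "sent" by a direct method call instead of over the network, and storage is a plain dictionary.

```python
import time
import uuid
from collections import defaultdict
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """One unit of work; spans sharing a trace_id form a trace."""

    trace_id: str
    span_id: str
    parent_id: Optional[str]
    service: str
    name: str
    start: float = 0.0
    end: float = 0.0

    @property
    def duration_ms(self) -> float:
        return (self.end - self.start) * 1000


class Collector:
    """Receives finished spans and groups them into traces by trace_id."""

    def __init__(self):
        self.traces = defaultdict(list)

    def receive(self, span: Span) -> None:
        self.traces[span.trace_id].append(span)


@contextmanager
def start_span(collector: Collector, service: str, name: str, parent: Optional[Span] = None):
    """Time a block of work and report it to the collector when it ends."""
    span = Span(
        trace_id=parent.trace_id if parent else uuid.uuid4().hex,
        span_id=uuid.uuid4().hex[:16],
        parent_id=parent.span_id if parent else None,
        service=service,
        name=name,
        start=time.perf_counter(),
    )
    try:
        yield span
    finally:
        span.end = time.perf_counter()
        collector.receive(span)


collector = Collector()
with start_span(collector, "api-gateway", "GET /orders") as root:
    with start_span(collector, "order-service", "list_orders", parent=root) as child:
        with start_span(collector, "database", "SELECT orders", parent=child):
            time.sleep(0.005)  # stand-in for query time

trace_spans = collector.traces[root.trace_id]
for span in trace_spans:
    print(f"{span.service:14} {span.name:14} {span.duration_ms:.2f} ms")
```

Because each span records its parent, a visualization layer can rebuild the call tree and show that most of the root span's time was spent inside the database span.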

Instrumenting a Simple API with Tracing

Instrumenting an API with tracing involves several steps, regardless of the chosen tracing tool. This procedure focuses on using OpenTelemetry, which is a vendor-neutral standard for generating and collecting telemetry data (metrics, logs, and traces).

Here is a step-by-step procedure for instrumenting a simple API with tracing:

- Choose a Language and Framework: Select the programming language and framework for your API (e.g., Python with Flask or Node.js with Express).

- Install OpenTelemetry Libraries: Install the necessary OpenTelemetry libraries for your chosen language and framework. For example, in Python, you would install `opentelemetry-api`, `opentelemetry-sdk`, and a framework-specific instrumentation library (e.g., `opentelemetry-instrumentation-flask`).

- Configure OpenTelemetry: Configure OpenTelemetry to export trace data. This typically involves setting up a trace provider and a span processor. The span processor sends the trace data to a collector.

- Instrument Your Code: Instrument your API endpoints by creating spans. A span represents a unit of work. You can create spans manually or use instrumentation libraries that automatically create spans for incoming requests and outgoing calls to other services.

- Configure a Collector (e.g., Jaeger): Set up a collector (e.g., Jaeger) to receive, process, and store the trace data. This involves configuring the collector’s endpoint in your API’s OpenTelemetry configuration.

- Run and Test: Run your API and make requests. The tracing data will be sent to the collector. Use the Jaeger UI to view the traces, which show the request flow, timings, and any errors.

Choosing the Right Tools

Selecting the appropriate tools for logging, monitoring, and tracing is crucial for effectively managing and troubleshooting applications and infrastructure. The right choice can significantly impact the efficiency of debugging, performance optimization, and overall system reliability. The following sections will explore popular tools and their capabilities, helping you make informed decisions.

Popular Logging, Monitoring, and Tracing Tools and Their Key Features

Several tools have emerged as industry standards for logging, monitoring, and tracing. These tools offer a wide range of features designed to address different needs and complexities. Understanding the core functionalities of each tool is vital for making the best choice.

- Logging Tools: These tools focus on collecting, storing, and analyzing log data.

  - ELK Stack (Elasticsearch, Logstash, Kibana): This open-source stack is a popular choice for centralized logging. Elasticsearch provides the search and storage capabilities, Logstash handles log ingestion and processing, and Kibana offers a user-friendly interface for visualization and analysis. A key feature is its ability to index and search vast amounts of log data quickly.

  - Splunk: A commercial platform offering comprehensive logging and analytics capabilities. Splunk can ingest data from various sources, including logs, metrics, and events. It provides powerful search and reporting features, along with advanced analytics and security features.

  - Graylog: An open-source log management solution. It offers a web interface for log aggregation, analysis, and alerting. Graylog supports various input sources and provides features for data enrichment and visualization.

- Monitoring Tools: Monitoring tools are designed to track the performance and health of systems and applications in real-time.

  - Prometheus: An open-source monitoring and alerting toolkit. It collects metrics from various sources using a pull model. Prometheus is particularly well-suited for containerized environments and provides a powerful query language (PromQL) for data analysis.

  - Grafana: An open-source data visualization and monitoring tool. Grafana integrates with various data sources, including Prometheus, Elasticsearch, and InfluxDB. It allows users to create customizable dashboards and alerts.

  - Nagios: An open-source monitoring system that checks the availability and performance of servers, services, and applications. It uses plugins to monitor different aspects of the system and provides alerts based on predefined thresholds.

  - Datadog: A commercial monitoring and analytics platform. Datadog offers comprehensive monitoring capabilities, including infrastructure monitoring, application performance monitoring (APM), and log management. It provides pre-built integrations with various technologies and services.

- Tracing Tools: Tracing tools focus on tracking requests as they flow through a distributed system.

  - Jaeger: An open-source, distributed tracing system. It’s inspired by Google’s Dapper and provides features for visualizing request traces, identifying performance bottlenecks, and understanding service dependencies.

  - Zipkin: Another open-source distributed tracing system. Zipkin is designed to collect and visualize timing data needed to troubleshoot latency problems in service architectures.

  - AWS X-Ray: A service from Amazon Web Services that helps developers analyze and debug distributed applications. It provides features for tracing requests, identifying performance issues, and understanding service interactions within the AWS ecosystem.

Comparing Open-Source Versus Commercial Tools

The choice between open-source and commercial tools depends on various factors, including budget, technical expertise, and specific requirements. Both categories offer distinct advantages and disadvantages.

- Open-Source Tools:

  - Advantages: Generally, open-source tools offer cost savings due to their free licensing. They often have a large community supporting them, leading to readily available documentation and a broad range of integrations. Customization is often easier, allowing for tailoring to specific needs.

  - Disadvantages: Support can be community-driven, which might not provide the same level of responsiveness as commercial support. Implementation and maintenance might require more in-house expertise. The feature set might be less extensive compared to some commercial alternatives.

- Commercial Tools:

  - Advantages: Commercial tools often provide comprehensive features, including advanced analytics, security features, and dedicated support. They typically offer a more user-friendly experience and ease of deployment.

  - Disadvantages: They come with licensing fees, which can be significant, especially for large-scale deployments. Customization options might be limited compared to open-source tools. Vendor lock-in is a potential concern.

Comparison Table: Features, Scalability, Ease of Use, and Cost

The following table provides a comparative analysis of selected logging, monitoring, and tracing tools based on key criteria.

| Tool | Features | Scalability | Ease of Use | Cost |
|---|---|---|---|---|
| ELK Stack (Elasticsearch, Logstash, Kibana) | Centralized logging, search, visualization, log processing. | Highly scalable, designed for large datasets. | Kibana provides a user-friendly interface; Logstash can be complex to configure. | Open-source, free to use (costs associated with infrastructure). |
| Splunk | Comprehensive logging, analytics, security features, dashboards, alerting. | Scalable, designed for enterprise-level deployments. | User-friendly interface, extensive documentation. | Commercial, based on data volume. |
| Prometheus | Metric collection, alerting, time-series data storage. | Scalable, particularly suited for containerized environments. | Requires understanding of PromQL for querying data. | Open-source, free to use (costs associated with infrastructure). |
| Grafana | Data visualization, dashboards, alerting, integrates with various data sources. | Scalable, supports a wide range of data sources. | User-friendly interface, easy to create dashboards. | Open-source, free to use (costs associated with infrastructure). |
| Jaeger | Distributed tracing, visualization of request traces, service dependency analysis. | Scalable, designed for distributed systems. | Web UI for trace visualization. | Open-source, free to use (costs associated with infrastructure). |
| Zipkin | Distributed tracing, identifying performance bottlenecks. | Scalable, optimized for tracing. | Web UI for trace visualization. | Open-source, free to use (costs associated with infrastructure). |
| Datadog | Infrastructure monitoring, APM, log management, dashboards, alerting. | Scalable, designed for enterprise-level deployments. | User-friendly interface, extensive integrations. | Commercial, based on usage. |

Setting up a Centralized Logging System

A centralized logging system is crucial for effective monitoring, troubleshooting, and security analysis of your applications and infrastructure. It allows you to collect, aggregate, store, and analyze logs from various sources in a single location. This centralized approach provides a holistic view of your system’s behavior, making it easier to identify and resolve issues.

Architecture of a Centralized Logging System

The architecture of a centralized logging system typically involves several key components working together.

- Log Aggregation: This is the process of collecting logs from different sources, such as servers, applications, and network devices. Log aggregators act as intermediaries, receiving logs from these sources and preparing them for further processing.

- Storage: Once aggregated, logs need to be stored in a persistent and accessible manner. This typically involves a database or a distributed file system designed for storing large volumes of data. The storage solution should provide efficient indexing and search capabilities.

- Analysis: The final step involves analyzing the stored logs to extract valuable insights. This can involve searching for specific events, creating dashboards, and setting up alerts. Analysis tools provide visualization and reporting features to help you understand your system’s behavior.

Setting up a Basic Centralized Logging System using ELK Stack

The ELK Stack (Elasticsearch, Logstash, Kibana) is a popular and powerful open-source solution for centralized logging. Here’s a simplified guide to setting it up.

- Install Elasticsearch: Elasticsearch is a distributed, RESTful search and analytics engine. Install it on a server or cluster. It will store and index your logs.

- Install Logstash: Logstash is a data processing pipeline. It receives logs from various sources, processes them (e.g., parsing, filtering), and sends them to Elasticsearch. Install it on a server.

- Install Kibana: Kibana is a data visualization and exploration tool. It connects to Elasticsearch and allows you to search, analyze, and visualize your log data. Install it on a server.

- Configure Logstash: Configure Logstash to receive logs from your sources (e.g., application logs, system logs), parse them, and send them to Elasticsearch.

- Configure Kibana: Configure Kibana to connect to Elasticsearch and define index patterns to access your log data.

Sample Configuration File for Logstash

Here’s a sample Logstash configuration file (logstash.conf) that parses syslog-style messages. It assumes you’re receiving logs via the syslog protocol.

```
input {
  syslog {
    port => 514          # ports below 1024 usually require elevated privileges
    type => "syslog"
  }
}

filter {
  if [type] == "syslog" {
    grok {
      # Split each line into timestamp, hostname, program, and message text
      match => { "message" => "%{SYSLOGTIMESTAMP:timestamp} %{SYSLOGHOST:hostname} %{DATA:program}: %{GREEDYDATA:message}" }
      overwrite => [ "message" ]
      add_field => { "received_at" => "%{@timestamp}" }
      add_tag => [ "syslog" ]
    }
    date {
      # Syslog pads single-digit days with a space, hence the two patterns
      match => [ "timestamp", "MMM  d HH:mm:ss", "MMM dd HH:mm:ss" ]
    }
  }
}

output {
  elasticsearch {
    hosts => ["localhost:9200"]
    index => "syslog-%{+YYYY.MM.dd}"
  }
  stdout { codec => rubydebug }
}
```

This configuration does the following:

- Input: Defines a syslog input on port 514.

- Filter: Uses the `grok` filter to parse syslog messages, extracting fields like timestamp, hostname, and program. The `date` filter parses the extracted timestamp and uses it as the event’s `@timestamp`.

- Output: Sends the parsed logs to Elasticsearch and also prints them to the console (for debugging). The `index` setting in the Elasticsearch output configures the index name with a daily pattern.
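
To make the input side of this pipeline concrete, here is a minimal Python sketch of an application that ships log lines to a syslog listener over UDP using the standard library's `SysLogHandler`. The program name `order-service`, the function name `build_syslog_logger`, and the line layout are assumptions chosen to resemble the timestamp, hostname, program, and message fields that the grok pattern extracts; a real deployment may prefer TCP or a dedicated log shipper.

```python
import logging
import logging.handlers
import socket


def build_syslog_logger(host: str = "localhost", port: int = 514,
                        program: str = "order-service") -> logging.Logger:
    """Return a logger that sends syslog-style lines to host:port over UDP."""
    # SysLogHandler uses UDP by default and prefixes each line with <priority>.
    handler = logging.handlers.SysLogHandler(address=(host, port))

    # Layout: "Mon DD HH:MM:SS hostname program: message"
    formatter = logging.Formatter(
        fmt=f"%(asctime)s {socket.gethostname()} {program}: %(message)s",
        datefmt="%b %d %H:%M:%S",
    )
    handler.setFormatter(formatter)

    logger = logging.getLogger(program)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()    # avoid duplicate handlers on repeated calls
    logger.addHandler(handler)
    logger.propagate = False   # keep these lines out of the root logger
    return logger
```

Calling `build_syslog_logger().info("payment accepted order_id=%s", 1234)` would emit one datagram to port 514 on the local machine. UDP delivery is not guaranteed, so this sketch suits experimentation rather than logs that must never be lost.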

Alerting and Notification Systems

Implementing robust alerting and notification systems is crucial for proactive incident management and maintaining system health. These systems transform collected log data and monitoring metrics into actionable insights, enabling rapid response to critical events. By automating the detection and notification of issues, organizations can minimize downtime, improve performance, and enhance the overall user experience.

Importance of Alerting and Notifications

Alerting and notification systems are vital components of a comprehensive observability strategy. They provide real-time awareness of system behavior, enabling timely intervention when anomalies or critical issues arise.

- Proactive Issue Detection: Automated alerts identify potential problems before they impact users. This proactive approach allows for preventative measures and minimizes the risk of widespread outages.

- Faster Incident Response: Timely notifications expedite the incident response process. When an issue is detected, the relevant teams are immediately notified, allowing them to begin troubleshooting and remediation efforts promptly.

- Reduced Downtime: By enabling quick responses, alerting systems help minimize downtime. Every minute of reduced downtime translates into increased productivity, revenue, and customer satisfaction.

- Improved System Reliability: Consistent monitoring and alerting help identify and address recurring issues, leading to a more stable and dependable infrastructure.

- Enhanced Operational Efficiency: Automation reduces the need for manual monitoring and analysis. This frees up operations teams to focus on more strategic tasks.

Common Alert Scenarios

Effective alerting systems are built upon a foundation of well-defined alert scenarios that target specific system behaviors and potential issues. The following are examples of common scenarios that warrant alerts:

- High Error Rates: An increase in the number of errors logged by an application or service often indicates underlying problems, such as code defects, infrastructure issues, or external dependencies failing. An alert can be triggered when the error rate exceeds a predefined threshold, such as 5% of requests.

- Slow Response Times: Slow response times can negatively impact the user experience. Alerts can be configured to trigger when the average response time for a service or API exceeds a specified duration, such as 2 seconds. This allows for investigation into performance bottlenecks.

- Resource Exhaustion: Alerts can be set up to monitor resource utilization, such as CPU, memory, disk space, and network bandwidth. When resource utilization reaches a critical level (e.g., 90% CPU usage), an alert can be triggered to notify administrators.

- Service Outages: Alerts can be configured to detect service outages. Monitoring tools can periodically check the availability of services and trigger alerts when a service becomes unavailable.

- Security Breaches: Unusual login attempts, unauthorized access, or other security-related events can trigger alerts. These alerts are critical for identifying and responding to potential security threats.

- Database Issues: Alerts can be configured to monitor database performance, such as query latency, connection pool exhaustion, or disk space usage.
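
The scenarios above mostly reduce to comparing an observed value with a threshold. The following Python sketch evaluates a few of them using the example thresholds from the list (5% error rate, 2 seconds average response time, 90% CPU usage). The `Snapshot` fields and the alert names are hypothetical; in practice these checks would normally live in a monitoring tool rather than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """One observation window for a service (field names are illustrative)."""
    total_requests: int
    failed_requests: int
    avg_response_seconds: float
    cpu_utilization: float   # fraction between 0.0 and 1.0
    healthy: bool            # result of the latest availability check


def evaluate_alerts(s: Snapshot) -> list[str]:
    """Return the names of the alert scenarios triggered by this snapshot."""
    alerts = []
    # Guard against division by zero when no requests were served.
    error_rate = s.failed_requests / s.total_requests if s.total_requests else 0.0
    if error_rate > 0.05:
        alerts.append("HighErrorRate")
    if s.avg_response_seconds > 2.0:
        alerts.append("SlowResponseTime")
    if s.cpu_utilization >= 0.90:
        alerts.append("ResourceExhaustion")
    if not s.healthy:
        alerts.append("ServiceOutage")
    return alerts
```

For instance, a snapshot with 80 failures out of 1,000 requests and 95% CPU usage would trigger both the error-rate and resource-exhaustion alerts.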

Designing an Alerting Rule with Prometheus

Prometheus is a popular open-source monitoring system widely used for alerting. Designing a simple alerting rule involves defining a query that identifies the condition to be monitored and specifying how and when alerts should be triggered. Let’s design an alerting rule in Prometheus to detect high error rates in a hypothetical web service.

1. Metric Selection: Assume the web service exposes a metric called `http_requests_total`, which is a counter that increments for each HTTP request. We can also assume there’s a label called `status_code`, which indicates the HTTP status code. To calculate the error rate, we can use the following PromQL query:

    sum(rate(http_requests_total{status_code=~"5.."}[5m])) / sum(rate(http_requests_total[5m])) > 0.05

This query calculates the error rate over the last 5 minutes. The breakdown is as follows:

- `http_requests_total{status_code=~"5.."}`: Selects all requests with 5xx status codes (server errors).

- `rate(...[5m])`: Calculates the per-second rate of increase of the counter over the last 5 minutes.

- `sum(...)`: Sums the rates across all instances.

- `> 0.05`: The condition that triggers the alert if the error rate is greater than 5%.

2. Alert Definition in Prometheus: The following is an example of how to define this alert rule in a Prometheus rules file (e.g., alert_rules.yml, loaded through the `rule_files` setting in prometheus.yml):

    groups:
      - name: web_service_alerts
        rules:
          - alert: HighErrorRate
            expr: sum(rate(http_requests_total{status_code=~"5.."}[5m])) / sum(rate(http_requests_total[5m])) > 0.05
            for: 5m
            labels:
              severity: critical
            annotations:
              summary: "High error rate on web service"
              description: "The error rate on the web service has exceeded 5% in the last 5 minutes."

3. Explanation of the Alert Rule Components:

- `alert: HighErrorRate`: Defines the name of the alert.

- `expr: ...`: Specifies the PromQL expression that defines the alerting condition.

- `for: 5m`: Specifies the duration for which the condition must be true before an alert is fired. This prevents transient spikes from triggering alerts.

- `labels:`: Adds metadata to the alert, such as severity.

- `annotations:`: Provides additional information about the alert, such as a summary and description. These annotations are often used in notification systems to provide context.

4. Notification System Integration: Prometheus itself does not deliver notifications. It forwards firing alerts to Alertmanager, which groups, deduplicates, and routes them to receivers such as email, Slack, or PagerDuty. Each notification includes the alert details, labels, and annotations, allowing the operations team to quickly understand the problem and take action.
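
To make the arithmetic behind the rule easier to follow, here is a simplified Python sketch of what the expression and the `for` clause compute. It is not how Prometheus is implemented: the real `rate()` also handles counter resets and extrapolates to the window edges, both of which are ignored here. The `Sample` type and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    timestamp: float  # seconds
    total: float      # http_requests_total over all status codes
    errors: float     # http_requests_total restricted to 5xx status codes


def per_second_rate(first: float, last: float, seconds: float) -> float:
    """Average per-second increase of a counter (no reset handling)."""
    return (last - first) / seconds if seconds > 0 else 0.0


def error_ratio(window: list[Sample]) -> float:
    """Ratio of the 5xx request rate to the overall request rate."""
    first, last = window[0], window[-1]
    span = last.timestamp - first.timestamp
    total_rate = per_second_rate(first.total, last.total, span)
    error_rate = per_second_rate(first.errors, last.errors, span)
    return error_rate / total_rate if total_rate > 0 else 0.0


def alert_state(evaluations: list[tuple[float, float]],
                threshold: float = 0.05,
                hold_seconds: float = 300.0) -> str:
    """Mimic `for: 5m`: the breach must persist before the alert fires.

    `evaluations` holds (timestamp, ratio) pairs in time order.
    """
    breach_started = None
    state = "inactive"
    for ts, ratio in evaluations:
        if ratio > threshold:
            if breach_started is None:
                breach_started = ts
            held = ts - breach_started
            state = "firing" if held >= hold_seconds else "pending"
        else:
            # Any evaluation below the threshold resets the timer.
            breach_started = None
            state = "inactive"
    return state
```

With two samples taken 300 seconds apart, where the total counter grows from 1,000 to 4,000 and the 5xx counter from 10 to 250, the overall rate is 10 requests per second, the error rate is 0.8 per second, and the ratio is 0.08, which is above the 5% threshold.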

Data Retention and Security

Managing log data effectively involves not only collecting, monitoring, and analyzing it but also carefully considering how long the data is stored and how it’s protected. Data retention and security are crucial aspects of a robust logging, monitoring, and tracing strategy. They ensure compliance with regulations, protect sensitive information, and enable effective incident response.

Data Retention Policies

Data retention policies define how long log data is stored. These policies are essential for several reasons, including legal and regulatory compliance, operational efficiency, and incident investigation. The appropriate retention period depends on the type of data, industry regulations, and organizational needs.

- Compliance: Many industries, such as finance and healthcare, are subject to regulations that mandate specific retention periods. For example, the Payment Card Industry Data Security Standard (PCI DSS) requires audit logs to be retained for at least one year, with the most recent three months immediately available for analysis. Similarly, HIPAA in the healthcare sector requires covered entities to retain required compliance documentation for six years.

- Incident Investigation: Historical log data is vital for investigating security incidents and troubleshooting operational issues. Longer retention periods provide a more comprehensive view of past events, allowing security teams to identify patterns, understand the scope of an attack, and determine the root cause of problems.

- Operational Efficiency: While longer retention periods are often desirable for incident response, they can also lead to increased storage costs and management overhead. Data retention policies help balance the need for historical data with the practical considerations of storage capacity and cost.

- Legal Requirements: Legal proceedings or audits might require access to historical log data. A well-defined data retention policy ensures that necessary data is available when needed.

The implementation of data retention policies often involves defining retention periods for different log types, such as application logs, security logs, and audit logs. Regularly reviewing and updating these policies is essential to adapt to changing regulatory requirements and organizational needs.
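
As an illustration of per-type retention periods, the Python sketch below decides which log archives have outlived their retention window. The periods in `RETENTION` and the archive names are placeholders, not recommendations; actual values depend on the regulations and organizational needs discussed above.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per log type (placeholders only).
RETENTION = {
    "application": timedelta(days=30),
    "security": timedelta(days=365),
    "audit": timedelta(days=365 * 2),
}


def is_expired(log_type: str, created_at: datetime, now: datetime) -> bool:
    """True when an archive is older than the retention period for its type."""
    return now - created_at > RETENTION[log_type]


def select_expired(archives: list[tuple[str, str, datetime]],
                   now: datetime) -> list[str]:
    """Return names of archives that are due for deletion.

    Each archive is a (name, log_type, created_at) tuple.
    """
    return [name for name, log_type, created_at in archives
            if is_expired(log_type, created_at, now)]


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    archives = [
        ("app-2024-04.log.gz", "application", datetime(2024, 4, 1, tzinfo=timezone.utc)),
        ("sec-2024-04.log.gz", "security", datetime(2024, 4, 1, tzinfo=timezone.utc)),
    ]
    print(select_expired(archives, now))
```

A scheduled job could feed the returned names to whatever deletion or archival mechanism the storage layer provides. Many log stores also offer built-in lifecycle policies that make a custom script unnecessary.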

Security Considerations for Logging

Securing log data is paramount to protect sensitive information and maintain the integrity of the logging system. Several security considerations must be addressed to ensure that log data is protected from unauthorized access, modification, or deletion.

- Access Control: Implementing strict access control mechanisms is crucial. Only authorized personnel, such as security analysts, system administrators, and developers, should have access to log data. Role-Based Access Control (RBAC) can be used to define different levels of access based on job responsibilities.

- Data Encryption: Encrypting log data at rest and in transit protects it from unauthorized access. Encryption at rest involves encrypting the log files stored on servers or in cloud storage. Encryption in transit involves encrypting the communication channels used to transmit log data, such as using Transport Layer Security (TLS) or Secure Shell (SSH).

- Data Integrity: Ensuring the integrity of log data is critical. Mechanisms like digital signatures or hashing can be used to verify that log data has not been tampered with. These techniques help detect any unauthorized modifications to the logs.

- Security Auditing: Regularly auditing the logging system, including access logs, configuration changes, and data access patterns, is essential. Security audits help identify potential vulnerabilities, detect unauthorized activities, and ensure that security controls are effective.

- Compliance Requirements: Adhering to relevant compliance requirements is essential. Regulations such as GDPR (General Data Protection Regulation), HIPAA (Health Insurance Portability and Accountability Act), and PCI DSS (Payment Card Industry Data Security Standard) impose specific security requirements for log data, including data retention, access control, and data encryption.

Implementing these security considerations involves a combination of technical measures, organizational policies, and regular monitoring.
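
The data integrity point can be illustrated with a small Python sketch that chains log lines together with HMAC-SHA256 tags, so that editing or removing an earlier entry invalidates the tags that follow it. This is one possible technique, not a complete tamper-proofing scheme: the function names are hypothetical, key management is out of scope, and truncating entries from the end of the chain goes undetected unless the latest tag is also stored somewhere else.

```python
import hashlib
import hmac


def chain_logs(lines: list[str], key: bytes) -> list[tuple[str, str]]:
    """Attach to each line an HMAC tag that also covers the previous tag."""
    previous = b""
    chained = []
    for line in lines:
        tag = hmac.new(key, previous + line.encode("utf-8"),
                       hashlib.sha256).hexdigest()
        chained.append((line, tag))
        previous = tag.encode("ascii")
    return chained


def verify_chain(entries: list[tuple[str, str]], key: bytes) -> bool:
    """Recompute every tag in order and compare it in constant time."""
    previous = b""
    for line, tag in entries:
        expected = hmac.new(key, previous + line.encode("utf-8"),
                            hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, tag):
            return False
        previous = tag.encode("ascii")
    return True
```

Because each tag depends on the one before it, modifying a single line or deleting an entry from the middle causes verification to fail from that point onward.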

Redacting Sensitive Information from Log Messages

Redacting sensitive information from log messages is a crucial practice to protect privacy and comply with data protection regulations. This involves removing or masking sensitive data, such as personally identifiable information (PII), financial data, and credentials, from log entries.

- Identifying Sensitive Data: The first step is to identify the types of sensitive data that need to be redacted. This includes but is not limited to:

- Personally Identifiable Information (PII): Names, addresses, email addresses, phone numbers, Social Security numbers.

- Financial Data: Credit card numbers, bank account details, transaction amounts.

- Credentials: Passwords, API keys, access tokens.

- Health Information: Medical records, diagnoses, treatment information.

- Redaction Techniques: Several techniques can be used to redact sensitive information:

- Masking: Replacing sensitive data with a masked value (e.g., “XXXXXXXXXXXX1234” for a credit card number).

- Tokenization: Replacing sensitive data with a unique token that can be used to retrieve the original data from a secure vault.

- Anonymization: Removing or modifying data to prevent the identification of individuals.

- Pseudonymization: Replacing identifying information with pseudonyms, which allows for data analysis without revealing the original identity.

- Data Scrubbing: Removing entire fields or log entries that contain sensitive information.

- Implementation: Implementing redaction requires careful planning and execution. Consider the following:

- Log Source Configuration: Configure logging at the source to redact sensitive data before it is written to the logs. This is the most secure approach.

- Log Processing Pipelines: Use log processing pipelines to transform log data and redact sensitive information. Tools like Fluentd, Logstash, or Splunk can be used for this purpose.

- Regular Expression (Regex) Matching: Use regular expressions to identify and redact sensitive data patterns. For example, a regex can be used to identify and redact credit card numbers.

- Data Loss Prevention (DLP) Tools: Integrate DLP tools to automatically identify and redact sensitive data in log messages.

- Examples:

- Credit Card Number Redaction: A log message might contain a credit card number like “Card number: 1234-5678-9012-3456”. After redaction, the log message would become “Card number: XXXX-XXXX-XXXX-3456”.

- Email Address Redaction: A log message might contain an email address, such as “User logged in: jane.doe@example.com”. After redaction, the log message could become “User logged in: ***@example.com”.

- Password Redaction: A log message might contain a password. After redaction, the log message would show that a password was used but would not display the actual password.

- Testing and Validation: Regularly test and validate redaction rules to ensure they are effective and do not inadvertently redact legitimate data.
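
The regex-based approach at the log source can be sketched in Python as a `logging.Filter` that rewrites each record before it is written. The patterns below are deliberately simple assumptions: they cover 16-digit card numbers, basic email addresses, and `password=`, `api_key=`, or `token=` pairs, and they will miss other formats. The names `redact` and `RedactingFilter` are illustrative.

```python
import logging
import re

# 16-digit card numbers, optionally separated by spaces or hyphens.
CARD_RE = re.compile(r"\b\d{4}[- ]?\d{4}[- ]?\d{4}[- ]?(\d{4})\b")
# Simple email pattern; the domain is kept, the local part is masked.
EMAIL_RE = re.compile(r"\b[\w.+-]+@([\w-]+(?:\.[\w-]+)+)")
# key=value credentials such as password=..., api_key=..., token=...
SECRET_RE = re.compile(r"(?i)\b(password|api_key|token)=\S+")


def redact(text: str) -> str:
    """Mask card numbers, email local parts, and credential values."""
    text = CARD_RE.sub(r"XXXX-XXXX-XXXX-\1", text)
    text = EMAIL_RE.sub(r"***@\1", text)
    text = SECRET_RE.sub(r"\1=[REDACTED]", text)
    return text


class RedactingFilter(logging.Filter):
    """Redact the fully formatted message before any handler sees it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = None  # arguments are already merged into msg
        return True


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    logger = logging.getLogger("payments")
    logger.addFilter(RedactingFilter())
    logger.info("Card number: %s", "1234-5678-9012-3456")
```

Attaching the filter to the logger, as in the usage lines at the bottom, redacts messages logged through that logger. Attaching it to a handler instead would also cover records propagated from child loggers.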

Integrating with DevOps Practices

Integrating logging, monitoring, and tracing is crucial for successful DevOps implementation. These practices provide visibility into the entire software development lifecycle, enabling faster feedback loops, improved collaboration, and ultimately, more reliable and efficient software delivery. By embedding these principles within the DevOps pipeline, teams can proactively identify and resolve issues, optimize performance, and accelerate the pace of innovation.

Integrating Logging, Monitoring, and Tracing into DevOps Pipelines

Integrating logging, monitoring, and tracing within DevOps pipelines involves embedding these practices into various stages of the software development lifecycle, from code development and testing to deployment and production monitoring. This integration fosters a continuous feedback loop, enabling teams to continuously improve their software and processes.

- Code Development: Integrate logging libraries directly into the code. Developers can use logging statements to capture relevant information about the application’s behavior, including errors, warnings, and informational messages. This allows developers to understand how their code functions and to quickly identify and fix issues.

- Testing: Implement automated testing frameworks that include logging and monitoring capabilities. As tests are executed, logs can capture test results, performance metrics, and error messages. Monitoring tools can track resource utilization and identify performance bottlenecks during testing.

- Continuous Integration (CI): Integrate logging and monitoring into the CI pipeline. Each code commit triggers automated builds and tests. Logs generated during the build and test processes can be analyzed to identify build failures, test failures, and performance regressions.

- Continuous Delivery/Deployment (CD): Use monitoring and logging data to automate deployment and rollback processes. Monitor key metrics like application response time, error rates, and resource utilization during deployment. If any anomalies are detected, the deployment can be automatically rolled back to a previous stable version.

- Production Monitoring: Implement comprehensive monitoring dashboards and alerting systems in the production environment. These systems collect and analyze logs, metrics, and traces to provide real-time insights into application performance, user behavior, and potential issues.
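
As a sketch of the code development stage, the Python example below emits structured JSON log lines that a CI job or a production pipeline can parse without custom patterns. The field names, the `request_id` attribute, and the `checkout` logger name are assumptions made for illustration.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            # Local time without a zone offset; adjust if UTC is required.
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Present only when the caller passes extra={"request_id": ...}.
            "request_id": getattr(record, "request_id", None),
        }
        return json.dumps(payload)


def build_logger(name: str = "checkout") -> logging.Logger:
    """Create a logger that writes JSON lines to standard output."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()   # avoid duplicate handlers on repeated calls
    logger.addHandler(handler)
    logger.propagate = False
    return logger
```

A call such as `build_logger().info("order placed", extra={"request_id": "req-42"})` attaches the request identifier to the record, which makes it possible to correlate log lines with traces and metrics for the same request.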

Improving Software Delivery Speed and Reducing MTTR

Implementing logging, monitoring, and tracing significantly improves software delivery speed and reduces the Mean Time to Resolution (MTTR) by providing comprehensive visibility into the software development lifecycle. This visibility enables teams to identify and address issues more quickly and efficiently.

- Faster Issue Identification: Logs, metrics, and traces provide valuable context for understanding the root cause of issues. This allows developers and operations teams to quickly pinpoint the source of problems, reducing the time spent on debugging and troubleshooting.

- Proactive Problem Detection: Monitoring tools can detect anomalies and performance degradations before they impact users. Alerting systems can notify teams of potential issues, allowing them to take proactive measures to prevent outages and performance problems.

- Automated Rollbacks: Monitoring data can be used to automate rollbacks to previous stable versions if deployments introduce errors or performance issues. This minimizes the impact of errors on users and reduces downtime.

- Improved Collaboration: Logging, monitoring, and tracing provide a shared understanding of the system’s behavior, facilitating better collaboration between development, operations, and other teams. This improved collaboration can lead to faster issue resolution and improved software quality.

- Continuous Improvement: By analyzing logs, metrics, and traces, teams can identify areas for improvement in their software and processes. This data-driven approach to improvement fosters a culture of continuous learning and innovation.

Automating Deployments and Rollbacks Using Logging and Monitoring Data

Leveraging logging and monitoring data to automate deployments and rollbacks is a critical aspect of DevOps practices. By using these tools, teams can create more resilient and efficient deployment pipelines.

- Automated Deployment Monitoring: During deployment, monitoring tools can track key performance indicators (KPIs) such as application response time, error rates, and resource utilization.

- Threshold-Based Rollbacks: If any of the KPIs exceed predefined thresholds during deployment, the deployment can be automatically rolled back to a previous stable version. For example, if the error rate suddenly spikes above a critical level, an automated rollback can be triggered.

- Health Checks and Readiness Probes: Utilize health checks and readiness probes to determine the application’s state and readiness to serve traffic. Monitoring tools can integrate with these checks to ensure that the application is functioning correctly before and after deployment.

- Log-Based Alerting: Implement alerting systems that trigger notifications based on specific log events. For example, if a critical error is logged during deployment, an alert can be sent to the operations team, who can then take immediate action.

- Example: One common setup combines Prometheus and Grafana. Prometheus scrapes metrics from deployed applications, and Grafana visualizes these metrics in real-time dashboards. If a deployment causes a sudden increase in latency or a spike in error rates, an alert rule can fire, and an automated rollback can be initiated using tools like Kubernetes’ deployment features or custom scripts.
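
The threshold-based rollback logic described above can be sketched as a small Python decision function. The `DeploymentMetrics` fields, the 5% error-rate limit, and the 1.5x latency allowance are illustrative assumptions. In a real pipeline the metrics would come from the monitoring system, and the rollback itself would be carried out by the deployment tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentMetrics:
    """KPIs observed for one version of a service (names are illustrative)."""
    error_rate: float            # fraction of failed requests
    p95_latency_seconds: float
    ready: bool                  # result of the readiness probe


def should_roll_back(baseline: DeploymentMetrics,
                     candidate: DeploymentMetrics,
                     max_error_rate: float = 0.05,
                     max_latency_factor: float = 1.5) -> tuple[bool, str]:
    """Compare the new version with the previous stable one.

    Returns (decision, reason) so that the reason can be logged or alerted on.
    """
    if not candidate.ready:
        return True, "readiness probe failing"
    if candidate.error_rate > max_error_rate:
        return True, "error rate above threshold"
    latency_limit = baseline.p95_latency_seconds * max_latency_factor
    if candidate.p95_latency_seconds > latency_limit:
        return True, "latency regression against baseline"
    return False, "healthy"
```

Returning the reason alongside the decision keeps the rollback auditable, since the pipeline can record why a deployment was reverted.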

Conclusion

In conclusion, mastering logging, monitoring, and tracing is not just about implementing tools; it’s about cultivating a culture of observability. By embracing these practices, you equip yourself with the power to build applications that are not only functional but also maintainable, scalable, and resilient. As you integrate these techniques into your workflow, you’ll unlock a new level of control and understanding, ensuring your software thrives in today’s dynamic landscape.

Frequently Asked Questions

What is the difference between logging, monitoring, and tracing?

Logging is the practice of recording events and data as they occur within an application. Monitoring involves observing the application’s performance and health, often through metrics and alerts. Tracing follows the flow of requests through a distributed system to identify performance bottlenecks.

Why is logging important?

Logging provides a historical record of application behavior, enabling developers to diagnose errors, understand user interactions, and track system performance. It is essential for debugging, auditing, and compliance.

What are the best practices for writing effective log messages?

Use clear and concise language, include relevant context (e.g., user IDs, request IDs), and choose appropriate log levels (e.g., DEBUG, INFO, WARNING, ERROR). Format messages consistently for easy parsing and analysis.

How can I choose the right monitoring tools?

Consider factors such as the type of data you need to monitor (e.g., infrastructure, application performance, user experience), the scale of your environment, the features offered by the tools, and the cost. Evaluate open-source and commercial options based on your specific requirements.

What are the key benefits of implementing tracing in a microservices architecture?

Tracing helps pinpoint performance bottlenecks by visualizing the flow of requests across multiple services. It enables developers to quickly identify slow-performing services and optimize their code for better overall system performance and responsiveness.