Understanding how to handle asynchronous invocation errors in Lambda functions is crucial for building resilient and reliable serverless applications. Asynchronous invocations, offering benefits like improved scalability and decoupling, introduce complexities in error management. This necessitates a proactive approach to identify, mitigate, and recover from failures that inevitably arise in distributed systems.

This guide delves into the intricacies of error handling in asynchronous Lambda invocations. We explore common error types, configuration strategies, and best practices. We will cover the implementation of retry mechanisms, the strategic use of Dead-Letter Queues (DLQs), and the significance of robust monitoring and logging. This will enable developers to design systems that are not only scalable but also capable of gracefully managing failures, maintaining data integrity, and ensuring overall system stability.

Understanding Asynchronous Invocation in Lambda

Asynchronous invocation is a fundamental concept in AWS Lambda, offering a decoupled approach to function execution. It allows services to trigger Lambda functions without waiting for an immediate response, enhancing scalability and fault tolerance. This paradigm shift is crucial for building resilient and responsive applications.

Asynchronous Invocation Concept

Asynchronous invocation in AWS Lambda refers to the process where a service or another Lambda function triggers a Lambda function without waiting for it to complete. The invoker places the invocation request in a queue, and Lambda handles the execution asynchronously. This contrasts with synchronous invocations, where the invoker waits for the function to finish and receives a direct response.

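The event source (e.g., Amazon S3, Amazon SNS, or Amazon EventBridge) initiates the invocation, and Lambda processes the event. Services such as API Gateway, by contrast, invoke functions synchronously by default. This architecture is designed to handle a high volume of events without overwhelming the system.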

Benefits of Asynchronous Invocation

Asynchronous invocation excels in scenarios requiring high throughput and where immediate responses are not critical. Consider an image processing application. When a new image is uploaded to an S3 bucket, an asynchronous Lambda function can be triggered to resize the image, generate thumbnails, and store the processed versions. The initial upload process doesn’t need to wait for these tasks to complete, improving user experience and system responsiveness.

Another example is processing a large number of database records. A Lambda function can be invoked asynchronously to perform updates or calculations without blocking the main application thread. This is especially useful when dealing with computationally intensive tasks or operations that might take a long time to complete.

Differences Between Synchronous and Asynchronous Lambda Invocations

Synchronous and asynchronous Lambda invocations differ significantly in their behavior and use cases. The primary distinctions revolve around the invocation mechanism, error handling, and response patterns.

- Invocation Method: Synchronous invocations use the `Invoke` API with the `InvocationType` set to `RequestResponse`. The invoker waits for the function to complete and receives a response, including the function’s result or any error messages. Asynchronous invocations, on the other hand, use the `Invoke` API with the `InvocationType` set to `Event`. The invoker doesn’t wait for a response; the event is added to a queue managed by Lambda.

- Response Handling: Synchronous invocations provide direct responses, allowing the invoker to handle the function’s output immediately. Asynchronous invocations do not return a direct response to the invoker. Instead, Lambda manages the invocation and handles any errors using its retry policies and dead-letter queues (DLQs).

- Error Handling: In synchronous invocations, errors are returned directly to the invoker, which can then retry or apply another error-handling strategy. Asynchronous invocations handle errors differently: Lambda itself retries failed invocations. By default, it retries an invocation that ends in a function error up to two more times, with a delay between attempts, and both the retry count and the maximum event age are configurable. If the function still fails, the event can be sent to a DLQ for further investigation and resolution.

- Use Cases: Synchronous invocations are suitable for real-time operations where an immediate response is required, such as API calls or user interactions. Asynchronous invocations are ideal for background tasks, event-driven architectures, and scenarios where high throughput is essential.
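The difference between the two invocation types can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the helper name `invoke` and its return shape are assumptions made for this example, and `client` is expected to behave like a boto3 Lambda client (for instance `boto3.client("lambda")`).

```python
import json


def invoke(client, function_name, payload, wait_for_result):
    """Invoke a function synchronously or asynchronously via the Invoke API.

    `client` is assumed to expose boto3's Lambda `invoke` method.
    """
    response = client.invoke(
        FunctionName=function_name,
        # RequestResponse blocks until the function returns; Event only enqueues.
        InvocationType="RequestResponse" if wait_for_result else "Event",
        Payload=json.dumps(payload).encode("utf-8"),
    )
    if wait_for_result:
        # Synchronous: the result (or the error details) is in the response.
        failed = "FunctionError" in response
        body = json.loads(response["Payload"].read())
        return {"accepted": True, "failed": failed, "result": body}
    # Asynchronous: HTTP 202 means "queued", not "succeeded".
    return {"accepted": response["StatusCode"] == 202, "failed": None, "result": None}
```

With an asynchronous call the invoker learns only that the event was accepted, which is why retries, DLQs, and monitoring have to cover everything that happens afterwards.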

Common Error Types in Asynchronous Lambda Invocations

Asynchronous Lambda invocations, while offering flexibility and scalability, introduce complexities in error handling. Understanding the common error types that can arise is crucial for building resilient and reliable serverless applications. These errors can stem from various sources, impacting the successful execution of functions and potentially leading to data inconsistencies or service disruptions.

Service-Related Errors

Service-related errors originate from the underlying AWS infrastructure or dependencies that the Lambda function interacts with. These errors are often outside the direct control of the function code and require careful monitoring and mitigation strategies.

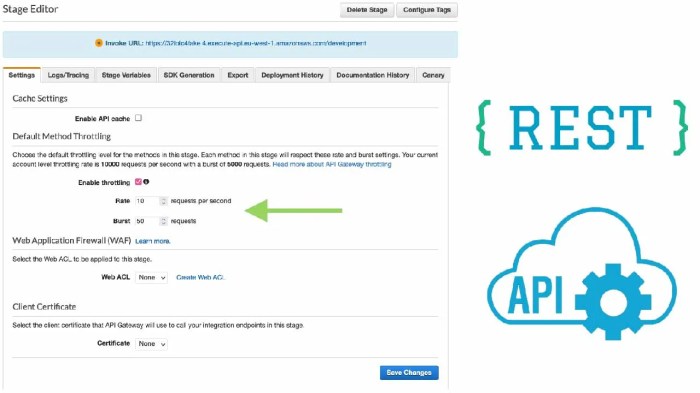

- Throttle Errors: These occur when the invocation rate exceeds the configured limits for Lambda or related services like SQS or DynamoDB. Lambda has concurrency limits that, if exceeded, result in throttling. Similarly, services that the Lambda function interacts with, such as SQS or DynamoDB, also have their own rate limits.

- Service Unavailable Errors: These errors indicate that a dependent AWS service is temporarily unavailable. This could be due to service disruptions, network issues, or internal problems within the service. For example, a transient failure in accessing DynamoDB could result in a `ServiceUnavailableException`.

- Resource Limit Errors: Lambda functions have resource limits, such as memory, execution time, and storage. Exceeding these limits results in errors. For instance, if a function attempts to allocate more memory than its configuration allows, a resource limit error will be triggered.

- Internal Errors: These are generic errors within the AWS infrastructure itself. They often indicate an unexpected issue on the service side and are usually transient. The specifics of the internal error are often not directly exposed, making diagnosis challenging.

Function-Related Errors

Function-related errors are directly tied to the code and configuration of the Lambda function. These errors are often easier to diagnose and fix, but they require thorough testing and careful code design.

- Invocation Errors: These occur when the Lambda function fails to execute due to issues within the function’s code. This can include syntax errors, runtime exceptions (e.g., `NullPointerException`, `IndexOutOfBoundsException`), or logic errors. For instance, a function that attempts to parse a malformed JSON payload will result in an invocation error.

- Timeout Errors: These happen when a Lambda function exceeds its configured execution time limit. The function is terminated if it doesn’t complete within the specified time. This can be due to inefficient code, excessive processing, or waiting for external resources.

- Configuration Errors: These arise from incorrect configuration settings for the Lambda function or its associated resources. This can include incorrect IAM permissions, invalid environment variables, or misconfigured event sources. For example, a function without the necessary permissions to access a DynamoDB table will fail.

- Concurrency Errors: These errors can occur when multiple invocations of the same function are running concurrently, leading to race conditions or data corruption. For example, if two concurrent invocations try to update the same database record without proper locking mechanisms, the final result may be inconsistent.

Impact of Errors on the Overall System

The impact of errors in asynchronous Lambda invocations can range from minor inconveniences to significant system-wide failures. Understanding these impacts is essential for prioritizing error handling strategies.

- Data Loss or Corruption: Errors can lead to data loss or corruption, especially if the function is responsible for processing critical data or updating persistent storage. For example, if a function fails to write data to a database, the data might be lost.

- Service Degradation: Errors can degrade the performance of the overall system. For instance, if a function is throttled, it can cause a backlog of messages in the event source, leading to delays in processing other tasks.

- Increased Costs: Errors, especially those that lead to retries or excessive resource consumption, can increase the cost of running the system. For example, if a function continuously retries a failed operation, it can consume more compute time and storage.

- Reduced User Experience: Errors can directly impact the user experience. If a function fails to process a user request, it can result in errors, delays, or incomplete operations, leading to user dissatisfaction.

Configuring Lambda Function for Error Handling

Effectively handling errors during asynchronous Lambda invocations is critical for maintaining application reliability and data integrity. This involves configuring the Lambda function to gracefully manage failures, implement retry mechanisms, and leverage monitoring and alerting systems. This approach ensures that transient errors are automatically resolved and that persistent failures are addressed through appropriate channels, such as dead-letter queues and human intervention.

Designing Lambda Function for Error Handling with Retry Mechanism

Implementing a robust error-handling strategy within a Lambda function that processes asynchronous invocations requires a multifaceted approach. The core principle is the strategic use of retries to mitigate transient errors. The key elements of this design are outlined below. The first step is to integrate error-handling logic into the Lambda function’s code. This includes:

- Error Detection: The function must explicitly identify potential errors. This can involve checking the input data for validity, handling exceptions raised by the function’s core logic, and monitoring the responses from any external services or resources that the function interacts with.

- Exception Handling: Implement try-except blocks or similar mechanisms to catch and handle exceptions gracefully. This allows the function to react appropriately to errors, such as logging the error details and preparing for a retry.

- Retry Logic: Implement a retry mechanism using a library or custom code. The retry strategy should include:

- Exponential Backoff: This is a key component of the retry mechanism. Exponential backoff involves increasing the delay between retries, typically doubling the delay with each attempt. This is important because it prevents overloading the downstream service or resource and gives it time to recover.

- Maximum Retry Attempts: Define a maximum number of retries to prevent infinite loops. After exceeding the maximum number of retries, the function should send the event to a dead-letter queue (DLQ).

- Randomized Jitter: Adding a small amount of randomness (jitter) to the delay between retries can prevent multiple functions from retrying simultaneously, which could overload the downstream service.

For instance, consider a scenario where a Lambda function processes messages from an SQS queue, and each message contains instructions to update a database. If a transient network issue prevents the database update, the Lambda function should retry the operation. Using exponential backoff, the function might retry after 1 second, then 2 seconds, then 4 seconds, and so on, up to a defined maximum number of retries.

If the database update fails after the maximum number of retries, the message is then sent to a DLQ for manual inspection and resolution.
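The design described above (detect the error, retry with exponential backoff and jitter, then hand the event to a DLQ) can be sketched as follows. This is a minimal sketch under stated assumptions: `TransientError`, `process_with_retry`, and the `send_to_dlq` callback are hypothetical names introduced for illustration, and the `sleep` parameter exists only so the waiting can be replaced in tests.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for errors worth retrying (timeouts, throttling)."""


def process_with_retry(operation, event, send_to_dlq,
                       max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Run operation(event), retrying transient failures with backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation(event)
        except TransientError as exc:
            if attempt == max_attempts:
                # Retries exhausted: park the event for manual inspection.
                send_to_dlq(event, str(exc))
                return None
            # 1 s, 2 s, 4 s, ... plus up to 100 ms of random jitter.
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1)
            sleep(delay)
```

Exceptions other than `TransientError` propagate immediately, so permanent failures such as invalid input are not retried. Note that time spent sleeping inside the handler counts against the function's timeout and billed duration, so in-function retries are best kept short.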

Organizing Error Handling Strategy with Dead-Letter Queues (DLQs)

Dead-letter queues (DLQs) are essential for handling persistent failures in asynchronous Lambda invocations. When a Lambda function repeatedly fails to process an event, the event is routed to a DLQ, preventing it from repeatedly triggering the Lambda function and consuming resources. The following points illustrate how to effectively utilize DLQs:

- DLQ Configuration: When creating or updating a Lambda function, configure a DLQ. This is usually an SQS queue or an SNS topic. The Lambda service will automatically send failed invocation attempts to the configured DLQ.

- Error Analysis in DLQ: Regularly monitor the DLQ for failed events. Analyze the events in the DLQ to determine the root cause of the failures. This might involve examining the event payload, Lambda function logs, and any related error messages.

- Remediation Strategies: Depending on the nature of the failures, develop remediation strategies. These strategies might include:

- Manual Intervention: For data corruption or other complex issues, manual intervention might be required to correct the data or resolve the underlying problem.

- Automated Retry with Modifications: If the issue is related to data formatting or other easily fixable problems, the events in the DLQ can be modified and resubmitted to the original Lambda function.

- Event Discarding: In some cases, the events might be deemed irrelevant or unrecoverable, and they can be discarded. However, this should be a last resort.

Consider a scenario where a Lambda function processes image uploads. If an image fails to be processed after multiple retries due to an invalid format, the event (containing the image metadata) is sent to a DLQ. An operator can then review the image metadata, determine the format issue, and potentially re-upload the image in the correct format, resubmitting the event to the Lambda function for processing.
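The "automated retry with modifications" strategy could look roughly like the sketch below, assuming the DLQ is an SQS queue whose message body holds the original event. The function name `redrive_dlq` and the optional `fix` callback are hypothetical. `sqs` and `lambda_client` are expected to behave like the corresponding boto3 clients.

```python
def redrive_dlq(sqs, lambda_client, queue_url, function_name, fix=None, max_messages=10):
    """Resubmit DLQ messages to the function; returns the number redriven."""
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=max_messages,  # SQS allows at most 10 per call
        MessageAttributeNames=["All"],     # include any error details attached to the message
    )
    redriven = 0
    for message in response.get("Messages", []):
        body = message["Body"]  # the original event, as a string
        if fix is not None:
            body = fix(body)    # optional correction, e.g. repairing a field
            if body is None:
                continue        # unrecoverable: leave it in the queue for review
        lambda_client.invoke(
            FunctionName=function_name,
            InvocationType="Event",
            Payload=body.encode("utf-8"),
        )
        # Delete only after the resubmission was accepted.
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
        redriven += 1
    return redriven
```

Messages that `fix` rejects are not deleted, so they become visible in the queue again once the visibility timeout expires and remain available for manual intervention.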

Creating Function for Monitoring and Alerting for Invocation Errors

Effective monitoring and alerting are crucial for promptly detecting and responding to errors in asynchronous Lambda invocations. Implementing these systems allows for proactive identification of issues and enables timely corrective actions. The following are key aspects of this strategy:

- Metric Collection: Implement monitoring using services like Amazon CloudWatch to collect relevant metrics. Key metrics to monitor include:

- Invocation Errors: The number of Lambda function invocation errors.

- Throttled Invocations: The number of times the Lambda function was throttled.

- DLQ Messages: The number of messages in the dead-letter queue.

- Duration: The execution time of the Lambda function.

- Alerting Rules: Configure CloudWatch alarms based on the collected metrics. These alarms should trigger notifications when specific thresholds are exceeded. For example, an alarm can be configured to trigger when the number of invocation errors exceeds a certain threshold within a specific time frame, or when the DLQ message count increases beyond a set limit.

- Notification Channels: Integrate notification channels, such as Amazon SNS or email, to receive alerts. Ensure the notifications contain relevant information, such as the function name, error type, and a link to the CloudWatch logs for further investigation.

- Log Analysis: Set up log analysis using services like CloudWatch Logs Insights or third-party logging tools. Analyze the logs to identify the root causes of errors, which will help in debugging and resolving issues.

For example, a retail company utilizes a Lambda function to process order confirmations. Monitoring is established to track the number of invocation errors and the number of messages in the DLQ. An alert is configured to send a notification to the operations team if the error rate exceeds 1% or if the DLQ contains more than 100 messages. This enables the operations team to promptly investigate the issue and prevent potential data loss or customer dissatisfaction.
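As a rough sketch of the alerting rules in this scenario, the helpers below build the keyword arguments for CloudWatch's `put_metric_alarm` call. The helper names, the alarm names, and the default thresholds are assumptions chosen for illustration. The metric names are the built-in `Errors` metric in the `AWS/Lambda` namespace and `ApproximateNumberOfMessagesVisible` in `AWS/SQS`.

```python
def error_alarm(function_name, topic_arn, threshold=5, period_seconds=300):
    """Alarm when the function reports more than `threshold` errors per period."""
    return {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # no invocations is not a failure
        "AlarmActions": [topic_arn],         # SNS topic that notifies operators
    }


def dlq_depth_alarm(queue_name, topic_arn, threshold=100, period_seconds=300):
    """Alarm when the SQS dead-letter queue holds more than `threshold` messages."""
    return {
        "AlarmName": f"{queue_name}-depth",
        "Namespace": "AWS/SQS",
        "MetricName": "ApproximateNumberOfMessagesVisible",
        "Dimensions": [{"Name": "QueueName", "Value": queue_name}],
        "Statistic": "Maximum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [topic_arn],
    }
```

Each dictionary can be passed to a boto3 CloudWatch client as `cloudwatch.put_metric_alarm(**error_alarm(...))`. An alarm on an error rate, such as the 1% in the example, would need metric math combining `Errors` and `Invocations`, which this sketch leaves out.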

Using Dead-Letter Queues (DLQs) for Error Management

Dead-Letter Queues (DLQs) provide a robust mechanism for managing failures in asynchronous Lambda invocations. When a Lambda function fails to process an event, it’s crucial to have a system to capture and store the failed event for later analysis and potential reprocessing. DLQs serve this purpose by acting as a holding area for these failed events, preventing data loss and enabling efficient error recovery.

Demonstrating the Use of DLQs to Capture and Store Failed Asynchronous Invocation Events

DLQs are essential for maintaining data integrity and enabling troubleshooting when asynchronous Lambda invocations fail. The primary function of a DLQ is to receive events that Lambda functions cannot successfully process. When an invocation still fails after Lambda’s retry attempts are exhausted, the event payload and relevant metadata are automatically sent to the DLQ. This process is managed by the Lambda service itself, requiring minimal intervention from the developer once configured.

The DLQ stores these failed events, typically in a format compatible with other AWS services, such as SQS (Simple Queue Service) or SNS (Simple Notification Service), depending on the configuration. This stored information allows for detailed analysis of the failure, identifying the root cause, and enabling corrective actions. For example, a developer can examine the event payload in the DLQ to understand the data that caused the function to fail.

Subsequently, the developer can reprocess the event after addressing the underlying issue, thus ensuring that no data is lost due to transient errors or unexpected conditions. The process also provides an audit trail of failed events, aiding in compliance and operational monitoring.

Providing the Steps to Configure a DLQ for a Lambda Function

Configuring a DLQ for a Lambda function is a straightforward process, typically involving the AWS Management Console, AWS CLI, or infrastructure-as-code tools like AWS CloudFormation or Terraform. The process generally includes selecting the desired queue service and specifying the queue’s ARN (Amazon Resource Name) within the Lambda function’s configuration.

- Choose a Queue Service: Decide whether to use Amazon SQS or Amazon SNS for the DLQ. SQS is often preferred for its ability to handle a large volume of messages and its decoupling capabilities, while SNS can be useful if you need to trigger notifications based on failed invocations.

- Create the DLQ: Create an SQS queue or an SNS topic in the AWS region where the Lambda function resides. If using SQS, configure appropriate permissions for the Lambda function to send messages to the queue. For SNS, ensure the Lambda function has permissions to publish messages to the topic.

- Configure the Lambda Function: Configure the Lambda function to use the DLQ. This can be done through the AWS Management Console, the AWS CLI, or infrastructure-as-code tools. In the console, navigate to the Lambda function’s configuration, go to the “Configuration” tab, then “Asynchronous invocation,” and specify the ARN of the DLQ. Using the AWS CLI, you can update the function’s configuration with the `update-function-configuration` command and the `--dead-letter-config` parameter. In infrastructure-as-code solutions, the DLQ configuration is defined within the function’s resource definition.

- Test the Configuration: After configuring the DLQ, test it by intentionally causing the Lambda function to fail. This can be done by providing invalid input or introducing an error within the function’s code. Verify that the failed event is sent to the DLQ. Inspect the DLQ to confirm that the event payload and metadata are stored correctly.
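The configuration step can also be scripted. The sketch below assumes a boto3-style Lambda client and uses the `update_function_configuration` and `put_function_event_invoke_config` operations. The wrapper name `configure_async_error_handling` and its defaults are hypothetical. The range checks reflect the documented limits of 0 to 2 retry attempts and a maximum event age of 60 to 21600 seconds.

```python
def configure_async_error_handling(lambda_client, function_name, dlq_arn,
                                   max_retries=2, max_event_age_seconds=3600):
    """Attach a DLQ and set the asynchronous retry behaviour for a function."""
    if not 0 <= max_retries <= 2:
        raise ValueError("max_retries must be between 0 and 2")
    if not 60 <= max_event_age_seconds <= 21600:
        raise ValueError("max_event_age_seconds must be between 60 and 21600")

    # Route events that fail all attempts to the SQS queue or SNS topic.
    lambda_client.update_function_configuration(
        FunctionName=function_name,
        DeadLetterConfig={"TargetArn": dlq_arn},
    )
    # Control how often and for how long Lambda retries before giving up.
    lambda_client.put_function_event_invoke_config(
        FunctionName=function_name,
        MaximumRetryAttempts=max_retries,
        MaximumEventAgeInSeconds=max_event_age_seconds,
    )
```

The function’s execution role still needs permission to send to the target (`sqs:SendMessage` or `sns:Publish`), otherwise the configuration call or the later delivery fails.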

Detailing the Advantages of Using DLQs in Error Management

Utilizing DLQs in error management offers several advantages, contributing to enhanced operational efficiency, improved data integrity, and simplified troubleshooting processes. These benefits collectively result in a more resilient and maintainable serverless architecture.

- Data Preservation: DLQs prevent data loss by storing failed invocation events. This ensures that critical data is not lost when a Lambda function fails, enabling data integrity. The ability to retain the event payload and metadata allows for a complete record of the failed event.

- Simplified Troubleshooting: DLQs simplify troubleshooting by providing a centralized location for failed events. The event payload and associated metadata, such as the invocation ID and timestamps, offer critical information for diagnosing the root cause of failures. By examining the event data in the DLQ, developers can identify issues, such as invalid data formats or unexpected input, without needing to reproduce the failure conditions.

- Automated Retries and Reprocessing: DLQs facilitate automated retries and reprocessing of failed events. After addressing the root cause of the failure, events stored in the DLQ can be reprocessed, reducing the risk of data loss and ensuring that the function completes its intended operations. The reprocessing can be done manually or automated using a variety of tools.

- Improved Monitoring and Alerting: DLQs enhance monitoring and alerting capabilities. The presence of messages in the DLQ can trigger alerts, indicating that a Lambda function is experiencing failures. This allows for proactive identification and resolution of issues. Monitoring the DLQ’s metrics, such as the number of messages and the age of the oldest message, provides valuable insights into the health and performance of the Lambda function.

- Enhanced Compliance: DLQs support compliance requirements by providing an audit trail of failed events. The DLQ stores detailed information about failed invocations, which can be crucial for regulatory requirements. This allows for comprehensive analysis and reporting, helping to meet compliance standards.

Implementing Retries and Backoff Strategies

Handling asynchronous invocation errors in AWS Lambda functions often necessitates the implementation of retry mechanisms. These mechanisms are crucial for transient failures, where the underlying issue is temporary and self-correcting. By strategically re-attempting the invocation, the function can potentially succeed, improving overall system reliability and reducing the impact of intermittent problems.

Retry Strategies for Invocation Failures

Different retry strategies exist, each with its own characteristics and suitability for various scenarios. Choosing the right strategy depends on the nature of the errors, the acceptable latency, and the desired level of resilience.

- Fixed Delay: This is the simplest strategy, where the function retries after a fixed interval. While easy to implement, it is often inefficient as it doesn’t adapt to the failure patterns. It is suitable for predictable failure scenarios where the recovery time is known.

- Randomized Delay: This strategy introduces a random element to the fixed delay. This helps to avoid “thundering herd” problems where multiple functions retry simultaneously, potentially overwhelming a downstream service. The delay is usually within a defined range.

- Exponential Backoff: This strategy increases the delay between retries exponentially. This is the most common and often the most effective strategy for asynchronous invocations. It allows the system to recover from failures while gradually increasing the wait time, preventing overwhelming the downstream service. The formula for calculating the delay typically involves a base delay and a multiplier that increases with each retry.

- Truncated Exponential Backoff: This is a variation of exponential backoff, but it includes a maximum delay. This prevents excessively long delays, which might not be acceptable for all use cases. The retry intervals grow exponentially until the maximum delay is reached, at which point the maximum delay is used for all subsequent retries.
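To make the four strategies concrete, the following sketch computes the delay before a given retry attempt for each of them. The function names and default values are assumptions chosen for illustration. Attempts are numbered from 1.

```python
import random


def fixed_delay(attempt, base=1.0):
    """Same wait before every retry."""
    return base


def randomized_delay(attempt, low=0.5, high=1.5, rng=random):
    """Random wait within [low, high] to spread out simultaneous retries."""
    return rng.uniform(low, high)


def exponential_backoff(attempt, base=1.0, multiplier=2.0):
    """Wait grows as base * multiplier^(attempt - 1)."""
    return base * multiplier ** (attempt - 1)


def truncated_exponential_backoff(attempt, base=1.0, multiplier=2.0, max_delay=60.0):
    """Exponential growth, capped at max_delay."""
    return min(exponential_backoff(attempt, base, multiplier), max_delay)
```

With the defaults, `exponential_backoff` yields 1, 2, 4, 8, and 16 seconds for the first five attempts, while `truncated_exponential_backoff` with `max_delay=5.0` yields 1, 2, 4, 5, and 5 seconds.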

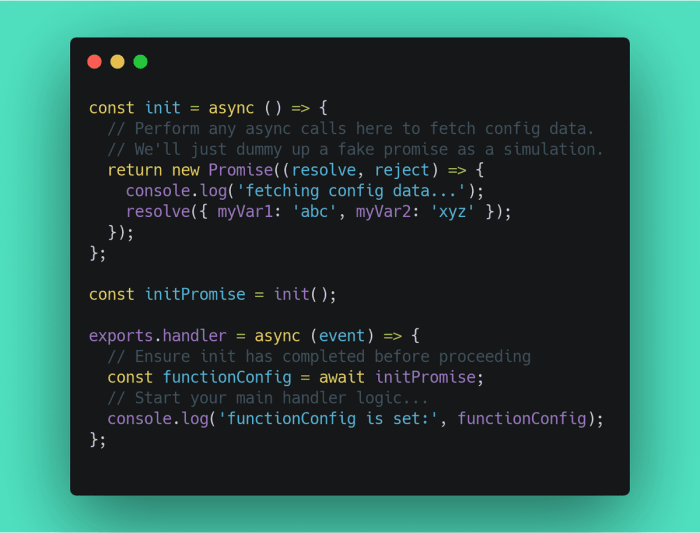

Implementing Exponential Backoff in Python

Exponential backoff is a powerful technique for handling transient failures in asynchronous Lambda invocations. The core principle involves increasing the delay between retry attempts exponentially, allowing time for the underlying issue to resolve while avoiding overwhelming the target service. Below is a Python code example demonstrating the implementation.

```python
import json
import random
import time

import boto3
from botocore.exceptions import ClientError


def invoke_lambda_with_retry(function_name, payload, max_retries=3, base_delay=1, max_delay=60):
    """
    Invokes a Lambda function with exponential backoff retry.

    Args:
        function_name (str): The name of the Lambda function to invoke.
        payload (dict): The payload to send to the Lambda function.
        max_retries (int): The maximum number of retry attempts.
        base_delay (int): The initial delay in seconds.
        max_delay (int): The maximum delay in seconds.

    Returns:
        dict: The response from the Lambda function, or None if all retries fail.
    """
    client = boto3.client('lambda')
    retries = 0
    delay = base_delay
    while retries <= max_retries:
        try:
            response = client.invoke(
                FunctionName=function_name,
                InvocationType='Event',  # Asynchronous invocation
                Payload=bytes(json.dumps(payload), 'utf-8'),
            )
            # Successful invocation, return the response
            return response
        except ClientError as e:
            if e.response['Error']['Code'] in ['TooManyRequestsException', 'ServiceUnavailableException', 'InternalServerError']:
                # These errors are often transient and can be retried
                retries += 1
                if retries > max_retries:
                    print(f"Max retries exceeded for function {function_name}. Error: {e}")
                    return None
                sleep_time = min(delay * (2 ** (retries - 1)) + random.uniform(0, 1), max_delay)
                print(f"Retrying function {function_name} in {sleep_time:.2f} seconds (attempt {retries}/{max_retries}). Error: {e}")
                time.sleep(sleep_time)
            else:
                # Other errors are not retriable, re-raise
                print(f"Non-retriable error for function {function_name}. Error: {e}")
                raise
        except Exception as e:
            # Catch any other exceptions
            retries += 1
            if retries > max_retries:
                print(f"Max retries exceeded for function {function_name}. Error: {e}")
                return None
            sleep_time = min(delay * (2 ** (retries - 1)) + random.uniform(0, 1), max_delay)
            print(f"Retrying function {function_name} in {sleep_time:.2f} seconds (attempt {retries}/{max_retries}). Error: {e}")
            time.sleep(sleep_time)
    print(f"All retries failed for function {function_name}")
    return None
```

The `invoke_lambda_with_retry` function encapsulates the retry logic. It attempts to invoke the specified Lambda function. If a `ClientError` occurs, it checks the error code. If the error is considered transient (e.g., `TooManyRequestsException`, `ServiceUnavailableException`, `InternalServerError`), the function calculates the delay using exponential backoff, adds jitter with `random.uniform(0, 1)`, and caps the result at `max_delay`. The function then sleeps for the calculated delay before retrying. If the error is not transient, the exception is re-raised. The `max_retries` parameter prevents infinite loops. The `InvocationType='Event'` parameter ensures an asynchronous invocation, so a successful response only confirms that Lambda accepted the event, not that the function ran successfully.

Comparing Different Retry Strategies

The effectiveness of different retry strategies depends heavily on the nature of the errors being encountered and the specific characteristics of the systems involved.

| Retry Strategy | Pros | Cons | Use Cases |
|---|---|---|---|
| Fixed Delay | Simple to implement; predictable retry intervals. | Inefficient for variable failure durations; may overwhelm downstream services. | Situations with known and consistent recovery times; when resource usage is predictable. |
| Randomized Delay | Avoids thundering herd problems; simple to implement. | Less effective than exponential backoff for longer failures; still lacks adaptability. | When the primary goal is to spread out retries to avoid overloading a service. |
| Exponential Backoff | Adapts well to varying failure durations; avoids overwhelming services; generally the most effective. | Can lead to long delays if failures persist; requires careful configuration of `max_delay`. | Most common use case for asynchronous Lambda invocations, for transient errors in general. |
| Truncated Exponential Backoff | Limits maximum retry delay; provides a balance between resilience and latency. | More complex to configure than simple exponential backoff; still relies on accurate configuration of the maximum delay. | When both resilience and acceptable latency are critical, for all asynchronous operations. |

Exponential backoff generally offers the best balance between resilience and efficiency for asynchronous Lambda invocations. However, the specific strategy chosen should be tailored to the specific requirements of the application and the expected error patterns. Careful monitoring and analysis of invocation failures are crucial for optimizing retry strategies and ensuring optimal performance.

Monitoring and Logging Asynchronous Invocation Errors

Effective monitoring and logging are crucial for understanding and addressing errors in asynchronous Lambda invocations. Comprehensive logging provides visibility into the function’s execution, enabling the identification of error patterns and the root causes of failures. Monitoring, on the other hand, allows for proactive detection of issues, ensuring timely intervention and minimizing the impact of errors. This section details the implementation of CloudWatch logging, metric generation, and alarming for asynchronous Lambda invocations.

Setting up CloudWatch Logging for Asynchronous Invocation Errors

Proper logging is the cornerstone of error analysis. By logging relevant information, one can reconstruct the execution flow and pinpoint the source of the problem.

To configure CloudWatch logging for a Lambda function, follow these steps:

- Configure Logging within the Lambda Function Code: Within the function’s code, use the runtime’s standard logging facilities, which Lambda forwards to CloudWatch Logs. In Node.js, for example, this means methods such as `console.log()`, `console.error()`, and `console.warn()`. Ensure that these logs include:

- Invocation ID: The unique identifier of the invocation, crucial for tracing the execution path.

- Timestamp: The time at which the log entry was generated.

- Function Name: The name of the Lambda function.

- Request ID: The unique identifier for the request.

- Error Messages: Detailed descriptions of any errors encountered.

- Relevant Context: Any data or variables that might be helpful in debugging.

Example (JavaScript):

```javascript
exports.handler = async (event, context) => {
  try {
    console.log(`Function Name: ${context.functionName}`);
    console.log(`Request ID: ${context.awsRequestId}`);
    // ... your function logic ...
  } catch (error) {
    console.error(`Error: ${error.message}`);
    console.error(`Stack: ${error.stack}`);
    throw error; // Re-throw the error to ensure it's handled by Lambda
  }
};
```

- Ensure Proper Permissions: The Lambda function’s execution role must have the necessary permissions to write logs to CloudWatch. This is achieved by attaching the `AWSLambdaBasicExecutionRole` managed policy, or a custom policy with equivalent permissions, to the execution role. This policy grants permissions to create log groups and write logs to them.

Example (IAM Policy):

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": "arn:aws:logs:*:*:*"
    }
  ]
}
```

- Monitor Log Groups: Navigate to the CloudWatch console and select “Log groups”. Locate the log group associated with the Lambda function (by default named `/aws/lambda/` followed by the function name). Examine the log streams within the log group to view the logs generated by the function.

Creating CloudWatch Metrics for Error Rates

Creating CloudWatch metrics allows for the aggregation and visualization of data, facilitating the identification of trends and anomalies. It allows you to understand the error rates and overall health of the Lambda function.

To generate CloudWatch metrics for error rates:

- Emit Custom Metrics: Within the Lambda function code, use the CloudWatch SDK to emit custom metrics. This involves calling the `putMetricData` API to publish data points to CloudWatch. Consider the following when designing metrics:

- Metric Name: Choose a descriptive name for the metric, such as “InvocationErrors”.

- Namespace: Define a namespace to categorize the metrics, for example, “LambdaFunctions”.

- Dimensions: Use dimensions to categorize the metrics further. For example, use the function name to differentiate error rates across different functions.

- Value: Set the value to ‘1’ for each error occurrence, and ‘0’ for successful invocations.

- Unit: Specify the unit for the metric (e.g., “Count”).

Example (Python):

```python
import boto3
import os

cloudwatch = boto3.client('cloudwatch')


def handler(event, context):
    try:
        # ... your function logic ...
        print("Function executed successfully")
        return {'statusCode': 200, 'body': 'Success'}
    except Exception as e:
        print(f"Error: {e}")
        cloudwatch.put_metric_data(
            Namespace='LambdaFunctions',
            MetricData=[
                {
                    'MetricName': 'InvocationErrors',
                    'Dimensions': [
                        {
                            'Name': 'FunctionName',
                            'Value': os.environ['AWS_LAMBDA_FUNCTION_NAME']
                        },
                    ],
                    'Value': 1,
                    'Unit': 'Count'
                },
            ]
        )
        raise e
```

- Monitor Metrics in the CloudWatch Console: After emitting metrics, navigate to the CloudWatch console and select “Metrics”. Browse the metrics by namespace and then by metric name to view the published data. Visualize the metrics using graphs to observe trends in error rates over time.

Using CloudWatch Alarms to Alert on Invocation Failures

CloudWatch alarms provide automated alerting based on metric thresholds, enabling proactive responses to failures. This ensures timely notification of issues and allows for quick intervention.

To set up CloudWatch alarms:

- Create an Alarm: In the CloudWatch console, select “Alarms” and then “Create alarm.”

- Select Metric: Choose the metric you created earlier, such as “InvocationErrors”. Specify the namespace and metric name.

- Define Threshold: Set the threshold that triggers the alarm. For example, trigger the alarm if the error rate exceeds a certain number of errors within a specific time period (e.g., 1 error in 5 minutes).

- Configure Actions: Specify the actions to be taken when the alarm transitions to the “ALARM” state. This typically involves sending notifications via an SNS topic.

- Configure SNS Topic: Create an SNS topic and subscribe to it with the desired notification endpoints (e.g., email addresses, SMS numbers). When the alarm state changes to ALARM, CloudWatch will publish a notification to the SNS topic.

- Test and Validate: Simulate errors to verify that the alarm is triggered correctly and that notifications are received. Monitor the alarm state and logs to ensure that the alerting system is functioning as expected.
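The same alarm can be created programmatically. The sketch below builds the arguments for CloudWatch's `put_metric_alarm` call, assuming the `LambdaFunctions` namespace and `InvocationErrors` metric from the earlier example and the "1 error in 5 minutes" threshold mentioned above. The helper names and the alarm naming scheme are hypothetical, and the client is passed in so the code can be exercised without AWS access.

```python
def build_error_alarm_params(function_name, sns_topic_arn,
                             threshold=1, period_seconds=300):
    """Build keyword arguments for CloudWatch's put_metric_alarm call."""
    return {
        "AlarmName": f"{function_name}-invocation-errors",  # hypothetical naming scheme
        "Namespace": "LambdaFunctions",
        "MetricName": "InvocationErrors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # No data points means no errors were reported in the period
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


def create_error_alarm(cloudwatch_client, function_name, sns_topic_arn):
    """Create or update the alarm using a boto3 CloudWatch client."""
    params = build_error_alarm_params(function_name, sns_topic_arn)
    cloudwatch_client.put_metric_alarm(**params)
    return params
```

With boto3 installed, this could be called as `create_error_alarm(boto3.client("cloudwatch"), "my-function", topic_arn)`, where the function name and `topic_arn` are placeholders for your own resources.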

Handling Errors in Event Sources (e.g., SQS, SNS)

Lambda functions frequently integrate with event sources like Simple Queue Service (SQS) and Simple Notification Service (SNS) to process events asynchronously. Errors encountered during the processing of messages from these services require specific handling strategies to ensure data integrity and prevent cascading failures. This section details error handling mechanisms tailored for SQS and SNS integrations, emphasizing the importance of robust error management in maintaining application reliability.

Handling Errors in SQS Triggered Lambda Functions

Lambda functions triggered by SQS queues can encounter errors during message processing. These errors can stem from various sources, including invalid data formats, dependencies not being available, or application logic failures. Effective error handling involves identifying the error type, implementing appropriate retry mechanisms, and managing messages that consistently fail.

The following points outline strategies for error management in SQS-triggered Lambda functions:

- Visibility Timeout: The visibility timeout of a message in SQS determines how long a consumer has to process and delete a message before it becomes visible to other consumers. If a Lambda function fails to process a message within the visibility timeout, the message becomes visible again, and another consumer can attempt to process it. This mechanism provides a basic level of retry functionality.

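The default value is 30 seconds, but it can be configured. AWS recommends setting it to at least six times the function’s timeout, so that a message is not redelivered while an earlier attempt is still running or waiting to be retried after throttling.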

- Retry Attempts: For SQS event sources, retries are driven by the queue rather than by a retry setting on the function. Lambda polls the queue and invokes the function synchronously with a batch of messages, so the retry settings for asynchronous invocations do not apply. If the function returns an error, the messages in the batch are not deleted; they become visible again once the visibility timeout expires and are then delivered again. This repeats until a message is processed successfully, exceeds the queue’s maximum receive count, or passes the queue’s retention period.

- Dead-Letter Queues (DLQs): When a message fails repeatedly, it should be sent to a DLQ. This prevents the message from being retried indefinitely and allows for manual inspection and analysis of the failed messages. The DLQ is a separate SQS queue that receives messages once they have been received more than the configured maximum number of times. For SQS event sources, configure it on the source queue through its redrive policy, not on the Lambda function.

- Error Handling in Code: Implement robust error handling within the Lambda function code. This includes catching exceptions, logging error details, and potentially using circuit breakers to prevent cascading failures if a dependency is unavailable.

- Monitoring: Monitor the function’s metrics, including error counts and DLQ message counts, to identify potential issues and assess the effectiveness of error handling strategies.
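When a batch contains both good and bad messages, a single exception would send the whole batch back to the queue. The sketch below illustrates one way to avoid that with Lambda's partial batch response format. It assumes `ReportBatchItemFailures` is enabled on the event source mapping, and `process_message` with its `order_id` check is a hypothetical stand-in for real business logic.

```python
import json


def process_message(body):
    """Placeholder business logic; raises on malformed input."""
    payload = json.loads(body)
    if "order_id" not in payload:
        raise ValueError("missing order_id")
    return payload["order_id"]


def handler(event, context):
    failures = []
    for record in event.get("Records", []):
        try:
            process_message(record["body"])
        except Exception as exc:
            print(f"Failed message {record['messageId']}: {exc}")
            failures.append({"itemIdentifier": record["messageId"]})
    # Only the listed messages return to the queue for another attempt;
    # the rest of the batch is treated as successfully processed.
    return {"batchItemFailures": failures}
```

Returning an empty `batchItemFailures` list signals that the entire batch succeeded.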

Handling Errors in SNS Triggered Lambda Functions

Lambda functions can also be triggered by SNS topics, which distribute messages to subscribers. Errors in this context can occur during message processing by the Lambda function, or due to issues within the SNS service itself, such as permission errors or delivery failures. Handling these errors requires careful consideration of message delivery and function execution.

The following points detail how to handle errors in SNS-triggered Lambda functions:

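- Retry Policy: SNS invokes Lambda asynchronously, so two retry layers are involved. If SNS cannot deliver the message to Lambda (for example, because of throttling or a service-side error), SNS retries delivery according to its delivery policy; for Lambda endpoints this policy is managed by AWS, and custom delivery policies apply only to HTTP/S endpoints. If the message is delivered but the function raises an error, Lambda’s asynchronous retry behavior applies instead: two retries by default, configurable along with the maximum event age.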

- Dead-Letter Queue (DLQ) for SNS: Configure a DLQ (an SQS queue) for the SNS subscription to capture messages that SNS could not deliver after exhausting its delivery retries. To capture events that were delivered but that the function failed to process, also configure a DLQ or an on-failure destination on the Lambda function itself. Together, these ensure that messages that cannot be processed are not lost and can be analyzed.

- Error Handling in Code: Implement comprehensive error handling within the Lambda function. This includes catching exceptions, logging detailed error messages, and handling scenarios such as invalid data or unavailable dependencies.

- Message Filtering: Apply a filter policy to the SNS subscription to reduce the number of messages sent to the Lambda function. This can prevent the function from being overloaded and reduce the likelihood of errors.

- Monitoring and Alerting: Set up monitoring and alerting to track errors and DLQ message counts. This allows for proactive identification of issues and timely intervention.
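The "Error Handling in Code" point above often comes down to separating failures that a retry can fix from failures it cannot. The sketch below is one possible shape for an SNS-triggered handler, assuming each message body is a JSON object; `TransientError`, `handle_message`, and the `simulate_outage` flag are hypothetical names used only for illustration.

```python
import json


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (e.g. a dependency outage)."""


def handle_message(message):
    """Placeholder business logic for one decoded SNS message."""
    if message.get("simulate_outage"):
        raise TransientError("downstream dependency unavailable")
    return message["id"]


def handler(event, context):
    processed = []
    for record in event["Records"]:
        sns = record["Sns"]
        try:
            message = json.loads(sns["Message"])
            processed.append(handle_message(message))
        except (json.JSONDecodeError, KeyError) as exc:
            # Permanent failure: retrying cannot help, so log it and move on
            print(f"Dropping invalid message {sns['MessageId']}: {exc!r}")
        except TransientError:
            # Re-raise so the asynchronous invocation is retried by Lambda
            raise
    return processed
```

Whether invalid messages should be dropped, as here, or forwarded somewhere for inspection is a design decision that depends on how much the data matters.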

Designing a Solution for Dealing with Poison Messages in SQS

Poison messages are messages that consistently fail to be processed by a Lambda function, often due to data corruption or other unresolvable issues. Without proper handling, these messages can block the queue and prevent other valid messages from being processed. A robust solution involves several components.

The following is a solution to deal with poison messages:

- Visibility Timeout and Retry Count: Utilize the visibility timeout of SQS and Lambda function’s retry mechanism (based on visibility timeout). The default visibility timeout of 30 seconds is often a good starting point, but this should be tuned based on processing time.

- Dead-Letter Queue (DLQ): Configure a DLQ for the SQS queue. This is where messages that fail after a certain number of retries are sent.

- Maximum Receive Count: Configure the SQS queue with a maximum receive count. When a message is received and processed by a Lambda function, the function is expected to delete the message from the queue. If the message fails, the visibility timeout is triggered, and the message becomes visible again. After a specified number of attempts (defined by the maximum receive count), the message is sent to the DLQ.

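Choose a value high enough to ride out transient failures such as throttling or a brief dependency outage, but low enough that a genuine poison message is isolated quickly. A value of around 5 is a common starting point.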

- Message Attribute for Retry Count: The Lambda function can read the receive count from each SQS record in the event. The `ApproximateReceiveCount` value, found under the record’s `attributes` key, gives the number of times the message has been received from the queue. This information can be used to decide whether to attempt processing again or to treat the message as a poison message.

- Error Handling and Logging in Lambda: Implement comprehensive error handling within the Lambda function code. Log detailed information about the error, including the message content, error stack trace, and any relevant context.

- Analysis and Remediation: Regularly review messages in the DLQ. This analysis can identify the root cause of the poison message issue. Remediation might involve fixing the data, correcting the function code, or other appropriate actions.
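Two pieces of the solution above lend themselves to a short sketch: the redrive policy that links the source queue to its DLQ, and reading `ApproximateReceiveCount` inside the function. The helper names and the `MAX_ATTEMPTS` limit below are hypothetical; the policy string is the value expected by the queue's `RedrivePolicy` attribute.

```python
import json

MAX_ATTEMPTS = 3  # Hypothetical application-level limit, below the queue's maxReceiveCount


def build_redrive_policy(dlq_arn, max_receive_count=5):
    """Return the JSON string for the source queue's RedrivePolicy attribute."""
    return json.dumps({
        "deadLetterTargetArn": dlq_arn,
        "maxReceiveCount": str(max_receive_count),
    })


def receive_count(record):
    """Read how many times SQS has delivered this message."""
    return int(record["attributes"]["ApproximateReceiveCount"])


def is_poison(record, max_attempts=MAX_ATTEMPTS):
    """Flag messages that have already been attempted more often than allowed."""
    return receive_count(record) > max_attempts
```

The policy could then be applied with boto3's `sqs.set_queue_attributes(QueueUrl=queue_url, Attributes={"RedrivePolicy": build_redrive_policy(dlq_arn)})`, where `queue_url` and `dlq_arn` are placeholders. Inside the handler, `is_poison(record)` gives the function a chance to log extra diagnostic detail before the queue moves the message to the DLQ.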

Error Handling with AWS SDKs

AWS SDKs provide a programmatic interface to interact with AWS services, including Lambda. Effective error handling is crucial when using SDKs to invoke Lambda functions asynchronously, as it ensures the resilience and reliability of your applications. This section focuses on leveraging AWS SDKs for robust error management during Lambda invocations.

Error Handling with Try-Except Blocks

Implementing try-except blocks is a fundamental practice for handling exceptions that may arise during Lambda invocations using AWS SDKs. This approach allows you to gracefully catch and manage errors, preventing application failures and enabling more sophisticated error-handling strategies.

The use of try-except blocks provides a structured way to handle potential errors during asynchronous Lambda invocations. Within the `try` block, you place the code that interacts with the Lambda service, such as the invocation call. The `except` block then catches specific exception types that might occur during the invocation. This allows you to define custom error-handling logic, such as logging the error, retrying the invocation, or sending notifications.

Here is a Python example using Boto3 to illustrate this:

```python
import boto3
import json

lambda_client = boto3.client('lambda')


def invoke_lambda(function_name, payload):
    try:
        response = lambda_client.invoke(
            FunctionName=function_name,
            InvocationType='Event',  # Asynchronous invocation
            Payload=json.dumps(payload)
        )
        # Process successful response if needed (e.g., logging)
        print(f"Invocation successful for {function_name}")
    except lambda_client.exceptions.ResourceNotFoundException as e:
        print(f"Error: Lambda function not found: {e}")
        # Implement specific error handling for function not found
    except lambda_client.exceptions.InvalidRequestContentException as e:
        print(f"Error: Invalid request content: {e}")
        # Handle invalid payload content
    except Exception as e:
        print(f"An unexpected error occurred: {e}")
        # General error handling for any other exceptions
```

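The code above demonstrates how to wrap the `lambda_client.invoke()` call within a `try` block. Specific `except` blocks catch known exceptions like `ResourceNotFoundException` and `InvalidRequestContentException`. A general `except` block handles any other exceptions that might occur. Each `except` block provides an opportunity to log the error, take corrective actions, or implement retry mechanisms. Note that with `InvocationType='Event'`, a successful call (HTTP status 202) only confirms that Lambda accepted the event; errors raised later inside the invoked function are not returned to the caller.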

Implementing Custom Error Handling Logic

Beyond basic exception catching, AWS SDKs allow for the implementation of custom error-handling logic tailored to specific application needs. This often involves analyzing the nature of the error and determining the appropriate response.

Custom error handling can involve:

- Logging: Detailed logging of errors, including timestamps, error messages, and the context of the invocation, is crucial for debugging and monitoring. The logs should capture relevant information to understand the cause of the error.

- Retries: Implementing retry mechanisms, potentially with exponential backoff, can mitigate transient errors. `boto3` already retries some failed API calls on its own (configurable through botocore’s `Config(retries=...)`), and custom retry logic can be layered on top. A common approach is to retry failed invocations a certain number of times with increasing delays between each attempt.

- Alerting: Setting up alerts (e.g., via SNS, CloudWatch alarms) to notify operators of persistent or critical errors. This allows for proactive intervention and faster resolution of issues. CloudWatch alarms can be configured to trigger based on specific error patterns detected in the logs.

- Circuit Breaker Pattern: Implementing a circuit breaker pattern to prevent cascading failures. If a Lambda function consistently fails, the circuit breaker can temporarily prevent further invocations to protect dependent systems.

- Dead-Letter Queue Integration: Routing failed invocations to a Dead-Letter Queue (DLQ) for later inspection and processing. This allows you to preserve the original event data for analysis.

For example, to implement a retry mechanism with exponential backoff, you might modify the previous code:

```python
import boto3
import json
import time
import random

lambda_client = boto3.client('lambda')


def invoke_lambda_with_retry(function_name, payload, max_retries=3, base_delay=1):
    for attempt in range(max_retries + 1):
        try:
            response = lambda_client.invoke(
                FunctionName=function_name,
                InvocationType='Event',
                Payload=json.dumps(payload)
            )
            print(f"Invocation successful after {attempt} retries for {function_name}")
            return  # Success, exit the retry loop
        except Exception as e:
            print(f"Attempt {attempt + 1} failed: {e}")
            if attempt < max_retries:
                # Exponential backoff with jitter
                delay = base_delay * (2 ** attempt) + random.uniform(0, 0.1 * (2 ** attempt))
                print(f"Retrying in {delay:.2f} seconds...")
                time.sleep(delay)
            else:
                print(f"Max retries reached for {function_name}. Failed.")
                # Implement further error handling such as sending the message to a DLQ or alerting.
                raise  # Re-raise the exception to signal failure
```

In this example, the `invoke_lambda_with_retry` function attempts the Lambda invocation up to `max_retries + 1` times. If an exception occurs, it waits for a calculated delay before retrying. The delay doubles with each attempt (1, 2, then 4 seconds with the defaults) and includes a small amount of random jitter to avoid synchronized retries. If the invocation still fails after the final attempt, the exception is re-raised, and the caller must implement further error handling.
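The circuit breaker pattern listed among the custom error-handling options can be sketched in a similar spirit. The class below is a minimal, in-memory illustration rather than a production implementation: the names `CircuitBreaker` and `CircuitOpenError` are hypothetical, and because the state lives in process memory, each Lambda execution environment would track failures independently.

```python
import time


class CircuitOpenError(Exception):
    """Raised when calls are blocked because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock  # Injectable so tests can control time
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; skipping call")
            # Timeout elapsed: allow one trial call (half-open state)
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # Open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count
        self.failures = 0
        self.opened_at = None
        return result
```

A caller would wrap the risky operation, for example `breaker.call(invoke_lambda_with_retry, "my-function", payload)`, and treat `CircuitOpenError` as a signal to fail fast or queue the work for later.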

Testing Error Handling Mechanisms

Testing error handling mechanisms is crucial for ensuring the reliability and resilience of asynchronous Lambda functions. A robust testing strategy validates the effectiveness of implemented error handling, including retries, dead-letter queues, and monitoring, under various failure scenarios. This process confirms that the system behaves as expected when errors occur, preventing data loss and minimizing operational disruptions.

Test Plan for Error Handling Validation

Developing a comprehensive test plan is essential for systematically validating the error handling capabilities of Lambda functions. The plan should outline specific test cases, expected outcomes, and success criteria.

- Test Case Identification: Define a set of test cases covering different error scenarios. These should include:

- Function timeouts.

- Exceptions within the function code (e.g., `NullPointerException`, `IndexOutOfBoundsException`).

- Network connectivity issues (simulated by blocking network access or injecting latency).

- Errors during resource access (e.g., S3 access denied, DynamoDB throttling).

- Event source failures (e.g., SQS message delivery failures, SNS topic publishing failures).

- Test Environment Setup: Prepare a dedicated testing environment that mirrors the production setup as closely as possible. This includes:

- Provisioning Lambda functions with appropriate configurations (e.g., memory, timeout, DLQ).

- Setting up event sources (SQS queues, SNS topics) for testing.

- Configuring monitoring and logging tools (CloudWatch, X-Ray).

- Test Execution: Execute each test case and observe the function’s behavior. Monitor logs, metrics, and the DLQ to verify error handling mechanisms.

- Expected Results and Success Criteria: Define the expected outcomes for each test case. For example:

- For a timeout error, verify that the function retries (if configured) and eventually sends the event to the DLQ.

- For an exception within the function, confirm that the error is logged and the event is sent to the DLQ.

- For successful retries, confirm that the event is processed successfully after the retries.

- Test Reporting: Document the test results, including the test case, the actual outcome, and whether the test passed or failed.

Simulating Error Scenarios

Simulating error scenarios allows for the realistic testing of error handling mechanisms. This can be achieved through various techniques, ensuring that the function behaves correctly under stress.

- Code-Based Error Injection: Modify the function code to intentionally introduce errors. For example:

- Exception Throwing: Insert `throw new RuntimeException("Simulated error");` (or the equivalent in your runtime’s language) within the function’s logic to simulate unexpected errors.

- Resource Access Failures: Introduce code that intentionally fails to access external resources (e.g., by providing incorrect credentials for an S3 bucket).

- Network Manipulation: Simulate network issues to test connectivity-related error handling. This includes:

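- Blocking Network Access: Lambda does not give you access to host-level tools such as `iptables`, so block traffic at the network layer instead. For example, attach the function to a VPC and use security group rules, network ACLs, or a subnet without a route to the target service to cut off outgoing traffic.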

- Introducing Latency: Inject delays in network communication to simulate slow network conditions.

- Event Source Manipulation: Simulate event source failures to validate event-driven error handling. This includes:

- SQS Message Corruption: Modify the SQS message body to introduce parsing errors.

- SNS Topic Throttling: Simulate throttling on the SNS topic by publishing a large number of messages rapidly.

- Resource Throttling: Simulate resource limitations to test throttling error handling. For instance, simulate DynamoDB throttling by increasing the read/write capacity utilization beyond provisioned limits.
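Code-based error injection does not have to mean editing the handler for every test. One option, sketched below under stated assumptions, is a decorator that injects faults when a test-only environment variable is set. `FAULT_MODE`, `inject_fault`, and `SimulatedError` are hypothetical names, and the sample handler exists only to show the effect.

```python
import functools
import os


class SimulatedError(RuntimeError):
    """Marks failures injected on purpose during testing."""


def inject_fault(handler):
    """Inject a fault when the hypothetical FAULT_MODE variable is set."""
    @functools.wraps(handler)
    def wrapper(event, context):
        mode = os.environ.get("FAULT_MODE", "")
        if mode == "exception":
            raise SimulatedError("Simulated error")
        if mode == "bad_event":
            # Replace the payload to exercise validation paths
            event = {"corrupted": True}
        return handler(event, context)
    return wrapper


@inject_fault
def handler(event, context):
    if "order_id" not in event:
        raise ValueError("missing order_id")
    return {"statusCode": 200, "order_id": event["order_id"]}
```

Because the switch is an environment variable, the same deployment package can be tested in a staging environment and run unmodified in production, provided the variable is never set there.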

Writing Unit Tests for Error Handling Code

Unit tests are essential for verifying the error handling code within Lambda functions. These tests should focus on the specific logic responsible for handling exceptions, retries, and DLQ interactions.

- Testing Exception Handling: Verify that the function correctly catches and handles exceptions.

- Mocking Dependencies: Use mocking frameworks (e.g., Mockito in Java, unittest.mock in Python) to simulate dependencies like AWS SDK clients. This allows for injecting errors during dependency calls.

- Asserting Error Handling Logic: Assert that the correct error handling logic is executed when an exception is thrown. This might involve verifying that the function logs the error, sends the event to the DLQ, or initiates a retry.

- Testing Retry Logic: Validate that the retry mechanism functions as expected.

- Simulating Transient Errors: Simulate transient errors (e.g., network timeouts) during the execution of a dependency call.

- Verifying Retry Attempts: Ensure that the retry logic attempts to re-execute the failing operation a specified number of times.

- Verifying Backoff Strategies: Test that the backoff strategy (e.g., exponential backoff) is correctly implemented.

- Testing DLQ Integration: Confirm that events are correctly sent to the DLQ when errors occur.

- Mocking DLQ Client: Mock the DLQ client (e.g., SQS client) to verify that the correct messages are sent to the DLQ.

- Verifying DLQ Message Contents: Assert that the DLQ message contains the expected error details, including the original event data and the error message.
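As an illustration of the retry-focused tests described above, the sketch below uses `unittest.mock` to simulate transient errors and to check both the number of attempts and the backoff delays. `invoke_with_retry` is a hypothetical helper written for this example; the client and the sleep function are injected so that no AWS call or real waiting takes place.

```python
import time
import unittest
from unittest import mock


def invoke_with_retry(client, function_name, payload, max_retries=2, sleep=time.sleep):
    """Small retry helper under test; `client` is any object with an invoke method."""
    for attempt in range(max_retries + 1):
        try:
            return client.invoke(FunctionName=function_name,
                                 InvocationType="Event", Payload=payload)
        except ConnectionError:
            if attempt == max_retries:
                raise
            sleep(2 ** attempt)  # Exponential backoff: 1s, 2s, ...


class RetryTests(unittest.TestCase):
    def test_succeeds_after_transient_errors(self):
        client = mock.Mock()
        client.invoke.side_effect = [ConnectionError(), ConnectionError(),
                                     {"StatusCode": 202}]
        sleep = mock.Mock()
        result = invoke_with_retry(client, "demo", b"{}", sleep=sleep)
        self.assertEqual(result["StatusCode"], 202)
        self.assertEqual(client.invoke.call_count, 3)
        # Verify the backoff strategy: delays double between attempts
        self.assertEqual([c.args[0] for c in sleep.call_args_list], [1, 2])

    def test_gives_up_after_max_retries(self):
        client = mock.Mock()
        client.invoke.side_effect = ConnectionError()
        with self.assertRaises(ConnectionError):
            invoke_with_retry(client, "demo", b"{}", max_retries=1, sleep=mock.Mock())
        self.assertEqual(client.invoke.call_count, 2)
```

Saved in a test module, these cases can be run with `python -m unittest`.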

Best Practices for Asynchronous Invocation Error Handling

Effective error handling is paramount for maintaining the reliability and resilience of asynchronous Lambda functions. Implementing robust error management strategies ensures that failures are gracefully handled, data integrity is preserved, and system downtime is minimized. This section outlines best practices for designing and implementing these critical error handling mechanisms.

Adhering to a set of best practices significantly improves the robustness of asynchronous Lambda function invocations. These practices encompass various aspects, from function design to monitoring and alerting, all aimed at mitigating the impact of errors and ensuring system stability.

- Implement Dead-Letter Queues (DLQs) Strategically: Utilizing DLQs is crucial for capturing and managing failed asynchronous invocations. Configure DLQs for all Lambda functions that are invoked asynchronously. This ensures that failed events are not lost and can be analyzed, reprocessed, or handled as required. The choice of DLQ (e.g., SQS queue, SNS topic) should be based on the function’s specific needs and the desired error handling workflow.

- Define Clear Retry Strategies: Implement retry mechanisms with appropriate backoff strategies to handle transient errors. Consider the potential impact of retries on downstream services and the idempotency of your function’s operations. Use exponential backoff to avoid overwhelming dependent services. Configure the maximum number of retries and the interval between retries based on the expected failure patterns and the criticality of the function.

- Utilize Circuit Breaker Patterns: Implement circuit breakers to prevent cascading failures. When a service repeatedly fails, the circuit breaker will trip, preventing further invocations until the service recovers. This protects the system from being overwhelmed and allows time for the underlying issue to be resolved. Consider using libraries or frameworks that provide circuit breaker functionality, such as Hystrix or resilience4j.

- Comprehensive Logging and Monitoring: Implement robust logging and monitoring to track the health and performance of your Lambda functions and identify errors. Use a centralized logging service (e.g., CloudWatch Logs) to aggregate logs from all functions. Set up alerts based on error rates, DLQ message counts, and other relevant metrics. Regularly review logs to identify trends and potential issues.

- Idempotency Considerations: Ensure that your Lambda functions are idempotent, especially if they are processing messages from asynchronous event sources. Idempotency ensures that processing a message multiple times has the same effect as processing it once. This is crucial for handling retries and preventing unintended side effects. Implement mechanisms like unique request IDs or de-duplication strategies to achieve idempotency.

- Event Source Specific Handling: When using event sources such as SQS or SNS, understand the specific error handling mechanisms provided by those services. For example, SQS provides visibility timeouts and redrive policies (a maximum receive count combined with a DLQ). Configure these settings appropriately to handle potential errors. Leverage features like batch processing and partial failures to optimize performance and error handling.

- Limit Function Execution Time and Memory: Set appropriate execution time limits and memory allocations for your Lambda functions. This helps prevent runaway processes and limits the impact of errors. Monitor function performance and adjust these settings as needed to optimize performance and resource utilization.

- Versioning and Rollback Strategies: Implement versioning and rollback strategies to facilitate quick recovery from errors. When deploying new code, use canary deployments or blue/green deployments to minimize the risk of impacting production. Have a rollback plan in place to revert to a previous, known-good version of your function if errors occur.

- Regular Testing and Validation: Thoroughly test your error handling mechanisms. Simulate different error scenarios and verify that your functions handle them correctly. Use unit tests, integration tests, and end-to-end tests to validate the functionality of your error handling code. Automate your testing processes to ensure that error handling is consistently tested.

- Secure Error Information: Be mindful of the sensitivity of information that is included in logs and error messages. Avoid logging sensitive data, such as passwords or API keys. Implement access controls to restrict access to logs and monitoring data. Consider using encryption to protect sensitive information.
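Of the practices above, idempotency is the one most easily shown in code. The sketch below claims a unique key before doing the work and releases it if the work fails, so a retry can try again. `InMemoryStore` is a hypothetical stand-in used only to keep the example runnable; a real function would need a durable, shared store, since memory is not shared between Lambda execution environments. The `idempotency_key` field is likewise an assumed event attribute.

```python
class InMemoryStore:
    """Stand-in for a durable store of processed keys."""

    def __init__(self):
        self._seen = set()

    def claim(self, key):
        """Return True only the first time a key is claimed."""
        if key in self._seen:
            return False
        self._seen.add(key)
        return True

    def release(self, key):
        """Forget a key so that a later retry may process it."""
        self._seen.discard(key)


def process_once(event, store, action):
    """Run `action` at most once per idempotency key."""
    key = event["idempotency_key"]
    if not store.claim(key):
        return "duplicate-skipped"
    try:
        action(event)
    except Exception:
        store.release(key)  # Failed work must remain retryable
        raise
    return "processed"
```

Claiming before acting means a duplicate delivery is skipped even if it arrives while the first attempt is still running.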

Checklist for Ensuring Robust Error Handling in Lambda Function Design

Creating a checklist helps ensure that all necessary aspects of error handling are addressed during the Lambda function design phase. This checklist provides a structured approach to building reliable and resilient asynchronous Lambda functions.

- Function Configuration:

- Is a DLQ configured for asynchronous invocations?

- Are retry attempts and backoff strategies defined?

- Are execution timeout and memory limits set appropriately?

- Logging and Monitoring:

- Is comprehensive logging implemented, including error details and context?

- Are relevant metrics tracked (e.g., error rates, DLQ message counts)?

- Are alerts configured for critical errors and performance issues?

- Idempotency:

- Is the function idempotent to handle retries safely?

- Are mechanisms in place to prevent duplicate processing of events?

- Event Source Specific Handling:

- Are event source-specific error handling features (e.g., SQS visibility timeout) configured correctly?

- Are batch processing and partial failure handling considered where applicable?

- Code Design:

- Are circuit breakers implemented to prevent cascading failures?

- Are exception handling blocks used to catch and handle potential errors?

- Is sensitive data protected in logs and error messages?

- Testing and Validation:

- Are unit tests, integration tests, and end-to-end tests implemented?

- Are error scenarios simulated to validate error handling mechanisms?

- Are tests automated to ensure consistent validation?

Common Pitfalls to Avoid When Implementing Error Handling Strategies

Avoiding common pitfalls is crucial for successful error handling implementation. Recognizing and addressing these potential issues ensures that error handling strategies are effective and contribute to system stability.

- Ignoring DLQ Monitoring: Failing to actively monitor DLQs and address messages that accumulate there. This can lead to data loss or delayed processing. Regularly review and analyze the messages in DLQs to identify the root cause of failures and take corrective action.

- Insufficient Logging: Providing insufficient or poorly formatted logs, making it difficult to diagnose errors. Ensure logs contain sufficient context, including timestamps, request IDs, and relevant data. Use structured logging to facilitate analysis and searching.

- Inadequate Retry Strategies: Implementing retry strategies without considering the impact on downstream services. Use exponential backoff to avoid overwhelming dependent services. Limit the number of retries to prevent indefinite retrying.

- Lack of Idempotency: Failing to design functions to be idempotent, leading to duplicate processing of events during retries. Implement mechanisms such as unique request IDs or de-duplication strategies.

- Ignoring Event Source Specifics: Not configuring event source-specific error handling features. For example, neglecting the visibility timeout setting in SQS.

- Overly Aggressive Retries: Implementing retries that are too aggressive, leading to a rapid increase in the number of requests to a failing service. This can exacerbate the problem and lead to further failures.

- Insufficient Testing: Not thoroughly testing error handling mechanisms, resulting in undiscovered bugs and unexpected behavior. Simulate different error scenarios and validate that the functions handle them correctly.

- Ignoring Security Considerations: Logging sensitive information or failing to secure logs and monitoring data. Avoid logging sensitive data and implement access controls to restrict access to logs.

- Ignoring Performance Impact: Failing to consider the performance implications of error handling strategies, such as excessive logging or retries. Optimize logging and retry configurations to minimize performance overhead.

- Not Automating Error Handling: Failing to automate error handling processes, such as DLQ message processing or alerting. Automate as much as possible to improve efficiency and reduce the risk of human error.

Concluding Remarks

In conclusion, effective error handling in asynchronous Lambda invocations is a multifaceted discipline, encompassing proactive configuration, strategic use of tools like DLQs, and continuous monitoring. By embracing best practices such as retry strategies, comprehensive logging, and thorough testing, developers can build robust and resilient serverless applications. Mastering these techniques not only enhances the reliability of individual Lambda functions but also contributes to the overall stability and scalability of the entire application ecosystem.

Question & Answer Hub

What is the difference between synchronous and asynchronous invocation in Lambda?

Synchronous invocation waits for the Lambda function to complete and returns the result immediately, suitable for real-time operations. Asynchronous invocation triggers the function and returns immediately without waiting for completion, ideal for background tasks and event-driven architectures. The key difference lies in the immediate response and the handling of errors; synchronous calls propagate errors back to the caller, while asynchronous invocations require specific error handling mechanisms within the Lambda function and related services.

How do I configure a Dead-Letter Queue (DLQ) for a Lambda function?

Configuring a DLQ involves specifying an SQS queue or SNS topic in the Lambda function’s configuration. This is typically done via the AWS Management Console, AWS CLI, or Infrastructure as Code tools like CloudFormation or Terraform. When a function fails to process an event asynchronously, the event payload is automatically sent to the configured DLQ, allowing for inspection and reprocessing of failed events.
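For the programmatic route, the sketch below shows one way to build the arguments for two Lambda API calls: `update_function_configuration` with a `DeadLetterConfig`, and `put_function_event_invoke_config` for the asynchronous retry settings. The helper names and default values are hypothetical, and the client is passed in so that the code can be checked without AWS access.

```python
def build_dlq_config(function_name, dlq_arn):
    """Arguments for update_function_configuration that attach a DLQ."""
    return {"FunctionName": function_name, "DeadLetterConfig": {"TargetArn": dlq_arn}}


def build_async_config(function_name, max_retries=2, max_event_age_seconds=3600):
    """Arguments for put_function_event_invoke_config."""
    if not 0 <= max_retries <= 2:
        raise ValueError("Lambda accepts between 0 and 2 asynchronous retries")
    return {
        "FunctionName": function_name,
        "MaximumRetryAttempts": max_retries,
        "MaximumEventAgeInSeconds": max_event_age_seconds,
    }


def configure_async_error_handling(lambda_client, function_name, dlq_arn):
    """Apply both settings using a boto3 Lambda client."""
    lambda_client.update_function_configuration(
        **build_dlq_config(function_name, dlq_arn))
    lambda_client.put_function_event_invoke_config(
        **build_async_config(function_name))
```

The function's execution role also needs permission to send to the target, such as `sqs:SendMessage` for an SQS queue.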

What is an exponential backoff strategy, and why is it used?

An exponential backoff strategy is a retry mechanism that increases the delay between retries exponentially. This is implemented to avoid overwhelming a downstream service or resource that may be temporarily unavailable. By gradually increasing the delay, the system gives the resource time to recover, reducing the likelihood of cascading failures and improving the overall resilience of the system.

How can I monitor errors in asynchronous Lambda invocations?

Monitoring asynchronous invocation errors involves using CloudWatch Logs and CloudWatch Metrics. Lambda functions automatically log to CloudWatch Logs. Developers can create custom metrics based on error patterns and set up CloudWatch Alarms to trigger notifications when error rates exceed predefined thresholds. This proactive monitoring enables timely identification and resolution of issues.

What are some common pitfalls to avoid when handling asynchronous invocation errors?

Common pitfalls include neglecting proper error logging, failing to implement retry mechanisms, not configuring DLQs, and insufficient testing of error handling strategies. Other mistakes include not setting up adequate monitoring and alerting, ignoring the potential for poison messages in queues, and failing to consider the impact of errors on downstream systems. Avoiding these pitfalls requires a comprehensive approach to error handling, emphasizing proactive design, thorough testing, and continuous monitoring.