Finding the optimal memory size for a Lambda function is a critical aspect of serverless architecture, directly impacting performance, cost, and scalability. This guide delves into the intricacies of memory allocation, exploring its influence on execution time, cold start times, and overall function efficiency. Understanding these relationships is paramount to building robust and cost-effective serverless applications.

This exploration will navigate through various facets, from initial memory assessment and monitoring techniques to code optimization strategies and dependency management. We will dissect the impact of data processing, cold starts, and cost considerations, culminating in a set of best practices for achieving optimal memory configuration. The goal is to equip you with the knowledge and tools necessary to fine-tune your Lambda functions for peak performance and resource utilization.

Understanding Lambda Function Memory

The allocation of memory to an AWS Lambda function is a critical factor influencing its performance and cost-effectiveness. Properly configuring memory ensures efficient resource utilization, optimal execution times, and a positive user experience. This section will delve into the intricate relationship between memory allocation and Lambda function behavior, exploring its impact on execution time, cold start times, and the consequences of insufficient resources.

Relationship Between Memory Allocation and Lambda Function Performance

The memory allocated to a Lambda function directly determines its available CPU power: AWS Lambda allocates CPU in proportion to the configured memory, so increasing the memory setting also increases the CPU available to the function. According to the AWS documentation, a function has the equivalent of one vCPU at 1,769MB and can use up to six vCPUs at the 10,240MB maximum. The observed speed-up is not always proportional to the memory increase, however: single-threaded code cannot use more than one vCPU, so gains tend to flatten above roughly 1,769MB unless the work is parallelized.

The performance of a Lambda function is often measured by its execution time, which is the duration it takes for the function to complete its tasks.

Several factors influence execution time, including:

- CPU-intensive operations: Operations such as image processing, data encryption/decryption, and complex calculations directly benefit from increased CPU power. Higher memory allocation translates to more CPU resources, enabling these operations to complete faster.

- Network I/O: Network latency can significantly impact execution time, particularly for functions that interact with external services like databases or APIs. While memory doesn’t directly affect network latency, a function with more CPU resources can potentially handle network requests and responses more efficiently.

- Code optimization: Well-optimized code, regardless of memory allocation, can reduce execution time. However, increased memory and CPU can allow for more complex or computationally intensive optimization strategies.
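As a rough illustration of how memory maps to CPU, the sketch below estimates the CPU share for a given memory setting. It assumes the figures published in the AWS Lambda documentation (one vCPU equivalent at 1,769 MB, a 10,240 MB maximum with up to six vCPUs) and simple proportional scaling. `estimated_vcpus` is a hypothetical helper, not an AWS API.

```python
# Assumed constants, taken from the AWS Lambda documentation at the time of writing.
MB_PER_VCPU = 1769       # memory size at which a function gets one vCPU equivalent
MIN_MEMORY_MB = 128      # smallest configurable memory size
MAX_MEMORY_MB = 10240    # largest configurable memory size
MAX_VCPUS = 6            # documented vCPU ceiling


def estimated_vcpus(memory_mb: int) -> float:
    """Approximate the vCPU share for a memory setting (proportional model)."""
    if not MIN_MEMORY_MB <= memory_mb <= MAX_MEMORY_MB:
        raise ValueError(f"memory_mb must be between {MIN_MEMORY_MB} and {MAX_MEMORY_MB}")
    return min(memory_mb / MB_PER_VCPU, MAX_VCPUS)


if __name__ == "__main__":
    for size in (128, 512, 1024, 1769, 3008, 10240):
        print(f"{size:>6} MB -> ~{estimated_vcpus(size):.2f} vCPU")
```

Under this model a 128 MB function gets well under a tenth of a vCPU, which is why CPU-bound work often speeds up noticeably when memory is raised.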

Impact of Memory on Execution Time and Cold Start Times

Memory allocation influences both the execution time and the cold start time of a Lambda function.

Execution time is the time a function takes to execute a specific task. As the allocated memory increases, the function receives more CPU power, which reduces execution time, especially for CPU-bound tasks. For example, a function processing a large dataset might see a significant reduction in execution time when the memory is increased from 128MB to 1024MB, as the increased CPU power allows for faster data processing.

Cold start time is the delay incurred when a Lambda function is invoked for the first time or after a period of inactivity. For such an invocation, AWS must provision a new execution environment, which includes initializing the runtime and loading the function code. A function with a higher memory allocation might have a slightly faster cold start, but the impact of memory on cold start time is less pronounced than its impact on execution time. Factors such as function code size, the complexity of dependencies, and the selected runtime environment are generally more significant determinants of cold start time.

Consequences of Insufficient Memory Allocation

Insufficient memory allocation can lead to several performance issues and operational challenges.

- Increased Execution Time: The most immediate consequence is a longer execution time. If the function requires more CPU resources than are available, it will take longer to complete its tasks. This can lead to timeouts, especially if the function is processing large amounts of data or performing computationally intensive operations.

- Function Failures: In extreme cases, insufficient memory can cause the function to fail entirely. This can happen due to out-of-memory (OOM) errors, where the function attempts to use more memory than is allocated. This results in unexpected function termination.

- Performance Degradation: Even if the function doesn’t fail, insufficient memory can lead to performance degradation. This can manifest as slower response times, increased latency, and a poor user experience.

- Higher Costs: Although it might seem counterintuitive, insufficient memory can lead to higher costs. If the function takes longer to execute, it consumes more compute time, which is directly proportional to the cost. Furthermore, frequent retries due to failures or timeouts can also increase costs.

Consider a real-world scenario: A Lambda function is designed to process images. If the function is allocated only 128MB of memory, it might struggle to process large, high-resolution images, resulting in longer processing times or even failures. However, increasing the memory allocation to, say, 512MB or 1024MB, would provide more CPU power, enabling the function to process the images more efficiently and reliably.

Identifying Memory Needs

Determining the appropriate memory allocation for a Lambda function is a crucial step in optimizing its performance and cost-effectiveness. Over-provisioning leads to unnecessary expenses, while under-provisioning can result in performance bottlenecks and function failures. A methodical approach to identifying memory needs involves an initial assessment, followed by iterative refinement based on monitoring and testing. This section outlines the methods and factors involved in this initial assessment phase.

Methods for Estimating Initial Memory Size

Several approaches can be employed to estimate the initial memory size required by a new Lambda function. These methods provide a starting point for testing and optimization.

- Code Size Analysis: A simple starting point involves examining the size of the function’s deployment package. This provides a basic understanding of the resources required to load the code and its dependencies. A larger code size generally implies a greater need for memory, although this is not a direct correlation.

- Dependency Analysis: Analyzing the dependencies of the function, including libraries and frameworks, can help estimate memory requirements. Some dependencies, such as large machine learning models or complex data processing libraries, can significantly increase memory usage.

- Input Data Volume and Complexity: The volume and complexity of the input data that the function processes are critical factors. Functions that process large datasets or complex data structures will generally require more memory.

- Similar Function Analysis: If similar functions exist within the same environment or organization, examining their memory configurations can provide valuable insights. Analyzing their performance metrics and memory usage patterns can help estimate the needs of the new function.

- Baseline Testing: Deploying the function with a conservative initial memory setting and then running a series of baseline tests with representative input data can help establish a starting point. Monitoring memory utilization during these tests provides data for adjustments.

Factors Influencing Memory Requirements

Several factors influence the memory requirements of a Lambda function. Understanding these factors is crucial for making informed decisions about memory allocation.

- Code Size: The size of the function’s deployment package, including the code itself and its dependencies, directly impacts memory usage. Larger codebases require more memory to load and execute.

- Dependencies: The libraries, frameworks, and other dependencies used by the function can significantly impact memory usage. Some dependencies, such as large machine learning models, consume substantial memory.

- Input Data Volume: The volume of data the function processes is a primary determinant of memory requirements. Processing larger datasets requires more memory for data storage and manipulation.

- Input Data Complexity: The complexity of the input data structures also affects memory usage. Complex data structures, such as nested JSON objects or large arrays, consume more memory than simpler data types.

- Data Processing Operations: The operations performed on the input data, such as data transformation, filtering, or aggregation, influence memory usage. Complex operations require more memory.

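- Concurrency: Concurrency does not change the memory a single invocation needs, because each concurrent invocation runs in its own execution environment with the full configured memory. It does multiply the total cost, and parallelism inside one invocation (threads or worker processes) increases that invocation’s memory usage.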

- Programming Language: The programming language used to write the function can impact memory usage. Some languages are more memory-efficient than others.

- Runtime Environment: The specific runtime environment (e.g., Node.js, Python, Java) can also influence memory usage. Each runtime has its own memory management characteristics.

Calculating Baseline Memory Based on Code Size and Expected Input Data Volume

A basic calculation can be performed to estimate the baseline memory requirement based on code size and the expected input data volume. This provides a starting point for more detailed analysis.

Step 1: Estimate Code Memory: This involves assessing the memory required to load the function’s code and its dependencies. A rough estimate can be obtained by considering the deployment package size. As a rule of thumb, start with a memory setting that is at least a few times larger than the code size.

Step 2: Estimate Data Processing Memory: Estimate the memory needed to process the expected input data volume, which depends on the size and complexity of the input data. For instance, if a function is expected to process JSON files averaging 10MB and must load each file entirely into memory, allocate enough memory to hold the whole file. Also account for the memory needed for parsed objects, intermediate results, and temporary data structures; a parsed JSON document typically occupies more memory than its serialized size.

Step 3: Baseline Calculation: Add the estimated code memory and data processing memory to obtain a baseline memory estimate. This is the initial memory setting for the Lambda function. Consider adding a buffer to account for unexpected memory usage.

Example: Consider a Python Lambda function with a deployment package size of 50MB. The function processes JSON input files with an average size of 2MB. The initial memory allocation could be calculated as follows:

Code Memory Estimate: 150MB (3x code size)

Data Processing Memory Estimate: 2MB (for the JSON input file)

Baseline Memory Estimate: 150MB + 2MB = 152MB

Recommended Initial Setting: 192MB (the baseline plus a buffer of roughly 25%, rounded up to a multiple of 64MB; Lambda accepts any value from 128MB to 10,240MB in 1MB increments).

This calculation provides an initial estimate. The actual memory usage should be monitored and optimized based on the function’s performance during testing and production.
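The three steps above can be sketched as a small calculation. The 3x code multiplier comes from the example; the 25% buffer, the 64 MB rounding step, and the function name `baseline_memory_mb` are assumptions chosen for illustration so that the result reproduces the 192MB figure.

```python
import math

LAMBDA_MIN_MEMORY_MB = 128  # smallest memory size Lambda accepts


def baseline_memory_mb(package_mb, input_mb, code_multiplier=3.0,
                       buffer_fraction=0.25, round_to_mb=64):
    """Estimate an initial memory setting from package size and input size.

    code_multiplier, buffer_fraction and round_to_mb are illustrative
    defaults, not AWS recommendations.
    """
    code_estimate = package_mb * code_multiplier          # Step 1: code memory
    baseline = code_estimate + input_mb                   # Steps 2 and 3: add data memory
    with_buffer = baseline * (1 + buffer_fraction)        # headroom for surprises
    rounded = math.ceil(with_buffer / round_to_mb) * round_to_mb
    return max(LAMBDA_MIN_MEMORY_MB, rounded)


if __name__ == "__main__":
    # 50 MB package, 2 MB JSON input, as in the example above.
    print(baseline_memory_mb(50, 2))
```

Treat the output as a first setting to test, not as a measured requirement.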

Monitoring Lambda Function Metrics

Effective monitoring of Lambda function memory usage is crucial for optimizing performance and cost. It allows for informed adjustments to memory allocation, preventing over-provisioning (wasting resources) or under-provisioning (leading to performance bottlenecks). Implementing a robust monitoring strategy provides the data needed to understand how memory is being utilized during function invocations and to identify potential areas for improvement.

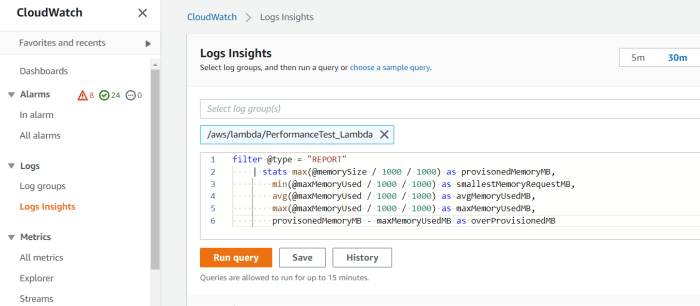

Setting Up Monitoring for Lambda Function Memory Usage

Because Lambda is an AWS service, monitoring its memory usage relies on Amazon CloudWatch, which collects and aggregates metrics and logs for every function.

To configure monitoring:

- Enable Monitoring: Standard metrics and logs are sent to CloudWatch by default, provided the function’s execution role allows writing to CloudWatch Logs. Verify this in the function’s settings in the AWS Management Console. To obtain memory metrics in CloudWatch, enable CloudWatch Lambda Insights for the function.

- Choose Metrics: Select the relevant metrics to monitor. The standard CloudWatch metrics include “Duration,” “Invocations,” “Errors,” and “Throttles.” Memory usage is not a standard metric: it is reported as “Max Memory Used” in the REPORT line that Lambda writes to the log after each invocation, and as memory metrics when Lambda Insights is enabled. This guide refers to that value as “Memory Used.” The choice of metrics will depend on the specific needs of the function and the goals of the monitoring effort.

- Set Up Alarms: Configure alarms to trigger notifications when specific thresholds are breached. For example, an alarm can be set to alert when “Memory Used” consistently exceeds a certain percentage of the allocated memory. This helps in proactively identifying potential memory issues.

- Configure Logging: Lambda functions automatically send logs to CloudWatch Logs. These logs provide detailed information about function executions, including any errors or warnings that can help diagnose memory-related problems.

- Establish Dashboards: Create dashboards to visualize the collected metrics. Dashboards provide a consolidated view of the function’s performance over time, making it easier to identify trends and anomalies. They allow for a comprehensive understanding of the function’s behavior.

The exact console steps change over time. Consult the AWS Lambda and CloudWatch documentation for detailed instructions.

Interpreting Memory-Related Metrics

Understanding the meaning of memory-related metrics is essential for making informed decisions about memory allocation. Several key metrics provide insight into memory usage and performance.

- Memory Used: This metric represents the amount of memory (in MB) that the Lambda function utilized during an invocation. It is the most direct indicator of memory consumption and is reported as the peak (“Max Memory Used”) reached during the function’s execution.

- Duration: This metric measures the execution time (in milliseconds) of the Lambda function. High duration can be an indicator of memory constraints, as the function may be spending more time on garbage collection. It can also indicate too little CPU, since CPU is allocated in proportion to memory.

- Invocations: This metric tracks the number of times the Lambda function has been invoked. Analyzing invocations provides context to the other metrics, such as “Memory Used” and “Duration.”

- Errors: This metric counts the number of errors that occurred during function executions. Errors can be related to memory issues, such as “OutOfMemoryError” exceptions. Tracking errors can provide a better understanding of potential memory problems.

- Throttles: This metric tracks the number of times the Lambda function was throttled due to exceeding concurrency limits. Throttling is not directly related to memory usage but can impact performance and cost.

Analyzing these metrics together is crucial. For instance, a high “Memory Used” value coupled with a high “Duration” suggests that the function might be under-provisioned for memory. A low “Memory Used” value and low “Duration” could suggest the function is over-provisioned, leading to cost inefficiencies.
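One way to obtain these values per invocation is to parse the REPORT line that Lambda writes to CloudWatch Logs at the end of each invocation. The sketch below assumes the usual REPORT layout (tab-separated fields, with `Init Duration` present only on cold starts); `parse_report_line` and the sample request ID are hypothetical.

```python
import re

# Field order as it appears in Lambda REPORT log lines; Init Duration is optional.
REPORT_PATTERN = re.compile(
    r"Duration: (?P<duration_ms>[\d.]+) ms\s+"
    r"Billed Duration: (?P<billed_ms>[\d.]+) ms\s+"
    r"Memory Size: (?P<memory_size_mb>\d+) MB\s+"
    r"Max Memory Used: (?P<max_used_mb>\d+) MB"
    r"(?:\s+Init Duration: (?P<init_ms>[\d.]+) ms)?"
)


def parse_report_line(line):
    """Return the metrics in a REPORT line as a dict, or None if it is not one."""
    if not line.startswith("REPORT"):
        return None
    match = REPORT_PATTERN.search(line)
    if match is None:
        return None
    init = match.group("init_ms")
    memory_size = int(match.group("memory_size_mb"))
    max_used = int(match.group("max_used_mb"))
    return {
        "duration_ms": float(match.group("duration_ms")),
        "billed_ms": float(match.group("billed_ms")),
        "memory_size_mb": memory_size,
        "max_used_mb": max_used,
        "init_ms": float(init) if init is not None else None,  # None on warm starts
        "utilization": max_used / memory_size,
    }


if __name__ == "__main__":
    sample = ("REPORT RequestId: example-id\tDuration: 102.25 ms\t"
              "Billed Duration: 103 ms\tMemory Size: 128 MB\t"
              "Max Memory Used: 51 MB\tInit Duration: 151.66 ms\t")
    print(parse_report_line(sample))
```

A utilization close to 1.0 together with a long duration points toward under-provisioning; a very low utilization suggests there is room to test a smaller setting.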

Creating Custom Dashboards to Visualize Memory Performance

Custom dashboards are invaluable for visualizing and analyzing Lambda function memory performance. They allow for the aggregation of key metrics into a single, easily interpretable view. Dashboards can be customized to include graphs, charts, and tables that display the most relevant information.

Useful dashboard views include:

- Memory Usage Over Time: Create a graph that plots “Memory Used” over time. This allows you to identify trends, such as increasing memory consumption, and to detect anomalies, such as sudden spikes in memory usage. The time range should be adjustable to analyze different periods.

- Memory Used vs. Duration: Create a scatter plot that displays the relationship between “Memory Used” and “Duration.” This can help to identify if higher memory usage is directly correlated with longer execution times. A strong positive correlation might suggest memory pressure.

- Error Rate: Create a chart that displays the error rate over time. This can help you identify potential memory-related issues that are causing function failures. An increase in the error rate could indicate memory problems.

- Average Memory Used by Function Version: Create a table that displays the average “Memory Used” for each version of the Lambda function. This is useful for comparing the memory usage of different code deployments. This helps in identifying if a new version of the code is more or less memory efficient.

- Alerts and Notifications: Incorporate alarm states into the dashboard. This will visually highlight when any of the monitored metrics have breached predefined thresholds.

Dashboards should be designed to provide actionable insights. For example, if the “Memory Used” consistently exceeds 80% of the allocated memory, an alarm can trigger a notification, and the dashboard can be used to analyze the underlying causes and determine the appropriate memory allocation. Consider adding annotations to the dashboard to highlight specific events or code deployments.
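The 80% rule mentioned above can be expressed as a simple check over recent samples. This is a minimal sketch: the function name `exceeds_memory_threshold` is hypothetical, and interpreting "consistently" as "at least half of the samples" is an assumption to adjust to your own alerting policy.

```python
def exceeds_memory_threshold(samples_mb, allocated_mb,
                             threshold=0.8, min_fraction=0.5):
    """Return True when enough samples exceed threshold * allocated memory.

    samples_mb: peak memory per invocation, in MB.
    min_fraction: share of samples that must exceed the limit (assumed policy).
    """
    if not samples_mb:
        return False
    limit = allocated_mb * threshold
    over_limit = sum(1 for used in samples_mb if used > limit)
    return over_limit / len(samples_mb) >= min_fraction


if __name__ == "__main__":
    recent = [110, 112, 90, 115]          # MB used by recent invocations
    print(exceeds_memory_threshold(recent, allocated_mb=128))
```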

Profiling Code for Memory Optimization

Profiling Lambda function code is a critical step in identifying and resolving memory-related performance issues. By analyzing the function’s behavior during execution, developers can pinpoint areas where memory is being consumed inefficiently, leading to improved performance and reduced costs. This section details the tools and techniques available for profiling, along with a structured approach to memory optimization.

Tools and Techniques for Profiling

Several tools and techniques are available for profiling Lambda function code. The choice of tool often depends on the programming language used and the specific memory issues being investigated.

- Language-Specific Profilers: Most programming languages offer built-in or readily available profiling tools. For instance:

  - Python: The `cProfile` and `memory_profiler` modules are commonly used. `cProfile` provides call graph information, showing function execution times, while `memory_profiler` tracks memory consumption line by line.

  - Node.js: The Node.js `inspector` can be used with tools like Chrome DevTools or `node-inspect` to profile memory usage and identify memory leaks.

  - Java: Java Virtual Machine (JVM) tools like JProfiler, YourKit, and VisualVM can be employed to analyze memory allocation, garbage collection behavior, and identify memory leaks.

- AWS X-Ray: AWS X-Ray is primarily a distributed tracing service. It does not report memory usage directly, but when integrated with the function’s code it shows where time is spent within an invocation and across downstream services. This is particularly useful for locating bottlenecks across different services within a larger application, which can then be examined with a memory profiler.

- Third-Party Profilers: Tools like Datadog and New Relic offer comprehensive application performance monitoring (APM) solutions that include memory profiling capabilities for Lambda functions. These tools often provide more advanced features, such as automatic anomaly detection and detailed memory usage dashboards.
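For Python functions, a quick way to see which lines allocate the most memory is the standard-library `tracemalloc` module. It is used here instead of the third-party `memory_profiler` mentioned above so that the sketch runs without extra dependencies. `build_records` is a hypothetical workload and `top_allocations` a hypothetical helper.

```python
import tracemalloc


def build_records(count):
    """Hypothetical workload: builds a list of small dictionaries."""
    return [{"id": i, "payload": str(i) * 20} for i in range(count)]


def top_allocations(func, *args, limit=3):
    """Run func under tracemalloc and return its largest allocation sites.

    Returns a list of (location, size_in_bytes) tuples, largest first.
    """
    tracemalloc.start()
    try:
        result = func(*args)                 # keep the result alive for the snapshot
        snapshot = tracemalloc.take_snapshot()
    finally:
        tracemalloc.stop()
    del result
    stats = snapshot.statistics("lineno")[:limit]
    return [
        (f"{stat.traceback[0].filename}:{stat.traceback[0].lineno}", stat.size)
        for stat in stats
    ]


if __name__ == "__main__":
    for location, size in top_allocations(build_records, 10_000):
        print(f"{size / 1024:8.1f} KiB  {location}")
```

Run this locally or in a test invocation with representative input; tracing adds overhead, so it is best left out of production handlers.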

Pinpointing Memory Leaks and Inefficient Data Structures

Memory leaks and inefficient data structures are common culprits behind excessive memory consumption in Lambda functions. Identifying these issues requires a systematic approach.

- Memory Leaks: Memory leaks occur when a program allocates memory but fails to release it when it’s no longer needed. This can lead to a gradual increase in memory usage over time, eventually causing the function to run out of memory.

  - Detecting Leaks: Profilers can help identify memory leaks by tracking memory allocations and deallocations. Tools that provide memory snapshots at different points in time can highlight growing memory allocations.

  - Common Causes: Common causes of memory leaks include:

    - Unclosed file handles or database connections.

    - Circular references in object graphs.

    - Accumulating data in global variables.

- Inefficient Data Structures: Using inappropriate data structures can lead to unnecessary memory consumption. For example:

  - Choosing the wrong data structure: Using a list when a set would be more appropriate can lead to storing duplicate data, consuming more memory.

  - Over-allocating memory: Creating data structures with excessive initial sizes can waste memory, particularly if the structure is rarely fully utilized.

  - Unnecessary object creation: Creating numerous objects in loops or recursive functions can quickly consume memory.

Procedure for Using a Profiler to Identify Memory-Intensive Operations

A structured procedure is essential for effectively using a profiler to identify memory-intensive operations. This procedure involves setting up the profiling environment, executing the function, analyzing the results, and iteratively optimizing the code.

- Set Up the Profiling Environment:

  - Choose a profiling tool compatible with the function’s programming language and deployment environment.

  - Configure the profiler to collect the necessary data, such as memory allocation statistics, function call traces, and memory snapshots.

  - Ensure that the profiling environment does not significantly impact the function’s performance, which could skew the results.

- Execute the Lambda Function with Profiling Enabled:

  - Trigger the Lambda function with a representative workload, simulating the typical inputs and operations the function performs.

  - Allow the function to run through its complete execution path, capturing the memory usage data.

  - If possible, execute the function with different input sizes or workloads to observe how memory consumption scales.

- Analyze the Profiling Results:

  - Examine the profiling output to identify potential memory bottlenecks. Look for:

    - Functions that consume a significant amount of memory.

    - Data structures with large memory footprints.

    - Patterns of memory allocation and deallocation.

  - Use call graphs to understand the sequence of function calls and their memory impact.

  - Analyze memory snapshots to identify memory leaks and the size of allocated objects.

- Optimize the Code:

  - Based on the analysis, identify the code sections that contribute most to memory consumption.

  - Refactor the code to address the identified issues:

    - Optimize data structures by choosing the most memory-efficient options.

    - Reduce object creation by reusing existing objects where possible.

    - Close file handles and database connections promptly.

    - Remove circular references.

  - Test the optimized code by rerunning the profiler to verify that the changes have improved memory usage.

- Iterate and Refine:

  - Repeat the profiling and optimization process until the function’s memory usage is within acceptable limits and the performance meets the requirements.
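The "run with different input sizes" and "rerun after optimizing" steps can be sketched with `tracemalloc` from the Python standard library. The two workloads below are hypothetical stand-ins for a function before and after optimization; `peak_bytes` and `scaling_table` are illustrative helper names.

```python
import tracemalloc


def peak_bytes(func, arg):
    """Return the peak traced memory (in bytes) while running func(arg)."""
    tracemalloc.start()
    try:
        func(arg)
        return tracemalloc.get_traced_memory()[1]   # (current, peak) -> peak
    finally:
        tracemalloc.stop()


def load_all(n):
    """Before: materializes every value in a list, so memory grows with n."""
    values = [i * i for i in range(n)]
    return sum(values)


def stream(n):
    """After: sums values from a generator, so memory stays roughly flat."""
    return sum(i * i for i in range(n))


def scaling_table(func, sizes):
    """Measure peak memory for each input size."""
    return {n: peak_bytes(func, n) for n in sizes}


if __name__ == "__main__":
    sizes = [1_000, 10_000, 100_000]
    for name, func in (("load_all", load_all), ("stream", stream)):
        for n, peak in scaling_table(func, sizes).items():
            print(f"{name:>8}  n={n:>7}  peak={peak / 1024:9.1f} KiB")
```

If peak memory grows in step with input size, the function will eventually hit its memory limit as inputs grow; a flat profile indicates the working set is bounded.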

Testing Memory Configurations

Experimentation is crucial for determining the optimal memory allocation for a Lambda function. It allows for empirical observation of how different memory settings affect performance characteristics like execution time and cost. A systematic approach to testing ensures reliable results and informed decision-making.

Step-by-Step Guide to Adjusting and Testing Memory

The following steps provide a structured methodology for adjusting Lambda function memory and evaluating its impact. This process should be iterated upon to refine the memory setting until the desired balance between performance and cost is achieved.

- Select the Lambda Function: Identify the Lambda function for which you want to optimize memory. This selection should be based on the findings from the previous stages of understanding memory usage and identifying potential bottlenecks.

- Access the Configuration Settings: Navigate to the AWS Management Console, select the Lambda service, and then choose the target function. Within the function’s configuration, locate the ‘Memory’ setting.

- Initial Memory Configuration: Begin with the current memory setting or a baseline value. If no prior analysis has been performed, a reasonable starting point is the default of 128MB or a value derived from initial profiling.

- Deploy the Initial Configuration: Save the changes to deploy the initial memory configuration. This activates the specified memory allocation for the function’s subsequent invocations.

- Define Test Data: Prepare a set of test data that reflects the typical workload of the Lambda function. This data should be representative of the function’s input and processing requirements. The data’s size and complexity should simulate real-world scenarios.

- Execute Test Runs: Invoke the Lambda function multiple times with the defined test data. Record the execution time and any other relevant metrics (e.g., cold start time, memory utilization reported by the function itself, or custom metrics). Consider running the function a sufficient number of times to account for variations.

- Record Results: Log the execution time, billed duration, and any other relevant metrics for each test run, and derive the cost from the billed duration and the configured memory. This data forms the basis for comparing the performance of different memory configurations.

- Adjust Memory Incrementally: Increase or decrease the memory setting in increments. Common increments are 64MB or 128MB, but the specific increment should be chosen based on the initial profiling results and the expected impact of memory changes.

- Repeat for Each Configuration: Repeat the deployment, testing, and recording steps for each new memory configuration. Each iteration should use the same test data and invocation method to ensure consistency.

- Analyze the Results: Analyze the recorded data to identify the relationship between memory settings, execution time, and cost. Look for patterns and trends that indicate the optimal memory allocation.

- Iterate and Refine: Based on the analysis, iterate the process by adjusting the memory setting and repeating the tests. This iterative approach allows for fine-tuning the memory allocation and achieving the desired performance and cost balance.
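The adjust, invoke, and record loop can be automated. The sketch below takes an already constructed Lambda client (for example `boto3.client("lambda")`) as a parameter, so the code itself has no third-party imports. It relies on the boto3 calls `update_function_configuration`, the `function_updated` waiter, and `invoke` with `LogType="Tail"`, which returns the end of the log base64-encoded; the helper name `sweep_memory` and its return shape are assumptions for illustration.

```python
import base64
import json
import re
from statistics import median

BILLED_RE = re.compile(r"Billed Duration: ([\d.]+) ms")


def sweep_memory(lambda_client, function_name, memory_sizes_mb, payload, runs=5):
    """Invoke a function at several memory sizes.

    Returns {memory_mb: median billed duration in ms, or None if no
    REPORT line was found in the returned log tail}.
    """
    payload_bytes = json.dumps(payload).encode("utf-8")
    results = {}
    for size in memory_sizes_mb:
        # Apply the new memory setting and wait until the update has completed.
        lambda_client.update_function_configuration(
            FunctionName=function_name, MemorySize=size
        )
        lambda_client.get_waiter("function_updated").wait(FunctionName=function_name)

        billed = []
        for _ in range(runs):
            response = lambda_client.invoke(
                FunctionName=function_name, Payload=payload_bytes, LogType="Tail"
            )
            log_tail = base64.b64decode(response["LogResult"]).decode(
                "utf-8", errors="replace"
            )
            match = BILLED_RE.search(log_tail)
            if match:
                billed.append(float(match.group(1)))
        results[size] = median(billed) if billed else None
    return results
```

A call might look like `sweep_memory(boto3.client("lambda"), "my-function", [128, 256, 512], {"key": "value"})`, where the function name and payload are placeholders. The first invocation after each configuration change is a cold start, so use enough runs for the median to reflect warm behavior, and run the sweep against a test copy of the function rather than production.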

Test Plan for Evaluating Memory Configurations

A well-defined test plan is essential for ensuring the reliability and reproducibility of the testing process. This plan outlines the specific steps and parameters for evaluating different memory configurations.

- Define Memory Configuration Levels: Specify the memory settings to be tested. For example, starting at 128MB, then increasing in increments of 64MB up to 1024MB. Consider a range that encompasses expected operational boundaries.

- Establish Baseline: Run the tests using the function’s current memory setting as a baseline for comparison. This provides a reference point for evaluating the impact of changes.

- Test Data Preparation: Create a representative set of test data that reflects the function’s typical workload. The data should include different input sizes and complexities to assess performance under various conditions.

- Test Execution Strategy: Determine the number of test runs per memory configuration. Run each test configuration multiple times to account for variations and obtain statistically significant results. Consider using a load testing tool to simulate concurrent invocations.

- Metrics to be Collected: Define the metrics to be collected during each test run. This includes execution time, cost (estimated from billed duration and configured memory, and checked against AWS Cost Explorer), memory utilization (reported by the Lambda function itself or through CloudWatch), and any other relevant custom metrics.

- Monitoring Tools: Utilize monitoring tools like AWS CloudWatch to capture performance metrics. CloudWatch provides real-time monitoring, logging, and alerting capabilities.

- Cost Estimation: Estimate the cost of each memory configuration based on execution time and memory usage. AWS provides pricing calculators for Lambda functions.

- Reporting and Analysis: Prepare a report summarizing the test results, including execution times, costs, and memory utilization for each memory configuration. Analyze the data to identify the optimal memory setting.
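Parts of this plan are easy to script: generating the memory levels and summarizing repeated runs. The sketch below uses the 128MB to 1024MB range in 64MB steps from the plan above; the helper names and the choice of mean, median, 95th percentile, and maximum as summary statistics are assumptions.

```python
from statistics import mean, median, quantiles


def memory_levels(start_mb=128, stop_mb=1024, step_mb=64):
    """List the memory settings to test, inclusive of both ends."""
    return list(range(start_mb, stop_mb + 1, step_mb))


def summarize_runs(durations_ms):
    """Summarize repeated measurements for one memory configuration."""
    if not durations_ms:
        raise ValueError("at least one measurement is required")
    ordered = sorted(durations_ms)
    if len(ordered) >= 2:
        # The last of the 19 cut points for n=20 is the 95th percentile.
        p95 = quantiles(ordered, n=20, method="inclusive")[-1]
    else:
        p95 = ordered[0]
    return {
        "runs": len(ordered),
        "mean_ms": mean(ordered),
        "median_ms": median(ordered),
        "p95_ms": p95,
        "max_ms": ordered[-1],
    }


if __name__ == "__main__":
    print(memory_levels())
    print(summarize_runs([812, 790, 805, 1430, 798]))   # one cold start among warm runs
```

Reporting a percentile alongside the mean keeps an occasional cold start from hiding in, or dominating, the average.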

Measuring the Impact of Memory Changes

The impact of memory changes on execution time and cost is quantifiable and directly measurable. This section describes how to measure these impacts and interpret the results.

- Execution Time Measurement: Measure the execution time of the Lambda function for each memory configuration. This can be achieved through CloudWatch metrics, which automatically track function duration. The execution time is the time elapsed from the invocation of the function until its completion.

- Cost Calculation: Calculate the cost of each memory configuration based on execution time and memory usage. The AWS Lambda pricing model charges based on the amount of memory allocated to the function and the duration of the function’s execution.

- Data Visualization: Visualize the relationship between memory, execution time, and cost using graphs and charts. Plotting execution time and cost against memory allocation allows for easy identification of performance trends.

- Identify Optimal Memory Setting: Analyze the data to identify the memory setting that provides the best balance between performance and cost. The optimal setting is the one that delivers acceptable execution time while minimizing cost.

- Example: Consider a Lambda function processing image files, with illustrative measurements. At 128MB, the function takes 5 seconds to process a 1MB image, which is billed as 0.125 GB × 5 s = 0.625 GB-seconds. Increasing the memory to 256MB reduces execution time to 3 seconds, but the billed compute rises to 0.75 GB-seconds (20% more). Further increasing to 512MB results in a 2-second execution time at 1.0 GB-seconds (60% more than at 128MB).

In this example, 256MB could be considered optimal, balancing reduced execution time with a manageable cost increase.

- Considerations:

  - Memory-Intensive Operations: Functions performing memory-intensive operations (e.g., image processing, data transformation) will likely benefit from increased memory.

  - I/O Bound Operations: Functions primarily limited by I/O (e.g., database queries, network requests) may not show significant performance improvements with increased memory.

  - Cold Starts: Memory has a comparatively small effect on cold start times, so measure cold and warm invocations separately rather than assuming that more memory shortens cold starts.
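The cost side of this comparison can be computed directly from memory size and billed duration. The sketch below uses the durations from the image-processing example and assumes the published x86 on-demand prices for us-east-1 at the time of writing ($0.0000166667 per GB-second and $0.20 per million requests); prices vary by region and architecture, so treat the constants as placeholders. The helper names are hypothetical.

```python
# Assumed prices (x86, us-east-1); check the current AWS Lambda pricing page.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_REQUEST = 0.20 / 1_000_000


def invocation_cost(memory_mb, billed_ms):
    """Cost in USD of one invocation: compute charge plus request charge."""
    gb_seconds = (memory_mb / 1024) * (billed_ms / 1000)
    return gb_seconds * PRICE_PER_GB_SECOND + PRICE_PER_REQUEST


def cheapest_setting(measurements, max_duration_ms=None):
    """Pick the lowest-cost memory size, optionally under a latency ceiling.

    measurements: {memory_mb: billed duration in ms}
    """
    candidates = {
        size: ms for size, ms in measurements.items()
        if max_duration_ms is None or ms <= max_duration_ms
    }
    if not candidates:
        return None
    return min(candidates, key=lambda size: invocation_cost(size, candidates[size]))


if __name__ == "__main__":
    measured = {128: 5000, 256: 3000, 512: 2000}   # durations from the example
    for size, ms in measured.items():
        print(f"{size:>4} MB  {ms:>5} ms  ${invocation_cost(size, ms):.8f}")
    print("cheapest overall:", cheapest_setting(measured))
    print("cheapest under 3 s:", cheapest_setting(measured, max_duration_ms=3000))
```

With a latency ceiling of 3 seconds the 256MB setting is selected, which matches the reasoning in the example: the cheapest configuration is not always acceptable, so cost is minimized subject to a performance requirement.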

Data Processing and Memory Consumption

The memory footprint of a Lambda function is heavily influenced by the nature and volume of data it processes. Efficient data handling is paramount for minimizing memory usage, ensuring function scalability, and preventing potential out-of-memory errors. Understanding the interplay between data characteristics and memory allocation is crucial for optimizing Lambda function performance.

Impact of Data Input Volume and Processing Complexity

The volume of data ingested and the complexity of the processing operations directly correlate with memory consumption. Larger datasets necessitate more memory to hold the data during processing. Similarly, computationally intensive tasks, such as complex transformations or aggregations, can significantly increase memory usage due to the temporary storage of intermediate results and the overhead of the processing algorithms.

For example, consider a Lambda function processing log files. If the function needs to parse and analyze a single log file of 1 MB, it will require significantly less memory than if it needs to process a 1 GB log file. The memory required will further increase if the function performs complex operations like identifying and correlating specific events across the entire log file, as this involves holding a larger working set in memory.

Furthermore, the choice of processing algorithms can dramatically impact memory usage. A naive implementation of a sorting algorithm, for example, might require loading the entire dataset into memory. In contrast, an external merge sort, which sorts the data in smaller chunks and then merges the sorted chunks, keeps only a fraction of the dataset in memory at any time.
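To make the contrast with an in-memory sort concrete, here is a minimal sketch of a chunked (external) merge sort. The function name `external_sort` and the `chunk_size` parameter are hypothetical, and the sketch assumes that each line contains no embedded newline and that sorted runs fit in temporary storage (on Lambda, the function's `/tmp` space).

```python
import heapq
import tempfile
from itertools import islice


def external_sort(lines, chunk_size=1000):
    """Yield lines in sorted order, sorting at most `chunk_size` lines at a time."""
    runs = []
    iterator = iter(lines)
    try:
        while True:
            # Sort one bounded chunk in memory and spill it to a temporary file.
            chunk = sorted(islice(iterator, chunk_size))
            if not chunk:
                break
            run = tempfile.TemporaryFile(mode="w+")
            run.writelines(line + "\n" for line in chunk)
            run.seek(0)
            runs.append(run)
        # Merge the sorted runs lazily; only one line per run is held at a time.
        streams = [(line.rstrip("\n") for line in run) for run in runs]
        yield from heapq.merge(*streams)
    finally:
        for run in runs:
            run.close()


print(list(external_sort(["d", "a", "c", "b", "e"], chunk_size=2)))
# ['a', 'b', 'c', 'd', 'e']
```

Peak memory is governed by `chunk_size` rather than by the size of the input, at the price of extra disk I/O.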

Memory Requirements for Different Data Formats

The choice of data format influences the memory footprint of a Lambda function due to differences in data representation and the associated parsing overhead. Different formats exhibit varying levels of compression and complexity, impacting how efficiently they can be loaded and processed in memory.

- JSON (JavaScript Object Notation): JSON is a widely used, human-readable format for data interchange. However, its verbose nature can lead to larger file sizes compared to more compact formats. Parsing JSON requires a parser, which consumes memory to construct object representations in memory. The memory footprint scales with the complexity of the JSON structure and the size of the data.

- CSV (Comma-Separated Values): CSV is a simple, text-based format suitable for tabular data. CSV files typically have a smaller file size compared to JSON for equivalent data, particularly when dealing with numerical data. Parsing CSV involves reading lines and splitting them into fields, which is generally less memory-intensive than parsing JSON. However, the lack of a defined schema can make it challenging to optimize parsing.

- Binary Formats (e.g., Protocol Buffers, Avro): Binary formats are designed for efficient storage and transmission of data. They use compact, schema-driven encodings (optionally combined with compression), which usually results in significantly smaller payloads than text-based formats. Parsing binary formats can be more complex than parsing text formats, but the reduced data size can lead to lower overall memory consumption. For instance, Protocol Buffers offer a compact representation of data and efficient serialization/deserialization, minimizing memory overhead during data processing.

For instance, consider a scenario where a Lambda function needs to process 100 MB of data. The memory usage would likely be higher if the data is stored in JSON format than if the same data is stored in a binary format like Protocol Buffers or Avro, because the binary encodings avoid repeating field names and store numbers in fewer bytes.
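The size difference between text and binary encodings can be illustrated with the standard library alone. This sketch uses Python's `struct` module as a stand-in for a schema-based binary format; it is not Protocol Buffers or Avro, and the `sensor_id`/`reading` record layout is a made-up example.

```python
import json
import struct

# Hypothetical records: (sensor_id, reading) pairs.
records = [(i, i * 0.5) for i in range(1000)]

# Text encoding: every record repeats its field names.
json_bytes = json.dumps(
    [{"sensor_id": sensor_id, "reading": reading} for sensor_id, reading in records]
).encode("utf-8")

# Fixed binary layout: little-endian unsigned 32-bit int + 64-bit float
# = 12 bytes per record.
record_format = struct.Struct("<Id")
binary_bytes = b"".join(
    record_format.pack(sensor_id, reading) for sensor_id, reading in records
)

print(len(json_bytes), len(binary_bytes))
```

The binary payload is exactly 12 bytes per record, while the JSON payload carries the field names and decimal text for every record. Parsed in-memory objects add further overhead on top of the raw payload in both cases.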

Strategy for Optimizing Memory Usage with Large Datasets

Processing large datasets in a Lambda function requires a strategic approach to minimize memory consumption and prevent out-of-memory errors. Several techniques can be employed to optimize memory usage.

- Streaming Data Processing: Instead of loading the entire dataset into memory at once, process the data in smaller chunks or streams. This approach reduces the peak memory usage by only keeping a portion of the data in memory at any given time. Libraries like `csv` in Python or streaming parsers in other languages are valuable for this purpose.

- Lazy Loading: Only load data elements as they are needed. This approach can be useful when dealing with large datasets where only a subset of the data is required for a specific operation. Libraries that support lazy evaluation or generators can be leveraged to defer data loading until it’s explicitly requested.

- Data Compression: Compress the data before processing. This reduces the size of the data stored in memory. Libraries such as `gzip` or `zlib` can be used for compression and decompression. However, compression/decompression operations consume CPU resources, so the trade-off between CPU usage and memory savings must be considered.

- Choosing Efficient Data Structures and Algorithms: Select data structures and algorithms that minimize memory footprint. For instance, use efficient data structures like hash tables for lookups and avoid unnecessary object creation.

- Optimize Parsing: Choose appropriate parsing libraries and methods for the data format. Consider using optimized parsers for formats like JSON or CSV that minimize memory allocation during parsing.

- Offload Processing to External Services: For extremely large datasets or complex processing tasks, consider offloading parts of the processing to external services like Amazon S3, Amazon EMR, or AWS Glue. This allows the Lambda function to act as an orchestrator and avoids the need to handle the entire dataset within the Lambda function’s memory constraints.

An example illustrating this strategy would be processing a large CSV file containing sales data. Instead of loading the entire file into memory, the function could read the file line by line using a streaming approach, process each line, and then discard it. This method reduces the memory footprint to the size of a single line of data plus any intermediate storage required during processing, significantly reducing the risk of memory-related errors.

Furthermore, utilizing a library like `pandas` with the `chunksize` parameter enables the processing of the CSV file in manageable chunks, further optimizing memory usage.
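The line-by-line approach described for the sales CSV might look like the following sketch, which uses the standard `csv` module rather than `pandas`. The column names `region` and `amount` and the function name are assumptions made for illustration.

```python
import csv
import io
from collections import defaultdict


def total_sales_by_region(csv_file):
    """Aggregate sales per region while reading one row at a time."""
    totals = defaultdict(float)
    for row in csv.DictReader(csv_file):
        # Only the current row and the running totals are kept in memory.
        totals[row["region"]] += float(row["amount"])
    return dict(totals)


# An in-memory sample stands in for a large file or a streamed S3 object body.
sample = io.StringIO("region,amount\nnorth,10.5\nsouth,4.0\nnorth,2.5\n")
print(total_sales_by_region(sample))  # {'north': 13.0, 'south': 4.0}
```

Memory use grows with the number of distinct regions, not with the number of rows, which is what keeps the footprint small for large files.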

Code Optimization Techniques for Memory Efficiency

Optimizing code for memory efficiency is crucial when working with Lambda functions, especially considering their resource constraints. Efficient code minimizes the memory footprint, leading to faster execution times, reduced costs, and improved scalability. By employing strategic coding practices, developers can significantly impact the performance and resource utilization of their Lambda functions.

Importance of Efficient Code Practices in Reducing Memory Consumption

The memory consumed by a Lambda function directly affects its performance and cost. Inefficient code leads to unnecessary memory allocation, which can cause several problems.

- Increased Execution Time: As a function approaches its memory limit, garbage collection pressure can degrade performance and lengthen execution times; if it exceeds the limit, the invocation is terminated.

- Higher Costs: AWS charges for Lambda functions based on configured memory and execution time. Inefficient code forces a larger memory setting or runs longer and, consequently, increases costs.

- Scalability Challenges: Excessive memory usage can limit the number of concurrent executions a function can handle, affecting its ability to scale effectively.

- Runtime Errors: In extreme cases, memory exhaustion can lead to runtime errors and function failures.

Therefore, adopting memory-efficient coding practices is essential for optimizing Lambda function performance, reducing costs, and ensuring scalability.

Examples of Optimizing Code to Minimize Memory Footprint

Several techniques can be employed to optimize code for memory efficiency. These techniques focus on minimizing the amount of data stored in memory and reducing the frequency of memory allocation.

- Using Streams: Streams process data in a continuous flow, avoiding the need to load the entire dataset into memory at once. This is particularly beneficial when dealing with large files or datasets.

- Lazy Loading: Delaying the initialization of objects or the loading of data until it’s actually needed. This prevents unnecessary memory allocation for resources that might not be used during a function’s execution.

- Data Structures: Choosing appropriate data structures for the task at hand. For example, using sets instead of lists when only unique elements matter avoids storing duplicates and makes membership checks faster.

- Efficient String Manipulation: Using string builders or string buffers instead of repeatedly concatenating strings, which can create numerous intermediate string objects, consuming extra memory.

- Object Pooling: Reusing objects instead of creating new ones repeatedly, which reduces the overhead of object creation and garbage collection.

- Resource Management: Properly closing file handles, database connections, and other resources to release memory when they are no longer needed.
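As one illustration of the lazy-loading technique from the list above, the sketch below builds a resource only on the code path that needs it and then reuses it. The lookup table, the `country` event field, and the handler shape are hypothetical.

```python
from functools import lru_cache


@lru_cache(maxsize=1)
def get_lookup_table():
    """Build the (hypothetical) lookup table on first use, then reuse it."""
    return {code: code.upper() for code in ("us", "de", "jp")}


def handler(event, context=None):
    if "country" not in event:
        # This path never builds the table, so it never pays for its memory.
        return {"status": "skipped"}
    table = get_lookup_table()
    return {"status": "ok", "country": table.get(event["country"], "UNKNOWN")}
```

Calling `handler({"country": "de"})` builds the table once; later invocations in the same execution environment reuse the cached object instead of allocating a new one.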

Code Snippets Demonstrating Memory-Efficient Coding Practices

The following code snippets demonstrate memory-efficient coding practices in Python, Node.js, and Java. These examples illustrate how to apply the techniques mentioned above to reduce memory consumption in Lambda functions.

Python

Example: Using a generator to process a large file line by line (lazy loading).

```python
def process_file(filename):
    with open(filename, 'r') as f:
        for line in f:
            yield line.strip()

for line in process_file('large_file.txt'):
    # Process each line without loading the entire file into memory
    print(line)
```

Explanation: The `process_file` function uses a generator to read the file line by line. This avoids loading the entire file into memory, making it suitable for large files.

Node.js

Example: Using streams to process a large file (stream processing).

```javascript
const fs = require('fs');

const readStream = fs.createReadStream('large_file.txt', { encoding: 'utf8' });
let data = '';

readStream.on('data', (chunk) => {
  data += chunk;
  const lines = data.split('\n');
  for (let i = 0; i < lines.length - 1; i++) {
    // Process each line
    console.log(lines[i]);
  }
  // Keep the trailing partial line for the next chunk
  data = lines[lines.length - 1];
});

readStream.on('end', () => {
  // Process the last line
  if (data) {
    console.log(data);
  }
});
```

Explanation: This Node.js code uses the `fs.createReadStream` method to read a large file in chunks, which are then processed without loading the entire file into memory at once. The `data` variable accumulates chunks until a newline character is found, then processes the complete lines and keeps any trailing partial line for the next chunk. The `end` event handles any remaining data.

Java

Example: Using a BufferedReader to read a large file (stream processing).

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;

public class FileProcessor {
    public static void main(String[] args) {
        try (BufferedReader reader = new BufferedReader(new FileReader("large_file.txt"))) {
            String line;
            while ((line = reader.readLine()) != null) {
                // Process each line
                System.out.println(line);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

Explanation: This Java code uses a `BufferedReader` to read a large file line by line. The `readLine()` method reads one line at a time, minimizing memory usage. The try-with-resources statement ensures that the file is automatically closed, releasing resources.

Dependency Management and Memory Impact

The efficient management of dependencies is crucial for optimizing the memory footprint of Lambda functions. Including unnecessary dependencies or using inefficient dependency management strategies can significantly inflate the package size, leading to increased memory consumption, longer cold start times, and higher operational costs. This section delves into the relationship between dependencies and memory allocation, explores strategies for reducing dependency sizes, and compares various dependency management approaches.

Relationship Between Function Dependencies and Memory Allocation

Lambda functions execute within a container environment. When a function is invoked, the runtime environment loads the function code and the dependencies it imports into memory. The size of these dependencies therefore directly affects how much memory the function consumes and, in turn, how much memory must be configured for it. Larger dependencies mean more memory is required, which can lead to higher operational costs and potential performance bottlenecks. The impact is especially noticeable during cold starts, as the function must load its dependencies before it can begin processing requests.

Consider a scenario where a Lambda function requires a large machine learning library. If the function only uses a small subset of the library’s functionality, loading the entire library is inefficient and needlessly increases the function’s memory footprint. Furthermore, the operating system and Lambda runtime also consume memory, and the dependency size adds to the overall memory pressure.

Strategies for Reducing Dependency Sizes and Their Impact on Memory

Reducing the size of dependencies is a key strategy for optimizing Lambda function memory usage. Several techniques can be employed to achieve this goal.

- Minimize Package Size: Only include the necessary dependencies and their respective versions. Avoid importing entire libraries when only a small part of their functionality is needed. Consider alternative libraries that provide similar functionality with a smaller footprint.

- Use Lightweight Libraries: Prioritize lightweight libraries over heavier alternatives whenever possible, and evaluate the trade-offs between functionality and size. For example, Python's built-in `json` module adds nothing to the deployment package, while a specialized parser such as `orjson` is faster but is an extra compiled dependency; add it only when measurements show that JSON parsing is the bottleneck.

- Dependency Pruning: Regularly review and remove unused dependencies. Automated tools can assist in identifying and eliminating unused packages.

- Use Layers: Package common dependencies into Lambda layers. This allows multiple functions to share the same dependencies, reducing the overall size of individual function packages and minimizing redundant loading.

- Code Optimization: Optimize the function’s code to minimize the number of dependencies needed. Sometimes, functionality can be implemented directly within the function, eliminating the need for external libraries.

These strategies aim to minimize the amount of code that needs to be loaded into memory, resulting in reduced memory consumption and improved performance. The impact is most pronounced in scenarios with frequent invocations and stringent latency requirements.
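Before pruning dependencies, it helps to know which ones dominate the package. The following sketch sums the on-disk size of each top-level entry in a directory of installed packages; the function names are hypothetical, and the directory you pass in (for example a build folder or a layer's `python` directory) depends on your own packaging setup.

```python
import os


def package_sizes(site_packages_dir):
    """Return {top_level_entry: total_bytes} for a directory of installed packages."""
    sizes = {}
    for entry in os.scandir(site_packages_dir):
        if entry.is_dir(follow_symlinks=False):
            total = 0
            for root, _dirs, files in os.walk(entry.path):
                for name in files:
                    total += os.path.getsize(os.path.join(root, name))
        else:
            total = entry.stat().st_size
        sizes[entry.name] = total
    return sizes


def largest_packages(site_packages_dir, top_n=5):
    """Return the `top_n` largest entries as (name, bytes), largest first."""
    sizes = package_sizes(site_packages_dir)
    return sorted(sizes.items(), key=lambda item: item[1], reverse=True)[:top_n]
```

On-disk size is only a proxy: what a function actually holds in memory depends on which modules it imports at runtime, so the report is best used to decide where to look first.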

Comparing Dependency Management Strategies and Their Memory Footprint

Different dependency management strategies have varying impacts on memory usage. The following table compares common approaches, considering their characteristics and their implications on memory consumption:

| Dependency Management Strategy | Description | Memory Footprint Impact |
|---|---|---|
| Direct Package Inclusion | Dependencies are included directly within the function’s deployment package. | Highest: Includes all dependencies within the function’s package, potentially leading to larger deployment packages and increased memory usage. |
| Lambda Layers | Dependencies are packaged into Lambda layers, which are then attached to the function. | Medium: Reduces the overall size of the function package by sharing dependencies across multiple functions. |
| Containerized Dependencies (e.g., Docker Images) | Dependencies are included within a container image, which is then deployed as the Lambda function. | Variable: Depends on the size of the container image. Can be optimized using multi-stage builds and lightweight base images. |
| Serverless Package Managers (e.g., Serverless Framework) | Use tools to automate the packaging and deployment of dependencies. | Medium to Low: Optimizes the packaging process by excluding unnecessary files and leveraging features like Lambda Layers. |

The choice of dependency management strategy should be based on the specific needs of the Lambda function, considering factors like project size, complexity, and performance requirements. For instance, small projects might benefit from direct package inclusion, while larger projects with multiple functions can benefit from using Lambda Layers or containerized deployments.

Cold Start Considerations and Memory

The cold start phenomenon, a critical performance characteristic of serverless functions, significantly impacts Lambda function execution latency. The amount of memory allocated to a Lambda function directly influences its cold start time, due to the resources available for function initialization. Optimizing memory allocation is therefore essential for minimizing cold start latency and ensuring responsive function behavior.

Memory Allocation and Cold Start Times

Memory allocation impacts cold start times by influencing the speed at which the Lambda execution environment is provisioned and the function’s code is loaded. Higher memory allocation often translates to faster cold start times, up to a certain point, as it provides the function with more resources for initialization tasks.

- Resource Allocation: Lambda functions, when invoked for the first time (or after a period of inactivity), require the creation of a new execution environment. This environment includes the allocation of CPU resources, network interfaces, and storage. Higher memory settings often correlate with more CPU power allocated, which speeds up environment setup.

- Code Loading and Initialization: The function’s code, along with any dependencies, needs to be loaded into the environment during a cold start. More memory can help accelerate this process. If a function has large dependencies or complex initialization logic, increased memory can reduce the time spent on loading and initializing these components.

- Garbage Collection Impact: In languages like Java and .NET, the garbage collection (GC) process can significantly impact cold start times. Larger memory allocations allow the GC to operate more efficiently, reducing the frequency and duration of garbage collection cycles during initialization.

- Execution Environment Configuration: The AWS Lambda service configures the execution environment based on the allocated memory. This includes the CPU shares and other resources. More memory generally means more available CPU power.

Mitigating Cold Start Impact Through Memory Adjustment

Adjusting memory settings is a direct method to mitigate the impact of cold starts. The optimal memory setting depends on the function’s specific requirements and the trade-off between cold start performance and cost.

- Experimentation: Experimentation is crucial to determine the optimal memory setting. Start with a baseline and incrementally increase or decrease memory allocation while monitoring cold start times.

- Memory Optimization for Dependencies: If the function has large dependencies, optimizing these dependencies (e.g., using smaller package sizes, lazy loading) can help reduce cold start times, even with lower memory settings.

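- Proactive Warm-Up: Implement techniques like “keep-warm” strategies (e.g., using scheduled Amazon EventBridge rules, formerly CloudWatch Events, to periodically invoke the function) to keep instances warm and avoid cold starts. Provisioned concurrency is the managed alternative when cold starts must be avoided consistently.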

- Cost-Benefit Analysis: Consider the cost implications of increased memory allocation. While higher memory might reduce cold start times, it also increases the cost per invocation. Balance performance gains with cost considerations.

- Function Code Optimization: Optimize the function code to minimize initialization time. This includes reducing the amount of work performed during initialization, such as delaying unnecessary database connections or loading large datasets only when required.
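When experimenting with these mitigations, it is useful for the function itself to report whether an invocation was a cold start. The sketch below relies on the fact that module-level code runs once per execution environment; the `_CONFIG` placeholder and the returned fields are assumptions for illustration.

```python
import time

# Module-level code runs once per execution environment, i.e. on a cold start.
_init_started = time.perf_counter()
_CONFIG = {"table": "orders"}  # hypothetical stand-in for expensive setup work
_INIT_SECONDS = time.perf_counter() - _init_started

_is_cold_start = True


def handler(event, context=None):
    """Report whether this invocation was the first one in its environment."""
    global _is_cold_start
    cold = _is_cold_start
    _is_cold_start = False
    return {
        "cold_start": cold,
        # Time spent in this module's own setup, reported only on the cold path.
        "init_seconds": _INIT_SECONDS if cold else 0.0,
    }
```

The first call in a fresh environment returns `cold_start: True` and later calls return `False`, so logging this value alongside the memory setting makes cold and warm invocations easy to separate during analysis.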

Monitoring and Analyzing Cold Start Times Based on Memory Settings

Monitoring and analyzing cold start times based on different memory settings is critical for making informed decisions about resource allocation. CloudWatch provides essential metrics for tracking cold start performance.

- CloudWatch Metrics and Logs: Use the CloudWatch `Duration` metric to track the execution time of the function. The `Init Duration` value, which Lambda writes to the `REPORT` log line only for invocations that incurred a cold start, shows the time spent during initialization and directly reflects cold start performance.

- Experiment Tracking: Document the memory settings used during each experiment and the corresponding cold start times. This enables comparison and analysis of the impact of memory allocation.

- Statistical Analysis: Employ statistical analysis to identify trends and correlations between memory settings and cold start times. Calculate average, median, and percentiles of cold start durations for each memory configuration.

- Visualization: Visualize the cold start times using graphs and charts to easily compare performance across different memory settings. This helps to identify the optimal memory configuration.

- Automated Monitoring: Implement automated monitoring using tools like CloudWatch Alarms to alert when cold start times exceed a predefined threshold.

- Sampling Strategies: Implement sampling strategies for cold start measurements. Because cold starts occur infrequently, it is important to gather sufficient data to ensure statistical significance.
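The statistical analysis described above can start from the `REPORT` lines that Lambda writes to CloudWatch Logs. The sketch below assumes the usual tab-separated `REPORT` layout, in which `Init Duration` appears only for cold starts; the function name and the sample lines are illustrative, and the log lines would come from your own log export.

```python
import re
import statistics

# Matches REPORT lines that include an Init Duration (cold starts only).
REPORT_PATTERN = re.compile(
    r"Memory Size: (?P<memory>\d+) MB.*?Init Duration: (?P<init>[\d.]+) ms"
)


def cold_start_stats(log_lines):
    """Group cold start init durations (ms) by configured memory size."""
    by_memory = {}
    for line in log_lines:
        match = REPORT_PATTERN.search(line)
        if match:
            by_memory.setdefault(int(match["memory"]), []).append(float(match["init"]))
    return {
        memory: {
            "count": len(values),
            "median_ms": statistics.median(values),
            "max_ms": max(values),
        }
        for memory, values in by_memory.items()
    }


sample_logs = [
    "REPORT RequestId: a1\tDuration: 12.00 ms\tBilled Duration: 12 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 60 MB\tInit Duration: 300.00 ms",
    "REPORT RequestId: a2\tDuration: 10.00 ms\tBilled Duration: 10 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 60 MB",
    "REPORT RequestId: a3\tDuration: 11.00 ms\tBilled Duration: 11 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 61 MB\tInit Duration: 200.00 ms",
    "REPORT RequestId: b1\tDuration: 8.00 ms\tBilled Duration: 8 ms\t"
    "Memory Size: 512 MB\tMax Memory Used: 62 MB\tInit Duration: 150.00 ms",
]
print(cold_start_stats(sample_logs))
```

Warm invocations (the second sample line) are skipped because they carry no `Init Duration`, which is why enough cold starts must be collected per memory setting before the medians are comparable.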

Cost Optimization and Memory Allocation

The allocation of memory to AWS Lambda functions is a pivotal factor in determining the overall cost of serverless applications. Understanding the interplay between memory, performance, and cost is crucial for optimizing Lambda function deployments and minimizing operational expenses. This section delves into the economic implications of memory configuration, providing a framework for balancing performance requirements with cost-effectiveness.

Memory Allocation’s Influence on Lambda Function Costs

Memory allocation directly impacts the cost of running Lambda functions through two primary mechanisms: duration and resource consumption. Lambda functions are priced based on the duration of execution (measured in milliseconds) and the amount of memory allocated. Increasing the memory available to a function can potentially reduce execution time, thereby lowering costs, but it also increases the cost per millisecond.

The optimal memory setting, therefore, represents a balance between these opposing forces.

- Pricing Model: AWS Lambda’s pricing model is based on the following factors:

- Request Count: The number of invocations of the function.

- Duration: The time your code runs, measured in milliseconds.

- Memory Allocated: The amount of memory provisioned for the function.

- Cost Calculation: The total cost is the sum of the costs for requests and duration. The duration cost is calculated by multiplying the duration (in milliseconds) by the memory-per-millisecond price. The memory-per-millisecond price varies depending on the amount of memory allocated and the region.

- Impact of Memory:

- Higher Memory: Can lead to faster execution times (potentially due to more CPU power allocated in proportion to memory), but increases the per-millisecond cost.

- Lower Memory: May result in slower execution times, but reduces the per-millisecond cost.
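The pricing model above can be turned into a small calculator. This is a sketch under stated assumptions: the default prices ($0.0000166667 per GB-second and $0.20 per million requests) are commonly published x86 rates and are used here only as placeholders, since actual prices vary by region and architecture; the function names are hypothetical.

```python
def invocation_cost(
    memory_mb,
    duration_ms,
    price_per_gb_second=0.0000166667,  # assumed rate; check your region's pricing
    price_per_request=0.0000002,       # assumed rate: $0.20 per 1M requests
):
    """Estimate the cost of one invocation from configured memory and duration."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000)
    return gb_seconds * price_per_gb_second + price_per_request


def monthly_cost(memory_mb, avg_duration_ms, invocations, **prices):
    """Estimate the monthly cost for a given number of invocations."""
    return invocation_cost(memory_mb, avg_duration_ms, **prices) * invocations


print(f"{monthly_cost(512, 200, 1_000_000):.2f}")
```

Because the duration charge is the product of memory and time, doubling memory costs the same only if it halves the duration; anything less than that is a net cost increase, anything more is a saving.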

Cost Comparison of Different Memory Configurations

Comparing the costs associated with different memory configurations involves analyzing execution times and associated pricing. The optimal memory configuration is determined by finding the setting that minimizes the total cost for a given workload.

- Scenario: Image Processing Function

Consider a Lambda function designed to process images. The function takes an image as input, resizes it, and saves it to an S3 bucket. We’ll compare three memory configurations: 128MB, 512MB, and 1024MB.

- Data Points (illustrative, assuming a duration price of $0.0000166667 per GB-second and ignoring the per-request charge):

- 128MB: Average execution time: 1.2 seconds (0.15 GB-seconds), Cost per invocation: about $0.0000025.

- 512MB: Average execution time: 0.6 seconds (0.30 GB-seconds), Cost per invocation: about $0.0000050.

- 1024MB: Average execution time: 0.4 seconds (0.40 GB-seconds), Cost per invocation: about $0.0000067.

- Cost Analysis:

The total cost per invocation is the execution time multiplied by the per-millisecond price for the configured memory, plus the request charge (which is the same for every memory setting). The optimal memory configuration can be determined by running the function with each memory setting and observing the cost and duration of each execution.

For instance, if a function is invoked 1 million times per month:

- 128MB: Total Cost: about $2.50.

- 512MB: Total Cost: about $5.00.

- 1024MB: Total Cost: about $6.67.

In this example, 128MB is the most cost-effective option, assuming the image processing is not significantly impacted by the slower execution time. However, the optimal setting can change based on factors like the input image size, the complexity of the image processing algorithm, and the rate of invocations.

Designing a Method for Balancing Performance and Cost

Balancing performance and cost when determining memory size requires a methodical approach involving testing, monitoring, and iterative refinement. This process ensures that the Lambda function operates within acceptable performance bounds while minimizing operational expenses.

- Step 1: Performance Baseline:

Establish a performance baseline by testing the function with a range of memory configurations. Measure key metrics like execution time, error rates, and cold start times. This baseline helps identify the initial memory range to consider.

- Step 2: Identify Bottlenecks:

Analyze the function’s code to identify potential bottlenecks, such as inefficient algorithms or resource-intensive operations. Code profiling tools can assist in pinpointing areas where memory optimization is possible. Optimizing the code itself may reduce memory requirements.

- Step 3: Iterative Testing:

Conduct iterative testing with different memory settings, focusing on the execution time and cost per invocation. Start with lower memory settings and gradually increase the allocation, observing how performance and cost change. Implement the following formula to calculate the total cost for each memory setting:

Cost = (Requests × RequestCost) + (Duration × MemoryPerMillisecondCost)

- Step 4: Monitoring and Optimization:

Monitor the function’s performance in a production environment. Regularly review metrics such as execution time, error rates, and cost. Make adjustments to the memory allocation as needed based on the observed performance and cost data. Continuously optimize the function’s code and dependencies to reduce memory consumption.

- Step 5: Cost-Performance Trade-off Analysis:

Analyze the trade-offs between performance and cost. Determine the memory configuration that provides the best balance between acceptable performance and minimal operational costs. Consider the frequency of invocations, the sensitivity to execution time, and the cost impact. Evaluate the cost savings compared to performance improvements. For example, a slight increase in execution time may be acceptable if it results in significant cost savings.

Best Practices for Lambda Function Memory Sizing

Optimizing the memory allocation for AWS Lambda functions is crucial for balancing performance, cost, and efficiency. The optimal memory size directly influences function execution time, cold start times, and ultimately, the total cost of operation. This section consolidates best practices to guide the process of finding the right memory configuration for your Lambda functions.

Summary of Best Practices

A systematic approach is necessary for determining the ideal memory size for a Lambda function. This involves a cycle of monitoring, analysis, adjustment, and re-evaluation.

- Start with a Reasonable Baseline: Begin with a memory allocation comfortably above the expected requirements, perhaps double the initially estimated value. This allows for initial experimentation without the risk of immediate memory exhaustion errors.

- Monitor Function Metrics: Continuously track key metrics such as function duration, memory utilization, and errors. AWS CloudWatch provides essential data for this purpose. Identify any memory-related issues, like excessive durations or Out-of-Memory (OOM) errors.

- Analyze Memory Consumption Patterns: Profile the code to identify memory-intensive operations. Pinpoint the specific areas of the code that consume the most memory, such as data processing, object creation, or large library imports.

- Iteratively Adjust Memory Allocation: Based on the monitoring and analysis, adjust the memory setting. Increase memory if the function frequently encounters performance bottlenecks, or decrease it if utilization is consistently low.

- Test Thoroughly: After each memory adjustment, thoroughly test the function with representative workloads. This ensures that the changes do not negatively impact performance or introduce new issues.

- Consider Cold Start Impact: Be mindful of the relationship between memory and cold start times. Higher memory allocations usually bring more CPU and can shorten initialization, although the difference might be negligible, so measure cold starts at each setting.

- Optimize Code for Memory Efficiency: Proactively optimize the code to reduce memory consumption. This may involve techniques such as lazy loading, efficient data structures, and code profiling.

- Leverage AWS Tools: Utilize AWS tools like AWS X-Ray for tracing and profiling, and CloudWatch for monitoring. These tools provide valuable insights into function behavior and performance.

Checklist for Optimizing Lambda Function Memory Allocation

Implementing a methodical checklist can help ensure a structured approach to memory optimization.

- Define Requirements: Clearly outline the function’s purpose, expected workload, and performance targets.

- Estimate Initial Memory: Based on the requirements, estimate an initial memory allocation. Consider factors like data size, library dependencies, and processing complexity.

- Deploy and Monitor: Deploy the function with the initial memory setting and continuously monitor performance metrics, including duration, memory utilization, and error rates.

- Analyze Metrics: Review the CloudWatch metrics to identify any performance bottlenecks or memory-related issues.

- Profile Code: Use profiling tools to identify memory-intensive code sections.

- Optimize Code: Implement code optimizations to reduce memory consumption, such as using efficient data structures and lazy loading techniques.

- Adjust Memory Allocation: Based on the analysis, adjust the memory setting incrementally.

- Test with Representative Workloads: Thoroughly test the function with representative workloads to ensure that the changes do not negatively impact performance.

- Iterate and Refine: Repeat the process of monitoring, analysis, adjustment, and testing until the function achieves optimal performance and cost efficiency.

- Document Changes: Keep a record of all memory allocation changes and their impact on performance and cost.

Recommended Workflow for Memory Tuning

A well-defined workflow streamlines the process of memory tuning, promoting efficiency and accuracy.

Phase 1: Baseline and Monitoring

- Deploy the Lambda function with an initial memory setting (e.g., double the estimated memory requirement).

- Configure CloudWatch to monitor function duration, memory utilization, and error rates.

- Simulate realistic workloads to generate representative data.

Phase 2: Analysis and Profiling

- Analyze CloudWatch metrics to identify performance bottlenecks and memory-related issues.

- Use profiling tools (e.g., AWS X-Ray, profilers within your programming language) to pinpoint memory-intensive code sections.

- Identify opportunities for code optimization.

Phase 3: Optimization and Adjustment

- Implement code optimizations to reduce memory consumption (e.g., lazy loading, efficient data structures).

- Adjust the Lambda function’s memory setting based on the analysis. Start with small increments (e.g., 64MB).

- Test the function with representative workloads after each adjustment.

Phase 4: Iteration and Validation

- Repeat phases 2 and 3 until optimal performance and cost efficiency are achieved.

- Validate the final configuration under various load conditions.

- Document the final memory setting and its impact on performance and cost.
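The adjustment step in Phase 3 can be supported by a simple rule of thumb that converts observed peak usage into a candidate setting. This is a sketch, not an AWS recommendation: the 20% headroom and the function name are assumptions, the 64MB step follows the increment suggested above, and the bounds reflect Lambda's configurable range of 128 MB to 10,240 MB.

```python
import math

LAMBDA_MIN_MB = 128
LAMBDA_MAX_MB = 10240


def recommend_memory_mb(max_memory_used_mb, headroom=0.2, step_mb=64):
    """Suggest a memory setting: observed peak plus headroom, rounded up to a step."""
    target = max_memory_used_mb * (1 + headroom)
    rounded = math.ceil(target / step_mb) * step_mb
    # Clamp to the range Lambda accepts.
    return max(LAMBDA_MIN_MB, min(LAMBDA_MAX_MB, rounded))


print(recommend_memory_mb(300))  # 384
```

This only addresses the memory floor. Because CPU scales with memory, a CPU-bound function may still run faster and cheaper at a higher setting, so the suggestion should be checked against measured duration and cost.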

Final Wrap-Up

In conclusion, determining the optimal memory size for a Lambda function is an iterative process that demands careful analysis, experimentation, and a deep understanding of your function’s behavior. By applying the principles outlined in this guide, from initial assessment and monitoring to code optimization and cost analysis, you can effectively balance performance, cost, and scalability. Mastering these techniques is essential for unlocking the full potential of serverless computing and building efficient, responsive applications.

Top FAQs

How does memory affect the execution time of a Lambda function?

Higher memory allocation typically provides more CPU power, leading to faster execution times. The relationship isn’t always linear, but generally, more memory enables quicker processing, especially for CPU-bound tasks.

What are the consequences of allocating too little memory to a Lambda function?

Insufficient memory can result in slow execution times, function timeouts, and errors. The function might struggle to complete its tasks within the allocated time, leading to poor performance and potential failures.

How can I monitor the memory usage of my Lambda functions?

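Use cloud monitoring services (e.g., AWS CloudWatch) to track “Duration” and the “Max Memory Used” value that Lambda records in each invocation’s REPORT log line. Set up alarms (for example, through a log metric filter or Lambda Insights) to alert you if memory usage exceeds predefined thresholds, indicating a need for optimization.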

Is it possible to over-allocate memory to a Lambda function?

Yes, over-allocating memory can lead to higher costs without a corresponding performance benefit. While more memory might offer a small improvement, the marginal gains diminish, and the increased cost becomes unjustified.

How does code size impact memory requirements?

Larger codebases typically require more memory, especially during the function initialization phase. Larger dependencies and complex code structures increase the memory footprint, potentially affecting cold start times and execution performance.