Event-driven architectures (EDAs) are changing how applications are designed in the cloud, enabling dynamic and responsive systems. This guide explains how to design robust EDAs, with practical insights into key components, cloud platforms, and best practices. We will explore event production, consumption, routing, storage, and security within cloud environments.

From foundational concepts to advanced implementation strategies, this guide provides a practical roadmap for creating scalable, reliable, and secure event-driven applications. We’ll delve into specific cloud platform considerations, highlighting the strengths and weaknesses of different providers, and showcasing the integration of essential cloud services.

Introduction to Event-Driven Architectures

Event-driven architectures (EDAs) are becoming increasingly prevalent in cloud-based systems because they offer flexibility, scalability, and responsiveness to dynamic data streams. They represent a shift away from the traditional request-response model: instead of one component calling another and waiting for an answer, components emit events that trigger actions and drive application logic. Communication is asynchronous and components are loosely coupled, so each can be scaled independently and new services can be integrated more easily.

They are particularly well-suited for applications dealing with large volumes of data and complex workflows, common in modern cloud environments. EDAs offer significant advantages in handling high-throughput data streams and enabling rapid response to changes in data, making them an attractive choice for a wide range of cloud-based applications.

Definition of Event-Driven Architectures

An event-driven architecture (EDA) is a software design pattern where applications respond to events. These events signal the occurrence of something significant, triggering specific actions or workflows. This contrasts with traditional request-response models where an explicit request initiates a specific response. EDAs promote asynchronous communication and loose coupling, enabling scalability and resilience.

Core Principles and Characteristics of EDAs

EDAs are characterized by several key principles. First, they rely on asynchronous communication, meaning that components do not wait for a response before proceeding. This asynchronous nature allows for improved performance and responsiveness. Second, EDAs emphasize decoupling. Components are independent and communicate through events, rather than direct dependencies.

This independence promotes flexibility and maintainability. Third, EDAs leverage event streams to orchestrate actions. A stream of events, often in real-time, drives the system’s logic. This promotes dynamic responses to data changes.

Benefits and Advantages of Using EDAs in Cloud Environments

Cloud environments are well-suited for EDAs due to their inherent scalability and elasticity. EDAs allow for handling large volumes of data efficiently. Scalability is enhanced because components can be scaled independently in response to varying event rates. EDAs also promote resilience, as failures in one component do not necessarily impact the entire system. This robustness is crucial in cloud environments where services can be temporarily unavailable.

Key Components and Elements of a Typical EDA

A typical EDA comprises several key components. These include:

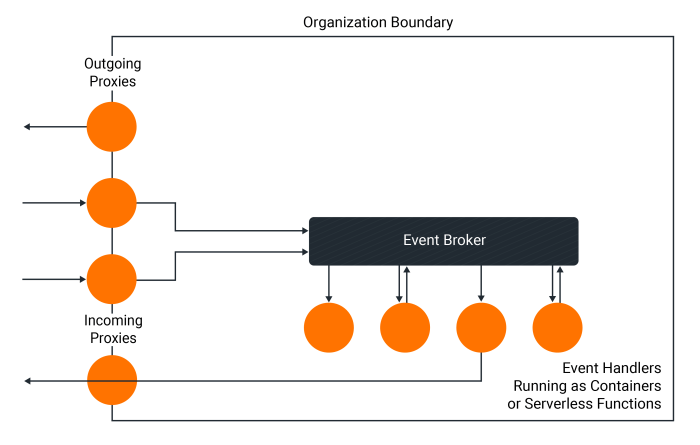

- Event Producers: These components generate events in response to internal or external stimuli and publish them to an event bus. Examples include databases, microservices, and external systems.

- Event Bus (or Message Broker): This intermediary receives events from producers and delivers them to consumers. It handles event routing and, depending on how it is configured, provides delivery guarantees (such as at-least-once delivery) and failure handling. Brokers such as Apache Kafka or RabbitMQ provide the durable, reliable messaging that EDAs depend on.

- Event Consumers: These components subscribe to specific events and react to them by performing actions or triggering other workflows. Consumers can include microservices, batch processes, or data processing pipelines.
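The roles above can be illustrated with a deliberately simplified, in-process sketch. `InMemoryEventBus` is a hypothetical stand-in for a real broker such as Kafka or RabbitMQ: it is synchronous and has no persistence or delivery guarantees, so it only shows how producers and consumers are decoupled through topic names.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class InMemoryEventBus:
    """Toy event bus: routes each published event to every handler subscribed to its topic."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Dict[str, Any]) -> int:
        # The producer only knows the topic name, never the consumers behind it.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)  # number of consumers that received the event


# Usage: one producer, two independent consumers on the same topic.
bus = InMemoryEventBus()
inventory_log: List[str] = []
email_log: List[str] = []
bus.subscribe("orders", lambda e: inventory_log.append(e["order_id"]))
bus.subscribe("orders", lambda e: email_log.append(e["customer"]))
delivered = bus.publish("orders", {"order_id": "o-1", "customer": "ada@example.com"})
```

Adding a third consumer requires no change to the producer, which is the decoupling the list above describes.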

Illustrative Diagram of an EDA System

The following diagram illustrates a simplified event-driven architecture:

```
+------------------+     +-------------------+     +------------------+
| Event Producer 1 | --> | Event Bus (Kafka) | --> | Event Consumer 1 |
+------------------+     +-------------------+     +------------------+
                                   |
                                   +-------------> +------------------+
                                   |               | Event Consumer 2 |
                                   |               +------------------+
                                   +-------------> +------------------+
                                                   | Event Consumer 3 |
                                                   +------------------+
+------------------+     +-------------------+
| Event Producer 2 | --> | Event Bus (Kafka) | --> ...
+------------------+     +-------------------+
```

This diagram depicts the flow of events from producers to the event bus and then to the various consumers. The event bus, in this example, is Kafka, a popular distributed streaming platform. Each consumer subscribes to specific events, allowing for highly customized responses to data changes.

Cloud Platforms for Event Handling

Cloud platforms have become indispensable for building robust event-driven architectures (EDAs). They provide scalable, reliable infrastructure and specialized services for handling events efficiently. This section describes popular cloud platforms, their event streaming capabilities, and their strengths and weaknesses in the context of EDAs. Understanding these platforms is crucial for designing and deploying effective event-driven applications. Event streaming services, in particular, are a key component of EDAs.

These services handle the ingestion, processing, and storage of events in a highly scalable and reliable manner. Cloud providers offer different event streaming services, each with its own characteristics and functionalities. The choice of platform depends on the specific needs of the application, such as throughput requirements, data volume, and the desired level of fault tolerance.

Popular Cloud Platforms for EDAs

Various cloud providers offer services for building and deploying EDAs. Key platforms include Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Each platform provides specialized event streaming services tailored to diverse application needs.

Event Streaming Services Offered

- Amazon Kinesis: AWS Kinesis provides a suite of services for building real-time data pipelines. Kinesis Data Streams is a managed service for ingesting and storing massive amounts of data streams, while Kinesis Data Firehose facilitates data delivery to other destinations. Kinesis Data Analytics allows for real-time data analysis on top of Kinesis streams.

- Azure Event Hubs: Azure Event Hubs is a highly scalable and reliable event ingestion service. It enables applications to receive and process events from various sources, including IoT devices and microservices. Event Hubs supports diverse data formats and offers high throughput and low latency. It is well-integrated with other Azure services, facilitating a seamless platform for event-driven architectures.

- Google Cloud Pub/Sub: Google Cloud Pub/Sub is a fully managed message streaming service. It enables applications to publish and subscribe to events in a scalable and resilient manner. Pub/Sub supports various data formats and provides features like message ordering, dead-letter queues, and acknowledgment mechanisms.

Comparison of Cloud Providers for EDAs

The following table compares these platforms across several dimensions relevant to EDAs.

| Feature | AWS Kinesis | Azure Event Hubs | Google Cloud Pub/Sub |
|---|---|---|---|
| Scalability | Excellent, supports massive data streams | Excellent, handles high volume events | Excellent, scales to meet diverse needs |
| Fault Tolerance | High, with redundancy and backups | High, with distributed architecture | High, with message persistence and redundancy |
| Integration | Well-integrated with other AWS services | Well-integrated with other Azure services | Well-integrated with other Google Cloud services |
| Cost | Cost varies based on usage and volume of data processed | Cost varies based on usage and volume of data processed | Cost varies based on usage and volume of data processed |

Integration with Other Cloud Services

Event-driven architectures often integrate with other cloud services for comprehensive functionality. Message queues such as Amazon Simple Queue Service (SQS) play a crucial role in decoupling components and handling asynchronous communication, and object storage services such as Amazon S3 can be used to store event data.

Key Features and Functionalities

Event streaming services generally provide features like message ordering, acknowledgment mechanisms, and dead-letter queues for reliable event handling. Data persistence and fault tolerance are key considerations, especially for applications that need to process large volumes of events. Furthermore, these services often integrate with other cloud tools, offering a comprehensive platform for building and deploying event-driven architectures.
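To make the interplay between acknowledgments and dead-letter queues concrete, here is a minimal in-memory sketch. `AckQueue`, `max_deliveries`, and `parse_handler` are hypothetical names; managed services configure the same idea declaratively (for example, the maximum receive count in an Amazon SQS redrive policy). Note that this toy redelivers failed messages at the back of the queue, which does not preserve ordering.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, List


@dataclass
class Message:
    body: str
    deliveries: int = 0


@dataclass
class AckQueue:
    """Toy queue: a message is removed only when the handler succeeds (an implicit ack)."""
    max_deliveries: int = 3
    pending: Deque[Message] = field(default_factory=deque)
    dead_letters: List[Message] = field(default_factory=list)

    def send(self, body: str) -> None:
        self.pending.append(Message(body))

    def drain(self, handler: Callable[[str], None]) -> List[str]:
        processed: List[str] = []
        while self.pending:
            msg = self.pending.popleft()
            msg.deliveries += 1
            try:
                handler(msg.body)
            except Exception:
                # Negative acknowledgment: redeliver later, or park the message in the DLQ.
                if msg.deliveries >= self.max_deliveries:
                    self.dead_letters.append(msg)
                else:
                    self.pending.append(msg)
                continue
            processed.append(msg.body)  # acknowledged
        return processed


def parse_handler(body: str) -> None:
    if body == "poison":
        raise ValueError("cannot parse message")


q = AckQueue(max_deliveries=3)
for body in ["a", "poison", "b"]:
    q.send(body)
done = q.drain(parse_handler)
```

The "poison" message is attempted three times and then moved aside, so it cannot block the healthy messages behind it.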

Designing Event Producers and Consumers

Event-driven architectures (EDAs) rely heavily on the reliable exchange of events between producers and consumers. A well-designed producer ensures that events are accurately captured and formatted for consumption, while a robust consumer processes and reacts to them correctly. This section covers the design of both components within a cloud-based EDA. Event producers act as the source of data, transforming it into a standardized event format that the rest of the system can consume.

Consumers, on the other hand, are responsible for processing these events, triggering actions based on the event content. Effective design of both components is paramount for the overall reliability and efficiency of an EDA.

Event Producer Design

Event producers are crucial for the proper functioning of an EDA. They transform data from various sources into standardized events, making them consumable by the event bus or message broker. Careful design ensures data integrity and facilitates scalability. Different data sources require tailored producer designs.

- Database Events: Producers designed for database events typically use triggers or change data capture (CDC) mechanisms. These mechanisms capture modifications to database tables and transform them into events. For instance, a trigger might fire an event whenever a new user account is created in a user database. The event would contain details about the new user.

- API Events: Producers for API events might employ message queues or event streaming services. For example, when a user performs an action via an API, the producer creates an event encapsulating the API request details, such as the endpoint, method, and payload. This event is then published to the event bus.

- File System Events: Producers for file system events might monitor directories or specific files. When a file is created, updated, or deleted, the producer converts this change into an event. This might be used in tasks such as image processing or file transfer applications.
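Whatever the source, a producer typically wraps raw data in a consistent envelope before publishing. The sketch below assumes an envelope whose field names are loosely modeled on the CloudEvents attributes (`id`, `type`, `source`, `time`, `data`); `make_event` and the CDC-style adapter `row_change_to_event` are hypothetical helpers, not part of any specific CDC tool.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Any, Dict


def make_event(event_type: str, source: str, data: Dict[str, Any]) -> Dict[str, Any]:
    """Wrap raw data in a standard envelope (field names loosely modeled on CloudEvents)."""
    return {
        "id": str(uuid.uuid4()),  # unique ID lets consumers de-duplicate redeliveries
        "type": event_type,
        "source": source,
        "time": datetime.now(timezone.utc).isoformat(),
        "data": data,
    }


def row_change_to_event(table: str, op: str, row: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical CDC adapter: turn one database row change into an event."""
    op_names = {"INSERT": "created", "UPDATE": "updated", "DELETE": "deleted"}
    return make_event(f"{table}.{op_names[op]}", source=f"db/{table}", data=row)


# Usage: a new row in a `users` table becomes a `users.created` event.
event = row_change_to_event("users", "INSERT", {"user_id": 42, "email": "ada@example.com"})
payload = json.dumps(event)  # serialized form that would be published to the bus
```

The same `make_event` helper could serve API and file system producers, so consumers see one format regardless of origin.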

Event Consumer Design

Event consumers are the recipients of events, acting upon the information contained within. Effective consumer design is vital for timely and accurate responses to events. The design must account for potential event volume and ensure responsiveness.

- Processing Logic: Consumers must include the necessary logic to process events. This logic varies depending on the event type and the desired outcome. For instance, a consumer handling a “new order” event might update inventory levels and send a confirmation email.

- Error Handling: Consumers must incorporate robust error handling to manage failures during processing. This could include retry mechanisms, dead-letter queues, or other techniques for handling exceptions. This prevents cascading failures and ensures data integrity.

- Scalability: Consumers should be designed to scale horizontally to accommodate fluctuating event volumes. Cloud-native technologies such as message brokers and distributed processing frameworks are often used for this purpose.
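The "new order" consumer described above might look roughly like the following sketch. The event shape, the `HANDLERS` dispatch table, and the in-memory `inventory`, `outbox`, and `failed` structures are all hypothetical stand-ins for a real inventory service, email service, and dead-letter queue.

```python
from typing import Any, Callable, Dict, List

inventory: Dict[str, int] = {"sku-1": 5}
outbox: List[str] = []             # stands in for an email service
failed: List[Dict[str, Any]] = []  # stands in for a dead-letter queue


def on_order_created(event: Dict[str, Any]) -> None:
    data = event["data"]
    sku, qty = data["sku"], data["quantity"]
    if inventory.get(sku, 0) < qty:
        raise ValueError(f"insufficient stock for {sku}")
    inventory[sku] -= qty
    outbox.append(f"Order {data['order_id']} confirmed")


# Processing logic is selected by event type.
HANDLERS: Dict[str, Callable[[Dict[str, Any]], None]] = {
    "order.created": on_order_created,
}


def consume(event: Dict[str, Any]) -> bool:
    handler = HANDLERS.get(event["type"])
    if handler is None:
        return False  # not an event type this consumer handles
    try:
        handler(event)
        return True
    except (KeyError, ValueError) as exc:
        # Isolate the failure instead of crashing the consumer.
        failed.append({"event": event, "error": str(exc)})
        return False


consume({"type": "order.created", "data": {"order_id": "o-1", "sku": "sku-1", "quantity": 2}})
consume({"type": "order.created", "data": {"order_id": "o-2", "sku": "sku-1", "quantity": 9}})
```

Because the consumer holds no per-request state beyond the shared stores, several copies of it could run side by side to scale horizontally.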

Producer and Consumer Patterns

The choice of producer and consumer patterns significantly impacts the design and performance of an EDA. Different patterns cater to diverse use cases.

| Pattern | Producer | Consumer | Description |
|---|---|---|---|
| Publish/Subscribe | Publishes events to a topic | Subscribes to a topic to receive events | Suitable for scenarios with multiple consumers interested in the same event type. |
| Request/Reply | Sends a request and waits for a response | Receives the request and sends a response | Useful for synchronous interactions where a producer needs a specific response from a consumer. |
| Message Queue | Sends messages to a queue | Retrieves messages from the queue | Suitable for decoupling producers and consumers and handling asynchronous interactions. |
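Request/Reply is often layered on top of two queues by attaching a correlation ID to each request. The following sketch uses Python's `queue.Queue` as a hypothetical stand-in for broker queues; the `reply_to` field and the function names are illustrative assumptions rather than any particular broker's API.

```python
import queue
import uuid
from typing import Any, Dict

# Hypothetical in-process stand-ins for a request queue and a reply queue.
requests: "queue.Queue[Dict[str, Any]]" = queue.Queue()
replies: "queue.Queue[Dict[str, Any]]" = queue.Queue()


def send_request(payload: Dict[str, Any]) -> str:
    """Requester side: tag the request with a correlation ID and say where to reply."""
    correlation_id = str(uuid.uuid4())
    requests.put({"correlation_id": correlation_id, "reply_to": "replies", "payload": payload})
    return correlation_id


def serve_one() -> None:
    """Responder side: handle one request and copy its correlation ID into the reply."""
    req = requests.get()
    total = sum(req["payload"]["amounts"])
    replies.put({"correlation_id": req["correlation_id"], "result": {"total": total}})


def wait_for_reply(correlation_id: str, timeout: float = 1.0) -> Dict[str, Any]:
    """Block until the matching reply arrives; raises queue.Empty if none arrives in time."""
    while True:
        reply = replies.get(timeout=timeout)
        if reply["correlation_id"] == correlation_id:
            return reply["result"]
        # This sketch drops replies meant for other requests; a real client would hand
        # them to whichever caller is waiting on that correlation ID.


cid = send_request({"amounts": [10, 20, 12]})
serve_one()  # in a real system this runs in a separate service
result = wait_for_reply(cid)
```

The timeout matters: unlike fire-and-forget publishing, the requester is blocked until the reply arrives, which reintroduces the coupling the other two patterns avoid.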

Event Routing and Processing

Event routing and processing are critical components of a robust event-driven architecture. Efficient routing ensures that events are delivered to the appropriate consumers, while effective processing mechanisms ensure timely and accurate handling of those events. This section explores various strategies for routing and processing events, highlighting their strengths and use cases. In cloud-based architectures, event routing allows diverse event streams to be handled in a targeted way, which optimizes resource utilization and makes it easier to react differently to different event types.

A well-designed event processing strategy is essential for maintaining system responsiveness and preventing bottlenecks.

Event Routing Strategies

Event routing strategies determine how events are directed to specific consumers. Effective strategies are essential for scaling and maintainability in event-driven systems. Choosing the right routing strategy depends on the specific requirements of the application and the characteristics of the event stream.

- Fan-out Routing: This strategy distributes a single event to multiple consumers. It suits scenarios where several services need to react to the same event, such as refreshing several views after a user profile changes. A common use case is broadcasting a new order to the inventory, fulfillment, and billing systems.

- Fan-in Routing: This strategy aggregates events from multiple producers into a single consumer. This is helpful when multiple sources generate events related to a single entity, such as different parts of a user order workflow.

- Directed Routing: Events are routed directly to a specific consumer based on predefined criteria, such as event type or topic. This approach is beneficial when events need to be handled by a dedicated consumer. For instance, a specific service is responsible for processing orders with a particular status.

- Filter-based Routing: Events are routed based on predefined filters or criteria. Consumers are only notified about events that match specific conditions. This approach is particularly useful when managing large volumes of events, as it allows for selective processing. For example, a marketing team might only be interested in orders exceeding a certain amount.

- Load Balancing Routing: Events are distributed across multiple consumers to prevent overloading any single consumer. This strategy is vital in high-volume event scenarios, ensuring even distribution of processing tasks across available resources.
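Fan-out, directed, and filter-based routing can all be expressed as subscriptions with predicates: every subscription whose predicate matches receives the event. This is a minimal sketch under that assumption; `Router`, `Subscription`, and the order-event fields are hypothetical. Managed services provide similar filtering (for example, subscription filters on topics), but with their own configuration syntax.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


@dataclass
class Subscription:
    name: str
    predicate: Callable[[Event], bool]


class Router:
    def __init__(self) -> None:
        self.subscriptions: List[Subscription] = []

    def add(self, name: str, predicate: Callable[[Event], bool]) -> None:
        self.subscriptions.append(Subscription(name, predicate))

    def route(self, event: Event) -> List[str]:
        # Fan-out: every subscription whose filter matches receives the event.
        return [s.name for s in self.subscriptions if s.predicate(event)]


router = Router()
router.add("inventory", lambda e: e["type"] == "order.created")
router.add("billing", lambda e: e["type"] == "order.created")
# Filter-based: marketing only wants large orders.
router.add("marketing", lambda e: e["type"] == "order.created" and e["amount"] > 500)
# Directed: only one consumer handles cancellations.
router.add("support", lambda e: e["type"] == "order.cancelled")

small_order = router.route({"type": "order.created", "amount": 120})
large_order = router.route({"type": "order.created", "amount": 900})
cancellation = router.route({"type": "order.cancelled"})
```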

Handling Events Based on Criteria

Determining the appropriate handling strategy for events based on various criteria is crucial for effective event processing. These criteria can include event type, priority, timestamp, or other attributes.

- Event Type-based Processing: Different event types are handled by specialized consumers. This approach is crucial for managing diverse event streams efficiently. For example, different services might process order creation events, order status update events, and order cancellation events.

- Priority-based Handling: Events with higher priority are processed before events with lower priority. This approach is essential for handling time-sensitive events, such as urgent customer support requests or critical system alerts.

- Timestamp-based Filtering: Events are processed based on their timestamps, enabling the analysis of event patterns over time. This is often used to detect anomalies or trends, for instance by analyzing customer activity within specific time windows.

- Custom Attribute-based Routing: Events can be routed based on custom attributes, allowing for highly specific filtering and routing. This approach enables customized processing of various events. For example, routing events based on product type or customer segment.
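Priority-based handling can be sketched with a binary heap. In this hypothetical `PriorityEventQueue`, a lower number means higher priority, and a sequence counter keeps events of equal priority in arrival order (it also prevents Python from trying to compare the event dictionaries themselves).

```python
import heapq
import itertools
from typing import Any, Dict, List, Tuple


class PriorityEventQueue:
    """Lower number = higher priority; ties are served in arrival order."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, Dict[str, Any]]] = []
        self._seq = itertools.count()  # tie-breaker: FIFO within a priority level

    def push(self, event: Dict[str, Any], priority: int) -> None:
        heapq.heappush(self._heap, (priority, next(self._seq), event))

    def pop(self) -> Dict[str, Any]:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


pq = PriorityEventQueue()
pq.push({"type": "report.requested"}, priority=5)
pq.push({"type": "alert.critical"}, priority=0)
pq.push({"type": "support.urgent"}, priority=1)
pq.push({"type": "report.requested"}, priority=5)
order = [pq.pop()["type"] for _ in range(len(pq))]
```

A strict priority queue can starve low-priority events under sustained load, so real systems often add aging or reserve capacity for lower tiers.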

Event Routing Mechanisms in the Cloud

Several cloud platforms provide robust event routing mechanisms. These mechanisms allow for efficient event delivery and processing.

- Amazon SQS (Simple Queue Service): SQS acts as a message queue, enabling asynchronous communication between event producers and consumers. It’s particularly well-suited for decoupling services and handling large volumes of events.

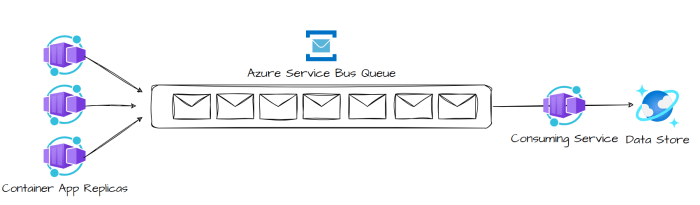

- Azure Service Bus: Similar to SQS, Azure Service Bus provides messaging services that support different routing patterns, including fan-out and topic-based subscriptions.

- Google Cloud Pub/Sub: Pub/Sub is a fully managed real-time messaging service that supports publishing and subscribing to events. It provides robust scaling capabilities and efficient event delivery.

Designing Efficient Event Processing Mechanisms

Effective event processing mechanisms are vital for ensuring the smooth operation of an event-driven architecture. These mechanisms are designed to handle the throughput and volume of events effectively.

- Asynchronous Processing: Processing events asynchronously, using message queues, enables decoupling of services and prevents blocking. This is crucial for handling high volumes of events and maintaining responsiveness.

- Batch Processing: Batching events together for processing can improve efficiency, especially for large volumes of similar events. This can reduce the number of individual processing calls.

- Fault Tolerance: Implementing fault tolerance mechanisms, such as retries and message acknowledgment, is critical for handling potential failures during event processing.

- Monitoring and Logging: Implementing robust monitoring and logging mechanisms for event processing ensures that issues can be identified and resolved promptly.
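Batch processing, one of the mechanisms above, can be sketched as a generator that groups a stream into fixed-size batches. `batched` and `write_batch` are hypothetical names here (Python 3.12 ships a similar `itertools.batched`, which yields tuples). A production batcher would usually also flush on a timer so that a slow trickle of events is not held indefinitely; this sketch only flushes by size and at the end of the stream.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(events: Iterable[T], max_size: int) -> Iterator[List[T]]:
    """Group a stream of events into lists of at most max_size items."""
    if max_size < 1:
        raise ValueError("max_size must be >= 1")
    batch: List[T] = []
    for event in events:
        batch.append(event)
        if len(batch) == max_size:
            yield batch
            batch = []
    if batch:  # flush the final partial batch
        yield batch


calls = 0


def write_batch(batch: List[int]) -> None:
    """Hypothetical sink: one call per batch instead of one call per event."""
    global calls
    calls += 1


for b in batched(range(10), max_size=4):
    write_batch(b)
```

Ten events become three calls (4 + 4 + 2) instead of ten, which is where the efficiency gain comes from.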

Example of Event Routing Strategies

| Routing Strategy | Use Case | Mechanism |
|---|---|---|
| Fan-out | Broadcasting an order update to multiple systems | Publish/Subscribe, message queues |
| Fan-in | Aggregating events from different services for a single entity | Message queues, aggregation services |
| Directed | Sending an event to a specific consumer based on criteria | Routing rules, message brokers |
| Filter-based | Processing events only if they meet specific criteria | Filters, conditional routing |

Event Storage and Persistence

Event storage is a critical component of event-driven architectures (EDAs). It ensures the reliable, persistent storage of events so that they can be retrieved and processed later. This matters for several reasons: handling high volumes of events, supporting complex event processing, and enabling fault tolerance. Effective event storage strategies directly affect the overall performance and reliability of the EDA. Properly designed event storage also makes it possible to retrieve past events efficiently for analysis and troubleshooting.

This section will delve into the strategies for storing and persisting events, the role of databases in EDAs, methods for ensuring data consistency, the importance of event history, and examples of cloud-based event storage solutions.

Strategies for Storing and Persisting Events

Event persistence strategies are chosen based on factors like volume, velocity, variety of events, and the specific needs of the application. Common strategies include using message queues, dedicated event stores, and relational databases. Message queues excel at high-throughput event ingestion, while event stores provide optimized querying and retrieval for historical event data. Relational databases can be employed when existing database infrastructure is already in place, but may not offer the same performance characteristics as specialized event stores.
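A dedicated event store is, at its core, an append-only log per stream (for example, per order). The hypothetical `EventStore` below also rejects an append when the caller's `expected_version` is stale, an optimistic-concurrency check that many event stores offer; it is an in-memory illustration, not a substitute for a durable store.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


class ConcurrencyError(Exception):
    pass


@dataclass
class EventStore:
    """Append-only store: events are never updated in place, only appended per stream."""
    streams: Dict[str, List[Dict[str, Any]]] = field(default_factory=dict)

    def append(self, stream_id: str, events: List[Dict[str, Any]], expected_version: int) -> int:
        stream = self.streams.setdefault(stream_id, [])
        # Optimistic concurrency: reject the write if someone appended since we last read.
        if len(stream) != expected_version:
            raise ConcurrencyError(f"expected version {expected_version}, found {len(stream)}")
        stream.extend(events)
        return len(stream)  # new stream version

    def read(self, stream_id: str, from_version: int = 0) -> List[Dict[str, Any]]:
        return list(self.streams.get(stream_id, [])[from_version:])


store = EventStore()
v1 = store.append("order-1", [{"type": "order.created"}], expected_version=0)
v2 = store.append("order-1", [{"type": "order.paid"}], expected_version=v1)
try:
    # A writer still holding version 1 loses the race and must re-read first.
    store.append("order-1", [{"type": "order.cancelled"}], expected_version=1)
    conflict = False
except ConcurrencyError:
    conflict = True
```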

Role of Databases in EDA

Databases play a vital role in EDA, particularly when combined with message queues or dedicated event stores. They can store metadata related to events, enabling efficient filtering and querying. This includes storing information like event types, timestamps, and potentially associated data. Databases can also serve as a centralized repository for event-related information, facilitating easier access and management of data across different parts of the system.

Ensuring Data Consistency in Event Storage

Maintaining data consistency in event storage is paramount for the integrity of the system. Implementations often employ techniques like transactional storage, where events are grouped into batches and processed as a unit, ensuring that either all events are successfully persisted or none are. This approach minimizes the risk of partial updates or data inconsistencies. Event validation rules can be employed to ensure that only valid events are stored, reducing the likelihood of errors and data corruption.

Atomic operations and eventual consistency mechanisms are also key considerations, depending on the chosen storage mechanism.
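Transactional batch storage combined with validation rules can be sketched with Python's built-in `sqlite3` module, whose connection context manager commits on success and rolls back if an exception escapes. The `events` table layout, `REQUIRED` fields, and function names are hypothetical; the point is that one invalid event prevents the entire batch from being persisted.

```python
import json
import sqlite3
from typing import Any, Dict, List

REQUIRED = {"id", "type", "data"}  # hypothetical validation rule


def validate(event: Dict[str, Any]) -> None:
    missing = REQUIRED - set(event)
    if missing:
        raise ValueError(f"event missing fields: {sorted(missing)}")


def store_batch(conn: sqlite3.Connection, events: List[Dict[str, Any]]) -> None:
    """Persist all events or none: `with conn` commits on success and rolls back on error."""
    with conn:
        for event in events:
            validate(event)
            conn.execute(
                "INSERT INTO events (id, type, data) VALUES (?, ?, ?)",
                (event["id"], event["type"], json.dumps(event["data"])),
            )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id TEXT PRIMARY KEY, type TEXT NOT NULL, data TEXT)")

store_batch(conn, [{"id": "e1", "type": "order.created", "data": {"qty": 1}}])
try:
    # The second event is invalid, so the first event of this batch is rolled back too.
    store_batch(conn, [
        {"id": "e2", "type": "order.paid", "data": {}},
        {"id": "e3", "data": {}},
    ])
except ValueError:
    pass
count = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
```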

Importance of Event History for Debugging and Analysis

Event history provides valuable insights for debugging and analyzing system behavior. The ability to replay past events is crucial for identifying the root cause of errors or issues, pinpointing the sequence of events that led to a specific outcome. Event history is a critical resource for identifying patterns, trends, and anomalies in system behavior. By studying the historical context of events, developers and analysts can gain valuable insights that would otherwise be hidden.
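Replay works by re-applying stored events, in order, to rebuild state. In this minimal sketch (the account events and the `replay` function are hypothetical), the optional `upto` argument stops partway through the history, which is how one might inspect the state just before a problematic event.

```python
from typing import Any, Dict, List, Optional

history: List[Dict[str, Any]] = [
    {"type": "account.opened", "account": "A", "amount": 0},
    {"type": "funds.deposited", "account": "A", "amount": 100},
    {"type": "funds.withdrawn", "account": "A", "amount": 30},
    {"type": "funds.deposited", "account": "A", "amount": 5},
]


def replay(events: List[Dict[str, Any]], upto: Optional[int] = None) -> Dict[str, int]:
    """Rebuild balances by re-applying events in order; `upto` stops early to inspect past state."""
    balances: Dict[str, int] = {}
    for event in events[:upto]:
        acct = event["account"]
        if event["type"] == "account.opened":
            balances[acct] = event["amount"]
        elif event["type"] == "funds.deposited":
            balances[acct] += event["amount"]
        elif event["type"] == "funds.withdrawn":
            balances[acct] -= event["amount"]
    return balances


current = replay(history)             # state after all events
after_first_deposit = replay(history, upto=2)  # state after the first two events
```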

Examples of Event Storage Solutions in the Cloud

Several cloud providers offer event storage solutions, each with its own strengths and weaknesses. Amazon Kinesis is a highly scalable and fault-tolerant service for handling massive volumes of streaming data, making it suitable for high-throughput event ingestion. Apache Kafka, a widely adopted open-source platform for building real-time data pipelines and streaming applications, can be self-managed on cloud infrastructure or consumed as a managed service such as Amazon MSK.

Azure Event Hubs is a managed service that can handle millions of events per second, offering scalable ingestion and processing capabilities. Other solutions include Google Cloud Pub/Sub, which facilitates the delivery of messages between systems, and various specialized event store solutions tailored to specific use cases.

Implementing Scalability and Reliability

Building scalable and reliable event-driven architectures (EDAs) in the cloud is crucial for handling large volumes of events and ensuring consistent system performance. This involves strategies for managing growing event streams, guaranteeing service availability, and mitigating potential failures. A robust EDA design anticipates future demands and allows for graceful degradation during periods of high load or unexpected failures.

Strategies for Building Scalable Event-Driven Systems

Scalability in event-driven systems necessitates adapting to fluctuating workloads. This involves leveraging cloud-native technologies to dynamically provision resources. Employing message queues and distributed processing frameworks is essential for distributing the load across multiple instances of event consumers. Decoupling event producers from consumers allows for independent scaling of each component.

- Horizontal Scaling of Consumers: Distributing event consumption across multiple instances allows for handling larger volumes of events. Cloud platforms offer mechanisms for easily deploying and scaling these instances based on demand. For example, a system processing financial transactions can scale consumer instances up during peak hours and down during off-peak periods, optimizing resource utilization.

- Asynchronous Processing: Utilizing message queues like Amazon SQS or Google Cloud Pub/Sub allows decoupling producers from consumers. This decoupling enables independent scaling of each component, as producers don’t need to wait for consumers to process events. A news aggregation service, for example, can publish articles to a queue and have separate consumer instances process and categorize them without blocking the publishing process.

- Load Balancing Strategies: Distributing the load of incoming events across multiple consumer instances ensures no single consumer becomes overloaded. Techniques like round-robin or weighted load balancing can be used, routing events to available instances efficiently.
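Horizontal scaling with a shared queue is often called the competing-consumers pattern: each message goes to exactly one of several identical consumers, and adding consumers increases throughput. In the sketch below, threads and `queue.Queue` are hypothetical stand-ins for separate consumer instances reading from a managed queue such as SQS or a Pub/Sub subscription; the article names and sentinel are illustrative.

```python
import queue
import threading
from typing import List

work: "queue.Queue[object]" = queue.Queue()
results: List[str] = []
results_lock = threading.Lock()
STOP = object()  # sentinel telling one worker to exit


def worker(name: str) -> None:
    while True:
        item = work.get()
        if item is STOP:
            work.task_done()
            break
        with results_lock:
            results.append(f"{name}:{item}")
        work.task_done()


# The producer publishes without waiting for any consumer.
for i in range(20):
    work.put(f"article-{i}")

# Scaling out here means starting more consumer threads on the same queue.
workers = [threading.Thread(target=worker, args=(f"c{n}",)) for n in range(3)]
for t in workers:
    t.start()
for _ in workers:
    work.put(STOP)  # one sentinel per worker, queued after all real work
for t in workers:
    t.join()
```

Which consumer handles which article is nondeterministic, but every article is processed exactly once.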

Techniques for Achieving High Availability in EDAs

High availability (HA) is critical in event-driven systems. Redundancy and failover mechanisms are essential for ensuring continuous event processing, even in the event of component failures. Employing multiple instances of consumers and producers, with automatic failover to backups, ensures system continuity.

- Redundant Components: Maintaining redundant instances of producers and consumers, often geographically dispersed, enhances system availability. If one instance fails, traffic can be seamlessly routed to a backup. A stock trading application, for example, would maintain multiple servers in different locations to prevent disruptions caused by regional outages.

- Failover Mechanisms: Setting up failover mechanisms ensures that if a component fails, the system automatically switches to a backup. This is particularly important for critical event streams like real-time monitoring systems.

- Clustering and Load Balancing: Combining load balancing with clustered architectures ensures that event consumers can handle fluctuations in event volume without performance degradation. A system monitoring IoT devices can utilize multiple consumer instances clustered together, load balanced to distribute processing efficiently.
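On the client side, a failover mechanism can be as simple as trying a primary endpoint and then backups in order. This is a sketch only: the hostnames, `publish_with_failover`, and the simulated `fake_send` transport are hypothetical, and many managed services perform regional failover themselves rather than leaving it to clients.

```python
from typing import Callable, List, Sequence, Tuple


class AllEndpointsFailed(Exception):
    pass


def publish_with_failover(event: str, endpoints: Sequence[str],
                          send: Callable[[str, str], None]) -> str:
    """Try the primary endpoint first, then each backup in order; return the one that worked."""
    errors: List[str] = []
    for endpoint in endpoints:
        try:
            send(endpoint, event)
            return endpoint
        except ConnectionError as exc:
            errors.append(f"{endpoint}: {exc}")
    raise AllEndpointsFailed("; ".join(errors))


# Simulated transport in which the primary region is down.
DOWN = {"broker.us-east.example"}
sent: List[Tuple[str, str]] = []


def fake_send(endpoint: str, event: str) -> None:
    if endpoint in DOWN:
        raise ConnectionError("unreachable")
    sent.append((endpoint, event))


used = publish_with_failover(
    "order-created", ["broker.us-east.example", "broker.eu-west.example"], fake_send
)
```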

Fault Tolerance Mechanisms in Cloud-Based EDAs

Fault tolerance in cloud-based event-driven architectures is essential to maintain system stability. Implementing strategies to handle unexpected failures and data loss is critical. Utilizing message persistence and retry mechanisms ensures that events are not lost during processing or transmission.

- Message Persistence: Storing events in a durable message queue or database ensures that events are not lost if a consumer fails or the system experiences an outage. This is vital for applications like financial transactions or healthcare records where data integrity is paramount.

- Idempotency: Designing event consumers to handle duplicate events gracefully prevents unexpected side effects. Idempotent operations guarantee that processing the same event multiple times does not lead to inconsistencies.

- Retry Mechanisms: Implement retry mechanisms for failed events to ensure that processing is eventually successful. Appropriate backoff strategies are crucial to avoid overwhelming the system with retries.
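Idempotency and retries with backoff work together: retries make duplicates likely, and idempotency makes duplicates harmless. The sketch below assumes each event carries a unique `id`; `IdempotentConsumer`, `backoff_delays`, and `retry` are hypothetical names. The delays follow the exponential backoff scheme with "full jitter", where each wait is random between zero and an exponentially growing cap, which spreads retries out instead of having every client retry at once.

```python
import random
from typing import Any, Callable, Dict, List, Set


class IdempotentConsumer:
    """Applies each event at most once, even if the broker delivers it more than once."""

    def __init__(self) -> None:
        # In production this set would live in a durable store and be updated in the
        # same transaction as the side effect; an in-memory set is only a sketch.
        self.processed_ids: Set[str] = set()
        self.balance = 0

    def handle(self, event: Dict[str, Any]) -> bool:
        if event["id"] in self.processed_ids:
            return False  # duplicate delivery: skip the side effect
        self.balance += event["amount"]
        self.processed_ids.add(event["id"])
        return True


def backoff_delays(retries: int, base: float = 0.1, cap: float = 5.0) -> List[float]:
    """Exponential backoff with full jitter: delay n is random in [0, min(cap, base * 2**n)]."""
    return [random.uniform(0, min(cap, base * 2 ** n)) for n in range(retries)]


def retry(fn: Callable[[], None], attempts: int, sleep: Callable[[float], None]) -> int:
    """Call fn up to `attempts` times, sleeping between failures; return the successful attempt."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    delays = backoff_delays(attempts - 1)
    for attempt in range(1, attempts + 1):
        try:
            fn()
            return attempt
        except Exception:
            if attempt == attempts:
                raise  # give up; the caller might route the event to a dead-letter queue
            sleep(delays[attempt - 1])
    raise RuntimeError("unreachable")


consumer = IdempotentConsumer()
event = {"id": "evt-1", "amount": 50}
first = consumer.handle(event)
second = consumer.handle(event)  # redelivered duplicate is ignored

outcomes = iter([True, True, False])  # fail twice, then succeed


def flaky() -> None:
    if next(outcomes):
        raise TimeoutError("broker busy")


slept: List[float] = []
succeeded_on = retry(flaky, attempts=5, sleep=slept.append)
```

Passing `sleep` in as a parameter keeps the sketch testable; in real code it would be `time.sleep` or an asynchronous equivalent.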

Examples of Load Balancing Techniques for Event Consumers

Load balancing is essential for distributing the workload evenly across multiple event consumers. Different techniques can be employed based on the specific requirements of the application. Round-robin, weighted round-robin, and least connections are common strategies.

- Round-Robin: Distributes events sequentially to each consumer in a cyclical fashion. Simplest to implement, but not always the most efficient.

- Weighted Round-Robin: Assigns weights to each consumer, proportionally distributing events based on capacity or performance. More complex to manage, but can optimize resource utilization.

- Least Connections: Routes events to the consumer with the fewest active connections. Efficient for managing fluctuating workloads, but requires tracking consumer state.
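The three techniques can be sketched as consumer selectors. These are simplified, hypothetical implementations: the weighted variant simply repeats each consumer by its weight, so picks arrive in bursts (smoother interleaving schemes exist), and the least-connections selector assumes callers report completion via `release`.

```python
import itertools
from typing import Dict, Iterator, List


def round_robin(consumers: List[str]) -> Iterator[str]:
    return itertools.cycle(consumers)


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    # Naive variant: a consumer with weight 3 appears 3 times per cycle, back to back.
    expanded = [name for name, w in weights.items() for _ in range(w)]
    return itertools.cycle(expanded)


class LeastConnections:
    def __init__(self, consumers: List[str]) -> None:
        self.active: Dict[str, int] = {c: 0 for c in consumers}

    def acquire(self) -> str:
        # Pick the consumer with the fewest in-flight events (the first one wins ties).
        name = min(self.active, key=lambda c: self.active[c])
        self.active[name] += 1
        return name

    def release(self, name: str) -> None:
        self.active[name] -= 1


rr = round_robin(["c1", "c2", "c3"])
rr_picks = [next(rr) for _ in range(4)]

wrr = weighted_round_robin({"big": 3, "small": 1})
wrr_picks = [next(wrr) for _ in range(8)]

lc = LeastConnections(["c1", "c2"])
a = lc.acquire()
b = lc.acquire()
lc.release(a)  # c1 finishes its event
c = lc.acquire()
```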

Comparison of Scalability and Reliability Techniques

| Technique | Description | Advantages | Disadvantages |
|---|---|---|---|
| Horizontal Scaling | Increasing the number of consumer instances | Handles increased event volume, improved performance | Potential for increased complexity in management |
| Message Queues | Decoupling producers and consumers | Independent scaling, fault tolerance | Overhead of message queuing |
| Redundancy | Duplicating components | High availability, fault tolerance | Increased resource consumption |
| Load Balancing | Distributing load among consumers | Improved performance, prevents overload | Requires monitoring and management |

Security Considerations in Cloud EDAs

Event-driven architectures (EDAs) in the cloud offer significant benefits but also introduce unique security challenges. Properly securing these systems is crucial to protect sensitive data and maintain the integrity of the entire application, ensuring data confidentiality, integrity, and availability. Although cloud-based EDAs are highly scalable and flexible, they require security considerations that extend beyond traditional application security.

Protecting event producers, consumers, data in transit and at rest, and access to event processing are critical components of a robust security strategy.

Security Concerns Specific to Cloud EDAs

Cloud EDAs introduce several security vulnerabilities compared to traditional architectures. These include potential unauthorized access to event data, malicious actors exploiting vulnerabilities in event producers and consumers, and insecure event routing and processing. Data breaches and system compromises can lead to significant financial losses and reputational damage.

Securing Event Producers and Consumers

Proper authentication and authorization mechanisms are vital for protecting event producers and consumers. Use strong credentials (short-lived tokens or cloud-managed identities for services, and multi-factor authentication (MFA) for human operators) together with role-based access control (RBAC) to limit access to sensitive resources.

- Authentication: Employ robust authentication methods like OAuth 2.0 or API keys to verify the identity of producers and consumers. This ensures only authorized entities can interact with the event system.

- Authorization: Implement fine-grained authorization policies using RBAC to control what actions each producer and consumer can perform. For example, a producer might be allowed to publish events to specific topics, while a consumer can only subscribe to a subset of those topics.

- Input Validation: Apply validation rules to incoming events to prevent malicious payloads from entering the system. This includes checking for unexpected characters, incorrect data types, and payload sizes or values that exceed defined limits, which helps prevent injection attacks.
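Authorization and input validation can be combined into a single gate that runs before an event is accepted. In this sketch the producer's identity is assumed to have been authenticated already; `PUBLISH_ACL`, `ORDER_SCHEMA`, the size limit, and the `order_id` pattern are all hypothetical policy choices, not defaults of any cloud service.

```python
import re
from typing import Any, Dict, Set

# Hypothetical policy: which authenticated producer may publish to which topic.
PUBLISH_ACL: Dict[str, Set[str]] = {
    "checkout-service": {"orders"},
    "user-service": {"users"},
}
MAX_PAYLOAD_BYTES = 64 * 1024                      # hypothetical size limit
ORDER_SCHEMA = {"order_id": str, "quantity": int}  # hypothetical event schema
ORDER_ID_PATTERN = re.compile(r"[A-Za-z0-9-]{1,64}")


class Rejected(Exception):
    pass


def check_publish(producer: str, topic: str, event: Dict[str, Any], raw_size: int) -> None:
    """Raise Rejected unless the producer is authorized and the payload is well-formed."""
    if topic not in PUBLISH_ACL.get(producer, set()):
        raise Rejected(f"{producer} may not publish to {topic}")
    if raw_size > MAX_PAYLOAD_BYTES:
        raise Rejected("payload too large")
    data = event.get("data")
    if not isinstance(data, dict):
        raise Rejected("data must be an object")
    unexpected = set(data) - set(ORDER_SCHEMA)
    if unexpected:
        raise Rejected(f"unexpected fields: {sorted(unexpected)}")
    for name, expected_type in ORDER_SCHEMA.items():
        # Exact type check: bool is a subclass of int, so isinstance would accept True.
        if type(data.get(name)) is not expected_type:
            raise Rejected(f"{name} must be of type {expected_type.__name__}")
    if not ORDER_ID_PATTERN.fullmatch(data["order_id"]):
        raise Rejected("order_id contains unexpected characters")
    if not 1 <= data["quantity"] <= 1000:
        raise Rejected("quantity out of range")


def is_accepted(producer: str, topic: str, event: Dict[str, Any], raw_size: int = 100) -> bool:
    try:
        check_publish(producer, topic, event, raw_size)
        return True
    except Rejected:
        return False


good = {"data": {"order_id": "o-1", "quantity": 2}}
```

Rejecting unknown fields and using an allow-list pattern for identifiers is generally safer than trying to enumerate dangerous characters.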

Securing Event Data in Transit and at Rest

Protecting event data throughout its lifecycle is crucial. Use encryption for data in transit and secure storage mechanisms for data at rest. Event pipelines should be encrypted to prevent eavesdropping.

- Data Encryption: Employ encryption at rest for all event data stored in the cloud. Use TLS to encrypt all communication between event producers, consumers, and the event bus.

- Data Loss Prevention (DLP): Implement DLP solutions to prevent sensitive information from leaving the system or being exposed to unauthorized personnel. Monitor event data for patterns indicative of potential threats or inappropriate disclosures.

- Secure Storage: Utilize cloud storage services with appropriate access control mechanisms and encryption options. Regularly review and update security policies for storage solutions.
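Encryption at rest is usually a setting on the managed service, but encryption in transit is partly the client's responsibility. A minimal sketch using Python's standard `ssl` module: `create_default_context` enables certificate verification and hostname checking, and the explicit `minimum_version` refuses legacy protocol versions. The `ca_file` parameter is a hypothetical hook for a private certificate authority; a client would then pass the context to `wrap_socket` (with `server_hostname` set) or to its broker client library.

```python
import ssl
from typing import Optional


def make_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """TLS settings for a producer or consumer connecting to a broker."""
    # create_default_context turns on certificate verification and hostname checking.
    ctx = ssl.create_default_context(cafile=ca_file)
    # Refuse SSLv3, TLS 1.0, and TLS 1.1 even if the local OpenSSL would allow them.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_tls_context()
```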

Access Control Mechanisms for Event Processing

Strict access control is necessary to prevent unauthorized access to event processing resources. Use RBAC to limit the actions that different users or applications can perform on events.

- Role-Based Access Control (RBAC): Implement RBAC to define specific roles for different users and assign permissions based on their roles. For example, a data analyst might have read-only access to event data, while an administrator might have full control.

- Event Filtering: Implement event filtering to restrict which events are processed by specific consumers. This can help isolate sensitive information and prevent unauthorized access to data.

- Auditing: Implement logging and auditing mechanisms to track all access to event data and processing resources. This helps identify and respond to security incidents quickly.
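The three mechanisms above can be combined in a small sketch, assuming two hypothetical roles that mirror the example in the text: a read-only DATA_ANALYST and an ADMINISTRATOR with full control. The permission names, the "sensitive" flag used for event filtering, and the in-memory audit log are illustrative; in a cloud deployment these would map onto the provider's IAM roles and audit-logging service.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.EnumSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical permissions and roles for event access.
enum Permission { READ_EVENTS, REPLAY_EVENTS, DELETE_EVENTS, MANAGE_TOPICS }

enum Role {
    DATA_ANALYST(EnumSet.of(Permission.READ_EVENTS)),   // read-only
    ADMINISTRATOR(EnumSet.allOf(Permission.class));     // full control

    final Set<Permission> permissions;

    Role(Set<Permission> permissions) { this.permissions = permissions; }
}

final class EventAccessController {
    private final Map<String, Role> userRoles;
    private final List<String> auditLog = new ArrayList<>();

    EventAccessController(Map<String, Role> userRoles) { this.userRoles = userRoles; }

    // Checks a permission and appends every decision (allow or deny) to the audit log.
    boolean authorize(String user, Permission permission) {
        Role role = userRoles.get(user);
        boolean allowed = role != null && role.permissions.contains(permission);
        auditLog.add(Instant.now() + " user=" + user + " action=" + permission
                + " result=" + (allowed ? "ALLOW" : "DENY"));
        return allowed;
    }

    // Event filtering: non-administrators never receive events flagged "sensitive".
    List<Map<String, String>> readEvents(String user, List<Map<String, String>> events) {
        if (!authorize(user, Permission.READ_EVENTS)) return List.of();
        boolean isAdmin = userRoles.get(user) == Role.ADMINISTRATOR;
        List<Map<String, String>> visible = new ArrayList<>();
        for (Map<String, String> event : events) {
            if (isAdmin || !"true".equals(event.get("sensitive"))) visible.add(event);
        }
        return visible;
    }

    List<String> auditLog() { return List.copyOf(auditLog); }
}
```

Logging denied requests as well as allowed ones is what makes the audit trail useful for incident response, since repeated denials are often the first sign of probing.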

Best Practices for Securing Event-Driven Systems in the Cloud

Adhering to best practices ensures the security of the entire event-driven system. Regular security assessments, proactive threat modeling, and compliance with industry standards are crucial components of a strong security posture.

- Security Assessments: Conduct regular security assessments of the event-driven system to identify and address potential vulnerabilities.

- Threat Modeling: Proactively model potential threats and vulnerabilities to the system. Develop mitigation strategies to address these threats.

- Compliance: Adhere to relevant industry standards and regulations (e.g., HIPAA, PCI DSS) to ensure compliance and protect sensitive data.

Monitoring and Debugging Event Flows

Effective monitoring and debugging are crucial for ensuring the smooth operation and resilience of event-driven architectures (EDAs). Without robust mechanisms for tracking event processing, identifying bottlenecks, and resolving issues, EDAs can become unreliable and inefficient. Comprehensive monitoring tools and strategies provide real-time insight into event flows, enabling swift identification and resolution of problems.

These practices help to proactively identify and resolve issues before they impact downstream systems, and ensure the consistent delivery of events. The ability to pinpoint bottlenecks and understand the path of events through the system is critical for maintaining a robust and scalable architecture.

Monitoring Event Processing Tools

Event processing platforms and their ecosystems typically provide monitoring tools that give real-time insight into the status of events, such as processing time, delivery success rate, and errors encountered. Examples include Kafka Manager (now CMAK, an open-source cluster manager for Apache Kafka), Apache Pulsar’s Pulsar Admin, and AWS Kinesis Data Analytics dashboards. Dedicated third-party tools cater to more specific needs, offering advanced features such as tracing and distributed debugging.

Debugging Methods for Event Flows

Debugging event flows often involves tracing the path of events through the system. This typically involves using logging mechanisms to record the state of events at various stages. For instance, logging timestamps of event arrival, processing start and end times, and the outcome of each step helps in pinpointing bottlenecks or errors. Tools that support distributed tracing, which follow the flow of events across multiple services, are invaluable in complex architectures.

These tools can track the event’s journey from its origin to its final destination, highlighting any delays or failures.
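The core idea behind distributed tracing can be shown in a few lines, assuming each event carries a trace ID and every service reports a span (service name, start and end time) tagged with that ID. The Span and TraceCollector classes are hypothetical; in practice a tracing library such as OpenTelemetry generates and exports spans, and a tracing backend performs this analysis.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;
import java.util.UUID;
import java.util.stream.Collectors;

// One unit of work reported by a service for a given event (trace).
final class Span {
    final String traceId;
    final String service;
    final long startMs;
    final long endMs;

    Span(String traceId, String service, long startMs, long endMs) {
        this.traceId = traceId;
        this.service = service;
        this.startMs = startMs;
        this.endMs = endMs;
    }

    long durationMs() { return endMs - startMs; }
}

// Hypothetical collector that groups spans by trace ID and analyzes one event's path.
final class TraceCollector {
    private final List<Span> spans = new ArrayList<>();

    // The producer attaches a new ID to each event; every downstream service reuses it.
    static String newTraceId() { return UUID.randomUUID().toString(); }

    void record(Span span) { spans.add(span); }

    // The event's path through the system, ordered by when each stage started.
    List<Span> trace(String traceId) {
        return spans.stream()
                .filter(s -> s.traceId.equals(traceId))
                .sorted(Comparator.comparingLong((Span s) -> s.startMs))
                .collect(Collectors.toList());
    }

    // End-to-end latency: first stage start to last stage end.
    long endToEndMs(String traceId) {
        List<Span> path = trace(traceId);
        if (path.isEmpty()) return 0;
        long lastEnd = path.stream().mapToLong(s -> s.endMs).max().getAsLong();
        return lastEnd - path.get(0).startMs;
    }

    // The longest-running stage is the usual first suspect for a bottleneck.
    Optional<Span> slowestStage(String traceId) {
        return trace(traceId).stream().max(Comparator.comparingLong(Span::durationMs));
    }
}
```

Sorting a trace's spans by start time reconstructs the event's path. Gaps between one span's end and the next span's start show time the event spent waiting in the broker rather than being processed.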

Monitoring and Logging Strategies

Effective monitoring and logging strategies are critical for identifying and resolving issues in event flows. These strategies should incorporate comprehensive logging at every stage of the event pipeline. This includes logging events with relevant metadata, such as timestamps, IDs, and the status of each processing step. Centralized logging and analysis tools are essential for aggregating logs from various components and generating actionable insights.

Furthermore, implementing structured logging with standardized formats, like JSON, facilitates easier analysis and integration with monitoring dashboards.
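A hand-rolled sketch of a structured JSON log line for one pipeline step follows, assuming the field names timestamp, eventId, stage, status, and durationMs (all illustrative). A production service would normally use a logging framework with a JSON encoder; the point here is that each field becomes a queryable key rather than part of free text.

```java
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of a structured (JSON) log line; field names are illustrative.
final class StructuredLog {
    static String toJson(Map<String, ?> fields) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, ?> f : fields.entrySet()) {
            if (!first) sb.append(',');
            first = false;
            sb.append('"').append(escape(f.getKey())).append("\":");
            Object v = f.getValue();
            // Integers and booleans are emitted bare; everything else becomes a JSON string
            if (v instanceof Integer || v instanceof Long || v instanceof Boolean) sb.append(v);
            else if (v == null) sb.append("null");
            else sb.append('"').append(escape(v.toString())).append('"');
        }
        return sb.append('}').toString();
    }

    // Escapes what JSON requires: quotes, backslashes, and control characters.
    private static String escape(String s) {
        StringBuilder out = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': out.append("\\\""); break;
                case '\\': out.append("\\\\"); break;
                case '\n': out.append("\\n"); break;
                case '\r': out.append("\\r"); break;
                case '\t': out.append("\\t"); break;
                default:
                    if (c < 0x20) out.append(String.format("\\u%04x", (int) c));
                    else out.append(c);
            }
        }
        return out.toString();
    }

    // One log line per pipeline step, with fields in a stable order.
    static String stepLog(Instant ts, String eventId, String stage, String status, long durationMs) {
        Map<String, Object> f = new LinkedHashMap<>();
        f.put("timestamp", ts.toString());
        f.put("eventId", eventId);
        f.put("stage", stage);
        f.put("status", status);
        f.put("durationMs", durationMs);
        return toJson(f);
    }
}
```

Escaping matters because event data often flows into log messages: an unescaped quote or newline from a payload would otherwise corrupt the line and break downstream parsing.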

Dashboards and Visualizations for Tracking Event Processing

Event processing dashboards provide visual representations of event flow metrics, enabling real-time monitoring. These dashboards typically display key performance indicators (KPIs) such as throughput, latency, error rates, and message delivery success. Visualizations, such as graphs and charts, help in quickly identifying trends and anomalies. Interactive dashboards allow for drill-down analysis to investigate specific events or time periods, providing deeper insights into the event processing flow.
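The KPIs a dashboard displays are typically computed per time window. This sketch assumes each processed event yields a latency and a success flag (a hypothetical record shape) and derives throughput, error rate, and 95th-percentile latency using the nearest-rank method.

```java
import java.util.List;

// Sketch: dashboard KPIs for one time window of processed events.
final class WindowKpis {
    // Hypothetical per-event outcome: how long processing took and whether it succeeded.
    static final class Outcome {
        final long latencyMs;
        final boolean success;

        Outcome(long latencyMs, boolean success) {
            this.latencyMs = latencyMs;
            this.success = success;
        }
    }

    final double throughputPerSec;
    final double errorRate;
    final long p95LatencyMs;

    private WindowKpis(double throughputPerSec, double errorRate, long p95LatencyMs) {
        this.throughputPerSec = throughputPerSec;
        this.errorRate = errorRate;
        this.p95LatencyMs = p95LatencyMs;
    }

    static WindowKpis of(List<Outcome> window, double windowSeconds) {
        if (window.isEmpty()) return new WindowKpis(0, 0, 0);
        long failures = window.stream().filter(o -> !o.success).count();
        long[] sorted = window.stream().mapToLong(o -> o.latencyMs).sorted().toArray();
        // Nearest-rank p95: the ceil(0.95 * n)-th smallest latency, in integer arithmetic
        int rank = (95 * sorted.length + 99) / 100;
        return new WindowKpis(window.size() / windowSeconds,
                (double) failures / window.size(),
                sorted[rank - 1]);
    }
}
```

Percentiles such as p95 are usually preferred over averages for latency panels, because a small number of very slow events can hide behind a healthy-looking mean.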

Tools for Identifying Bottlenecks and Performance Issues

Identifying bottlenecks and performance issues in event flows requires tools that analyze performance metrics and resource utilization. Profilers and performance monitoring agents provide detailed insight into how event processors and consumers behave. Analyzing metrics such as CPU usage, memory consumption, and network latency helps pinpoint where performance can be optimized, and monitoring resource allocation reveals contention between components before it degrades performance.
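One widely used signal for a consumer that cannot keep up, alongside the CPU, memory, and network metrics above, is Kafka-style consumer lag: for each partition, the broker's latest offset minus the consumer group's committed offset. The sketch below assumes those offsets have already been fetched from the broker's monitoring or admin API and passed in as maps; the class name and alert threshold are hypothetical.

```java
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;

// Sketch: consumer lag per partition = latest (log-end) offset - committed offset.
// Offsets are passed in; fetching them from a broker is out of scope here.
final class LagMonitor {
    static Map<Integer, Long> lagByPartition(Map<Integer, Long> endOffsets, Map<Integer, Long> committed) {
        Map<Integer, Long> lag = new TreeMap<>();
        for (Map.Entry<Integer, Long> e : endOffsets.entrySet()) {
            // Simplifying assumption: a partition with no commit yet is treated as offset 0
            long done = committed.getOrDefault(e.getKey(), 0L);
            lag.put(e.getKey(), Math.max(0L, e.getValue() - done));
        }
        return lag;
    }

    static long totalLag(Map<Integer, Long> lag) {
        return lag.values().stream().mapToLong(Long::longValue).sum();
    }

    // The partition with the largest backlog, if that backlog exceeds the alert threshold.
    static Optional<Integer> hotPartition(Map<Integer, Long> lag, long threshold) {
        return lag.entrySet().stream()
                .filter(e -> e.getValue() > threshold)
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey);
    }
}
```

Lag that grows steadily on one partition while others stay flat often points to a skewed partition key or a single slow consumer instance rather than an undersized cluster.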

Practical Example: Building an EDA for a Real-time Order Processing System

This section applies event-driven architecture principles to a real-world scenario: a real-time order processing system. It shows how events routed through a message broker enable efficient, scalable order management, and it covers the key elements of cloud-native event-driven architectures: event producers, consumers, storage, and routing. A real-time order processing system must handle many orders concurrently and react to events throughout the order lifecycle.

An EDA enables quick and reliable processing of these events, leading to a more responsive and efficient order fulfillment process.

Use Case Scenario: Real-time Order Processing

This system processes orders from various sources, such as online stores, mobile apps, and call centers. Each order stage (e.g., order placement, payment processing, shipping) generates a specific event. These events trigger subsequent actions and update the order status in real time. The system needs to handle a high volume of orders concurrently, ensuring accuracy and minimizing delays.

Architecture Design

The architecture employs a microservices-based approach, with each microservice responsible for a specific function. The system comprises:

- Order Placement Service (Event Producer): This service receives order data and publishes events to a message broker, such as Apache Kafka, upon order placement.

- Payment Processing Service (Event Consumer): This service consumes events from the message broker, processes payments, and publishes further events for the next stage.

- Shipping Service (Event Consumer): This service consumes events related to payment confirmation, generates shipping labels, and publishes shipping-related events.

- Order Management Service (Event Consumer): This service aggregates events to maintain a unified order status view and updates the database with order details.

- Message Broker (Kafka): This service acts as a central hub for event transmission, ensuring reliable delivery and handling of high volumes of events.

- Database (e.g., PostgreSQL): This stores order details and other related data.

Event Flow

The event flow illustrates how events trigger actions and updates across different services.

- Order Placement: The order placement service receives an order, validates it, and publishes an “OrderPlaced” event to Kafka.

- Payment Processing: The payment processing service consumes the “OrderPlaced” event, processes the payment, and publishes “PaymentSuccess” or “PaymentFailed” events to Kafka, depending on the outcome.

- Shipping: If “PaymentSuccess” is published, the shipping service consumes the event, generates a shipping label, and publishes a “ShippingLabelGenerated” event to Kafka.

- Order Management: The order management service consumes the various events (OrderPlaced, PaymentSuccess, ShippingLabelGenerated), updates the order status in the database, and publishes an “OrderStatusUpdated” event.
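The event flow above can be exercised end to end without a broker. This sketch replaces Kafka with a hypothetical in-memory bus that queues events and delivers them in FIFO order on the caller's thread, so it shows the choreography only, not Kafka's durability, partitioning, or asynchrony. The payment rule (approve amounts up to 500) is a placeholder.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// In-memory stand-in for Kafka topics: events are queued and delivered FIFO.
final class InMemoryBus {
    private final Map<String, List<Consumer<Map<String, String>>>> subscribers = new HashMap<>();
    private final Deque<Map.Entry<String, Map<String, String>>> queue = new ArrayDeque<>();
    private boolean draining = false;

    void subscribe(String topic, Consumer<Map<String, String>> handler) {
        subscribers.computeIfAbsent(topic, t -> new ArrayList<>()).add(handler);
    }

    void publish(String topic, Map<String, String> event) {
        queue.add(Map.entry(topic, event));
        if (draining) return;  // published from inside a handler: the outer loop delivers it
        draining = true;
        try {
            while (!queue.isEmpty()) {
                Map.Entry<String, Map<String, String>> next = queue.poll();
                for (Consumer<Map<String, String>> h : subscribers.getOrDefault(next.getKey(), List.of())) {
                    h.accept(next.getValue());
                }
            }
        } finally {
            draining = false;
        }
    }
}

final class OrderFlow {
    final InMemoryBus bus = new InMemoryBus();
    // Order Management Service view: orderId -> event types seen, in order
    final Map<String, List<String>> orderHistory = new HashMap<>();

    OrderFlow() {
        // Payment Processing Service (approve-if-amount<=500 is a placeholder rule)
        bus.subscribe("OrderPlaced", e -> {
            boolean ok = Double.parseDouble(e.get("amount")) <= 500;
            bus.publish(ok ? "PaymentSuccess" : "PaymentFailed", e);
        });
        // Shipping Service reacts only to successful payments
        bus.subscribe("PaymentSuccess", e -> bus.publish("ShippingLabelGenerated", e));
        // Order Management Service records each stage and announces the new status
        for (String topic : List.of("OrderPlaced", "PaymentSuccess", "PaymentFailed", "ShippingLabelGenerated")) {
            bus.subscribe(topic, e -> {
                orderHistory.computeIfAbsent(e.get("orderId"), id -> new ArrayList<>()).add(topic);
                bus.publish("OrderStatusUpdated", Map.of("orderId", e.get("orderId"), "status", topic));
            });
        }
    }

    // Order Placement Service: validate the order, then publish OrderPlaced
    void placeOrder(String orderId, String amount) {
        if (orderId == null || orderId.isEmpty()) throw new IllegalArgumentException("orderId required");
        bus.publish("OrderPlaced", Map.of("orderId", orderId, "amount", amount));
    }
}
```

Placing one order for 120.00 and one for 900.00 leaves the first with the history OrderPlaced, PaymentSuccess, ShippingLabelGenerated and the second with OrderPlaced, PaymentFailed. No service calls another directly; each only publishes to and subscribes from topics, which is the loose coupling the architecture relies on.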

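Implementation Steps (Java Sketches)

The fragments below use the Apache Kafka Java client and JDBC. They assume an already configured KafkaProducer<String, String> named producer, a KafkaConsumer<String, String> named consumer, and an open JDBC Connection; configuration, serialization, and most error handling are omitted.

- Order Placement Service:

```java
import org.apache.kafka.clients.producer.ProducerRecord;

// Publish an OrderPlaced event, keyed by order ID so that events for the same
// order go to the same partition of this topic and keep their relative order
producer.send(new ProducerRecord<>("OrderPlaced", orderId, orderJson));
```

- Payment Processing Service:

```java
import java.time.Duration;
import java.util.List;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.producer.ProducerRecord;

// Subscribe to OrderPlaced events, process each payment, and publish the outcome
consumer.subscribe(List.of("OrderPlaced"));
while (running) {  // running: hypothetical shutdown flag
    for (ConsumerRecord<String, String> rec : consumer.poll(Duration.ofMillis(500))) {
        boolean paymentSuccessful = processPayment(rec.value());  // hypothetical helper
        String topic = paymentSuccessful ? "PaymentSuccess" : "PaymentFailed";
        producer.send(new ProducerRecord<>(topic, rec.key(), rec.value()));
    }
}
```

- Database Update (Order Management Service):

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
// On PaymentSuccess: update order status in a transaction (table/column names are illustrative)
void markPaymentProcessed(Connection conn, String orderId) throws SQLException {
    conn.setAutoCommit(false);
    try (PreparedStatement stmt = conn.prepareStatement(
            "UPDATE orders SET status = 'PaymentProcessed' WHERE order_id = ?")) {
        stmt.setString(1, orderId);
        stmt.executeUpdate();
        conn.commit();
    } catch (SQLException e) {
        conn.rollback();
        throw e;
    }
}
```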

Case Studies of Successful Cloud EDAs

Event-driven architectures (EDAs) are increasingly popular for their ability to handle large volumes of data and events in real-time. Cloud platforms provide the infrastructure and tools to implement and scale EDAs efficiently. Understanding successful implementations of cloud EDAs offers valuable insights into best practices and lessons learned.

Examples of Successful Implementations

Numerous organizations have successfully deployed cloud EDAs, demonstrating their efficacy in various domains. One example is a retail company that leveraged an EDA to process order updates in real-time. Another instance involves a financial institution that utilized an EDA to manage high-frequency trading events. These examples highlight the diverse applications of EDAs in modern businesses.

Challenges Faced and Solutions Adopted

Implementing cloud EDAs often presents challenges. One common issue is managing the volume and velocity of events. Solutions include employing efficient event routing mechanisms and strategically selecting suitable message queues. Another challenge is ensuring data consistency and reliability across different components. Implementing robust message persistence and utilizing distributed transaction protocols addresses this issue.

Benefits Achieved by Implementing the EDA

Organizations that successfully deploy cloud EDAs typically experience substantial benefits. These include enhanced responsiveness to real-time events, improved operational efficiency, and the ability to handle massive data volumes. Further, EDAs often promote agility and scalability, enabling businesses to adapt quickly to changing market demands.

Lessons Learned from the Case Studies

Several key lessons emerge from these case studies. Careful consideration of event formats and data structures is critical for efficient data processing. Choosing the right cloud platform with appropriate scalability features is essential for managing high volumes of events. Robust monitoring and debugging tools are indispensable for effective troubleshooting and optimization.

Summary Table of Case Studies

| Case Study | Domain | Challenges | Solutions | Benefits | Lessons Learned |
|---|---|---|---|---|---|
| Retail Order Processing | E-commerce | High order volume, real-time updates needed | Efficient message queues, optimized routing, real-time dashboards | Faster order processing, improved customer experience, real-time inventory management | Careful consideration of message formats, choice of appropriate cloud platform crucial |
| Financial High-Frequency Trading | Finance | Handling massive volumes of transactions, low latency requirements | High-performance message brokers, optimized event pipelines, distributed caching | Reduced latency, improved transaction speed, higher profits | Scalability and high-throughput message handling are paramount in high-frequency environments |
| Manufacturing Supply Chain Management | Manufacturing | Integrating diverse data sources, tracking parts in real-time | Event streaming platforms, real-time dashboards, distributed databases | Real-time visibility into supply chain, improved inventory management, reduced delays | Ensuring interoperability between different systems and using appropriate event formats is critical |

Future Trends in Cloud Event-Driven Architectures

Event-driven architectures (EDAs) are evolving rapidly in the cloud, driven by advances in serverless computing, AI/ML, and data streaming. This evolution is shaping how applications interact and process data, bringing gains in scalability, resilience, and efficiency. Understanding these trends helps architects and developers design applications that take full advantage of the cloud. Cloud-based EDAs are also moving from a primarily reactive model toward a more proactive and intelligent one.

The integration of AI and machine learning enables predictive analysis and automated responses to events, leading to faster decision-making and improved operational efficiency. This shift is impacting how events are generated, processed, and acted upon, ultimately transforming the landscape of cloud-based applications.

Emerging Technologies in Event-Driven Architectures

Several emerging technologies are impacting the design and implementation of EDAs. These include advancements in real-time data streaming platforms, improved event routing and processing mechanisms, and the rise of serverless functions. For instance, new streaming platforms offer enhanced fault tolerance and scalability, facilitating the processing of massive volumes of events in real time. These features are vital in scenarios like financial transactions, IoT data processing, and real-time analytics.

Impact of Serverless Computing on EDA Design

Serverless computing is significantly altering EDA design. Because the platform scales event processing automatically with demand, teams no longer need to provision and manage servers, which reduces operational overhead and can lower costs, particularly for bursty or variable workloads. Serverless functions can be triggered directly by events, allowing highly responsive handling of data streams and greater agility than traditional server-based event processing.

For example, a serverless function can be triggered by an order placed in an e-commerce platform, instantly processing the order details and initiating shipping procedures.
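A platform-neutral sketch of that idea follows, assuming a hypothetical OrderPlacedFunction whose handler is a stateless function invoked once per event. FakeServerlessPlatform is a toy stand-in that runs invocations concurrently on a thread pool; real providers supply their own handler types (AWS Lambda's Java runtime, for example, defines a RequestHandler interface) and manage scaling themselves.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class OrderPlacedFunction {
    // The handler keeps no state between invocations, so any number of copies can run in parallel.
    static String handle(Map<String, String> event) {
        String orderId = event.get("orderId");
        if (orderId == null) throw new IllegalArgumentException("missing orderId");
        // Hypothetical work: process the order details, then initiate shipping
        return "shipping-initiated:" + orderId;
    }
}

// Toy stand-in for the platform: one invocation per event, run concurrently.
final class FakeServerlessPlatform {
    static List<String> dispatch(List<Map<String, String>> events, int maxConcurrency)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(maxConcurrency);
        try {
            List<Future<String>> pending = new ArrayList<>();
            for (Map<String, String> e : events) {
                pending.add(pool.submit(() -> OrderPlacedFunction.handle(e)));
            }
            List<String> results = new ArrayList<>();
            for (Future<String> f : pending) results.add(f.get());  // collected in event order
            return results;
        } finally {
            pool.shutdown();
        }
    }
}
```

Keeping the handler stateless is what lets the platform add or remove instances freely; any state the function needs (order records, inventory) has to live in an external store.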

Role of AI and Machine Learning in Event Processing

AI and machine learning are transforming event processing by enabling intelligent responses to events. Machine learning algorithms can analyze event streams, identify patterns, and predict future events, allowing applications to proactively respond to potential issues or opportunities. This proactive approach enables faster decision-making and improves operational efficiency. For instance, in a fraud detection system, AI models can analyze transaction data in real-time, identify suspicious patterns, and flag potential fraudulent activities before they cause significant financial losses.
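Training and serving a real ML model does not fit in a short example, so the sketch below swaps in a simple statistical stand-in for pattern detection: it maintains a running mean and variance of transaction amounts per account with Welford's online algorithm and flags a transaction whose z-score exceeds a threshold. The threshold of 3 and the warm-up of 5 transactions are hypothetical tuning values, not recommendations.

```java
import java.util.HashMap;
import java.util.Map;

// Statistical stand-in for an ML fraud model: per-account z-score on transaction amounts.
final class StreamingAnomalyDetector {
    private static final class Stats {
        long n;
        double mean;
        double m2;  // sum of squared deviations from the running mean
    }

    private final Map<String, Stats> byAccount = new HashMap<>();
    private final double zThreshold;
    private final int minSamples;

    StreamingAnomalyDetector(double zThreshold, int minSamples) {
        if (minSamples < 2) throw new IllegalArgumentException("need at least 2 samples for a variance");
        this.zThreshold = zThreshold;
        this.minSamples = minSamples;
    }

    // Scores the amount against the account's history, then folds it into the statistics.
    boolean observe(String accountId, double amount) {
        Stats s = byAccount.computeIfAbsent(accountId, k -> new Stats());
        boolean flagged = false;
        if (s.n >= minSamples) {
            double std = Math.sqrt(s.m2 / (s.n - 1));  // sample standard deviation
            flagged = std > 0 ? Math.abs(amount - s.mean) / std > zThreshold : amount != s.mean;
        }
        // Welford's online update of mean and m2
        s.n++;
        double delta = amount - s.mean;
        s.mean += delta / s.n;
        s.m2 += delta * (amount - s.mean);
        return flagged;
    }
}
```

Because the statistics update incrementally, each event is scored in constant time without storing the transaction history. This version folds flagged amounts into the baseline too; a production system might exclude them so that a burst of fraud cannot shift what counts as normal.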

Evolution of Event-Driven Architectures in the Cloud

Cloud-based EDAs are evolving from simple event processing systems to sophisticated, integrated platforms that seamlessly connect different applications and services. The integration of AI and machine learning is enabling more intelligent event processing, while serverless computing is facilitating greater agility and scalability. Furthermore, advancements in data streaming technologies are enabling the handling of massive volumes of events with high throughput and low latency.

These developments enable more complex event-driven applications, such as real-time fraud detection systems, proactive maintenance scheduling, and intelligent supply chain management.

Forecast of Future Advancements in EDAs

Future advancements in cloud EDAs are expected to focus on increased automation, enhanced security, and greater intelligence. Automation will encompass the entire event lifecycle, from event generation to processing and storage, enabling more efficient and resilient applications. Enhanced security measures will address the increasing risks associated with data breaches and unauthorized access to event streams. Greater intelligence will be achieved through the application of AI/ML, allowing applications to learn from events, adapt to changing conditions, and make more informed decisions.

One example is the automated response to security threats in a real-time cybersecurity system, using machine learning to identify patterns and respond to threats faster than traditional methods.

Final Summary

In conclusion, building event-driven architectures in the cloud requires a nuanced understanding of various components and considerations. This guide has presented a comprehensive overview, equipping you with the knowledge to design and implement robust, scalable, and secure EDAs. By understanding the interplay between event producers, consumers, routing mechanisms, and storage strategies, you can leverage the power of cloud platforms to create highly responsive and adaptable applications.

Answers to Common Questions

What are the key differences between synchronous and asynchronous communication in EDAs?

Synchronous communication means the caller waits (blocks) for a response before continuing, whereas asynchronous communication lets a producer emit an event and move on while consumers process it independently. EDAs typically rely on asynchronous communication, which keeps producers responsive under load and lets each component scale independently.
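A minimal sketch of the difference, using only the Java standard library: placeOrderSync makes the caller wait for a simulated 200 ms downstream call (reserveStock, a hypothetical stand-in), while placeOrderAsync puts an event on an in-memory BlockingQueue and returns immediately, leaving a separate consumer thread to do the work. The queue stands in for a real event broker.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Sketch contrasting synchronous calls with asynchronous event handoff.
final class OrderIntake {
    // Hypothetical slow downstream call
    static String reserveStock(String orderId) throws InterruptedException {
        Thread.sleep(200);  // simulated downstream latency
        return "reserved:" + orderId;
    }

    // Synchronous: the caller is blocked for the full duration of the downstream call.
    static String placeOrderSync(String orderId) throws InterruptedException {
        return reserveStock(orderId);
    }

    // Asynchronous: the caller only enqueues an event and returns at once;
    // a consumer thread processes it independently and records the result.
    final BlockingQueue<String> events = new LinkedBlockingQueue<>();
    final BlockingQueue<String> results = new LinkedBlockingQueue<>();

    void placeOrderAsync(String orderId) {
        events.add(orderId);
    }

    Thread startConsumer() {
        Thread consumer = new Thread(() -> {
            try {
                while (true) results.add(reserveStock(events.take()));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();  // exit the loop on shutdown
            }
        });
        consumer.setDaemon(true);
        consumer.start();
        return consumer;
    }
}
```

The asynchronous caller gets no immediate answer; it learns the outcome later (here, by reading the results queue), which is the trade-off EDAs accept in exchange for decoupling.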

How do I choose the right cloud platform for my EDA?

Consider factors like scalability needs, event volume, cost considerations, and available event streaming services when selecting a cloud platform. Each platform offers different strengths, so thorough research is crucial.

What are common security vulnerabilities in cloud-based EDAs, and how can they be mitigated?

Potential vulnerabilities include unauthorized access to event data, insecure event producers/consumers, and lack of proper authentication/authorization. Strong access controls, encryption, and secure coding practices are vital for mitigation.

What are some common use cases for event-driven architectures?

EDAs excel in scenarios requiring real-time processing, such as order processing, fraud detection, and data analytics pipelines. They also facilitate microservices communication and decoupling in complex applications.