This guide provides a comprehensive overview of how to design a robust and efficient cloud data warehouse architecture. It delves into key aspects, from initial planning and data modeling to deployment, security, and ongoing maintenance. Understanding these crucial steps is essential for maximizing the value of your cloud data warehouse.

The process involves careful consideration of cloud platforms, data ingestion strategies, security protocols, and scalability requirements. By following the steps outlined here, you can build a data warehouse that integrates with your existing infrastructure and supports your data analytics needs.

Introduction to Cloud Data Warehousing

Cloud data warehousing leverages cloud computing infrastructure to store, process, and analyze large volumes of data. This approach offers significant advantages over traditional on-premises data warehousing, including scalability, cost-effectiveness, and accessibility. Cloud data warehouses are designed for various use cases, from business intelligence and reporting to advanced analytics and machine learning. The flexible nature of cloud environments allows organizations to adapt to evolving data needs and analytical requirements with agility.

Cloud data warehousing provides a powerful platform for extracting insights from data. Its scalability enables organizations to easily handle increasing data volumes without significant upfront investments in hardware or infrastructure. The pay-as-you-go model inherent in cloud computing further reduces costs and streamlines resource management. This approach is well-suited for companies with fluctuating data needs, such as e-commerce businesses or those experiencing rapid growth.

Key Differences Between On-Premises and Cloud Data Warehousing

On-premises data warehousing requires significant upfront investment in hardware, software, and skilled personnel for installation and maintenance. Cloud data warehousing eliminates most of these upfront costs and allows for rapid deployment and scaling. Cloud-based solutions also offer greater flexibility, with resources allocated dynamically to meet fluctuating demands, in contrast to the fixed capacity of on-premises solutions. Furthermore, cloud providers offer built-in security controls and data redundancy (for example, replicated storage), which support data integrity and business continuity. Under the shared responsibility model, however, the customer remains responsible for configuring access controls, encryption, and governance correctly.

Finally, the accessibility of cloud solutions via the internet enables remote collaboration and analysis from anywhere with an internet connection.

Common Challenges in Cloud Data Warehouse Design

Designing a robust and efficient cloud data warehouse architecture requires careful consideration of various factors. One common challenge is data integration from disparate sources, including on-premises systems and various cloud applications. Another critical challenge is ensuring data security and compliance with industry regulations. Furthermore, organizations need to optimize query performance and data retrieval for efficient analytics. Data governance and quality, ensuring the reliability and accuracy of the data stored in the warehouse, also play a vital role in effective cloud data warehouse design.

Finally, managing the complexity of different cloud services and tools, including data transformation and loading tools, can be a considerable hurdle.

Cloud Data Warehousing Services Comparison

The choice of a cloud data warehousing service often depends on factors such as data volume, budget, and specific analytical needs. Here’s a table summarizing common cloud data warehousing services:

| Service | Provider | Key Features | Strengths |
|---|---|---|---|
| Amazon Redshift | Amazon Web Services (AWS) | Columnar storage, high-performance query engine, scalability | Mature, extensive ecosystem of tools and services, strong community support |
| Azure Synapse Analytics | Microsoft Azure | Integration with other Azure services, hybrid capabilities, comprehensive analytics platform | Strong integration with existing Microsoft ecosystem, wide range of tools and functionalities |
| Google BigQuery | Google Cloud Platform (GCP) | Serverless architecture, pay-as-you-go pricing, scalable processing | Excellent performance for large datasets, robust security features |
| Snowflake | Snowflake | Data sharing, scalability, and security features | Advanced data sharing capabilities, robust security features, and flexibility |

Data Modeling for Cloud Warehouses

Data modeling is a crucial step in designing a cloud data warehouse. It dictates how data is structured, stored, and accessed, directly impacting query performance and overall system efficiency. A well-designed model ensures data consistency, allows for flexibility in handling evolving business needs, and facilitates seamless integration with existing systems. Careful consideration of data modeling techniques specific to cloud environments is essential for optimizing the warehouse’s performance and scalability.

Effective data modeling in a cloud environment leverages the scalability and flexibility inherent in cloud platforms. By employing appropriate techniques, organizations can create a data warehouse that is capable of handling large volumes of data and diverse query patterns. This adaptability is key to supporting a variety of business intelligence and analytics needs.

Different Data Modeling Techniques

Various data modeling techniques are suitable for cloud environments. The choice depends on the specific requirements of the data warehouse, including data volume, query patterns, and the need for flexibility. Popular approaches include entity-relationship (ER) modeling, usually documented with Entity-Relationship Diagrams (ERDs), and dimensional modeling, whose most common forms are the star and snowflake schemas. Each technique has its own strengths and weaknesses, influencing the overall architecture of the cloud data warehouse.

Star Schema vs. Snowflake Schema

The star schema and snowflake schema are two prevalent dimensional modeling approaches. Both aim to organize data for efficient querying, but they differ in their complexity and flexibility.

- Star Schema: A relatively simple model in which a central fact table references denormalized dimension tables through foreign keys. Queries need only one join per dimension, which keeps them simple and fast, making this design well-suited to analytical queries that need short response times. It may be less suitable for very detailed or deeply hierarchical dimensions, where denormalization repeats the same attribute values across many rows.

- Snowflake Schema: A more complex model that further normalizes the dimension tables into related sub-tables (for example, product and category). This reduces redundancy and makes detailed, hierarchical dimension data easier to maintain, but queries require more joins, which can make them more complex and potentially slower than with a star schema. It is advantageous when dimensions are large or detailed and storing shared attributes only once matters.
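The practical difference between the two schemas shows up mainly in how many joins a query needs. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a cloud warehouse, so DDL details and performance will differ on a real platform; all table and column names are hypothetical. Both queries compute revenue per category, once against a denormalized (star) product dimension and once against a normalized (snowflake) one.

```python
import sqlite3

# In-memory SQLite stands in for a cloud warehouse; all names are hypothetical.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

cur.executescript("""
-- Star schema: the category name is stored inline in the product dimension.
CREATE TABLE dim_product_star (
    product_key   INTEGER PRIMARY KEY,
    product_name  TEXT,
    category_name TEXT
);
-- Snowflake schema: the product dimension points to a separate category table.
CREATE TABLE dim_category (
    category_key  INTEGER PRIMARY KEY,
    category_name TEXT
);
CREATE TABLE dim_product_snow (
    product_key   INTEGER PRIMARY KEY,
    product_name  TEXT,
    category_key  INTEGER REFERENCES dim_category(category_key)
);
-- Shared fact table keyed by product.
CREATE TABLE fact_sales (
    product_key INTEGER,
    quantity    INTEGER,
    revenue     REAL
);
""")

cur.executemany("INSERT INTO dim_product_star VALUES (?, ?, ?)",
                [(1, "Laptop", "Electronics"), (2, "Desk", "Furniture"),
                 (3, "Monitor", "Electronics")])
cur.executemany("INSERT INTO dim_category VALUES (?, ?)",
                [(10, "Electronics"), (20, "Furniture")])
cur.executemany("INSERT INTO dim_product_snow VALUES (?, ?, ?)",
                [(1, "Laptop", 10), (2, "Desk", 20), (3, "Monitor", 10)])
cur.executemany("INSERT INTO fact_sales VALUES (?, ?, ?)",
                [(1, 2, 2000.0), (2, 1, 300.0), (3, 3, 600.0)])

# Star: a single join from the fact table to the dimension.
star = cur.execute("""
    SELECT p.category_name, SUM(f.revenue)
    FROM fact_sales f
    JOIN dim_product_star p ON f.product_key = p.product_key
    GROUP BY p.category_name ORDER BY p.category_name
""").fetchall()

# Snowflake: an extra join is needed to reach the normalized category table.
snow = cur.execute("""
    SELECT c.category_name, SUM(f.revenue)
    FROM fact_sales f
    JOIN dim_product_snow p ON f.product_key = p.product_key
    JOIN dim_category c ON p.category_key = c.category_key
    GROUP BY c.category_name ORDER BY c.category_name
""").fetchall()

print(star)
print(snow)
```

Both queries return the same totals; the snowflake version pays one extra join for storing each category name only once.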

Dimensional Modeling Best Practices

Implementing dimensional modeling in a cloud data warehouse demands adherence to best practices. This ensures data integrity, optimizes query performance, and supports scalability.

- Data Granularity: Carefully declaring the grain (the level of detail that each fact row represents) is crucial, and dimension tables should support that grain. The grain should align with the intended analytical queries. A finer grain than needed increases storage and processing costs, while too coarse a grain limits the questions the model can answer.

- Data Normalization: While dimensional modeling often involves denormalization for performance, understanding the trade-offs is essential. The normalization level should be carefully balanced with query performance needs.

- Data Validation: Implementing robust data validation rules helps ensure data accuracy and consistency within the dimensional model. This ensures that data used for analysis is reliable.

- Data Governance: Establishing clear data governance policies and procedures is essential for maintaining data quality and consistency over time. These policies are necessary to manage data lineage and ensure trust in the data.

Designing a Dimensional Model

A structured approach to designing a dimensional model is crucial for success. The following table outlines the steps involved.

| Step | Description |
|---|---|
| 1. Identify Business Requirements | Define the key business questions and analytical needs that the data warehouse will support. |
| 2. Define Dimensions and Facts | Identify the key dimensions (e.g., time, product, customer) and facts (e.g., sales, revenue) relevant to the business requirements. |
| 3. Design Dimension Tables | Determine the attributes for each dimension and the level of detail required. |
| 4. Design Fact Tables | Define the measures and keys needed for fact tables, considering data granularity. |
| 5. Establish Relationships | Define the relationships between fact and dimension tables using foreign keys. |
| 6. Validate and Refine | Thoroughly validate the model against business requirements and refine based on feedback. |
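Parts of steps 4 to 6 can be automated. The following is a minimal, hypothetical sketch in plain Python: it declares the grain of a sales fact table, then checks that every foreign key resolves to a known dimension key (step 5) and that no two fact rows share the same grain (steps 4 and 6). The table, key names, and sample rows are illustrative assumptions; in a real warehouse these checks would more likely run as SQL.

```python
from dataclasses import dataclass

# Hypothetical fact table whose grain is one row per (date, product, store).
@dataclass(frozen=True)
class SalesFact:
    date_key: int
    product_key: int
    store_key: int
    revenue: float

GRAIN = ("date_key", "product_key", "store_key")

# Keys present in each (hypothetical) dimension table.
dimensions = {
    "date_key": {20240101, 20240102},
    "product_key": {1, 2},
    "store_key": {100},
}

def validate(facts):
    """Return human-readable problems found in the fact rows."""
    problems = []
    seen = set()
    for i, row in enumerate(facts):
        # Step 5: every foreign key must resolve to a dimension row.
        for key_name, valid_keys in dimensions.items():
            value = getattr(row, key_name)
            if value not in valid_keys:
                problems.append(f"row {i}: unknown {key_name}={value}")
        # Step 4: each combination of grain columns should appear only once.
        grain_value = tuple(getattr(row, name) for name in GRAIN)
        if grain_value in seen:
            problems.append(f"row {i}: duplicate grain {grain_value}")
        seen.add(grain_value)
    return problems

facts = [
    SalesFact(20240101, 1, 100, 50.0),
    SalesFact(20240101, 1, 100, 20.0),  # same grain as the row above
    SalesFact(20240103, 2, 100, 10.0),  # date_key missing from the date dimension
]
problems = validate(facts)
for p in problems:
    print(p)
```

A duplicate grain usually means either the declared grain is wrong or the load is double-counting, which is exactly the kind of issue step 6 is meant to surface.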

Choosing the Right Cloud Platform

Selecting the appropriate cloud platform is crucial for a successful data warehouse. Careful consideration of factors like pricing, performance, and feature availability is essential to ensure the chosen platform aligns with current and future business needs. A well-chosen platform will facilitate efficient data processing, robust analytics, and cost-effective scalability.

Key Factors for Cloud Platform Selection

Several critical factors must be assessed when selecting a cloud platform for data warehousing. These factors influence both the operational efficiency and the long-term cost-effectiveness of the solution.

- Pricing Models: Cloud providers offer diverse pricing models for data warehousing services, including pay-as-you-go (on-demand), reserved capacity, and dedicated capacity options. Understanding these models is essential to avoid unexpected costs and optimize resource utilization. The pay-as-you-go model, while flexible, can lead to fluctuating costs if not managed effectively. Reserved capacity (sold as reserved instances or reserved nodes, depending on the provider) offers discounts in exchange for a long-term commitment, making it cost-effective for consistent usage. Dedicated capacity provides the most predictable costs but might be overkill for fluctuating needs.

- Performance Characteristics: Factors like query response time, scalability, and availability are critical to consider. Performance is directly tied to the architecture of the chosen platform, the amount of data being processed, and the complexity of the queries being run. Different cloud providers may have varying performance characteristics depending on the specific data warehouse service offered. Consider the expected volume and velocity of data ingestion and the expected query complexity to ensure the platform can handle the load.

- Features and Capabilities: Each cloud platform provides a unique set of features for data warehousing. Factors such as built-in security, integration with other cloud services, and support for various data formats influence the choice. Consider whether the platform supports the specific data formats your business uses and if it integrates well with existing tools and processes. A platform with comprehensive security features and seamless integration with other cloud services is highly desirable.

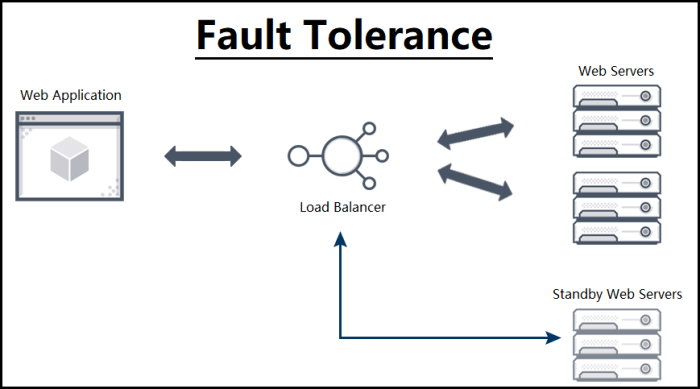

- Scalability and Availability: The platform should accommodate future growth and ensure data availability. Cloud platforms offer various scaling options to handle increasing data volumes and user demands. A well-designed data warehouse architecture should be able to handle potential spikes in data ingestion or query volume without compromising performance. High availability ensures continuous data access and minimizes downtime, which is crucial for business continuity.

Pricing Models Comparison

Cloud providers offer diverse pricing models, each with its own advantages and disadvantages. Comparing these models allows for a tailored choice that aligns with expected usage patterns.

- Amazon Web Services (AWS): For Amazon Redshift, AWS offers on-demand pricing for provisioned clusters, reserved nodes that are discounted in exchange for a one- or three-year commitment, and Redshift Serverless, which bills for the compute capacity consumed while workloads run. The pay-as-you-go model is often attractive for initial deployments, while reserved nodes offer discounts for consistent usage.

- Microsoft Azure: Azure Synapse Analytics bills compute and storage separately. Dedicated SQL pools are priced by the number of provisioned Data Warehouse Units (DWUs), which effectively act as performance tiers, while the serverless SQL pool charges for the amount of data each query processes. Users can therefore select the model that best suits their workload and budget.

- Google Cloud Platform (GCP): BigQuery offers on-demand pricing, which charges for the bytes each query processes and can be cost-effective for projects with variable workloads. For heavier or steadier workloads, capacity-based pricing reserves dedicated query processing capacity (slots) for higher and more predictable performance and costs.
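Whether a reservation pays off depends mainly on utilization. The arithmetic below is a sketch with made-up hourly rates, not any provider's actual prices: on-demand compute is billed only for the hours used, while a reservation is billed for every hour of the term at a discounted rate, so the break-even utilization is simply the ratio of the two rates.

```python
# Hypothetical prices for illustration only; check your provider's current pricing.
ON_DEMAND_PER_HOUR = 1.00   # billed only for hours the compute actually runs
RESERVED_PER_HOUR = 0.60    # effective hourly rate, billed for every hour of the term
HOURS_PER_MONTH = 730

def monthly_cost_on_demand(utilization: float) -> float:
    """Cost when paying only for the fraction of hours actually used."""
    return ON_DEMAND_PER_HOUR * HOURS_PER_MONTH * utilization

def monthly_cost_reserved() -> float:
    """Cost of a reservation, paid whether or not the capacity is used."""
    return RESERVED_PER_HOUR * HOURS_PER_MONTH

# The reservation is cheaper once utilization exceeds the ratio of the rates.
break_even = RESERVED_PER_HOUR / ON_DEMAND_PER_HOUR

for u in (0.25, 0.50, 0.90):
    od = monthly_cost_on_demand(u)
    rs = monthly_cost_reserved()
    cheaper = "on-demand" if od < rs else "reserved"
    print(f"utilization {u:.0%}: on-demand ${od:,.2f} vs reserved ${rs:,.2f} -> {cheaper}")
print(f"break-even utilization: {break_even:.0%}")
```

With these assumed rates, a workload that keeps compute busy less than about 60% of the time is cheaper on demand; real comparisons should also account for storage, which is usually billed separately.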

Performance Characteristics of Cloud Data Warehousing Solutions

Performance evaluation is crucial to ensure the chosen platform meets the expected query response times and scalability demands.

| Cloud Provider | Data Warehouse Service | Performance Characteristics |
|---|---|---|
| AWS | Amazon Redshift | Known for high performance, especially for analytical queries. Offers good scalability and availability. |
| Azure | Azure Synapse Analytics | Provides a comprehensive suite of data warehousing and analytics tools. Features robust scalability and availability. |
| GCP | BigQuery | Known for its fast query performance, particularly for large datasets. Excellent scalability and cost-effectiveness for high-volume queries. |

Data Ingestion and ETL Processes

Effective data warehousing relies heavily on efficient data ingestion and ETL (Extract, Transform, Load) processes. These processes define how data is collected, prepared, and loaded into the cloud data warehouse, directly impacting the quality and usability of the stored information. Robust ingestion methods ensure timely and accurate data integration, enabling businesses to derive valuable insights from their data.

The variety of data sources and formats, coupled with the need for consistent data quality, necessitates careful consideration of ingestion methods and ETL tools. A well-designed ingestion strategy minimizes data loss and ensures data accuracy and consistency throughout the data pipeline. Implementing appropriate ETL tools simplifies the transformation process, allowing for the creation of a unified view of data from diverse sources.

Data Loading Methods

Data loading into a cloud data warehouse can be achieved through various methods, each with its own advantages and disadvantages. Understanding these differences is crucial for selecting the most appropriate method for a given situation.

- Batch Loading: Batch loading involves collecting data from source systems in batches and loading it into the data warehouse at scheduled intervals. This method is suitable for large volumes of data that do not require real-time processing. It’s typically cost-effective for scheduled data refreshes, ensuring consistency and reducing overhead for data integration.

- Streaming Loading: Streaming loading processes data in real-time as it arrives from source systems. This method is essential for applications requiring up-to-the-second data updates, such as fraud detection or real-time analytics. The speed and responsiveness of streaming loading, however, come at a cost, often requiring specialized infrastructure and tools.
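The trade-off between the two methods is latency versus per-load overhead. The following sketch, under simplifying assumptions, contrasts them in plain Python: in-memory lists stand in for warehouse tables, and `load_batch` and `load_streaming` are hypothetical loader functions, not a real warehouse API. Batch loading groups records into fixed-size chunks and issues one load per chunk; streaming writes each record as soon as it arrives.

```python
from itertools import islice
from typing import Iterable, Iterator, List

def batches(records: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Group an incoming record stream into fixed-size batches."""
    it = iter(records)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk

def load_batch(table: list, chunk: List[dict]) -> None:
    """Hypothetical bulk loader: one call per batch amortizes per-load overhead."""
    table.extend(chunk)

def load_streaming(table: list, record: dict) -> None:
    """Hypothetical streaming loader: each record is written as soon as it arrives."""
    table.append(record)

events = [{"order_id": i, "amount": 10 * i} for i in range(1, 8)]

# Batch loading: 7 records in batches of 3 means 3 load calls.
batch_table, batch_calls = [], 0
for chunk in batches(events, size=3):
    load_batch(batch_table, chunk)
    batch_calls += 1

# Streaming loading: one write per record, so data is visible immediately.
stream_table, stream_calls = [], 0
for record in events:
    load_streaming(stream_table, record)
    stream_calls += 1

print(batch_calls, stream_calls)
```

Both paths end with the same rows; batching needs fewer, larger loads, while streaming makes each record available sooner at the cost of many more writes.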

ETL Tools and Techniques

A range of ETL tools are available for cloud data warehouses, each offering specific functionalities and capabilities. Choosing the right tool depends on the complexity of the data transformation needs and the cloud platform utilized.

- Apache Spark: A powerful open-source framework for distributed computing, Spark excels at large-scale data processing. Its ability to perform parallel processing and handle diverse data formats makes it a robust choice for complex ETL tasks in cloud environments.

- AWS Glue: Amazon Web Services (AWS) Glue is a fully managed ETL service that simplifies the process of extracting, transforming, and loading data. It provides a user-friendly interface and automates many aspects of data preparation, making it a convenient option for cloud-based data warehousing.

- Azure Data Factory: Microsoft Azure’s Data Factory is a cloud-based integration service that enables data movement and transformation across various data sources. Its comprehensive capabilities cover data ingestion, transformation, and loading, and are well-integrated with other Azure services.
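Whichever tool runs it, an ETL job follows the same extract, transform, load shape. The sketch below shows that shape in plain Python rather than in Spark, Glue, or Data Factory: it extracts rows from a hypothetical CSV export, applies a few transformations (dropping rows with missing amounts, casting types, standardizing names and currency codes), and loads the result into an in-memory SQLite table that stands in for the warehouse.

```python
import csv
import io
import sqlite3

# Extract source: a CSV export from a hypothetical source system.
SOURCE_CSV = """order_id,customer,amount,currency
1,alice,19.99,usd
2,bob,5.00,USD
3,carol,,usd
"""

def extract(text: str) -> list:
    """Parse the export into a list of dicts keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list) -> list:
    """Drop rows without an amount, cast types, and standardize text fields."""
    out = []
    for r in rows:
        if not r["amount"]:
            continue  # incomplete record; a real job might route it to an error table
        out.append((int(r["order_id"]), r["customer"].title(),
                    round(float(r["amount"]), 2), r["currency"].upper()))
    return out

def load(conn: sqlite3.Connection, rows: list) -> None:
    """Create the target table if needed and insert the transformed rows."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE_CSV)))
result = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(result)
```

Keeping the three stages as separate functions mirrors how the managed tools structure jobs and makes each stage testable on its own.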

Comparison of Data Ingestion Methods

The choice between batch and streaming loading hinges on the specific needs of the application. Batch loading is suitable for regular data updates, while streaming loading is crucial for real-time insights.

| Method | Description | Use Cases | Advantages | Disadvantages |
|---|---|---|---|---|
| Batch Loading | Loads data in batches at scheduled intervals. | Regular data updates, data archives. | Cost-effective for scheduled tasks, easier to manage large volumes. | Lacks real-time capability, potential for delayed insights. |
| Streaming Loading | Loads data in real-time as it arrives. | Real-time analytics, fraud detection. | Provides immediate insights, supports high-velocity data streams. | Requires specialized infrastructure, can be complex to implement. |

ETL Tool Compatibility with Cloud Platforms

The compatibility of ETL tools with various cloud platforms is a key consideration during implementation. The table below provides a glimpse of the support offered by different tools for popular cloud platforms.

| ETL Tool | AWS | Azure | Google Cloud |
|---|---|---|---|
| Apache Spark | Compatible (using EMR, EC2) | Compatible (using HDInsight) | Compatible (using Dataproc) |
| AWS Glue | Excellent integration (native service) | Limited integration (via connectors) | Limited integration (via connectors) |
| Azure Data Factory | Limited integration (via connectors, e.g., Amazon S3, Amazon Redshift) | Excellent integration (native service) | Limited integration (via connectors, e.g., Google BigQuery) |

Security and Compliance Considerations

Ensuring the security and compliance of your cloud data warehouse is paramount. A robust security posture protects sensitive data, maintains regulatory compliance, and safeguards your organization’s reputation. Ignoring these aspects can lead to significant financial penalties, legal repercussions, and reputational damage. This section details critical security measures and compliance requirements.

Data Encryption

Data encryption is a fundamental security measure in cloud data warehouses. It converts data into an unreadable format (ciphertext) that can be transformed back into its original format (plaintext) only with the correct key. This protects sensitive data from unauthorized access even if the storage medium or network is compromised. Implementing robust encryption strategies for both data at rest and data in transit is crucial, as is managing the encryption keys themselves securely.

Access Control Mechanisms

Implementing granular access control is essential for managing who can access and modify data within the cloud data warehouse. This involves defining roles and permissions for different users and groups. These mechanisms should be tightly integrated with the overall security architecture, limiting access to only authorized personnel. Role-based access control (RBAC) is a widely used and effective method for achieving this.
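The core RBAC idea is that permissions attach to roles and users receive roles, never permissions directly. The sketch below models that in plain Python with hypothetical roles, tables, and users; real warehouses express the same structure with `GRANT` statements or cloud IAM policies rather than dictionaries.

```python
# Hypothetical role definitions: each role grants (table, action) pairs.
ROLE_PERMISSIONS = {
    "analyst":  {("sales", "SELECT")},
    "engineer": {("sales", "SELECT"), ("sales", "INSERT"), ("staging", "INSERT")},
    "admin":    {("sales", "SELECT"), ("sales", "INSERT"), ("sales", "DELETE"),
                 ("staging", "INSERT")},
}

# Hypothetical user-to-role assignments.
USER_ROLES = {
    "dana": {"analyst"},
    "eli": {"engineer"},
}

def is_allowed(user: str, table: str, action: str) -> bool:
    """Allow an action only if at least one of the user's roles grants it."""
    roles = USER_ROLES.get(user, set())  # unknown users get no roles: deny by default
    return any((table, action) in ROLE_PERMISSIONS.get(r, set()) for r in roles)

print(is_allowed("dana", "sales", "SELECT"))     # True
print(is_allowed("dana", "sales", "INSERT"))     # False
print(is_allowed("mallory", "sales", "SELECT"))  # False
```

Denying by default and granting narrowly scoped roles is what makes the principle of least privilege enforceable in practice.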

Compliance Requirements

Compliance with industry regulations and standards is another critical aspect. Regulations such as HIPAA and GDPR, and industry standards such as PCI DSS, dictate how data must be stored and processed. Organizations must ensure their cloud data warehouse architecture adheres to these requirements to avoid penalties and maintain data integrity. This includes implementing audit trails, data masking, and retention policies that comply with specific regulatory frameworks.
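Data masking can take several forms. The sketch below shows two common ones using only Python's standard library: keyed pseudonymization with HMAC-SHA256, which replaces an identifier with a consistent token so tables can still be joined on it, and partial masking that reveals only the last four digits of a card number. The key and sample values are hypothetical, and whether either technique satisfies a particular regulation depends on that regulation's requirements.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a key-management service,
# never from source code.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value: str) -> str:
    """Keyed hash (HMAC-SHA256): equal inputs map to equal tokens, so joins still work.
    The token is not reversible, and without the key nobody can recompute tokens
    from guessed values."""
    return hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_card_number(pan: str) -> str:
    """Replace all but the last four digits with asterisks."""
    digits = [c for c in pan if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])

row = {"email": "jane@example.com", "card": "4111 1111 1111 1111"}
masked = {"email": pseudonymize(row["email"]), "card": mask_card_number(row["card"])}
print(masked["card"])  # ************1111
```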

Security Best Practices

Implementing security best practices ensures a layered approach to protection. These practices span various aspects of the data warehouse environment, encompassing data encryption, access control, monitoring, and incident response.

| Security Best Practice | Description |
|---|---|
| Data Encryption at Rest | Encrypt data stored in the cloud data warehouse to protect against unauthorized access if the storage medium is compromised. |
| Data Encryption in Transit | Encrypt data transmitted between the warehouse and clients or applications to prevent eavesdropping during transmission. |
| Principle of Least Privilege | Grant users only the necessary permissions to access data and resources, minimizing the potential impact of a security breach. |
| Regular Security Audits | Periodically review and assess the security posture of the cloud data warehouse to identify vulnerabilities and weaknesses. |
| Incident Response Plan | Develop a plan for handling security incidents, including steps for detection, containment, eradication, recovery, and post-incident analysis. |
| Multi-Factor Authentication | Enforce multi-factor authentication (MFA) for all users to add an extra layer of security and prevent unauthorized access. |
| Regular Vulnerability Scanning | Conduct regular vulnerability scans to identify potential security holes and address them promptly. |
| Security Information and Event Management (SIEM) | Implement a SIEM system to monitor security events, analyze logs, and detect potential threats. |

Scalability and Performance Optimization

Designing a cloud data warehouse for scalability and performance optimization is crucial for ensuring its effectiveness and longevity. A well-designed architecture allows for accommodating growing data volumes, increasing user demands, and handling complex analytical queries efficiently. This section details strategies for achieving these goals.

Cloud data warehouses offer inherent scalability, allowing you to adapt to changing needs without significant infrastructure overhauls. Leveraging this flexibility, combined with intelligent query optimization techniques and resource management, enables organizations to derive the maximum value from their cloud data investments.

Designing for Scalability

Efficient scalability is achieved through careful selection of cloud services and deployment strategies. Key aspects of this design include serverless compute, automated scaling policies, and appropriate storage options. Choosing the right storage tier (e.g., cold storage for infrequently accessed data) and partitioning data (for example, by date) so that queries scan only the relevant portions are also crucial.
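Partitioning and tiering both rely on knowing which slice of data a partition covers. The following sketch, under simplifying assumptions, partitions hypothetical daily order rows by month, skips (prunes) partitions that cannot overlap a query's date range, and assigns each partition to a storage tier based on its age. Managed warehouses perform pruning automatically from the table's declared partitioning; the 60-day hot/cold rule here is an illustrative assumption.

```python
from collections import defaultdict
from datetime import date, timedelta

# Hypothetical daily order rows for 90 days starting 2024-01-01.
rows = [{"order_date": date(2024, 1, 1) + timedelta(days=i), "amount": i}
        for i in range(90)]

# Partition by (year, month).
partitions = defaultdict(list)
for r in rows:
    partitions[(r["order_date"].year, r["order_date"].month)].append(r)

def month_bounds(year: int, month: int):
    """First and last calendar day covered by a monthly partition."""
    first = date(year, month, 1)
    next_first = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
    return first, next_first - timedelta(days=1)

def query_total(start: date, end: date):
    """Sum amounts in [start, end], skipping partitions that cannot overlap."""
    total, scanned = 0, 0
    for (year, month), part in partitions.items():
        first, last = month_bounds(year, month)
        if last < start or first > end:
            continue  # pruned: no row in this partition can match
        scanned += 1
        total += sum(r["amount"] for r in part if start <= r["order_date"] <= end)
    return total, scanned

def storage_tier(year: int, month: int, today: date, hot_days: int = 60) -> str:
    """Hypothetical rule: partitions whose newest day is older than hot_days go cold."""
    _, last = month_bounds(year, month)
    return "hot" if (today - last).days <= hot_days else "cold"

total, scanned = query_total(date(2024, 2, 1), date(2024, 2, 29))
print(total, scanned, "of", len(partitions), "partitions scanned")
tiers = {key: storage_tier(*key, today=date(2024, 4, 15)) for key in partitions}
print(tiers)
```

The February query touches one of three partitions; on a warehouse that bills by data scanned, pruning of this kind reduces both latency and cost.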

Optimizing Query Performance

Optimizing query performance is essential for ensuring responsiveness and reducing query latency. Techniques like query rewriting, materialized views, and physical design choices play a critical role in this. Many cloud warehouses rely on sort keys, clustering, or partitioning rather than traditional indexes (for example, sort keys in Amazon Redshift and clustered tables in BigQuery). Understanding data distribution and using the platform’s query optimization tools are also vital. Query result caching and pre-aggregating data for frequent queries can significantly reduce response times.
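Pre-aggregation and result caching attack latency at different points: the first shrinks the data a query reads, the second skips repeated work entirely. The sketch below illustrates both in plain Python with hypothetical fact rows; the summary dictionary plays the role of a materialized view, and `functools.lru_cache` plays the role of a query result cache.

```python
from collections import defaultdict
from functools import lru_cache

# Hypothetical fact rows: (region, month, revenue).
FACTS = [("east", "2024-01", 100.0), ("east", "2024-01", 50.0),
         ("west", "2024-01", 70.0), ("east", "2024-02", 30.0)]

# Pre-aggregation: compute the summary once, similar to refreshing a
# materialized view, so frequent queries read summary rows, not every fact row.
summary = defaultdict(float)
for region, month, revenue in FACTS:
    summary[(region, month)] += revenue

lookups = 0  # counts how often the summary is actually consulted

@lru_cache(maxsize=128)
def revenue_for(region: str, month: str) -> float:
    """Result cache: identical repeated queries are answered without a lookup."""
    global lookups
    lookups += 1
    return summary.get((region, month), 0.0)

first = revenue_for("east", "2024-01")
second = revenue_for("east", "2024-01")  # served from the cache
print(first, second, lookups)
```

Both techniques trade freshness for speed: after new data is loaded, the summary must be rebuilt and the cache cleared (here, `revenue_for.cache_clear()`), which is the same invalidation problem materialized views and warehouse result caches handle internally.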

Monitoring and Managing Cloud Data Warehouse Resources

Proactive monitoring and management are essential for ensuring optimal performance and cost-effectiveness. Cloud-native monitoring tools and custom dashboards allow for real-time tracking of resource utilization, query execution times, and storage consumption. Implementing alerts for performance bottlenecks and resource thresholds is vital for maintaining performance and preventing costly issues. Regular performance analysis, coupled with capacity planning, allows for proactive adjustments to resource allocation.

Comparing Scaling Strategies

| Scaling Strategy | Description | Pros | Cons |
|---|---|---|---|
| Vertical Scaling (Scaling Up) | Increasing the resources of a single compute instance (e.g., more CPU, memory). | Quick to implement, potentially less complex setup. | Limited scalability, may not be cost-effective for large datasets or high user traffic. Potential bottleneck on a single instance. |
| Horizontal Scaling (Scaling Out) | Adding more compute instances to a cluster. | High scalability, handles larger datasets and higher traffic volumes. Distributes workload across multiple instances, increasing availability. | More complex to manage and configure, requires careful consideration of data distribution strategies. |
| Auto-Scaling | Dynamically adjusting the number of compute instances based on demand. | Adaptive to fluctuating workloads, automatically handles resource allocation. Cost-effective by only using resources when needed. | Requires careful configuration to avoid over-provisioning or under-provisioning resources, potential complexity. |

Auto-scaling is often a good default for cloud data warehouses because it adapts to fluctuating workloads and avoids paying for idle capacity, provided that minimum and maximum limits are configured to prevent runaway costs or under-provisioning.
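Many auto-scaling policies follow a target-tracking rule: estimate the capacity that would bring utilization back to a target, then clamp it to configured limits. The function below is a minimal sketch of such a rule with hypothetical parameters (a 60% CPU target and 2 to 16 nodes); managed warehouses expose equivalent behavior as configuration rather than code.

```python
def desired_nodes(current_nodes: int, cpu_percent: int, target_percent: int = 60,
                  min_nodes: int = 2, max_nodes: int = 16) -> int:
    """Target-tracking rule (hypothetical parameters): pick the node count that
    would bring average CPU back to target_percent, clamped to [min_nodes, max_nodes]."""
    # Total work is roughly current_nodes * cpu_percent; divide by the target
    # utilization per node and round up (integer ceiling division avoids float error).
    needed = -(-current_nodes * cpu_percent // target_percent)
    return max(min_nodes, min(max_nodes, needed))

print(desired_nodes(4, 90))   # scale out: 4 nodes at 90% -> 6
print(desired_nodes(4, 20))   # scale in, but never below min_nodes -> 2
print(desired_nodes(12, 95))  # capped at max_nodes -> 16
```

The minimum keeps a baseline for latency-sensitive users, and the maximum is the cost guardrail; production policies usually also add a cooldown period so the cluster does not oscillate.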

Monitoring and Maintenance

Effective monitoring and maintenance are crucial for ensuring the optimal performance, security, and reliability of a cloud data warehouse. Proactive monitoring allows for timely identification and resolution of potential issues, preventing service disruptions and data loss. Regular maintenance activities, such as data quality checks and security updates, help preserve the integrity and trustworthiness of the stored data.

Monitoring Tools and Metrics

Cloud data warehouses offer a range of monitoring tools and metrics to track performance, resource utilization, and data quality. These tools provide insights into various aspects of the system, enabling administrators to identify potential bottlenecks and optimize performance. Key metrics include query execution time, data ingestion rate, storage utilization, and resource consumption.

- Query Performance Monitoring: Monitoring query execution time is essential for identifying slow queries and optimizing database performance. Cloud providers’ monitoring tools (e.g., Amazon CloudWatch for AWS, Azure Monitor for Azure) provide detailed metrics on query duration, resource consumption during query execution, and query frequency. By analyzing these metrics, administrators can identify potential bottlenecks, such as inefficient queries or insufficient resources. Example: If a specific query consistently takes longer than expected, the administrator can investigate the query’s complexity and adjust the data model or query to improve efficiency.

- Resource Utilization Monitoring: Tracking resource utilization, such as CPU, memory, and storage, is vital for ensuring sufficient capacity and preventing performance degradation. Cloud providers’ monitoring tools provide dashboards and reports on resource utilization, enabling administrators to proactively scale resources based on demand and identify potential capacity issues. Example: If the storage utilization reaches 95%, administrators can trigger an automatic scaling action to add more storage capacity to prevent performance degradation.

- Data Ingestion Rate Monitoring: Monitoring data ingestion rate helps ensure data loads are processed efficiently and without delays. Metrics like ingestion speed and throughput are essential to identify and resolve issues in the data pipeline. Example: A sudden drop in ingestion rate could indicate a problem with the source system or the data pipeline itself, requiring immediate attention.
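The three checks above boil down to comparing metrics against thresholds. The sketch below evaluates hypothetical metric samples in plain Python: a 95th-percentile query latency limit, the 95% storage threshold mentioned in the resource utilization example, and an ingestion rate that falls below half of its recent average. In practice these rules would be configured as alarms in a tool such as Amazon CloudWatch or Azure Monitor; all thresholds here are illustrative assumptions.

```python
from statistics import mean, quantiles

def check_alerts(query_seconds, storage_used, ingest_rates,
                 p95_limit=10.0, storage_limit=0.95, drop_ratio=0.5):
    """Return alert messages for three hypothetical threshold rules."""
    alerts = []
    # 95th-percentile latency: the last of 19 cut points when splitting into 20 groups.
    p95 = quantiles(query_seconds, n=20, method="inclusive")[-1]
    if p95 > p95_limit:
        alerts.append(f"slow queries: p95 {p95:.1f}s exceeds {p95_limit:.0f}s")
    if storage_used >= storage_limit:
        alerts.append(f"storage at {storage_used:.0%} (limit {storage_limit:.0%})")
    # Compare the latest ingestion rate with the average of the earlier samples.
    baseline = mean(ingest_rates[:-1])
    if ingest_rates[-1] < drop_ratio * baseline:
        alerts.append(f"ingestion dropped to {ingest_rates[-1]} rows/min "
                      f"(baseline {baseline:.0f})")
    return alerts

# Hypothetical samples pulled from a monitoring tool.
alerts = check_alerts(
    query_seconds=[1.2, 0.8, 1.1, 0.9, 14.5, 1.0, 1.3, 0.7, 1.1, 12.9],
    storage_used=0.96,
    ingest_rates=[5000, 5100, 4900, 5050, 1200],
)
for a in alerts:
    print(a)
```

Alerting on a percentile rather than the average keeps a few very slow queries from being hidden by many fast ones.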

Maintaining Data Quality and Integrity

Data quality and integrity are paramount in a cloud data warehouse. Maintaining these aspects involves implementing procedures for data validation, cleansing, and transformation. Regular checks and audits help ensure data accuracy and consistency.

- Data Validation: Regular data validation ensures data accuracy and consistency by checking for missing values, erroneous data types, or inconsistencies with defined business rules. This involves using scripts and automated checks to identify and correct discrepancies. Example: A script might check if a date field contains a valid date format or if a numerical field falls within a predefined range.

- Data Cleansing: Data cleansing involves identifying and correcting errors or inconsistencies in the data. This includes handling missing values, removing duplicates, and standardizing data formats. Example: A process might be implemented to replace null values in a column with a default value or to remove duplicate entries based on a unique identifier.

- Data Transformation: Data transformation ensures that data is in the correct format and structure for analysis. This includes converting data types, aggregating data, and transforming data into a suitable format for use in downstream applications. Example: A transformation process might convert a date format from YYYY-MM-DD to MM/DD/YYYY.
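The three examples in the list above can be combined into one small pipeline. The sketch below is a plain-Python illustration with hypothetical rows and rules: validation rejects rows whose date is not a valid `YYYY-MM-DD` value or whose quantity falls outside an allowed range, cleansing removes duplicates by `id` and replaces missing quantities with a default, and transformation converts dates to `MM/DD/YYYY`.

```python
from datetime import datetime

raw = [
    {"id": 1, "order_date": "2024-03-05", "quantity": 3},
    {"id": 2, "order_date": "2024-13-01", "quantity": 1},     # invalid month
    {"id": 1, "order_date": "2024-03-05", "quantity": 3},     # duplicate of id 1
    {"id": 3, "order_date": "2024-03-07", "quantity": None},  # missing quantity
    {"id": 4, "order_date": "2024-03-08", "quantity": 5000},  # outside allowed range
]

def is_valid_date(text):
    try:
        datetime.strptime(text, "%Y-%m-%d")
        return True
    except ValueError:
        return False

def validate(row, max_qty=1000):
    """Validation: date must parse as YYYY-MM-DD; quantity, if present, must be in range."""
    errors = []
    if not is_valid_date(row["order_date"]):
        errors.append("bad date")
    q = row["quantity"]
    if q is not None and not (0 <= q <= max_qty):
        errors.append("quantity out of range")
    return errors

def cleanse(rows, default_qty=0):
    """Cleansing: drop duplicates by id and replace missing quantities with a default."""
    seen, out = set(), []
    for r in rows:
        if r["id"] in seen:
            continue
        seen.add(r["id"])
        qty = r["quantity"] if r["quantity"] is not None else default_qty
        out.append({**r, "quantity": qty})
    return out

def transform(row):
    """Transformation: convert YYYY-MM-DD to MM/DD/YYYY for a downstream consumer."""
    d = datetime.strptime(row["order_date"], "%Y-%m-%d")
    return {**row, "order_date": d.strftime("%m/%d/%Y")}

valid_rows, rejected = [], []
for r in raw:
    errs = validate(r)
    if errs:
        rejected.append((r["id"], errs))
    else:
        valid_rows.append(r)
clean = [transform(r) for r in cleanse(valid_rows)]
print(rejected)
print(clean)
```

Keeping rejected rows with their reasons, instead of silently dropping them, gives data owners something concrete to fix at the source.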

Troubleshooting Common Issues

Troubleshooting common issues in cloud data warehouses involves systematically identifying the root cause of problems and implementing appropriate solutions. This often involves analyzing logs, monitoring metrics, and reviewing system configurations.

- Slow Query Performance: If query performance is slow, review the query itself for inefficiencies, check indexing, and consider whether sufficient resources are allocated to the warehouse.

- Data Ingestion Failures: If data ingestion fails, investigate the source system, data pipeline, and data transformation process to identify the source of the error.

- Security Breaches: If security issues arise, immediately investigate the breach, implement appropriate security measures, and ensure compliance with regulations.

Common Monitoring Tools

| Tool | Provider | Key Features |
|---|---|---|
| Amazon CloudWatch | AWS | Comprehensive monitoring, logging, and metrics for various AWS services. |
| Azure Monitor | Microsoft Azure | Real-time monitoring, diagnostics, and logging for Azure resources. |
| Google Cloud Monitoring | Google Cloud Platform | Observability and management tools for Google Cloud resources. |
| Splunk | Splunk | Centralized log management and analysis platform, enabling real-time insights. |

Data Governance and Management

Effective data governance is paramount in cloud data warehouses, ensuring data quality, security, and compliance. Robust data governance frameworks establish clear policies, procedures, and roles, enabling organizations to manage and control the data lifecycle effectively. This includes defining data ownership, access rights, and quality standards, all crucial for trustworthy insights and reliable business decisions.

Data governance in the cloud environment necessitates a comprehensive approach that goes beyond traditional on-premises models. Cloud-specific considerations, such as dynamic scaling, shared responsibility models, and varying levels of access, require adaptable governance strategies. These strategies need to be aligned with organizational objectives, regulatory requirements, and the unique characteristics of the cloud platform.

Importance of Data Governance in Cloud Data Warehouses

Data governance in cloud data warehouses is critical for maintaining data quality, ensuring regulatory compliance, and promoting data security. It defines clear roles and responsibilities for data management, ensuring that data is accurate, complete, and consistent. This directly impacts the reliability of business insights derived from the warehouse.

Data Governance Strategies for Cloud Environments

Several strategies can be implemented for effective data governance in cloud environments. These include:

- Centralized Governance: A central team manages policies, standards, and access controls for all data residing in the cloud warehouse. This provides a consistent data management framework across the organization and helps avoid data silos.

- Decentralized Governance: Individual teams or departments manage their own data, while adhering to overarching organizational policies. This approach can be more agile, but requires strong communication and coordination mechanisms to avoid conflicts and maintain data quality standards.

- Hybrid Governance: A combination of centralized and decentralized approaches. Specific data sets or areas might be subject to more stringent centralized controls, while other, less critical data can be managed more autonomously. This method balances efficiency with control.

Procedures for Managing Data Lineage and Access Controls

Data lineage, documenting the origin and transformations of data, is vital for auditing and troubleshooting. Access controls define who can access specific data and what actions they can perform.

- Data Lineage Management: Implement tools and processes to track the movement and transformation of data throughout the data pipeline. This allows for tracing the source of data inaccuracies and ensures transparency in data usage. Detailed logs and metadata are crucial.

- Access Control Procedures: Establish a robust access control model based on the principle of least privilege. Restrict access to sensitive data according to roles and responsibilities, and use granular permissions so that users and services can perform only the actions they need.
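Both procedures can be illustrated with a small in-memory model. The Python sketch below is hypothetical: `LineageGraph`, `GRANTS`, and `is_allowed` are illustrative names. A production warehouse would rely on its own catalog, lineage tooling, and SQL `GRANT`-based permissions rather than application code. Lineage is stored as edges from each dataset to its sources, so a data-quality problem can be traced upstream. Access is denied unless a role was explicitly granted that exact dataset and action, which is least privilege.

```python
from collections import defaultdict


class LineageGraph:
    """Records which source datasets each dataset was derived from."""

    def __init__(self) -> None:
        self._sources: dict[str, set[str]] = defaultdict(set)

    def record(self, target: str, sources: list[str]) -> None:
        """Note that `target` was produced by transforming `sources`."""
        self._sources[target].update(sources)

    def upstream(self, dataset: str) -> set[str]:
        """Return every dataset that `dataset` depends on, directly or transitively."""
        seen: set[str] = set()
        stack = [dataset]
        while stack:
            # .get avoids creating empty entries in the defaultdict
            for source in self._sources.get(stack.pop(), ()):
                if source not in seen:
                    seen.add(source)
                    stack.append(source)
        return seen


# Least privilege: each role holds only explicitly granted (dataset, action) pairs.
GRANTS: dict[str, set[tuple[str, str]]] = {
    "analyst": {("sales_summary", "SELECT")},
    "etl_service": {("raw_orders", "SELECT"), ("sales_summary", "INSERT")},
}


def is_allowed(role: str, dataset: str, action: str) -> bool:
    """Deny by default; allow only an exact grant for this role."""
    return (dataset, action) in GRANTS.get(role, set())


if __name__ == "__main__":
    lineage = LineageGraph()
    lineage.record("clean_orders", ["raw_orders"])
    lineage.record("sales_summary", ["clean_orders", "raw_customers"])
    print(sorted(lineage.upstream("sales_summary")))
    print(is_allowed("analyst", "raw_orders", "SELECT"))
```

Tracing `sales_summary` yields `clean_orders`, `raw_customers`, and `raw_orders`, which are the places to look when a figure in the summary is wrong. The analyst's request to read `raw_orders` is refused because no grant covers it.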

Data Governance Policies for Cloud Data Warehouses

A well-defined set of policies is essential for maintaining data quality and security. These policies should be regularly reviewed and updated to reflect evolving business needs and regulatory requirements.

| Policy Area | Policy Description | Enforcement |
|---|---|---|
| Data Quality | Define standards for data accuracy, completeness, consistency, and timeliness. | Regular data validation checks and automated quality control procedures. |
| Data Security | Establish procedures for data encryption, access controls, and disaster recovery. | Security audits and compliance checks. |
| Data Compliance | Ensure data handling adheres to relevant regulations (e.g., GDPR, HIPAA). | Regular compliance assessments and adherence to industry best practices. |
| Data Ownership | Define clear ownership responsibilities for different data sets. | Data owner identification and accountability. |
| Data Retention | Establish guidelines for data retention periods. | Automated data archiving and deletion procedures. |
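The Data Quality and Data Retention rows call for automated checks and automated archiving. A minimal Python sketch of both follows, assuming a hypothetical orders table represented as dictionaries. The required columns, the validation rules, and the seven-year `RETENTION_DAYS` value are illustrative assumptions. They are not recommendations for any particular regulation.

```python
from datetime import date, timedelta

# Hypothetical rules for an orders table.
REQUIRED_COLUMNS = ("order_id", "customer_id", "amount", "order_date")
RETENTION_DAYS = 7 * 365  # assumed retention period (about seven years)


def quality_issues(row: dict, today: date) -> list[str]:
    """Return the rule violations for one row; an empty list means it passed."""
    issues = []
    for column in REQUIRED_COLUMNS:
        if row.get(column) is None:
            issues.append(f"missing {column}")  # completeness
    amount = row.get("amount")
    if amount is not None and amount < 0:
        issues.append("negative amount")  # accuracy
    order_date = row.get("order_date")
    if order_date is not None and order_date > today:
        issues.append("order_date in the future")  # consistency
    return issues


def past_retention(row: dict, today: date) -> bool:
    """True if the row is older than the retention period and due for archiving or deletion."""
    return row["order_date"] < today - timedelta(days=RETENTION_DAYS)


if __name__ == "__main__":
    today = date(2024, 1, 1)
    rows = [
        {"order_id": 1, "customer_id": 7, "amount": 19.99, "order_date": date(2023, 12, 30)},
        {"order_id": 2, "customer_id": None, "amount": -5.0, "order_date": date(2024, 2, 1)},
        {"order_id": 3, "customer_id": 9, "amount": 12.50, "order_date": date(2015, 6, 1)},
    ]
    for row in rows:
        print(row["order_id"], quality_issues(row, today), past_retention(row, today))
```

In a warehouse these rules would more likely run as SQL or as a step in the load pipeline. The sketch shows only the decision logic that such jobs would enforce.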

Cost Optimization Strategies

Effective cloud data warehouse management requires proactive cost optimization. Uncontrolled costs can quickly escalate, negating the benefits of cloud scalability and flexibility. A well-defined cost optimization strategy ensures efficient resource utilization and aligns spending with business needs. Cloud data warehouses offer immense scalability, but they also demand careful resource management to avoid unnecessary expenses. With efficient cost optimization strategies in place, businesses can keep their cloud data warehouse cost-effective while still realizing its full potential.

Cost-Effective Strategies for Managing Cloud Data Warehouse Expenses

Implementing cost-effective strategies is crucial for sustainable cloud data warehouse management. These strategies focus on minimizing expenses while maintaining the necessary performance and functionality. Careful planning and ongoing monitoring are key components of successful cost optimization.

- Right-Sizing Resources: Proactively adjusting compute resources, storage capacity, and data transfer limits to match actual usage patterns is vital. This avoids over-provisioning, which leads to unnecessary costs. Dynamic scaling based on real-time demands is a critical element of this strategy. For example, scaling down during off-peak hours or using spot instances when possible reduces costs without impacting critical operations.

- Data Compression and Optimization: Implementing data compression techniques reduces storage costs. Optimizing data models and removing redundant data minimize storage space and enhance query performance, which, in turn, reduces costs associated with storage and retrieval.

- Leveraging Reserved Instances: Reserved instances offer substantial cost savings when usage patterns are predictable. These instances provide discounts for committed usage, reducing overall operational expenses. This approach is particularly valuable for organizations with consistent data warehouse workload demands.

- Utilizing Spot Instances: Spot instances provide lower-cost compute capacity, which is suitable for less critical tasks or workloads that can tolerate interruptions. Careful planning and monitoring are necessary to ensure business continuity and avoid unexpected disruptions.

- Optimizing Data Ingestion and ETL Processes: Efficient data ingestion and ETL (Extract, Transform, Load) processes minimize the time required for loading and processing data, resulting in lower compute costs. This includes employing efficient data pipelines and optimizing query patterns.
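Right-sizing and the reserved-instance decision both reduce to simple arithmetic on observed usage. The Python sketch below assumes a hypothetical size catalog (`SIZES`, in abstract capacity units) and hypothetical hourly rates. It does not reflect any provider's pricing or API. `right_size` picks the smallest size that covers the 95th-percentile demand plus headroom. `reserved_break_even` returns the utilization above which a reservation, billed for every hour of its term, becomes cheaper than on-demand usage. That threshold is simply the ratio of the two hourly rates.

```python
import math

# Hypothetical warehouse sizes as (name, capacity units), smallest first.
SIZES = [("small", 8), ("medium", 16), ("large", 32), ("xlarge", 64)]


def p95(samples: list[float]) -> float:
    """Nearest-rank 95th percentile of observed demand samples."""
    ordered = sorted(samples)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def right_size(samples: list[float], headroom: float = 0.2) -> str:
    """Smallest size whose capacity covers p95 demand plus a safety margin."""
    needed = p95(samples) * (1 + headroom)
    for name, capacity in SIZES:
        if capacity >= needed:
            return name
    return SIZES[-1][0]  # demand exceeds the largest size; consider scaling out


def reserved_break_even(on_demand_rate: float, reserved_rate: float) -> float:
    """Utilization (0 to 1) above which a reservation beats on-demand pricing.

    Assumes the reserved rate is charged for every hour of the term, used or not,
    while on-demand is charged only for hours actually used.
    """
    return reserved_rate / on_demand_rate


if __name__ == "__main__":
    hourly_demand = [4.0, 5.5, 6.0, 7.5, 9.0, 10.0, 6.5, 5.0, 4.5, 8.0]
    print(right_size(hourly_demand))        # prints "medium"
    print(reserved_break_even(2.00, 1.20))  # prints 0.6 (hypothetical rates)
```

With the hypothetical rates of 2.00 and 1.20 per hour, the break-even utilization is 0.6. A reservation therefore pays off only if the warehouse runs for more than about 60% of the hours in the term, which matches the advice to reserve capacity only for predictable workloads.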

Monitoring and Controlling Cloud Data Warehouse Costs

Effective cost monitoring and control are crucial to ensuring long-term cost-effectiveness. Implementing robust monitoring mechanisms enables proactive identification and resolution of cost overruns.

- Implementing Cost Tracking and Reporting Tools: Use your cloud provider's cost management tools or third-party solutions for detailed cost analysis. These tools show resource consumption and help identify areas of high expenditure. Tracking resource utilization in near real time helps pinpoint potential cost inefficiencies.

- Setting Cost Budgets and Alerts: Establish clear cost budgets for the data warehouse, and set alerts that fire when spending exceeds predefined thresholds. These alerts enable swift intervention before a potential overrun becomes a significant expense.

- Regular Cost Reviews: Conduct periodic reviews of data warehouse costs to identify patterns and trends. These reviews should assess the effectiveness of implemented cost optimization strategies and adapt them based on changing requirements. Adjusting strategies based on performance analysis is key to long-term cost control.
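Budgets, alerts, and periodic reviews can be combined into a single check that runs on daily cost data. This Python sketch assumes daily costs are already available as a list of numbers, for example from a provider's billing export. The alert thresholds and the naive linear month-end forecast are illustrative assumptions. In practice, the cloud provider's own budget and alerting features are usually the first choice.

```python
from dataclasses import dataclass, field


@dataclass
class BudgetStatus:
    spent: float
    forecast: float
    alerts: list[str] = field(default_factory=list)


def check_budget(daily_costs: list[float], monthly_budget: float,
                 days_in_month: int,
                 thresholds: tuple[float, ...] = (0.5, 0.8, 1.0)) -> BudgetStatus:
    """Compare month-to-date spend and a linear forecast against a monthly budget."""
    spent = sum(daily_costs)
    days_elapsed = len(daily_costs)
    # Naive forecast: assume the average daily spend so far continues all month.
    forecast = spent / days_elapsed * days_in_month if days_elapsed else 0.0
    status = BudgetStatus(spent, forecast)
    for t in thresholds:
        if spent >= t * monthly_budget:
            status.alerts.append(f"spend reached {t:.0%} of budget")
    if forecast > monthly_budget:
        status.alerts.append(f"forecast {forecast:.2f} exceeds budget {monthly_budget:.2f}")
    return status


if __name__ == "__main__":
    costs = [120.0] * 10  # ten days of hypothetical spend
    result = check_budget(costs, monthly_budget=3000.0, days_in_month=30)
    print(result.alerts)
```

Here month-to-date spend (1,200) is still below the 50% threshold. The forecast of 3,600, however, already exceeds the 3,000 budget. A forecast-based alert like this gives the team time to intervene before the overrun actually happens.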

Examples of Cost Optimization Techniques

Several techniques can be applied to reduce costs without sacrificing performance. These methods involve optimizing resource allocation, leveraging cloud services, and fine-tuning data processes.

- Implementing automated scaling policies: Adjusting compute capacity dynamically based on demand ensures resources are utilized efficiently. This approach prevents over-provisioning and unnecessary costs. Real-time monitoring and intelligent scaling policies are vital for efficient cost management.

- Utilizing cloud storage optimization services: Cloud providers offer features that reduce storage costs, such as compression and automatic tiering of infrequently accessed data to lower-cost storage classes. Using these features is a cost-effective way to manage storage needs.
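An automated scaling policy of the target-tracking kind can be sketched as a function that sizes the cluster so that projected utilization lands near a target. This is a minimal Python sketch and not any provider's scaling API. It assumes load spreads evenly across nodes and expresses utilization in whole percent to keep the arithmetic exact. The defaults (a 60% target and a range of 1 to 16 nodes) are hypothetical.

```python
def desired_nodes(current_nodes: int, utilization_pct: int,
                  target_pct: int = 60, min_nodes: int = 1,
                  max_nodes: int = 16) -> int:
    """Target tracking: choose a node count that brings utilization near target_pct.

    Assumes load is spread evenly, so total demand is current_nodes * utilization_pct.
    """
    demand = current_nodes * utilization_pct
    wanted = -(-demand // target_pct)  # ceiling division on integers
    return max(min_nodes, min(max_nodes, wanted))


if __name__ == "__main__":
    print(desired_nodes(4, 90))  # peak load: 360 / 60 -> 6 nodes (scale out)
    print(desired_nodes(4, 15))  # off-peak: 60 / 60 -> 1 node (scale in)
```

The clamps stop a burst from scaling past a budgeted maximum. Real policies usually also add a cooldown period so that capacity does not oscillate between sizes.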

Cost Optimization Strategies Table

| Strategy | Description | Benefits |
|---|---|---|
| Right-Sizing Resources | Adjusting compute resources, storage, and transfer limits to match usage | Reduces unnecessary costs, improves resource utilization |
| Data Compression | Employing compression techniques to reduce storage space | Decreases storage costs, improves query performance |
| Reserved Instances | Committing to a specific instance type for a fixed period | Significant cost savings for predictable workloads |
| Spot Instances | Utilizing lower-cost instances for less critical tasks | Reduces compute costs for flexible workloads |
| Optimized ETL | Efficient data ingestion and processing | Reduces compute time, lowers overall costs |

Closing Notes

In conclusion, designing a cloud data warehouse architecture is a multifaceted process requiring a thorough understanding of various technologies, strategies, and considerations. This guide has provided a roadmap to navigate these complexities, from choosing the optimal cloud platform to optimizing performance and ensuring security. By implementing the best practices discussed, organizations can leverage the power of cloud data warehousing to drive insightful decision-making and achieve business objectives.

FAQ Compilation

What are the typical pricing models for cloud data warehousing services?

Pricing models vary by cloud provider and service. Some providers offer pay-as-you-go models, while others provide subscription-based options. Factors like storage, compute, and data transfer costs influence the total cost. It’s essential to carefully evaluate different pricing structures to find the most cost-effective solution for your needs.
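Under a pay-as-you-go model, the monthly bill is roughly the sum of its storage, compute, and data-transfer components. The Python sketch below makes that arithmetic explicit. The rates passed in are hypothetical placeholders rather than any provider's published prices, and real bills include further line items.

```python
def monthly_cost(storage_tb: float, compute_hours: float, egress_gb: float,
                 storage_rate_per_tb: float, compute_rate_per_hour: float,
                 egress_rate_per_gb: float) -> dict[str, float]:
    """Break a pay-as-you-go estimate into its main components (hypothetical rates)."""
    breakdown = {
        "storage": storage_tb * storage_rate_per_tb,
        "compute": compute_hours * compute_rate_per_hour,
        "data_transfer": egress_gb * egress_rate_per_gb,
    }
    # sum() runs before the "total" key is inserted, so it adds only the components
    breakdown["total"] = sum(breakdown.values())
    return breakdown


if __name__ == "__main__":
    estimate = monthly_cost(storage_tb=10, compute_hours=200, egress_gb=500,
                            storage_rate_per_tb=23.0, compute_rate_per_hour=3.0,
                            egress_rate_per_gb=0.09)
    for item, cost in estimate.items():
        print(f"{item:>13}: {cost:10.2f}")
```

With these placeholder inputs the estimate comes to 875.00, and compute accounts for most of it. Rerunning the estimate with your own usage figures is a quick way to see which component a subscription or committed-use offer would need to discount.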

How do I choose the right cloud platform for my data warehouse?

Consider factors like your existing infrastructure, data volume, performance requirements, and budget when selecting a cloud platform. Assess the strengths and weaknesses of different providers’ services and choose the one that best aligns with your business goals and technical expertise.
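One common way to structure this comparison is a weighted scoring matrix. You rate each candidate platform on the factors above, weight the factors by importance, and rank the candidates by their weighted sums. The Python sketch below is illustrative only. The weights, the 1 to 5 ratings, and the `platform_a`/`platform_b` names are hypothetical and should come from your own evaluation.

```python
# Hypothetical weights for the selection factors; they should sum to 1.
WEIGHTS = {"integration": 0.30, "performance": 0.30, "cost": 0.25, "team_expertise": 0.15}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of 1-5 ratings; every weighted factor must be rated."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"unrated factors: {sorted(missing)}")
    return sum(weight * scores[factor] for factor, weight in weights.items())


def rank_platforms(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (platform, score) pairs, best first."""
    scored = [(name, weighted_score(scores)) for name, scores in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    candidates = {
        "platform_a": {"integration": 5, "performance": 3, "cost": 4, "team_expertise": 2},
        "platform_b": {"integration": 3, "performance": 5, "cost": 3, "team_expertise": 5},
    }
    for name, score in rank_platforms(candidates):
        print(f"{name}: {score:.2f}")
```

In this example `platform_b` ranks first (3.90 against 3.70) despite scoring lower on integration and cost. Its performance and team-expertise ratings carry more combined weight, which shows why the weights deserve as much scrutiny as the ratings themselves.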

What are some common challenges in cloud data warehouse design?

Common challenges include ensuring data quality, managing costs effectively, maintaining data security, and integrating with existing systems. These challenges can be mitigated through careful planning, robust security protocols, and ongoing monitoring and optimization.

How do I ensure data security in a cloud data warehouse?

Implementing robust security measures, such as encryption, access controls, and regular security audits, is crucial. Understanding and adhering to the specific security guidelines of your chosen cloud provider is essential for protecting sensitive data.