Navigating the complexities of a multi-cloud environment presents both incredible opportunities and significant security challenges. The ability to leverage diverse cloud platforms for various needs is a powerful advantage, but it also introduces a fragmented security landscape. This guide provides a detailed roadmap for establishing a unified security policy, ensuring consistent protection across all your cloud resources, and streamlining your security posture.

This article delves into the critical steps required to build a robust and effective security framework. From defining the scope and objectives to implementing access management and incident response, we will explore each facet of creating a unified policy. We’ll examine the importance of understanding your current security posture, selecting appropriate security controls, and automating policy enforcement. The ultimate goal is to equip you with the knowledge to protect your data, maintain compliance, and optimize your cloud security strategy, regardless of the cloud platforms you utilize.

Defining the Scope and Objectives

Establishing a unified security policy for a multi-cloud environment requires a clear understanding of the scope and objectives. This involves defining the operational landscape, identifying critical assets, and outlining the desired security posture. This section will address these key areas to lay a solid foundation for policy development.

Defining Multi-Cloud Environments

Multi-cloud environments involve the use of multiple cloud computing services from different providers. This approach provides flexibility, redundancy, and the ability to leverage the strengths of each provider. The deployment models an organization combines vary, and each shapes its security considerations. Here are the common deployment models:

- Public Cloud: This involves using services offered by providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). These services offer a wide range of computing, storage, and networking resources on a pay-as-you-go basis.

- Private Cloud: This refers to cloud infrastructure dedicated to a single organization, either hosted on-premises or by a third-party provider. This model offers greater control and customization but often requires significant upfront investment.

- Hybrid Cloud: This combines public and private cloud environments, allowing organizations to run different workloads in the most appropriate environment. This approach often involves integrating on-premises infrastructure with public cloud services.

- Multi-Cloud: This is a strategy that uses multiple public cloud providers. Organizations might choose to use different providers for different workloads, for redundancy, or to avoid vendor lock-in.

Identifying Critical Assets and Data

Identifying critical assets and data is crucial for prioritizing security efforts. This involves understanding what data is most valuable, where it resides, and who has access to it. This assessment should be performed across all cloud platforms to ensure a comprehensive view. The process of identifying critical assets and data typically involves the following steps:

- Data Classification: Categorizing data based on sensitivity, criticality, and regulatory requirements. Examples include Personally Identifiable Information (PII), financial data, and intellectual property. This categorization informs the level of security controls needed.

- Asset Inventory: Creating a detailed inventory of all assets, including servers, applications, databases, and network devices. This inventory should include the location of each asset and its owner.

- Risk Assessment: Evaluating the potential threats and vulnerabilities that could impact critical assets. This assessment should consider both internal and external threats.

- Data Flow Mapping: Visualizing the movement of data across different cloud platforms and services. This helps identify potential points of exposure and vulnerabilities.

Data classification is the cornerstone of asset identification, as it determines the level of protection required for each data type. For example, data classified as “Confidential” would necessitate stronger access controls and encryption compared to data classified as “Public”.
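To make the link between classification and controls concrete, here is a minimal Python sketch. The four levels come from the classification scheme used in this guide; the tag names and the specific controls attached to each level are hypothetical placeholders for whatever your own policy defines.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    # Ordered so that a higher value always means more sensitive data.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    HIGHLY_CONFIDENTIAL = 3


# Hypothetical mapping from data tags to the level they imply.
TAG_LEVELS = {
    "internal_only": Sensitivity.INTERNAL,
    "pii": Sensitivity.CONFIDENTIAL,
    "financial": Sensitivity.CONFIDENTIAL,
    "intellectual_property": Sensitivity.HIGHLY_CONFIDENTIAL,
}

# Hypothetical controls added at each level; higher levels also inherit lower ones.
LEVEL_CONTROLS = {
    Sensitivity.PUBLIC: {"integrity_monitoring"},
    Sensitivity.INTERNAL: {"authenticated_access"},
    Sensitivity.CONFIDENTIAL: {"encryption_at_rest", "encryption_in_transit", "mfa"},
    Sensitivity.HIGHLY_CONFIDENTIAL: {"customer_managed_keys", "dlp_scanning"},
}


def classify(tags):
    """Return the most sensitive level implied by a data set's tags (PUBLIC if none match)."""
    return max((TAG_LEVELS.get(tag, Sensitivity.PUBLIC) for tag in tags),
               default=Sensitivity.PUBLIC)


def required_controls(level):
    """Union of the controls for this level and every less sensitive level."""
    controls = set()
    for lvl in Sensitivity:
        if lvl <= level:
            controls |= LEVEL_CONTROLS[lvl]
    return controls
```

For example, a data set tagged `pii` classifies as `CONFIDENTIAL` and therefore picks up encryption at rest and in transit plus MFA, on top of the controls required for less sensitive data.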

Organizing Security Goals for a Unified Policy

A unified security policy should clearly articulate the organization’s security goals. These goals should be aligned with the overall business objectives and prioritized based on their impact on the organization. The core security goals typically center around confidentiality, integrity, and availability (CIA). Here’s a breakdown of each core security goal:

- Confidentiality: Ensuring that sensitive information is accessible only to authorized individuals or systems. This involves implementing access controls, encryption, and data loss prevention (DLP) measures.

- Integrity: Maintaining the accuracy and completeness of data and systems. This requires implementing measures to prevent unauthorized modification or deletion of data, such as version control, intrusion detection systems, and regular backups.

- Availability: Ensuring that systems and data are accessible when needed. This involves implementing redundancy, disaster recovery plans, and robust monitoring systems.

These core goals are often complemented by other objectives, such as:

- Compliance: Adhering to relevant industry regulations and standards (e.g., HIPAA, GDPR, PCI DSS).

- Efficiency: Optimizing security operations to minimize costs and maximize effectiveness.

- Scalability: Ensuring the security policy can adapt to the changing needs of the multi-cloud environment.

Inventory and Assessment of Existing Security Posture

Understanding your current security posture is the critical first step toward building a unified security policy for your multi-cloud environment. This involves a comprehensive inventory of your existing security tools and services, a comparative analysis of security features across cloud providers, and a thorough identification of potential vulnerabilities. This assessment lays the foundation for informed decision-making and helps prioritize efforts to improve security.

Existing Security Tools and Services Across Cloud Providers

A detailed inventory of existing security tools and services is necessary for understanding your current capabilities. This includes identifying the specific tools and services used within each cloud provider, their configurations, and their integration with other security components.

Here’s a breakdown of typical security tools and services, often found in cloud environments:

- Identity and Access Management (IAM): Services like AWS IAM, Azure Active Directory, and Google Cloud IAM manage user identities, permissions, and access control. These services are crucial for enforcing the principle of least privilege.

- Network Security: This encompasses firewalls (e.g., AWS Security Groups, Azure Network Security Groups, Google Cloud Firewall), intrusion detection/prevention systems (IDS/IPS), and virtual private networks (VPNs). Network security controls protect against unauthorized access and malicious traffic.

- Data Security: Services like encryption (e.g., AWS KMS, Azure Key Vault, Google Cloud KMS), data loss prevention (DLP) tools, and data classification services are vital for protecting sensitive data at rest and in transit.

- Security Information and Event Management (SIEM): SIEM solutions such as Splunk, Sumo Logic, Microsoft Sentinel (formerly Azure Sentinel), and Google Security Operations (formerly Chronicle) collect, analyze, and correlate security logs from various sources to detect and respond to security threats.

- Vulnerability Scanning and Management: Tools such as Amazon Inspector, Microsoft Defender for Cloud (formerly Azure Security Center), and Google Cloud Security Command Center, along with third-party solutions, are used to identify and remediate vulnerabilities in your infrastructure and applications.

- Compliance and Governance: Services and tools assist in meeting regulatory requirements and internal security policies. These include configuration management, audit logging, and compliance reporting features, often integrated into the cloud provider’s platform.

Documenting the configuration of each tool and service, including any customizations or integrations, is also crucial. This information is vital for assessing the effectiveness of your current security controls and identifying potential gaps or misconfigurations.

Comparison of Security Features and Capabilities

Different cloud providers offer varying levels of security features and capabilities. A comparative analysis helps you understand the strengths and weaknesses of each platform and identify areas where you might need to supplement the native security controls.

Consider the following aspects when comparing security features:

- IAM Capabilities: Examine how each provider handles identity federation, multi-factor authentication (MFA), and role-based access control (RBAC). For example, Azure Active Directory offers robust integration with on-premises Active Directory, while AWS IAM provides extensive policy customization options.

- Network Security Features: Evaluate the capabilities of firewalls, intrusion detection/prevention systems, and VPN services. Consider the flexibility, scalability, and ease of management of each provider’s network security tools.

- Data Encryption and Key Management: Compare the encryption algorithms supported, key management options (e.g., hardware security modules – HSMs), and compliance certifications offered by each provider.

- Monitoring and Logging: Assess the logging capabilities, the types of security events logged, and the integration with SIEM solutions. Some providers offer more granular logging and advanced threat detection features than others.

- Compliance Certifications: Determine which compliance standards (e.g., HIPAA, PCI DSS, SOC 2) each provider supports and the level of responsibility the provider assumes for compliance.

By comparing these features, you can identify potential gaps in your security posture. For example, if one cloud provider lacks a specific feature you need, you might need to implement a third-party solution or adjust your security policies to compensate.
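One lightweight way to run this comparison is to record, for each environment, which required capabilities your inventory shows as actually deployed, and compute the difference. The Python sketch below assumes a flat capability vocabulary; the environment labels and their contents are placeholder data describing a hypothetical deployment, not statements about what any vendor offers.

```python
# Required capabilities every environment must have (hypothetical vocabulary).
REQUIRED = {"iam_federation", "mfa", "kms", "flow_logs", "vuln_scanning", "siem_export"}

# Placeholder inventory: capabilities currently deployed in each environment.
INVENTORY = {
    "cloud_a": {"iam_federation", "mfa", "kms", "flow_logs", "vuln_scanning", "siem_export"},
    "cloud_b": {"iam_federation", "mfa", "kms", "flow_logs", "siem_export"},
    "cloud_c": {"iam_federation", "mfa", "kms", "vuln_scanning"},
}


def find_gaps(inventory, required):
    """Map each environment to the sorted list of required capabilities it lacks."""
    gaps = {}
    for env, deployed in inventory.items():
        missing = required - deployed
        if missing:
            gaps[env] = sorted(missing)
    return gaps


for env, missing in find_gaps(INVENTORY, REQUIRED).items():
    print(f"{env}: needs native or third-party coverage for {', '.join(missing)}")
```

Each reported gap then becomes a decision: enable a native feature, add a third-party tool, or document a compensating control in the policy.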

Identification of Potential Security Gaps and Vulnerabilities

A thorough assessment of your existing security posture will reveal potential security gaps and vulnerabilities in your multi-cloud setup. These gaps can arise from misconfigurations, inconsistent security policies, or a lack of visibility across all cloud environments.

Here are some areas to focus on when identifying vulnerabilities:

- Configuration Errors: Review the configurations of your security tools and services for any misconfigurations that could create vulnerabilities. This includes incorrect firewall rules, overly permissive IAM policies, and improperly configured encryption settings.

- Inconsistent Security Policies: Identify any inconsistencies in your security policies across different cloud providers. This could lead to gaps in protection and make it difficult to manage and enforce your security controls consistently.

- Lack of Visibility: Determine if you have sufficient visibility into security events across all your cloud environments. A lack of centralized logging and monitoring can make it difficult to detect and respond to security threats effectively.

- Unpatched Systems: Ensure that all systems and applications are patched with the latest security updates. Vulnerabilities in outdated software can be easily exploited by attackers.

- Insufficient Monitoring: Evaluate the effectiveness of your monitoring and alerting systems. Are you monitoring for the right security events? Are you receiving timely alerts when threats are detected?

- Compliance Deviations: Check for any deviations from your organization’s compliance requirements. These deviations can indicate potential security risks and expose your organization to legal or financial penalties.

Conducting regular vulnerability scans, penetration tests, and security audits is crucial for identifying and addressing these vulnerabilities. These assessments should be performed across all your cloud environments to ensure a comprehensive view of your security posture. For example, a penetration test could reveal that a misconfigured firewall rule in one cloud provider allows unauthorized access to sensitive data, highlighting a critical vulnerability that needs immediate remediation.

Implementing a continuous monitoring program and regularly reviewing security logs are also essential practices to help detect and address potential security gaps.
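Many configuration errors can be caught by simple automated checks run against every provider. The Python sketch below audits inbound rules for administrative or database ports exposed to the entire internet. It assumes the rules have already been exported from AWS security groups, Azure NSGs, and Google Cloud firewall rules into a common `IngressRule` shape; that type, the sensitive-port list, and the rule names in the usage example are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """Provider-neutral view of one inbound firewall or security group rule."""
    provider: str
    name: str
    cidr: str
    port_from: int
    port_to: int


# Assumed list of administrative and database ports that should never face the internet.
SENSITIVE_PORTS = {22: "SSH", 3389: "RDP", 3306: "MySQL", 5432: "PostgreSQL"}


def is_internet_wide(cidr):
    """True for 0.0.0.0/0 or ::/0, i.e. a rule open to every address."""
    return ipaddress.ip_network(cidr, strict=False).prefixlen == 0


def audit(rules):
    """Return one finding per sensitive port exposed to the whole internet."""
    findings = []
    for rule in rules:
        if not is_internet_wide(rule.cidr):
            continue
        for port, service in SENSITIVE_PORTS.items():
            if rule.port_from <= port <= rule.port_to:
                findings.append(f"{rule.provider}/{rule.name}: {service} ({port}) open to {rule.cidr}")
    return findings


rules = [
    IngressRule("aws", "web-sg", "0.0.0.0/0", 443, 443),
    IngressRule("azure", "admin-nsg", "0.0.0.0/0", 22, 22),
    IngressRule("gcp", "db-fw", "10.0.0.0/8", 5432, 5432),
]
for finding in audit(rules):
    print(finding)  # azure/admin-nsg: SSH (22) open to 0.0.0.0/0
```

Running the same check on every provider's export turns the penetration-test finding described above into something caught on each configuration change.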

Policy Framework and Structure

A well-defined policy framework is crucial for establishing a unified security posture across a multi-cloud environment. This framework provides the structure and guidelines necessary to ensure consistent security practices, enabling effective risk management and compliance. The framework should be easily understood, implemented, and maintained, providing a clear roadmap for security professionals and stakeholders.

Hierarchical Structure for the Unified Security Policy

Establishing a hierarchical structure ensures the security policy is comprehensive and scalable. This structure allows for a balance between high-level principles and detailed, actionable guidelines. It promotes a clear understanding of responsibilities and facilitates effective communication across the organization. The hierarchical structure can be broken down into the following tiers:

- High-Level Principles: These are the overarching goals and objectives of the security policy. They define the organization’s commitment to security and guide the development of more specific policies and procedures. These principles are typically broad and enduring, reflecting the organization’s risk appetite and strategic direction.

- Example: “Protect all sensitive data from unauthorized access and disclosure.”

- Example: “Ensure the availability and integrity of critical systems and services.”

- Policy Statements: These statements translate the high-level principles into specific requirements. They define what must be done to achieve the security objectives. Policy statements are more detailed than principles but still provide a broad framework for action.

- Example: “Implement multi-factor authentication for all privileged user accounts.”

- Example: “Regularly back up all critical data and systems.”

- Standards: Standards provide the technical and procedural details for implementing the policy statements. They specify the specific technologies, configurations, and processes that must be used. Standards ensure consistency and interoperability across the multi-cloud environment.

- Example: “Require passwords of at least 16 characters for all accounts, generated and stored in an approved password manager.”

- Example: “Implement intrusion detection and prevention systems (IDPS) on all network segments.”

- Guidelines: Guidelines offer recommended practices and best-effort suggestions for achieving compliance with standards. They provide flexibility and allow for adaptation to specific situations. Guidelines can be updated more frequently than standards to reflect evolving threats and technologies.

- Example: “Regularly review and update security configurations based on industry best practices.”

- Example: “Conduct penetration testing at least annually.”

- Procedures: Procedures are the step-by-step instructions for carrying out specific tasks related to security. They provide detailed guidance for security personnel and other users. Procedures ensure consistency and repeatability in security operations.

- Example: “Procedure for incident response, including steps for containment, eradication, and recovery.”

- Example: “Procedure for user access provisioning and deprovisioning.”
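Keeping the hierarchy in a machine-readable form makes it possible to check that every procedure traces back to a principle. The following Python sketch encodes the tiers as depths, with standards and guidelines sharing a tier between policy statements and procedures. The `PolicyElement` type, its fields, and the element IDs are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Depth of each element type in the hierarchy; standards and guidelines share a tier.
TIER = {"principle": 0, "statement": 1, "standard": 2, "guideline": 2, "procedure": 3}


@dataclass
class PolicyElement:
    id: str
    kind: str                     # one of the keys in TIER
    text: str
    parent: Optional[str] = None  # id of the element one tier above


def validate(elements):
    """Return a list of structural errors; an empty list means the hierarchy is consistent."""
    by_id = {e.id: e for e in elements}
    errors = []
    for e in elements:
        if e.kind == "principle":
            if e.parent is not None:
                errors.append(f"{e.id}: a principle cannot have a parent")
            continue
        parent = by_id.get(e.parent)
        if parent is None:
            errors.append(f"{e.id}: unknown parent {e.parent!r}")
        elif TIER[parent.kind] != TIER[e.kind] - 1:
            errors.append(f"{e.id}: a {e.kind} cannot sit directly under a {parent.kind}")
    return errors


def trace(elements, element_id):
    """Walk from an element up to its principle, e.g. to show why a procedure exists."""
    by_id = {e.id: e for e in elements}
    chain, current = [], by_id.get(element_id)
    while current is not None and current.id not in chain:
        chain.append(current.id)
        current = by_id.get(current.parent)
    return chain
```

Tracing a procedure such as user access provisioning back through its standard and policy statement to a principle gives auditors a direct answer to "which requirement does this step satisfy?"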

Naming Convention and Classification System

A well-defined naming convention and classification system enhances the manageability and maintainability of the security policy. This system enables easy identification, retrieval, and organization of policy documents and related controls. Here’s a recommended approach:

- Naming Convention: Use a consistent naming convention for all policy documents and related artifacts. This convention should include a unique identifier, a descriptive title, and versioning information.

- Example: “SEC-POL-001-DataLossPrevention-v1.0”

- Classification System: Classify security policies and controls based on various criteria, such as:

- Security Domain: Identify the area of security the policy covers (e.g., Access Control, Data Security, Network Security).

- Cloud Provider: Specify the cloud providers the policy applies to (e.g., AWS, Azure, GCP, or a combination).

- Data Sensitivity: Categorize policies based on the sensitivity of the data they protect (e.g., Public, Internal, Confidential, Highly Confidential).

- Compliance Requirement: Indicate the regulatory or industry compliance requirements the policy addresses (e.g., GDPR, HIPAA, PCI DSS).

- Control Type: Classify controls based on their function (e.g., Preventive, Detective, Corrective).

Using these systems, policies and controls can be easily grouped, searched, and managed. For instance, a policy on encrypting data at rest in AWS, classified as “Data Security,” “AWS,” “Confidential,” and “GDPR compliant,” can be quickly located and updated as needed.
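Both the naming convention and the classification tags can be enforced and queried in code. This Python sketch validates names against the identifier, title, and version pattern shown in the example above and filters a catalog by classification dimensions. The regular expression is one possible reading of that convention, and the second policy name and all tag values are hypothetical.

```python
import re
from dataclasses import dataclass, field

# Identifier, descriptive title, and version, e.g. "SEC-POL-001-DataLossPrevention-v1.0".
NAME_PATTERN = re.compile(
    r"^(?P<identifier>[A-Z]+-[A-Z]+-\d{3})-(?P<title>[A-Za-z0-9]+)-v(?P<major>\d+)\.(?P<minor>\d+)$"
)


@dataclass
class PolicyRecord:
    name: str
    # Classification dimension -> values, e.g. {"provider": {"AWS", "Azure"}}.
    tags: dict = field(default_factory=dict)

    def parsed(self):
        """Split the name into its parts, rejecting names that break the convention."""
        match = NAME_PATTERN.match(self.name)
        if match is None:
            raise ValueError(f"name does not follow the convention: {self.name!r}")
        return match.groupdict()


def find(records, **criteria):
    """Records tagged with every requested value, e.g. find(records, provider="AWS")."""
    return [
        r for r in records
        if all(value in r.tags.get(dimension, set()) for dimension, value in criteria.items())
    ]


catalog = [
    PolicyRecord("SEC-POL-001-DataLossPrevention-v1.0",
                 {"domain": {"Data Security"}, "provider": {"AWS", "Azure", "GCP"}}),
    PolicyRecord("SEC-POL-002-EncryptionAtRest-v1.2",
                 {"domain": {"Data Security"}, "provider": {"AWS"},
                  "sensitivity": {"Confidential"}, "compliance": {"GDPR"},
                  "control_type": {"Preventive"}}),
]
for record in find(catalog, provider="AWS", compliance="GDPR"):
    print(record.parsed()["title"])  # EncryptionAtRest
```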

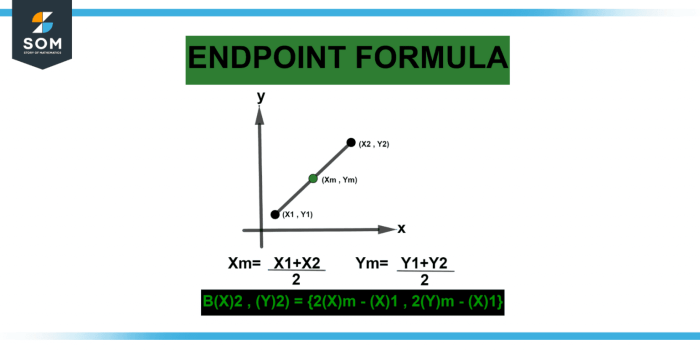

Visual Diagram of Policy Structure

A visual diagram effectively illustrates the relationships between the different components of the security policy. This diagram provides a clear overview of the policy’s structure, making it easier for stakeholders to understand and navigate. The diagram can be structured as a hierarchical flowchart, with the high-level principles at the top and the more detailed elements, such as procedures, at the bottom.

Arrows can be used to show the relationships between the components. Here’s a description of a potential visual representation:

The diagram depicts a hierarchical structure of rectangular boxes. At the top is the “High-Level Principles” box. Arrows extend downward from it to “Policy Statements” boxes; from each of these, arrows lead to “Standards” and “Guidelines” boxes; and from those, arrows lead to “Procedures” boxes at the bottom of the diagram.

Each box is labeled with its respective component type. The arrows visually represent the cascading effect of the policy, where the high-level principles guide the policy statements, which in turn inform the standards and guidelines, leading to the detailed procedures.

Security Controls and Implementation

Implementing consistent security controls across a multi-cloud environment is crucial for safeguarding data and applications. This involves selecting and configuring security measures that are applicable to all cloud platforms, while also considering the specific features and limitations of each. The goal is to establish a unified security posture that minimizes risk and ensures compliance.

Access Control Implementation

Access control is fundamental to security, ensuring that only authorized users and processes can access resources. Implementing robust access controls involves several key components.

- Identity and Access Management (IAM): Implementing a centralized IAM system is vital. This allows for the creation, management, and enforcement of user identities and permissions across all cloud platforms. IAM should support multi-factor authentication (MFA) to enhance security. For example, AWS IAM, Azure Active Directory, and Google Cloud IAM offer similar functionalities for managing user identities, groups, and permissions.

- Role-Based Access Control (RBAC): RBAC is a method for assigning permissions based on roles. Defining roles based on job functions and granting permissions accordingly simplifies access management and reduces the risk of unauthorized access. AWS, Azure, and GCP all support RBAC, but their role and policy models differ, so a consistent permission model requires mapping one organization-wide set of roles onto each provider’s native constructs.

- Principle of Least Privilege: Granting users and applications only the minimum necessary access to perform their tasks is crucial. Regularly reviewing and adjusting permissions based on evolving needs minimizes the potential damage from compromised accounts. This approach limits the blast radius of potential security breaches.

- Regular Auditing and Monitoring: Implementing continuous monitoring of access activities is essential. This includes logging all access attempts, both successful and failed, and setting up alerts for suspicious activities. Cloud providers offer logging and monitoring services (e.g., AWS CloudTrail, Azure Monitor, Google Cloud Audit Logs) that can be integrated with a centralized security information and event management (SIEM) system for comprehensive analysis.
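As a sketch of the alerting side, the Python function below flags any principal that accumulates a burst of failed access attempts within a sliding time window. It assumes the logs from AWS CloudTrail, Azure Monitor, and Google Cloud Audit Logs have already been normalized into dictionaries with hypothetical `time`, `principal`, and `outcome` fields; the threshold and window are illustrative defaults, not recommendations.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def detect_failure_bursts(events, threshold=5, window=timedelta(minutes=10)):
    """Return (principal, time) pairs for principals that reach `threshold`
    failed access attempts within `window`."""
    recent = defaultdict(deque)  # principal -> timestamps of failures still in the window
    alerts = []
    for event in sorted(events, key=lambda e: e["time"]):
        if event["outcome"] != "failure":
            continue
        failures = recent[event["principal"]]
        failures.append(event["time"])
        # Drop failures that have fallen out of the sliding window.
        while event["time"] - failures[0] > window:
            failures.popleft()
        # Alert when the in-window count reaches exactly `threshold`, so a
        # continuing burst is not reported again on every additional failure.
        if len(failures) == threshold:
            alerts.append((event["principal"], event["time"]))
    return alerts


start = datetime(2024, 1, 1, 9, 0)
sample = [{"time": start + timedelta(minutes=m), "principal": "svc-deploy", "outcome": "failure"}
          for m in range(5)]
print(detect_failure_bursts(sample))
```

In practice this logic usually lives in the SIEM as a correlation rule; the sketch only shows why normalizing events across providers first matters, since the same principal may fail against several clouds.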

Data Encryption Implementation

Data encryption protects data at rest and in transit, rendering it unreadable to unauthorized parties. The implementation of data encryption requires careful consideration of different encryption methods and key management strategies.

- Encryption at Rest: Data stored in cloud storage services should be encrypted. Most cloud providers offer built-in encryption options for storage services like AWS S3, Azure Blob Storage, and Google Cloud Storage. Encryption keys can be managed by the cloud provider or through a customer-managed key management service (KMS).

- Encryption in Transit: All data transmitted between cloud services and users should be encrypted using protocols like Transport Layer Security (TLS). Configuring TLS/SSL certificates and ensuring that all connections use HTTPS is crucial.

- Key Management: Secure key management is critical for encryption. Cloud providers offer KMS to manage encryption keys. Alternatively, organizations can implement their own KMS solutions or leverage hardware security modules (HSMs) for enhanced key protection. Key rotation policies should be established and followed to minimize the impact of compromised keys.

- Data Loss Prevention (DLP): DLP solutions can be integrated to identify and prevent sensitive data from leaving the organization’s control. DLP tools scan data at rest and in transit, and can enforce policies such as blocking the transmission of sensitive information or encrypting data before it is shared.
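Two of these practices lend themselves to automated checks: flagging keys that have outlived the rotation period, and making sure clients refuse outdated TLS versions. The Python sketch below assumes key metadata (an ID and a last-rotation timestamp) has been exported from each provider’s KMS into plain dictionaries; those field names, the key IDs, and the 365-day limit are assumptions. The TLS part uses only the standard `ssl` module.

```python
import ssl
from datetime import datetime, timedelta, timezone

# Assumed policy value; substitute the rotation period your key-management standard sets.
MAX_KEY_AGE = timedelta(days=365)


def overdue_keys(keys, now, max_age=MAX_KEY_AGE):
    """IDs of keys whose last rotation is older than max_age, sorted for stable reports."""
    return sorted(k["key_id"] for k in keys if now - k["last_rotated"] > max_age)


def client_tls_context():
    """TLS client context that verifies certificates and host names and
    refuses anything older than TLS 1.2."""
    context = ssl.create_default_context()  # CERT_REQUIRED and hostname checking by default
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    inventory = [
        {"key_id": "aws/orders-cmk", "last_rotated": now - timedelta(days=400)},
        {"key_id": "azure/billing-key", "last_rotated": now - timedelta(days=30)},
    ]
    print(overdue_keys(inventory, now))  # ['aws/orders-cmk']
```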

Network Segmentation Implementation

Network segmentation isolates different parts of the cloud environment to limit the impact of security breaches. This involves creating virtual networks, subnets, and security groups to control network traffic.

- Virtual Private Clouds (VPCs): VPCs provide isolated networks within a cloud provider’s infrastructure. They allow organizations to define their own IP address ranges, subnets, and network configurations. Each cloud provider has its own VPC implementation (e.g., AWS VPC, Azure Virtual Network, Google Cloud Virtual Private Cloud).

- Subnets: Subnets divide a VPC into smaller, manageable network segments. Placing resources in separate subnets based on their function and security requirements is crucial. For example, web servers might be in one subnet, database servers in another, and application servers in a third.

- Security Groups/Network Security Groups (NSGs): Security groups (AWS) and NSGs (Azure) act as virtual firewalls that control inbound and outbound traffic to instances. They allow or deny traffic based on source, destination, protocol, and port. Defining strict security group rules is essential to limit network access.

- Network Firewalls: Deploying network firewalls, either managed by the cloud provider or third-party solutions, can provide additional network security. Firewalls can inspect traffic, filter malicious content, and enforce network security policies.

- Microsegmentation: For enhanced security, microsegmentation divides the network into even smaller segments, isolating workloads from each other. This limits lateral movement in case of a breach. Tools like VMware NSX and Cisco ACI can be used to implement microsegmentation in multi-cloud environments.
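The subnet layout and the rules between tiers can be expressed as data and checked before anything is deployed. This Python sketch, using only the standard `ipaddress` module, carves a VPC range into one subnet per tier and applies a default-deny allowlist between tiers. The CIDR block, tier names, and ports are hypothetical.

```python
import ipaddress

# Hypothetical allowlist of (source tier, destination tier, port); everything else is denied.
ALLOWED_FLOWS = {("web", "app", 8080), ("app", "db", 5432)}


def plan_subnets(vpc_cidr, tiers, new_prefix=24):
    """Assign one non-overlapping subnet of size /new_prefix to each tier."""
    available = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    plan = dict(zip(tiers, available))
    if len(plan) < len(tiers):
        raise ValueError("VPC range is too small for the requested tiers")
    return plan


def tier_of(ip, plan):
    """Name of the tier whose subnet contains ip, or None if it is outside the plan."""
    addr = ipaddress.ip_address(ip)
    for tier, net in plan.items():
        if addr in net:
            return tier
    return None


def is_allowed(src_ip, dst_ip, port, plan):
    """Default deny: only flows listed in ALLOWED_FLOWS are permitted."""
    return (tier_of(src_ip, plan), tier_of(dst_ip, plan), port) in ALLOWED_FLOWS


plan = plan_subnets("10.0.0.0/16", ["web", "app", "db"])
print({tier: str(net) for tier, net in plan.items()})
print(is_allowed("10.0.0.10", "10.0.2.5", 5432, plan))  # False: web may not reach db directly
```

The same allowlist can then be rendered into each provider’s security group, NSG, or firewall rules, so the segmentation intent is defined once.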

Infrastructure-as-Code (IaC) for Security Control Configuration

IaC allows security controls to be defined and managed programmatically, ensuring consistency and repeatability across all cloud environments. IaC tools automate the deployment and configuration of security controls.

- Example with Terraform: Terraform is a popular IaC tool that supports multiple cloud providers. It can be used to define and deploy security groups, configure encryption, and manage IAM policies. A Terraform configuration file can define the desired state of the infrastructure, and Terraform will automatically provision and configure the resources to match that state.

- Example with AWS CloudFormation: CloudFormation is AWS’s IaC service. It enables the creation and management of AWS resources using templates written in JSON or YAML. CloudFormation templates can be used to define security groups, VPC configurations, and other security controls.

- Example with Azure Resource Manager (ARM) templates: ARM templates are used to deploy and manage Azure resources. They are written in JSON and can be used to define virtual networks, security groups, and other security configurations.

- Benefits of IaC: IaC provides several benefits, including increased efficiency, reduced errors, and improved consistency. IaC allows for the automated deployment of security controls, which reduces the risk of manual configuration errors. It also facilitates version control, making it easier to track changes and roll back to previous configurations.
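IaC templates are plain data, so they can also be generated and reviewed programmatically. The Python sketch below emits a CloudFormation template for a single security group that admits only inbound HTTPS. `GroupDescription`, `VpcId`, and `SecurityGroupIngress` are standard `AWS::EC2::SecurityGroup` properties; the logical name `WebSecurityGroup` and the VPC ID are placeholders.

```python
import json


def security_group_template(vpc_id, https_cidr="0.0.0.0/0"):
    """Build a CloudFormation template with one security group allowing only inbound HTTPS."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "WebSecurityGroup": {
                "Type": "AWS::EC2::SecurityGroup",
                "Properties": {
                    "GroupDescription": "Web tier: inbound HTTPS only",
                    "VpcId": vpc_id,
                    "SecurityGroupIngress": [
                        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443, "CidrIp": https_cidr}
                    ],
                },
            }
        },
    }


if __name__ == "__main__":
    # sort_keys keeps the output stable, so diffs in version control show only real changes.
    print(json.dumps(security_group_template("vpc-0123456789abcdef0"), indent=2, sort_keys=True))
```

The generated JSON can be committed and deployed like any hand-written template, and reviewers see the security rule change in the pull request diff.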

Access Management and Identity Governance

Effective access management and identity governance are crucial for maintaining a robust security posture across a multi-cloud environment. This involves establishing consistent controls to ensure that only authorized users and services have access to the appropriate resources. Implementing these practices reduces the risk of unauthorized access, data breaches, and compliance violations.

Best Practices for Managing Identities and Access Across Multiple Cloud Providers

Managing identities and access in a multi-cloud environment presents unique challenges due to the varying identity and access management (IAM) services offered by each cloud provider. To address these complexities, several best practices should be adopted.

- Centralized Identity Provider: Utilize a centralized identity provider (IdP) such as Okta, Azure Active Directory, or a similar service. This allows for a single source of truth for user identities and streamlines the authentication process across all cloud environments. This approach simplifies user management and reduces the administrative overhead associated with maintaining separate user accounts in each cloud.

- Federated Authentication: Implement federated authentication using protocols like SAML or OpenID Connect. This allows users to authenticate once with the central IdP and then access resources in different cloud environments without re-entering their credentials. Federation enhances the user experience and improves security by reducing the risk of password-related vulnerabilities.

- Automated User Provisioning and De-provisioning: Automate the process of creating, modifying, and deleting user accounts across all cloud providers. This ensures consistency and reduces the likelihood of human error. Tools like Terraform, custom scripts, or SCIM-based provisioning from the central identity provider can be used to provision users based on their roles and responsibilities. De-provisioning is equally critical, ensuring that access is revoked promptly when an employee leaves the organization or their role changes.

- Multi-Factor Authentication (MFA): Enforce MFA for all user accounts, regardless of the cloud environment. MFA adds an extra layer of security by requiring users to provide a second form of verification, such as a code from a mobile app or a hardware security key. This significantly reduces the risk of account compromise due to stolen credentials.

- Regular Access Reviews: Conduct regular access reviews to verify that users still require the access they have been granted. These reviews should involve the business owners of the resources being accessed to ensure that permissions align with current business needs. This helps to identify and remediate excessive or unnecessary access, minimizing the attack surface.

- Least Privilege Principle: Grant users only the minimum level of access necessary to perform their job functions. This limits the potential damage from a compromised account. Regularly review and refine permissions to ensure they remain aligned with the principle of least privilege.

- Monitoring and Auditing: Implement comprehensive monitoring and auditing across all cloud environments to track user activity and detect any suspicious behavior. Centralized logging and monitoring tools provide visibility into access attempts, permission changes, and other security-relevant events.
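The central IdP also gives access reviews a reference point: any cloud account without a matching active identity is a de-provisioning miss, and any account unused for a long period is a candidate for removal. A minimal Python sketch, assuming account lists and last-login times have already been exported from each provider; the 90-day idle limit and all account names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def find_orphans(idp_active, cloud_accounts):
    """Per provider, accounts with no matching active identity in the central IdP."""
    orphans = {}
    for provider, accounts in cloud_accounts.items():
        missing = accounts - idp_active
        if missing:
            orphans[provider] = sorted(missing)
    return orphans


def find_stale(last_logins, now, max_idle=timedelta(days=90)):
    """(provider, user) pairs with no sign-in within max_idle; candidates for review."""
    return sorted(key for key, seen in last_logins.items() if now - seen > max_idle)


now = datetime.now(timezone.utc)
idp_active = {"alice@example.com", "bob@example.com"}
cloud_accounts = {
    "aws": {"alice@example.com", "carol@example.com"},
    "azure": {"bob@example.com"},
}
print(find_orphans(idp_active, cloud_accounts))  # {'aws': ['carol@example.com']}
```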

Role-Based Access Control (RBAC) Model for Consistent Access Permissions

A well-designed RBAC model is essential for enforcing consistent access permissions across a multi-cloud environment. RBAC simplifies access management by assigning permissions to roles, rather than individual users. This approach makes it easier to manage access at scale and ensures that permissions are consistently applied across all cloud providers.

- Define Roles: Start by identifying the various roles within the organization and the associated responsibilities. Examples include “Developer,” “Administrator,” “Security Analyst,” and “Data Scientist.”

- Map Permissions to Roles: Determine the specific permissions required for each role. This involves identifying the cloud resources and services that each role needs to access and the level of access (e.g., read, write, execute) required.

- Assign Users to Roles: Assign users to the appropriate roles based on their job functions. This is typically done through the centralized IdP or through the cloud provider’s IAM service.

- Implement Role Hierarchies: Consider implementing role hierarchies to simplify access management. For example, a “Senior Developer” role might inherit the permissions of a “Developer” role, plus additional permissions.

- Regularly Review and Update Roles: Review and update roles and permissions regularly to ensure they remain aligned with business needs and security requirements. This includes removing unnecessary permissions and adding new permissions as required.

- Use Infrastructure as Code (IaC): Define RBAC configurations using IaC tools like Terraform or AWS CloudFormation. This ensures consistency and allows for automated deployment and updates to RBAC policies across all cloud environments. IaC also enables version control, making it easier to track changes and roll back to previous configurations if necessary.
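Role hierarchies are easiest to reason about when effective permissions are computed rather than copied by hand. The Python sketch below resolves inherited permissions and rejects cyclic hierarchies. The role names follow the examples above, while the permission strings are a hypothetical provider-neutral vocabulary; translating them into AWS, Azure, or GCP role definitions is left to your IaC layer.

```python
# Hypothetical role catalog: each role lists the roles it inherits and its own permissions.
ROLES = {
    "Developer": {"inherits": [], "permissions": {"compute:read", "compute:deploy", "logs:read"}},
    "Senior Developer": {"inherits": ["Developer"], "permissions": {"compute:scale"}},
    "Security Analyst": {"inherits": [], "permissions": {"logs:read", "findings:read"}},
}


def effective_permissions(role, roles=ROLES, path=()):
    """Own permissions plus everything inherited; `path` tracks the current chain
    so a cycle is reported instead of recursing forever."""
    if role in path:
        raise ValueError(f"cycle in role hierarchy: {' -> '.join(path + (role,))}")
    perms = set(roles[role]["permissions"])
    for parent in roles[role]["inherits"]:
        perms |= effective_permissions(parent, roles, path + (role,))
    return perms


print(sorted(effective_permissions("Senior Developer")))
```

Because inheritance is resolved from one catalog, a permission removed from “Developer” disappears from “Senior Developer” everywhere on the next deployment, which supports the regular role reviews described above.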

Process for Provisioning and De-provisioning User Accounts Across All Cloud Environments

A well-defined process for provisioning and de-provisioning user accounts is critical for maintaining a secure and compliant multi-cloud environment. This process should be automated as much as possible to ensure consistency and reduce the risk of errors.

The following steps outline a typical provisioning process:

- Request Initiation: A request for a new user account is initiated, typically through an HR system or a service desk. The request should include the user’s name, job title, department, and the required access permissions.

- Approval Workflow: The request is routed through an approval workflow to the appropriate managers and stakeholders. This ensures that the request is authorized and that the user’s access permissions are appropriate.

- Account Creation: Once approved, the user account is created in the central IdP and then synchronized to the cloud providers. The account creation process should be automated using tools like Terraform or custom scripts.

- Role Assignment: The user is assigned to the appropriate roles based on their job title and responsibilities. This can be automated based on the information provided in the request.

- Access Provisioning: The user’s access to cloud resources and services is provisioned based on their assigned roles. This includes granting access to specific applications, data, and infrastructure.

- Notification: The user is notified that their account has been created and that they have been granted access to the required resources.
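The provisioning steps above can be sketched as a single function that refuses to act until approvals are complete, creates the account in the central IdP first, and then mirrors it to each cloud. This is a minimal in-memory sketch: the `Directory` class stands in for the IdP and each cloud's IAM service, and the title-to-role and approver mappings are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical mappings; real values would come from HR data and policy.
ROLES_BY_TITLE: Dict[str, List[str]] = {"Data Scientist": ["data-scientist"]}
REQUIRED_APPROVERS: Dict[str, Set[str]] = {"Data Science": {"ds-team-lead"}}


@dataclass
class AccessRequest:
    username: str
    job_title: str
    department: str
    approvals: Set[str] = field(default_factory=set)


@dataclass
class Directory:
    """In-memory stand-ins for the central IdP and each cloud's IAM service."""
    idp: Dict[str, List[str]] = field(default_factory=dict)
    clouds: Dict[str, Dict[str, List[str]]] = field(
        default_factory=lambda: {"aws": {}, "azure": {}}
    )
    notifications: List[str] = field(default_factory=list)


def provision(req: AccessRequest, d: Directory) -> None:
    # Approval workflow: refuse to create anything until sign-off is complete.
    missing = REQUIRED_APPROVERS.get(req.department, set()) - req.approvals
    if missing:
        raise PermissionError(f"missing approvals: {sorted(missing)}")
    # Role assignment derived from the request's job title.
    roles = ROLES_BY_TITLE.get(req.job_title, [])
    # Account creation: the IdP is the source of truth...
    d.idp[req.username] = list(roles)
    # ...and each cloud receives a synchronized copy with the same roles.
    for cloud_users in d.clouds.values():
        cloud_users[req.username] = list(roles)
    # Notification once access is in place.
    d.notifications.append(f"{req.username}: account created with roles {roles}")
```

Checking approvals before any write means a rejected request leaves no partial accounts behind.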

The following steps outline a typical de-provisioning process:

- Termination Trigger: A user’s account is de-provisioned when they leave the organization, change roles, or when their access is no longer required. This is often triggered by an HR system or a manager.

- Approval Workflow (if required): In some cases, a de-provisioning request may need to be approved by a manager or other stakeholders.

- Access Revocation: The user’s access to all cloud resources and services is revoked. This includes removing their account from the central IdP, revoking their access to applications and data, and removing their access to infrastructure.

- Account Deletion: The user’s account is deleted from the central IdP and the cloud providers. This ensures that the account cannot be reactivated or misused.

- Audit Trail: All provisioning and de-provisioning activities should be logged and audited to provide a complete record of user access.
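A matching de-provisioning sketch revokes every role assignment first and only then deletes accounts, appending an audit record for each step. The ordering is an assumption made for this illustration: if a deletion fails partway, the user already holds no roles anywhere. The in-memory dicts and audit-record fields are hypothetical stand-ins for the IdP, the cloud IAM services, and the audit log.

```python
from datetime import datetime, timezone
from typing import Dict, List


def deprovision(
    username: str,
    idp: Dict[str, List[str]],
    clouds: Dict[str, Dict[str, List[str]]],
    audit: List[dict],
    actor: str,
) -> None:
    """Revoke all access, then delete accounts, recording every step."""

    def record(action: str, target: str) -> None:
        audit.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,  # e.g. the HR system that triggered the request
            "action": action,
            "user": username,
            "target": target,
        })

    # 1. Access revocation: strip role assignments in the IdP and every cloud.
    if username in idp:
        idp[username] = []
        record("revoke_roles", "idp")
    for cloud, users in clouds.items():
        if username in users:
            users[username] = []
            record("revoke_roles", cloud)

    # 2. Account deletion: remove the accounts themselves.
    for cloud, users in clouds.items():
        if users.pop(username, None) is not None:
            record("delete_account", cloud)
    if idp.pop(username, None) is not None:
        record("delete_account", "idp")
```

Because each step checks whether the account still exists, running the function twice is harmless and adds no spurious audit entries.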

Example: Consider a scenario where a new employee, a “Data Scientist,” joins a company that uses AWS and Azure. The provisioning process would involve:

- A request initiated through the HR system.

- Approval from the Data Science team lead.

- Account creation in the central IdP (e.g., Okta).

- Synchronization of the account to AWS and Azure.

- Assignment to the “Data Scientist” role, which grants access to data storage services (e.g., AWS S3, Azure Blob Storage), data processing tools (e.g., AWS EMR, Azure Databricks), and relevant databases.

- Notification to the employee about their account and access.

When the Data Scientist leaves the company, the de-provisioning process would include:

- Notification from HR.

- Revocation of access to all cloud resources.

- Account deletion from Okta, AWS, and Azure.

- Auditing of the de-provisioning process.

These processes, when properly implemented and automated, ensure a secure and efficient access management lifecycle across a multi-cloud environment.

Data Protection and Compliance

Data protection and compliance are paramount in a multi-cloud environment. Organizations must adhere to a complex web of regulations while ensuring the confidentiality, integrity, and availability of their data across diverse cloud platforms. This section outlines critical considerations for data protection and compliance, covering regulatory requirements, encryption strategies, and data loss prevention measures.

Identifying Data Protection Regulations and Compliance Requirements

Compliance with data protection regulations is not optional; it’s a legal and ethical imperative. Failing to comply can result in significant financial penalties, reputational damage, and legal repercussions. The specific regulations that apply depend on the organization’s industry, geographic location, and the type of data it handles.

- General Data Protection Regulation (GDPR): The GDPR applies to organizations that process the personal data of individuals in the European Union and sets stringent requirements for data privacy, including consent, data minimization, and the right to erasure (the "right to be forgotten"). Non-compliance can lead to fines of up to 4% of annual global turnover or €20 million, whichever is higher. Google, for example, has been fined under the GDPR for data privacy violations.

- California Consumer Privacy Act (CCPA) and California Privacy Rights Act (CPRA): These California laws grant consumers rights over their personal information, including the right to know, the right to delete, and the right to opt out of the sale or sharing of their personal information. Violations can result in civil penalties, and consumers have a private right of action for certain data breaches.

- Health Insurance Portability and Accountability Act (HIPAA): HIPAA regulates the handling of protected health information (PHI) in the healthcare industry. Organizations must implement safeguards to protect the confidentiality, integrity, and availability of PHI. Failure to comply can result in substantial financial penalties and criminal charges.

- Payment Card Industry Data Security Standard (PCI DSS): PCI DSS applies to organizations that process, store, or transmit cardholder data. It requires implementing security measures to protect cardholder data from theft and fraud. Compliance is crucial to maintaining the ability to process credit card payments.

- Industry-Specific Regulations: Depending on the industry, organizations may need to comply with other regulations, such as the Gramm-Leach-Bliley Act (GLBA) for financial institutions or the Family Educational Rights and Privacy Act (FERPA) for educational institutions.
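The GDPR cap quoted above is the larger of two terms, so the 4% term only dominates once annual global turnover exceeds €500 million (€20 million / 0.04). A one-line sketch of that arithmetic, assuming the upper fine tier applies; actual fines are set case by case by supervisory authorities and are usually well below the cap.

```python
def gdpr_max_fine_eur(annual_global_turnover_eur: float) -> float:
    """Upper GDPR fine limit: 4% of annual global turnover or EUR 20 million, whichever is higher."""
    return max(annual_global_turnover_eur * 4 / 100, 20_000_000.0)


print(gdpr_max_fine_eur(100_000_000))    # 20000000.0 (4% would be only 4 million)
print(gdpr_max_fine_eur(2_000_000_000))  # 80000000.0
```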

Data Encryption Methods for Multi-Cloud Data Storage

Encryption is a fundamental security control for protecting data at rest and in transit within a multi-cloud environment. It transforms data into an unreadable format, making it unintelligible to unauthorized users.

- Encryption at Rest: Encryption at rest protects data stored in cloud storage services. Data is encrypted before it is written and decrypted when it is read. Common methods include:

  - Server-Side Encryption (SSE): The cloud provider encrypts the data after receiving it. With provider-managed keys this is the simplest approach, but the organization has the least control over the keys; most providers also support customer-managed keys held in their KMS.

  - Client-Side Encryption (CSE): The organization encrypts the data before sending it to the cloud provider, retaining full control over the encryption keys.

  - Key Management Systems (KMS): A KMS centralizes the creation, storage, rotation, and access control of encryption keys, giving the organization more control and flexibility than provider-default keys.

- Encryption in Transit: Encryption in transit protects data as it moves between locations, such as between a user’s device and a cloud server or between different cloud services. Common methods include:

  - Transport Layer Security (TLS): TLS, the successor to the now-deprecated Secure Sockets Layer (SSL), encrypts the communication channel between a client and a server. It secures web traffic (HTTPS), email, and other network traffic.

  - Virtual Private Network (VPN): A VPN creates an encrypted tunnel between a device and a network, protecting data as it travels over the internet.

- Key Management: Proper key management is crucial for encryption. Organizations must securely store and manage their encryption keys. This includes key rotation, access control, and disaster recovery. Consider using Hardware Security Modules (HSMs) for storing encryption keys securely.
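Two of the controls above translate directly into code: refusing outdated protocol versions for data in transit, and flagging keys that have outlived their rotation period. The sketch below uses Python's standard `ssl` module for the first; for the second, the 365-day period is an assumed organizational policy and the key-ID-to-creation-date mapping is a hypothetical export from whichever KMS is in use.

```python
import ssl
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def strict_tls_client_context() -> ssl.SSLContext:
    """TLS client context with certificate and hostname checks and TLS 1.2 as the floor."""
    ctx = ssl.create_default_context()            # verifies certificates and hostnames
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSL and TLS 1.0/1.1
    return ctx


# Assumption: an organizational policy of rotating keys at least yearly.
ROTATION_PERIOD = timedelta(days=365)


def keys_due_for_rotation(
    key_created: Dict[str, datetime],
    now: datetime,
    period: timedelta = ROTATION_PERIOD,
) -> List[str]:
    """Return IDs of keys whose age has reached the rotation period."""
    return sorted(k for k, created in key_created.items() if now - created >= period)


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
keys = {
    "key-old": datetime(2023, 1, 1, tzinfo=timezone.utc),
    "key-new": datetime(2024, 5, 1, tzinfo=timezone.utc),
}
print(keys_due_for_rotation(keys, now))  # ['key-old']
```

The context can be passed to standard clients such as `urllib.request.urlopen(url, context=ctx)` or `http.client.HTTPSConnection(host, context=ctx)`.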

Data Loss Prevention (DLP) Strategies Across Different Cloud Platforms

Data Loss Prevention (DLP) strategies are critical for preventing sensitive data from leaving the organization’s control. Implementing DLP requires understanding the capabilities of each cloud platform and configuring appropriate controls.

| Cloud Platform | DLP Strategy | Implementation Considerations | Example Tools/Services |
|---|---|---|---|
| Amazon Web Services (AWS) | Data classification, monitoring, and access control. | Use Amazon Macie to discover and classify sensitive data in S3. Configure S3 bucket policies to restrict access. Monitor API calls with CloudTrail for suspicious activity. | Amazon Macie, AWS CloudTrail, S3 bucket policies, Amazon GuardDuty |
| Microsoft Azure | Data discovery, classification, and protection. | Use Microsoft Purview Information Protection (formerly Azure Information Protection) to classify and label sensitive data. Use Microsoft Purview Data Loss Prevention to monitor and control data exfiltration. Use Microsoft Defender for Cloud (formerly Azure Security Center) for threat detection. | Microsoft Purview Information Protection, Microsoft Purview Data Loss Prevention, Microsoft Defender for Cloud |
| Google Cloud Platform (GCP) | Data loss prevention, data classification, and access control. | Use Cloud DLP (now part of Sensitive Data Protection) to discover, classify, and redact sensitive data, including data in storage. Use Identity and Access Management (IAM) to control access. | Cloud DLP / Sensitive Data Protection, Cloud IAM, Google Workspace Data Loss Prevention |
| Multi-Cloud Approach | Centralized DLP management, unified policies, and cross-platform monitoring. | Use third-party DLP solutions that integrate with multiple cloud providers. Define a unified data classification taxonomy across all platforms. Implement centralized logging and monitoring. | Third-party DLP solutions (e.g., Netskope, McAfee MVISION Cloud, now Skyhigh Security), Security Information and Event Management (SIEM) systems |
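Whichever tool from the table is used, detection of a sensitive data type typically combines a pattern with a validity check to cut false positives. The sketch below shows that pattern-plus-checksum approach for payment card numbers using the Luhn checksum. It is an illustration of the technique, not how any listed product is implemented; the regular expression and function names are assumptions.

```python
import re
from typing import List

# 13-19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> List[str]:
    """Return Luhn-valid card-like numbers found in text (separators removed)."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


print(find_card_numbers("card 4111 1111 1111 1111 exp 12/29"))  # ['4111111111111111']
```

A production detector would also mask matches before logging them, so the DLP pipeline does not itself become a source of leaked card data.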

Network Security and Segmentation

Securing a multi-cloud environment requires a robust network security strategy. This involves implementing best practices for network security, designing a network segmentation strategy, and visualizing the architecture with security controls. These measures are critical for protecting data, ensuring business continuity, and complying with regulatory requirements.

Network Security Best Practices

Implementing robust network security practices is crucial for safeguarding data and applications in a multi-cloud environment. This includes deploying firewalls, intrusion detection/prevention systems (IDS/IPS), and virtual private networks (VPNs). The combined effect of these measures creates a layered defense that protects against various threats.

- Firewalls: Firewalls act as the first line of defense, controlling network traffic based on predefined rules. They inspect incoming and outgoing traffic, blocking unauthorized access. In a multi-cloud setting, firewalls can be implemented at the perimeter of each cloud provider and within virtual networks. This ensures that traffic between different cloud environments and on-premises infrastructure is securely managed. For example, AWS offers AWS Network Firewall, Azure provides Azure Firewall, and Google Cloud offers Cloud Firewall.

- Intrusion Detection/Prevention Systems (IDS/IPS): IDS/IPS monitor network traffic for malicious activity. IDS passively detects and alerts on suspicious behavior, while IPS actively blocks or mitigates threats. Deploying IDS/IPS across the multi-cloud environment helps identify and respond to potential security incidents. These systems analyze network packets, looking for known attack signatures or anomalies. Examples include Snort and Suricata, which can be deployed in various cloud environments.

- Virtual Private Networks (VPNs): VPNs create encrypted connections over public networks, allowing secure access to cloud resources. They are essential for remote access and for connecting cloud environments to each other or to on-premises infrastructure. Traffic between the user’s device or network and the cloud resources is encrypted, protecting it from eavesdropping and tampering. Common options include the IPsec protocol suite and OpenVPN; each major cloud provider also offers a managed IPsec VPN gateway.
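The firewall behavior described above, rules checked against each connection with anything unmatched blocked, can be sketched as a first-match evaluator with an implicit default deny. This is a simplified model under stated assumptions: first-match ordering resembles priority-ordered rule sets such as Azure network security groups or AWS network ACLs, whereas AWS security groups are allow-only and would need the deny rule placed in a network ACL or firewall instead. The `Rule` type and example rules are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    action: str           # "allow" or "deny"
    source: str           # source CIDR, e.g. "10.0.0.0/8"
    port: Optional[int]   # destination port; None matches any port
    protocol: str = "tcp"


def evaluate(rules: List[Rule], src_ip: str, port: int, protocol: str = "tcp") -> str:
    """Return the action of the first matching rule, or "deny" if none match."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if (
            rule.protocol == protocol
            and addr in ipaddress.ip_network(rule.source)
            and (rule.port is None or rule.port == port)
        ):
            return rule.action
    return "deny"  # implicit default deny


# Example ordered rule set; external addresses use documentation ranges.
RULES = [
    Rule("deny", "203.0.113.0/24", None),  # known-bad range, blocked on all ports
    Rule("allow", "0.0.0.0/0", 443),       # public HTTPS
    Rule("allow", "10.0.0.0/8", 22),       # SSH only from the internal range
]

print(evaluate(RULES, "198.51.100.7", 443))  # allow
print(evaluate(RULES, "198.51.100.7", 22))   # deny (no rule matches)
```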

Network Segmentation Strategy

Network segmentation is a critical security practice that involves dividing a network into smaller, isolated segments. This limits the impact of security breaches by containing them within a specific segment. A well-designed segmentation strategy is vital for protecting critical workloads and data in a multi-cloud environment.

- Isolate Critical Workloads: Segmenting the network allows for the isolation of critical workloads, such as databases and payment processing systems, from less sensitive areas. This prevents attackers from easily moving laterally across the network if they compromise a less critical system.

- Micro-segmentation: Implementing micro-segmentation within each cloud environment further enhances security. This involves creating granular security policies that restrict communication between individual workloads, even within the same virtual network. Tools like VMware NSX and AWS Security Groups can be used to implement micro-segmentation.

- Use of Virtual Networks: Utilizing virtual networks provided by each cloud provider is a key component of segmentation. Each virtual network (e.g., AWS VPC, Azure VNet, Google Cloud VPC) can be treated as a separate segment, with its own security controls and access policies.

- Security Groups/Firewall Rules: Implement security groups (AWS), network security groups (Azure), or VPC firewall rules (Google Cloud) to control traffic flow between segments. These rules should follow the principle of least privilege, allowing only necessary communication between segments.

- Regular Review and Updates: Network segmentation strategies must be regularly reviewed and updated to adapt to evolving threats and changes in the cloud environment. This includes updating firewall rules, security group configurations, and network access control lists.
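Part of the regular review described above can be automated by scanning exported rules for least-privilege violations. The sketch below assumes rules from AWS security groups, Azure network security groups, and Google Cloud firewall rules have already been exported into one common format (`cloud`, `id`, `direction`, `source`, `from_port`, `to_port`); that format and the list of sensitive ports are assumptions for illustration.

```python
from typing import Dict, List

# Assumption: administrative and database ports that should never be open
# to the whole internet under a least-privilege policy.
SENSITIVE_PORTS = {22, 3389, 3306, 5432}
WORLD = {"0.0.0.0/0", "::/0"}


def overly_permissive(rules: List[Dict]) -> List[Dict]:
    """Return ingress rules that expose a sensitive port to any source address."""
    findings = []
    for rule in rules:
        if rule["direction"] != "ingress" or rule["source"] not in WORLD:
            continue
        if any(rule["from_port"] <= p <= rule["to_port"] for p in SENSITIVE_PORTS):
            findings.append(rule)
    return findings


rules = [
    {"cloud": "aws", "id": "sg-1", "direction": "ingress", "source": "0.0.0.0/0", "from_port": 22, "to_port": 22},
    {"cloud": "azure", "id": "nsg-web", "direction": "ingress", "source": "0.0.0.0/0", "from_port": 443, "to_port": 443},
    {"cloud": "gcp", "id": "fw-db", "direction": "ingress", "source": "10.0.0.0/8", "from_port": 5432, "to_port": 5432},
    {"cloud": "gcp", "id": "fw-wide", "direction": "ingress", "source": "::/0", "from_port": 0, "to_port": 65535},
]
print([r["id"] for r in overly_permissive(rules)])  # ['sg-1', 'fw-wide']
```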

Network Architecture with Security Controls Diagram

The following diagram illustrates a simplified multi-cloud network architecture with security controls. The diagram shows how different security components work together to protect the environment.

Diagram Description: The diagram represents a multi-cloud network architecture encompassing two cloud providers (Cloud Provider A and Cloud Provider B) and an on-premises data center. The on-premises data center connects to each cloud provider through secure VPN tunnels.

On-Premises Data Center:

- The on-premises data center includes a firewall that filters all incoming and outgoing traffic.

- A VPN gateway establishes secure connections to both Cloud Provider A and Cloud Provider B.

- An IDS/IPS monitors network traffic for malicious activity.

Cloud Provider A:

- A perimeter firewall controls traffic entering and exiting the cloud environment.

- A VPN gateway provides secure access from on-premises and other cloud environments.

- A virtual network (VPC) is segmented into different subnets.

- Each subnet has its own security groups or network access control lists (ACLs) that control traffic flow.

- An IDS/IPS monitors network traffic within the cloud environment.

Cloud Provider B:

Similar to Cloud Provider A, Cloud Provider B also has a perimeter firewall, VPN gateway, virtual network (VPC), subnets with security groups, and an IDS/IPS.

Connectivity and Security Controls:

- Secure VPN tunnels connect the on-premises data center to both Cloud Provider A and Cloud Provider B.

- Firewalls are positioned at the perimeter of each cloud provider and within the on-premises data center.

- IDS/IPS systems are deployed in all environments to detect and prevent intrusions.

- Security groups or network ACLs are used to segment the virtual networks and control traffic flow between subnets.

Data Flow:

- Data can flow securely between the on-premises data center and each cloud provider via VPN tunnels.

- Traffic between different subnets within a cloud provider is controlled by security groups or network ACLs.

- Traffic between cloud providers is controlled by the respective firewalls and VPN gateways.

Overall Security Posture:

- The architecture provides a layered security approach with firewalls, VPNs, and IDS/IPS.

- Network segmentation isolates critical workloads and limits the impact of security breaches.

- Centralized management and monitoring tools can be used to manage security controls across the multi-cloud environment.
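The architecture described above can also be captured as data, which lets a simple check confirm that every environment still carries the baseline controls and that every inter-environment link is a VPN tunnel. The environment names, control labels, and link tuples below are illustrative assumptions modeled on the description, not the output of any tool.

```python
from typing import Dict, List, Set, Tuple

# Controls every environment must have, per the architecture described above.
REQUIRED_CONTROLS = {"firewall", "vpn_gateway", "ids_ips"}

# The described topology as data (names are illustrative).
ENVIRONMENTS: Dict[str, Set[str]] = {
    "on_prem": {"firewall", "vpn_gateway", "ids_ips"},
    "cloud_a": {"firewall", "vpn_gateway", "ids_ips", "segmented_vpc"},
    "cloud_b": {"firewall", "vpn_gateway", "ids_ips", "segmented_vpc"},
}
LINKS: List[Tuple[str, str, str]] = [
    ("on_prem", "cloud_a", "vpn"),
    ("on_prem", "cloud_b", "vpn"),
]


def baseline_gaps(envs: Dict[str, Set[str]], links: List[Tuple[str, str, str]]) -> List[str]:
    """List missing baseline controls and links not carried over VPN."""
    gaps = []
    for name, controls in envs.items():
        for missing in sorted(REQUIRED_CONTROLS - controls):
            gaps.append(f"{name}: missing {missing}")
    for a, b, transport in links:
        if transport != "vpn":
            gaps.append(f"{a}<->{b}: link not carried over a VPN tunnel ({transport})")
    return gaps


print(baseline_gaps(ENVIRONMENTS, LINKS))  # []
```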

Monitoring, Logging, and Incident Response

Establishing robust monitoring, logging, and incident response capabilities is paramount for maintaining a strong security posture in a multi-cloud environment. This involves continuously observing and analyzing security events, maintaining comprehensive logs, and having a well-defined process to respond to and mitigate security incidents effectively. The ability to rapidly detect, investigate, and remediate threats across diverse cloud platforms is critical to minimizing potential damage and ensuring business continuity.

Centralized Logging and Monitoring

Centralized logging and monitoring are essential components of a proactive security strategy. They provide a unified view of security events across all cloud environments, facilitating timely detection of threats and enabling comprehensive security analysis. The advantages of centralized logging and monitoring are:

- Enhanced Visibility: Provides a consolidated view of security events from various cloud providers, allowing for easier identification of potential threats and anomalous activities.

- Faster Detection: Enables quicker detection of security incidents by aggregating and correlating logs from multiple sources in real time.

- Improved Incident Response: Simplifies incident response by providing a single point of access for security data, enabling faster investigation and remediation.

- Simplified Compliance: Streamlines compliance efforts by providing a centralized repository of security logs for auditing and reporting purposes.

- Proactive Threat Hunting: Facilitates proactive threat hunting by enabling security teams to analyze historical log data for patterns and indicators of compromise.
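Correlation is where centralization pays off: an attacker spraying one identity's credentials across several clouds looks unremarkable in each provider's logs but obvious in a combined view. A minimal sketch of such a correlation rule, assuming events were already normalized to hypothetical `user`, `cloud`, `time`, and `outcome` fields; the 10-minute window and two-cloud threshold are illustrative defaults.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, List


def correlate_failed_logins(
    events: List[Dict],
    window: timedelta = timedelta(minutes=10),
    min_clouds: int = 2,
) -> List[str]:
    """Flag users with failed logins in at least `min_clouds` clouds within `window`."""
    failures = defaultdict(list)
    for e in events:
        if e["outcome"] == "failure":
            failures[e["user"]].append((e["time"], e["cloud"]))

    flagged = []
    for user, items in failures.items():
        items.sort()  # chronological order
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most `window`.
            while items[end][0] - items[start][0] > window:
                start += 1
            if len({cloud for _, cloud in items[start:end + 1]}) >= min_clouds:
                flagged.append(user)
                break
    return sorted(flagged)


t0 = datetime(2024, 1, 1, 12, 0)
sample = [
    {"user": "alice", "cloud": "aws", "time": t0, "outcome": "failure"},
    {"user": "alice", "cloud": "azure", "time": t0 + timedelta(minutes=3), "outcome": "failure"},
    {"user": "bob", "cloud": "aws", "time": t0, "outcome": "failure"},
    {"user": "bob", "cloud": "gcp", "time": t0 + timedelta(hours=1), "outcome": "failure"},
]
print(correlate_failed_logins(sample))  # ['alice']
```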

Integrating Security Logs into a Centralized SIEM Solution

Integrating security logs from different cloud providers into a centralized Security Information and Event Management (SIEM) solution is a key step in achieving unified monitoring. This integration enables correlation of events, threat detection, and incident response across the entire multi-cloud infrastructure. Methods for integrating logs include:

- Native Cloud Integrations: Many cloud providers offer native integrations with popular SIEM solutions. These integrations typically involve configuring log forwarding to the SIEM platform. For example, Amazon CloudWatch Logs can be forwarded to Splunk, and Azure Monitor logs can be sent to Microsoft Sentinel (formerly Azure Sentinel).

- Agent-Based Collection: Agents can be installed on virtual machines or servers within each cloud environment to collect logs and forward them to the SIEM. This method provides greater flexibility and control over log collection but requires more management overhead.

- API-Based Collection: SIEM solutions can use APIs provided by cloud providers to collect logs. This method is particularly useful for collecting logs from cloud-native services that may not support agent-based collection.

- Log Forwarders: Dedicated log forwarders, such as rsyslog or Fluentd, can be deployed to collect logs from various sources and forward them to the SIEM. These forwarders can be configured to parse and normalize logs before sending them, which can improve the SIEM’s ability to analyze the data.

When integrating logs, consider these points:

- Log Format Normalization: Standardizing log formats is crucial for effective analysis. SIEM solutions often provide features for parsing and normalizing logs from different sources.

- Log Retention Policies: Define appropriate log retention policies based on compliance requirements and security needs.

- SIEM Configuration: Configure the SIEM to correlate events, detect anomalies, and generate alerts based on predefined rules and threat intelligence feeds.

- Testing and Validation: Regularly test the log integration to ensure that logs are being collected and processed correctly.
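Log format normalization, the first consideration above, amounts to mapping each provider's record layout onto one shared schema before correlation. The sketch below covers AWS CloudTrail records and Google Cloud audit log entries; the source field names follow those formats as commonly documented, but they should be verified against the exports you actually receive, and the target schema (`cloud`, `time`, `actor`, `action`, `source_ip`) is a hypothetical choice. An Azure normalizer would follow the same pattern.

```python
from typing import Any, Dict


def normalize_cloudtrail(record: Dict[str, Any]) -> Dict[str, Any]:
    """Map an AWS CloudTrail record onto the common schema."""
    return {
        "cloud": "aws",
        "time": record["eventTime"],
        "actor": record.get("userIdentity", {}).get("arn"),
        "action": f'{record["eventSource"]}:{record["eventName"]}',
        "source_ip": record.get("sourceIPAddress"),
    }


def normalize_gcp_audit(entry: Dict[str, Any]) -> Dict[str, Any]:
    """Map a Google Cloud audit log entry onto the common schema."""
    payload = entry.get("protoPayload", {})
    return {
        "cloud": "gcp",
        "time": entry["timestamp"],
        "actor": payload.get("authenticationInfo", {}).get("principalEmail"),
        "action": f'{payload.get("serviceName")}:{payload.get("methodName")}',
        "source_ip": payload.get("requestMetadata", {}).get("callerIp"),
    }


NORMALIZERS = {"aws": normalize_cloudtrail, "gcp": normalize_gcp_audit}


def normalize(source: str, raw: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch a raw log record to the normalizer for its source cloud."""
    return NORMALIZERS[source](raw)
```

Once every record shares one schema, SIEM correlation rules can be written once instead of once per provider.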

Incident Response Plan

A detailed incident response plan is essential for effectively handling security incidents in a multi-cloud environment. The plan should outline the steps to take when a security incident is detected, ensuring a coordinated and efficient response. Key components of an incident response plan:

- Preparation: Establish clear roles and responsibilities, define communication channels, and develop a playbook for common incident scenarios.

- Detection and Analysis: Monitor security logs and alerts from the SIEM and other security tools. Analyze suspicious activities to determine the scope and severity of the incident.

- Containment: Isolate affected systems or accounts to prevent further damage. This may involve disabling compromised accounts, blocking malicious traffic, or taking systems offline.

- Eradication: Remove the cause of the incident, such as malware or unauthorized access. This may involve patching vulnerabilities, removing malicious code, or resetting compromised credentials.

- Recovery: Restore affected systems and data from backups. Verify that the systems are functioning correctly and that the vulnerability has been addressed.

- Post-Incident Activity: Conduct a post-incident review to identify lessons learned and improve the incident response plan. This may involve updating security policies, improving security controls, and providing additional training.

Example of an Incident Response Workflow:

- Detection: A security alert is triggered by the SIEM, indicating a potential security incident.

- Triage: The incident response team assesses the alert to determine its validity and severity.

- Investigation: The team investigates the incident, gathering evidence and analyzing logs to understand the scope and impact.

- Containment: The team takes steps to contain the incident, such as isolating affected systems or accounts.

- Remediation: The team remediates the incident by removing malware, patching vulnerabilities, or resetting credentials.

- Recovery: The team restores affected systems and data from backups.

- Post-Incident Review: The team conducts a post-incident review to identify lessons learned and improve the incident response plan.
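Incident-tracking tooling can enforce the workflow above so that no step, particularly the post-incident review, is silently skipped. A minimal sketch: the phase names mirror the workflow steps, while the terminal `closed` state and the rule that triage may close a false positive directly are assumptions added for illustration.

```python
from typing import List

# Phases from the workflow above, in order. "closed" is an added terminal state.
PHASES = [
    "detection", "triage", "investigation", "containment",
    "remediation", "recovery", "post_incident_review",
]


class Incident:
    def __init__(self, alert_id: str) -> None:
        self.alert_id = alert_id
        self.history: List[str] = ["detection"]  # every incident starts at detection

    @property
    def phase(self) -> str:
        return self.history[-1]

    def advance(self, next_phase: str) -> None:
        """Move to the next phase, rejecting skipped or out-of-order steps."""
        if self.phase == "closed":
            raise ValueError(f"{self.alert_id} is already closed")
        if next_phase == "closed":
            # Triage may close a false positive; otherwise the review must happen first.
            if self.phase not in ("triage", "post_incident_review"):
                raise ValueError(f"cannot close {self.alert_id} from {self.phase}")
        elif next_phase not in PHASES or PHASES.index(next_phase) != PHASES.index(self.phase) + 1:
            raise ValueError(f"cannot move {self.alert_id} from {self.phase} to {next_phase}")
        self.history.append(next_phase)
```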

Effective incident response in a multi-cloud environment necessitates:

- Cross-Cloud Coordination: Establish clear communication channels and protocols for coordinating incident response across different cloud providers.

- Automation: Automate incident response tasks, such as containment and remediation, to reduce response times and improve efficiency.

- Regular Training and Drills: Conduct regular training exercises and simulations to ensure that the incident response team is prepared to handle security incidents.

- Collaboration with Cloud Providers: Establish relationships with the security teams of cloud providers to facilitate communication and collaboration during incident response.
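Automated containment across clouds can be sketched as playbooks (alert type mapped to ordered steps) executed through per-cloud adapter functions, with a dry-run default so responders can review actions before they run. Everything here is hypothetical: the playbook names, the adapter interface, and the assumption that a compromised federated identity should be contained in every cloud while other alerts stay in their source cloud. Real adapters would call each provider's IAM or networking API.

```python
from typing import Callable, Dict, List

# Hypothetical playbooks: alert type -> ordered containment steps.
PLAYBOOKS: Dict[str, List[str]] = {
    "compromised_credentials": ["disable_user", "revoke_sessions"],
    "malicious_ip": ["block_ip"],
}

# cloud name -> step name -> function performing it (supplied by the caller).
Adapters = Dict[str, Dict[str, Callable[[str], None]]]


def run_containment(alert: Dict[str, str], adapters: Adapters, dry_run: bool = True) -> List[str]:
    """Run (or, by default, only describe) the containment steps for an alert."""
    steps = PLAYBOOKS.get(alert["type"])
    if steps is None:
        return [f"no playbook for {alert['type']}; escalate to on-call responder"]
    # Assumption: identities are federated from one IdP, so a compromised
    # identity is contained in every cloud; other alerts stay in their source cloud.
    clouds = list(adapters) if alert["type"] == "compromised_credentials" else [alert["cloud"]]
    log = []
    for cloud in clouds:
        for step in steps:
            if not dry_run:
                adapters[cloud][step](alert["target"])
            log.append(f"{'DRY-RUN ' if dry_run else ''}{cloud}:{step}({alert['target']})")
    return log
```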

Policy Enforcement and Automation

Automating policy enforcement is crucial for maintaining a consistent security posture across multi-cloud environments. It reduces manual effort, minimizes human error, and enables faster responses to security events. This section explores techniques for automating enforcement, auditing compliance, and maintaining the unified security policy.

Automating Security Policy Enforcement

Automating security policy enforcement streamlines operations and ensures that security controls are consistently applied across all cloud environments. This approach enhances efficiency and minimizes the risk of misconfigurations or non-compliance.

- Infrastructure as Code (IaC): IaC allows you to define and manage infrastructure resources, including security configurations, through code. Tools like Terraform, AWS CloudFormation, and Azure Resource Manager enable automated deployment and configuration of security policies. For example, you can define network security groups, access control lists, and encryption settings as code, ensuring consistent implementation across all environments.

- Configuration Management Tools: Configuration management tools such as Ansible, Chef, and Puppet can be used to automate the configuration and enforcement of security policies on servers and applications. These tools ensure that systems are configured according to predefined security standards, including patch management, software updates, and security hardening.

- Security Information and Event Management (SIEM) Integration: Integrating SIEM systems with cloud platforms enables real-time monitoring and automated response to security events. SIEM tools can analyze logs and alerts from various cloud services and trigger automated actions based on predefined rules. For example, if a SIEM detects a suspicious login attempt, it can automatically block the user account or isolate the affected system.

- Cloud-Native Security Services: Leverage the native security services provided by each cloud provider, such as AWS Security Hub, Microsoft Defender for Cloud (formerly Azure Security Center), and Google Cloud Security Command Center. These services offer centralized security management, policy enforcement, and compliance monitoring, and many include automated remediation features that can address security issues without manual intervention.

- Automated Testing and Validation: Implement automated testing to validate security configurations and ensure compliance with security policies. This includes penetration testing, vulnerability scanning, and configuration audits. Automated testing tools can identify security vulnerabilities and misconfigurations, allowing for timely remediation.
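IaC and automated validation combine naturally: a pipeline can inspect a Terraform plan before it is applied and fail the build on policy violations. The sketch below reads the JSON produced by `terraform plan -out=plan.out` followed by `terraform show -json plan.out > plan.json` and checks planned `aws_db_instance` resources for the `storage_encrypted` and `publicly_accessible` attributes. The two rules are illustrative examples of a unified policy, not a complete rule set.

```python
import json
from typing import Any, Dict, List


def load_plan(path: str) -> Dict[str, Any]:
    """Read the JSON written by `terraform show -json <planfile>`."""
    with open(path) as fh:
        return json.load(fh)


def check_plan(plan: Dict[str, Any]) -> List[str]:
    """Flag planned aws_db_instance resources that are unencrypted or public."""
    violations = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "aws_db_instance":
            continue
        after = rc.get("change", {}).get("after")
        if after is None:  # resource is being destroyed; nothing to check
            continue
        if after.get("storage_encrypted") is not True:
            violations.append(f"{rc['address']}: storage_encrypted must be true")
        if after.get("publicly_accessible") is True:
            violations.append(f"{rc['address']}: publicly_accessible must be false")
    return violations
```

A CI job would call `check_plan(load_plan("plan.json"))` and exit non-zero if the returned list is not empty.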

Auditing Security Controls and Policy Compliance

Regularly auditing security controls and policy compliance is essential for identifying and addressing vulnerabilities, ensuring that security measures are effective, and maintaining a strong security posture.

- Regular Vulnerability Scanning: Conduct regular vulnerability scans using automated tools to identify weaknesses in systems and applications. These scans should cover all cloud environments and assess for known vulnerabilities, misconfigurations, and outdated software.

- Configuration Audits: Perform configuration audits to verify that systems and applications are configured according to security best practices and organizational policies. This includes checking for misconfigurations, unauthorized changes, and deviations from security standards.

- Log Analysis: Regularly analyze logs from all cloud services and systems to detect security incidents, identify suspicious activities, and monitor compliance with security policies. This includes reviewing access logs, security event logs, and system logs.

- Compliance Reporting: Generate compliance reports to demonstrate adherence to regulatory requirements and internal security policies. These reports should include evidence of security controls, audit results, and remediation efforts.

- Automated Compliance Checks: Implement automated compliance checks using cloud-native compliance services (e.g., AWS Config, Azure Policy) or third-party compliance tools. These tools continuously monitor cloud resources for compliance with predefined rules and provide alerts when violations are detected.
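The value of a unified policy shows up when one rule set is evaluated against every provider's resources and reported per cloud. A minimal sketch, assuming resource inventories (for example, exported from AWS Config, Azure Policy, or a third-party tool) have already been normalized to hypothetical `cloud`, `id`, `encrypted`, and `public` fields; the two rules are illustrative.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

# One rule set, written once and applied to every provider's resources.
# Field names ("cloud", "id", "encrypted", "public") are hypothetical.
RULES: Dict[str, Callable[[Dict], bool]] = {
    "encryption-at-rest": lambda r: r.get("encrypted") is True,
    "no-public-access": lambda r: r.get("public") is not True,
}


def compliance_report(
    inventory: List[Dict],
) -> Tuple[Dict[str, Tuple[int, int]], List[Tuple[str, str, str]]]:
    """Return ({cloud: (passed, total)}, [(cloud, resource_id, failed_rule), ...])."""
    passed: Counter = Counter()
    total: Counter = Counter()
    violations: List[Tuple[str, str, str]] = []
    for res in inventory:
        for name, rule in RULES.items():
            total[res["cloud"]] += 1
            if rule(res):
                passed[res["cloud"]] += 1
            else:
                violations.append((res["cloud"], res["id"], name))
    report = {cloud: (passed[cloud], total[cloud]) for cloud in total}
    return report, violations
```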

Updating and Maintaining the Unified Security Policy

The unified security policy is a living document that needs to be regularly reviewed and updated to reflect changes in the threat landscape, business requirements, and cloud environments. The following procedure outlines how to maintain the policy.

Procedure for Updating and Maintaining the Unified Security Policy:

- Review and Assessment: Conduct a periodic review of the current security policy, typically annually or more frequently if there are significant changes in the environment or threat landscape. Assess the policy’s effectiveness, identify any gaps, and gather feedback from stakeholders.

- Change Management: Establish a formal change management process for updating the security policy. This process should include a clear procedure for proposing changes, reviewing and approving changes, and communicating changes to stakeholders.

- Stakeholder Involvement: Involve relevant stakeholders, including security teams, IT teams, legal and compliance teams, and business units, in the policy review and update process. This ensures that the policy aligns with the needs and requirements of the organization.

- Policy Updates: Based on the review and assessment, update the security policy to address any identified gaps, reflect changes in the environment, and incorporate new security best practices. Clearly document all changes made to the policy.

- Communication and Training: Communicate the updated security policy to all relevant stakeholders and provide training to ensure that they understand the changes and their responsibilities.

- Implementation and Enforcement: Implement the updated security policy across all cloud environments and ensure that security controls are configured and enforced accordingly.

- Monitoring and Evaluation: Continuously monitor the effectiveness of the updated security policy and evaluate its impact on the organization’s security posture. Use metrics and key performance indicators (KPIs) to measure compliance and identify areas for improvement.
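Two commonly used KPIs for this step are the share of policy checks passing and the mean time to remediate findings. The sketch below computes both, plus a count of open findings; the finding record format (`opened`, `resolved`, with `None` for unresolved) is a hypothetical choice for illustration.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional


def compliance_rate(passing_checks: int, total_checks: int) -> float:
    """Percentage of policy checks that passed; total_checks must be positive."""
    return 100 * passing_checks / total_checks


def mean_time_to_remediate(findings: List[Dict]) -> Optional[timedelta]:
    """Average time from opened to resolved, over resolved findings only."""
    durations = [f["resolved"] - f["opened"] for f in findings if f["resolved"] is not None]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


def open_findings(findings: List[Dict]) -> int:
    """Number of findings not yet resolved."""
    return sum(1 for f in findings if f["resolved"] is None)


t = datetime(2024, 1, 1)
sample = [
    {"opened": t, "resolved": t + timedelta(hours=2)},
    {"opened": t, "resolved": t + timedelta(hours=4)},
    {"opened": t, "resolved": None},
]
print(compliance_rate(45, 50), mean_time_to_remediate(sample), open_findings(sample))
# 90.0 3:00:00 1
```

Tracking these values over successive review cycles shows whether policy updates are actually improving the security posture.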

Ending Remarks

Developing a unified security policy for a multi-cloud environment is not just a best practice; it is a necessity for modern organizations. By implementing the strategies outlined in this guide, you can achieve a consistent security posture, reduce your attack surface, and ensure compliance with relevant regulations. Remember that security is an ongoing process, requiring continuous monitoring, adaptation, and improvement.

Embrace automation, foster collaboration, and stay informed to keep your multi-cloud environment secure and resilient.

Q&A

What is the primary benefit of a unified security policy?

A unified policy ensures consistent security across all cloud environments, reducing vulnerabilities and simplifying security management.

How often should a unified security policy be reviewed and updated?

The policy should be reviewed and updated at least annually, or more frequently if there are significant changes in the threat landscape, cloud environments, or compliance requirements.

What are the key components of a successful incident response plan in a multi-cloud environment?

A successful plan includes clear roles and responsibilities, established communication channels, well-defined incident handling procedures, and regular testing and exercises.

How can automation help with security policy enforcement?

Automation enables consistent application of security controls, reduces manual errors, and speeds up remediation efforts.

What are the main challenges in implementing a unified security policy?

Challenges include varying security features across cloud providers, integrating different security tools, and aligning security policies with diverse business requirements.