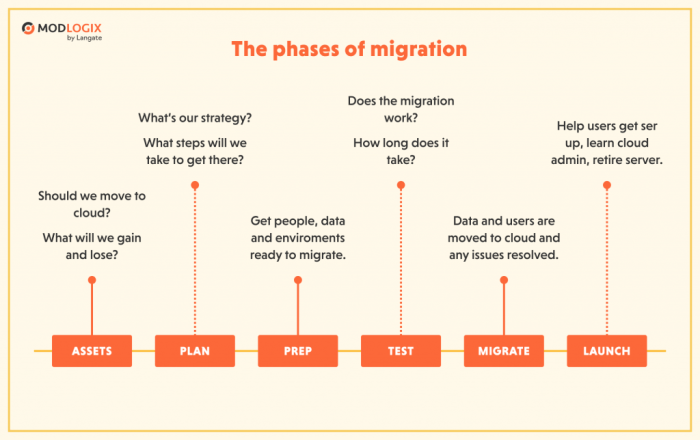

Embarking on a cloud migration journey requires meticulous planning and execution. The following sections outline the essential steps involved in creating a robust cloud migration plan and timeline. The objective is to move existing infrastructure, applications, and data to a cloud environment while optimizing resources and enhancing operational efficiency.

The core of a successful cloud migration is a well-defined strategy that covers assessment, planning, execution, and post-migration optimization. This involves evaluating current infrastructure, defining clear business objectives, selecting the appropriate cloud provider and service model, and choosing the optimal migration strategy. A structured approach is crucial to minimize risks, control costs, and ensure a seamless transition, and this guide follows one so that each facet of the migration process is addressed.

Assessing Current Infrastructure

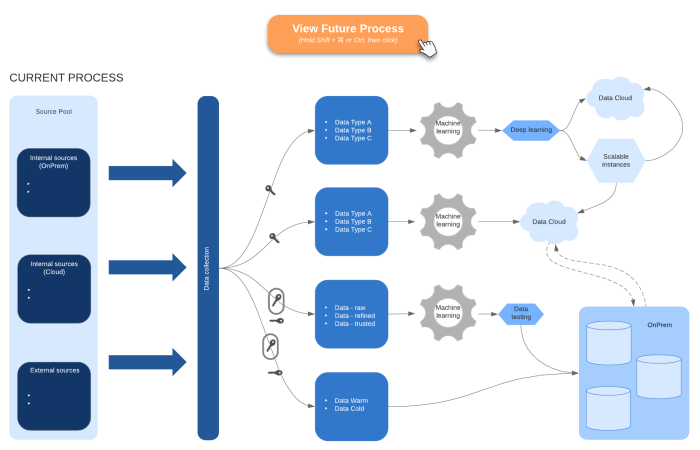

Understanding the existing on-premises infrastructure is the foundational step in cloud migration planning. This assessment provides a comprehensive view of the current IT landscape, enabling informed decisions about migration strategies and resource allocation. A thorough analysis minimizes risks, optimizes costs, and ensures a smooth transition to the cloud.

Common On-Premises Infrastructure Components

On-premises infrastructure typically encompasses a variety of hardware and software components, each playing a critical role in delivering IT services. These components must be thoroughly understood to create an effective migration plan.

- Servers: These are the core processing units, responsible for hosting applications, databases, and other critical workloads. Server types include:

- Physical Servers: Traditional servers that are dedicated hardware units.

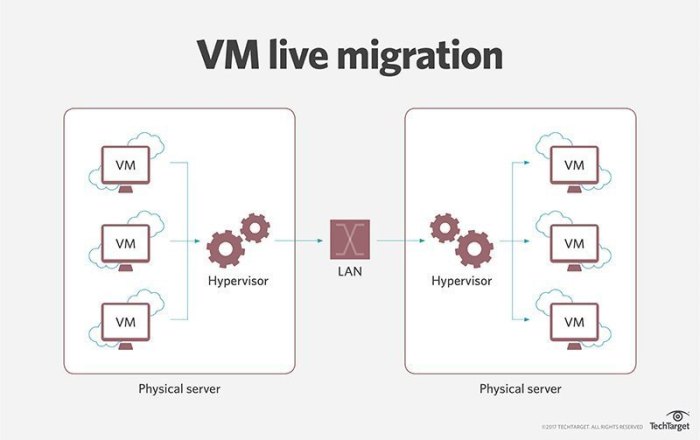

- Virtual Servers: Servers that run on virtual machines (VMs), providing greater flexibility and resource utilization.

- Storage: Storage systems provide the capacity to store data, including:

- Direct Attached Storage (DAS): Storage directly connected to a server.

- Network Attached Storage (NAS): Storage accessible over a network, typically using file-based protocols.

- Storage Area Network (SAN): High-performance storage networks using block-based protocols.

- Networking: Networking components facilitate communication and data transfer:

- Routers: Direct network traffic between different networks.

- Switches: Connect devices within a network.

- Firewalls: Protect the network from unauthorized access.

- Load Balancers: Distribute network traffic across multiple servers.

- Operating Systems: The software that manages hardware and provides a platform for applications. Common examples include Windows Server, Linux distributions (e.g., Ubuntu, CentOS), and proprietary operating systems.

- Databases: Systems for storing and managing data, such as:

- Relational Databases: Systems like Oracle, Microsoft SQL Server, and PostgreSQL.

- NoSQL Databases: Systems like MongoDB and Cassandra.

- Applications: The software applications that support business operations, including:

- Custom Applications: Applications developed specifically for the organization.

- Commercial Off-The-Shelf (COTS) Applications: Pre-packaged software like ERP and CRM systems.

- Security Infrastructure: Components that protect the IT environment, including:

- Identity and Access Management (IAM) systems: Systems for managing user identities and access rights.

- Security Information and Event Management (SIEM) systems: Systems for monitoring and analyzing security events.

Tools and Techniques for Assessing Existing IT Resources

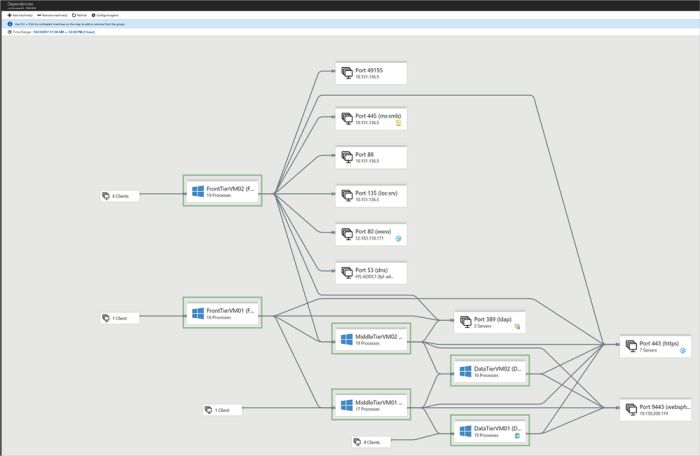

A comprehensive assessment requires the use of various tools and techniques to gather data and analyze the current infrastructure. The assessment should consider performance, utilization, dependencies, and compliance requirements.

- Inventory and Discovery Tools: These tools automatically discover and catalog hardware and software assets. Examples include:
  - Network Scanners: Tools like Nmap and OpenVAS that identify devices and services on a network.
  - Configuration Management Databases (CMDBs): Systems that store detailed information about IT assets.
- Performance Monitoring Tools: These tools monitor server performance, network traffic, and application behavior. Examples include:
  - System Monitoring Tools: Tools like Nagios, Zabbix, and SolarWinds that track CPU usage, memory consumption, and disk I/O.
  - Application Performance Monitoring (APM) Tools: Tools like New Relic and Dynatrace that provide insights into application performance and dependencies.
- Dependency Mapping Tools: These tools identify dependencies between applications, servers, and other infrastructure components. This information is crucial for planning the migration order and minimizing downtime. Examples include:
  - Automated Dependency Mapping Tools: Tools that automatically discover dependencies.
  - Manual Mapping: Documentation of dependencies, which can be time-consuming but often necessary for complex environments.
- Data Analysis and Reporting: Analyzing the data collected from the assessment tools to generate reports that inform the migration strategy. This includes:
  - Utilization Reports: Reports showing resource utilization (CPU, memory, storage) to identify underutilized and overutilized resources.
  - Performance Reports: Reports on application response times, database performance, and network latency.
  - Cost Analysis: Analysis of current IT costs to identify opportunities for cost savings in the cloud.
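As a rough illustration of how a utilization report might flag underutilized and overutilized resources, the following sketch classifies servers by average CPU usage. The server names, sample values, and the 20% and 80% thresholds are hypothetical assumptions, not values prescribed by any monitoring tool.

```python
from statistics import mean

# Hypothetical thresholds (percent); tune them for your own environment.
UNDERUTILIZED_BELOW = 20.0
OVERUTILIZED_ABOVE = 80.0


def classify_utilization(samples):
    """Label a resource by its average utilization over the sampled period."""
    average = mean(samples)
    if average < UNDERUTILIZED_BELOW:
        return "underutilized"
    if average > OVERUTILIZED_ABOVE:
        return "overutilized"
    return "right-sized"


# Hypothetical CPU utilization samples (percent), e.g. exported from a monitoring tool.
cpu_samples = {
    "web-01": [12.0, 9.5, 15.0, 11.0],
    "db-01": [85.0, 92.0, 88.5, 90.0],
    "app-01": [45.0, 55.0, 50.0, 48.0],
}

report = {server: classify_utilization(values) for server, values in cpu_samples.items()}

for server, label in report.items():
    print(f"{server}: {label}")
```

Averages hide short spikes, so a real assessment would likely also look at peak or percentile values before resizing anything.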

Categorizing Infrastructure Components Based on Cloud Suitability

Categorizing infrastructure components based on their suitability for cloud migration is a crucial step in planning. This categorization informs the choice of migration strategy for each component.

| Infrastructure Component | Description | Cloud Suitability | Migration Strategy |
|---|---|---|---|
| Legacy Applications on Physical Servers | Applications running on older operating systems and hardware, often with high maintenance costs. | Low | Re-architect or Re-platform. Consider refactoring or replacing with cloud-native alternatives. |
| Virtual Machines (VMs) | Virtual machines running on a hypervisor, such as VMware or Hyper-V. | Medium | Lift-and-Shift, Re-platform, or Re-architect. Lift-and-shift is often the simplest approach for a quick migration. |
| Web Applications | Applications accessible via a web browser, often with stateless architecture. | High | Re-platform or Re-architect. Cloud-native services such as containerization or serverless can be considered. |
| Databases | Relational and NoSQL databases. | Medium to High (depending on database type and cloud provider support) | Re-platform, Re-architect, or Replace. Consider managed database services offered by cloud providers. |
| Storage Systems (NAS/SAN) | Network-attached storage and storage area networks. | Medium | Lift-and-Shift or Replace. Consider cloud-based object storage (e.g., AWS S3, Azure Blob Storage, Google Cloud Storage) for long-term storage. |
| Network Infrastructure (Firewalls, Routers) | On-premises network devices. | Medium | Re-architect. Leverage cloud-based network services, such as virtual firewalls and VPN gateways. |
| Identity and Access Management (IAM) Systems | Systems for managing user identities and access control. | High | Re-architect or Replace. Consider integrating with cloud-based IAM services. |

Defining Migration Goals and Objectives

Establishing clear business objectives is paramount for a successful cloud migration. Without well-defined goals, the migration effort can become directionless, leading to wasted resources, missed opportunities, and ultimately, failure to realize the anticipated benefits of cloud adoption. Defining objectives provides a framework for decision-making, allowing organizations to prioritize migration efforts, select appropriate cloud services, and measure the overall success of the project.

Importance of Clear Business Objectives

Clear business objectives provide a roadmap for the cloud migration process. They ensure alignment between the technical aspects of the migration and the overall strategic goals of the organization. These objectives serve as a benchmark against which progress can be measured and adjustments made as needed.

Measurable Key Performance Indicators (KPIs) for Successful Migration

KPIs are essential for quantifying the success of a cloud migration. They provide concrete metrics to track progress, identify areas for improvement, and demonstrate the value of the migration to stakeholders. Careful selection and monitoring of KPIs allow for data-driven decision-making throughout the migration lifecycle.

- Cost Reduction: The primary goal for many organizations is to reduce IT infrastructure costs.
  - KPI: Percentage reduction in annual IT infrastructure spending. This KPI can be calculated by comparing the total cost of ownership (TCO) before and after the migration. For example, if an organization spends $1 million annually on on-premises infrastructure and, after migrating to the cloud, the spending is reduced to $700,000, the cost reduction is 30%.
  - KPI: Cloud spending as a percentage of total IT budget. This provides a clear view of the cloud’s contribution to overall IT expenditure.
- Improved Scalability and Performance: Cloud environments offer inherent scalability.
  - KPI: Percentage increase in application availability. This is often measured by tracking uptime. For instance, achieving 99.9% uptime (roughly 8.8 hours of downtime per year) indicates high availability.
  - KPI: Reduction in application response time. This is critical for user experience. If the average response time decreases from 2 seconds to 0.5 seconds, it signifies a significant performance improvement.
  - KPI: Peak load capacity increase. This can be measured by the number of concurrent users a service can handle.
- Enhanced Security and Compliance: Cloud providers offer robust security features and often help meet compliance requirements.
  - KPI: Number of security incidents. This is a measure of the effectiveness of security measures. A decrease in the number of incidents indicates improved security posture.
  - KPI: Compliance with relevant industry regulations (e.g., GDPR, HIPAA). Compliance can be measured by tracking the completion of compliance audits and the absence of any findings.
- Increased Agility and Innovation: Cloud platforms facilitate faster development cycles and enable organizations to bring new products and services to market more quickly.
  - KPI: Time-to-market for new applications or features. A reduction in time-to-market indicates increased agility. If the time to launch a new application decreases from 6 months to 3 months, the organization has significantly improved its agility.
  - KPI: Frequency of software releases. Frequent releases demonstrate a faster pace of innovation.
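The arithmetic behind several of these KPIs can be sketched in a few lines. The helper names below are hypothetical; the input figures are the ones used in the examples above, plus an assumed 30-day month for the availability calculation.

```python
def percent_reduction(before, after):
    """Percentage by which a metric dropped between two measurements."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) * 100 / before


def availability_percent(total_minutes, downtime_minutes):
    """Share of the measurement window during which the service was up."""
    if total_minutes <= 0:
        raise ValueError("total_minutes must be positive")
    return (total_minutes - downtime_minutes) * 100 / total_minutes


# Annual infrastructure spend: $1,000,000 before, $700,000 after.
cost_reduction = percent_reduction(1_000_000, 700_000)

# Average response time: 2 seconds before, 0.5 seconds after.
response_time_reduction = percent_reduction(2.0, 0.5)

# Time-to-market: 6 months before, 3 months after.
time_to_market_reduction = percent_reduction(6, 3)

# Assumed window: a 30-day month (43,200 minutes) with 43.2 minutes of downtime.
monthly_availability = availability_percent(43_200, 43.2)

print(f"Cost reduction: {cost_reduction:.1f}%")
print(f"Response time reduction: {response_time_reduction:.1f}%")
print(f"Time-to-market reduction: {time_to_market_reduction:.1f}%")
print(f"Availability: {monthly_availability:.2f}%")
```

Recording the baseline values before the migration starts is what makes these comparisons possible afterwards.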

Examples of Common Cloud Migration Goals

Organizations often have multiple, overlapping goals for their cloud migrations. The specific goals will vary depending on the organization’s industry, size, and existing IT infrastructure.

- Cost Reduction: Migrating to the cloud can reduce costs by eliminating the need for on-premise hardware, reducing IT staff, and optimizing resource utilization. For instance, a study by Gartner found that organizations can reduce their IT infrastructure costs by up to 30% by migrating to the cloud.

- Improved Scalability: The cloud provides the ability to scale resources up or down on demand. This ensures that applications can handle peak loads without performance degradation. An e-commerce company can scale its infrastructure to handle the increased traffic during a holiday season.

- Enhanced Security: Cloud providers invest heavily in security, offering features like data encryption, intrusion detection, and access control. This can significantly improve an organization’s security posture. For example, many cloud providers offer security services that meet the requirements of the ISO 27001 standard.

- Increased Business Agility: Cloud platforms enable organizations to deploy new applications and services quickly, respond rapidly to changing market conditions, and innovate more effectively. This agility allows businesses to be more competitive.

- Improved Disaster Recovery: Cloud-based disaster recovery solutions provide a more reliable and cost-effective way to protect data and applications from outages. Organizations can replicate their data and applications to a cloud region and quickly recover in the event of a disaster.

- Enhanced Collaboration: Cloud-based collaboration tools can improve communication and teamwork. Employees can access data and collaborate on projects from anywhere with an internet connection.

Selecting a Cloud Provider and Service Model

Choosing the right cloud provider and service model is a critical step in the cloud migration process. This decision impacts cost, performance, security, and the overall success of the migration. A thorough evaluation of available options, considering specific workload requirements and business objectives, is essential. Understanding the nuances of each provider and service model enables informed decision-making and optimization of cloud resources.

Identifying Major Cloud Service Providers and Core Offerings

The cloud computing landscape is dominated by a few major players, each offering a comprehensive suite of services. These providers have established mature infrastructures and offer a wide array of services catering to diverse needs. The primary providers are:

- Amazon Web Services (AWS): AWS is the market leader, providing a vast array of services. Its core offerings include:
  - Compute: Amazon Elastic Compute Cloud (EC2) provides virtual servers.
  - Storage: Amazon Simple Storage Service (S3) offers object storage, and Amazon Elastic Block Store (EBS) provides block storage.
  - Database: Amazon Relational Database Service (RDS) offers managed relational databases, and Amazon DynamoDB provides NoSQL database services.
  - Networking: Amazon Virtual Private Cloud (VPC) enables isolated network environments.
  - Additional Services: AWS also offers a broad spectrum of services for analytics, machine learning, Internet of Things (IoT), and more.
- Microsoft Azure: Azure provides a comprehensive set of services, deeply integrated with Microsoft’s existing ecosystem. Key offerings include:
  - Compute: Azure Virtual Machines offers virtual server instances.
  - Storage: Azure Blob Storage provides object storage, and Azure Disk Storage offers block storage.
  - Database: Azure SQL Database offers managed relational databases, and Azure Cosmos DB provides NoSQL database services.
  - Networking: Azure Virtual Network allows the creation of private networks.
  - Additional Services: Azure provides extensive services for hybrid cloud scenarios, data analytics, and artificial intelligence.
- Google Cloud Platform (GCP): GCP focuses on innovation in data analytics, machine learning, and containerization. Core offerings include:
  - Compute: Google Compute Engine provides virtual machine instances.
  - Storage: Google Cloud Storage offers object storage, and Persistent Disk provides block storage.
  - Database: Cloud SQL offers managed relational databases, and Firestore (the successor to Cloud Datastore) provides NoSQL database services.
  - Networking: Google Virtual Private Cloud (VPC) enables private network environments.
  - Additional Services: GCP excels in data analytics, machine learning, and containerization with services like BigQuery and Google Kubernetes Engine.

Comparing Cloud Service Models and Workload Suitability

Cloud service models categorize how cloud services are delivered. Each model offers a different level of control, management responsibility, and cost implications. Understanding these models is essential for aligning the chosen service model with the specific requirements of each workload.

- Infrastructure as a Service (IaaS): IaaS provides the fundamental building blocks of IT infrastructure: servers, storage, and networking. The customer manages the operating system, middleware, and applications.
  - Suitability: Ideal for workloads requiring high levels of control and flexibility, such as migrating existing on-premises infrastructure, testing and development, and running virtual machines.
  - Example: Migrating a legacy application requiring precise configuration control.
- Platform as a Service (PaaS): PaaS provides a platform for developing, running, and managing applications. The provider manages the underlying infrastructure and operating system.
  - Suitability: Well-suited for application development and deployment, providing a streamlined environment for developers.
  - Example: Developing and deploying a web application using managed application servers.
- Software as a Service (SaaS): SaaS delivers software applications over the internet, typically on a subscription basis. The provider manages the entire application stack.
  - Suitability: Best for applications that require minimal management overhead and are accessed by end-users.
  - Example: Using a CRM system like Salesforce or a productivity suite like Microsoft 365.
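The split of management responsibility across the three models can be written down as a small lookup. This is a simplified sketch: the layer names are an assumption, and placing middleware on the provider side for PaaS reflects the managed application server example rather than a rule that holds for every platform.

```python
# Simplified stack, ordered from the lowest layer to the highest (assumed layer names).
STACK_LAYERS = [
    "networking",
    "storage",
    "servers",
    "operating system",
    "middleware",
    "applications",
]

# Layers the provider manages under each service model (simplified assumption).
PROVIDER_MANAGED = {
    "IaaS": {"networking", "storage", "servers"},
    "PaaS": {"networking", "storage", "servers", "operating system", "middleware"},
    "SaaS": set(STACK_LAYERS),
}


def customer_managed(model):
    """Return the layers the customer still manages, in stack order."""
    provider_layers = PROVIDER_MANAGED[model]
    return [layer for layer in STACK_LAYERS if layer not in provider_layers]


for model in ("IaaS", "PaaS", "SaaS"):
    layers = customer_managed(model)
    print(f"{model}: customer manages {', '.join(layers) if layers else 'nothing in the stack'}")
```

Even under SaaS the customer usually remains responsible for its own data and user access, which this stack-level view does not capture.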

Comparing Pricing Models for Compute Instances

Pricing models for compute instances vary significantly across providers. Understanding these models is crucial for cost optimization. The following table provides a simplified comparison of pricing models for compute instances, highlighting key differences:

| Provider | Pricing Model | Key Features | Considerations |
|---|---|---|---|
| AWS (EC2) | On-Demand, Reserved Instances, Spot Instances | On-Demand: Pay-as-you-go; Reserved Instances: Significant discounts for committed usage; Spot Instances: Spare capacity at a steep discount, which AWS can reclaim at short notice. | Spot Instances offer the lowest prices but can be interrupted; Reserved Instances require a one- or three-year commitment. |
| Azure (Virtual Machines) | Pay-as-you-go, Reserved Instances, Spot VMs | Pay-as-you-go: Usage-based pricing with no commitment; Reserved Instances: Discounted pricing for committed usage; Spot VMs: Unused capacity, with potential for eviction. | Reserved Instances provide substantial savings; Spot VMs are suitable for fault-tolerant workloads. |
| GCP (Compute Engine) | On-Demand, Committed Use Discounts, Spot VMs (formerly Preemptible VMs) | On-Demand: Per-second pricing; Committed Use Discounts: Discounts for committed usage; Spot VMs: Significantly cheaper, but can be terminated. | Committed Use Discounts offer cost savings; Spot VMs are ideal for batch processing. |
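To see how these pricing models affect a monthly bill, a rough comparison can be sketched as follows. The hourly rate and discount percentages are hypothetical placeholders, not published prices from any provider; actual rates vary by instance type, region, and commitment term.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12


def monthly_cost(hourly_rate, instance_count, discount=0.0):
    """Estimate the monthly cost of always-on instances at a discounted hourly rate."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in the range [0, 1)")
    return hourly_rate * (1 - discount) * HOURS_PER_MONTH * instance_count


# Hypothetical on-demand rate (USD per hour) and hypothetical discount levels.
ON_DEMAND_RATE = 0.10
DISCOUNTS = {
    "on_demand": 0.0,
    "committed": 0.40,  # assumed discount for a reserved or committed-use purchase
    "spot": 0.70,       # assumed discount for interruptible capacity
}

costs = {name: monthly_cost(ON_DEMAND_RATE, 4, discount) for name, discount in DISCOUNTS.items()}

for name, cost in costs.items():
    print(f"{name}: ${cost:,.2f} per month for 4 instances")
```

The sketch assumes instances run around the clock. Interruptible capacity is only a fair comparison for workloads that tolerate being stopped, and committed pricing only pays off if the capacity is actually used for the whole term.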

Choosing a Migration Strategy

Selecting the optimal migration strategy is a critical decision in cloud migration planning. The choice significantly impacts the overall project cost, timeline, and the ultimate success of the cloud transformation. A well-defined strategy aligns with the defined migration goals and objectives, maximizing the benefits of cloud adoption while minimizing risks. This section details the various migration strategies and provides a structured approach to selecting the most appropriate one for a given workload.

Different Migration Strategies

The selection of a migration strategy is based on factors like application complexity, business goals, and available resources. Several strategies exist, each with its own trade-offs. Understanding these options is paramount to informed decision-making.

- Rehosting (Lift and Shift): This strategy involves moving applications and their associated data to the cloud with minimal or no changes. The existing infrastructure is essentially replicated in the cloud environment.
  - Advantages:
    - Fastest migration time, often leading to quicker realization of cloud benefits.
    - Lowest initial cost due to minimal application code changes.
    - Reduced risk as the application’s functionality remains largely unchanged.
  - Disadvantages:
    - Does not take full advantage of cloud-native features and services.
    - May result in higher operational costs if the infrastructure is not optimized for the cloud.
    - Limited scalability and performance improvements compared to other strategies.
  - Example: A company moves its virtualized servers running a legacy application to an Infrastructure-as-a-Service (IaaS) platform like Amazon EC2 or Azure Virtual Machines, with minimal configuration changes.
- Replatforming (Lift, Tinker, and Shift): This strategy involves migrating an application to the cloud, making some optimizations to leverage cloud services without fundamentally changing the application’s core architecture.
  - Advantages:
    - Offers a balance between migration speed and cloud optimization.
    - Can improve application performance and scalability.
    - Often reduces operational costs compared to rehosting.
  - Disadvantages:
    - Requires more effort and expertise than rehosting.
    - May still not fully leverage cloud-native features.
    - Increased complexity compared to rehosting.
  - Example: Migrating a database to a cloud-managed database service (e.g., Amazon RDS or Azure SQL Database) while keeping the application code mostly unchanged.
- Refactoring (Re-architecting): This strategy involves redesigning and rewriting an application to fully leverage cloud-native features and services. This is often the most complex and time-consuming approach.
  - Advantages:
    - Maximizes the benefits of cloud computing, including scalability, elasticity, and cost optimization.
    - Improves application performance and resilience.
    - Provides the greatest opportunity for innovation.
  - Disadvantages:
    - Highest cost and longest migration time.
    - Significant risk due to the extensive code changes.
    - Requires a high level of expertise and planning.
  - Example: Rewriting a monolithic application into a microservices architecture, using cloud-native services like AWS Lambda or Azure Functions.
- Repurchase: This strategy involves replacing an existing application with a Software-as-a-Service (SaaS) solution.
  - Advantages:
    - Simplest and fastest migration path.
    - Reduced operational overhead, as the vendor manages the application.
    - Lower upfront costs compared to other strategies.
  - Disadvantages:
    - Loss of control over the application’s functionality and customization.
    - Potential vendor lock-in.
    - Data migration challenges if the SaaS solution’s data model is incompatible with the existing application.
  - Example: Replacing an on-premises CRM system with Salesforce or replacing an email server with Microsoft 365.
- Retire: This strategy involves decommissioning an application that is no longer needed or used.
  - Advantages:
    - Reduces costs and complexity by eliminating unnecessary applications.
    - Simplifies the overall cloud migration project.
    - Frees up resources for more critical applications.
  - Disadvantages:
    - Requires careful assessment to ensure that the application is truly obsolete.
    - May involve data archiving or deletion.
  - Example: Shutting down an outdated application that is no longer supported or used by the business.

Selecting the Optimal Migration Strategy

Choosing the appropriate migration strategy requires a structured approach, involving a thorough assessment of the existing workload. This involves evaluating several factors to determine the best fit.

- Assess the Application: Evaluate the application’s architecture, dependencies, and business criticality.
  - Determine the application’s technical complexity.
  - Identify the application’s dependencies on other systems.
  - Assess the application’s business value and criticality.
- Define the Migration Goals: Clarify what the migration of this workload should achieve.
  - Define performance, scalability, cost, and security objectives.
  - Determine the desired level of cloud-native integration.
  - Establish a realistic budget and timeline.
- Weigh the Key Factors: Consider the characteristics and constraints that influence the choice.
  - Application Architecture: Monolithic applications might be suitable for rehosting or replatforming. Applications that are meant to move to a microservices architecture call for refactoring.
  - Application Dependencies: Applications with complex dependencies might be more suitable for rehosting or replatforming initially.
  - Data Volume and Complexity: Large and complex datasets might require careful consideration during replatforming or refactoring.
  - Business Requirements: Critical applications with stringent performance requirements may necessitate refactoring.
  - Cost Constraints: Organizations with tight budgets might opt for rehosting or replatforming to minimize initial costs.
  - Skills and Resources: The availability of skilled personnel can influence the choice. Rehosting requires less specialized expertise than refactoring.
- Match the Strategy to the Workload: Select the strategy that best fits the assessment.
  - Rehosting: Best for applications with minimal dependencies and a need for rapid migration.
  - Replatforming: Suitable for applications where some optimization is desired without significant code changes.
  - Refactoring: Ideal for applications that need to fully leverage cloud capabilities and achieve maximum scalability and performance.
  - Repurchase: Appropriate for applications that can be replaced by a SaaS solution.
  - Retire: Best for applications that are no longer used or needed.
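The selection guidance above can be condensed into a simple rule-based sketch. The `Workload` fields and the order in which the rules are checked are illustrative assumptions; a real assessment would weigh many more factors, such as dependencies, data volume, budget, and available skills.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical summary of an application assessment."""
    name: str
    still_needed: bool            # is the application still used by the business?
    saas_alternative: bool        # can a SaaS product replace it?
    needs_cloud_native: bool      # must it fully leverage cloud capabilities?
    wants_some_optimization: bool  # is limited optimization desired without major code changes?


def recommend_strategy(workload):
    """Return a first-pass migration strategy for a workload."""
    if not workload.still_needed:
        return "Retire"
    if workload.saas_alternative:
        return "Repurchase"
    if workload.needs_cloud_native:
        return "Refactor"
    if workload.wants_some_optimization:
        return "Replatform"
    return "Rehost"


# Hypothetical portfolio used only to exercise the rules.
portfolio = [
    Workload("old-reporting-tool", False, False, False, False),
    Workload("crm", True, True, False, False),
    Workload("storefront", True, False, True, False),
    Workload("order-database", True, False, False, True),
    Workload("intranet-wiki", True, False, False, False),
]

recommendations = {w.name: recommend_strategy(w) for w in portfolio}

for name, strategy in recommendations.items():
    print(f"{name}: {strategy}")
```

Treat the output as a starting point for discussion rather than a decision, since the cheapest viable strategy is not always the one the business ultimately wants.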

Creating a Detailed Migration Plan

Developing a comprehensive migration plan is crucial for a successful cloud transition. This plan serves as a roadmap, outlining the tasks, timelines, resource allocation, and responsibilities required to move applications and data to the cloud environment. A well-defined plan minimizes risks, reduces downtime, and ensures a smoother transition process.

Organizing a Comprehensive Migration Plan

A comprehensive migration plan necessitates a structured approach, breaking down the complex process into manageable components. It should meticulously outline all tasks, timelines, and resource allocations.

Key elements of a comprehensive migration plan include:

- Scope Definition: Clearly defining the scope of the migration, specifying which applications, data, and infrastructure components will be migrated. This establishes the boundaries of the project and prevents scope creep.

- Task Breakdown: Decomposing the migration process into individual tasks. These tasks should be granular enough to allow for accurate estimation of effort and dependencies. Examples include:

  - Assessing application dependencies.
  - Preparing the target cloud environment.
  - Data extraction, transformation, and loading (ETL).
  - Application testing and validation.

- Timeline Development: Establishing a realistic timeline for each task and the overall migration project. This includes identifying critical paths and dependencies, and incorporating buffer time for unforeseen issues.

- Resource Allocation: Identifying and allocating the necessary resources, including personnel, tools, and budget. Clearly defining roles and responsibilities ensures accountability and efficient task execution.

- Risk Management: Identifying potential risks associated with the migration, such as data loss, application downtime, and security vulnerabilities. Developing mitigation strategies for each identified risk is crucial.

- Communication Plan: Establishing a communication plan to keep stakeholders informed of the progress, challenges, and milestones. This includes defining communication channels, frequency, and responsible parties.

- Testing and Validation: Implementing thorough testing and validation procedures to ensure the migrated applications and data function correctly in the cloud environment. This involves unit testing, integration testing, and user acceptance testing (UAT).

- Rollback Plan: Developing a rollback plan to revert to the original on-premises environment in case of critical issues during the migration. This plan should include steps for restoring data and applications.

Creating a Gantt Chart for a Sample Application Migration

A Gantt chart visually represents the project schedule, illustrating the tasks, their start and end dates, and their dependencies. This visual representation aids in project management and tracking progress. For example, consider the migration of a simple e-commerce application, “ShopSmart,” to Amazon Web Services (AWS). The application consists of a web front-end, a database (e.g., PostgreSQL), and a caching layer (e.g., Redis).

The Gantt chart would illustrate the following tasks, some of which can run in parallel once their dependencies are complete:

| Task | Start Date | End Date | Duration (Calendar Days) | Dependencies |
|---|---|---|---|---|
| 1. Environment Preparation (AWS) | 2024-01-08 | 2024-01-12 | 5 | None |
| 2. Database Schema Migration | 2024-01-13 | 2024-01-17 | 5 | 1. Environment Preparation |
| 3. Web Application Code Migration | 2024-01-15 | 2024-01-22 | 8 | 1. Environment Preparation |
| 4. Data Migration (ShopSmart Database) | 2024-01-18 | 2024-01-25 | 8 | 2. Database Schema Migration |
| 5. Cache Migration (Redis) | 2024-01-23 | 2024-01-28 | 6 | 1. Environment Preparation |
| 6. Application Testing and Validation | 2024-01-29 | 2024-02-05 | 8 | 3. Web Application Code Migration, 4. Data Migration, 5. Cache Migration |
| 7. User Acceptance Testing (UAT) | 2024-02-06 | 2024-02-13 | 8 | 6. Application Testing and Validation |
| 8. Cutover and Go-Live | 2024-02-14 | 2024-02-14 | 1 | 7. User Acceptance Testing (UAT) |

This Gantt chart provides a clear visual representation of the project timeline, dependencies, and potential bottlenecks. It enables the project manager to track progress, identify delays, and make necessary adjustments.
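The dependency logic behind such a chart can be sketched as a forward pass over the task graph, which yields the earliest day each task can start. The task identifiers mirror the ShopSmart example, but the durations below are illustrative assumptions expressed as day offsets rather than the calendar dates in the table.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Assumed task durations in days (illustrative values only).
DURATIONS = {
    "environment_preparation": 5,
    "schema_migration": 7,
    "code_migration": 7,
    "data_migration": 7,
    "cache_migration": 5,
    "testing": 7,
    "uat": 7,
    "cutover": 1,
}

# Each task maps to the set of tasks that must finish before it can start.
DEPENDENCIES = {
    "environment_preparation": set(),
    "schema_migration": {"environment_preparation"},
    "code_migration": {"environment_preparation"},
    "data_migration": {"schema_migration"},
    "cache_migration": {"environment_preparation"},
    "testing": {"code_migration", "data_migration", "cache_migration"},
    "uat": {"testing"},
    "cutover": {"uat"},
}


def earliest_schedule(durations, dependencies):
    """Compute earliest start and finish offsets (in days) for every task."""
    start, finish = {}, {}
    # static_order() yields each task only after all of its predecessors.
    for task in TopologicalSorter(dependencies).static_order():
        start[task] = max((finish[dep] for dep in dependencies[task]), default=0)
        finish[task] = start[task] + durations[task]
    return start, finish


start, finish = earliest_schedule(DURATIONS, DEPENDENCIES)
project_length = max(finish.values())

for task in DURATIONS:
    print(f"{task}: day {start[task]} to day {finish[task]}")
print(f"Earliest completion: day {project_length}")
```

Tasks whose earliest start is driven by the longest chain of predecessors form the critical path, which is where buffer time matters most.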

Elaborating on Team Member Roles and Responsibilities

Clearly defined roles and responsibilities are essential for efficient collaboration and accountability during the migration process. Each team member should understand their specific tasks and how their contributions impact the overall project success.

Example roles and responsibilities for a typical cloud migration team include:

- Project Manager: Oversees the entire migration project, responsible for planning, execution, and monitoring. They manage the project timeline, budget, resources, and communication. The project manager facilitates team meetings, tracks progress, and resolves issues.

- Cloud Architect: Designs the cloud infrastructure, selecting the appropriate services and configurations. They ensure the architecture meets the performance, security, and cost requirements. The cloud architect is responsible for the overall cloud design and its implementation.

- Application Developers: Migrate and refactor the application code to be compatible with the cloud environment. They may need to modify code, optimize performance, and address compatibility issues. The developers are responsible for ensuring the application functions correctly in the cloud.

- Database Administrator (DBA): Manages the database migration, including schema migration, data transfer, and performance tuning. The DBA ensures data integrity and availability throughout the migration process.

- Network Engineer: Configures and manages the network infrastructure, including connectivity, security, and routing. They ensure seamless communication between the on-premises and cloud environments during the migration.

- Security Specialist: Implements security measures to protect the application and data in the cloud. They configure security policies, manage access controls, and monitor for security threats.

- Testing and Quality Assurance (QA) Engineer: Develops and executes test plans to validate the migrated application and data. They perform unit testing, integration testing, and user acceptance testing (UAT). The QA engineer ensures the quality and reliability of the migrated application.

By clearly defining these roles and responsibilities, the migration team can work collaboratively, minimizing errors and ensuring a successful cloud transition. A well-defined RACI (Responsible, Accountable, Consulted, Informed) matrix can further clarify these roles, outlining who is responsible for each task, who approves the work, who needs to be consulted, and who needs to be informed of progress.
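A RACI matrix can be kept as plain data and checked automatically. The sketch below assumes a common convention, namely exactly one Accountable role and at least one Responsible role per task; the tasks and assignments are hypothetical, and the last entry deliberately omits an Accountable role to show the check firing.

```python
# Hypothetical RACI matrix: task -> {role: "R" | "A" | "C" | "I"}.
raci_matrix = {
    "Design cloud architecture": {
        "Cloud Architect": "R",
        "Project Manager": "A",
        "Security Specialist": "C",
        "Network Engineer": "C",
    },
    "Migrate database": {
        "Database Administrator (DBA)": "R",
        "Project Manager": "A",
        "Application Developers": "C",
        "QA Engineer": "I",
    },
    "Cutover and go-live": {
        "Project Manager": "R",
        "Cloud Architect": "C",
        "QA Engineer": "I",
    },
}


def validate_raci(matrix):
    """Return a list of problems found under the assumed RACI convention."""
    problems = []
    for task, assignments in matrix.items():
        codes = list(assignments.values())
        accountable_count = codes.count("A")
        if accountable_count != 1:
            problems.append(
                f"{task}: expected exactly one Accountable, found {accountable_count}"
            )
        if "R" not in codes:
            problems.append(f"{task}: no Responsible role assigned")
    return problems


problems = validate_raci(raci_matrix)

for problem in problems:
    print(problem)
```

Some teams allow one person to be both Responsible and Accountable for a task; supporting that would mean storing a set of codes per role instead of a single letter.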

Data Migration and Security Considerations

Data migration and security are critical components of a successful cloud migration strategy. Ensuring data integrity, confidentiality, and availability throughout the migration process is paramount. This section outlines the procedures for secure data migration and discusses essential security best practices for cloud environments.

Procedures for Secure Data Migration

Securing data during migration requires a multi-faceted approach. This involves encrypting data both in transit and at rest, implementing robust access controls, and rigorously validating data integrity. The following procedures provide a structured approach:

- Data Encryption in Transit: Use Transport Layer Security (TLS) to encrypt data during transfer between the on-premises environment and the cloud provider’s infrastructure. This protects data from eavesdropping and interception. Avoid the older Secure Sockets Layer (SSL) protocol and TLS 1.0 and 1.1, which are deprecated and have known vulnerabilities. Prefer TLS 1.3, the latest version, which offers stronger security and faster connection setup than older versions.

- Data Encryption at Rest: Implement encryption for data stored within the cloud environment. This can be achieved using encryption keys managed by the cloud provider (e.g., AWS KMS, Azure Key Vault, Google Cloud KMS) or through customer-managed keys. Encryption protects data even if the underlying storage infrastructure is compromised. Consider using hardware security modules (HSMs) for enhanced key management and security.

- Access Control and Identity Management: Establish strict access controls using the cloud provider’s Identity and Access Management (IAM) services. Implement the principle of least privilege, granting users and applications only the minimum necessary permissions to perform their tasks. Use multi-factor authentication (MFA) for all user accounts to enhance security. Regularly review and audit access permissions to ensure compliance and prevent unauthorized access.

- Data Integrity Validation: Implement data validation checks throughout the migration process to ensure data accuracy and completeness. Use checksums, hash functions, and other integrity verification methods to detect and correct any data corruption during transfer or storage. Conduct regular data validation audits to verify the integrity of the migrated data.

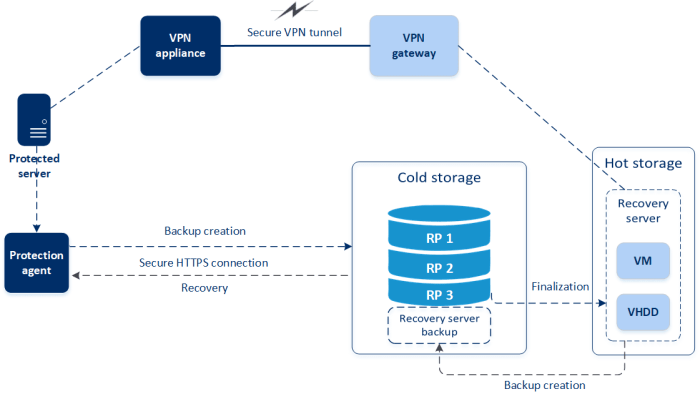

- Network Security: Configure a secure network environment within the cloud, utilizing virtual private networks (VPNs), firewalls, and intrusion detection systems (IDS). Segment the network into different security zones to isolate critical data and applications. Regularly monitor network traffic for suspicious activity.

- Data Backup and Recovery: Implement a robust data backup and recovery strategy to protect against data loss. Utilize the cloud provider’s backup services or third-party backup solutions to create regular backups of the migrated data. Test the recovery process regularly to ensure that data can be restored effectively in case of a disaster or data loss event.

- Compliance and Governance: Ensure compliance with relevant data privacy regulations (e.g., GDPR, CCPA) and industry-specific standards. Implement data governance policies and procedures to manage data throughout its lifecycle. Conduct regular security assessments and audits to identify and address any security vulnerabilities.
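The data integrity validation step above can be sketched with a checksum comparison. This is a minimal example using SHA-256 from the Python standard library; the function names are hypothetical, and it assumes both the source file and the migrated copy are readable from the machine running the check.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_transfer(source_path, migrated_path):
    """Return True when both copies have identical SHA-256 digests."""
    return sha256_of_file(source_path) == sha256_of_file(migrated_path)
```

A mismatch means the copy should be transferred again before the source is retired. For object storage, the same idea usually applies by comparing a locally computed digest with the checksum the provider reports for the uploaded object.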

Data Migration Tools and Techniques

Various tools and techniques are available to facilitate data migration to the cloud. The choice of tools and techniques depends on factors such as data volume, migration complexity, and desired downtime.

- Cloud Provider-Specific Tools: Major cloud providers offer tools and services specifically designed for data migration. For example:

- AWS: AWS Database Migration Service (DMS) for database migrations, AWS Snowball for large data transfers, and AWS DataSync for online data transfer and synchronization.

- Azure: Azure Database Migration Service for database migrations, Azure Data Box for offline data transfer, and Azure Data Factory for data integration and transformation.

- Google Cloud: Storage Transfer Service for moving data into Cloud Storage and between cloud storage buckets, and BigQuery Data Transfer Service for data ingestion into BigQuery.

These tools often provide features such as automated data transfer, schema conversion, and real-time replication.

- Third-Party Migration Tools: Several third-party tools are available to assist with data migration, offering a broader range of features and support for various data sources and targets. These tools often provide advanced capabilities such as data transformation, deduplication, and data masking. Examples include:

- Dell EMC Cloud Data Protection: Provides backup and recovery solutions for cloud environments.

- Commvault: Offers data protection and management solutions for cloud, on-premises, and hybrid environments.

- Veeam: Delivers backup and replication solutions for virtual, physical, and cloud-based workloads.

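- Migration Techniques: Beyond the choice of tool, the migration approach determines how much the application and its data change on the way to the cloud. Common approaches include:

- Lift and Shift (Rehosting): This approach involves migrating applications and data to the cloud with minimal changes. It is often the fastest and easiest way to migrate workloads. However, it may not fully leverage the benefits of the cloud.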

- Replatforming: This approach involves making some modifications to the application to take advantage of cloud-native services. This may involve changing the database or operating system.

- Refactoring: This approach involves redesigning and rewriting the application to fully leverage cloud-native services. This is the most complex approach but can offer the greatest benefits.

- Hybrid Cloud Migration: For organizations with sensitive data or compliance requirements, a hybrid cloud migration strategy, combining on-premises infrastructure with cloud resources, might be preferred. This involves moving some data and applications to the cloud while keeping others on-premises.
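Data volume and acceptable migration time largely decide between online transfer services and offline appliances. The sketch below estimates how long an online transfer would take. The function names, the 80% link utilization default, and the decision rule are assumptions for illustration; real throughput depends on the network and the tool used.

```python
def transfer_days(data_tb, link_mbps, utilization=0.8):
    """Estimate days needed to move data_tb terabytes over a link_mbps link.

    utilization is the assumed fraction of the link available to the migration.
    Decimal units are used: 1 TB = 8e12 bits, 1 Mbps = 1e6 bits per second.
    """
    if data_tb < 0 or link_mbps <= 0 or not 0 < utilization <= 1:
        raise ValueError("inputs must be positive and utilization in (0, 1]")
    seconds = (data_tb * 8e12) / (link_mbps * 1e6 * utilization)
    return seconds / 86_400


def suggest_method(data_tb, link_mbps, max_days):
    """Suggest online transfer if it fits the window, otherwise an offline appliance."""
    days = transfer_days(data_tb, link_mbps)
    return "online transfer" if days <= max_days else "offline transfer appliance"


# 100 TB over a 1 Gbps link at 80% utilization takes roughly 11.6 days.
print(round(transfer_days(100, 1000), 1))
print(suggest_method(100, 100, max_days=14))
```

If the estimate exceeds the migration window, an offline option such as AWS Snowball or Azure Data Box, both mentioned above, is worth evaluating.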

Key Security Best Practices for Cloud Environments

The following quote summarizes key security best practices for cloud environments:

“In a cloud environment, security is a shared responsibility. The cloud provider is responsible for the security *of* the cloud (e.g., the infrastructure), while the customer is responsible for the security *in* the cloud (e.g., data, applications, and access controls). Implementing strong security practices, including encryption, access control, and regular security assessments, is essential to protect data and applications in the cloud.”

Application Migration and Testing

Migrating applications to the cloud necessitates a methodical approach, encompassing careful planning, execution, and rigorous testing. The process aims to ensure applications function seamlessly in the new environment, preserving functionality, performance, and security. A comprehensive testing strategy is crucial for validating the migration’s success and mitigating potential risks.

Application Migration Process

The application migration process typically involves several distinct phases, each critical for a successful transition. These phases can be executed in a phased approach, migrating applications in batches to minimize disruption and allow for iterative learning and refinement.

- Assessment and Planning: This initial phase involves a thorough evaluation of the existing application, its dependencies, and the target cloud environment. Key considerations include application architecture, data storage requirements, performance characteristics, and security protocols. This stage helps define the migration strategy and identify potential challenges.

- Application Refactoring (if required): Some applications may require refactoring or modification to optimize them for the cloud environment. This might involve changes to the application’s code, architecture, or data storage mechanisms. This stage is especially important when moving from a monolithic architecture to a microservices-based approach.

- Environment Setup: The cloud environment is configured to host the migrated application. This includes setting up virtual machines, databases, networking configurations, and other necessary resources. The environment setup should align with the application’s requirements and the chosen cloud provider’s best practices.

- Data Migration: The application’s data is migrated to the cloud. This process often involves data transformation, validation, and replication. The specific techniques used depend on the volume of data, the complexity of the data schema, and the chosen cloud database services.

- Application Deployment: The application code and associated components are deployed to the cloud environment. This process may involve using containerization technologies like Docker and orchestration platforms like Kubernetes to manage the application’s deployment and scaling.

- Testing and Validation: Comprehensive testing is conducted to verify the application’s functionality, performance, and security in the cloud environment. This includes unit tests, integration tests, user acceptance testing, and performance testing.

- Cutover and Go-Live: Once testing is complete and the application is deemed ready, the application is cut over to the cloud environment. This involves redirecting user traffic to the new cloud-based application. The on-premises application is decommissioned only after the cloud deployment has proven stable, so that a rollback remains possible.

- Monitoring and Optimization: After the application goes live, ongoing monitoring is essential to ensure optimal performance and identify potential issues. Performance metrics are analyzed, and the application is optimized as needed.
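Migrating in batches requires an order that respects application dependencies. One possible way to derive that order is a topological sort, sketched below with the standard-library `graphlib` module (Python 3.9+). The application inventory is hypothetical, and moving dependencies first is only one reasonable policy.

```python
from graphlib import TopologicalSorter


def plan_waves(dependencies):
    """Group applications into migration waves.

    dependencies maps each application to the set of applications it depends on.
    Every application in a wave depends only on applications in earlier waves.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical application inventory, for illustration only.
apps = {
    "database": set(),
    "auth-service": {"database"},
    "reporting": {"database"},
    "orders-api": {"database", "auth-service"},
    "web-frontend": {"orders-api", "auth-service"},
}

for number, wave in enumerate(plan_waves(apps), start=1):
    print(number, wave)
```

Each wave can then go through environment setup, data migration, deployment, and testing before the next wave starts.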

Testing Phases and Objectives

A well-defined testing strategy is essential for a successful cloud migration. Testing should be conducted throughout the migration process, from the initial planning phase to the post-migration operational phase. The following table outlines the different testing phases, their objectives, and the expected outcomes.

| Testing Phase | Objectives | Expected Outcomes | Metrics |
|---|---|---|---|
| Pre-Migration Testing (Assessment Phase) | Assess application compatibility with the cloud environment; Identify potential migration challenges; Establish baseline performance metrics. | Documentation of application architecture, dependencies, and potential risks; Performance benchmarks in the on-premises environment. | Response times, transaction throughput, resource utilization (CPU, memory, disk I/O). |
| Unit Testing (Development Phase) | Verify the functionality of individual code components; Ensure code changes and refactoring do not introduce regressions. | Individual code modules function correctly; Code changes are validated. | Code coverage, number of passed/failed tests. |
| Integration Testing (Deployment Phase) | Verify the interaction between different application components; Ensure data flows correctly between different services and databases. | Integrated components function as expected; Data integrity is maintained. | Number of successful/failed integration test cases; Data consistency checks. |
| System Testing (Deployment Phase) | Validate the application’s functionality as a whole; Ensure the application meets functional requirements; Verify security and compliance. | Application functions correctly; Security vulnerabilities are identified and addressed; Compliance requirements are met. | Number of successful/failed system test cases; Security scan results; Compliance reports. |
| User Acceptance Testing (UAT) (Deployment Phase) | Obtain user feedback on the application’s functionality and usability; Validate that the application meets user requirements. | User feedback and acceptance of the application; Identified usability issues. | Number of reported user issues; User satisfaction scores. |
| Performance Testing (Deployment Phase) | Measure the application’s performance under different load conditions; Identify performance bottlenecks; Ensure scalability. | Application performs within acceptable performance thresholds; Performance bottlenecks are identified and addressed; Application scales as expected. | Response times, transaction throughput, resource utilization, error rates. |
| Security Testing (Deployment Phase and Ongoing) | Identify security vulnerabilities; Ensure compliance with security policies and regulations. | Security vulnerabilities are identified and addressed; Security posture is improved. | Penetration test results; Vulnerability scan results; Compliance reports. |
| Post-Migration Testing (Ongoing) | Monitor application performance and identify any issues after the migration; Ensure the application continues to meet performance and security requirements. | Application performance remains within acceptable thresholds; Security incidents are minimized. | Application performance metrics; Security incident reports; User feedback. |
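The baseline metrics captured in pre-migration testing become useful when post-migration results are compared against them. The following sketch flags metrics that worsened beyond a tolerance. The metric names, sample values, and 10% tolerance are hypothetical.

```python
def find_regressions(baseline, migrated, tolerance=0.10):
    """Compare post-migration metrics with the on-premises baseline.

    baseline maps a metric name to (value, higher_is_better).
    migrated maps the same metric names to their post-migration values.
    Returns {metric: relative_change} for metrics that got worse by more
    than tolerance (0.10 means 10%).
    """
    regressions = {}
    for name, (base_value, higher_is_better) in baseline.items():
        change = (migrated[name] - base_value) / base_value
        worse_by = -change if higher_is_better else change
        if worse_by > tolerance:
            regressions[name] = round(change, 3)
    return regressions


# Hypothetical measurements, for illustration only.
baseline = {
    "p95_response_ms": (200.0, False),
    "throughput_tps": (500.0, True),
    "error_rate_pct": (0.5, False),
}
migrated = {
    "p95_response_ms": 260.0,
    "throughput_tps": 520.0,
    "error_rate_pct": 0.5,
}

print(find_regressions(baseline, migrated))
```

Here only the response time is reported, because it is 30% slower than the baseline while throughput improved and the error rate is unchanged.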

Developing a Rollback Strategy and Disaster Recovery Plan

A comprehensive cloud migration plan must account for potential failures and unforeseen events. This necessitates the creation of a robust rollback strategy to revert to the pre-migration state if issues arise, and a disaster recovery (DR) plan to ensure business continuity in the event of significant outages or data loss within the cloud environment. Both strategies are critical for minimizing downtime, data loss, and the overall risk associated with cloud migration.

Importance of a Rollback Strategy in Case of Migration Failures

A well-defined rollback strategy is crucial for mitigating the risks associated with a cloud migration. It provides a mechanism to revert to the original on-premises or existing cloud environment if problems are encountered during or after the migration process. This strategy is not merely about reverting; it’s about a structured approach that minimizes disruption and potential data loss.

- Identify Critical Components: The first step is to identify the critical applications, data, and infrastructure components that are essential for business operations. Prioritize these components for the rollback process. This analysis should be based on business impact assessments, RTO (Recovery Time Objective), and RPO (Recovery Point Objective) values.

- Data Backup and Synchronization: Implement a robust data backup and synchronization strategy. Regularly back up data both on-premises and in the cloud, ensuring that backups are consistent and up-to-date. Utilize tools that allow for incremental backups to minimize the amount of data that needs to be restored during a rollback.

- Testing the Rollback Process: Regularly test the rollback process in a non-production environment to validate its effectiveness. This involves simulating migration failures and verifying that the rollback can be executed successfully within the defined RTO. Document the entire process, including troubleshooting steps and contact information.

- Automated Rollback Mechanisms: Automate the rollback process as much as possible. Automation can reduce the time required for rollback and minimize the potential for human error. This can involve scripting, using specialized tools, or integrating with existing infrastructure management systems.

- Communication Plan: Establish a clear communication plan to keep stakeholders informed during a rollback. This includes notifying affected users, providing status updates, and coordinating with relevant teams.
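The automated rollback mechanism described above can be sketched as a list of steps, each paired with an undo action. This is a simplified illustration: the step names and the in-memory `state` dictionary are hypothetical stand-ins for real operations such as data synchronization or DNS changes.

```python
def run_with_rollback(steps):
    """Run (name, apply, undo) steps in order.

    If a step fails, undo the completed steps in reverse order.
    Returns (succeeded, log), where log records what was applied and rolled back.
    """
    completed = []
    log = []
    for name, apply, undo in steps:
        try:
            apply()
            completed.append((name, undo))
            log.append(f"applied: {name}")
        except Exception as error:
            log.append(f"failed: {name} ({error})")
            for done_name, done_undo in reversed(completed):
                done_undo()
                log.append(f"rolled back: {done_name}")
            return False, log
    return True, log


# Hypothetical cutover state and steps, for illustration only.
state = {"dns": "on-prem", "data_synced": False}


def sync_data():
    state["data_synced"] = True


def discard_sync():
    state["data_synced"] = False


def switch_dns():
    state["dns"] = "cloud"


def restore_dns():
    state["dns"] = "on-prem"


def smoke_test():
    raise RuntimeError("health check failed")


ok, log = run_with_rollback([
    ("sync data", sync_data, discard_sync),
    ("switch DNS", switch_dns, restore_dns),
    ("smoke test", smoke_test, lambda: None),
])
print(ok, state)
print("\n".join(log))
```

Because the simulated smoke test fails, both earlier steps are undone and traffic stays on the original environment. The log gives the communication plan a factual record of what happened.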

Steps to Create a Disaster Recovery Plan for the Cloud Environment

A robust Disaster Recovery (DR) plan is essential for maintaining business continuity in the cloud. It provides a framework for restoring IT infrastructure and data in the event of a disaster, such as a natural disaster, hardware failure, or cyberattack. The DR plan should be designed to meet specific RTO and RPO requirements.

- Risk Assessment: Conduct a thorough risk assessment to identify potential threats and vulnerabilities that could impact the cloud environment. This includes analyzing the business impact of various disaster scenarios, such as data loss, service outages, and security breaches.

- Define Recovery Objectives: Establish clear recovery objectives, including RTO and RPO. RTO defines the maximum acceptable downtime, while RPO defines the maximum acceptable data loss. These objectives should be aligned with business requirements and the criticality of the applications.

- Select a DR Strategy: Choose a DR strategy that aligns with the recovery objectives and budget. Several DR strategies are available, including:

- Backup and Restore: This involves backing up data to a secondary location and restoring it in the event of a disaster. It is the least expensive option but can have longer RTOs.

- Pilot Light: A minimal set of resources (e.g., servers, databases) is provisioned in the secondary location. This allows for faster recovery than backup and restore.

- Warm Standby: A scaled-down version of the production environment is maintained in the secondary location, ready to be scaled up quickly.

- Hot Standby/Active-Active: A fully functional, production-ready environment is maintained in the secondary location, and data is continuously replicated. This provides the fastest recovery time but is the most expensive option.

- Design the DR Architecture: Design the DR architecture based on the chosen strategy. This includes selecting the appropriate cloud services, configuring replication mechanisms, and defining failover and failback procedures.

- Implement and Test: Implement the DR plan by configuring the necessary cloud resources and setting up replication and failover mechanisms. Regularly test the plan to validate its effectiveness and identify any areas for improvement. Testing should include failover and failback exercises.

- Documentation and Training: Document the DR plan thoroughly, including procedures, contact information, and recovery steps. Provide training to relevant personnel to ensure they understand their roles and responsibilities during a disaster.
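Recovery objectives are easiest to enforce when they are checked against measured values. The sketch below assumes that worst-case data loss equals the interval between backups (or the replication lag) and that restore time comes from a DR test. The function name and sample figures are hypothetical.

```python
from datetime import timedelta


def meets_objectives(backup_interval, measured_restore_time, rpo, rto):
    """Check a DR design against its recovery objectives.

    Worst-case data loss is the time between backups, so it must not
    exceed the RPO. The restore time measured in a DR test must not
    exceed the RTO.
    """
    return {
        "rpo_met": backup_interval <= rpo,
        "rto_met": measured_restore_time <= rto,
    }


# Hypothetical figures: nightly backups, a 3-hour restore, 4-hour objectives.
result = meets_objectives(
    backup_interval=timedelta(hours=24),
    measured_restore_time=timedelta(hours=3),
    rpo=timedelta(hours=4),
    rto=timedelta(hours=4),
)
print(result)
```

In this example the RTO is met but the RPO is not, which would point toward more frequent backups or a replication-based strategy such as pilot light or warm standby.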

Examples of Disaster Recovery Solutions and Their Implementation

Various DR solutions are available in the cloud, each with its strengths and weaknesses. The choice of solution depends on the specific requirements and the budget.

- Amazon Web Services (AWS): AWS offers a range of DR solutions, including:

- AWS Backup: A fully managed backup service that simplifies data protection across AWS services.

- AWS Elastic Disaster Recovery: A service for quickly recovering physical, virtual, and cloud-based servers using automated, block-level replication. It is the successor to CloudEndure Disaster Recovery and is designed for recovery points measured in seconds and recovery times measured in minutes.

Example: A company uses AWS Elastic Disaster Recovery to replicate its critical applications to a secondary AWS region. If the primary region experiences an outage, the company can quickly fail over to the secondary region, minimizing downtime.

- Microsoft Azure: Azure provides several DR options:

- Azure Backup: A cloud-based backup service that protects data across various Azure services.

- Azure Site Recovery: A service that enables replication, failover, and failback of virtual machines and applications.

Example: A financial institution uses Azure Site Recovery to replicate its virtual machines to a secondary Azure region. In case of a disaster, the institution can quickly fail over to the secondary region, ensuring business continuity.

- Google Cloud Platform (GCP): GCP offers DR solutions, including:

- Cloud Storage: Used for storing backups and enabling data recovery.

- Cloud SQL: Provides automated backups and replication for databases.

- Compute Engine: Can be configured with different replication and DR strategies.

Example: A healthcare provider uses Cloud SQL to replicate its database to a secondary GCP region. This ensures that patient data is protected and available in case of a disaster.

Post-Migration Optimization and Management

Post-migration optimization and management are critical phases in the cloud journey. Successfully transitioning to the cloud necessitates continuous refinement to leverage its full potential. This involves actively monitoring performance, controlling costs, and adapting to evolving business needs. Ignoring these steps can lead to underutilized resources, unexpected expenses, and diminished return on investment.

Optimizing Cloud Resources After Migration

Optimizing cloud resources post-migration focuses on maximizing efficiency, performance, and cost-effectiveness. This is an ongoing process that requires a proactive approach to ensure the cloud environment aligns with evolving business requirements.

One crucial aspect is right-sizing virtual machines (VMs). This involves analyzing the actual resource utilization of VMs and adjusting their configuration to match demand. Over-provisioning leads to unnecessary costs, while under-provisioning can result in performance bottlenecks. For instance, a company might initially migrate its application to a VM with 8 vCPUs and 32GB of RAM. After monitoring, they discover the application rarely utilizes more than 4 vCPUs and 16GB of RAM. Right-sizing allows them to downgrade the VM, saving costs without impacting performance.

Another important area is the utilization of auto-scaling. Auto-scaling dynamically adjusts the number of resources based on demand. This is particularly useful for applications with fluctuating workloads. For example, an e-commerce website experiences peak traffic during holiday seasons. Auto-scaling can automatically provision additional servers to handle the increased load, ensuring a seamless user experience. Conversely, during off-peak hours, the number of servers can be scaled down to reduce costs.

Furthermore, optimizing storage configurations is vital. Cloud providers offer various storage options, each with different performance characteristics and costs. Analyzing data access patterns helps determine the appropriate storage tier for different data types. Frequently accessed data should be stored on high-performance storage, while less frequently accessed data can be stored on lower-cost, archival storage.

Implementing resource tagging is also a beneficial strategy. Tagging resources allows for better organization, cost allocation, and automation. By tagging resources with relevant metadata, such as application name, department, or environment, it becomes easier to track resource usage and costs. This enables better cost management and facilitates the identification of underutilized resources.
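The right-sizing example of an 8 vCPU, 32GB VM that peaks at 4 vCPUs and 16GB can be expressed as a small selection routine. The size catalogue and the `headroom` parameter below are hypothetical; real instance families and safety margins vary by provider and workload.

```python
# Hypothetical instance catalogue: (name, vCPUs, RAM in GB), smallest first.
SIZES = [
    ("small", 2, 8),
    ("medium", 4, 16),
    ("large", 8, 32),
    ("xlarge", 16, 64),
]


def right_size(peak_vcpus, peak_ram_gb, headroom=0.0):
    """Return the smallest catalogue entry covering peak usage plus headroom.

    headroom is a safety margin expressed as a fraction (0.25 means 25%).
    """
    need_cpu = peak_vcpus * (1 + headroom)
    need_ram = peak_ram_gb * (1 + headroom)
    for name, vcpus, ram_gb in SIZES:
        if vcpus >= need_cpu and ram_gb >= need_ram:
            return name
    raise ValueError("no catalogue size is large enough")


print(right_size(4, 16))                 # fits the 4 vCPU / 16GB size
print(right_size(4, 16, headroom=0.25))  # a 25% margin keeps the larger size
```

The second call shows why the margin matters: with 25% headroom the same workload no longer fits the smaller size, so the observation period and margin should be chosen before downsizing.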

Monitoring and Managing Cloud Costs

Monitoring and managing cloud costs is essential for maximizing the return on investment in cloud services. The “pay-as-you-go” model of cloud computing necessitates continuous vigilance to avoid unexpected expenses. Effective cost management involves a combination of monitoring, analysis, and proactive optimization.

A fundamental step is to establish a robust cost monitoring system. Cloud providers offer various tools for tracking resource consumption and associated costs. These tools provide detailed insights into spending patterns, allowing organizations to identify areas where costs can be reduced. For instance, cloud providers often provide dashboards that visualize cost trends, break down costs by service, and alert users to unusual spending patterns.

Cost allocation is another critical aspect. This involves assigning cloud costs to specific departments, projects, or applications. By allocating costs, organizations can gain a better understanding of how cloud resources are being used and identify areas where costs are disproportionately high. This can be achieved through resource tagging, which allows costs to be associated with specific resources.

Budgeting and forecasting are also essential for managing cloud costs. Setting budgets and forecasting future spending helps organizations to control costs and avoid exceeding their financial targets. Cloud providers offer tools that allow users to set budgets and receive alerts when spending approaches or exceeds the budgeted amount. Furthermore, historical data can be used to forecast future spending based on anticipated workload growth.

Right-sizing resources, as mentioned earlier, is a key cost optimization strategy. By ensuring that resources are appropriately sized to meet demand, organizations can avoid paying for unused capacity. This involves continuously monitoring resource utilization and adjusting the configuration of resources as needed.

Implementing cost-saving strategies such as reserved instances or committed use discounts can also significantly reduce cloud costs. Reserved instances offer discounted pricing in exchange for a commitment to use a specific instance type for a specified period. Committed use discounts provide similar cost savings for compute services in exchange for a commitment to a minimum level of resource consumption.
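Cost allocation by tag and budget alerts can be illustrated with a few lines of code. The sketch below works on a hypothetical list of billing line items. The field names, tag keys, and the 80% alert threshold are assumptions, not the format of any provider's billing export.

```python
from collections import defaultdict


def costs_by_tag(line_items, tag_key):
    """Sum cost per value of tag_key; untagged resources go under 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        totals[item["tags"].get(tag_key, "untagged")] += item["cost"]
    return dict(totals)


def budget_alerts(totals, budgets, threshold=0.8):
    """Return groups whose spend has reached threshold (a fraction) of budget."""
    return sorted(
        group
        for group, spent in totals.items()
        if group in budgets and spent >= threshold * budgets[group]
    )


# Hypothetical billing data, for illustration only.
items = [
    {"resource": "vm-1", "cost": 120.0, "tags": {"department": "sales"}},
    {"resource": "db-1", "cost": 300.0, "tags": {"department": "sales"}},
    {"resource": "vm-2", "cost": 80.0, "tags": {"department": "hr"}},
    {"resource": "disk-9", "cost": 15.0, "tags": {}},
]
budgets = {"sales": 500.0, "hr": 200.0}

totals = costs_by_tag(items, "department")
print(totals)
print(budget_alerts(totals, budgets))
```

The `untagged` bucket is worth watching: spend that cannot be attributed to an owner is often where unused resources hide.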

Essential Tools for Cloud Performance Monitoring and Resource Management

Several tools are crucial for effective cloud performance monitoring and resource management. These tools provide insights into resource utilization, performance metrics, and cost data, enabling organizations to optimize their cloud environments. Here’s a list of essential tools:

- Cloud Provider Native Monitoring Tools: Each major cloud provider offers native monitoring tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Operations Suite) that provide detailed insights into resource utilization and performance metrics. These tools typically integrate seamlessly with other cloud services and offer a comprehensive view of the cloud environment. For example, AWS CloudWatch allows for the creation of dashboards to visualize performance metrics like CPU utilization and network traffic, as well as memory usage when the CloudWatch agent is installed on the instance. Azure Monitor provides similar capabilities for monitoring resources deployed on Azure.

- Third-Party Monitoring Tools: Several third-party monitoring tools offer advanced features and integrations with various cloud providers. These tools often provide more granular monitoring capabilities, advanced analytics, and support for hybrid and multi-cloud environments. Examples include Datadog, New Relic, and Dynatrace. These tools can offer features such as real-time performance monitoring, application performance management (APM), and infrastructure monitoring.

- Cost Management Tools: Cloud providers offer cost management tools that help organizations track and manage their cloud spending. These tools provide insights into cost trends, allow for cost allocation, and enable the creation of budgets and alerts. Examples include AWS Cost Explorer, Azure Cost Management, and Google Cloud Cost Management.

- Infrastructure as Code (IaC) Tools: IaC tools, such as Terraform and AWS CloudFormation, automate the provisioning and management of cloud infrastructure. These tools enable organizations to define their infrastructure as code, making it easier to manage, version control, and automate deployments. IaC definitions can also codify auto-scaling policies, so that the rules for scaling resources with demand are reviewed and versioned like any other code.

- Configuration Management Tools: Configuration management tools, such as Ansible, Chef, and Puppet, automate the configuration and management of cloud resources. These tools ensure that resources are configured consistently and securely. They can also be used to automate tasks such as patching and software updates.

Conclusion

In summary, creating a cloud migration plan and timeline is a multifaceted endeavor that demands careful consideration of various factors. By meticulously assessing the current infrastructure, defining clear objectives, selecting the right cloud provider and strategy, and implementing robust security measures, organizations can successfully migrate to the cloud. The ultimate goal is to achieve cost savings, improve scalability, enhance security, and drive innovation. Continuous monitoring, optimization, and management post-migration are also critical for long-term success, allowing organizations to fully leverage the benefits of cloud computing.

FAQ

What is the first step in creating a cloud migration plan?

The initial step is to conduct a comprehensive assessment of the current on-premises infrastructure, including servers, storage, and network components, to understand their capabilities and dependencies.

How do you choose the right cloud migration strategy?

The selection of a migration strategy depends on workload characteristics, business objectives, and the desired level of change. Factors like application complexity, budget, and time constraints influence the decision.

What are the key considerations for data migration?

Data migration involves securely transferring data to the cloud, ensuring data integrity and minimizing downtime. The tools and techniques employed should align with the selected migration strategy.

What is the role of a rollback strategy?

A rollback strategy is a contingency plan that allows for a return to the original on-premises environment in case of migration failures, minimizing disruption to business operations.

How do you manage cloud costs after migration?

Post-migration cost management involves monitoring resource utilization, optimizing cloud configurations, and leveraging cost-saving features offered by the cloud provider to minimize expenses.