The integration of serverless functions, specifically AWS Lambda, with relational databases represents a pivotal shift in modern application architecture. This approach offers the benefits of scalability, cost-effectiveness, and rapid deployment. Understanding the nuances of this integration, from database selection and setup to code implementation and optimization, is crucial for developers aiming to build efficient and resilient systems. This guide provides a structured, in-depth exploration of connecting Lambda functions to relational databases, addressing key considerations and practical implementation strategies.

This discussion covers the core functionality of AWS Lambda functions and relational databases (MySQL, PostgreSQL, etc.) and the benefits of integrating them. It then walks through choosing the right database and AWS service (RDS, Aurora), setting up the database, creating and deploying the Lambda function, configuring IAM roles, and securing database connections. We will also examine connection methods, SQL query writing, error handling, performance optimization, and advanced topics like transactions and security, providing a holistic understanding of the subject.

Introduction to Lambda Functions and Relational Databases

The integration of serverless compute services, such as AWS Lambda, with relational databases presents a powerful architecture for building scalable and cost-effective applications. This approach leverages the on-demand nature of Lambda functions and the structured data management capabilities of relational databases to create dynamic and responsive systems. This combination is particularly well-suited for applications requiring event-driven processing, data transformation, and API-driven interactions with data stored in relational formats.

Core Functionalities of AWS Lambda Functions

AWS Lambda is an event-driven, serverless compute service that lets developers run code without provisioning or managing servers. Lambda functions can be triggered by various AWS services, such as Amazon S3 (object storage), Amazon DynamoDB (NoSQL database), and Amazon API Gateway (API management). They execute code in response to these triggers, processing data, performing calculations, and interacting with other services. Lambda functions offer several key functionalities:

- Automatic Scaling: Lambda automatically runs additional concurrent instances of a function as the number of incoming requests grows, up to the account’s concurrency limits. The function can therefore absorb a large volume of requests without manual intervention.

- Pay-per-Use Pricing: Users are charged only for the compute time consumed by their Lambda functions. This contrasts with traditional server-based infrastructure, where resources are often provisioned and paid for regardless of usage.

- Support for Multiple Programming Languages: Lambda supports a wide range of programming languages, including Node.js, Python, Java, Go, and .NET. This flexibility allows developers to choose the language that best suits their needs and expertise.

- Integration with Other AWS Services: Lambda seamlessly integrates with other AWS services, providing a comprehensive ecosystem for building and deploying applications. This includes services for storage, databases, API management, and more.

- Event-Driven Architecture: Lambda functions are triggered by events, allowing for the creation of event-driven architectures. This promotes loose coupling and enables asynchronous processing, improving the responsiveness and scalability of applications.

Overview of Relational Databases

Relational databases store data in a structured format, using tables with rows and columns. They are based on the relational model and use SQL (Structured Query Language) for data management and retrieval. Popular relational database engines include MySQL and PostgreSQL; Amazon RDS (Relational Database Service) provides managed instances of several such engines. Key characteristics of relational databases include:

- Structured Data: Data is organized into tables with predefined schemas, ensuring data consistency and integrity.

- ACID Properties: Relational databases typically adhere to ACID properties (Atomicity, Consistency, Isolation, Durability), ensuring reliable transaction management.

- SQL for Data Manipulation: SQL is the standard language for querying, inserting, updating, and deleting data in relational databases.

- Relationships between Tables: Relational databases support relationships between tables, allowing for the modeling of complex data structures. This enables efficient data retrieval and management.

- Data Integrity Constraints: Constraints, such as primary keys, foreign keys, and unique constraints, enforce data integrity and prevent data corruption.
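The following minimal sketch makes the ACID and constraint properties concrete. It uses Python’s built-in `sqlite3` module as a local stand-in for MySQL or PostgreSQL, an assumption chosen so the example runs anywhere (server databases use a slightly different SQL dialect). The `customers` and `orders` tables are hypothetical. Each insert runs in its own transaction, and the foreign key and `CHECK` constraints reject invalid rows, which are then rolled back.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Create two related tables with primary key, foreign key, and CHECK constraints."""
    # SQLite enforces foreign keys only when this pragma is enabled on the connection.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute(
        "CREATE TABLE customers ("
        " customer_id INTEGER PRIMARY KEY,"
        " email TEXT UNIQUE NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE orders ("
        " order_id INTEGER PRIMARY KEY,"
        " customer_id INTEGER NOT NULL REFERENCES customers(customer_id),"
        " total_cents INTEGER NOT NULL CHECK (total_cents >= 0))"
    )


def place_order(conn: sqlite3.Connection, customer_id: int, total_cents: int) -> bool:
    """Insert an order atomically; return False if a constraint rejects it."""
    try:
        # The connection context manager commits on success and rolls back on error.
        with conn:
            conn.execute(
                "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
                (customer_id, total_cents),
            )
        return True
    except sqlite3.IntegrityError:
        return False
```

After inserting one customer with ID 1, `place_order(conn, 1, 500)` succeeds. `place_order(conn, 99, 500)` (no such customer) and `place_order(conn, 1, -1)` (negative total) both return `False` and leave no partial rows behind.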

Benefits of Integrating Lambda Functions with Relational Databases

Integrating Lambda functions with relational databases offers several benefits for application development:

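- Scalability: Lambda functions automatically scale to handle varying workloads, while relational databases can be scaled independently. Because each concurrent Lambda instance typically opens its own database connection, a traffic spike can exhaust the database’s connection limit. Connection management (for example, Amazon RDS Proxy) is therefore needed to keep the database from becoming the bottleneck.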

- Cost-Effectiveness: The pay-per-use pricing of Lambda functions, combined with managed database services such as Amazon RDS, can reduce infrastructure and operations costs. The savings are largest for intermittent or spiky workloads, where always-on servers would sit idle.

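- Event-Driven Processing: Lambda functions can be triggered by events such as API requests, queue messages, or file uploads, enabling near-real-time data processing and updates. In some configurations, database activity can also invoke functions, for example through Aurora MySQL’s native Lambda integration or the `aws_lambda` extension for RDS and Aurora PostgreSQL. This is particularly useful for applications that require immediate responses to events.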

- Simplified Operations: Serverless architectures reduce the operational overhead associated with managing servers and infrastructure. Lambda functions and managed database services simplify deployment, monitoring, and maintenance.

- Data Transformation and Enrichment: Lambda functions can be used to transform and enrich data retrieved from relational databases, providing valuable insights and preparing data for downstream applications. This includes tasks like data cleansing, aggregation, and formatting.

Choosing the Right Database and AWS Services

Selecting the appropriate relational database and associated AWS services is crucial for the efficient operation and scalability of Lambda functions interacting with data. The optimal choice depends on several factors, including data volume, read/write patterns, performance requirements, cost considerations, and operational overhead. This section will explore the critical aspects of database selection and AWS service comparison to guide informed decision-making.

Factors for Selecting a Relational Database

Choosing a relational database for a Lambda function involves a careful evaluation of several key factors. These considerations ensure the database aligns with the function’s performance, scalability, and cost requirements.

- Data Volume and Growth: The expected size of the dataset and its projected growth trajectory are paramount. A database capable of handling current and future data volumes without performance degradation is essential. Consider the need for horizontal scaling if data volume is expected to increase dramatically. For example, a rapidly growing e-commerce platform would require a database that can scale to accommodate increasing transaction data.

- Read/Write Patterns: Analyze the ratio of read operations to write operations. Databases optimized for read-heavy workloads (e.g., content delivery systems) may differ significantly from those optimized for write-heavy workloads (e.g., financial transaction processing). Consider caching strategies to improve read performance.

- Performance Requirements: Define the required latency and throughput for database operations. High-performance applications may necessitate databases with features like connection pooling, query optimization, and in-memory caching. For instance, a real-time analytics dashboard demands low-latency data retrieval.

- Consistency and Durability: Determine the required level of data consistency and durability. ACID properties (Atomicity, Consistency, Isolation, Durability) are critical for transactional systems. Consider the trade-offs between consistency and performance; for example, eventual consistency may be acceptable for some read-heavy applications.

- Cost Considerations: Evaluate the total cost of ownership (TCO), including database instance costs, storage costs, and operational overhead. Consider the pay-per-use pricing models offered by cloud providers and the impact of scaling on costs. Optimize database queries and resource utilization to minimize expenses.

- Security Requirements: Implement robust security measures, including data encryption, access control, and regular security audits. Comply with relevant data privacy regulations, such as GDPR or HIPAA, if applicable.

- Operational Overhead: Consider the ease of database management, including backups, patching, and monitoring. Managed database services offered by cloud providers can significantly reduce operational overhead.

Comparing AWS Database Services for Lambda Integration

AWS provides several relational database services that can be integrated with Lambda functions. Each service offers distinct features, performance characteristics, and cost structures. This section compares two prominent options: Amazon RDS (Relational Database Service) and Amazon Aurora.

| Feature | Amazon RDS | Amazon Aurora | Notes |
|---|---|---|---|
| Database Engines | Supports multiple database engines: MySQL, PostgreSQL, MariaDB, Oracle, Microsoft SQL Server. | Compatible with MySQL and PostgreSQL. | Aurora’s compatibility lets teams reuse existing MySQL or PostgreSQL skills, drivers, and tools. |
| Performance | Varies with instance class, storage type, and database engine. | Designed for higher throughput, especially for read-heavy workloads, using a distributed, fault-tolerant storage layer. | AWS cites up to 5x the throughput of standard MySQL and up to 3x that of standard PostgreSQL; actual gains depend on the workload. |
| Scalability | Vertical scaling (a larger instance class) is common; read replicas are available for supported engines. | Supports up to 15 Aurora Replicas, with optional auto scaling of replicas to handle increased read traffic. | Aurora’s replica auto scaling provides more flexibility for variable read workloads. |
| High Availability | Supports Multi-AZ deployments with a standby instance in another Availability Zone. | Keeps six copies of data across three Availability Zones and fails over automatically to an Aurora Replica when one exists. | Both services support automated backups and point-in-time recovery. |
| Cost | Pricing varies with instance class, storage, and data transfer. | Often more expensive than RDS for similar instance sizes, reflecting its enhanced features and performance. | Control cost by right-sizing instances and adding read replicas only where needed. |
| Storage | Uses the storage types available for the engine (e.g., General Purpose or Provisioned IOPS SSD); storage autoscaling can be enabled. | Distributed, fault-tolerant storage that grows automatically as needed. | Aurora’s storage layer is designed for higher durability and performance than standard instance storage. |

Role of VPC in Database Connectivity

A Virtual Private Cloud (VPC) plays a crucial role in securing and managing the connection between Lambda functions and relational databases. By deploying both the Lambda function and the database within a VPC, you can control network access and enhance security.

- Network Isolation: A VPC isolates your resources from the public internet and other AWS accounts. This isolation minimizes the attack surface and protects sensitive data.

- Security Groups: Security groups act as virtual firewalls, controlling inbound and outbound traffic for both the Lambda function and the database. You can configure security groups to allow only specific traffic between the function and the database, further enhancing security.

- Private Subnets: Databases are often deployed in private subnets within a VPC, making them inaccessible from the public internet. Lambda functions can access these databases through the VPC, providing an additional layer of security.

- Database Connectivity: To connect a Lambda function to a database within a VPC, attach the function to that VPC. Select subnets that can route to the database, typically private subnets in at least two Availability Zones; the function does not need to share the database’s subnet. You also need security group rules that allow the function to reach the database port.

- Example: Imagine a Lambda function processing customer orders. The function needs to access a database containing order details. By placing both the function and the database within a VPC and configuring security groups to allow traffic only between them, you create a secure, isolated environment. This prevents unauthorized access and ensures data confidentiality.
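The VPC attachment described above can also be scripted. This minimal sketch assumes the AWS SDK for Python (Boto3) and calls Lambda’s `update_function_configuration` with a `VpcConfig`. The client is passed in as a parameter, so the helper can be exercised without AWS credentials.

```python
from typing import Any, Dict, List


def build_vpc_config(subnet_ids: List[str], security_group_ids: List[str]) -> Dict[str, Any]:
    """Build the VpcConfig structure accepted by Lambda's UpdateFunctionConfiguration."""
    if not subnet_ids or not security_group_ids:
        raise ValueError("at least one subnet and one security group are required")
    return {"SubnetIds": list(subnet_ids), "SecurityGroupIds": list(security_group_ids)}


def attach_function_to_vpc(lambda_client, function_name: str,
                           subnet_ids: List[str], security_group_ids: List[str]):
    """Attach an existing function to private subnets using a Boto3 Lambda client."""
    return lambda_client.update_function_configuration(
        FunctionName=function_name,
        VpcConfig=build_vpc_config(subnet_ids, security_group_ids),
    )
```

For example: `attach_function_to_vpc(boto3.client("lambda"), "orders-fn", ["subnet-aaa", "subnet-bbb"], ["sg-lambda"])`. The function name, subnet IDs, and security group ID here are hypothetical. Subnets in more than one Availability Zone keep the function available if one zone has problems.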

Setting Up the Database (RDS/Aurora)

To successfully integrate a Lambda function with a relational database, a properly configured database instance is essential. This section details the steps involved in setting up an RDS or Aurora instance, configuring security, and designing a suitable database schema. The choice between RDS and Aurora often depends on factors such as performance requirements, cost considerations, and the need for high availability.

Aurora, which offers MySQL- and PostgreSQL-compatible editions, is designed for higher performance and scalability than the standard RDS engines, particularly for read-heavy workloads.

Creating a New RDS Instance

The creation of a new RDS instance involves several key steps within the AWS Management Console. These steps define the database engine, instance size, storage, and network configuration.

The following outlines the process:

- Choose Database Engine: Select the desired database engine (e.g., MySQL, PostgreSQL, MariaDB, Oracle, SQL Server). The choice should align with the Lambda function’s requirements and the familiarity of the development team. For instance, MySQL is a popular choice due to its ease of use and widespread support. PostgreSQL, on the other hand, provides more advanced features, particularly useful for complex data models and stored procedures.

- Select Deployment Type: Choose between a Single-AZ deployment (for development or non-critical workloads) and a Multi-AZ deployment (for high availability and production environments). Multi-AZ deployments automatically fail over to a standby instance in a different Availability Zone if the primary fails, minimizing downtime.

- Configure Instance Details: Specify the instance class (determines CPU, memory, and network performance), the database instance identifier (a unique name for the instance), and the master username and password. Choose an instance class appropriate for the expected workload. Consider using a T3 instance for development and testing and scaling up to a larger instance class (e.g., M5 or R5) for production.

- Configure Storage: Select the storage type (e.g., General Purpose SSD, Provisioned IOPS SSD). Provisioned IOPS provides consistent performance for I/O-intensive workloads. The storage size should be sufficient for the database size and anticipated growth.

- Configure Network and Security: Select the VPC (Virtual Private Cloud) where the database instance will reside. Choose a security group that allows inbound traffic from the Lambda function’s security group (discussed in the next section). It is crucial to place the database in a private subnet to restrict access to the database from the public internet, enhancing security.

- Configure Database Options: Specify the database name, port, and other engine-specific settings (e.g., character set). Consider setting up automated backups and enabling monitoring.

- Launch the Instance: Review the configuration and launch the RDS instance. The instance creation process typically takes several minutes.
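The console steps above map onto the parameters of the RDS `CreateDBInstance` API. The sketch below builds those keyword arguments for Boto3’s `create_db_instance`. The admin user name `dbadmin` and the defaults (20 GiB of gp3 storage, 7-day backup retention) are assumptions for illustration, not recommendations for every workload. `ManageMasterUserPassword=True` asks RDS to generate the master password and store it in AWS Secrets Manager instead of passing it in code.

```python
from typing import Any, Dict, List

# Default ports for the two engines discussed in this guide.
DEFAULT_PORTS = {"mysql": 3306, "postgres": 5432}


def build_db_instance_params(identifier: str, engine: str, instance_class: str,
                             subnet_group: str, security_group_ids: List[str],
                             multi_az: bool = False, storage_gib: int = 20) -> Dict[str, Any]:
    """Return keyword arguments for boto3's rds.create_db_instance()."""
    return {
        "DBInstanceIdentifier": identifier,
        "Engine": engine,                       # "mysql" or "postgres"
        "DBInstanceClass": instance_class,      # e.g. "db.t3.micro" for development
        "AllocatedStorage": storage_gib,
        "StorageType": "gp3",
        "MasterUsername": "dbadmin",            # hypothetical admin user name
        "ManageMasterUserPassword": True,       # RDS keeps the password in Secrets Manager
        "DBSubnetGroupName": subnet_group,      # should contain private subnets
        "VpcSecurityGroupIds": list(security_group_ids),
        "PubliclyAccessible": False,            # no public endpoint
        "MultiAZ": multi_az,
        "Port": DEFAULT_PORTS[engine],
        "BackupRetentionPeriod": 7,             # days of automated backups
        "StorageEncrypted": True,
    }
```

`boto3.client("rds").create_db_instance(**build_db_instance_params("orders-db", "postgres", "db.t3.micro", "private-db-subnets", ["sg-db"]))` would then launch the instance. All names in that call are hypothetical.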

Configuring Security Groups to Allow Lambda Function Access

Security groups act as virtual firewalls that control inbound and outbound traffic to the RDS instance. Configuring these security groups correctly is crucial for allowing the Lambda function to access the database while maintaining security.

The configuration steps are as follows:

- Identify the Lambda Function’s Security Group: Determine the security group associated with the Lambda function. This security group will define the function’s outbound network traffic. You can find this information in the Lambda function’s configuration within the AWS Management Console.

- Create or Modify the RDS Security Group: Either create a new security group for the RDS instance or modify the existing one. The security group should be configured to allow inbound traffic from the Lambda function’s security group.

- Add an Inbound Rule: Add an inbound rule to the RDS security group. The rule should specify the following:

  - Type: Custom TCP rule.

  - Protocol: TCP.

  - Port Range: The database port (e.g., 3306 for MySQL, 5432 for PostgreSQL).

  - Source: The Lambda function’s security group ID.

- Associate the Security Group with the RDS Instance: Ensure the RDS instance is associated with the security group that allows access from the Lambda function’s security group. This is usually done during the RDS instance creation or modification process.

- Test the Connection: After configuring the security groups, test the connection between the Lambda function and the RDS instance. Deploy the Lambda function with the necessary code to connect to the database and verify that the connection is successful.

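By following these steps, you create a secure and functional connection between the Lambda function and the RDS instance. The security group rules block network traffic to the database port from any source other than the Lambda function’s security group. Database authentication still applies on top of this network control.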
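The inbound rule and the connection test can also be sketched in Python. Assuming Boto3, `allow_lambda_to_db` calls EC2’s `authorize_security_group_ingress` with a rule whose source is the Lambda function’s security group rather than an IP range. `can_reach` is a hypothetical helper, run from inside the VPC-attached function, that only checks whether a TCP connection to the database port opens. That separates network problems from credential problems.

```python
import socket
from typing import Any, Dict


def build_ingress_rule(lambda_sg_id: str, port: int) -> Dict[str, Any]:
    """One IpPermissions entry allowing TCP `port` from the Lambda security group."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "UserIdGroupPairs": [{"GroupId": lambda_sg_id}],  # source is a security group
    }


def allow_lambda_to_db(ec2_client, db_sg_id: str, lambda_sg_id: str, port: int):
    """Add the inbound rule to the database's security group via a Boto3 EC2 client."""
    return ec2_client.authorize_security_group_ingress(
        GroupId=db_sg_id,
        IpPermissions=[build_ingress_rule(lambda_sg_id, port)],
    )


def can_reach(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

If `can_reach(db_host, 5432)` returns `False` from inside the function, check the security groups and subnet routing before looking at credentials.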

Designing the Database Schema (Tables, Relationships) for a Specific Use Case (e.g., User Authentication)

Designing a well-structured database schema is essential for the performance, scalability, and maintainability of the application. The schema defines the tables, columns, data types, relationships, and constraints within the database.

Consider a use case for user authentication. The database schema should include the following elements:

- Users Table:

  - Columns:

    - `user_id` (INT, PRIMARY KEY, AUTO_INCREMENT): Unique identifier for each user.
    - `username` (VARCHAR(255), UNIQUE, NOT NULL): The user’s chosen username.
    - `password_hash` (VARCHAR(255), NOT NULL): A secure hash of the user’s password (never store passwords in plain text).
    - `email` (VARCHAR(255), UNIQUE, NOT NULL): The user’s email address.
    - `created_at` (TIMESTAMP, DEFAULT CURRENT_TIMESTAMP): Timestamp indicating when the user account was created.
    - `last_login` (TIMESTAMP, NULL): Timestamp indicating the last time the user logged in.

- Roles Table (optional, for role-based access control):

  - Columns:

    - `role_id` (INT, PRIMARY KEY, AUTO_INCREMENT): Unique identifier for each role.
    - `role_name` (VARCHAR(255), UNIQUE, NOT NULL): The name of the role (e.g., ‘admin’, ‘user’).

- User_Roles Table (optional, for the many-to-many relationship between users and roles):

  - Columns:

    - `user_id` (INT, FOREIGN KEY referencing Users.user_id, NOT NULL): The user’s ID.
    - `role_id` (INT, FOREIGN KEY referencing Roles.role_id, NOT NULL): The role’s ID.
    - PRIMARY KEY (user_id, role_id): Composite primary key ensuring each user-role pair appears only once.

Example SQL for creating the Users table (MySQL syntax):

```sql
CREATE TABLE Users (
    user_id INT AUTO_INCREMENT PRIMARY KEY,
    username VARCHAR(255) UNIQUE NOT NULL,
    password_hash VARCHAR(255) NOT NULL,
    email VARCHAR(255) UNIQUE NOT NULL,
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP,
    last_login TIMESTAMP NULL
);
```

This schema design provides a solid foundation for user authentication, enabling the storage and management of user credentials securely. The inclusion of timestamps allows for auditing and tracking user activity. Using a password hash instead of storing passwords directly is crucial for security.
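Because the schema stores only `password_hash`, the function needs a way to produce and check that hash. The sketch below uses PBKDF2-HMAC-SHA256 from Python’s standard `hashlib` module, which is one acceptable choice among several; bcrypt or Argon2 via third-party libraries are common alternatives. The `algorithm$iterations$salt$digest` storage format and the iteration count are assumptions. The resulting string fits comfortably in `VARCHAR(255)`.

```python
import base64
import hashlib
import hmac
import os

# Assumed work factor; raise or lower it to fit your latency budget.
ITERATIONS = 600_000


def hash_password(password: str, iterations: int = ITERATIONS) -> str:
    """Return 'pbkdf2_sha256$<iterations>$<salt>$<digest>' with a random 16-byte salt."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return "pbkdf2_sha256${}${}${}".format(
        iterations,
        base64.b64encode(salt).decode("ascii"),
        base64.b64encode(digest).decode("ascii"),
    )


def verify_password(password: str, stored: str) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    algorithm, iterations, salt_b64, digest_b64 = stored.split("$")
    if algorithm != "pbkdf2_sha256":
        return False
    salt = base64.b64decode(salt_b64)
    expected = base64.b64decode(digest_b64)
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, int(iterations))
    return hmac.compare_digest(candidate, expected)
```

The registration code would store `hash_password(plain_password)` in the `password_hash` column. The login code would fetch that value by username and call `verify_password`.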

Creating the Lambda Function

Now, the stage shifts to the development of the Lambda function itself, the computational engine that bridges the serverless environment with the relational database. This involves defining the function’s structure, writing the code to interact with the database, and incorporating error handling to ensure robustness. The following sections detail the process of crafting a Python-based Lambda function designed for this purpose.

Organizing the Lambda Function’s Code Structure

Structuring the Lambda function’s code is crucial for maintainability, readability, and efficient execution. A well-organized function is easier to debug, update, and scale.

- Handler Function: The handler function is the entry point of the Lambda function. It receives events from the triggering service (e.g., API Gateway, S3) and processes them. This function orchestrates the interaction with the database and any other required operations. The handler’s signature typically includes the event object, which contains the data triggering the function, and the context object, which provides information about the function’s execution environment.

- Dependencies: Lambda functions often rely on external libraries and packages. These dependencies must be packaged along with the function’s code. This can be achieved by creating a deployment package that includes the necessary libraries. The deployment package is uploaded to AWS, and Lambda uses it to execute the function. For Python, this usually involves using a package manager like `pip` to install dependencies and then bundling them with the function code.

- Helper Functions: Complex operations should be encapsulated within helper functions. This promotes code reuse and modularity. For instance, database connection logic, data validation, and data transformation can be separated into their own functions. This makes the code cleaner and easier to understand.

- Error Handling: Robust error handling is essential. The function should gracefully handle potential errors, such as database connection failures, incorrect data formats, and unexpected exceptions. Implementing `try-except` blocks and logging errors can help diagnose and resolve issues.
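The structure described above might look like the following minimal sketch for an API Gateway proxy event. The `user_id` query parameter and the `fetch_user` data-access helper (left unimplemented here) are hypothetical. So is the optional `fetch` argument, which swaps in a test double. The point is the separation of input parsing, data access, and response formatting, with errors logged and mapped to HTTP status codes.

```python
import json
import logging
from typing import Any, Callable, Dict, Optional

logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)


def parse_user_id(event: Dict[str, Any]) -> Optional[int]:
    """Helper: read ?user_id= from an API Gateway proxy event; None if invalid."""
    params = event.get("queryStringParameters") or {}
    raw = params.get("user_id") or ""
    if not raw.isdigit() or int(raw) == 0:
        return None
    return int(raw)


def build_response(status: int, payload: Any) -> Dict[str, Any]:
    """Helper: format a proxy-integration response with a JSON string body."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload, default=str),
    }


def fetch_user(user_id: int) -> Optional[Dict[str, Any]]:
    """Hypothetical data-access helper; replace with a real SQL query."""
    raise NotImplementedError("query the database here")


def lambda_handler(event, context, fetch: Optional[Callable] = None):
    """Entry point: validate input, call the data layer, map outcomes to HTTP codes."""
    fetch = fetch or fetch_user  # `fetch` lets tests inject a stand-in
    user_id = parse_user_id(event)
    if user_id is None:
        return build_response(400, {"error": "user_id must be a positive integer"})
    try:
        user = fetch(user_id)
    except Exception:
        # Log the full traceback to CloudWatch; return a generic message to the caller.
        logger.exception("Failed to load user %s", user_id)
        return build_response(500, {"error": "internal error"})
    if user is None:
        return build_response(404, {"error": "user not found"})
    return build_response(200, user)
```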

Creating a Lambda Function Using Python to Interact with the Database

Creating a Lambda function in Python involves writing the code to connect to the database, execute queries, and process the results. The following steps illustrate how to create such a function.

The code below provides a basic example of a Python Lambda function that connects to a PostgreSQL database, executes a simple query, and returns the results. This example assumes that the database connection parameters (host, username, password, database name) are stored as environment variables in the Lambda function’s configuration.

```python
import json
import os

import psycopg2


def lambda_handler(event, context):
    """Lambda function to query a PostgreSQL database."""
    conn = None
    try:
        # Retrieve database connection parameters from environment variables
        conn = psycopg2.connect(
            host=os.environ["DB_HOST"],
            user=os.environ["DB_USER"],
            password=os.environ["DB_PASSWORD"],
            dbname=os.environ["DB_NAME"],
            connect_timeout=5,  # fail fast instead of hanging until the Lambda timeout
        )
        # The cursor context manager closes the cursor when the block exits
        with conn.cursor() as cur:
            # your_table is a placeholder; list explicit columns in real code
            cur.execute("SELECT * FROM your_table;")
            rows = cur.fetchall()
        # API Gateway expects a string body; default=str converts dates and decimals
        return {"statusCode": 200, "body": json.dumps(rows, default=str)}
    except psycopg2.Error as e:
        # Handle database connection or query errors
        return {"statusCode": 500, "body": json.dumps({"error": f"Database error: {e}"})}
    except Exception as e:
        # Handle other exceptions (e.g., a missing environment variable)
        return {"statusCode": 500, "body": json.dumps({"error": f"An error occurred: {e}"})}
    finally:
        # Release the connection whether or not the query succeeded
        if conn is not None:
            conn.close()
```

Explanation of the Code:

- Import Statements: Imports the `psycopg2` library for PostgreSQL database interaction and the `os` module to access environment variables.

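- Environment Variables: The function retrieves database connection parameters (host, user, password, database name) from environment variables, which must be set in the Lambda function’s configuration in the AWS console. This keeps credentials out of the source code. However, the values are visible to anyone allowed to view the function’s configuration, so a dedicated secrets service such as AWS Secrets Manager is preferable for passwords in production.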

- Database Connection: Establishes a connection to the PostgreSQL database using the retrieved parameters.

- Query Execution: Executes a SQL query using the cursor object.

- Result Processing: Fetches all results from the query and stores them in a list.

- Connection Closure: Closes the cursor and the database connection to release resources.

- Error Handling: Includes `try-except` blocks to handle potential errors, such as connection failures or SQL syntax errors. The error messages are returned in the response body.

- Return Value: Returns a JSON response with the query results or an error message.
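The example above opens and closes a connection on every invocation. A common refinement, sketched here assuming psycopg2 as the driver, keeps the connection in a module-level variable so warm invocations reuse it, and reconnects when the cached connection has been closed. The `get_connection` and `connect_from_env` helpers are hypothetical. The driver import is deferred only so the reuse logic can be tested without psycopg2 installed.

```python
import os
from typing import Any, Callable, Optional

# Module-level state persists across invocations in a warm execution environment.
_conn: Optional[Any] = None


def get_connection(connect: Callable[[], Any]) -> Any:
    """Return the cached connection, (re)creating it if missing or closed."""
    global _conn
    # psycopg2 connections expose `closed`: 0 while open, nonzero once closed.
    if _conn is None or getattr(_conn, "closed", 0):
        _conn = connect()
    return _conn


def connect_from_env() -> Any:
    """Open a psycopg2 connection from the same environment variables as above."""
    import psycopg2  # deferred import; a real function would import at module top

    return psycopg2.connect(
        host=os.environ["DB_HOST"],
        user=os.environ["DB_USER"],
        password=os.environ["DB_PASSWORD"],
        dbname=os.environ["DB_NAME"],
        connect_timeout=5,
    )
```

Inside the handler, `conn = get_connection(connect_from_env)` replaces the per-invocation `psycopg2.connect` call, and the connection is no longer closed at the end of each request. A connection dropped by the server is only marked closed after an operation on it fails, so pair this pattern with retry logic.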

Demonstrating How to Handle Database Connection Errors Within the Lambda Function

Handling database connection errors is crucial for ensuring the resilience of the Lambda function. This involves anticipating potential issues and implementing strategies to gracefully manage them.

The example code in the previous section already incorporates basic error handling using `try-except` blocks. Here’s a more detailed discussion of error handling considerations.

- Connection Errors: If the Lambda function fails to connect to the database (e.g., due to network issues, incorrect credentials, or database unavailability), the `psycopg2.Error` exception will be caught. The function can then log the error and return an appropriate error response (e.g., HTTP 500 Internal Server Error) to the caller. The error message should provide enough information to diagnose the problem.

- Query Errors: If the SQL query is invalid or encounters issues during execution, the `psycopg2.Error` exception will be caught. This might be due to syntax errors, incorrect table or column names, or data type mismatches. The function can log the specific error and return an error response.

- Connection Pooling: Opening a new database connection on every invocation adds latency and load on the database. Each Lambda execution environment handles one request at a time, so an in-process connection pool offers limited benefit. Instead, create the connection outside the handler so warm invocations reuse it. Also consider Amazon RDS Proxy, which pools and shares connections across many concurrent function instances.

- Retry Mechanisms: In cases of transient errors (e.g., temporary network issues), consider implementing a retry mechanism. The function can attempt to reconnect to the database a few times before giving up. Use exponential backoff to avoid overwhelming the database.

- Logging: Thorough logging is essential for debugging. The function should log detailed information about errors, including the error type, error message, and any relevant context (e.g., the SQL query that failed). Use a logging framework to structure and format the logs for easier analysis.

- Circuit Breaker Pattern: If a database is consistently failing, consider implementing a circuit breaker pattern. This prevents the function from repeatedly attempting to connect to the failing database. The circuit breaker monitors the connection attempts and, if a certain threshold of failures is reached, “opens” the circuit, preventing further connection attempts for a set period. After a timeout, the circuit “closes” and allows connection attempts again.
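The retry and circuit breaker ideas can be combined in a small amount of code. The following is a minimal sketch, not a production library. `retry_with_backoff` retries only the exception types you pass, using exponential backoff with full jitter; for psycopg2, `psycopg2.OperationalError` is a reasonable assumption for transient connection failures. `CircuitBreaker` opens after a number of consecutive failures and allows a single trial call once the cooldown expires. Both keep their state in memory, so each Lambda execution environment tracks failures independently.

```python
import random
import time
from typing import Any, Callable, Optional, Tuple, Type


def retry_with_backoff(operation: Callable[[], Any],
                       retryable: Tuple[Type[BaseException], ...],
                       attempts: int = 3, base_delay: float = 0.2, max_delay: float = 2.0,
                       sleep: Callable[[float], None] = time.sleep) -> Any:
    """Call `operation`, retrying `retryable` errors with full-jitter exponential backoff."""
    for attempt in range(attempts):
        try:
            return operation()
        except retryable:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Sleep a random time up to base_delay * 2**attempt, capped at max_delay.
            sleep(random.uniform(0, min(max_delay, base_delay * (2 ** attempt))))


class CircuitOpenError(Exception):
    """Raised while the breaker is open and calls are being short-circuited."""


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; allows a trial call after `cooldown` s."""

    def __init__(self, threshold: int = 5, cooldown: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, operation: Callable[[], Any]) -> Any:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown:
                raise CircuitOpenError("database circuit is open")
            self.opened_at = None  # cooldown over: let one trial call through
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        self.failures = 0  # success closes the circuit
        return result
```

For example, keep `breaker = CircuitBreaker()` at module level and call `breaker.call(lambda: retry_with_backoff(open_connection, (psycopg2.OperationalError,)))`, where `open_connection` is a placeholder for your own connection function.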

Configuring IAM Roles and Permissions

To successfully connect a Lambda function to a relational database, robust Identity and Access Management (IAM) configuration is paramount. This section details the essential IAM roles and permissions required, providing a step-by-step guide for creating an IAM role with the necessary policies, and outlining best practices for securing database credentials within the Lambda function’s environment. Proper configuration minimizes security vulnerabilities and ensures the Lambda function can interact with the database securely and efficiently.

IAM Roles and Permissions for Database Access

IAM roles grant permissions to the entity that assumes them, allowing it to call AWS APIs. A Lambda function runs with an execution role, which determines the AWS services and resources the function can use; if a required permission is missing, the corresponding API call or connection attempt fails. IAM does not control SQL-level access, however. Which tables the function can read or modify is governed by the privileges of the database user it connects as. The IAM configuration for database access involves the following:

- Lambda Execution Role: This is the primary role assumed by the Lambda function during execution. It defines the permissions the function has to interact with other AWS services, including the database.

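- Permissions for Database Access: These permissions are granted through IAM policies. They are required when the function authenticates with IAM database authentication (the `rds-db:connect` action) or runs SQL through the RDS Data API (the `rds-data:ExecuteStatement` action). With conventional username and password authentication, no database-specific IAM permission is needed.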

- Permissions for Networking (if applicable): If the database resides within a VPC, the IAM role must have permissions to access the VPC and its associated resources. This includes permissions for elastic network interfaces (ENIs) that enable the Lambda function to communicate with resources inside the VPC.
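With IAM database authentication enabled, the function does not store a password at all. It uses its execution role to generate a short-lived authentication token (valid for 15 minutes) and passes it as the password over an SSL connection. This sketch assumes Boto3’s RDS client method `generate_db_auth_token` and psycopg2-style connection keywords. The database user must also be enabled for IAM authentication; for PostgreSQL, that means granting it the `rds_iam` role.

```python
from typing import Any, Dict


def iam_connection_kwargs(rds_client, host: str, port: int, user: str,
                          region: str, dbname: str) -> Dict[str, Any]:
    """Build psycopg2.connect() keywords that authenticate with an IAM token."""
    # The token is signed with the caller's IAM credentials (the execution role),
    # which must allow rds-db:connect for this database user.
    token = rds_client.generate_db_auth_token(
        DBHostname=host, Port=port, DBUsername=user, Region=region
    )
    return {
        "host": host,
        "port": port,
        "user": user,
        "password": token,     # the token takes the place of a stored password
        "dbname": dbname,
        "sslmode": "require",  # IAM authentication requires SSL
    }
```

Usage would be `psycopg2.connect(**iam_connection_kwargs(boto3.client("rds"), "orders-db.example.us-east-1.rds.amazonaws.com", 5432, "app_user", "us-east-1", "orders"))`, with all values hypothetical. For stricter checking, `sslmode="verify-full"` together with the RDS certificate bundle supplied via `sslrootcert` also validates the server certificate.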

Creating an IAM Role with Required Policies

Creating an IAM role involves defining the trust relationship, which specifies which entity can assume the role, and attaching policies that grant the necessary permissions. Here’s a step-by-step guide:

- Create a New IAM Role:

In the AWS Management Console, navigate to the IAM service. Click on “Roles” and then “Create role.”

- Choose the Trusted Entity:

Select “AWS service” as the trusted entity. Then, choose “Lambda” as the service that will use this role. This configures the role to be assumable by the Lambda service.

- Attach Permissions Policies:

Attach the necessary policies. These policies define the permissions the Lambda function will have. The following policies are typically required:

- AWSLambdaBasicExecutionRole: This is a managed policy provided by AWS that grants basic permissions for Lambda function execution, including logging to CloudWatch.

- Custom Policy for Database Access (if applicable): Create a custom policy that grants only the database-related actions the function needs. Depending on the access method, these include `rds-db:connect` for IAM database authentication, `rds-data:ExecuteStatement` for the RDS Data API, and read-only metadata actions such as `rds:DescribeDBInstances` or, for Aurora, `rds:DescribeDBClusters`. A function that connects with a username and password over the network needs none of these.

- VPC Access Permissions (if applicable): If the database is within a VPC, the function must be able to create and manage ENIs. This requires permissions such as `ec2:CreateNetworkInterface`, `ec2:DescribeNetworkInterfaces`, `ec2:DeleteNetworkInterface`, and `ec2:AssignPrivateIpAddresses`. The AWS managed policy `AWSLambdaVPCAccessExecutionRole` grants these.

- Define the Policy:

When creating the custom policy for database access, specify only the actions the Lambda function needs and the specific resources it may access. IAM cannot restrict which SQL statements run: read-only behavior comes from the privileges granted to the database user. The following example allows IAM database authentication as a single database user and lets the function read the instance’s metadata:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "rds-db:connect",
      "Resource": "arn:aws:rds-db:REGION:ACCOUNT_ID:dbuser:DB_RESOURCE_ID/DB_USER_NAME"
    },
    {
      "Effect": "Allow",
      "Action": "rds:DescribeDBInstances",
      "Resource": "arn:aws:rds:REGION:ACCOUNT_ID:db:DATABASE_IDENTIFIER"
    }
  ]
}
```

Replace `REGION`, `ACCOUNT_ID`, `DB_RESOURCE_ID` (for an RDS instance, its resource ID, which starts with `db-`), `DB_USER_NAME`, and `DATABASE_IDENTIFIER` with the appropriate values for your database.

- Review and Create the Role:

Review the role details, including the attached policies and the trust relationship. Then, create the role.

- Configure the Lambda Function:

In the Lambda function configuration, set the “Execution role” to the IAM role you just created. Lambda assumes this role when it runs the function, so the function receives exactly the permissions defined in the role.

Securing Database Credentials in Lambda

Protecting database credentials is a critical aspect of securing the Lambda function. Hardcoding credentials directly into the function’s code is highly discouraged, as this creates a significant security risk. Several secure methods for storing and accessing credentials include:

- AWS Secrets Manager:

Secrets Manager is a service for securely storing and managing secrets, including database credentials. You can store the database username, password, endpoint, and other sensitive information in Secrets Manager. The Lambda function can then retrieve these secrets using the AWS SDK. This approach provides encryption, access control, and the ability to rotate secrets automatically.

To use Secrets Manager, you will:

- Create a secret in Secrets Manager containing your database credentials.

- Grant the Lambda function’s IAM role permission to read the secret.

- Use the AWS SDK for Python (Boto3) or another supported language to retrieve the secret within your Lambda function code.
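A minimal sketch of the retrieval step, assuming the secret is stored as a JSON string with keys such as `username`, `password`, and `host` (the layout Secrets Manager uses for its RDS credential secrets). The helper name `get_db_credentials` and its optional `client` parameter are illustrative; the parameter exists only so the function can be exercised without AWS access. The parsed secret is cached at module level, so warm invocations skip the API call.

```python
import json

# Module-level cache: survives across warm invocations of the same execution environment.
_secret_cache = {}


def get_db_credentials(secret_id, client=None):
    """Fetch a JSON secret from AWS Secrets Manager and cache it per secret_id."""
    if secret_id not in _secret_cache:
        if client is None:
            import boto3  # bundled with the Lambda Python runtime
            client = boto3.client("secretsmanager")
        response = client.get_secret_value(SecretId=secret_id)
        _secret_cache[secret_id] = json.loads(response["SecretString"])
    return _secret_cache[secret_id]
```

Because of the cache, a rotated password is not picked up until the execution environment is recycled; a production version might clear the cache entry and fetch the secret again after an authentication failure.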

- AWS Systems Manager Parameter Store:

Parameter Store is another service for storing configuration data, including secrets. It offers a hierarchical structure and encryption options. Although not specifically designed for secrets management like Secrets Manager, it can be used to store and retrieve database credentials.

Using Parameter Store involves:

- Storing the database credentials as parameters in Parameter Store, encrypted if desired.

- Granting the Lambda function’s IAM role permission to read the parameters.

- Using the AWS SDK to retrieve the parameter values within the Lambda function.
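A sketch of reading several credentials from Parameter Store in one request. The parameter names (for example `/myapp/db/password`) are hypothetical; `WithDecryption=True` returns `SecureString` values decrypted. The optional `client` parameter exists only for testing without AWS.

```python
def load_db_parameters(names, client=None):
    """Fetch several Parameter Store values in one call and return {name: value}."""
    if client is None:
        import boto3  # bundled with the Lambda Python runtime
        client = boto3.client("ssm")
    # get_parameters accepts up to 10 names per request.
    response = client.get_parameters(Names=list(names), WithDecryption=True)
    missing = response.get("InvalidParameters", [])
    if missing:
        # Fail loudly instead of connecting with incomplete credentials.
        raise KeyError(f"Parameters not found: {missing}")
    return {param["Name"]: param["Value"] for param in response["Parameters"]}
```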

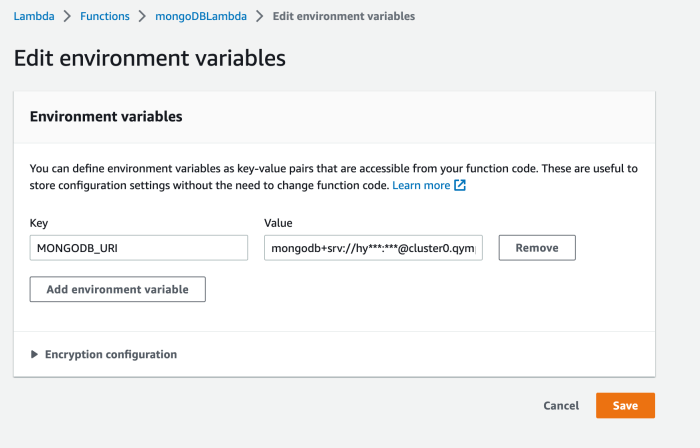

- Environment Variables:

Environment variables can be used to store database credentials. This method is simpler than Secrets Manager or Parameter Store, but it is less secure: Lambda encrypts environment variables at rest, yet with the default AWS managed key anyone allowed to view the function's configuration can read the values in plain text, and there is no built-in rotation.

To use environment variables:

- Set the database credentials as environment variables in the Lambda function configuration.

- Access the environment variables within the Lambda function code using the appropriate language-specific methods (e.g., `os.environ.get()` in Python).
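A small sketch that reads the `DB_HOST`, `DB_NAME`, `DB_USER`, and `DB_PASSWORD` variables used elsewhere in this guide and fails fast with a clear message when one is missing. `DB_PORT` and its PostgreSQL default of 5432 are assumptions; the `environ` parameter exists only to make the function testable.

```python
import os

REQUIRED_DB_SETTINGS = ("DB_HOST", "DB_NAME", "DB_USER", "DB_PASSWORD")


def read_db_settings(environ=os.environ):
    """Read database settings from environment variables, failing fast if any are missing."""
    missing = [name for name in REQUIRED_DB_SETTINGS if not environ.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    settings = {name: environ[name] for name in REQUIRED_DB_SETTINGS}
    # Optional setting with a default (5432 is PostgreSQL's standard port).
    settings["DB_PORT"] = int(environ.get("DB_PORT", "5432"))
    return settings
```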

It is important to choose the method that best fits your security requirements and operational needs. Secrets Manager is generally recommended for its robust security features and ease of management. Regularly rotating credentials, regardless of the chosen method, is crucial for maintaining security.

Database Connection Methods

Connecting a Lambda function to a relational database is a critical aspect of building serverless applications that require data persistence. The choice of connection method significantly impacts performance, scalability, and cost-effectiveness. Understanding the various options and their trade-offs is essential for designing efficient and resilient systems.

Direct Database Connections

This approach opens a new database connection on every Lambda invocation and closes it afterward. It is straightforward, but each connection requires a network handshake, authentication, and session initialization (plus a TLS handshake if encryption is enabled), which adds latency to every request. Under high concurrency, the many short-lived connections can also exhaust the database's connection limit.

Connection Pooling

Connection pooling keeps a set of open database connections and reuses them instead of opening a new one for each request. When the function needs the database, it borrows a connection from the pool; when it finishes, it returns the connection so it can be used again. This avoids repeated connection setup, which reduces latency and improves resource utilization.

In Lambda, a pool created outside the handler lives in the execution environment, and each environment processes one invocation at a time. An in-function pool therefore reuses connections across successive (warm) invocations in the same environment, not across concurrent invocations; sharing connections across environments requires an external pooler such as Amazon RDS Proxy.
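Because an execution environment handles one invocation at a time, the simplest form of reuse is to open a single connection lazily at module scope and keep it for later warm invocations. A minimal sketch, assuming psycopg2 and the `DB_*` environment variables; the `connect` parameter is a hypothetical hook so the logic can be tested with a stub. psycopg2 connections expose a `closed` attribute that is nonzero once the connection is closed or broken.

```python
import os

_connection = None  # survives between warm invocations of one execution environment


def _default_connect():
    import psycopg2  # imported lazily so this module loads (and tests) without the driver
    return psycopg2.connect(
        host=os.environ["DB_HOST"],
        dbname=os.environ["DB_NAME"],
        user=os.environ["DB_USER"],
        password=os.environ["DB_PASSWORD"],
        connect_timeout=5,  # seconds; fail fast instead of hanging until the Lambda timeout
    )


def get_connection(connect=_default_connect):
    """Return the cached connection, opening a new one if none exists or it was closed."""
    global _connection
    if _connection is None or _connection.closed:
        _connection = connect()
    return _connection


def lambda_handler(event, context):
    conn = get_connection()
    with conn.cursor() as cur:
        cur.execute("SELECT 1;")
        (value,) = cur.fetchone()
    conn.rollback()  # end the read transaction psycopg2 opened implicitly
    return {"statusCode": 200, "body": str(value)}
```

A connection can also die without `closed` being set yet (for example, when the database restarts); production code usually catches `psycopg2.OperationalError`, resets `_connection`, and retries once.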

Comparison of Connection Methods

Choosing the right connection method depends on the specific requirements of the application, considering factors like expected traffic, latency sensitivity, and cost constraints. Below is a comparison of the methods discussed.

- Direct Database Connections:

- Pros: Simple to implement for basic scenarios.

- Cons: High latency due to connection establishment overhead, inefficient resource utilization, and potential for connection exhaustion under high load.

- Use Cases: Low-traffic applications or testing environments where performance is not critical.

- Connection Pooling:

- Pros: Significantly reduced latency, improved resource utilization, and better scalability.

- Cons: Requires more complex implementation and configuration. Connection leaks can degrade performance.

- Use Cases: High-traffic applications requiring low latency and efficient resource management.

Demonstration of Connection Pooling

Implementing connection pooling involves using a database library that supports pooling, such as `pg` (its `Pool` class) for Node.js, `psycopg2` (its `psycopg2.pool` module) for Python, or a JDBC pool such as HikariCP for Java. This demonstration shows a simplified example using Python and `psycopg2`.

First, install the library:

```bash
pip install psycopg2-binary
```

The following Python code illustrates connection pooling within a Lambda function:

```python
import os

from psycopg2.pool import SimpleConnectionPool

# Database connection parameters (retrieved from environment variables)
db_host = os.environ.get("DB_HOST")
db_name = os.environ.get("DB_NAME")
db_user = os.environ.get("DB_USER")
db_password = os.environ.get("DB_PASSWORD")

# Created once per execution environment, outside the handler,
# so warm invocations reuse its connections.
pool = SimpleConnectionPool(
    minconn=1,  # Minimum number of connections in the pool
    maxconn=5,  # Maximum number of connections in the pool
    host=db_host,
    database=db_name,
    user=db_user,
    password=db_password,
)


def lambda_handler(event, context):
    """Lambda function handler that queries PostgreSQL using a pooled connection."""
    conn = None
    try:
        conn = pool.getconn()  # Borrow a connection from the pool
        with conn.cursor() as cur:
            cur.execute("SELECT version();")
            db_version = cur.fetchone()[0]
        conn.commit()  # End the transaction psycopg2 opens implicitly
        print(f"Database version: {db_version}")
        return {"statusCode": 200, "body": f"Database version: {db_version}"}
    except Exception as e:
        print(f"Error: {e}")  # Full details go to CloudWatch Logs only
        return {"statusCode": 500, "body": "Internal error"}
    finally:
        if conn is not None:
            pool.putconn(conn)  # Always return the connection, even after an error
```

In this example:

- A connection pool is created using `SimpleConnectionPool`. The parameters `minconn` and `maxconn` control the size of the pool.

- Inside the Lambda function handler, a connection is retrieved from the pool using `pool.getconn()`.

- After the database operations are completed, the connection is returned to the pool using `pool.putconn(conn)`.

This implementation reuses database connections across warm invocations of the same execution environment, avoiding the cost of opening a new connection on every request. Because each environment handles one invocation at a time, a small `maxconn` is sufficient unless the handler runs queries in parallel threads. Configure the `DB_HOST`, `DB_NAME`, `DB_USER`, and `DB_PASSWORD` environment variables in the Lambda function configuration (or load the credentials from Secrets Manager, as described earlier). With pooling in place, latency and scalability improve, especially under sustained load.
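Forgetting `putconn` on an error path leaks a connection from the pool. A small context manager, sketched below under the assumption of a psycopg2-style pool with `getconn()` and `putconn()`, makes the borrow/return pair impossible to forget and commits or rolls back automatically. `pooled_connection` is an illustrative name, not part of psycopg2.

```python
from contextlib import contextmanager


@contextmanager
def pooled_connection(pool):
    """Borrow a connection from the pool and always give it back.

    Commits if the with-block succeeds and rolls back if it raises.
    """
    conn = pool.getconn()
    try:
        yield conn
        conn.commit()
    except Exception:
        conn.rollback()
        raise
    finally:
        pool.putconn(conn)
```

With the `pool` from the example above, a handler would write `with pooled_connection(pool) as conn:` and run its queries inside the block.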

Writing SQL Queries and Data Manipulation

Effectively querying and manipulating data within a relational database is fundamental to the functionality of a Lambda function interacting with it. This involves crafting SQL statements to retrieve, insert, update, and delete data. The following sections detail the construction of SQL queries and best practices for secure execution within a Lambda function environment.

SQL Query Construction

SQL (Structured Query Language) provides the means to interact with relational databases. Understanding the syntax and functionality of common SQL commands is crucial for data manipulation.

- SELECT: Retrieves data from one or more tables. It allows specifying which columns to retrieve, applying filtering criteria using the `WHERE` clause, and sorting results with `ORDER BY`.

- INSERT: Adds new data into a table. It specifies the table and the values to be inserted for each column.

- UPDATE: Modifies existing data in a table. The `WHERE` clause is used to specify which rows to update.

- DELETE: Removes data from a table. The `WHERE` clause is used to specify which rows to delete.

For example, consider a table named `users` with columns `id`, `name`, and `email`.

- To retrieve all users: `SELECT * FROM users;`

- To insert a new user: `INSERT INTO users (name, email) VALUES ('John Doe', 'john.doe@example.com');`

- To update a user’s email: `UPDATE users SET email = 'john.new@example.com' WHERE id = 1;`

- To delete a user: `DELETE FROM users WHERE id = 1;`
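To try these statements without provisioning a database, the sketch below runs them against an in-memory SQLite database using Python's built-in `sqlite3` module as a stand-in for PostgreSQL or MySQL. Values are passed as parameters (the `?` placeholders) rather than pasted into the SQL text; with `psycopg2` the placeholder is `%s` instead.

```python
import sqlite3


def demo_crud():
    """Run the INSERT, UPDATE, SELECT, and DELETE examples against a throwaway table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT NOT NULL)"
    )
    # INSERT: the INTEGER PRIMARY KEY column assigns id = 1 to the first row
    conn.execute(
        "INSERT INTO users (name, email) VALUES (?, ?)", ("John Doe", "john.doe@example.com")
    )
    # UPDATE: the WHERE clause restricts the change to one row
    conn.execute("UPDATE users SET email = ? WHERE id = ?", ("john.new@example.com", 1))
    after_update = conn.execute("SELECT * FROM users").fetchall()
    # DELETE: remove the row again
    conn.execute("DELETE FROM users WHERE id = ?", (1,))
    after_delete = conn.execute("SELECT * FROM users").fetchall()
    conn.commit()
    conn.close()
    return after_update, after_delete
```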

Safe Execution of SQL Queries

Security is paramount when executing SQL queries, especially in a Lambda function that receives untrusted input from events or API requests. The primary defense against SQL injection is to keep user-provided input out of the SQL text entirely by using parameterized queries, backed by input validation. A recommended procedure includes the following steps:

- Input Validation: Verify that all input data conforms to expected formats and constraints. For example, ensure that numerical inputs are indeed numbers and that strings do not exceed predefined lengths.

- Parameterization (Prepared Statements): Use parameterized queries or prepared statements. This approach separates the SQL code from the user-supplied data, preventing malicious input from being interpreted as part of the query. The database driver (or the database server, for server-side prepared statements) handles the quoting and escaping of the input values.

- Error Handling: Implement robust error handling to gracefully manage exceptions. This includes logging errors and providing informative feedback (without revealing sensitive information) to the user.
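A minimal validation sketch for a hypothetical request carrying `user_id` and `email` fields. The 254-character limit is a commonly used bound for email addresses and is an assumption here; validation narrows what reaches the database but does not replace parameterized queries.

```python
MAX_EMAIL_LENGTH = 254  # assumed upper bound for an email address


def validate_user_update(event):
    """Validate the hypothetical 'user_id' and 'email' fields before they reach any SQL."""
    user_id = event.get("user_id")
    email = event.get("email")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(user_id, bool) or not isinstance(user_id, int) or user_id <= 0:
        raise ValueError("user_id must be a positive integer")
    if not isinstance(email, str) or not email or len(email) > MAX_EMAIL_LENGTH:
        raise ValueError(f"email must be a non-empty string of at most {MAX_EMAIL_LENGTH} characters")
    if "@" not in email:
        raise ValueError("email must contain '@'")
    return user_id, email
```

The caller can map a `ValueError` to a 400 response without ever touching the database.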

Consider an example using Python with the `psycopg2` library for PostgreSQL:

```python
import psycopg2


def execute_query(conn, query, params=None):
    """Safely executes an SQL query using parameterized queries."""
    try:
        with conn.cursor() as cur:
            cur.execute(query, params)  # psycopg2 safely substitutes params into %s placeholders
            if query.lstrip().upper().startswith("SELECT"):
                return cur.fetchall()
            conn.commit()  # Persist INSERT/UPDATE/DELETE changes
            return cur.rowcount
    except psycopg2.Error as e:
        conn.rollback()  # Roll back the failed transaction
        print(f"Database error: {e}")
        raise  # Re-raise the exception for Lambda to handle


# Example call: user input travels only in params, never in the SQL text.
# rows = execute_query(conn, "SELECT id, name FROM users WHERE email = %s", (email,))
```

In this example, the `execute_query` function takes a database connection (`conn`), the SQL query (`query`), and optional parameters (`params`).

The function utilizes parameterized queries by passing user input through the `params` argument. This ensures that the user input is treated as data and not as executable SQL code, mitigating the risk of SQL injection. The function also includes error handling, logging any database errors, and rolling back the transaction if necessary. The `conn.commit()` is used for `INSERT`, `UPDATE`, and `DELETE` operations to save changes.

For `SELECT` queries, `cur.fetchall()` retrieves the results.
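The difference parameterization makes can be demonstrated with a classic injection string. This sketch again uses Python's built-in `sqlite3` as a stand-in database (placeholder `?`); with psycopg2 the safe version is the `execute_query` call with `params=(name,)`. The two helper functions are illustrative.

```python
import sqlite3


def find_users_unsafe(conn, name):
    # UNSAFE: user input is pasted into the SQL text
    return conn.execute(f"SELECT id, name FROM users WHERE name = '{name}'").fetchall()


def find_users_safe(conn, name):
    # SAFE: user input is sent as a parameter and treated purely as data
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

malicious = "x' OR '1'='1"
print(find_users_unsafe(conn, malicious))  # the injected OR clause matches every row
print(find_users_safe(conn, malicious))    # no rows: nobody has that literal name
```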

Deploying and Testing the Lambda Function

Deploying and testing a Lambda function that interacts with a relational database is a critical step in the development lifecycle. This process validates the function’s ability to connect to the database, execute queries, and handle data, ensuring its operational readiness and reliability. Rigorous testing minimizes the risk of production errors and confirms the intended functionality.

Deploying the Lambda Function to AWS

Deploying the Lambda function involves packaging the code and its dependencies and uploading it to the AWS Lambda service. This process makes the function available for execution based on triggers or direct invocations. The deployment process typically involves these steps:

- Packaging the Code: The Lambda function code, along with any necessary dependencies (e.g., database drivers, libraries), must be packaged into a deployment package. This package can be a ZIP file or a container image. For Python functions, this often involves creating a virtual environment, installing dependencies using `pip`, and zipping the function code and the `site-packages` directory.

- Creating or Updating the Lambda Function: Using the AWS Management Console, AWS CLI, or Infrastructure as Code (IaC) tools like AWS CloudFormation or Terraform, create a new Lambda function or update an existing one. When creating a function, specify the runtime (e.g., Python 3.12), the function’s name, the handler (the entry point of the function), and the execution role. When updating a function, upload the deployment package.

- Configuring Triggers (Optional): Configure triggers to invoke the function. Common triggers include API Gateway endpoints, scheduled events (using Amazon EventBridge, formerly CloudWatch Events), or events from other AWS services (e.g., S3 object creation, DynamoDB updates). The trigger configuration defines how and when the function is invoked.

- Setting Environment Variables (If applicable): Configure environment variables in the Lambda function’s configuration for settings such as the database host, the database name, or the name of the secret that holds the credentials. Keeping configuration out of the code simplifies management across environments; sensitive values such as passwords are better kept in Secrets Manager or Parameter Store.

- Monitoring and Logging: Enable monitoring and logging for the Lambda function. AWS CloudWatch provides metrics, logs, and alarms to monitor function performance, identify errors, and troubleshoot issues. Proper logging helps to track the function’s behavior and diagnose any problems that arise during execution.
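A minimal packaging-and-upload sketch in Python, assuming the function already exists and that a directory (for example a hypothetical `build/`) holds the handler module plus dependencies installed with `pip install -r requirements.txt --target build/`. `update_function_code` is the boto3 Lambda client call for uploading new code; the `client` parameter exists only so the logic can be tested without AWS.

```python
import os
import zipfile


def build_deployment_zip(source_dir, zip_path):
    """Zip everything under source_dir so files sit at the archive root.

    Lambda expects the handler module (e.g. lambda_function.py) at the ZIP root.
    """
    target = os.path.abspath(zip_path)
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(source_dir):
            for filename in files:
                full_path = os.path.join(root, filename)
                if os.path.abspath(full_path) == target:
                    continue  # never add the archive to itself
                zf.write(full_path, os.path.relpath(full_path, source_dir))
    return zip_path


def deploy(zip_path, function_name, client=None):
    """Upload the package to an existing Lambda function."""
    if client is None:
        import boto3
        client = boto3.client("lambda")
    with open(zip_path, "rb") as f:
        return client.update_function_code(FunctionName=function_name, ZipFile=f.read())
```

Compiled dependencies such as `psycopg2-binary` must be built for Lambda's Linux environment and architecture, not for the machine running the script.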

Testing the Function’s Database Connectivity

Testing database connectivity verifies the Lambda function’s ability to establish a connection to the relational database and execute queries. This step is crucial to confirm that the function can interact with the database successfully. The testing process involves:

- Unit Testing: Unit tests focus on individual components of the function, such as the database connection logic and query execution. These tests isolate the database interaction code to verify its correctness. Frameworks like `unittest` (Python) facilitate the creation of unit tests.

- Integration Testing: Integration tests verify the interaction between the Lambda function and the database. These tests involve deploying the function to a test environment and invoking it to execute database queries. They ensure that the function can connect to the database, execute queries, and retrieve or modify data as expected.

- Connectivity Tests: These tests verify the ability of the Lambda function to establish a network connection to the database. This includes checking security group configurations, VPC settings, and database access permissions. Tests can be performed by attempting to connect to the database from within the Lambda function’s execution environment.

- Data Validation: Data validation involves verifying that the data retrieved from the database is correct and that data modifications are performed as intended. This includes checking data types, values, and relationships.

- Error Handling Tests: These tests simulate various error scenarios, such as database connection failures, invalid queries, and permission issues. They ensure that the function handles errors gracefully and provides informative error messages.
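A sketch of a unit test with Python's built-in `unittest` and `unittest.mock`, where a `MagicMock` stands in for a psycopg2 connection so no database is needed. `count_users` is a hypothetical function under test; the mock configuration mirrors the way psycopg2 cursors are used as context managers.

```python
import unittest
from unittest import mock


def count_users(conn):
    """Hypothetical unit under test: return the number of rows in users."""
    with conn.cursor() as cur:
        cur.execute("SELECT COUNT(*) FROM users;")
        (count,) = cur.fetchone()
    return count


class CountUsersTest(unittest.TestCase):
    def _mock_cursor(self, conn):
        # conn.cursor() returns a context manager whose __enter__ yields the cursor
        return conn.cursor.return_value.__enter__.return_value

    def test_returns_count_from_cursor(self):
        conn = mock.MagicMock()
        cursor = self._mock_cursor(conn)
        cursor.fetchone.return_value = (42,)
        self.assertEqual(count_users(conn), 42)
        cursor.execute.assert_called_once_with("SELECT COUNT(*) FROM users;")

    def test_propagates_database_errors(self):
        conn = mock.MagicMock()
        self._mock_cursor(conn).execute.side_effect = RuntimeError("connection lost")
        with self.assertRaises(RuntimeError):
            count_users(conn)
```

Saved in a test module, these run with `python -m unittest`. Connectivity and data checks against a real database still belong in integration tests in a deployed test environment.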

Designing a Test Plan to Validate the Functionality of the Lambda Function with the Database

A comprehensive test plan ensures the Lambda function’s reliability and functionality. The plan outlines the testing strategy, test cases, expected results, and evaluation criteria. A robust test plan should include:

- Test Objectives: Define the specific goals of the testing process. These objectives should be aligned with the function’s requirements and the expected interactions with the database. Examples include verifying database connection, validating query execution, and confirming data integrity.

- Test Cases: Develop a set of test cases that cover various scenarios and functionalities of the Lambda function. Each test case should have a clear description, input data, expected results, and pass/fail criteria. Test cases should include positive tests (valid scenarios) and negative tests (error scenarios).

- Test Data: Prepare the necessary test data to be used in the test cases. This includes creating or populating tables in the database with appropriate data. Test data should be representative of the real-world data the function will process.

- Test Environment: Specify the test environment, including the AWS region, the database instance, and the Lambda function’s configuration. The test environment should closely resemble the production environment.

- Test Execution: Execute the test cases and record the results. This involves invoking the Lambda function with the test data and comparing the actual results with the expected results.

- Result Analysis: Analyze the test results to identify any failures or issues. This includes reviewing logs, debugging code, and determining the root cause of any problems.

- Reporting: Document the test results in a report. The report should include a summary of the testing process, a list of test cases, the results of each test case, and any issues or findings.

- Example Test Case:

- Test Case ID: TC001

- Test Case Name: Verify SELECT Query

- Description: Test the function’s ability to execute a SELECT query and retrieve data from the database.

- Input Data: None (the function executes a predefined SELECT query).

- Expected Result: The function successfully connects to the database, executes the SELECT query, and returns a list of data. The data should match the data stored in the database.

- Pass/Fail Criteria: The function returns the correct data and does not generate any errors.

Error Handling and Logging

Implementing robust error handling and comprehensive logging is crucial for maintaining the stability, debuggability, and performance of Lambda functions interacting with relational databases. Proper error handling prevents unexpected function terminations, while effective logging provides insights into function behavior, facilitating issue identification and resolution. Monitoring further enhances the ability to proactively identify and address potential problems.

Implementing Error Handling Within the Lambda Function

Error handling within a Lambda function involves anticipating potential issues and implementing strategies to manage them gracefully. This includes catching exceptions, handling database connection errors, and providing informative responses.

- Exception Handling: Use `try…except` blocks to catch potential exceptions that might occur during database interactions. This prevents the function from crashing and allows for custom error handling. For instance, if a SQL query fails, the `except` block can log the error and return a user-friendly message.

- Database Connection Error Handling: Handle potential connection errors, such as connection timeouts or refused connections. Implement retry mechanisms with exponential backoff to attempt reconnecting to the database. This can prevent transient network issues from causing function failures.

- Error Propagation: When an error occurs, it’s essential to propagate the error information appropriately. This can involve logging the error details, returning an error response to the caller, and potentially sending notifications (e.g., via email or a messaging service) to alert administrators.

- Input Validation: Validate user input to prevent SQL injection vulnerabilities and other data-related issues. Ensure that the data received by the Lambda function conforms to the expected format and constraints before interacting with the database. For example, validate the data type and length of input parameters.

- Error Types: Distinguish between different error types (e.g., database connection errors, SQL syntax errors, data validation errors) to facilitate more targeted error handling and troubleshooting.
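The retry strategy described above can be sketched as a small helper that wraps any connection function. The defaults (four attempts, 0.2 s base delay, 2 s cap) are assumptions to tune so the total wait stays well under the function's timeout; each delay is randomized ("full jitter") so many concurrent functions do not retry in lockstep. With psycopg2 you would pass `retriable=(psycopg2.OperationalError,)` so that only connection-level failures are retried, not SQL errors.

```python
import random
import time


def connect_with_retry(connect, retriable=(Exception,), max_attempts=4,
                       base_delay=0.2, max_delay=2.0, sleep=time.sleep):
    """Call connect(), retrying retriable failures with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return connect()
        except retriable:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller (and Lambda) see the error
            # Upper bound doubles each attempt: 0.2, 0.4, 0.8, ... capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
```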

Example of exception handling in Python:

```python
import os

import psycopg2


def lambda_handler(event, context):
    conn = None
    try:
        conn = psycopg2.connect(
            host=os.environ["DB_HOST"],
            database=os.environ["DB_NAME"],
            user=os.environ["DB_USER"],
            password=os.environ["DB_PASSWORD"],
        )
        with conn.cursor() as cur:
            cur.execute("SELECT * FROM your_table;")
            rows = cur.fetchall()
        return {"statusCode": 200, "body": str(rows)}
    except psycopg2.Error as e:
        # Log full details for operators; return a generic message to the caller.
        print(f"Database error: {e}")
        return {"statusCode": 500, "body": "A database error occurred."}
    except Exception as e:
        print(f"Unexpected error: {e}")
        return {"statusCode": 500, "body": "An unexpected error occurred."}
    finally:
        if conn is not None:
            conn.close()  # Runs on success and on every error path
```

Providing Examples of Logging Database Interactions for Debugging

Effective logging is crucial for debugging Lambda functions. It provides a detailed record of the function’s execution, including database interactions, input parameters, and any errors that occur.

- Log Levels: Use different log levels (e.g., INFO, WARNING, ERROR, DEBUG) to categorize log messages based on their severity. This allows for filtering and focusing on relevant information during troubleshooting.

- Database Connection Logging: Log the establishment and closure of database connections. Include connection parameters (masked for sensitive information) to help diagnose connection-related issues.

- SQL Query Logging: Log the SQL queries being executed, including any parameters passed to the queries. This helps to identify syntax errors, performance bottlenecks, and data-related issues. However, be mindful of logging sensitive data.

- Result Logging: Log the results of database queries, such as the number of rows affected or the data retrieved. This can help verify that the queries are producing the expected results.

- Error Logging: Log all errors that occur, including the error message, stack trace, and any relevant context. This is essential for diagnosing and resolving issues.

- Input/Output Logging: Log the input parameters received by the Lambda function and the output returned by the function. This helps to understand the function’s behavior and identify any data transformation issues.

Example of logging in Python:

```python
import logging
import os

import psycopg2

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def lambda_handler(event, context):
    conn = None
    try:
        logger.info("Received event: %s", event)
        conn = psycopg2.connect(
            host=os.environ["DB_HOST"],
            database=os.environ["DB_NAME"],
            user=os.environ["DB_USER"],
            password=os.environ["DB_PASSWORD"],
        )
        logger.info("Database connection established")
        sql_query = "SELECT * FROM your_table;"
        logger.info("Executing SQL query: %s", sql_query)
        with conn.cursor() as cur:
            cur.execute(sql_query)
            rows = cur.fetchall()
        # Log the row count rather than the rows, which may contain sensitive data
        logger.info("Query returned %d rows", len(rows))
        return {"statusCode": 200, "body": str(rows)}
    except psycopg2.Error:
        logger.exception("Database error")  # Includes the stack trace
        return {"statusCode": 500, "body": "Database error"}
    except Exception:
        logger.exception("Unexpected error")
        return {"statusCode": 500, "body": "Unexpected error"}
    finally:
        if conn is not None:
            conn.close()
            logger.info("Database connection closed")
```
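Two small helpers for the logging practices listed above: masking sensitive connection parameters before they are logged, and recording each query's duration and row count. This is a sketch; the names in `SENSITIVE_KEYS` are an assumption to extend for your driver, and `timed_execute` works with any DB-API cursor (psycopg2's included), whose `rowcount` is -1 when the driver cannot determine it.

```python
import logging
import time

logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)

# Parameter names whose values must never appear in logs (extend as needed).
SENSITIVE_KEYS = {"password", "passwd", "secret", "token"}


def mask_connection_params(params):
    """Return a copy of connection parameters that is safe to log."""
    return {
        key: "****" if key.lower() in SENSITIVE_KEYS else value
        for key, value in params.items()
    }


def timed_execute(cur, sql, params=None):
    """Execute a query on a DB-API cursor, logging its duration and row count."""
    start = time.perf_counter()
    if params is None:
        cur.execute(sql)
    else:
        cur.execute(sql, params)
    elapsed_ms = (time.perf_counter() - start) * 1000
    # Log the SQL text only; parameter values may contain user data.
    logger.info("Query took %.1f ms, rowcount=%s: %s", elapsed_ms, cur.rowcount, sql)
    return cur
```

For example, `logger.info("Connecting with %s", mask_connection_params(params))` records the host and user while hiding the password.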

Sharing Methods for Monitoring the Lambda Function’s Performance and Database Interactions

Monitoring is critical for proactively identifying performance bottlenecks, security issues, and potential problems with Lambda functions and their database interactions. Various methods and AWS services can be employed for effective monitoring.

- CloudWatch Metrics: Utilize Amazon CloudWatch to monitor various metrics related to the Lambda function and database interactions. This includes:

- Lambda Metrics: Monitor invocation count, errors, throttles, duration, and memory usage.

- Database Metrics: Monitor database connection usage, query execution times, and database performance metrics provided by the database service (e.g., RDS or Aurora).

- CloudWatch Logs: Access and analyze the logs generated by the Lambda function. This allows for detailed investigation of function behavior, error messages, and database interactions. Create CloudWatch log groups and configure log retention policies to manage log storage.

- CloudWatch Alarms: Set up CloudWatch alarms to trigger notifications or automated actions based on predefined thresholds for metrics. For example, create an alarm to alert administrators if the function’s error rate exceeds a certain percentage.

- X-Ray Tracing: Integrate AWS X-Ray to trace requests as they flow through the Lambda function and interact with the database. This provides a visual representation of the function’s execution path and helps to identify performance bottlenecks. X-Ray also helps in understanding dependencies between different components of your application.

- Performance Testing: Conduct regular performance testing to evaluate the function’s performance under different load conditions. This can involve simulating high traffic and measuring the function’s response time, error rate, and resource utilization. Load-generation tools such as Apache JMeter can be used to simulate this traffic.

- Database Monitoring Tools: Use database-specific monitoring tools (e.g., Amazon RDS Performance Insights, or third-party tools) to gain insights into database performance, query execution times, and resource utilization. These tools can help identify slow queries and optimize database performance.

- Security Auditing: Regularly audit the Lambda function’s IAM roles and permissions to ensure that they adhere to the principle of least privilege. Monitor database access logs to detect any unauthorized access attempts or suspicious activity.
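As an example of the alarm setup described above, the sketch below uses boto3's `put_metric_alarm` (which creates or updates an alarm) on the `Errors` metric that Lambda publishes in the `AWS/Lambda` namespace. It is a simplification: it alarms on an error count per 5-minute window rather than an error percentage, which would require metric math. The threshold and the SNS topic ARN are assumptions.

```python
def create_error_alarm(function_name, alarm_topic_arn, threshold=5, client=None):
    """Create (or update) an alarm on the Lambda Errors metric for one function."""
    if client is None:
        import boto3  # bundled with the Lambda Python runtime
        client = boto3.client("cloudwatch")
    return client.put_metric_alarm(
        AlarmName=f"{function_name}-errors",
        Namespace="AWS/Lambda",
        MetricName="Errors",
        Dimensions=[{"Name": "FunctionName", "Value": function_name}],
        Statistic="Sum",
        Period=300,  # evaluate 5-minute windows
        EvaluationPeriods=1,
        Threshold=float(threshold),
        ComparisonOperator="GreaterThanOrEqualToThreshold",
        TreatMissingData="notBreaching",  # periods with no invocations count as healthy
        AlarmActions=[alarm_topic_arn],  # e.g. an SNS topic that notifies administrators
    )
```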

Optimizing Performance and Scalability

Optimizing the performance and scalability of a Lambda function interacting with a relational database is crucial for handling increasing workloads and maintaining a responsive application. Several strategies can be employed to minimize latency, reduce costs, and ensure the system can gracefully handle peak loads. Effective optimization involves careful consideration of database connection management, function code efficiency, and the underlying infrastructure.

Strategies for Optimizing Lambda Function Performance

Optimizing a Lambda function’s performance involves a multi-faceted approach, encompassing connection management, code optimization, and efficient resource utilization. This section details several key strategies to enhance performance.

- Connection Pooling: Implementing connection pooling significantly reduces the overhead associated with establishing and closing database connections. By reusing existing connections, the function avoids the time-consuming process of creating new connections for each invocation. This is especially important for Lambda functions, which often experience frequent invocations.

- Caching: Caching frequently accessed data, such as configuration settings or lookup tables, can reduce the number of database queries. Caching can be implemented using in-memory storage within the Lambda function’s execution environment or by leveraging external caching services like Amazon ElastiCache. This reduces the load on the database and speeds up data retrieval.

- Code Optimization: Writing efficient code is essential. This includes minimizing the amount of data transferred between the Lambda function and the database, optimizing SQL queries for performance, and avoiding unnecessary computations. Profiling tools can help identify performance bottlenecks in the code.

- Minimize Dependencies: Reduce the size of the Lambda function’s deployment package by including only the dependencies the function needs. Larger packages can increase cold start times. Lambda layers can help share common dependencies across multiple functions.

- Efficient Data Serialization/Deserialization: Keep the conversion of query results into response payloads lightweight, and return only the fields the caller needs. When payload size or parsing cost matters, a compact binary format such as Protocol Buffers can transfer less data than JSON.

- Monitoring and Logging: Implement robust monitoring and logging to identify performance bottlenecks and errors. Use tools like Amazon CloudWatch to track function execution times, database query times, and error rates. This data is critical for identifying areas for improvement.
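A minimal sketch of in-memory caching with a time-to-live, assuming a lookup table that changes rarely. `TTLCache` and `get_or_load` are illustrative names, and the injectable `clock` exists only for testing. Created at module scope, the cache is shared by warm invocations of one execution environment; each environment keeps its own copy, so cached data can be up to `ttl_seconds` stale.

```python
import time


class TTLCache:
    """Tiny in-memory cache for rarely changing data such as lookup tables."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        """Return the cached value for key, calling loader() if absent or expired."""
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = loader()  # e.g. runs a SELECT against the database
        self._entries[key] = (now + self.ttl, value)
        return value
```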

Methods for Scaling the Lambda Function

Scaling a Lambda function to handle increased database load involves understanding the function’s concurrency limits and the database’s capacity. Several methods can be employed to manage scaling effectively.

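- Concurrency Limits: AWS Lambda scales a function by running more execution environments concurrently. By default, an account can run up to 1,000 concurrent executions per Region, a quota that can be raised on request. Because each concurrent environment may open its own database connections, monitor concurrency carefully and consider setting reserved concurrency on the function to cap it, so that scaling does not overload the database.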

- Provisioned Concurrency: Provisioned concurrency allows you to pre-initialize a specific number of execution environments, ensuring that your function is ready to respond to incoming requests with minimal latency. This is particularly useful for predictable workloads or when low latency is critical.

- Database Connection Limits: The database server’s connection limits must be considered. Ensure the database can handle the number of concurrent connections the function can open. Capping the function’s concurrency, choosing a larger database instance, or placing Amazon RDS Proxy in front of the database can mitigate connection limit issues.

- Database Optimization: Optimize the database itself by indexing frequently queried columns, optimizing SQL queries, and ensuring the database instance has sufficient resources (CPU, memory, storage).

- Asynchronous Processing: Employ asynchronous processing using services like Amazon SQS or Amazon SNS to decouple the Lambda function from the database. This allows the function to quickly acknowledge the request and process the data in the background, improving responsiveness and reducing database load.

- Batch Processing: Aggregate multiple database operations into a single batch operation to reduce the number of round trips to the database. This can significantly improve performance, especially when dealing with large datasets.
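A sketch of batch insertion in which rows are sent as one multi-row `INSERT` per chunk instead of one statement per row. It runs against Python's built-in `sqlite3` as a stand-in; with psycopg2, `psycopg2.extras.execute_values()` builds the same kind of multi-row statement (psycopg2's `cursor.executemany()` still issues one statement per row). The `batch_size` of 100 is an assumption that keeps each statement's parameter count below SQLite's limit.

```python
import sqlite3


def insert_users_batched(conn, rows, batch_size=100):
    """Insert (name, email) pairs using one multi-row INSERT per chunk.

    N rows cost about N / batch_size statements instead of N.
    """
    inserted = 0
    for start in range(0, len(rows), batch_size):
        chunk = rows[start:start + batch_size]
        # Only placeholders are generated here; values are always passed as parameters.
        placeholders = ", ".join(["(?, ?)"] * len(chunk))
        params = [value for row in chunk for value in row]
        conn.execute(f"INSERT INTO users (name, email) VALUES {placeholders}", params)
        inserted += len(chunk)
    conn.commit()  # one transaction for the whole batch
    return inserted
```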

Illustrating the Use of Database Connection Pooling

Database connection pooling is a crucial technique for optimizing the performance of Lambda functions that interact with relational databases. By reusing existing database connections, connection pooling minimizes the overhead associated with establishing new connections for each function invocation.

Example:

Consider a Lambda function that retrieves data from an Amazon RDS database. Without connection pooling, each function invocation would need to establish a new database connection. This process involves DNS lookup, TCP handshake, authentication, and connection setup, which can take a significant amount of time, especially if the database is located in a different Availability Zone or Region.

With connection pooling, the function creates the pool during initialization, outside the handler. On each invocation, it borrows a connection from the pool and returns it when its database operations are complete. Because the pool persists in the execution environment, warm invocations reuse already-open connections and skip the setup cost; only a cold start pays it.

Implementation:

Connection pooling can be implemented using various database drivers and libraries. For example, in Python, the `psycopg2` library provides pool classes in its `psycopg2.pool` module (such as `SimpleConnectionPool`). Similarly, Java’s `HikariCP` library provides a robust connection pooling solution. The pool is created and its connections are established when the function initializes, in code outside the handler that Lambda runs once per execution environment. When the function needs to interact with the database, it obtains a connection from the pool, performs its operations, and then returns the connection to the pool.

The pool manages the connections, ensuring they are available for reuse.
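The borrow-and-return cycle can be sketched as follows. This assumes the standard Lambda lifecycle, in which module-level code runs once per execution environment and later (warm) invocations reuse it. The `SimplePool` class is hypothetical and illustrative only. `sqlite3` stands in for the real driver so the sketch is self-contained. With `psycopg2`, `psycopg2.pool.SimpleConnectionPool(minconn, maxconn, **connect_kwargs)` offers the same cycle through its `getconn()` and `putconn()` methods.

```python
import queue
import sqlite3

class SimplePool:
    """Hypothetical fixed-size pool: connections are created up front, then lent out and returned."""

    def __init__(self, size, connect):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(connect())

    def getconn(self, timeout=5):
        # Blocks until a connection is free; raises queue.Empty after `timeout` seconds
        return self._idle.get(timeout=timeout)

    def putconn(self, conn):
        self._idle.put(conn)

# Module scope: runs once per execution environment (the init phase),
# so the pool and its connections survive across warm invocations.
POOL = SimplePool(size=1, connect=lambda: sqlite3.connect(":memory:"))

def lambda_handler(event, context):
    conn = POOL.getconn()
    try:
        value = conn.execute("SELECT ?", (event["value"],)).fetchone()[0]
        return {"statusCode": 200, "body": value}
    finally:
        POOL.putconn(conn)  # Return the connection instead of closing it
```

A pool size of 1 matches the usual case in which a single environment serves one invocation at a time.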

Benefits:

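The primary benefit of connection pooling is improved performance. By reusing connections, the function can reduce the latency of database operations. Connection pooling also reduces the load on the database server, because the server no longer has to handle the constant creation and destruction of connections. Scalability depends on where the pool lives, however. Each Lambda execution environment serves one invocation at a time and holds its own connections, so many concurrent environments still open many connections. A shared pooler such as Amazon RDS Proxy can multiplex them onto a smaller number of database connections, letting the function handle more concurrent requests without overwhelming the database.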

Advanced Topics

Lambda functions interacting with relational databases require careful consideration of advanced concepts to ensure data integrity, security, and operational efficiency. This section delves into the intricacies of database transactions, securing database connections, and mitigating SQL injection vulnerabilities, providing a comprehensive understanding of these critical aspects.

Database Transactions in Lambda Functions

Database transactions are essential for maintaining data consistency, especially in scenarios involving multiple database operations. Implementing transactions within a Lambda function ensures that a series of operations either all succeed or all fail. This prevents partial updates and preserves the ACID properties (Atomicity, Consistency, Isolation, Durability) of the database. The implementation of database transactions typically involves the following steps:

- Initiating a Transaction: The transaction begins with a specific command, such as `BEGIN` or `START TRANSACTION`, depending on the database system (e.g., PostgreSQL, MySQL). This signals the start of a logical unit of work.

- Performing Database Operations: Within the transaction block, various database operations (e.g., `INSERT`, `UPDATE`, `DELETE`) are executed. These operations are treated as a single unit and are not permanently applied to the database until the transaction is committed.

- Committing or Rolling Back: After all operations are completed, the transaction is either committed (using `COMMIT`), making the changes permanent, or rolled back (using `ROLLBACK`), discarding all changes made within the transaction. The choice depends on whether all operations were successful.

For example, consider a scenario where a Lambda function needs to transfer funds from one account to another. This involves debiting the source account and crediting the destination account. Without a transaction, a failure in either operation could lead to an inconsistent state.

```python
import psycopg2

def lambda_handler(event, context):
    conn = None
    try:
        # Placeholder credentials; load real ones from AWS Secrets Manager
        conn = psycopg2.connect(
            host="your_db_host",
            database="your_db_name",
            user="your_db_user",
            password="your_db_password",
        )
        # psycopg2 opens a transaction implicitly before the first statement,
        # so no explicit BEGIN is needed.
        with conn.cursor() as cur:
            # Debit the source account only if it exists and has enough funds
            cur.execute(
                "UPDATE accounts SET balance = balance - %s "
                "WHERE account_id = %s AND balance >= %s;",
                (event["amount"], event["source_account_id"], event["amount"]),
            )
            if cur.rowcount == 0:
                raise Exception("Source account not found or insufficient funds.")

            # Credit the destination account
            cur.execute(
                "UPDATE accounts SET balance = balance + %s WHERE account_id = %s;",
                (event["amount"], event["destination_account_id"]),
            )
            if cur.rowcount == 0:
                raise Exception("Destination account not found.")

        conn.commit()  # Make both updates permanent together
        return {"statusCode": 200, "body": "Transaction successful"}
    except Exception as e:
        if conn:
            conn.rollback()  # Roll back in case of any exception
        return {"statusCode": 500, "body": f"Error: {e}"}
    finally:
        if conn:
            conn.close()
```

In this example, `psycopg2` starts a transaction implicitly when the first `UPDATE` runs. The two `UPDATE` statements perform the debit and credit operations. The debit's `balance >= %s` condition makes it match no rows when funds are insufficient, so the error message is accurate. If any error occurs, `conn.rollback()` cancels all changes, ensuring that the database remains in a consistent state. If all operations succeed, `conn.commit()` makes the changes permanent. The `finally` block ensures that the database connection is closed, regardless of whether an error occurred.
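The all-or-nothing behavior can be checked locally with the minimal sketch below. It uses Python's built-in `sqlite3` module as a stand-in for PostgreSQL, which means `?` placeholders instead of `%s`. The `transfer` helper and the account data are hypothetical. Using the connection as a context manager commits when the block succeeds and rolls back when an exception escapes it.

```python
import sqlite3

def transfer(conn, source_id, destination_id, amount):
    """Debit and credit atomically; any failure rolls back both updates."""
    with conn:  # commit on success, rollback if an exception escapes
        cur = conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE account_id = ? AND balance >= ?",
            (amount, source_id, amount),
        )
        if cur.rowcount == 0:
            raise ValueError("Source account not found or insufficient funds.")
        cur = conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE account_id = ?",
            (amount, destination_id),
        )
        if cur.rowcount == 0:
            raise ValueError("Destination account not found.")

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (account_id INTEGER PRIMARY KEY, balance INTEGER)")
with conn:
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])

transfer(conn, 1, 2, 30)        # succeeds: balances become 70 and 80
try:
    transfer(conn, 1, 99, 10)   # destination missing: the debit is rolled back
except ValueError as exc:
    print(exc)
print(conn.execute("SELECT balance FROM accounts ORDER BY account_id").fetchall())
```

Balances are stored as integers (for example, cents) to avoid floating-point rounding in the comparison.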

Securing Database Connections

Securing database connections is crucial to protect sensitive data from unauthorized access and potential breaches. This involves implementing secure protocols and practices to encrypt data in transit and verify the identity of both the client and the server. Several key methods are used to secure database connections:

- SSL/TLS Encryption: SSL/TLS (Secure Sockets Layer/Transport Layer Security) encrypts the communication between the Lambda function and the database server. Encryption prevents eavesdropping. It also prevents man-in-the-middle attacks, provided the client verifies the server's certificate.

- Database User Authentication: Use strong passwords or, preferably, more secure authentication methods such as IAM database authentication (for AWS RDS) to verify the identity of the Lambda function.

- Security Groups and Network ACLs: Configure security groups (and, optionally, network ACLs) to restrict access to the database server, allowing only the Lambda function’s security group or subnet to connect.

- Regular Security Audits: Conduct regular security audits to identify and address potential vulnerabilities in the database connection and configuration.
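IAM database authentication replaces the stored password with a short-lived token, valid for 15 minutes, that the function requests at connection time. The sketch below assumes a few things: a boto3 RDS client, whose `generate_db_auth_token(DBHostname, Port, DBUsername, Region)` method produces the token; a database user already enabled for IAM authentication; and a placeholder CA path. The `iam_connect_kwargs` helper is hypothetical. The client is passed in so the sketch can be exercised without AWS credentials.

```python
def iam_connect_kwargs(rds_client, host, port, user, dbname, region):
    """Hypothetical helper: build psycopg2.connect() arguments that use an IAM auth token."""
    token = rds_client.generate_db_auth_token(
        DBHostname=host, Port=port, DBUsername=user, Region=region
    )
    return {
        "host": host,
        "port": port,
        "user": user,
        "dbname": dbname,
        "password": token,                 # short-lived token instead of a stored password
        "sslmode": "verify-full",          # IAM-authenticated connections must use SSL/TLS
        "sslrootcert": "/path/to/ca.crt",  # placeholder path to the RDS CA bundle
    }
```

In a function, this would be used as `psycopg2.connect(**iam_connect_kwargs(boto3.client("rds"), ...))`. The token should be regenerated whenever a new connection is opened after it expires.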

Implementing SSL/TLS typically involves the following steps:

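- Obtain an SSL/TLS Certificate: For a self-managed database, acquire a valid SSL/TLS certificate from a trusted Certificate Authority (CA). This certificate is used to verify the identity of the database server. Amazon RDS and Aurora provision server certificates themselves, so clients only need the AWS-provided CA bundle to verify them.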

- Configure the Database Server: Configure the database server to use SSL/TLS. This involves specifying the certificate and private key files.

- Configure the Lambda Function’s Database Connection: Modify the Lambda function’s database connection string to use SSL/TLS. This typically involves specifying the SSL/TLS certificate and enabling encryption.

For example, when using `psycopg2` to connect to a PostgreSQL database with SSL/TLS, you would add the SSL/TLS parameters to the connection call:

```python
import psycopg2

def lambda_handler(event, context):
    conn = None
    try:
        conn = psycopg2.connect(
            host="your_db_host",
            database="your_db_name",
            user="your_db_user",
            password="your_db_password",
            sslmode="require",               # or "verify-full" for stricter verification
            sslcert="/path/to/client.crt",   # Optional, for client certificate authentication
            sslkey="/path/to/client.key",    # Optional, for client certificate authentication
            sslrootcert="/path/to/ca.crt",   # Optional, for verifying the server certificate
        )
        with conn.cursor() as cur:
            ...  # rest of your database operations
    except Exception as e:
        ...  # error handling
    finally:
        if conn:
            conn.close()
```

In this example, `sslmode="require"` ensures that the connection is encrypted. On its own, however, it does not guarantee that the server's hostname is checked. The optional `sslcert` and `sslkey` parameters are used for client certificate authentication. The optional `sslrootcert` parameter points to the CA certificate used to verify the server certificate. Using `sslmode="verify-full"` enforces stricter verification: the server certificate must chain to that CA, and its hostname must match `host`.

Protecting Against SQL Injection Vulnerabilities

SQL injection is a common security vulnerability that occurs when malicious SQL code is injected into database queries, potentially allowing attackers to access, modify, or delete data. Protecting against SQL injection is a critical aspect of securing database interactions. Several strategies can be used to mitigate SQL injection vulnerabilities:

- Parameterized Queries/Prepared Statements: Use parameterized queries or prepared statements. These allow the database driver to treat user-supplied data as literal values, preventing the execution of malicious SQL code.

- Input Validation and Sanitization: Validate and sanitize all user-supplied input before using it in database queries. This helps to filter out potentially harmful characters or patterns.

- Least Privilege Principle: Grant the Lambda function only the necessary permissions to access the database. This limits the potential damage that can be caused by a successful SQL injection attack.

- Web Application Firewall (WAF): Use a WAF, such as AWS WAF in front of Amazon API Gateway, to detect and block common SQL injection patterns in incoming HTTP requests before they reach the function.
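Input validation complements parameterized queries rather than replacing them. A minimal allowlist sketch follows. The `USERNAME_PATTERN` rule (3 to 32 letters, digits, underscores, dots, or hyphens) is a hypothetical policy, not a standard, and should be adapted to the application's real username format.

```python
import re

# Hypothetical policy: 3-32 characters drawn from letters, digits, "_", ".", "-"
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_.-]{3,32}")

def validate_username(value):
    """Return the username if it matches the allowlist, otherwise raise ValueError."""
    if not isinstance(value, str) or not USERNAME_PATTERN.fullmatch(value):
        raise ValueError("Invalid username")
    return value
```

Allowlisting the characters that are permitted is generally more robust than trying to blocklist every dangerous pattern.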

Parameterized queries are the most effective way to prevent SQL injection. Instead of directly embedding user input into the SQL query string, placeholders are used. The database driver then supplies the user input for these placeholders, ensuring that it is treated as a literal value. For example, consider a scenario where a Lambda function needs to retrieve user data based on a username provided in an event.

Without parameterized queries, the code might be vulnerable to SQL injection:

```python
import psycopg2

def lambda_handler(event, context):
    username = event["username"]
    conn = None
    try:
        conn = psycopg2.connect(
            host="your_db_host",
            database="your_db_name",
            user="your_db_user",
            password="your_db_password",
        )
        with conn.cursor() as cur:
            query = f"SELECT * FROM users WHERE username = '{username}';"  # Vulnerable to SQL injection
            cur.execute(query)
            results = cur.fetchall()
            # ... (process results) ...
    except Exception as e:
        ...  # error handling
    finally:
        if conn:
            conn.close()
```

If a malicious user provides `'; DROP TABLE users; --` as the username, the resulting SQL query would be:

```sql
SELECT * FROM users WHERE username = ''; DROP TABLE users; --';
```

This would execute the `DROP TABLE users;` command, potentially deleting the `users` table. Using parameterized queries mitigates this vulnerability:

```python
import psycopg2

def lambda_handler(event, context):
    username = event["username"]
    conn = None
    try:
        conn = psycopg2.connect(
            host="your_db_host",
            database="your_db_name",
            user="your_db_user",
            password="your_db_password",
        )
        with conn.cursor() as cur:
            query = "SELECT * FROM users WHERE username = %s;"  # Parameterized query
            cur.execute(query, (username,))  # Pass username as a parameter
            results = cur.fetchall()
            # ... (process results) ...
    except Exception as e:
        ...  # error handling
    finally:
        if conn:
            conn.close()
```

In this revised example, the `username` variable is passed as a parameter to the `execute()` method. The database driver treats the input as a literal value, preventing any malicious SQL code from being executed. Given the same malicious input, `psycopg2` escapes the quotes, so the query simply searches for a user whose name is that literal string.
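To see the difference concretely without a PostgreSQL server, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in. Note that `sqlite3` uses `?` placeholders where `psycopg2` uses `%s`. The `users` table, its contents, and the `find_user` helper are made up for the demonstration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT PRIMARY KEY, email TEXT)")
with conn:
    conn.execute("INSERT INTO users VALUES (?, ?)", ("alice", "alice@example.com"))

def find_user(conn, username):
    # The value is bound to the placeholder; it is never spliced into the SQL text
    return conn.execute(
        "SELECT username, email FROM users WHERE username = ?", (username,)
    ).fetchall()

malicious = "'; DROP TABLE users; --"
print(find_user(conn, malicious))   # no match: the input is compared as a plain string
print(find_user(conn, "alice"))
```

The `users` table survives the malicious lookup, because the attack string never becomes part of the SQL statement.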

Closure

In conclusion, connecting a Lambda function to a relational database offers significant advantages in terms of scalability, cost efficiency, and development agility. This comprehensive guide has explored the essential steps, from database selection and setup to code implementation and performance optimization. By adhering to the principles outlined, developers can effectively build and deploy robust, scalable applications that leverage the power of serverless computing and relational databases.

The strategies presented here provide a solid foundation for implementing this powerful architectural pattern, paving the way for future innovations.

FAQ Insights

What are the primary benefits of using a Lambda function with a relational database?

The key advantages include improved scalability, cost optimization (pay-per-use model), faster deployment cycles, and reduced operational overhead, as the serverless nature of Lambda functions eliminates the need for server management.

How do I handle database connection errors within my Lambda function?

Implement robust error handling using try-except blocks to catch exceptions related to database connections (e.g., connection timeouts, authentication failures). Log the errors for debugging purposes and implement retry mechanisms if appropriate. Consider using a connection pool to mitigate connection issues.
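A minimal retry sketch follows. It assumes the driver's connect function is passed in as `connect`, for example `functools.partial(psycopg2.connect, host=..., ...)`. It also assumes `retry_on` lists the exception types worth retrying; for `psycopg2`, connection failures surface as `psycopg2.OperationalError`. The helper name and its defaults are hypothetical. The total wait should stay well under the function's configured timeout.

```python
import logging
import random
import time

logger = logging.getLogger(__name__)

def connect_with_retry(connect, retries=3, base_delay=0.2, retry_on=(Exception,)):
    """Call connect(); on a retryable error, back off exponentially and try again."""
    for attempt in range(retries + 1):
        try:
            return connect()
        except retry_on as exc:
            if attempt == retries:
                raise  # Out of attempts: surface the error to the caller
            # Exponential backoff (base_delay, 2x, 4x, ...) with random jitter
            delay = base_delay * (2 ** attempt) * random.uniform(0.5, 1.0)
            logger.warning("Connection attempt %d failed: %s; retrying in %.2fs",
                           attempt + 1, exc, delay)
            time.sleep(delay)
```

The jitter spreads out retries from many concurrent invocations, so they do not all hit the database at the same moment.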

What is connection pooling, and why is it important for Lambda functions?

Connection pooling is a technique that reuses database connections to improve performance. It reduces the overhead of establishing new connections for each Lambda invocation, leading to faster response times and reduced database load. This is particularly important for Lambda functions, which often have short execution times and frequent invocations.

How can I secure database credentials within my Lambda function?

Never hardcode credentials directly into your code. Use AWS Secrets Manager to store database credentials securely. Retrieve the credentials from Secrets Manager within your Lambda function using the AWS SDK. This ensures that your credentials are encrypted and protected.
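A minimal sketch follows. It assumes the secret is stored as a JSON string with keys such as `username`, `password`, `host`, `port`, and `dbname`, the layout Secrets Manager typically uses for RDS database secrets. The `get_db_credentials` helper and the secret name are hypothetical. `get_secret_value(SecretId=...)` is the boto3 Secrets Manager call. The result is cached at module scope so warm invocations skip the API call. The optional `client` argument lets the function be exercised without AWS access.

```python
import json

_cached_credentials = None  # Module scope: reused across warm invocations

def get_db_credentials(secret_id, client=None):
    """Fetch and cache database credentials stored as a JSON secret in AWS Secrets Manager."""
    global _cached_credentials
    if _cached_credentials is None:
        if client is None:
            import boto3  # Imported lazily; boto3 is available in Lambda Python runtimes
            client = boto3.client("secretsmanager")
        response = client.get_secret_value(SecretId=secret_id)
        _cached_credentials = json.loads(response["SecretString"])
    return _cached_credentials
```

Caching trades freshness for latency. If the secret is rotated, a warm environment keeps the old value, so a common approach is to clear the cache and fetch the secret again after an authentication failure.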

What are the key considerations when choosing between RDS and Aurora for a Lambda function?

Consider the performance and cost requirements. Aurora is a MySQL- and PostgreSQL-compatible engine offered through Amazon RDS. It generally provides higher performance and availability than the standard RDS engines, but it can be more expensive. If high performance and availability are critical, Aurora is a better choice. If cost is a primary concern and the performance needs are moderate, a standard RDS engine may suffice. Evaluate the specific needs of your application before making a decision.