Embarking on the journey of selecting the right tools for your DevOps toolchain can feel like navigating a complex maze. With the rapid evolution of technologies and the diverse landscape of available solutions, the choices can be overwhelming. This guide offers a structured approach, designed to help you make informed decisions and build a robust, efficient, and secure DevOps environment tailored to your team’s unique needs.

We will delve into the core principles of DevOps, emphasizing how they shape tool selection. We will explore crucial aspects such as assessing team needs, evaluating tool categories, understanding budget considerations, addressing integration challenges, and prioritizing security, scalability, and user experience. Furthermore, this guide offers practical advice on testing, documentation, and continuous optimization to ensure long-term success with your chosen tools.

Understanding DevOps Principles and Their Impact on Tool Selection

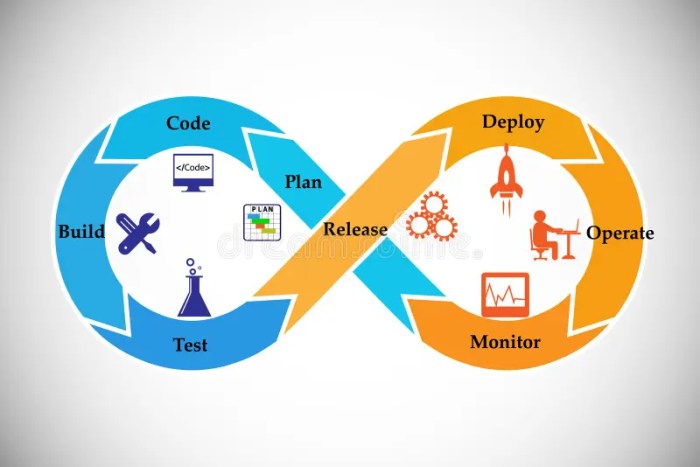

DevOps represents a significant shift in how software is developed and delivered. It emphasizes collaboration, automation, and continuous improvement to accelerate the software development lifecycle. Understanding these core principles is crucial for selecting the right tools to support a successful DevOps implementation. The chosen tools should not only facilitate the technical aspects of DevOps, such as CI/CD pipelines, but also foster the cultural changes necessary for effective collaboration and rapid iteration.

Core DevOps Principles

The foundation of DevOps rests on several key principles that guide its practices. These principles directly influence tool selection, ensuring the chosen tools align with the overall goals of faster, more reliable software delivery.

- Collaboration: DevOps emphasizes breaking down silos between development and operations teams, fostering a culture of shared responsibility and communication. Tools that promote collaboration, such as shared dashboards, collaborative coding platforms, and integrated communication channels, are essential.

- Automation: Automating repetitive tasks, from code building and testing to infrastructure provisioning and deployment, is a cornerstone of DevOps. Tools that support automation, including CI/CD pipelines, configuration management systems, and infrastructure-as-code tools, are critical for efficiency and speed.

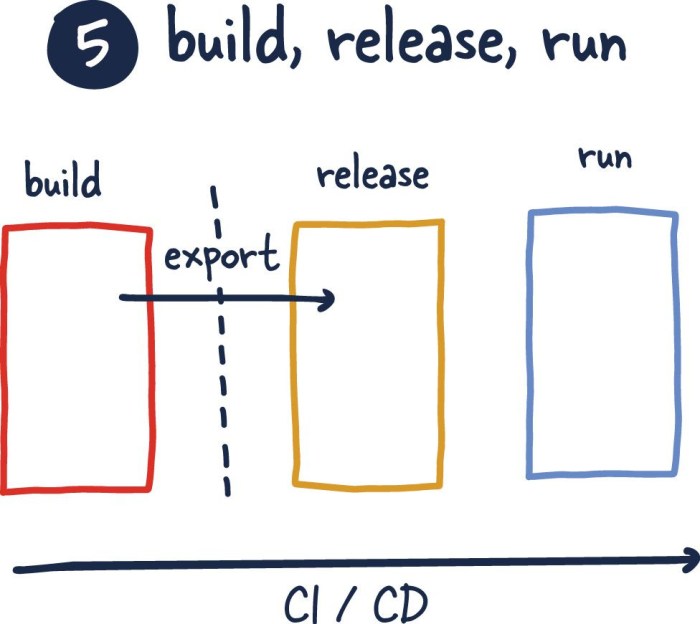

- Continuous Integration (CI): CI involves frequently merging code changes into a central repository, followed by automated builds and tests. This practice helps identify and resolve integration issues early in the development cycle. CI tools should provide robust build and testing capabilities, with integrations for version control systems.

- Continuous Delivery (CD): CD extends CI by automating the release of code changes to various environments, from development to production. CD tools facilitate automated deployments, rollback capabilities, and environment management.

- Continuous Monitoring: Monitoring applications and infrastructure in real-time is crucial for identifying and addressing issues quickly. Monitoring tools provide insights into system performance, user behavior, and potential problems.

- Infrastructure as Code (IaC): IaC treats infrastructure as code, allowing it to be version-controlled, automated, and managed in the same way as application code. IaC tools enable the consistent and repeatable provisioning of infrastructure resources.

Impact of DevOps Practices on Tool Choices

Specific DevOps practices directly influence the selection of tools. The choice of tools should align with the specific needs of each practice, ensuring effective implementation and optimal results.

- Continuous Integration and Continuous Delivery (CI/CD): CI/CD pipelines require tools that automate the build, test, and deployment processes.

  - Version Control Systems (e.g., Git, typically hosted on platforms such as GitLab or Bitbucket) are essential for managing code changes and enabling collaboration.

  - CI/CD Platforms (e.g., Jenkins, GitLab CI, CircleCI, Azure DevOps) automate the build, test, and deployment stages.

  - Build Tools (e.g., Maven, Gradle, npm) compile and package code.

  - Testing Frameworks (e.g., JUnit, pytest, Selenium) automate testing.

  - Artifact Repositories (e.g., Nexus, Artifactory) store and manage built artifacts.

- Infrastructure as Code (IaC): IaC enables the automation of infrastructure provisioning and management.

  - Configuration Management Tools (e.g., Ansible, Chef, Puppet) automate the configuration of servers and applications.

  - Infrastructure Provisioning Tools (e.g., Terraform, AWS CloudFormation, Azure Resource Manager) define and provision infrastructure resources.

- Monitoring and Logging: Continuous monitoring and logging are crucial for identifying and resolving issues quickly.

  - Monitoring Tools (e.g., Prometheus, Grafana, Datadog, New Relic) provide real-time insights into system performance and application behavior.

  - Logging Tools (e.g., the ELK Stack of Elasticsearch, Logstash, and Kibana, or Splunk) collect, store, and analyze logs for troubleshooting and auditing.

- Containerization: Containerization simplifies application deployment and management.

  - Containerization and Orchestration Platforms: Docker packages applications and their dependencies into containers, and Kubernetes orchestrates those containers across clusters.

Benefits of Aligning Tool Selection with DevOps Philosophy

Selecting tools that align with the DevOps philosophy offers significant benefits, contributing to faster releases, improved collaboration, and enhanced software quality.

- Faster Release Cycles: Automated CI/CD pipelines and IaC enable faster and more frequent releases. For example, a company using a robust CI/CD pipeline can release updates multiple times a day, compared to the weeks or months required in traditional development models.

- Improved Collaboration: Tools that promote collaboration, such as shared dashboards and communication platforms, break down silos and foster a culture of shared responsibility.

- Reduced Errors: Automation reduces the risk of human error, leading to more reliable deployments and fewer production incidents.

- Increased Efficiency: Automation frees up developers and operations staff from repetitive tasks, allowing them to focus on more strategic work.

- Enhanced Software Quality: Continuous testing and monitoring enable early detection and resolution of issues, leading to higher-quality software.

- Improved Time to Market: Faster release cycles enable companies to respond quickly to market changes and deliver new features and products more rapidly.

Identifying Your Team’s Needs and Goals

Understanding your team’s needs and establishing clear goals are crucial first steps in building an effective DevOps toolchain. This process ensures that the selected tools align with your team’s specific challenges and aspirations, maximizing their impact on efficiency, collaboration, and overall success. Careful consideration of these factors helps avoid costly mistakes and ensures the toolchain supports the team’s objectives.

Assessing Current Workflow and Identifying Pain Points

Analyzing the current workflow allows for the identification of inefficiencies and bottlenecks that a new DevOps toolchain can address. This process involves several key steps.

- Workflow Mapping: Begin by meticulously mapping out the current software development lifecycle. This involves documenting each stage, from code creation to deployment and monitoring. Visual aids, such as flowcharts or diagrams, can be beneficial for illustrating the process.

- Process Documentation: Document all existing processes, including those for code reviews, testing, and deployment. Identify any manual steps or repetitive tasks that consume significant time and effort.

- Team Interviews and Surveys: Conduct interviews and distribute surveys to team members across different roles (developers, testers, operations) to gather insights into their daily experiences. Focus on identifying pain points, challenges, and areas where improvements are desired. Ask questions like:

  - What tasks take the longest?

  - What processes are most frustrating or error-prone?

  - What tools are missing or underutilized?

- Performance Metrics Review: Analyze existing performance metrics, such as deployment frequency, lead time for changes, mean time to recovery (MTTR), and change failure rate. These metrics provide a baseline for measuring the impact of the new toolchain.

- Pain Point Prioritization: Based on the gathered data, prioritize the identified pain points. This could involve using a scoring system or a matrix to assess the severity, frequency, and impact of each issue.
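A scoring system for this last step can be as simple as multiplying severity, frequency, and impact ratings. The sketch below assumes 1-to-5 scales and a multiplicative score, and the pain points are hypothetical examples; a weighted sum is an equally reasonable choice if you do not want one low rating to pull an item down so sharply.

```python
from dataclasses import dataclass

@dataclass
class PainPoint:
    name: str
    severity: int   # 1 (minor annoyance) to 5 (blocks releases)
    frequency: int  # 1 (rare) to 5 (every day)
    impact: int     # 1 (one person) to 5 (whole organization)

    @property
    def score(self) -> int:
        # Multiplicative: an item must rate high on all three axes to rank first.
        return self.severity * self.frequency * self.impact

def prioritize(points: list[PainPoint]) -> list[PainPoint]:
    """Return pain points ordered from highest to lowest score."""
    return sorted(points, key=lambda p: p.score, reverse=True)

# Hypothetical findings from interviews and the metrics review:
backlog = [
    PainPoint("Slow code reviews", severity=2, frequency=3, impact=2),
    PainPoint("Manual deployments", severity=4, frequency=5, impact=4),
    PainPoint("Flaky integration tests", severity=3, frequency=4, impact=3),
]
for p in prioritize(backlog):
    print(f"{p.score:3d}  {p.name}")
```

Here manual deployments score 80, flaky tests 36, and slow reviews 12, so deployment automation would be the first target for the new toolchain.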

Defining Clear Goals for the DevOps Toolchain

Defining clear, measurable, achievable, relevant, and time-bound (SMART) goals is essential for guiding tool selection and evaluating the success of the DevOps toolchain implementation. These goals should directly address the pain points identified in the workflow assessment.

- Specific Goals: Define specific, measurable goals. For example, instead of “improve deployment,” aim for “reduce deployment time by 50%.”

- Measurable Metrics: Establish metrics to track progress. Examples include:

  - Deployment frequency: How often code is deployed to production.

  - Lead time for changes: The time it takes from code commit to deployment.

  - Change failure rate: The percentage of deployments that result in a failure.

  - Mean Time to Recovery (MTTR): The average time to restore service after an outage.

- Achievable Objectives: Set realistic and achievable goals. Consider the team’s current capabilities and resources when setting targets.

- Relevant Targets: Ensure the goals align with the overall business objectives. For example, faster deployments can lead to quicker time-to-market and increased customer satisfaction.

- Time-Bound Deadlines: Establish deadlines for achieving the goals. This creates a sense of urgency and helps keep the project on track.
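Once deployments are recorded, the four metrics listed above can be computed from simple records. This is a minimal sketch assuming each deployment record carries its commit time, deploy time, a failure flag, and a restore time; `Deployment` and `dora_metrics` are hypothetical names. It measures recovery from the moment of the failing deployment, which is a simplification: in practice you would measure from when the incident was detected.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean, median
from typing import Optional

@dataclass
class Deployment:
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it reached production
    failed: bool = False                      # whether it caused a production failure
    restored_time: Optional[datetime] = None  # when service was restored, if it failed

def _hours(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600

def dora_metrics(deploys: list[Deployment], period_days: float) -> dict[str, float]:
    """Summarize the deployments made during a period of `period_days` days."""
    if not deploys or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    failures = [d for d in deploys if d.failed]
    recoveries = [_hours(d.deploy_time, d.restored_time)
                  for d in failures if d.restored_time is not None]
    return {
        "deployments_per_day": len(deploys) / period_days,
        "median_lead_time_hours": median(_hours(d.commit_time, d.deploy_time) for d in deploys),
        "change_failure_rate": len(failures) / len(deploys),
        # Simplification: recovery counted from the failing deployment, not from detection.
        "mttr_hours": mean(recoveries) if recoveries else 0.0,
    }
```

For example, four deployments over two days with lead times of 2, 4, 6, and 8 hours, one of which failed and was restored an hour later, yield 2 deployments per day, a median lead time of 5 hours, a 25% change failure rate, and an MTTR of 1 hour. The sketch reports the median lead time because a single stalled change can distort a mean.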

For instance, a team aiming to improve code quality might set a goal to “reduce the number of critical bugs in production by 75% within six months.” They would then measure this by tracking the number of critical bugs reported by users or identified through monitoring tools.

Designing a Template for Documenting Team Requirements and Priorities

A well-structured template helps capture team requirements and prioritize them effectively. This template serves as a central repository for information, guiding tool selection and ensuring alignment across the team.

The template can be organized using a table format, with the following columns:

| Requirement Category | Description | Priority (High/Medium/Low) | Rationale | Existing Tools (if any) | Desired Features |
|---|---|---|---|---|---|
| Code Management | The ability to manage code repositories, version control, and collaboration. | High | Essential for collaborative development and code integrity. | Git, GitHub | Improved branching strategies, automated code reviews. |
| Continuous Integration | Automated building, testing, and integration of code changes. | High | Reduce manual effort, ensure code quality. | Jenkins | Faster build times, support for parallel testing. |
| Continuous Delivery/Deployment | Automated deployment of code changes to various environments. | High | Faster releases, reduced risk of human error. | None | Support for blue/green deployments, automated rollback capabilities. |
| Monitoring and Alerting | Real-time monitoring of application performance and infrastructure health. | Medium | Proactive issue detection and resolution. | Prometheus, Grafana | Integration with CI/CD pipelines, advanced alerting rules. |

The “Requirement Category” column identifies the area of need (e.g., code management, CI/CD, monitoring). “Description” gives a brief overview of the requirement, “Priority” indicates its importance, “Rationale” explains why it matters, “Existing Tools” lists any tools currently used for this purpose, and “Desired Features” lists the capabilities needed from a new tool.

By using this template, teams can clearly define their needs, prioritize them, and make informed decisions about tool selection, ensuring that the chosen tools effectively address their challenges and support their goals.

Evaluating Tool Categories and Features

Selecting the right DevOps tools is a critical step in building an efficient and effective software development lifecycle. It requires understanding the categories of tools available and assessing their features against your team’s needs and goals. This section covers the major tool categories and their key features, and compares popular version control tools.

A thorough selection process helps avoid inefficiencies and roadblocks later in development.

Identifying Major DevOps Tool Categories

DevOps tools span a wide range of functionalities, each playing a vital role in different stages of the software development lifecycle. Understanding these categories helps in structuring your toolchain effectively.

- Version Control: Manages code changes, allowing developers to track modifications, collaborate, and revert to previous versions.

- CI/CD (Continuous Integration/Continuous Delivery): Automates the building, testing, and deployment of code changes.

- Configuration Management: Automates the configuration and management of infrastructure and applications.

- Monitoring and Logging: Provides insights into the performance and health of applications and infrastructure.

- Infrastructure as Code (IaC): Allows infrastructure to be defined and managed using code.

- Containerization: Packages applications and their dependencies into isolated containers.

- Orchestration: Manages and automates the deployment, scaling, and management of containerized applications.

- Testing: Automates various types of tests, including unit, integration, and end-to-end tests.

- Collaboration and Communication: Facilitates team communication and collaboration.

Key Features Within Each Tool Category

Each tool category has specific features that are essential for its effectiveness. Carefully evaluating these features will help in selecting the right tools.

Version Control:

- Branching and Merging: Enables developers to work on different features simultaneously and merge changes seamlessly.

- Version History and Rollback: Allows tracking of all code changes and the ability to revert to previous versions.

- Collaboration Features: Supports team collaboration with features like pull requests and code reviews.

- Security and Access Control: Controls access to the code repository and protects sensitive information.

CI/CD:

- Automated Builds: Automatically builds the application whenever code changes are pushed.

- Automated Testing: Executes various tests to ensure code quality.

- Deployment Automation: Automates the deployment process to various environments.

- Integration with Version Control: Integrates seamlessly with version control systems to trigger builds and deployments.

- Notifications and Reporting: Provides notifications on build status and generates reports.
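The “Integration with Version Control” feature is commonly implemented with webhooks: the version control system sends an HTTP request on each push, and the CI server decides whether to start a build. As a sketch assuming GitHub as the version control host, GitHub signs each webhook body with HMAC-SHA256 using a shared secret and sends the result in the `X-Hub-Signature-256` header as `sha256=<hex digest>`. The branch-filtering policy in `should_trigger_build` is a hypothetical example, not a GitHub or CI-server API.

```python
import hashlib
import hmac

def verify_github_signature(secret: bytes, payload: bytes, signature_header: str) -> bool:
    """Check a webhook's X-Hub-Signature-256 header against the raw request body."""
    if not signature_header.startswith("sha256="):
        return False
    expected = "sha256=" + hmac.new(secret, payload, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many characters matched through timing.
    return hmac.compare_digest(expected.encode(), signature_header.encode())

def should_trigger_build(event: str, ref: str, watched_branch: str = "main") -> bool:
    """Hypothetical policy: build only on pushes to the watched branch."""
    return event == "push" and ref == f"refs/heads/{watched_branch}"
```

Rejecting unsigned or mis-signed requests before triggering anything keeps the build trigger from becoming an open endpoint that anyone can call.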

Configuration Management:

- Infrastructure Provisioning: Automates the provisioning of servers, networks, and other infrastructure components.

- Configuration Enforcement: Ensures that infrastructure and applications are configured consistently.

- Idempotency: Ensures that applying the same configuration multiple times produces the same result as applying it once, so reruns are safe.

- Version Control for Configurations: Manages configurations using version control systems.
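Idempotency is easiest to see in a small example. The function below is a minimal sketch in the spirit of the “make sure this line is present” tasks found in configuration management tools, such as Ansible's `lineinfile` module; `ensure_line` is a hypothetical helper that works on file contents as a string, not any tool's actual implementation. It reports whether it changed anything, and a second run changes nothing.

```python
def ensure_line(content: str, line: str) -> tuple[str, bool]:
    """Return (new_content, changed) such that `line` is present in `content`.

    Applying it again to its own output returns the content untouched with
    changed=False, which is what idempotency means in practice.
    """
    lines = content.splitlines()
    if line in lines:
        return content, False          # desired state already reached: no change
    lines.append(line)                 # otherwise append the missing line
    return "\n".join(lines) + "\n", True

# Hypothetical usage on an sshd_config-style file:
config = "Port 22\n"
config, changed_first = ensure_line(config, "PermitRootLogin no")
config, changed_second = ensure_line(config, "PermitRootLogin no")
print(changed_first, changed_second)  # True False
```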

Monitoring and Logging:

- Real-time Monitoring: Provides real-time insights into application and infrastructure performance.

- Alerting and Notifications: Sends alerts based on predefined thresholds.

- Log Aggregation and Analysis: Collects and analyzes logs from various sources.

- Customizable Dashboards: Provides customizable dashboards to visualize key metrics.
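Threshold alerting usually requires a condition to hold for some time before firing, so that a single spike does not page anyone; Prometheus alerting rules express this with a `for` duration. The class below is a hypothetical sketch of that idea, using a count of consecutive samples instead of a time window.

```python
from collections import deque

class ThresholdAlert:
    """Fire when a metric exceeds `threshold` for `for_samples` consecutive samples."""

    def __init__(self, threshold: float, for_samples: int) -> None:
        self.threshold = threshold
        self.recent = deque(maxlen=for_samples)  # breach flags for the latest samples

    def observe(self, value: float) -> bool:
        """Record a sample; return True if the alert should fire now."""
        self.recent.append(value > self.threshold)
        return len(self.recent) == self.recent.maxlen and all(self.recent)

# Hypothetical CPU-utilization samples (fraction of capacity), one per scrape:
alert = ThresholdAlert(threshold=0.9, for_samples=3)
print([alert.observe(v) for v in [0.95, 0.97, 0.50, 0.92, 0.93, 0.99]])
# [False, False, False, False, False, True]
```

The dip to 0.50 resets the streak, so the alert fires only after three breaches in a row.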

Infrastructure as Code (IaC):

- Declarative Configuration: Allows infrastructure to be defined in a declarative manner.

- Version Control: Manages infrastructure code using version control systems.

- Automation: Automates the creation, modification, and deletion of infrastructure resources.

- Idempotency: Ensures that applying the same infrastructure definition repeatedly produces the same result as applying it once.
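Declarative IaC tools compare the desired state written in code against the actual state of the infrastructure and compute the actions needed to reconcile them, which is what makes repeated application safe. The function below is a simplified sketch of that comparison, similar in spirit to what `terraform plan` reports. It assumes resources are identified by name and described by a flat attribute dictionary; real tools also track dependencies between resources.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compute the actions needed to move `actual` infrastructure to `desired`.

    Both arguments map a resource name to its attributes.
    """
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(name for name in desired.keys() & actual.keys()
                         if desired[name] != actual[name]),
        "delete": sorted(actual.keys() - desired.keys()),
    }

# Hypothetical resources:
desired = {"web": {"size": "small"}, "db": {"size": "large"}}
actual = {"web": {"size": "micro"}, "cache": {"size": "small"}}
print(plan(desired, actual))   # {'create': ['db'], 'update': ['web'], 'delete': ['cache']}
print(plan(desired, desired))  # {'create': [], 'update': [], 'delete': []}
```

Once the actual state matches the code, the plan is empty, so applying the same definition again does nothing.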

Containerization:

- Isolation: Isolates applications and their dependencies.

- Portability: Enables applications to run consistently across different environments.

- Resource Management: Manages the resources allocated to each container.

- Image Management: Provides features for building, storing, and managing container images.

Orchestration:

- Deployment Automation: Automates the deployment of containerized applications.

- Scaling: Automatically scales applications based on demand.

- Service Discovery: Enables containers to discover and communicate with each other.

- Health Checks: Monitors the health of containers and restarts unhealthy ones.
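Automatic scaling typically compares an observed metric with a target. Kubernetes' Horizontal Pod Autoscaler, for example, documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), and it skips changes when that ratio is within a tolerance (10% by default). The function below sketches that rule; the replica bounds are hypothetical parameters, and the real controller adds further behavior such as stabilization windows.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count following the HPA-style ratio rule, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas              # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=62, target_metric=60))  # 4 (within tolerance)
print(desired_replicas(4, current_metric=30, target_metric=60))  # 2
```

For example, 4 replicas averaging 90% CPU against a 60% target scale to 6, while 62% against 60% falls inside the tolerance and leaves the deployment unchanged.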

Testing:

- Test Automation: Automates the execution of various tests.

- Test Reporting: Generates reports on test results.

- Integration with CI/CD: Integrates with CI/CD pipelines to automate testing.

- Support for Various Test Types: Supports various test types, including unit, integration, and end-to-end tests.

Collaboration and Communication:

- Real-time Communication: Facilitates real-time communication among team members.

- Project Management: Provides features for managing projects and tasks.

- Documentation: Supports documentation of projects and processes.

- Integration with other tools: Integrates with other DevOps tools.

Comparison Chart: Version Control Tools

The following table compares the strengths and weaknesses of popular version control tools. Consider these factors when selecting a version control tool.

| Tool | Strengths | Weaknesses |
|---|---|---|
| Git | | |
| Subversion (SVN) | | |
| Mercurial | | |

Budget Considerations and Cost Analysis

Creating a realistic budget and performing a thorough cost analysis are critical steps in selecting and implementing a DevOps toolchain. A well-defined budget ensures that the chosen tools align with the organization’s financial capabilities and allows for effective resource allocation. Careful cost analysis helps in making informed decisions about tool selection, minimizing unnecessary expenses, and maximizing the return on investment (ROI) from the DevOps implementation.

This section provides guidance on developing a budget, comparing tool costs, and optimizing expenses.

Creating a DevOps Toolchain Budget

Developing a comprehensive budget for your DevOps toolchain involves considering various cost components, both initial and ongoing. This ensures financial planning covers the entire lifecycle of the tools, from acquisition to maintenance and beyond. The budget should include these key elements:

- Initial Costs: These are one-time expenses associated with acquiring and setting up the tools.

  - Software Licenses: The cost of purchasing licenses for commercial tools. These can range from per-user fees to enterprise-level subscriptions.

  - Hardware Costs: If the tools require on-premise infrastructure, factor in the cost of servers, storage, and networking equipment.

  - Implementation Services: The cost of hiring consultants or vendors to assist with tool setup, configuration, and integration.

  - Data Migration: Expenses related to migrating existing data to the new tools.

- Ongoing Costs: These are recurring expenses that ensure the toolchain’s continued operation and effectiveness.

  - Subscription Fees: Recurring payments for cloud-based tools or software-as-a-service (SaaS) subscriptions.

  - Maintenance and Support: Costs for tool maintenance, including updates, patches, and technical support.

  - Infrastructure Costs: Ongoing expenses for maintaining on-premise infrastructure, such as electricity, cooling, and physical security.

  - Personnel Costs: Salaries for DevOps engineers, administrators, and other staff responsible for managing the toolchain.

- Training Costs: Investment in training employees to effectively use and manage the chosen tools.

  - Training Programs: Costs associated with enrolling employees in training courses, workshops, or certifications.

  - Training Materials: Expenses for purchasing training manuals, online courses, or other learning resources.

  - Travel and Accommodation: Costs for employees to attend in-person training sessions.

Consider the following example to understand the impact of each element. An organization deciding to adopt a CI/CD pipeline tool might initially spend on the software license (e.g., $10,000), implementation services (e.g., $5,000), and employee training (e.g., $2,000). Ongoing costs could include the subscription fee (e.g., $500 per month), maintenance and support fees (e.g., $1,000 per year), and the salaries of the DevOps engineers.
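The example figures above can be totaled over a planning horizon. This sketch leaves out engineer salaries because the example does not quantify them; the function name and parameters are illustrative. With the example values, year one costs $10,000 + $5,000 + $2,000 + 12 × $500 + $1,000 = $24,000, and each following year adds $7,000 in recurring costs, for $38,000 over three years.

```python
def toolchain_cost(years: int, license_fee: float, implementation: float,
                   training: float, monthly_subscription: float,
                   annual_maintenance: float) -> float:
    """Total of one-time and recurring costs over `years` (salaries excluded)."""
    one_time = license_fee + implementation + training
    recurring_per_year = 12 * monthly_subscription + annual_maintenance
    return one_time + years * recurring_per_year

# Figures from the example above:
example = dict(license_fee=10_000, implementation=5_000, training=2_000,
               monthly_subscription=500, annual_maintenance=1_000)
for years in (1, 3):
    print(years, toolchain_cost(years, **example))
# 1 24000
# 3 38000
```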

Comparing Open-Source vs. Commercial Tool Costs

Choosing between open-source and commercial tools involves a thorough comparison of their associated costs. Open-source tools often have no upfront licensing fees, but they still incur costs for hosting, maintenance, and the in-house expertise needed to run them. Commercial tools typically carry license costs but may offer additional benefits such as vendor support and ease of use. Here’s a method for comparing costs:

- Total Cost of Ownership (TCO): Calculate the TCO for each tool option over a defined period (e.g., three to five years). This includes all direct and indirect costs.

  - Direct Costs: These are the readily apparent costs, such as licensing fees for commercial tools and hardware expenses for self-hosted open-source tools.

  - Indirect Costs: These costs may be less obvious but can significantly affect the total. They include:

    - Implementation Costs: The effort and resources required to set up and configure the tool.

    - Maintenance Costs: Ongoing expenses for updates, security patches, and troubleshooting. Open-source tools may require more internal expertise or reliance on community support, while commercial tools may offer dedicated support.

    - Training Costs: The expense of training employees on how to use and manage the tool.

    - Opportunity Costs: The value of time and resources spent on tool management and maintenance, which could be used for other strategic initiatives.

- Support and Maintenance: Assess the availability and cost of support and maintenance for each tool. Commercial tools typically offer dedicated support, while open-source tools rely on community support. Consider the impact of downtime on productivity and revenue.

- Feature Comparison: Compare the features and capabilities of the tools. Ensure that the chosen tool meets the organization’s needs.

- Scalability: Evaluate the tool’s ability to scale to accommodate future growth.

Consider these illustrative scenarios:

- Scenario 1: A small startup might choose a free open-source CI/CD tool initially to minimize costs. However, as the team grows and complexity increases, the lack of dedicated support and the need for extensive in-house expertise could lead to higher indirect costs, such as more time spent on troubleshooting and maintenance.

- Scenario 2: A large enterprise might opt for a commercial CI/CD tool with a higher upfront cost. The enterprise values the dedicated support, comprehensive features, and ease of use, which can lead to lower indirect costs and increased productivity even though the initial investment is higher.
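The trade-off in these scenarios comes down to whether lower license fees outweigh higher labor costs. The sketch below compares a hypothetical open-source option with a hypothetical commercial one over three years, converting maintenance effort into cost with an assumed hourly rate; every figure is a placeholder to be replaced with your own estimates, not vendor pricing.

```python
from dataclasses import dataclass

@dataclass
class ToolOption:
    name: str
    annual_license: float               # direct cost; often 0 for open-source tools
    annual_hosting: float               # direct cost; servers or hosting fees
    maintenance_hours_per_month: float  # indirect cost; upgrades, patching, troubleshooting
    one_time_training: float            # indirect cost; onboarding and courses

    def tco(self, years: int, hourly_rate: float) -> float:
        """Total cost of ownership over `years`, pricing staff time at `hourly_rate`."""
        annual_labor = self.maintenance_hours_per_month * 12 * hourly_rate
        return self.one_time_training + years * (
            self.annual_license + self.annual_hosting + annual_labor
        )

# Hypothetical placeholder figures:
open_source = ToolOption("self-hosted open-source CI", 0, 6_000, 40, 5_000)
commercial = ToolOption("commercial SaaS CI", 30_000, 0, 8, 2_000)

for option in (open_source, commercial):
    print(f"{option.name}: ${option.tco(years=3, hourly_rate=75):,.0f}")
```

With these placeholder numbers, the open-source option totals $131,000 and the commercial option $113,600 over three years, which mirrors Scenario 1: the option without license fees is not automatically the cheaper one. Different inputs can easily reverse the result.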

Optimizing Toolchain Costs

Several strategies can be used to optimize toolchain costs without sacrificing functionality.

- Negotiate Pricing: Negotiate with vendors for better pricing, especially for commercial tools. This is more effective when purchasing multiple tools or committing to long-term contracts.

- Leverage Free Tiers and Trials: Take advantage of free tiers or trial periods offered by vendors to test tools and determine if they meet your needs before committing to a paid subscription.

- Optimize Resource Usage: Monitor resource utilization and optimize the configuration of tools to reduce unnecessary costs. This can involve right-sizing instances, automating resource scaling, and eliminating unused resources.

- Automate Processes: Automate repetitive tasks to reduce the need for manual intervention and improve efficiency.

- Consolidate Tools: Evaluate whether multiple tools can be replaced with a single tool that offers a wider range of functionalities. This can reduce the number of licenses and support contracts.

- Choose Cloud-Native Tools: Consider cloud-native tools with pay-as-you-go pricing, which can cost less than on-premise solutions, especially for variable or bursty workloads.

- Regularly Review and Audit: Periodically review the toolchain and audit its usage to identify opportunities for cost optimization. This could involve removing unused tools, downgrading subscriptions, or negotiating better pricing.

For example, by automating the scaling of infrastructure resources based on demand, a company can reduce cloud computing costs during off-peak hours. Another example is consolidating the source code management and CI/CD pipeline tools into a single platform to streamline operations and minimize the number of subscriptions.
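The off-peak scaling example can be quantified with an hourly schedule. This sketch assumes a hypothetical fleet of 10 instances at $0.20 per instance-hour, scaled down to 3 instances for 12 off-peak hours; real cloud bills add storage, network, and minimum-charge effects that are ignored here.

```python
def daily_cost(replicas_by_hour: list[int], price_per_instance_hour: float) -> float:
    """Cost of one day, given the number of running instances in each of the 24 hours."""
    if len(replicas_by_hour) != 24:
        raise ValueError("expected one instance count per hour of the day")
    return round(sum(replicas_by_hour) * price_per_instance_hour, 2)

PRICE = 0.20                        # hypothetical $/instance-hour
always_on = [10] * 24               # fixed capacity sized for peak load
scheduled = [3] * 12 + [10] * 12    # 12 off-peak hours at reduced capacity

baseline = daily_cost(always_on, PRICE)
scaled = daily_cost(scheduled, PRICE)
print(f"always-on: ${baseline:.2f}/day, scheduled: ${scaled:.2f}/day, "
      f"saving ${baseline - scaled:.2f}/day")
```

Under these assumptions the schedule cuts the daily cost from $48.00 to $31.20, a saving of $16.80 per day.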

Integration and Compatibility Challenges

Choosing the right DevOps tools is only half the battle. The real test comes in integrating these tools seamlessly into a cohesive workflow. Successfully navigating the integration landscape is crucial for realizing the full potential of your DevOps toolchain. However, compatibility issues, conflicting configurations, and data silos can quickly undermine your efforts, leading to inefficiencies and frustration.

Common Integration Challenges

Integrating disparate tools presents a complex set of hurdles. These challenges, if unaddressed, can severely impact the efficiency and effectiveness of your DevOps practices.

- Data Silos: Different tools often store data in incompatible formats or isolated databases. This fragmentation makes it difficult to gain a holistic view of the software development lifecycle, hindering informed decision-making and collaboration.

- Configuration Complexity: Each tool typically requires specific configurations, and managing these across multiple tools can become overwhelming. Incorrect configurations can lead to errors, inconsistencies, and system failures.

- Version Conflicts: Compatibility issues arise when different tools rely on conflicting versions of dependencies, libraries, or APIs. This can result in unpredictable behavior and break the functionality of your toolchain.

- Security Concerns: Integrating tools can introduce new security vulnerabilities if not handled carefully. Improper authentication, authorization, and data transfer protocols can expose sensitive information to unauthorized access.

- Maintenance Overhead: As your toolchain evolves, maintaining the integrations between tools becomes increasingly complex. Updates to one tool may require corresponding changes in other tools, creating a constant cycle of adjustments and testing.
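Version conflicts can be caught early by collecting the dependency versions each tool requires and flagging any dependency pinned to different versions. The sketch below assumes exact version pins and uses hypothetical tool names; real dependency resolvers also handle version ranges.

```python
from collections import defaultdict

def find_conflicts(requirements: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """Map each dependency pinned to more than one version to the tools that pin it."""
    pins_by_dep: dict[str, dict[str, str]] = defaultdict(dict)
    for tool, deps in requirements.items():
        for dep, version in deps.items():
            pins_by_dep[dep][tool] = version
    return {dep: pins for dep, pins in pins_by_dep.items() if len(set(pins.values())) > 1}

# Hypothetical tools and pins:
requirements = {
    "ci-runner": {"python": "3.11", "openssl": "3.0"},
    "security-scanner": {"python": "3.8", "openssl": "3.0"},
    "deployer": {"openssl": "3.0"},
}
print(find_conflicts(requirements))
# {'python': {'ci-runner': '3.11', 'security-scanner': '3.8'}}
```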

Assessing Tool Compatibility

Thorough assessment of tool compatibility is vital to prevent integration nightmares. This proactive approach saves time, resources, and potential headaches down the line.

- Review Documentation: Carefully examine the official documentation of each tool to understand its integration capabilities. Look for details about supported protocols, data formats, and any specific requirements for integration with other tools.

- Check for Pre-built Integrations: Explore if the tools you are considering offer pre-built integrations with other popular DevOps tools. These pre-built integrations often simplify the integration process and reduce the need for custom development.

- Evaluate API Availability: Determine if the tools provide robust and well-documented APIs (Application Programming Interfaces). APIs are essential for enabling communication and data exchange between tools.

- Test Compatibility in a Sandbox Environment: Before deploying tools in a production environment, test their compatibility in a sandbox or staging environment. This allows you to identify and resolve any integration issues without impacting your live systems.

- Consider the Vendor Ecosystem: Evaluate the vendor’s commitment to interoperability. Do they actively participate in industry standards and support integrations with other vendors’ tools? This commitment often indicates a better long-term compatibility outlook.

Using APIs and Plugins for Effective Integration

APIs and plugins are the building blocks of effective DevOps toolchain integration. Understanding how to leverage these technologies is critical for creating a streamlined and automated workflow.

- Understanding APIs: APIs are the gateways that enable different tools to communicate with each other. They define how tools exchange data and perform actions. Most modern DevOps tools expose APIs that allow for programmatic access to their functionality.

- Leveraging Plugins: Plugins are software components that extend the functionality of a tool. They can be used to create custom integrations between tools or to automate specific tasks. Many DevOps tools support plugins, making it easier to tailor your toolchain to your specific needs.

- Example: Integrating a CI/CD pipeline with a monitoring tool.

  - A CI/CD pipeline, such as Jenkins or GitLab CI, can be integrated with a monitoring tool such as Datadog through its API, or with Prometheus, which is pull-based, by exposing deployment markers as metrics or pushing them through the Prometheus Pushgateway.

  - The CI/CD pipeline can be configured to record each deployment as an event in the monitoring tool.

  - The monitoring tool can then correlate these events with performance metrics, allowing you to quickly identify any issues introduced by a new deployment.

- Example: Using a Plugin to Automate Security Scanning.

  - A plugin for a static code analysis tool, such as SonarLint, which works alongside SonarQube, can be integrated into your IDE (Integrated Development Environment).

  - This plugin automatically scans your code for security vulnerabilities as you write it.

  - The plugin then reports any issues directly in your IDE, allowing you to address them immediately.

- Choosing the Right Integration Method: The best integration method depends on the tools involved and your specific needs. Consider the following:

  - Direct API calls: This is the most flexible approach, allowing you to create custom integrations between tools.

  - Pre-built integrations: These integrations are often easier to set up and maintain, but they may not offer the same level of customization as direct API calls.

  - Plugins: Plugins can be a good option for extending the functionality of a tool or automating specific tasks.
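The CI/CD-to-monitoring example can be sketched with Datadog's Events API, which accepts a JSON event through `POST /api/v1/events` authenticated with a `DD-API-KEY` header. This is a minimal illustration assuming the US1 Datadog site (other Datadog sites use different hostnames); the function name and tag scheme are hypothetical, and the function only builds the request so it can be inspected before a pipeline step sends it.

```python
import json
import urllib.request

# Assumes the US1 site; adjust the host for other Datadog regions.
DATADOG_EVENTS_URL = "https://api.datadoghq.com/api/v1/events"

def build_deploy_event(api_key: str, service: str, version: str,
                       environment: str) -> urllib.request.Request:
    """Build (but do not send) a Datadog event announcing a deployment."""
    body = {
        "title": f"Deployed {service} {version}",
        "text": f"{service} {version} was deployed to {environment} by the CI/CD pipeline.",
        "tags": [f"service:{service}", f"version:{version}", f"env:{environment}"],
    }
    return urllib.request.Request(
        DATADOG_EVENTS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "DD-API-KEY": api_key},
        method="POST",
    )

# In a pipeline step, the request would be sent with something like:
# with urllib.request.urlopen(build_deploy_event(key, "checkout", "1.4.2", "prod"), timeout=10):
#     pass
```

Tagging the event with the same service and environment tags used on metrics is what lets the monitoring tool line the deployment up against the performance graphs.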

Security Considerations in Tool Selection

Choosing the right DevOps tools involves careful consideration of security. Integrating security into your DevOps toolchain is not just about implementing security features; it’s about making security a foundational principle. The tools you select can either fortify your security posture or introduce new vulnerabilities. Therefore, a proactive approach to security during the tool selection process is crucial for mitigating risks and ensuring the integrity of your software development lifecycle.

Identifying Security Risks Associated with DevOps Tools

DevOps tools, while offering significant benefits, introduce various security risks. These risks can stem from the tools themselves, their configurations, or the way they are used. Understanding these potential vulnerabilities is the first step in mitigating them.

- Configuration Management Tools: These tools, such as Ansible, Chef, and Puppet, can expose sensitive information if not configured securely. Weakly encrypted secrets, improper access controls, and vulnerabilities in the tool’s code itself can be exploited. For instance, a misconfigured Ansible playbook might inadvertently grant excessive permissions to users or expose API keys.

- Continuous Integration/Continuous Delivery (CI/CD) Pipelines: CI/CD pipelines are attractive targets for attackers. Compromising a pipeline can lead to the injection of malicious code into production systems. Risks include insecure storage of credentials, vulnerabilities in build tools, and lack of proper code signing. A compromised Jenkins server, for example, could allow attackers to modify build processes and inject malware.

- Containerization and Orchestration Tools: Docker and Kubernetes, while streamlining application deployment, also introduce security challenges. Vulnerabilities in container images, misconfigured Kubernetes deployments, and insecure container registries can be exploited. For example, using outdated base images or failing to properly isolate containers can create security gaps.

- Monitoring and Logging Tools: These tools collect vast amounts of data, making them potential targets for attackers seeking to gather information or disrupt operations. Improper access controls and insecure storage of logs can expose sensitive information. An example is a monitoring system that lacks proper authentication, allowing unauthorized access to critical performance data and system logs.

- Source Code Management (SCM) Tools: Git and the platforms that host Git repositories, such as GitHub and GitLab, are central to the toolchain, which also makes them targets. Weak authentication, missing branch protection, and secrets committed to the repository can lead to code theft or unauthorized modifications. A compromised GitHub repository, for instance, could allow attackers to inject malicious code into a project.
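One way to catch the outdated-base-image risk early is to flag Dockerfile `FROM` lines that have no tag or use the mutable `latest` tag. With such images it is hard to know which version is actually deployed. The Python sketch below is a simplified illustration. It treats digest-pinned images (`image@sha256:...`) as pinned, skips `scratch` and references to earlier build stages, and does not expand build arguments, so `FROM ${BASE}` is reported as unpinned. It does not replace an image scanner such as Trivy, which inspects image contents for known vulnerabilities.

```python
import re

# Matches "FROM [--platform=...] image[:tag][@digest] [AS name]" and captures the image.
FROM_RE = re.compile(r"^\s*FROM\s+(?:--platform=\S+\s+)?(?P<image>\S+)", re.IGNORECASE)


def unpinned_base_images(dockerfile_text: str) -> list[str]:
    """Return base images that use no tag or the mutable 'latest' tag."""
    findings = []
    stage_names = set()
    for line in dockerfile_text.splitlines():
        match = FROM_RE.match(line)
        if not match:
            continue
        image = match.group("image")
        # Skip the empty base image and references to earlier named stages.
        if image.lower() != "scratch" and image not in stage_names and "@" not in image:
            # Look only at the last path component so "registry:5000/app" is parsed correctly.
            name = image.rsplit("/", 1)[-1]
            if ":" not in name or name.split(":", 1)[1] == "latest":
                findings.append(image)
        # Remember "AS <name>" aliases so later "FROM <name>" lines are not flagged.
        parts = line.split()
        if len(parts) >= 4 and parts[-2].lower() == "as":
            stage_names.add(parts[-1])
    return findings


if __name__ == "__main__":
    sample = "FROM python:3.12-slim AS build\nFROM build\nFROM node:latest\n"
    print(unpinned_base_images(sample))
```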

Elaborating on Security Features to Look For When Selecting Tools

When evaluating DevOps tools, prioritize security features. These features are essential for protecting your toolchain and the systems it supports.

- Access Control and Authentication: Strong access control mechanisms are paramount. Tools should support multi-factor authentication (MFA), role-based access control (RBAC), and integration with identity providers (IdPs) such as Active Directory or Okta. This ensures that only authorized users can access sensitive resources.

- Encryption: Data should be encrypted at rest and in transit. This includes encrypting secrets, configuration files, and communication between tools. Look for tools that support current versions of TLS (the successor to the now-deprecated SSL) and offer options for managing encryption keys securely.

- Vulnerability Scanning: Tools should include built-in or integrated vulnerability scanning capabilities. This enables the identification of security flaws in code, container images, and dependencies. Integration with vulnerability scanning tools like SonarQube, Snyk, or Trivy is also essential.

- Secret Management: Securely managing secrets (passwords, API keys, tokens) is critical. Tools should offer features for storing, rotating, and accessing secrets securely. Examples include HashiCorp Vault, AWS Secrets Manager, and Azure Key Vault.

- Audit Logging: Comprehensive audit logging is necessary for tracking all actions performed within the toolchain. This allows for detecting and responding to security incidents. Audit logs should record user activities, configuration changes, and access attempts.

- Compliance and Regulatory Support: Consider tools that support industry-specific compliance standards (e.g., PCI DSS, HIPAA). These tools often have features and configurations designed to meet regulatory requirements.

- Regular Updates and Patching: Ensure the tools are regularly updated and patched to address known vulnerabilities. Tools with a strong track record of security updates are preferable.
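The access-control features above can be illustrated with a minimal role-based check. In this Python sketch, the role names, the `resource:action` permission strings, and the rule that managing secrets requires a completed MFA step are all hypothetical. In practice, roles usually come from your identity provider, and the tool itself enforces the check. The sketch denies by default, so unknown roles grant nothing, and it writes every decision to an audit log.

```python
from dataclasses import dataclass, field

# Hypothetical role catalogue: each role grants a set of "resource:action" permissions.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "viewer": frozenset({"pipeline:read", "logs:read"}),
    "developer": frozenset({"pipeline:read", "pipeline:run", "logs:read"}),
    "admin": frozenset({"pipeline:read", "pipeline:run", "pipeline:edit", "logs:read", "secrets:manage"}),
}

# Hypothetical policy: these permissions additionally require a completed MFA check.
MFA_REQUIRED = frozenset({"secrets:manage"})


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)
    mfa_verified: bool = False


def is_allowed(user: User, permission: str, audit_log: list[str]) -> bool:
    """Deny by default: grant only if one of the user's roles includes the permission."""
    granted = any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in user.roles)
    if permission in MFA_REQUIRED and not user.mfa_verified:
        granted = False
    # Record every decision, allowed or not, so it can be reviewed later.
    audit_log.append(f"user={user.name} permission={permission} allowed={granted}")
    return granted
```

For example, `is_allowed(User("dana", {"developer"}), "pipeline:edit", log)` returns `False` and appends one denied entry to `log`. That is the least-privilege behavior the section recommends.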

Providing a Guide to Securing the DevOps Toolchain from Potential Threats

Securing the DevOps toolchain is an ongoing process that requires a combination of technical measures, best practices, and a security-focused culture.

- Implement a Security Policy: Establish a clear security policy that outlines the security requirements and guidelines for the DevOps toolchain. This policy should cover access control, data protection, incident response, and compliance.

- Use Infrastructure as Code (IaC) Securely: Treat infrastructure configurations as code, and apply security best practices. This includes using secure templates, version control, and regular security audits. For instance, use tools like Terraform with secure configurations to deploy infrastructure.

- Automate Security Checks: Integrate security checks into the CI/CD pipeline. This includes static code analysis, vulnerability scanning, and security testing. Automated checks help identify and address security issues early in the development lifecycle.

- Secure Secrets Management: Centralize and secure the management of secrets. Use dedicated secret management tools and avoid hardcoding secrets in code or configuration files. Rotate secrets regularly.

- Apply the Principle of Least Privilege: Grant users and systems only the minimum necessary permissions. This reduces the impact of potential security breaches. Use RBAC to define and manage user roles and permissions.

- Monitor and Log Everything: Implement comprehensive monitoring and logging across the entire DevOps toolchain. This includes logging user activities, system events, and security incidents. Regularly review logs to detect suspicious activities.

- Regularly Update and Patch Tools: Keep all DevOps tools up-to-date with the latest security patches. Automate the patching process where possible.

- Conduct Regular Security Audits: Perform regular security audits and penetration testing to identify vulnerabilities and assess the effectiveness of security controls. Address any identified weaknesses promptly.

- Educate and Train the Team: Provide security awareness training to all team members. This training should cover security best practices, common threats, and incident response procedures.

- Establish an Incident Response Plan: Develop and test an incident response plan to address security breaches effectively. This plan should outline the steps to be taken in the event of a security incident, including containment, eradication, and recovery.
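A common pipeline step scans for hardcoded secrets before code is merged, which combines automated security checks with secret hygiene. The Python sketch below shows the idea with three illustrative regular expressions: an AWS-style access key ID, a quoted password or token assignment, and a private key header. This rule set is only for demonstration. Dedicated secret scanners ship much larger, tuned rule sets and are the better choice in a real pipeline.

```python
import re

# Illustrative rules only; real scanners use far larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "generic_assignment": re.compile(
        r"""(?i)(password|passwd|secret|api[_-]?key|token)\s*[:=]\s*["']([^"'\s]{8,})["']"""
    ),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def find_hardcoded_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for each line that appears to embed a secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings
```

A CI job could run this over changed files and fail the build when the returned list is non-empty. Values read at runtime from the environment or a secret manager, such as `os.environ["DB_PASSWORD"]`, do not trigger the quoted-assignment rule.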

Scalability and Performance Requirements

Scalability and performance are critical factors when selecting tools for your DevOps toolchain. As your team and infrastructure grow, the chosen tools must be able to handle increased workloads and user demands without compromising performance or stability. Neglecting these aspects can lead to bottlenecks, delays, and ultimately, a degraded user experience.

Importance of Scalability in the DevOps Toolchain

The DevOps toolchain should be designed with scalability as a core principle. This means the tools should be able to adapt to increasing demands on resources, whether it’s more users, more data, or more frequent deployments. A scalable toolchain ensures that the development and deployment processes remain efficient, even as the organization expands.

- Accommodating Growth: Scalable tools can handle larger volumes of data, increased user traffic, and more complex operations as the team and infrastructure grow.

- Maintaining Performance: Scalability helps maintain consistent performance levels, preventing slowdowns and bottlenecks that can impact productivity and user experience.

- Ensuring Reliability: Scalable systems are more resilient to failures and can automatically adjust to maintain service availability, reducing the risk of downtime.

- Optimizing Resource Utilization: Scalable tools can efficiently utilize available resources, preventing over-provisioning and minimizing costs.

Methods for Assessing a Tool’s Performance Under Load

Evaluating a tool’s performance under load is essential to ensure it can handle the expected demands. This involves simulating real-world scenarios to measure the tool’s response time, resource consumption, and overall stability.

- Load Testing: Load testing simulates realistic user traffic to assess the tool’s performance under normal and peak loads. Tools like Apache JMeter, Gatling, and LoadView can be used to generate traffic and monitor response times.

- Stress Testing: Stress testing pushes the tool beyond its expected capacity to identify its breaking point and assess its behavior under extreme conditions. This helps determine the tool’s limits and potential failure points.

- Performance Monitoring: Continuous performance monitoring provides real-time insights into the tool’s behavior, allowing for proactive identification of performance issues. Tools like Prometheus, Grafana, and Datadog can be used to collect and visualize performance metrics.

- Benchmarking: Benchmarking involves comparing the performance of different tools under the same conditions. This can help in selecting the best-performing tool for a specific task. For instance, you might run the same set of builds through two candidate CI/CD tools and compare their build times and resource usage.

- Capacity Planning: Capacity planning involves estimating the resources needed to support future growth. This includes anticipating increased data volumes, user counts, and transaction rates. This process helps to determine the hardware and software requirements for scaling the tool.
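Dedicated tools such as Apache JMeter or Gatling are the right choice for real load tests, but the core measurement is simple enough to sketch. The following Python example runs an operation many times at a fixed level of concurrency and reports nearest-rank percentiles of the observed latencies. The `operation` callable is a placeholder. In practice it might trigger a build through a tool's API or fetch a dashboard page. The example uses a thread pool because such operations are typically I/O-bound.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def run_load(operation: Callable[[], None], total_requests: int, concurrency: int) -> dict[str, float]:
    """Call `operation` total_requests times using `concurrency` worker threads."""

    def timed_call(_: int) -> float:
        start = time.perf_counter()
        operation()
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, range(total_requests)))
    return {
        "requests": float(total_requests),
        "p50_s": percentile(latencies, 50),
        "p95_s": percentile(latencies, 95),
        "max_s": latencies[-1],
    }


if __name__ == "__main__":
    # Placeholder operation that simulates a 10 ms call.
    print(run_load(lambda: time.sleep(0.01), total_requests=50, concurrency=10))
```

The sketch reports percentiles rather than the mean because a few slow requests barely move the average, yet those are what users notice under load. Raising `concurrency` step by step until p95 latency climbs sharply gives a rough view of the breaking point that stress testing looks for.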

Designing a Plan for Scaling the Toolchain as the Team Grows

A well-defined scaling plan ensures the toolchain can adapt to the evolving needs of the organization. This plan should consider both horizontal and vertical scaling strategies.

- Horizontal Scaling: Horizontal scaling involves adding more instances of a tool to handle increased load. This is often achieved through containerization (e.g., Docker, Kubernetes) and load balancing.

- Vertical Scaling: Vertical scaling involves increasing the resources (e.g., CPU, memory) allocated to a single instance of a tool. This is suitable for tools that are not easily distributed.

- Automated Provisioning: Implement automation to automatically provision and configure new tool instances as needed. This reduces manual effort and ensures consistency. Tools like Terraform and Ansible are useful for this.

- Monitoring and Alerting: Set up robust monitoring and alerting systems to detect performance issues and trigger scaling actions automatically.

- Choosing Scalable Tools: Prioritize tools that are designed for scalability from the ground up. Consider tools that support distributed architectures, horizontal scaling, and automated provisioning.

- Regular Review and Optimization: Periodically review the toolchain’s performance and make adjustments as needed. This might involve optimizing tool configurations, upgrading hardware, or migrating to more scalable tools.

- Example: Consider a CI/CD pipeline. Initially, the pipeline might run on a single server. As the team grows, the pipeline can be scaled horizontally by adding more build agents and using a load balancer to distribute the workload. This might involve a system that automatically spins up new build agents when the queue of tasks exceeds a certain threshold.
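The queue-based scaling rule in this example can be written as a small decision function. This Python sketch assumes each build agent can work on a fixed number of jobs at once (`jobs_per_agent`). It also bounds the agent count with `min_agents` and `max_agents` limits; the default values are hypothetical. The function scales out immediately when the queue grows but removes only one agent per evaluation. That is a common way to avoid repeatedly adding and removing agents when the queue fluctuates.

```python
import math


def desired_agent_count(queued_jobs: int, current_agents: int, jobs_per_agent: int = 2,
                        min_agents: int = 1, max_agents: int = 20) -> int:
    """Return how many build agents should run for the current queue (hypothetical policy)."""
    if jobs_per_agent <= 0:
        raise ValueError("jobs_per_agent must be positive")
    needed = math.ceil(queued_jobs / jobs_per_agent)
    if needed > current_agents:
        target = needed  # scale out immediately to drain the queue
    elif needed < current_agents:
        target = current_agents - 1  # scale in gradually, one agent at a time
    else:
        target = current_agents
    # Never go below the floor or above the budget ceiling.
    return max(min_agents, min(max_agents, target))
```

A scheduler would call this periodically and pass the result to whatever actually provisions agents, for example a Terraform- or Kubernetes-based process. That provisioning step is outside the sketch.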

User Experience and Ease of Use

The user experience (UX) of DevOps tools significantly impacts team productivity, adoption rates, and overall success. Tools that are difficult to learn or use can hinder collaboration, increase errors, and slow down the entire development lifecycle. Prioritizing user-friendliness is, therefore, a critical aspect of DevOps tool selection.

Assessing the User-Friendliness of a DevOps Tool

Evaluating the user-friendliness of a DevOps tool involves several key considerations to ensure it aligns with the team’s needs and promotes efficient workflows.

- Intuitive Interface Design: The interface should be clean, uncluttered, and easy to navigate. Elements should be logically organized, and the tool should provide clear visual cues and feedback. Consider the use of consistent design patterns and accessible design principles to accommodate users with diverse needs.

- Ease of Learning: The tool should be easy to learn and understand, with minimal required training. This can be assessed by observing how quickly new users can perform basic tasks and how readily they can find the information they need.

- Comprehensive Documentation: Robust and accessible documentation, including tutorials, guides, and FAQs, is crucial. Documentation should be clear, concise, and up-to-date, with examples and troubleshooting tips. The documentation should be searchable and easily navigable.

- Responsiveness and Performance: The tool should respond quickly to user actions and perform efficiently, even under heavy load. Slow performance can frustrate users and negatively impact productivity.

- Customization and Personalization: The ability to customize the tool to fit individual user preferences and team workflows enhances usability. This includes options for configuring dashboards, notifications, and other settings.

- Error Handling and Feedback: Clear and helpful error messages and feedback mechanisms are essential for guiding users and preventing frustration. The tool should provide suggestions for resolving errors and offer support resources when needed.

- Community and Support: A strong community and readily available support resources can greatly enhance the user experience. This includes forums, online communities, and responsive customer support.

Examples of Tools with Intuitive Interfaces and Good Documentation

Several DevOps tools are renowned for their user-friendly interfaces and comprehensive documentation.

- GitLab: GitLab is a popular DevOps platform that provides a web-based interface for managing code repositories, CI/CD pipelines, and other DevOps tasks. Its interface is generally considered intuitive, with clear navigation and a focus on ease of use. GitLab offers extensive documentation, including tutorials, guides, and API documentation, and has a strong community, which makes it easy for users to learn and get started.

- Jenkins: Jenkins is an open-source automation server widely used for CI/CD. Although its interface may appear less modern compared to some other tools, Jenkins provides a highly customizable and flexible platform. Its documentation is extensive, covering a wide range of plugins and use cases. Jenkins also has a large and active community that offers support and resources.

- Terraform: Terraform is an infrastructure-as-code (IaC) tool used for provisioning and managing infrastructure. Terraform’s command-line interface (CLI) is relatively straightforward to use, and its configuration files are written in a declarative language (HCL) that is easy to read and understand. Terraform’s documentation is well-organized and comprehensive, providing detailed information on all aspects of the tool.

- Docker: Docker is a platform for developing, shipping, and running applications in containers. Docker’s CLI is relatively easy to learn, and its documentation is comprehensive and well-organized. Docker also provides a range of tutorials and examples that help users get started quickly.

Strategies for Improving the User Experience of the DevOps Toolchain

Improving the user experience of the DevOps toolchain requires a proactive and ongoing effort.

- User-Centered Design: Involve end-users in the tool selection and implementation process. Gather feedback through surveys, interviews, and usability testing to understand their needs and preferences.

- Prioritize Automation: Automate repetitive tasks to reduce manual effort and potential errors. Automating tasks streamlines workflows and improves efficiency.

- Provide Training and Support: Offer comprehensive training programs and ongoing support to help users learn and effectively utilize the tools. This can include online courses, workshops, and dedicated support teams.

- Standardize Tooling: Standardize the tools used across the organization to reduce complexity and promote consistency. This can simplify onboarding, training, and troubleshooting.

- Monitor and Measure: Continuously monitor user feedback, tool usage, and performance metrics to identify areas for improvement. Use data to inform decisions about tool upgrades, customizations, and training programs.

- Integrate Tools Seamlessly: Ensure that tools integrate seamlessly with each other to create a cohesive and efficient workflow. Consider using a DevOps platform that provides a unified interface for managing various tools.

- Implement Version Control: Utilize version control for all configuration files, scripts, and code. This allows for tracking changes, rolling back to previous versions, and collaboration among team members.

- Encourage Collaboration: Foster a culture of collaboration and knowledge sharing. Encourage teams to share best practices, tips, and tricks for using the tools.

Testing and Proof of Concept

Selecting the right tools for your DevOps toolchain is a critical decision, but it shouldn’t be made without thorough testing and validation. This phase is crucial to minimize risks and ensure that the chosen tools meet your specific needs. Before fully integrating any new tool into your environment, it’s essential to perform rigorous testing and create a Proof of Concept (POC) to validate its suitability.

Testing Tools Before Implementation

Thorough testing is the cornerstone of successful tool adoption. It helps identify potential issues, compatibility problems, and performance bottlenecks before the tool is deployed across your entire infrastructure. This process involves several key steps:

- Define Testing Scope and Objectives: Clearly outline what you want to achieve with the testing. This includes specifying the features to be tested, performance metrics to measure, and the environment in which the testing will take place. For example, if testing a new CI/CD pipeline tool, define the types of builds to be tested (e.g., full builds, incremental builds), the target platforms, and the expected build times.

- Create a Test Plan: Develop a detailed test plan that covers various test scenarios, including functional testing (verifying that the tool’s features work as expected), performance testing (assessing the tool’s speed and efficiency), and security testing (evaluating the tool’s security vulnerabilities). The test plan should include specific test cases, expected results, and the methods for recording and analyzing the results.

- Set Up a Test Environment: Establish a dedicated test environment that closely mirrors your production environment. This includes replicating the infrastructure, networking, and application components. The test environment should be isolated from the production environment to prevent any potential disruption or data loss.

- Execute Tests and Record Results: Execute the test cases defined in the test plan and meticulously record the results. This includes documenting any issues, errors, or performance bottlenecks encountered during the testing process. Use logging and monitoring tools to capture relevant data, such as CPU usage, memory consumption, and network latency.

- Analyze Results and Identify Issues: Analyze the test results to identify any discrepancies between the expected and actual outcomes. This may involve reviewing logs, performance metrics, and error messages. Determine the root causes of any issues and prioritize them based on their severity and impact.

- Iterate and Refine: Based on the test results, iterate on the tool configuration or settings. This may involve adjusting the tool’s parameters, optimizing its performance, or addressing any security vulnerabilities. Repeat the testing process until the tool meets the defined requirements and objectives.
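Comparing expected and actual outcomes is easier when the test plan's pass criteria are written down as data. The Python sketch below checks measured results against such criteria and reports each miss, including metrics that were never measured. The metric names and limits in the usage example are hypothetical values for a CI/CD tool trial, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    metric: str
    limit: float
    higher_is_better: bool = False  # e.g. pass rates; durations use the default


def evaluate(results: dict[str, float], criteria: list[Criterion]) -> list[str]:
    """Return human-readable failures; an empty list means every criterion passed."""
    failures = []
    for c in criteria:
        if c.metric not in results:
            failures.append(f"{c.metric}: not measured")
            continue
        value = results[c.metric]
        ok = value >= c.limit if c.higher_is_better else value <= c.limit
        if not ok:
            op = ">=" if c.higher_is_better else "<="
            failures.append(f"{c.metric}: got {value}, expected {op} {c.limit}")
    return failures


if __name__ == "__main__":
    plan = [
        Criterion("full_build_minutes", 15),
        Criterion("incremental_build_minutes", 3),
        Criterion("test_pass_rate", 0.98, higher_is_better=True),
        Criterion("peak_memory_gb", 8),
    ]
    measured = {"full_build_minutes": 12.5, "incremental_build_minutes": 4.0, "test_pass_rate": 0.99}
    for problem in evaluate(measured, plan):
        print(problem)
```

Each reported miss becomes an input to the "Iterate and Refine" step. Rerunning the same criteria after a configuration change shows whether the change helped.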

Methods for Creating a Proof of Concept (POC) to Validate Tool Choices

A Proof of Concept (POC) is a small-scale, focused implementation of a tool to demonstrate its feasibility and value within your specific context. It allows you to validate your tool choices in a controlled environment before making a full-scale deployment. Here’s how to create an effective POC:

- Define POC Scope and Objectives: Clearly define the goals of the POC. What specific problems are you trying to solve? What key features are you testing? For instance, if evaluating a new monitoring tool, the POC objectives might include monitoring specific application metrics, setting up alerts for critical events, and generating performance reports.

- Select a Representative Use Case: Choose a specific use case that reflects your real-world scenarios. This helps ensure that the POC is relevant and provides meaningful insights. For example, if testing a new container orchestration tool, select a representative application that you intend to containerize and deploy.

- Set Up a Limited Environment: Create a limited environment that mimics your production environment but on a smaller scale. This environment should include the necessary infrastructure, networking, and application components to test the chosen tool.

- Implement the Tool: Install and configure the tool within the POC environment. Configure the tool to address the defined use case and objectives. This may involve integrating the tool with other existing tools or systems.

- Test and Evaluate: Run the tool within the POC environment and evaluate its performance, functionality, and usability. Collect data and metrics to assess whether the tool meets the defined objectives. This may include measuring performance, assessing the ease of use, and identifying any integration challenges.

- Document Results and Findings: Document the results of the POC, including successes, failures, and lessons learned. This documentation should include detailed descriptions of the testing process, the findings, and any recommendations for future implementation.

- Present Findings and Make a Decision: Present the findings of the POC to the stakeholders, including the development team, operations team, and management. Based on the POC results, make an informed decision about whether to proceed with the full-scale deployment of the tool.

Template for Documenting the Results of the POC, Including Successes and Failures

A well-structured POC report is crucial for conveying the findings and making informed decisions. The following template provides a framework for documenting the results of your POC, including successes and failures:

| Section | Description | Example |
|---|---|---|
| 1. Executive Summary | A concise overview of the POC, including its objectives, key findings, and recommendations. | “The POC successfully demonstrated the ability of Tool X to automate our CI/CD pipeline. The tool reduced build times by 20% and improved the reliability of our deployments. However, we encountered integration challenges with our existing monitoring system.” |
| 2. Objectives | A clear statement of the goals of the POC. | “The primary objective of the POC was to evaluate the feasibility of using Tool X to automate our CI/CD pipeline and improve the efficiency of our deployments.” |
| 3. Scope | Details of the specific features and use cases covered by the POC. | “The POC focused on automating the build, test, and deployment phases of our web application using Tool X. The POC included integration with our existing Git repository, build servers, and deployment servers.” |
| 4. Environment Setup | Description of the test environment, including infrastructure, networking, and application components. | “The POC environment consisted of a dedicated server with 4 vCPUs and 8GB of RAM, running Ubuntu 20.04. The environment included a Git repository, a build server, and a deployment server.” |
| 5. Implementation Details | Step-by-step instructions on how the tool was implemented and configured. | “Tool X was installed on the build server and configured to connect to the Git repository. We created a pipeline that automatically triggered builds and tests whenever code changes were pushed to the repository. The pipeline was configured to deploy the application to the deployment server after successful testing.” |
| 6. Testing Methodology | Description of the testing methods used to evaluate the tool. | “We conducted functional testing to verify that the build, test, and deployment processes were working correctly. We also performed performance testing to measure build times and deployment times. We used logging and monitoring tools to capture performance metrics.” |
| 7. Results | A summary of the key findings, including successes and failures. | “Successes: Reduced build times by 20%, Improved deployment reliability, Automated CI/CD pipeline. Failures: Integration challenges with existing monitoring system, Steep learning curve for developers.” |
| 8. Analysis | In-depth analysis of the results, including the root causes of any issues. | “The integration challenges with the existing monitoring system were due to incompatibility between Tool X and the monitoring system’s API. The steep learning curve was attributed to the complexity of Tool X’s user interface.” |
| 9. Recommendations | Recommendations for future implementation, including any necessary adjustments or improvements. | “We recommend proceeding with the full-scale deployment of Tool X, but we also recommend addressing the integration challenges with the monitoring system and providing additional training to the development team.” |
| 10. Conclusion | A concluding statement summarizing the overall findings of the POC. | “The POC demonstrated that Tool X is a viable solution for automating our CI/CD pipeline. While there are some challenges, the benefits outweigh the drawbacks.” |
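If several tools go through a POC, generating every report from the same structure keeps the reports comparable. This Python sketch assembles a plain-text report with the ten sections of the template above and refuses to produce one when a section is missing or empty. The function name and the choice to pass successes and failures as separate lists are assumptions for illustration.

```python
POC_SECTIONS = (
    "Executive Summary", "Objectives", "Scope", "Environment Setup",
    "Implementation Details", "Testing Methodology", "Results",
    "Analysis", "Recommendations", "Conclusion",
)


def render_poc_report(title: str, content: dict[str, str],
                      successes: list[str], failures: list[str]) -> str:
    """Render a POC report; 'Results' is built from the successes and failures lists."""
    missing = [s for s in POC_SECTIONS if s != "Results" and not content.get(s)]
    if missing:
        raise ValueError(f"missing sections: {', '.join(missing)}")
    lines = [title, ""]
    for number, section in enumerate(POC_SECTIONS, start=1):
        lines.append(f"{number}. {section}")
        if section == "Results":
            # List failures explicitly so they are not buried in prose.
            lines.extend(f"- Success: {item}" for item in successes)
            lines.extend(f"- Failure: {item}" for item in failures)
        else:
            lines.append(content[section])
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"
```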

Documentation and Training Resources

Choosing the right DevOps tools is only half the battle. Equally crucial is ensuring your team can effectively utilize these tools. This requires comprehensive documentation and readily available training resources. Poor documentation and inadequate training can lead to inefficiencies, errors, and ultimately, a failure to realize the full benefits of your chosen toolchain. Investing in these areas is a key factor in maximizing the return on investment (ROI) of your DevOps tool selection.

Importance of Good Documentation for DevOps Tools

High-quality documentation is fundamental for the successful adoption and ongoing use of any DevOps tool. It serves as the primary source of information for users, guiding them through setup, configuration, usage, and troubleshooting. Without it, users are left to rely on trial and error, which is time-consuming and prone to mistakes. Documentation’s importance is multifaceted:

- Onboarding New Team Members: Clear documentation accelerates the onboarding process, enabling new team members to quickly understand and contribute to the DevOps workflows. This reduces the learning curve and minimizes the time it takes for new hires to become productive.

- Troubleshooting and Problem Solving: Well-written documentation provides solutions to common problems and helps users diagnose and resolve issues independently. This reduces reliance on external support and minimizes downtime.

- Facilitating Best Practices: Documentation outlines best practices for tool usage, ensuring that the team consistently follows recommended procedures. This leads to more efficient and reliable operations.

- Knowledge Preservation: Documentation acts as a repository of knowledge, capturing institutional expertise and preventing knowledge loss when team members leave. This ensures the continuity of operations.

- Enabling Self-Service: Comprehensive documentation empowers users to find answers to their questions without needing to consult with others, fostering self-sufficiency and reducing the burden on support teams.

Types of Training Resources Available for Different Tools

A variety of training resources can help teams master their chosen DevOps tools. The availability and quality of these resources can significantly impact the speed and effectiveness of tool adoption. The optimal mix of training methods will depend on the tool, the team’s experience level, and the available budget. Consider these common types of training resources:

- Official Documentation: This is the cornerstone of any tool’s training resources. It typically includes detailed guides, tutorials, API references, and FAQs. The quality of the official documentation is a primary indicator of a tool’s usability.

- Online Courses: Online courses for many tools are available on platforms like Udemy and Coursera or on the vendors’ own websites. These courses often provide structured learning paths, hands-on exercises, and certifications. They can be a great way to gain in-depth knowledge and demonstrate proficiency.

- Video Tutorials: Video tutorials are a popular way to learn, providing visual demonstrations of how to use the tool. They are particularly helpful for understanding complex workflows and troubleshooting. YouTube and the tool’s website are common sources for video tutorials.

- Instructor-Led Training: For more complex tools or when a more interactive learning experience is desired, instructor-led training can be beneficial. This can be delivered in-person or online and often includes hands-on exercises and opportunities to ask questions.

- Workshops and Webinars: Workshops and webinars provide opportunities for interactive learning and practical application. They often focus on specific use cases or advanced features.

- Community Forums and Support Groups: Online forums and community groups provide a space for users to ask questions, share knowledge, and troubleshoot issues with other users. They can be a valuable resource for learning from the experiences of others.

- Books: Books can provide a comprehensive and in-depth understanding of a tool. While often covering the basics, they can delve into more advanced topics, providing detailed explanations of concepts and practices.

Checklist for Evaluating the Quality of Tool Documentation and Training Materials

Before selecting a DevOps tool, it is essential to assess the quality of its documentation and training materials. This checklist provides a framework for evaluating these resources, ensuring that they meet your team’s needs. A thorough evaluation will help you identify potential gaps and make informed decisions. Use this checklist to assess the available resources:

- Completeness: Does the documentation cover all aspects of the tool, including installation, configuration, usage, troubleshooting, and API references?

- Accuracy: Is the information accurate and up-to-date? Are there any known errors or omissions? Check the documentation against what other users report in forums and issue trackers.

- Clarity: Is the documentation easy to understand, with clear language and concise explanations? Is the information organized logically?

- Accessibility: Is the documentation easily accessible online and offline? Is it available in multiple formats (e.g., HTML, PDF)?

- Searchability: Is the documentation easily searchable? Does it include a comprehensive index and table of contents?

- Examples and Tutorials: Does the documentation include practical examples and step-by-step tutorials? Are these examples relevant to your team’s use cases?

- Troubleshooting Guides: Does the documentation provide clear and concise troubleshooting guides for common issues?

- Training Material Availability: Are training materials available (e.g., online courses, video tutorials, instructor-led training)?

- Training Material Quality: Are the training materials well-structured, engaging, and up-to-date? Are they suitable for your team’s skill level?

- Community Support: Does the tool have an active community forum or support group where users can ask questions and share knowledge?

- Regular Updates: Are the documentation and training materials regularly updated to reflect changes in the tool?

- User Feedback: Does the vendor solicit and incorporate user feedback to improve the documentation and training materials?
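When comparing several candidate tools, the checklist above can be turned into a simple score. In this Python sketch, each item is answered yes or no, and unanswered items count as not met. Optional weights let a team emphasize what matters most to it. The default of equal weights and the 0 to 100 scale are arbitrary choices, not part of any standard.

```python
from typing import Optional

CHECKLIST = (
    "Completeness", "Accuracy", "Clarity", "Accessibility", "Searchability",
    "Examples and Tutorials", "Troubleshooting Guides", "Training Material Availability",
    "Training Material Quality", "Community Support", "Regular Updates", "User Feedback",
)


def score_documentation(answers: dict[str, bool],
                        weights: Optional[dict[str, float]] = None) -> tuple[float, list[str]]:
    """Return a 0-100 weighted score and the checklist items that were not met."""
    weights = weights or {}
    total = sum(weights.get(item, 1.0) for item in CHECKLIST)
    earned = sum(weights.get(item, 1.0) for item in CHECKLIST if answers.get(item, False))
    gaps = [item for item in CHECKLIST if not answers.get(item, False)]
    return round(100 * earned / total, 1), gaps
```

The list of gaps is often more useful than the score itself. It shows exactly where a tool's documentation would need supplementing with internal material.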

Ongoing Monitoring and Optimization

Regularly monitoring and optimizing your DevOps toolchain is crucial for ensuring its efficiency, security, and alignment with your evolving business needs. This continuous process allows you to identify and address performance issues, adapt to changing requirements, and maximize the return on your investment in DevOps tools. Neglecting this critical aspect can lead to bottlenecks, security vulnerabilities, and ultimately, hinder your ability to deliver value to your customers.

Importance of Monitoring Toolchain Performance

Monitoring the performance of your DevOps toolchain provides valuable insights into its overall health and effectiveness. It enables proactive identification of issues before they impact your development and deployment processes. This proactive approach leads to improved efficiency, reduced downtime, and enhanced team productivity. Effective monitoring also supports informed decision-making regarding tool selection, resource allocation, and process improvements.

Methods for Identifying and Addressing Performance Bottlenecks

Identifying and addressing performance bottlenecks requires a multi-faceted approach, combining proactive monitoring with reactive analysis. The goal is to pinpoint areas where the toolchain is slowing down the development or deployment pipeline and then implement corrective actions.

- Performance Metrics Collection: Implement comprehensive monitoring of key performance indicators (KPIs) across all tools in your toolchain. These KPIs may include:

  - Build times

  - Deployment frequency

  - Error rates

  - Resource utilization (CPU, memory, disk I/O)

  - Network latency

- Centralized Logging and Alerting: Utilize a centralized logging and alerting system to collect and analyze logs from all tools. This allows for rapid identification of errors, anomalies, and performance degradations. Configure alerts to notify relevant teams when critical thresholds are breached.

- Profiling and Tracing: Employ profiling and tracing tools to analyze the execution flow of processes within the toolchain. This helps pinpoint specific components or operations that are consuming excessive resources or taking an inordinate amount of time. For example, using a tracing tool like Jaeger or Zipkin can help visualize the time spent in each service call within a microservices architecture.

- Load Testing and Simulation: Conduct load tests to simulate realistic traffic and identify performance limitations under stress. This involves simulating a large number of concurrent users or requests to assess how the toolchain performs under heavy load. The results can reveal bottlenecks that might not be apparent during normal operation.

- Root Cause Analysis: When performance issues are identified, conduct thorough root cause analysis to determine the underlying causes. This may involve examining logs, analyzing performance metrics, and collaborating with the teams responsible for the affected tools. For example, a slow build time might be traced back to inefficient code, inadequate hardware resources, or a network bottleneck.

- Optimization Strategies: Implement optimization strategies based on the identified bottlenecks. These may include:

  - Code optimization: Improving code efficiency and reducing resource consumption.

  - Resource scaling: Increasing the resources allocated to specific tools or components.

  - Caching: Implementing caching mechanisms to reduce the load on databases or other resources.

  - Process streamlining: Optimizing workflows and automating tasks to reduce manual intervention.
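The measurement side of these methods can be sketched in a few lines. This is a minimal illustration, assuming stage durations and run outcomes are already being collected; `timed_stage`, `find_bottlenecks`, `error_rate`, and the per-stage time budgets are hypothetical names and values. In practice these numbers would usually come from your CI server’s metrics or a tracing backend such as Jaeger or Zipkin rather than from in-process timers.

```python
import statistics
import time
from collections import defaultdict
from contextlib import contextmanager

# Seconds spent in each pipeline stage, one entry per run (hypothetical in-memory store).
stage_durations: dict[str, list[float]] = defaultdict(list)


@contextmanager
def timed_stage(name: str):
    """Record the wall-clock duration of a stage; a crude stand-in for real tracing."""
    start = time.perf_counter()
    try:
        yield
    finally:
        stage_durations[name].append(time.perf_counter() - start)


def find_bottlenecks(
    durations: dict[str, list[float]], budgets: dict[str, float]
) -> list[tuple[str, float, float]]:
    """Return (stage, median, budget) for stages whose median duration exceeds
    its budget, largest overshoot first."""
    over_budget = []
    for stage, samples in durations.items():
        budget = budgets.get(stage)
        if budget is None or not samples:
            continue  # no budget defined or no data yet
        median = statistics.median(samples)
        if median > budget:
            over_budget.append((stage, median, budget))
    over_budget.sort(key=lambda item: item[1] - item[2], reverse=True)
    return over_budget


def error_rate(succeeded: list[bool]) -> float:
    """Fraction of runs that failed; each entry is True if that run succeeded."""
    if not succeeded:
        return 0.0
    return succeeded.count(False) / len(succeeded)
```

For example, with budgets of 300 s for `build` and 180 s for `test`, a build stage whose recent runs took 300, 420, and 390 s would be reported ahead of a test stage with a 200 s median, because its overshoot is larger. Using the median keeps a single slow outlier from dominating; a team that cares about worst-case runs might track a high percentile instead.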

Plan for Continuously Optimizing the Toolchain

Continuously optimizing the toolchain requires a proactive and iterative approach, integrating monitoring, analysis, and improvement cycles. This involves establishing a clear process for identifying, prioritizing, and addressing performance issues.

- Establish Baseline Metrics: Define baseline performance metrics for each tool and component in your toolchain. This provides a benchmark for measuring performance improvements and identifying deviations from expected behavior.

- Implement Monitoring and Alerting: Deploy comprehensive monitoring and alerting systems to track performance metrics in real-time. Configure alerts to notify the relevant teams when performance thresholds are breached or anomalies are detected.

- Regular Performance Reviews: Conduct regular performance reviews, such as monthly or quarterly, to analyze performance data, identify trends, and assess the effectiveness of optimization efforts.

- Prioritize Optimization Efforts: Prioritize optimization efforts based on the impact on the overall development and deployment pipeline. Focus on addressing the bottlenecks that have the greatest impact on efficiency and productivity.

- Iterative Improvement Cycles: Implement iterative improvement cycles, including the following steps:

  - Identify: Identify performance issues or areas for improvement.

  - Analyze: Analyze the root causes of the issues.

  - Implement: Implement solutions or improvements.

  - Measure: Measure the impact of the changes and iterate as needed.
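The baseline, measure, and prioritize steps above can be combined into one small check. The sketch below is illustrative: the metric names, the 10% tolerance, and the `detect_regressions` helper are assumptions, and ranking by relative degradation is only one way to prioritize (weighting by impact on the pipeline is another).

```python
from dataclasses import dataclass


@dataclass
class Regression:
    metric: str
    baseline: float
    current: float
    higher_is_better: bool = False

    @property
    def degradation(self) -> float:
        """Relative change in the 'worse' direction; 0.25 means 25% worse than baseline."""
        delta = self.current - self.baseline
        if self.higher_is_better:
            delta = -delta  # e.g. fewer deployments per week counts as a regression
        return delta / self.baseline


def detect_regressions(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.10,
    higher_is_better: frozenset[str] = frozenset(),
) -> list[Regression]:
    """Compare current metrics against the baseline and return those that degraded
    by more than `tolerance`, worst first."""
    found = []
    for metric, base in baseline.items():
        if metric not in current or base == 0:
            continue  # no fresh data, or a zero baseline that cannot be compared relatively
        candidate = Regression(metric, base, current[metric], metric in higher_is_better)
        if candidate.degradation > tolerance:
            found.append(candidate)
    return sorted(found, key=lambda r: r.degradation, reverse=True)
```

Each run of this check corresponds to the Measure step, and the metrics it flags become the input to the next Identify step.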

Outcome Summary

In conclusion, selecting the right tools for your DevOps toolchain is not a one-size-fits-all endeavor but a strategic process that requires careful planning, evaluation, and ongoing adaptation. By focusing on your team’s needs, understanding the available tools, and considering crucial factors like budget, security, and scalability, you can create a toolchain that empowers your team to deliver high-quality software faster and more efficiently.

Remember, the journey doesn’t end with the initial selection; continuous monitoring and optimization are key to maintaining a high-performing DevOps environment.

Frequently Asked Questions

What are the most important factors to consider when choosing a CI/CD tool?

Key factors include ease of use, integration capabilities with existing tools, support for various programming languages and platforms, scalability, security features, and the availability of community support and documentation.
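One common way to weigh these factors against each other is a weighted scoring matrix. In the sketch below, the weights, the 1 to 5 rating scale, and the candidate names `tool-a` and `tool-b` are all hypothetical; each team should set weights that reflect its own priorities.

```python
import math

# Hypothetical weights for the factors listed above; they must sum to 1.
WEIGHTS = {
    "ease_of_use": 0.20,
    "integrations": 0.25,
    "language_support": 0.15,
    "scalability": 0.15,
    "security": 0.15,
    "community_and_docs": 0.10,
}
if not math.isclose(sum(WEIGHTS.values()), 1.0):
    raise ValueError("weights must sum to 1")


def weighted_score(ratings: dict[str, float]) -> float:
    """Combine 1-5 ratings into one weighted score (KeyError if a factor is unrated)."""
    return sum(weight * ratings[factor] for factor, weight in WEIGHTS.items())


def rank(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (tool, score) pairs, highest score first."""
    scored = [(name, weighted_score(ratings)) for name, ratings in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    candidates = {
        "tool-a": dict.fromkeys(WEIGHTS, 4),
        "tool-b": {"ease_of_use": 5, "integrations": 3, "language_support": 4,
                   "scalability": 5, "security": 4, "community_and_docs": 5},
    }
    for name, score in rank(candidates):
        print(f"{name}: {score:.2f}")
```

With these example ratings, `tool-b` scores about 4.2 and `tool-a` about 4.0. A margin that narrow is a prompt for a hands-on trial rather than a final verdict.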

How can I ensure that my DevOps toolchain remains secure?

Prioritize tools with strong security features, such as access controls, vulnerability scanning, and regular security updates. Implement secure coding practices, regularly audit your toolchain, and educate your team on security best practices.
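Part of a regular audit can be automated by comparing the versions in your toolchain inventory against the minimum versions named in vendor security advisories. This is a minimal sketch: the inventory, tool names, and version numbers are hypothetical, and `parse_version` handles only plain dotted numeric versions (no pre-release or build suffixes).

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Parse a dotted numeric version such as '2.401.3' into (2, 401, 3)."""
    return tuple(int(part) for part in text.split("."))


def audit(installed: dict[str, str], minimum: dict[str, str]) -> list[str]:
    """List tools that are missing from the inventory or older than their minimum version."""
    findings = []
    for tool, required in sorted(minimum.items()):
        current = installed.get(tool)
        if current is None:
            findings.append(f"{tool}: not found in inventory")
        elif parse_version(current) < parse_version(required):
            # Numeric comparison: 7.9 < 7.10, which plain string comparison gets wrong.
            findings.append(f"{tool}: {current} is older than required {required}")
    return findings


if __name__ == "__main__":
    installed = {"ci-server": "2.401.3", "artifact-repo": "7.9", "scanner": "0.48.0"}
    minimum = {"ci-server": "2.401.1", "artifact-repo": "7.10",
               "scanner": "0.48.0", "secrets-vault": "1.15.0"}
    for finding in audit(installed, minimum):
        print(finding)
```

Running a check like this on a schedule turns "regularly audit your toolchain" into a concrete, repeatable task, although it complements rather than replaces dedicated vulnerability scanning.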

What is the best way to assess the scalability of a DevOps tool?

Test the tool’s performance under realistic load. Consider how well it handles growing workloads, whether it offers features such as auto-scaling, and how committed the vendor is to supporting larger deployments.
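A basic concurrency sweep illustrates the idea: issue the same request at increasing concurrency and watch whether latency stays flat and throughput keeps rising. The sketch below uses Python threads as simulated users; `run_load` and `fake_endpoint` are hypothetical, and `fake_endpoint` simply sleeps, so you would replace it with a real call against the tool being evaluated. Dedicated load-testing tools are a better fit for anything beyond a quick check.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_load(call: Callable[[], None], concurrency: int, total_requests: int) -> dict[str, float]:
    """Issue `total_requests` calls from `concurrency` worker threads and summarize latency."""

    def timed_call(_: int) -> float:
        start = time.perf_counter()
        call()
        return time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    elapsed = time.perf_counter() - wall_start
    return {
        "concurrency": concurrency,
        "median_latency_s": statistics.median(latencies),
        "max_latency_s": max(latencies),
        "throughput_rps": total_requests / elapsed,
    }


def fake_endpoint() -> None:
    time.sleep(0.01)  # stand-in for a real request to the tool under test


if __name__ == "__main__":
    for workers in (1, 4, 16):
        print(run_load(fake_endpoint, workers, 64))
```

When throughput stops growing or median latency climbs sharply as concurrency increases, you have found roughly the load level at which the tool starts to saturate.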

How often should I review and update my DevOps toolchain?

Review your toolchain at least every six to twelve months, and also whenever your team’s needs or the technology landscape change significantly. Regular reviews help you identify and address performance bottlenecks, security vulnerabilities, and integration issues.