Maintaining parity between development and production environments is a critical aspect of modern cloud operations. This guide, “How to Achieve Dev/Prod Parity in Cloud Environments (Factor X)”, covers the strategies and techniques needed to keep your development and production environments as close to each other as possible. Implementing these practices helps organizations reduce deployment risk, shorten development cycles, and improve overall system reliability.

We will explore core concepts, Infrastructure as Code (IaC), data synchronization, version control, monitoring, configuration management, testing strategies, networking, security considerations, and continuous improvement methodologies. Each section provides practical insights and actionable steps, enabling you to build robust and consistent cloud environments that support your business goals.

Understanding Dev/Prod Parity

Achieving dev/prod parity in cloud environments is a critical practice for modern software development and deployment. It involves aligning development, testing, and production environments to minimize discrepancies and ensure consistent application behavior. This alignment leads to increased stability, faster release cycles, and improved overall quality of the software.

Fundamental Principles

The core of dev/prod parity revolves around replicating the production environment as closely as possible in development and testing stages. This includes using the same infrastructure, configurations, and data wherever feasible. The goal is to identify and address potential issues early in the development lifecycle, reducing the likelihood of surprises when deploying to production.

- Infrastructure as Code (IaC): Using IaC tools (e.g., Terraform, Ansible, CloudFormation) to define and manage infrastructure consistently across all environments. This ensures that the same infrastructure configurations are applied, minimizing differences. For example, a specific virtual machine image, network settings, and security group rules can be defined in code and deployed identically in development, staging, and production.

- Configuration Management: Employing configuration management tools (e.g., Chef, Puppet, SaltStack) to maintain consistent application configurations. This ensures that application settings, dependencies, and runtime environments are managed the same way in every environment. For instance, database connection strings, API keys, and logging levels can be supplied through the same mechanism everywhere, even where the values themselves differ per environment.

- Data Parity: Replicating or simulating production data in development and testing environments. This allows developers to test their applications with realistic data, identifying potential issues related to data volume, format, or complexity. Techniques include anonymizing production data, creating data subsets, or using synthetic data generation.

- Monitoring and Observability: Implementing consistent monitoring and logging practices across all environments. This allows for a unified view of application performance and behavior, enabling developers to identify and troubleshoot issues regardless of the environment. This includes using the same monitoring tools, dashboards, and alerting rules.

- Automated Testing: Automating testing processes to validate application behavior across different environments. This includes unit tests, integration tests, and end-to-end tests, which can be run automatically in each environment to ensure consistency.

Benefits of Maintaining Parity

Dev/prod parity offers numerous benefits, significantly improving the software development lifecycle and operational efficiency. These benefits translate into tangible advantages for development teams and the business as a whole.

- Reduced Deployment Risks: By testing in an environment that closely resembles production, the risk of deployment failures is significantly reduced. This minimizes the chances of unexpected issues arising in production, leading to fewer outages and rollbacks. For example, if a specific software library version is used in production, and the development environment uses a different version, there’s a high risk of unexpected behavior during deployment.

- Faster Development Cycles: Identifying and resolving issues early in the development process accelerates development cycles. Developers can iterate faster and deliver features more quickly when they can confidently test their code in a production-like environment. This leads to quicker feedback loops and more efficient use of developer time.

- Improved Application Stability: Consistent environments lead to more stable applications. When the same configurations, infrastructure, and data are used across all environments, the likelihood of unexpected behavior and bugs is significantly reduced. This translates to a more reliable and user-friendly application.

- Enhanced Debugging and Troubleshooting: Parity makes debugging and troubleshooting easier. When an issue arises in production, developers can often reproduce it in the development or staging environment, making it easier to identify the root cause and find a solution. This reduces the time spent on debugging and minimizes the impact on users.

- Increased Team Collaboration: Parity fosters better collaboration between development, operations, and testing teams. When everyone is working with similar environments and configurations, it’s easier to share knowledge, coordinate efforts, and resolve issues. This leads to a more cohesive and efficient team.

Potential Risks of Neglecting Parity

Failing to maintain dev/prod parity can lead to significant risks, impacting the quality, stability, and efficiency of software development and deployment. These risks can translate into real costs and business impacts.

- Increased Production Incidents: The most immediate risk is an increase in production incidents. When environments differ significantly, unexpected issues are more likely to surface in production, leading to outages, performance degradation, and user dissatisfaction. This can damage a company’s reputation and impact revenue.

- Slower Release Velocity: Discrepancies between environments can slow down release cycles. Developers may spend more time troubleshooting issues that only appear in production, delaying the release of new features and bug fixes. This can put a company at a competitive disadvantage.

- Higher Development Costs: Addressing production issues can be costly. The time spent by developers and operations teams on troubleshooting, debugging, and rollback operations adds to development costs. The cost of downtime and lost productivity can be substantial.

- Reduced Developer Productivity: When developers are constantly dealing with environment-specific issues, their productivity suffers. They spend less time on feature development and more time on resolving issues that could have been avoided with better parity. This leads to developer frustration and burnout.

- Security Vulnerabilities: Inconsistent configurations across environments can lead to security vulnerabilities. If security settings are not applied consistently, certain environments may be more vulnerable to attacks. For example, if a development environment uses less stringent security rules than production, it could be a target for attackers.

Infrastructure as Code (IaC) for Parity

Infrastructure as Code (IaC) is a cornerstone of achieving dev/prod parity in cloud environments. It treats infrastructure provisioning and management as code, enabling automation, version control, and reproducibility. This approach ensures that infrastructure configurations are consistent across all environments, minimizing discrepancies and streamlining the deployment process. By codifying infrastructure, organizations can eliminate manual configuration errors and enforce standardized practices, ultimately leading to more reliable and predictable deployments.

IaC’s Contribution to Consistent Infrastructure

IaC significantly contributes to infrastructure consistency by providing a declarative approach to defining and managing resources. Instead of manually configuring servers, networks, and other components, IaC allows infrastructure to be defined in code. This code can then be executed to automatically provision and configure the required resources.

- Version Control: IaC code is stored in version control systems like Git. This allows for tracking changes, reverting to previous configurations, and collaborating on infrastructure changes. Every modification is tracked, providing a complete history of the infrastructure’s evolution.

- Automation: IaC automates the entire infrastructure provisioning process. This reduces the risk of human error and ensures that infrastructure is deployed consistently across all environments.

- Reproducibility: IaC ensures that infrastructure can be consistently recreated. If an environment needs to be rebuilt, the IaC code can be executed to provision the exact same infrastructure as before.

- Standardization: IaC promotes standardization by enforcing a single source of truth for infrastructure configurations. This reduces the variability between environments and simplifies troubleshooting.

Configuration Management Tools and Environment Consistency

Configuration management tools play a crucial role in maintaining environment consistency alongside IaC. These tools automate the process of configuring and managing software and settings on servers and other infrastructure components. They ensure that the software versions, configurations, and settings are consistent across all environments.

- State Management: Configuration management tools maintain the desired state of each system. They compare the current state of a system with the desired state and automatically apply the necessary changes to bring the system into compliance.

- Idempotency: These tools are designed to be idempotent, meaning that running the same configuration multiple times has the same effect as running it once. This ensures that configurations are consistently applied regardless of the current state of the system.

- Automated Configuration Updates: Configuration management tools automate the process of updating software, applying patches, and configuring settings. This ensures that systems are always up-to-date and secure.

- Compliance Enforcement: These tools can be used to enforce compliance with security policies and other standards. They can automatically detect and remediate any non-compliant configurations.
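The state-management and idempotency properties described above can be illustrated with a small sketch. This is not how any particular configuration management tool is implemented; `ensure_line` and the `app.conf` file are hypothetical names used only to show the "compare current state with desired state, change only if needed" pattern.

```python
from pathlib import Path
import tempfile


def ensure_line(path: Path, line: str) -> bool:
    """Ensure `line` is present in the file; return True only if a change was made."""
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False  # Already in the desired state, so nothing is changed.
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True


with tempfile.TemporaryDirectory() as tmp:
    config = Path(tmp) / "app.conf"  # Hypothetical config file
    first_run = ensure_line(config, "log_level=info")   # Applies the change
    second_run = ensure_line(config, "log_level=info")  # No-op: state already matches
    final_content = config.read_text()
```

Running the function twice leaves the file exactly as it was after the first run, which is the behavior that makes repeated configuration runs safe in every environment.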

Simple IaC Template for Web Application Deployment

A simple IaC template, written with a tool such as Terraform or CloudFormation, can automate the deployment of a basic web application to both development and production environments. This example illustrates the core concepts of IaC and how it facilitates environment parity. Consider a basic example using Terraform, a popular IaC tool. The template provisions an AWS EC2 instance intended to host a simple web server (e.g., Nginx).

Terraform Configuration (main.tf):

This configuration defines the necessary resources: an AWS EC2 instance and a security group that allows inbound HTTP and SSH traffic. It does not define an SSH key pair, so a `key_name` would need to be added for key-based SSH access. The `count` meta-argument controls how many instances are created.

```terraform
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.0"
    }
  }
}

provider "aws" {
  region = "us-east-1" # Replace with your desired region
}

resource "aws_instance" "web_server" {
  ami           = "ami-0c55b55e726620841" # Replace with a valid AMI for your region (e.g., Amazon Linux 2)
  instance_type = "t2.micro"
  count         = 1 # Deploy one instance

  tags = {
    Name = "web-server"
  }

  vpc_security_group_ids = [aws_security_group.web_sg.id]
}

resource "aws_security_group" "web_sg" {
  name        = "web-sg"
  description = "Allow HTTP inbound traffic"

  # Inbound HTTP
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # Inbound SSH (open to all addresses here; narrow this range for real deployments)
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # All outbound traffic
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

Explanation:

- The `provider "aws"` block configures the AWS provider, specifying the region where resources will be created.

- The `resource "aws_instance" "web_server"` block defines the EC2 instance. It specifies the AMI (Amazon Machine Image), instance type, and tags.

- The `resource "aws_security_group" "web_sg"` block defines a security group that allows inbound HTTP (port 80) and SSH (port 22) traffic.

Deployment Process:

- Initialization: `terraform init` initializes the Terraform working directory and downloads the necessary provider plugins.

- Planning: `terraform plan` creates an execution plan, showing the changes Terraform will make to your infrastructure.

- Application: `terraform apply` applies the plan, creating the resources defined in the configuration.

Achieving Parity:

To deploy this to both dev and prod environments, the following approaches can be used:

- Separate Terraform Workspaces: Terraform workspaces allow you to manage multiple states for different environments. You can create a `dev` and `prod` workspace, each with its own state file. This ensures that the infrastructure is managed separately for each environment.

- Variables: Use Terraform variables to define environment-specific values, such as the AMI ID, instance type, or region. This allows you to reuse the same configuration file across different environments. For instance, you could define a variable `environment` and use it in the resource names (e.g., `web-server-${var.environment}`).

- Modules: Encapsulate common infrastructure components into modules. This promotes code reuse and consistency. For example, you could create a module for deploying an EC2 instance with a specific security group configuration.

By applying these principles, the same IaC code can be used to deploy the web application in both dev and prod, ensuring consistent infrastructure configurations and promoting parity. This reduces the risk of environment-specific issues and simplifies the deployment process.
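When per-environment variables are used, it can help to check that only the intended values differ. The following is a minimal sketch under stated assumptions: the `DEV` and `PROD` dictionaries stand in for values you might keep in per-environment variable files, and `ALLOWED_TO_DIFFER`, `find_drift`, and the prod instance type are hypothetical choices made for illustration.

```python
# Hypothetical per-environment settings (for example, loaded from variable files).
DEV = {
    "ami": "ami-0c55b55e726620841",
    "instance_type": "t2.micro",
    "instance_count": 1,
    "environment": "dev",
}
PROD = {
    "ami": "ami-0c55b55e726620841",
    "instance_type": "t3.large",
    "instance_count": 3,
    "environment": "prod",
}

# Keys that are expected to vary between environments.
ALLOWED_TO_DIFFER = {"environment", "instance_count"}


def find_drift(dev: dict, prod: dict, allowed: set) -> dict:
    """Return {key: (dev_value, prod_value)} for every unexpected difference."""
    drift = {}
    for key in sorted(set(dev) | set(prod)):
        if key in allowed:
            continue
        if dev.get(key) != prod.get(key):
            drift[key] = (dev.get(key), prod.get(key))
    return drift


drift = find_drift(DEV, PROD, ALLOWED_TO_DIFFER)
print(drift)
```

Here the check reports `instance_type` as drift, because it differs but is not on the allowed list. Whether such a difference is acceptable is a team decision; the point is to make it explicit rather than accidental.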

Data Synchronization Strategies

Maintaining data consistency between development and production environments is crucial for effective testing, debugging, and overall application stability. However, replicating production data directly into development can pose significant security and privacy risks. This section explores various data synchronization methods, analyzing their advantages and disadvantages, and provides a practical procedure for implementing data masking to safeguard sensitive information.

Methods for Data Synchronization

Several strategies can be employed to synchronize data between development and production environments, each with its own characteristics and suitability depending on the specific requirements and constraints. The following methods are commonly used:

- Database Cloning: This involves creating a full copy of the production database, including all data and schema, and making it available in the development environment. This approach offers the most complete data representation, closely mirroring the production environment.

- Data Masking: Data masking replaces sensitive data with realistic, but anonymized, values. This allows developers to work with data that resembles production data without exposing confidential information.

- Data Subsetting: Data subsetting creates a smaller, representative subset of the production data. This method is useful when the full dataset is too large to manage in the development environment or when only a specific portion of the data is needed for testing.

- Synthetic Data Generation: Synthetic data generation creates data from scratch based on predefined rules and patterns. This is useful when no existing data is available or when the need for realistic, yet anonymized, data is paramount.

- Differential Synchronization: Instead of copying the entire database, only the changes (deltas) are synchronized between the environments. This can be more efficient in terms of time and resources, especially when dealing with large datasets and frequent updates.
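To make the differential approach concrete, here is a minimal in-memory sketch. It assumes rows are keyed by a primary key and small enough to compare directly; real tools typically rely on change logs or timestamps instead. The names `compute_delta` and `apply_delta` and the sample rows are illustrative only.

```python
def compute_delta(source: dict, target: dict):
    """Return the rows to upsert and the keys to delete so `target` matches `source`."""
    upserts = {key: row for key, row in source.items() if target.get(key) != row}
    deletes = [key for key in target if key not in source]
    return upserts, deletes


def apply_delta(target: dict, upserts: dict, deletes: list) -> dict:
    """Apply a delta to a copy of `target` and return the result."""
    updated = dict(target)
    updated.update(upserts)
    for key in deletes:
        updated.pop(key, None)
    return updated


# Illustrative snapshots keyed by primary key.
prod_rows = {
    1: {"sku": "A-100", "price": 10},
    2: {"sku": "B-200", "price": 25},
    3: {"sku": "C-300", "price": 7},
}
dev_rows = {
    1: {"sku": "A-100", "price": 10},
    2: {"sku": "B-200", "price": 20},
    4: {"sku": "D-400", "price": 3},
}

upserts, deletes = compute_delta(prod_rows, dev_rows)
synced = apply_delta(dev_rows, upserts, deletes)
```

Only rows 2 and 3 are transferred and row 4 is removed; row 1 is untouched because it already matches.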

Comparison of Data Synchronization Methods

Each data synchronization method possesses unique strengths and weaknesses. The choice of method depends on factors such as data volume, security requirements, performance considerations, and the specific needs of the development team. A comparative analysis is presented below:

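| Method | Pros | Cons |
|---|---|---|
| Database Cloning | Most complete representation of production data and schema | Copies sensitive production data into development, creating security and privacy risks; a full copy can be large |
| Data Masking | Realistic data without exposing confidential information | Masking rules must be designed, validated, and kept up to date |
| Data Subsetting | Smaller dataset that is manageable in development | Covers only part of the data, so volume-related issues may go unnoticed |
| Synthetic Data Generation | Needs no existing data and contains no real records | Only as realistic as the rules and patterns used to generate it |
| Differential Synchronization | Transfers only changes, saving time and resources on large, frequently updated datasets | Synchronized changes still contain production data and may need masking |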

Implementing a Data Masking Strategy

Implementing a robust data masking strategy is crucial for protecting sensitive information in the development environment. The following procedure outlines the key steps involved:

- Identify Sensitive Data: The initial step involves identifying all sensitive data elements within the database. This includes personally identifiable information (PII), financial data, and any other data that requires protection. This process often involves reviewing data dictionaries, data models, and application code to understand where sensitive data resides.

- Choose Masking Techniques: Select appropriate masking techniques for each sensitive data element. Common techniques include:

  - Data Replacement: Substituting sensitive values with realistic, but anonymized, alternatives (e.g., replacing names with fictitious names).

  - Data Shuffling: Randomly shuffling data within a column (e.g., shuffling email addresses).

  - Data Nullification: Replacing sensitive values with NULL values.

  - Data Generalization: Reducing the granularity of data (e.g., replacing specific dates with date ranges).

- Develop Masking Rules: Create specific masking rules for each identified sensitive data element. These rules define how the data will be masked. The rules should be carefully designed to ensure data utility while maintaining data privacy.

- Implement Masking Process: Implement the masking process using specialized data masking tools or custom scripts. The implementation should be automated to ensure consistency and repeatability.

- Test and Validate: Thoroughly test the masked data to ensure that the masking process functions correctly and that the masked data meets the requirements of the development team. Validation should include checking for data integrity, referential integrity, and data usability.

- Secure the Masked Data: Secure the development environment where the masked data resides to prevent unauthorized access. Implement access controls, encryption, and monitoring to protect the masked data.

- Regularly Review and Update: Regularly review and update the data masking strategy to adapt to changes in data regulations, data structures, and application requirements. This ensures that the data masking process remains effective over time.

For example, consider a company using a data masking tool to protect customer data in its development environment. The tool identifies the ‘customer_name’ and ‘email_address’ columns as sensitive. The masking rules specify that ‘customer_name’ should be replaced with a name from a pre-defined list of fictitious names, and ‘email_address’ should be shuffled within the ‘customers’ table. The data masking process is automated and run regularly to refresh the development environment with masked data. The development team can then use this masked data for testing and development without exposing real customer information.
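The two masking rules in this example (replacement and shuffling) can be sketched in a few lines. This is an illustrative script, not the behavior of any specific masking tool; the `FICTITIOUS_NAMES` list, the sample rows, and the `mask_customers` helper are all assumptions.

```python
import random

# Hypothetical pre-defined list of fictitious names.
FICTITIOUS_NAMES = ["Alex Example", "Sam Sample", "Jo Placeholder", "Pat Testcase"]


def mask_customers(rows: list, seed: int = 0) -> list:
    """Return masked copies of `rows`: names replaced, email addresses shuffled."""
    rng = random.Random(seed)  # Seeded so repeated refreshes are reproducible
    emails = [row["email_address"] for row in rows]
    rng.shuffle(emails)  # Shuffle within the column
    masked = []
    for row, email in zip(rows, emails):
        masked_row = dict(row)  # Copy so the input rows are not modified
        masked_row["customer_name"] = rng.choice(FICTITIOUS_NAMES)
        masked_row["email_address"] = email
        masked.append(masked_row)
    return masked


customers = [
    {"id": 1, "customer_name": "Real Person One", "email_address": "one@example.com", "plan": "basic"},
    {"id": 2, "customer_name": "Real Person Two", "email_address": "two@example.com", "plan": "pro"},
    {"id": 3, "customer_name": "Real Person Three", "email_address": "three@example.com", "plan": "basic"},
]
masked = mask_customers(customers)
```

Note that shuffling only breaks the link between an address and its row; the addresses themselves are still real values, so replacement or generation may be the safer choice for fields that are sensitive on their own.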

Version Control and Deployment Pipelines

Maintaining consistent code and infrastructure across development and production environments is significantly streamlined through robust version control and well-defined deployment pipelines. These practices ensure that changes are tracked, tested, and deployed in a controlled and repeatable manner, reducing the risk of errors and ensuring a smooth transition from development to production. This section focuses on the crucial role of version control and the design of automated CI/CD pipelines to achieve and maintain dev/prod parity.

Version Control Systems for Code Consistency

Version control systems are fundamental for managing code changes and ensuring consistency across different environments. Using a system like Git provides a historical record of all modifications, allowing for easy tracking of changes, collaboration, and rollback to previous versions if necessary.

- Code Tracking and Collaboration: Git enables developers to track every change made to the codebase, including who made the change, when it was made, and why. This historical record is essential for debugging and understanding the evolution of the application. Multiple developers can work on the same project simultaneously without conflicts, thanks to branching and merging capabilities.

- Branching and Merging Strategies: Git’s branching model allows developers to work on features in isolated branches, preventing unfinished code from impacting the main codebase. When a feature is complete, it can be merged back into the main branch. Popular branching strategies, such as Gitflow or GitHub Flow, provide guidelines for managing branches and releases.

- Rollback Capabilities: If a deployment introduces errors, Git allows for easy rollback to a previous, known-good version of the code. This minimizes downtime and the impact of bugs on production users.

- Code Reviews: Version control systems facilitate code reviews, where other developers can examine changes before they are merged into the main codebase. This helps catch errors, improve code quality, and ensure adherence to coding standards.

- Configuration Management Integration: Version control is crucial for managing infrastructure-as-code (IaC) configurations. Infrastructure configurations (e.g., cloud resources, server setups) can be stored in version control alongside application code, enabling the same benefits of tracking, collaboration, and rollback.

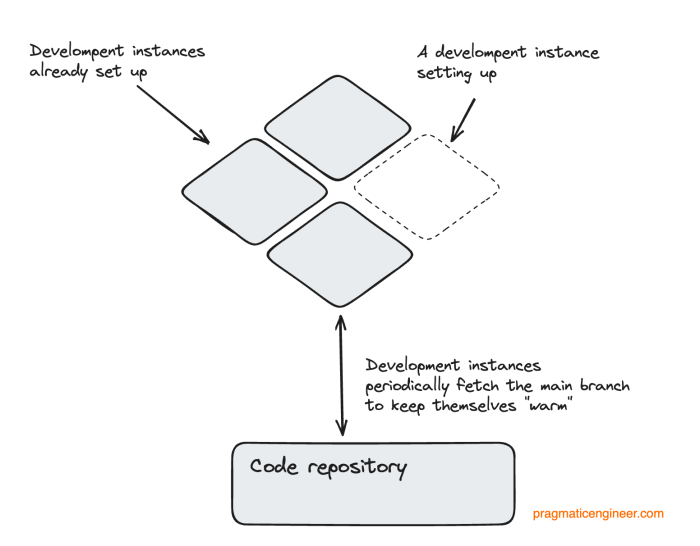

Designing a CI/CD Pipeline for Automated Deployment

A Continuous Integration and Continuous Deployment (CI/CD) pipeline automates the process of building, testing, and deploying code changes. This automation is critical for achieving rapid and reliable deployments to both development and production environments, thus fostering dev/prod parity.

The CI/CD pipeline generally consists of several stages, including:

- Code Commit: Developers commit code changes to the version control system (e.g., Git). This triggers the pipeline.

- Build Stage: The code is compiled, and dependencies are installed. Artifacts (e.g., container images, deployable packages) are created.

- Testing Stage: Automated tests (unit tests, integration tests, end-to-end tests) are executed to verify the code’s functionality.

- Deployment Stage: The tested artifacts are deployed to the target environment (e.g., development or production).

- Monitoring and Feedback: After deployment, the application is monitored for performance and errors. Feedback is provided to the developers to facilitate continuous improvement.

Here’s a simplified example using a common CI/CD tool like Jenkins or GitLab CI:

- Code Repository: The application code and infrastructure-as-code configuration files are stored in a Git repository.

- Trigger: A commit to the `main` or `develop` branch triggers the CI/CD pipeline.

- Build Job: A build job pulls the code, compiles it, and creates a container image using a Dockerfile. The image is tagged with a version number.

- Test Job: A test job launches the container and runs automated tests (unit tests, integration tests). Test results are recorded.

- Deployment Job (Development): If the tests pass, a deployment job deploys the container image to a development environment. This might involve updating Kubernetes deployments, or deploying to a cloud platform’s application service.

- Deployment Job (Production): After successful testing in the development environment and possibly manual approval, another deployment job deploys the same container image to the production environment.
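The key parity property in this flow is that the artifact is built once and the same image is promoted from development to production. The sketch below models only that control flow; it is not Jenkins or GitLab CI syntax, and the registry name, the `build` and `run_pipeline` functions, and the commit hash are hypothetical.

```python
def build(commit_sha: str) -> str:
    """Build once and return an image tag derived from the commit."""
    return f"registry.example.com/shop:{commit_sha[:7]}"  # Hypothetical registry


def run_pipeline(commit_sha: str, tests_pass, approved_for_prod: bool) -> dict:
    """Return the image deployed to each environment for this commit."""
    deployed = {}
    image = build(commit_sha)
    if not tests_pass(image):
        return deployed  # Halt: nothing is deployed when tests fail
    deployed["dev"] = image
    if approved_for_prod:
        deployed["prod"] = image  # Same artifact as dev, not a rebuild
    return deployed


result = run_pipeline("3f9c2ab7d1e4", tests_pass=lambda image: True, approved_for_prod=True)
failed = run_pipeline("3f9c2ab7d1e4", tests_pass=lambda image: False, approved_for_prod=True)
```

Because production receives the exact tag that was tested in development, any difference in behavior can be attributed to the environment rather than to the build.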

Integrating Automated Testing within a Deployment Pipeline

Automated testing is an integral part of a CI/CD pipeline, ensuring that code changes are thoroughly tested before deployment. This includes different types of tests, such as unit tests, integration tests, and end-to-end tests, to cover various aspects of the application.

Here’s how automated testing is integrated into the CI/CD pipeline:

- Unit Tests: These tests verify individual components or functions of the application in isolation. They are fast to run and provide quick feedback on code changes. For example, if a function calculates the total price of items in a shopping cart, a unit test would check if the function returns the correct value for different inputs.

- Integration Tests: These tests verify the interactions between different components or modules of the application. They check if different parts of the application work together as expected. For example, an integration test would check if the shopping cart can successfully communicate with the payment gateway to process a transaction.

- End-to-End (E2E) Tests: These tests simulate user interactions with the application from start to finish, verifying the entire workflow. For example, an E2E test would simulate a user logging in, adding items to a cart, and completing the checkout process.

- Test Automation Frameworks: Tools like JUnit (for Java), pytest (for Python), and Cypress (for web applications) are used to write and run automated tests.

- Test Reporting: Test results are collected and reported, providing feedback on the success or failure of the tests. This information is used to determine whether the deployment can proceed.

- Test-Driven Development (TDD): TDD is a software development approach where tests are written before the code. This ensures that the code is designed with testability in mind and helps to catch errors early in the development process.

Example:

Consider a simple web application that displays a list of products. The CI/CD pipeline would include:

- Unit Tests: Testing individual functions that fetch product data from a database.

- Integration Tests: Testing the interaction between the product listing component and the database.

- E2E Tests: Testing the entire user flow: accessing the product listing page, viewing product details, and adding products to a cart.

If any test fails, the deployment is halted, and the developers are notified to fix the issues. This prevents broken code from reaching the production environment.
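As a concrete instance of the unit-test level, here is a sketch of the shopping-cart total check mentioned earlier, written as plain test functions that a runner such as pytest could collect. The `cart_total` function and its `(price, quantity)` input shape are assumptions made for the example.

```python
from decimal import Decimal


def cart_total(items) -> Decimal:
    """Sum price * quantity over (price, quantity) pairs, using exact decimals."""
    return sum((Decimal(price) * quantity for price, quantity in items), Decimal("0"))


def test_empty_cart_totals_zero():
    assert cart_total([]) == Decimal("0")


def test_total_multiplies_price_by_quantity():
    # 19.99 * 2 + 5.00 * 1 = 44.98
    assert cart_total([("19.99", 2), ("5.00", 1)]) == Decimal("44.98")
```

Prices are passed as strings and converted to `Decimal` to avoid floating-point rounding, so the same test gives the same result in every environment.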

Monitoring and Logging for Parity

Maintaining consistent monitoring and logging practices across development and production environments is critical for achieving and sustaining dev/prod parity. This consistency ensures that issues are identified and addressed quickly, regardless of the environment in which they arise. Without it, debugging and troubleshooting become significantly more challenging, leading to delays in deployments and potential impacts on end-users. Effective monitoring and logging provide crucial insights into system behavior, performance, and security, enabling proactive management and informed decision-making.

Importance of Consistent Monitoring and Logging

Consistent monitoring and logging are fundamental to dev/prod parity, providing a unified view of system health and performance. This approach allows teams to identify and resolve issues rapidly, regardless of the environment. Discrepancies in monitoring or logging configurations can lead to inaccurate data, making it difficult to diagnose problems and understand their root causes. When monitoring and logging are aligned, teams can confidently:

- Rapidly Identify and Diagnose Issues: By using the same metrics and logs, teams can quickly pinpoint the source of problems, whether they occur in development or production. This is particularly important for issues that are difficult to reproduce locally.

- Improve Mean Time To Resolution (MTTR): Consistent data allows for faster troubleshooting, reducing the time it takes to resolve incidents and minimize downtime.

- Enhance Proactive Problem Solving: Monitoring trends and anomalies allows teams to predict and address potential issues before they impact users. This proactive approach helps to prevent incidents.

- Facilitate Effective Performance Tuning: Uniform monitoring provides a consistent baseline for assessing application performance and identifying areas for optimization in both environments.

- Ensure Accurate Performance Comparisons: Consistent metrics enable accurate comparisons of performance between development and production, helping to identify performance regressions or improvements.

Monitoring Tools and Techniques for Environment Health

Several tools and techniques are available to ensure consistent monitoring of environment health across development and production. These tools collect and analyze data related to application performance, infrastructure, and user experience. The choice of tools often depends on the specific cloud provider and the application’s architecture. The goal is to have a unified view of the environment, enabling rapid issue identification and resolution.

- Application Performance Monitoring (APM) and Metrics Tools: These tools track application performance metrics such as response times, error rates, and transaction throughput. Popular examples include:

  - Prometheus: An open-source monitoring system that collects metrics from various sources. It is well-suited for containerized environments and offers powerful querying capabilities.

  - Grafana: A data visualization and monitoring tool that integrates with various data sources, including Prometheus, to create dashboards and alerts. It allows for the visualization of metrics from both development and production.

  - New Relic: A comprehensive APM platform that provides real-time insights into application performance, user experience, and infrastructure health. It supports monitoring across multiple environments.

  - Datadog: A monitoring and analytics platform that provides real-time visibility into infrastructure, applications, and logs. It is designed to support modern, cloud-native architectures.

- Infrastructure Monitoring Tools: These tools monitor the underlying infrastructure, including servers, networks, and storage. Examples include:

  - CloudWatch (AWS): A monitoring service provided by AWS that collects and tracks metrics, logs, and events. It can be used to monitor resources in both development and production.

  - Azure Monitor (Azure): A monitoring service provided by Azure that provides comprehensive monitoring capabilities across Azure resources.

  - Google Cloud Monitoring (GCP): A monitoring service provided by Google Cloud that offers real-time performance monitoring, alerting, and dashboarding.

- Synthetic Monitoring: This technique simulates user interactions to proactively identify issues with application availability and performance. Tools like Pingdom and UptimeRobot can be used to monitor the availability of web applications from various locations.

- Real User Monitoring (RUM): RUM tools track the performance and user experience of real users interacting with the application. This provides valuable insights into how users are experiencing the application in both environments.

- Health Checks: Implementing health checks allows applications to report their status. Load balancers and other infrastructure components can use these checks to route traffic to healthy instances only.
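A health check of the kind described in the last item can be sketched as a function that runs the same dependency checks in every environment and maps the result to an HTTP status. The check names and the `database_reachable` and `cache_reachable` stubs are hypothetical; a real service would expose the result through its web framework.

```python
def check_health(checks: dict):
    """Run each named check and return (http_status, body)."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = "ok" if check() else "failing"
        except Exception as exc:  # A crashing check counts as unhealthy
            results[name] = f"error: {exc}"
    healthy = all(status == "ok" for status in results.values())
    body = {"status": "healthy" if healthy else "unhealthy", "checks": results}
    return (200 if healthy else 503), body


def database_reachable() -> bool:
    return True  # Stub: a real check would run a trivial query


def cache_reachable() -> bool:
    raise ConnectionError("cache timeout")  # Stub simulating a failed dependency


status, body = check_health({"database": database_reachable, "cache": cache_reachable})
```

Returning 503 when any dependency fails gives a load balancer a simple signal for taking the instance out of rotation.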

Implementing Centralized Logging and Alerting

Centralized logging and alerting are essential for effective monitoring. Centralized logging aggregates logs from various sources into a single location, making it easier to search and analyze them. Alerting systems notify teams of critical issues, enabling them to respond quickly.
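Aggregated logs are easiest to search when every environment emits them in one structured format. The following sketch uses Python's standard `logging` module to write each entry as a JSON object; the field names and the `JsonFormatter` and `build_logger` helpers are assumptions, and a real setup would usually add a timestamp and request identifiers.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object with fixed fields."""

    def __init__(self, environment: str):
        super().__init__()
        self.environment = environment

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "environment": self.environment,
            "message": record.getMessage(),
        })


def build_logger(name: str, environment: str, stream) -> logging.Logger:
    """Create a logger whose only handler writes JSON lines to `stream`."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(environment))
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


stream = io.StringIO()  # Stands in for stdout or a log shipper
logger = build_logger("checkout", "dev", stream)
logger.info("order %s created", 42)
entry = json.loads(stream.getvalue())
```

Only the `environment` value changes between development and production, so the same queries and dashboards work against logs from either.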

- Centralized Logging Systems: These systems collect, store, and analyze logs from all environments. Examples include:

  - Elasticsearch, Logstash, and Kibana (ELK Stack): A popular open-source stack for collecting, processing, and visualizing logs. Elasticsearch stores the logs, Logstash processes them, and Kibana provides a user interface for searching and analyzing.

  - Splunk: A commercial platform for collecting, analyzing, and visualizing machine-generated data. It offers advanced search and analysis capabilities.

  - Cloud Logging Services: Cloud providers offer logging services that integrate with their monitoring tools. Examples include: AWS CloudWatch Logs, Azure Monitor Logs, and Google Cloud Logging.

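- Log Standardization: Implementing consistent log formats across all applications and services is crucial. This makes it easier to search, analyze, and correlate logs. A common approach is structured logging, where each log entry is emitted as a machine-readable record (for example, a JSON object) with the same field names in every environment.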

- Alerting Systems: Alerting systems notify teams of critical issues based on predefined rules and thresholds. They integrate with monitoring tools and logging systems to trigger alerts. Examples include:

  - Alertmanager (Prometheus): An alerting component for Prometheus that handles alerts sent by the Prometheus server.

  - PagerDuty: A popular incident management platform that integrates with various monitoring and alerting tools.

  - Opsgenie: Another incident management platform that provides alerting, on-call scheduling, and incident response capabilities.

- Alerting Best Practices:

  - Define Clear Alerting Thresholds: Establish specific thresholds for metrics and logs to trigger alerts.

  - Prioritize Alerts: Categorize alerts based on severity and impact to ensure that critical issues are addressed first.

  - Integrate with Incident Management Systems: Integrate alerting systems with incident management platforms to streamline the incident response process.

  - Automate Alerting Rules: Use Infrastructure as Code (IaC) to define and deploy alerting rules consistently across all environments.
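The log standardization point above can be sketched with Python's standard `logging` module. This is a minimal example, not a prescribed format: the class name `JsonFormatter`, the helper `build_logger`, and the chosen field names are assumptions made for illustration.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render every log record as one JSON object with fixed field names."""

    def __init__(self, environment: str):
        super().__init__()
        self.environment = environment  # e.g. "dev" or "prod"

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "environment": self.environment,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def build_logger(name: str, environment: str) -> logging.Logger:
    """Return a logger that writes JSON lines to the console (stderr)."""
    logger = logging.getLogger(name)
    logger.handlers.clear()  # avoid duplicate handlers on repeated calls
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter(environment))
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

Because only the `environment` field differs between deployments, the same queries and alert rules can be applied to logs from either environment.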

Configuration Management and Environment Variables

Maintaining consistent configurations across development and production environments is crucial for achieving true parity. This involves not only replicating infrastructure but also ensuring that application settings, environment variables, and other configuration aspects are managed the same way in every environment, with values differing only where they must (for example, connection strings and credentials). This section explores the role of configuration management, various approaches to managing environment variables, and a comparison of different secrets management solutions.

Configuration Management Tools in Maintaining Environment Parity

Configuration management tools automate the process of configuring and managing systems. They play a vital role in ensuring that environments are consistent, repeatable, and easily maintained. They achieve this by defining the desired state of a system and automatically configuring it to match that state.

- Automation and Consistency: Configuration management tools automate the application of configurations, reducing manual errors and ensuring consistency across all environments. This automation is critical for parity.

- Version Control: Configurations are often stored in version control systems, allowing for tracking changes, rollbacks, and collaboration. This helps in maintaining a history of changes and easily reverting to previous configurations if needed.

- Idempotency: These tools are designed to be idempotent, meaning that applying the same configuration multiple times will result in the same outcome. This is essential for ensuring that changes are applied consistently without causing unexpected side effects.

- Centralized Management: Configuration management tools provide a centralized point of control for managing configurations, making it easier to apply changes and monitor the state of environments.
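To make the idempotency property above concrete, here is a small Python sketch of a "desired state" routine. It is a toy model using plain dictionaries, not how any particular configuration management tool is implemented; the function name `apply_desired_state` and the sample keys are hypothetical.

```python
from typing import Dict, List


def apply_desired_state(current: Dict[str, str], desired: Dict[str, str]) -> List[str]:
    """Mutate `current` until it matches `desired`; return the changes made."""
    changes = []

    # Add or update every setting that differs from the desired value.
    for key, value in desired.items():
        if current.get(key) != value:
            changes.append(f"set {key}={value}")
            current[key] = value

    # Remove settings that are not part of the desired state.
    for key in sorted(set(current) - set(desired)):
        changes.append(f"remove {key}")
        del current[key]

    return changes


state = {"nginx": "1.24", "debug": "true"}
desired = {"nginx": "1.25", "tls": "1.3"}
first_run = apply_desired_state(state, desired)   # makes three changes
second_run = apply_desired_state(state, desired)  # makes none: already converged
```

The second run reporting no changes is the behavior that lets the same configuration be applied repeatedly to every environment without side effects.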

Approaches to Managing Environment Variables

Environment variables are used to store configuration settings that vary between environments, such as database connection strings, API keys, and other sensitive information. Managing these variables securely and consistently across environments is critical.

- Hardcoding (Avoid): Hardcoding configuration values directly into the application code is highly discouraged. This makes it difficult to change configurations without a new build and exposes sensitive information to anyone with access to the source code.

- Environment-Specific Configuration Files: Creating separate configuration files for each environment (e.g., `config.dev.js`, `config.prod.js`) is a better approach than hardcoding, but it can still lead to inconsistencies and security vulnerabilities if not managed carefully.

- Environment Variables in the Operating System: Setting environment variables at the operating system level is a common practice. However, this method can be difficult to manage across multiple servers and can expose variables to unauthorized users if not protected.

- Secrets Management Solutions: Using dedicated secrets management solutions provides the most secure and manageable way to handle environment variables. These solutions offer features like encryption, access control, versioning, and auditing.
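As a hedged sketch of reading settings from the environment rather than hardcoding them, the following Python example loads a small settings object and fails fast when a required variable is missing. The variable names (`DATABASE_URL`, `API_KEY`, `LOG_LEVEL`) and the `Settings` class are assumptions for illustration; in practice the values might be injected by a secrets management solution.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    api_key: str
    log_level: str = "INFO"


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build Settings from environment variables, failing fast if any are missing."""
    required = ("DATABASE_URL", "API_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError(
            "missing required environment variables: " + ", ".join(missing)
        )
    return Settings(
        database_url=env["DATABASE_URL"],
        api_key=env["API_KEY"],
        log_level=env.get("LOG_LEVEL", "INFO"),
    )
```

The same code path runs in every environment; only the injected values change, which keeps the application logic identical between development and production.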

Secrets Management Solutions Comparison

Choosing the right secrets management solution depends on the specific requirements of the project and the cloud provider being used. The following table compares some popular solutions, highlighting their key features, pros, and cons.

| Feature | HashiCorp Vault | AWS Secrets Manager | Azure Key Vault | Google Cloud Secret Manager |
|---|---|---|---|---|
| Key Features | Centralized secrets storage, dynamic secrets generation, access control, audit logging, secret rotation. Supports multiple backends for storage. | Secure storage of secrets, secret rotation, integration with AWS services, access control via IAM. | Secure storage of secrets, key management, certificate management, access control via Microsoft Entra ID (formerly Azure Active Directory). | Secure storage of secrets, access control via IAM, secret versioning, rotation schedules that notify your own rotation logic, integration with Google Cloud services. |
| Pros | Highly flexible and customizable, supports a wide range of backends and integrations, large community. | Seamless integration with AWS services, easy to use, cost-effective for AWS users. | Strong integration with Azure services, robust security features, cost-effective for Azure users. | Tight integration with Google Cloud services, easy to use, good pricing model. |
| Cons | Requires more operational overhead, can be complex to set up and configure, requires dedicated infrastructure when self-hosted. | Vendor lock-in to AWS, less flexible than HashiCorp Vault. | Vendor lock-in to Azure, limited support for other platforms. | Vendor lock-in to Google Cloud, limited support for other platforms. |
| Use Cases | Multi-cloud environments, complex security requirements, organizations with diverse infrastructure. | AWS-centric environments, organizations seeking ease of use and integration with AWS services. | Azure-centric environments, organizations seeking integration with Azure services. | Google Cloud-centric environments, organizations seeking integration with Google Cloud services. |
| Pricing | Free community edition (source-available under the Business Source License since 2023) with a paid enterprise version. Pricing varies based on the deployment model. | Pay-as-you-go based on the number of secrets stored and API calls made. | Pay-as-you-go based mainly on the number of operations performed. | Pay-as-you-go based on the number of active secret versions and access operations. |

Testing Strategies for Parity Validation

Ensuring dev/prod parity hinges on robust testing strategies. Rigorous testing validates that applications behave consistently across environments, identifying discrepancies before they impact production. This proactive approach minimizes risk and fosters confidence in deployments.

Test Plan Components

A comprehensive test plan for parity validation should encompass both functional and non-functional aspects. It defines the scope, methodologies, and expected outcomes of testing efforts.

- Test Scope: Defines the specific areas of the application and infrastructure to be tested. This includes individual components, APIs, and the overall user experience.

- Test Objectives: Outlines the goals of the testing process. These objectives should directly relate to validating parity, such as ensuring consistent functionality, performance, and security.

- Testing Methodologies: Specifies the types of tests to be performed, including integration, end-to-end, performance, and security tests.

- Test Environment: Describes the environments in which the tests will be executed. This includes details about the hardware, software, and network configurations of the dev and prod-like environments.

- Test Data: Defines the data used for testing, including the source, format, and volume. The test data should be representative of the data in the production environment.

- Test Cases: Provides detailed instructions for executing each test, including the steps to be taken, the expected results, and the criteria for passing or failing the test.

- Test Results: Specifies how the test results will be recorded, analyzed, and reported. This includes the use of test management tools and the creation of test reports.

- Test Automation: Describes the use of automated testing tools and frameworks to streamline the testing process.

Functional Testing for Parity

Functional testing validates that the application’s features and functionalities behave as expected in both development and production-like environments.

- Unit Testing: This involves testing individual components or modules of the application in isolation. Unit tests verify that each component functions correctly and produces the expected output for a given input. Unit tests are often automated and run as part of the build process. For example, a unit test might verify that a function correctly calculates the total price of items in a shopping cart.

- Integration Testing: This verifies the interactions between different components or modules of the application. Integration tests ensure that components work together seamlessly. For example, an integration test might verify that the user authentication module integrates correctly with the database.

- System Testing: This tests the entire application as a whole. System tests verify that the application meets the specified requirements and performs as expected in a production-like environment. This involves testing the application’s functionality, performance, and security.

- User Acceptance Testing (UAT): This involves testing the application by end-users to ensure that it meets their needs and expectations. UAT is typically performed in a production-like environment using real-world data.

- API Testing: This tests the application’s APIs to ensure that they function correctly and return the expected responses. API tests can validate API endpoints, request and response formats, and error handling.
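The shopping-cart unit test mentioned above might look like the following Python sketch using the standard `unittest` module. The function `cart_total` and its input shape (a list of price and quantity pairs) are hypothetical and exist only for this example.

```python
import unittest
from decimal import Decimal
from typing import Iterable, Tuple


def cart_total(items: Iterable[Tuple[str, int]]) -> Decimal:
    """Sum price * quantity for each (price, quantity) pair, using exact decimals."""
    return sum((Decimal(price) * qty for price, qty in items), Decimal("0"))


class CartTotalTest(unittest.TestCase):
    def test_multiple_items(self):
        # 9.99 * 2 + 0.02 * 1 = 20.00
        self.assertEqual(cart_total([("9.99", 2), ("0.02", 1)]), Decimal("20.00"))

    def test_empty_cart(self):
        self.assertEqual(cart_total([]), Decimal("0"))
```

Because the test has no environment dependencies, it should produce the same result in every environment; a difference would point to a mismatch in runtime or library versions.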

Non-Functional Testing for Parity

Non-functional testing assesses aspects like performance, security, and reliability, ensuring consistent behavior across environments.

- Performance Testing: This assesses the application’s performance under various load conditions. Performance tests measure response times, throughput, and resource utilization. For example, a performance test might simulate a large number of concurrent users accessing the application to assess its ability to handle peak traffic.

- Load Testing: This evaluates the application’s ability to handle increasing levels of user traffic. Load tests help identify performance bottlenecks and ensure that the application can scale to meet demand.

- Stress Testing: This pushes the application beyond its normal operating limits to determine its breaking point. Stress tests help identify potential weaknesses and vulnerabilities in the application’s architecture.

- Security Testing: This assesses the application’s security vulnerabilities. Security tests identify potential threats and weaknesses in the application’s code, infrastructure, and configuration. This can include penetration testing, vulnerability scanning, and security audits.

- Configuration Testing: This verifies that the application’s configuration settings are consistent across environments. Configuration tests ensure that the application behaves consistently regardless of the environment.

- Disaster Recovery Testing: This tests the application’s ability to recover from a disaster. Disaster recovery tests simulate various failure scenarios and verify that the application can be restored to a functional state.
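One way to turn performance results into a parity check is to compare a latency percentile between environments. The Python sketch below uses the nearest-rank percentile method; the 95th percentile and the 20% tolerance are arbitrary assumptions, and the function names are hypothetical.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of data at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def within_tolerance(
    dev_ms: Sequence[float],
    prod_ms: Sequence[float],
    pct: float = 95,
    tolerance: float = 0.20,
) -> bool:
    """True if the dev percentile is within `tolerance` (a fraction) of the prod percentile."""
    dev_p = percentile(dev_ms, pct)
    prod_p = percentile(prod_ms, pct)
    return abs(dev_p - prod_p) <= tolerance * prod_p
```

A failed comparison does not say which environment is wrong, only that they behave differently enough under the same load to warrant investigation.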

Automated Testing for Parity

Automated testing is crucial for maintaining dev/prod parity. It enables frequent, repeatable, and reliable testing, reducing manual effort and improving the speed of deployments.

- Benefits of Automation: Automated tests provide faster feedback, allowing developers to identify and fix issues early in the development cycle. Automation also reduces the risk of human error and ensures consistency in testing.

- Test Automation Tools: Several tools and frameworks are available for automating tests, including Selenium, JUnit, TestNG, and Cypress. These tools allow developers to write and execute tests for various aspects of the application.

- Continuous Integration and Continuous Delivery (CI/CD): Automated tests are integrated into the CI/CD pipeline to ensure that tests are run automatically whenever code changes are made. This helps to catch issues early and prevent them from reaching production.

- Test Data Management: Effective test data management is essential for automated testing. Test data should be representative of the production data and should be managed in a secure and efficient manner.

Networking and Security Considerations

Maintaining dev/prod parity extends beyond application code and infrastructure; it’s crucial to ensure consistency in networking and security configurations. Inconsistent network setups can lead to unexpected behavior, security vulnerabilities, and operational headaches. By replicating networking and security configurations across environments, teams can minimize risks and streamline deployments.

Consistent Networking Configurations

Establishing consistent networking configurations is fundamental for achieving dev/prod parity. This involves mirroring the structure and settings of virtual private clouds (VPCs), subnets, routing tables, and network security groups. This consistency ensures that applications behave predictably across environments.

- VPCs: Define VPCs with the same CIDR blocks, ensuring consistent IP address ranges. Inconsistent VPC configurations can cause connectivity issues and make it difficult to replicate network-related problems. For instance, if the development VPC uses a smaller CIDR block than the production VPC, applications in development might experience IP exhaustion issues that are not apparent in production.

- Subnets: Create subnets with the same configuration (availability zones, CIDR blocks) within each VPC. This enables identical network segmentation and resource allocation across environments. If the production environment has a subnet in a specific availability zone and the development environment does not, applications might fail to deploy or exhibit different behavior in development.

- Routing Tables: Replicate routing table configurations to ensure traffic flows correctly within and between environments. This includes routes for internet access, private network connectivity, and service endpoints. Inconsistencies in routing tables can prevent applications from reaching necessary resources or accessing the internet, affecting functionality and usability.

- Network Security Groups (NSGs) / Security Lists: Define and replicate NSG/security list rules to control inbound and outbound traffic. This includes rules for allowing traffic on specific ports, restricting access based on source IP addresses, and controlling access to databases and other resources. Failure to replicate NSG rules can lead to security breaches or prevent applications from functioning correctly.
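The VPC and subnet comparison described above can be sketched with Python's standard `ipaddress` module. This example assumes IPv4 networks and represents each environment as a hypothetical `(vpc_cidr, subnet_cidrs)` pair; in practice the values would come from your IaC definitions or the cloud provider's API.

```python
import ipaddress
from typing import Dict, Iterable, List, Set, Tuple

Environment = Tuple[str, Iterable[str]]  # (vpc_cidr, subnet_cidrs)


def validated_subnets(vpc_cidr: str, subnet_cidrs: Iterable[str]) -> Set[ipaddress.IPv4Network]:
    """Parse the subnets and confirm that each one lies inside the VPC range."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = set()
    for cidr in subnet_cidrs:
        subnet = ipaddress.ip_network(cidr)
        if not subnet.subnet_of(vpc):
            raise ValueError(f"{cidr} is outside VPC range {vpc_cidr}")
        subnets.add(subnet)
    return subnets


def subnet_differences(dev: Environment, prod: Environment) -> Dict[str, List[str]]:
    """Report subnets that exist in only one of the two environments."""
    dev_nets = validated_subnets(*dev)
    prod_nets = validated_subnets(*prod)
    return {
        "only_in_dev": [str(net) for net in sorted(dev_nets - prod_nets)],
        "only_in_prod": [str(net) for net in sorted(prod_nets - dev_nets)],
    }
```

An empty result in both lists indicates that the two environments use the same subnet layout; the same set-difference approach could be applied to routing table entries or security group rules.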

Security Best Practices for Maintaining Parity

Implementing consistent security practices is critical for maintaining parity and protecting against vulnerabilities. This includes replicating firewall rules, access control policies, and encryption configurations.

- Consistent Firewall Rules: Ensure firewall rules (e.g., security group rules, network ACLs) are identical across environments. This minimizes the attack surface and ensures that applications are protected in the same way in both development and production.

- Access Control Policies: Implement consistent access control policies using Identity and Access Management (IAM) roles and policies. Define the same role and policy structure in both environments so that applications run with equivalent permissions, while limiting human access to production according to the principle of least privilege. This reduces the risk of privilege escalation and ensures that security policies are consistently enforced.

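- Encryption: Use consistent encryption configurations for data at rest and in transit. This includes using the same algorithms, protocols, and key management setup, while keeping the keys themselves separate for each environment so that a compromised development key cannot expose production data. For example, use the same TLS versions and cipher suites for web servers in both environments, with certificates issued separately for each.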

- Regular Security Audits: Conduct regular security audits and penetration tests in both environments to identify and address vulnerabilities. This ensures that security configurations are effective and up-to-date.

- Automated Security Scanning: Integrate automated security scanning tools into the CI/CD pipeline to identify and remediate security vulnerabilities early in the development process. Tools like static code analyzers, vulnerability scanners, and container image scanners can help identify security issues before deployment to production.

Network Architecture Diagram for a Simple Cloud Application

A network architecture diagram illustrates the key elements of a cloud application’s network infrastructure, highlighting the parity aspects. This diagram demonstrates how consistent configurations are applied across development and production environments.

Description of the Network Architecture Diagram:

The diagram depicts a simple cloud application deployed across two environments: Development and Production. Both environments are based on a cloud provider (e.g., AWS, Azure, GCP), with the following key components:

- VPC (Virtual Private Cloud): Each environment has its own VPC, representing an isolated network. The VPCs have the same CIDR blocks (e.g., 10.0.0.0/16) to ensure consistent IP addressing.

- Subnets: Within each VPC, there are public and private subnets. The public subnet hosts resources that need internet access (e.g., a load balancer), while the private subnet hosts internal application components (e.g., application servers, databases). Subnets in both environments are configured identically in terms of their CIDR blocks and availability zones.

- Load Balancer: A load balancer distributes traffic across multiple application servers. Both environments have a load balancer with identical configurations, including listeners, health checks, and security group rules.

- Application Servers: The application servers host the application code. They reside in the private subnets and are configured identically across environments, including the same operating system, software versions, and security patches.

- Database: The database stores application data. It resides in the private subnet and is configured identically across environments, including the same database type, version, and security settings.

- Security Groups: Security groups control inbound and outbound traffic for each resource. The security groups are configured identically across environments, with the same rules for allowing traffic on specific ports and restricting access based on source IP addresses.

- Internet Gateway (IGW): The IGW provides internet access to the public subnets. Both environments have an IGW configured in the same way.

- NAT Gateway: The NAT gateway allows resources in the private subnets to access the internet for outbound traffic. Both environments have a NAT gateway configured in the same way.

Parity Aspects Highlighted:

- Identical VPC configurations (CIDR blocks, subnets, routing).

- Consistent load balancer settings (listeners, health checks, security group rules).

- Identical application server configurations (OS, software versions, security patches).

- Consistent database configurations (type, version, security settings).

- Identical security group rules.

This diagram illustrates how a consistent network architecture is maintained across both environments, enabling dev/prod parity.

Continuous Improvement and Feedback Loops

Establishing robust feedback loops and a commitment to continuous improvement are essential for maintaining and evolving dev/prod parity in cloud environments. This proactive approach allows organizations to identify and address discrepancies, optimize processes, and adapt to the ever-changing landscape of cloud technologies. Regularly reviewing and refining parity practices ensures long-term stability, efficiency, and reliability of applications.

Establishing Feedback Loops for Identifying and Addressing Parity Issues

Implementing effective feedback loops is crucial for proactively identifying and resolving dev/prod parity issues. These loops should encompass various stages of the software development lifecycle and involve multiple stakeholders.

- Automated Monitoring and Alerting: Implement comprehensive monitoring across both development and production environments. This includes monitoring key metrics such as application performance, resource utilization, error rates, and security events. Configure alerts to notify relevant teams when deviations from expected behavior occur. For example, a sudden spike in latency in production that isn’t replicated in the development environment could indicate a parity issue related to infrastructure scaling or configuration.

- User Feedback Mechanisms: Provide channels for users to report issues and provide feedback. This can include in-app feedback forms, dedicated support channels, and social media monitoring. Actively analyze user feedback to identify potential parity problems. For instance, if users report performance issues only in production, it warrants investigation into potential environmental differences.

- Post-Mortem Analysis: Conduct post-mortem reviews after significant incidents or outages in production. These reviews should analyze the root cause of the problem, identify any contributing factors related to dev/prod parity, and define corrective actions. The post-mortem should include a detailed timeline of events, the impact on users, and specific steps to prevent recurrence.

- Regular Retrospectives: Hold regular retrospectives with development, operations, and security teams. These meetings should focus on identifying areas for improvement in the dev/prod parity process. Discuss what went well, what didn’t, and what can be done differently in the future. Retrospectives should also be used to review the effectiveness of feedback loops and make adjustments as needed.

- Configuration Drift Detection: Implement tools and processes to detect configuration drift between development and production environments. These tools should automatically compare configuration settings and flag any discrepancies. For example, if the production environment has a different version of a critical library than the development environment, it could lead to unexpected behavior.

- Automated Testing in Production (with careful consideration): Running automated tests in production is a more advanced technique. Consider running a small subset, such as smoke tests or health checks, to validate the environment’s basic functionality. These tests must be carefully designed to avoid impacting users and should be limited in scope. Any detected failures should trigger immediate investigation.
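The configuration drift detection item above can be illustrated with a small Python sketch that flattens two nested configuration snapshots and reports every key whose value differs. The snapshot structure, the `ignore` parameter for settings that are expected to differ, and the function names are assumptions for this example rather than the behavior of any specific drift detection tool.

```python
from typing import Any, Dict, Iterable, Tuple

MISSING = "<missing>"  # placeholder for a key that is absent in one environment


def flatten(config: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Turn nested dicts into a flat dict with dotted key paths."""
    flat = {}
    for key, value in config.items():
        path = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def detect_drift(
    dev: Dict[str, Any], prod: Dict[str, Any], ignore: Iterable[str] = ()
) -> Dict[str, Tuple[Any, Any]]:
    """Return {path: (dev_value, prod_value)} for every setting that differs."""
    dev_flat, prod_flat = flatten(dev), flatten(prod)
    skipped = set(ignore)
    drift = {}
    for path in sorted(set(dev_flat) | set(prod_flat)):
        if path in skipped:
            continue  # expected, documented difference
        dev_value = dev_flat.get(path, MISSING)
        prod_value = prod_flat.get(path, MISSING)
        if dev_value != prod_value:
            drift[path] = (dev_value, prod_value)
    return drift
```

For instance, a library version that differs between the two snapshots would appear in the result, while a database hostname listed in `ignore` would not.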

Creating a Process for Regularly Reviewing and Updating Dev/Prod Parity Practices

Dev/prod parity is not a one-time effort; it’s an ongoing process that requires regular review and updates to remain effective. A structured approach to reviewing and updating parity practices is essential.

- Establish a Review Schedule: Define a regular schedule for reviewing dev/prod parity practices. This could be quarterly, twice a year, or annually, depending on the complexity of the environment and the frequency of changes.

- Assemble a Review Team: Form a dedicated review team comprising representatives from development, operations, security, and potentially other relevant stakeholders. This team will be responsible for conducting the review and making recommendations for improvement.

- Gather Data and Evidence: Collect relevant data and evidence to support the review process. This includes performance metrics, incident reports, user feedback, configuration information, and testing results.

- Assess Current Practices: Evaluate the current state of dev/prod parity practices against established goals and best practices. Identify any gaps or areas for improvement. Use a checklist (see below) to guide the assessment.

- Identify Improvement Opportunities: Based on the assessment, identify specific opportunities to improve dev/prod parity. This might involve changes to infrastructure, configuration management, testing strategies, or monitoring and alerting systems.

- Prioritize and Plan Actions: Prioritize the identified improvement opportunities based on their potential impact and feasibility. Develop a detailed action plan that outlines the specific steps required to implement the changes, including timelines, responsibilities, and resource allocation.

- Implement and Monitor Changes: Implement the changes outlined in the action plan and monitor their effectiveness. Track key metrics to measure the impact of the changes and make adjustments as needed.

- Document and Communicate: Document the review process, findings, recommendations, and implemented changes. Communicate the results to all relevant stakeholders to ensure transparency and alignment.

Designing a Checklist for Evaluating the Current State of Dev/Prod Parity in a Cloud Environment

A well-defined checklist provides a structured framework for evaluating the current state of dev/prod parity. The checklist should cover key areas and provide specific questions to assess the effectiveness of parity practices.

| Area | Question | Assessment Criteria | Status | Notes/Actions |
|---|---|---|---|---|
| Infrastructure as Code (IaC) | Are infrastructure resources defined using IaC? | Yes/No. If yes, are IaC templates version-controlled and used consistently across environments? |  |  |
| Configuration Management | Are configuration settings managed consistently across environments? | Yes/No. Are environment variables used? Is there a mechanism for managing secrets? |  |  |
| Data Synchronization | Are data synchronization strategies in place to maintain data parity? | Yes/No. Are data backups and restores tested regularly? Are data masking or anonymization techniques used in development? |  |  |
| Version Control and Deployment Pipelines | Are version control and deployment pipelines used to ensure consistent deployments? | Yes/No. Are deployments automated? Are there rollback mechanisms? |  |  |
| Monitoring and Logging | Are comprehensive monitoring and logging systems in place? | Yes/No. Are alerts configured for critical metrics? Are logs centralized and analyzed? |  |  |
| Testing Strategies | Are testing strategies in place to validate parity? | Yes/No. Are there automated tests that cover both development and production environments? Are performance tests conducted? |  |  |
| Networking and Security | Are networking and security configurations consistent across environments? | Yes/No. Are security groups and firewalls configured the same way? Are access controls consistent? |  |  |
| Configuration Drift Detection | Are there tools in place to detect configuration drift? | Yes/No. Are configuration changes audited and tracked? |  |  |
| Feedback Loops | Are feedback loops in place to identify and address parity issues? | Yes/No. Are there user feedback mechanisms? Are post-mortems conducted after incidents? |  |  |
| Review and Update Process | Is there a process for regularly reviewing and updating dev/prod parity practices? | Yes/No. Is there a defined review schedule? Is there a dedicated review team? |  |  |

Final Summary

In conclusion, achieving dev/prod parity in cloud environments is not merely a technical exercise but a strategic imperative. By embracing IaC, robust testing, meticulous monitoring, and continuous improvement, organizations can build resilient, reliable, and efficient cloud infrastructure. Implementing the practices outlined in this guide, “how to achieve dev/prod parity in cloud environments (factor X)”, will pave the way for faster deployments, reduced errors, and ultimately, a more successful cloud journey.

Frequently Asked Questions

What is the primary benefit of achieving dev/prod parity?

The primary benefit is reduced risk and increased reliability. When development and production environments are closely aligned, you can catch and fix issues earlier in the development lifecycle, leading to fewer surprises and disruptions in production.

How often should I synchronize data between dev and prod?

The frequency of data synchronization depends on your specific needs and the sensitivity of the data. For many teams, regular synchronization (e.g., daily or weekly) is sufficient. Whatever the schedule, mask or anonymize sensitive information before production data reaches a development environment.
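As a hedged illustration of data masking during synchronization, the Python sketch below replaces email addresses with a salted hash so that records stay distinguishable without exposing the original value. Strictly speaking this is pseudonymization rather than full anonymization, and the field name `email`, the salt handling, and the function names are assumptions for the example.

```python
import hashlib
from typing import Any, Dict, Iterable


def mask_email(email: str, salt: str) -> str:
    """Replace an email with a stable, non-reversible placeholder address."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()[:12]
    return f"user_{digest}@example.invalid"


def mask_record(
    record: Dict[str, Any], salt: str, email_fields: Iterable[str] = ("email",)
) -> Dict[str, Any]:
    """Return a copy of the record with the listed email fields masked."""
    masked = dict(record)
    for field in email_fields:
        if masked.get(field):
            masked[field] = mask_email(str(masked[field]), salt)
    return masked
```

Because the same input always yields the same placeholder for a given salt, relationships between records survive masking, which keeps the development data set representative of production.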

What are the key components of a successful CI/CD pipeline for parity?

A successful CI/CD pipeline for parity includes automated testing, code reviews, and automated deployment. It should be designed to deploy code and infrastructure changes consistently to both environments, ensuring minimal differences between them.

How do I handle environment-specific configurations with IaC?

Parameterize your IaC templates with per-environment variables or parameter files, and use configuration management tools (e.g., Ansible, Chef, Puppet) together with environment variables for application-level settings. This lets you customize configurations for each environment (dev, prod, etc.) without changing the core infrastructure code.
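The idea of one shared definition with small per-environment overrides can be sketched in Python as follows. The keys and values in `BASE_CONFIG` and `ENV_OVERRIDES` are invented for illustration, and real IaC tools provide their own variable and parameter mechanisms for the same purpose.

```python
from typing import Any, Dict

# Settings shared by every environment (hypothetical values).
BASE_CONFIG: Dict[str, Any] = {
    "instance_type": "small",
    "instance_count": 1,
    "db_engine_version": "15",
    "enable_tls": True,
}

# Only deliberate, documented differences live here.
ENV_OVERRIDES: Dict[str, Dict[str, Any]] = {
    "dev": {},
    "prod": {"instance_type": "large", "instance_count": 3},
}


def render_config(environment: str) -> Dict[str, Any]:
    """Merge the shared base with the overrides for one environment."""
    if environment not in ENV_OVERRIDES:
        raise KeyError(f"unknown environment: {environment}")
    overrides = ENV_OVERRIDES[environment]
    unknown = set(overrides) - set(BASE_CONFIG)
    if unknown:
        # Overrides may change values but must not introduce new settings.
        raise ValueError(f"overrides introduce unknown keys: {sorted(unknown)}")
    return {**BASE_CONFIG, **overrides}
```

Keeping the override sections short makes the differences between environments explicit and easy to review.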

What are the best practices for monitoring in a dev/prod parity environment?

Implement consistent monitoring across both environments using the same tools and configurations. This includes metrics collection, logging, and alerting. Ensure you monitor key performance indicators (KPIs) and set up alerts for anomalies to quickly identify and address any disparities.