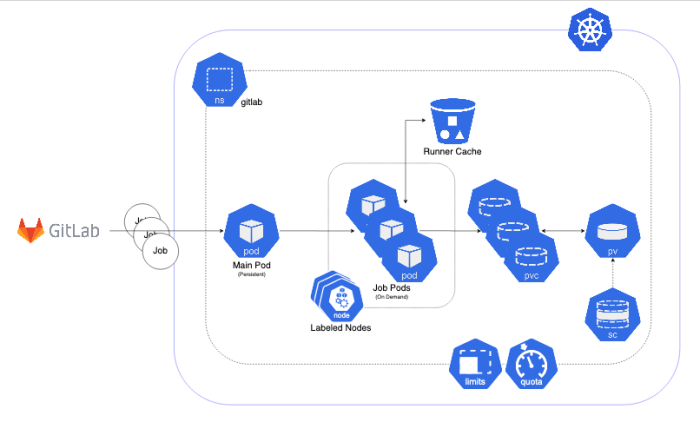

Container lifecycle management is at the heart of Kubernetes’ power: it is what enables the deployment, management, and scaling of applications. This guide explains the mechanisms behind it and walks through the processes involved, from initial creation to graceful termination.

Kubernetes, designed to automate the deployment, scaling, and management of containerized applications, relies heavily on its ability to effectively manage container lifecycles. This includes scheduling pods, pulling container images, allocating resources, implementing health checks, and handling updates and rollbacks. We will explore each of these facets in detail, uncovering how Kubernetes ensures application reliability, availability, and efficiency.

Container Creation and Scheduling

Kubernetes excels at managing the lifecycle of containers, ensuring they are deployed, running, and scaled efficiently. This section delves into the core processes involved in creating and scheduling containers within a Kubernetes cluster, focusing on the mechanisms that drive its orchestration capabilities. Understanding these processes is crucial for effectively utilizing and troubleshooting Kubernetes deployments.

Pod Scheduling Process

The scheduling of pods onto nodes within a Kubernetes cluster is a complex process governed by the Kubernetes scheduler. This process involves several stages to ensure that pods are placed on nodes that meet their resource requirements and other constraints. The scheduling process can be broken down into the following steps:

- Pod Creation and Discovery: When a user submits a pod definition, the Kubernetes API server stores this definition. The scheduler watches the API server for newly created pods that haven’t been assigned to a node (pods with `nodeName` not set).

- Filtering: The scheduler filters out nodes that do not meet the pod’s requirements. This involves checking for:

  - Resource availability: The scheduler verifies that the node has sufficient unallocated CPU, memory, and other resources to accommodate the pod’s resource requests.

  - Node selectors and affinity/anti-affinity rules: These rules allow pods to be scheduled on specific nodes based on labels or to avoid co-locating pods based on certain criteria.

  - Taints and tolerations: Taints are applied to nodes to repel pods unless the pod has a corresponding toleration. This provides a mechanism for controlling which pods can be scheduled on which nodes.

- Scoring: The scheduler ranks the remaining nodes based on various criteria, such as:

  - Resource utilization: With the default scoring settings, nodes with more unallocated resources are generally favored.

  - Pod affinity/anti-affinity: The scheduler considers existing pod placements to optimize for factors like spreading or co-location.

  - Cost: The default scheduler does not score on cost, but custom scheduler plugins or extenders can take the cost of running pods on different nodes into account.

- Node Selection: The scheduler selects the highest-ranked node and binds the pod to it, which sets the pod’s `nodeName` field in the API server.

- Kubelet Action: The kubelet on the assigned node detects the new pod assignment and begins the process of creating the pod’s containers.
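The filtering and scoring inputs described above all come from the pod specification. The manifest below is a minimal sketch of how they might be expressed; the pod name, the `disktype: ssd` node label, and the `dedicated=batch` taint are assumptions made for illustration, not values from any particular cluster.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: scheduling-demo            # hypothetical name
  labels:
    app: scheduling-demo
spec:
  # Filtering: only nodes carrying this label are candidates (assumed label).
  nodeSelector:
    disktype: ssd
  # Filtering: lets the pod land on nodes tainted dedicated=batch:NoSchedule
  # (assumed taint); nodes with other NoSchedule taints are still excluded.
  tolerations:
    - key: "dedicated"
      operator: "Equal"
      value: "batch"
      effect: "NoSchedule"
  # Scoring: prefer nodes that do not already run a pod with the same label.
  affinity:
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 100
          podAffinityTerm:
            labelSelector:
              matchLabels:
                app: scheduling-demo
            topologyKey: kubernetes.io/hostname
  containers:
    - name: app
      image: nginx:1.25
      resources:
        # Filtering: the node must have this much unallocated capacity.
        requests:
          cpu: "250m"
          memory: "128Mi"
```

If no node passes the filters, the pod stays in the `Pending` phase, and `kubectl describe pod scheduling-demo` lists the reasons under its events.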

Container Image Pulling

Pulling container images from a registry is a fundamental step in container creation. Kubernetes automates this process to ensure that the necessary images are available on the node before launching a container. The image pulling process unfolds in the following manner:

- Image Specification: When a pod is created, the pod definition specifies the container image to be used. This includes the image name and tag (e.g., `nginx:latest`).

- Image Pull Policy: The pod definition can also include an `imagePullPolicy`. If it is omitted, Kubernetes defaults to `Always` for images tagged `:latest` or given without a tag, and to `IfNotPresent` otherwise. This policy determines when and how the image should be pulled:

  - `IfNotPresent`: The image is pulled only if it is not already present on the node.

  - `Always`: Every time the container is started, the kubelet asks the registry to resolve the image to a digest. It reuses a locally cached image with that exact digest and pulls the image otherwise.

  - `Never`: The image is never pulled; it must be present on the node.

- Kubelet Action: The kubelet, running on the assigned node, is responsible for pulling the image. It uses the container runtime (e.g., containerd or CRI-O; Docker Engine needs the cri-dockerd adapter since Kubernetes 1.24) to perform the pull.

- Authentication: If the image is stored in a private registry, the kubelet authenticates with the registry using credentials stored in Kubernetes secrets and referenced from the pod through `imagePullSecrets`.

- Image Download: The container runtime downloads the image layers from the registry. This process involves fetching the image manifest and then downloading the individual layers.

- Image Caching: Once the image is downloaded, it is cached on the node. This allows subsequent container creations using the same image to be faster.
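As an illustration of the pull policy and registry authentication steps above, here is a minimal sketch of a pod that pulls from a private registry. The registry host, image path, and the secret name `regcred` are hypothetical; the secret is assumed to already exist in the pod's namespace with type `kubernetes.io/dockerconfigjson`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: private-image-demo          # hypothetical name
spec:
  # Credentials the kubelet presents to the registry (assumed existing Secret).
  imagePullSecrets:
    - name: regcred
  containers:
    - name: app
      # Hypothetical private image; a fixed tag keeps pulls predictable.
      image: registry.example.com/team/app:1.4.2
      # Pull only when the image is not already cached on the node.
      imagePullPolicy: IfNotPresent
```

When a pull fails, for example because of bad credentials or a missing tag, the container status typically shows `ErrImagePull` and then `ImagePullBackOff` while the kubelet retries with increasing delays.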

Resource Allocation During Container Creation

Resource allocation is critical for ensuring that containers have the necessary CPU, memory, and other resources to function correctly. Kubernetes provides mechanisms to specify and manage resource allocation for containers. The allocation of resources during container creation involves:

- Resource Requests and Limits: Pod definitions allow users to specify resource requests and limits for each container:

  - Requests: The minimum amount of resources the container is guaranteed to receive. The scheduler uses requests to determine where to place the pod.

  - Limits: The maximum amount of resources the container can consume. Limits prevent a container from monopolizing resources on a node.

- CPU Allocation:

  - CPU requests are expressed in CPU units (e.g., `1` for one CPU core, `0.5` or `500m` for half a core).

  - On Linux nodes, cgroups enforce the CPU settings: a request becomes a relative CPU weight that matters under contention, and a limit becomes a hard quota.

  - If a container exceeds its CPU limit, it will be throttled, not terminated.

- Memory Allocation:

  - Memory requests are expressed in bytes, usually with a suffix (e.g., `128Mi` for 128 mebibytes).

  - The memory limit is enforced through cgroups; the memory request is used mainly for scheduling and for eviction decisions when a node runs low on memory.

  - If a container exceeds its memory limit, the kernel’s out-of-memory (OOM) killer terminates it, and the kubelet restarts it according to the pod’s restart policy.

- Other Resource Allocation: Kubernetes also supports allocation for other resources, such as:

  - Ephemeral Storage: Used for storing temporary data.

  - Extended Resources: Custom resources that can be defined and managed.

- Resource Enforcement: The kubelet, in conjunction with the container runtime, enforces the resource requests and limits specified in the pod definition. This ensures that containers operate within their allocated resources.
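The requests and limits described above might look like this in a pod definition. This is a sketch with arbitrary example values and a hypothetical pod name; appropriate numbers depend entirely on the workload.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: resources-demo              # hypothetical name
spec:
  containers:
    - name: app
      image: nginx:1.25
      resources:
        # Used by the scheduler to pick a node with enough unallocated capacity.
        requests:
          cpu: "500m"               # half a CPU core
          memory: "128Mi"
          ephemeral-storage: "1Gi"
        # Upper bounds enforced at runtime.
        limits:
          cpu: "1"                  # throttled above one core
          memory: "256Mi"           # OOM-killed above this
          ephemeral-storage: "2Gi"  # pod is evicted above this
```

Because requests and limits differ here, the pod falls into the `Burstable` quality-of-service class; setting them equal for every container would make it `Guaranteed`.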

Container Lifecycle Hooks

Container lifecycle hooks provide a mechanism for running code at specific points in a container’s lifecycle, allowing for customization and control during startup and shutdown. These hooks are triggered by the kubelet, the agent that runs on each node in a Kubernetes cluster and manages the containers. They let administrators run a command or issue an HTTP request right after a container is created or right before it is terminated, which suits tasks like warm-up, registration, and cleanup.

Pre-Start Work with Init Containers

Kubernetes does not define a `preStart` hook; the only container lifecycle hooks are `postStart` and `preStop`. Work that must finish before the container’s entrypoint runs is handled by init containers instead. Init containers run to completion, one after another, before the pod’s application containers start, which makes them the mechanism for ensuring that a container is correctly configured before it begins serving requests.

- Typical Uses: Init containers are typically used for the following purposes:

  - Configuration: Setting up the container environment, such as creating directories, writing configuration files, or fetching secrets. For example, an init container could download a configuration file from a secret store into a shared volume before the application starts.

  - Initialization: Initializing resources or dependencies required by the application. This might involve waiting for a database to become reachable or running schema migrations.

  - Security Setup: Performing security-related tasks, such as setting file permissions on a shared volume or generating SSH keys.
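Work that has to complete before a container's main process starts can be expressed as an init container. The following is a minimal sketch: an init container writes a file into a shared `emptyDir` volume, and the application container then mounts it read-only. The pod name, file path, and file contents are invented for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-demo                   # hypothetical name
spec:
  volumes:
    - name: config
      emptyDir: {}                  # scratch volume shared by both containers
  initContainers:
    # Runs to completion before the app container is started.
    - name: prepare-config
      image: busybox:1.36
      command: ["/bin/sh", "-c", "echo 'generated at startup' > /work/app.conf"]
      volumeMounts:
        - name: config
          mountPath: /work
  containers:
    - name: app
      image: nginx:1.25
      volumeMounts:
        - name: config
          mountPath: /etc/app       # app.conf is already present at startup
          readOnly: true
```

If the init container exits with a non-zero status, the kubelet retries it according to the pod's restart policy, and the application container is not started until it succeeds.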

PostStart Hook

The `postStart` hook is executed immediately after a container is created. It runs asynchronously with the container’s entrypoint, so there is no guarantee that it runs before or after the main process starts, and the container is not reported as running until the hook completes. This hook is valuable for short tasks tied to startup, such as sending notifications or warming up caches.

- Role in Container Initialization: The `postStart` hook plays a role in container initialization by:

  - Startup Verification: Waiting until the application responds before the container is reported as running. Ongoing health checking belongs in probes, not in hooks.

  - Cache Warming: Warming up caches to improve performance by pre-loading data. For example, a postStart hook might fetch data from an external API and store it in a local cache.

  - Service Registration: Registering the container with a service discovery system.

  - Monitoring and Alerting: Initializing monitoring agents or sending notifications to external systems.

Implementation of Lifecycle Hooks in a Kubernetes Deployment

Lifecycle hooks are defined within the container specification of a pod, or of the pod template in a Kubernetes deployment. The two most common ways to define a hook handler are `exec` and `httpGet`.

- Using `exec`: The `exec` action executes a command inside the container.

- Example:

      apiVersion: v1
      kind: Pod
      metadata:
        name: lifecycle-demo
      spec:
        containers:
          - name: lifecycle-demo-container
            image: nginx:latest
            lifecycle:
              postStart:
                exec:
                  command: ["/bin/sh", "-c", "echo Hello from postStart > /usr/share/nginx/html/index.html"]
              preStop:
                exec:
                  command: ["/bin/sh", "-c", "sleep 10; echo Goodbye from preStop > /usr/share/nginx/html/index.html"]

- Using `httpGet`: The `httpGet` action makes an HTTP GET request against a container. This can be useful for health checks or other API interactions.

- Example:

      apiVersion: v1
      kind: Pod
      metadata:
        name: lifecycle-demo
      spec:
        containers:
          - name: lifecycle-demo-container
            image: nginx:latest
            lifecycle:
              postStart:
                httpGet:
                  path: /healthz
                  port: 80

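- Important Considerations:

  - Lifecycle hooks should be designed to complete quickly to avoid delaying container startup or shutdown.

  - Kubernetes has no `preStart` hook. Work that must succeed before a container starts belongs in an init container; if an init container fails, the application containers are not started.

  - If a `postStart` hook fails, the kubelet kills the container, which is then restarted according to the pod’s restart policy. The failure is recorded as a `FailedPostStartHook` event.

  - `preStop` hooks run within the pod’s termination grace period, which is 30 seconds by default and covers both the hook and the shutdown that follows the SIGTERM signal. If the container is still running when the grace period expires, the kubelet forcibly terminates it. The grace period can be configured using the `terminationGracePeriodSeconds` field in the pod specification.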

Container Health Probes

Kubernetes employs health probes to monitor the health of containers running within pods. These probes are crucial for ensuring application availability and responsiveness. They allow Kubernetes to detect and react to container failures, automatically restarting or redirecting traffic as necessary. Properly configured health probes are essential for maintaining a robust and resilient application deployment within a Kubernetes cluster.

Different Types of Kubernetes Probes

Kubernetes offers three primary types of probes, each serving a distinct purpose in assessing container health. Understanding the differences between these probes is vital for configuring them effectively.

- Liveness Probe: The liveness probe determines if a container is still running. If a liveness probe fails, Kubernetes restarts the container. This is useful for catching situations where an application has entered a deadlock or has encountered an unrecoverable error.

- Readiness Probe: The readiness probe determines if a container is ready to serve traffic. If a readiness probe fails, Kubernetes removes the pod from the endpoints of any Service that selects it, preventing traffic from being routed to it, but does not restart the container. This is crucial for ensuring that only healthy containers receive requests.

- Startup Probe: The startup probe determines if an application within a container has started. Startup probes delay other probes (liveness and readiness) until the application is ready. This is particularly useful for applications that take a long time to initialize.
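To show how the three probe types fit together, here is a minimal sketch of a slow-starting container that declares all of them. The image name, port, and the `/healthz` and `/readyz` paths are assumptions about a hypothetical application; the thresholds are arbitrary example values.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: slow-start-demo             # hypothetical name
spec:
  containers:
    - name: app
      image: my-app-image:latest    # hypothetical image
      ports:
        - containerPort: 8080
      # Liveness and readiness probes are held back until this succeeds once.
      startupProbe:
        httpGet:
          path: /healthz
          port: 8080
        periodSeconds: 10
        failureThreshold: 30        # allows up to 30 x 10 s = 300 s to start
      # After startup: restart the container when this keeps failing.
      livenessProbe:
        httpGet:
          path: /healthz
          port: 8080
        periodSeconds: 10
      # After startup: stop routing Service traffic while this fails.
      readinessProbe:
        httpGet:
          path: /readyz
          port: 8080
        periodSeconds: 10
```

Using a startup probe this way avoids inflating `initialDelaySeconds` on the liveness probe just to accommodate the slowest possible start.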

Configuring a Liveness Probe to Restart a Failing Container

A liveness probe is designed to detect when an application is no longer functioning correctly and needs to be restarted. Configuring a liveness probe involves specifying how Kubernetes should check the container’s health and what action to take upon failure.

Consider a simple example using an HTTP GET request, shown as a snippet from a Kubernetes pod definition (YAML):

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-app-pod
    spec:
      containers:
        - name: my-app-container
          image: my-app-image:latest
          livenessProbe:
            httpGet:
              path: /healthz
              port: 8080
            initialDelaySeconds: 15
            periodSeconds: 10

In this example:

- `httpGet` specifies an HTTP GET request to the `/healthz` path.

- `port: 8080` indicates the port to which the request should be sent.

- `initialDelaySeconds: 15` delays the probe execution for 15 seconds after the container starts. This gives the application time to initialize.

- `periodSeconds: 10` specifies that the probe should be executed every 10 seconds.

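If the `/healthz` endpoint returns a status code outside the range 200 to 399, or does not answer within the probe timeout, that probe attempt fails. After `failureThreshold` consecutive failures (3 by default), Kubernetes restarts the `my-app-container` container.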

Creating a Readiness Probe that Ensures a Container is Ready to Receive Traffic

The readiness probe is critical for controlling when a container is considered ready to receive traffic from a service. This ensures that only healthy and fully initialized containers handle user requests.

Here’s a readiness probe example, similar to the liveness probe:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-app-pod
    spec:
      containers:
        - name: my-app-container
          image: my-app-image:latest
          readinessProbe:
            httpGet:
              path: /readyz
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10

In this example:

- The `readinessProbe` is defined.

- It uses an HTTP GET request to the `/readyz` path on port 8080. The application must expose this endpoint and return a success status code (200 OK is typical; any code from 200 to 399 counts) when it’s ready to serve traffic.

- `initialDelaySeconds: 5` allows the container to start before the probe runs.

- `periodSeconds: 10` checks readiness every 10 seconds.

When the `my-app-container` is ready, the `/readyz` endpoint returns a 200 OK. Kubernetes then adds the pod’s IP address to the endpoints of the associated service, allowing the service to route traffic to it. If the readiness probe fails, the pod is removed from the service’s endpoints, preventing traffic from being sent to the unhealthy container.

Container Updates and Rollouts

Kubernetes provides robust mechanisms for updating containerized applications, ensuring minimal downtime and facilitating seamless transitions between different versions of your software. This is achieved through sophisticated rollout strategies and rollback capabilities. Understanding these features is critical for maintaining application availability and operational efficiency in a dynamic environment.

Rolling Update Strategy and Its Benefits

The rolling update strategy is Kubernetes’ default approach for updating deployments. It allows for updating applications without service interruption by gradually replacing old pods with new ones. This approach enhances application availability and provides flexibility during updates. The benefits of rolling updates are significant:

- Zero Downtime: With enough replicas and working readiness probes, applications remain available throughout the update process. Users continue to access the service without interruption.

- Gradual Rollout: The update happens in stages. This allows for monitoring the new version’s performance before fully deploying it.

- Contained Failures: If new pods fail their readiness probes during the rollout, the rollout stops progressing and the remaining old pods keep serving traffic. Kubernetes does not roll back on its own; an administrator or external tooling reverts to the previous version with `kubectl rollout undo`.

- Controlled Updates: Administrators can control the pace and concurrency of updates through the `maxSurge` and `maxUnavailable` settings, limiting the impact on system resources.

Steps Involved in Updating a Container Image in a Deployment

Updating a container image within a Kubernetes deployment involves several coordinated steps managed by the Deployment controller. These steps ensure a smooth and controlled transition to the new image version. The update process unfolds as follows:

- Deployment Specification Modification: The deployment’s container image tag is updated in the deployment manifest (YAML file) to the new version. This can be done using `kubectl set image` or by directly editing the YAML file.

- Controller Detection: The Kubernetes Deployment controller detects the change in the deployment specification.

- Pod Creation: The controller creates a new ReplicaSet for the updated pod template and scales it up gradually. The resulting new pods are scheduled and started.

- Readiness Probe Checks: Before traffic is routed to the new pods, Kubernetes ensures they pass readiness probes, confirming they are ready to serve requests.

- Traffic Routing: Once the new pods are ready, the Kubernetes Service starts routing traffic to them.

- Old Pod Termination: As new pods become ready, the controller scales down the old ReplicaSet, terminating old pods within the bounds set by `maxUnavailable`.

- Update Completion: The update is complete when all old pods have been replaced by the new pods.
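The pace of the steps above is governed by the Deployment's update strategy. The manifest below is a sketch of one conservative configuration; the Deployment name `web`, the replica count, and the image tags are assumptions chosen for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                         # hypothetical name
spec:
  replicas: 4
  selector:
    matchLabels:
      app: web
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1                   # at most 1 extra pod above the replica count
      maxUnavailable: 0             # never drop below 4 ready pods
  minReadySeconds: 5                # a new pod must stay ready this long to count
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25
          readinessProbe:
            httpGet:
              path: /
              port: 80
# Changing the image starts a rollout, for example:
#   kubectl set image deployment/web web=nginx:1.26
# Progress can be followed with:
#   kubectl rollout status deployment/web
```

With `maxSurge: 1` and `maxUnavailable: 0`, the controller replaces pods one at a time: it adds a new pod, waits for it to become ready, and only then removes an old one.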

Strategy for Handling Container Version Rollback in Case of Update Failures

Kubernetes provides mechanisms for rolling back to a previous version of a container image if an update fails. This ensures that the application can revert to a known working state, minimizing downtime and impact on users. A well-defined rollback strategy is crucial for maintaining application stability. Key components of a rollback strategy include:

- Deployment Revision History: Kubernetes maintains a history of deployment revisions. This allows administrators to easily revert to a previous version.

- Rollout Failure Detection: Kubernetes does not roll back a Deployment automatically. When new pods fail their readiness probes, the rollout stalls, and once `progressDeadlineSeconds` elapses the Deployment is marked as not progressing. Automatic rollback based on probe failures or other metrics has to come from external tooling or a CI/CD pipeline.

- Manual Rollback: Administrators can manually trigger a rollback using `kubectl rollout undo deployment/` followed by the Deployment’s name.

- Rollback Process: When a rollback is initiated, Kubernetes reverts to a previous deployment revision, recreating pods based on the old image version. The pods from the failed revision are then terminated.

- Monitoring and Alerting: Implementing robust monitoring and alerting systems is essential for quickly identifying update failures and triggering rollbacks. Monitoring tools should track metrics such as error rates, CPU usage, and response times.

For example, if an update introduces a bug that causes a significant increase in error rates, monitoring can alert an operator or trigger tooling that runs the rollback, reverting the application to the last known good version. Similarly, a failed readiness probe during the update prevents the new pods from receiving traffic and stalls the rollout, so the old pods keep serving until the Deployment is rolled back or fixed. This ensures the service continues to function with minimal disruption.
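The revision history and failure detection that a rollback relies on are configured on the Deployment itself. The sketch below shows the two relevant fields with their default values written out explicitly; the Deployment name `web` and the revision number in the comments are hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                         # hypothetical name
spec:
  replicas: 3
  revisionHistoryLimit: 10          # old ReplicaSets kept for rollback (default 10)
  progressDeadlineSeconds: 600      # report the rollout as stalled after 10 minutes
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25
# Inspect the recorded revisions:
#   kubectl rollout history deployment/web
# Revert to the previous revision, or to a specific one:
#   kubectl rollout undo deployment/web
#   kubectl rollout undo deployment/web --to-revision=2
```

Setting `revisionHistoryLimit` to 0 removes all old ReplicaSets, which also removes the ability to roll back with `kubectl rollout undo`.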

Container Termination and Cleanup

Kubernetes provides mechanisms to gracefully shut down containers, ensuring that running processes have a chance to complete their work before being terminated. This is crucial for maintaining data integrity and providing a smooth user experience. The process involves several steps, including the use of lifecycle hooks and pod deletion procedures.

Graceful Shutdown Mechanism

Kubernetes employs a graceful shutdown mechanism to minimize disruption when a container needs to be terminated. This process allows containers to handle pending requests and save their state before they are stopped. The process generally unfolds as follows:

- Initiation: When a pod is deleted or scaled down, Kubernetes initiates the termination process.

- Pre-Stop Hook Execution: If a `preStop` hook is defined in the container’s configuration, it’s executed first. This hook allows the container to perform cleanup tasks.

- SIGTERM Signal: Once the hook completes, the kubelet has the container runtime send a SIGTERM signal to the container’s main process. This signal requests the process to shut down gracefully.

- Termination Grace Period: The grace period (default: 30 seconds) starts when termination begins, so time spent in the `preStop` hook counts against it. Kubernetes waits for the remainder of the period for the container to exit, which allows the application to finish its work.

- SIGKILL Signal: If the container doesn’t terminate within the grace period, Kubernetes sends a SIGKILL signal, which immediately terminates the container.
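The timing of the shutdown sequence above can be tuned per pod. The following sketch extends the grace period and adds a short `preStop` delay; the pod name and the chosen durations are illustrative assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: graceful-demo               # hypothetical name
spec:
  # Total budget for the preStop hook plus the shutdown after SIGTERM.
  terminationGracePeriodSeconds: 45
  containers:
    - name: app
      image: nginx:1.25
      lifecycle:
        preStop:
          exec:
            # Give load balancers about 10 s to stop sending new requests.
            command: ["/bin/sh", "-c", "sleep 10"]
# Resulting timeline after the pod is deleted:
#   0 s   preStop hook starts
#   10 s  hook returns, SIGTERM is sent to the main process
#   45 s  SIGKILL is sent if the process is still running
```

In this configuration the application has roughly 35 seconds after SIGTERM to finish in-flight work; a longer hook would leave it correspondingly less.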

Role of the `preStop` Hook

The `preStop` hook plays a vital role in the graceful shutdown process. It provides a mechanism for containers to perform necessary cleanup tasks before they are terminated. The `preStop` hook is defined within the container’s specification in the pod definition. It can execute a command or run a specified script. Here’s how the `preStop` hook is used:

- Cleanup Tasks: The `preStop` hook is typically used to perform tasks like:

  - Closing network connections.

  - Saving application state.

  - Releasing resources.

  - Draining traffic from the container (e.g., by removing it from a load balancer).

- Implementation: The `preStop` hook is defined within the `lifecycle` section of the container specification.

- Execution: Kubernetes executes the `preStop` hook immediately before sending the SIGTERM signal.

Example of `preStop` hook:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod
    spec:
      containers:
        - name: my-container
          image: nginx:latest
          lifecycle:
            preStop:
              exec:
                command: ["/bin/sh", "-c", "nginx -s quit; sleep 5"]

In this example, the `preStop` hook executes the command `nginx -s quit` to gracefully shut down the Nginx web server and then sleeps for 5 seconds. Only after the hook returns does Kubernetes send SIGTERM to the container, which gives Nginx time to finish processing requests.

Deleting a Pod and Its Associated Containers

Deleting a pod and its associated containers is a multi-step process that ensures a clean shutdown. It includes the following steps:

- Initiation: The deletion process begins when a user or a controller (e.g., a Deployment controller) requests the deletion of a pod. This is usually triggered by deleting the pod object using `kubectl delete pod` followed by the pod’s name.

- Deletion Timestamp: Kubernetes marks the pod for deletion by adding a deletion timestamp to its metadata.

- Controller Actions: Controllers that manage the pod (e.g., Deployments, StatefulSets) are notified about the deletion and begin to reconcile the desired state. This often involves creating new pods to replace the deleted ones.

- Graceful Termination: Kubernetes proceeds with the graceful termination of the containers within the pod, as described in the “Graceful Shutdown Mechanism” section.

- Resource Cleanup: After the containers are terminated, Kubernetes cleans up the resources associated with the pod, such as network interfaces and volumes.

- Finalization: Once all containers are terminated and resources are cleaned up, the pod object is removed from the Kubernetes API server.

Container Networking and Communication

Kubernetes provides robust networking capabilities that are fundamental to the operation and scalability of containerized applications. These networking features enable containers to communicate with each other, both within and outside the cluster, facilitating the complex interactions required by modern applications. Understanding these mechanisms is crucial for designing, deploying, and managing applications in Kubernetes.

Communication Between Containers Within a Pod

Containers within a Kubernetes Pod share the same network namespace. This design simplifies inter-container communication.

- Shared Network Namespace: All containers within a Pod have the same IP address and port space. This allows them to communicate with each other using `localhost`. Because the port space is shared, two containers in the same Pod cannot listen on the same port, and container names are not resolvable as hostnames.

- Network Isolation: By default, every Pod can reach every other Pod in the cluster. Isolation between Pods is opt-in: it is configured with network policies and enforced by a network plugin that supports them.

- Use Cases: This model is beneficial for applications where containers need to work closely together, such as a web server container and a database container, or a frontend and a backend within the same logical unit.
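The shared network namespace can be seen with a two-container pod in which one container calls the other over `localhost`. This is a minimal sketch; the pod and container names are hypothetical, and the polling loop exists only to demonstrate connectivity.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-net-demo             # hypothetical name
spec:
  containers:
    # Serves HTTP on port 80 inside the pod's network namespace.
    - name: web
      image: nginx:1.25
      ports:
        - containerPort: 80
    # Reaches the web container through localhost, not a container name.
    - name: poller
      image: busybox:1.36
      command:
        - /bin/sh
        - -c
        - "while true; do wget -q -O /dev/null http://localhost:80/ && echo reachable; sleep 10; done"
```

Running `kubectl logs shared-net-demo -c poller` should show the poller's output once both containers are up, since the two share one IP address and port space.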

Comparison of Kubernetes Networking Models

Kubernetes supports various network plugins, each implementing the Container Network Interface (CNI) specification. These plugins offer different trade-offs in terms of performance, features, and complexity.

- Flannel: Flannel is a simple and widely used network plugin. It creates a virtual overlay network for Pods and stores its network configuration either in the Kubernetes API or in a key-value store (like etcd). Flannel allocates a subnet to each node, and every Pod on that node receives a unique IP address from it. Traffic between Pods on different nodes is encapsulated, by default with the kernel’s VXLAN support.

Advantages: Easy to set up and understand. Works well in many environments.

Disadvantages: Encapsulation adds some performance overhead, and Flannel does not enforce network policies on its own.

- Calico: Calico is a more advanced and feature-rich networking model that utilizes BGP (Border Gateway Protocol) for routing. It offers network policies and supports a wide range of network configurations. Calico assigns each Pod a unique IP address and uses a distributed routing protocol to forward traffic. It also supports network policy enforcement, allowing for fine-grained control over network traffic.

Advantages: High performance, robust network policies, and scalability.

Disadvantages: More complex to set up and manage. Requires a deeper understanding of networking concepts.

- Other Models: Other CNI plugins include Canal (combining Flannel and Calico), Weave Net, and Cilium. Each offers different features and performance characteristics, catering to various use cases and environments.

- Choosing a Model: The choice of a networking model depends on the specific requirements of the application and the Kubernetes environment. Factors to consider include performance, security, and ease of management. For example, a production environment might benefit from the advanced features of Calico, while a simpler environment might be suitable for Flannel.
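Network policy support is one of the main differences between plugins, so it helps to see what a policy looks like. The two manifests below are a sketch: the first denies all inbound traffic to pods in a namespace, the second re-allows one path. The `app: frontend` and `app: backend` labels and port 8080 are assumptions, and the policies only take effect when the installed network plugin enforces them (Calico and Cilium do; Flannel alone does not).

```yaml
# Deny all inbound traffic to every pod in the namespace.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}                   # empty selector matches all pods
  policyTypes:
    - Ingress
---
# Allow frontend pods to reach backend pods on TCP 8080.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-backend   # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 8080
```

Policies are additive: a connection is allowed if any policy selecting the destination pod permits it, which is why a default-deny policy is usually paired with narrow allow rules like the second one.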

Exposing Containerized Applications with Services

Kubernetes Services provide an abstraction layer that enables access to containerized applications. A Service gives a set of Pods a stable address, reachable inside the cluster or from the outside world depending on its type, and manages the load balancing and discovery of those Pods.

- Service Types: Kubernetes offers different service types to expose applications:

  - ClusterIP: Exposes the service on a cluster-internal IP address. This is the default type. The service is only accessible from within the cluster.

  - NodePort: Exposes the service on each node’s IP address at a static port. Accessible from outside the cluster using a node’s IP address and that port, separated by a colon.

  - LoadBalancer: Creates an external load balancer in the cloud provider (e.g., AWS, Google Cloud, Azure). The load balancer directs traffic to the service.

  - ExternalName: Maps the service to the `externalName` field by returning a CNAME record with its value. This allows access to external services.

- Service Discovery: Services use labels and selectors to identify the Pods they expose. Kubernetes automatically updates the service endpoint as Pods are created, scaled, or terminated. This ensures that traffic is always routed to healthy Pods.

- Load Balancing: Services automatically load balance traffic across the Pods matching the service’s selector. This improves application availability and performance.

- Example: Consider a web application deployed in a Kubernetes cluster. The application’s Pods are managed by a Deployment. A Service of type `LoadBalancer` is created to expose the application to the internet. The cloud provider provisions a load balancer that directs traffic to the service. The service then load balances traffic across the application’s Pods.
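The example above could be expressed with a Service manifest along these lines. It is a sketch that assumes the Deployment's pods carry the label `app: web` and listen on port 8080; both are illustrative choices.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web                         # hypothetical name
spec:
  type: LoadBalancer                # ClusterIP or NodePort would also be valid here
  selector:
    app: web                        # traffic goes to ready pods with this label
  ports:
    - name: http
      protocol: TCP
      port: 80                      # port exposed by the Service
      targetPort: 8080              # port the containers listen on
```

Once the cloud provider has provisioned the load balancer, `kubectl get service web` shows its address in the `EXTERNAL-IP` column; on clusters without a load balancer integration that column stays pending.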

Container Storage Management

Kubernetes provides robust mechanisms for managing storage within containerized environments. Effective storage management is crucial for data persistence, application state preservation, and enabling containerized applications to interact with data effectively. This section details how Kubernetes handles persistent storage, focusing on volume attachment, persistent volume claims, and the relationships between pods, volumes, and claims.

Methods for Attaching Persistent Volumes to Containers

Attaching persistent volumes to containers involves several methods, each with its own characteristics and use cases. The choice of method depends on the specific storage requirements of the application and the underlying storage infrastructure.

- Volumes using `emptyDir`: `emptyDir` volumes are ephemeral and created when a pod is assigned to a node. They are deleted when the pod is removed. While useful for temporary storage, they are not suitable for persistent data. This is because data stored in an `emptyDir` volume is lost when the pod is terminated or rescheduled to another node. For instance, a cache directory or a temporary working space for a data processing task might use `emptyDir`.

- Volumes using `hostPath`: `hostPath` volumes allow a pod to access a directory on the host node’s filesystem. This can be useful for testing and development but is generally discouraged in production environments due to its limitations in terms of portability and scalability. Data persists as long as the host directory exists, regardless of the pod’s lifecycle. However, if the pod is rescheduled to a different node, it will no longer have access to the original `hostPath`.

- Volumes using PersistentVolumeClaims (PVCs): PVCs are the recommended method for persistent storage in Kubernetes. They abstract the underlying storage infrastructure, allowing users to request storage without knowing the specifics of the storage implementation. This is the most common and flexible approach for production environments, ensuring data persistence across pod lifecycles. PVCs bind to PersistentVolumes (PVs), which represent the actual storage resources.

- Volumes using `configMap` and `secret`: While not strictly persistent storage, `configMap` and `secret` volumes can store configuration data or sensitive information, such as passwords and API keys, and make them available to containers. These are typically mounted as files or exposed as environment variables within the container. They provide a mechanism to manage configuration separately from the container image.
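Several of the volume types listed above can be combined in one pod. The manifest below is a minimal sketch; the ConfigMap `app-config` and the Secret `app-credentials` are hypothetical objects assumed to exist already in the same namespace.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volumes-demo                # hypothetical name
spec:
  containers:
    - name: app
      image: nginx:1.25
      volumeMounts:
        - name: scratch
          mountPath: /cache
        - name: settings
          mountPath: /etc/app
          readOnly: true
        - name: credentials
          mountPath: /etc/creds
          readOnly: true
  volumes:
    # Ephemeral scratch space, deleted together with the pod.
    - name: scratch
      emptyDir:
        sizeLimit: 500Mi
    # Each key of the ConfigMap appears as a file under /etc/app.
    - name: settings
      configMap:
        name: app-config            # assumed to exist
    # Each key of the Secret appears as a file under /etc/creds.
    - name: credentials
      secret:
        secretName: app-credentials # assumed to exist
```

If the referenced ConfigMap or Secret is missing, the pod stays in `ContainerCreating` until the object is created, unless the volume is marked `optional: true`.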

Using PersistentVolumeClaims (PVCs) for Storage Requests

PersistentVolumeClaims (PVCs) are how users request storage resources from the Kubernetes cluster. They act as a request for storage of a specific size and access mode. Kubernetes then attempts to find a matching PersistentVolume (PV) to satisfy the claim.

The process typically involves the following steps:

- Creating a PersistentVolumeClaim: A user defines a PVC using a YAML configuration file. This file specifies the desired storage size, access modes (e.g., ReadWriteOnce, ReadOnlyMany, ReadWriteMany), and storage class.

- Storage Class: A StorageClass provides a way for administrators to describe the “classes” of storage they offer. Different storage classes might map to different storage backends, such as AWS EBS, Google Persistent Disk, or Azure Disk.

- Claim Binding: The Kubernetes control plane searches for a PV that matches the PVC’s requirements. If a suitable PV is found, the PVC is bound to it. If no matching PV exists, the PVC remains in a pending state until a matching PV becomes available, or the storage provisioner dynamically creates a PV.

- Pod Definition: The pod definition references the PVC to mount the storage volume. The pod’s containers can then read from and write to the mounted volume.

Example of a PVC definition:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
      storageClassName: standard

In this example, the PVC requests 1 GiB of storage with `ReadWriteOnce` access mode and uses the `standard` storage class.
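To show the Pod Definition step, here is a minimal sketch of a pod that mounts the `my-pvc` claim. The pod name, image, and mount path are placeholders chosen for illustration, not values from a real deployment.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pvc-demo                   # hypothetical pod name
spec:
  containers:
    - name: app
      image: nginx:1.27            # placeholder image
      volumeMounts:
        - name: data               # must match the volume name below
          mountPath: /usr/share/nginx/html   # hypothetical mount path
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: my-pvc          # the claim must exist in the pod's namespace
```

The pod stays in `Pending` until the claim is bound, so `kubectl get pvc my-pvc` is a useful first check when such a pod does not start.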

Interaction Between Pods, PersistentVolumes, and PersistentVolumeClaims

The interaction between pods, PersistentVolumes (PVs), and PersistentVolumeClaims (PVCs) is central to Kubernetes’ storage management. Understanding this interaction is crucial for managing persistent data effectively.

The components interact as follows:

- PersistentVolume (PV): A PV is a resource in the cluster that represents a piece of storage in the infrastructure. It is provisioned by an administrator or dynamically provisioned using a StorageClass. It has a capacity, access modes, and reclaim policy.

- PersistentVolumeClaim (PVC): A PVC is a request for storage by a user. It specifies the desired size, access modes, and optionally, a storage class. It is created in a specific namespace.

- Pod: A pod uses a PVC to mount a volume. The pod definition references the PVC by its name, and Kubernetes mounts the associated PV to the pod’s container.

- Binding: When a PVC is created, the Kubernetes control plane searches for a PV that matches the PVC’s requirements. If a matching PV is found, the PVC is bound to it. If a PV is dynamically provisioned, the PVC is automatically bound.

Consider a scenario where a database pod requires persistent storage:

- A PVC is created, requesting a specific storage size and access mode (e.g., ReadWriteOnce).

- A matching PV is either pre-provisioned by an administrator or dynamically provisioned by a storage provider.

- The PVC is bound to the PV.

- The database pod definition references the PVC.

- When the pod is created, Kubernetes mounts the PV to the pod’s container, allowing the database to store its data persistently.

This design allows for decoupling the storage infrastructure from the application, enabling portability and easier management of storage resources. It also allows for storage capacity to be scaled independently of the application’s compute resources.
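For the pre-provisioned path in the scenario above, an administrator might create a PV along these lines. This is a sketch under stated assumptions: the name, size, class name, and `hostPath` backing are hypothetical, and `hostPath` is only appropriate for single-node test clusters.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: db-pv                            # hypothetical PV name
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain  # keep the data after the claim is deleted
  storageClassName: manual               # hypothetical; a PVC must request the same class to bind
  hostPath:
    path: /mnt/data                      # node-local directory (test clusters only)
```

A PVC requesting at most 10Gi with `ReadWriteOnce` and `storageClassName: manual` would be eligible to bind to this volume.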

Container Resource Management (CPU/Memory)

Managing container resources effectively is crucial for the stability, performance, and cost-efficiency of applications running in Kubernetes. Kubernetes provides mechanisms to control the CPU and memory allocated to containers, preventing resource contention and ensuring that applications receive the necessary resources to function correctly. Understanding and implementing these mechanisms is fundamental for operating a Kubernetes cluster successfully.

Setting Resource Requests and Limits for Containers

Resource requests and limits are defined in the container specification within a Kubernetes pod definition. These values tell the scheduler how much capacity to reserve for the container and tell the node how to cap its consumption. Both are set under the container’s `resources` field.

Here’s how it works:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: my-container
      image: my-image:latest
      resources:
        requests:
          cpu: "100m"
          memory: "128Mi"
        limits:
          cpu: "500m"
          memory: "512Mi"
```

The `requests` section specifies the minimum amount of resources the container is guaranteed to receive. The Kubernetes scheduler uses these values to determine where to place the pod. The `limits` section defines the maximum amount of resources the container can use. If a container exceeds its limits, it may be throttled (for CPU) or terminated (for memory).

Here is a breakdown of the key components:

- `requests`: Defines the minimum amount of resources a container requires.

- `limits`: Defines the maximum amount of resources a container can use.

- CPU: Measured in CPU units (e.g., “100m” for 0.1 CPU, “1” for 1 CPU).

- Memory: Measured in bytes (e.g., “128Mi” for 128 mebibytes, “1Gi” for 1 gibibyte).

How Kubernetes Uses Resource Requests and Limits for Scheduling and Resource Allocation

Kubernetes leverages resource requests and limits extensively during scheduling and resource allocation. The scheduler considers the requests when deciding which node to place a pod on, ensuring that the node has sufficient available resources. Limits help prevent a single pod from consuming excessive resources and impacting other pods on the same node.

The scheduler ensures that a node has enough unreserved capacity to satisfy a pod’s requests before assigning it to that node. This keeps the node from being overcommitted on requested resources. When a pod is created, the scheduler:

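- Checks Node Capacity: It examines each node’s allocatable CPU and memory, minus the requests of the pods already assigned to that node. Live usage is not part of this calculation.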

- Compares with Requests: It compares the pod’s resource requests with the available resources on each node.

- Selects a Suitable Node: It selects a node that has enough resources to satisfy the pod’s requests.

Resource limits, on the other hand, control the maximum resource consumption of a container.

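- CPU Limits: If a container tries to use more CPU than its limit, it is throttled. On Linux nodes the kernel enforces this through the container’s cgroup CPU quota, so the container keeps running, only slower.

- Memory Limits: If a container exceeds its memory limit, the Linux kernel’s out-of-memory killer terminates it, and the container’s status reports the reason `OOMKilled` (Out Of Memory Killed). The kubelet then restarts the container according to the pod’s restart policy.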

By using both requests and limits, Kubernetes provides a robust mechanism for managing resources and ensuring efficient resource utilization across the cluster.

Best Practices for Resource Management to Optimize Application Performance

Implementing best practices for resource management can significantly improve application performance and resource utilization in Kubernetes. This involves careful planning, monitoring, and tuning of resource requests and limits based on application behavior and workload characteristics.

Effective resource management involves several key practices:

- Right-sizing Requests and Limits: Analyze application resource consumption using monitoring tools to determine appropriate request and limit values. Start with conservative estimates and adjust based on observed behavior. Avoid setting requests too high, as this can lead to inefficient resource allocation, or too low, which can lead to performance issues.

- Monitoring Resource Usage: Continuously monitor CPU and memory usage of containers using tools like Prometheus and Grafana. This data provides insights into application performance and helps identify potential resource bottlenecks.

- Horizontal Pod Autoscaling (HPA): Implement HPA to automatically scale the number of pods based on resource utilization metrics, such as CPU and memory. This ensures that the application can handle fluctuating workloads.

- Resource Quotas: Use resource quotas to limit the total resources that a namespace can consume. This prevents resource exhaustion and ensures fair resource allocation across different teams or applications.

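- Balance Limits Against Requests: Limits do not reserve capacity, so a limit set far above the request lets a container burst at the expense of its neighbors and makes node overcommitment more likely. A limit set too low causes CPU throttling or out-of-memory kills. Keep limits close enough to requests that the node can honor them under load.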

- Regular Review and Tuning: Regularly review and adjust resource requests and limits based on application performance and workload changes. This ensures that resource allocation remains optimal over time.
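The Resource Quotas practice can be sketched as follows. The namespace, quota name, and every number here are hypothetical and would need to reflect the team’s real capacity budget.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-quota          # hypothetical quota name
  namespace: team-a            # hypothetical namespace
spec:
  hard:
    requests.cpu: "4"          # sum of CPU requests across all pods in the namespace
    requests.memory: 8Gi       # sum of memory requests
    limits.cpu: "8"            # sum of CPU limits
    limits.memory: 16Gi        # sum of memory limits
    pods: "20"                 # maximum number of pods
```

Once a quota covers CPU or memory, pods in that namespace that omit the corresponding requests or limits are rejected unless a LimitRange supplies defaults, which is a useful nudge toward setting them explicitly.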

By following these best practices, you can optimize resource utilization, improve application performance, and reduce infrastructure costs in your Kubernetes environment.

Container Monitoring and Logging

Effective monitoring and logging are critical for maintaining the health, performance, and security of containerized applications within Kubernetes. They provide valuable insights into application behavior, resource utilization, and potential issues, enabling proactive troubleshooting and optimization. Robust monitoring and logging practices are essential for operational excellence in a Kubernetes environment.

Collecting Logs from Containers

Kubernetes provides several mechanisms for collecting logs from containers, each with its own advantages and use cases. Understanding these methods is crucial for establishing a comprehensive logging strategy.

- Container Logs: Kubernetes automatically captures the standard output (stdout) and standard error (stderr) streams from each container and makes them accessible. These logs can be viewed using the `kubectl logs` command. This is the simplest and most basic method for log retrieval.

- Logging Agents: Deploying a logging agent (e.g., Fluentd, Fluent Bit, Logstash) as a DaemonSet is a common approach. These agents run on each node in the cluster and collect logs from all containers running on that node. They then forward the logs to a centralized logging system for storage and analysis.

- Sidecar Containers: A sidecar container can be deployed alongside the main application container. This sidecar container is responsible for collecting logs from the application container (e.g., reading log files or listening for log messages) and forwarding them to a centralized logging system. This approach is particularly useful when the application itself does not natively support log forwarding.

- Log Volumes: Applications can write logs to a shared volume, which is then mounted by a logging agent or sidecar container for collection. This approach allows for more complex log processing and aggregation before forwarding.
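The sidecar and log-volume approaches above can be combined in a single pod. The sketch below is illustrative only: the pod name, container names, and log path are hypothetical, and the "application" is a shell loop standing in for a real workload that writes to a file.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-with-log-sidecar       # hypothetical pod name
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["/bin/sh", "-c"]
      args:
        - |
          # Stand-in for an application that logs to a file instead of stdout.
          while true; do
            echo "$(date) app event" >> /var/log/app/app.log
            sleep 5
          done
      volumeMounts:
        - name: app-logs
          mountPath: /var/log/app
    - name: log-streamer           # sidecar: re-emits the log file on its own stdout
      image: busybox:1.36
      command: ["/bin/sh", "-c", "tail -n+1 -F /var/log/app/app.log"]
      volumeMounts:
        - name: app-logs
          mountPath: /var/log/app
          readOnly: true
  volumes:
    - name: app-logs
      emptyDir: {}                 # shared scratch volume that lives as long as the pod
```

Because the sidecar writes to stdout, `kubectl logs app-with-log-sidecar -c log-streamer` shows the file’s contents, and a node-level logging agent can collect them like any other container log.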

Integrating a Logging Solution with Kubernetes

Integrating a logging solution with Kubernetes typically involves deploying a logging agent or configuring application containers to send logs to a centralized logging backend. Several popular logging solutions can be integrated with Kubernetes.

- Fluentd: Fluentd is a popular open-source data collector for unified logging. It can be deployed as a DaemonSet in Kubernetes to collect logs from all nodes. Fluentd can then forward the logs to various backends, such as Elasticsearch, Splunk, or cloud-based logging services.

- Elasticsearch and Kibana (EFK Stack): The EFK stack (Elasticsearch, Fluentd, and Kibana) is a widely used logging solution for Kubernetes. Fluentd collects and forwards logs to Elasticsearch, a distributed search and analytics engine. Kibana provides a web interface for visualizing and analyzing the logs stored in Elasticsearch.

- Prometheus and Grafana: These are monitoring tools rather than log collectors. Prometheus scrapes numeric metrics, not log lines, so it does not aggregate logs itself. Grafana can display logs alongside metrics when it is connected to a log backend such as Loki or Elasticsearch.

- Cloud-Provider Specific Solutions: Cloud providers such as Google Cloud (Google Cloud Logging), Amazon Web Services (CloudWatch), and Microsoft Azure (Azure Monitor) offer managed logging services that can be easily integrated with Kubernetes. These services often provide advanced features such as log analysis, alerting, and compliance.

Example of a Fluentd configuration for Kubernetes:

This example demonstrates a basic Fluentd configuration (fluentd.conf) for collecting logs from containers and forwarding them to Elasticsearch:

```
<source>
  @type kubernetes_logs
  tag logs.*
  @log_level info
</source>

<match logs.**>
  @type elasticsearch
  host elasticsearch-service
  port 9200
  index_name fluentd
  type_name fluentd
  <buffer>
    @type file
    path /var/log/fluentd-buffer/fluentd.buffer
    flush_interval 5s
    chunk_limit_size 2M
  </buffer>
</match>
```

In this configuration, the `kubernetes_logs` input collects logs from Kubernetes containers, and the `elasticsearch` output plugin forwards them to an Elasticsearch instance. The `<match logs.**>` pattern matches every tag that starts with `logs.`. The `kubernetes_logs` input name should be read as a placeholder: Fluentd deployments on Kubernetes commonly use the built-in `tail` input to read `/var/log/containers/*.log` instead. This configuration should be deployed as a ConfigMap and a DaemonSet within the Kubernetes cluster. The `host` and `port` settings must match the address of the Elasticsearch service.
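A deployment of that configuration as a ConfigMap plus a DaemonSet might look roughly like the sketch below. Everything named here is an assumption to verify before use: the `logging` namespace, the object names, the image tag, and the config path inside the container. The stock `fluent/fluentd` image also does not include the Elasticsearch output plugin, so a real deployment would need an image that bundles it.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentd-config             # hypothetical name
  namespace: logging               # hypothetical namespace; must already exist
data:
  fluentd.conf: |
    # Place the Fluentd configuration for your cluster here.
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: logging
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
    spec:
      containers:
        - name: fluentd
          image: fluent/fluentd:v1.16-1        # assumed tag; verify against the registry
          volumeMounts:
            - name: config
              mountPath: /fluentd/etc/fluent.conf   # assumed default config path for this image
              subPath: fluentd.conf
            - name: varlog
              mountPath: /var/log              # node logs, including container log files
      volumes:
        - name: config
          configMap:
            name: fluentd-config
        - name: varlog
          hostPath:
            path: /var/log
```

A DaemonSet fits here because it schedules exactly one agent pod per node, which is what node-level log collection requires.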

Creating a Monitoring Strategy for Container Resource Usage

Monitoring container resource usage is crucial for optimizing application performance, ensuring resource availability, and preventing resource exhaustion. A comprehensive monitoring strategy involves tracking CPU and memory utilization.

- Resource Metrics Server: The Kubernetes Resource Metrics Server provides metrics for CPU and memory usage of pods and nodes. It collects metrics from the kubelet and makes them available through the Kubernetes API. This allows you to monitor resource usage using tools like `kubectl top pod` and `kubectl top node`.

- Prometheus: Prometheus is a powerful open-source monitoring system that can be used to collect, store, and query metrics. In Kubernetes it is typically configured to scrape the kubelet’s cAdvisor endpoint for container metrics, kube-state-metrics for object state, and application containers that expose their own metrics endpoints.

- Grafana: Grafana is a popular data visualization and dashboarding tool that can be used to visualize metrics collected by Prometheus. You can create dashboards to display CPU and memory utilization, as well as other relevant metrics.

- Alerting: Configure alerts based on resource usage thresholds. For example, you can set up alerts to be triggered when a container’s CPU utilization exceeds a certain percentage or when a pod’s memory usage approaches its limits. This enables proactive response to potential issues.

- Horizontal Pod Autoscaling (HPA): Kubernetes’ Horizontal Pod Autoscaler (HPA) automatically scales the number of pods in a deployment based on observed CPU utilization or other custom metrics. This ensures that your application can handle changing workloads and maintain optimal performance.
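As one possible shape for the autoscaling item above, the following sketch scales a Deployment on CPU utilization. The Deployment name `my-app`, the replica bounds, and the 70% target are hypothetical values chosen for illustration.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa               # hypothetical name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app                 # hypothetical Deployment to scale
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the pods' CPU requests, averaged across pods
```

Utilization targets are computed against CPU requests, so this only works when the target pods set `resources.requests.cpu` and a metrics source such as the Metrics Server is running.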

Example of a Prometheus query for CPU utilization:

This example demonstrates a Prometheus query that calculates the CPU usage, in cores, of a pod named “my-app”, averaged over the last 5 minutes:

```
sum(rate(container_cpu_usage_seconds_total{pod="my-app"}[5m])) by (pod)
```

This query calculates the per-second rate of CPU time consumed by each container in the “my-app” pod and sums those rates to obtain the pod’s total CPU usage. A result of 0.25, for example, means the pod used a quarter of one CPU core on average. The query could be used within a Grafana dashboard to visualize the CPU usage of the pod over time. The `rate()` function calculates the per-second rate of change of the time series, while `sum()` aggregates the results. The `[5m]` specifies the range of time to consider for the calculation.
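The same expression can drive the alerting described earlier in this section. Below is a sketch of a Prometheus alerting rule file; the group name, alert name, 0.4-core threshold, and 10-minute hold time are all hypothetical and should be tuned to the workload.

```yaml
groups:
  - name: container-resources        # hypothetical group name
    rules:
      - alert: PodHighCpuUsage       # hypothetical alert name
        # Fires when the pod's 5-minute average CPU usage exceeds 0.4 cores.
        expr: sum(rate(container_cpu_usage_seconds_total{pod="my-app"}[5m])) by (pod) > 0.4
        for: 10m                     # condition must hold this long before the alert fires
        labels:
          severity: warning
        annotations:
          summary: "Pod {{ $labels.pod }} has used more than 0.4 CPU cores for 10 minutes"
```

The `for` clause filters out short spikes, which keeps the alert focused on sustained pressure rather than momentary bursts.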

Last Point

In conclusion, we’ve traversed the landscape of how Kubernetes manages container lifecycles, revealing the core components that make it such a powerful orchestration tool. From the initial scheduling of containers to their eventual termination, Kubernetes provides a robust framework for managing the entire lifecycle. By understanding these mechanisms, developers and operators can leverage Kubernetes to build resilient, scalable, and efficient applications, paving the way for a modern, containerized future.

Essential Questionnaire

What is a Pod in Kubernetes?

A Pod is the smallest deployable unit in Kubernetes. It represents a single instance of a running process in your cluster, and it can contain one or more containers that share storage and network resources.

How does Kubernetes handle container restarts?

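The kubelet restarts a container when it exits or when its liveness probe fails, subject to the pod’s `restartPolicy` (`Always` by default). Liveness probes let Kubernetes detect containers that are still running but no longer healthy. Repeated failures are retried with an increasing back-off delay, which appears as the `CrashLoopBackOff` status.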
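A liveness probe is declared per container. The sketch below assumes a hypothetical application that serves a health endpoint at `/healthz` on port 8080; the pod name, image, and timing values are illustrative only.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-demo            # hypothetical pod name
spec:
  restartPolicy: Always          # the default, shown here for clarity
  containers:
    - name: web
      image: my-image:latest     # placeholder image
      ports:
        - containerPort: 8080
      livenessProbe:
        httpGet:
          path: /healthz         # hypothetical health endpoint
          port: 8080
        initialDelaySeconds: 10  # wait before the first probe
        periodSeconds: 5         # probe every 5 seconds
        failureThreshold: 3      # restart after 3 consecutive failures
```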

What is the difference between Requests and Limits in Kubernetes resource management?

Requests specify the minimum amount of resources (CPU and memory) a container needs to run. Limits define the maximum amount of resources a container can consume. Kubernetes uses requests for scheduling and limits to prevent resource exhaustion.

How does Kubernetes update container images in a deployment?

Deployments use the `RollingUpdate` strategy by default. It gradually replaces old pods with new ones, so that the application remains available throughout the update process. This strategy minimizes downtime.
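The pace of a rolling update can be tuned on the Deployment itself. In this sketch the names, replica count, and image tag are hypothetical; `maxSurge` and `maxUnavailable` are the two fields that control how aggressively pods are replaced.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                   # hypothetical Deployment name
spec:
  replicas: 4
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1                # at most one extra pod above the desired count
      maxUnavailable: 0          # never drop below the desired count during the update
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-container
          image: my-image:v2     # hypothetical tag; changing the pod template triggers a rollout
```

Progress can be followed with `kubectl rollout status deployment/my-app`, and `kubectl rollout undo deployment/my-app` reverts to the previous revision if the new version misbehaves.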