The evolution of cloud computing has ushered in the era of serverless architectures, promising scalability and efficiency. However, this shift presents new challenges in the realm of observability. Effective monitoring and logging are not merely supplementary activities; they are fundamental to the operational health and security of serverless applications. Understanding the nuances of serverless monitoring and logging is crucial for developers and operations teams seeking to harness the full potential of this innovative paradigm.

This guide to serverless monitoring and logging will dissect the core concepts, best practices, and essential tools required to build robust and resilient serverless applications. We will explore key metrics, logging strategies, and the implementation of alerting systems, all while addressing critical aspects of security and cost optimization. The analysis will provide actionable insights, enabling practitioners to navigate the complexities of serverless environments with confidence and precision.

Introduction to Serverless Monitoring and Logging

Serverless computing represents a paradigm shift in application development and deployment, moving away from managing infrastructure to focusing solely on code execution. This approach introduces unique challenges and opportunities for monitoring and logging, necessitating a distinct set of strategies and tools compared to traditional server-based systems. Effective serverless monitoring and logging are crucial for understanding application behavior, identifying performance bottlenecks, troubleshooting issues, and ensuring the overall health and reliability of serverless applications.

Serverless Architecture Fundamentals

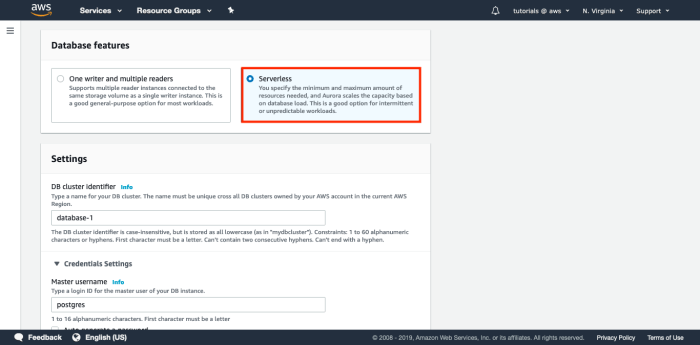

Serverless architecture is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. Developers deploy code in the form of functions, and the cloud provider handles all aspects of server management, including provisioning, scaling, and maintenance. This contrasts sharply with traditional server-based systems, where developers are responsible for managing servers, operating systems, and related infrastructure.

Key characteristics that distinguish serverless from traditional architectures include:

- No Server Management: Developers do not provision or manage servers. The cloud provider handles all infrastructure-related tasks.

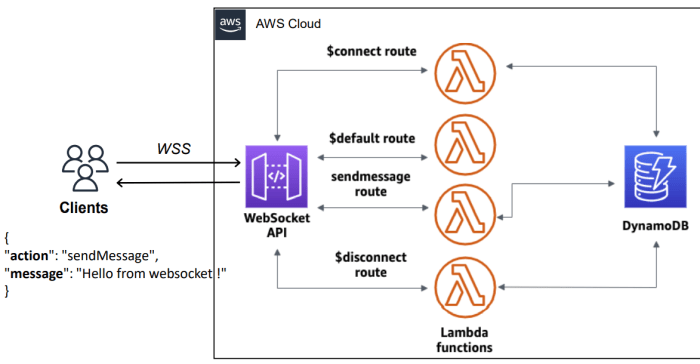

- Event-Driven: Serverless functions are typically triggered by events, such as HTTP requests, database updates, or scheduled timers.

- Automatic Scaling: The cloud provider automatically scales the resources allocated to functions based on demand.

- Pay-per-Use: Developers are charged only for the actual compute time and resources consumed by their functions.

- Stateless Functions: Serverless functions are generally stateless, meaning they do not maintain any state between invocations. Any required state is typically stored in external services, such as databases or object storage.

Defining Serverless Monitoring and Logging

Serverless monitoring and logging involve the collection, analysis, and interpretation of data generated by serverless applications to understand their behavior, performance, and health. This encompasses tracking metrics, collecting logs, and setting up alerts to proactively identify and resolve issues. Unlike traditional monitoring, serverless monitoring must account for the ephemeral nature of functions and the distributed nature of serverless applications.

Serverless logging focuses on capturing events and messages generated by serverless functions and the underlying cloud services. This data provides insights into the application’s execution flow, errors, and performance characteristics. The logs are crucial for debugging, auditing, and gaining a comprehensive understanding of the application’s behavior.

Benefits of Effective Serverless Monitoring and Logging

Implementing robust serverless monitoring and logging practices provides numerous benefits:

- Improved Application Reliability: Monitoring allows for the early detection of issues, enabling rapid troubleshooting and reducing the risk of application downtime. By analyzing logs and metrics, developers can identify the root causes of errors and implement corrective measures.

- Enhanced Performance Optimization: Monitoring and logging provide insights into function performance, identifying bottlenecks and areas for optimization. By analyzing metrics such as execution time, memory usage, and cold start times, developers can improve function efficiency and reduce costs.

- Cost Optimization: By monitoring resource consumption, developers can identify and eliminate unnecessary costs. Analyzing logs and metrics can reveal opportunities to optimize function configurations, reduce resource usage, and minimize the overall cost of serverless applications.

- Simplified Debugging: Comprehensive logging provides detailed information about function executions, including input parameters, output values, and any errors that occurred. This information is invaluable for debugging issues and understanding the behavior of the application.

- Proactive Issue Resolution: Setting up alerts based on predefined thresholds allows for proactive identification and resolution of potential problems. Monitoring systems can automatically notify developers when critical metrics exceed defined limits, allowing them to address issues before they impact users.

- Compliance and Auditing: Logging provides a record of application activity, which is essential for meeting compliance requirements and conducting audits. Logs can be used to track user actions, identify security breaches, and ensure that the application adheres to regulatory standards.

Key Metrics to Monitor in Serverless Environments

Serverless environments introduce a paradigm shift in application architecture, demanding a refined approach to monitoring and logging. Traditional monitoring practices often fall short in these dynamic and ephemeral systems. Understanding and tracking key metrics is crucial for maintaining application health, optimizing performance, and ensuring cost-effectiveness. This section details the critical metrics that should be monitored to effectively manage serverless applications.

Essential Metrics for Serverless Functions

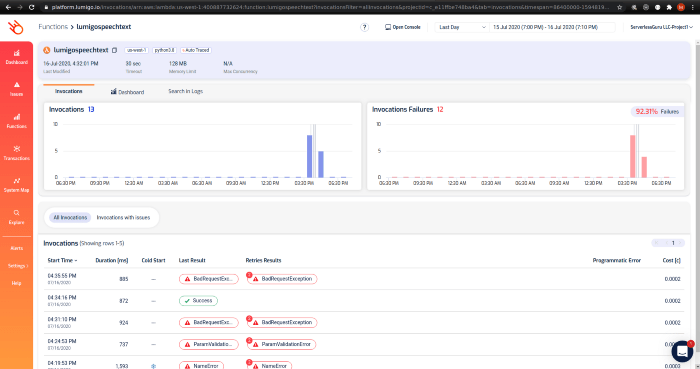

Monitoring serverless functions requires a focus on metrics that reflect their execution and resource consumption. These metrics provide insights into function performance, error rates, and overall efficiency. Analyzing these metrics allows for proactive identification of issues and optimization opportunities.

- Invocation Count: This metric represents the number of times a function is executed. A sudden increase in invocation count can indicate increased traffic or potential issues with event triggers. A consistent pattern of invocations allows for capacity planning.

- Duration: This metric measures the time a function takes to execute, from invocation to completion. Monitoring duration helps identify performance bottlenecks and inefficiencies within the function code. Prolonged durations can impact user experience and increase costs.

Duration (in seconds) = End Time – Start Time

For example, a function processing image resizing may exhibit increased duration when processing larger image files.

- Errors: Tracking the number of function errors is critical for identifying and resolving issues. Errors can indicate problems in the code, configuration, or dependencies. Monitoring error rates allows for immediate responses to application failures.

Error Rate (%) = (Number of Errors / Total Invocations) × 100

For instance, a function interacting with a database may experience errors due to network issues or incorrect credentials.

- Throttles: Serverless platforms impose limits on function concurrency and resource usage. Monitoring throttle events reveals if a function is exceeding these limits, leading to rejected invocations. Identifying and resolving throttle issues ensures application availability.

- Cold Starts: Cold starts occur when a function is invoked and the execution environment must be initialized. This results in increased latency. Monitoring cold start metrics helps identify functions that are frequently cold-started, allowing optimization of function configuration or code. For example, a function that’s infrequently called may exhibit a higher cold start rate.

- Memory Usage: This metric tracks the amount of memory a function consumes during execution. Monitoring memory usage helps identify memory leaks or inefficient code. Optimizing memory allocation improves function performance and reduces costs.

- Concurrency: This metric reflects the number of function instances running concurrently. Monitoring concurrency is essential for understanding resource utilization and identifying potential bottlenecks.
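The formulas above can be applied to raw invocation records. The following is a minimal sketch, not a provider API: the `Invocation` record and its fields are hypothetical names chosen for illustration, and real platforms expose these values through their own metrics services.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Invocation:
    """One function execution. All fields are hypothetical, for illustration."""
    start: float       # start time, in seconds
    end: float         # end time, in seconds
    failed: bool       # True if the invocation ended in an error
    cold_start: bool   # True if a new execution environment was initialized


def summarize(invocations: list[Invocation]) -> dict[str, float]:
    """Compute invocation count, average duration, error rate, and cold start rate."""
    total = len(invocations)
    if total == 0:
        return {"invocations": 0, "avg_duration_s": 0.0,
                "error_rate_pct": 0.0, "cold_start_rate_pct": 0.0}
    # Duration = End Time - Start Time, per invocation
    durations = [inv.end - inv.start for inv in invocations]
    errors = sum(inv.failed for inv in invocations)
    cold_starts = sum(inv.cold_start for inv in invocations)
    return {
        "invocations": total,
        "avg_duration_s": mean(durations),
        # Error Rate = (Number of Errors / Total Invocations) * 100
        "error_rate_pct": errors / total * 100,
        "cold_start_rate_pct": cold_starts / total * 100,
    }


sample = [
    Invocation(0.0, 0.5, failed=False, cold_start=True),
    Invocation(1.0, 1.25, failed=False, cold_start=False),
    Invocation(2.0, 2.25, failed=True, cold_start=False),
    Invocation(3.0, 4.0, failed=False, cold_start=False),
]
print(summarize(sample))
```

In this sample, one of four invocations fails and one is a cold start, so both rates come out at 25%.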

API Gateway Metrics and Their Significance

API Gateway acts as the entry point for serverless applications, managing incoming requests and routing them to the appropriate backend services. Monitoring API Gateway metrics provides insights into the performance and health of the entire API.

- Request Latency: This metric measures the time it takes for the API Gateway to process a request and return a response. High latency can negatively impact user experience. Analyzing latency helps identify bottlenecks in the API infrastructure.

- Error Rates: Monitoring error rates at the API Gateway level reveals issues with the API endpoints or backend services. High error rates indicate problems that require immediate attention. Error rates can be categorized based on HTTP status codes (e.g., 4xx, 5xx) to provide more granular insights.

- Traffic Volume: Tracking the number of requests received by the API Gateway provides insights into API usage and traffic patterns. Monitoring traffic volume helps in capacity planning and identifying potential performance issues.

- Integration Latency: This metric measures the time spent by the API Gateway integrating with backend services. High integration latency can indicate problems with the backend service performance or network connectivity.

- Cache Hit Ratio: If API caching is enabled, monitoring the cache hit ratio indicates how effectively the cache is serving requests. A low cache hit ratio may indicate that the cache is not configured correctly or that the data is not being cached efficiently.
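As a rough illustration of two of the metrics above, the sketch below groups HTTP status codes into 4xx and 5xx error rates and computes a cache hit ratio. The function names and the sample data are assumptions made for this example; a managed API gateway would normally report these figures directly.

```python
from collections import Counter


def status_class(status_code: int) -> str:
    """Map an HTTP status code to its class, e.g. 404 -> '4xx'."""
    return f"{status_code // 100}xx"


def error_rates(status_codes: list[int]) -> dict[str, float]:
    """Return the percentage of requests that ended in 4xx and 5xx responses."""
    total = len(status_codes)
    if total == 0:
        return {"4xx": 0.0, "5xx": 0.0}
    classes = Counter(status_class(code) for code in status_codes)
    return {cls: classes[cls] / total * 100 for cls in ("4xx", "5xx")}


def cache_hit_ratio(hits: int, misses: int) -> float:
    """Fraction of lookups served from the cache (0.0 when there is no traffic)."""
    lookups = hits + misses
    return hits / lookups if lookups else 0.0


codes = [200, 200, 201, 404, 500, 200, 403, 200]  # made-up sample traffic
print(error_rates(codes))
print(cache_hit_ratio(hits=75, misses=25))
```

Separating 4xx from 5xx matters because the first usually points at client requests and the second at the backend, so they tend to call for different alerts.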

Serverless Application Metrics and Thresholds

The following table summarizes essential serverless application metrics and their recommended thresholds. These thresholds serve as guidelines for proactive monitoring and alerting.

| Metric | Description | Threshold | Impact | Remediation |
|---|---|---|---|---|
| Function Duration | Time taken for a function to execute | > 5 seconds (configurable based on function requirements) | Slow response times, increased costs | Optimize code, increase memory allocation |
| Function Error Rate | Percentage of failed function invocations | > 1% | Application failures, degraded user experience | Investigate and fix code errors, configuration issues |
| API Gateway Latency | Time to process an API request | > 1 second (configurable based on API requirements) | Slow response times, user frustration | Optimize backend services, caching strategies |
| API Gateway Error Rate | Percentage of failed API requests | > 1% (configurable based on API requirements) | Application unavailability, data loss | Address backend service issues, network problems |
| Cold Start Rate | Percentage of function invocations with cold starts | > 10% (configurable based on function requirements) | Increased latency, performance degradation | Optimize function configuration, provisioned concurrency |
| Throttles | Number of function invocations throttled | > 0 | Application unavailability | Increase function concurrency, optimize resource usage |
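One way to put these thresholds to work is a simple check that compares observed values against them. This is a sketch under assumptions: the metric key names and the `observed` values are invented for illustration, and the limits are the guideline values from the table, which should be tuned per function and API.

```python
# Guideline thresholds taken from the table above; tune them per workload.
THRESHOLDS = {
    "function_duration_s": 5.0,
    "function_error_rate_pct": 1.0,
    "api_latency_s": 1.0,
    "api_error_rate_pct": 1.0,
    "cold_start_rate_pct": 10.0,
    "throttles": 0,
}


def breached(observed: dict[str, float]) -> list[str]:
    """Return the names of metrics whose observed value exceeds its threshold."""
    return sorted(
        name for name, value in observed.items()
        if name in THRESHOLDS and value > THRESHOLDS[name]
    )


# Hypothetical readings for one evaluation window.
observed = {
    "function_duration_s": 6.2,
    "function_error_rate_pct": 0.4,
    "api_latency_s": 0.3,
    "api_error_rate_pct": 2.5,
    "cold_start_rate_pct": 4.0,
    "throttles": 3,
}
print(breached(observed))
```

In practice this comparison is usually delegated to the provider's alarm service rather than run in application code; the sketch only shows the logic.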

Logging Best Practices for Serverless Applications

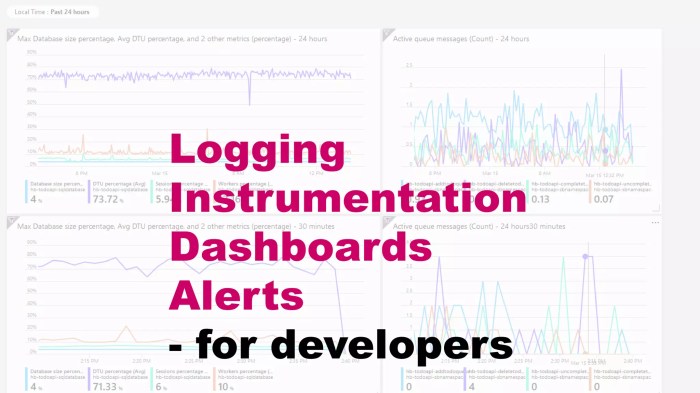

Effective logging is crucial for the operational success of serverless applications. It provides insights into application behavior, aids in debugging, and supports performance monitoring. The ephemeral nature of serverless functions necessitates a robust logging strategy that captures relevant information and facilitates efficient analysis. This section details best practices for logging in serverless environments.

Structured logging, in particular, is highly recommended for its ability to simplify log analysis and improve the overall observability of serverless applications. This approach enables the consistent formatting of log data, allowing for easier searching, filtering, and aggregation of information across different functions and services.

Effective Logging Strategies for Serverless Functions

Serverless functions often run in short-lived environments, making it imperative to adopt logging strategies that capture and retain critical information efficiently. Implementing these strategies ensures comprehensive visibility into the application’s performance and health.

- Centralized Logging: Centralizing logs from all serverless functions to a single location, such as a cloud-based logging service (e.g., AWS CloudWatch, Google Cloud Logging, Azure Monitor), is essential. This consolidation simplifies log aggregation, analysis, and correlation across the entire application. This enables the creation of a unified view of the application’s behavior, facilitating easier troubleshooting and performance monitoring.

- Asynchronous Logging: To avoid impacting function performance, use asynchronous logging. This involves writing logs to a queue or buffer and then processing them separately. This approach prevents logging from blocking the function’s execution, which is particularly important for functions with high traffic volumes.

- Contextual Logging: Enriching logs with contextual information, such as request IDs, user IDs, and function invocation details, significantly improves the ability to trace requests across different services and functions. This contextualization aids in pinpointing the root cause of issues and understanding the flow of execution within the application.

- Automated Logging Configuration: Implement automated logging configuration using infrastructure-as-code (IaC) tools. This approach ensures consistent logging across all functions and environments, reducing the risk of misconfigurations and human error. It also simplifies the management and deployment of logging settings.

- Log Retention Policies: Establish clear log retention policies based on business requirements and compliance regulations. This involves defining how long logs should be stored and when they should be archived or deleted. Appropriate log retention policies help manage storage costs and maintain compliance with relevant standards.
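Contextual logging, as described in the list above, could be sketched with Python's standard `logging.LoggerAdapter`, which attaches the same context fields to every message. The logger name, the event shape, and the `handle` function are hypothetical; a real handler would take its request ID from the platform's invocation context.

```python
import io
import logging


def build_logger(stream) -> logging.Logger:
    """Create a logger whose format includes request and user context fields."""
    logger = logging.getLogger("orders-service")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.handlers.clear()   # avoid duplicate handlers if called more than once
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(
        "%(levelname)s request_id=%(request_id)s user_id=%(user_id)s %(message)s"
    ))
    logger.addHandler(handler)
    return logger


def handle(event: dict, base_logger: logging.Logger) -> str:
    """Hypothetical handler: bind the request context once, then log normally."""
    context = {"request_id": event["request_id"], "user_id": event["user_id"]}
    log = logging.LoggerAdapter(base_logger, context)
    log.info("order received")
    log.info("order stored")
    return "ok"


buffer = io.StringIO()  # stands in for stdout so the output can be inspected
handle({"request_id": "abc-123-def", "user_id": "123"}, build_logger(buffer))
print(buffer.getvalue(), end="")
```

Because the context is bound once per invocation, individual log calls stay short while every line remains traceable to a single request.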

Log Formats and Advantages

Choosing the right log format is critical for effective log analysis. Structured formats, particularly JSON, offer significant advantages over unstructured text-based logs.

- JSON Format: JSON (JavaScript Object Notation) is a widely adopted structured format. It enables the consistent formatting of log data with key-value pairs, facilitating easy parsing, searching, and filtering. The structured nature of JSON logs simplifies automated analysis and enables the creation of dashboards and alerts based on specific log events.

- Text-based Logs: While less structured, text-based logs can be useful for simple debugging or for capturing free-form messages. However, parsing and analyzing text-based logs can be more complex and time-consuming compared to structured formats.
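The JSON format described above can be produced with Python's standard `logging` module and a small custom formatter. This is a minimal sketch: the `JsonFormatter` class, the field names (`functionName`, `requestId`, `userId`, `error`), and the function name are illustrative assumptions, not a prescribed schema.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def __init__(self, function_name: str):
        super().__init__()
        self.function_name = function_name

    def format(self, record: logging.LogRecord) -> str:
        created = datetime.fromtimestamp(record.created, tz=timezone.utc)
        entry = {
            "timestamp": created.strftime("%Y-%m-%dT%H:%M:%SZ"),
            "level": record.levelname,
            "message": record.getMessage(),
            "functionName": self.function_name,
        }
        # Fields passed via `extra=` appear as attributes on the record.
        for key in ("requestId", "userId", "error"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)


stream = io.StringIO()  # stands in for stdout so the output can be inspected
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter("createUserFunction"))
logger = logging.getLogger("createUserFunction")
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(handler)

logger.debug("Input parameters received.", extra={"requestId": "abc-123-def"})
logger.info("User created successfully.",
            extra={"requestId": "abc-123-def", "userId": "123"})
logger.error("Failed to create user due to database connection error.",
             extra={"requestId": "abc-123-def", "error": "Database connection timeout"})
print(stream.getvalue(), end="")
```

Keeping one JSON object per line lets a logging service parse, filter, and aggregate each entry without custom text parsing.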

Examples of Log Levels

Log levels categorize the severity of log messages, enabling developers to filter and prioritize log data. Here are examples of how different log levels might be used in a serverless function:

DEBUG: Detailed information useful for debugging. This level provides fine-grained insights into the function’s execution, including variable values and function calls.

Example:

{"timestamp": "2024-10-27T10:00:00Z", "level": "DEBUG", "message": "Input parameters received: {'userId': '123', 'action': 'create'}", "functionName": "createUserFunction", "requestId": "abc-123-def"}

INFO: Informational messages about the function’s execution. This level typically includes successful operations and important milestones.

Example:

{"timestamp": "2024-10-27T10:00:05Z", "level": "INFO", "message": "User created successfully.", "functionName": "createUserFunction", "userId": "123", "requestId": "abc-123-def"}

WARNING: Indicates a potential issue that might require attention but does not necessarily halt execution, such as a deprecated API call or a resource constraint.

Example:

{"timestamp": "2024-10-27T10:00:10Z", "level": "WARNING", "message": "Deprecation warning: Using deprecated API version.", "functionName": "createUserFunction", "apiVersion": "v1", "requestId": "abc-123-def"}

ERROR: Indicates a critical error that prevents the function from completing its intended operation. These logs are essential for identifying and resolving issues.

Example:

{"timestamp": "2024-10-27T10:00:15Z", "level": "ERROR", "message": "Failed to create user due to database connection error.", "functionName": "createUserFunction", "error": "Database connection timeout", "requestId": "abc-123-def"}

Choosing the Right Monitoring and Logging Tools

Selecting the appropriate monitoring and logging tools is crucial for the effective operation and troubleshooting of serverless applications. The choice significantly impacts the ability to identify performance bottlenecks, security vulnerabilities, and operational inefficiencies. A well-chosen toolset provides actionable insights, enabling developers and operations teams to maintain application health, optimize resource utilization, and reduce costs.

This section provides a comparative analysis of popular tools, considering their features and pricing models.

Popular Monitoring and Logging Tools

Several tools are specifically designed to address the monitoring and logging requirements of serverless applications. Each tool offers a unique set of features and integrations, catering to different needs and preferences.

- AWS CloudWatch: CloudWatch is a comprehensive monitoring and observability service offered by Amazon Web Services (AWS). It provides functionalities for collecting, analyzing, and visualizing logs, metrics, and events from AWS resources, including serverless services like AWS Lambda, Amazon API Gateway, and Amazon DynamoDB. CloudWatch allows users to create dashboards, set alarms, and automate responses to operational events. It offers features such as log aggregation, filtering, and search capabilities, making it easier to identify and troubleshoot issues.

- Azure Monitor: Azure Monitor is the monitoring service provided by Microsoft Azure. It offers a unified platform for collecting, analyzing, and acting on telemetry data from various sources, including Azure services, on-premises resources, and other cloud providers. Azure Monitor provides features for monitoring application performance, infrastructure health, and security events. It supports log analytics, alerting, and visualization through dashboards. Azure Monitor integrates seamlessly with Azure serverless services like Azure Functions, Azure API Management, and Azure Cosmos DB.

- Google Cloud Logging (formerly Stackdriver): Google Cloud Logging, part of the Google Cloud Operations suite, is a fully managed logging service for Google Cloud Platform (GCP). It collects logs from various sources, including GCP services, custom applications, and third-party services. Google Cloud Logging offers powerful log search, filtering, and analysis capabilities. It also provides features for log routing, retention policies, and integration with other GCP services like Cloud Monitoring and Cloud Functions.

Comparative Analysis of Features and Pricing

The selection of a monitoring and logging tool should be guided by a thorough understanding of the available features and the associated costs. A detailed comparison helps to identify the tool that best aligns with specific requirements and budget constraints. The following table provides a comparative analysis of AWS CloudWatch, Azure Monitor, and Google Cloud Logging, focusing on key features and pricing considerations.

| Feature | AWS CloudWatch | Azure Monitor | Google Cloud Logging |
|---|---|---|---|
| Log Collection | Automatic collection from AWS services; custom log ingestion via APIs and agents. | Automatic collection from Azure services; custom log ingestion via APIs and agents. | Automatic collection from GCP services; custom log ingestion via APIs and agents. |
| Log Storage | Pay-as-you-go based on data volume and retention period. | Pay-as-you-go based on data volume and retention period. | Pay-as-you-go based on data volume and retention period. |
| Log Search and Filtering | Powerful search and filtering capabilities with structured logging support. | Log Analytics with Kusto Query Language (KQL) for advanced querying. | Advanced log search and filtering with regular expressions and structured logging support. |
| Metrics Collection | Built-in metrics for AWS services; custom metrics publishing. | Built-in metrics for Azure services; custom metrics publishing. | Built-in metrics for GCP services; custom metrics publishing. |
| Alerting | Real-time alerting based on metrics and log patterns. | Real-time alerting based on metrics and log patterns. | Real-time alerting based on metrics and log patterns. |
| Dashboards | Customizable dashboards for visualizing metrics and logs. | Customizable dashboards for visualizing metrics and logs. | Customizable dashboards for visualizing metrics and logs. |
| Pricing Model | Pay-as-you-go for data ingestion, storage, and query operations. Free tier available. | Pay-as-you-go for data ingestion, storage, and query operations. Free tier available. | Pay-as-you-go for data ingestion, storage, and query operations. Free tier available. |
| Integrations | Seamless integration with other AWS services. | Seamless integration with other Azure services. | Seamless integration with other GCP services. |
| Data Retention | Configurable retention periods, ranging from days to years. | Configurable retention periods, ranging from days to years. | Configurable retention periods, ranging from days to years. |

The pricing models of these tools are primarily based on data ingestion, storage, and query operations. Each provider offers a free tier with certain limitations, making them accessible for smaller projects or testing purposes. The cost can vary significantly depending on the volume of data generated by the serverless applications and the duration for which the logs are stored. For example, a high-traffic application generating a large volume of logs will incur higher costs compared to a low-traffic application.

Example: Consider a serverless application using AWS Lambda, API Gateway, and DynamoDB. If the application generates 100 GB of logs per month and requires a 30-day retention period, the cost for AWS CloudWatch would depend on the specific pricing tiers for log ingestion, storage, and data retrieval. The exact cost will vary depending on the region and the frequency of log queries.

Similarly, the costs for Azure Monitor and Google Cloud Logging would depend on their respective pricing structures, data volumes, and retention policies. It is essential to evaluate the pricing calculators provided by each cloud provider to estimate the costs accurately based on the anticipated data volumes and usage patterns.
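The shape of such an estimate can be sketched in a few lines. The per-GB rates below are placeholders chosen only to make the arithmetic visible; they are not any provider's actual prices. The model also assumes steady log volume and ignores query charges, compression, and free tiers.

```python
def estimate_monthly_log_cost(
    ingested_gb: float,
    retention_days: int,
    ingest_price_per_gb: float,
    storage_price_per_gb_month: float,
) -> float:
    """Rough monthly cost: ingestion charge plus storage for retained logs."""
    ingestion = ingested_gb * ingest_price_per_gb
    # At steady state, about retention_days / 30 months of logs are stored at once.
    stored_gb = ingested_gb * retention_days / 30
    storage = stored_gb * storage_price_per_gb_month
    return round(ingestion + storage, 2)


# 100 GB per month with 30-day retention, using PLACEHOLDER rates (not real prices).
cost = estimate_monthly_log_cost(
    ingested_gb=100,
    retention_days=30,
    ingest_price_per_gb=0.50,          # placeholder rate
    storage_price_per_gb_month=0.03,   # placeholder rate
)
print(cost)
```

Substituting current rates from the provider's pricing page, and adding a term for query volume, would turn this into a usable first approximation.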

Implementing Monitoring for Serverless Functions

Serverless function monitoring is crucial for understanding application behavior, identifying performance bottlenecks, and ensuring optimal resource utilization. Effective monitoring allows for proactive issue resolution, preventing potential service disruptions, and maintaining a positive user experience. This section will detail the implementation process using a specific platform and guide the configuration of alerts.

Setting up Monitoring with AWS Lambda

AWS Lambda, a widely adopted serverless compute service, provides native integration with various monitoring tools. This section outlines the process of setting up monitoring for Lambda functions using AWS CloudWatch, the primary monitoring service within the AWS ecosystem.

To effectively monitor Lambda functions, the following steps are generally undertaken:

- Function Creation and Configuration: Deploying the Lambda function involves defining its code, runtime environment, and associated configurations. Crucially, the function’s execution role must have permission to write logs to CloudWatch Logs, typically granted by attaching a policy such as the AWS managed `AWSLambdaBasicExecutionRole`. Lambda publishes its standard metrics to CloudWatch automatically; publishing custom metrics from function code additionally requires the `cloudwatch:PutMetricData` permission.

- Enabling CloudWatch Logging: Lambda functions automatically log execution details to CloudWatch Logs. These logs contain valuable information, including function invocation requests, responses, errors, and any custom log statements added within the function’s code. The logging level can be configured within the function’s code to control the verbosity of the logs.

- Metric Collection: CloudWatch automatically collects several key metrics for each Lambda function. These include:

  - Invocations: The number of times the function was invoked.

  - Errors: The number of function invocations that resulted in an error.

  - Duration: The amount of time the function took to execute, in milliseconds.

  - Throttles: The number of times the function was throttled due to exceeding concurrency limits.

  - ConcurrentExecutions: The number of function instances running simultaneously.

  These metrics are accessible through the CloudWatch console and can be used to create dashboards and set up alarms.

- Creating CloudWatch Dashboards: CloudWatch dashboards provide a centralized view of function performance. Users can create dashboards to visualize key metrics, such as invocations, errors, and duration, over time. Dashboards allow for the correlation of metrics, enabling the identification of performance trends and anomalies.

- Configuring CloudWatch Alarms: CloudWatch alarms are used to monitor metrics and trigger actions when specific thresholds are breached. Alarms can be configured to send notifications, invoke other AWS services, or take automated corrective actions.

Configuring Alerts Based on Specific Metrics

Alerts are essential for proactive monitoring and rapid incident response. This section explains how to configure alerts in CloudWatch based on critical metrics.

Setting up effective alerts involves defining thresholds and specifying actions to be taken when those thresholds are exceeded. The following outlines the alert configuration process for key metrics:

- Error Rate Alerts: Errors are a critical indicator of function health. An alert should be configured to trigger when the error rate exceeds a predefined threshold. For example, an alarm could be set to trigger when the `Errors` metric exceeds a certain number of errors within a specific time period (e.g., 10 errors in 5 minutes). The alert action might involve sending an email notification to the operations team or triggering an automated incident response process.

- Duration Alerts: High function duration can indicate performance bottlenecks. An alert can be configured to trigger when the average function duration exceeds a predefined threshold. For instance, an alarm could be set to trigger if the `Duration` metric’s average value exceeds 500 milliseconds over a 5-minute period. The alert action could involve sending a notification or triggering a performance investigation.

- Throttling Alerts: Throttling indicates that the function is exceeding its concurrency limits. An alert should be configured to trigger when the `Throttles` metric indicates throttling events. For example, an alarm could trigger when the number of throttles exceeds zero within a given time frame. The alert action might involve increasing the function’s concurrency limit or scaling the underlying infrastructure.

- Invocation Alerts: Monitoring invocation volume is vital for understanding traffic patterns. An alert can be set up to notify when invocation count spikes. This helps identify potential denial-of-service attacks or unusual activity. The alert action can include sending notifications to the security team.
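The alert examples above map fairly directly onto the parameters of the CloudWatch `PutMetricAlarm` API. The sketch below only builds the parameter dictionaries, so it runs without AWS credentials. The helper name, alarm naming scheme, function name, and SNS topic ARN are hypothetical, and settings such as `EvaluationPeriods` and `TreatMissingData` are one reasonable choice rather than a recommendation.

```python
def lambda_alarm_params(
    function_name: str,
    metric_name: str,
    statistic: str,
    threshold: float,
    sns_topic_arn: str,
    period_seconds: int = 300,
) -> dict:
    """Build keyword arguments for CloudWatch's put_metric_alarm call."""
    return {
        "AlarmName": f"{function_name}-{metric_name}-high",  # hypothetical naming
        "Namespace": "AWS/Lambda",
        "MetricName": metric_name,
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": statistic,
        "Period": period_seconds,          # 300 s = one 5-minute window
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],   # notify this SNS topic on ALARM
    }


SNS_TOPIC_ARN = "arn:aws:sns:us-east-1:123456789012:ops-alerts"  # hypothetical
FUNCTION_NAME = "createUserFunction"                             # hypothetical

alarms = [
    lambda_alarm_params(FUNCTION_NAME, "Errors", "Sum", 10, SNS_TOPIC_ARN),
    lambda_alarm_params(FUNCTION_NAME, "Duration", "Average", 500, SNS_TOPIC_ARN),
    lambda_alarm_params(FUNCTION_NAME, "Throttles", "Sum", 0, SNS_TOPIC_ARN),
]
for params in alarms:
    print(params["AlarmName"], params["Statistic"], ">", params["Threshold"])
    # With boto3 installed and credentials configured, each alarm could be created with:
    #   boto3.client("cloudwatch").put_metric_alarm(**params)
```

The three alarms correspond to the examples in the list: more than 10 errors in 5 minutes, an average duration above 500 milliseconds over 5 minutes, and any throttling at all.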

The following visual illustration demonstrates the setup process, using AWS CloudWatch as the example platform.

AWS CloudWatch Console

- Service: CloudWatch

- Navigation Pane: Metrics, Dashboards, Alarms, Logs

- Metrics Section: Select Lambda Function

- Metric Selection: Invocations, Errors, Duration, Throttles, ConcurrentExecutions

- Dashboard Creation:

  - Create a new dashboard

  - Add widgets (e.g., line charts) to visualize selected metrics

- Alarm Configuration:

  - Navigate to the "Alarms" section

  - Create a new alarm

  - Select a metric (e.g., Errors, Duration, Throttles)

  - Define the alarm threshold (e.g., Errors > 10 in 5 minutes)

  - Specify the alarm state (OK, ALARM, INSUFFICIENT_DATA)

  - Configure actions to be taken when the alarm state changes (e.g., send notification, trigger an automated process)

- Alarm Notification Setup: Configure SNS topic to send alerts.

Detailed Description of the Visual Illustration:

The illustration represents the AWS CloudWatch console interface. The top section identifies the service, CloudWatch, and its role in the AWS ecosystem. The navigation pane, situated on the left, shows the key components: Metrics, Dashboards, Alarms, and Logs. The central section showcases the process of metric selection. Within the "Metrics Section," a Lambda function is selected, and the available metrics are listed: Invocations, Errors, Duration, Throttles, and ConcurrentExecutions.

The next part demonstrates the creation of dashboards for visualization, where widgets, such as line charts, are added to display selected metrics. The "Alarm Configuration" section details the process of creating alarms. This includes selecting a metric (e.g., Errors), defining a threshold (e.g., Errors > 10 in 5 minutes), and configuring the alarm state (OK, ALARM, INSUFFICIENT_DATA). Finally, the setup for notifications, often using an SNS topic, is highlighted, indicating how alerts are delivered to the designated recipients.
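
The alarm described above (Errors > 10 in 5 minutes, notifying an SNS topic) can also be expressed in code. The following is a minimal sketch, not a definitive setup: it only builds the keyword arguments for CloudWatch's PutMetricAlarm API, and the function name, topic ARN, alarm name, and the choice to treat missing data as healthy are all illustrative assumptions.

```python
def build_error_alarm_params(function_name, sns_topic_arn):
    """Return keyword arguments for CloudWatch's PutMetricAlarm API."""
    return {
        "AlarmName": f"{function_name}-errors",   # assumed naming scheme
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 300,                  # one 5-minute window, in seconds
        "EvaluationPeriods": 1,
        "Threshold": 10,
        "ComparisonOperator": "GreaterThanThreshold",
        # Assumption: a period with no datapoints counts as healthy.
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


params = build_error_alarm_params(
    "my-function",                                  # hypothetical function name
    "arn:aws:sns:us-east-1:123456789012:alerts",    # hypothetical topic ARN
)
# With boto3 installed and credentials configured, the alarm could be created with:
#   boto3.client("cloudwatch").put_metric_alarm(**params)
```

Keeping the parameters in a plain function makes the alarm definition reviewable and testable without touching an AWS account.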

Implementing Logging for Serverless Functions

Serverless functions, by their ephemeral nature, present unique challenges for logging. Without proper implementation, valuable insights into function behavior, performance, and error occurrences can be lost. Effective logging is crucial for debugging, performance optimization, and ensuring the reliability of serverless applications. This section details the practical aspects of implementing logging within serverless functions.

Integrating Logging Libraries into Serverless Functions

Integrating logging libraries into serverless functions involves choosing a suitable library for the chosen programming language and then incorporating it into the function's code. The choice of library often depends on the specific cloud provider and desired level of control over log formatting and output.

- Python: The standard `logging` module in Python provides a flexible and extensible logging framework. Libraries like `structlog` offer structured logging capabilities, which are beneficial for easier parsing and analysis.

- Node.js: The `console` object is a simple way to log messages. However, for more advanced features, libraries like `winston` and `pino` are commonly used. These libraries allow for different log levels, formatting, and transport mechanisms.

- Java: Java utilizes logging frameworks such as `java.util.logging`, `Log4j`, and `SLF4J`. SLF4J serves as a facade, allowing for the use of different underlying logging implementations, providing flexibility in terms of logging provider selection.

The integration process generally involves the following steps:

- Import the library: Import the chosen logging library at the beginning of the function code.

- Configure the logger: Configure the logger, specifying the log level (e.g., DEBUG, INFO, WARNING, ERROR) and any desired formatting.

- Log messages: Use the logger's methods (e.g., `logger.debug()`, `logger.info()`, `logger.error()`) to log relevant information at different points in the function's execution. Include contextual information such as timestamps, function names, and request IDs.
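
The three steps above can be sketched with Python's standard `logging` module. This is one possible arrangement under stated assumptions: the `JsonFormatter` class, the `build_logger` helper, the logger name `orders`, and the `request_id` field are illustrative names, not part of any provider's API.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # request_id is attached per call through the `extra` argument
            "request_id": getattr(record, "request_id", None),
        }
        return json.dumps(entry)


def build_logger(name, stream, level=logging.INFO):
    """Configure a logger that writes JSON lines to `stream`."""
    logger = logging.getLogger(name)
    logger.setLevel(level)
    logger.propagate = False          # avoid duplicate output via the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    return logger


stream = io.StringIO()                # stands in for stdout in this sketch
logger = build_logger("orders", stream)
logger.debug("not emitted at INFO level")
logger.info("order received", extra={"request_id": "req-123"})
entries = [json.loads(line) for line in stream.getvalue().splitlines()]
```

Only the INFO record is emitted, and it carries the request ID as a separate field rather than embedded in the message text.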

Configuring Log Aggregation and Analysis

Effective log aggregation and analysis are crucial for extracting meaningful insights from serverless function logs. This involves collecting logs from multiple function instances, centralizing them, and then using tools to search, filter, and analyze the data. The specific configuration depends on the chosen cloud provider and monitoring tools.

- Cloud Provider's Native Logging Services: Most cloud providers offer built-in logging services, such as AWS CloudWatch Logs, Azure Monitor, and Google Cloud Logging. These services automatically collect logs from serverless functions.

- Log Aggregation: Logs are typically aggregated automatically by the cloud provider's logging service. Configure the service to retain logs for a specific period and to route them to a centralized location.

- Log Analysis: Use the cloud provider's tools or third-party services to analyze the logs. This may involve:

- Searching: Searching for specific log entries based on keywords, timestamps, or other criteria.

- Filtering: Filtering logs to display only entries that meet certain conditions.

- Alerting: Setting up alerts to be notified when specific errors or events occur.

- Visualization: Creating dashboards and visualizations to track key metrics and identify trends.
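
To make the searching and filtering steps concrete, here is a small local sketch that applies them to JSON log lines. It assumes each entry has `timestamp`, `level`, and `message` fields and that timestamps share one ISO 8601 UTC format; managed services offer their own query languages, so `search_logs` is a hypothetical helper rather than a provider API.

```python
import json


def search_logs(lines, level=None, keyword=None, start=None, end=None):
    """Return parsed JSON log entries that match every supplied criterion."""
    matches = []
    for line in lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue                      # skip lines that are not JSON
        if not isinstance(entry, dict):
            continue
        if level and entry.get("level") != level:
            continue
        if keyword and keyword.lower() not in entry.get("message", "").lower():
            continue
        timestamp = entry.get("timestamp", "")
        # Timestamps in one fixed ISO 8601 UTC format sort correctly as strings.
        if start and timestamp < start:
            continue
        if end and timestamp > end:
            continue
        matches.append(entry)
    return matches


sample = [
    '{"timestamp": "2024-02-29T10:00:00Z", "level": "INFO", "message": "Function starting"}',
    '{"timestamp": "2024-02-29T10:00:01Z", "level": "ERROR", "message": "Database timeout"}',
    'START RequestId: abc',               # non-JSON line, ignored
    '{"timestamp": "2024-02-29T11:00:00Z", "level": "ERROR", "message": "Upstream timeout"}',
]
errors = search_logs(sample, level="ERROR", keyword="timeout",
                     end="2024-02-29T10:30:00Z")
```

The same criteria (level, keyword, time range) map directly onto the filters offered by most log analysis tools.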

Code Examples: Structured Logging in Different Serverless Function Triggers

Structured logging enhances the ability to analyze logs by providing data in a consistent, machine-readable format. These examples demonstrate structured logging in various serverless function triggers, utilizing Python, Node.js, and Java, respectively.

Python (AWS Lambda with API Gateway Trigger)

This example uses the `logging` module and `json` to structure the logs. The function receives an HTTP request through API Gateway and logs details about the request.

```python
import json
import logging
import os

# Configure logging
logger = logging.getLogger()
logger.setLevel(logging.INFO)


def lambda_handler(event, context):
    try:
        # Log the event
        logger.info(json.dumps({
            'message': 'Received event',
            'event': event,
            'request_id': context.aws_request_id,
            'function_name': os.environ['AWS_LAMBDA_FUNCTION_NAME']
        }))

        # Process the request
        http_method = event['httpMethod']
        path = event['path']
        body = event.get('body', None)

        # Log request details
        logger.info(json.dumps({
            'message': 'Request details',
            'http_method': http_method,
            'path': path,
            'body': body
        }))

        # Simulate a successful response
        response = {
            'statusCode': 200,
            'body': json.dumps({'message': 'Hello from Lambda!'}),
            'headers': {'Content-Type': 'application/json'}
        }
        return response

    except Exception as e:
        # Log the error
        logger.error(json.dumps({
            'message': 'Error processing request',
            'error': str(e),
            'request_id': context.aws_request_id,
            'function_name': os.environ['AWS_LAMBDA_FUNCTION_NAME']
        }))

        # Return an error response
        return {
            'statusCode': 500,
            'body': json.dumps({'message': 'Internal Server Error'})
        }
```

Node.js (AWS Lambda with S3 Trigger)

This example uses `console.log` and structured JSON format. The function is triggered by an S3 object creation event and logs the event details.

```javascript
exports.handler = async (event, context) => {
  try {
    // Log the event
    console.log(JSON.stringify({
      message: 'Received event',
      event: event,
      requestId: context.awsRequestId,
      functionName: process.env.AWS_LAMBDA_FUNCTION_NAME
    }));

    // Process the S3 event (object keys arrive URL-encoded, with '+' for spaces)
    const bucket = event.Records[0].s3.bucket.name;
    const key = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, ' '));

    // Log S3 object details
    console.log(JSON.stringify({
      message: 'S3 object details',
      bucket: bucket,
      key: key
    }));

    // Simulate processing the object
    console.log(JSON.stringify({
      message: 'Object processed successfully',
      bucket: bucket,
      key: key
    }));

    return {
      statusCode: 200,
      body: JSON.stringify({ message: 'Object processed successfully' })
    };
  } catch (error) {
    // Log the error
    console.error(JSON.stringify({
      message: 'Error processing S3 event',
      error: error.message,
      requestId: context.awsRequestId,
      functionName: process.env.AWS_LAMBDA_FUNCTION_NAME
    }));

    return {
      statusCode: 500,
      body: JSON.stringify({ message: 'Internal Server Error' })
    };
  }
};
```

Java (AWS Lambda with DynamoDB Stream Trigger)

This Java example uses the `java.util.logging` package and demonstrates logging events from a DynamoDB stream. The function logs the event details and item changes.

```java
import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.events.DynamodbEvent;
import com.fasterxml.jackson.databind.ObjectMapper;

import java.util.logging.Level;
import java.util.logging.Logger;

public class DynamoDBStreamHandler {

    private static final Logger logger = Logger.getLogger(DynamoDBStreamHandler.class.getName());
    private static final ObjectMapper objectMapper = new ObjectMapper();

    public void handleRequest(DynamodbEvent event, Context context) {
        try {
            // Log the event
            logger.log(Level.INFO, "Received event: {0}", objectMapper.writeValueAsString(event));

            // Process each record in the event
            event.getRecords().forEach(record -> {
                String eventName = record.getEventName();
                // The stream record has no table-name getter; the event source ARN
                // of the stream includes the table name.
                String streamArn = record.getEventSourceARN();

                // Log the event name and the stream it came from
                logger.log(Level.INFO, "Processing event: {0} from stream: {1}",
                        new Object[]{eventName, streamArn});

                // Log the image changes
                if (record.getDynamodb().getNewImage() != null) {
                    try {
                        String newItem = objectMapper.writeValueAsString(record.getDynamodb().getNewImage());
                        logger.log(Level.INFO, "New image: {0}", newItem);
                    } catch (Exception e) {
                        logger.log(Level.WARNING, "Failed to serialize new image", e);
                    }
                }
                if (record.getDynamodb().getOldImage() != null) {
                    try {
                        String oldItem = objectMapper.writeValueAsString(record.getDynamodb().getOldImage());
                        logger.log(Level.INFO, "Old image: {0}", oldItem);
                    } catch (Exception e) {
                        logger.log(Level.WARNING, "Failed to serialize old image", e);
                    }
                }
            });
        } catch (Exception e) {
            // Log the error
            logger.log(Level.SEVERE, "Error processing DynamoDB event", e);
        }
    }
}
```

These examples illustrate how to integrate structured logging into serverless functions using different programming languages and triggers.

The structured format allows for easier parsing, filtering, and analysis of logs, enabling better insights into function behavior and performance. The inclusion of context information such as request IDs and function names is crucial for debugging and troubleshooting. Remember to adjust log levels and content according to specific application needs.

Alerting and Notification Strategies

Proactive issue detection and timely response are critical for maintaining the health and performance of serverless applications. Setting up effective alerting and notification systems allows for immediate awareness of anomalies, performance degradations, and potential failures, minimizing downtime and ensuring a positive user experience. This section details the importance of alerting, discusses various notification channels, and provides a practical workflow design for efficient alert management.

Importance of Proactive Alerting

Alerting systems play a pivotal role in the operational stability of serverless architectures. They act as the first line of defense, identifying issues before they impact users.

- Early Problem Detection: Alerts trigger the instant a predefined threshold is crossed or an anomalous pattern is detected, such as a sudden spike in error rates or latency.

- Reduced Downtime: Rapid notification enables teams to respond swiftly, mitigating the impact of incidents and minimizing downtime. The sooner a response begins, the shorter an outage tends to be, which translates into cost savings and improved customer satisfaction.

- Improved Performance: By monitoring key metrics and setting appropriate alerts, developers can identify performance bottlenecks and optimize resource allocation proactively.

- Automated Remediation: Some alerting systems integrate with automated remediation tools. For instance, if an alert indicates high CPU utilization, the system can automatically scale resources.

- Data-Driven Decision Making: Alerting provides valuable insights into application behavior, enabling data-driven decisions for continuous improvement.

Notification Channels for Alerts

Choosing the right notification channels is essential for ensuring that alerts reach the right people at the right time. The optimal channel depends on the severity of the issue and the team's communication preferences.

- Email: Email is a widely used channel for general notifications and less critical alerts. It's suitable for informational alerts and summaries.

- Slack: Slack is an excellent channel for real-time communication and collaboration. Alerts can be integrated into dedicated Slack channels for specific services or teams. This enables quick discussions and coordinated responses.

- PagerDuty: PagerDuty is a robust incident management platform designed for critical alerts and on-call rotations. It ensures that the right person is notified and that incidents are acknowledged and tracked through resolution.

- SMS/Text Messages: For high-priority alerts, SMS notifications can provide immediate awareness, especially when integrated with on-call schedules.

- Other Collaboration Tools: Integration with tools like Microsoft Teams, Discord, or custom-built notification systems can be utilized, depending on the organization's communication infrastructure.
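
Matching channels to severity can be captured in a small routing table. The sketch below is illustrative only: the severity names, the `ROUTES` mapping, and the `senders` callables are assumptions, and a real integration would call each service's own API inside the sender functions.

```python
# Assumed mapping from severity to notification channels.
ROUTES = {
    "critical": ["pagerduty", "sms", "slack"],
    "warning": ["slack"],
    "info": ["email"],
}


def channels_for(severity):
    """Return the channels for a severity; unknown severities fall back to email."""
    return ROUTES.get(severity, ["email"])


def dispatch(alert, senders):
    """Send the alert through every configured channel mapped to its severity."""
    delivered = []
    for channel in channels_for(alert["severity"]):
        send = senders.get(channel)
        if send is None:
            continue                  # channel not configured in this deployment
        send(alert)
        delivered.append(channel)
    return delivered


# Stand-in senders that record what would have been sent.
outbox = []
senders = {
    name: (lambda alert, name=name: outbox.append((name, alert["title"])))
    for name in ["email", "slack", "pagerduty"]
}
used = dispatch({"severity": "critical", "title": "Error rate above 1%"}, senders)
```

Here the critical alert goes to PagerDuty and Slack, while SMS is skipped because no sender is configured for it.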

Alert Notification Workflow Design

A well-defined alert notification workflow ensures that alerts are processed efficiently and that the appropriate actions are taken. The workflow involves several steps:

- Metric Monitoring: Continuous monitoring of key metrics, such as latency, error rates, and resource utilization, is the foundation of the alerting system.

- Threshold Definition: Defining clear threshold values for each metric. These thresholds represent acceptable performance boundaries. Setting thresholds requires careful consideration of the application's performance characteristics and service level agreements (SLAs).

- Alert Triggering: When a metric crosses a predefined threshold, an alert is triggered.

- Notification Delivery: The alert is delivered through the selected notification channels. This could include email, Slack, or PagerDuty, depending on the severity of the issue.

- Incident Assignment: If the alert indicates a critical issue, the notification system assigns the incident to the appropriate team or individual based on on-call schedules.

- Acknowledgement and Investigation: The assigned team or individual acknowledges the alert and begins investigating the root cause.

- Remediation: The team takes corrective actions to resolve the issue, which may include scaling resources, deploying a code fix, or rolling back a deployment.

- Resolution and Post-Mortem: Once the issue is resolved, the alert is closed, and a post-mortem analysis may be conducted to identify the root cause, prevent recurrence, and improve the alerting system.

For example, consider a serverless function responsible for processing user requests. The following steps outline a practical implementation:

- Monitoring: The system monitors the function's invocation duration and error rate using cloud monitoring tools.

- Thresholds: An alert is triggered if the function's average invocation duration exceeds 500 milliseconds for more than 5 minutes, or if the error rate exceeds 1% within a 1-minute window.

- Notification: When either threshold is breached, an alert is sent to a dedicated Slack channel and PagerDuty, escalating the alert based on the severity.

- Response: The on-call engineer receives the PagerDuty alert and begins investigating the logs and metrics. They might identify a performance bottleneck or a bug in the code.

- Remediation: The engineer applies a fix or scales the resources of the function to resolve the issue.

This workflow ensures that issues are detected, addressed, and resolved efficiently.
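
The two thresholds in this example can be checked against per-minute datapoints as follows. This is a simplified sketch of the evaluation logic, assuming one datapoint per minute with the hypothetical fields `avg_duration_ms`, `invocations`, and `errors`; managed alarm services implement their own evaluation rules.

```python
def evaluate(datapoints, duration_ms=500, sustained_minutes=5, max_error_rate=0.01):
    """Return the names of breached rules for per-minute datapoints, oldest first."""
    breaches = []

    # Rule 1: average duration above the limit for MORE than
    # `sustained_minutes` consecutive one-minute datapoints.
    run = longest = 0
    for point in datapoints:
        run = run + 1 if point["avg_duration_ms"] > duration_ms else 0
        longest = max(longest, run)
    if longest > sustained_minutes:
        breaches.append("duration")

    # Rule 2: error rate above the limit within any single one-minute window.
    for point in datapoints:
        if point["invocations"] and point["errors"] / point["invocations"] > max_error_rate:
            breaches.append("error_rate")
            break

    return breaches


slow_minute = {"avg_duration_ms": 620, "invocations": 200, "errors": 0}
failing_minute = {"avg_duration_ms": 120, "invocations": 200, "errors": 5}

sustained_slow = evaluate([slow_minute] * 6)       # six slow minutes in a row
brief_slow = evaluate([slow_minute] * 5)           # exactly five: not yet a breach
error_burst = evaluate([failing_minute])           # 5 / 200 = 2.5% errors
```

Requiring a sustained breach for duration while reacting to a single bad minute for errors reflects the different tolerances stated in the thresholds above.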

Troubleshooting Serverless Applications Using Logs and Metrics

Troubleshooting serverless applications requires a systematic approach, leveraging logs and metrics to identify and resolve issues efficiently. The ephemeral nature of serverless functions and the distributed architecture necessitate a deep understanding of how to analyze these data points to pinpoint the root cause of problems. This section details common issues, correlation techniques, and provides practical examples to facilitate effective troubleshooting.

Common Issues in Serverless Environments

Serverless environments, while offering benefits like scalability and reduced operational overhead, can present unique challenges. These challenges often manifest as performance bottlenecks, unexpected errors, or security vulnerabilities.

- Cold Starts: The time it takes for a serverless function to initialize when it hasn't been recently invoked. This can lead to increased latency, particularly during periods of low traffic.

- Timeout Errors: Functions exceeding their configured execution time limit, resulting in failed requests. This can be caused by inefficient code, external service delays, or resource constraints.

- Concurrency Limits: Exceeding the maximum number of concurrent function invocations, leading to request throttling and service degradation.

- Memory Issues: Functions running out of allocated memory, resulting in errors or unexpected behavior. This often stems from inefficient memory management or excessive resource consumption.

- Error Handling Failures: Improperly handled exceptions and errors within functions, leading to unexpected behavior and potential data loss.

- Security Vulnerabilities: Issues such as unauthorized access, injection attacks, and data breaches due to misconfigurations or flawed code.

- Dependency Conflicts: Conflicts between different versions of dependencies used by the functions, which can lead to runtime errors.

- External Service Failures: Issues with external services that the serverless functions depend on (e.g., databases, APIs), leading to function failures.

Correlating Logs and Metrics for Root Cause Analysis

Effectively troubleshooting serverless applications involves correlating logs and metrics to establish the relationships between different events and identify the root cause of problems. This correlation process can involve analyzing timestamps, request IDs, and other identifiers to trace the flow of requests and identify the source of errors.

The process often involves these steps:

- Identify the Problem: Start by observing the symptoms. This might involve user reports of slow performance, error messages in an application's user interface, or alerts triggered by monitoring tools.

- Analyze Metrics: Examine relevant metrics such as invocation count, duration, error rates, and concurrent executions. This provides an overview of the system's health and can highlight anomalies. For instance, a sudden spike in error rates might indicate a problem.

- Examine Logs: Inspect the logs associated with the affected functions and services. Logs contain detailed information about the execution of functions, including timestamps, input/output, and error messages. Look for error codes, stack traces, and other clues that might reveal the root cause.

- Correlate Logs and Metrics: Use common identifiers, such as request IDs, to link log entries to specific function invocations and time periods. This allows for tracing a request's journey through the system and identifying where failures occur.

- Investigate Dependencies: Check the health and performance of external services and resources that the functions depend on. Problems in these dependencies can often cause function failures.

- Iterate and Refine: Repeat the analysis process as needed, refining the hypotheses and exploring different aspects of the system until the root cause is found.
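
The correlation step can be illustrated by grouping structured log entries on their request ID. The sketch below assumes entries are already parsed into dictionaries with `request_id`, `timestamp` (ISO 8601 with an explicit UTC offset), `level`, and `message` fields; `trace_requests` is a hypothetical helper.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def trace_requests(entries):
    """Group log entries by request ID and summarize each invocation."""
    grouped = defaultdict(list)
    for entry in entries:
        grouped[entry["request_id"]].append(entry)

    traces = {}
    for request_id, items in grouped.items():
        items.sort(key=lambda e: e["timestamp"])
        first = datetime.fromisoformat(items[0]["timestamp"])
        last = datetime.fromisoformat(items[-1]["timestamp"])
        traces[request_id] = {
            # Time between the first and last entry of the invocation.
            "duration_ms": (last - first) // timedelta(milliseconds=1),
            "failed": any(e["level"] == "ERROR" for e in items),
            "messages": [e["message"] for e in items],
        }
    return traces


entries = [
    {"request_id": "abcdef123", "timestamp": "2024-02-29T10:00:00.000+00:00",
     "level": "INFO", "message": "Function starting"},
    {"request_id": "klmno789", "timestamp": "2024-02-29T12:00:00.000+00:00",
     "level": "INFO", "message": "Function starting"},
    {"request_id": "abcdef123", "timestamp": "2024-02-29T10:00:00.600+00:00",
     "level": "INFO", "message": "Function completed"},
    {"request_id": "klmno789", "timestamp": "2024-02-29T12:00:04.000+00:00",
     "level": "ERROR", "message": "External API call timed out"},
]
traces = trace_requests(entries)
```

Each trace shows how long the invocation's log activity spanned and whether it logged an error, which can then be compared against the duration and error metrics for the same period.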

Troubleshooting Scenarios: Examples with Logs and Metrics

The following examples demonstrate how to troubleshoot common issues using sample logs and metrics data.

Scenario 1: Increased Function Latency

Problem: Users report slow response times for a serverless application.

Metrics Analysis:

- Function Duration: The average function duration has increased from 100ms to 500ms.

- Cold Start Count: The number of cold starts has increased significantly during peak hours.

Log Analysis:

Sample log entries from a function:

[2024-02-29T10:00:00.000Z] INFO - Function starting (Request ID: abcdef123)
[2024-02-29T10:00:00.500Z] INFO - Database query complete
[2024-02-29T10:00:00.600Z] INFO - Function completed (Request ID: abcdef123)

Another sample log entry from a cold start:

[2024-02-29T10:15:00.000Z] INFO - Function starting (Request ID: defghi456) - Cold Start
[2024-02-29T10:15:01.000Z] INFO - Database query complete
[2024-02-29T10:15:01.100Z] INFO - Function completed (Request ID: defghi456)

Correlation:

- The metrics indicate increased latency, and the logs confirm the presence of cold starts, adding to function execution time.

- The logs also show that database queries take a significant amount of time, which is worsened by cold starts.

Root Cause: Cold starts are contributing to increased latency, alongside potential database query performance issues.

Resolution: Implement function warm-up strategies, optimize database queries, and consider increasing function memory to improve performance.

Scenario 2: Function Timeout Errors

Problem: Functions are failing with timeout errors.

Metrics Analysis:

- Error Rate: The function's error rate has spiked, with timeout errors being the primary cause.

- Function Duration: The average function duration is consistently exceeding the configured timeout.

Log Analysis:

Sample log entry from a timeout error:

[2024-02-29T12:00:00.000Z] ERROR - Function timed out (Request ID: klmno789) after 3 seconds.

Sample log entries with detailed information from the function's code:

[2024-02-29T12:00:00.000Z] INFO - Function starting (Request ID: klmno789)
[2024-02-29T12:00:01.000Z] INFO - Starting external API call
[2024-02-29T12:00:04.000Z] ERROR - External API call timed out
[2024-02-29T12:00:04.000Z] ERROR - Function timed out (Request ID: klmno789) after 3 seconds.

Correlation:

- Metrics show an increased error rate caused by timeouts.

- Logs reveal that the function is timing out, specifically during an external API call.

Root Cause: The function's timeout is triggered because an external API call is taking longer than expected.

Resolution: Implement error handling and retry mechanisms for external API calls. Increase the function timeout if appropriate, and optimize the external API's performance if possible.
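
A retry mechanism for the external call might look like the sketch below. It is a minimal illustration, assuming the call signals a timeout by raising `TimeoutError`; `call_with_retries` and `flaky_api` are hypothetical names, and the total time spent retrying still has to fit inside the function's configured timeout.

```python
import time


def call_with_retries(operation, attempts=3, base_delay=0.2, sleep=time.sleep):
    """Call `operation`, retrying on TimeoutError with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except TimeoutError:
            if attempt == attempts:
                raise                      # retries exhausted: surface the error
            # Wait 0.2 s, 0.4 s, 0.8 s, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))


# A stand-in for the external API that fails twice, then succeeds.
calls = {"count": 0}


def flaky_api():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("upstream did not respond")
    return {"status": "ok"}


delays = []                                # record delays instead of sleeping
result = call_with_retries(flaky_api, sleep=delays.append)
```

Injecting the `sleep` function keeps the sketch testable without real waiting; in a deployed function the default `time.sleep` would apply.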

Scenario 3: Memory Exhaustion

Problem: Functions are failing due to memory exhaustion.

Metrics Analysis:

- Error Rate: Increased error rate with 'out of memory' exceptions.

- Memory Utilization: Average memory utilization is close to the function's memory limit.

Log Analysis:

Sample log entries with detailed information from the function's code:

[2024-02-29T14:00:00.000Z] INFO - Function starting (Request ID: pqrst012)
[2024-02-29T14:00:00.100Z] INFO - Loading large dataset
[2024-02-29T14:00:01.000Z] ERROR - Out of memory error
[2024-02-29T14:00:01.000Z] ERROR - Function failed (Request ID: pqrst012)

Correlation:

- Metrics indicate an increase in errors, which is related to memory exhaustion.

- Logs reveal that the function fails when trying to load a large dataset.

Root Cause: The function is trying to load a dataset that is too large to fit within its allocated memory.

Resolution: Optimize the function to load data in smaller chunks, increase the function's memory allocation, or use alternative data processing methods like streaming.
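
Loading data in smaller chunks can be sketched with a generator, so that only one chunk is held in memory at a time. The record shape (`amount` field) and the helper names `chunked` and `total_amount` are assumptions made for illustration.

```python
from itertools import islice


def chunked(iterable, size):
    """Yield successive lists of at most `size` items from `iterable`."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def total_amount(records, chunk_size=1000):
    """Aggregate records chunk by chunk instead of materializing them all."""
    total = 0
    for chunk in chunked(records, chunk_size):
        total += sum(record["amount"] for record in chunk)
    return total


# A generator stands in for a large dataset read lazily from storage.
records = ({"amount": n} for n in range(10_000))
result = total_amount(records, chunk_size=500)
```

Peak memory is then bounded by the chunk size rather than by the size of the dataset.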

Security Considerations in Serverless Monitoring and Logging

Serverless environments, while offering significant operational advantages, introduce unique security challenges concerning monitoring and logging. The distributed nature and ephemeral characteristics of serverless functions necessitate a robust security posture to protect against vulnerabilities, unauthorized access, and data breaches. Effective monitoring and logging are not merely operational necessities; they are critical components of a comprehensive security strategy. This section delves into the security aspects of serverless monitoring and logging, exploring potential risks and mitigation strategies.

Access Control for Monitoring and Logging Data

Managing access control is paramount to secure monitoring and logging data. Inadequate access control can lead to unauthorized access to sensitive information, potentially compromising confidentiality and integrity.

- Role-Based Access Control (RBAC): Implement RBAC to restrict access to monitoring and logging data based on roles and responsibilities. For instance, developers might have access to application logs, while security analysts have access to security-related logs and audit trails. This principle of least privilege minimizes the attack surface.

- IAM Policies: Utilize Identity and Access Management (IAM) policies to define permissions for accessing monitoring and logging resources, such as CloudWatch logs, S3 buckets storing logs, and monitoring dashboards. Granular policies should be defined to grant the minimum necessary permissions.

- Authentication and Authorization: Enforce strong authentication mechanisms, such as multi-factor authentication (MFA), for accessing monitoring and logging tools and dashboards. Implement authorization checks to ensure that only authorized users can perform specific actions, such as viewing, modifying, or deleting logs.

- Audit Logging: Enable audit logging for all access and modifications to monitoring and logging resources. This provides a trail of who accessed what, when, and how, enabling investigation of security incidents and compliance auditing.
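
As an illustration of least-privilege access to logs, the sketch below builds an IAM policy document that grants read-only access to a single Lambda function's log group. The region, account ID, and function name are placeholders, and the exact set of actions a team needs is an assumption to adapt.

```python
import json


def read_only_log_policy(region, account_id, function_name):
    """Build an IAM policy document for read-only access to one log group."""
    log_group_arn = (
        f"arn:aws:logs:{region}:{account_id}:"
        f"log-group:/aws/lambda/{function_name}:*"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadFunctionLogs",
                "Effect": "Allow",
                # Read-only CloudWatch Logs actions; no write or delete access.
                "Action": [
                    "logs:DescribeLogStreams",
                    "logs:GetLogEvents",
                    "logs:FilterLogEvents",
                ],
                "Resource": log_group_arn,
            }
        ],
    }


# Placeholder values for illustration only.
policy = read_only_log_policy("us-east-1", "123456789012", "my-function")
policy_json = json.dumps(policy, indent=2)
```

Scoping the `Resource` to one log group, rather than `*`, keeps a developer's access limited to the function they own.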

Data Encryption in Transit and at Rest

Data encryption is essential to protect sensitive information within logs and monitoring data, both during transit and when stored at rest. Encryption safeguards against unauthorized access, data breaches, and compliance violations.

- Encryption in Transit: Encrypt data during transit using Transport Layer Security (TLS). Its predecessor, Secure Sockets Layer (SSL), is deprecated and should not be used. This ensures that data transmitted between serverless functions, monitoring tools, and logging services is protected from eavesdropping and man-in-the-middle attacks.

- Encryption at Rest: Encrypt data stored at rest using encryption keys managed by the cloud provider or a dedicated key management service (KMS). This protects against unauthorized access to stored logs and monitoring data, even if the underlying storage infrastructure is compromised. For example, AWS CloudWatch Logs supports encryption at rest using KMS keys.

- Key Management: Implement a robust key management strategy, including key rotation, access control, and regular audits. Securely store and manage encryption keys to prevent unauthorized access and ensure data confidentiality.

- Data Masking and Redaction: Implement data masking and redaction techniques to protect sensitive information within logs. This involves replacing or removing sensitive data elements, such as personally identifiable information (PII), API keys, and passwords, before storing them in logs.

Identifying and Mitigating Security Risks Through Logging and Monitoring

Logging and monitoring play a crucial role in identifying and mitigating security risks in serverless environments. Proactive monitoring and analysis of logs and metrics enable the detection of malicious activities and vulnerabilities.

- Detecting Unauthorized Access: Monitor logs for suspicious login attempts, unauthorized API calls, and unusual user activity. Implement alerting mechanisms to notify security teams of potential security breaches.

- Identifying Vulnerabilities: Monitor logs for error messages, exceptions, and security events that indicate potential vulnerabilities, such as SQL injection attempts or cross-site scripting (XSS) attacks. Utilize vulnerability scanning tools and regularly update dependencies to mitigate vulnerabilities.

- Monitoring for Data Breaches: Monitor logs for data exfiltration attempts, such as unusual data transfer patterns or unauthorized access to sensitive data. Implement data loss prevention (DLP) strategies to detect and prevent data breaches.

- Anomaly Detection: Implement anomaly detection techniques to identify unusual patterns or deviations from normal behavior in logs and metrics. This can help detect malicious activities that might not be immediately apparent.

- Security Information and Event Management (SIEM): Integrate serverless logs and metrics with a SIEM system to centralize security monitoring, analysis, and incident response. SIEM systems provide advanced analytics and correlation capabilities to detect and respond to security threats.

Protecting Sensitive Information within Logs

Protecting sensitive information within logs is a critical security consideration. Failing to protect sensitive data can lead to data breaches, compliance violations, and reputational damage.

- Data Masking and Redaction: Implement data masking and redaction techniques to protect sensitive information within logs. This involves replacing or removing sensitive data elements, such as PII, API keys, and passwords, before storing them in logs. Consider using regular expressions or pattern matching to identify and redact sensitive data.

- Avoid Logging Sensitive Data: Avoid logging sensitive data in the first place. Instead of logging sensitive information directly, log a reference or identifier that can be used to retrieve the sensitive data from a secure data store.

- Tokenization: Use tokenization to replace sensitive data with unique, non-sensitive tokens. This allows you to track and monitor sensitive data without exposing the actual values.

- Encryption: Encrypt sensitive data before logging it. This ensures that the data is protected from unauthorized access, even if the logs are compromised.

- Access Control: Implement strict access control policies to restrict access to logs containing sensitive information. Grant access only to authorized personnel who require it for their job responsibilities.

- Log Retention Policies: Implement log retention policies to limit the storage duration of logs containing sensitive information. Regularly review and delete logs that are no longer needed.

- Regular Audits: Conduct regular audits of logs to ensure that sensitive information is being protected and that security controls are effective. Review logs for potential security vulnerabilities and compliance violations.
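
Redaction before logging can be sketched as a recursive pass over the payload. The key names in `SENSITIVE_KEYS` and the email pattern are illustrative assumptions; a real deployment would tailor both to its own data and should not treat a single regular expression as complete PII detection.

```python
import re

# Assumed field names whose values must never reach the logs.
SENSITIVE_KEYS = {"password", "api_key", "authorization", "token"}
# Simplified email pattern, for illustration only.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(value):
    """Return a copy of `value` with sensitive fields and emails masked."""
    if isinstance(value, dict):
        return {
            key: "[REDACTED]" if key.lower() in SENSITIVE_KEYS else redact(item)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [redact(item) for item in value]
    if isinstance(value, str):
        return EMAIL.sub("[EMAIL]", value)
    return value


event = {
    "user": "Contact jane@example.com",
    "password": "hunter2",
    "items": [{"api_key": "abc", "qty": 2}],
}
safe = redact(event)
```

Calling `redact` on an event before passing it to the logger leaves the original object untouched while keeping credentials and email addresses out of stored logs.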

Cost Optimization in Serverless Monitoring and Logging

Optimizing costs in serverless monitoring and logging is crucial for maintaining a cost-effective and scalable architecture. Serverless environments, by their nature, are designed for pay-per-use models. Therefore, efficient monitoring and logging practices directly impact the overall operational expenses. Understanding the cost drivers and implementing strategies to control them is essential for maximizing the benefits of serverless computing.

Reducing the Volume of Logs and Metrics Collected

The volume of data collected significantly influences the cost of monitoring and logging. Reducing this volume without sacrificing critical insights requires a strategic approach. This involves careful consideration of data retention policies, filtering techniques, and efficient log aggregation.

- Implement Log Filtering: Implementing filtering at the source (e.g., within the serverless function code) or at the log ingestion point (e.g., using a log router) is a key cost-saving measure. This process involves selectively discarding or sampling logs that are deemed less critical or verbose. For instance, debug-level logs can be filtered out in production environments. Filtering can dramatically reduce the volume of data stored and processed, leading to lower costs.

- Adjust Log Levels: Carefully configuring the logging levels within the serverless functions is crucial. Using appropriate logging levels (e.g., INFO, WARN, ERROR) allows for a balance between capturing essential information and minimizing excessive logging. For example, logging excessive amounts of data at the DEBUG level in a production environment can significantly increase costs.

- Use Sampling for High-Volume Events: For events that generate a high volume of logs, such as request/response data for every single API call, sampling can be used. This involves collecting data from a subset of events rather than every single one. Sampling is particularly useful for understanding trends and identifying anomalies without incurring the full cost of ingesting all the data. For example, sampling 10% of API requests can still provide valuable insights into performance and errors.

- Optimize Metric Collection: Focus on collecting only the metrics that are needed to monitor the health and performance of the serverless application, and avoid redundant or unused ones. For instance, instead of publishing a custom metric at a very high frequency, it might be sufficient to publish it once every few minutes, unless there's a specific performance concern.

- Implement Data Retention Policies: Define clear data retention policies for logs and metrics. Data that is no longer required should be archived or deleted to reduce storage costs. For example, keeping detailed logs readily searchable for only 30 days and then archiving them to a cheaper storage tier for a longer duration can significantly reduce storage costs.
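As an illustration of level-based filtering and sampling at the source, here is a minimal sketch using Python's standard `logging` module. The environment variable names `LOG_LEVEL` and `LOG_SAMPLE_RATE`, the `SamplingFilter` class, and the `build_logger` helper are assumptions made for this example, not part of any provider's API.

```python
import logging
import os
import random

# Hypothetical mapping from an environment variable value to a logging level.
_LEVELS = {
    "DEBUG": logging.DEBUG,
    "INFO": logging.INFO,
    "WARNING": logging.WARNING,
    "ERROR": logging.ERROR,
}


class SamplingFilter(logging.Filter):
    """Keep every record at or above `always_keep_level`; sample the rest."""

    def __init__(self, sample_rate, always_keep_level=logging.WARNING, rng=None):
        super().__init__()
        self.sample_rate = sample_rate
        self.always_keep_level = always_keep_level
        self.rng = rng or random.Random()

    def filter(self, record):
        # Warnings and errors are never dropped.
        if record.levelno >= self.always_keep_level:
            return True
        # Lower-severity records pass with probability `sample_rate`.
        return self.rng.random() < self.sample_rate


def build_logger(name="orders"):
    """Configure a logger from environment variables (assumed names)."""
    logger = logging.getLogger(name)
    level_name = os.environ.get("LOG_LEVEL", "INFO").upper()
    logger.setLevel(_LEVELS.get(level_name, logging.INFO))
    sample_rate = float(os.environ.get("LOG_SAMPLE_RATE", "0.1"))
    # Clear existing filters so repeated calls do not stack samplers.
    logger.filters.clear()
    logger.addFilter(SamplingFilter(sample_rate))
    return logger
```

With `LOG_LEVEL=INFO` and `LOG_SAMPLE_RATE=0.1`, debug records are discarded outright, roughly one in ten informational records is emitted, and every warning or error is kept. Reading both values from the environment lets the verbosity be raised temporarily during an investigation without redeploying code.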

Setting Up Cost Alerts

Proactive cost management involves setting up alerts to detect and respond to unexpected cost increases. This allows for prompt investigation and corrective actions before costs escalate significantly.

- Define Cost Thresholds: Establish clear cost thresholds based on historical usage patterns and budget allocations. These thresholds should be specific to the monitoring and logging services being used.

- Configure Alerting Rules: Set up alerting rules that trigger notifications when costs exceed the defined thresholds. The alerting system should be integrated with the monitoring and logging platform and the chosen cloud provider's cost management tools.

- Implement Granular Alerts: Create alerts for specific components or services. This helps to pinpoint the source of cost increases more efficiently. For example, create an alert for the cost of log ingestion for a specific serverless function.

- Utilize Cloud Provider's Cost Management Tools: Leverage the cost management tools provided by the cloud provider (e.g., AWS Cost Explorer and AWS Budgets, Azure Cost Management, Google Cloud Billing) to monitor costs and set up alerts. These tools often offer pre-built dashboards and alerting capabilities.

- Monitor for Spikes in Log Volume: Set up alerts that trigger when the volume of logs ingested or the number of metrics collected exceeds expected levels. A sudden increase in log volume can indicate a problem with the application or an unexpected surge in traffic, either of which can lead to increased costs. For example, an alert could be triggered if log ingestion exceeds a set number of gigabytes per day.

- Regularly Review and Adjust Alerts: Periodically review the cost alerts and thresholds to ensure they remain relevant and effective. As the application evolves and usage patterns change, the alerts may need to be adjusted to maintain optimal cost management.
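The threshold and spike checks described above can be sketched as a small function. This is an illustrative example only: `check_log_volume`, its parameters, and the choice of a mean-plus-deviation baseline are assumptions, and the daily ingestion figures would in practice come from the provider's billing or metrics API.

```python
from statistics import mean, pstdev


def check_log_volume(history_gb, today_gb, hard_limit_gb, sigma=3.0):
    """Return a list of alert reasons; empty when today's volume looks normal.

    history_gb    -- recent daily ingestion volumes in GB (hypothetical input)
    today_gb      -- ingestion volume for the current day in GB
    hard_limit_gb -- absolute budget-derived threshold in GB per day
    sigma         -- how many standard deviations above the mean count as a spike
    """
    reasons = []

    # Absolute threshold, e.g. derived from the monthly budget.
    if today_gb > hard_limit_gb:
        reasons.append(
            f"ingestion {today_gb:.1f} GB exceeds hard limit {hard_limit_gb:.1f} GB"
        )

    # Relative threshold: only meaningful with at least a week of history.
    if len(history_gb) >= 7:
        baseline = mean(history_gb)
        spread = pstdev(history_gb)
        # Require at least a 10% margin so a flat history does not alert on noise.
        threshold = baseline + max(sigma * spread, 0.1 * baseline)
        if today_gb > threshold:
            reasons.append(
                f"ingestion {today_gb:.1f} GB exceeds baseline threshold {threshold:.1f} GB"
            )

    return reasons
```

Combining an absolute limit with a relative baseline covers two different failure modes: steady growth that eventually breaches the budget, and a sudden jump that is still under budget but signals a misbehaving function. The returned reasons could be forwarded to whatever notification channel the team already uses.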

Concluding Remarks

In conclusion, effective serverless observability rests on a handful of practices covered in this guide: tracking the essential metrics, implementing structured logging, establishing proactive alerting, securing log data, and keeping monitoring costs under control. By adopting these practices, developers and operations teams can improve the reliability, security, and cost-effectiveness of their serverless applications.

Frequently Asked Questions

What is the primary difference between serverless and traditional monitoring?

Traditional monitoring often focuses on server-level metrics (CPU, memory, disk I/O), while serverless monitoring emphasizes function-level metrics (invocations, duration, errors) and API Gateway metrics (latency, error rates) due to the ephemeral nature of serverless functions and the distributed architecture.

How does structured logging improve serverless application troubleshooting?

Structured logging, such as JSON-formatted logs, allows for easier parsing, filtering, and analysis of log data. This facilitates faster identification of issues, correlation of events, and the ability to search and aggregate logs more efficiently, leading to quicker troubleshooting.
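As a minimal sketch of JSON-formatted logging with Python's standard library, the formatter below emits one JSON object per log record. The `JsonFormatter` class and the `context` attribute used to carry request-specific fields are conventions assumed for this example.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record):
        payload = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Optional per-call fields passed via `extra={"context": {...}}`.
        context = getattr(record, "context", None)
        if isinstance(context, dict):
            payload["context"] = context
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        # default=str keeps non-serializable values from raising.
        return json.dumps(payload, default=str)


# Usage: attach the formatter to a handler and log with structured context.
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("checkout")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.info("order created", extra={"context": {"request_id": "abc-123", "order_id": 42}})
```

Because every entry shares the same keys, a log platform can filter on fields such as `level` or `context.request_id` directly instead of relying on free-text search.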

What are the security implications of logging in a serverless environment?

Logging in serverless environments requires careful consideration of access control, data encryption, and the handling of sensitive information. Logs can inadvertently expose sensitive data if not properly secured, emphasizing the need for robust security practices to protect against unauthorized access and data breaches.
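One common safeguard is to redact sensitive fields before they reach the log stream. The sketch below is illustrative only: the `SENSITIVE_KEYS` set, the simple email pattern, and the `redact` helper are assumptions, and a real deployment would tailor them to its own data and compliance requirements.

```python
import re

# Hypothetical list of field names whose values must never be logged.
SENSITIVE_KEYS = {"password", "token", "authorization", "api_key"}

# Deliberately simple pattern; it is not a full email address validator.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(value):
    """Return a copy of `value` with sensitive fields and emails masked."""
    if isinstance(value, dict):
        return {
            key: "[REDACTED]" if str(key).lower() in SENSITIVE_KEYS else redact(item)
            for key, item in value.items()
        }
    if isinstance(value, (list, tuple)):
        return [redact(item) for item in value]
    if isinstance(value, str):
        return EMAIL_RE.sub("[EMAIL]", value)
    # Numbers, booleans, None, and other types pass through unchanged.
    return value
```

Calling `redact` on an event payload before logging it keeps the structure of the entry intact for troubleshooting while removing the values that would be most damaging if the logs were exposed.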

How can I optimize costs related to serverless monitoring and logging?

Cost optimization involves reducing the volume of logs and metrics collected, utilizing cost-effective logging solutions, and setting up cost alerts to prevent unexpected charges. Implementing sampling strategies and filtering unnecessary log data are also effective methods.