The realm of artificial intelligence is rapidly evolving, with token-based pricing emerging as a prevalent model for accessing AI services. This approach, where users are charged based on the number of “tokens” processed, offers a flexible and often cost-effective way to utilize powerful AI models. However, this pricing strategy presents a unique set of challenges that both AI providers and consumers must navigate to ensure fairness, transparency, and sustainable growth within the AI ecosystem.

This exploration delves into the intricacies of token-based pricing, examining issues from transparency and predictability to security and model optimization. We will dissect the complexities of defining a “token,” explore diverse pricing structures, and discuss the impact of these models on user experience and model selection. Our aim is to provide a comprehensive understanding of the current landscape and illuminate potential pathways for a more equitable and efficient future for AI.

Introduction to Token-Based Pricing in AI

Token-based pricing has emerged as a prevalent model for charging users for access to and utilization of AI models. This approach allows for granular control over costs and aligns pricing more closely with actual usage. It has become a cornerstone of the AI-as-a-Service (AIaaS) business model, enabling providers to scale their services effectively while offering users flexible and transparent pricing options.

Fundamental Concept of Token-Based Pricing

Token-based pricing in AI revolves around the concept of breaking down input and output data into discrete units called “tokens.” These tokens are then used as the basis for calculating the cost of using an AI model. The number of tokens processed, whether in the form of input prompts or generated outputs, determines the final price. This method allows for a pay-as-you-go model, where users are charged only for the resources they consume.

The definition of a token varies depending on the AI model and the specific service provider, but it generally represents a unit of text, code, or other data processed by the model.
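As a minimal sketch of this pay-as-you-go arithmetic, the following Python function computes the cost of one request from its input and output token counts. The function name and the per-1,000-token rates are illustrative assumptions, not any provider's actual prices.

```python
def request_cost(input_tokens, output_tokens, input_rate_per_1k, output_rate_per_1k):
    """Return the cost in USD of one request billed per 1,000 tokens."""
    input_cost = (input_tokens / 1000) * input_rate_per_1k
    output_cost = (output_tokens / 1000) * output_rate_per_1k
    return input_cost + output_cost


# Hypothetical rates: $0.0005 per 1K input tokens, $0.0015 per 1K output tokens.
cost = request_cost(1200, 400, 0.0005, 0.0015)
print(f"${cost:.6f}")
```

Keeping separate input and output rates in the signature reflects the description above, where both prompts and generated outputs count toward the bill.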

Examples of AI Services Employing Token-Based Pricing

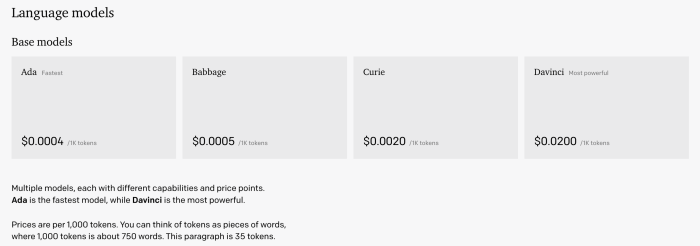

Several prominent AI services have adopted token-based pricing, showcasing its versatility and widespread adoption. These examples illustrate the diverse applications of this pricing model:

- Large Language Models (LLMs): Services like OpenAI (ChatGPT, GPT-4) and Anthropic (Claude) utilize token-based pricing. Users are charged based on the number of tokens in their prompts (input) and the number of tokens generated by the model (output). The cost per token can vary depending on the model’s size and capabilities.

- Image Generation Models: Platforms offering image generation, such as Midjourney and Stable Diffusion, also use token-based or equivalent pricing structures. While the exact “token” definition may differ (e.g., “credits” or “generations”), the underlying principle remains the same: users pay based on the amount of computation and resources consumed for each image generated.

- Speech-to-Text and Text-to-Speech Services: Companies specializing in audio processing, such as Google Cloud Speech-to-Text and Amazon Polly, often employ a comparable usage-based model, in which the billing unit is the number of seconds of audio or characters of text processed rather than a token.

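- Code Generation and Completion Tools: AI-powered coding assistants are built on language models whose underlying cost scales with the number of tokens processed in code suggestions, completions, or analyses. Some tools bill per token, while others, such as GitHub Copilot, are sold primarily as subscriptions that absorb this cost.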

Core Advantages of Token-Based Pricing

Token-based pricing offers several advantages for both AI model providers and consumers, contributing to its widespread adoption in the AI industry.

- For AI Model Providers:

  - Scalability: Token-based pricing enables providers to scale their services more effectively. Because revenue grows with the resources each user consumes, providers can accommodate a larger user base and expand infrastructure in step with demand.

  - Resource Optimization: This pricing model encourages efficient resource allocation. Providers can optimize their models and infrastructure to minimize token processing costs, leading to improved profitability.

  - Flexibility: It allows providers to offer different pricing tiers based on the model’s capabilities, speed, and other factors, catering to a wide range of users.

- For Consumers:

  - Transparency: Token-based pricing ties cost to a single measurable unit. Users can estimate their expenses based on their usage patterns.

  - Cost Control: Users have more control over their spending. They can monitor their token consumption and adjust their usage to stay within their budget.

  - Pay-as-you-go: This model eliminates the need for long-term commitments or fixed subscription fees, making AI services accessible to a broader audience, including individual developers and small businesses.

Transparency and Predictability Concerns

Token-based pricing, while offering advantages, introduces complexities regarding transparency and predictability. Users need clear insights into how their token consumption translates into cost and must be able to forecast their spending effectively. This section addresses the challenges in achieving this and explores strategies for mitigating them.

Ensuring Transparency in Token Consumption

Transparency is crucial for user trust and informed decision-making. Without it, users may feel they lack control over their spending and be hesitant to adopt token-based pricing models.

- Task-Specific Consumption Variations: Different AI tasks consume tokens at varying rates. For instance, summarization may consume fewer tokens than creative writing. The complexity of the prompt and the desired output length significantly influence token usage.

- Model-Specific Differences: Token consumption can differ across various AI models, even when performing the same task. A more sophisticated model might produce higher-quality results but also consume more tokens.

- Input and Output Length: The length of both the input prompt and the generated output directly affects token count. Longer prompts and more extensive outputs inherently require more tokens.

- Hidden Token Usage: Some AI models may have hidden token usage related to internal processes, such as pre-processing or post-processing of text, which are not always explicitly accounted for.

- Lack of Granularity in Reporting: Current reporting mechanisms may lack the granularity needed to understand precisely how tokens are used within complex workflows. This makes it difficult to identify areas for optimization.

Methods for Predicting AI Model Usage Cost

Predicting the cost of AI model usage based on token counts is essential for budgeting and resource allocation. Several methods can be employed to improve cost predictability.

- Token Counting Tools: Utilize tools to accurately count tokens in prompts and expected outputs before submitting them to the AI model. This provides a baseline for cost estimation.

- Model Documentation and Pricing Tables: Consult the AI model provider’s documentation for detailed pricing information, including token rates and any tiered pricing structures.

- Historical Data Analysis: Track past token consumption patterns for similar tasks and prompts to establish a historical baseline for cost prediction.

- Pilot Programs and Small-Scale Testing: Conduct pilot programs or small-scale tests with a representative sample of prompts to assess token usage and cost before scaling up.

- Cost Optimization Strategies: Implement strategies to minimize token consumption, such as prompt engineering, output length control, and model selection.
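The historical data analysis method above can be sketched in a few lines of Python. This is only an illustration: the function name, the choice of the 95th percentile as a "high" scenario, and the sample figures are assumptions, and a single flat rate per 1,000 tokens is assumed for simplicity.

```python
import statistics


def forecast_cost(past_token_counts, expected_requests, rate_per_1k):
    """Forecast spend from historical per-request token counts."""
    typical_tokens = statistics.mean(past_token_counts)
    # 95th percentile; the "inclusive" method stays within the observed range.
    high_tokens = statistics.quantiles(past_token_counts, n=20, method="inclusive")[-1]

    def to_cost(tokens_per_request):
        return tokens_per_request * expected_requests / 1000 * rate_per_1k

    return {"typical": to_cost(typical_tokens), "high": to_cost(high_tokens)}


# Hypothetical history of tokens per request, and a hypothetical rate.
history = [420, 510, 480, 950, 460, 530, 1500, 490]
print(forecast_cost(history, expected_requests=10_000, rate_per_1k=0.002))
```

Reporting both a typical and a high estimate makes the spread between average and heavy requests visible, which is where budgets tend to be exceeded.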

Unpredictable Token Consumption Scenarios and Their Impact

Several scenarios can lead to unpredictable token consumption, which can negatively impact user budgets and create financial uncertainty.

- Prompt Variations and User Input: The variability in user prompts and input data can lead to unpredictable token usage. Users may unknowingly provide longer or more complex prompts, increasing costs.

- Model Updates and Changes: Updates to AI models can alter token consumption rates. A model update that enhances performance might consume more tokens for the same task.

- Network Latency and API Errors: Network latency or API errors can lead to retries, which can consume additional tokens. These unexpected events can quickly increase costs.

- Unexpected Output Length: The AI model’s output length can be difficult to predict, especially in creative tasks. An unexpectedly long output will result in higher token consumption.

- Data Quality Issues: Poor data quality in input prompts can sometimes lead to longer and more complex responses from the AI model, increasing token consumption.
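One way to bound these uncertainties is to compute a worst-case cost per request before sending it. The sketch below assumes a cap on output length, a fixed maximum number of retries, and that every retry is billed in full; that last assumption is deliberately pessimistic, and the function and parameter names are hypothetical.

```python
def worst_case_cost(input_tokens, max_output_tokens, max_retries,
                    input_rate_per_1k, output_rate_per_1k):
    """Upper bound on cost if every attempt uses the full output cap."""
    attempts = 1 + max_retries  # the original call plus each retry
    per_attempt = (input_tokens / 1000) * input_rate_per_1k \
        + (max_output_tokens / 1000) * output_rate_per_1k
    return attempts * per_attempt


# Hypothetical request: 1,000 input tokens, output capped at 1,000, 2 retries.
print(worst_case_cost(1000, 1000, 2, 0.001, 0.002))
```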

Measuring and Defining a “Token”

Understanding how AI models measure and define a “token” is crucial for effectively utilizing token-based pricing. The concept of a token varies across different models and significantly impacts the cost of using AI services. This section explores the methods used to define and measure tokens, offering users the knowledge to manage their AI usage costs more effectively.

Tokenization Methods in AI Models

Tokenization is the process of breaking down text into smaller units, or tokens, that AI models can understand and process. The choice of tokenization method directly influences the efficiency and cost-effectiveness of using these models. Different AI models employ varying tokenization strategies, each with its advantages and disadvantages.

- Character-based Tokenization: This method breaks down text into individual characters. While simple, it can lead to very long sequences for complex words, increasing computational costs. An example is when the word “tokenization” is tokenized into individual characters: “t”, “o”, “k”, “e”, “n”, “i”, “z”, “a”, “t”, “i”, “o”, “n”.

- Word-based Tokenization: This approach splits text into words. It’s intuitive but can struggle with out-of-vocabulary (OOV) words and may not capture nuances in word structure or relationships.

- Subword Tokenization: This is the most common method, breaking words into subword units. It balances the benefits of both character and word-based tokenization, handling OOV words effectively and capturing morphological information. Examples include Byte Pair Encoding (BPE), WordPiece, and SentencePiece. These methods allow the model to handle unseen words by breaking them down into known subword units.
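The three approaches can be compared with a toy Python sketch. The subword function below uses a greedy longest-match lookup against a small, hand-written vocabulary; it is a simplification for illustration and does not implement BPE, WordPiece, or SentencePiece training. The vocabulary and function names are assumptions.

```python
def char_tokens(text):
    """Character-based: one token per character."""
    return list(text)


def word_tokens(text):
    """Word-based: split on whitespace."""
    return text.split()


def subword_tokens(word, vocab):
    """Subword: repeatedly take the longest vocabulary entry that matches."""
    tokens, i = [], 0
    while i < len(word):
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            # No vocabulary entry matches: fall back to a single character.
            tokens.append(word[i])
            i += 1
    return tokens


vocab = {"token", "ization", "un", "believ", "able"}  # hypothetical vocabulary
print(len(char_tokens("tokenization")))       # 12 character tokens
print(subword_tokens("tokenization", vocab))  # ['token', 'ization']
print(subword_tokens("unbelievable", vocab))  # ['un', 'believ', 'able']
```

The character fallback is what lets a subword scheme represent out-of-vocabulary words, at the price of more tokens for unfamiliar text.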

Tokenization Differences Between Language Models

Different language models utilize different tokenization strategies, which lead to varying token counts for the same input text. These differences can directly impact the cost associated with using each model. The following table provides a comparative overview of tokenization differences between some popular language models.

| Language Model | Tokenization Method | Token Unit | Examples |
|---|---|---|---|
| GPT-3 (OpenAI) | Byte Pair Encoding (BPE) | Varies, but typically subwords | “tokenization” might be broken into “token”, “ization”. “unbelievable” might be split into “un”, “believ”, “able”. |
| BERT (Google) | WordPiece | Subwords | “tokenization” could be split into “token”, “##ization”. “running” could be “run”, “##ning”. The “##” prefix indicates a subword that continues a larger word. |
| RoBERTa (Facebook) | Byte Pair Encoding (BPE) | Subwords | Similar to GPT-3, but potentially with a different vocabulary learned from a different dataset. The split of “tokenization” or “unbelievable” will vary depending on the model’s training. |
| T5 (Google) | SentencePiece | Subwords | SentencePiece treats whitespace as an ordinary symbol and marks it with “▁”, which can affect token counts. “Hello world” could be tokenized as “▁Hello”, “▁world”. |

Understanding Token Counts for Specific AI Services

To effectively manage AI costs, users need to understand how their input translates into token counts for specific AI services. Many AI service providers offer tools or APIs to estimate token usage before processing.

Here are some ways to determine token counts:

- Provider-Specific Tokenizers: Many providers offer tokenizers or libraries that allow users to tokenize text using the same method as the AI model. This lets users calculate token counts offline. For instance, OpenAI provides a tokenizer tool for its models.

- API Responses: When using an AI service, the API response often includes the number of tokens used for the input and output. This allows users to monitor their usage in real time.

- Online Token Counters: Several online tools provide token counting functionality for different AI models. Users can input their text and get an estimated token count. These tools are useful for quick estimations.
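When no provider tokenizer is at hand, a rough estimate can be made from character counts. The sketch below assumes roughly four characters per token, a commonly cited rule of thumb for English text; the ratio is an assumption, varies by model and language, and is no substitute for the provider's own tokenizer or the counts returned in API responses.

```python
import math


def estimate_tokens(text, chars_per_token=4.0):
    """Heuristic token estimate from character length (English-oriented)."""
    return math.ceil(len(text) / chars_per_token)


sentence = "The quick brown fox jumps over the lazy dog"
print(estimate_tokens(sentence))  # 43 characters / 4, rounded up
```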

Consider the following example:

A user wants to translate the English sentence “The quick brown fox jumps over the lazy dog” using an AI service that charges per token. Using the OpenAI tokenizer for GPT-3, the sentence might be tokenized into 13 tokens (including spaces). If the service charges $0.0002 per 1,000 tokens, the cost for this sentence would be approximately $0.0000026 (13 / 1,000 × $0.0002). With a different model, the token count, and therefore the cost, could differ.

Pricing Structures and Fairness

Token-based pricing models, while offering a degree of transparency, present significant challenges related to fairness. The structure of these pricing systems, how they account for the inherent complexities of different inputs, and the measures taken to mitigate biases are all crucial for establishing equitable access and cost. This section will explore diverse pricing structures, the potential for unfairness, and strategies for creating a more balanced token-based pricing system.

Diverse Pricing Structures for Tokens

Various pricing models can be employed for token-based AI services. Each has its own advantages and disadvantages, impacting how costs are perceived and managed by users. Understanding these different approaches is essential for selecting the most appropriate model for a specific application.

- Tiered Pricing: This structure offers different price points based on the volume of tokens consumed. Users pay a lower per-token rate as their usage increases, incentivizing higher consumption. For example, a model might charge $0.001 per 1,000 tokens for the first 1 million tokens, $0.0008 per 1,000 tokens for the next 5 million, and $0.0006 for anything above that. This is common in cloud computing and other usage-based services.

- Pay-as-you-go (PAYG) Pricing: Users are charged only for the tokens they consume, without any upfront commitment or subscription fees. This model is flexible and suitable for infrequent or unpredictable usage patterns. The price per token is typically higher than in tiered or subscription models. For instance, a model could charge $0.0012 per 1,000 tokens, billed monthly.

- Subscription-Based Pricing: Users pay a fixed fee for a certain number of tokens or a specific level of access over a defined period (e.g., monthly or annually). This model provides cost predictability for regular users. For example, a subscription might offer 1 million tokens per month for $10, with overage charges for additional tokens.

- Reserved Instance Pricing: Similar to reserved instances in cloud computing, this model allows users to pre-purchase a certain amount of tokens at a discounted rate, committing to their use over a specific period. This is suitable for users with predictable, high-volume usage.

- Hybrid Pricing: This approach combines elements from multiple models. For example, a service might offer a basic subscription with a set number of tokens and then apply pay-as-you-go pricing for any usage exceeding the subscription limit.
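The tiered and subscription examples above can be worked through in Python. The tier boundaries and rates are the illustrative figures from the list; the overage rate and all function names are assumptions, and the tiers are assumed to be marginal (each rate applies only to the tokens that fall inside its tier).

```python
# (tier size in tokens, USD per 1,000 tokens); None means "everything above".
TIERS = [
    (1_000_000, 0.001),   # first 1 million tokens
    (5_000_000, 0.0008),  # next 5 million tokens
    (None, 0.0006),       # anything above that
]


def tiered_cost(tokens, tiers=TIERS):
    """Charge each tier's rate only on the tokens that fall inside it."""
    remaining, total = tokens, 0.0
    for size, rate in tiers:
        in_tier = remaining if size is None else min(remaining, size)
        total += in_tier / 1000 * rate
        remaining -= in_tier
        if remaining <= 0:
            break
    return total


def subscription_cost(tokens, included=1_000_000, fee=10.0, overage_per_1k=0.012):
    """Fixed fee plus a hypothetical overage rate beyond the included tokens."""
    extra = max(0, tokens - included)
    return fee + extra / 1000 * overage_per_1k


print(tiered_cost(7_000_000))        # 1.0 + 4.0 + 0.6
print(subscription_cost(1_500_000))  # 10.0 plus overage on 500,000 tokens
```

Combining the two functions gives a simple model of hybrid pricing: a subscription allowance followed by usage-based charges.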

Potential for Price Unfairness

Price unfairness can arise in token-based AI models due to variations in input complexities and language processing requirements. The complexity of the input text, the specific language used, and the task being performed can all influence the number of tokens required and, consequently, the cost incurred by the user.

- Input Complexity: Longer, more complex inputs generally require more tokens. For example, processing a detailed legal document will likely consume more tokens than summarizing a short news article. This can lead to discrepancies in cost depending on the type of content being processed.

- Language Variations: Different languages have varying tokenization requirements. Languages like Chinese and Japanese, which do not use spaces to separate words, often require different tokenization strategies and can result in a higher token count for equivalent information compared to English. This disparity can lead to higher costs for users who primarily work with these languages.

- Task Complexity: Different tasks demand varying levels of computational resources. For example, tasks like text generation or image captioning often require significantly more tokens than simpler tasks like sentiment analysis. A user performing complex tasks would naturally incur a higher cost than one performing simpler ones.

- Model Efficiency: The underlying efficiency of the AI model itself impacts token usage. More efficient models will generally consume fewer tokens for the same task, leading to lower costs for users. However, model efficiency can vary widely across different providers and versions.
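The language disparity can be made visible with a rough proxy. For tokenizers that operate on UTF-8 bytes, the byte length of a string is an upper bound on its token count, and non-Latin scripts use more bytes per character. The sketch below only measures bytes; it is an assumption-laden proxy, not a real token count, and actual ratios depend on each model's vocabulary.

```python
def utf8_bytes(text):
    """Number of bytes the text occupies when encoded as UTF-8."""
    return len(text.encode("utf-8"))


samples = {
    "English": "Hello, world",
    "Chinese": "你好，世界",
    "Japanese": "こんにちは世界",
}

for language, text in samples.items():
    print(f"{language}: {len(text)} characters, {utf8_bytes(text)} bytes")
```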

Strategies for Creating a Fair Token-Based Pricing System

Mitigating price unfairness requires implementing strategies that promote transparency, consider input variations, and provide users with control over their costs. A combination of the following strategies can contribute to a fairer pricing system.

- Transparent Token Definitions: Clearly defining how tokens are measured and calculated is essential. Providers should publish detailed information about their tokenization process, including how they handle special characters, whitespace, and other linguistic elements.

- Language-Specific Considerations: Pricing models should consider the tokenization differences between languages. This might involve adjusting the per-token price based on the language used or offering specialized models optimized for certain languages.

- Complexity-Aware Pricing: Pricing could be adjusted based on the complexity of the task being performed or the type of input being processed. This might involve charging a premium for complex tasks or providing discounts for simpler ones. For instance, a provider could offer a lower per-token rate for summarization compared to text generation.

- Usage Monitoring and Reporting: Providing users with detailed reports on their token usage, including breakdowns by input type, language, and task, empowers them to understand and manage their costs.

- Cost Control Mechanisms: Implementing cost control features, such as usage limits and budget alerts, allows users to prevent unexpected charges.

- Benchmarking and Performance Metrics: Regularly benchmarking model performance and providing metrics like “tokens per output” can give users insights into the efficiency of different models and tasks.

- A/B Testing of Pricing Models: Testing different pricing models with user groups can help providers identify and refine models that are perceived as fair and effective. This can involve experimenting with tiered pricing, volume discounts, or subscription options.

- Open Source Tokenizers: Providing or utilizing open-source tokenizers ensures transparency and allows users to understand and potentially customize the tokenization process.
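The cost control mechanisms described above, usage limits and budget alerts, might look like the following minimal sketch. The class name, the 80% alert threshold, and the three status strings are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class TokenBudget:
    limit: int                   # hard cap on tokens for the period
    alert_fraction: float = 0.8  # warn once this share of the cap is used
    used: int = 0

    def record(self, tokens):
        """Record usage; return 'ok', 'alert', or 'blocked'."""
        if self.used + tokens > self.limit:
            return "blocked"     # the request would exceed the cap
        self.used += tokens
        if self.used >= self.alert_fraction * self.limit:
            return "alert"
        return "ok"


budget = TokenBudget(limit=1000)
print(budget.record(500))  # ok
print(budget.record(400))  # alert
print(budget.record(200))  # blocked
```

Checking the cap before recording usage means a blocked request leaves the running total unchanged.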

Security and Abuse Prevention

Token-based pricing models, while offering several advantages, introduce new security vulnerabilities and opportunities for abuse. Protecting AI models and the underlying infrastructure from malicious actors is paramount. Implementing robust security measures and abuse mitigation techniques is crucial for maintaining the integrity and sustainability of these pricing systems. This section will explore potential threats and the strategies to counteract them.

Potential Security Vulnerabilities

Token-based systems are susceptible to various security threats that can lead to financial losses, service disruptions, and reputational damage. Understanding these vulnerabilities is the first step toward effective protection.

- Token Generation Manipulation: Attackers might exploit vulnerabilities in the token generation process to create an excessive number of tokens without legitimate usage. This could involve bypassing rate limits or exploiting flaws in the token issuance logic. For example, a vulnerability in a smart contract responsible for issuing tokens could allow an attacker to mint an unlimited supply, devaluing the tokens and potentially overwhelming the AI model’s resources.

- Token Theft and Fraud: If tokens are tradable or transferable, they become targets for theft and fraud. Attackers could employ phishing, social engineering, or direct hacking attempts to steal tokens from legitimate users. Stolen tokens could then be used to abuse the AI model, generating fraudulent outputs or consuming excessive resources.

- Denial-of-Service (DoS) Attacks: Attackers might launch DoS attacks by flooding the AI model with token requests, overwhelming its capacity and making it unavailable to legitimate users. This could involve submitting a large volume of requests, each consuming a small number of tokens, or submitting a few extremely large requests.

- Malicious Prompt Injection: Attackers could craft malicious prompts designed to exploit vulnerabilities in the AI model’s input processing. These prompts could cause the model to generate inappropriate content, leak sensitive information, or even crash the model. The attacker might use a large number of tokens to repeatedly test and refine these malicious prompts.

- Token Inflation Attacks: If the value of a token is directly tied to the AI model’s performance, an attacker might attempt to manipulate the system to artificially inflate the token’s value. This could involve submitting specific inputs designed to improve the model’s perceived performance, allowing the attacker to later sell the inflated tokens for a profit.

Methods for Preventing Abuse of AI Models Through Token Manipulation

Preventing abuse requires a multi-layered approach that includes robust security measures, proactive monitoring, and effective response mechanisms. Implementing these methods helps safeguard the AI model and the token-based ecosystem.

- Strong Authentication and Authorization: Implementing strong authentication mechanisms, such as multi-factor authentication (MFA), helps verify the identity of users and prevent unauthorized access to token accounts. Proper authorization controls should restrict the actions users can perform based on their roles and token holdings.

- Rate Limiting and Usage Quotas: Rate limiting restricts the number of requests a user can make within a specific timeframe. Usage quotas limit the total number of tokens a user can spend during a defined period. These measures help prevent DoS attacks and limit the potential damage from compromised accounts. For instance, a system might limit a user to a maximum of 100 requests per minute or a spending limit of 10,000 tokens per day.

- Anomaly Detection and Monitoring: Continuous monitoring of token usage patterns and model outputs is essential for detecting suspicious activity. Anomaly detection algorithms can identify unusual spending habits, unexpected API calls, or the generation of potentially harmful content. For example, the system could flag an account that suddenly begins submitting requests at a significantly higher rate than its historical average.

- Input Validation and Sanitization: Thoroughly validating and sanitizing user inputs helps prevent malicious prompt injection attacks. This includes filtering out potentially harmful characters, limiting input length, and implementing other security checks to ensure the input is safe and appropriate.

- Smart Contract Security Audits (if applicable): If tokens are managed through smart contracts (e.g., on a blockchain), regular security audits are crucial to identify and fix vulnerabilities in the contract code. These audits should be conducted by independent security experts to ensure the contract is secure and resistant to attacks.

- Token Burn Mechanisms: Implementing a token burn mechanism, where a portion of the tokens is permanently removed from circulation, can help control token inflation and reduce the incentive for abuse. This could be triggered by specific actions, such as the generation of inappropriate content or the violation of terms of service.
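The rate limiting and usage quota example above (100 requests per minute, 10,000 tokens per day) can be sketched as a small in-memory limiter. This is a single-process illustration under stated assumptions: the class name is hypothetical, the clock is passed in as seconds so the behavior is easy to test, and a production system would need shared, persistent state.

```python
from collections import deque


class UsageLimiter:
    def __init__(self, max_requests_per_minute=100, max_tokens_per_day=10_000):
        self.max_rpm = max_requests_per_minute
        self.max_tokens_per_day = max_tokens_per_day
        self.request_times = deque()  # timestamps within the last 60 seconds
        self.tokens_today = 0
        self.day = None

    def allow(self, tokens, now):
        """Return True and record the request if both limits permit it."""
        day = int(now // 86400)
        if day != self.day:           # a new day resets the token quota
            self.day, self.tokens_today = day, 0
        while self.request_times and now - self.request_times[0] >= 60:
            self.request_times.popleft()
        if len(self.request_times) >= self.max_rpm:
            return False              # rate limit exceeded
        if self.tokens_today + tokens > self.max_tokens_per_day:
            return False              # daily token quota exceeded
        self.request_times.append(now)
        self.tokens_today += tokens
        return True


limiter = UsageLimiter()
print(limiter.allow(tokens=250, now=0.0))
```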

Importance of Rate Limiting and Other Abuse Mitigation Techniques

Rate limiting and other abuse mitigation techniques are fundamental for maintaining the stability, fairness, and security of token-based pricing systems. They are critical in protecting both the AI model and its users.

- Preventing Resource Exhaustion: Rate limiting prevents attackers from overwhelming the AI model’s resources, ensuring that the service remains available to legitimate users. Without rate limiting, a single malicious actor could potentially consume all available resources, causing a service outage.

- Mitigating Financial Risks: Abuse mitigation techniques help reduce the financial risks associated with token-based pricing. By limiting the amount of tokens an attacker can use, these techniques minimize the potential damage from compromised accounts or malicious activities.

- Promoting Fair Usage: Rate limiting and quotas ensure that all users have fair access to the AI model, preventing a small number of users from monopolizing the resources. This helps create a more equitable and sustainable ecosystem.

- Detecting and Preventing Abuse: Rate limiting, combined with monitoring and anomaly detection, helps identify and prevent abusive behavior. Unusual patterns of token usage can be flagged, allowing administrators to investigate potential abuse and take appropriate action.

- Protecting Against Malicious Attacks: These techniques serve as a defense against various types of attacks, including DoS attacks, prompt injection attacks, and token manipulation schemes. They provide a layer of security that protects the AI model and its users from harm. For instance, a system could automatically block or temporarily suspend accounts that exceed their rate limits or exhibit suspicious behavior, providing a safety net against attacks.
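Flagging an account whose usage jumps well above its historical average can be sketched with a simple z-score test. The threshold of three standard deviations and the function name are assumptions; real systems typically combine several signals.

```python
import statistics


def is_anomalous(history, current, threshold=3.0):
    """Flag usage far above the historical mean, in standard deviations."""
    if len(history) < 2:
        return False  # not enough data to judge
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # Perfectly flat history: treat any increase as unusual.
        return current > mean
    return (current - mean) / stdev > threshold


requests_per_hour = [100, 110, 90, 105, 95]  # hypothetical history
print(is_anomalous(requests_per_hour, 500))  # True
print(is_anomalous(requests_per_hour, 110))  # False
```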

Model Optimization and Efficiency

Improving the efficiency of AI models is crucial in token-based pricing. Optimization directly affects the number of tokens consumed for a given task, which in turn influences the cost for users. Efficient models translate to lower costs and better performance, making them more attractive to users and fostering wider adoption of AI applications.

Impact of AI Model Optimization on Token Consumption

Model optimization significantly reduces token consumption by streamlining the computational processes within the AI model. Optimizations can involve various techniques, each with a distinct impact on token usage.

- Reducing Model Size: Smaller models, achieved through techniques like pruning or quantization, need less computation for every token they process. These techniques do not change how many tokens a given text contains, but they lower the provider’s cost per token, which can be passed on as a lower per-token price. A smaller model that answers more concisely may also generate fewer output tokens. As a purely illustrative figure, an interaction that consumes 100,000 tokens before optimization and 70,000 tokens afterwards would see a 30% reduction in token consumption and cost.

- Optimizing Input Processing: Efficient input processing, such as data preprocessing and feature engineering, can reduce the amount of input data needed. By cleaning and transforming data before it’s fed into the model, the model needs to process fewer irrelevant tokens. An example would be sentiment analysis; by removing stop words and irrelevant characters, the model processes only the key terms and their context, reducing the total token count needed for analysis.

- Improving Inference Speed: Faster inference times, achieved through hardware acceleration or optimized algorithms, indirectly reduce token consumption by enabling quicker processing of user requests. This can prevent scenarios where users need to resubmit requests or abandon tasks due to slow response times, thus avoiding unnecessary token usage.

- Fine-tuning for Specific Tasks: Fine-tuning a pre-trained model on a specific task can improve its accuracy and efficiency. This can reduce the number of tokens needed to achieve the desired outcome compared to using a more general model. For example, a general-purpose LLM might require 500 tokens to generate a product description, while a fine-tuned model for e-commerce might generate the same description using only 300 tokens due to its specialized knowledge.

Token Efficiency Comparison of Different Model Architectures

Different model architectures have varying levels of token efficiency for similar tasks. This difference is often tied to the complexity of the model and the underlying algorithms. Comparing the token efficiency of different models provides insights into the trade-offs between accuracy, speed, and cost.

Consider the task of machine translation from English to French. The token efficiency can be compared across different model architectures, each with varying performance characteristics:

| Model Architecture | Approximate Tokens per Translation (Example Sentence) | Accuracy (BLEU Score) | Cost per Translation (USD) |
|---|---|---|---|
| Recurrent Neural Network (RNN) | 50 | 25 | $0.0005 |
| Transformer (Base) | 35 | 35 | $0.0007 |
| Transformer (Optimized) | 25 | 37 | $0.0006 |
| Large Language Model (LLM) | 60 | 40 | $0.0012 |

In this illustrative comparison, the Transformer (Optimized) architecture offers the best balance of accuracy and token efficiency for this specific task. RNNs are the cheapest per translation but sacrifice accuracy; unsatisfactory results can prompt users to retry the same request, which increases total token usage. LLMs, despite the highest accuracy, cost the most per translation, highlighting the need to choose a model that fits the task’s specific requirements.
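The trade-off in the table can be expressed as a small selection rule: pick the cheapest model that meets a minimum accuracy. The sketch below uses the table's illustrative figures; the function name and the choice of BLEU as the only quality criterion are assumptions.

```python
MODELS = [
    {"name": "RNN", "tokens": 50, "bleu": 25, "cost": 0.0005},
    {"name": "Transformer (Base)", "tokens": 35, "bleu": 35, "cost": 0.0007},
    {"name": "Transformer (Optimized)", "tokens": 25, "bleu": 37, "cost": 0.0006},
    {"name": "LLM", "tokens": 60, "bleu": 40, "cost": 0.0012},
]


def cheapest_meeting(models, min_bleu):
    """Return the lowest-cost model whose BLEU score is at least min_bleu."""
    eligible = [m for m in models if m["bleu"] >= min_bleu]
    return min(eligible, key=lambda m: m["cost"]) if eligible else None


print(cheapest_meeting(MODELS, min_bleu=35)["name"])  # Transformer (Optimized)
print(cheapest_meeting(MODELS, min_bleu=38)["name"])  # LLM
```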

Designing a Process for Estimating Token Usage

Providing users with a reliable way to estimate token usage before running a complex AI task is crucial for transparency and cost management. This process involves several steps, from analyzing the input to calculating the estimated token count and potential cost.

- Input Analysis: Users must analyze the input data. This includes understanding the nature of the input, such as the length of text, the number of images, or the complexity of the task.

- Model Selection: The choice of the AI model significantly impacts token usage. Different models have different token consumption rates for the same task. Selecting the appropriate model based on the task’s requirements is crucial.

- Token Counting Mechanism: Implement a system that counts the tokens in the input data. This can be achieved through:

  - Text-based models: Utilize tokenizers, which are tools that break down text into tokens based on the model’s vocabulary. These tools provide a count of the tokens in the input text.

  - Image-based models: Estimate the number of tokens based on the image resolution, complexity, and the model’s processing requirements.

  - Combined input types: If the input includes text and images, calculate the token count for each type and sum them up.

- Usage Estimation Tool: Develop a user-friendly tool that integrates the token counting mechanism. This tool should allow users to:

  - Input their data or describe the task.

  - Select the model they intend to use.

  - Receive an estimated token count.

  - See an estimated cost based on the model’s pricing.

- Providing Example Cases: Offer example cases and benchmarks. Show how different inputs and model choices affect token usage. Include real-world examples and scenarios. For example, provide a comparison of token usage for different document lengths, image resolutions, or complex tasks.

- Feedback and Refinement: Collect user feedback and regularly refine the token estimation process. Track actual token usage against estimated values to identify discrepancies and improve accuracy. This ensures that the tool remains reliable and useful over time.
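The estimation steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any provider's actual method: the 4-characters-per-token ratio is only a rough rule of thumb for English text, the flat per-image charge `TOKENS_PER_IMAGE` is hypothetical, and the price passed in is a placeholder. A real tool would call the tokenizer that belongs to the selected model.

```python
import math
from dataclasses import dataclass

# Assumption: roughly 4 characters per token for English text. This is a
# rule of thumb, not an exact tokenizer; real counts vary by model.
CHARS_PER_TOKEN = 4

# Hypothetical flat token charge per image; real services apply their own rules.
TOKENS_PER_IMAGE = 500


@dataclass
class Estimate:
    input_tokens: int
    cost: float


def estimate_text_tokens(text: str) -> int:
    """Approximate the token count of a text from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_request(text: str, image_count: int, price_per_1k_tokens: float) -> Estimate:
    """Sum the text and image token estimates and convert them to a cost."""
    tokens = estimate_text_tokens(text) + image_count * TOKENS_PER_IMAGE
    cost = tokens / 1000 * price_per_1k_tokens
    return Estimate(input_tokens=tokens, cost=cost)


if __name__ == "__main__":
    est = estimate_request(
        "Summarize the attached quarterly report.",
        image_count=1,
        price_per_1k_tokens=0.002,  # placeholder price
    )
    print(f"Estimated tokens: {est.input_tokens}, estimated cost: ${est.cost:.6f}")
```

Comparing these estimates against the token counts actually billed is what the feedback step relies on: a persistent gap suggests the ratio or the per-image figure needs adjusting.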

The Impact on Model Selection

Token-based pricing significantly reshapes the landscape of AI model selection, forcing users to carefully consider the interplay between model performance, cost, and efficiency. This shift necessitates a more nuanced approach to choosing AI solutions, prioritizing not only accuracy but also the economic implications of token consumption.

Model Choice Influenced by Token Pricing

Token pricing directly influences the selection of AI models by introducing a financial dimension to model performance. Users are no longer solely focused on the highest accuracy; they must also evaluate the token consumption rate of a model for their specific application. The factors to consider include:

- Accuracy vs. Token Consumption: A highly accurate model might be prohibitively expensive if it consumes a large number of tokens per task. Conversely, a less accurate, more token-efficient model might be preferable for applications where minor inaccuracies are acceptable, such as content summarization or basic chatbots.

- Application Specificity: The nature of the application dictates the acceptable trade-offs. For critical applications like medical diagnosis or financial analysis, accuracy is paramount, and users might be willing to pay a premium for a more accurate, but potentially more token-intensive, model. For less critical tasks, such as generating marketing copy, a balance between accuracy and cost is more likely to be prioritized.

- Model Size and Complexity: Larger, more complex models often offer higher accuracy but are typically priced higher per token. Smaller, more efficient models might be sufficient for simpler tasks, providing a cost-effective solution. For example, a large language model (LLM) like GPT-4 might be preferred for complex creative writing tasks, while a smaller model such as GPT-3.5 could be adequate for generating short social media posts.

- Real-World Examples: Consider a small business wanting to build a customer service chatbot. They could choose a highly accurate, but expensive, LLM for complex queries, or a more cost-effective model trained on a specific dataset for common questions. The choice depends on the business’s budget and the complexity of customer interactions.
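One way to make this choice concrete is to pick the cheapest model that still meets an accuracy requirement. The sketch below assumes each candidate has a measured accuracy, an average token count per task, and a price per 1,000 tokens; the model names and figures are hypothetical placeholders, not real benchmarks or prices.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModelOption:
    name: str
    accuracy: float             # fraction of tasks answered acceptably, 0..1
    tokens_per_task: int        # average tokens consumed per task
    price_per_1k_tokens: float  # price in the billing currency

    def cost_per_task(self) -> float:
        return self.tokens_per_task / 1000 * self.price_per_1k_tokens


def cheapest_adequate_model(options: List[ModelOption],
                            min_accuracy: float) -> Optional[ModelOption]:
    """Return the lowest-cost model whose accuracy meets the threshold."""
    adequate = [m for m in options if m.accuracy >= min_accuracy]
    if not adequate:
        return None  # no candidate is accurate enough for this application
    return min(adequate, key=lambda m: m.cost_per_task())


if __name__ == "__main__":
    # Hypothetical candidates for a customer service chatbot.
    candidates = [
        ModelOption("small-model", accuracy=0.85, tokens_per_task=400, price_per_1k_tokens=0.0005),
        ModelOption("large-model", accuracy=0.97, tokens_per_task=600, price_per_1k_tokens=0.01),
    ]
    choice = cheapest_adequate_model(candidates, min_accuracy=0.80)
    print(choice.name if choice else "no adequate model")
```

Raising `min_accuracy` for a critical application shifts the choice toward the more expensive model, which mirrors the application-specific trade-off described above.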

Trade-offs Between Accuracy, Token Consumption, and Cost

Making informed decisions requires a careful evaluation of the trade-offs between accuracy, token consumption, and overall cost. This involves understanding how these factors interact and how they affect the total cost of ownership. The key trade-offs are:

- Accuracy and Cost: Higher accuracy often correlates with higher token consumption and, therefore, higher cost. Users must determine the acceptable level of accuracy for their application and choose a model that balances accuracy with cost-effectiveness.

- Token Consumption and Model Efficiency: Different models have varying levels of efficiency. Some models are optimized to consume fewer tokens for a given task. Selecting an efficient model can significantly reduce costs, even if it means a slight reduction in accuracy.

- Cost and Scalability: The cost per token directly impacts the scalability of an application. A model with a high cost per token might become unsustainable as the application grows and processes more data. Conversely, a token-efficient model allows for greater scalability within a given budget.

- Formula for Cost Calculation: The total cost can be calculated by multiplying the number of tokens consumed by the cost per token:

Total Cost = Number of Tokens × Cost per Token
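As a worked illustration of the multiplication above and of how cost scales with volume, the following sketch computes the cost of one request and of a month of requests. The function names, request volume, and per-token price are assumptions chosen for the example.

```python
def total_cost(tokens: int, cost_per_token: float) -> float:
    """Total cost is the number of tokens multiplied by the cost per token."""
    return tokens * cost_per_token


def monthly_cost(requests_per_month: int, tokens_per_request: int,
                 cost_per_token: float) -> float:
    """Scale the per-request cost to a month of traffic."""
    return total_cost(requests_per_month * tokens_per_request, cost_per_token)


if __name__ == "__main__":
    price = 0.000002  # hypothetical price per token
    print(f"One 500-token request: ${total_cost(500, price):.6f}")
    print(f"10,000 such requests:  ${monthly_cost(10_000, 500, price):.2f}")
```

Because the relationship is linear, doubling either the request volume or the tokens per request doubles the bill, which is why cost per token matters so much for scalability.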

Accessibility of AI for Small Businesses and Individual Users

Token-based pricing has significant implications for the accessibility of AI, particularly for small businesses and individual users with limited budgets. The pricing model can either broaden or restrict access to AI tools and services. The impacts are:

- Potential for Increased Accessibility: Token-based pricing can democratize access to AI by offering pay-as-you-go models. This allows small businesses and individual users to experiment with and utilize AI without the upfront costs of traditional licensing or subscription models. They can pay only for the tokens they consume, aligning costs with actual usage.

- Risk of Exclusivity: If token prices are set too high, they can create a barrier to entry for users with limited financial resources. High token costs can make it challenging for small businesses to integrate AI into their operations, particularly for applications requiring large-scale data processing or frequent usage.

- Impact on Innovation: Affordable token pricing encourages experimentation and innovation. Small businesses and individual developers can explore new applications of AI without significant financial risk. This can lead to the development of new products and services and accelerate the adoption of AI across various industries.

- Examples of Impact: A small e-commerce business could use a token-based AI model to generate product descriptions. If the token costs are low, the business can afford to generate a large number of descriptions. If the token costs are high, the business might be limited in its ability to leverage AI for this task. Similarly, an individual user could use a token-based model to write code or translate text, paying only for the tokens consumed.

User Experience and Interface Design

Designing user interfaces that effectively communicate token usage and associated costs is crucial for user satisfaction and trust in token-based AI models. A well-designed interface empowers users to understand and manage their AI interactions, fostering transparency and preventing unexpected charges. This section outlines guidelines and best practices for creating intuitive and informative interfaces.

Guidelines for Displaying Token Usage and Cost Information

Clear and accessible display of token usage and cost information is paramount. Users should readily understand how their actions translate into token consumption and financial implications.

- Prominent Placement: Token usage and cost information should be displayed prominently within the user interface. Avoid burying this information in obscure settings or secondary menus.

- Clear Labeling: Use straightforward and unambiguous labels such as “Tokens Used,” “Cost per Request,” and “Total Cost.” Avoid technical jargon that might confuse users.

- Contextual Information: Provide context for token usage. For example, indicate the token cost associated with specific features or actions within the AI model.

- Units and Currency: Clearly specify the units of measurement for tokens (e.g., “tokens,” “characters,” or “words”) and the currency used for cost calculations (e.g., USD, EUR).

- Summarized History: Offer a history of token usage, including dates, times, actions performed, and corresponding costs. This enables users to track their consumption patterns.

- Visual Representations: Utilize visual aids, such as charts and graphs, to illustrate token usage trends and spending over time. This can help users quickly grasp their consumption behavior.

Best Practices for Real-Time Feedback on Token Consumption

Providing real-time feedback on token consumption is essential for empowering users to manage their AI interactions proactively. This ensures they can make informed decisions during model usage.

- Dynamic Updates: The interface should dynamically update token usage and cost information as users interact with the AI model.

- Progress Indicators: Employ progress bars or other visual indicators to show token consumption during lengthy AI operations, such as content generation or image processing.

- Threshold Warnings: Implement threshold warnings to alert users when their token usage approaches predefined limits or spending thresholds. This prevents unexpected costs.

- Real-Time Calculation: Provide real-time cost calculations based on the current token usage and pricing structure.

- Interactive Controls: Offer interactive controls that allow users to adjust input parameters or features to control token consumption. For example, allow users to select image resolution or text length.

- Clear Error Messages: Display clear and informative error messages if token limits are reached or if there are issues with token consumption.
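The threshold warnings and error messages described above could be driven by a small tracker like the one below. It is a sketch under assumptions: the class name `TokenBudget`, the 80% and 95% warning levels, and the message wording are all illustrative, and a real interface would render these messages rather than return them as strings.

```python
from typing import List, Sequence


class TokenBudget:
    """Tracks token usage against a limit and reports newly crossed thresholds."""

    def __init__(self, limit_tokens: int, warn_fractions: Sequence[float] = (0.8, 0.95)):
        if limit_tokens <= 0:
            raise ValueError("limit_tokens must be positive")
        self.limit_tokens = limit_tokens
        self.used_tokens = 0
        self._pending = sorted(warn_fractions)  # thresholds not yet announced

    def record(self, tokens: int) -> List[str]:
        """Add usage and return messages for thresholds crossed by this update."""
        self.used_tokens += tokens
        fraction = self.used_tokens / self.limit_tokens
        messages = []
        # Each warning fires once, the first time usage reaches its threshold.
        while self._pending and fraction >= self._pending[0]:
            threshold = self._pending.pop(0)
            messages.append(f"Warning: {threshold:.0%} of token budget used")
        if self.used_tokens > self.limit_tokens:
            messages.append("Error: token limit exceeded")
        return messages


if __name__ == "__main__":
    budget = TokenBudget(limit_tokens=1000)
    for used in (500, 300, 100, 200):
        for message in budget.record(used):
            print(message)
```

Announcing each threshold only once keeps the interface from repeating the same warning on every update.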

UI Elements for Communicating Token Pricing

Several UI elements can effectively communicate token pricing to users. The choice of elements depends on the specific AI model and the target audience.

- Token Counters: Display a counter showing the number of tokens used in real-time. For instance, a text generation interface could display a running token count alongside the number of words or characters entered.

- Cost Estimates: Provide estimated costs for specific actions or features. For example, a tool for image generation could show the estimated cost per image based on the chosen resolution and complexity.

- Pricing Tables: Present pricing tables that clearly outline the token costs for different features, tiers, or usage levels.

- Budget Trackers: Integrate budget trackers that allow users to set spending limits and monitor their expenses. These trackers can display visual representations of spending against the set budget.

- Usage Dashboards: Offer comprehensive usage dashboards that display token consumption, cost, and other relevant metrics over time. These dashboards can include charts, graphs, and tables to facilitate analysis.

- Tooltips and Pop-ups: Utilize tooltips or pop-ups to provide detailed explanations of token pricing and usage. These elements can appear when users hover over or click on specific features or interface elements.

- Examples: Provide concrete examples of how different actions translate into token consumption. For instance, “Generating a 500-word article costs approximately 1,000 tokens.”

Consider an example of a text summarization tool. The interface might display a token counter showing the estimated number of tokens in the input text and the estimated cost per summary. A progress bar would indicate the progress of the summarization process, and a budget tracker would show the user’s remaining token balance. A tooltip could explain the cost per token, providing a clear understanding of the pricing model.

Future Trends and Innovations

The landscape of token-based pricing for AI models is dynamic, with continuous advancements shaping its evolution. Several emerging trends and potential innovations are poised to address current challenges and redefine how users access and utilize AI services. These developments aim to enhance transparency, efficiency, and user experience, ultimately fostering a more robust and accessible AI ecosystem.

Dynamic Token Allocation and Real-Time Adjustments

The static nature of many current token-based pricing models may be replaced by dynamic systems that adjust token allocation in real-time. This shift would enable more flexible pricing based on several factors, including model complexity, resource utilization, and demand fluctuations.

- Adaptive Pricing Algorithms: Implementing algorithms that automatically adjust token prices based on real-time factors such as server load, model performance, and the number of concurrent users. For instance, during peak hours, token prices might increase slightly to manage demand and maintain service quality, while prices could decrease during off-peak times.

- Usage-Based Token Consumption: Moving beyond fixed token rates per request to a model where token consumption is directly tied to the actual computational resources used. This approach would allow users to pay only for the exact resources consumed, promoting fairness and cost-effectiveness. For example, a user submitting a complex query that requires significantly more computational power would consume more tokens than a simple query.

- Personalized Token Bundles: Offering personalized token bundles tailored to individual user needs and usage patterns. AI service providers could analyze user behavior and recommend bundles that optimize cost and performance. This would cater to diverse user profiles, from casual users to power users with specific needs.
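To illustrate what an adaptive pricing rule might look like, the sketch below scales a base token price with server load. It is purely hypothetical: the linear rule, the 20% maximum discount, and the 30% maximum surcharge are assumptions for the example, not a description of how any provider sets prices.

```python
def adjusted_token_price(base_price: float, load: float,
                         max_discount: float = 0.2,
                         max_surcharge: float = 0.3) -> float:
    """Scale the base price linearly with load, where load is in [0, 1].

    A load of 0.5 leaves the price unchanged, lower load moves toward the
    maximum discount, and higher load moves toward the maximum surcharge.
    """
    load = min(max(load, 0.0), 1.0)  # clamp out-of-range readings
    if load < 0.5:
        multiplier = 1.0 - max_discount * (0.5 - load) / 0.5
    else:
        multiplier = 1.0 + max_surcharge * (load - 0.5) / 0.5
    return base_price * multiplier


if __name__ == "__main__":
    base = 0.002  # hypothetical price per 1,000 tokens
    for load in (0.1, 0.5, 0.9):
        print(f"load={load:.1f} price={adjusted_token_price(base, load):.6f}")
```

Capping both the discount and the surcharge keeps prices within a predictable band, which matters for the cost predictability discussed throughout this article.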

Decentralized and Transparent Token Systems

Decentralization and enhanced transparency are key future trends. Blockchain technology could play a pivotal role in creating more transparent and verifiable token-based systems.

- Blockchain-Based Token Transactions: Utilizing blockchain to record all token transactions, providing an immutable and auditable history of token usage and payments. This would reduce the risk of fraud and increase trust in the pricing system. Every transaction would be transparently recorded, allowing users to verify their token consumption and payment history.

- Smart Contracts for Automated Pricing: Implementing smart contracts to automate token pricing, allocation, and distribution. Smart contracts could automatically adjust token prices based on predefined conditions, such as resource availability or model performance metrics. This would reduce the need for manual intervention and increase the efficiency of the pricing process.

- Decentralized AI Marketplaces: Creating decentralized marketplaces where AI models are traded using tokens. This would empower AI model creators to directly monetize their models and allow users to access a wider range of models at competitive prices. The marketplace would be governed by transparent rules and algorithms, fostering fairness and competition.
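The idea of an auditable usage history can be illustrated without a full blockchain. The sketch below is a simplified, single-machine hash chain, not a distributed ledger: each entry's hash covers the previous hash, so altering an earlier record makes verification fail. The class name `UsageLedger` and the record fields are assumptions for the example.

```python
import hashlib
import json


def _entry_hash(prev_hash: str, record: dict) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


class UsageLedger:
    """Append-only log of token usage in which each entry links to the previous one."""

    GENESIS = "0" * 64  # placeholder hash that precedes the first entry

    def __init__(self):
        self.entries = []  # list of (record, hash) pairs

    def append(self, user: str, tokens: int) -> str:
        prev = self.entries[-1][1] if self.entries else self.GENESIS
        record = {"user": user, "tokens": tokens}
        digest = _entry_hash(prev, record)
        self.entries.append((record, digest))
        return digest

    def verify(self) -> bool:
        """Recompute every hash in order; any edited record breaks the chain."""
        prev = self.GENESIS
        for record, digest in self.entries:
            if _entry_hash(prev, record) != digest:
                return False
            prev = digest
        return True


if __name__ == "__main__":
    ledger = UsageLedger()
    ledger.append("alice", 1200)
    ledger.append("alice", 300)
    print("Ledger valid:", ledger.verify())
```

A real blockchain adds replication and consensus across many parties on top of this linking scheme, which is what lets users verify records they do not host themselves.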

Advanced Token Metrics and Analytics

Improved data analytics and more sophisticated token metrics are expected to offer deeper insights into AI model usage and pricing.

- Granular Token Usage Tracking: Implementing detailed tracking of token usage, including metrics like processing time, memory consumption, and the specific model features used. This would provide users with a clearer understanding of how tokens are consumed and enable more accurate cost analysis.

- Performance-Based Token Rewards: Rewarding AI model creators with additional tokens based on the performance of their models. This would incentivize the development of high-quality models and promote continuous improvement. Metrics such as accuracy, speed, and user satisfaction could be used to determine token rewards.

- Predictive Analytics for Token Consumption: Utilizing predictive analytics to forecast future token consumption based on user behavior and model usage patterns. This would allow users to proactively manage their token budgets and optimize their AI usage. For example, a user could receive alerts when their predicted token consumption is likely to exceed their current budget.
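A very simple form of such forecasting projects the rest of a billing period from the average of the days observed so far. The sketch below assumes daily token counts are available; the function names and figures are hypothetical, and a production system would likely use a richer model that accounts for trends and seasonality.

```python
from typing import List


def forecast_period_usage(daily_usage: List[int], days_in_period: int) -> float:
    """Project total usage for the period from the average of observed days."""
    if not daily_usage:
        return 0.0
    observed = sum(daily_usage)
    average = observed / len(daily_usage)
    remaining_days = max(days_in_period - len(daily_usage), 0)
    return observed + average * remaining_days


def budget_alert(daily_usage: List[int], days_in_period: int, budget_tokens: int) -> bool:
    """Return True when projected usage is likely to exceed the budget."""
    return forecast_period_usage(daily_usage, days_in_period) > budget_tokens


if __name__ == "__main__":
    usage_so_far = [100, 200, 300]  # hypothetical tokens used on days 1 to 3
    projected = forecast_period_usage(usage_so_far, days_in_period=30)
    print(f"Projected usage: {projected:.0f} tokens")
    print("Alert:", budget_alert(usage_so_far, 30, budget_tokens=5000))
```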

Future Scenario: The Transparent AI Ecosystem

Imagine a future where token-based AI pricing is fully transparent, efficient, and user-centric. This ecosystem would be characterized by several key features:

- A Unified Token Standard: A universally accepted token standard would enable seamless interoperability between different AI models and platforms. Users could use the same tokens to access various AI services, simplifying the user experience.

- Real-Time Performance Metrics: AI models would provide real-time performance metrics, such as accuracy, latency, and resource utilization, directly accessible to users. This transparency would allow users to make informed decisions about model selection and token consumption.

- Dynamic Pricing Algorithms: Dynamic pricing algorithms would automatically adjust token prices based on demand, resource availability, and model performance. Prices would fluctuate in real-time, reflecting the true cost of AI services.

- Decentralized Governance: A decentralized governance system would ensure that all stakeholders, including users, model creators, and platform providers, have a voice in shaping the future of the AI ecosystem. This collaborative approach would promote fairness and innovation.

- AI-Powered Budgeting Tools: AI-powered budgeting tools would help users manage their token budgets effectively. These tools would provide insights into token consumption, predict future costs, and offer recommendations for optimizing AI usage.

In this future scenario, the focus shifts from merely purchasing tokens to actively participating in a transparent and efficient AI ecosystem, where users have complete control over their AI consumption and costs.

Conclusion

In conclusion, while token-based pricing offers significant advantages in the AI domain, its implementation is fraught with challenges. From ensuring transparency in token consumption to mitigating potential security vulnerabilities, the path forward requires continuous innovation and a commitment to user-centric design. By addressing these challenges, we can foster an AI ecosystem that is not only powerful and accessible but also fair and sustainable, paving the way for a future where the benefits of AI are broadly realized.

FAQ Overview

What exactly is a “token” in the context of AI pricing?

A token is a unit of text or code that an AI model processes. The definition of a token varies between models, often representing words, parts of words (subwords), or punctuation marks. The specific tokenization method impacts how input text is converted into tokens, and consequently, the cost.

How can I estimate the token usage of my AI tasks before running them?

Many AI service providers offer token calculators or tools to estimate token usage based on your input text. You can also use online tokenizers to see how your text is broken down into tokens by specific models. Experimentation with shorter inputs is recommended before undertaking larger tasks.

Are there any strategies to reduce token costs when using AI models?

Yes, there are several strategies. You can optimize your input text by using concise language, avoiding unnecessary words or phrases. Choosing more efficient AI models that consume fewer tokens for the same task is also beneficial. Additionally, pre-processing and summarizing your data can significantly reduce token counts.

How does the complexity of my input affect token pricing?

The complexity of your input can greatly influence token consumption. Longer and more detailed inputs generally require more tokens. Furthermore, certain models may tokenize complex or less common language differently, impacting the total token count and cost.

What are the risks associated with using AI models with token-based pricing?

Risks include the potential for unpredictable costs if token consumption is not carefully managed, the possibility of security vulnerabilities related to malicious input designed to inflate token usage, and the challenge of selecting the most cost-effective model for a given task. Always monitor your usage and understand the pricing structure.